Abstract

This work presents a universal accelerated primal–dual method for affinely constrained convex optimization problems. It can handle both Lipschitz and Hölder gradients but does not need to know the smoothness level of the objective function. In line search part, it uses dynamically decreasing parameters and produces approximate Lipschitz constant with moderate magnitude. In addition, based on a suitable discrete Lyapunov function and tight decay estimates of some differential/difference inequalities, a universal optimal mixed-type convergence rate is established. Some numerical tests are provided to confirm the efficiency of the proposed method.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Consider the minimization problem

where \(Q\subset \,{{\mathbb {R}}}^n\) is a simple closed convex subset, \(\varOmega : = \left\{ x\in \,{{\mathbb {R}}}^n:\,Ax = b\right\} \) is an affine set with \(A\in \,{{\mathbb {R}}}^{m\times n}\) and \(b\in \,{{\mathbb {R}}}^m\), and \(f:\,{{\mathbb {R}}}^n\rightarrow \,{{\mathbb {R}}}\cup \{+\infty \}\) is properly closed and convex, with smooth part h and nonsmooth (simple) part g. The model problem (1) arises from many practical applications, such as compressed sensing [6], image processing [8] and decentralized distributed optimization [4].

In the literature, existing algorithms for solving (1) mainly include Bregman iteration [5, 29, 67], quadratic penalty method [35], augmented Lagrangian method (ALM) [26,27,28, 33, 41, 56], and alternating direction method of multipliers [22, 36, 40, 50, 53, 57, 60, 61, 65]. Generally speaking, these methods have sublinear rate \(\mathcal O(1/k)\) for convex problems and can be further accelerated to \({\mathcal {O}}(1/k^2)\) for (partially) strongly convex objectives. We also note that primal–dual methods [7, 20, 25, 31, 58, 59, 62] and operator splitting schemes [13, 18, 19] can be applied to (1) with two-block structure.

However, among these works, it is rare to see the optimal mixed-type convergence rate, i.e., the lower complexity bound [51]

where \(\mu \ge 0\) is the convexity parameter of f and L is the Lipschitz constant of \(\nabla h\). Both Nesterov’s smoothing technique [46] and the accelerated primal–dual method in [12] achieve the lower bound for convex case \(\mu =0\). The inexact ALM framework in [66] possesses the optimal complexity (2) but involves a subroutine for inexactly solving the subproblem.

We mention that the second part of (2) corresponding to L and \(\mu \) agrees with the well-known lower complexity bound of first-order methods for unconstrained convex problems with Lipschitz gradients, namely (the affine set \(\varOmega \) is the entire space \(\,{{\mathbb {R}}}^n\))

The intermediate non-Lipschitz case is also of interest to be considered [43, 45]. Particularly, when \(\nabla h\) is Hölder continuous (cf.(11)) with exponent \(\nu \in [0,1)\), Nesterov [48] presented a universal fast gradient method (FGM) for solving (3) that did not require à priori knowledge of the smoothness parameter \(\nu \) and the Hölderian constant \(M_\nu (h)\). A key ingredient of FGM is that Hölderian gradients can be recast into the standard Lipschitz case but with inexact computations [15, 54, 55], and it achieves the optimal complexity [44]

More extensions of FGM can be found in [23, 24, 32].

The dual problem of (1) reads equivalently as

If f is uniformly convex of degree \(p\ge 2\) (see [48, Definition 1]), then \(\nabla d\) is Hölder continuous with exponent \(\nu = 1/(p-1)\) (cf. [48, Lemma 1]). The methods in [16, 37] work for (5) with strongly convex objective f, i.e., the Lipschitzian case (\(\nu = 1\)). Yurtsever et al. [68] proposed an accelerated universal primal–dual gradient method (AccUniPDGrad) for general Hölderian case (\(\nu <1\)) and established the complexity bound (4) for the objective residual and the feasibility violation, with \(M_\nu (h)\) being replaced by \(M_\nu (d)\). Similarly with the spirit of FGM, the presented method utilizes the “inexactness” property of \(\nabla d\) and applies FISTA [2] to (5) with line search.

In this work, we propose a universal accelerated primal–dual method (see Algorithm 1) for solving (1). Compared with existing works, the main contributions are highlighted as follows:

-

It is first-order black-box type for both Lipschitz and Hölder cases but does not need to know the smoothness level priorly.

-

It uses the Bregman divergence and can handle the non-Euclidean setting.

-

In line search part, it adopts dynamically decreasing tolerance while FGM [48] and AccUniPDGrad [68] use the desired fixed accuracy.

-

By using the tool of Lyapunov function and tight decay estimates of some differential/difference inequalities, we prove the universal mixed-type estimate that achieves the optimal complexity (including (2),(4) as special cases).

We also provide some numerical tests to validate the practical performance. It is confirmed that: (i) a proper choice of Bregman distance is crucial indeed; (ii) our method outperforms FGM and AccUniPDGrad especially for non-Lipschitz problems and smooth problems with large Lipschitz constants, as the automatically decreasing tolerance leads to approximate Lipschitz constants with moderate magnitude.

Our method here is motivated from an implicit-explicit time discretization of a novel accelerated Bregman primal–dual dynamics (see (24)), which is an extension of the accelerated primal–dual flow in [39] to the non-Euclidean case. For unconstrained problems, there are some existing continuous dynamics [34, 63, 64] with Bregman distances. For linearly constrained case, we see an accelerated primal–dual mirror model [69], which is inspired by the accelerated mirror descent [34] and the primal–dual dynamical approach [21] but without time discretizations.

The rest of the paper is organized as follows. In Sect. 2, we provide some preliminaries including the Bregman divergence and the Hölder continuity. Then, the main algorithm together with its universal mixed-type estimate is presented in Sect. 3, and rigorous proofs of two technical lemmas are summarized in Sects. 4 and 5, respectively. Finally, some numerical results are reported in Sect. 6.

2 Preliminary

2.1 Notations

Let \(\left\langle {\cdot ,\cdot } \right\rangle \) be the usual inner product of vectors and \(\left\Vert {\cdot } \right\Vert \) be the standard Euclidean norm (of vectors and matrices). Given a proper function \(g:\,{{\mathbb {R}}}^n\rightarrow \,{{\mathbb {R}}}\cup \{+\infty \}\), the effective domain of g is denoted as usual by \( \mathbf{dom\,}g\), and the subdifferential of g at any \(x\in \mathbf{dom\,}g\) is the set of all subgradients:

Recall that \(Q\subset \,{{\mathbb {R}}}^n\) is a nonempty closed convex subset. Let \(\iota _Q(\cdot )\) be the indicator function of Q and \(N_Q(\cdot ): =\partial \iota _Q(\cdot )\) be its normal cone.

Introduce the Lagrangian for the model problem (1):

We say \((x^*,\lambda ^*)\in Q\times \,{{\mathbb {R}}}^m\) is a saddle point of \({\mathcal {L}}\) if

which also implies the optimality condition:

2.2 Bregman Divergence

Let \(\phi :Q\rightarrow \,{{\mathbb {R}}}\) be a smooth prox-function and define the Bregman divergence

Throughout, suppose \(\phi \) is 1-strongly convex:

Particularly, \(\phi (x) = \frac{1}{2}\left\Vert {x} \right\Vert ^2\) leads to \(D_\phi (x,y)=D_\phi (y,x)=1/2\left\Vert {x-y} \right\Vert ^2\), which boils down to the standard Euclidean setting. In addition, we have the following three-term identity; see [9, Lemma 3.2] or [17, Lemma 3.3].

Lemma 2.1

( [9, 17]) For any \(x,y,z\in Q\), it holds that

If \(\phi (x) = \frac{1}{2}\left\Vert {x} \right\Vert ^2\), then

2.3 Hölder Continuity

Let h be a differentiable function on Q. For \(0\le \nu \le 1\), define

If \(M_\nu (h)<\infty \), then \(\nabla h\) is Hölder continuous with exponent \(\nu \):

and this also implies that

When \(\nu = 1\), \(M_1(h)\) corresponds to the Lipschitz constant of \(\nabla h\), and we also use the conventional notation \(L_h = M_1(h)\).

According to [48, Lemma 2], the estimate (12) can be transferred into the usual gradient descent inequality, with “inexact computations”. Based on this, (accelerated) gradient methods can be used to minimize functions with Hölder continuous gradients [15, 54, 55].

Proposition 2.1

([48]) Assume \(M_\nu (h)<\infty \) and for \(\delta >0\), define

Then, for any \(M\ge M(\nu ,\delta )\), we have

3 Main Algorithm

Throughout, we make the following assumption on \(f = h+g\):

Assumption 3.1

The nonsmooth part g is properly closed convex on Q. The smooth part h satisfies \( \inf _{0\le \nu \le 1}M_\nu (h)<\infty \) and is \(\mu \)-convex on Q with \(\mu \ge 0\), namely,

Remark 3.1

For some cases, h might not be smooth (in \(C^1\) globally) enough but at least Lipschitz continuous. By Rademacher’s theorem [30, Theorem 3.1], Lipschitzian functions are differentiable almost everywhere. Thus, the assumption \(M_\nu (h)<\infty \) holds true with \(\nu =0\). Besides, in practical computations, we expect that the proximal calculation (cf.(14)) of the nonsmooth part g with respect to proper \(D_\phi (\cdot ,\cdot )\) is easy to compute (or has closed solution); see the matrix game problem in Sect. 6.1. \(\square \)

Our main algorithm, called universal accelerated primal–dual (UAPD) method, is summarized in Algorithm 1, where the subpart “\( \texttt{sub}\text {-}\texttt{UAPD}\)” in lines 3 and 6 has been given by Algorithm 2. Note that we do not require priorly the exponent \(\nu \) and the smoothness constant \(M_\nu (h)\) but perform a line search procedure (see lines 4–7).

3.1 Line Search

In Algorithm 1, the parameter \(\rho _{\!u}>1\) enlarges the approximate Lipschitz constant \(M_{k,i}\) (cf. line 5) to meet the following descent condition (cf. line 4)

For each \(k\in {\mathbb {N}}\), the line search part will end up with the smallest integer \(i_k\) satisfying (15) and call the subpart \(\texttt{sub}\)-\(\texttt{UAPD}\) \(i_k+1\) times in total. The extra parameter \(\rho _{_{\!d}}\ge 1\) reduces the output constant \(M_{k,i_k}\) and updates \(M_{k+1} = M_{k,i_k}/\rho _{_{\!d}}\) (cf. line 8).

Remark 3.2

We introduce the pair \((\rho _{\! u},\rho _{_{\!d}})\) in our method for generality. Practically, it is not easy to find the optimal choice. If \(\rho _{_{\!d}} = 1\), then \(M_k\) is nondecreasing. Otherwise, \(M_k\) can be nonmonotone. Consider two situations.

-

For the standard Lipschitz case \((\nu =1)\), as analyzed in [47, Section 3], if \(M_0\le L_h\), then the choice \(\rho _{_{\!d}}\ge \rho _{\! u}\) promises that \(M_k\le L_h\) for all \(k\ge 0\).

-

For the Hölder continuous case \((\nu <1)\), we have \(M_k\rightarrow \infty \) as \(k\rightarrow \infty \) (since \(M(\nu ,\delta )\rightarrow \infty \) as \(\delta \rightarrow 0\)). Taking \(\rho _{_{\!d}}>1\) may reduce \(M_k\) (locally for some k) but will increase the burden on the line search procedure. \(\square \)

Below, let us show that \(i_k\) is finite for \(k\in {\mathbb {N}}\). Indeed, we see \(M_{k,i} = \rho _{\! u}^{i}M_{k,0}\) increases as i does, and the step size (cf. line 1 of Algorithm 2)

has to be decreasing. Thus the tolerance (cf. line 2 of Algorithm 2)

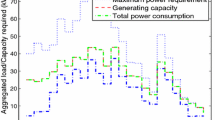

is increasing and by (13), \(M(\nu ,\delta _{k,i})\) is decreasing. This together with Proposition 2.1 and Assumption 3.1 concludes that

where \( \lceil s^*\rceil \) denotes the ceiling function (the minimal integer that is no less than \(s^*\)), and \(s^*\in [0,\infty )\) solves the equation \(M_{k,s^*} = M(\nu ,\delta _{k,s^*})\); see Fig. 1. In particular, we have

This eventually leads to

Remark 3.3

In the line search part, our Algorithm 1 adopts dynamically decreasing tolerance (17). However, the methods in [48] and [68, Algorithm 2] chose \(\delta _k=\epsilon /k\), where \(\epsilon \) is the desired accuracy. Hence, by Proposition 2.1, the approximate smoothness constant \(M_k\) of our method is smaller than the other two methods, especially for the Hölderian case. This will be verified by numerical experiments in Sect. 6. \(\square \)

Below, we give an upper bound of \(M_k\) and the total number of line search steps. By Theorem 3.1, \(\beta _k\) corresponds to the convergence rate of Algorithm 1 and admits explicit decay estimate (see Lemma 3.3). If the desired accuracy \(\beta _{k+1}={\mathcal {O}}(\epsilon )\) is given, then the term \(\left|{\log _{\rho _{\!u}}\beta _{k+1}} \right|\) in (19) can be replaced by \(\left|{\log _{\rho _{\!u}}\epsilon } \right|\).

Lemma 3.1

For any \(k\in {\mathbb {N}}\), we have

and consequently, it holds that

Proof

See Sect. Appendix A. \(\square \)

3.2 Time Discretization Interpretation

Below, we provide a time discretization interpretation of Algorithm 1. Given the k-th iterations \((x_k,v_k,\lambda _k)\) and the parameters \((\gamma _k,\beta _k,M_{k})\), the line search procedure returns \((y_k,x_{k+1},v_{k+1},\lambda _{k+1})\) that satisfy

where \({\mathcal {G}}(y_k,v_{k+1},\lambda _k):=\nabla h(y_k)+\partial g(v_{k+1})+N_Q(v_{k+1})+A^\top \widetilde{\lambda }_k\) with \(\widetilde{\lambda }_k= \lambda _k+\alpha _k/\beta _k(Av_k-b)\), and the step size \(\alpha _k\) solves (cf.(16))

where we used the relation \(M_{k,i_k} = \rho _{_{\!d}} M_{k+1}\). Besides, \(y_k\) and \(x_{k+1}\) fulfill (cf. (15))

and the parameters \((\gamma _{k+1},\beta _{k+1})\) are governed by (cf. line 9 of Algorithm 1)

with \(\beta _0 = 1\) and \(\gamma _0>0\). For clarity, in Sect. Appendix B, we give a detailed derivation of the reformulation (20) from Algorithms 1 and 2.

As one can see, \(y_k\) in (20a) is an intermediate which provides a “prediction”, and then the “correction” step (20c) is used to update \(x_{k+1}\). From (20a), (20b), and (20c), it is not hard to find that \(y_k,\,v_{k+1},\,x_{k+1}\in Q\), as long as \(x_k,v_k\in Q\). Therefore, with \(x_0,v_0\in Q\), it holds that \(\{x_k,y_k,v_k\}_{k\in {\mathbb {N}}}\subset Q\).

Furthermore, we mention that the reformulation (20) itself admits an implicit-explicit time discretization for the following primal–dual dynamics:

where \(\gamma \) and \(\beta \) are governed by continuous analogues to (23):

We call (24) the Accelerated Bregman Primal–Dual (ABPD) flow. For \(\phi (x) = 1/2\left\Vert {x} \right\Vert ^2\), it amounts to the accelerated primal–dual flow in [39].

3.3 A Universal Estimate

Let \(\{(x_k,v_k,\lambda _k,\gamma _k,\beta _k)\}_{k\in {\mathbb {N}}}\) be the sequence generated from Algorithm 1. We introduce the discrete Lyapunov function

A one-step estimate is presented below.

Lemma 3.2

Under Assumption 3.1, we have

Proof

See Sect. 4. \(\square \)

Using this lemma, we obtain the following theorem, which says the final rate is given by the sharp decay estimate of the sequence \(\{\beta _k\}_{k\in {\mathbb {N}}}\); see Lemma 3.3.

Theorem 3.1

Under Assumption 3.1, we have \(\{x_k,v_k\}_{k\in {\mathbb {N}}}\subset Q\) and

for all \(k\in {\mathbb {N}}\), where \({\mathcal {R}}_{k}:={\mathcal {E}}_0+\ln (k+1),\,{\mathcal {T}}_{k}:=\left\Vert {Ax_0-b} \right\Vert +2\sqrt{2{\mathcal {R}}_{k}}\) and \({\mathcal {W}}_{k}:={\mathcal {R}}_{k}+\left\Vert {\lambda ^*} \right\Vert {\mathcal {T}}_{k}\). Moreover, we have

where \(\gamma _{\min }:= \min \{\gamma _0,\mu \}\ge 0\).

Proof

From (23) and the contraction estimate (27) follows immediately that

which further implies

which proves (30). In view of (23), it holds that \(\gamma _{k+1} = (\gamma _k + \mu \alpha _k)/(1+\alpha _k)\ge \gamma _{\min }\). This together with (32) gives

It is clear that \({\mathcal {L}}(\cdot ,\lambda )\) is convex and by (7,30,3.1),

Hence, combining the above two estimates with (8) leads to (31).

Following [39, Theorem 3.1], we can establish (28,29). For the sake of completeness, we provide the details as below. Thanks to (20c) and (20d), we have

which yields that

By (32), we obtain \(\left\Vert {\lambda _k-\lambda ^*} \right\Vert ^2\le 2{\mathcal {R}}_k\) and

In view of \({\mathcal {R}}_k={\mathcal {E}}_0+\ln (k+1)\) and \(\beta _0=1\), we find that \(\left\Vert {\lambda _0-\lambda ^*} \right\Vert ^2\le 2{\mathcal {R}}_k\). Plugging this into the above estimate gives (28). Observing (30), it follows that

which promises

Consequently, this proves (29) and concludes the proof of this theorem. \(\square \)

Remark 3.4

Note that the choice (17) can be replaced with

Then the quantity \({\mathcal {R}}_{k} ={\mathcal {E}}_0+\ln (k+1)\) in Theorem 3.1 becomes \( {\mathcal {R}}_{k}=\mathcal E_0+\delta \ln (k+1)\). Taking \(\delta = 1/\ln (K+1)\) cancels the logarithm factor, where \(K\in {\mathbb {N}}\) is the maximal number of iterations chosen in advance. \(\square \)

It remains to investigate the decay rate of \(\{\beta _k\}_{k\in {\mathbb {N}}}\). From (21,23), we obtain

A careful investigation into this difference equation gives the desired result, which involves the following quantity

Lemma 3.3

Assume that \(\max \{\gamma _0,\mu \}\le \left\Vert {A} \right\Vert ^2\), then

and moreover, we have

where \(\gamma _{\min } = \min \{\gamma _0,\mu \}\ge 0\).

Proof

Since \(M(\nu ,\delta _{k+1}) = \delta _{k+1}^{\frac{\nu -1}{\nu +1}}[M_\nu (h)]^{\frac{2}{\nu +1}}\) and \(\delta _{k+1} = \beta _{k+1}/(k+1)\le 1\), by Lemma 3.1, we have

Plugging this into (33) gives

From this we obtain (35,36). Missing proofs are provided in Sect. 5. \(\square \)

Remark 3.5

Both two estimates (35,36) hold for \(\mu \ge 0\). In addition, for the limiting case \(\nu \rightarrow 1-\), we have

which matches the case \(\nu = 1\). \(\square \)

By the universal mixed-type estimate in Lemma 3.3, our Algorithm 1 achieves the lower complexity bound for both the affinely constrained case (i.e., \(A \ne 0_{m\times n}\)) and the unconstrained case \(\varOmega =\,{{\mathbb {R}}}^n\) (i.e., \(A = 0_{m\times n}\) and \(b = 0_{m}\)), with Hölderian smoothness exponent \(\nu \in [0,1]\). Detailed comparisons with existing results are summarized in order.

Remark 3.6

Consider the unconstrained case \(\varOmega =\,{{\mathbb {R}}}^n\) (i.e.,\(A = 0_{m\times n}\) and \(b = 0_{m}\)).

-

The Lipschitzian case \(\nu = 1\): From (29), it follows that (neglecting the logarithm factor \(\ln (k+1)\))

$$\begin{aligned} f(x_k) - f^* \le \beta _k{\mathcal {E}}_0, \end{aligned}$$where by Lemma 3.3, we have (taking \(\gamma _0>\mu \))

$$\begin{aligned} \beta _k\le \min \left\{ \frac{2\cdot 16^2\varDelta }{\gamma _0k^2},\,\exp \left( -\frac{k}{8}\sqrt{\frac{\mu }{\varDelta }}\right) \right\} . \end{aligned}$$Hence, to achieve the accuracy \(f(x_k)-f^*\le \epsilon \), the iteration complexity is no more than (recalling (34) and assuming \(\varDelta \sim L_h\))

$$\begin{aligned} \mathcal O\left( \min \left\{ \sqrt{\frac{L_h}{\epsilon }},\, \sqrt{\frac{L_h}{\mu }}\cdot \left|{\ln \epsilon } \right|\right\} \right) . \end{aligned}$$This is the well-known lower bound (cf. [45, 49]) of first-order methods for smooth convex functions with Lipschitzian gradients; see [10, 11, 38, 42, 47].

-

The Hölderian case \(0\le \nu < 1\): Similarly with the above analysis, the iteration complexity for \(f(x_k)-f^*\le \epsilon \) is bounded by

$$\begin{aligned} {\mathcal {O}}\left( \min \left\{ \left( \frac{M_\nu (h)}{\epsilon }\right) ^\frac{2}{1+3\nu },\quad \left( \frac{M_\nu (h)}{\mu }\right) ^{\frac{2}{1+3\nu }}\cdot \left( \frac{\mu }{\epsilon }\right) ^{\frac{1-\nu }{1+3\nu }}\right\} \right) . \end{aligned}$$(38)This matches the lower bound in [43, 44]. The convex case \(\mu = 0\) has been obtained by the methods in [32, 43, 48], and the restarted schemes in [32, 52] attained the complexity bound for \(\mu >0\). Besides, Guminov et al. [24] obtained (38) for nonconvex problems, with an extra one-dimensional line search step. \(\square \)

Remark 3.7

Then, let us focus on the affine constraint case (i.e., \(A \ne 0_{m\times n}\)).

-

The Lipschitzian case \(\nu = 1\): By (28,29), to achieve \(\left|{f(x_k)-f^*} \right|\le \epsilon \) and \(\left\Vert {Ax_k-b} \right\Vert \le \epsilon \), the iteration complexity (neglecting the logarithm factor \(\ln (k+1)\)) is

$$\begin{aligned} {\mathcal {O}}\left( \min \left\{ \frac{\left\Vert {A} \right\Vert }{\epsilon }+\sqrt{\frac{L_h}{\epsilon }},\quad \frac{\left\Vert {A} \right\Vert }{\sqrt{\mu \epsilon }}+\sqrt{\frac{L_h}{\mu }}\cdot \left|{\ln \epsilon } \right| \right\} \right) . \end{aligned}$$(39)This coincides with the lower complexity bound in [51]. The methods in [12, 46, 66] achieved the bound for convex case \(\mu =0\), and the strongly convex case \(\mu >0\) can be found in [66].

-

The Hölderian case \(0\le \nu < 1\):

$$\begin{aligned} {\mathcal {O}}\left( \min \left\{ \frac{\left\Vert {A} \right\Vert }{\epsilon }+\left( \frac{M_\nu (h)}{\epsilon }\right) ^\frac{2}{1+3\nu },\quad \frac{\left\Vert {A} \right\Vert }{\sqrt{\mu \epsilon }}+ \left( \frac{M_\nu (h)}{\mu }\right) ^{\frac{2}{1+3\nu }}\cdot \left( \frac{\mu }{\epsilon }\right) ^{\frac{1-\nu }{1+3\nu }} \right\} \right) . \end{aligned}$$Similarly with (39), this universal mixed-type estimate has optimal dependence on \(\left\Vert {A} \right\Vert \) (corresponding to the affine constraint), and the remainder agrees with (38), which is optimal with respect to \(\mu \) and \(M_\nu (h)\) (related to the objective). \(\square \)

4 Proof of Lemma 3.2

Start from the difference \( {\mathcal {E}}_{k+1}-{\mathcal {E}}_{k} = {\mathbb {I}}_1+{\mathbb {I}}_2+{\mathbb {I}}_3\), where

Notice that \(x_k,\,x_{k+1}\in Q\) and the first term is easy to handle:

We give the estimate of \({\mathbb {I}}_2\) in Sect. 4.1 and finish the proof of (27) in Sect. 4.2.

4.1 Estimate of \({\mathbb {I}}_2\)

Invoking the three-term identity (9) and the difference equation of \(\{\gamma _k\}_{k\in {\mathbb {N}}}\) in (23), we split the second term \({\mathbb {I}}_2\) as follows

Let us prove

which leads to the desired estimate of \({\mathbb {I}}_2\):

To do this, define \(\zeta _{k+1}\) by that

Observing (20b), it follows that \(\zeta _{k+1}\in \partial g(v_{k+1})+N_Q(v_{k+1})\) and

Thanks to (9), we have the decomposition

and invoking (20a) leads to

Since \(x_k,\,y_k\in Q\), by Assumption 3.1 we obtain

Hence, combining the above estimates with (44) proves (42).

4.2 Estimate of \({\mathbb {I}}_3\)

Similarly with (41), by (9), (20d) and (23), the third term \({\mathbb {I}}_3\) is rearranged by that

To match the cross term \( -\alpha _k \big \langle \widetilde{\lambda }_k, Av_{k+1} -b\big \rangle \) in the estimate of \({\mathbb {I}}_2\) (cf.(43)), we rewrite the last term as follows:

In view of (10) and (20d), we get

which gives

4.3 Proof of (27)

Combining (43) and the estimate of \({\mathbb {I}}_3\), we obtain

Inserting the identity (40) into the above inequality and observing that

and

we arrive at

The first two lines equal to \( -\alpha _k{\mathcal {E}}_{k+1}\) and a careful collection of the rest terms gives

From (20c), we see \(x_{k+1}\) is a convex combination of \(x_k\) and \(v_{k+1}\), which implies

Thanks to (22) and the relation \(\alpha _k(v_{k+1}-v_k)=(1+\alpha _k) (x_{k+1}-y_k) \) (cf.(20a) and (20c)), we obtain the estimate

Consequently, plugging these two estimates into (45) and using (8) leads to

Recall that \(\widetilde{\lambda }_k= \lambda _k+\alpha _k/\beta _k(Av_k-b)\), which together with (20d) gives \(\lambda _{k+1}- \widetilde{\lambda }_k=\alpha _k/\beta _k A(v_{k+1}-v_k)\) and

Since \(\beta _{k+1}\le \beta _{k}\) (cf.(23)), the desired estimate (27) follows immediately from (21). This finishes the proof of Lemma 3.2.

5 Proof of Lemma 3.3

The key to complete the proof of Lemma 3.3 is the difference inequality (37). In Sect. 5.1, we shall introduce an auxiliary differential inequality (cf.(46)) that can be viewed as a continuous analogue to (37). Later in Sects. 5.2 and 5.3, we finish the proofs of (35),(36) by using the asymptotic estimate of (46).

5.1 A Differential Inequality

In the sequel, we need some functional spaces in one dimension; see [14, Definitions 1.87 and 2.1]. Denote by \(C^1(I)\) the space of (real-valued) continuous functions on the interval \(I\subset \,{{\mathbb {R}}}\) with continuous derivatives. Let \(L^\infty (I)\) be the space of essentially bounded measurable functions, which means any \(\sigma \in L^\infty (I)\) is bounded almost everywhere. The space \(L^1(I)\) consists of measurable functions that are absolutely summable (integrable). As usual, we denote by \(W^{1,\infty }(I)\) the set of all real-valued functions which, together with their generalized derivatives, belong to \(L^\infty (I)\).

Let \(\eta ,\,R\ge 0\) and \(\theta >1\) be constants such that \(\eta \le \theta -1\). Suppose \(y\in W^{1,\infty }(0,\infty )\) is positive and satisfies the differential inequality

where \(\sigma \in L^1(0,\infty )\) is nonnegative, and \(\varphi \in C^1[0,\infty )\) is positive and nondecreasing. The decay rate of y(t) is given below.

Lemma 5.1

Assume \(y\in W^{1,\infty }(0,\infty )\) is positive and satisfies (46). Then, for all \(t>0\), we have

where \(\varSigma (t):=\int _{0}^{t}\sigma (s)\,\textrm{d}s\).

Proof

Write (46) as follows

Shifting the denominator from the right to the left and using the trivial estimate

we obtain that

To the end, we shall discuss in two cases: \(\eta =\theta -1\) and \(\eta <\theta -1\). Detailed proofs can be found in Sect. Appendix C. \(\square \)

5.2 Proof of (35)

By (23), we have

Since \(\gamma _{0}=\gamma _{0}\beta _0\), it follows that \(\gamma _k \ge \gamma _0\beta _{k}\) and (37) becomes

where \(\delta _{k+1} = \beta _{k+1}/(k+1)\).

Define a piecewise continuous linear interpolation

Clearly, \(y\in W^{1,\infty }(0,\infty )\) is positive and \(0<y(t)\le y(0) = 1\). In particular, we have \(\beta _k = y(k)\) for all \(k\in {\mathbb {N}}\), and the decay estimate of \(\beta _k\) is transferred into the asymptotic behavior of y(t), which satisfies (the proof is given below)

where \(\varphi (t):=4\varDelta (t+1)^{\frac{1-\nu }{1+\nu }}\). Thus, utilizing Lemma 5.1 gives

which establishes (35).

Below, let us verify (50). Since \(\gamma _k\le \max \{\gamma _0,\mu \}\le \left\Vert {A} \right\Vert ^2\), from (21) we find that \(\alpha _k\le \sqrt{\gamma _k\beta _k}/\left\Vert {A} \right\Vert \le 1\). For any \(t\in (k,k+1)\), it is clear that

which implies

Since \(y'(t) = \beta _{k+1}-\beta _k\), plugging the above estimate into (48) proves (50).

5.3 Proof of (36)

Again, by (23), we have \(\gamma _{k}\ge \gamma _{\min } = \min \{\gamma _0,\mu \}\), and (37) becomes

Recall the interpolation y(t) defined by (49). Similarly with (50), we claim that

and invoking Lemma 5.1 again gives

6 Numerical Examples

In this part, we provide several experiments to validate the performance of our Algorithm 1 (denoted by UAPD). It is compared with Nesterov’s FGM [48] and the AccUniPDGrad method [68], for solving unconstrained and affinely constrained problems.

Both UAPD and FGM involve the proximal mapping of the nonsmooth part g, and FGM performs one more proximal calculation for updating \(v_{k+1}\). In line search part, FGM and AccUniPDGrad use the tolerance \(\delta _k = \epsilon \tau _k\) with \(\tau _k = \mathcal O(1/k)\), where \(\epsilon \) is the desired accuracy. Clearly, this is smaller than ours \(\delta _k = \beta _k/k\). As discussed in Remark 3.3, this will lead to over-estimate issue, especially for Hölderian case (cf. Sect. 6.1) and smooth problems with large Lipschitz constants (cf. Sect. 6.2).

6.1 Matrix Game

The problem reads as

where \(P =(p_1,p_2,\cdots ,p_m)\in \,{{\mathbb {R}}}^{n\times m}\) is the given payoff matrix and \(\varDelta _{\times }\subset \,{{\mathbb {R}}}^\times \) is the simplex with \(\times =m\) or n. By von Neumann’s minimax theorem [1, Corollary 15.30], we can change the min-max order of (51) and obtain

where \(P^\top =(q_1,q_2,\cdots ,q_n)\) with \(q_i\in \,{{\mathbb {R}}}^m\). Moreover, there is no duality gap, i.e., \(\varphi ^* = \psi ^*\). According to [1, Proposition 5.12], we claim that \(\varphi (x^\#) = \psi (y^\#)\) for some \((x^\#,y^\#)\in \varDelta _n\times \varDelta _m\) if and only if \(\varphi (x^\#) = \varphi ^*\) and \(\psi (y^\#) = \psi ^*\).

As we do not know the optimal value, consider the unconstrained problem

Clearly, the minimal value is zero:

Moreover, \((x^\#,y^\#)\) is an optimal solution to (53) if and only if \(x^\#\) and \(y^\#\) are optimal solutions to (51,52), respectively.

Since \(\psi \) is concave, f is convex but nonsmooth (Lipschitz continuous). In view of Remark 3.1, f satisfies Assumption 3.1 without the simple part g. In addition, to work with the simplex constraint, a proper prox-function is the entropy \(\phi (x) = \left\langle {x,\ln x} \right\rangle \), which gives closed solution of the proximal calculation (14).

Numerical results of FGM and UAPD for solving (53) with \(m = 100,\,n = 400\). The desired accuracy of FGM is \(\epsilon = 1e\)-5

Numerical results are displayed in Fig. 2. We record (i) the decay behavior of the objective residual \(\left|{f(x_k,y_k)-f^*} \right|\) with respect to both iteration number k and running time t (in seconds), (ii) the total number \(\#i_k\) of the line search step \(i_k\) and its average \(\bar{i}_k\), and (iii) the approximate Lipschitz constant \(M_k\). The pay off matrix P is generated from the normal distribution. For FGM, the priori accuracy is \(\epsilon = 1e\)-5. For our method, the line search parameters are \(\rho _{\!u} = 2\) and \(\rho _{_{\!d}} = 1\).

From Fig. 2, our UAPD outperforms FGM, with faster convergence, less number of line search steps and smaller Lipschitz constants. Within the same iteration number \(k = 1e5\), UAPD achieves ten times smaller error (1e-2 v.s. 1e-1) but takes only about half less time (20 s v.s. 40 s). It can be seen that, FGM has much more line search steps and the average is 1, which means it performs at least one step of line search in each iteration. Since \(\rho _{_{\!d}}=1\), according to Remark 3.2, the Lipschitz constant \(M_k\) of our UAPD is nondecreasing, which agrees with the numerical illustration. As mentioned in Remark 3.3, FGM suffers from over-estimated Lipschitz parameters with dramatically growth behavior since it adopts smaller tolerance \(\epsilon /k\) (comparable to our dynamically decreasing choice \(\beta _k/k\)). Besides, in step 3 of FGM, it updates the Lipschitz constant by \(L_{k+1} = L_{k,i_k}/2=2^{i_k}L_k/2\) (here L agrees with our notation M), which corresponds to choosing \(\rho _{_{\!d}}=2\) in our method. Hence, the Lipschitz constant sequence of FGM is nonmonotone and changes with high oscillation. This verifies the claim in Remark 3.2 that large \(\rho _{_{\!d}}>1\) reduces \(M_k\) locally but increases the burden on the line search procedure.

Numerical performances of UAPD on the problem (53) with different prox-functions

In Fig. 3, we check also the difference between the Euclidean distance \(\phi (x) = 1/2\left\Vert {x} \right\Vert ^2\) and the entropy \(\phi (x) = \left\langle {x,\ln x} \right\rangle \). It is observed that these two cases are very similar in line search part but the latter leads to better convergence rate.

Numerical performances of UAPD on the problem (53) with \(\rho _{\!u} = 2\) and different \(\rho _{_{\!d}}\)

Besides, we report the performance of our UAPD with different \(\rho _{_{\!d}}\). From Fig. 4, we see little improvement on the convergence rate with respect to the iteration k, and large \(\rho _{_{\!d}}\) does not win smaller choices because it runs with more than triple time (120.12 s v.s. 39.62 s) but has not reduced the residual by third (6.07e-3 v.s. 4.37e-3). Moreover, the magnitude of \(M_k\) does not differ too much but large \(\rho _{_{\!d}}\) call more line search steps, which increases the computational cost. In conclusion, for matrix game problem, \(\rho _{_{\!d}} = 1\) seems a doable choice.

6.2 Regularized Matrix Game Problem

The objective of (51) admits an approximation (cf. [46])

where \(\sigma >0\) denotes the smoothing parameter. This regularized objective is smoother than the original one. According to [46, Eq.(4.8)], we choose \(\sigma = \epsilon /(2\ln m)\), and the Lipschitz constant of \(\nabla \varphi _\sigma \) is \(L_\sigma =\max _{i,j}|P_{i,j}|^2/(4\sigma )\). To avoid the overflow issue, we adopt the shifting technique [3].

We then apply UAPD and FGM (with \(\epsilon = 1e\)-6) to the smooth objective (54) and report the numerical outputs in Fig. 5. The optimal value \(\varphi _\sigma ^* \) is obtained by running UAPD with enough iterations. Similarly as before, our UAPD is superior to FGM in convergence, line search cost and approximate Lipschitz constant. Also, we plot the results of the original matrix game problem (see the top row in Fig. 5) and find that with smoothing technique both two methods perform better than before.

Numerical performances of FGM and UAPD on minimizing the regularized objective (54) with \(m = 100,\,n = 400\). The desired accuracy of FGM is \(\epsilon =1e\)-6

6.3 Continuous Steiner Problem

Let us consider one more unconstrained problem

where \(a_j\in \,{{\mathbb {R}}}^n\) denotes the given location. Note that the objective is actually quite smooth far away from each \(a_j\). We generate \(a_j\) from the uniform distribution and run UAPD with enough times to obtain an approximated optimal value \(f^*\). Numerical results in Fig. 6 show that both FGM (with \(\epsilon = 1e\)-8) and UAPD work well and possess similar convergence behaviors. Moreover, as \(\nabla f\) is almost Lipschitz continuous and the magnitude of the Lipschitz constant is not large, the over-estimated issue of FGM is negligible, and the approximated constant \(M_k\) is the same as that of our UAPD.

Numerical results of FGM and UAPD for solving (55) with \(m = 800,\,n = 400\). The desired accuracy of FGM is \(\epsilon = 1e\)-8

6.4 Basis Pursuit Problem

In the last example, we look at the basis pursuit problem

where \(A\in \,{{\mathbb {R}}}^{m\times n}\) and \(b\in \,{{\mathbb {R}}}^m\). To be compatible with the problem setting of AccUniPDGrad [68], consider an equivalent formulation

The dual problem reads as

Note that existing accelerated methods in [29, 39, 65] can be applied to solving (56) with theoretical rate \(\mathcal O(1/k)\). But we only focus on the comparison between UAPD and AccUniPDGrad, as black-box type methods with line search procedure. We mention that AccUniPDGrad also uses smaller tolerance \(\epsilon /k\) as that in FGM, where \(\epsilon >0\) denotes the desired accuracy, and it takes \(M_{k+1} = M_{k,i_k}=2^{i_k}M_k\), which coincides with our choice \(\rho _{_{\!d}}=1\).

To obtain a reasonable approximate minimal value \(f^*\), we run the accelerated primal–dual method proposed in [39] with enough iterations. Numerical results are shown in Fig. 7, which indicate that (i) our UAPD has smaller objective residual and feasibility violation, (ii) the line search steps are very close and the Lipschitz constant sequences are nondecreasing (since \(\rho _{_{\!d}}=1\)), and (iii) AccUniPDGrad generates over-estimated \(M_k\) because of its small tolerance \(\epsilon /k\).

Numerical performances of AccUniPDGrad and UAPD on the basis pursuit problem (56) with \(m = 100,\,n = 400\). The desired accuracy for AccUniPDGrad is \(\epsilon = 1e\)-3

References

Bauschke, H., Combettes, P.: Convex Analysis and Monotone Operator Theory in Hilbert Spaces. CMS Books in Mathematics. Springer Science+Business Media, New York (2011)

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2(1), 183–202 (2009)

Blanchard, P., Higham, D.J., Higham, N.J.: Accurately computing the log-sum-exp and softmax functions. IMA J. Numer. Anal. 41(4), 2311–2330 (2021)

Boyd, S., Parikh, N., Chu, E., Peleato, B., Eckstein, J.: Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 3(1), 1–122 (2010)

Cai, J.-F., Osher, S., Shen, Z.: Linearized Bregman iterations for compressed sensing. Math. Comput. 78(267), 1515–1536 (2009)

Candès, E., Romberg, J., Tao, T.: Robust uncertainty principles: exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 52(2), 489–509 (2006)

Chambolle, A., Pock, T.: A first-order primal–dual algorithm for convex problems with applications to imaging. J. Math. Imaging Vis. 40(1), 120–145 (2011)

Chambolle, A., Pock, T.: An introduction to continuous optimization for imaging. Acta Numer. 25, 161–319 (2016)

Chen, G., Teboulle, M.: Convergence analysis of a proximal-like minimization algorithm using Bregman functions. SIAM J. Optim. 3(3), 538–543 (1993)

Chen, L., Luo, H.: First order optimization methods based on Hessian-driven Nesterov accelerated gradient flow. arXiv:1912.09276 (2019)

Chen, L., Luo, H.: A unified convergence analysis of first order convex optimization methods via strong Lyapunov functions. arXiv: 2108.00132 (2021)

Chen, Y., Lan, G., Ouyang, Y.: Optimal primal–dual methods for a class of saddle point problems. SIAM J. Optim. 24(4), 1779–1814 (2014)

Davis, D., Yin, W.: Convergence rate analysis of several splitting schemes. Splitting Methods in Communication, Imaging, Science, and Engineering, pages 115–163 (2016)

Demengel, F., Demengel, G., Erné, R.: Functional Spaces for the Theory of Elliptic Partial Differential Equations. Universitext. Springer, London (2012)

Devolder, O., Glineur, F., Nesterov, Y.: First-order methods of smooth convex optimization with inexact oracle. Math. Program. 146(1–2), 37–75 (2014)

Dvurechensky, P, Gasnikov, A., Kroshnin, A.: Computational optimal transport: complexity by accelerated gradient descent is better than by Sinkhorn’s algorithm. In: Proceedings of the 35 th International Conference on Machine Learning, volume 80, Stockholm, Sweden (2018). PMLR

Dvurechensky, P., Staudigl, M., Shtern, S.: First-order methods for convex optimization. arXiv:2101.00935 (2021)

Eckstein, J.: Splitting Methods for Monotone Operators with Applications to Parallel Optimization. PhD Thesis, Massachusetts Institute of Technology (1989)

Eckstein, J., Bertsekas, D.P.: On the Douglas–Rachford splitting method and the proximal point algorithm for maximal monotone operators. Math. Program. 55(1), 293–318 (1992)

Esser, E., Zhang, X., Chan, T.F.: A general framework for a class of first order primal-dual algorithms for convex optimization in imaging science. SIAM J. Imaging Sci. 3(4), 1015–1046 (2010)

Feijer, D., Paganini, F.: Stability of primal–dual gradient dynamics and applications to network optimization. Automatica 46(12), 1974–1981 (2010)

Goldstein, T., O’Donoghue, B., Setzer, S., Baraniuk, R.: Fast alternating direction optimization methods. SIAM J. Imaging Sci. 7(3), 1588–1623 (2014)

Guminov, S., Gasnikov, A., Anikin, A., Gornov, A.: A universal modification of the linear coupling method. Optim. Methods Softw. 34(3), 560–577 (2019)

Guminov, S.V., Nesterov, Y.E., Dvurechensky, P.E., Gasnikov, A.V.: Primal–dual accelerated gradient descent with line search for convex and nonconvex optimization problems. arXiv:1809.05895 (2018)

He, B., You, Y., Yuan, X.: On the convergence of primal-dual hybrid gradient algorithm. SIAM J. Imaging Sci. 7(4), 2526–2537 (2014)

He, B., Yuan, X.: On the acceleration of augmented Lagrangian method for linearly constrained optimization. https://optimization-online.org/2010/10/2760/ (2010)

He, X., Hu, R., Fang, Y.-P.: Fast primal–dual algorithm via dynamical system for a linearly constrained convex optimization problem. Automatica 146, 110547 (2022)

He, X., Hu, R., Fang, Y.-P.: Inertial accelerated primal–dual methods for linear equality constrained convex optimization problems. Numer. Algorithms 90(4), 1669–1690 (2022)

Huang, B., Ma, S., Goldfarb, D.: Accelerated linearized Bregman method. J. Sci. Comput. 54, 428–453 (2013)

Heinonen, J.: Lectures on Lipschitz analysis. Technical Report vol. 100, Rep. Univ. Jyväskylä Dept. Math. Stat., University of Jyväskylä (2005)

Jiang, F., Cai, X., Wu, Z., Han, D.: Approximate first-order primal-dual algorithms for saddle point problems. Math. Comput. 90(329), 1227–1262 (2021)

Kamzolov, D., Dvurechensky, P., Gasnikov, A.: Universal intermediate gradient method for convex problems with inexact oracle. arXiv:1712.06036 (2019)

Kang, M., Kang, M., Jung, M.: Inexact accelerated augmented Lagrangian methods. Comput. Optim. Appl. 62(2), 373–404 (2015)

Krichene, W., Bayen, A., Bartlett, P.: Accelerated mirror descent in continuous and discrete time. Adv. Neural Inf. Process. Syst. (NIPS) 28, 2845–2853 (2015)

Li, H., Fang, C., Lin, Z.: Convergence rates analysis of the quadratic penalty method and its applications to decentralized distributed optimization. arXiv:1711.10802 (2017)

Li, H., Lin, Z.: Accelerated alternating direction method of multipliers: an optimal \({O}(1/{K})\) nonergodic analysis. J. Sci. Comput. 79(2), 671–699 (2019)

Lin, T., Ho, N., Jordan, M.I.: On efficient optimal transport: An analysis of greedy and accelerated mirror descent algorithms. In International Conference on Machine Learning, pp. 3982–3991. PMLR (2019)

Luo, H.: Accelerated differential inclusion for convex optimization. Optimization (2021). https://doi.org/10.1080/02331934.2021.2002327

Luo, H.: Accelerated primal-dual methods for linearly constrained convex optimization problems. arXiv:2109.12604 (2021)

Luo, H., Zhang, Z.-H.: A unified differential equation solver approach for separable convex optimization: splitting, acceleration and nonergodic rate. arXiv:2109.13467 (2023)

Luo, H.: A primal–dual flow for affine constrained convex optimization. ESAIM: Control Optim. Calc. Var. 28, 33 (2022)

Luo, H., Chen, L.: From differential equation solvers to accelerated first-order methods for convex optimization. Math. Program. (2021). https://doi.org/10.1007/s10107-021-01713-3

Nbmirovskii, A.S., Nrsterov, Y.E.: Optimal methods of smooth convex minimization. USSR Comput. Math. Math. Phys. 25(2), 21–30 (1985)

Nemirovsky, A., Yudin, D.: Problem Complexity and Method Efficiency in Optimization. John Wiley & Sons, New York (1983)

Nesterov, Y.: Introductory Lectures on Convex Optimization. Applied Optimization, vol. 87. Springer, US, Boston, MA (2004)

Nesterov, Y.: Smooth minimization of non-smooth functions. Math. Program. 103(1), 127–152 (2005)

Nesterov, Y.: Gradient methods for minimizing composite functions. Math. Program. Ser. B 140(1), 125–161 (2013)

Nesterov, Y.: Universal gradient methods for convex optimization problems. Math. Program. 152, 381–404 (2015)

Nesterov, Y.: Lectures on Convex Optimization. Springer Optimization and Its Applications, vol. 137. Springer International Publishing, Cham (2018)

Ouyang, Y., Chen, Y., Lan, G., Pasiliao, E.: An accelerated linearized alternating direction method of multipliers. SIAM J. Imaging Sci. 8(1), 644–681 (2015)

Ouyang, Y., Xu, Y.: Lower complexity bounds of first-order methods for convex–concave bilinear Saddle-point problems. Math. Program. 185(1–2), 1–35 (2021)

Roulet, V., d’Aspremont, A.: Sharpness, restart, and acceleration. In: 31st Conference on Neural Information Processing Systems, Long Beach, CA, USA (2017)

Sabach, S., Teboulle, M.: Faster Lagrangian-based methods in convex optimization. SIAM J. Optim. 32(1), 204–227 (2022)

Stonyakin, F., Dvinskikh, D., Dvurechensky, P., Kroshnin, A., Kuznetsova, O., Agafonov, A., Gasnikov, A., Tyurin, A., Uribe, C.A., Pasechnyuk, D., Artamonov, S.: Gradient methods for problems with inexact model of the objective. arXiv:1902.09001 (2019)

Stonyakin, F., Gasnikov, A., Dvurechensky, P., Alkousa, M., Titov, A.: Generalized mirror prox for monotone variational inequalities: Universality and inexact oracle. arXiv:1806.05140 (2022)

Tao, M., Yuan, X.: Accelerated Uzawa methods for convex optimization. Math. Comput. 86(306), 1821–1845 (2016)

Tian, W., Yuan, X.: An alternating direction method of multipliers with a worst-case \({O}(1/n^2)\) convergence rate. Math. Comput. 88(318), 1685–1713 (2018)

Tran-Dinh, Q.: A unified convergence rate analysis of the accelerated smoothed gap reduction algorithm. Optim. Lett. (2021). https://doi.org/10.1007/s11590-021-01775-4

Tran-Dinh, Q., Fercoq, O., Cevher, V.: A smooth primal-dual optimization framework for nonsmooth composite convex minimization. SIAM J. Optim. 28(1), 96–134 (2018)

Tran-Dinh, Q., Zhu, Y.: Augmented Lagrangian-based decomposition methods with non-ergodic optimal rates. arXiv:1806.05280 (2018)

Tran-Dinh, Q., Zhu, Y.: Non-stationary first-order primal–dual algorithms with faster convergence rates. SIAM J. Optim. 30(4), 2866–2896 (2020)

Valkonen, T.: Inertial, corrected, primal–dual proximal splitting. SIAM J. Optim. 30(2), 1391–1420 (2020)

Wibisono, A., Wilson, A.C., Jordan, M.I.: A variational perspective on accelerated methods in optimization. Proc. Natl. Acad. Sci. 113(47), E7351–E7358 (2016)

Wilson, A., Recht, B., Jordan, M.: A Lyapunov analysis of momentum methods in optimization. arXiv:1611.02635 (2016)

Xu, Y.: Accelerated first-order primal-dual proximal methods for linearly constrained composite convex programming. SIAM J. Optim. 27(3), 1459–1484 (2017)

Xu, Y.: Iteration complexity of inexact augmented Lagrangian methods for constrained convex programming. Math. Program. 185(1–2), 199–244 (2021)

Yin, W., Osher, S., Goldfarb, D., Darbon, J.: Bregman iterative algorithms for \(\ell _1\)-minimization with applications to compressed sensing. SIAM J. Imaging Sci. 1(1), 143–168 (2008)

Yurtsever, A., Tran-Dinh, Q., Cevher, V.: A universal primal-dual convex optimization framework. arXiv: 1502.03123 (2015)

Zhao, Y., Liao, X., He, X., Li, C.: Accelerated primal-dual mirror dynamical approaches for constrained convex optimization. arXiv:2205.15983 (2022)

Acknowledgements

The author would like to thank the Editor and two anonymous referees, for their careful readings and valuable comments that improve significantly the early version of the paper.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Olivier Fercoq.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work was supported by the Foundation of Chongqing Normal University (Grant No. 202210000161) and the Science and Technology Research Program of Chongqing Municipal Education Commission (Grant No. KJZD-K202300505)

Appendices

Proof of Lemma 3.1

Let us first prove (18). Recall that \(i_k\) is the smallest nonnegative integer such that (cf. (15))

If \(i_k = 0\), then \(M_{k+1} = M_{k,0} /\rho _{_{\!d}}= M_{k}/\rho _{_{\!d}}\). Otherwise (i.e., \(i_k\ge 1\)), we claim that

If this is violated, then \(M_{k,i_{k}-1} = M_{k,i_k}/\rho _{\!u}>M(\nu ,\delta _{k,i_{k}-1})\). According to Proposition 2.1, this implies immediately that

which yields a contradiction and thus verifies (57). Additionally, by (16), we have \( \alpha _{k,i_{k}-1}\le \sqrt{\rho _{\! u}}\alpha _{k,i_{k}}\). Therefore, collecting (13,17,57) leads to

This means that for all \(k\in {\mathbb {N}}\), we have

Since \(\delta _{k+1} = \beta _{k+1}/{(k+1)}\) with \(\{\beta _k\}_{k\in {\mathbb {N}}}\) being decreasing (cf.(17)), it follows that \(\delta _{k+1}\le \delta _{\ell +1}\) and \(M(\nu ,\delta _{\ell +1})\le M(\nu ,\delta _{k+1})\) for all \(0\le \ell \le k\), which together with (59) indicates the estimate

This proves the desired result (18).

Then, let us verify (19). Observing that \(M_{k+1} = M_{k,i_k} /\rho _{_{\!d}}= \rho _{\! u}^{i_k}M_{k}/\rho _{_{\!d}}\), we have

Invoking the estimate of \(M_{k+1}\) gives

Since \(M(\nu ,\delta _{k+1}) = \delta _{k+1}^{\frac{\nu -1}{\nu +1}}[M_\nu (h)]^{\frac{2}{\nu +1}}\), we obtain (19) and complete the proof of Lemma 3.1.

Derivation of the Reformulation (20)

Observing line 10 of Algorithm 1, we obtain (20d). Given \((x_k,v_k,\lambda _k)\) and \((\gamma _k,\beta _k,M_{k})\), \((y_k,x_{k+1},v_{k+1})\) are nothing but the output of Algorithm 2 with the input \((k,S_k,M_{k,i_k})\), where \(S_k=\{x_k,v_k,\lambda _k,\beta _k,\gamma _k\}\). Hence, from lines 3 and 4, we obtain

which gives (20a) and (20c). Besides, we have

with \(\widetilde{\lambda }_k= \lambda _k+\alpha _k/\beta _k(Av_k-b)\). The optimality condition reads as

After rearranging, we get (20b).

Proof of Lemma 5.1

1.1 The Case \(\eta =\theta -1\)

The estimate (47) becomes

Since \(y(0) = 1\) and \(y'(t)\le 0\), it holds that \(0<y(t)\le 1\) for all \(t\ge 0\). As \(\varphi (t)\) is positive and nondecreasing, we obtain

Combining this with (60) gives

and integrating over (0, t) leads to

Define

Then one finds that

This also implies

where \(Y(t):= Y_1(t)+Y_2(t)\). For fixed \(t>0\), the function

is monotonously decreasing in terms of \(v\in (0,\infty )\). Collecting (61,63) yields that

This completes the proof of Lemma 5.1 with \(\eta =\theta -1\).

1.2 The Case \(\eta <\theta -1\)

The proof is in line with the previous case. With some elementary calculus computations, the estimate (61) now becomes

where \(G:(0,\infty )\times (0,\infty )\rightarrow \,{{\mathbb {R}}}\) is defined by

for all \(w,\,v>0\). In addition to \(Y_2(t)\) defined in (62), we need

Since \(G(w,\cdot )\) is monotonously decreasing and

we obtain

This concludes the proof of Lemma 5.1 with \(\eta <\theta -1\).

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Luo, H. A Universal Accelerated Primal–Dual Method for Convex Optimization Problems. J Optim Theory Appl 201, 280–312 (2024). https://doi.org/10.1007/s10957-024-02394-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10957-024-02394-6

Keywords

- Convex optimization

- Primal–dual method

- Mixed-type estimate

- Optimal complexity

- Bregman divergence

- Lyapunov function