Abstract

This paper studies a bilevel polynomial program involving box data uncertainties in both its linear constraint set and its lower-level optimization problem. We show that the robust global optimal value of the uncertain bilevel polynomial program is the limit of a sequence of values of Lasserre-type hierarchy of semidefinite linear programming relaxations. This is done by first transforming the uncertain bilevel polynomial program into a single-level non-convex polynomial program using a dual characterization of the solution of the lower-level program and then employing the powerful Putinar’s Positivstellensatz of semi-algebraic geometry. We provide a numerical example to show how the robust global optimal value of the uncertain bilevel polynomial program can be calculated by solving a semidefinite programming problem using the MATLAB toolbox YALMIP.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The bilevel optimization problems arise when two independent decision makers, ordered within a hierarchical structure, try to optimize their objectives over a jointly dependent set. They appear as hierarchical decision-making problems, such as risk management and economic planning problems, in engineering, governments, and industries [1,2,3,4,5]. The commonly used bilevel optimization techniques (see [3, 6, 7] and the references therein) assume precise information (i.e., accurate values for the input quantities or system parameters), despite the reality that such precise knowledge is rarely available in hierarchical decision-making problems. The data of these problems are often uncertain (i.e., they are not known exactly at the time of the decision) due to estimation errors, prediction errors, or lack of information. Consequently, the development of optimization methodologies, which are capable of generating robust optimal solutions that are immunized against data uncertainty, such as the deterministic robust optimization techniques, has become more important than ever in mathematics, commerce, and engineering [8,9,10,11,12].

The bilevel program is a class of NP-hard optimization problems even in the case, where all the functions are linear and are free of data uncertainty [13]. A general approach for studying bilevel optimization problems is to transform them into single-level optimization problems [2, 3, 6]. The resulting single-level optimization problems are generally non-convex constrained optimization problems. It is often difficult to find global optimal solutions of non-convex optimization problems. However, Putinar’s Positivstellensatz [14] together with Lasserre-type semidefinite relaxations allows us to characterize global optimal solutions and find the global optimal value of a non-convex optimization problem involving polynomials. The reader is referred to [15,16,17,18,19,20] for related recent work on single-level convex and non-convex polynomial optimization in the literature.

In this paper, we study a bilevel polynomial program that finds robust global optimal values under box data uncertainties. The main purpose of this paper is to show how the robust global optimal value of a bilevel polynomial program in the face of uncertain linear constraints can be calculated by solving a sequence of semidefinite linear programming relaxations. More concretely, under the coercivity of the objective polynomial, we prove that the values of Lasserre-type semidefinite relaxations converge to the robust global optimal value of the uncertain bilevel polynomial program.

This is done by first transforming the uncertain bilevel polynomial program into a single-level non-convex polynomial program using a dual characterization of the solution of the lower-level program and then employing Putinar’s Positivstellensatz [14]. The dual characterization is achieved by using a generalized Farkas lemma with a numerical certificate for semi-infinite linear inequality systems [21], whose dual statement can be verified by solving a semidefinite linear program. Related recent work on global bilevel polynomial optimization in the absence of data uncertainty can be found in [17, 22].

We would also like to point out that some other approaches to treating uncertain bilevel optimization problems are available in the literature. For instance, the authors in [23] used a stochastic approach to obtain robust solutions to hierarchical problems, where the lower-level program is described by equilibrium constraints and the uncertain data include random inputs as well as discretely distributed probability distributions. A fuzzy approach was employed in [24] for studying a linear bilevel optimization problem, in which the concept of the solution for both levels is understood in the sense of fuzzy. An approximation/perturbation approach was used in [25] for treating a two-level optimization problem by approximating it by a sequence of mathematical programs. However, to the best of our knowledge, a mathematical theory and the associated methods for uncertain bilevel optimization problems that employ the deterministic robust optimization approach [8,9,10, 12] do not appear to be available in the literature.

The outline of the paper is as follows. Section 2 presents preliminaries and auxiliary results, where we can find dual characterizations for robust solutions of the lower-level program and for robust feasible points of the uncertain bilevel polynomial problem. The main result is given in Sect. 3, where we prove the convergence of robust global optimal values via semidefinite linear programming relaxations for the uncertain bilevel polynomial problem. Section 4 provides conclusions and discusses further research perspectives.

2 Preliminaries and Auxiliary Results

Let us start this section by fixing some notation and definitions. The notation \(\mathbb {R}^n\) signifies the Euclidean space, whose norm is denoted by \(\Vert \cdot \Vert \) for each \(n\in \mathbb {N}:=\{1,2,\ldots \}\). The inner product in \(\mathbb {R}^n\) is defined by \(\langle x,y\rangle :=x^\top y\) for all \(x, y\in \mathbb {R}^n.\) As usual, \(\mathrm{cone\,}\varOmega :=\{\sum _{i=1}^k\alpha _ix_i\,:\, x_i\in \varOmega , \alpha _i\in \mathbb {R}_+, i=1,\ldots ,k, k\in \mathbb {N}\}\) stands for the convex conical hull of \(\varOmega \), where \(\mathbb {R}_+:=[0,+\infty [\subset \mathbb {R}.\) The notation \(\mathrm{conv\,}\varOmega \) denotes the convex hull of \(\varOmega \subset \mathbb {R}^n.\) A symmetric \((n\times n)\) matrix A is said to be positive semidefinite, denoted by \(A\succeq 0\), whenever \(x^\top Ax\ge 0\) for all \(x\in \mathbb {R}^n.\) A real-valued function \(f:\mathbb {R}^n\rightarrow \mathbb {R}\) is coercive on \(\mathbb {R}^n\) if \(\liminf \limits _{||x||\rightarrow \infty }{f(x)}=+\infty \). In particular, it is shown in [19, Lemma 3.1] that a convex polynomial f is coercive on \(\mathbb {R}^n\) if there exists \(\bar{x}\in \mathbb {R}^n\) such that the Hessian \(\nabla ^2f(\bar{x})\) is positive definite, i.e., \(x^\top \nabla ^2f(\bar{x}) x> 0\) for all \(x\in \mathbb {R}^n\setminus \{0\}.\) Some numerically checkable sufficient conditions for the coercivity of non-convex polynomials have also been given in [15].

Denote by \(\mathbb {R}[x]\) the ring of polynomials in x with real coefficients. One says that (cf. [20]) \(f\in \mathbb {R}[x]\) is sum-of-squares if there exist polynomials \(f_j\in \mathbb {R}[x], j=1,\ldots , r,\) such that \(f=\sum _{j=1}^rf_j^2.\) The set of all sum-of-squares polynomials in x is denoted by \(\Sigma ^2[x].\) Given polynomials \(\{g_1,\ldots ,g_r\}\subset \mathbb {R}[x],\) the notation \({\mathbf {M}}(g_1,\ldots ,g_r)\) stands for the set of polynomials generated by \(\{g_1,\ldots ,g_r\},\) i.e.,

If there exists \(h\in {\mathbf {M}}(g_1,\ldots ,g_r)\) such that the set \(\{x\in \mathbb {R}^n\,:\, h(x)\ge 0\}\) is compact, then \({\mathbf {M}}(g_1,\ldots ,g_r)\) is called to be archimedean (cf. [26]).

The following lemma of Putinar (cf. [14, 20]), which provides a positivity representation for a polynomial over a system of polynomial inequalities under the archimedean property, can be viewed as a polynomial analog of Farkas’s lemma.

Lemma 2.1

(Putinar’s Positivstellensatz [14]) Let \(f, g_j\in \mathbb {R}[x], j=1,\ldots , r.\) Suppose that \({\mathbf {M}}(g_1,\ldots ,g_r)\) is archimedean. If \(f(x)>0\) for all \(x\in \{y\in \mathbb {R}^n \,:\, g_j(y)\ge 0, j=1,\ldots ,r\}\), then \(f\in {\mathbf {M}}(g_1,\ldots ,g_r),\) i.e., there exist \(\sigma _j\in \Sigma ^2[x], j=0,1,\ldots ,r,\) such that \(f=\sigma _0+\sum _{j=1}^r\sigma _jg_j.\)

Consider an uncertain linear inequality system,

where \((\hat{a}_j, \hat{b}_j), j=1,\ldots , q\) are uncertain and they belong to the uncertainty sets \(\widehat{U}_j, j=1,\ldots , q.\) The uncertainty sets are given by

where \(a_j^i\in \mathbb {R}^n, b_j^i\in \mathbb {R}, i=0,1,\ldots ,s, j=1,\ldots ,q\) are fixed and \(U_j, j=1,\ldots ,q\) are spectrahedra (see, e.g., [27]) described by

with \(A^i_j, i=0,1,\ldots ,s, j=1,\ldots ,q\) symmetric \((p_j\times p_j)\) matrices.

Now, consider the affine mappings \(a_j:\mathbb {R}^s\rightarrow \mathbb {R}^n\) and \(b_j:\mathbb {R}^s\rightarrow \mathbb {R}, j=1,\ldots ,q\) given, respectively, by

with \(a_j^i\in \mathbb {R}^n, b_j^i\in \mathbb {R}, i=0,1,\ldots ,s, j=1,\ldots ,q\) fixed as above. Then, the robust counterpart of the uncertain system (2),

can be expressed equivalently as

The generalized non-homogeneous Farkas lemma, which provides a numerically tractable certificate for nonnegativity of an affine function over the uncertain linear inequality system (5), plays an important role in characterizing robust solutions of the lower-level optimization problem.

Lemma 2.2

(See [21, Theorem 2.1]) Let \(C:=\mathrm{cone}\big \{\big (a_j(u_j),b_j(u_j)\big ) \,:\, u_j\in U_j, j=1,\ldots ,q\big \}\) be closed, where \(U_j, j=1,\ldots ,p,\) in (3) are assumed to be bounded, and let \((\wp ,r)\in \mathbb {R}^n\times \mathbb {R}\). Assume that the robust linear inequality system (5) has a solution, i.e.,

Then, the following statements are equivalent:

-

(i)

\(x\in \mathbb {R}^n,\; a_j(u_j)^\top x\le b_j(u_j),\; \forall u_j\in U_j,\; j=1,\ldots ,q\Longrightarrow \wp ^\top x -r \ge 0;\)

-

(ii)

\(\exists \lambda _j^0\ge 0, \lambda _j^i\in \mathbb {R}, j=1,\ldots ,q, i=1,\ldots ,s, \text { such that}\)

$$\begin{aligned}&\wp +\sum \limits _{j=1}^q\left( \lambda _j^0a^0_j+\sum ^{s}_{i=1}\lambda ^i_ja^i_j\right) =0,\; -r-\sum \limits _{j=1}^q\left( \lambda _j^0b^0_j+\sum ^{s}_{i=1}\lambda ^i_jb^i_j\right) \ge 0\\&\text {and } \lambda _j^0A^0_j+\sum _{i=1}^s\lambda ^i_jA^i_j\succeq 0, j=1,\ldots ,q. \end{aligned}$$

Bilevel Polynomial Problems. Let \(f:\mathbb {R}^m\times \mathbb {R}^n\rightarrow \mathbb {R}\) be a real polynomial. We consider an uncertain bilevel polynomial optimization problem with linear constraints as

where \((\tilde{a}_i, \tilde{b}_i, \tilde{c}_i)\in \widetilde{U}_i, i=1,\ldots ,l\) and \((\hat{a}_j,\hat{b}_j)\in \widehat{U}_j, j=1,\ldots , q\) are uncertain and

denotes the optimal solution set of the uncertain lower-level optimization problem

In the above data, \(c_0\in \mathbb {R}^m, d_0\in \mathbb {R}^n, c_j \in \mathbb {R}^m, j=1,\ldots ,q,\) are fixed, the uncertainty sets \(\widetilde{U}_i, i=1,\ldots ,l,\) are boxes given by

with \(\underline{a}_i:=\left( \underline{a}_i^1,\ldots ,\underline{a}_i^m\right) , \overline{a}_i:=\left( \overline{a}_i^1,\ldots ,\overline{a}_i^m\right) \in \mathbb {R}^m, \underline{a}_i^k\le \overline{a}_i^k, k=1,\ldots ,m, \underline{b}_i:=\left( \underline{b}_i^1,\ldots ,\underline{b}_i^n\right) , \overline{b}_i:=\left( \overline{b}_i^1,\ldots ,\overline{b}_i^n\right) \in ~\mathbb {R}^n,\) \(\underline{b}_i^k\le \overline{b}_i^k, k=1,\ldots ,n,\) and \(\underline{c}_i, \overline{c}_i\in \mathbb {R}, \underline{c}_i\le \overline{c}_i\) for \(i=1,\ldots ,l,\) while the uncertainty sets \(\widehat{U}_j, j=1,\ldots , q,\) are given by

with \(U_j:=[-\gamma _1,\gamma _1]\times \cdots \times [-\gamma _s,\gamma _s], \gamma _i>0, i=1,\ldots , s\) and \(a_j^i\in \mathbb {R}^n, b_j^i\in \mathbb {R}, i=0,1,\ldots ,s, j=1,\ldots ,q,\) fixed.

By considering the affine mappings \(a_j:\mathbb {R}^s\rightarrow \mathbb {R}^n, b_j:\mathbb {R}^s\rightarrow \mathbb {R}, j=1,\ldots ,q,\) given as in (4), the uncertain lower-level optimization problem (6) can be formulated equivalently as

where \(u_j\in U_j, j=1,\ldots ,q.\) In the formulation of (LP), the boxes \(U_j, j=1,\ldots , q,\) play the role of uncertainty sets.

Now, the robust counterpart of the problem (P) is defined by

where \(Y(x):=\mathrm{argmin}_{z\in \mathbb {R}^n}\big \{c_0^\top x+d_0^\top z \,:\, c_j^\top x +a_j(u_j)^\top z\le b_j(u_j),\; \forall u_j\in U_j,\; j=1,\ldots ,q\big \}.\) Note that in the robust counterpart (RP), the uncertain constraint inequalities of both the lower-level and upper-level problems are enforced for every possible value of the data within the uncertainty sets \(U_j, j=1,\ldots ,q,\) and \(\widetilde{U}_i,\; i=1,\ldots ,l.\)

Given \(x\in \mathbb {R}^m\), we first focus on the robust counterpart of the lower-level optimization problem (LP) given by

As usual, a point \(y\in \mathbb {R}^n\) is called to be a robust solution of the lower-level optimization problem (LP) (see, e.g., [8]) if it is an optimal solution of the problem (RLP), i.e., \(y\in Y(x).\)

The next lemma establishes a characterization for robust solutions of the lower-level optimization problem (LP). In what follows, for the sake of simplicity, we denote by \(V_s\) the boxes \(U_j, j=1,\ldots ,q\), and use \(\left\{ \check{u}_k:=\left( \check{u}^{1}_k,\ldots ,\check{u}^{s}_k\right) \in \mathbb {R}^s\mid k=1,\ldots , 2^s\right\} \) to indicate the set of extreme points of the box \(V_s\).

Lemma 2.3

(Tractable characterization for robust solutions of (LP)) Let \(x\in \mathbb {R}^m\). Then, \(y\in Y(x)\) if and only if

Proof

For each \( j\in \{1,\ldots , q\},\) put

where \(E_0\) is the \((s\times s)\) diagonal matrix with the diagonal entries, say \(\gamma _i>0, i=1,\ldots , s,\) and \(E_i\) is the \((s\times s)\) diagonal matrix with one in the (i, i)th entry and zeros elsewhere. Then, it follows that

which shows how the box \(V_s\) can be expressed in terms of spectrahedra in (3).

Since \(U_j, j=1,\ldots ,q,\) are boxes, the cone \(C(x):=\mathrm{cone}\big \{\big (a_j(u_j),b_j(u_j)-c_j^\top x\big ) \,:\, u_j\in U_j, j=1,\ldots ,q\big \}\) is closed (see, e.g., [21, Proposition 2.2(i)]). Invoking Lemma 2.2, we can verify that \(y\in Y(x)\) if and only if the following conditions hold:

It is easy to see that (8) amounts to the following one

which in turn is equivalent to the assertion

where \(\big \{\check{u}_k:=\left( \check{u}^{1}_k,\ldots ,\check{u}^{s}_k\right) \in \mathbb {R}^s \,:\, k=1,\ldots , 2^s\big \}\) stands for the set of extreme points of the box \(V_s\) as above. Therefore, (8) becomes

In addition, it can be checked that, under our setting, (9) is equivalent to

So, we come to the assertion that \(y\in Y(x)\) if and only if

To complete the proof, it remains to show that (II) and (I) are equivalent to each other. To this end, suppose that (II) holds. By setting \(\mu _0:=\frac{1}{\sqrt{1+\sum \nolimits _{j=1}^q\left( \lambda _j^0\right) ^2+\sum \nolimits _{j=1}^q\sum \nolimits _{i=1}^s\left( \lambda _j^i\right) ^2}}\) and \(\mu _j:=\frac{\lambda _j^0}{\sqrt{1+\sum \nolimits _{j=1}^q\left( \lambda _j^0\right) ^2+\sum \nolimits _{j=1}^q\sum \nolimits _{i=1}^s\left( \lambda _j^i\right) ^2}},\) \( \mu _j^i:=~\frac{\lambda _j^i}{\sqrt{1+\sum \nolimits _{j=1}^q\left( \lambda _j^0\right) ^2+\sum \nolimits _{j=1}^q\sum \nolimits _{i=1}^s\left( \lambda _j^i\right) ^2}}, j=1,\ldots ,q, i=1,\ldots , s\), we come to (I). Conversely, assume that (I) holds. Letting \(\lambda _j^0:=\frac{\mu _j}{\mu _0}, \lambda _j^i:=\frac{\mu _j^i}{\mu _0}, j=1,\ldots , q, i=1,\ldots , s\), we arrive at (II). \(\square \)

To proceed further, we should define concepts of robust feasible/solutions for the uncertain bilevel polynomial optimization problem (P).

Definition 2.1

-

(i)

We say that \((\bar{x},\bar{y})\in \mathbb {R}^m\times \mathbb {R}^n\) is a robust feasible point of problem (P) if it satisfies

$$\begin{aligned} \bar{y}\in Y(\bar{x}),\;\tilde{a}_i^\top \bar{x}+\tilde{b}_i^\top \bar{y}\le \tilde{c}_i,\; \forall (\tilde{a}_i, \tilde{b}_i, \tilde{c}_i)\in \widetilde{U}_i,\; i=1,\ldots ,l, \end{aligned}$$or equivalently, it is a feasible point of its robust counterpart (RP).

-

(ii)

Let \((\bar{x},\bar{y})\in \mathbb {R}^m\times \mathbb {R}^n\) be a robust feasible point of problem (P). We say that \((\bar{x},\bar{y})\) is a global robust solution of problem (P) if \(f(\bar{x},\bar{y})\le f(x,y)\) for every robust feasible point (x, y) of problem (P), or equivalently, it is a global solution of its robust counterpart (RP).

-

(iii)

We say that the problem (P) satisfies the lower-level Slater condition (LSC) if for each \(x\in \mathbb {R}^m\) there exists \(z\in \mathbb {R}^n\) such that

$$\begin{aligned} c_j^\top x +a_j(u_j)^\top z< b_j(u_j),\;\forall u_j\in U_j,\; j=1,\ldots ,q. \end{aligned}$$(11)

The forthcoming lemma supplies a characterization for robust feasible points of the uncertain bilevel polynomial optimization problem (P). Hereafter, we use notation as before, and in addition, we denote by \(\bigg \{\left( \check{a}^{k}_i,\check{b}^{k}_i,\check{c}^{k}_i\right) \in \mathbb {R}^m\times \mathbb {R}^n\times \mathbb {R}\,:\, k=1,\ldots ,2^{m+n+1}\bigg \}\) the set of extreme points of the box \(\widetilde{U}_i\) for \(i=1,\ldots ,l,\) and let \(d_0:=\left( d_0^1,\ldots ,d_0^n\right) , a_j^i:=\left( a^{i1}_j,\ldots ,a^{in}_j\right) \in \mathbb {R}^n, i=0,1,\ldots , s, j=1,\ldots , q\).

Lemma 2.4

(Robust feasibility for (P))

-

(i)

Let \((x,y)\in \mathbb {R}^m\times \mathbb {R}^n\). Then, (x, y) is a robust feasible point of problem (P) if and only if there exists \(\mu :=(\mu _0,\mu _1,\ldots ,\mu _q,\mu _1^1,\ldots ,\mu ^1_q,\ldots ,\mu ^s_1,\ldots ,\mu ^s_q)\in \mathbb {R}^{q(s+1)+1}\) such that

$$\begin{aligned} (\mathrm{III}){\left\{ \begin{array}{ll} &{}g_i(x,y,\mu )\ge 0,\; i=1,\ldots , l2^{m+n+1}+q2^s,\\ &{}g_i(x,y,\mu )>0,\; i=l2^{m+n+1}+q2^s+1,\\ &{} g_i(x,y,\mu )\ge 0,\; i=l2^{m+n+1}+q2^s+2,\ldots ,L,\\ &{} h_j(x,y,\mu )\ge 0, -h_j(x,y,\mu )\ge 0,\; j=1,\ldots ,n+1,\end{array}\right. } \end{aligned}$$where \(L:=l2^{m+n+1}+q(2^s+s+1)+2\) and

$$\begin{aligned} g_i(x,y,\mu )&:=- \left( \check{a}_k^{i-(k-1)2^{m+n+1}}\right) ^\top x-\left( \check{b}_k^{i-(k-1)2^{m+n+1}}\right) ^\top y+ \check{c}_k^{i-(k-1)2^{m+n+1}} \text { for } \\ i&=(k-1)2^{m+n+1}+1, \ldots , k2^{m+n+1} \text { with } k=1,\ldots ,l,\\ g_i(x,y,\mu )&:=-c^\top _{k}x-\left( a_{k}^0+\sum ^{s}_{j=1}\check{u}^{j}_{i-(k-1)2^{s}-l2^{m+n+1}}a^j_{k}\right) ^\top y +b_{k}^0\\&\qquad +\sum ^{s}_{j=1}\check{u}^{j}_{i-(k-1)2^{s}-l2^{m+n+1}}b^j_{k} \text { for} \\ i&=l2^{m+n+1}+(k-1)2^{s}+1, \ldots , l2^{m+n+1}+k2^{s} \text { with } k=1,\ldots ,q,\\ g_i(x,y,\mu )&:=\mu _{0} \text { for } i=l2^{m+n+1}+q2^s+1,\\ g_i(x,y,\mu )&:=\mu _{i-l2^{m+n+1}-q2^s-1} \text { for } i=l2^{m+n+1}\\&\qquad +q2^s+2,\ldots ,l2^{m+n+1}+q2^s+q+1,\\ g_i(x,y,\mu )&:=-\mu _0d_0^\top y-\sum \limits _{k=1}^q\left( \mu _kb^0_k-\mu _kc_k^\top x+\sum ^{s}_{j=1}\mu ^j_kb^j_k\right) \text { for } \\ i&=l2^{m+n+1}+q2^s+q+2,\\ g_i(x,y,\mu )&:=\left( \mu _k\gamma _{i-(k-1)s-(l2^{m+n+1}+q2^s+q+2)}\right) ^2-\left( \mu _k^{i-(k-1)s-(l2^{m+n+1}+q2^s+q+2)}\right) ^2 \text { for } \\ i&=(l2^{m+n+1}+q2^s+q+2)\\&\qquad +(k-1)s+1,\ldots , (l2^{m+n+1}+q2^s+q+2)+ks \\&\qquad \text {with } k=1,\ldots ,q, \end{aligned}$$and

$$\begin{aligned} h_j(x,y,\mu ):=&\mu _0d_0^j+\sum \limits _{k=1}^q\left( \mu _ka^{0j}_k+\sum _{i=1}^s\mu _k^ia^{ij}_k\right) \text { for } j=1,\ldots ,n,\\ h_j(x,y,\mu ):=&1- \sum \limits _{k=0}^q(\mu _k)^2-\sum \limits _{k=1}^q\sum \limits _{i=1}^s\left( \mu _k^i\right) ^2 \text { for } j=n+1. \end{aligned}$$ -

(ii)

Assume that the (LSC) in (11) is satisfied. Then, (III) is equivalent to

$$\begin{aligned} \mathrm{(IV)}{\left\{ \begin{array}{ll} &{}g_i(x,y,\mu )\ge 0,\; i=1,\ldots ,L,\\ &{} h_j(x,y,\mu )\ge 0, -h_j(x,y,\mu )\ge 0,\; j=1,\ldots ,n+1\end{array}\right. } \end{aligned}$$(12)for each \((x,y,\mu )\in \mathbb {R}^m\times \mathbb {R}^n\times \mathbb {R}^{q(s+1)+1}.\)

Proof

-

(i)

Let us first show that the set

$$\begin{aligned} \left\{ (x,y)\in \mathbb {R}^m\times \mathbb {R}^n\,:\,\tilde{a}_i^\top x+\tilde{b}_i^\top y\le \tilde{c}_i,\; \forall (\tilde{a}_i, \tilde{b}_i, \tilde{c}_i)\in \widetilde{U}_i,\,i=1,\ldots ,l\right\} \end{aligned}$$(13)is equivalent to the following one

$$\begin{aligned} \left\{ (x,y)\in \mathbb {R}^m\times \mathbb {R}^n: \check{a}_i^{k\top } x+\check{b}_i^{k\top } y- \check{c}_i^{k}\le 0, k=1,\ldots , 2^{m+n+1},\,i=1,\ldots ,l\right\} , \end{aligned}$$(14)where \(\left\{ \left( \check{a}^{k}_i,\check{b}^{k}_i,\check{c}^{k}_i\right) \in \mathbb {R}^m\times \mathbb {R}^n\times \mathbb {R}\,:\, k=1,\ldots ,2^{m+n+1}\right\} \) denotes the set of extreme points of the box \(\widetilde{U}_i\) for \(i=1,\ldots ,l\) as denoted above. Indeed, for each \((x,y)\in \mathbb {R}^m\times \mathbb {R}^n\), by letting \(X:=(x,y,-1)\), we obtain that

$$\begin{aligned}&\max {\bigg \{\tilde{a}_i^\top x+\tilde{b}_i^\top y-\tilde{c}_i \,:\, (\tilde{a}_i,\tilde{b}_i,\tilde{c}_i)\in \widetilde{U}_i, \, i=1,\ldots ,l\bigg \}}\\&=\max {\bigg \{\tilde{a}_i^\top x+\tilde{b}_i^\top y-\tilde{c}_i \,:\, (\tilde{a}_i,\tilde{b}_i,\tilde{c}_i)\in \mathrm{conv\,}\bigg \{\left( \check{a}^{k}_i,\check{b}^{k}_i,\check{c}^{k}_i\right) , k=1,\ldots , 2^{m+n+1}\bigg \},\, i=1,\ldots ,l\bigg \}}\\&=\max {\bigg \{ W_i^\top X \,:\, W_i\in \mathrm{conv\,}\bigg \{\left( \check{a}^{k}_i,\check{b}^{k}_i,\check{c}^{k}_i\right) , k=1,\ldots , 2^{m+n+1}\bigg \}, i=1,\ldots ,l\bigg \}}\\&=\max {\bigg \{\check{W}_i^{k\top } X \,:\, k=1,\ldots , 2^{m+n+1},\, i=1,\ldots ,l \bigg \}}\\&=\max {\bigg \{\check{a}_i^{k\top } x+\check{b}_i^{k\top }y-\check{c}^{k}_i \,:\, k=1,\ldots , 2^{m+n+1},\,i=1,\ldots ,l \bigg \}}, \end{aligned}$$where \(W_i:=(\tilde{a}_i,\tilde{b}_i,\tilde{c}_i)\) and \(\check{W}^{k}_i:=\left( \check{a}^{k}_i,\check{b}^{k}_i,\check{c}^{k}_i\right) , k=1,\ldots , 2^{m+n+1}\) for \(i=1,\ldots ,l.\) So, we conclude that (13) is equivalent to (14).

Now, let (x, y) be a robust feasible point of problem (P). It means that

$$\begin{aligned} y\in Y(x),\; \tilde{a}_i^\top x+\tilde{b}_i^\top y\le \tilde{c}_i,\; \forall (\tilde{a}_i, \tilde{b}_i, \tilde{c}_i)\in \widetilde{U}_i,\; i=1,\ldots ,l. \end{aligned}$$(15)Due to the equivalence between (13) and (14), (15) is nothing else, but the assertion that

$$\begin{aligned} y\in Y(x),\; \check{a}_i^{k\top } x+\check{b}_i^{k\top } y- \check{c}_i^{k}\le 0,\; k=1,\ldots , 2^{m+n+1},\; i=1,\ldots ,l. \end{aligned}$$(16)Invoking Lemma 2.3, (16) can be equivalently

$$\begin{aligned}&(\mathrm{V}){\left\{ \begin{array}{ll}&{}(x,y)\in \mathbb {R}^m\times \mathbb {R}^n, \check{a}_i^{k\top } x+\check{b}_i^{k\top } y- \check{c}_i^{k}\le 0,\; k=1,\ldots , 2^{m+n+1},\; i=1,\ldots ,l,\\ &{} c^\top _jx +\left( a_j^0+\sum ^{s}_{i=1}\check{u}^{i}_ka^i_j\right) ^\top y-\left( b_j^0+ \sum ^{s}_{i=1}\check{u}^{i}_kb^i_j\right) \le 0, k=1,\ldots , 2^s, j=1,\ldots ,q, \\ &{} \exists \mu _0> 0, \mu _j\ge 0, \mu _j^i\in \mathbb {R}, j=1,\ldots ,q, i=1,\ldots ,s \\ &{}\text { such that } \sum \limits _{j=0}^q(\mu _j)^2+\sum \limits _{j=1}^q\sum \limits _{i=1}^s\left( \mu _j^i\right) ^2=1, \\ &{}\mu _0d_0+\sum \limits _{j=1}^q\left( \mu _ja^0_j+\sum ^{s}_{i=1}\mu ^i_ja^i_j\right) =0, \; -\mu _0d_0^\top y-\sum \limits _{j=1}^q\left( \mu _jb^0_j-\mu _jc_j^\top x+\sum ^{s}_{i=1}\mu ^i_jb^i_j\right) \ge 0 \\ &{}\text {and } (\mu _j\gamma _i)^2-\left( \mu ^i_j\right) ^2\ge 0, i=1,\ldots , s, j=1,\ldots ,q,\end{array}\right. } \end{aligned}$$where \(\bigg \{\check{u}_k:=\left( \check{u}^{1}_k,\ldots ,\check{u}^{s}_k\right) \in \mathbb {R}^s\mid k=1,\ldots , 2^s\bigg \}\) stands for the set of extreme points of the box \(V_s.\)

Denote \(\mu :=~\left( \mu _0,\mu _1,\ldots ,\mu _q,\mu _1^1,\ldots ,\mu ^1_q,\ldots ,\mu ^s_1,\ldots ,\mu ^s_q\right) \in \mathbb {R}^{q(s+1)+1}, g_i(x,y,\mu ), i=1,\ldots ,L\) and \( h_j(x,y,\mu ),\) \( j=1,\ldots , n+1\) as stated in the lemma. Then, we conclude by (V) that (x, y) is a robust feasible point of problem (P) if and only if there exists \(\mu \in \mathbb {R}^{q(s+1)+1}\) such that

$$\begin{aligned} g_i(x,y,\mu )\ge & {} 0,\, i=1,\ldots , l2^{m+n+1}+q2^s,\\ g_i(x,y,\mu )> & {} 0,\, i=l2^{m+n+1}+q2^s+1,\\ g_i(x,y,\mu )\ge & {} 0,\, i=l2^{m+n+1}+q2^s+2,\ldots ,L,\\ h_j(x,y,\mu )\ge & {} 0, -h_j(x,y,\mu )\ge 0,\; j=1,\ldots ,n+1. \end{aligned}$$So, we have proved (i).

-

(ii)

Let the (LSC) in (11) be satisfied, and consider any \((x,y,\mu )\in \mathbb {R}^m\times \mathbb {R}^n\times \mathbb {R}^{q(s+1)+1}.\) If \((x,y,\mu )\) satisfies (III), then it obviously satisfies (IV).

Conversely, let \((x,y,\mu )\) be such that (IV) holds. It means that

$$\begin{aligned} \mathrm{(VI)}&{\left\{ \begin{array}{ll}&{}(x,y)\in \mathbb {R}^m\times \mathbb {R}^n,\\ &{}\mu :=\left( \mu _0,\mu _1,\ldots ,\mu _q,\mu _1^1,\ldots ,\mu ^1_q,\ldots ,\mu ^s_1,\ldots ,\mu ^s_q\right) \in \mathbb {R}^{q(s+1)+1},\\ &{} \check{a}_i^{k\top } x+\check{b}_i^{k\top } y- \check{c}_i^{k}\le 0,\; k=1,\ldots , 2^{m+n+1},\; i=1,\ldots ,l,\\ &{} c^\top _jx +\left( a_j^0+\sum ^{s}_{i=1}\check{u}^{i}_ka^i_j\right) ^\top y \\ &{}-\left( b_j^0+ \sum ^{s}_{i=1}\check{u}^{i}_kb^i_j\right) \le 0, k=1,\ldots , 2^s, j=1,\ldots ,q, \\ &{} \mu _0\ge 0, \mu _j\ge 0, j=1,\ldots ,q, \; \sum \limits _{j=0}^q(\mu _j)^2+\sum \limits _{j=1}^q\sum \limits _{i=1}^s\left( \mu _j^i\right) ^2=1, \\ &{}\mu _0d_0+\sum \limits _{j=1}^q\left( \mu _ja^0_j+\sum ^{s}_{i=1}\mu ^i_ja^i_j\right) =0, \\ &{} -\mu _0d_0^\top y-\sum \limits _{j=1}^q\left( \mu _jb^0_j-\mu _jc_j^\top x+\sum ^{s}_{i=1}\mu ^i_jb^i_j\right) \ge 0 \\ &{}\text {and } (\mu _j\gamma _i)^2-\left( \mu ^i_j\right) ^2\ge 0, i=1,\ldots , s, j=1,\ldots ,q.\end{array}\right. } \end{aligned}$$We need to show that \((x,y,\mu )\) satisfies (III). It suffices to verify that \(\mu _0\ne 0.\) Assume on the contrary that \(\mu _0=0.\) We get by (VI) that

$$\begin{aligned} \sum \limits _{j=1}^q(\mu _j)^2+\sum \limits _{j=1}^q\sum \limits _{i=1}^s\left( \mu _j^i\right) ^2&=1, \end{aligned}$$(17)$$\begin{aligned} \sum \limits _{j=1}^q\left( \mu _ja^0_j+\sum ^{s}_{i=1}\mu ^i_ja^i_j\right)&=0, \quad \sum \limits _{j=1}^q \mu _jc_j^\top x -\sum \limits _{j=1}^q\mu _jb^0_j-\sum \limits _{j=1}^q\sum ^{s}_{i=1}\mu ^i_jb^i_j\ge 0, \end{aligned}$$(18)$$\begin{aligned} (\mu _j\gamma _i)^2-\left( \mu ^i_j\right) ^2&\ge 0, i=1,\ldots , s, j=1,\ldots ,q. \end{aligned}$$(19)By (19), for each \(j\in \{1,\ldots ,q\}\), \(\mu _j^i=0\) for \(i=1,\ldots ,s\) whenever \(\mu _j=0,\) and hence, we conclude from (17) that there exists \(j\in \{1,\ldots ,q\}\) such that \(\mu _j\ne 0\), i.e., \(\sum \limits _{j=1}^q\mu _j\ne 0.\) For each \(j\in \{1,\ldots ,q\},\) let \(\bar{u}_j:=(\bar{u}^1_j,\ldots ,\bar{u}^s_j)\in \mathbb {R}^s\) with

$$\begin{aligned} \bar{u}^i_j:={\left\{ \begin{array}{ll}0 &{}\text {if } \mu _j=0\\ \frac{\mu ^i_j}{\mu _j} &{}\text {if } \mu _j\ne 0,\end{array}\right. }\; i=1,\ldots , s. \end{aligned}$$Then, \(|\bar{u}^i_j|\le \gamma _i\) for \(i=1,\ldots ,s, j=1,\ldots ,q\), and so, \(\bar{u}_j\in V_s=U_j\) for all \(j=1,\ldots ,q.\) On the one hand, in view of the (LSC) in (11), we find \(z\in \mathbb {R}^n\) such that

$$\begin{aligned} c_j^\top x +a_j(\bar{u}_j)^\top z< b_j(\bar{u}_j),\; j=1,\ldots ,q.\end{aligned}$$Since \(\sum \limits _{j=1}^q\mu _j\ne 0,\) it follows that

$$\begin{aligned} \sum \limits _{j=1}^q\mu _j c_j^\top x +\sum \limits _{j=1}^q\mu _j a_j(\bar{u}_j)^\top z< \sum \limits _{j=1}^q\mu _jb_j(\bar{u}_j),\end{aligned}$$or equivalently,

$$\begin{aligned} \sum \limits _{j=1}^q\mu _j c_j^\top x +\bigg (\sum \limits _{j=1}^q\mu _j a_j^0+\sum \limits _{j=1}^q\sum ^{s}_{i=1}\mu ^i_ja^i_j\bigg )^\top z- \sum \limits _{j=1}^q\mu _jb_j^0-\sum \limits _{j=1}^q\sum ^{s}_{i=1}\mu ^i_jb^i_j<0.\end{aligned}$$(20)On the other hand, we get by (18) that

$$\begin{aligned} \sum \limits _{j=1}^q\mu _j c_j^\top x +\bigg (\sum \limits _{j=1}^q\mu _j a_j^0+\sum \limits _{j=1}^q\sum ^{s}_{i=1}\mu ^i_ja^i_j\bigg )^\top z- \sum \limits _{j=1}^q\mu _jb_j^0-\sum \limits _{j=1}^q\sum ^{s}_{i=1}\mu ^i_jb^i_j\ge 0,\end{aligned}$$which contradicts (20). Therefore, we conclude that \(\mu _0> 0.\) It entails that \((x,y,\mu )\) satisfies (III). The proof is complete.

\(\square \)

3 Robust Bilevel Polynomial Problems via Semidefinite Programming Relaxations

In this section, we present semidefinite programming relaxations for the uncertain bilevel polynomial optimization problem (P) and show how the robust global optimal value of problem (P) can be found by solving a sequence of corresponding semidefinite programming relaxation problems.

Sum-of-Squares Relaxation Problems. For each \(k\in \mathbb {N}\), we consider a relaxation problem in terms of sum of squares for the uncertain bilevel polynomial optimization problem (P) given by

where \(g_i, i=1,\ldots ,L:=l2^{m+n+1}+q(2^s+s+1)+2, h_j, j=1,\ldots , n+1,\) are defined as in the statement of Lemma 2.4 and \(\kappa \ge f(\bar{x},\bar{y})\) with \((\bar{x},\bar{y})\) being a robust feasible point of problem (P).

It is worth mentioning here that for each fixed \(k\in \mathbb {N}\), the problem (\(\mathrm{D}_\mathrm{k}\)) can be regarded as a sum of squares relaxation problem of the problem (P), and more interestingly, it can be reformulated as a semidefinite linear programming problem [20]. We denote the robust optimal value of problem (P) and the optimal value of problem (\(\mathrm{D}_\mathrm{k}\)), respectively, by \({\mathrm{val}}\) (P) and val(\(\mathrm{D}_\mathrm{k}\)).

In the next theorem, we show that, under some additional conditions, the uncertain bilevel polynomial optimization problem (P) has a global robust solution and the optimal values of the relaxation problem (\(\mathrm{D}_\mathrm{k}\)) converge to the robust global optimal value of the problem (P) when the degree bound k goes to infinity.

Theorem 3.1

(Computing robust global optimal value by SDP) Let f be coercive on \(\mathbb {R}^m\times \mathbb {R}^n\). Assume that the (LSC) in (11) is satisfied. Then, the uncertain bilevel polynomial optimization problem (P) has a global robust solution \((x_0,y_0)\) satisfying

Moreover, we have

Proof

(Proving the existence of global robust solutions of (P)) Thanks to Lemma 2.4(i), we assert that \((x,y)\in \mathbb {R}^m\times \mathbb {R}^n\) is a robust feasible point of problem (P) if and only if there exists \(\mu \in \mathbb {R}^{q(s+1)+1}\) such that

where \(g_i, i=1,\ldots ,L, h_j, j=1,\ldots , n+1\) are defined as in the statement of Lemma 2.4.

Let \(k\in \mathbb {N}\), and let \((\bar{x},\bar{y})\in \mathbb {R}^m\times \mathbb {R}^n\) be a robust feasible point of problem (P) as in the construction of problem (\(\mathrm{D}_\mathrm{k}\)). Then, one has \(\kappa \ge f(\bar{x},\bar{y}),\) and there exists \(\bar{\mu }\in \mathbb {R}^{q(s+1)+1}\) such that (23) holds at \((\bar{x},\bar{y},\bar{\mu })\). Putting \(\hat{h}:=\kappa -f+h_{n+1}\), we assert that

Indeed, as \((\bar{x},\bar{y},\bar{\mu })\) satisfies (23), it entails that \(h_{n+1}(\bar{x},\bar{y},\bar{\mu })=0\). Then, \(\hat{h}(\bar{x},\bar{y},\bar{\mu })=~\kappa -f(\bar{x},\bar{y})\ge ~0\), and so, \((\bar{x},\bar{y},\bar{\mu })\in H\).

For each \((x,y,\mu )\in H\), we have \(\hat{h}(x,y,\mu )\ge 0\). It entails that

Since f is coercive on \(\mathbb {R}^m\times \mathbb {R}^n,\) it follows that \(\inf _{(x,y)\in \mathbb {R}^m\times \mathbb {R}^n}f(x,y)>-\infty \), and hence, (24) guarantees that H is a compact set. Setting

we easily verify that \((\bar{x},\bar{y},\bar{\mu })\in K,\) and hence, \(K\ne \emptyset .\) Moreover, it can be checked that \(K\subset H\), and so, K is compact as well.

Now, consider the function \(\tilde{f}(x,y):=f(x,y)-f(\bar{x},\bar{y})\) for \((x,y)\in \mathbb {R}^m\times \mathbb {R}^n.\) Since \(\tilde{f}\) is a polynomial and hence continuous, we conclude that there exists \((x_0,y_0,\mu _0)\in K\) such that

where \(\mu \in \mathbb {R}^{q(s+1)+1}.\)

We claim that \((x_0,y_0)\) is a global robust solution of problem (P). Indeed, by \((x_0,y_0,\mu _0)\in K,\) it follows that

and that

Under the fulfillment of the (LSC) in (11), invoking Lemma 2.4(ii), we assert by (27) that (23) holds at \((x_0,y_0,\mu _0)\) and so, \((x_0,y_0)\) is a robust feasible point of problem (P). Let \((x,y)\in \mathbb {R}^m\times \mathbb {R}^n\) be a robust feasible point of problem (P). Then, there is \(\mu \in \mathbb {R}^{q(s+1)+1}\) such that (23) holds. If in addition \( \kappa -f(x,y)\ge 0\), then \((x,y,\mu )\in K\), and so, we get by (26) that \(f(x_0,y_0)\le f(x,y).\) Otherwise, \( \kappa -f(x,y)< 0,\) then \(f(x,y)> \kappa \ge f(x_0,y_0),\) where the last inequality holds by virtue of (28). Consequently, our claim holds.

Furthermore, it holds that

[Verifying (21)] If the feasible set of problem (\(\mathrm{D}_\mathrm{k}\)) is empty, then \(\mathrm{val}\) (Dk \(=-\infty \), and in this case, (21) holds trivially. Now, let \((t,\sigma _0,\sigma _i,\xi _j,\zeta ), i=1,\ldots ,L, j=1,\ldots , n+1 \) be a feasible point of problem (\(\mathrm{D}_\mathrm{k}\)). It means that there exist \( t\in \mathbb {R}, \zeta ,\sigma _0,\sigma _i\in \Sigma ^2[x,y,\mu ],\xi _j\in \mathbb {R}[x,y,\mu ], \mathrm{deg}(\sigma _0)\le k, \mathrm{deg}(\zeta f)\le k, \mathrm{deg}(\sigma _ig_i)\le k,\) \( \mathrm{deg}(\xi _jh_j)\le k, i=1,\ldots ,L, j=1,\ldots , n+1\) such that \(f-\sum \limits _{i=1}^{L}\sigma _ig_i-\sum \limits _{j=1}^{n+1}\xi _jh_j-\zeta (\kappa -f)-t=\sigma _0\) or equivalently,

where \((x_0,y_0)\) is the global robust solution of problem (P) as shown above. Recall here that (28) and (23) hold at \((x_0,y_0,\mu _0).\) Due to the nonnegativity of sum-of-squares polynomials, estimating (30) at \((x_0,y_0,\mu _0)\), we obtain that \(\big (1+\zeta (x_0,y_0,\mu _0)\big )f(x_0,y_0)\ge t+\zeta (x_0,y_0,\mu _0)f(x_0,y_0),\) or equivalently, \(f(x_0,y_0)\ge t.\) It guarantees that \(\mathrm{val}\) (\(\mathrm{D}_\mathrm{k}\)) \(\le f(x_0,y_0)\), which together with (29) proves that (21) is valid.

[Verifying (22)] Let us consider a set of polynomials \({\mathbf {M}}(g_1 \), \(\ldots , g_{L}\), \(h_1,\ldots \), \(h_{n+1}\),\(-h_1,\ldots ,-h_{n+1}\),\(\kappa -f)\) as defined in (1). It is obvious by definition that

As shown above, the set \(H:=\{(x,y,\mu )\in \mathbb {R}^m\times \mathbb {R}^n\times \mathbb {R}^{q(s+1)+1} \,:\, \hat{h}(x,y,\mu )\ge 0\}\) is compact. Hence, \({\mathbf {M}}(g_1,\ldots ,g_{L},h_1,\ldots ,h_{n+1},-h_1,\ldots ,-h_{n+1},\kappa -f)\) is archimedean.

Let \(\epsilon >0.\) We pay attention to the set K given in (25). For any \((x,y,\mu )\in K\), it follows that

which is nothing else but (23) under the fulfillment of the (LSC) in (11), and so, (x, y) is a robust feasible point of problem (P) by virtue of Lemma 2.4. This fact gives us that \(f(x,y)\ge f(x_0,y_0)\) inasmuch as \((x_0,y_0)\) is a global robust solution of problem (P) as shown above. Consequently, it guarantees that

[Applying Putinar’s Positivstellensatz] Now, applying Lemma 2.1, we find sum-of-squares polynomials \(\sigma _i, i=0,1,\ldots ,L, \xi ^1_j, \xi ^2_j, j=1,\ldots , n+1, \zeta \in \Sigma ^2[x,y,\mu ]\) such that

Let \(\xi _j\in \mathbb {R}[x,y,\mu ], j=1,\ldots , n+1\) be real polynomials defined by \(\xi _j:= \xi ^1_j-\xi ^2_j, j=1,\ldots , n+1.\) Then, we deduce from (31) that \(f-\sum \limits _{i=1}^{L}\sigma _ig_i-\sum \limits _{j=1}^{n+1}\xi _jh_j-\zeta (\kappa -f)-f(x_0,y_0)+\epsilon =\sigma _0,\) or equivalently,

with \(t_0:=f(x_0,y_0)-\epsilon \in \mathbb {R}.\) So, there exists \(k_\epsilon \in \mathbb {N}\) such that \(\mathrm{deg}(\sigma _0)\le k_\epsilon , \mathrm{deg}(\zeta f)\le k_\epsilon , \mathrm{deg}(\sigma _ig_i)\le k_\epsilon ,\) \( \mathrm{deg}(\xi _jh_j)\le k_\epsilon , i=1,\ldots ,L, j=1,\ldots , n+1,\) and that

Since \(\epsilon >0\) was arbitrarily taken, we conclude that \(\liminf \limits _{k\rightarrow \infty }\mathrm{val}\) (\(\mathrm{D}_\mathrm{k}\)) \(\ge f(x_0,y_0).\) This together with (21) establishes (22), which ends the proof of the theorem. \(\square \)

Remark 3.1

It is worth noticing that if f is a convex polynomial and there exists \((\bar{x},\bar{y})\in \mathbb {R}^m\times \mathbb {R}^n\) such that its Hessian \(\nabla ^2f(\bar{x},\bar{y})\) is positive definite, then f is coercive on \(\mathbb {R}^m\times \mathbb {R}^n\) (see, e.g., [19, Lemma 3.1]) and hence, the above theorem can be obviously applied for this convex setting under the fulfillment of the (LSC) in (11).

The next example illustrates that if the (LSC) in (11) is violated, then the conclusion of Theorem 3.1 may go awry. Below, we consider the case of \(l:=1\) and \(q:=1\) for the purpose of simplicity.

Example 3.1

(The importance of the Slater condition) Consider an uncertain bilevel polynomial optimization problem of the form:

where \(u\in U:=[-1,1]\subset \mathbb {R}\) and \(Y(x, u):=\mathrm{argmin}_{z\in \mathbb {R}}\{x-z \,:\, (1+u)z\le 0\}.\) The problem (EP1) can be expressed in terms of problem (P), where \(\widetilde{U}:=[\underline{a},\overline{a}]\times [\underline{b},\overline{b}]\times [\underline{c},\overline{c}]\) with \(\underline{a}:=\overline{a}:=\underline{b}:=\overline{b}:=\underline{c}:=\overline{c}:=0\in \mathbb {R}\), and \(c_0:=1\in \mathbb {R}, d_0:=-1\in \mathbb {R}, c:=0\in \mathbb {R},\) the affine mappings \(a:\mathbb {R}\rightarrow \mathbb {R}\) and \(b:\mathbb {R}\rightarrow \mathbb {R}\) are given by \(a(u):=a^0+ua^1\) and \(b(u):=b^0+ub^1\) with \( a^0:=a^1:=1\in \mathbb {R}, b^0:=b^1:=0\in \mathbb {R}\) for \(u\in \mathbb {R}\).

In this setting, it is easy to see that \(Y(x)=\{0\}\) for any \(x\in \mathbb {R},\) and therefore, we see that \((x_0,y_0):=(0,0)\) is a global robust solution of problem (EP1). Obviously, f is coercive on \(\mathbb {R}^2\). Let \(\mu :=(\mu _0,\mu _1,\mu ^1_1)\in \mathbb {R}^3\), and denote the functions \(g_i(x,y,\mu )\) and \(h_j(x,y,\mu )\) as in the statement of Lemma 2.4. For the sake of clear representation, we first remove the null functions and then relabel them as

Let \(\kappa \ge -2= f(x_0,y_0)\). In this setting, the problem (\(\mathrm{D}_\mathrm{k}\)), \(k\in \mathbb {N},\) becomes

We claim that, for each \(k\in \mathbb {N}\), the representation of sum-of-squares polynomials in (32) fails to hold for any \(t\in ]-3,+\infty [\). Indeed, assume on the contrary that there exist \(k\in \mathbb {N}\), \(t\in ]-3,+\infty [\) and sum-of-squares polynomials \(\zeta , \sigma _0, \sigma _i\in \Sigma ^2[x,y,\mu ], i=1,\ldots ,5,\) as well as real polynomials \(\xi _j\in \mathbb {R}[x,y,\mu ], j=1,2\) such that

where \(\mathrm{deg}(\sigma _0)\le k, \mathrm{deg}(\zeta f)\le k, \mathrm{deg}(\sigma _ig_i)\le k, \mathrm{deg}(\xi _jh_j)\le k, i=1,\ldots ,5, j=1,2.\) Setting \(\tilde{x}:=0, \tilde{y}:=-1\) and \( \tilde{\mu }:=(0,\frac{1}{\sqrt{2}},\frac{-1}{\sqrt{2}})\), and then substituting \((\tilde{x},\tilde{y},{\tilde{\mu }})\) into (33) we obtain that

Hence, it entails that \(\sigma _0(\tilde{x},\tilde{y},{\tilde{\mu }})\le -3-t<0\), which is absurd.

Consequently, the conclusion (22) of Theorem 3.1 fails due to the fact that \(\mathrm{val}\) (\(\mathrm{D}_\mathrm{k}\)) \(\le -3\) for all \(k\in \mathbb {N}\) and \(\mathrm{val}\) (EP1) \(= f(x_0,y_0)=-2.\) The reason is that the (LSC) in (11) is violated.

Finally in this section, we provide a simple example which shows how our schemes can be applied to find the robust global optimal value of an uncertain bilevel polynomial optimization problem.

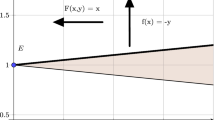

Example 3.2

(Uncertain bilevel polynomial problem) Consider an uncertain bilevel polynomial optimization problem of the form:

where \(u\in U:=[-\frac{1}{2},\frac{1}{2}]\subset \mathbb {R}\) and \(Y(x, u):=\mathrm{argmin}_{z\in \mathbb {R}}\{x-z \,:\, (1+u)z\le 0\}.\) The problem (EP2) can be expressed in terms of problem (P), where \(\widetilde{U}:=[\underline{a},\overline{a}]\times [\underline{b},\overline{b}]\times [\underline{c},\overline{c}]\) with \(\underline{a}:=\overline{a}:=\underline{b}:=\overline{b}:=\underline{c}:=\overline{c}:=0\in \mathbb {R}\), and \(c_0:=1\in \mathbb {R}, d_0:=-1\in \mathbb {R}, c:=0\in \mathbb {R},\) the affine mappings \(a:\mathbb {R}\rightarrow \mathbb {R}\) and \(b:\mathbb {R}\rightarrow \mathbb {R}\) are given by \(a(u):=a^0+ua^1\) and \(b(u):=b^0+ub^1\) with \( a^0:=a^1:=1\in \mathbb {R}, b^0:=b^1:=0\in \mathbb {R}\) for \(u\in \mathbb {R}\). A direct calculation shows that \((x_0,y_0):=(0,0)\) is a global robust solution of problem (EP2) with the robust global optimal value \(-2.\)

Now, we employ the relaxation schemes formulated in Theorem 3.1 to verify this robust global optimal value. In this setting, it is easy to see that f is coercive on \(\mathbb {R}^2\) and the (LSC) in (11) is fulfilled. Let \(\mu :=(\mu _0,\mu _1,\mu ^1_1)\in \mathbb {R}^3\), and denote the functions \(g_i(x,y,\mu )\) and \(h_j(x,y,\mu )\) as in the statement of Lemma 2.4. For the sake of clear representation, we first remove the null functions and then relabel them as

Let \((\bar{x},\bar{y}):=(1,0)\) be a feasible point, and take \(\kappa :=-1\ge -1= f(\bar{x},\bar{y})\). In this setting, the problem (\(\mathrm{D}_\mathrm{k}\)) becomes

Using the MATLAB toolbox YALMIP (see, e.g.,[28]), we convert the above optimization problem into an equivalent semidefinite program and solve it with \(k:=4\). The solver returns the true robust global optimal value as \(-2.000\).

4 Conclusions

We have shown that the robust global optimal value of the uncertain bilevel polynomial program with box data uncertainties is the limit of a sequence of values of Lasserre-type hierarchy of semidefinite linear programming relaxations. It has been achieved by transforming the uncertain bilevel polynomial program into a single-level non-convex polynomial program using a dual characterization of the solution of the lower-level program and Putinar’s Positivstellensatz of semi-algebraic geometry. We have provided a numerical example to show how the robust global optimal value of the uncertain bilevel polynomial program can be calculated via a semidefinite programming problem using the MATLAB toolbox YALMIP.

It would be of interest to study how a global robust solution of the uncertain bilevel polynomial program can be found from its semidefinite linear programming relaxations, or to extend the obtained results to the case of spectrahedral uncertainty, where explicit sum-of-squares/SDP relaxations in terms of the original data are often not available.

References

Bard, J.F.: Practical Bilevel Optimization: Algorithms and Applications. Kluwer Academic Publishers, Dordrecht (1998)

Colson, B., Marcotte, P., Savard, G.: An overview of bilevel programming. Ann. Oper. Res. 153, 235–256 (2007)

Dempe, S.: Foundations of Bilevel Programming. Kluwer Academic Publishers, Dordrecht (2002)

Dempe, S., Kalashnikov, V., Perez-Valdes, G.A., Kalashnykova, N.: Bilevel Programming Problems: Theory, Algorithms and Application to Energy Networks. Springer, Berlin (2015)

Gümüs, Z.H., Floudas, C.A.: Global optimization of nonlinear bilevel programming problems. J. Global Optim. 20, 1–31 (2001)

Dempe, S., Gadhi, N., Zemkoho, A.B.: New optimality conditions for the semivectorial bilevel optimization problem. J. Optim. Theory Appl. 157, 54–74 (2013)

Ye, J.J., Zhu, D.L.: New necessary optimality conditions for bilevel programs by combining MPEC and the value function approach. SIAM J. Optim. 20, 1885–1905 (2010)

Ben-Tal, A., El Ghaoui, L., Nemirovski, A.: Robust Optimization, Princeton Series Applied Mathematics. Princeton University Press, Princeton (2009)

Bertsimas, D., Brown, D.B., Caramanis, C.: Theory and applications of robust optimization. SIAM Rev. 53, 464–501 (2011)

Ben-Tal, A., Nemirovski, A.: Robust optimizationmethodology and applications. ISMP 2000, Part 2 (Atlanta, GA). Math. Program. 92, 453–480 (2002)

Ben-Tal, A., Nemirovski, A.: Selected topics in robust convex optimization. Math. Program. 112, 125–158 (2008)

Gabrel, V., Murat, C., Thiele, A.: Recent advances in robust optimization: an overview. Eur. J. Oper. Res. 235, 471–483 (2014)

Ben-Ayed, O., Blair, C.E.: Computational difficulties of bilevel linear programming. Oper. Res. 38, 556–560 (1990)

Putinar, M.: Positive polynomials on compact semi-algebraic sets. Indiana Univ. Math. J. 42, 203–206 (1993)

Jeyakumar, V., Lasserre, J.B., Li, G.: On polynomial optimization over non-compact semi-algebraic sets. J. Optim. Theory Appl. 163, 707–718 (2014)

Jeyakumar, V., Kim, S., Lee, G.M., Li, G.: Semidefinite programming relaxation methods for global optimization problems with sparse polynomials and semialgebraic feasible sets. J. Global Optim. 65, 175–190 (2016)

Jeyakumar, V., Lasserre, J.B., Li, G., Pham, T.S.: Convergent semidefinite programming relaxations for global bilevel polynomial optimization problems. SIAM J. Optim. 26, 753–780 (2016)

Jeyakumar, V., Vicente-Perez, J.: Dual semidefinite programs without duality gaps for a class of convex minimax programs. J. Optim. Theory Appl. 162, 735–753 (2014)

Jeyakumar, V., Pham, T.S., Li, G.: Convergence of the Lasserre hierarchy of SDP relaxations for convex polynomial programs without compactness. Oper. Res. Lett. 42(1), 34–40 (2014)

Lasserre, J.B.: Moments, Positive Polynomials and Their Applications. Imperial College Press, London (2009)

Chuong, T.D., Jeyakumar, V.: A generalized Farkas lemma with a numerical certificate and linear semi-infinite programs with SDP duals. Linear Algebra Appl. 515, 38–52 (2017)

Jeyakumar, V., Li, G.: A bilevel Farkas lemma to characterizing global solutions of a class of bilevel polynomial programs. Oper. Res. Lett. 43, 405–410 (2015)

Patriksson, M., Wynter, L.: Stochastic mathematical programs with equilibrium constraints. Oper. Res. Lett. 25, 159–167 (1999)

Budnitzki, A.: The solution approach to linear fuzzy bilevel optimization problems. Optimization 64, 1195–1209 (2015)

Loridan, P., Morgan, J.: Approximate solutions for two-level optimization problems. Trends in mathematical optimization (Irsee 1986), Internat. Schriftenreihe Numer. Math., 84, pp. 181–196. Birkhuser, Basel, (1988)

Demmel, J., Nie, J., Powers, V.: Representations of positive polynomials on noncompact semialgebraic sets via KKT ideals. J. Pure Appl. Algebra 209, 189–200 (2007)

Vinzant, C.: What is a spectrahedron? Not. Am. Math. Soc. 61, 492–494 (2014)

Lofberg, J.: YALMIP: A toolbox for modeling and optimization in MATLAB. In: Proceedings of the CACSD Conference, Taipei, Taiwan (2004)

Acknowledgements

The authors would like to thank the referees for the valuable comments and suggestions, which have improved the final preparation of the paper. The authors are grateful to Dr Guoyin Li for discussing about the Slater condition and for his help in the computer implementation of our methods. Research of the first author was supported by the UNSW Vice-Chancellor’s Postdoctoral Research Fellowship (RG134608/SIR50). Research of the second author was partially supported by a grant from the Australian Research Council.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Chuong, T.D., Jeyakumar, V. Finding Robust Global Optimal Values of Bilevel Polynomial Programs with Uncertain Linear Constraints. J Optim Theory Appl 173, 683–703 (2017). https://doi.org/10.1007/s10957-017-1069-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10957-017-1069-4

Keywords

- Bilevel programming

- Robust optimization

- Uncertain linear constraints

- Global polynomial optimization

- Semidefinite program