Abstract

In dynamical systems, some of the most important questions are related to phase transitions and convergence time. We consider a one-dimensional probabilistic cellular automaton where their components assume two possible states, zero and one, and interact with their two nearest neighbors at each time step. Under the local interaction, if the component is in the same state as its two neighbors, it does not change its state. In the other cases, a component in state zero turns into a one with probability \(\alpha ,\) and a component in state one turns into a zero with probability \(1-\beta \). For certain values of \(\alpha \) and \(\beta \), we show that the process will always converge weakly to \(\delta _{0},\) the measure concentrated on the configuration where all the components are zeros. Moreover, the mean time of this convergence is finite, and we describe an upper bound in this case, which is a linear function of the initial distribution. We also demonstrate an application of our results to the percolation PCA. Finally, we use mean-field approximation and Monte Carlo simulations to show coexistence of three distinct behaviours for some values of parameters \(\alpha \) and \(\beta \).

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In the study of dynamical systems the key questions are those of stability, convergence time, and existence of phase transition. In simple terms, probabilistic cellular automata (PCA) are discrete dynamical systems, which means that these same investigations can be applied to them. According to what we know so far, there are few general results [1,2,3,4,5,6,7,8,9] that aim to answer some of these questions.

The study of PCA is linked with several research areas, among them: statistical physics and theoretical computer science, each with a different viewpoint. The study of PCA is rendered difficult by the existence of various algorithmically unsolvable problems [8, 10,11,12]. One form to bypass unsolvability is to assume monotonicity of our processes; however, assuming the monotonicity is often not enough to counter the unsolvability, as Toom and Mityushin have shown [12]. In our study, we consider a PCA, which is a generalization of the process described computationally in [13] and analytically in [14]. In this particular case, we are studying the elementary cellular automata 178, with \(\alpha \)-asynchronous dynamics [15] for which only few results are known in particular. Regnault [14] shows that under certain conditions the process will never reach the measure concentrated in the configuration made of all zeros.

In our PCA, components are placed on a one-dimensional. Each component assumes the state 0(zero) or 1(one), and they interact with their two closest neighbors. Informally speaking, whenever a component in state 1 has at least one neighbor in state 0, it becomes 0 with probability \(1-\beta \) independently of what happen at all the other places. On the other hand, whenever a component in state 0 has at least one neighbor in state 1, it becomes 1 with probability \(\alpha \) independently of what happen at all the other places. The measure concentrated on the bi-infinite sequence whose components are all zeros (or all ones, \(\delta _1\)), \(\delta _0,\) is invariant under our process.

The main challenge of the presented study was the fact that the considered PCA is non-monotonic, meaning that many of the existing techniques could not be used. While our study concerns a specific PCA, we consider it a first step towards development of more general results in the future, in a similar manner that many general results originated from studies of the contact process [16, 17] and the Stavskaya process [6, 18].

In this paper, we prove that the considered PCA converges to \(\delta _0\) in the finite mean time; for certain values of \(\beta \) and any initial distribution belonging to a class of uniform measures on a set of bi-infinite sequences, in which for each sequence there is only a finite number of components in state 1. Moreover, we show that the upper bound for this mean time is as a linear function of the length of the regions of ones in the initial distribution. Additionally, using our result, we show that the same is true for the percolation PCA, i.e., we show that its upper bound for the mean time of convergence is a linear function of the length of the islands of ones in the initial distribution.

Finally, we performed the mean-field approximation and Monte Carlo simulation of our process. The numerical studies show qualitatively existence of phase transition phenomena. We observed three regions in the parameter space: in the first one, it goes to \(\delta _{0}\); in the second one, it goes to \(\delta _{1}\) (measure concentrated on the bi-infinite sequence of ones); and in the third one, the density of ones in our process remains strictly between 0 and 1 all the time.

2 Definitions and Main Result

We study an operator whose configuration space is \(\Omega =\{0,1\}^{\mathbb {Z}},\) where \(\mathbb {Z}\) is the set of integer numbers, and 0 and 1 are two possible states. A configuration is a bi-infinite sequence of zeros or ones. A configuration \(x \in \Omega \) is determined by its components \(x_i\) for all \(i \in \mathbb {Z}\). The configuration where all components are zeros or ones are called “all zeros” and “all ones” respectively. The normalized measures concentrated in the configuration “all zeros” and “all ones” are denoted by \(\delta _{0}\) and \(\delta _{1},\) respectively. Also, given a configuration x, we denote the normalized measure concentrated in x by \(\delta _x.\)

Two configurations x and y are called close to each other if the set \(\{i \in \mathbb {Z}: x_i \ne y_i\}\) is finite. A configuration is called an island of ones if it is close to “all zeros”, and we denote the set of island of ones by \(\Delta _{1}\). If \(x\in \Delta _{1}\) and x is not all zeros, there are two unique integers i, j such that \(i < j,~ x_{i+1} =x_{j-1}= 1\) and \(x_k =0 \) if \(k\le i\) or \(j\le k\). For these unique positions, we define the length of the island x by \( j -i-1,\) and we denote this quantity by \(\mathsf{length}(x)\). Let us denote all zeros by \(0^{\mathbb {Z}}.\) We define \(\mathsf{length}(\{0\}^{\mathbb {Z}})=0.\)

We define cylinders in \(\Omega \) in the usual manner. By a thin cylinder we denote any set

where \(a_1,\dots , a_n \in \{0,1\}\) are parameters and \(i_1,\dots ,i_n\) are integer values. We denote by \(\mathcal M\) the set of normalized measures uniform on the \(\sigma \)-algebra generated by cylinders in \(\Omega \). By convergence in \(\mathcal M,\) we mean convergence on all thin cylinders.

Any map \(\mathsf{P}: \mathcal M\rightarrow \mathcal M\) is called an operator. Given an operator \(\mathsf{P}\) and an initial measure \(\nu \in \mathcal M,\) the resulting random process is the sequence of measures \(\nu ,\ \nu \mathsf{P},\ \nu \mathsf{P}^2,\ \ldots \) We say that a measure \(\nu \) is invariant under \(\mathsf{P}\) if \(\nu \mathsf{P}=\nu .\)

For any finite set \(K\subset \mathbb {Z}\) we define \(\mathcal{U}{(K)}=\bigcup _{i\in K}\mathcal{U}{(i)},\) where \(\mathcal{U}{(i)}=\{i-1,i,i+1\}.\) For any measure \(\delta _x\) the measure \(\delta _x\mathsf{P}\) is a product measure. Let us call the distribution of the \(i-\)th component according to this measure the transitional distribution and denote it by \(\theta _i(\cdot \mid x)\). In our study, the transition probability does not depend on the position i. Thus, in our text we will use \(\theta (\cdot \mid \cdot )\) instead of \(\theta _i(\cdot \mid \cdot ).\) In fact, the \(i-\)th transitional distribution depends only on components of x in \(\mathcal{U}(i)\), so we can write it also as \(\theta (\cdot \mid x_{\mathcal{U}(i)})\), where \(x_{\mathcal{U}(i)}\) is the restriction of x to \({\mathcal{U}(i)}\). By \(\theta (y\mid x)\) we denote the value of \(\theta (\cdot \mid x)\) on \(y\in \{0,1\}\). This probability is called transition probability.

The following equations give values of \(\nu \mathsf{P}\) for any \(\nu \in \mathcal M\) on all cylinders. Thus a general operator \(\mathsf{P}\) is defined. Lets \(x=(x_n)\) and \(y=(y_n)\) with \(n\in \mathbb {Z}\) two configurations.

The operator that we study is denoted by \(\mathsf{F},\) and for each position i, its transition probabilities are given by

The particular case of our operator \(\mathsf{F},\) taking \(\beta =1-\alpha ,\) is the operator studied in [13, 14].

Note that \(\delta _{0}\) and \(\delta _{1}\) are invariant under \(\mathsf{F}\). Therefore, any convex combination, \((1-\lambda )\delta _{0}+\lambda \delta _{1}\) for \(\lambda \in [0,1],\) is invariant under \(\mathsf{F},\) too.

We denote by \(\mathcal{A}_{1}\) the set of normalized measures uniform on the \(\sigma -\)algebra generated by cylinders in \(\Delta _{1}\). A measure belonging to \(\mathcal{A}_{1}\) is called an archipelago of ones, and we will denote this measure by \(\mu \) throughout the text.

Given \(\mu \), we define the random variable

The infimum of the empty set is \(\infty .\) The random variable \(\tau _{\mu }\) denotes the time to attain the configuration “all zeros” having \(\mathsf{F}\) started on measure \(\mu .\)

We denote the natural numbers by \(\mathbb N\) and by \(\mathbb N^*\) the natural numbers without zero. Given \(\mu \) there are islands of ones \(x^i\) for \(i\in \mathbb N^* \) belonging to \(\Delta _1\) such that \(\mu = \sum _{i=1}^{\infty } k_{i}\delta _{x^i},\) where \(k_{1}>0,~k_{2}>0,\ldots \) and \(k_{1}+k_{2}+\cdots =1.\) We define giant of \(\mu \) as

where \(\mathsf{length}(x^i)\) is the length of the island of ones \(x^i.\) If there is no such length, we say that giant(\(\mu \))\(=\infty .\) To get a feeling for giant’s definition, note that \(\mathsf{giant}(\delta _x)\) for \(x\in \Delta _1\) is the \(\mathsf{length}(x).\)

Let \(\mathrm{I\!E}\) denote the expectation. We say that our operator \(\mathsf{F}\) is an eroder of archipelagoes of ones in mean linear time if given \(\alpha \) and \(\beta \) there is constant k such that

for all \(\mu \) whose \(\mathsf{giant}(\mu )\) is finite. Note that the inequality \(\mathrm{I\!E}(\tau _{\nu })\) does not say anything if \(\nu \in \mathcal{M}\setminus \mathcal{A}_{1},\) i.e., \(\nu \) is not an archipelago of ones, in which case we have \(\mathsf{giant}(\nu )=\infty \).

In all our text, \(\phi (\alpha )\) will be referring to the expression:

where \(0 \le \alpha \le -1+\sqrt{2}.\)

Now, we shall declare our main result.

Main result.

-

(M.1) If \(\alpha =0\) and \(0\le \beta <1\) then \(\lim _{t\rightarrow \infty }\mu \mathsf{F}^t=\delta _0\) and \(\mathsf{F}\) is an eroder of archipelagoes of ones in mean linear time.

-

(M.2) For \(0 < \alpha \le -1+\sqrt{2},\) if \(\beta \le \phi (\alpha )\) then

$$\begin{aligned} \lim _{t\rightarrow \infty }\mu \mathsf{F}^t=\delta _0; \end{aligned}$$ -

(M.3) For \(0 < \alpha \le -1+\sqrt{2},\) if \(\beta < \phi (\alpha )\) then \(\mathsf{F}\) is an eroder of archipelagoes of ones in mean linear time.

Now we summarize the steps used to prove the main result: in Sect. 4, we define another operator \(\mathsf{G}\) in such a way that if \(\lim _{t\rightarrow \infty }\mu \mathsf{G}^t\rightarrow \delta _0\) then, \(\lim _{t\rightarrow \infty }\mu \mathsf{F}^t\rightarrow \delta _0\). The operator \(\mathsf{G}\) is still hard to manage. Thus in Sect. 5, we associate operator \(\mathsf{G}\) with a discrete time Markov chain, X, with the set of states \(\mathbb N,\) for which zero is the unique absorbing state, and we prove (M.1.). The study of process X is hard, so in Sect. 6 we define a coupling with another discrete time Markov chain, Y, which we treat analytically. Finally, in Sect. 7 we prove (M.2) and (M.3).

3 Order and an Application

Before we apply the main result to a particular probabilistic cellular automata, we need to describe the concept of order.

3.1 Order

To establish an order on the states, as per our usual pratice, let us consider \(0 < 1.\) Now, let us introduce a partial order on \(\Omega \) by saying that configuration x precedes configuration y or, what is the same, y succeeds x and writing \(x \prec y\) or \(y \succ x\) if \(x_i \le y_i\) for all \(i \in \mathbb {Z}.\)

Let us say that a measurable set \(S \subset \Omega \) is upper if

Analogously, a set S is lower if

It is easy to check that a complementary set to an upper set is lower and vice versa. Let us denote by y the configuration \(1^{\mathbb {Z}},\) so \(y\in S\). Let us take \(x^k\in \Omega \) such that \(x_{k}=0\) and \(x_i=1\) for all \(i\not = k,\) so we get an upper set \( S=\cup _{k=-\infty }^{\infty }\{x^k\}\cup \{y\}.\)

We introduce a partial order on \(\mathcal M\) by saying that a normalized measure \(\nu \) precedes \(\pi \) (or \(\pi \) succeeds \(\nu \)) if \(\nu (S) \le \pi (S)\) for any upper S (or \(\nu (S) \ge \pi (S)\) for any lower S, which is equivalent).

We call an operator \(\mathsf{P}: \mathcal M\rightarrow \mathcal M\) monotonic if \(\nu \prec \pi \) implies \(\nu \mathsf{P}\prec \pi \mathsf{P}\).

Lemmas 1, 2 and 3 can be found in [19].

Lemma 1

For all configurations x and y, an operator \(\mathsf{P}\) on \(\Omega \) with transition of probabilities \(\theta (\cdot |\cdot )\) is monotonic if and only if

Let us take \(\mathsf{P},~\mathsf{Q}\) two operators from \(\mathcal M\) to \(\mathcal M\). We say that operator \(\mathsf{P}\) precedes operator \(\mathsf{Q}\), if for all \(\nu \in \mathcal M\) follows \(\nu \mathsf{P}\prec \nu \mathsf{Q}.\) We denote this relationship \(\mathsf{P}\prec \mathsf{Q}.\)

Lemma 2

Given two operators \(\mathsf{P}\) and \(\mathsf{Q}\) on \(\Omega \) having transition of probability \(\theta ^{\mathsf{P}}(\cdot |\cdot )\) and \(\theta ^{\mathsf{Q}}(\cdot |\cdot ),\) respectively. Then \(\mathsf{P}\prec \mathsf{Q}\) if and only if

Lemma 3

Let us take \(\mathsf{P}\) and \(\mathsf{Q}\) monotonic operators on \(\Omega .\) If \(\mathsf{P}\prec \mathsf{Q}\) and at least one of \(\mathsf{P}\) and \(\mathsf{Q}\) is monotonic then \(\mathsf{P}^t\prec \mathsf{Q}^t\) for each natural value t.

Note that Lemma 1 gives us a means to conclude that our operator \(\mathsf{F}\) is not monotonic.

3.2 Percolation PCA

Here we consider a class of PCA that has a relationship with percolation. These models are refereed to as percolation operators in [19] and as percolation PCA in [20] and the second one is the way we will refer to them.

We now apply our results to a percolation PCA. We will denote this operator by \(\mathsf{P}\). It is a superposition of two operators, the first one deterministic, denoted by \(\mathsf{D},\) and the second one random, denoted by \(\mathsf{R}_{1-\alpha }\) [6, 19, 21].

Thus, \(\mathsf{P}=\mathsf{R}_{1-\alpha }\mathsf{D}.\) For \(x\in \Omega ,\) the \(\mathsf{D}\) operator transforms any configuration x into a configuration \(x\mathsf{D},\) whose \(k-\)th component is \((x\mathsf{D})_k=\max (x_{k-1},x_k,x_{k+1}).\) According to the classical decimal code introduced by Wolfram, this rule is the Elementary Cellular Automaton 254. \(\mathsf{R}_{1-\alpha }\) transforms 1 into 0 with probability \(1-\alpha \) independently of what happens to the other components. Thus, its transitions probability are given by

Note that \(\theta (1|000)=0\) since \(\max (0,0,0)=0\) and \(\mathsf{R}_{1-\alpha }\) does not change 0 to 1. Of course, \(\delta _{0} \mathsf{P}= \delta _{0}.\) It was proved in [6] that there is a constant \(\alpha ^*,\) which verifies \(1/3 \le \alpha ^{*}\le 53/54,\) and such that: (i)if \(\alpha <\alpha ^{*},\) then \(\lim _{t\rightarrow \infty }\nu \mathsf{P}^t\rightarrow \delta _{0}\) for all \(\nu \in \mathcal M\); (ii) if \(\alpha >\alpha ^{*},\) then \(\delta _{1} \mathsf{P}^t(1)> 0\) for all \(t>0.\) This means that the percolation operator shows a kind of phase transition.

Proposition 1 describes the mean time of convergence when the initial distribution belongs to \(\mathcal{A}_{1}.\)

Proposition 1

If \(\alpha <0.3,\) then \(\mathsf{P}\) is an eroder of archipelagoes of ones in mean linear time.

Proof

Let us denote by \(\mathsf{F}_{\alpha }\) and \(\tau _{\mu }^{\alpha }\) the operator \(\mathsf{F}\) and \(\tau _{\mu }\) when \(\beta =\alpha .\) Using Lemma 1, we conclude that \(\mathsf{F}_{\alpha }\) is monotonic. It is clear that \(\mathsf{P}\) is monotonic. Through Lemma 2, \(\mathsf{P}\prec \mathsf{F}_{\alpha }\). So, using Lemma 3, we obtain \(\mathsf{P}^t\prec \mathsf{F}_{\alpha }^t\). So, if \(\mathsf{F}_{\alpha }\) is an eroder of archipelagoes of ones in mean linear time, then \(\mathsf{P}\) is an eroder of archipelagoes of ones in mean linear time, too. By (M.3), if \(\alpha <0.3\) then \(\mathsf{F}_{\alpha }\) is an eroder of archipelagoes of ones in mean linear time. \(\square \)

4 The Growth Operator \(\mathsf{G}\)

Now we will describe growth operator, \(\mathsf{G},\) where if \(\lim _{t\rightarrow \infty }\mu \mathsf{G}^t\rightarrow \delta _0\) then, \(\lim _{t\rightarrow \infty }\mu \mathsf{F}^t\rightarrow \delta _0.\) We define the growth operator, \(\mathsf{G},\) in such way that it will be a Markov chain with a countable set of states.

Given that \(x\in \Delta _{1}\), we take \(i_0<j_0\) such that \(x_{i_0+1}=x_{j_0-1}=1\) and \(x_k=0\) for all \(k\le i_0\) or \(k\ge j_0.\) Thus, we define the following configuration:

We shall define \(\overline{\Delta }_{1}\) as the set of all \(x\in \Delta _{1}\) such that \(x=\overline{x}.\) Informally speaking, \(\overline{x}\) is a configuration with a finite “block” of ones in a background of zeros. Let \(~\overline{\mathcal{A}}_{1}\) be the set of normalized measures uniform on the \(\sigma \)-algebra generated by cylinders in \(\overline{\Delta }_{1}.\) Note that \(\overline{\Delta }_{1}\) is countable, so any measure belonging to \(\overline{\mathcal{A}}_{1}\) is a convex combination of \(\delta \)-measures; more precisely, if \(\mu \in \overline{\mathcal{A}}_{1}\) then

where \(k_x\ge 0\) for all \(x\in \overline{\Delta }_{1}\) and \(\sum _{x\in \overline{\Delta }_{1}}k_x=1.\)

Discrete-time growth operator \(\mathsf{G}:\overline{\mathcal{A}}_{1}\cup \{\delta _{0}\}\rightarrow \overline{\mathcal{A}}_{1}\cup \{\delta _{0}\}\) is defined by its transition probabilities depending on the state of its neighbourhood.

Remember that \(\theta (\cdot |\cdot )\) is the transition probability of \(\mathsf{F},\) as we can see in (2). Now, let us consider \(x\in \overline{\Delta }_{1}\) and the positions \(i_0<j_0\) such that \(x_{k}=1\) if \(i_0<k<j_0\) and \(x_k=0\) otherwise. The operator \(\mathsf{G}\) acts only on the components \(x_{i_0},~x_{i_0+1},~x_{j_0-1}\) and \(x_{j_0};\) all the other components will remain in the same state. When \(\mathsf{G}\) acts on \(x_{i_0+1}\) and \(x_{i_0},\) we say that \(\mathsf{G}\) is acting on the left side. When \(\mathsf{G}\) acts on \(x_{j_0-1}\) and \(x_{j_0},\) we say that \(\mathsf{G}\) is acting on the right side. Now, let us define the following events:

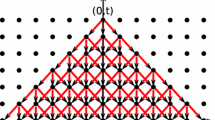

If \(j_0-1>i_0+1,\) i.e., \(\mathsf{length}(x)>1,\) the operator \(\mathsf{G}\) acts in the following manner on the left side: just one of the three events \(L_1,~L_2\) and \(L_3\) will occur. Similarly, when \(\mathsf{G}\) acts on the right side, just one of the three events \(R_1,~R_2\) and \(R_3\) will occur. Thus, after applying the operator \(\mathsf{G},\) just one among the events \(L_i\cap R_j\) for \(i,j\in \{1,2,3\}\) will occur (see Fig. 1) and through the independence of \(L_i\) and \(R_j,\) we obtain \(\mathbb P(L_i\cap R_j)=\mathbb P(L_i)\mathbb P(R_j).\) We get \(\mathbb P(R_1)= \theta (0|110)\theta (0|100),~\mathbb P(R_2)= \theta (1|110)\theta (0|100)\) and \(\mathbb P(R_3)= \theta (1|100).\) Note that \(\mathbb P(R_i)=\mathbb P(L_i)\) for \(i\in \{1,~2,~3\}.\)

We define the events \(E_1=\{x_{i_0} \hbox { becomes }1\}\) and \(E_2=\{x_{j_0} \hbox { becomes }1\}\). Now, we define the operator \(\mathsf{G}\) for the case \(j_0-1=i_0+1,\) i.e., when \(\mathsf{length}(x)=1\). After applying the operator \(\mathsf{G},\) just one of the following events can occur: \( L_2\cap R_2,~ R_1\cap E_1,\) \(~L_1\cap E_2,\) \(~L_1\cap R_1,\) \(~L_2\cap R_3,\) \(~L_3\cap R_2,~E_1\cap E_2.\) Here, the difficulty is that the events \(L_i\) and \(R_j\) for \(i,j\in \{1,2,3\}\) are not independent of each other once \(x_{j_0-1}=x_{i_0+1}.\) The events have following probabilities:

We assume that \(\delta _{0}\mathsf{G}=\delta _{0}\).

As \(\mathsf{G}\) acts on measures \(\mu \) that are a convex combination of \(\delta _x\) for \(x\in \overline{\Delta }_1.\) It is enough to define as \(\mathsf{G}\) acts in \(\delta _x\).

We defined our operator \(\mathsf{G}\) acting on \(\delta -\)measures. It is clear that \(\mathsf{G}\) is linear; thus, by (6) we defined \(\mathsf{G}\) acting on any measure belonging to \(\overline{\mathcal{A}}_1.\) In Fig. 2, we illustrate 7 time steps of the our operator \(\mathsf{G}\) acting on \(\delta _x.\)

Given \(\mu ,\) we get \( \mu =\sum _{x\in {\Delta _{1}}}k_x\delta _{x}, \) where \(k_x\ge 0\) for all \(x\in {\Delta _{1}}\) and \(\sum _{x\in {\Delta _{1}}}k_x=1.\) So, we obtain \( \overline{\mu }=\sum _{x\in {\Delta _{1}}}k_x\delta _{\overline{x}}. \) Of course, \(\overline{\mu }\in \overline{\mathcal{A}}_{1}\) and \(\mu \prec \overline{\mu }.\)

Lemmas 4 and 5 describe some of the relations between operators \(\mathsf{F}\) and \(\mathsf{G}\).

Lemma 4

Given \(\alpha ,~\beta \in (0,1),~x\in \Delta _{1}\) and \(\delta _x\) its respective normalized measure. If \(\lim _{t\rightarrow \infty }\delta _{\overline{x}}\mathsf{G}^t=\delta _{0}\). then \(\lim _{t\rightarrow \infty }\delta _x \mathsf{F}^t=\delta _{0}\) .

Proof

By the definition of \(\mathsf{F}\) and \(\mathsf{G},\) we have: \(\delta _{x}\mathsf{F}^t(1)\le \delta _{\overline{x}}\mathsf{G}^t(1).\) So, \(\lim _{t\rightarrow \infty }\delta _{\overline{x}}\mathsf{G}^t(1)= 0\), implying that \(\lim _{t\rightarrow \infty }\delta _{\overline{x}}\mathsf{F}^t(1)= 0\). \(\square \)

Lemma 5

Given \({\mu },\) if \(\lim _{t\rightarrow \infty }\overline{\mu }\mathsf{G}^t=\delta _{0}\), then \(\lim _{t\rightarrow \infty }\mu \mathsf{F}^t=\delta _{0}.\) .

Proof

The \(\overline{\mu }\mathsf{G}^t=\sum _{x\in {\Delta _{1}}}k_x\delta _{\overline{x}}\mathsf{G}^t\). As \(\overline{\mu }\mathsf{G}^t\rightarrow \delta _{0},\) then \(\delta _{\overline{x}}\mathsf{G}^t\rightarrow \delta _{0};\) so, using Lemma 4 we get \(\delta _{{x}}\mathsf{F}^t\rightarrow \delta _{0}\). Then \(\sum _{x\in {\Delta _{1}}}k_x\delta _{{x}}\mathsf{F}^t\rightarrow \delta _{0}.\) \(\square \)

5 The Operator \(\mathsf{G}\) and the Process X

Our main task here is to define the process \(X=(X_t)_{t\in \mathbb N},\) which assumes values in \(\mathbb N.\) Informally speaking if we started in a measure concentrated at an island x, then \(X_t\) will describe the length of that island at time t.

Given \(x\in \overline{\Delta }_{1}\) and \(\delta _x\), there are positions \(i_0<j_0\) such that \(x_{k}=1\) if \(i_0<k<j_0\) and \(x_k=0\) otherwise. If x is the configuration \(0^{\mathbb {Z}}\), we say that \(j_0=i_0+1.\) We define \(X_0=j_0-i_0-1;\) note that \(X_0=\mathsf{length}(x)\). After applying \(\mathsf{G},\) with probability one, we will get new positions \(i_1<j_1,\) where \(x_{k}=1\) if \(i_1<k<j_1\) and \(x_k=0\) otherwise. For those same positions, we define \(X_1=j_1-i_1-1\). Continuing with this argumentation, after applying \(\mathsf{G}^t,\) with probability one, we will get new positions \(i_t<j_t,\) where \(x_{k}=1\) if \(i_t<k<j_t\) and \(x_k=0\) otherwise. For those same positions, we define \(X_t=j_t-i_t-1\). Observe that the random variable \(X_t\) matches the length of the island x after t time steps. Figure 2 illustrates a possible action of our operator \(\mathsf{G}\) on \(\delta _x\) and shows corresponding values of \(X_t\) and \((i_t,j_t)\).

The random variables \(i_t\) and \(j_t\), (\(i_t<j_t\)), are related to the event \(L_i\cap R_j\) for \(i,j\in \{1,2,3\},\) which happened in the previous time step. In the appendix, we describe the probabilities transition of \(i_t\) and \(j_t\) and the probabilities transition of the process X associated with the operator \(\mathsf{G}\). Using the \(\theta (\cdot |\cdot )\) values defined in (2), we obtain the following equation:

\( \mathbb P(X_{t+1}=c|X_t=c)\quad ~~=\left\{ \begin{array}{ll} 1&{}\hbox { if }c=0,\\ \beta (1-\alpha )^2+2\alpha (1-\alpha )(1-\beta )&{}\hbox { if }c=1,\\ \beta ^2(1-\alpha )^2+2\alpha (1-\alpha )(1-\beta )&{}\hbox { if }c\ge 2. \end{array}\right. \)

\( \mathbb P(X_{t+1}=c-1|X_t=c)=\left\{ \begin{array}{ll} 0&{}\hbox { if }c=0,\\ (1-\alpha )^2(1-\beta )&{}\hbox { if }c=1,\\ 2\beta (1-\alpha )^2(1-\beta )&{}\hbox { if }c\ge 2. \end{array}\right. \)

\( \mathbb P(X_{t+1}=c-2|X_t=c)=\left\{ \begin{array}{ll} 0&{}\hbox { if }c<2,\\ (1-\alpha )^2(1-\beta )^2&{}\hbox { if }c\ge 2. \end{array}\right. \)

\( \mathbb P(X_{t+1}=c+1|X_t=c)=\left\{ \begin{array}{ll} 0&{}\hbox { if }c=0,\\ 2\alpha \beta (1-\alpha )&{}\hbox { if }c>0. \end{array}\right. \)

\( \mathbb P(X_{t+1}=c+2|X_t=c)=\left\{ \begin{array}{ll} 0&{}\hbox { if }c=0,\\ \alpha ^2&{}\hbox { if }c>0. \end{array}\right. \)

In Fig. 3, we show the diagram of the process X. In state one, indicated by the first dark gray ball from the left to the right, there are only non-null transition probabilities to go to 0, 1, 2 and 3. For any state \(c>1\) indicated by the second dark gray ball, from the left to the right, there are only non-null transition probabilities to go to \(c-2,~c-1,~c,~c+1\) and \(c+2\).

Proof of (M.1). When \(\alpha =\beta =0,\) (M.1) follows from the transition probability of \(\mathsf{F}\). When \(\alpha =0\) and \(\beta \in (0,1)\) the process X is really easy to manage. It only has non-null transition probabilities to stay on the same position or go to the left side, decrease by one or two units. Therefore, process X goes to zero with probability one. So, for all \(\overline{\mu }\) we get \(\lim _{t\rightarrow \infty }\overline{\mu }\mathsf{G}^t=\delta _0\). Thus, by the Lemmas 4 and 5 we get \(\lim _{t\rightarrow \infty }\mu \mathsf{F}^t=\delta _0\). The mean time to absorption of X gives an upper bound of the mean time of convergence of \(\mathsf{G},\) which is the upper bound of the mean time of convergence of \(\mathsf{F}.\) \(\square \)

From now on, in our text, we will take \(0<\alpha <1\) and \(0<\beta <1,\) which is enough to prove (M.2) and (M.3).

6 The Process X and the Process Y

We now present the process X in another manner, which will allow us to couple it with a discrete time Markov chain Y. Process Y is simpler than the process X, what allows us to study Y analytically.

Given \(\alpha ,~\beta \in (0,1),~a\ge 2\) and \(i=1,2\) we define

For simplicity we will denote here \(l_2(\alpha ,\beta ),~l_1(\alpha ,\beta ),r_1(\alpha ,\beta )\) and \(r_2(\alpha ,\beta )\)by \(l_2,l_1,r_1\) and \(r_2\) respectively. We further define intervals Also, we shall define intervals \(I_i\) for \(i=1,2,\ldots ,7.\)

Let \(U_1,~U_2,\ldots \) be a sequence of independent identically distributed random variables, where \(U_t\) has a uniform distribution on [0, 1].

We define the process \(Y=(Y_t)_{t\in \mathbb N}\) in the following way: let \(a\in \mathbb N,~Y_0=a\) and \(Y_{t+1}=F^Y(Y_{t},U_{t+1})\) where

In the Fig. 4, we show the diagram of the process Y. For any state \(a\ge 1\), indicated by the dark gray ball, there are just transition probabilities to go to \(~a-1,~a,~a+1\) and \(a+2\).

In contrast to the process X, the process Y acts in a homogenous manner at each \(a\ge 1.\)

In the same spirit used to define the process Y (8), process X can be defined in the following way: let \(a\in \mathbb N,~X_0=a\) and \(X_{t+1}=F^X(X_{t},U_{t+1}),\) where

Let \(a,b\in \mathbb N\). We define the coupling of X and Y as follows:

In Fig. 5 we illustrate a possible realization of \((X_t,Y_t)\) in the first six time steps with \((X_0,Y_0)=(3,4).\)

Lemma 6

If Y is an absorbing Markov chain (for all \(\epsilon >0,~\lim _{t\rightarrow \infty }\mathbb P(Y_t\ge \epsilon )= 0\)) then X is an absorbing Markov chain (for all \(\epsilon >0,~\lim _{t\rightarrow \infty }\mathbb P(X_t\ge \epsilon )=0\)).

Proof

Let \(a,b\in \mathbb N.\) As \(\{(X_t,Y_t)\}_{t=0}^{\infty }\) is a coupling, it follows that if \(X_0=a\le b=Y_0,\) then \(\mathbb P(X_t\le Y_t)=1.\)

Given an arbitrary \(\epsilon >0\) and \(X_0=Y_0=a,\) we get \(\mathbb P(Y_t\le \epsilon )\le \mathbb P(X_t\le \epsilon )\). As \(\lim _{t\rightarrow \infty }\mathbb P(Y_t\le \epsilon )= 1\), then \(\lim _{t\rightarrow \infty }\mathbb P(X_t\le \epsilon )=1\). \(\square \)

7 Proof of (M.2) and (M.3)

Let us denote by \(h_i\) the probability that the process Y hits the state 0 given that \(Y_0=i\). The fundamental relationship among the \(h_i\) is the following (see [22]):

where \(l_1,~r_1\) and \(r_2\) were defined previously.

Lemma 7

For \(C_1\) and \(C_2\) constants,

and for \(j=1,2\) we define

-

(a)

The solution of system (10) is

$$\begin{aligned} \begin{array}{l} h_i=1+C_1((\gamma _1)^i-1)+C_2((\gamma _2)^i-1); \end{array} \end{aligned}$$ -

(b)

If \(\beta \le \phi (\alpha ),\) then \(C_1\) and \(C_2\) are equal to zero.

Proof

In the appendix.

Given the process X, we denote the hitting time of state zero given that we started at state i by

where the infimum of the empty set is \(\infty .\) We denote \(\mathrm{I\!E}(H_i^X)\) by \(k_i^X\). \(\square \)

Proof of (M.2). Let us take \(0 \le \alpha \le -1 +\sqrt{ 2}\), \(\beta \le \phi (\alpha )\) and \(i\in \mathbb N\) . Apply Lemma 7, for \(h_i=1,\) i.e., given \(Y_0=i\) and \(\epsilon >0,\) then \(\lim _{t\rightarrow \infty }\mathbb P(Y_t>\epsilon )= 0.\) The process Y is an absorbing chain, then from Lemma 6 we can conclude that the process X is also an absorbing chain. Remember that X describes the length of the island under the action of the operator \(\mathsf{G}\) that can: increase, decrease, or stay the same. Therefore, the fact that the process X is an absorbing chain (\(\forall \epsilon>0,~\lim _{t\rightarrow \infty }\mathbb P(X_t>\epsilon )= 0\)), implies that for any island \(\overline{x}\in \overline{\Delta }_{1},\) we will get \(\lim _{t\rightarrow \infty }\delta _{\overline{x}}\mathsf{G}^t=\delta _{0}\). So, for any \(\overline{\mu }\in \overline{\mathcal{A}}_{1}\) we get

where \(k_{\overline{x}}\) are non-negative and \(\sum _{{\overline{x}}\in \overline{\Delta }_{1}}k_{\overline{x}}=1.\) Therefore, using the fact that for any \(\mu \) there is \(\overline{\mu }\in \overline{\mathcal{A}}_{1}\) such that \(\mu \prec \overline{\mu }\), by Lemma 5 we conclude the proof. \(\square \)

Given the process Y, we denote the hitting time of state zero given that we started at state i by

where the infimum of the empty set is \(\infty .\) \(\mathrm{I\!E}(H_i^Y)\) is the expected amount of time before the process Y hits zero, conditioned that the process starts at i. For simplicity, we will denote, \(\mathrm{I\!E}(H_i^Y)\) by \(k_i^Y.\) The fundamental relationship among the \(k_i^Y\)’s is the following (see [22]):

where \(l_1,~r_1\) and \(r_2\) were defined previously.

Lemma 8

Let \(C_1\) and \(C_2\) be constants; we take \(\gamma _1\) and \(\gamma _2\) as defined in Lemma 7, and we denote \(\eta =1-2\alpha +\alpha ^2(\beta -1)-\beta .\)

-

(a)

For \(\beta \not = \phi (\alpha )\), the solution of system (11) is

$$\begin{aligned} k_i^Y=\frac{i}{\eta }+C_1((\gamma _1)^i-1)+C_2((\gamma _2)^i-1); \end{aligned}$$ -

(b)

If \(\beta <\phi (\alpha ),\) then \(C_1\) and \(C_2\) are equal to zero.

Proof

See the appendix.

Before proving (M.3), we will need to establish some definitions. Let \(x\in \Delta _{1};\) then we define the random variables

The infimum of the empty set is \(\infty .\) \(\square \)

Now, we are ready to prove (M.3).

Proof of (M.3) From Lemma 6, we conclude that \(H_i^X\le H_i^Y\). So,

Let \(x\in \Delta _{1}\). From Lemma 5, we conclude that \(\tau _x^{\mathsf{F}}\le \tau _{\overline{x}}^{\mathsf{G}}.\) So,

Note that given \(x\in \Delta _{1}\) we get \(\mathsf{length}(x)=\mathsf{length}(\overline{x})\). Remember that the process X describes the quantity \(\mathsf{length}(\overline{x})\) under the action of the operator \(\mathsf{G}\). Informally speaking, when the process X achieves the absorbing state zero we get that \(\delta _{\overline{x}}\mathsf{G}^t\) achieves the measure \(\delta _{0}\) i.e. \(\tau _{\overline{x}}^{\mathsf{G}}=H^X_{\mathsf{length}(\overline{x})}\). So,

Thus, using (12), (13) , (14) and Lemma 8, we conclude that, for any \(x\in \Delta _{1},\)

where \(\eta \) was defined in Lemma 8.

Given \(\mu ,\) there is a sequence of islands of ones \(x^1,~x^2,\ldots \) such that \(\mu \) is a convex combination of \(\delta _{x^1},~\delta _{x^2},\ldots \) We also assume \(\mathsf{giant}(\mu )<\infty ,\) i.e., there is a natural value M such that \(\mathsf{length}(x^i)<M\) for \(i\mathbb N^*.\) The operator \(\mathsf{F}\) is linear, so \(\mu \mathsf{F}^t\) is a convex combination of \(\delta _{x^1}\mathsf{F}^t,~\delta _{x^2}\mathsf{F}^t,\ldots \) Thus,

Hence,

The second inequality is a consequence of (15).

Given \(\alpha \in (0,-1+\sqrt{2}),~\beta < \phi (\alpha ),\) and \(\eta \). \(\square \)

8 Numerical Study

Our process will be studied using mean-field approximation and the Monte Carlo method (M.C.).

8.1 Mean-Field Approximation

Similar to what Ramos and Toom did [23], we define the mean-field operator operator \(\mathcal{C}\,:\,\mathcal M\rightarrow \mathcal M\). Its action amounts to mixing randomly all the components. In other words, for each \(\nu \in \mathcal M,\) the measure \(\nu \,\mathcal{C}\) is a product measure with the same densities of zeros and ones as \(\nu \) has. The mean-field operator allows us to approximate a given process \(\nu \, P^t\) on the configuration space \(\Omega ^\mathbb {Z}\) by another process \(\nu \,(\mathcal{C}\, P)^t\) on the same space. (Every time, we apply \(\mathcal{C}\) first, then P). Thus, instead of the original process, whose set of variables is infinite or very large, we deal with the evolution of densities of zeros and ones. Since densities of zeros and ones sum up to one, the number of independent variables in the mean-field approximation is equal to one. We choose to deal with the density of ones.

The density of 1 in the measure \(\nu \, (\mathcal{C}\mathsf{F})\) is expressed as

As \(\nu \mathcal{C}\) is a product measure, x denotes the density of ones in the measure \(\nu \), f(x) denotes the density of ones in the measure \(\nu \mathsf{F}\). We get,

Thus, f(x) is defined and continuous for \(x,\quad \alpha ,\quad \beta \in [0,\ 1].\) The study of the operator \(\mathsf{F}\) is substituted with a study of \(\mathcal{C}\mathsf{F}\), which goes to the study of dynamical systems \(f : [0,1] \rightarrow [0,1]\) with parameters \(\alpha ,\quad \beta \in (0,\ 1)\). As usual, we call a fixed point of f any value of \(x \in [0,\ 1]\) such that \(f(x) = x\).

Let us define

It is easy to conclude for the function f defined in (16),

-

If \(1-2\alpha<\beta <1-\alpha /2,\) then \(\beta _1\) and \(\beta _3\) are the only fixed points.

-

If \(\beta < 1-2\alpha \) or \(1-\alpha /2<\beta ,\) then \(\beta _1,\quad \beta _2\) and \(\beta _3\) are the only fixed points.

Now, let us denote by \(f^t(x)\) the t-th iteration of f on the argument x. It is easy to show that

-

If \(\beta \le 1-2\alpha ,\) then \(\lim _{t\rightarrow \infty }f^t(x_0)=0\) for all \(x_0\in [0,1).\)

-

If \(\beta \ge 1-\alpha /2,\) then \(\lim _{t\rightarrow \infty }f^t(x_0)=1\) for all \(x_0\in (0,1].\)

-

If \( 1-\alpha /2<\beta <1-2\alpha ,\) then \(\lim _{t\rightarrow \infty }f^t(x_0)=\beta _2\) for all \(x_0\in (0,1).\)

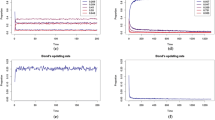

These results show that our mean-field approximation has three kinds of distinct behaviors. In Fig. 6 we plot the curves \(1-2\alpha \) and \(1-\alpha /2\) which determines when the mean-field approximation has only two fixed points or only three fixed points.

8.2 Monte Carlo Simulations

Any cellular automaton may be defined on an infinite space \(\mathbb {Z}\) or on a finite space with periodic boundary conditions, \(\mathbb {Z}_n-\) the set of remainders modulo n, where n is an arbitrary natural number. The study of binary PCA in finite space [24, 25] and infinite space [8, 26] is an active area of research.

For our finite cellular automata, let us consider the set of states \(\Omega _n=\{0,1\}^{\mathbb {Z}_n}\). We call the elements of \(\Omega _n\) periodic configurations. The periodic configurations are finite sequences of ones and zeros, now we imagine these sequences to have a periodic form.

\(C^t\) denotes the periodic configuration obtained at time t, and \(c^t_i\) denotes its i-th components where \(i=0,\ldots ,n-1.\) We consider the periodic configurations \(C^t\) as representations of measures \(\mu ^t\in \mathcal{M}_{\Omega _n},\) so the sequence \(C^0,~C^1,~ C^2,\ldots ,\) is a trajectory of some random process \(\mu ^0,\mu ^1,\mu ^2,\ldots \)

The graph illustrates some of our results. The Theoretical Curve indicates the curve \(\phi (\alpha ).\) The two Mean-Field Approximation indicate the curves, \(1-2\alpha \) and \(1-\alpha /2,\) obtained in our mean-field approximation study. The two Monte Carlo (M.C.) indicate the two curves obtained in simulations. The curves were obtained as an average of 10 independent experiments.

Given a periodic configuration \(C = (c_0,\ldots ,c_{n-1})\), we define the density of 1 in C as follows:

Also, we define the following quantities:

The purpose of (18) is to estimate the density of 1 at the limit distribution.

In a similar fashion, we can define \(\overline{\mathsf{dens}}(10|\mu ^t),\) i.e, the density 10 at the limit distribution.

As for the M.C. simulation, initially we take \(n=1000,\) where \(c^0_0=1\) and \(c^0_{1}=\ldots =c^0_{999}=0.\) We fix the parameters \((\alpha ,\beta )\) and perform our M.C. simulation for 100000 time steps.

We used our M.C. simulation as follows: if at the end of iteration the quantity \({\mathsf{dens}}(1|C^t)\) was zero, we interpreted it as a suggestion that the infinite process with the pair \((\alpha ,\beta )\) is an eroder of archipelagoes of ones; if at the end of iteration the quantity \({\mathsf{dens}}(1|C^t)\) was positive, we interpreted it as a suggestion that the infinite process with the pair \((\alpha ,\beta )\) is not eroder of archipelagoes of ones.

We used our M.C. simulation within a cycle with a fixed \(\alpha \) and growing \(\beta \): we started with \(\beta = 0\) and then iteratively performed our M.C simulation increasing \(\beta \) by 0.001 until \(\beta \) reached the value 1 or \({\mathsf{dens}}(1|C^t)\) was positive. Thus, we obtained a certain value of \(\beta \). In fact, we performed this cycle 10 times and recorded the arithmetical average of the 10 values of \(\beta \) thus obtained. All this was done for 1000 values of \(\alpha ,\) namely, the values \(0.001 \times i\) with \(i = 1,...,1000\). Thus we obtained 1000 pairs \(( \alpha ,\beta )\) represented by the lower “curve” in Fig. 6.

To obtain the upper “curve” in Fig. 6, we perform the M.C. simulation in the same way. Except this time for each \(\alpha \) we stopped the simulations at the smallest value of \(\beta \) for which the \({\mathsf{dens}}(1|C^t)\) is equal to one.

Finally, we perform our M.C. simulation in yet another way. Let us take

For each pair \((\alpha _i,\beta _j),\) we computed \(\overline{\mathsf{dens}}(1|\mu ^{100000})\) and the density of the pattern 10, \(\overline{\mathsf{dens}}(10|\mu ^{100000})\) and plotted the densities for the pairs of parameters in Fig. 7a, b, respectively. The values of the densities are represented by colors.

To verify the robustness of the simulation results, we performed experiments considering two kinds of initial condition: first one, we assume blocks of 1’s with different lengths, i.e., \(c_0^0 =\ldots =c_{n-1}^0=1\) and \(c_n^0=\ldots =c_{999}^0=0\), where \(n=2,\ldots ,10\); to the second one, we considered random initial periodic configuration, i.e., initial state of each site was chosen randomly with equal probability 1 / 2 for states 1 and 0. In both cases the results were the qualitatively the same.

A particular case of our process, when \(\beta =1-\alpha ,\) has been considered in [14]. Regnault obtained the following result for a configuration x where \(x_0=1\) and \(x_i=0\) for all \(i\not = 0\): If \(\alpha \ge 0.996,\) then \(\delta _{x} \mathsf{F}^t(1)\) belongs to (0, 1) for all \(t\ge 0.\) This result is verified by our computer simulations.

Here we get two space–time diagrams. In each figure we took \(C^0=(c^0_0,\ldots ,c^0_{99}),~t=0,\ldots ,100\) and the ones and zeros are represented by black and white colors respectively. We started with a uniform distribution of zeros and ones. Also, we assumed \(\beta =1-\alpha \) and \(\alpha \in \{0.1,0.9\}.\) In a when \(\alpha =0.1,\) we see ones and zeros are concentrate in massive blocks. In b when \(\alpha =0.9,\) we see the ones and zeros well mixed. The direction of the time flow follows from bottom to top

9 Concluding Remarks and Open Problems

We studied a particular non-monotonic PCA. For our process, we assumed a class of initial distributions and we were able to prove the convergence and give an upper bound to the mean time. The definitions of eroder operator and eroder’s time are well-known in deterministic cellular automata. In this work, we defined eroder operator and eroder’s mean time in PCA. We believe that this definition will facilitate research in this area.

We assumed the initial distribution of our process as an archipelago of ones. Our numerical studies indicate that, depending on the parameters, there are three distinct behaviors. The first one, the density of ones goes to zero; the second one, it goes to one; and the third one, it is strictly between zero and one, not reaching these extreme values. In (M.1) and (M.2), we were able to prove rigorously only the first behavior. For the two others behaviors, we are only able to offer the following conjecture.

Conjecture 1

There are subsets S of \([0,1]\times [0,1]\) and partition of S, \(S=S_1\cup S_2,\) such that for a given \(\mu ,\) if \((\alpha ,\beta ) \in S\) then \(0<\mu \mathsf{F}^t(1)<1\) for all \(t>0.\) Moreover, if \((\alpha ,\beta )\in S_1\)(respectively \((\alpha ,\beta )\in S_2\)), then \(\mu \mathsf{F}^t\) converges (respectively does not converge) to a convex combination of \(\delta _{0}\) and \(\delta _{1}\) when \(t\rightarrow \infty .\)

Part of Conjecture 1 was suggested by the results of our M.C. simulation. The third behavior of our process, when the density of ones is strictly between zero and one, has two further case: in the first one, the density of the 01 pairs goes to zero, and in the second one the density of the 01 pair is positive all the time.

This two cases are illustrated in the space-time diagram in Fig. 8 for \(\alpha =0.1\) and \(\beta =0.9,\) i.e, \((\alpha ,\beta )\in S_1\) and for \(\alpha =0.9\) and \(\beta =0.1,\) i.e, \((\alpha ,\beta )\in S_2.\) Our numerical studies, show cross diagonal that \((\alpha ,1-\alpha )\in S,\) where S is the region defined at our conjecture. Moreover, our simulations suggest that: if \(\alpha <1/2\) (resp. \(\alpha >1/2\)) then \(\lim _{t\rightarrow \infty }\mu \mathsf{F}^t(10)= 0\) (resp. \(\lim _{t\rightarrow \infty }\mu \mathsf{F}^t(10)>0\)). This result is an extension of the work presented in [14].

References

Galperin, G.A.: One-dimensional automaton networks with monotonic local interaction. Probl. Inf. Transm. 12(4), 74–87 (1975)

Galperin, G.A.: Homogeneous local monotone operators with memory. Sov. Math. Dokl. 228, 277–280 (1976)

Galperin, G.A.: Rates of interaction in one-dimensional networks. Probl. Inf. Transm. 13(1), 73–81 (1977)

de Menezes, M.L., Toom, A.: A non-linear eroder in presence of one-sided noise. Braz. J. Probab. Stat. 20(1), 1–12 (2006)

de Santana, L.H., Ramos, A.D., Toom, A.: Eroders on a plane with three states at a point. Part I: deterministic. J. Stat. Phys. 159(5), 1175–1195 (2015)

Toom, A.: Contornos, Conjuntos convexos e Autômato Celulares (in Portuguese). \(23^o\) Colóquio Brasileiro de Matemática, IMPA, Rio de Janeiro (2001)

Toom, A.: Stable and attractive trajectories in multicomponent systems. In: Dobrushin, R., Sinai, Y. (eds.) Multicomponent Random Systems. Advances in Probability and Related Topics, Dekker, vol. 6, pp. 549–576 (1980)

Mairesse, J., Marcovici, I.: Around probabilistic cellular automata. Theor. Comput. Sci. 559, 42–72 (2014)

Ponselet, L.: Phase transitions in probabilistic cellular automata. arXiv:1312.3612 (2013)

Petri, N.: Unsolvability of the recognition problem for annihilating iterative networks. Sel. Math. Sov. 6, 354–363 (1987)

Kurdyumov, G.L.: An algorithm-theoretic method for the study of homogeneous random networks. Adv. Probab. 6, 471–504 (1980)

Toom, A., Mityushin, L.: Two results regarding non-computability for univariate cellular automata. Probl. Inf. Transm. 12(2), 135–140 (1976)

Fatès, N.: Directed percolation phenomena in asynchronous elementary cellular automata. In: LNCS Proceedings of 7th International Conference on Cellular Automata for Research and Industry, vol. 4173, pp. 667–675 (2006)

Regnault, D.: Directed percolation arising in stochastic cellular automata. In: Proceedings of MFCS 2008. LNCS, vol. 5162, pp. 563–574 (2008)

Buvel, R.L., Ingerson, T.E.: Structure in asynchronous cellular automata. Physica D 1, 5968 (1984)

Harris, T.E.: Contact interactions on a lattice. Ann. Probab. 2(6), 969–988 (1974)

Liggett, T.M.: Interacting Particle Systems. Springer, Berlin (1985)

Toom, A.L.: A Family of uniform nets of formal neurons. Sov. Math. Dokl. 9(6), 1338–1341 (1968)

Toom, A., Vasilyev, N., Stavskaya, O., Mityushin, L., Kurdyumov, G., Pirogov, S.: Stochastic cellular systems: ergodicity, memory, morphogenesis. In: Dobrushin, R., Kryukov, V., Toom, A. (eds.) Nonlinear Science: Theory and Applications. Manchester University Press, Manchester (1990)

Taggi, L.: Critical probabilities and convergence time of percolation probabilistic cellular automata. J. Stat. Phys. 159(4), 853–892 (2015)

Toom, A.: Ergodicity of cellular automata .Tartu University, Estonia as a part of the graduate program (2013). http://math.ut.ee/emsdk/intensiivkursused/TOOM-TARTU-3.pdf

Norris, J.R.: Markov Chains. Cambridge University Press, Cambridge (1997)

Ramos, A.D., Toom, A.: Chaos and Monte-Carlo approximations of the Flip-Annihilation process. J. Stat. Phys. 133(4), 761–771 (2008)

Fatès, N., Thierry, É., Morvan, M., Schabanel, N.: Fully asynchronous behavior of double-quiescent elementary cellular automata. Theor. Comput. Sci. 362, 1–16 (2006)

Słowiński, P., MacKay, R.S.: Phase diagrams of majority voter probabilistic cellular automata. J. Stat. Phys. 159, 4361 (2015)

Taati, S.: Restricted density classification in one dimension. In: Proceedings of AUTOMATA-2015. LNCS 9099, pp. 238–250. Springer, Berlin (2015)

Acknowledgements

The authors would like to thank the anonymous reviewers for their valuable comments and suggestions, which certainly improved the presentation and quality of the paper.

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

1.1 Transitions of \((i_t,j_t)\) and Process X

Now, we will study the random variables \(i_t\) and \(j_t\) (see Fig. 2), starting by its probabilities transition. Let \(a,~b,~c,~d\in \mathbb N\). We denote by

On \(\mathbb P((a,b-1)|(a,b))\) and \(\mathbb P((a+1,b)|(a,b)),\) we do not indicate what happened when \(b=a+2,\) because in this case just on the position \(a+1\) we get the component on state 1 and in all the other components the state is 0. Moreover, in this case \(\{(a, b-1)| (a, b)\}= \{(a+1, b)|(a, b)\},\) hence we reach the configuration “all zeros” and \(\mathbb P((a+1,b)|(a,b))=\mathbb P(R_1\cap L_1).\)

For \(\mathbb P((a,b-1)|(a,b)),~\mathbb P((a+1,b)|(a,b))\) (resp. \(\mathbb P((a+1,b+1)|(a,b)),~\mathbb P((a-1,b-1)|(a,b))\) ) if \(b> a+2\) (resp. \(b=a+2\)) then \(j_t-i_t-1\) describes the length of the island of ones which with non-null probability can: increase by one, increase by two, decrease by one, decrease by two, or stay the same.

Now, we define process X :

where \(c=b-a-1\). Thus, we concluded the task to define the process X associated to G.

1.2 Proof of Lemmas 7 and 8

Proof of lemma 7

Item (a) results directly from the difference equation (10). Now, let us prove item (b); before we do that, several observations need to be established:

-

(1)

If \(\alpha \in (0,1)\) and \(\beta \le \phi (\alpha ),\) then \(\gamma _1\ge 1\). Moreover, \(\gamma _1= 1\) when \(\beta = \phi (\alpha ).\)

-

(2)

If \(\alpha \in (0,1/3],\) then \(\gamma _2< -1.\)

-

(3)

If \(\alpha \in (1/3,1)\) and \(\beta \ge (\alpha -1)/(3\alpha -1),\) then \(\gamma _2\le -1\). Moreover, \(\gamma _2=-1\) when \(\beta = (\alpha -1)/(3\alpha -1).\)

As \((\alpha -1)/(3\alpha -1)\) is negative for \(\alpha \in (1/3,1)\) and \(\beta \) is non-negative, we can conclude that if \(\alpha \in (1/3,1),\) then \(\gamma _2< -1.\) Thus, (2) and (3) can be concatenated in the following way:

(2’) if \(\alpha \in (0,1)\) then \(\gamma _2< -1.\)

Let us consider the case \(\beta =\phi (\alpha )\). So, \(\gamma _1=1,\) which implies that \(h_i=1+C_2((\gamma _2)^{i}-1).\) But in that case, \(\gamma _2<-1\). Thus, we get \(|h_i|\rightarrow \infty \). However, \(h_i\in [0,1]\) for all \(i\in \mathbb N\) which will be satisfied just for \(C_2=0.\)

From (1) and (2’), if \(\alpha \in (0,1)\) and \(\beta < \phi (\alpha ),\) then

\(C_1\) and \(C_2\) can not have the same sign. Because if \(C_1\) and \(C_2\) are both positive(respectively both are negative), then using the limit (19) \(C_1((\gamma _1)^{2i}-1)\) and \(C_2((\gamma _2)^{2i}-1)\) goes to infinity (respectively \(-\infty \)) when \(i\rightarrow \infty .\) So \(h_{2i}\rightarrow \infty \) (respectively \(-\infty \)) with \(i\rightarrow \infty \). But \(h_i\in [0,1]\) for all \(i\in \mathbb N\), what will be satisfied only for \(C_1=C_2=0.\)

\(C_1\) and \(C_2\) can not have different signals. Because if \(C_1>0\) and \(C_2<0\)(respectively \(C_1<0\) and \(C_2>0\)), then using the limit (19) \(C_1((\gamma _1)^{2i-1}-1)\) and \(C_2((\gamma _2)^{2i-1}-1)\) goes to infinity (respectively \(-\infty \)) when \(i\rightarrow \infty .\) So \(h_{2i-1}\rightarrow \infty \) with \(i\rightarrow \infty \) but \(h_i\in [0,1]\) for all \(i\in \mathbb N\), what will be satisfied just for \(C_1=C_2=0.\)

The case when \(C_1=0\) and \(C_2\) is different from zero (respectively \(C_2=0\) and \(C_2\not =0\)) is trivial. \(\square \)

Lemma 9

Given real values \(C_1,~C_2,~a\) and b where \(b<-a\le -1\):

-

(a)

If \(C_1\) and \(C_2\) are negative, then \(C_1 a^{2i}+C_2 b^{2i}\le C_1a^{2i}.\)

-

(b)

If \(C_1<0\) and \(C_2>0,\) then \(C_1 a^{2i-1}+C_2 b^{2i-1}\le C_1 a^{2i-1}.\)

-

(c)

If \(C_1\) and \(C_2\) are positive, then

$$\begin{aligned} C_1 a^{2i-1}+C_2 b^{2i-1}\rightarrow -\infty \hbox { and } \frac{2i-1}{C_1 a^{2i-1}+C_2 b^{2i-1}}\rightarrow 0\hbox { when }i\rightarrow \infty . \end{aligned}$$ -

(d)

If \(C_1>0\) and \(C_2<0,\) then

$$\begin{aligned} C_1 a^{2i}+C_2 b^{2i}\rightarrow -\infty \hbox { and } \frac{2i}{C_1 a^{2i}+C_2 b^{2i}}\rightarrow 0\hbox { when }i\rightarrow \infty . \end{aligned}$$

Proof

Items (a) and (b) are trivial. The case \(a=1\) is trivial; therefore, we shall consider when \(a>1.\) Next, let us prove item (c). Note that if \(1<-b/a,\) then \((-b/a)^{2i-1}\rightarrow \infty \) when \(i\rightarrow \infty .\) Also, \(a^{2i-1}\rightarrow \infty \) when \(i\rightarrow \infty .\) So, for each negative M value, there is a natural value \(i_M\) such that \(i>i_M\) implies that

Thus, \({C_1a^{2i-1}}<M-C_2b^{2i-1}.\) We proved the first part of item (c). Now, let us prove the second part. For this task, it is sufficient to prove

This is what we shall do. We get \((-b/a)^{2i-1}\rightarrow \infty \) and \(a^{2i-1}\rightarrow \infty \) when \(i\rightarrow \infty .\) So, for given M value, \(M(2i-1)/a^{2i-1}\rightarrow 0\) when \(i\rightarrow \infty .\) Therefore, for each negative M value, there is a natural value \(i_M,\) such that \(i>i_M\) implying that

Thus, \(C_1a^{2i-1}<M(2i-1)-C_2b^{2i-1}.\) Item (c) is proved. \(\square \)

Let us prove item (d). Note that \(1<b^2/a^2,\) then \((b/a)^{2i}\rightarrow \infty \) when \(i\rightarrow \infty .\) Also, \(a^{2i}\rightarrow \infty \) when \(i\rightarrow \infty .\) So, for each negative M value, there is a natural value \(i_M\) such that \(i>i_M,\) implying that

Thus, \({C_1a^{2i}}<M-C_2b^{2i}.\) We have now finished the first part of item (d). Now, let us prove the second part, which essentially proves that

Once \(b<-a\le -1,\) we get that for each negative M value there is natural value \(i_M\) such that \(i>i_M,\) implying that

Thus, \(C_1a^{2i}<M(2i)-C_2b^{2i-1}.\)

Proof of Lemma 8

Item (a) results directly from the difference equation (11). Here, we need to observe: (4) if \(\alpha ,\beta \in (0,1),\) then \(-1\ge -\gamma _1>\gamma _2 .\)

If we consider \(C_1\) and \(C_2\) negative, then by Lemma 9 item (a), we get

Moreover,

i.e., there is \(i_0\) such that \(i>i_0,\) implying that \(k_{2i}^Y<0\) is impossible. Then, \(C_1\) and \(C_2\) can not be simultaneously negative.

If we consider \(C_1<0\) and \(C_2>0,\) then by Lemma 9 item (b), we get

Moreover

i.e., there is \(i_0\) such that \(i>i_0,\) implying that \(k_{2i-1}^Y<0\) is impossible. So, the case \(C_1<0\) and \(C_2>0\) is impossible.

If we consider \(C_1=0\) and \(C_2\not =0,\) then for \(C_2>0,~\) \(C_2(\gamma _2)^{2i-1}\rightarrow -\infty \) when \(i\rightarrow \infty \) and for \(C_2<0,~\) \(C_2(\gamma _2)^{2i}\rightarrow -\infty \) when \(i\rightarrow \infty \). In both cases, there is \(i_0\) such that \(i>i_0,\) implying that \(k_{2i-1}^Y<0\) in the first case or \(k_{2i}^Y<0\) in the second case. Both cases are impossible. Then, the case \(C_1=0\) and \(C_2\not =0\) is impossible.

If we consider \(C_2=0\) and \(C_1<0,\) then \(C_1(\gamma _1)^{i}\rightarrow -\infty \) when \(i\rightarrow \infty .\) So, there is \(i_0\) such that \(i>i_0\) implying that \(k_i^Y<0\) is impossible. Then, the case \(C_2=0\) and \(C_1<0\) is impossible.

If we consider \(C_1\) and \(C_2\) positive, then by Lemma 9 item (c) we conclude that

Thus, there is \(i_0\) such that \(i>i_0,\) implying that \(k_{2i-1}^Y<0\) is impossible. Then, the case of \(C_1\) and \(C_2\) being positive is impossible.

Analogously to the previous case, \(C_1\) and \(C_2\) positive, if we consider \(C_1>0\) and \(C_2<0,\) then by Lemma 9 item (d) we conclude that there is \(i_0\) such that \(i>i_0,\) implies that \(k_{2i}^Y<0\) is impossible. Then, the case \(C_1>0\) and \(C_2<0\) is impossible.

Until this point, we proved that \(C_2=0\) and \(C_1\ge 0.\) Thus,

However, we get \((\gamma _1)^i>1\Rightarrow C_1((\gamma _1)^i-1))\ge 0\Rightarrow k_i^Y\ge i/\eta > 0\) when \(\beta < \phi (\alpha )\). By theorem 1.3.5 in [22], the mean hitting time is the minimal non-negative solution to system (11). This happens in our case when \(C_1=0.\) \(\square \)

Rights and permissions

About this article

Cite this article

Ramos, A.D., Leite, A. Convergence Time and Phase Transition in a Non-monotonic Family of Probabilistic Cellular Automata. J Stat Phys 168, 573–594 (2017). https://doi.org/10.1007/s10955-017-1821-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10955-017-1821-z