Abstract

We present a family of novel explicit numerical methods for the diffusion or heat equation with Fisher, Huxley and Nagumo-type reaction terms. After discretizing the space variables as in conventional method of lines, our methods do not apply a finite difference approximation for the time derivatives, they instead combine constant- linear- and quadratic-neighbour approximations, which decouple the ordinary differential equations. In the obtained methods, the time step size appears in exponential form in the final expression with negative coefficients. In the case of the pure heat equation, the new values of the variable are convex combinations of the old values, which guarantees unconditional positivity and stability. We analytically prove that the convergence of the methods is fourth order in the time step size for linear ODE systems. We also prove that the concentration values in the case of Fisher’s and Nagumo’s equations lie within the unit interval regardless of the time step size. We construct an adaptive time step size time integrator with an extremely cheap embedded error control method. Several numerical examples are provided to demonstrate that the proposed methods work for nonlinear equations in stiff cases as well. According to the comparisons with other solvers, the new methods can have a significant advantage.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

1.1 The Studied Problems

Diffusive transport of particles or heat has a fundamental role in several scientific and engineering applications [1,2,3]. It is well known that the so-called heat or diffusion equation, which describes diffusion and conductive heat transfer, is the following linear parabolic partial differential equation (PDE),

here \(u\,{:}\,{\mathbb{R}}^{3} \times {\mathbb{R}} \mapsto {\mathbb{R}}{;}\,\, \left( {\vec{r},t} \right) \mapsto u\left( {\vec{r},t} \right)\) is the unknown function, \(q\,{:}\,{\mathbb{R}}^{3} \times {\mathbb{R}} \mapsto {\mathbb{R}}{;}\, \,\left( {\vec{r},t} \right) \mapsto q\left( {\vec{r},t} \right)\) and \(u\left( {\vec{r},t = 0} \right) = u^{0} \left( {\vec{r}} \right)\) are given functions, while \(c = c\left( {\vec{r},t} \right)\), \(\rho = \rho \left( {\vec{r},t} \right)\), and \(k = k\left( {\vec{r},t} \right)\) are known nonnegative functions. The boundary conditions will be specified at the concrete numerical examples.

In one space dimension, provided that k is independent of the space variable, Eq. (1.1) can be written into the simple form

where \(u\,{:}\,{\mathbb{R}} \times {\mathbb{R}} \mapsto {\mathbb{R}}{;}\, \,\left( {x,t} \right) \mapsto u\left( {x,t} \right)\), \(\alpha > 0\) is a constant.

In the case of diffusive mass transfer, u is the concentration of the particles. In the case of heat conduction, u denotes the temperature, \(\alpha = k/(c\rho )\) is the thermal diffusivity, k, ρ, and c are the heat conductivity, the specific heat and the mass density, while q is the intensity of the heat sources (due to electromagnetic radiation, electric currents, etc.), respectively.

The diffusion equation and its generalizations, such as the advection–diffusion-reaction equation, can model mass transport in countless physical, chemical, and biological systems, for example charge carriers in semiconductors [4], atoms in carbon nanotubes [5], and proteins in embryos [6]. Furthermore, very similar equations or systems of equations are used to simulate fluid flow through porous media, such as moisture [7], ground water, or crude oil in underground reservoirs [8]. We are going to deal here with two nonlinear reaction–diffusion equations. The first one is Fisher’s equation, also called Fisher-Kolmogorov-Petrovsky-Piskunov equation [9], which contains an additional nonlinear logistic reaction term besides the diffusion term:

This equation is used to model the spreading of gene-variants in space, as well as the growth and spreading of misfolded proteins in neurophysiology [10], and the propagation of fronts in combustion processes [11]. The second nonlinear equation we use here contains a source term which can be a large and non-integer power of the variable u, and has the following form:

where β, γ, and δ are nonnegative real numbers. This equation has applications not only in biology [12], but in chemical reactions [13] and nuclear reactor theory [14] as well. It is usually called the Nagumo, FitzHugh-Nagumo or the generalized Huxley equation [15]. In fact, Huxley’s equation can be obtained from the Nagumo equation if one sets \(\gamma = 0 , \, \delta = 1\) as follows

It was shown [16] that this equation is superior to Fisher’s equation when the change in frequency of a new, advantageous recessive allele in a sexually reproducing population has to be modelled. Equation (1.5) is a special case of the Burgers-Huxley equation [17], which is used to model nonlinear wave phenomena.

1.2 On the Solution Methods

It is well known that several numerical methods have been developed and applied to solve the equations in question. Nevertheless, most of these are elaborated and tested under circumstances where the coefficients in the equations, such as the diffusivity α, do not depend on the space variable. In real applications, however, there are systems where the physical properties can be extremely different at neighbouring points, let us just think about a microprocessor. This means that the coefficients, and therefore the eigenvalues of the system matrix, may have a range of several orders of magnitude, thus the problem can be severely stiff. Although when combined with method of lines, the traditional explicit methods (such as the fourth-order Runge–Kutta method) can be very accurate [18], very small time step sizes have to be applied in stiff cases regardless of the measurement errors of the input data and the requirements on the accuracy of the output. This is because they are stable only if the time step size does not exceed a certain threshold number, the mesh Fourier number (also called Courant or CFL limit). Even the adaptive time step size solvers like ode23 and ode45 of MATLAB can run into instability [19] if the tolerance is not very small.

On the other hand, implicit methods have much better stability properties. That is why they are typically proposed to solve these equations [20,21,22]. For example, Ramos [23] successfully modified the standard explicit method to reach higher accuracy in the case of the one-dimensional Huxley’s equation. However, the stability was not improved by the modification, and the implicit methods, especially the different versions of Crank-Nicolson schemes are still outperform the modified explicit method as well. He also applied different linearized-implicit techniques for one-dimensional [24] and multi-dimensional [25] diffusion-reaction problems. Manaa and Sabawi solved [26] \(\delta = 1\) the version of Eq. (1.4) using Explicit (Euler) and Crank- Nicolson methods, and obtained that albeit the explicit method is faster, it is less stable and accurate than the Crank-Nicolson scheme. Kadioglu and Knoll solved coupled hydrodynamics and nonlinear heat conduction problems by treating the hydrodynamics explicitly and the heat conduction part implicitly [27]. They explain that this strategy, which is called IMEX, is typical for these kinds of problems. Its counterpart in the field of reservoir-simulation is the IMplicit Pressure Explicit Saturation (IMPES) approach [28], where the pressure-equation (mathematically similar to the diffusion equation) is solved implicitly, and the saturation is calculated explicitly. However, fully implicit methods are also proposed [29] for this problem.

The most serious problem with the implicit methods is that the solution of a system of algebraic equations is required at each time step, which cannot be straightforwardly parallelized. In one space dimension, when the number of nodes or cells is small and the matrix is tridiagonal, these calculations can be very fast and implicit methods are hard to compete with. However, the solution can be extremely time-consuming in the opposite case, and we note that the number of cells has already reached one trillion in reservoir simulations. Since the clock frequencies of CPUs halted in the last decades, which reinforced the tendency towards increasing parallelism in high-performance computing [30, 31], we believe that the attention towards the easily parallelizable explicit algorithms is going to increase.

The second problem with most of the methods, either explicit or implicit, is that they can lead to qualitatively unacceptable solutions, such as unphysical oscillations or negative values of the otherwise non-negative variables. These variables can be concentrations, densities, or temperatures measured in Kelvin, and the numerical methods should preserve their positivity. That is why Chen-Charpentier and others constructed and studied [32,33,34] the fully explicit and unconditionally positive finite difference (UPFD) scheme for linear advection–diffusion reaction equations. Then Kolev et al. [35] considered a model of cancer migration and invasion, which consists of two PDEs with diffusion terms and an ordinary differential equation (ODE). They discretized one of the PDEs and the ODE implicitly while the remaining PDE was solved by an explicit scheme similar to the UPFD method. However, their method, as well as the original UPFD scheme, has only first order temporal accuracy. Chertock and Kurganov developed a positivity preserving scheme for a system of advection-reaction–diffusion equations describing chemotaxis/haptotaxis models, but their method is positivity preserving only if the time step size is below the rather low mesh Fourier number [36]. The situation is similar to the nonstandard finite difference schemes (NSFD), applied to cross-diffusion equations by Chapwanya et al. [37], and Songolo [38], and to the Fisher and the Nagumo equation by Agbavon et al. [12, 39]. The schemes of Agbavon will be tested in this paper as well.

There are explicit algorithms which are at the same time unconditionally stable, at least in the case of the linear heat equation. For example, the Alternating Direction Explicit (ADE) scheme [40] and the odd–even hopscotch algorithm [41, 42] have second order temporal accuracy. They are often quite accurate indeed, but not positivity preserving. Actually, for stiff systems, the odd–even hopscotch method can produce very large errors [43]. Moreover, both of them build on the regularity of the mesh, thus they lose their explicit nature otherwise.

Nonlinear equations of type (1.3) or (1.4) are treated by operation splitting approaches as well, such as Strang-splitting. The diffusion part is solved by an implicit method as if the nonlinear term does not exist. The nonlinear term is treated locally, solving the ODE, either analytically or via linearization. In this way, one can spare the Newton iterations due to the nonlinearity, but most of the above-mentioned problems remain. Moreover, the splitting implies larger errors and even the decoupling of the reaction and the diffusion processes in the case of large time steps [18].

To summarize, we do not know any explicit and unconditionally stable, let alone unconditionally positive method above second order, even for the linear diffusion equation. That is why we started to construct novel explicit methods based on a fundamentally new way of thinking. The obtained lower order members (the CNe, LNe, CCL and CLL methods, see [44,45,46], respectively) have already been published, and now we are going to present the fourth order members, for which we need the quadratic-neighbour approximation.

1.3 The Outline of the Paper

In Sect. 2, only the linear diffusion equation is considered. First we briefly recall the CNe and LNe schemes, because they make the first two stages of the new methods. In Sect. 2.2, we introduce the new algorithms for the linear diffusion equation, first for the simplest case (one dimensional, equidistant mesh), then in Sect. 2.3, for a general, arbitrary mesh as well. Then we start to analyse the properties of these new methods. The unconditional positivity and stability are proved in Sect. 2.4, then the truncation error is examined to determine the conditions for consistency and the order of convergence in 2.5. In Sect. 3, we show how to adapt the algorithms to the nonlinear cases. In Sect. 4, five numerical tests are presented in 1D cases where analytical solutions of the equations are used as reference solutions. In Sect. 5, we present the adaptive stepsize controller and then perform three numerical experiments for two space-dimensional stiff systems. Finally, we summarise our conclusions and write about our future research goals.

2 Description and Properties of the New Method for the Linear Diffusion Equation

We begin with the construction of the new schemes for the one dimensional Eq. (1.2). First, the space variable is discretized by creating \(N \in {\mathbb{N}}^{ + }\) equidistant nodes: \(x_{i} = i\Delta x\,,\;i = 1,...,N\,\). As in the most standard method of lines, the next step is the discretization of the space derivatives by the second order central difference formula:

Using (2.1) we obtain an ordinary differential equation (ODE) for each node \(i = 1,...,N\):

where \(u_{i} \,{:}\,{\mathbb{R}} \mapsto {\mathbb{R}}{;}\,\, t \mapsto u_{i} \left( t \right)\) are functions of the time variable, which is still continuous, while \(q_{i}\) is the value of the source term at node i. The \(u_{i \pm 1} = u_{i + 1} + u_{i - 1}\) notation will be used throughout the whole paper for the sake of brevity. This system of Eq. (2.2) has the following matrix-form:

here M is a tridiagonal matrix with the usual elements: \(m_{ii} = - \frac{2\alpha }{{\Delta x^{2} }} \,,\;\, m_{i,i + 1} = m_{i,i - 1} = \frac{\alpha }{{\Delta x^{2} }} \,\;(1 < i < N)\), while the first and last row is determined by the boundary condition. The ith element of the vector \(\vec{u}\,{:}\, \left\{ {1,...,N} \right\} \times {\mathbb{R}} \mapsto {\mathbb{R}}^{N}\) is the function \(u_{i} \left( t \right)\). If one calculates the eigenvalues of the matrix M, then the (nonzero) eigenvalues with the largest and smallest absolute value (let us say \(\lambda_{{{\text{max}}}}\) and \(\lambda_{{{\text{min}}}}\)) can determine the stiffness ratio, which is \(\lambda_{{{\text{max}}}} /\lambda_{{{\text{min}}}}\). Moreover, the maximum time step size above which the explicit Euler time integration will be unstable can be given as \(h_{{{\text{MAX}}}}^{{{\text{EE}}}} { = } - 2/\lambda_{{{\text{max}}}}\). Similar but slightly larger mesh Fourier numbers hold for all explicit Runge–Kutta methods.

We define the usual mesh ratio as \(r = \frac{\alpha h}{{\Delta x^{2} }} = - \frac{{m_{ii} }}{2}h\,,\; 1 < i < N\), with which we can write

At this point the time variable is also discretized, \(t^{n} = nh\,,\;n \in \left\{ {0,\, 1,\, 2,...,\, T} \right\}\,\), and \(u_{i}^{n}\) is the numerical solution at \(x_{i}\) and \(t^{n}\). We use h instead of \(\Delta t\) or k for the time step size because of the similarity of our methods to the method of lines. We emphasize here that the time derivatives will not be approximated by finite difference formulas as in the Finite Difference Methods (FDM). Instead, the ODE system (2.2) or (2.3) will be solved analytically assuming the time-variable to be continuous and the initial value at \(t^{n}\) is \(u_{i}^{n}\). The constructed solution of ODE (2.3) is used to approximate the real \(u\left( {t^{n + 1} } \right)\) value. Due to this, the new methods are not always considered as FDMs.

2.1 The Already Known Constant- and Linear-Neighbour Type Approximations

Now the simplest member of our methods, the CNe algorithm [47] is briefly repeated via the following points.

-

(1a)

When the new value \(u_{i}^{n + 1}\) of the unknown function is calculated, we neglect that other variables, most importantly the neighbours \(u_{i - 1}^{n}\) and \(u_{i + 1}^{n}\) are also changing during the time step. This crude approximation means that uj \(\left( {j \ne i} \right)\), and therefore the (*) term in (2.3) are considered to be constants (this is where the name of the method comes from). After this a set of uncoupled, linear ODEs remains:

$$\frac{{du_{i} }}{dt} = a_{i} - \frac{2r}{h}u_{i} ,$$(2.4)where the unknown ui is still a function of the (continuous) time in each equation with initial values \(u_{i}^{n}\), while h is a parameter, but \(r/h\) is not a function of time. The quantity \(a_{{\text{i}}}\) has been introduced to condense information about the neighbours of cell i and the source term

$$a_{i} = \sum\limits_{{j \in \left\{ {i - 1,\, i + 1} \right\}}} {m_{ij} } u_{j}^{n} + q_{i} = \alpha \frac{{u_{i \pm 1}^{n} }}{{\Delta x^{2} }} + q_{i} = \frac{r}{h}u_{i \pm 1}^{n} + q_{i} .$$(2.5)We note again that \(u_{i - 1}^{n}\) and \(u_{i + 1}^{n}\) are the values of the neighbours at the beginning of the actual time step, thus the \(a_{{\text{i}}}\) values are considered as constants during the time step.

-

(1b)

The Eq. (2.4) are very simple and it is straightforward to use their analytical solution at time \(t = h\) to obtain the values of u at the end of the time step:

$$u_{i}^{n + 1} = u_{i}^{n} \cdot e^{ - 2r} + \frac{{ha_{i} }}{2r}\left( {1 - e^{ - 2r} } \right).$$(2.6)

Thus, for a one-dimensional homogeneous system with an equidistant mesh, the following one-stage method was introduced.

Algorithm 1, CNe method

The CNe method has first order convergence in time [44]. If one uses a half time step to obtain predictor values, and then a full time step for the corrector values, both with the CNe formula, one will have the so-called CpC algorithm:

-

(2a)

Instead of the constant-neighbour approximation, now we suppose that the neighbours of the node, and therefore the (*) term in (2.3) are changing linearly. It means that we have to solve the following uncoupled ODE system:

$$\frac{{du_{i} }}{dt} = s_{i} t + a_{i} - \frac{2r}{h}u_{i} ,\quad (i = 1 \ldots N)$$(2.8)To determine the si values (the aggregated or effective slopes), we need a set of predictor values \(u_{i}^{{{\text{pred}}}}\). These are obtained by the CNe algorithm and are valid at the end of the examined time step. So the slope for the neighbours can be calculated as

$$s_{i} = \frac{{a_{i}^{{{\text{pred}}}} - a_{i} }}{h}.$$(2.9)Here

$$a_{i}^{{{\text{pred}}}} = \sum\limits_{{{\text{j}} \in \left\{ {i - 1, i + 1} \right\}}} {m_{ij} u_{j}^{{n + {\text{1,pred}}}} } + q_{{\text{i}}} = \frac{\alpha }{{\Delta x^{2} }}u_{i \pm 1}^{{\text{n + 1,pred}}} + q_{i} = \frac{r}{h}u_{i \pm 1}^{{\text{n + 1,pred}}} + q_{{\text{i}}}$$(2.10)contains the predictor values of all the neighbours. The source terms are considered to be independent of time for the sake of simplicity.

-

(2b)

The equation system (2.8) is a set of independent linear ODEs again, with the simple analytical solution:

$$u_{i} \left( t \right) = u_{i}^{n} e^{{ - 2\frac{r}{h}t}} - \left( {\frac{{h^{2} }}{{4r^{2} }}s_{i} - \frac{h}{2r}a_{i} } \right)\left( {1 - e^{{ - 2\frac{r}{h}t}} } \right) - \frac{h}{2r}s_{i} t.$$

This solution is used at the end of the time step t = h to provide us with the corrector values. Steps 1a–b and then 2a–b make a two-stage (predictor–corrector) method, which is called “linear-neighbour method” [44] and abbreviated by LNe2 or LNe. After some rearrangement, we have the following scheme.

Algorithm 2, LNe method

-

Stage 1 The same as Algorithm 1.

$$Stage \, 2\quad u_{i}^{{{\text{L,}}n + {1}}} = u_{i}^{n} e^{ - 2r} + \frac{h}{2r}\left( {\frac{1}{2r}\left( {a_{i} - a_{i}^{{{\text{pred}}}} } \right) + a_{i} } \right)\left( {1 - e^{ - 2r} } \right) - \frac{h}{2r}\left( {a_{i}^{{{\text{pred}}}} - a_{i} } \right),$$(2.11)where \(a_{i}\) and \(a_{i}^{{{\text{pred}}}}\) are defined in (2.5) and (2.10).

This algorithm has second order convergence [44], and it is an important building block of our multistage methods.

2.2 The New Quadratic-Neighbour Approximation

Now we approximate the aggregate change of the neighbours, more precisely, the (*) term in (2.3), by a second order polynomial, which yields the following ODE for the node-variable:

where \(u\left( {t = 0} \right) = u^{0}\) and the values of w, s, and a contain information about the neighbours and the source term. The analytical solution of the initial value problem (2.13) at t = h is

In the case of the LNe method, we needed values of \(\vec{u}\) at two different time-points to determine the “slope” coefficients si. Now, for each node, we need three values, \(f_{0} ,\;f_{1}\) and \(f_{2}\), to determine the coefficients w, s and a. The quantity \(a_{i}\) is obviously \(a_{i} = f_{0} = {\raise0.7ex\hbox{r} \!\mathord{\left/ {\vphantom {r h}}\right.\kern-0pt} \!\lower0.7ex\hbox{h}}u_{i \pm 1}^{n} + q_{i}\). We choose to obtain an estimation of the other two values at the middle and at the end of the actual time step by the LNe scheme described above, so the two missing function values are:

Now we have the following simple system of equations:

The solution of this gives the unknown parameters for each node:

and with these, the new values of the solution can be calculated using (2.14). For the sake of simplicity and higher speed of the calculations, we introduce the following new quantities:

Now, if someone uses quantities with dimensions, then the new quantities A, S and W have the same dimension as u. With these new notations, we have

We can summarize the obtained algorithms as follows:

Algorithm 3: three-stage constant-linear-quadratic neighbour (CLQ) method

-

Stage 1 Calculate \(A_{i} = \frac{{u_{{{\text{i}} \pm 1}}^{{\text{n}}} }}{2} + \frac{h}{2r}q_{i}\), then take a full time step with the CNe method

$$u_{i}^{{\text{C}}} = u_{i}^{n} e^{{ - 2 r}} + A_{i} \left( {1 - e^{{ - 2 r}} } \right)$$ -

Stage 2 Calculate \(A_{i}^{{{\text{pred}}}} = \frac{{u_{i \pm 1}^{{\text{C}}} }}{2} + \frac{h}{2r}q_{i}\), then calculate a half as well as a full time step with the LNe method

$$\begin{aligned} & u_{i}^{{{\text{L}}{\raise0.7ex\hbox{1} \!\mathord{\left/ {\vphantom {1 2}}\right.\kern-0pt} \!\lower0.7ex\hbox{2}}}} = u_{i}^{n} e^{{ - r}} + A_{i} \left( {1 - e^{ - r} } \right) + \frac{{A_{i}^{{{\text{pred}}}} - A_{i} }}{2}\left( {1 - \frac{{1 - e^{ - r} }}{r}} \right) \\ & u_{i}^{{\text{L}}} = u_{i}^{n} e^{{ - 2r}} + A_{i} \left( {1 - e^{ - 2r} } \right) + \left( {A_{i}^{{{\text{pred}}}} - A_{i} } \right)\left( {1 - \frac{{1 - e^{ - 2r} }}{2r}} \right) \\ \end{aligned}$$ -

Stage 3 Calculate \(F_{{1, i}} = \frac{{u_{i \pm 1}^{L1/2} }}{2} + \frac{h}{2r}q_{i}\) and \(F_{{2, i}} = \frac{{u_{i \pm 1}^{L} }}{2} + \frac{h}{2r}q_{i}\), then \(S_{i} = 4F_{{1, i}} - F_{{2, i}} - 3A_{i}\) and \(W_{i} = 2\left( {F_{{2, i}} - 2F_{{1, i}} + A_{i} } \right)\), and finally

$$u_{i}^{{\text{Q}}} = e^{{ - 2r}} u_{i}^{{\text{n}}} + \left( {1 - e^{{ - 2r}} } \right)\left( {\frac{{W_{i} }}{{2r^{2} }} - \frac{{S_{i} }}{2r} + A_{i} } \right) + W_{i} \left( {1 - \frac{1}{r}} \right) + S_{i} .$$(2.15)

One can use the CLQ result obtained at Stage 3 to add one more stage, which will be the CLQ2 method. For this, the midpoint values must be calculated first as follows:

Algorithm 4: four-stage CLQ2 method

-

Stage 1–2–3 The same as in Algorithm 6.

-

Stage 4 Use formula (2.15) again to obtain the final values of the four-stage CLQ2 method, but now \(u_{i}^{Q1/2}\) is obtained by (2.16), \(F_{{1, i}} = \frac{1}{2}u_{i \pm 1}^{{{\text{Q}}1/2}} + \frac{h}{2r}q_{i}\), \(F_{{2, i}} = \frac{1}{2}u_{i \pm 1}^{{\text{Q}}} + \frac{h}{2r}q_{i}\), while \(S_{i}\) and \(W_{i}\) must also be recalculated. This iteration stage can be further repeated (in the very same way as Stage 4) to get the CLQ3 method (5 stages altogether), the CLQ4 method (6 stages altogether), etc. We will show that although this iteration \({\text{CLQ}}n\) does not converge to the true solution as \(n \to \infty\), it becomes more and more accurate due to the decreasing truncation error coefficients.

We have expressed the new values \(u_{{\text{i}}}^{{\text{n + 1}}}\) with the old values \(u_{{\text{j}}}^{{\text{n}}}\) for the new algorithm using the Mathematica software, but for the sake of brevity we present it only for the CLQ algorithm, because for the other schemes they are much longer expressions.

2.3 Extension to a General Grid

It is possible to extend the methods to more general cases where the geometrical and material properties of the simulated system depend on the position, and the discretization reflects this fact. To take a step towards a resistance–capacitance-type model [48] of heat conduction, we switch to cell variables, where \(u_{i}\), \(c_{i}\), \(\rho_{i}\), and \(q_{i}\) are the average temperature, specific heat, density and heat source intensity of cell i, respectively, which will be approximated by their values at the cell centres. The quantity \(k_{{i,\, i + 1}}\) is the heat conductivity between cell i and its right neighbour, which can be approximated as the value of k at the cell borders. The heat capacity of the cells are \(C_{i} = c_{i} \rho_{i} V_{i} \;\), while the thermal resistance between the cells can be estimated as \(R_{{i, i + 1}} \approx d_{{i, i + 1}} /\left( {k_{{i, i + 1}} A_{{i, i + 1}} } \right)\), where \(A_{{i, i + 1}}\) is the interface area between the neighbouring cells. Using these quantities and approximations, one can obtain the ODE system for the time derivative of each cell-variable for a general (e.g. unstructured) grid in any space-dimensions, independently of any coordinate-system as follows:

Equation system (2.18) can be written into the same matrix-form as (2.2). The off-diagonal elements of the matrix M are \(m_{ij} = 1/\left( {R_{ij} C_{i} } \right)\). If the cells i and j are not neighbours, i.e. there is no direct heat conduction between them, then the corresponding element is zero. The diagonal elements of the matrix are the negative sums of the off-diagonal elements: \(m_{ii} = - \sum\nolimits_{j \ne i} {\left( {R_{i,j} C_{i} } \right)^{ - 1} }\). Let us introduce two notations, namely \(r_{i} = - hm_{ii}\) and \(\tau_{i} = - \frac{1}{{m_{ii} }} = \frac{h}{{r_{i} }}\). The first one can be perceived as the generalization of r, while τi is the characteristic time or time constant of cell i. For the simplest one-dimensional case, we obtain \(r_{i} = h\frac{2\alpha }{{\Delta x^{2} }} = 2r{\text{ and }}\tau_{i} = \frac{h}{2r}\;\,\,(1 < i < N)\,\).

Algorithm 3b: generalized three-stage CLQ method

-

Stage 1 Calculate \(a_{i} = \sum\nolimits_{j \ne i} {\frac{{u_{j}^{n} }}{{R_{i,j} C_{i} }}} + q_{i}\), then take a full time step with the general form of the CNe method:

$$u_{i}^{{\text{C}}} = u_{i}^{n} e^{{ - r_{i} }} + \tau_{i} a_{i} \left( {1 - e^{{ - r_{i} }} } \right)$$ -

Stage 2 Calculate \(a_{i}^{{\text{C}}} = \sum\nolimits_{j \ne i} {\frac{{u_{j}^{{\text{C}}} }}{{R_{i,j} C_{i} }}} + q_{i}\), then calculate a half and a full time step with the LNe method:

$$\begin{aligned} & u_{i}^{{{\text{L}}1/2}} = u_{i}^{n} e^{{ - r_{i} /2}} + \tau_{i} a_{i} \left( {1 - e^{{ - r_{i} /2}} } \right) + \tau_{i} \left( {a_{i}^{{\text{C}}} - a_{i} } \right)\left[ {\frac{1}{2} - \frac{{\tau_{i} }}{h}\left( {1 - e^{{ - r_{i} /2}} } \right)} \right] \\ & u_{i}^{{\text{L}}} = u_{i}^{n} e^{{ - r_{i} }} + \tau_{i} a_{i} \left( {1 - e^{{ - r_{i} }} } \right) + \tau_{i} \left( {a_{i}^{{\text{C}}} - a_{i} } \right)\left[ {1 - \frac{{\tau_{i} }}{h}\left( {1 - e^{{ - r_{i} }} } \right)} \right] \\ \end{aligned}$$ -

Stage 3 Calculate

\(f_{1,i} = \sum\nolimits_{j \ne i} {\frac{{u_{j}^{{\text{L1/2}}} }}{{R_{i,j} C_{i} }}} + q_{i}\) and \(f_{2,i} = \sum\nolimits_{j \ne i} {\frac{{u_{j}^{{\text{L}}} }}{{R_{i,j} C_{i} }}} + q_{i}\), then \(s_{i} = \frac{{4f_{1,i} - f_{2,i} - 3a_{i} }}{h},\;\;{\text{and }}w_{i} = 2\frac{{f_{2,i} - 2f_{1,i} + a_{i} }}{{h^{2} }}\).

After this take a full (and if necessary, a half) time step as follows

Further stages can be constructed straightforwardly by recalculating \(f_{1,i} = \sum\nolimits_{{{\text{j}} \ne {\text{i}}}} {\frac{{u_{{\text{j}}}^{{\text{Q1/2}}} }}{{R_{{{\text{i}},{\text{j}}}} C_{{\text{i}}} }}} + q_{i}\) and \(f_{2,i} = \sum\nolimits_{{{\text{j}} \ne {\text{i}}}} {\frac{{u_{{\text{j}}}^{{\text{Q}}} }}{{R_{{{\text{i}},{\text{j}}}} C_{{\text{i}}} }}} + q_{i}\), then using these for the recalculation of \(s_{i} ,\;w_{i} ,\;u_{i}^{Q1/2} ,{\text{ and }}u_{i}^{Q}\) according to the formulas in Stage 3. (At the last stage calculating \(u_{i}^{Q1/2}\) is redundant.) The generalized CNe and LNe methods are presented in [44] and also can be extracted from the generalized version of the CLQ method. These generalized algorithms can still be implemented with little coding effort, and once the quantities \(C_{i}\) and \(R_{ij}\) are given, the methods are easy to use.

Remark 2.1

Note that in Algorithm 3b, the C and R quantities are not directly present, only through the matrix elements. Thus, if one defines \(a_{i} = \sum\nolimits_{j \ne i} {m_{i,j} } u_{j}^{n} + q_{i}\), etc., then the algorithms can be applied for any ODE system which has the form (2.2), independently of the physical content of the variables. Since exponential terms are present in the new algorithms, they may look similar to the so-called exponential integrators [49]. However, matrix exponentials are calculated in the case of those methods, while all of our algorithms are fully explicit: solving equation systems or performing operations on matrices is not needed. The necessary operations are clearly proportional to the first power of the number of spatial nodes as well as the total number of timesteps, thus the algorithms scale like \(O\left( {N \cdot T} \right)\). This is confirmed by all of our numerical experiments where we measured the running time for sufficiently long runs. Moreover, the calculations are suitable for parallelization on GPUs as well.

2.4 Unconditional Positivity and Stability of the CLQn Method

First, we examine the one-dimensional linear diffusion equation with an equidistant mesh. We recall the following simple lemma, the associativity of convex combinations [43, p. 28].

Lemma

A convex combination \(x = \sum {a_{i} x_{i} }\) of convex combinations \(x_{i} = \sum {b_{ij} y_{ij} }\) is again a convex combination:

for any \(y_{ij} \in {\mathbb{R}}^{n}\).

Theorem 2.1

In case of the linear diffusion Eq. (1.2), if the qi source terms are zero, the new values \(u_{i}^{{\text{C}}}\), \(u_{i}^{{\text{L}}}\), \(u_{i}^{{{\text{CLQ}}}}\), \(u_{i}^{{{\text{CLQQ}}}}\)\(u_{i}^{{{\text{CLQ3}}}}\) and \(u_{i}^{{{\text{CLQ}}4}}\) are the convex combinations of the initial values \(u_{j} (0),\,\,j = 1,...,N\).

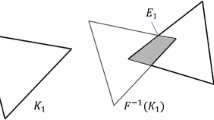

Proof

Using the Mathematica software, we have analytically calculated the coefficients of the old values \(u_{j}^{n}\) in the expression of the new value of a general element \(u_{i}^{n + 1}\) (such as Eq. (2.17)) as functions of the mesh ratio r > 0, and examined their properties. The sum of the coefficients is always exactly one. We have to prove that each of the coefficients is nonnegative. In the case of the CNe and LNe methods, we have 3 and 5 nonzero coefficients (first and second neighbours), respectively. These had been examined in our previous papers [44, 47] by hand calculations, but we repeated them by the software for verification. In the case of the CLQn method, we have \(2\left( {n + 2} \right) + 1\) nonzero coefficients, which are more and more complicated as n increases, but still continuous functions of the variable r. First, the limit values are calculated at 0 and ∞. Then, the functions are differentiated with respect to r to obtain the extrema (extreme points). In some cases, there are one or two extreme points, but the extreme values are always in the unit interval. The values are tabulated in Table 1, where the last column gives the second derivative of the coefficient with respect to r at the extrema to let the readers know if the extreme value is minimum or maximum. In Fig. 1 we exemplify the coefficients of the \(u_{i \pm j}^{n}\) terms as a function of r for the CLQ4 method, where i is a general mesh point while \(j \in \left\{ {0,...,6} \right\}\). The last two coefficients are quite small, and their curves are hard to distinguish with the naked eye.

From the analysis above, it is clear that in the open interval \(r \in \left] {0 ,\, \infty } \right[\), all coefficients are strictly positive. The Lemma now implies the theorem.□

Remark 2.2

In our previous paper [44], we analytically proved the convex combination property for the CNe and LNe methods in the case of an arbitrary mesh and space dimensions. For the CLQn method, however, we managed to do this only for the simplest case of Eq. (1.2) with an equidistant mesh, but all of the numerical experiments suggest that it holds in more general cases as well. Theorem 2.1 about convex combination property states that (for heat conduction) the temperature of each cell tends to the weighted average of the temperatures of the surrounding cells. It implies that errors cannot be amplified during the calculation. The solution obeys the maximum and minimum principles [51, p. 87], i.e. the extremal values of the function u occur among the initial or the (prescribed) boundary values. It also implies that starting from nonnegative initial values and assuming no heat sinks are present, the function u always remains positive. This convex-combination property is a much stronger property than unconditional stability.

In case of linear or linearized ODE systems of \(u^{\prime} = Mu\) type, the widely used definition of A-stability is that the numerical solution is bounded for arbitrarily large step sizes for Dahlquist’s famous test equation, i.e. for each (non-positive) eigenvalue of M. Since the work of Dahlquist we know that no explicit Runge–Kutta or multistep Adams–Bashforth method can be A-stable (see [52] and the references therein), due to the polynomial expression of the time step size h. Now, unlike in the case of those methods, in our cases the step size h appears not in a polynomial, but in an exponential form with negative exponents (for the linear heat equation) in the expression for the new values of the variable u. This means that the proposed formulas contain h up to infinite order, which gives the entirely different stability properties. However, this will have some consequences on the truncation errors, as we will see soon.

Remark 2.3

In the case of the CLQ5 method, the coefficient of \(u_{i \pm 7}^{n}\) can be negative with very small (less than 10–7) absolute value. However, this is already enough for not only the violation of the positivity preserving property, but for occasional unstable behaviour as well. That is why we propose and examine the methods only up to 4 quadratic stages. We have also tried to omit the linear stage and examined the obtained CQ, CQQ, etc. methods. These are typically slightly more accurate than their counterpart with the same number of stages. The problem is that they are only conditionally stable, albeit with much larger mesh Fourier numbers than for the RK methods. Still, we stopped their investigation, because we think that losing the unconditional stability is too big a price for a slight increase in accuracy.

2.5 Consistency and Convergence

First, the consistency and convergence will be discussed considering the schemes as direct PDE solvers as usual [53] (Sect. 4.2), [54]. Subscripts containing x or t denote differentiation with respect to the space or time variables, respectively. We give the truncation errors for the proposed methods below.

Theorem 2.2

When applied to the homogeneous PDE \(u_{t} = \alpha u_{xx}\), the CLQn methods (Algorithms 6 and 7) are conditionally consistent, i.e. if the time and space step sizes tend to zero \(h \to 0\), \(\Delta x \to 0\), such that \(\frac{h}{{\Delta x^{2} }} \to 0\), then the numerical solution tends to the analytical solution of the PDE.

Proof

We calculate the local truncation error for each method by symbolic computer calculation. Assuming that the analytical solution u of Eq. (1.2) is sufficiently smooth, one can use the following temporal and spatial Taylor-series expansions of this u:

Using the Taylor series expansion of the exponential function, we also expand the r-dependent coefficients, such as \(e^{ - 2r}\). In the case of the CLQ method, we are going up at least to \(u_{{\left( {4t} \right)}} ,\, u_{{\left( {8x} \right)}} ,\, {\text{and }}r^{4}\), and even further if there are more stages. We substitute these into the final expressions of the algorithms, for example, Eq. (2.17), in the case of the CLQ scheme. Since u is the true solution, we use the substitutions \(u_{t} = \alpha u_{xx}\), \(u_{tt} = \left( {\alpha u_{xx} } \right)_{t} = \alpha \left( {u_{t} } \right)_{xx} = \alpha^{2} u_{{\left( {4x} \right)}}\), \(u_{ttt} = \alpha^{3} u_{{\left( {6x} \right)}}\) and so on. After simplifications, we obtain the local truncation errors of the methods. Now the global error can be estimated by dividing the local truncation error by h. For the sake of brevity, we introduce the notations

where \(\varepsilon_{0}\) belongs to the central difference formula (2.1). The global errors without some higher order terms are as follows:

The global errors have the following orders: \(\varepsilon_{CLQ} = O\left( {\Delta x^{2} ,\,\,\Delta x^{2} h,\,\,\Delta x^{2} h^{2} ,\,\,h^{3} ,\,\,\frac{{h^{3} }}{{\Delta x^{6} }}} \right)\), \(\varepsilon_{{{\text{CLQQ}}}} = O\left( {\Delta x^{2} ,\,\,\Delta x^{2} h,\,\,\Delta x^{2} h^{2} ,\,\,\Delta x^{2} h^{3} ,\,\,h^{4} ,\,\,\frac{{h^{4} }}{{\Delta x^{8} }}} \right)\) and \(\varepsilon_{{{\text{CLQn}}}} = O\left( {\Delta x^{2} ,\,\,\Delta x^{2} h,\,\,\Delta x^{2} h^{2} ,\,\,\Delta x^{2} h^{3} ,\,\,h^{4} ,\,\,\frac{{h^{4} }}{{\Delta x^{4} }}} \right)\,,\;\,n = 3,\,\,4\). One can see that there are several mixed terms containing both the space and the time step size, which are not present in the case of traditional methods such as the Runge Kutta schemes. The most problematic is that the global error contains powers of \(\frac{h}{{\Delta x^{2} }}\) and \(\frac{h}{\Delta x}\) in all cases, which means that the consistency is only conditional. This fact and its negative consequences will be explained in the next remark. □

Remark 2.4

As we showed, these methods are only conditionally consistent, and therefore conditionally convergent, if one considers them as time integrators for the original PDE. The first consequence of the \(\frac{h}{{\Delta x^{2} }}\) terms in the global error is that when one has to solve the PDE with higher accuracy, it is not enough to make the size of the spatial cells \(\Delta x\) tend to zero, because if h is kept constant, the global error actually does not decrease (but stability remains, unlike in the case of the conditionally stable explicit methods). The numerical solution converges to the true solution of the original PDE if both the space step size \(\Delta x\) and the time step size h go to zero, but h goes faster than \(\Delta x^{2}\). If this condition is not fulfilled, the errors tend to non-negligible constant values due to the convex combination property. The second consequence is the slow convergence for larger time step sizes. As we will see, when we start to decrease the time step size, the errors are first decreasing very slowly, and they approach the theoretical order only for medium step sizes. It means that for larger time steps, the order of temporal convergence cannot be determined using the formula \(\varepsilon \sim h^{p}\). We note that this conditional consistency is the price one has to pay for unconditional stability. It is common among the stable explicit methods, for example in the case of the first order UPFD method. This is the main weak point of these kinds of algorithms. Nevertheless, we are going to show that they are very competitive in several cases.

As we already mentioned in Remark 2.2, the new CLQn methods (and the methods given in Sect. 2.1) can be used to solve general ODE systems. Now the order of accuracy is examined from this point of view.

Theorem 2.3

The order of convergence is three for the three-stage CLQ algorithm and four for the 4, 5 and 6-stage CLQn algorithms, \(n \in \left\{ {2,\;3,\;4} \right\}\), if they are applied to the general.

linear ODE system, where \(\vec{u}\,{:}\, \left\{ {1,...,N} \right\} \times {\mathbb{R}} \mapsto {\mathbb{R}}^{N}\) is the unknown vector-function, \(M \in \,{\mathbb{R}}^{N \times N}\) is an arbitrary constant matrix, while \(\vec{Q} ,\,\,\vec{u}^{0} \, \in \,{\mathbb{R}}^{N}\) are arbitrary vectors.

Proof

We applied the Mathematica software for symbolic computer-calculation. The linear initial value problem

has been examined, where the general elements mij are kept undetermined. It is well known that this ODE system has the following analytical solution:

Substituting h instead of t, i.e. considering this function at the end of the time step, the exact solution up to fourth order in h can be written as

The software calculated this solution as well as the numerical solution by symbolic matrix operations. After this, the coefficients of h from h0 up to h4 have been sorted out for both the analytical and the numerical solution of the ODE system. It is observed that the appropriate coefficients are exactly the same in the exact solution and in the CLQn results. It follows that the local error of the time integrator CLQn is fourth order in h if n = 1 and fifth order if n = 2, 3 or 4, which is equivalent to the statement of the theorem about global errors. □

Corollary 2.5

If we apply the CLQn methods as time integrators for the ODE system (2.18) obtained from the PDE (1.1) by spatial discretization using any fixed spatial grid, the order of the convergence of the method is the same as we stated for the equidistant grid. It means that these new methods can be used in any space dimensions, in case of unstructured grids or inhomogeneous medium. We underline again that some other stable and explicit methods, such as the odd–even hopscotch and ADE methods, lose their favourable properties when they are applied to these more general meshes.

3 The Adaptation of the Proposed Methods for the Nonlinear Cases

3.1 Fisher’s equation

We apply a kind of operator splitting, which means that first we handle the effect of the diffusion term and then separately the effect of the nonlinear reaction term in Eq. (1.3). The diffusion term is treated exactly as in Sect. 2. For example, first Algorithm 4 is applied, which yields \(u_{i}^{{{\text{pred}}}}\), the value in which the diffusion term is fully taken into account. These \(u_{i}^{{{\text{pred}}}}\) values are now used as starting points for the calculation of the reaction term, which has the form \(\beta u\left( {1 - u} \right) = \beta u - \beta uu\). The negative term can affect the positivity condition, thus we treat the terms in a “semi-implicit” or “pseudo-implicit” way as follows. We substitute the first power of the new, unknown value \(u_{i}^{n + 1}\) into the expression of the nonlinear term, thus this new value appears on the right-hand side of the equation as well. Everywhere else \(u_{i}^{{{\text{pred}}}}\) remains, thus we have

This can be straightforwardly rearranged into a fully explicit form:

The operation (3.1) can be performed at the last stage (inside the “for” loop going through the nodes) immediately after the new values \(u_{i}^{{{\text{CLQn}}}}\) (\(u_{i}^{{\text{C}}}\) and \(u_{i}^{{{\text{CL}}}}\) in the case of the CNe and LNe method, respectively) are calculated, because these serve as \(u_{i}^{{{\text{pred}}}}\). We note that a similar treatment has been applied by Appadu et al. [55] to the generalized Burgers-Huxley equation in the framework of the NSFD methods, but they constructed one-stage first order methods.

Another possibility of treating the Fisher-term arises if we solve the

ODE analytically. More precisely, we solve the corresponding initial value problem, where the values \(u_{i}^{{{\text{pred}}}}\) serve as initial values at the initial time, which is the beginning of the actual time step. This yields:

Apart from the operator splitting itself, this solution avoids any approximation or linearization.

Remark 3.1

Assume that \(0 \le u_{i}^{{{\text{pred}}}} \le 1\). Now if one considers the coefficient of \(u_{i}^{{{\text{pred}}}}\), one can easily see that the numerators and the denominators in both (3.1) and (3.3) are positive and the denominators cannot be larger than the numerators. Moreover, the right-hand sides cannot be larger than one. From these, it immediately follows that the new value \(u_{i}^{n + 1}\) cannot be smaller than the \(u_{i}^{{{\text{pred}}}}\) value, and it cannot be negative, thus \(0 \le u_{i}^{{{\text{pred}}}} \le u_{i}^{n + 1} \le 1\) holds.

Corollary 3.1

(dynamical consistency of the methods). If we use Algorithm 1–4 with Eqs. (3.1) or (3.3) to solve Fisher’s Eq. (1.3) and if the initial values are in the unit interval \(u_{i}^{0} \in \left[ {0,\, 1} \right]\), then the values of u remain in this interval for arbitrary nonnegative β and time step size h during the whole calculation. This is similar to the maximum and minimum principles, and automatically implies unconditional stability. Moreover, the appearance of the Fisher term (increasing β from zero to a positive value) cannot imply the decrease, only the increase of the u values, which also reflect a dynamical property of the original PDE (1.3).

Proof

Theorem 2.1 and Remark 3.1 immediately imply the statement.

3.2 Nagumo’s Equation

Now the reaction term in Eq. (1.4) has the form \(\beta u\left( {1 - u^{\delta } } \right)\left( {u^{\delta } - \gamma } \right) = \beta u^{\delta + 1} - \beta \gamma u - \beta uu^{2\delta } + \beta \gamma u^{\delta + 1}\). The ODE containing the nonlinear Nagumo term cannot be solved analytically in general, so we follow the logic which leads us to Formula (3.1) above. This implies

The explicit form is easily obtained:

Theorem 3.1

(dynamical consistency). If Algorithm 6 or 7 and then formula (3.4) is applied to solve Eq. (1.4), then the values of the solution lie in the unit interval \(\left[ {0,\, 1} \right]\) provided that \(0 \le u_{i}^{0} \le 1,\; 0 \le \gamma \le 1,\; \delta > 0,\; \beta > 0\) for arbitrary time step size h if.

Since \(1 + \beta h > \gamma \left( {1 + \beta h\gamma } \right)\), \(u_{i}^{n + 1}\) is obviously nonnegative, thus we have to prove that it cannot be larger than 1. Let us introduce the temporary notations \(u = u_{i}^{n + 1}\) \(p = u_{i}^{{{\text{pred}}}}\) and \(b = \beta h\) for brevity. We prove that

provided that \(0 \le p \le 1,\quad 0 \le \gamma \le 1,\quad \delta > 0,\quad b > 0\).

The examined u(p) function is continuous and sufficiently smooth in \(p \in \left[ {0,\, 1} \right]\) and at the boundaries of the examined interval its values are \(u\left( {p = 0} \right) = 0\) and \(u\left( {p = 1} \right) = 1\). Due to the extreme value theorem, if u has no extremal value in the interior of the unit interval, then it cannot be larger than 1. Let us differentiate the u(p) function:

The denominator of (3.6) is always positive, thus to seek for extremal values, we need to examine only the numerator. The value of the numerator is nonnegative for p = 0. The coefficients of the two largest power of p, namely \(p^{2\delta }\) and \(p^{3\delta }\) can be negative, which can cause a non-positive numerator. Note that since \(p \in \left[ {0,\, 1} \right]\) and the exponents are positive, larger powers of p are smaller and increasing with p. This implies that if the numerator is negative for a particular \(p^{ * } \in \left] {0,\, 1} \right[\), then it is also negative for p = 1. Thus, if the numerator of (3.6) is positive for p = 1, then the u(p) function is monotonously increasing from 0 to 1 and the statement of the theorem is true. The numerator for p = 1 can be written as

and the requirement of the non-negativity of (3.7) gives the condition (3.5). □

Remark 3.2

If condition (3.5) is reorganized into the form

Then it is easy to see that if δ ≤ 1 then (3.5) holds for arbitrary non-negative b = βh, and γ. Moreover, by examining the right-hand side of (3.5) one can obtain that it approaches the value 1 only if b tends to infinity and γ tend to zero. For example, for \(\gamma \to 0\) it takes \(\left( {1 + b} \right)/b\), so the maximum value of δ is at least two if \(h \le 1/\beta\). On the other hand, if b tends to zero and/or γ tends to one, it strictly monotonously tends to infinity. The values of the maximum allowed δ are plotted in Fig. 2.

The maximum allowed values of the parameter δ as a function of βh and γ for Eq. (1.4) under which dynamical consistency is guaranteed

Remark 3.3

In the case of Huxley’s equation, the \(\gamma = 0,\delta = 1\) substitution into (3.5) yields the

condition, which trivially holds for any nonnegative β for arbitrary time step size, thus the statement of the theorem is automatically true in this case.

We note that the investigation of the consistency of the methods in the case of nonlinear equations is out of the scope of this paper, and is planned to be published in the future.

4 Numerical Experiments in 1D Using Analytical Reference Solutions

The numerical solution is compared with the reference solution only at final time \(t_{{{\text{fin}}}}\) which will be specified later. We measure the accuracy using the global \(L_{\infty }\) error, which is the maximum of the absolute difference between the reference temperature \(u_{{\text{j}}}^{{{\text{ref}}}}\) and the temperature \(u_{{\text{j}}}^{{{\text{num}}}}\) calculated by our numerical methods at the final time:

The numerical order of convergence can be calculated using two values of the errors belonging to two subsequent time step sizes h1 and h2, where h1 > h2, using the formula

4.1 Experiment 1: Nonlinear Fisher’s Equation

We are going to reproduce the following analytical solution [39, 56] of PDE (1.3), valid for \(\alpha = 1\):

The initial condition is obtained simply by substituting the initial time into that:

The appropriate Dirichlet boundary conditions are prescribed at the two ends of the interval:

We examine the numerical solution at the interval \(x \in \left[ {0 ,\, 2 } \right]\), thus \(x_{0} = 0\,,\;\,x_{N} = 2\), which is discretized by dividing it into 500 equal parts: \(x_{j} = x_{0} + j\Delta x\,,\;j = 0,...,500\,,\;\,\Delta x = 0.004\). The initial and the final time are \(t^{{0}} = 0\) and \(t^{{{\text{fin}}}} = 0.1\). The nonlinear coefficient is quite large, \(\beta = 22\).

For comparison purposes, we coded the NSFD scheme mentioned in the paper of Agbavon et al. [39], which has the formula for the \(\alpha = 1\) case:

First, for verification of this NSFD part of our code, we reproduced some error-values from that publication (Table 6 in [39]). Then, in our experiment, we calculated the error as a function of the time step size h for all the examined methods. The results are presented in Fig. 3. We note that similar results have been obtained for other parameter values of β, \(t^{{{\text{fin}}}}\), etc. as well. The proposed CLQ, CLQ2, etc. algorithms are smoothly converging without the slightest sign of instability. However, this convergence is very slow for larger time step sizes due to the inconsistent truncation error terms, as we explained in Remark 2.4. In the case of all members of the CNe-CLQ4 family, not only Eq. (3.1) but Eq. (3.3) is used to test, where the nonlinear term is treated analytically. For very large and very small time step sizes, this treatment (denoted by “An” in the Figure) can be more accurate, but for medium time step sizes, which are the most interesting for us, the accuracy is the same for the two treatments. The NSFD method yields an error around 108 for large and medium time step sizes, but below those, it is quite accurate.

In Table 2 we present the maximum of the numerical orders calculated by the (4.2) formula, with the appropriate larger and smaller time step sizes h1 and h2, and the belonging errors. The numerical orders are not very far from their theoretical values, even for this severely nonlinear case. In Fig. 4 we present the errors of the CLQ and CLQ4 schemes for different numbers of nodes N, and therefore, mesh spacing \(\Delta x\) as a function of the time step size. This can help one to optimize the spatial and the temporal discretization.

4.2 Experiment 2: Nonlinear Nagumo’s Equation

Now PDE (1.4) with \(\alpha = 1\) is going to be solved in the domain \(\left( {x,\, t} \right) \in \left[ {x_{0} ,\, x_{N} } \right] \times \left[ {0,\, t^{{{\text{fin}}}} } \right]\). We found the following analytical solution of Eq. (1.4) in the literature [12], valid in the \(\delta = 1\) case:

where \(c = \sqrt {\frac{\beta }{2}} \left( {1 - 2\gamma } \right)\). We use parameter values \(\alpha = 1\,,\; \beta = 10\,,\; \gamma = 0.4\,,\; \delta = 1\) in Eq. (1.4), and \(x_{0} = 0\,,\;x_{N} = 3,\,\;t^{{{\text{fin}}}} = 0.6 ,\, \Delta x = 0.02\). Similarly to the previous subsection, we obtain the initial and the Dirichlet boundary conditions by taking this function at the appropriate time and space points: \(u\left( {x,\, 0} \right)\) and \(u\left( {x_{0} ,\, t} \right)\,,\;u\left( {x_{N} ,\, t} \right)\), respectively. In paper [12] four nonstandard finite difference schemes were proposed with the following formulas:

where \(\phi_{2} = \frac{{e^{\beta \Delta t} - 1}}{\beta }\), \(\psi_{1} = \frac{{1 - e^{ - \beta \Delta x} }}{\beta }\), \(\psi_{2} = \frac{{e^{\beta \Delta x} - 1}}{\beta }\) and \(R = \frac{{\phi_{2} }}{{\psi_{1} \,\psi_{2} }}\). It is analytically proved that these methods have dynamical consistency, i.e. positivity and boundedness. However, these are not unconditional, and the most important condition is \(R \le {\raise0.7ex\hbox{1} \!\mathord{\left/ {\vphantom {1 2}}\right.\kern-0pt} \!\lower0.7ex\hbox{2}}\), which is actually a similar condition than the standard mesh Fourier number for the explicit Euler method. We use these NSFD schemes for comparison purposes.

We present here the set of the error curves in Fig. 5, while some numerical errors are shown in Table 3. One can see that the new methods behave well for this strongly nonlinear case as well. The error as a function of h decreases smoothly with h, there are no signs of instability or unphysical, e.g. oscillatory behaviour. In Fig. 6 the concentration u as a function of the space variable x is presented for the case of the analytical solution at the final time and two numerical solutions. The initial \(u_{0}\) function is also plotted to see how the variable changes in time. One can see that even for this relatively large time step size, where the maximum error is 0.044, the numerical solution is qualitatively the same as the analytical one, and no unphysical oscillations appear. A further decrease of the time step size yields numerical curves which are indistinguishable from the analytical one.

4.3 Experiment 3: Nonlinear Huxley’s Equation

Now PDE (1.4) with \(\alpha = 1\) and \(\gamma = 0\) is going to be solved. We found the following analytical solution in the literature [16, p. 34]

The parameters are \(\beta = 7\,,\;c = 8\) and \(x_{0} = 0\,,\;x_{N} = 2,\,\;t^{0} = 0,\,\;t^{{{\text{fin}}}} = 0.4,\, \Delta x = 0.005\). Similarly to the previous subsection, we can construct the initial and the Dirichlet boundary conditions by taking this function at the appropriate time and space points. For comparison purposes, we use the NSFD schemes (4.3)–(4.6) again. The obtained error-curves are presented in Fig. 7. One can see that the methods behave well for this case as well.

In Table 4 we present the maximum of numerical orders with the appropriate larger and smaller time step sizes and errors. The numerical orders can exceed their theoretical values for the CLQ2, CLQ3 and CLQ4 methods. In Fig. 8 the concentration u as a function of the space variable x is presented for the case of the analytical solution at the final time and two numerical solutions. One can see that even for this case, where the maximum errors of the CLQ and CLQ4 algorithms are 0.0321 and 0.0123, respectively, the numerical solution is qualitatively the same as the analytical one.

4.4 Experiment 4: Diffusion Equation with Strongly Space-Dependent Coefficient

We use the recently published [57] analytical solution of the diffusion equation where the coefficient has a power-law space dependence: \(\alpha \left( x \right) = \overline{\alpha } x^{m}\). With this one has the following equation

The analytical solution of this equation is highly nontrivial, since it has the form

where \(s = m - 2\), \(\eta = x \cdot t^{1/s} \, \in {\mathbb{R}}\), σ, c1 and c2 are arbitrary constants, while M and W are the Whittaker functions [58, 59]. Here we consider only the c1 = 0 and c2 = 1 case and we set m = 20, \(\sigma = 6\,,\,\,\overline{\alpha } = 1\), \(t^{0} = 2.3\,,\;t^{{{\text{fin}}}} = 2.5\,\). The initial condition and the Dirichlet boundary conditions are calculated using the analytical solution, as in the previous examples. Let us consider the 1D interval \(x \in \left[ {x_{0} \,,\,\,x_{N} } \right]\), where \(x_{0} = 0.6\) and N = 299. The equidistant spatial grid is constructed using the \(x_{j} = x_{j - 1} + \Delta x\,,\, \,j = 1,...,N\) rule, where \(x_{0} \,,\, x_{1} \,,\, ...,\,\,x_{N}\) are the coordinates of the cell borders and \(\Delta x = 2 \cdot 10^{ - 3}\). The cell centres \(X_{1} \,,\, ...,\,\,X_{N}\) can be expressed as \(X_{j} = x_{j - 1} + \Delta x/2\,,\;\,\,\,j = 1,...,N\,\). The power-law space dependence \(\alpha \left( x \right) = \overline{\alpha } x^{m}\) of the diffusion coefficient is taken into account only at the level of the k material coefficients, so take \(c \equiv 1\) and \(\rho \equiv 1\) for simplicity, thus \(C_{j} = \Delta x_{j} \,,\, \,j = 1,...,N\). The resistances are calculated as follows:

Note that the concentrations, including the Dirichlet boundary conditions are calculated at the \(X_{1} \,,\, ...,\,\,X_{N}\) cell centres while the conductivities are taken into account at the \(x_{1} \,,\, ...,\,\,x_{N - 1}\) cell borders. The stiffness ratio of this problem is \(5.3 \times 10^{8}\), and \(h_{{{\text{MAX}}}}^{{{\text{EE}}}} { = 6}.3 \times 10^{ - 8}\). We use the generalized methods, for example, Algorithm 6b. For comparison purposes, we use the UPFD method [32], which in our case has the form:

and the most standard 4th order Runge–Kutta method [60, p. 737]. In Fig. 9 we present the errors as a function of the time step size h, while in Table 5 the numerical orders are tabulated. One can see that the unconditionally stable methods behave well for this very stiff problem as well, but the numerical order of convergence is significantly less for the higher order schemes. Only one point is shown for the RK4 method, since above the smallest time step size, that scheme was unstable.

\(L_{\infty }\) errors as a function of the time step size h for the linear Eq. (1.3) with a spatially varying diffusion coefficient (Experiment 4)

In Fig. 10 the analytical and a numerical solution at \(t = t_{{{\text{fin}}}}\) by two methods are presented. One can see that apart from a small delay at about x = 0.8, the numerical solutions are close to the analytical one even if we use time step size, which is 3–4 orders of magnitude larger than the stability threshold for the RK methods.

Remark 4.1

If in Experiment 4 one increases N from 299 to 799, then the stiffness ratio and the mesh Fourier number drastically change to \(6.7 \times 10^{14}\), and \(h_{{{\text{MAX}}}}^{{{\text{EE}}}} { = 5}{\text{.6}} \times 10^{ - 14}\). This implies that Runge–Kutta methods cannot be used at all, while the unconditionally stable methods produce almost the same error curves as in Fig. 9.

4.5 Experiment 5, General, Strongly Nonlinear Nagumo Equation with Non-equidistant Grid

We use a solitary wave analytical solution [61, 62] of Eq. (1.4), which is valid if \(\alpha = 1\), and has the form

here \(\vartheta = \frac{{\left( {1 + \delta - \gamma } \right)\rho }}{{2\left( {1 + \delta } \right)}}\) is the speed of the travelling wave, while \(\rho = \sqrt {4\beta \left( {1 + \delta } \right)}\), and \(\sigma = \frac{\rho \delta }{{4\left( {1 + \delta } \right)}}\). We use the following parameters: \(\alpha = 1\,,\; \beta = 24,\; \gamma = 0.8\,,\; \delta = 6\) and \(t^{0} = 0\,,\;t^{{{\text{fin}}}} = 0.1\,\). The initial condition and the Dirichlet boundary conditions are calculated using the analytical solution, as in the previous examples. Now a non-equidistant spatial grid is constructed on the 1D interval \(x \in \left[ {x_{0} \,,\,\,x_{N} } \right]\) using the following procedure. We start with the definition of the coordinates \(x_{0} \,,\, x_{1} \,,\, ...,\,\,x_{N}\) of the cell borders:

where \(x_{0} = - 5\), \(\Delta x_{1} = 2 \cdot 10^{ - 3}\) and \(\varepsilon = 1.002\). It means that the cell sizes are increasing from the left to the right. Now the cell centres \(X_{1} \,,\, ...,\,\,X_{N}\) can be expressed as follows:

Now \(C_{j} = \Delta x_{j} \,,\, \,j = 1,...,N\), and the resistances are calculated as \(R_{i,\,i + 1} = X_{i + 1} - X_{i} ,\,\;\,i = 1,...,N - 1\). The capacities and the resistances are both increasing from 0.002 to about 0.0219. The stiffness ratio is \(9.7 \times 10^{6}\), and \(h_{{{\text{MAX}}}}^{{{\text{EE}}}} { = 2}.1 \times 10^{ - 6}\). For comparison purposes, we have coded the explicit exponential finite difference method proposed by Inan [62], which is a one-stage scheme with the following formula

for an equidistant mesh. We adapted this to our non-equidistant case as follows:

In Fig. 11 we present the \(L_{\infty }\) errors as a function of the time step size h. One can see that the proposed methods behave quite similarly than in the previous cases, but some order-reduction can be observed for the higher order schemes (Table 6).

\(L_{\infty }\) errors as a function of the time step size h for the highly nonlinear Eq. (1.4) with a spatially varying diffusion coefficient (Experiment 5)

We also present the analytical and a numerical solution at \(t = t_{{{\text{fin}}}}\) by the method for a concrete time step size in Fig. 12.

5 Numerical Experiments in 2D

All simulations in this section are performed using the MATLAB R2020b software on a desktop computer with an Intel Core (TM) i11-11700F. Since the analytical solution does not exist for complicated systems, the reference solution has been calculated by the implicit ode15s solver setting very stringent error tolerance (‘RelTol’ and ‘AbsTol’ were both \({10}^{-14}\)).

5.1 The Adaptive Time-Step Controller

In references [63,64,65], the authors proposed adaptive procedures for the solution of diffusion–reaction equations. They used one-dimensional systems to test their methods. However, according to our experience, the successful validation of a method in one dimension does not guarantee the same performance in two dimensions. This is why our adaptive-CLQ2 method will be applied to two-dimensional systems with a high stiffness ratio.

On the other hand, in [66,67,68] the authors suggested a step size control algorithm for the numerical integrations of ODEs. The authors tested their algorithms by applying them to a small system of ODEs (maximum 25 equations). We do believe that the step size controller is usually efficient when it is applied to a system of ODEs containing a limited number of equations, but the same controller can show a poor performance when it is applied to a big system of ODEs containing, for instance, 50 equations. So, if we want to apply those proposed controllers to get the numerical solution of Eq. (2.2), then we will be confined to a small size of the matrix \(M\), which does not match the engineering applications. Our adaptive-CLQ2 will be applied to a system of \(900\) equations, and it can still show good performance, as we will see. Moreover, in [66] (Paragraph 4.1) one can straightforwardly calculate the stiffness ratio of the system, which is 4.66, while, as we will see, the stiffness ratio of our tested systems can be of order \(10^{11}\).

The main objective of this section is to design an adaptive time-step controller in order to achieve a given level of accuracy in the solution at a minimal computational cost. Numerical calculations based on an adaptive controller allow changing the time step \(h_{n}\) such that the error can be lower than a prescribed value. The most common way (see e.g. [69]) to adapt the step size is the elementary controller:

here \(\varepsilon\) is the desired tolerance and \(p\) is the order of the used method, while \(r_{n}\) is the local error estimator at time level \(n\). Recall that, by definition, the local error for an algorithm is the error generated by a single step of the algorithm, under the assumption that we start the step with the exact solution. There are many adaptive techniques for local error estimations. One of them is the so-called embedded Runge–Kutta pairs. In that technique, two RK methods are used to calculate the local error estimation, where one of them is more accurate than the other [70]. The Runge–Kutta-Fehlberg method (denoted RKF45) has two methods of orders 4 and 5, as it is well explained in the literature [71]. Inspired by that, and since our CLQ2 is a multi-stage embedded method, we decided to follow the same fashion of embedded Runge–Kutta pairs in calculating the local truncation error.

Let \(\hat{u}_{i}^{i + 1}\) and \(u_{i}^{n + 1}\) be the approximated solutions at time level \(n + 1\) obtained by the CLQ (third order) and CLQ2 (fourth order) methods respectively. We estimate the local error using the following formula:

If \(r_{n} \le \varepsilon\), we accept the solution and set \(t_{n + 1} = t_{n} + h_{n}\), while the solution is \(u_{i}^{n + 1}\) (since \(u_{i}^{n + 1}\) is more accurate than \(\hat{u}_{i}^{n + 1}\)).

If \(r_{n} > \varepsilon\), we reject the time step and try again with a new time step calculated by Eq. (5.1).

Our adaptive-CLQ2 method will be applied not only to linear diffusion–reaction problems but also to nonlinear types. In the CLQ2 method, the effect of the nonlinear reaction term (Nagumo term) is only considered at the fourth stage, but not in the third stage, as we can see in Eq. (3.4). In other words, \(\hat{u}^{n + 1}\) does not include the effect of the nonlinear reaction term, while the value of \(u^{n + 1}\) includes that effect. So, the difference between those two values, which represents \(r_{n}\), will not reflect the real behaviour of the local error. To fix this, we calculated \(\hat{u}_{i}^{n + 1}\) taking into account the effect of the nonlinear reaction term. If we applied here the same pseudo-implicit treatment as in Eq. (3.4), then the difference between \(u_{i}^{n + 1}\) and \(\hat{u}_{i}^{n + 1}\) would be extremely small when the reaction term dominates the diffusion term, and we could not trust in the error estimator. Therefore, we choose the following simple explicit treatment of the reaction term:

In the last equation, the value of \(u_{i}^{CLQ}\) can be calculated using stages 1, 2 and 3 in Algorithm 6. This algorithm is valid in the linear (\(\beta = 0\)) and in the nonlinear case (\(\beta > 0\)) as well.

In the following experiments, we will test our improved adaptive time step controller in the case of the linear diffusion equation with a heat source and the nonlinear diffusion equation with the Nagumo term. We do not claim that our adaptive time step controller is efficient for solving all kinds of systems. However, this algorithm can reduce the time required for achieving the numerical calculations when applied to some stiff systems. The advantages and disadvantages of this algorithm will be illustrated for each experiment individually. The adaptive-CLQ2 will be compared to the CLQ2, RK4, and RKF45 methods as well.

5.2 Experiment 6: Heat Equation with Heat Source

In this experiment we consider Eq. (2.2) in 2 space-dimensions \(\left( {x,y,t} \right) \in \left[ {0,1} \right] \times \left[ {0,1} \right] \times \left[ {0,2 \times 10^{ - 4} } \right]\), subjected to zero Neumann boundary conditions. The space domain was divided into \(N = N_{x} \times N_{y} = 30 \times 30\), thus we have 900 cells. The initial conditions were \(u_{{\text{i}}} (0) = rand\), where rand is a pseudo-random number in the unit interval. The resistances and capacities obeyed the following equations:

The heat source can be given by the following equation:

The stiffness ratio of the introduced system is \(1.54 \times 10^{11}\) (\(h_{{{\text{MAX}}}}^{{{\text{EE}}}} = 6.4 \times 10^{ - 12}\)). Figure 13 shows the error as a function of the total running time at different levels of accuracy. One can see that the adaptive-CLQ2 method is more efficient than the CLQ2 and the other methods when high-accuracy calculations are required. The order-reduction due to the high stiffness is smaller for the adaptive than for the non-adaptive CLQ2 algorithm. The RK5 method can provide reliable calculations only when the time step size is less than \(6.4 \times 10^{ - 12}\), otherwise it is unstable. A possible remedy for the problem of instability could be the usage of the Runge–Kutta-Fehlberg (RKF45) method, but it is not much faster, and it reduces the accuracy of the calculations, as we can see in the figure.

5.3 Experiment 7: FitzHugh-Nagumo equation

In this experiment we consider Eq. (1.4) with \(\beta = 10,\,\,\gamma = 0.1,\,\,\delta = 0.5\). All other settings and circumstances were the same as in Experiment 6, except that now \(q \equiv 0\). For comparison purposes, we used not only the non-adaptive RK5 and the adaptive RKF45, but the implicit and non-adaptive Crank-Nicolson (Cr-Ni) scheme with Strang-splitting. It means that the PDE contains only the diffusion term is solved by the standard Cr-Ni scheme, but before and after this stage, formula (3.4) is applied with half time step sizes to take into account the effect of the nonlinear term.

Figure 14 suggests that the adaptive-CLQ2 is more efficient than the CLQ2 when high accuracy is required. Time steps of order \(10^{ - 11}\) have to be used in the case of RK5 to reach the stability region of the algorithm. The RKF45 method is present with only a few points on the right of the figure, so none of these can be proposed for this type of problem. The Cr-Ni-Strang algorithm is completely useless for small and medium time step sizes, but suddenly becomes extremely accurate when h is decreased. So, if high accuracy is required, this implicit method can be recommended, but only for these relatively small system sizes (e.g. below N = 1000). We will see soon that for a larger system, the implicit solvers are much slower than the explicit ones.

5.4 Experiment 8: Heat Equation with Moving and Pulsing Heat Source

In this experiment we consider Eq. (2.2) in 2 space-dimensions \(\left( {x,y,t} \right) \in \left[ {0,1} \right] \times \left[ {0,1} \right] \times \left[ {0,1} \right]\), subjected to zero Neumann boundary conditions. The space domain was divided into \(N = N_{x} \times N_{y} = 100 \times 100\) cells, thus we have 10,000 cells. The initial conditions were \(u_{i} (0) = rand\), while the resistances and capacities were set to unity, \(C = 1,R_{x} = 1,R_{y} = 1\) to obtain a non-stiff system. The heat source can be given by the following equation:

It means we have a Gaussian spot-like heat source with effective diameter \(r_{0} = 4\Delta x = 0.04\), moving with initial horizontal velocity \(v_{x} = 0.8\) and vertical acceleration \(a_{y} = - 2\) from the initial position \(x_{0} = 0.05\;,\;\,y_{0} = 0.9\). Figure 15 shows the contour of the temperature distribution at T = 1. For comparison purposes, we used not only the RK5, RKF45 and the standard (non-adaptive) Crank-Nicolson, but the four implicit ODE solvers of MATLAB:

-

ode15s, variable-step, variable-order solver based on the numerical differentiation formulas (NDFs) of orders 1 to 5

-

ode23t, an implementation of the trapezoidal rule using a “free” interpolant.

-

ode23s, a 2nd order modified Rosenbrock formula.

-

ode23tb, a combination of the backward differentiation formula and the trapezoidal rule;

Figure 16 shows the error as a function of the total running time. One can see that the effect of conditional consistency is not present owing to the adaptive controller in the case of the adaptive-CLQ2 method. It makes this solver more efficient than all the other methods for high- and medium accuracy, and the whole CNe-CLQ family is quite efficient for low and medium accuracy.

6 Conclusions

Our aim was to construct a family of fully explicit numerical algorithms to solve parabolic PDEs, especially the non-stationary diffusion (or heat) and diffusion–reaction equations. The new algorithms are one step time-integrators, and consist of 3–6 stages. The algorithms can be applied to general linear ODE systems (for example, the spatially discretized heat equation, even in the case of unstructured grids as well), and for this case, we show that the convergence in the time step size of the 3-stage CLQ scheme is three, while it is four for the 4, 5 and 6-stage algorithms CLQ2, CLQ3 and CLQ4. Although the accuracy is not always good for large time step sizes, the dynamical consistency, as well as stability, is guaranteed for arbitrary time step sizes for the linear diffusion equation, the nonlinear Fisher’s and Huxley’s equation, and, within a wide parameter domain, for the Nagumo’s equation. This property is especially advantageous when one models physical processes, where the variable is the concentration, which must be in the unit interval.

We used one-dimensional analytical solutions of the linear diffusion equation with a space-dependent diffusion coefficient, as well as the Fisher’s, Huxley’s and Nagumo’s equations with rather large nonlinear coefficients to verify and test the new methods. After this, we developed the adaptive step size control version of the algorithms. The values predicted in the third stage are compared to the values calculated in the fourth stage to obtain an estimation of the local error. In this way an embedded method was implemented, where the adaptation of the time step size is performed with very little extra computational effort. The performance of the non-adaptive and adaptive methods was examined in the case of three 2-dimensional systems with inhomogeneous parameters and randomly generated discontinuous initial conditions. We showed that the proposed methods are competitive as they can give quite accurate results orders of magnitude faster than the standard Runge–Kutta and Crank-Nicolson schemes, depending on the system parameters.

In the near future, we plan to apply the proposed methods to other nonlinear equations as well. We are going to systematically investigate the possible treatment of the nonlinear terms, including linearizations [72] and operator-splitting techniques.

Data Availability

The datasets generated during the current study are available from the corresponding author on reasonable request.

References

Dattagupta, S.: Diffusion Formalism and Applications. Taylor & Francis, Milton Park (2014)

Ghez, R.: Diffusion Phenomena: Cases and Studies. Dover Publications Inc, New York (2001)

Bennett, T.: Transport by Advection and Diffusion. Wiley, New York (2012)

Pisarenko, I., Ryndin, E.: Numerical drift-diffusion simulation of GaAs p-i-n and Schottky-Barrier photodiodes for high-speed AIIIBV on-chip optical interconnections. Electronics 5(4), 52 (2016). https://doi.org/10.3390/electronics5030052

Fisher, D.J.: Defects and Diffusion in Carbon Nanotubes. Trans Tech Publications, Wollerau (2014)

Fradin, C.: On the importance of protein diffusion in biological systems: the example of the Bicoid morphogen gradient. Biochim. Biophys. Acta Proteins Proteom 1865(11), 1676–1686 (2017). https://doi.org/10.1016/j.bbapap.2017.09.002

Yu, H., et al.: The moisture diffusion equation for moisture absorption of multiphase symmetrical sandwich structures. Mathematics 10(15), 2669 (2022). https://doi.org/10.3390/math10152669

Zimmerman, R.W.: The Imperial College lectures in petroleum engineering. World Scientific Publishing, Singapore, London (2018)

Li, Y., van Heijster, P., Marangell, R., Simpson, M.J.: Travelling wave solutions in a negative nonlinear diffusion–reaction model. J. Math. Biol. 81, 1495–1522 (2020). https://doi.org/10.1007/s00285-020-01547-1

Weickenmeier, J., Jucker, M., Goriely, A., Kuhl, E.: A physics-based model explains the prion-like features of neurodegeneration in Alzheimer’s disease, Parkinson’s disease, and amyotrophic lateral sclerosis. J. Mech. Phys. Solids 124, 264–281 (2019). https://doi.org/10.1016/j.jmps.2018.10.013

Campos, D., Llebot, J.E., Fort, J.: Reaction-diffusion pulses: a combustion model. J. Phys. A. Math. Gen. 37, 6609–6621 (2004). https://doi.org/10.1088/0305-4470/37/26/001

Agbavon, K.M., Appadu, A.R.: Construction and analysis of some nonstandard finite difference methods for the FitzHugh–Nagumo equation. Numer. Methods Partial Differ. Equ. 36(5), 1145–1169 (2020). https://doi.org/10.1002/num.22468

Zhao, Y., Billings, S.A., Guo, Y., Coca, D., Dematos, L.L., Ristic, R.I.: Spatio-temporal modeling of wave formation in an excitable chemical medium based on a revised Fitzhugh-Nagumo model. Int. J. Bifurc. Chaos 21(2), 505–512 (2011). https://doi.org/10.1142/S0218127411028556

Seadawy, A.R., Rizvi, S.T.R., Ahmed, S.: Multiple lump, generalized breathers, Akhmediev breather, manifold periodic and rogue wave solutions for generalized Fitzhugh-Nagumo equation: applications in nuclear reactor theory. Chaos Solitons Fractals 161, 112326 (2022). https://doi.org/10.1016/j.chaos.2022.112326

Appadu, A.R., Inan, B., Tijani, Y.O.: Comparative study of some numerical methods for the Burgers-Huxley equation. Symmetry (Basel) 11, 1333 (2019). https://doi.org/10.3390/sym11111333

Bradshaw-Hajek, B.: Reaction-Diffusion Equations for Population Genetics. Wollongong (2004)

Hassan, M.M., Abdel-Razek, M.A., Shoreh, A.A.H.: Explicit exact solutions of some nonlinear evolution equations with their geometric interpretations. Appl. Math. Comput. 251, 243–252 (2015). https://doi.org/10.1016/j.amc.2014.11.046