Abstract

We propose explicit stochastic Runge–Kutta (RK) methods for high-dimensional Itô stochastic differential equations. By providing a linear error analysis and utilizing a Strang splitting-type approach, we construct them on the basis of orthogonal Runge–Kutta–Chebyshev methods of order 2. Our methods are of weak order 2 and have high computational accuracy for relatively large time-step size, as well as good stability properties. In addition, we take stochastic exponential RK methods of weak order 2 as competitors, and deal with implementation issues on Krylov subspace projection techniques for them. We carry out numerical experiments on a variety of linear and nonlinear problems to check the computational performance of the methods. As a result, it is shown that the proposed methods can be very effective on high-dimensional problems whose drift term has eigenvalues lying near the negative real axis and whose diffusion term does not have very large noise.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

We are concerned with stabilized explicit methods for high-dimensional stochastic differential equations (SDEs), which give weak second order approximations to the solution of SDEs. One such class for ordinary differential equations (ODEs) is the Runge–Kutta–Chebyshev (RKC) methods, which are useful for stiff problems whose eigenvalues lie near the negative real axis [3, 30]. When the dimension of ODEs is very high, the class can be a powerful tool, compared with other stabilized explicit methods such as exponential Runge–Kutta (RK) methods. Recently, the class has played a significant role for the development of stabilized explicit methods for SDEs.

Abdulle and Cirilli [1] and Abdulle and Li [2] have proposed a family of explicit stochastic orthogonal Runge–Kutta–Chebyshev (SROCK) methods of strong order 1/2 and weak order 1 for noncommutative Stratonovich SDEs and for noncommutative Itô SDEs, respectively. As the first order RKC methods are embedded into their SROCK methods, the SROCK methods have extended mean square (MS) stability regions. Komori and Burrage [21] have proposed SROCK methods of weak order 2 for noncommutative Stratonovich SDEs. The second order orthogonal RKC methods proposed in [3] are embedded into the SROCK methods from [21]. We will refer to the orthogonal RKC methods as the standard ROCK2 methods. The SROCK methods in [21] have the advantage that the stability region is large along the negative real axis, but their stability region is not very wide. In order to overcome this drawback, Abdulle, Vilmart, and Zygalakis [4] have proposed a new family of weak second order SROCK methods for noncommutative Itô SDEs. We call them SROCK2 methods throughout the present paper. In the SROCK2 methods, a new family of second order orthogonal RKC methods is embedded, and it has a real parameter \(\alpha \). We will refer to them as the ROCK2 methods with \(\alpha \). The ROCK2 methods with \(\alpha =1\) reduce to the standard ROCK2 methods.

Incidentally, there is another suitable class of explicit methods for semilinear problems that have stiffness in the linear part as opposed to the nonlinear part. One such class of methods for semilinear ODEs is the family of explicit exponential RK methods [15,16,17]. These methods have been recently developed for SDEs [9,10,11,12, 14, 22]. Some exponential RK methods have been proposed to cope with highly oscillatory problems [9, 12]. In [23], the authors have proposed weak second order stochastic exponential RK methods for noncommutative Itô SDEs with a semilinear drift term, and showed the superiority of stochastic exponential RK methods to SROCK2 methods in terms of computational accuracy for relatively large step size in numerical experiments. This fact reduces the advantage of SROCK2 methods against exponential RK methods in high-dimensional problems.

In the present paper, we propose the split SROCK2 methods, which not only are explicit and stabilized, but also have high computational accuracy for relatively large step size. Our extention for the SROCK2 methods is very helpful to remove a step size restriction that the SROCK2 methods suffer from for computational accuracy in high-dimensional SDEs. In Sect. 2 we will briefly introduce the derivative-free Milstein–Talay (DFMT) method. In Sect. 3 we will derive the split SROCK2 methods and will give their error analysis. In Sect. 4 we will state other stabilized explicit methods based on the ROCK2 methods with \(\alpha \), as well as stochastic exponential RK methods. In addition, we will deal with the implementation issues of Krylov subspace projection techniques for matrix exponential functions. In Sect. 5 we will present numerical results and in Sect. 6 our conclusions.

2 Weak Second Order Stochastic RK Methods

First of all, We briefly introduce the definition of weak order of convergence and the DFMT method of weak order 2 [4]. We are concerned with the autonomous SDE

where \(t\in [0,T]\) and where \({\varvec{f}},{\varvec{g}}_{j}\), \(j=1,2,\ldots ,m\), are \({\mathbb {R}}^{d}\)-valued functions on \({\mathbb {R}}^{d}\), the \(W_{j}(t)\), \(j=1,2,\ldots ,m\), are independent Wiener processes, and \({\varvec{y}}_{0}\) is independent of \(W_{j}(t)-W_{j}(0)\) [7, p. 100]. In order to consider weak q-th order approximations to its solution, we make the assumptions on (1) that all moments of the initial value \({\varvec{y}}_{0}\) exist and \({\varvec{f}},{\varvec{g}}_{j}\), \(j=1,2,\ldots ,m\), are Lipschitz continuous with all their components belonging to \(C_{P}^{2(q+1)}({\mathbb {R}}^{d}, {\mathbb {R}})\). As usual, \(C_{P}^{L}({\mathbb {R}}^{d}, {\mathbb {R}})\) denotes the family of L times continuously differentiable real-valued functions on \({\mathbb {R}}^{d}\), whose partial derivatives of order less than or equal to L have polynomial growth [20, p. 474]. Let \({\varvec{y}}_{n}\) denote a discrete approximation to the solution \({\varvec{y}}(t_{n})\) of (1) for an equidistant grid point \(t_{n}{\mathop {=}\limits ^{{\textrm{def}}}}nh\) (\(n=1,2,\ldots ,M\)) with step size \(h=T/M<1\) (M is a positive integer). Then we define the weak convergence of order q as follows [20, p. 327].

Definition 1

When a numerical method gives discrete approximations \({\varvec{y}}_{n}\), \(n=1,2,\ldots ,M\), we say that the method is of weak (global) order q if for all \(G \in C_{P}^{2(q+1)}({\mathbb {R}}^{d}, {\mathbb {R}})\), constants \(C>0\) (independent of \(h\) \()\) and \(\delta _{0}>0\) exist such that

An effective numerical method of weak order 2, the DFMT method, has been proposed [4]:

where

and where the \(\chi _{j}\) and \(\xi _{j}\), \(j=1,2,\ldots ,m\), are discrete random variables satisfying \(P(\chi _{j}=\pm 1)=1/2\), \(P(\xi _{j}=\pm \sqrt{3})=1/6\), and \(P(\xi _{j}=0)=2/3\), and the \(\zeta _{kj}\), \(j,k=1,2,\ldots ,m\), are given by

Based on the following well-known theorem proposed by Milstein [25, 26], the proof of the weak order of convergence is obtained for (2). For details, see [4].

Theorem 1

Suppose that the numerical solutions satisfy the following conditions:

-

(1)

for a sufficiently large \(r\in {\mathbb {N}}\), the moments \(E[\Vert {\varvec{y}}_{n}\Vert ^{2r}]\) exist and are uniformly bounded with respect to M and \(n=0,1,\ldots ,M\);

-

(2)

for all \(G \in C_{P}^{2(q+1)}({\mathbb {R}}^{d}, {\mathbb {R}})\), the local error estimation

$$\begin{aligned} \left| E[G({\varvec{y}}(t_{n+1}))]-E[G({\varvec{y}}_{n+1})] \right| \le |K({\varvec{y}}_{n})|h^{q+1} \end{aligned}$$holds if \({\varvec{y}}(t_{n})={\varvec{y}}_{n}\), where \(K\in C_{P}^{0}({\mathbb {R}}^{d}, {\mathbb {R}})\).

Then, the method that gives \({\varvec{y}}_{n}\), \(n=1,2,\ldots ,M\), is of weak (global) order q.

Remark 1

If (1) has diagonal noise [20, p. 348], that is, \(d=m\) and

are satisfied, then we have \(\sum _{j=1}^{d}{\varvec{g}}_{j}({\varvec{y}})\xi _{j}= [ g_{11}(y_{1})\xi _{1} \ g_{22}(y_{2})\xi _{2},\ldots ,\ g_{dd}(y_{d})\xi _{d} ]^{\top }\), where \(g_{jj}\) and \(y_{j}\), \(j=1,2,\ldots ,d\), denote the j-th component of \({\varvec{g}}_{j}\) and \({\varvec{y}}\), respectively. Similarly, \({\varvec{H}}({\varvec{y}})\) is a d-dimensional vector whose j-th component is

and \(\tilde{{\varvec{H}}}({\varvec{y}},{\varvec{z}})\) is a d-dimensional vector whose j-th component is

If \({\varvec{g}}_{j}\), \(j=1,2,\ldots ,m\), vanish in (1), then the problem is an ODE. Thus, we give a brief introduction to the ROCK2 methods with a free parameter \(\alpha \) for ODEs [3]. Originally, the standard ROCK2 methods have been proposed by Abdulle and Medovikov [3]. The parameter values of the methods are given in a siteFootnote 1 on the Internet. Abdulle, Vilmart, and Zygalakis [4] have extended the standard ROCK2 methods to the following ROCK2 methods with a free parameter \(\alpha \):

Here, \(\theta _{\alpha }{\mathop {=}\limits ^{{\textrm{def}}}}(1-\alpha )/2+\alpha \theta \), \(\tau _{\alpha }{\mathop {=}\limits ^{{\textrm{def}}}}(1-\alpha )^{2}/2+2\alpha (1-\alpha )\theta +\alpha ^{2}\tau \), and the \(\mu _{i},\kappa _{i},\theta \), and \(\tau \) are the same constants as in the standard s-stage ROCK2 method. This method achieves order 2 for ODEs regardless of the value of \(\alpha \).

3 Novel Stabilized Explicit Methods of Weak Order 2

We want explicit methods to inherit preferable stability properties from (3) by involving its stabilization procedures, while keeping weak order 2 for any \(\alpha \). In order to achieve this, we propose to utilize a splitting technique. As a result, we construct novel stabilized explicit methods of weak order 2, which have not only good stability properties, but also high precision in the weak sense.

3.1 Preliminary

When we consider deriving a new method of weak order 2 based on the DFMT method, the following lemma proposed in [23] can be a useful tool.

Lemma 1

For an approximate solution \({\varvec{y}}_{n}\), let \({\varvec{y}}_{n+1}\) be given by (2) and \(\hat{{\varvec{y}}}_{n+1}\) be defined by

Here, we assume that the intermediate values \(\tilde{{\varvec{y}}}_{n+1}\) and \({\varvec{Y}}_{i}, i=1,2,\ldots ,5\), have no random variable and satisfy \({\varvec{Y}}_{4}={\varvec{y}}_{n}+(h/2){\varvec{f}} \left( {\varvec{y}}_{n} \right) +O \left( h^{2} \right) \), \({\varvec{Y}}_{i}={\varvec{y}}_{n}+O(h),\ i=1,2,3,5\), and

(Note that the symbol \(O(h^{p})\) represents terms \({\varvec{x}}\) such that \(\Vert {\varvec{x}}\Vert \le |K({\varvec{y}}_{n})|h^{p}\) for a \(K\in C^{0}_{P}({\mathbb {R}}^{d},{\mathbb {R}})\) and a small \(h>0.)\) Then, for all \(G\in C_{P}^{r}({\mathbb {R}}^{d},{\mathbb {R}})\) \((r\ge 3)\),

The following lemma gives a splitting technique which does not violate the weak second order of convergence. A related theorem was originally proposed in [23]. Here, we write part of the statement in the theorem with an appropriate notation for the present paper.

Lemma 2

For an approximate solution \({\varvec{y}}_{n}\), let \({\varvec{y}}_{n+1}\) be given by (2) and \(\hat{{\varvec{y}}}_{n+1}\) be defined by

where \({\varvec{\varPhi }}_{h}\) is the DFMT method for SDEs given by making the drift term zero, that is,

and where \({\varvec{\varPsi }}_{h}\) denotes a numerical method that at least satisfies

Then, for all \(G\in C_{P}^{r}({\mathbb {R}}^{d},{\mathbb {R}})\) \((r\ge 3)\)

3.2 An Extended Class of the Stabilized Explicit Methods

Now, let us put all ideas mentioned so far together and organize them in a sophisticated way to obtain a new class of the stabilized explicit methods. Then, we propose

We name the methods (7) in our extended class as the split SROCK2 methods. In what follows, we will call them by this name.

Theorem 2

Suppose that all moments of the initial value \({\varvec{y}}_{0}\) exist and \({\varvec{f}},{\varvec{g}}_{j}\), \(j=1,2,\ldots ,m\), are Lipschitz continuous with all their components belonging to \(C_{P}^{6}({\mathbb {R}}^{d}, {\mathbb {R}})\) in (1). Then, (7) is of weak order 2 for (1).

Proof

We begin with

where \({\varvec{\varPhi }}_{h}\) is given in (6) and \({\varvec{\varPsi }}_{h}({\varvec{y}}_{n})\) denotes \({\varvec{y}}_{n+1}\) given in (3). As mentioned after (3), since (3) achieves order 2 for ODEs regardless of the value of \(\alpha \), its local error is of order 3 for ODEs. Due to Lemma 2, then (8) clearly has a local error of weak order 3. In order to see that the difference between (8) and (7) is of weak order 3, let us show that the difference between \({\varvec{\varPhi }}_{h}\Big ({\varvec{\varPsi }}_{\frac{h}{2}}({\varvec{y}}_{n})\Big )\) and \({\varvec{K}}^{*}_{s}\) is of weak order 3. When we denote \({\varvec{\varPsi }}_{\frac{h}{2}}\big ({\varvec{y}}_{n}\big )\) by \(\tilde{{\varvec{\varPsi }}}\), we can rewrite these expressions as

From (7) we obtain the estimates

and

where \({\varvec{a}}=(\theta _{\alpha }-1/4){\varvec{f}}({\varvec{y}}_{n}) +(1/4){\varvec{f}}({\varvec{y}}_{n})\) and \({\varvec{b}}=(1/4)\tau _{\alpha }{\varvec{f}}^{\prime }({\varvec{y}}_{n}) {\varvec{f}}({\varvec{y}}_{n})\). Since we can rewrite \({\varvec{H}}({\varvec{Y}})\) as

we have

where \({\varvec{r}}_{1} =\sum _{j,k=1}^{m} \left\{ {\varvec{g}}_{j}^{\prime \prime } \left( {\varvec{y}}_{n} \right) \left[ {\varvec{a}},{\varvec{g}}_{k} \left( {\varvec{y}}_{n} \right) \right] +{\varvec{g}}_{j}^{\prime } \left( {\varvec{y}}_{n} \right) {\varvec{g}}_{k}^{\prime } \left( {\varvec{y}}_{n} \right) {\varvec{a}} \right\} \zeta _{kj}\). Here, we use the notation \({\varvec{g}}_{j}^{\prime \prime }({\varvec{y}}) [\cdot ,\cdot ]\) for the second derivative (a symmetric bilinear form) of \({\varvec{g}}_{j}\) at \({\varvec{y}}\). Similarly, since we can rewrite \(\tilde{{\varvec{H}}}({\varvec{Y}}_{1},{\varvec{Y}}_{2})\) as

we have \(\tilde{{\varvec{H}}}(\tilde{{\varvec{\varPsi }}},\tilde{{\varvec{\varPsi }}}) -{\varvec{H}}(\hat{{\varvec{K}}}_{s-1},{\varvec{K}}_{s-2}) =h^{5/2}{\varvec{r}}_{2}+O(h^{3})\), where

Here, we use the notation \({\varvec{g}}_{j}^{(4)}({\varvec{y}}) [\cdot ,\cdot ,\cdot ,\cdot ]\) for the fourth derivative (a symmetric tetra-linear form) of \({\varvec{g}}_{j}\) at \({\varvec{y}}\). Thus, from \({\varvec{\varPhi }}_{h}\Big ({\varvec{\varPsi }}_{\frac{h}{2}}({\varvec{y}}_{n})\Big ) -{\varvec{K}}^{*}_{s} =h^{2}{\varvec{r}}_{1}+h^{5/2}{\varvec{r}}_{2}+O(h^{3})\), we have

Consequently, we obtain \(E[G\big ({\varvec{\varPhi }}_{h}\big ({\varvec{\varPsi }}_{\frac{h}{2}}({\varvec{y}}_{n})\big )\big )] -E[G({\varvec{K}}^{*}_{s})]=O(h^{3})\) since \(E[{\varvec{r}}_{1}]=E[{\varvec{r}}_{2}] =E[\xi _{j}{\varvec{r}}_{1}]=0\quad (j=1,2,\ldots ,m)\).

As a sufficient condition for (1) in Theorem 1, it is known that the following two inequalities hold for all sufficiently small \(h>0\):

where C is a positive constant and \(X_{n}\) is a random variable that has moments of all orders [26, p. 102]. The smoothness and global Lipschitzness of \({\varvec{g}}_{j}\) \((j=1,2,\ldots ,m)\) imply \(\Vert {\varvec{g}}_{j}^{\prime }({\varvec{y}}){\varvec{g}}_{k}({\varvec{y}}) \Vert \le C(1+\Vert {\varvec{y}}\Vert )\) for a constant \(C>0\), whereas the global Lipschitzness implies \(\Vert {\varvec{g}}_{j}({\varvec{y}})\Vert \le C(1+\Vert {\varvec{y}}\Vert )\). From these facts as well as (9) and

for constants \(C_{1},C_{2}>0\), we can see that the two inequalities requested above hold for (7). Consequently, (7) is of weak order 2 for (1) by Theorem 1. \(\square \)

3.3 Stabilized Methods with High Precision

As we have seen above, the split SROCK2 methods achieve weak order 2 for any \(\alpha \). In this section we decide an appropriate value for the parameter \(\alpha \) on the basis of a linear error analysis.

Let us consider the linear scalar SDE given by

where \(y_{0}\ne 0\) with probability one (w.p.1) and \(\lambda ,\sigma \in {\mathbb {R}}\). When a one-step method with a step size h is applied to (10), we generally have \(y_{n+1}=R \left( h,\lambda ,\sigma ,{\varvec{\eta }} \right) y_{n}\), where R is an amplification factor and \({\varvec{\eta }}\) is a vector whose components are random variables appearing in the method. In [23], the authors have considered the following errors of R for a method with \(h=T_{0}/2^{k}\) (k is a positive integer):

where \({\bar{R}}(\lambda h){\mathop {=}\limits ^{{\textrm{def}}}}E[R]\) and \({\hat{R}}(\lambda h,\sigma ^{2}h){\mathop {=}\limits ^{{\textrm{def}}}}E[ {R^{2}} ]\). We investigate these errors for the split SROCK2 methods (7).

If we apply (7) to (10), then we obtain

where \(P_{0}(z)= 1\), \(P_{1}(z) = 1+\mu _{1}z\), and \(P_{i}(z)=(1+\kappa _{i}+\mu _{i}z)P_{i-1}(z)-\kappa _{i}P_{i-2}(z)\), \(i=2,3,\ldots ,s\). Thus, we have

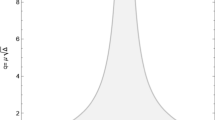

Suppose that the solution to (10) is asymptotically stable in the MS. Then \(2\lambda +\sigma ^{2}<0\) holds. Let us define r by \(\sigma ^{2}/\lambda \) and consider the case of \(-2<r\le 0\) in what follows. When an \(\alpha >0\) is given to (7), for each \(r\in (-2,0]\) \(\hat{{\mathscr {E}}}(0)\) oscillates when \(\lambda T_{0}\) moves in an interval [l(r), 0] (see Figure 1), where l(r) is a negative number such that \({\hat{R}}(l(r),rl(r))=1\).

If we choose \(r_{0}\in (-2,0]\), then

gives a uniform error of the second moment of the amplification factor for the numerical method in \((l(r),0)\times [r_{0},0]\). We decide the value of \(\alpha \), say \(\hat{\alpha }\), such that the uniform error for a given \(\hat{\alpha }\) becomes equal to

Here, note that if \(\alpha <1\), then the good stability properties of (3) themselves are violated.

As seen in the expression of \(\hat{{\mathscr {E}}}(k)\), we cannot expect that methods based on (3) including other methods introduced later, have a small error \(\hat{{\mathscr {E}}}(k)\) in general for a small \(r\in (-2,0]\), which leads to a large \(\sigma ^{2}=r\lambda >0\). For this, we set \(r_{0}\) at \(-1/2\) and seek an optimal value of \(\alpha \) for (7) with the stage number s. The reason why we have chosen \(r_{0}=-1/2\), not \(-2\), will also be mentioned in detail later. For example, when \(s=6\), we have \(\alpha =2.73\) as an optimal value for (7). For other values of s, see Table 1. Figure 1 shows the errors of (7) with \(\alpha =2.73\). Local maxima and local minima of the errors are indicated by points. In the right-hand plot, we set \(r=-1/2\) or 0. Note that \(\hat{{\mathscr {E}}}(k)\) depends on r, but \(\bar{{\mathscr {E}}}(k)\) does not.

Let us investigate the errors of (7) with optimal \(\alpha \) whose value is given in Table 1. Table 2 gives (12), that is, the uniform errors of the methods with \(h=T_{0}\). The notation \({\tilde{l}}_{s}\) denotes the abscissa of the local minimal point of \(\hat{{\mathscr {E}}}(0)\) that is located in the most left among the local minimal points of \(\hat{{\mathscr {E}}}(0)\). This gives auxiliary information about stability properties for the methods. From the change of values of \({\tilde{l}}_{s}\), we can observe that the stability interval becomes longer as the stage number s increases. This is because the split SROCK2 methods inherit the stability properties of (3). The computational cost for the stabilization procedures depends on the number of evaluations on \({\varvec{f}}\). The split SROCK2 method with s stages evaluates it 2s times in one step. The table gives \(|{\tilde{l}}_{s}|/(2s)^{2}\), which indicates the efficiency of the stabilization procedures. From the table, we can see that the value of \(|{\tilde{l}}_{s}|/(2s)^{2}\) is approximately 0.149 when s is large.

The first term in \(\bar{{\mathscr {E}}}(0)\) is equal to the first term in the right-hand side of (11) if \(h=T_{0}\). In the term, the polynomial \(\{1+\theta _{\alpha }\lambda h+\tau _{\alpha }(\lambda h/2)^{2} \} P_{s-2}(\alpha \lambda h/2)\) for \(h=T_{0}\) approximates \({\textrm{e}}^{\lambda T_{0}/2}\), and as a result, its square approximates \({\textrm{e}}^{\lambda T_{0}}\) in the interval \([l(-1/2),0]\) such as the error is shown in the left-hand plot of Fig. 1.

Also in \(\hat{{\mathscr {E}}}(0)\), the square approximates \({\textrm{e}}^{\lambda T_{0}}\) in the interval. In addition, the other polynomials \((1+\theta _{\alpha }\lambda h) P_{s-2}(\alpha \lambda h/2)\) and \(P_{s-2}(\alpha \lambda h/2)\) for \(h=T_{0}\) approximate \({\textrm{e}}^{\lambda T_{0}/2}\) less precisely than the above mentioned polynomial when \(|\lambda T_{0}|<<1\), but their squares serve together with the square of the above mentioned polynomial to overcome the increase by \(\sigma ^{2} h\) and \((\sigma ^{2}h)^{2}/2\) for \(h=T_{0}\). As a result, the error \(\hat{{\mathscr {E}}}(0)\) behaves as shown in the right-hand plot of Fig. 1.

Remark 2

The naïve split method (8) gives \({\hat{R}}\) as follows:

Although the differences from \({\hat{R}}\) for (7) are only the replacements of \((1+\theta _{\alpha }\lambda h)^{2}\) or 1 with \(\{1+\theta _{\alpha }\lambda h+\tau _{\alpha } (\lambda h/2)^{2}\}^{2}\), the replacements strengthen the increase in \({\hat{R}}\) when \(|\lambda h|\) is large. Thus, (8) is also of weak order 2, but unfortunately it cannot have good stability properties especially when s is large. In addition, note that making a change in one \({\varvec{\varPsi }}_{h/2}\) in (8) leads to a replacement of \(\{1+\theta _{\alpha }\lambda h+\tau _{\alpha } (\lambda h/2)^{2}\}^{2}\) \(\times \{P_{s-2} (\alpha \lambda h/2) \}^{2}\) in \({\hat{R}}\).

4 Other Stabilized Explicit Methods of Weak Order 2

As competitors to the split SROCK2 methods, we first state other stabilized explicit methods based on (3). Next, we introduce an exponential RK method of weak order 2 for SDEs [23]. When the dimension of the SDEs is very large, the exponential RK method needs techniques in order to calculate the product of a matrix exponential function and a vector efficiently. In the end of the section, we consider the implementation of Krylov subspace projection techniques related to a matrix exponential function \(\varphi _{2}\).

4.1 Other Methods Based on the ROCK2 Methods with a Parameter \(\alpha \)

By combining (2) and (3), Abdulle, Vilmart, and Zygalakis [4] have proposed the following class of methods:

where \(K_{i}\), \(i=s-3,s-2\), are the same as those in (3), and have determined the value of \(\alpha \) such that \({\varvec{K}}_{s-1}={\varvec{y}}_{n}+(h/2){\varvec{f}} ({\varvec{y}}_{n})+O(h^{2})\) holds. For the value of \(\alpha \), (13) achieves weak order 2. Note Lemma 1. The methods are called the SROCK2 methods. When (13) is applied to (10), we have

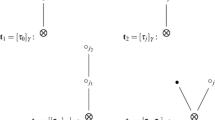

For a given s, (7) contains almost twice as many computational procedures as (13). For this, as a competitor to (7) with \(h=T_{0}\), we choose (13) with \(h=T_{0}/2\) when the both methods have the same stage number s. Figure 2 shows the errors of (13) when \(s=6\) and \(k=1\) in a similar manner to Fig. 1. From the comparison of Figs. 1 and 2, we have the following remarks. First, the split SROCK2 method with \(h=T_{0}\) can achieve very small errors, compared with the SROCK2 method with \(h=T_{0}/2\). Second, the SROCK2 method with \(h=T_{0}/2\) has a much longer stability interval than the split SROCK2 method with \(h=T_{0}\). Also for other stages, this stability property is a strength of the SROCK2 methods, whereas low precision is a weakness of them.

Incidentally, Table 3 gives the uniform errors of the SROCK2 methods with \(h=T_{0}\). As the methods are of weak order 2, the replacement of \(h=T_{0}\) with \(h=T_{0}/2\) is expected to decrease errors by a factor of 1/4 approximately. Even if we take this into account, we can say that the split SROCK2 methods with \(h=T_{0}\) have smaller errors than the SROCK2 methods with \(h=T_{0}/2\). Here, note that the value of errors in the table indicates a local maximum for \(\hat{{\mathscr {E}}}(0)\), not for \(\hat{{\mathscr {E}}}(1)\). The SROCK2 method with s stages evaluates the function \({\varvec{f}}\) \(s+1\) times in one step. The table gives \(|{\tilde{l}}_{s}|/(s+1)^{2}\), which indicates the efficiency of the stabilization procedures. From the table, we can see that the value of \(|{\tilde{l}}_{s}|/(s+1)^{2}\) is approximately 0.428 when s is large.

The first term in \(\bar{{\mathscr {E}}}(1)\) for (13) is equal to the first term in the right-hand side of (14) if \(h=T_{0}/2\). Thus, \(\bar{{\mathscr {E}}}(0)\) for (7) and \(\bar{{\mathscr {E}}}(1)\) for (13) have the same expression except the value of \(\alpha \). The difference in \(\alpha \) makes the difference of the left-hand plots in Figs. 1 and 2. In addition, in \(\hat{{\mathscr {E}}}(1)\) for (13), the polynomials \(P_{s-1}(\alpha \lambda h) +\lambda hP_{s-2}(\alpha \lambda h)/2\) and \(P_{s}(\alpha \lambda h)\) for \(h=T_{0}/2\) approximate \({\textrm{e}}^{\lambda T_{0}/2}\), and the fourth powers of them serve to overcome the increase by \((\sigma ^{2} h)^{2}\) and \((\sigma ^{2}h)^{4}/4\) for \(h=T_{0}/2\). Remember that \(\hat{{\mathscr {E}}}(0)\) for (7) has lower order terms with respect to \(\sigma ^{2}h\). This difference as well as the difference in \(\alpha \) makes the difference of the right-hand plots in Figs. 1 and 2.

In Fig. 2, the following are also remarkable. When \(\lambda T_{0}\) is sufficiently small, the first term is dominant over the second term \({\textrm{e}}^{\lambda T_{0}}\) in \(\bar{{\mathscr {E}}}(k)\). For example, since \({\textrm{e}}^{-3}\approx 5.0\times 10^{-2}\), in the left-hand plot of Fig. 2 the curve shows almost only the first term in \(\bar{{\mathscr {E}}}(1)\) when \(\lambda T_{0}<-3\). Similarly, when \(\lambda T_{0}\) is sufficiently small and r is close to 0, the first term is dominant over the second term \({\textrm{e}}^{(2\lambda +\sigma ^{2}) T_{0}}={\textrm{e}}^{(2+r)\lambda T_{0}}\) in \(\hat{{\mathscr {E}}}(k)\). For example, since \({\textrm{e}}^{(2+r)\lambda T_{0}}={\textrm{e}}^{-6}\approx 2.5\times 10^{-3}\) if \(r=-1/2\) and \(\lambda T_{0}=-4\), in the right-hand plot of Fig. 2 the curve shows almost only the first term in \(\hat{{\mathscr {E}}}(1)\) when \(\lambda T_{0}<-4\).

Remark 3

On the basis of the idea of dense output in RK methods, due to Lemma 1 we can obtain another class of weak second order methods based on the ROCK2 methods with a free parameter \(\alpha \). However, unfortunately the methods lead to much worse precision than the split SROCK2 methods and much worse stability properties than the SROCK2 methods.

We are concerned with weak second order methods, but there is a class of excellent stabilized explicit methods of weak order one [5]. Let us call them the SK-ROCK methods and give a brief remark on the methods.

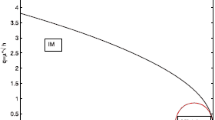

Remark 4

The SK-ROCK methods have a free parameter \(\eta \) to obtain good stability properties. In fact, they have enjoyed excellent stability properties in some numerical experiments [5], when the parameter has a typical value, \(\eta =0.05\). However, they suffer from the error of the amplification factor. When \(s=6\) and \(\eta =0.05\), we obtain the left-hand plot in Fig. 3. Even if we increase the value of \(\eta \), they still suffer from the error, especially for large \(\lambda T_{0}<0\). For example, see the right-hand plot in the figure.

4.2 Exponential RK Methods and the Implementation of Krylov Subspace Projection Techniques for Matrix Exponential Functions

If the drift term is given as a semilinear drift term \(A{\varvec{y}}+{\varvec{f}}_{0}({\varvec{y}})\) in (1), where A is a \(d\times d\) matrix and \(f_{0}\) is an \({\mathbb {R}}^{d}\)-valued nonlinear function on \({\mathbb {R}}^{d}\), then we can apply the following stochastic exponential RK method to (1):

where

The matrix exponential functions are defined by

where Z, I stand for a \(d\times d\) matrix, the \(d\times d\) identity matrix, respectively. As the method is of weak order 2 and deterministic order 3 [23], let us call this the SERKW2D3 method in what follows.

If A is diagonalizable and its dimension is not large, for example, 100, then the matrix exponential functions in the method are easy to calculate through a similarity transformation based on eigenvectors [27, p. 23]. In addition, once they are calculated for a given step size h, we can use them for all trajectories. Thus, in this case the SERKW2D3 method can be an effective and powerful method. However, if the dimension of A is very large, the situation drastically changes. The computation based on the similarity transformation is not useful. We need another computational method for the matrix exponential functions.

For a large sparse matrix A, let us suppose that A is symmetric since we can rewrite f as \((A+A^{\top })/2+{\varvec{f}}_{0}({\varvec{y}})+(A-A^{\top })/2\). The symmetric property will lead to computational savings for a matrix exponential function times a vector.

In order to calculate \(Y_{3}\) efficiently and precisely, let us derive a recursive formula for \(\varphi _{2}\). From the definition of \(\varphi _{1}\) and \(\varphi _{2}\), we have

for \(t,\varDelta t> 0\), where \({\varvec{q}}={\varvec{f}}_{0}(Y_{2})-{\varvec{f}}_{0}(y_{n})\). Similarly, for any \({\varvec{w}}\in {\mathbb {R}}^{d}\),

If we set \({\varvec{w}}=t\varphi _{2}(tA){\varvec{q}}\), then, from the above equalities we have

We can calculate \(2h\varphi _{2} ((h/2)A) {\varvec{q}}\) firstly and \(2h\varphi _{2} (hA) {\varvec{q}}\) secondly in \(Y_{3}\), using this relationship repeatedly. Note that the \(\varDelta t\) does not always need to be fixed in the calculations of \(Y_{3}\).

Our next concern is to calculate \((\varDelta t)^{2}\varphi _{2}(\varDelta t A)(A{\varvec{w}}+{\varvec{q}})\) and \(\varDelta t\varphi _{1}(\varDelta t A)(A{\varvec{w}}+{\varvec{q}})\). For this, we adopt Krylov subspace projection techniques. Let us set \({\varvec{u}}=A {\varvec{w}}+{\varvec{q}}\) and denote by \(V_{\rho +1}=[{\varvec{v}}_{1},{\varvec{v}}_{2},\ldots ,{\varvec{v}}_{\rho +1}]\) and \(H_{\rho }=[h_{i,j}]\), the orthonormal basis of the Krylov subspace Span \(\{{\varvec{u}},A{\varvec{u}},\ldots ,A^{\rho }{\varvec{u}}\}\) and the \(\rho \)-dimensional symmetric tridiagonal matrix resulting from Lanczos process, where \(\rho \) is a small positive integer compared to d. For our purpose, Theorem 2 in [28] gives the following approximation

for a sufficiently small \(\varDelta t>0\), where \(\beta =\Vert {\varvec{u}}\Vert \), \(h_{\rho +1,\rho }=\Vert A{\varvec{v}}_{\rho }-\sum _{i=\rho -1}^{\rho }h_{i,\rho }{\varvec{v}}_{i}\Vert \), \({\tilde{\varPhi }}_{i,\rho }=(\varDelta t)^{i}\varphi _{i}(\varDelta tH_{\rho })\), and \({\varvec{e}}_{i}\) denotes the \(\rho \)-dimensional unit vector whose i-th component is 1. Note that if the minimal degree of \({\varvec{u}}\) is some integer l \((\le \rho )\), then an invariant subspace is found and the above approximation is replaced with the equality \((\varDelta t)^{2}\varphi _{2}(\varDelta t A){\varvec{u}} =\beta V_{l}{\tilde{\varPhi }}_{2,l}\tilde{{\varvec{e}}}_{1}\), where \(\tilde{{\varvec{e}}}_{1}\) is the l-dimensional unit vector whose 1st component is 1 [28].

Now, our next concern is to calculate \({\tilde{\varPhi }}_{2,\rho }{\varvec{e}}_{1}\) and \({\tilde{\varPhi }}_{3,\rho }{\varvec{e}}_{1}\) efficiently. Theorem 1 in [28] gives the following equality:

where \({\varvec{0}}_{\rho }\) denotes the \(\rho \)-dimensional zero column vector and

This shows that \(\exp (\varDelta t{\tilde{H}}_{\rho +4})\) gives \({\tilde{\varPhi }}_{2,\rho }{\varvec{e}}_{1}\) and \({\tilde{\varPhi }}_{3,\rho }{\varvec{e}}_{1}\), and it is also helpful for us to determine such a small \(\varDelta t\) that (16) is valid.

The procedure to determine \(\varDelta t\) is given as follows [28]. When we set two errors by \( { err1}=\beta |h_{\rho +1,\rho }{\varvec{e}}_{\rho }^{\top }{\tilde{\varPhi }}_{3,\rho }{\varvec{e}}_{1}|\) and \( { err2}=\beta |h_{\rho +1,\rho }{\varvec{e}}_{\rho }^{\top }{\tilde{\varPhi }}_{4,\rho }{\varvec{e}}_{1}| \Vert A{\varvec{v}}_{\rho +1}\Vert \) for an initial \(\varDelta t\), we have an error estimation \(err_{loc}\) by Algorithm 2 in [28]. We repeatedly carry out a procedure to obtain an acceptable step size \(\varDelta t\). For details, see [28]. Incidentally, in our numerical experiments we calculate \(\exp (\varDelta t{\tilde{H}}_{\rho +4})\) in (17) by a method based on Padé approximations [28].

In (15), we can calculate the product of \((h/3)\varphi _{2}(hA)\) and a vector in a similar way. We can also calculate the product of the matrix exponential or the matrix exponential function \(\varphi _{1}\) and a vector in a similar but simpler way.

Remark 5

For the product of the matrix exponential and a vector in the case that the matrix is of moderate dimension and is not sparse, some researchers have proposed more efficient algorithms than the Krylov subspace projection techniques. See [6, 18] and references therein.

5 Numerical Experiments

We derived the split SROCK2 methods of weak order 2 that are expected to achieve better precision than the SROCK2 methods for SDEs with not very large noise. In order to confirm the performance of the methods, we investigate the expectation and the second moment of the solution of SDEs in our numerical experiments.Footnote 2 In all numerical experiments, we will use the Mersenne twister algorithm [24] to generate pseudorandom numbers.

The first example is the following 2-dimensional damped nonlinear Kubo oscillator [11, 32]

where \(t\in [0,1]\),

\(q_{1}({\varvec{y}})=0\), \(q_{2}({\varvec{y}})=\frac{1}{3}(y_{1}+y_{2})^{3}\), and \(\lambda ,\sigma _{j},\alpha ,\beta _{j}\in {\mathbb {R}}\). As we do not know the exact expectation and second moment of the solution, let us seek approximations to them by the SERKW2D3 method with \(h=2^{-7}\). We refer to these approximations instead of the exact expectation and second moment. As another competitor, we add the SSDFMT method, which is a stochastic Strang splitting method of weak order 2 [23].

We set \(\lambda =2\), \(\sigma _{1}=-\alpha =1/2\), \(\sigma _{2}=-\beta _{1}=1/5\), \(\beta _{2}=-1/10\) as well as \({\varvec{y}}_{0}=[1/2\ 1/2]^{\top }\) [31], and simulate \(1024\times 10^{6}\) independent trajectories for a given h, and seek a numerical approximation to the expectation of \({\varvec{y}}(1)\) or to the second moment of each element of \({\varvec{y}}(1)\), that is, \(\left[ E[(y_{1}(1))^{2}]\ \ E[(y_{2}(1))^{2}] \right] ^{\top }\). The results are indicated in Fig. 4. As the solution is a vector, the Euclidean norm is used. The solid and long-dashed lines denote the split SROCK2 and SROCK2 methods with \(s=3\), respectively, whereas the dashed and long-dash-dotted lines denote the SSDFMT and SERKW2D3 methods. Here and in what follows, the dotted line is a reference line with slope 2. All the methods show the theoretical order of convergence. The split SROCK2 method with \(s=3\), the SSDFMT method and the SERKW2D3 method show much smaller errors than the SROCK2 method.

Log-log plots of the relative error versus h for the expectation and second moment in (18). Solid lines: split SROCK2 (\(s=3\)); long-dashed lines: SROCK2 (\(s=3\)); dashed lines: SSDFMT; long-dash-dotted lines: SERKW2D3; dotted lines: reference line with slope 2

The second example is the following 2-dimensional linear SDE [29]

where \(t\in [0,1]\) and \(\lambda _{1},\lambda _{2},\sigma \in {\mathbb {R}}\). As the matrices in the drift and diffusion terms are not simultaneously diagonalizable if \(\lambda _{1}\ne \lambda _{2}\), the SDE cannot be transformed to decoupled scalar SDEs. Thus, the behaviour of errors is predicted to differ from the results in Sect. 3.3. As it is linear, we obtain ODEs with respect to the expectation and second moment of the solution, and we can solve them for the exact expectation and second moment.

Log-log plots of the relative error versus h for the expectation and second moment in (19). Solid lines: split SROCK2 (\(s=3,4,5,7,10\)); long-dashed lines: SROCK2 (\(s=3,4,5,7,10\)); dashed lines: SSDFMT; dotted lines: reference line with slope 2

Let us set \({\varvec{y}}_{0}=[1\ 1]^{\top }\), \(\lambda _{1}=-100\), \(\lambda _{2}=-1\), and \(\sigma =1/2\). We simulate \(1024\times 10^{6}\) independent trajectories for a given h, and seek a numerical approximation to the expectation of \({\varvec{y}}(1)\) or to the second moment of each element of \({\varvec{y}}(1)\). The results are indicated in Fig. 5. In order to solve the SDE numerically stably with reasonable cost when a step size h is given, we set the smallest stage number for each of the methods to solve it stably. As a result, we set \(s=3,4,5,7\), and 10 for the split SROCK2 and SROCK2 methods when \(h=1/32, 1/16, 1/8, 1/4\), and 1/2, respectively. As in the previous experiment, the solid, long-dashed, and dashed lines denote the split SROCK2, SROCK2, and SSDFMT methods. The results by the SERKW2D3 method are omitted since they are the same as those by the SSDFMT method.

As (19) is a linear SDE, we can predict that the two exponential methods, that is, the SERKW2D3 and SSDFMT methods perform well. In fact, their errors are very small especially in the approximation to the expectation, and the errors seem to come almost from statistical errors. The split SROCK2 methods with several stages follow after them and show smaller errors than the SROCK2 methods in the approximation to the expectation. In contrast to this, the relative error versus h for the second moment is almost the same between the split SROCK2 and the two exponential methods. In addition, the split SROCK2 methods with several stages show much smaller errors than the SROCK2 methods in the approximation to the second moment when h is large.

In this example, the two exponential methods have shown the best performance since the dimension of the SDE is small and especially the drift term has a diagonal matrix. However, when the dimension of SDEs is very large, the methods have high computational cost for matrix exponential functions unless some techniques, such as Krylov subspace projection, are provided in order to calculate them efficiently. If the SDEs come from a spatial discretization of multidimensional stochastic partial differential equations, for example, their dimension can be very large.

A multidimensional stochastic Burgers equation has been studied in [8]. As a third example, we consider the following 2-dimensional stochastic Burgers equation with zero Dirichlet boundary conditions

where \({\tilde{W}}_{i}(t,x,y)\), \(i=1,2\), are independent Brownian sheets on \([0,1/2]\times [0,1]\times [0,1]\), and where \(\gamma >0\) and \(\beta _{1},\beta _{2}\in {\mathbb {R}}\) are parameters. We discretize the space intervals by \(N+2\) equidistant points \(x_{i}\) and \(y_{k}\), \(i,k=0,1,\ldots ,N+1\), and define an \({\mathbb {R}}^{N^{2}}\)- vector valued function U(t) by \([{\tilde{u}}_{1}(t)^{\top }\ {\tilde{u}}_{2}(t)^{\top }\ \ldots \ {\tilde{u}}_{N}(t)^{\top }]\), where \({\tilde{u}}_{i}(t)=[u(t,x_{1},y_{i})\ u(t,x_{2},y_{i})\ \ldots \ u(t,x_{N},y_{i})]^{\top }\). In addition, we define another vector function V(t) similarly, by replacing \(\tilde{{\varvec{u}}}_{i}(t)\) and \({\varvec{u}}(t,\cdot ,\cdot )\) with \(\tilde{{\varvec{v}}}_{i}(t)\) and \({\varvec{v}}(t,\cdot ,\cdot )\), respectively. Then, the application of finite differences [4, 13] to (20) yields the \(2N^{2}\)-dimensional SDE with diagonal and additive noise

where \({\varvec{W}}_{1}(t),{\varvec{W}}_{2}(t)\) are \(N^{2}\)-dimensional independent standard Wiener processes, and where \(A,B_{1},B_{2}\) are \(N^{2}\times N^{2}\) matrices given in the following and vectors use \(*\) as component wise multiplication. In order to express \(A,B_{1},B_{2}\) concretely, let us introduce the notation

for \(C_{1},D,C_{2}\in {\mathbb {R}}^{k\times k}\), which can be used to give a tridiagonal matrix or a block tridiagonal matrix when \(k=1\) or \(k=N\), respectively. In (21), \(A={{\,\textrm{tridiag}\,}}(I,T,I)\), \(B_{1}={{\,\textrm{tridiag}\,}}(O,S,O)\), \(B_{2}={{\,\textrm{tridiag}\,}}(-I,O,-I)\), where \(T={{\,\textrm{tridiag}\,}}(1,-4,1)\), \(S={{\,\textrm{tridiag}\,}}(-1,0,1)\), and I and O denote the \(N\times N\) identity and zero matrices. If we use expressions in Remark 1 for (21), then \(H({\varvec{y}})\) is a \(2N^{2}\)-dimensional zero vector, and \({\tilde{H}}({\varvec{y}},{\varvec{z}})\) is a \(2N^{2}\)-dimensional vector whose j-th component is \(\beta _{1}(N+1)\sqrt{h}\xi _{j}\) \((j=1,2,\ldots ,N^{2})\) or \(\beta _{2}(N+1)\sqrt{h}\xi _{j}\) \((j=N^{2}+1,N^{2}+2,\ldots ,2N^{2})\).

In this example, let us set \(N=127\), \(\beta _{1}=\beta _{2}=1/(N+1)\), \(\gamma =1/10\), \(u_{0}(x,y)=4y(1-y)\sin (\pi x)\), and \(v_{0}(x,y)=\sin (\pi x)\sin ^{2}(2\pi y)\), and simulate 1000 independent trajectories for a given h. Then, the dimension of the SDE is 32258. In order to solve the SDE numerically stably with reasonable cost when a step size h is given, we set the smallest stage number for each of the SROCK2 and split SROCK2 methods to solve it stably. As a result, we set \(s=4,5\), and 7 for the SROCK2 methods when \(h=1/2048,1/1024\), and 1/512, respectively, whereas for the split SROCK2 method we set \(s=80\) when \(h=1/4\). We also investigate the SERKW2D3 and SSDFMT methods for \(h=1/4\). Taking computational time into account, we set \(\rho =100\) and 30 for Krylov subspace projection techniques in the SERKW2D3 and SSDFMT methods, respectively.

Approximations to the expectation of u in (21) by the split SROCK2 method with \(s=80\) for \(h=1/4\) or the SROCK2 method with \(s=7\) for \(h=1/512\) over 1000 trajectories

Approximations to the expectation and second moment of u at \(y=1/2\) over 1000 trajectories in (21). Solid line: split SROCK2 (s=80, h=1/4); dashed line: SSDFMT (h=1/4); long-dash-dotted line: SERKW2D3 (h=1/4); long-dashed line: SROCK2 (s=7, h=1/512); thick long-dashed line: SROCK2 (s=5, h=1/1024)

The results are indicated in Figs. 6 and 7. In Fig. 6, we show numerical approximations to the expectation of u(t, x, y) at \(t=1/2\). The left-hand plot shows an approximate mean by the split SROCK2 method with \(s=80\) for \(h=1/4\), whereas the right-hand plot shows an approximate mean by the SROCK2 method with \(s=7\) for \(h=1/512\). The difference between them is clearly visible. Although we have omitted plots given by the SROCK2 method with \(s=5\) for \(h=1/1024\), its difference from the left-hand plot is still visible. If we choose a smaller step size (for example, \(h=1/2048\) (\(s=4\))) for the SROCK2 method, then such a difference completely becomes invisible. These facts imply that when we approximate an expectation over not very large number of trajectories by the SROCK2 method with not sufficiently small h, the approximate mean might be largely contaminated by errors. On the other hand, we can get other plots by the SERKW2D3 and SSDFMT methods for \(h=1/4\), which are almost the same as the left-hand plot, and the difference between them is invisible. Thus, in order to check differences among all the methods in detail, we give Fig. 7. In the left-hand plot, we can see a slight difference between the split SROCK2 method for \(h=1/4\) and each of the SERKW2D3 and SSDFMT methods for \(h=1/4\), but if we set \(h=1/8\) for the SERKW2D3 and SSDFMT methods, then the difference becomes invisible. Instead of omitting approximations to E[u] given by the SROCK2 methods, we show approximations to \(E[u^{2}]\) given by them in the right-hand plot. We can see that their approximations are heavily influenced by errors. Especially, a line corresponding to the approximation by the SROCK2 method with \(s=7\) and \(h=1/512\) looks serrated.

In the left-hand plot of Fig. 6, we would like to emphasize that the smoothness does not come from the fact that for the split SROCK2 method the noise term is evaluated fewer times. In fact, we can get a smooth plot by the split SROCK2 method with \(s=28\) and \(h=1/32\). In contrast to this, the SROCK2 methods cannot solve the SDE numerically stably for a larger h than 1/32. In addition, even for \(h=1/32\), the SROCK2 methods need a larger stage number \(s=30\) than 28 to avoid a numerical explosion. Figure 8 gives a result by the SROCK2 method with \(s=30\) and \(h=1/32\). The method can avoid a numerical explosion, but it is heavily influenced by computational errors

Approximations to the expectation of u and the second moment of u at \(y=1/2\) in (21) by the SROCK2 method with \(s=30\) for \(h=1/32\) over 1000 trajectories

Remark 6

This is a typical example where the SROCK2 methods suffer from errors. Now, the eigenvalues in the linear part of the drift term are distributed in a wide interval. Thus, \(\lambda h\)’s for them are also distributed in a wide interval when a large step size h is given. As a result, the SROCK2 methods are heavily influenced by the errors due to wildly distributed \(\lambda h\)’s. This fact is suggested in Fig. 2, although the present SDE has additive noise, not multiplicative noise.

From all results in the present experiment, we can see that the split SROCK2 method for \(h=1/4\) has better precision than not only the SERKW2D3 and SSDFMT methods for \(h=1/4\), but also the SROCK2 method for \(h=1/1024\), and especially say that the SROCK2 methods suffer from precision when a sufficiently small step size such as \(h=1/2048\) is not given, even if they can solve the SDE numerically stably.

Incidentally, Table 4 indicates comparisons of computational cost for each of the SROCK2 and split SROCK2 methods in one step and one trajectory. In the table, \(n_{e}\) and \(n_{r}\) stand for the number of evaluations on d-dimensional functions in the drift or diffusion coefficients, and the number of generated pseudorandom numbers, respectively. Note that if the SDEs have diagonal noise, then \(n_{e}\) reduces to a smaller number obtained by the substitution of \(m=1\) due to Remark 1. In the above example, since the SROCK2 method has \(s=4\) and \(h=1/2048\) for the SDE with diagonal noise, the method evaluates the drift or diffusion coefficients 5120 times up to \(t=1/4\). On the other hand, the split SROCK2 method with \(s=80\) and \(h=1/4\) evaluates them merely 165 times up to \(t=1/4\). From a comparison between Tables 2 and 3, we can see that the efficiency of the stabilization procedures for the SROCK2 methods is 2.87 times higher than that for the split SROCK2 methods. However, this numerical experiment shows that the split SROCK2 method with \(s=80\) and \(h=1/4\) has 0.03 times fewer number of the function evaluations than the SROCK2 method with \(s=4\) and \(h=1/2048\), whereas they have invisible errors in the plots of E[u] and \(E[u^{2}]\).

Although we are concerned with weak second order methods, we give a brief comment on the SK-ROCK methods, corresponding to Remark 4. When \(\eta =2.0\), the SK-ROCK method with \(s=11\) and \(h=1/128\) gives the same profiles as those by the split SROCK2 method with \(s=80\) and \(h=1/4\) in Fig. 7. It is known that the SK-ROCK methods work very well for SDEs with additive noise [5]. In spite of it, this numerical experiment shows that the split SROCK2 method with \(s=80\) and \(h=1/4\) has 0.43 times fewer number of the function evaluations than the SK-ROCK method with \(s=11\) and \(h=1/128\), whereas they have invisible errors in the plots of E[u] and \(E[u^{2}]\).

We have also investigated computational time. The numerical experiments were carried out on Microsoft Windows 10 Pro 64-bit Operating System with an Intel Xeon W-2133 CPU @3.60GHz and 64GB RAM. We used Intel C and Fortran compilers in Intel oneAPI 2022. We simulated 16 batches of 1000 trajectories for each method. The average and standard deviation (SD) of time are indicated in Table 5. The split SROCK2 method clearly shows the best performance. Krylov subspace projection techniques are used in the SERKW2D3 and SSDFMT methods. As the SSDFMT method uses a smaller dimension \(\rho \) of a Krylov subspace since it does not have \(\varphi _{1}\) and \(\varphi _{2}\) functions, it recorded much shorter computational time than the SERKW2D3 method.

Incidentally, as we mentioned in Remark 5, in [18] the authors have proposed another efficient algorithm based on Taylor series for the product of the matrix exponential and a vector, and they [18] and some researchers [19] have indicated that it could work much faster than the Krylov subspace projection techniques. For this, we have tested it, which is called the expmvtay2 algorithm. As the algorithm is slightly more sensitive than the Krylov subspace projection techniques, we chose \(h=1/8\) and carried out simulations up to \(t=1/2\). On average, the SSDFMT method with the expmvtay2 algorithm took 1491.0 s (SD 4.8), and the SSDFMT method with the Krylov subspace projection techniques took 409.9 s (SD 4.1) per 1000 trajectories simulation. Thus, we can see that the Krylov subspace projection techniques are more efficient than the algorithm in this high-dimensional problem.

6 Concluding Remarks

We have derived a class of split SROCK2 methods that have a free parameter \(\alpha \) and which achieve weak order 2 for noncommutative Itô SDEs. To decide the value of \(\alpha \), we have provided a linear error analysis on one-step methods for SDEs. On the basis of the analysis, we have decided the optimal value of \(\alpha \) for each stage s such that the errors on the expectation and the second moment of the amplification factor of the methods become small if the noise term of the SDE is not very large. As a result, we have shown that the split SROCK2 methods have much smaller errors than the SROCK2 methods in the linear error analysis.

In order to check the computational accuracy and performance of the methods, we carried out three numerical experiments. Although the selection for \(\alpha \) was based on an error analysis in a linear case, the numerical experiments suggest that it is valid also in other cases. In the first experiment, the damped nonlinear Kubo oscillator was considered. The experiment confirmed that the values of \(\alpha \) we decided are appropriate for the split SROCK2 methods. In fact, the split SROCK2 method with \(s=3\) showed smaller errors than the SROCK2 method with \(s=3\). In the second experiment, we dealt with a linear SDE which cannot be transformed to decoupled scalar SDEs. The experiment showed the superiority of the split SROCK2 methods over the SROCK2 methods in the approximation to not only the expectation, but also the second moment when the step size is large. In the third experiment, we considered a multidimensional stochastic Burgers equation with a multidimensional space-time white noise, which leads to a very high-dimensional SDE with a high-dimensional, diagonal, and additive noise after finite differences. This experiment showed again the superiority of our method over the SROCK2 methods in terms of computational accuracy for relatively large time-step size. In addition, the method was superior to the stochastic exponential RK methods in terms of computational time.

A significant advantage of the methods based on the ROCK2 methods is that they can numerically stably cope with high-dimensional stiff SDEs whose drift term has eigenvalues lie near the negative real axis, by just increasing the stage number. In some types of SDEs such as the third example, however, the SROCK2 methods require a very small step size for computational accuracy, not for numerical stability. The split SROCK2 methods do not have such a step size restriction.

Finally, we should make some remarks. We have considered the case of \(-1/2\le r\le 0\) in the error analysis. If r becomes close to \(-2\), then \(\max _{l(r)<\lambda T_{0}<0}|\hat{{\mathscr {E}}}(0)|\) becomes close to 1 for the methods based on the ROCK2 methods, whereas \(\hat{{\mathscr {E}}}(0)=0\) for \(\lambda T_{0}=0\) (\(\sigma ^{2}=r\lambda \)). This means that we have to choose h such that \(|\lambda h|\) is sufficiently small for accuracy if we apply the methods in which the ROCK2 methods are embedded, to SDEs with large noise even if they are linear SDEs. This is a disadvantage of these methods, compared with other methods such as stochastic exponential RK methods. On the other hand, stochastic exponential RK methods also have a disadvantage, namely the computational cost for high-dimensional SDEs. As we have seen in Sect. 4.2, even when a step size h is large such as \(h=1/4\), we need small \(\varDelta t\)’s to calculate the products of matrix exponential functions and vectors precisely, which substantially increase the computational cost.

Data Availability

Data and program codes are available in https://github.com/yosh-komori/supplementary_info_files_2023.

Notes

For an implementation of the methods, we utilize the parameter values in a Fortran code, rectp.f, obtained from http://anmc.epfl.ch/Pdf/srock2.zip.

References

Abdulle, A., Cirilli, S.: S-ROCK: Chebyshev methods for stiff stochastic differential equations. SIAM J. Sci. Comput. 30(2), 997–1014 (2008)

Abdulle, A., Li, T.: S-ROCK methods for stiff Itô SDEs. Commun. Math. Sci. 6(4), 845–868 (2008)

Abdulle, A., Medovikov, A.A.: Second order Chebyshev methods based on orthogonal polynomials. Numer. Math. 90, 1–18 (2001)

Abdulle, A., Vilmart, G., Zygalakis, K.C.: Weak second order explicit stabilized methods for stiff stochastic differential equations. SIAM J. Sci. Comput. 35(4), A1792–A1814 (2013)

Abdulle, A., Almuslimani, I., Vilmart, G.: Optimal explicit stabilized integrator of weak order 1 for stiff and ergodic stochastic differential equations. SIAM/ASA J. Uncertain. Quantif. 6(2), 937–964 (2018)

Al-Mohy, A.H., Higham, N.J.: A new scaling and squaring algorithm for the matrix exponential. SIAM J. Matrix Anal. 31(3), 970–989 (2009)

Arnold, L.: Stochastic Differential Equations: Theory and Applications. John Wiley & Sons, New York (1974)

Brzeźniak, Z., Goldys, B., Neklyudov, M.: Multidimensional stochastic Burgers equation. SIAM J. Math. Anal. 46(1), 871–889 (2014)

Cohen, D.: On the numerical discretisation of stochastic oscillators. Math. Comput. Simul. 82(8), 1478–1495 (2012)

Cohen, D., Sigg, M.: Convergence analysis of trigonometric methods for stiff second-order stochastic differential equations. Numer. Math. 121(1), 1–29 (2012)

Debrabant, K., Kværnø, A., Mattsson, N.C.: Runge-Kutta Lawson schemes for stochastic differential equations. BIT Numer. Math. 61(2), 381–409 (2021)

Debrabant, K., Kværnø, A., Mattsson, N.C.: Lawson schemes for highly oscillatory stochastic differential equations and conservation of invariants. BIT Numer. Math. 62(4), 1121–1147 (2022)

Dyöngy, I.: Lattice approximations for stochastic quasi-linear parabolic partial differential equations driven by space-time white noise I. Potential Anal. 9, 1–25 (1998)

Erdoğan, U., Lord, G.J.: A new class of exponential integrators for SDEs with multiplicative noise. IMA J. Appl. Math. 39(2), 820–846 (2019)

Hochbruck, M., Ostermann, A.: Explicit exponential Runge-Kutta methods for semilinear parabolic problems. SIAM J. Numer. Anal. 43(3), 1069–1090 (2005)

Hochbruck, M., Ostermann, A.: Exponential integrators. Acta Numer. 19, 209–286 (2010)

Hochbruck, M., Lubich, C., Selhofer, H.: Exponential integrators for large systems of differential equations. SIAM J. Sci. Comput. 19(5), 1552–1574 (1998)

Ibáñez, J., Alonso, J.M., Alonso-Jordá, P., Defez, E., Sastre, J.: Two Taylor algorithms for computing the action of the matrix exponential on a vector. Algorithms 15(2), 48 (2022)

Kamm, K., Pagliarani, S., Pascucci, A.: Numerical solution of kinetic SPDEs via stochastic Magnus expansion. Math. Comput. Simul. 207, 189–208 (2023)

Kloeden, P.E., Platen, E.: Numerical Solution of Stochastic Differential Equations. Springer-Verlag, Berlin (1999). (Corrected Third Printing)

Komori, Y., Burrage, K.: Weak second order S-ROCK methods for Stratonovich stochastic differential equations. J. Comput. Appl. Math. 236(11), 2895–2908 (2012)

Komori, Y., Burrage, K.: A stochastic exponential Euler scheme for simulation of stiff biochemical reaction systems. BIT 54(4), 1067–1085 (2014)

Komori, Y., Cohen, D., Burrage, K.: Weak second order explicit exponential Runge-Kutta methods for stochastic differential equations. SIAM J. Sci. Comput. 39(6), A2857–A2878 (2017)

Matsumoto, M., Nishimura, T.: Mersenne twister: a 623-dimensionally equidistributed uniform pseudo-random number generator. ACM Trans. Model. Comput. Simul. 8(1), 3–30 (1998)

Milstein, G.N.: Weak approximation of solutions of systems of stochastic differential equations. Theory Probab. Appl. 30(4), 750–766 (1986)

Milstein, G.N., Tretyakov, M.V.: Stochastic Numerics for Mathematical Physics. Springer-Verlag, Berlin (2004)

Moler, C., Loan, C.V.: Nineteen dubious ways to compute the exponential of a matrix, twenty-five years later. SIAM Rev. 45(1), 3–49 (2003)

Sidje, R.B.: Expokit: a software package for computing matrix exponentials. ACM Trans. Math. Softw. 24(1), 130–156 (1998)

Tocino, A., Senosiain, M.J.: Mean-square stability analysis of numerical schemes for stochastic differential systems. J. Comput. Appl. Math. 236(10), 2660–2672 (2012)

van der Houwen, P.J., Sommeijer, B.P.: On the internal stability of explicit, \(m\)-stage Runge-Kutta methods for large \(m\)-values. ZAMM Z. Angew. Math. Mech. 60, 479–485 (1980)

Yang, G., Burrage, K., Burrage, P., Ding, X.: How to choose an appropriate initial condition to simulate stochastic differential equations stably. In: AIP Conference Proceedings (to appear)

Yang, G., Burrage, K., Komori, Y., Burrage, P.M., Ding, X.: A class of new Magnus-type methods for semi-linear non-commutative Itô stochastic differential equations. Numer. Algorithms 88(4), 1641–1665 (2021)

Acknowledgements

The authors would like to thank referees, Professor Chi-Wang Shu and Professor David Cohen for their comments which helped to improve the earlier versions of this paper.

Funding

This work was partially supported by JSPS Grant-in-Aid for Scientific Research 17K05369.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors have not disclosed any competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Komori, Y., Burrage, K. Split S-ROCK Methods for High-Dimensional Stochastic Differential Equations. J Sci Comput 97, 62 (2023). https://doi.org/10.1007/s10915-023-02354-8

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10915-023-02354-8

Keywords

- Explicit method

- Weak second order approximation

- Orthogonal Runge–Kutta–Chebyshev method

- Stiffness

- Noncommutative noise

- Itô stochastic differential equation