Abstract

We study a posteriori error analysis for space-time discretizations of a linear parabolic integro-differential equation in a bounded convex polygonal or polyhedral domain. Piecewise linear finite element spaces are used for the space discretization, whereas the time discretization is based on the Crank–Nicolson method. The Ritz–Volterra reconstruction operator (IMA J Numer Anal 35:341–371, 2015), a generalization of the elliptic reconstruction operator (SIAM J Numer Anal 41:1585–1594, 2003), plays a crucial role in obtaining the optimal rate of convergence in space. Moreover, a quadratic (in time) space-time reconstruction operator is introduced to establish second-order convergence in time. The proposed method uses nested finite element spaces and the standard energy technique to obtain an optimal-order error estimator in the \(L^{\infty }(L^2)\)-norm. Numerical experiments are performed to validate the optimality of the error estimators.

1 Introduction

The main objective of this article is to study a posteriori error analysis of the Crank–Nicolson finite element method for the linear parabolic integro-differential equations (PIDE) of the form

Here, \(\Omega \subset \mathbb {R}^d\, ( {d \ge 1})\) is a bounded convex polygonal or polyhedral domain with boundary \(\partial \Omega \), and \(u_t(x,t) = \frac{\partial u}{\partial t}(x,t)\) with \(T<\infty \). Further, \(\mathcal {A}\) is a self-adjoint, uniformly positive definite, second-order linear elliptic partial differential operator of the form

and the operator \(\mathcal {B}(t,s)\) is of the form

where \(\nabla \) denotes the spatial gradient. \(A = \{a_{ij}(x)\}\) and \(B(t,s) = \{b_{ij}(x;t,s)\}\) are two \(d \times d\) matrices assumed to be in \({L^{\infty }(\Omega )}^{d \times d}\) in the space variable. Moreover, the elements of B(t, s) are assumed to be at least twice differentiable with respect to s and once with respect to t. Furthermore, we assume that the initial function \(u_0(x)\) is in \(H^2(\Omega )\cap H_0^1(\Omega )\) and the source function f(x, t) is in \(L^2(0,T;L^2(\Omega ))\). Under the regularity assumptions on f(x, t) and \(u_0(x)\) prescribed above and

the problem (1.1) admits a unique solution

We refer to Chapter 2 of [9] for further details on existence and uniqueness of the solution of (1.1). For regularity results for such problems, one may refer to [27, 31] and the references therein.

Such problems and their variants arise in various applications, such as heat conduction in materials with memory [13], the compression of poro-viscoelastic media [14], nuclear reactor dynamics [21] and epidemic phenomena in biology [7].

While the literature on a posteriori error analysis of finite element methods for elliptic and parabolic problems is quite rich [1,2,3,4,5, 8, 10,11,12, 15, 17,18,19, 28,29,30], relatively little progress has been made on a posteriori error analysis of PIDE [23,24,25]. To put the results of the paper into proper perspective, we give a brief account of the relevant literature and the motivation for the present investigation. In the absence of the memory term, i.e., when \({\mathcal {B}}(t,s) = 0\), a posteriori error analysis for linear parabolic problems has been investigated by several authors in [2,3,4,5, 10, 12, 15, 17, 18, 22, 30]. In particular, for the fully discrete Crank–Nicolson method for the heat equation, a continuous, piecewise linear approximation in time is used in [30] to derive a posteriori error bounds, suboptimal with respect to the time steps, using standard energy techniques. Subsequently, a continuous, piecewise quadratic polynomial in time, the so-called Crank–Nicolson reconstruction, was introduced in [2] to restore second-order convergence for the time-semidiscrete approximation of a general parabolic problem. Later, the authors of [17] introduced reconstructions based on approximations on one time level (two-point reconstruction), as in [2], and on two time levels (three-point reconstruction) to derive error bounds in the \(L^2(H^1)\)-norm. Recently, in [3], an elliptic reconstruction technique in conjunction with energy arguments was used to derive optimal-order a posteriori error estimates for the Crank–Nicolson method in the \(L^\infty (L^2)\)-norm for parabolic problems.

Since PIDE (1.1) may be thought of as a perturbation of a parabolic equation, we attempt to extend the a posteriori error analysis of parabolic problems [3] to PIDE (1.1). We wish to emphasize that such an extension is not straightforward due to the presence of the Volterra integral term in (1.1). In this paper we derive a posteriori bounds for PIDE in the \(L^\infty (L^2)\)-norm of the error for the fully discrete Crank–Nicolson approximations. The optimality in space hinges essentially on the Ritz–Volterra reconstruction operator [23], whereas a quadratic (in time) space-time reconstruction operator is introduced to establish an a posteriori error estimator with second-order convergence in time. It is important to note that the choice of such a quadratic space-time reconstruction operator (see (3.4)) is non-trivial and heavily problem dependent. Note that in [24], a posteriori error estimates were derived for the fully discrete Crank–Nicolson approximations in the \(L^2(H^1)\)-norm. The analysis presented therein does not require the Ritz–Volterra reconstruction, which is needed here to obtain optimality in the \(L^2\)-norm in space. Consequently, a quadratic reconstruction in time suffices there to obtain optimality in the \(L^2(H^1)\)-norm, in contrast to the space-time quadratic reconstruction introduced in this article. We use nested refinement of the finite element spaces to avoid further complications due to the presence of the Volterra integral term, which memorizes the jumps over all element edges in all previous space meshes.

To the best of the authors’ knowledge, no article is available in the literature concerning a posteriori error analysis of the Crank–Nicolson method for PIDE in the \(L^\infty (L^2)\)-norm. Our main concern is the theoretical aspect of the a posteriori analysis of the method, and to show numerically that the derived estimators are computable and exhibit optimal asymptotic behaviour. The qualitative behaviour of the obtained estimator is shown in Sect. 5; however, the development of adaptive algorithms is outside the scope of this article.

The rest of the paper is organized as follows. We begin by introducing some standard notation and preliminary material in Sect. 2. The development of a quadratic space-time reconstruction for PIDE appears in Sect. 3. In Sect. 4, we give a posteriori error analysis for the fully discrete Crank–Nicolson finite element method and derive an error estimate in the \(L^\infty (L^2)\)-norm. Finally, numerical results are presented in Sect. 5.

2 Notations and Preliminaries

Given a Lebesgue measurable set \(\omega \subset \mathbb {R}^d\), we denote by \(L^p(\omega ),\ 1\le p \le \infty \), the Lebesgue spaces with corresponding norms \(\Vert \cdot \Vert _{L^p(\omega )}\). When \(p=2\), the space \(L^2(\omega )\) is equipped with inner product \(\langle \cdot ,\cdot \rangle _{\omega }\) and the induced norm \(\Vert \cdot \Vert _{L^2(\omega )}\). Whenever \(\omega = \Omega \), we omit the subscripts of \(\Vert \cdot \Vert _{L^2(\omega )}\) and \(\langle \cdot ,\cdot \rangle _{\omega }\). For an integer \(m>0\), we use the standard notation for Sobolev spaces \(W^{m,p}(\omega )\) with \(1\le p \le \infty \). The norm on \(W^{m,p}(\omega )\) is defined by

with the standard modification for \(p = \infty \). When \(p = 2\), we denote \(W^{m,2}(\Omega )\) by \(H^m(\Omega )\) and the norm by \(\Vert \cdot \Vert _m\). The function space \(H^1_0(\Omega )\) consists of elements of \(H^1(\Omega )\) that vanish on the boundary of \(\Omega \), where the boundary values are to be interpreted in the sense of traces.

Let \(a(\cdot ,\cdot )\,:\,H^1_0(\Omega )\times H^1_0(\Omega )\rightarrow \mathbb {R}\) be the bilinear form corresponding to the elliptic operator \(\mathcal {A}\) defined by

Similarly, let \(b(t,s;\cdot ,\cdot )\) be the bilinear form corresponding to the operator \(\mathcal {B}(t,s)\) defined on \(H^1_0(\Omega )\times H^1_0(\Omega )\) by

Let \(B_s(t,s)\) and \(B_{ss}(t,s)\) be obtained by differentiating B(t, s) partially with respect to s once and twice, respectively. Then we define \(b_s(t,s;\cdot ,\cdot )\) and \(b_{ss}(t,s;\cdot ,\cdot )\) to be the bilinear forms corresponding to the operators \(\mathcal {B}_s(t,s)\) and \(\mathcal {B}_{ss}(t,s)\) defined on \(H^1_0(\Omega )\times H^1_0(\Omega )\) by

and

We assume that the bilinear form \(a(\cdot ,\cdot )\) is coercive and continuous on \(H^1_0(\Omega )\), i.e.,

with \(\alpha ,\beta \in \mathbb {R}^+\).

Further, we assume that the bilinear forms \(b(t,s;\cdot ,\cdot )\), \(b_s(t,s;\cdot ,\cdot )\) and \(b_{ss}(t,s;\cdot ,\cdot )\) are continuous on \(H^1_0(\Omega )\), i.e.,

and

with \(\gamma ,\gamma ',\gamma '' \in \mathbb {R}^+\).

The weak formulation of the problem (1.1) may be stated as follows: Find \(u: [0,T] \rightarrow H^1_0(\Omega )\) such that

Remark

The stability properties for the continuous problem (1.1) can be found in [20] and [27].

Let \(0 = t_0< t_1< \cdots < t_N = T\) be a partition of [0, T] with \(\tau _n := t_n - t_{n-1}\) and \(I_n := (t_{n-1}, t_n]\). For \(t = t_n, \ n \in [0:N]\), we set \(f^n(\cdot ) = f(\cdot ,t_n)\). Let \((\mathcal {T}_n)_{n \in [0:N]}\) be a family of conforming triangulations of the domain \(\Omega \). Let \(h_n(x) = \text{ diam }(K),\;\text{ where }\; K \in \mathcal {T}_n \; \text{ and } \; x \in K\), denote the local mesh-size function corresponding to each given triangulation \(\mathcal {T}_n\). Let \(\mathcal {S}_n\) denote the set of internal sides of \(\mathcal {T}_n\), representing edges in \(d = 2\) or faces in \(d = 3\), and let \(\mathcal {E}_n:= \cup _{E \in \mathcal {S}_n}E\) denote the union of all internal sides.

Each triangulation \(\mathcal {T}_n\), for \(n \in [1:N]\), is a refinement of a macro-triangulation \(\mathcal {T}_0\) of the domain \(\Omega \) that satisfies the same conformity and shape-regularity assumptions during refinements (cf. [6]). We assume the following admissibility criteria on \(\mathcal {T}_n\) (cf. [15]):

- The refined triangulation is conforming.

- The shape-regularity of an arbitrary refinement depends only on the shape-regularity of the macro-triangulation \(\mathcal {T}_0\).

We shall allow only nested refinement of the space meshes at each time level \(t = t_n, \ n \in [0:N]\). Associated with these triangulations, we consider the finite element spaces:

where \(\mathbb {P}_1\) is the space of polynomials in d variables of degree at most 1. Also, we denote the space \(\mathbb {V}^0 \oplus \mathbb {V}^1 \oplus \ldots \oplus \mathbb {V}^n\) by \(X^n\).

Let \(P^n_0 : L^2(\Omega ) \rightarrow \mathbb {V}^n\) be the \(L^2\)-projection operator given by

Throughout this paper, the following notation will be used. For \(n = 1,2,\ldots ,N\),

Let \(\sigma ^n\) be the quadrature rule used to approximate the Volterra integral term. In order to be consistent with the Crank–Nicolson scheme, we use the trapezoidal rule given by

Representation of the bilinear forms. For a function \(v \in \mathbb {V}^n\), we can represent the bilinear form \(a(\cdot ,\cdot )\) as

where

is the regular part of the distribution \(-\text{ div }(A \nabla v)\) and

is the spatial jump of the field \(A\nabla v\) across an element side \(E \in \mathcal {S}_n\), where \(\nu _E\) is a unit normal vector to E at the point x.

Similarly, for all \(\phi \in H^1_0(\Omega )\), we represent the bilinear form \(b(t_n,s; \cdot ,\cdot )\) as

where \(\mathcal {B}_{el}(t_n,s) v(s)\) is the regular part of the distribution \(-\text{ div }(B(t_n,s) \nabla v(s))\) and is defined as

and \(J_2[v(s)]\) is the spatial jump of the field \(B(t_n,s)\nabla v(s)\) across an element side \(E \in \mathcal {S}_n\), defined as in (2.8) with \(B(t_n,s)\) replacing A.

We define the fully discrete operators \(\mathcal {A}^n: H^1_0(\Omega ) \rightarrow \mathbb {V}^n\) and \(\mathcal {B}^{n-r}(s): H^1_0(\Omega ) \rightarrow \mathbb {V}^n\), \(0 \le r < 1\), by

and

The fully discrete Crank–Nicolson scheme may be stated as follows: Given \(U^0 = P^0_0 u(0)\), find \(U^n \in \mathbb {V}^n, \;n \in [1:N]\) such that

where

Further, since

we define \(\sigma ^n(\mathcal {B}^{n-1/2}U)\) through

Let U be a continuous, piecewise linear approximation in time defined for all \(t \in I_n\) by

where

Following [23], we recall the definition of Ritz–Volterra reconstruction operator below.

Definition 2.1

(Ritz–Volterra reconstruction) We define the Ritz–Volterra reconstruction \(\mathcal {R}^n_w v(t) \in H^1_0(\Omega ), 0 \le n \le N, t \in [0,T]\) of \(v(t) \in H^1_0(\Omega )\) to be a solution of the following elliptic Volterra integral equation in the weak form

where \(g^n\) is given by

Remark

The Ritz–Volterra reconstruction is well defined (see Definition 3.2 of [23]); it is motivated by the Ritz–Volterra projection introduced by Lin et al. [16] in the context of a priori analysis for PIDE. The Ritz–Volterra reconstruction is the partial right inverse of the Ritz–Volterra projection operator (cf. [23]). Note that a Galerkin-orthogonality-type property holds for the Ritz–Volterra reconstruction:

Further, we use the following compatibility condition:

We use the following definitions in the subsequent error analysis. For \(t \in I_n\), we now define the Ritz–Volterra reconstructions of U(t) by

where \(l_{n-1}(t)\) and \(l_n(t)\) are given by (2.13). Now, set

For \(t \in I_n\), let \(\hat{\omega }_I(t)\) be the linear interpolant associated with the integral vectors \(\hat{\omega }(t_{n-1})\) and \(\hat{\omega }(t_n)\) and be given by

Further, let

For \(t \in I_n\), let \(\hat{\mathcal {U}}_{I,1}(t)\) be the linear interpolant associated with the integrals \(\hat{\mathcal {U}}(t_{n})\) and \(\hat{\mathcal {U}}(t_{n-1})\):

and let \(\hat{\mathcal {U}}_{I,2}(t)\) be the linear interpolant associated with the integral \(\hat{\mathcal {U}}(t_{n-1/2})\)

where

For \(n \in [0:N]\), we define the inner residual

and the jump residual

with \(\mathfrak {R}^0(U) := \mathcal {A}^0 U^0 - \mathcal {A}_{el} U^0\) and \(\mathfrak {J}^0[U] := J_1[U^0]\).

Modified Crank–Nicolson scheme for PIDE. It is a known fact that, during refinement, the discrete Laplace operator \(\Delta ^n_h\) on the finer mesh \(\mathcal {T}_n\), when applied to the coarse-grid function \(U^{n-1}\), leads to oscillatory behaviour of the solution of the parabolic problem (cf. [3]). This is due to the presence of the term \(\Delta ^n_hU^{n-1}\), which exhibits oscillations in the classical Crank–Nicolson scheme for parabolic problems. Since PIDE may be thought of as a perturbation of the parabolic problem (when \(\mathcal {B}(t,s) = 0\), PIDE reduces to the parabolic problem), it is natural to expect the same oscillatory behaviour for the classical Crank–Nicolson approximation of the PIDE (1.1). Therefore, a modified Crank–Nicolson scheme is considered and analyzed.

To that end, the modified Crank–Nicolson scheme for PIDE (1.1) is defined as follows: For \( 1\le n \le N\), find \(U^n \in \mathbb {V}^n\) such that

where \(\sigma ^n(\mathcal {B}^{n-1/2}U)\) is defined through (2.11).
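The interplay between the Crank–Nicolson step and a trapezoidal treatment of the memory term can be illustrated on a scalar model problem. The following is only a minimal sketch under stated assumptions: it replaces the PIDE by the scalar equation \(u'(t) + a\,u(t) = \int_0^t k(t,s)u(s)\,ds + f(t)\), uses a uniform time step, averages the trapezoidal quadratures at \(t_{n-1}\) and \(t_n\), and evaluates f at the midpoint. It is not the scheme (2.10)–(2.11) of this paper, and the names `crank_nicolson_volterra`, `max_error` and the manufactured solution are hypothetical choices made for illustration.

```python
import math


def crank_nicolson_volterra(a, kernel, f, u0, T, N):
    """Crank-Nicolson in time with trapezoidal memory quadrature for the
    scalar model  u'(t) + a*u(t) = int_0^t kernel(t, s) u(s) ds + f(t)."""
    tau = T / N
    t = [n * tau for n in range(N + 1)]
    U = [u0]
    q_prev = 0.0  # quadrature value of the memory integral at t_0 (empty integral)
    for n in range(1, N + 1):
        # Trapezoidal sum at t_n, split into the part with known values ...
        known = 0.0
        for j in range(1, n + 1):
            known += 0.5 * tau * kernel(t[n], t[j - 1]) * U[j - 1]
            if j < n:
                known += 0.5 * tau * kernel(t[n], t[j]) * U[j]
        # ... and the weight multiplying the unknown U^n.
        w = 0.5 * tau * kernel(t[n], t[n])
        f_mid = f(0.5 * (t[n - 1] + t[n]))
        # (U^n - U^{n-1})/tau + a*(U^n + U^{n-1})/2
        #     = (q_n + q_{n-1})/2 + f(t_{n-1/2}),  with q_n = known + w*U^n
        lhs = 1.0 / tau + 0.5 * a - 0.5 * w
        rhs = U[-1] / tau - 0.5 * a * U[-1] + 0.5 * (known + q_prev) + f_mid
        U.append(rhs / lhs)
        q_prev = known + w * U[-1]
    return t, U


def max_error(N):
    # Manufactured solution u(t) = exp(-t) with a = 2 and kernel exp(-(t - s)),
    # for which the memory integral equals t*exp(-t) and f(t) = (1 - t)*exp(-t).
    a = 2.0
    kernel = lambda t, s: math.exp(-(t - s))
    f = lambda t: (1.0 - t) * math.exp(-t)
    t, U = crank_nicolson_volterra(a, kernel, f, 1.0, 1.0, N)
    return max(abs(Un - math.exp(-tn)) for tn, Un in zip(t, U))


if __name__ == "__main__":
    for N in (20, 40, 80):
        print(N, max_error(N))
```

Halving the time step in this model should reduce the maximum nodal error by a factor close to four, which is the second-order behaviour in time that the estimators of this paper are designed to reflect.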

3 Quadratic (in Time) Space-Time Reconstructions for PIDE

It is noteworthy that splitting the error as \(e = u - U = (u - \mathcal {R}_w U) + (\mathcal {R}_w U - U)\) yields optimal bounds for the PIDE (1.1) in the \(L^\infty (L^2)\)-norm in the case of the backward Euler scheme (cf. [23]). Here, u denotes the exact solution of the PIDE (1.1), U is defined by (2.12) and \(\mathcal {R}_w U\) is given by (2.17). The optimality in space hinges essentially on the Ritz–Volterra reconstruction \(\mathcal {R}_w U\) of U. However, in order to recover second-order convergence in time for the Crank–Nicolson scheme, we need a further reconstruction of \(\mathcal {R}_w U\) in time. The precise properties required of such a space-time reconstruction \(\widehat{U}\) are:

- \(\widehat{U}\) should be chosen such that the error (\(u - \widehat{U}\)) in the energy argument leads to optimal estimates in both space and time, and

- \(\widehat{U} - \mathcal {R}_w U = O(\tau ^2).\)

Thus, a natural choice for such a reconstruction \(\widehat{U}\) is that it should be quadratic in time as \(\mathcal {R}_w U\) is linear in nature (see definition (2.17)). Moreover, \(\widehat{U}\) should be continuous and \(\widehat{U}(t_n) = \mathcal {R}_w^n U^n \; \forall n = 1,\ldots , N\).

The rest of this section is devoted to introducing a space-time reconstruction \(\widehat{U}\). For this, we need some notation which will prove convenient for the error analysis in Sect. 4.

Let \(\Theta : [0,T] \rightarrow H^1_0(\Omega )\) be defined by

Define \(\hat{F}: [0,T] \rightarrow H^1_0(\Omega )\) by

where \(\varphi (t) := \hat{I} f(t)\). Here, \(\hat{I}\) is a piecewise linear interpolant chosen such that

We now define the quadratic space-time reconstruction \(\widehat{U} : [0,T] \rightarrow H^1_0(\Omega )\) as follows:

We note that \(\widehat{U}\) is quadratic in time as \(\hat{F}\) is linear in time. This definition is motivated by the fact that \(\widehat{U}(t)\) satisfies the following relation:

It follows from (3.4) that

and

where we have used (2.26) and the integral is evaluated using the mid-point rule. The terms \(\mathcal {R}_w^{n-1}U^{n-1}\) and \( (t- t_{n-1})({\mathcal {R}^n_w P^n_0 U^{n-1} - \mathcal {R}_w^{n-1} U^{n-1}})/\tau _n\) in the definition (3.4) act as corrector terms required to establish the continuity of \(\widehat{U}\) with \(\mathcal {R}_w U\) at the nodal points \(t_n\).
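The role of the mid-point rule can be made explicit. Assuming, as stated above, that \(\hat{F}\) is affine in time on \(I_n\), the mid-point rule integrates it exactly:

\[
\int _{t_{n-1}}^{t_n} \hat{F}(s)\,ds = \tau _n\, \hat{F}(t_{n-1/2}), \qquad t_{n-1/2} := \tfrac{1}{2}(t_{n-1} + t_n).
\]

More generally, for \(t \in I_n\),

\[
\int _{t_{n-1}}^{t} \hat{F}(s)\,ds = (t - t_{n-1})\, \hat{F}\Big (\tfrac{1}{2}(t_{n-1} + t)\Big ),
\]

which is a quadratic polynomial in t. This is the elementary reason why integrating a time-linear \(\hat{F}\) produces a reconstruction that is quadratic in time.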

Using the above notations, the modified Crank–Nicolson scheme can be rewritten in the following compact form

In view of (3.2)

where \(F^{n-1/2} := \hat{F}(t_{n-1/2})\).

4 Error Analysis

In this section, we shall derive a posteriori error estimate for the error \(e := u - U\).

Main ideas and notations. We decompose the error e as:

\[
e = \hat{\rho } + \sigma + \epsilon , \tag{4.1}
\]

where \(\hat{\rho } := u - \widehat{U}\) denotes the parabolic error, \(\sigma := \widehat{U} - \mathcal {R}_w U\) denotes the time reconstruction error and \(\epsilon := \mathcal {R}_w U - U\) denotes the Ritz–Volterra reconstruction error.

The basic idea of obtaining the a posteriori error estimate can now be summarised as follows: (i) optimal order a posteriori error estimates for the Ritz–Volterra reconstruction error \(\epsilon \) in standard norms like \(L^2\) and \(H^1\) are contained in [23]; (ii) the parabolic error \(\hat{\rho }\) satisfies a variant of the original PIDE (1.1) with a right hand side that can be controlled a posteriori in an optimal way; (iii) the time reconstruction \(\widehat{U}\) is chosen in such a way that the difference \(\widehat{U} - \mathcal {R}_w U\) can be estimated a posteriori and will be of \( O(\tau ^2)\).

We now recall from [26] the following interpolation error estimates.

Proposition 4.1

Let \(\Pi ^n: H^1_0(\Omega )\rightarrow \mathbb {V}^n\) be the Scott–Zhang interpolation operator of Clément type. Then, for sufficiently smooth \(\psi \) and a finite element polynomial space of degree 1, there exist constants \(C_{1,j}\) and \(C_{2,j}\) depending only upon the shape-regularity of the family of triangulations such that for \(j \le 2\)

and

Below we shall summarize the notations of the various a posteriori error estimates to be developed in the subsequent error analysis.

4.1 \(L^{\infty }(L^2(\Omega ))\) a Posteriori Error Estimates

For \(n = 1,\ldots ,N\), we define the following estimators.

The Ritz–Volterra reconstruction error estimators

and

where \(\mathfrak {R}^n(v)\) and \(\mathfrak {J}^n[v]\) are given by (2.24) and (2.25), respectively, and \(v \in \mathbb {V}^n\). Moreover, the constants \(C_i, i = 1, \ldots , 4\), appearing in the estimators are positive and depend on the interpolation constants and the final time T.

\(\eta _n^{T,Rec1}\) and \(\eta _n^{T,Rec2}\) are the time reconstruction error estimators and are defined by

and

where \(\eta _n^{RVH1}(\mathcal {W}_n)\) and \(\eta _n^{RVL2}(\mathcal {W}_n)\) are given by (4.2) and (4.3), respectively and \(\mathcal {W}_n\) is an a posteriori quantity given by

\(\eta _n^{T,QL}\) is the time estimator, which captures the quadrature and linear approximation errors; it is defined by

where \(\hat{\mathcal {U}}(t)\), \(\hat{\mathcal {U}}_{I,1}(t)\) and \(\hat{\mathcal {U}}_{I,2}(t)\) are given by (2.20), (2.21) and (2.22), respectively, \(C_5 = \max \Big \{\frac{\gamma ''}{4}, \frac{\gamma '}{2}, 1\Big \}\) and \(\theta _{n}\) is given by

The spatial mesh change estimator \(\eta _n^{SM}\) and the spatial estimator \(\eta _n^{S}\) are defined by

and

where \(\eta _n^{RVL2}(U)\), \(\eta _n^{RVL2}(\mathcal {W}_n)\) are given by (4.3) and \(\hat{\tau }_n = \displaystyle {\max _{1 \le j \le n}} \tau _j\). \(C_{\Omega }\) and \(C_{6}\) are regularity constants.

is the linear interpolation error estimator for the Volterra integral term, where \(\hat{\omega }(t)\) and \(\hat{\omega }_I(t)\) are given by (2.18) and (2.19), respectively.

is the mesh change estimator.

and

are the data approximation error estimators, where \(\varphi (t) := \hat{I}f(t)\) and \(\hat{I}\) is given by (3.3).

To prove the main result of this section, we shall first prove estimates for the Ritz–Volterra reconstruction error \(\epsilon \) and the parabolic error \(\hat{\rho }\).

4.2 A Posteriori Error Estimates for \(\epsilon \)

Below, we state the following a posteriori error estimates for the Ritz–Volterra reconstruction error. For a proof, we refer to Lemma 4.2 of [23].

Lemma 4.2

(Ritz–Volterra reconstruction error estimates) For any \(v \in \mathbb {V}^n\), the following estimates hold:

and

where \(\eta _n^{RVH1}(v)\) and \(\eta _n^{RVL2}(v)\) are given by (4.2) and (4.3), respectively.

Next we proceed to estimate \(\hat{\rho }(t)\) which is a cumbersome task.

4.3 A Posteriori Error Estimates for \(\hat{\rho }(t)\)

Lemma 4.3

(A posteriori error estimate for the parabolic error) For each \(m \in [1:N]\), the following estimate holds for \(\hat{\rho }(t)\):

where \(\Xi ^2_{1,m}\) and \(\Xi ^2_{2,m}\) are the total estimators corresponding to the parabolic error \(\hat{\rho }(t)\) and are defined by

and

The estimators \(\eta _n^{T,Rec2}\), \(\eta _n^{T,QL}\), \(\eta ^{S}_n\), \(\eta _n^{SM}\), \(\eta _{n}^{D,1}\), \(\eta _n^{M}\), \(\eta _{n}^{TL}\) and \(\eta _{n}^{D,2}\) are given by (4.5), (4.7), (4.10), (4.9), (4.13), (4.12), (4.11) and (4.14), respectively. Moreover, \(C_7\) is a positive constant independent of the discretization parameters but depends upon the final time T.

The proof of the above lemma in turn depends on several auxiliary results which we shall discuss in detail below. We shall use the notation \(\rho (t)\) for the error \(u(t)-\mathcal {R}_w U(t)\) in the subsequent error analysis. We begin with the following error equation for \(\hat{\rho }(t)\).

Lemma 4.4

For \(t \in I_n, n \in [1:N]\) and for each \(\phi \in H^1_0(\Omega )\), we have the following error equation for \(\hat{\rho }(t)\):

where G is defined by

with

and \(\mathcal {Y}_n\) is given by (2.23).

Proof

For \(t \in I_n\) and \(\forall \phi \in H^1_0(\Omega )\), we first multiply (3.5) by \(\phi \) and integrate over \(\Omega \). Then, subtract the resulting equation from (2.5) to obtain

Using (2.17)–(2.19) and (2.14), we obtain

which together with (3.1) and (2.20)–(2.22) yields

For the last two terms on the right hand side of (4.18), an application of (2.26) yields

Thus, the error equation (4.17) for \(\hat{\rho }(t)\) now follows from (4.18) and (4.19). \(\square \)

In view of the error equation obtained in Lemma 4.4, the following lemma presents a clear picture of the terms to be estimated in order to obtain a bound on \(\hat{\rho }(t)\).

Lemma 4.5

The following estimate holds for \(\hat{\rho }(t)\)

where

with

and

Proof

Set \(\phi = \hat{\rho }(t)\) in (4.17) to obtain

We use the identity

to have

We use the coercivity of \(a(\cdot ,\cdot )\), and continuity of \(a(\cdot ,\cdot )\), \(b(t,s;\cdot ,\cdot )\) along with the Cauchy–Schwarz and Young’s inequalities, and integrate the resulting equation from \(t_{n-1}\) to \(t_n\) to obtain

where we have used the fact that

We apply Gronwall’s lemma and sum over \(n = 1, \ldots , m\) to obtain the desired result with \(C_7 = \max \{\beta C_G(T),2C_G(T)\}\), where \(C_G(T) = \exp (T)\) is the Gronwall constant. \(\square \)
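For reference, one common integral form of Gronwall’s lemma reads as follows (the precise variant used in the proof is an assumption here; the symbols \(\varphi \), \(\alpha \) and \(\kappa \) are introduced only for this statement). If \(\varphi \) is continuous, \(\alpha \) is nondecreasing, \(\kappa \ge 0\) is integrable, and

\[
\varphi (t) \le \alpha (t) + \int _0^t \kappa (s)\, \varphi (s)\,ds \quad \text{for all } t \in [0,T],
\]

then

\[
\varphi (t) \le \alpha (t)\, \exp \Big (\int _0^t \kappa (s)\,ds \Big ) \quad \text{for all } t \in [0,T].
\]

With \(\kappa \equiv 1\) the exponential factor is bounded by \(\exp (T)\), which is consistent with the constant \(C_G(T) = \exp (T)\) quoted above.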

Now, we proceed to estimate the terms appearing in Lemma 4.5. We begin by providing a posteriori error bounds on the time discretization error.

Lemma 4.6

(Time error estimators) The following a posteriori bounds hold for the time discretization error terms \(\mathcal {I}_m^1 \) and \(\mathcal {I}_m^2\):

and

where \(\eta _n^{T,Rec2}\), \(\eta _n^{T,QL}\) and \(\eta _{n}^{TL} \) are given by (4.5), (4.7) and (4.11), respectively.

Proof

We know that

Thus, to estimate the term \(I_n^{T,1}\), we have to first estimate \(\widehat{U}(t) - \mathcal {R}_w U(t)\).

Using (2.17), (3.5) and (3.7), we have

We integrate from \(t_{n-1}\) to t and use the fact that \(\widehat{U}(t)\) coincides with \(\mathcal {R}_w U(t)\) at \(t=t_{n-1}\) to obtain

Using (3.1), (3.2) and the identity \(l_{n-1}(t) + l_n(t) = 1,\, t \in I_n\), we have

where \(\mathcal {W}_n\) is given by (4.6).

Substituting (4.30) in (4.29), we obtain

Using the coercivity and the continuity of the bilinear form \(a(\cdot ,\cdot )\), it follows that

where we have used (4.28) and (4.31).

Thus, using (4.2) we deduce that

Finally, with the aid of (4.32), we obtain

Thus, the first inequality (4.26) follows by taking summation over n and using (4.5).

Next, to prove the inequality (4.27), we first note that

We start by estimating the term \(\mathcal {J}_1\). A standard trapezoidal rule argument for a sufficiently smooth function g(s) yields

If we define

then

and

Using (2.6), (2.12), (4.34) and (4.35), we obtain

where \(\theta _n\) is given by (4.8) and \(\bar{\gamma } = \max \bigg \{\frac{\gamma ''}{4}, \frac{\gamma '}{2} \bigg \}\).

Thus, in view of (4.36) we have the following bound on \(\mathcal {J}_1\)

Moreover, an application of Cauchy–Schwarz inequality gives

and

We now combine the bounds on \(|\mathcal {J}_1|\), \(|\mathcal {J}_2|\), \(|\mathcal {J}_3|\) and \(|\mathcal {J}_4|\) to obtain

Thus,

where \(\eta _n^{T,QL}\), \(\eta _{n}^{TL} \) are given by (4.7), (4.11), respectively and \(C_5 := \max \{\bar{\gamma },1\}\). The desired estimate now follows by taking summation over n. \(\square \)
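The trapezoidal rule argument used for \(\mathcal {J}_1\) rests on a classical identity, recalled here in its scalar form as a reference point (the generic interval \([a,b]\) is notation introduced only for this statement, and the exact form used in the proof may differ). For \(g \in C^2[a,b]\), two integrations by parts give

\[
\int _a^b g(s)\,ds - \frac{b-a}{2}\big (g(a) + g(b)\big ) = -\frac{1}{2}\int _a^b (s-a)(b-s)\, g''(s)\,ds ,
\]

and, since \(\int _a^b (s-a)(b-s)\,ds = (b-a)^3/6\),

\[
\Big | \int _a^b g(s)\,ds - \frac{b-a}{2}\big (g(a) + g(b)\big ) \Big | \le \frac{(b-a)^3}{12}\, \max _{s \in [a,b]} |g''(s)| .
\]

Applied on each subinterval \(I_j\), this gives a local quadrature error of order \(\tau _j^3\), hence an \(O(\tau ^2)\) contribution after summation over the partition.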

The next lemma gives information on the a posteriori contributions due to mesh change.

Lemma 4.7

(Mesh change estimate) We have the following bound on the mesh change error term \(\mathcal {I}_m^3\):

where \(\eta _n^{M}\) is given by (4.12).

Proof

The orthogonality property of \(P_0^n\) now leads to

An application of the Cauchy–Schwarz inequality yields

where \(\eta _n^{M}\) is given by (4.12). Taking summation over n we complete the proof. \(\square \)

The next lemma captures contributions due to the spatial discretizations.

Lemma 4.8

(Spatial error estimates) The following a posteriori error bound holds on the spatial discretization error term \(\mathcal {I}_m^4\):

where \(\eta _n^{S}\) is given by (4.10). Moreover, the following error bound holds for \(\mathcal {I}_m^5\), which corresponds to the spatial discretization error due to mesh change:

where \(\eta _n^{SM}\) is given by (4.9).

Proof

Using (4.30) and (4.3), we have

Hence, we obtain

where \(\eta _n^{S}\) is given by (4.10) and the estimate (4.38) follows by taking the summation over n.

Next, to estimate \(\mathcal {I}^{S,5}_n\) as given in (4.24), we exploit the orthogonality property of the Ritz–Volterra reconstructions. We use the standard duality technique here.

For \(t \in (0,T)\), let \(\psi \in H^2({\Omega })\cap H^1_0(\Omega )\) be the solution of the following elliptic problem in the weak form

satisfying the following regularity estimate:

where the constant \(C_{\Omega }\) depends on the domain \(\Omega \).

Setting \(\chi = \mathcal {R}^n_w U^n - \mathcal {R}^{n-1}_w U^{n-1} - U^n + U^{n-1}\) in (4.41) and using (2.14), (2.7) and (2.9), we obtain

We now use (2.24) and (2.25) together with \(\mathfrak {R}^n(U) - \mathfrak {R}^{n-1}(U) = \tau _n \partial \mathfrak {R}^n(U)\) and \(\mathfrak {J}^n[U] - \mathfrak {J}^{n-1}[U] = \tau _n \partial \mathfrak {J}^n[U]\) to obtain

For the last term in the above, we use the fact

where \(\mathcal {B}^*(t_n,s)\) is the formal adjoint of the operator \(\mathcal {B}(t_n,s)\) and then apply the Cauchy–Schwarz inequality together with \(\Vert \mathcal {B}^*(t_n,s) \psi (t) \Vert \le C_{\mathcal {B}^*_1}\Vert \psi (t)\Vert _2\) to obtain

where \(\hat{\tau }_n = \displaystyle {\max _{1 \le j \le n}} \tau _j\) and we used (2.16). Using (4.45) in (4.43) and applying Proposition 4.1 with \(C_{6} = \max \Big (2C_{\mathcal {B}^*_1}, 1\Big )\), we obtain

Combining (4.24) and (4.46), we arrive at

where we have used (4.9) and the regularity result (4.42). Summing from \(n = 1:m\), the desired result is obtained. \(\square \)

The data approximation error is estimated in the following lemma.

Lemma 4.9

(Data approximation error estimate) The following bound holds on the data approximation error term \(\mathcal {I}_m^6\)

where \(\eta _{n}^{D,1}\) and \(\eta _{n}^{D,2}\) are given by (4.13) and (4.14).

Proof

In view of (3.1) and (3.2), we have

Using the Cauchy–Schwarz inequality, we obtain

For \(\mathfrak {J}_2\), we use orthogonality property of \(P^n_0\) to have

Therefore,

where \(\eta _{n}^{D,1}\) and \(\eta _{n}^{D,2}\) are given by (4.13) and (4.14), respectively. Now, taking summation over n, we obtain the desired result. \(\square \)

Proof of Lemma 4.3

Application of Lemmas 4.6–4.9 in Lemma 4.5 yields

We now use the following elementary fact to complete the proof. For \(a = (a_0, a_1, \ldots , a_m)\), \(b = (b_0, b_1, \ldots , b_m) \in \mathbb {R}^{m+1}\) and \(c \in \mathbb {R}\), if \(|a|^2 \le c^2 + a \cdot b,\) then \(|a| \le |c| + |b|.\)
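A short justification of this fact, included as a worked step: by the Cauchy–Schwarz inequality, \(a \cdot b \le |a|\,|b|\), so \(|a|\) satisfies a quadratic inequality, and solving it gives

\[
|a|^2 - |b|\,|a| - c^2 \le 0 \;\Longrightarrow \; |a| \le \frac{|b| + \sqrt{|b|^2 + 4c^2}}{2} \le \frac{|b| + |b| + 2|c|}{2} = |b| + |c| ,
\]

where the last inequality uses \(\sqrt{x^2 + y^2} \le |x| + |y|\) with \(x = |b|\) and \(y = 2|c|\).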

In particular, for \(n \in [1:m]\), taking

we obtain the desired result. \(\square \)

4.4 The Main Theorem

Now, we state the main result of this section concerning a posteriori error estimate for the fully discrete Crank–Nicolson scheme in the \(L^{\infty }(L^2)\)-norm.

Theorem 4.10

(\(L^{\infty }(L^2)\) a posteriori error estimate) Let u(t) be the exact solution of (1.1), and let U(t) be as defined in (2.12). Then, for each \(m \in [1:N]\), the following error estimate holds:

where \(\eta _n^{T,Rec2}\), \(\eta _n^{RVL2}(U)\), \(\eta _n^{T,Rec1}\), \(\Xi ^2_{1,m}\) and \(\Xi ^2_{2,m}\) are given by (4.5), (4.3), (4.4), (4.15) and (4.16), respectively. Moreover, the constants appearing in the a posteriori error bounds are positive, independent of the discretization parameters, and depend on the interpolation constants and the final time T.

Proof

In view of (4.1), we apply triangle inequality to have

For \(t \in I_n\),

Therefore, for \(t \in [0,t_m]\), using Lemma 4.2, we have

Also,

where \(\eta _n^{T,Rec1}\) is given by (4.4).

Finally, we use (4.48)–(4.50) and Lemma 4.3 to obtain the desired result. \(\square \)

Remarks (i) The estimator appearing in Theorem 4.10 is formally of optimal order. Moreover, in the absence of the memory term (i.e., \(\mathcal {B}(t,s) = 0\)), the error estimator obtained in Theorem 4.10 is similar to that for parabolic problems [3]. Further, we note that the estimator \(\eta _n^{T,QL}\), the contribution to the error from the approximation of the integral term, is of \(O(\tau ^2)\). Thus, the a posteriori error bound in Theorem 4.10 generalizes the results of [3] to PIDE.

(ii) The mesh change error term \(\mathcal {I}_m^3\) can alternatively be estimated as

where \(\eta _n^{M,1}\) is given by

This estimate for the mesh change error leads to an alternative a posteriori error estimate for the main error e. In particular, the terms \(\Xi ^2_{1,m}\) and \(\Xi ^2_{2,m}\) in Lemma 4.3 take the form

and

Corresponding changes then apply to Theorem 4.10.

(iii) The term

appearing in Theorem 4.10 (see (4.11)) is not a traditional a posteriori quantity, where \(\hat{\omega }(t)\) and \(\hat{\omega }_I(t)\) are given by (2.18) and (2.19), respectively. However, the error in linear interpolation is bounded as

where \(\frac{d^2}{dt^2}(\hat{\omega }(t))\) depends upon the quantities \(\nabla \omega _t(t)\) and \(\nabla \omega (t)\). The term \(\Vert \nabla \omega _t(t)\Vert \) can be estimated as

and for the term \(\Vert \nabla \omega (t)\Vert \), we have

Since \(\Vert \nabla \epsilon ^n\Vert \) is bounded and is of O(h) (see Lemma 4.2), this shows that (4.51) is a meaningful a posteriori quantity. Taking \(\tau \approx h\), it is easy to see that the term (4.51) is of optimal order.
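As a point of reference for the interpolation bound invoked in this remark, the classical scalar estimate is recalled below. It is stated for a generic function \(g \in C^2([t_{n-1},t_n])\) with linear interpolant \(g_I\) at the endpoints; \(g\) and \(g_I\) are illustrative symbols, and the vector-valued bound used in the paper may differ in its norm and constant:

\[
\max _{t \in [t_{n-1},t_n]} |g(t) - g_I(t)| \le \frac{\tau _n^2}{8} \max _{t \in [t_{n-1},t_n]} |g''(t)|, \qquad \tau _n = t_n - t_{n-1}.
\]

The factor \(\tau _n^2\) is what makes a term of this type second order in time once the second derivative is controlled by computable quantities.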

(iv) The a posteriori error analysis of the classical Crank–Nicolson scheme leads to one additional term \(\frac{1}{2}\Vert (P^n_0 - I)\mathcal {A}^{n-1}U^{n-1}\Vert \) in the error bounds. However, it does not affect the optimality of the main estimator.

(v) We have used \(\mathbb {P}_1\) elements in the analysis; however, there is no limitation on the finite element space to be used.

(vi) The term \(\hat{\tau }_n\) appearing in Lemma 4.8 can be problematic for time adaptivity, as it is defined globally. However, such a term can easily be avoided in the final a posteriori error estimate as follows: In the proof of Lemma 4.8, instead of writing

we could have written

and the rest of the analysis follows as before. In doing so, the term \(\hat{\tau }_n\) is avoided, and the modified estimate can be used for time adaptation.

5 Numerical Assessment

This section reports numerical results for a test problem to validate the derived estimators. Our aim is to study the asymptotic behaviour of the error estimators presented in Theorem 4.10 for a two-dimensional test problem. Consider the PIDE (1.1) in a square domain \(\Omega = (0,1)^2 \subset \mathbb {R}^2\) with homogeneous Dirichlet boundary conditions and \(T = 0.1\). We select the coefficient matrices to be \(A = I\) and \(B(t,s) = \exp (-\pi ^2(t-s))I\). Then, the forcing term f is calculated by applying the PIDE (1.1) to the corresponding u.

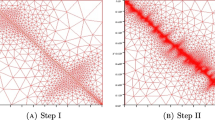

Our main emphasis here is to understand the asymptotic behaviour of the estimators, so we perform numerical tests on uniform meshes with uniform time-steps. All computations have been carried out using MATLAB R2015a. We choose a sequence of space mesh-sizes (\(h(i): i \in [1:l]\)), to which we couple a sequence of time step-sizes (\(\tau (i) : i \in [1:l]\)) with \(\tau (i) \propto h(i)\). Here, l denotes the number of runs. For each run, the spatial mesh-size is half the previous mesh-size. The experiment is carried out with \(\mathbb {P}_1\) elements. Since the finite element spaces consist of \(\mathbb {P}_1\) elements and the Crank–Nicolson scheme is second-order accurate in time, the error in the \(L^{\infty }(L^2)\)-norm is \(O(h^2 + \tau ^2)\), so we expect each part of the main estimator to decrease with second order for \(h \propto \tau \). The exact and finite element solutions are shown in Fig. 1.
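The coupling of mesh-sizes and time-steps described above can be sketched as follows. This is only an illustration: the coarsest mesh-size `h0`, the proportionality constant `c` and the number of runs are hypothetical values, not those used in the reported experiments, and the helper `run_parameters` is introduced here for illustration.

```python
T = 0.1      # final time of the test problem
H0 = 0.25    # hypothetical coarsest mesh-size (assumption)
C = 0.4      # hypothetical constant in tau = C * h (assumption)
RUNS = 5     # hypothetical number of runs l (assumption)


def run_parameters(h0, c, final_time, runs):
    """Return (h, tau, n_steps) for each run, halving h and keeping tau ~ c * h."""
    params = []
    for i in range(runs):
        h = h0 / 2 ** i
        # Round so that a whole number of uniform steps covers [0, T].
        n_steps = max(1, round(final_time / (c * h)))
        tau = final_time / n_steps
        params.append((h, tau, n_steps))
    return params


params = run_parameters(H0, C, T, RUNS)
for h, tau, n_steps in params:
    print(f"h = {h:.5f}, tau = {tau:.5f}, N = {n_steps}")
```

With these choices the number of time steps doubles whenever the mesh-size is halved, so the ratio \(\tau (i)/h(i)\) stays fixed across runs.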

For each run \(i \in [1:l]\), we compute the following quantities of interest at the final time point \(t_N = T = 0.1\):

- The Ritz–Volterra reconstruction error estimator: \(\max _{n \in [0:N]}\eta _n^{RVL2}(U)\).

- The time reconstruction error estimators: \(\max _{n \in [0:N]}\eta _n^{T,Rec1}\) and \(\Big (\sum _{n = 1}^{N} \tau _n \big (\eta _n^{T,Rec2}\big )^2\Big )^{1/2}\).

For each quantity of interest, we observe its experimental order of convergence (EOC). The EOC is defined as follows: For a given finite sequence of successive runs (indexed by i), the EOC of the corresponding sequence of quantities of interest E(i) (estimator or part of an estimator) is itself a sequence defined by

where h(i) denotes the mesh-size of the run i. The value of EOC of an estimator indicates its order.
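A minimal sketch of how such a sequence can be computed is given below. It assumes the usual logarithmic-ratio definition, \(\log (E(i+1)/E(i)) / \log (h(i+1)/h(i))\), which is an assumption here since the displayed formula is not reproduced above. The function name `eoc` and the data are illustrative; the values are synthetic and are not taken from the paper's tables.

```python
import math


def eoc(quantities, mesh_sizes):
    """Experimental orders of convergence between successive runs.

    Assumes EOC(i) = log(E(i+1) / E(i)) / log(h(i+1) / h(i)).
    """
    if len(quantities) != len(mesh_sizes):
        raise ValueError("quantities and mesh_sizes must have the same length")
    return [
        math.log(quantities[i + 1] / quantities[i])
        / math.log(mesh_sizes[i + 1] / mesh_sizes[i])
        for i in range(len(quantities) - 1)
    ]


# Synthetic data behaving exactly like 3 * h^2 (not results from the paper).
h = [0.5 ** k for k in range(1, 6)]
E = [3.0 * hk ** 2 for hk in h]
orders = eoc(E, h)
print(orders)
```

For a quantity that is divided by 4 each time the mesh-size is halved, every entry of the returned list equals 2 up to rounding, which is the second-order behaviour expected here.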

To measure the quality of our estimator, the estimated error is compared with the true error through the so-called effectivity index (EI). We define the effectivity indices by

where \(\eta ^{Total}_n\) is the full estimator as given in Theorem 4.10. All the constants involved in the estimators are taken to be equal to 1, except Gronwall’s constant, which is taken to be \(\exp (T)\). The effectivity index is to be understood only qualitatively in this paper, as the main emphasis is on observing the asymptotic behaviour of the estimator.
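As an illustration, the sketch below computes an effectivity index per run as the ratio of estimated to true error. This ratio is the common convention and is assumed here, since the displayed definition (including the precise norms) is not reproduced above. The function name and the numbers are hypothetical and are not results from the paper.

```python
def effectivity_indices(estimators, true_errors):
    """Return estimator / true error for each run (assumed convention)."""
    if len(estimators) != len(true_errors):
        raise ValueError("inputs must have the same length")
    if any(err <= 0.0 for err in true_errors):
        raise ValueError("true errors must be positive")
    return [eta / err for eta, err in zip(estimators, true_errors)]


# Hypothetical values: both sequences decay at second order under mesh halving.
eta_total = [8.0e-2, 2.0e-2, 5.0e-3]
true_err = [4.0e-3, 1.0e-3, 2.5e-4]
ei = effectivity_indices(eta_total, true_err)
print(ei)
```

An index that stays roughly constant across runs, as in this synthetic example, indicates that the estimator and the true error decay at the same rate, even when the index itself is well above 1.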

Tables 1, 2, and 3 show that each individual estimator constituting the total estimator has the optimal rate of convergence. Although the Ritz–Volterra reconstruction estimator \(\max _n \eta _n^{RVL2}(U)\) and the time reconstruction estimators \(\max _{n \in [0:N]} \eta _n^{T,Rec1}\) and \(\Big (\sum _{n = 1}^{N} \tau _n\big (\eta _n^{T,Rec2}\big )^2\Big )^{1/2}\) contribute the same order of error, the time reconstruction estimator \(\max _{n \in [0:N]} \eta _n^{T,Rec1}\) appears to dominate the other error estimators for the prescribed choices of \((h,\tau )\). Furthermore, the estimator \(\max _n \eta _n^{RVL2}(U)\) is observed to be divided by 4 at each run when the time-step is kept small and constant and the spatial mesh-size is divided by 2 (see Table 4). Similarly, when the spatial mesh-size is kept small and constant and the time-step is divided by 2, the estimators \(\max _{n \in [0:N]} \eta _n^{T,Rec1}\) and \(\Big (\sum _{n = 1}^{N} \tau _n\big (\eta _n^{T,Rec2}\big )^2\Big )^{1/2}\) are divided by 4 (see Tables 5 and 6). Table 7 shows the behaviour of the total estimator.

Further, the high values of the effectivity index EI for the different estimators constituting the main estimator indicate that the present analysis can be sharpened to obtain a more reliable estimator, which may lead to a better effectivity index. Moreover, for the development of an efficient adaptive algorithm, one has to track the constants carefully.

Concluding remarks Despite the importance of PIDE and their variants in the modelling of several physical phenomena, the topic of a posteriori analysis for such equations remains unexplored. In this paper, we have derived an optimal order residual-based a posteriori error estimator for PIDE (1.1) in the \(L^\infty (L^2)\)-norm for the fully discrete Crank–Nicolson method. The Ritz–Volterra reconstruction operator [23] unifies the a posteriori approach from parabolic problems to PIDE. Moreover, for the optimality of the estimator, the linear approximation of the Volterra integral term is found to be crucial. Computational results are provided to illustrate that the estimator exhibits the optimal rate of convergence, which supports our theoretical findings. The computability of the estimates, together with their optimal asymptotic convergence, means that they can be utilised in space-time adaptive algorithms. Thus, we believe that the work presented here gives a new direction for the development of space-time adaptive algorithms for the Crank–Nicolson scheme in the \(L^\infty (L^2)\)-norm for PIDE. However, the development of such adaptive algorithms is beyond the scope of the current study and will be considered elsewhere.

There are many other important issues to be addressed in this direction. It is challenging to obtain a posteriori error estimates in which the constants appearing in the bounds are independent of the final time T, so that they can serve as long-time estimates. Moreover, a posteriori error analysis for hyperbolic integro-differential equations in the \(L^{\infty }(L^2)\)-norm is an interesting research problem which will be reported elsewhere.

References

Ainsworth, M., Oden, J.T.: A Posteriori Error Estimation in Finite Element Analysis. Wiley, New York (2000)

Akrivis, G., Makridakis, C., Nochetto, R.H.: A posteriori error estimates for the Crank–Nicolson method for parabolic equations. Math. Comput. 75, 511–531 (2006)

Bänsch, E., Karakatsani, F., Makridakis, C.: A posteriori error control for fully discrete Crank–Nicolson schemes. SIAM J. Numer. Anal. 50, 2845–2872 (2012)

Bänsch, E., Karakatsani, F., Makridakis, C.: The effect of mesh modification in time on the error control of fully discrete approximations for parabolic equations. Appl. Numer. Math. 67, 35–63 (2013)

Bergam, A., Bernardi, C., Mghazli, Z.: A posteriori analysis of the finite element discretization of some parabolic equations. Math. Comput. 74, 1117–1138 (2004)

Brenner, S.C., Scott, L.R.: The Mathematical Theory of Finite Element Methods. Springer, New York (2002)

Capasso, V.: Asymptotic stability for an integro-differential reaction-diffusion system. J. Math. Anal. Appl. 103, 575–588 (1984)

Dolejsi, V., Ern, A., Vohralík, M.: A framework for robust a posteriori error control in unsteady nonlinear advection-diffusion problems. SIAM J. Numer. Anal. 51, 773–793 (2013)

Chen, C., Shih, T.: Finite Element Methods for Integro-Differential Equations. World Scientific, Singapore (1998)

Eriksson, K., Johnson, C.: Adaptive finite element methods for parabolic problems I: a linear model problem. SIAM J. Numer. Anal. 28, 43–77 (1991)

Eriksson, K., Johnson, C.: Adaptive finite element methods for parabolic problems IV: nonlinear problems. SIAM J. Numer. Anal. 32, 1729–1749 (1995)

Ern, A., Vohralík, M.: A posteriori error estimation based on potential and flux re-construction for the heat equation. SIAM J. Numer. Anal. 48, 198–223 (2010)

Gurtin, M.E., Pipkin, A.C.: A general theory of heat conduction with finite wave speeds. Arch. Ration. Mech. Anal. 31, 113–126 (1968)

Habetler, G.J., Schiffman, R.L.: A finite difference method for analysing the compression of poro-viscoelasticity media. Computing 6, 342–348 (1970)

Lakkis, O., Makridakis, C.: Elliptic reconstruction and a posteriori error estimates for fully discrete linear parabolic problems. Math. Comput. 75, 1627–1658 (2006)

Lin, Y.P., Thomée, V., Wahlbin, L.B.: Ritz–Volterra projections to finite-element spaces and applications to integrodifferential and related equations. SIAM J. Numer. Anal. 28(4), 1047–1070 (1991)

Lozinski, A., Picasso, M., Prachittham, V.: An anisotropic error estimator for the Crank–Nicolson method: application to a parabolic problem. SIAM J. Sci. Comput. 31, 2757–2783 (2009)

Makridakis, C., Nochetto, R.H.: Elliptic reconstruction and a posteriori error estimates for parabolic problems. SIAM J. Numer. Anal. 41, 1585–1594 (2003)

Nochetto, R.H., Savaré, G., Verdi, C.: A posteriori error estimates for variable time-step discretizations of nonlinear evolution equations. Commun. Pure Appl. Math. 53, 525–589 (2000)

Pani, A.K., Peterson, T.E.: Finite element methods with numerical quadrature for parabolic integrodifferential equations. SIAM J. Numer. Anal. 33(3), 1084–1105 (1996)

Pao, C.V.: Solution of a nonlinear integro-differential system arising in nuclear reactor dynamics. J. Math. Anal. Appl. 48, 470–492 (1974)

Picasso, M.: Adaptive finite elements for a linear parabolic problem. Comput. Methods Appl. Mech. Eng. 167, 223–237 (1998)

Reddy, G.M.M., Sinha, R.K.: Ritz–Volterra reconstructions and a posteriori error analysis of finite element method for parabolic integro-differential equations. IMA J. Numer. Anal. 35, 341–371 (2015)

Reddy, G.M.M., Sinha, R.K.: On the Crank–Nicolson anisotropic a posteriori error analysis for parabolic integro-differential equations. Math. Comput. 85, 2365–2390 (2016)

Reddy, G.M.M., Sinha, R.K.: The backward Euler anisotropic a posteriori error analysis for parabolic integro-differential equations. Numer. Methods Partial Differ. Equ. 32, 1309–1330 (2016)

Scott, L.R., Zhang, S.: Finite element interpolation of nonsmooth functions satisfying boundary conditions. Math. Comput. 54, 483–493 (1990)

Thomée, V., Zhang, N.Y.: Error estimates for semidiscrete finite element methods for parabolic integro-differential equations. Math. Comput. 53(187), 121–139 (1989)

Verfürth, R.: A posteriori error estimates for non linear problems: \(L^r(0, t; L^p(\Omega ))\)-error estimates for finite element discretizations of parabolic equations. Math. Comput. 67, 1335–1360 (1998)

Verfürth, R.: A posteriori error estimates for non linear problems: \(L^r(0, t;W^ {1, p}(\Omega ))\)-error estimates for finite element discretizations of parabolic equations. Numer. Methods Partial Differ. Equ. 14, 487–518 (1998)

Verfürth, R.: A posteriori error estimates for finite element discretization of the heat equation. Calcolo 40, 195–212 (2003)

Yanik, E.G., Fairweather, G.: Finite element methods for parabolic and hyperbolic partial integro-differential equations. Nonlinear Anal. 12, 785–809 (1988)

Acknowledgements

The authors wish to thank both referees for their valuable comments and suggestions, which led to the improvement of this manuscript. G. Murali Mohan Reddy would like to thank FAPESP for the financial support received (Grant No. 2016/19648-9).


Cite this article

Reddy, G.M.M., Sinha, R.K. & Cuminato, J.A. A Posteriori Error Analysis of the Crank–Nicolson Finite Element Method for Parabolic Integro-Differential Equations. J Sci Comput 79, 414–441 (2019). https://doi.org/10.1007/s10915-018-0860-1


DOI: https://doi.org/10.1007/s10915-018-0860-1