Abstract

In this paper, the a posteriori error estimates of the exponential midpoint method for time discretization are established for linear and semilinear parabolic equations. Using the exponential midpoint approximation defined by a continuous and piecewise linear interpolation of nodal values yields suboptimal order estimates. Based on the property of the entire function, we introduce a continuous and piecewise quadratic time reconstruction of the exponential midpoint method to derive optimal order estimates; the error bounds solely depend on the discretization parameters, the data of the problem, and the approximation of the entire function. Several numerical examples are implemented to illustrate the theoretical results.

1 Introduction

This paper is devoted to deriving optimal order a posteriori error estimates for the exponential midpoint method for linear and semilinear parabolic problems

\[ u^{\prime }(t)+Au(t)=B(t,u(t)),\quad 0<t\le T,\qquad u(0)=u^0, \tag{1} \]

where the operator \(A: D(A)\rightarrow H\) is positive definite, self-adjoint and linear on a Hilbert space \(( H,(\cdot ,\cdot ))\) with domain D(A) dense in H, \(B(t, \cdot ): D(A)\rightarrow H, \ t\in [0,T]\), and the initial value \(u^0\in H\). It is well known that reaction-diffusion equations and the incompressible Navier-Stokes equation fit into this framework [1, 2]. Problems of the form (1) arise frequently in mechanics, engineering, and other applied disciplines.

Exponential integrators have been developed and analyzed by many authors. Lawson [3] formulated A-stable explicit Runge-Kutta methods by using exponential functions, Friedli developed this idea further in [4], and exponential multistep methods were considered in [5, 6]. Generally, exponential integrators provide higher accuracy and better stability than non-exponential ones for stiff systems and highly oscillatory problems. Their implementation requires computing products of matrix exponentials and vectors; with the development of new methods for evaluating these products (see, e.g., [7,8,9,10,11]), exponential integrators have received more attention [10, 12,13,14,15,16,17,18,19,20,21,22,23]. It is worth mentioning that Hochbruck et al. [16] systematically presented exponential one-step and multistep methods based on the stiff order conditions. In this paper, we focus on exponential one-step methods. Exponential Runge-Kutta methods of collocation type were proposed in [24]. However, deriving optimal order a posteriori estimates for exponential Runge-Kutta methods of collocation type is still an open problem. Early significant contributions to a posteriori error analysis for time or space discrete approximations of evolution problems can be found in [25,26,27,28]. Akrivis et al. derived a posteriori error estimates for evolution problems discretized by the Crank-Nicolson method [29] and the continuous Galerkin method [30]. Makridakis and Nochetto [31] considered a posteriori error estimates for the discontinuous Galerkin method. A posteriori error analyses for Crank-Nicolson-Galerkin type methods and fully discrete finite element methods for generalized diffusion equations with delay have been carried out by Wang et al. [32,33,34]. To the best of our knowledge, no article has been devoted to the a posteriori error analysis of standard exponential integrators for parabolic differential equations. Owing to this, our study starts by deriving a posteriori error estimates for the exponential midpoint method. Based on the property of the exponential function, we introduce a continuous piecewise linear interpolant and a quadratic time reconstruction of the exponential midpoint method to obtain suboptimal and optimal estimates, respectively.

This paper is mainly concerned with a posteriori error estimates for the exponential midpoint method for linear parabolic equations, with \(B(t,u(t))=f(t)\) as a given forcing function. A brief discussion on a posteriori error estimates for semilinear problems is included. The paper is organized as follows. Section 2 introduces the necessary notation, and briefly reviews exponential Runge-Kutta methods of collocation type and the exponential midpoint method. In Section 3, by introducing a continuous approximation U in time, residual-based a posteriori error bounds, which are of suboptimal order, are derived for linear parabolic problems by using the energy technique. To derive optimal order a posteriori error estimates, a quadratic exponential midpoint reconstruction \(\hat{U}\) is introduced in Section 4. Then a posteriori error estimates in the \(L^2(0,t;V)\)- and \(L^{\infty }(0,t;H)\)-norms are derived for the exponential midpoint method for linear problems, and the difference \(\hat{U}-U\) is computed in Section 5. Section 6 is devoted to the extension of the a posteriori error analysis for the exponential midpoint method from linear problems to semilinear problems. The effectiveness of the a posteriori error estimators is verified by several numerical examples in Section 7. The last section contains concluding remarks.

2 The exponential midpoint method for parabolic problems

Now we consider the exponential midpoint method for the parabolic problem (1). To do this, we introduce some notation and make some appropriate assumptions.

2.1 Assumptions and notations

Let \(V:=D(A^{\frac{1}{2}})\) and denote the norms in H and V by \(|\cdot |\) and \(\Vert \cdot \Vert \), respectively, where \(\Vert v\Vert =|A^{\frac{1}{2}}v|=(Av,v)^{\frac{1}{2}}\). We identify H with its dual, let \(V^{\star }\) be the dual of V (\(V\subset H \subset V^{\star }\)), and denote by \(\Vert \cdot \Vert _{\star }\) the dual norm on \(V^{\star }\), \(\Vert v\Vert _{\star }=|A^{-\frac{1}{2}}v|=(v,A^{-1}v)^{\frac{1}{2}}.\) We still denote by \((\cdot ,\cdot )\) the duality pairing between \(V^{\star }\) and V. For an interval J and a Banach space X (\(X=H,\ V \ \text{or} \ V^{\star }\)), the Lebesgue space \(L^p(J;X)\), \(1\le p < \infty \), consists of the functions \(u: J\rightarrow X\) for which the norm

\[ \Vert u\Vert _{L^p(J;X)}:=\Big (\int _J \Vert u(t)\Vert _{X}^p\,dt\Big )^{\frac{1}{p}} \]

is finite. The space \(L^{\infty }(J;X)\) consists of the functions u for which the norm

\[ \Vert u\Vert _{L^{\infty }(J;X)}:=\mathop {\mathrm {ess\,sup}}_{t\in J}\Vert u(t)\Vert _{X} \]

is finite.

For the sake of convenience, we write \(L^p(0,t;X)\) for \(L^p((0,t);X)\). Suppose that the Poincaré inequality holds:

here \(\lambda _1>0\) is the first eigenvalue of the operator A.

2.2 Exponential Runge-Kutta methods of collocation type

Let \( 0= t^0< t^1< \ldots < t^{N} = T\) be a partition of [0, T], \(I_n:=(t^{n-1},t^n]\), and \(k_n:=t^n-t^{n-1}\). It is well known that exponential integrators are based on the Volterra integral equation

\[ u(t^n)=e^{-k_nA}u(t^{n-1})+\int _{t^{n-1}}^{t^n} e^{-(t^n-\tau )A}B(\tau ,u(\tau ))\,d\tau , \tag{3} \]

also called variation-of-constants formula for solving semilinear parabolic differential equations (here, the nonlinear term is \(B(\tau ,u(\tau ))\)).

An s-stage Exponential Runge–Kutta (ERK) method for (1) is defined as

where the coefficients \(a_{ij}(-k_nA)\), \(b_i(-k_nA)\) are exponential functions of \(k_nA\) for \({i,j=1,\ldots s}\), \(t^{n-1,i}=t^{n-1}+c_ik_n\), and \(U^{n-1,i}\approx u(t^{n-1}+c_ik_n)\) for \(i=1,\ldots ,s\). The method (4) can be represented by the Butcher tableau

For \(0< c_1< \ldots < c_s \le 1\), ERK methods of collocation type can be viewed as using the interpolation polynomial \(P_{s-1}\) of degree \(s-1\) for the collocation nodes \(\{c_i\}_{i=1}^s\) to approximate the nonlinear term \(B(\tau ,u(\tau ))\) in the Volterra integral (3). Hence, the coefficients \(a_{ij}(-k_nA)\) and \(b_i(-k_nA)\) are defined as follows:

for \(i,j=1,\ldots ,s\), where \(L_1(\tau ), \ldots , L_s(\tau ) \) are the Lagrange interpolation polynomials

Let \(q_1\) and \(q_2\) be the largest integers such that

with the entire functions

\[ \varphi _j(-k_nA)=\frac{1}{k_n^{j}}\int _0^{k_n} e^{-(k_n-\tau )A}\frac{\tau ^{j-1}}{(j-1)!}\,d\tau ,\quad j\ge 1. \tag{9} \]

It is clear that if we consider the limit \(A\rightarrow \textbf{0}\), the formulas (6) and (8) reduce to

and

This implies that the ERK method of collocation type reduces to a RK method of collocation type when \(A\rightarrow \textbf{0}\); therefore, the limit method is called the underlying RK method. Hochbruck et al. [24] illustrated that an s-stage ERK method of collocation type converges with order \(\min (s+1,p)\) for linear parabolic problems, where p denotes the classical (non-stiff) order of the underlying RK method. For semilinear parabolic problems, higher order ERK methods like the exponential Gauss methods in some special cases (sufficient temporal and spatial smoothness) were formulated.

2.3 The exponential midpoint method for parabolic problems

In this paper, we consider the exponential midpoint method, the simplest ERK method, for solving (1) (see [16]):

\[ \begin{aligned} U^{n-\frac{1}{2}}&=e^{-\frac{1}{2}k_nA}U^{n-1}+\tfrac{1}{2}k_n\varphi _1(-\tfrac{1}{2}k_nA)B(t^{n-\frac{1}{2}},U^{n-\frac{1}{2}}),\\ U^{n}&=e^{-k_nA}U^{n-1}+k_n\varphi _1(-k_nA)B(t^{n-\frac{1}{2}},U^{n-\frac{1}{2}}), \end{aligned} \tag{12} \]

with \(t^{n-\frac{1}{2}}:=t^{n-1}+\frac{1}{2}k_n\),

which can be denoted by the Butcher tableau

where \(\varphi _1(-k_nA)\) and \(\varphi _1(-\frac{1}{2}k_nA)\) are defined by (9).
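
To make the role of the entire functions concrete, the following minimal sketch evaluates the scalar functions \(\varphi _k(z)\). It assumes the standard recurrence \(\varphi _{k+1}(z)=(\varphi _k(z)-1/k!)/z\) with \(\varphi _0(z)=e^z\); the function name `phi`, the series length `terms`, and the switch at \(|z|<1\) are illustrative choices, not part of the paper. For an operator argument such as \(-k_nA\), one would apply the scalar function to each eigenvalue of a diagonalization or use a Krylov method instead.

```python
import math

def phi(k, z, terms=30):
    """Scalar phi_k(z), with phi_0(z) = exp(z) and phi_{k+1}(z) = (phi_k(z) - 1/k!) / z."""
    if abs(z) < 1.0:
        # Taylor series phi_k(z) = sum_{j>=0} z^j / (j+k)!; avoids cancellation near z = 0
        return sum(z**j / math.factorial(j + k) for j in range(terms))
    value = math.exp(z)
    for j in range(k):
        # one step of the recurrence: phi_{j+1}(z) = (phi_j(z) - 1/j!) / z
        value = (value - 1.0 / math.factorial(j)) / z
    return value

# phi_1 and phi_2 at a few sample arguments of the form z = -k * lambda
for z in (0.0, -0.1, -5.0):
    print(z, phi(1, z), phi(2, z))
```

The identity \(e^{z}=1+z\varphi _1(z)\), which follows from the recurrence with \(k=0\), is what allows the exponential to be eliminated from the scheme in the next section.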

3 A posteriori error estimates for linear problems

We consider linear parabolic differential equations (LPDEs):

\[ u^{\prime }(t)+Au(t)=f(t),\quad 0<t\le T,\qquad u(0)=u^0, \tag{14} \]

where the forcing term f is a sufficiently smooth function. It is worth noting that if we apply an ERK method of collocation type to LPDEs, the method reduces to the exponential quadrature rule

where the weights are

3.1 Exponential midpoint rule

For given \(\{v^n\}_{n=0}^N\), we introduce the notation

For (14), the method (12) reduces to the exponential midpoint rule:

\[ U^{n}=e^{-k_nA}U^{n-1}+k_n\varphi _1(-k_nA)f(t^{n-\frac{1}{2}}). \tag{17} \]

Combining the recurrence relation

\[ \varphi _{k+1}(z)=\frac{\varphi _k(z)-\varphi _k(0)}{z},\qquad \varphi _0(z)=e^{z}, \tag{18} \]

and \(\varphi _k(0)=1/k!\), we have

Hence, \(U^n\) can be rewritten as

which implies that

with \(U^0:=u^0\). In [24], Hochbruck et al. proved that if \(f^{(s+1)}\in L^{1}(0,T;X)\), and the ERK method satisfies the conditions

then the error bound \(\Vert u(t^n)-U^{n}\Vert \le Ch^{s+1}\) holds on \(0\le t^n \le T\). As a consequence, it is easy to verify that the exponential midpoint rule is of second order.
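
The second-order behaviour can be observed on a scalar model problem. The sketch below is only an illustration under stated assumptions: it replaces the operator A by a positive number `lam`, uses the update \(U^n=e^{-k\lambda }U^{n-1}+k\varphi _1(-k\lambda )f(t^{n-\frac{1}{2}})\) with uniform step k, and picks a forcing term such that \(u(t)=\cos t\); none of these choices come from the paper.

```python
import math

def phi1(z):
    # phi_1(z) = (exp(z) - 1) / z, with the limit value 1 at z = 0
    return math.expm1(z) / z if z != 0.0 else 1.0

def exp_midpoint_linear(lam, f, u0, T, N):
    """Exponential midpoint rule for the scalar test problem u' + lam*u = f(t)."""
    k = T / N
    decay, weight = math.exp(-k * lam), k * phi1(-k * lam)
    U = u0
    for n in range(1, N + 1):
        t_mid = (n - 0.5) * k              # midpoint t^{n-1/2} of the n-th step
        U = decay * U + weight * f(t_mid)
    return U

lam = 5.0                                   # hypothetical stand-in for the operator A

def f(t):
    # forcing chosen so that u(t) = cos(t) solves u' + lam*u = f
    return -math.sin(t) + lam * math.cos(t)

errors = [abs(exp_midpoint_linear(lam, f, 1.0, 1.0, N) - math.cos(1.0)) for N in (20, 40, 80)]
orders = [math.log2(errors[i] / errors[i + 1]) for i in range(len(errors) - 1)]
print(errors, orders)
```

Halving the step size should reduce the nodal error by roughly a factor of four in this non-stiff regime; for stiff operators the observed order may differ, as the numerical section discusses.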

The exponential midpoint approximation \(U: \ [0,T] \rightarrow D(A)\) to u is defined by linear interpolation between the nodal values \(U^{n-1}\) and \(U^n\),

Let \(R(t)\in H\) be the residual of U,

which can be considered as the amount by which the approximate solution U misses satisfying (14). A direct calculation yields

It follows from (20) that

Therefore, the residual R(t) of U is

Using the recurrence relation (18) and

we get

For \( t \in I_n\), the residual R(t) can also be rewritten in the following form

Let us define the error \(e:=u-U\). According to

the error e(t) satisfies the error equation

Taking in (27) the inner product with e(t), we have

and thus

3.2 Maximum norm estimate

Combining the Poincaré inequality with the Cauchy-Schwarz inequality, from (29) we have

It is easy to obtain that

Integrating inequality (30) from 0 to t, we can obtain

Hence, we have

This indicates that the error \(u(t)-U(t)\) is bounded by the initial error |e(0)| and the residual \(R(s), \ s\in [0,T]\). Under the assumption \(U^0=u^0\), we deduce

3.3 \({L^2(0,t;V)}\)-estimate

We have presented an error estimate in the maximum norm in the previous subsection; in the following theorem we give an error estimate in the \(L^2(0,t;V)\)-norm.

Theorem 1

Let u(t) be the exact solution of (14), and U(t) be the exponential midpoint approximation to u(t) defined by (22). Denote by R(t) the residual of U(t); then, the error \(e(t)=u(t)-U(t)\) satisfies

Proof

In view of (29), using the Cauchy-Schwarz inequality and Young's inequality, we get

Under the assumption \(U^0=u^0\), we integrate the above inequality from 0 to t, and obtain

The proof is complete. \(\square \)

These results reveal that the error \(e(t)=u(t)-U(t)\) can be bounded by the residual |R(t)|. It is obvious that the a posteriori quantity R(t) has order one. In fact, when we apply the exponential midpoint rule to a scalar ordinary differential equation (o.d.e.) \(u^{\prime }(t)=f(t)\), the exponential midpoint rule reduces to the mid-rectangle formula, i.e.,

In this case, obviously, R(t) has order one. However, the exponential midpoint rule has second-order accuracy, and the residual R(t) is of suboptimal order. Applying the energy techniques to the error equation leads to suboptimal bounds.
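
The first-order behaviour of the residual in the limit case \(u^{\prime }(t)=f(t)\) can be checked numerically. In the sketch below, the piecewise linear interpolant U has slope \(f(t^{n-\frac{1}{2}})\) on \(I_n\), and the residual is taken as \(R(t)=U^{\prime }(t)-f(t)\); this sign convention, the choice \(f(t)=e^t\), and the sampling of each interval are assumptions made for illustration.

```python
import math

def max_residual_linear_interpolant(f, T, N, samples=50):
    """max |R(t)| for u' = f(t), where U is piecewise linear with slope f(t^{n-1/2}) on I_n."""
    k = T / N
    worst = 0.0
    for n in range(1, N + 1):
        t_left = (n - 1) * k
        slope = f(t_left + 0.5 * k)                  # U'(t) on I_n (mid-rectangle formula)
        for j in range(samples + 1):
            t = t_left + j * k / samples
            worst = max(worst, abs(slope - f(t)))    # |R(t)| = |U'(t) - f(t)|
    return worst

r_coarse = max_residual_linear_interpolant(math.exp, 1.0, 10)
r_fine = max_residual_linear_interpolant(math.exp, 1.0, 20)
residual_order = math.log2(r_coarse / r_fine)
print(r_coarse, r_fine, residual_order)
```

The residual only halves when the step is halved, although the nodal values of the mid-rectangle formula are second-order accurate, which is the mismatch the reconstruction in the next section is designed to remove.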

4 Exponential midpoint reconstruction

Based on the property of the exponential function, a continuous piecewise quadratic polynomial in time \(\hat{U}:[0,T]\rightarrow H\) with second-order residual is defined as follows. To begin with, we denote by \(\psi (t)\) the linear interpolant of \(\varphi _1(-k_nA)f(t)\) at the nodes \(t^{n-1}\) and \(t^{n-\frac{1}{2}}\),

and introduce a piecewise quadratic polynomial \(\Psi (t)=\int _{t^{n-1}}^{t} \psi (s)ds,\ t \in I_n,\) i.e.,

which satisfies

For any \(t \in I_n\), the exponential midpoint reconstruction \(\hat{U}\) of U is defined by

The above formula can be viewed as integrating \(-\varphi _1(-k_nA)AU(t)+\psi (t) +(I-\varphi _1(-k_nA))U^{\prime }(t)\) from \(t^{n-1}\) to t. Obviously, the derivative of \(\hat{U}(t)\) in time is

In view of (38), we take the following approximation

to evaluate the integral in (38), where \(c_1=-k_n^{-1}(\varphi _1(-k_nA)A)^{-1}[I-\varphi _1(-k_nA)-k_n\varphi _1(-k_nA)A]\), and \(c_2=k_n^{-1}(\varphi _1(-k_nA)A)^{-1}[I-\varphi _1(-k_nA)]\); then \(\hat{U}\) takes the form

In fact, the exponential midpoint rule can be viewed as using \(k_n\varphi _1(-k_nA)AU^{n-1}\) to evaluate the integral in (38), which is a first-order approximation. This allows us to evaluate the integral by a first-order approximation, and it is easy to verify that the approximation (40) of the integral in (38) has order one as well. Moreover, we notice that \(\hat{U}(t^{n-1})=U^{n-1}\) and

Therefore, \(\hat{U}\) and U coincide at the nodes \(t^0, \ldots , t^{N}\); in particular, \(\hat{U}:[0,T]\rightarrow H\) is continuous.

In view of (35) and (38), we have

Denote by \(\hat{R}(t)\) the residual of \(\hat{U}\), which satisfies

Inserting the formula (43) into (44), we get

For \(t \in I_n\), the residual \(\hat{R}(t)\) can also be rewritten as

In what follows, it will be proved that the a posteriori quantity \(\hat{R}(t)\) has order two; compare with (26).
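
A quick sanity check of the second-order claim is possible in the limit \(A\rightarrow \textbf{0}\), where \(\varphi _1(0)=I\) and the time derivative of the reconstruction reduces to \(\psi (t)\), the linear interpolant of f at \(t^{n-1}\) and \(t^{n-\frac{1}{2}}\). The sketch below measures \(\max |\psi (t)-f(t)|\) for a scalar f; the restriction to this limit case, the sign convention of the residual, and the choice \(f(t)=e^t\) are assumptions made for illustration only.

```python
import math

def max_residual_reconstruction(f, T, N, samples=50):
    """max |psi(t) - f(t)|, psi = linear interpolant of f at t^{n-1} and t^{n-1/2} on I_n."""
    k = T / N
    worst = 0.0
    for n in range(1, N + 1):
        t_left = (n - 1) * k
        f_left, f_mid = f(t_left), f(t_left + 0.5 * k)
        for j in range(samples + 1):
            t = t_left + j * k / samples
            # straight line through (t^{n-1}, f_left) and (t^{n-1/2}, f_mid)
            psi = f_left + 2.0 * (t - t_left) / k * (f_mid - f_left)
            worst = max(worst, abs(psi - f(t)))
    return worst

rhat_coarse = max_residual_reconstruction(math.exp, 1.0, 10)
rhat_fine = max_residual_reconstruction(math.exp, 1.0, 20)
reconstruction_order = math.log2(rhat_coarse / rhat_fine)
print(rhat_coarse, rhat_fine, reconstruction_order)
```

Since \(\int _{I_n}\psi (s)\,ds=k_nf(t^{n-\frac{1}{2}})\), the reconstruction still reproduces the nodal values of the mid-rectangle formula in this limit, while its residual is reduced by about a factor of four per halving of the step.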

5 Optimal order a posteriori error estimates for linear problems

In this section, using the energy technique, we derive optimal order a posteriori error bounds for the exponential midpoint reconstruction (38). Consider the errors e and \(\hat{e}\), \(e:=u-U\) and \(\hat{e}:=u-\hat{U}\), and recall that u and \(\hat{U}\) satisfy

Then, we obtain

where \(R_f(t)\) and R(t) are defined by

and

Taking in (46) the inner product with \(\hat{e}(t)\), we get

combining

and

we deduce

5.1 \({L^2(0,T;V)}\)-estimate

We show an \(L^2(0,T;V)\)-norm a posteriori error estimate for the exponential midpoint rule in the following theorem.

Theorem 2

Let u(t) be the exact solution of (14), U(t) be the exponential midpoint approximation to u(t) defined by (22), \(\hat{U}\) be the corresponding reconstruction of U defined by (38), and denote the errors by \(e=u-U\), \(\hat{e}=u-\hat{U}\), respectively. Then, the following a posteriori error estimate holds for \(t\in I_n\):

where \(R_f(s)\) and R(s) are defined by (47) and (48), respectively.

Proof

Using the following inequalities

and

we have

Integrating the above inequality from 0 to \(t\in I_{n}\), we can obtain

Next the lower bound will be analyzed. Using the triangle inequality

we get

Similarly, we integrate the inequality (56) from 0 to t and obtain the desired lower bound

The proof is complete.\(\square \)

5.2 \({L^{\infty }(0,t;H)}\)-estimate

From (51), the \(L^{\infty }(0,t;H)\)-estimate reads: for all \(t\in I_n\),

Since \(\hat{U}(t)\) and U(t) coincide at the nodes \(t^0, \ldots , t^{N}\), the following estimate is valid

5.3 Estimate of \({\hat{U}-U}\)

In this subsection, we will estimate and derive a representation of \(\hat{U}-U\) for the exponential midpoint rule. Subtracting (22) from (41), we get

for \(t\in I_n\), which means that \(\max _{t\in I_n} |\hat{U}(t)-U(t)|=\mathcal {O}(k_n^2)\). With \(\varepsilon _U:=\int _0^T \Vert \hat{U}(t)-U(t)\Vert ^2dt\), the estimator is

We make some remarks for this section:

1. We discuss the order of the a posteriori quantities \(\varphi _1(-k_nA)R_f(t)\) and \((\varphi _1(-k_nA)-I)R(t)\). Since the entire functions \(\varphi _k(-k_nA)\) are bounded (see [16]), the a posteriori quantities \(\varphi _1(-k_nA)R_f(t)\) and \(\hat{U}(t)-U(t)\) are of second order once \(f\in C^2(0,T;H)\). As shown in Section 3, the a posteriori quantity R(t) is of first order and \(\varphi _1(-k_nA)-I=\mathcal {O}(k_n)\), whence the a posteriori quantity \((\varphi _1(-k_nA)-I)R(t)\) has order two. However, the order of the a posteriori quantities \(\varphi _1(-k_nA)R_f(t)\) and \(\hat{U}(t)-U(t)\) may be reduced by the practical computation of the entire function \(\varphi _1(-k_nA)\). We also notice that \(I-\varphi _1(-k_nA)=\mathcal {O}(k_n)\) is a truncation of the series in (25), and this truncation usually affects the order of \((\varphi _1(-k_nA)-I)R(t)\). Since the order of the exponential midpoint method itself may be reduced in the \(L^2(0,t;V)\)-norm, it is acceptable that \(\varphi _1(-k_nA)\) affects the orders of the a posteriori quantities \(\hat{U}(t)-U(t)\), \(\varphi _1(-k_nA)R_f(t)\) and \((\varphi _1(-k_nA)-I)R(t)\) via the \(L^2(0,t;V)\)-norm and \(L^2(0,t;V^{\star })\)-norm, respectively. The theoretical results will be illustrated by several numerical examples.

2. We have presented a posteriori error estimates for the exponential midpoint rule (17) by using the reconstruction \(\hat{U}\) in the \(L^2(0,t;V)\)- and \(L^{\infty }(0,t;H)\)-norms. From (51) and (58), the upper error bounds depend on \(\hat{U}(t)-U(t)\), \(\varphi _1(-k_nA)R_f(t)\) and \((\varphi _1(-k_nA)-I)R(t)\), measured in the \(L^2(0,t;V)\)-norm and the \(L^2(0,t;V^{\star })\)-norm, respectively; these quantities involve only the discretization parameters, the data of the problem, and the approximation of \(\varphi _1(-k_nA)\). The a posteriori quantities \(\varphi _1(-k_nA)R_f(t)\) and \((\varphi _1(-k_nA)-I)R(t)\) are of optimal second order in theory, and the formula (60) reveals that the a posteriori quantity \(U(t)-\hat{U}(t)\) has order two, whence the a posteriori estimate is of optimal (second) order.

6 Error estimates for semilinear parabolic differential equation

In this section, our objective is the derivation of a posteriori error estimates for the exponential midpoint method for semilinear parabolic differential equations. Suppose that \(B(t,\cdot )\) is an operator from V to \(V^{\star }\), and a local Lipschitz condition for \(B(t,\cdot )\) in a strip along the exact solution u holds:

where \(T_u:=\{v\in V: \min _t\Vert u(t)-v\Vert \le 1\}\) and L is a constant. For (1), we assume that the following local one-sided Lipschitz condition holds:

around the solution u, uniformly in t, with a constant \(\lambda \) less than one and a constant \(\mu \).

6.1 Exponential midpoint method

It has been shown that ERK methods converge at least with their stage order, and that convergence of higher order (up to the classical order) occurs if the problem has sufficient temporal and spatial smoothness. Since the exponential midpoint method (12) satisfies the conditions (21), it is of second order.

We use the nodal values \(U^{n-1}\) and \(U^{n}\) to express \(U^{n-\frac{1}{2}}\):

Inserting (64) into the update of the exponential midpoint method, we get

We define the exponential midpoint approximation \(U: [0,T]\rightarrow D(A)\) to u(t) by a continuous piecewise linear interpolant of nodal values \(U^{n-1}\) and \(U^n\),

The residual \(R(t) \in H\) of U is

It is easy to verify that the a posteriori quantity R(t) is of first order, i.e., of suboptimal order. Hence, similar to the linear case, the reconstruction \(\hat{U}\) of U is introduced to recover the optimal order.

6.2 Reconstruction

Before we derive optimal order a posteriori error estimates, we define a continuous piecewise quadratic polynomial in time \(\bar{U}: [0,T]\rightarrow H\) by interpolation at the nodal values \(U^{n-1}\), \(U^{n-\frac{1}{2}}\) and \(U^{n}\). Let \(b:I_n\rightarrow H\) be the linear interpolation of \(\varphi _1(-k_nA)B(\cdot ,\bar{U}(\cdot ))\) at the nodes \(t^{n-1}\) and \(t^{n-\frac{1}{2}}\),

Recalling (38), the exponential midpoint reconstruction \(\hat{U}\) of U is defined by

i.e.,

for \(t\in I_n\). It is obvious that \(\hat{U}(t^{n-1})=U^{n-1}\) and

It follows from (66) and (69) that

for \(t\in I_n\). In view of (68), we have

where \(P_1B(t,\bar{U}(t))=B(t^{n-\frac{1}{2}},U^{n-\frac{1}{2}})+\frac{2}{k_n}(t-t^{n-\frac{1}{2}}) [B(t^{n-\frac{1}{2}},U^{n-\frac{1}{2}})-B(t^{n-1}, U^{n-1})]\).

Now, the residual \(\hat{R}(t)\) of \(\hat{U}\) is

It immediately follows from (70) that

We rewrite the residual \(\hat{R}(t)\) as

for \(t\in I_n\), and the a posteriori quantity \(\hat{R}(t)\) has order two.

6.3 Error estimates

In this subsection, a posteriori error bounds for the exponential midpoint method are derived. Let \(e:=u-U\) and \(\hat{e}:=u-\hat{U}\). We make the assumption that \(\hat{U}(t)\), \(U(t) \in T_{u}\) for all \(t\in [0,T]\). Combining (1) and (70), we have

Therefore, \(\hat{e}(t)\) satisfies

with

Taking in (72) the inner product with \(\hat{e}(t)\), and using that

we have

with \(R_b(t)=B(t,U(t))-P_1B(t,\bar{U}(t))\). Under the assumptions (62) and (63), we respectively obtain

and

To sum up, we obtain

for any positive \(\theta <(1-\lambda )/4\).

Using the Cauchy-Schwarz inequality, we obtain from (73) that

Under the condition that \(\hat{e}(0)=0\), we integrate the above inequality from 0 to \(t \in I_n\):

For the case of \(\mu =0\), we have the a posteriori error estimate

These imply that the error e(t) can be bounded by the a posteriori quantities \(\Vert \hat{U}(t)-U(t)\Vert ^2\), \(\Vert \varphi _1(-k_nA)R_b(t)\Vert _{\star }^2\) and \(\Vert (\varphi _1(-k_nA)-I)R(t)\Vert _{\star }^2\). Furthermore, assuming that \((1-\lambda -4\theta )>0\), we have

Integrating inequality (76) from 0 to t, we can obtain the desired lower bound

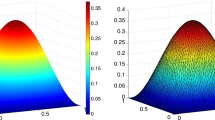

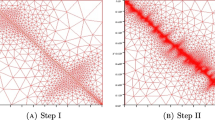

7 Numerical experiments

We have established optimal order a posteriori error estimates for the exponential midpoint method for linear and semilinear parabolic equations. The convergence rates of the a posteriori quantities and the effectivity of the error estimators will be verified by numerical examples. Throughout the numerical experiments, the matrix-valued function \(\varphi _1(-k_nA)\) is evaluated by the Krylov subspace method, which converges fast. Details about the Krylov subspace method can be found in [7, 9].

Example 1

In this example, we consider the linear parabolic equation [24]

with the exact solution

Let \(\Delta x=1/M\) and \(x_i =i\Delta x\). Using the standard central finite difference to approximate the space derivative \(u_{xx}\) leads to

where \(u_i(t) \approx u(t,x_i)\), and \(f_i\) stands for f at \((t,x_i)\).
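
As a hedged illustration of this semi-discretization, the sketch below applies the discrete operator \(A=\Delta x^{-2}\,\textrm{tridiag}(-1,2,-1)\) to a grid function. It assumes homogeneous Dirichlet boundary values, so only the interior unknowns \(u_1,\ldots ,u_{M-1}\) are stored; the helper name `apply_A` is hypothetical. The check uses the known fact that \(\sin (\pi x_i)\) is an eigenvector of this matrix with eigenvalue \(4\Delta x^{-2}\sin ^2(\pi \Delta x/2)\), which plays the role of \(\lambda _1\) in the Poincaré inequality.

```python
import math

def apply_A(u, dx):
    """Apply A = tridiag(-1, 2, -1) / dx^2 to interior values u_1..u_{M-1} (zero boundary values)."""
    n = len(u)
    out = []
    for i in range(n):
        left = u[i - 1] if i > 0 else 0.0        # u_0 = 0 at the left boundary
        right = u[i + 1] if i < n - 1 else 0.0   # u_M = 0 at the right boundary
        out.append((-left + 2.0 * u[i] - right) / dx**2)
    return out

M = 100
dx = 1.0 / M
x = [i * dx for i in range(1, M)]
mode = [math.sin(math.pi * xi) for xi in x]
lambda_1 = 4.0 / dx**2 * math.sin(0.5 * math.pi * dx) ** 2   # smallest eigenvalue of the discrete operator
A_mode = apply_A(mode, dx)
print(lambda_1, math.pi**2)
```

With \(M=100\) the smallest discrete eigenvalue is already close to \(\pi ^2\), the first eigenvalue of \(-\partial _{xx}\) on (0, 1) with Dirichlet conditions, while the largest one grows like \(4/\Delta x^2\), which is the source of stiffness in time.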

The discrete maximum norm and the \(L^2(0,T;V)\)-norm in time of the error e(t) are defined by:

and the error \(E_{T}\) at time T is \(E_T=|e(T)|=|e(t^N)|.\) We introduce the a posteriori quantities \(\mathcal {E}_f\) and \(\zeta _{U}\) defined as

and

Taking \(\mathcal {E}_U\) as the square root of \(\varepsilon _U\) defined in (61), we define the effectivity indices \(ei_L\) and \(ei_U\) as

with \(lower \ estimator:= 2\mathcal {E}_U/5\) and \(upper \ estimator:= \mathcal {E}_U+6\mathcal {E}_f+6\zeta _{U}\), respectively. In what follows, all the integrals from \(t^{n-1}\) to \(t^n\) are approximated by the Gauss-Legendre quadrature formula with three nodes, which integrates exactly polynomials of degree at most 5.
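
The quadrature used for the time integrals can be sketched as follows. The nodes \(0,\pm \sqrt{3/5}\) and weights \(8/9, 5/9\) are the standard three-point Gauss-Legendre values on \([-1,1]\), mapped affinely to \([a,b]\); the function name `gauss_legendre_3` is an illustrative choice.

```python
import math

def gauss_legendre_3(g, a, b):
    """Three-node Gauss-Legendre approximation of the integral of g over [a, b]."""
    nodes = (-math.sqrt(3.0 / 5.0), 0.0, math.sqrt(3.0 / 5.0))
    weights = (5.0 / 9.0, 8.0 / 9.0, 5.0 / 9.0)
    half, mid = 0.5 * (b - a), 0.5 * (a + b)     # affine map from [-1, 1] to [a, b]
    return half * sum(w * g(mid + half * x) for w, x in zip(weights, nodes))

# exact for polynomials up to degree 5, but no longer exact for degree 6
print(gauss_legendre_3(lambda t: t**5, 0.0, 2.0), 2.0**6 / 6.0)
print(gauss_legendre_3(lambda t: t**6, 0.0, 2.0), 2.0**7 / 7.0)
```

In the experiments one would call such a routine on each interval \([t^{n-1},t^n]\) with the squared norm of the corresponding a posteriori quantity as integrand and sum the contributions over n.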

Taking \(M=100\), we run the exponential midpoint rule with the uniform time stepsize \(k=1/N, \ N\in \mathbb {N}^{+}\). Table 1 indicates that the exact errors \(E_{T}\) and \(E_{\infty }\) have order two; however, the order of the exact error \(E_1\) is around 1.75. Table 2 shows that \(\varphi _1(-k_nA)\) has an impact on the orders of the a posteriori quantities \(\mathcal {E}_U\), \(\mathcal {E}_f\) and \(\zeta _U\): their orders are optimal in theory, but an order reduction is observed in practice. We also present the effectivity indices of the exponential midpoint rule in Table 3; the effectivity indices of the lower and upper estimators seem to be asymptotically constant (around 0.2 and 2.9, respectively).

Example 2

In this example, we consider another linear parabolic problem of the form (78) with \(f=2\exp (-t)-x(1-x)\exp (-t)\) (see, e.g., [35, 36]); the exact solution of this problem is

We take uniform time steps \(k=1/N\) with \(N=10, 20, 40, 80, 160, 320\) and a fixed spatial mesh size \(\Delta x=1/M\), \(M=100\); the exact errors \(E_T\), \(E_{\infty }\), \(E_1\) and their orders are reported in Table 4. From Table 5, we observe that the orders of \(\mathcal {E}_U\) and \(\zeta _U\) are close to 1.8 and 1.75, respectively, and the order of \(\mathcal {E}_f\) is around 1.9. The effectivity indices \(ei_L\) and \(ei_U\) of the exponential midpoint rule are shown in Table 6. It seems that the effectivity indices of the lower and upper estimators are asymptotically constant.

Example 3

We consider the semilinear parabolic problem (see, e.g., [14, 24])

with homogeneous Dirichlet boundary conditions.

The function \(\phi (x,t)\) is chosen in such a way that the exact solution is \(u(x,t)=x(1-x)\exp (t)\). For the semilinear parabolic problem, it should be pointed out that the exponential midpoint method (12) is implicit and the iteration is required for its implementation. In this example, we use the fixed-point iteration for the implicit equations, and the iteration will be stopped once the norm of the difference of two successive approximations is smaller than \(10^{-10}\). Using the finite difference to discretize the space derivative, we obtain
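
The fixed-point treatment of the implicit stage can be sketched on a scalar model problem. The code below is a minimal illustration under several assumptions: the operator A is replaced by a positive number `lam`, the stage equation is taken as \(U^{n-\frac{1}{2}}=e^{-\frac{k}{2}\lambda }U^{n-1}+\frac{k}{2}\varphi _1(-\frac{k}{2}\lambda )B(t^{n-\frac{1}{2}},U^{n-\frac{1}{2}})\), and the nonlinearity \(1/(1+u^2)\) with a source giving \(u(t)=\cos t\) is a hypothetical test case. Only the stopping tolerance \(10^{-10}\) is taken from the text.

```python
import math

def phi1(z):
    # phi_1(z) = (exp(z) - 1) / z, with the limit value 1 at z = 0
    return math.expm1(z) / z if z != 0.0 else 1.0

def exp_midpoint_semilinear(lam, B, u0, T, N, tol=1e-10, max_iter=100):
    """Exponential midpoint method for the scalar problem u' + lam*u = B(t, u)."""
    k = T / N
    half_decay, half_weight = math.exp(-0.5 * k * lam), 0.5 * k * phi1(-0.5 * k * lam)
    decay, weight = math.exp(-k * lam), k * phi1(-k * lam)
    U = u0
    for n in range(1, N + 1):
        t_mid = (n - 0.5) * k
        stage = U                                    # initial guess for U^{n-1/2}
        for _ in range(max_iter):
            new_stage = half_decay * U + half_weight * B(t_mid, stage)
            converged = abs(new_stage - stage) < tol # difference of two successive iterates
            stage = new_stage
            if converged:
                break
        U = decay * U + weight * B(t_mid, stage)     # update with the converged stage value
    return U

lam = 5.0                                            # hypothetical stand-in for the operator A

def B(t, u):
    # nonlinearity 1/(1+u^2) plus a source chosen so that u(t) = cos(t) is the exact solution
    source = -math.sin(t) + lam * math.cos(t) - 1.0 / (1.0 + math.cos(t) ** 2)
    return 1.0 / (1.0 + u * u) + source

errors = [abs(exp_midpoint_semilinear(lam, B, 1.0, 1.0, N) - math.cos(1.0)) for N in (20, 40, 80)]
orders = [math.log2(errors[i] / errors[i + 1]) for i in range(len(errors) - 1)]
print(errors, orders)
```

The iteration is a contraction here because the factor \(\frac{k}{2}\varphi _1(-\frac{k}{2}\lambda )\) multiplying the Lipschitz constant of B is small for the step sizes used; for strongly nonlinear problems a Newton-type solver might be preferable.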

where \(\Delta x=1/M, \ M=100\), \(x_i=i\Delta x\), \(u_i(t)\approx u(x_i,t)\), and \(\phi _i(t)\) stands for \(\phi \) at \((x_i,t)\). As we stated in Section 6, the upper and lower error bounds are derived by (75) and (76), respectively. For this example, we choose \(\theta =1/6\), \(\lambda =1/6\), and \(1+L^2/4\theta =2\), and the a posteriori quantities \(\mathcal {E}_b\) and \(\zeta _U\) are defined by

and

Another a posteriori quantity \(\mathcal {E}_U\) is defined as

Let us define the effectivity indices \(ei_L\) and \(ei_U\) as:

here \(lower\ estimator:= \mathcal {E}_U/12\) and \(upper \ estimator:= 2\mathcal {E}_U+6\mathcal {E}_b+6\zeta _{U}\), respectively.

For this example, we take uniform time stepsize \(k=1/N,\ N=10, 20, \ldots ,360\). Table 7 indicates the roughly second-order convergence rate of the exact errors \(E_T\) and \(E_{\infty }\), and the order of the error \(E_1\) is around 1.75. Table 8 presents the order of the a posteriori quantities, and the order reduction phenomenon of \(\mathcal {E}_U\) and \(\zeta _U\) can be observed. Because the order of the exact error \(E_1\) is around 1.75, it is acceptable that the order of \(\zeta _U\) is close to 1.75. The effectivity indices \(ei_L\) and \(ei_U\) of the exponential midpoint method are reported in Table 9.

Example 4

Consider the Allen-Cahn equation [37]

For this example, the exact solution is not available, so we take the solution computed by the exponential midpoint method with the smaller time stepsize \(k=1/1000\) as the reference solution and set \(\epsilon =0.01\). The space derivative is discretized by the second-order finite difference on a spatial mesh with \(M = 80\). The uniform time stepsize is \(k=1/N, N=16,32,64,128,256\). The errors \(E_T\), \(E_{\infty }\), \(E_1\) (measured against the reference solution) and their convergence orders for the exponential midpoint method are shown in Table 10, and the order of \(E_T\) is around 1.97. From Table 11, we observe that the entire function \(\varphi _1(-k_nA)\) still affects the orders of the a posteriori quantities \(\mathcal {E}_U\), \(\mathcal {E}_b\), \(\zeta _U\).

8 Concluding remarks

In this paper, we derived a posteriori error estimates for linear and semilinear parabolic equations by using the exponential midpoint method in time. For linear problems, we introduced a continuous and piecewise linear function U(t) of the exponential midpoint rule to approximate u(t), and derived suboptimal order estimates. In order to recover the optimal order, we introduced a continuous and piecewise quadratic time reconstruction \(\hat{U}\) of U by using the property of the \(\varphi \)-function. Then, lower and upper error bounds in the \(L^2(0,T;V)\) and \(L^{\infty }(0,T;H)\)-norms were derived in detail which depend only on the discrete solution and the approximation of the \(\varphi \)-function. We also extended this technique to semilinear problems, and established optimal order a posteriori error estimates.

We have carried out various numerical experiments with the exponential midpoint method for linear and semilinear examples. In both cases, the experiments support the theoretical analysis. Based on the obtained a posteriori error estimates, we have also designed an adaptive algorithm and carried out several numerical experiments, which will be presented in the upcoming manuscript [38]. In this paper, we only present a posteriori error estimates for the exponential midpoint method for parabolic problems. Whether our approach is applicable to exponential Runge-Kutta methods of collocation type will be further investigated.

Availability of Data and Material

All data, models, or code generated or used during the study are available from the corresponding author by request.

Data Availability

No datasets were generated or analysed during the current study.

References

Henry, D.: Geometric Theory of Semilinear Parabolic Equations. Lecture Notes in Mathematics, vol. 840. Springer, Berlin-New York (1981)

Lunardi, A.: Analytic Semigroups and Optimal Regularity in Parabolic Problems. Progress in Nonlinear Differential Equations and their Applications, vol. 16. Birkhäuser Verlag, Basel (1995). https://doi.org/10.1007/978-3-0348-9234-6

Lawson, J.D.: Generalized Runge-Kutta processes for stable systems with large Lipschitz constants. SIAM J. Numer. Anal. 4, 372–380 (1967)

Friedli, A.: Verallgemeinerte Runge-Kutta Verfahren zur Lösung steifer Differentialgleichungssysteme. In: Numerical Treatment of Differential Equations (Proc. Conf., Math. Forschungsinst., Oberwolfach, 1976). Lecture Notes in Math., vol. 631, pp. 35–50. Springer, Berlin-New York (1978)

Lambert, J.D., Sigurdsson, S.T.: Multistep methods with variable matrix coefficients. SIAM J. Numer. Anal. 9, 715–733 (1972)

Verwer, J.G.: On generalized linear multistep methods with zero-parasitic roots and an adaptive principal root. Numer. Math. 27(2), 143–155 (1976)

Hochbruck, M., Lubich, C.: On Krylov subspace approximations to the matrix exponential operator. SIAM J. Numer. Anal. 34(5), 1911–1925 (1997)

Berland, H., Skaflestad, B., Wright, W.M.: Expint—a matlab package for exponential integrators. ACM Trans. Math. Softw. 33(1) (2007). https://doi.org/10.1145/1206040.1206044

Moler, C., Van Loan, C.: Nineteen dubious ways to compute the exponential of a matrix, twenty-five years later. SIAM Rev. 45(1), 3–49 (2003)

Moret, I., Novati, P.: RD-rational approximations of the matrix exponential. BIT 44(3), 595–615 (2004)

Higham, N.J.: The scaling and squaring method for the matrix exponential revisited. SIAM J. Matrix Anal. Appl. 26(4), 1179–1193 (2005)

Nørsett, S.P.: An A-stable modification of the Adams-Bashforth methods. In: Conf. on Numerical Solution of Differential Equations (Dundee, 1969). Lecture Notes in Math., vol. 109, pp. 214–219. Springer, Berlin-New York (1969)

Cox, S.M., Matthews, P.C.: Exponential time differencing for stiff systems. J. Comput. Phys. 176(2), 430–455 (2002)

Hochbruck, M., Ostermann, A.: Explicit exponential Runge-Kutta methods for semilinear parabolic problems. SIAM J. Numer. Anal. 43(3), 1069–1090 (2005)

Krogstad, S.: Generalized integrating factor methods for stiff PDEs. J. Comput. Phys. 203(1), 72–88 (2005)

Hochbruck, M., Ostermann, A.: Exponential integrators. Acta Numer. 19, 209–286 (2010)

Hu, X., Wang, W., Wang, B., Fang, Y.: Cost-reduction implicit exponential Runge-Kutta methods for highly oscillatory systems. J. Math. Chem. 62, 2191–2221 (2024)

Li, Y.-W., Wu, X.: Exponential integrators preserving first integrals or Lyapunov functions for conservative or dissipative systems. SIAM J. Sci. Comput. 38(3), 1876–1895 (2016)

Mei, L., Wu, X.: Symplectic exponential Runge-Kutta methods for solving nonlinear Hamiltonian systems. J. Comput. Phys. 338, 567–584 (2017)

Du, Q., Ju, L., Li, X., Qiao, Z.: Maximum principle preserving exponential time differencing schemes for the nonlocal Allen-Cahn equation. SIAM J. Numer. Anal. 57(2), 875–898 (2019)

Wang, B., Wu, X.: Exponential collocation methods for conservative or dissipative systems. J. Comput. Appl. Math. 360, 99–116 (2019)

Du, Q., Ju, L., Li, X., Qiao, Z.: Maximum bound principles for a class of semilinear parabolic equations and exponential time-differencing schemes. SIAM Rev. 63(2), 317–359 (2021)

Li, B., Ma, S., Schratz, K.: A semi-implicit exponential low-regularity integrator for the Navier-Stokes equations. SIAM J. Numer. Anal. 60(4), 2273–2292 (2022)

Hochbruck, M., Ostermann, A.: Exponential Runge-Kutta methods for parabolic problems. Appl. Numer. Math. 53(2–4), 323–339 (2005)

Johnson, C., Nie, Y.Y., Thomée, V.: An a posteriori error estimate and adaptive timestep control for a backward Euler discretization of a parabolic problem. SIAM J. Numer. Anal. 27(2), 277–291 (1990)

Nochetto, R.H., Savaré, G., Verdi, C.: A posteriori error estimates for variable time-step discretizations of nonlinear evolution equations. Comm. Pure Appl. Math. 53(5), 525–589 (2000)

Giles, M.B., Süli, E.: Adjoint methods for PDEs: a posteriori error analysis and postprocessing by duality. Acta Numer. 11, 145–236 (2002)

Makridakis, C., Nochetto, R.H.: Elliptic reconstruction and a posteriori error estimates for parabolic problems. SIAM J. Numer. Anal. 41(4), 1585–1594 (2003)

Akrivis, G., Makridakis, C., Nochetto, R.H.: A posteriori error estimates for the Crank-Nicolson method for parabolic equations. Math. Comp. 75(254), 511–531 (2006)

Akrivis, G., Makridakis, C., Nochetto, R.H.: Optimal order a posteriori error estimates for a class of Runge-Kutta and Galerkin methods. Numer. Math. 114(1), 133–160 (2009)

Makridakis, C., Nochetto, R.H.: A posteriori error analysis for higher order dissipative methods for evolution problems. Numer. Math. 104(4), 489–514 (2006)

Wang, W., Rao, T., Shen, W., Zhong, P.: A posteriori error analysis for Crank-Nicolson-Galerkin type methods for reaction-diffusion equations with delay. SIAM J. Sci. Comput. 40(2), 1095–1120 (2018)

Wang, W., Yi, L., Xiao, A.: A posteriori error estimates for fully discrete finite element method for generalized diffusion equation with delay. J. Sci. Comput. 84(1) (2020)

Wang, W., Yi, L.: Delay-dependent elliptic reconstruction and optimal \(L^\infty (L^2)\) a posteriori error estimates for fully discrete delay parabolic problems. Math. Comp. 91(338), 2609–2643 (2022)

Wang, W., Mao, M., Wang, Z.: Stability and error estimates for the variable step-size BDF2 method for linear and semilinear parabolic equations. Adv. Comput. Math. 47(1) (2021)

Wang, W., Mao, M., Huang, Y.: Optimal a posteriori estimators for the variable step-size BDF2 method for linear parabolic equations. J. Comput. Appl. Math. 413 (2022)

Allen, S.M., Cahn, J.W.: A microscopic theory for antiphase boundary motion and its application to antiphase domain coarsening. Acta Metallurgica 27(6), 1085–1095 (1979). https://doi.org/10.1016/0001-6160(79)90196-2

Hu, X., Wang, W.: Error control and adaptive algorithm for semilinear parabolic equations. Prepared

Acknowledgements

The authors are very grateful to the editor and anonymous referees for their invaluable comments and suggestions which helped to improve the manuscript.

Funding

The first author was partially supported by the Natural Science Foundation of China (Grant Nos. 12271367, 12071419). The second author, who is the corresponding author, was supported by the Natural Science Foundation of China (Grant No. 12271367), Shanghai Science and Technology Planning Projects (Grant No. 20JC1414200), and the Natural Science Foundation of Shanghai (Grant No. 20ZR1441200).

Author information

Contributions

All authors reviewed the manuscript. All the authors contributed equally.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Ethical Approval

Not applicable.

About this article

Cite this article

Hu, X., Wang, W., Mao, M. et al. A posteriori error estimates for the exponential midpoint method for linear and semilinear parabolic equations. Numer Algor (2024). https://doi.org/10.1007/s11075-024-01940-7

DOI: https://doi.org/10.1007/s11075-024-01940-7