Abstract

A Jacobi spectral collocation method is proposed for the solution of a class of nonlinear Volterra integral equations with a kernel of the general form \( x^{\beta }\, (z-x)^{-\alpha } \, g(y(x))\), where \(\alpha \in (0,1), \beta >0\) and g(y) is a nonlinear function. Typically, the kernel will contain both an Abel-type and an end point singularity. The solution to these equations will in general have a nonsmooth behaviour which causes a drop in the global convergence orders of numerical methods with uniform meshes. In the considered approach a transformation of the independent variable is first introduced in order to obtain a new equation with a smoother solution. The Jacobi collocation method is then applied to the transformed equation and a complete convergence analysis of the method is carried out for the \(\displaystyle L^{\infty }\) and the \(L^2\) norms. Some numerical examples are presented to illustrate the exponential decay of the errors in the spectral approximation.



1 Introduction

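This work is concerned with the numerical solution of a general class of nonlinear second-kind Volterra integral equations

\[ y(z) = f(z) - \int_0^z \frac{x^{\beta }}{(z-x)^{\alpha }}\, g(y(x))\,dx, \qquad z\in [0,T], \tag{1} \]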

which have important applications in nonlinear problems of heat conduction, boundary layer heat transfer, chemical kinetics and theory of superfluidity (see e.g. [4, 6, 17–19]). The above class of equations has been considered by the authors in [1]. The kernel of these equations, \( x^{\beta } (z-x)^{-\alpha } g(y(x))\), with \(\alpha \in (0,1)\) and \(\beta >0\), possesses two types of singularities (depending on the value of \(\beta \)) and their solutions are typically not regular.

In previous works a particular case of (1), with \(\alpha =2/3, ~\beta =1/3, ~f(z)=1\) and \(g(y)=y^4\), was considered. We will refer to it as Lighthill’s equation. Near the origin, the derivative of its solution behaves like \(y'(t)\sim t^{-1/3}\) (cf. Example 1). As would be expected, the typical nonsmooth properties of y cause a drop in the global convergence orders of numerical methods based on uniform meshes, such as collocation and product integration methods [3]. Some classical techniques can be used to recover the optimal convergence orders, and this has been done for Lighthill’s equation. A collocation method with graded meshes was proposed in [10]. A hybrid collocation method, in which the basis of the approximating space also includes some fractional powers, was considered in [22]. Extrapolation techniques combined with low order methods were investigated in [12]. We also refer to [2], where a Nyström-type method was applied after a smoothing transformation.

Recently, in [1], a method has been used for the general equation (1) where an initial integral over a small interval is calculated analytically, by using a series solution available near the origin; this combined with a product integration-type method leads to optimal convergence rates. In the present work we consider spectral approximations.

In the last decade, spectral methods for the solution of Volterra integral equations (VIEs) have attracted increasing attention from researchers. We refer to [23] for a comprehensive study of spectral approximations and associated algorithms. It is known that spectral approximations can achieve exponential convergence for linear and nonlinear Volterra integral equations with smooth kernels (see [24, 28]). The same holds for linear VIEs of the second kind with weakly singular kernel \((z-x)^{-\mu }, 0 < \mu < 1\), provided the underlying solution is sufficiently smooth [7, 8]. In 2010, Chen and Tang [9] extended this line of work by analyzing a Jacobi spectral collocation method for linear VIEs of the second kind with weakly singular kernel \((z-x)^{-\mu }, 0 < \mu < 1\), and with nonsmooth solutions. They used function and variable transformations to change the equation into a new one defined on the standard interval \([-1,1]\). The solution of the new equation then had better regularity properties, so the theory of Jacobi orthogonal polynomials could be applied. In [15], Li and Tang analyzed a Jacobi spectral collocation approximation for the particular case of the Abel-Volterra integral equation, that is, with singular kernel \((z-x)^{-1/2}\), and considered nonsmooth solutions. Unlike [9], their convergence analysis uses only a coordinate transformation (and no solution transformation). We also refer to [27], where some spectral and pseudo-spectral Jacobi-Galerkin approaches were considered for smooth linear second-kind Volterra integral equations. More recently, this work was extended in [16] to linear Abel-type VIEs with nonsmooth solutions.

In the present work, we investigate the application of the Jacobi collocation method to the general equation (1). First a variable transformation is used on the original equation so that a new equation with a smoother solution is obtained. We note that the use of smoothing techniques for (linear) integral equations with Abel-type kernels was first considered in [11]. In the present case, it happens that the integral term in the transformed equation is suitable for the application of a Gauss-type quadrature with Jacobi weights. Finally, a Jacobi-type collocation condition is imposed on the transformed integral equation [cf. (25)].

The paper is organized as follows. Sect. 2 first discusses the regularity properties of the solution to equation (1) and then describes the Jacobi collocation method. Sect. 3 presents some auxiliary results. In Sect. 4, a complete convergence analysis of the method is carried out for the \(\displaystyle L^{\infty }\) and the \(L^2\) norms. Some numerical examples are considered in Sect. 5 to illustrate the theoretical results.

Throughout the text, C denotes a generic constant whose value may differ from one occurrence to another.

2 The Jacobi-Collocation Method

2.1 Smoothness of the Solution

In [1] it is shown that if \(0<\alpha <1\), \(\beta >1 - \alpha \), f is bounded and g satisfies a local Lipschitz condition, then (1) has a unique continuous (local) solution y(z) on some interval [0, T]. Furthermore, if g is positive, it can be shown that, under certain conditions, this interval can be extended to \([0, +\infty )\). If g is negative, the solution may blow up at some finite z. The theoretical results of Sects. 3–4 are valid independently of the sign of g.

The following lemma concerns Volterra integral equations which can be expressed in terms of the so-called “cordial” operators, which were introduced by Vainikko in [25].

Here we consider a partial result from [26] which will be useful in our analysis.

Lemma 1

Consider the following nonlinear Volterra integral equation

where \(\varphi \in L^1(0,1)\). Let \(\Delta _{{T}}=\{(x,z):0\le x\le z\le {T}\}\), and assume that \(f\in C^m([0,{T}])\) and \(g\in C^m(\Delta _{{T}}\times {\mathscr {D}}),{\mathscr {D}}\subset {\mathbb {R}}\), for an \(m\in {\mathbb {N}}\). Let \(u^*\in C([0,{T}])\) be a solution of (2) on [0, T] and define

so that \(a(0,0)=0\). Then \(u^*\) is also in \(C^m([0,{T}])\).

It will be of interest to consider the application of the previous lemma to the following nonlinear Volterra integral equation, of which (1) is a particular case with \(\gamma =1\),

with \(\beta >0\), \(\alpha \,\gamma -\beta < 1\). It can be rewritten as

which is of the form (2), with

Using (3), we have

which yields \(a(0,0)=0\). Here y is the unique solution of (4) on [0, T].

Lemma 1 can now be applied to draw some conclusions on the regularity properties of the solution to (1). We take \(\gamma =1\) and analyze the function \(z^{\eta },~ \eta = 1+\beta -\alpha \), for the values of interest, that is, for \(0<\alpha <1, \beta >0\).

-

Case I Let \(\eta \ge 1\), that is, \(\beta -\alpha \ge 0\), and let \(\beta -\alpha \) be an integer. Then \(z^{\eta }\) will be in the class \(C^{\infty }\). By Lemma 1, we may conclude that if \(f\in C^m([0,T])\) and \(g\in C^m({\mathscr {D}})\) then the solution of equation (1) satisfies \(y\in C^m([0,T])\).

-

Case II Let \(\eta > 1\) with \(\eta \) not an integer. This corresponds to \(\beta -\alpha >0\) with \(\beta -\alpha \notin \mathbf {Z}\). Let \(\bar{m}\) denote the integer part of \(\beta -\alpha \). Then the function \(z^{\eta }\) has only \(\bar{m}+1\) continuous derivatives on \(\Delta _{{T}}\). Therefore, Lemma 1 can be applied with \(f\in C^m([0,T])\) and \(g\in C^m({\mathscr {D}})\), for \(m\le \bar{m}+1\).

-

Case III Let \(0<\eta < 1\), that is, \(0<\beta <\alpha <1\). In this case, \(z^{\eta }\) is just continuous and the function \(\displaystyle \overline{g}(x,z,y)=-z^{1+\beta -\alpha } g(y)\) [cf. (6) ] will not be smooth with respect to z.
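The three cases depend only on \(\eta = 1+\beta-\alpha\), so the classification fits in a few lines of Python. The sketch below is an illustrative helper and is not taken from the paper; the name `regularity_case` is hypothetical. It converts its inputs to exact rationals (`fractions.Fraction`) so that the test "\(\beta-\alpha\) is an integer" is exact. It returns the number of continuous derivatives of \(z^{\eta}\) on [0, T] as stated in Cases I–III.

```python
from fractions import Fraction
from math import floor


def regularity_case(alpha, beta):
    """Classify (alpha, beta) into Cases I-III of Sect. 2.1 (illustrative helper).

    Returns (case, eta, n_derivs), where eta = 1 + beta - alpha and n_derivs is
    the number of continuous derivatives of z**eta on [0, T] (None for C^infinity).
    Inputs are converted to Fractions so the integrality test is exact.
    """
    if not (0 < alpha < 1 and beta > 0):
        raise ValueError("expected 0 < alpha < 1 and beta > 0")
    eta = 1 + Fraction(beta) - Fraction(alpha)
    if eta >= 1 and eta.denominator == 1:
        return "I", eta, None            # beta - alpha is a non-negative integer
    if eta > 1:
        return "II", eta, floor(eta)     # floor(eta) = (integer part of beta - alpha) + 1
    return "III", eta, 0                 # 0 < eta < 1: z**eta is only continuous


if __name__ == "__main__":
    # Lighthill's exponents alpha = 2/3, beta = 1/3 fall into Case III
    print(regularity_case(Fraction(2, 3), Fraction(1, 3)))
```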

Remark 1

We consider the following equation

where \(C>0\), \(\mu <1\). We see that (7) is of the form (1), with

therefore it falls into Case III. It can be shown ([10]) that the exact solution y to (7) is such that its first derivative satisfies \(y'(t) \sim t^{-\frac{\mu }{1+\mu }}\) near the origin. Below we will generalize this result. We note that the first example of Sect. 5 (Lighthill’s equation) is of the type (7), with \(\mu =1/2\).
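As a consistency check, take \(\mu = 1/2\) in Remark 1; this reproduces the behaviour \(y'\sim t^{-1/3}\) quoted for Lighthill's equation in Sect. 1. The computation also gives a leading-order heuristic, not a proof, for why the substitution \(z=t^3\) of Example 1 is a natural choice. Here C denotes the (unspecified) constant in the asymptotic relation:

```latex
\[
-\frac{\mu}{1+\mu}\bigg|_{\mu=1/2} = -\frac{1/2}{3/2} = -\frac{1}{3},
\qquad
y(z)-y(0) \sim C\int_0^{z} \zeta^{-1/3}\,d\zeta = \tfrac{3}{2}\,C\,z^{2/3},
\qquad
z=t^{3} \;\Longrightarrow\; y(t^{3})-y(0) \sim \tfrac{3}{2}\,C\,t^{2}.
\]
```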

A variable transformation

Defining

then (1) is transformed into the following integral equation

where

It can be shown that (9) has a unique continuous solution on the interval \(\displaystyle [0,T^{1/\sigma }]\). We now analyze the regularity of the solution of the transformed equation (9).
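Examples 1–3 use \(x=s^3\), \(x=s^6\) and \(x=s^2\). Assuming, consistently with these, that (8) is the substitution \(x=s^{\sigma}\), \(z=t^{\sigma}\) with \(\overline{y}(t)=y(t^{\sigma})\), the integral term of (1) becomes \(\sigma\int_0^t s^{\sigma(\beta+1)-1}(t^{\sigma}-s^{\sigma})^{-\alpha} g(\overline{y}(s))\,ds\). The sketch below checks this identity numerically, with a smooth test function h standing in for \(g\circ y\). It relies on SciPy's `quad` with `weight='alg'` (QUADPACK's QAWS routine), which integrates \(h(x)(x-a)^{p}(b-x)^{q}\) for `wvar=(p, q)`. The function names are hypothetical.

```python
import math
from scipy.integrate import quad


def original_integral(h, z, alpha, beta):
    """int_0^z x**beta * (z - x)**(-alpha) * h(x) dx.

    weight='alg' supplies (x - 0)**beta * (z - x)**(-alpha), so h only needs to be smooth.
    """
    val, _ = quad(h, 0.0, z, weight="alg", wvar=(beta, -alpha))
    return val


def transformed_integral(h, t, alpha, beta, sigma):
    """The same integral after x = s**sigma, z = t**sigma (sigma a positive integer).

    (t**sigma - s**sigma)**(-alpha) is split as
    (t - s)**(-alpha) * (t**(sigma-1) + t**(sigma-2)*s + ... + s**(sigma-1))**(-alpha),
    so only the (t - s)**(-alpha) factor is left to the quadrature weight.
    """
    def smooth_part(s):
        geom = sum(t ** (sigma - 1 - j) * s ** j for j in range(sigma))
        return sigma * s ** (sigma * (beta + 1) - 1) * geom ** (-alpha) * h(s ** sigma)

    val, _ = quad(smooth_part, 0.0, t, weight="alg", wvar=(0.0, -alpha))
    return val


if __name__ == "__main__":
    alpha, beta, sigma = 2 / 3, 1 / 3, 3          # Lighthill-type exponents
    z = 0.7
    h = lambda x: math.exp(-x) / (1.0 + x)        # arbitrary smooth test function
    print(original_integral(h, z, alpha, beta),
          transformed_integral(h, z ** (1 / sigma), alpha, beta, sigma))
```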

Since (9) is of the form (4), it admits the representation

where

Let us consider the case when \(\alpha \in ]0,1[\) and \(\displaystyle 0<1+\beta -\alpha <1\) and let \(\overline{f}\in C^m([0,T^{1/\sigma }]), g(\overline{y})\in C^m([0,T^{1/\sigma }])\), for a certain m. Let \(\sigma \) be an integer satisfying \(\sigma >\frac{m}{1+\beta -\alpha } \). Then \(\overline{g}\in C^m(\Delta _{T^{1/\sigma }}\times {\mathscr {D}})\) and, by Lemma 1, it follows that \(\overline{y}\in C^m([0,T^{1/\sigma }])\) (\(m\ge 1\)).

If there exist coprime \(p, q \in \mathbf {N},~ q\ge 2\), such that \(1+\beta -\alpha =p/q\), then we simply take \(\sigma =q\).
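The choice of \(\sigma\) described above can be sketched as follows. This is a hypothetical helper that assumes Case III, \(0<1+\beta-\alpha<1\). A rational exponent given as `Fraction` yields \(\sigma=q\), since then \(\sigma(1+\beta-\alpha)=p\) is an integer. A floating-point exponent falls back to the smallest integer \(\sigma > m/(1+\beta-\alpha)\).

```python
from fractions import Fraction
from math import floor


def smoothing_exponent(alpha, beta, m=1):
    """Return the integer sigma of the substitution x = s**sigma (Case III only)."""
    eta = 1 + beta - alpha
    if not 0 < eta < 1:
        raise ValueError("intended for 0 < 1 + beta - alpha < 1 (Case III)")
    if isinstance(eta, Fraction):
        # eta = p/q in lowest terms (q >= 2 here); sigma = q makes sigma * eta = p
        return eta.denominator
    # otherwise: smallest integer sigma with sigma > m / eta
    return floor(m / eta) + 1
```

For Lighthill's equation, `smoothing_exponent(Fraction(2, 3), Fraction(1, 3))` gives 3, which matches the substitution \(x=s^3\) used in Example 1.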

Remark 2

In particular, by taking into account (10) we can also conclude that, in the case \(\displaystyle 0<1+\beta -\alpha < 1\), the first derivative of the solution of (1) satisfies, for \(\displaystyle t\in (0,T],\)

The case in which only some derivatives of y are continuous (Case II) can be dealt with in a similar way.

2.2 The Collocation Method

Before we define the Jacobi collocation method, we need to introduce some notation. Let \(\displaystyle \varLambda =[-1,1]\) and \(\displaystyle {{\omega }^{\alpha _1 ,{\beta }_1 }(x)} = {(1 - x)^{\alpha _1} }{(1 + x)^{\beta _1} }\) be a weight function, for \(\alpha _1 ,\beta _1 > - 1\). The Jacobi polynomials, denoted by \(\{ J_N^{\alpha _1 ,\beta _1 }(x)\} _{N = 0}^\infty \), form a complete \(L_{{\omega ^{\alpha _1,\beta _1 }}}^2(\varLambda )\) orthogonal system, where \(L_{{\omega ^{\alpha _1,\beta _1 }}}^2(\varLambda )\) is a weighted space defined by

equipped with the norm

and the inner product

For a given positive integer N, we denote by \(\displaystyle \{ {x_i}\} _{i = 0}^N=\{ {x_i^{\alpha _1,~\beta _1}}\} _{i = 0}^N\) the nodes of the Gauss-Jacobi quadrature formula, which are the roots of the Jacobi polynomial \(\displaystyle J_{N+1}^{\alpha _1,~\beta _1}\), and by \(\displaystyle \{ {w_i}\} _{i = 0}^N=\{ {w_i^{\alpha _1,~\beta _1}}\} _{i = 0}^N \) the corresponding quadrature weights. The Gauss-Jacobi quadrature rule with \(N+1\) points thus has the form:

Let \({{\mathscr {P}}_N}\) denote the space of all polynomials of degree not exceeding N. For any \(v \in C(\varLambda )\), we can define the Lagrange interpolating polynomial \(I_N^{\alpha _1 ,{\beta }_1 }v \in {{\mathscr {P}}_N}\) as

where the set \(\displaystyle \{{L_i}(x)\}_{i=0}^N\) is the Lagrange interpolation basis associated with the zeros of the Jacobi polynomial of degree \(\displaystyle N+1\), that is, the points \(\{ {x_i}\} _{i = 0}^N.\)
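The quadrature and interpolation operators above can be sketched in a few lines, assuming SciPy is available. `scipy.special.roots_jacobi(n, a, b)` returns the n zeros of the Jacobi polynomial of degree n, together with the Gauss-Jacobi weights for \((1-x)^{a}(1+x)^{b}\) on \([-1,1]\). The helper names are hypothetical. The accompanying checks rely on two facts: the \((N+1)\)-point rule is exact for polynomials of degree up to \(2N+1\), and \(I_N^{\alpha_1,\beta_1}\) reproduces every polynomial in \({\mathscr P}_N\).

```python
import numpy as np
from scipy.special import roots_jacobi, beta as beta_fn


def gauss_jacobi(N, a1, b1):
    """Nodes (zeros of J_{N+1}^{a1,b1}) and weights of the (N+1)-point Gauss-Jacobi rule."""
    return roots_jacobi(N + 1, a1, b1)


def weight_mass(a1, b1):
    """Exact int_{-1}^{1} (1-x)**a1 * (1+x)**b1 dx = 2**(a1+b1+1) * B(a1+1, b1+1)."""
    return 2.0 ** (a1 + b1 + 1) * beta_fn(a1 + 1, b1 + 1)


def lagrange_basis(nodes, pts):
    """Matrix M with M[k, i] = L_i(pts[k]) for the Lagrange basis on `nodes`."""
    pts = np.atleast_1d(np.asarray(pts, dtype=float))
    M = np.ones((pts.size, nodes.size))
    for i in range(nodes.size):
        for j in range(nodes.size):
            if j != i:
                M[:, i] *= (pts - nodes[j]) / (nodes[i] - nodes[j])
    return M


def interpolate(v, nodes, pts):
    """Evaluate the interpolant I_N v = sum_i v(x_i) L_i at `pts`."""
    return lagrange_basis(nodes, pts) @ v(nodes)
```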

In order to apply the theory of orthogonal polynomials, we consider the variable transformations \(\displaystyle t =T^{1/\sigma }\frac{{x + 1}}{2},\quad s =T^{1/\sigma }\frac{{\tau + 1}}{2}\quad (x,~\tau \in [-1,1]),\) so that (9) becomes

where

Using the formula \(a^n-b^n=(a-b)(a^{n-1}+a^{n-2}b+\cdots +b^{n-1})\), we can rewrite equation (17) as follows

with the kernel \(\displaystyle \widetilde{k}\) being given by

where

Then by using the linear transformation \( \frac{{1+ x}}{2}\theta +\frac{{x-1}}{2} = \tau (x,\theta ),\quad x,~\theta \in [ - 1,1], \) we rewrite the integral term in (18) in the form

where

Hence, the equation (18) becomes

In the Jacobi-collocation method we seek an approximate solution \(\displaystyle \overline{U}_N(x) \in {{\mathscr {P}}_N}\), such that \(\displaystyle \overline{U}_N(x)\) satisfies the equation (21) at the collocation points \(\displaystyle x_i\):

where \(\displaystyle x_i,~i=0,1,\ldots ,N\), are the zeros of the Jacobi polynomial of degree \(N+1\).

The integral term in the above equation can be approximated by an \((N+1)\)-point Gauss-Jacobi quadrature formula with nodes \(x_j\) and weights \(\displaystyle w_j=w_j^{-\alpha ,-\alpha },~j=0,1,\ldots ,N\), associated with the weight function \(\displaystyle \omega ^{-\alpha ,-\alpha }(x)=(1-x^2)^{-\alpha }=(1-x)^{-\alpha } (1+x)^{-\alpha }\):

In order to obtain a fully discrete version of the scheme (22), we use Lagrange interpolation to approximate the nonlinear part of the integrand

where the operator \(I_N^{-\alpha ,-\alpha }\) is defined by (16). Then from (23) and (24), the full collocation scheme becomes

After solving (25), an approximate solution of (21) will be given by

where the functions \(L_i(x)\) are defined as in (16).
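The scheme (25) can be prototyped in a few lines. The sketch below is a simplified model and not the paper's implementation. It solves \(U(x)=F(x)+\lambda\int_{-1}^{x}(x-\tau)^{-\alpha}\widetilde{k}(x,\tau)\,g(U(\tau))\,d\tau\) on \(\varLambda\), where F, g, the smooth kernel `kernel` and the scalar \(\lambda\) are user-supplied. It uses the linear map \(\tau(x,\theta)\), for which \(x-\tau=\frac{1+x}{2}(1-\theta)\) and \(d\tau=\frac{1+x}{2}\,d\theta\). Hence \(\int_{-1}^{x}(x-\tau)^{-\alpha}\phi(\tau)\,d\tau=\big(\frac{1+x}{2}\big)^{1-\alpha}\int_{-1}^{1}(1-\theta)^{-\alpha}\phi(\tau(x,\theta))\,d\theta\). The collocation points are the zeros of \(J_{N+1}^{-\alpha,-\alpha}\), and \(g(U_N)\) is replaced by its interpolant as in (24).

Two choices differ from the text and are assumptions:

- The inner integral uses the Gauss-Jacobi rule for the weight \((1-\theta)^{-\alpha}\), i.e. \(\omega^{-\alpha,0}\), instead of \(\omega^{-\alpha,-\alpha}\).
- The nonlinear system \(U=F+\lambda A\,g(U)\) is solved by Newton's method, which the paper does not prescribe.

All function names are hypothetical.

```python
import numpy as np
from scipy.special import roots_jacobi


def lagrange_basis(nodes, pts):
    """M[k, p] = L_p(pts[k]) for the Lagrange basis on `nodes`."""
    M = np.ones((pts.size, nodes.size))
    for p in range(nodes.size):
        for q in range(nodes.size):
            if q != p:
                M[:, p] *= (pts - nodes[q]) / (nodes[p] - nodes[q])
    return M


def collocation_matrix(N, alpha, kernel):
    """A[i, p] approximates int_{-1}^{x_i} (x_i - tau)^(-alpha) kernel(x_i, tau) L_p(tau) dtau."""
    x, _ = roots_jacobi(N + 1, -alpha, -alpha)   # collocation points: zeros of J_{N+1}^{-alpha,-alpha}
    theta, w = roots_jacobi(N + 1, -alpha, 0.0)  # assumption: inner rule for the weight (1 - theta)^(-alpha)
    A = np.empty((N + 1, N + 1))
    for i, xi in enumerate(x):
        tau = 0.5 * (1.0 + xi) * theta + 0.5 * (xi - 1.0)   # tau(x_i, theta) in (-1, x_i)
        k = np.broadcast_to(kernel(xi, tau), tau.shape)
        A[i] = (0.5 * (1.0 + xi)) ** (1.0 - alpha) * ((w * k) @ lagrange_basis(x, tau))
    return x, A


def solve_collocation(F, g, dg, N, alpha, lam=1.0, kernel=lambda x, tau: 1.0,
                      tol=1e-12, maxit=50):
    """Newton iteration for U_i = F(x_i) + lam * sum_p A[i, p] * g(U_p)."""
    x, A = collocation_matrix(N, alpha, kernel)
    Fx = F(x)
    U = Fx.copy()                                        # initial guess U = F
    for _ in range(maxit):
        residual = U - Fx - lam * (A @ g(U))
        jac = np.eye(N + 1) - lam * A * dg(U)[None, :]   # entries A[i, p] * g'(U_p)
        step = np.linalg.solve(jac, -residual)
        U = U + step
        if np.max(np.abs(step)) < tol:
            break
    return x, U


def exact_integral(x, alpha):
    """Closed form of int_{-1}^{x} (x - tau)^(-alpha) tau^2 dtau (manufactured test)."""
    L = 1.0 + x
    return (x ** 2 * L ** (1 - alpha) / (1 - alpha)
            - 2 * x * L ** (2 - alpha) / (2 - alpha)
            + L ** (3 - alpha) / (3 - alpha))


if __name__ == "__main__":
    alpha, lam = 2 / 3, 0.1
    F = lambda x: x - lam * exact_integral(x, alpha)     # chosen so that U(x) = x solves the problem
    x, U = solve_collocation(F, lambda u: u ** 2, lambda u: 2 * u, N=8, alpha=alpha, lam=lam)
    print("max |U_i - x_i| =", np.max(np.abs(U - x)))
```

In the usage example, F is manufactured so that \(U(x)=x\) solves the continuous problem with \(g(y)=y^2\) and \(\widetilde{k}\equiv 1\). Since \(g(U)\) is then a quadratic polynomial, interpolation and quadrature are exact for \(N\ge 2\). The computed collocation values should therefore agree with \(x_i\) up to rounding.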

3 Some Preliminaries and Useful Lemmas

In this section we obtain some preliminary results that will be needed in the convergence analysis of the method. First we introduce some weighted Hilbert spaces. Let \( \partial _x^{k}v\) denote the kth order derivative of v(x). For a non-negative integer m, define

with

where the norm \(\displaystyle \Vert \quad \Vert _{{{\omega }^{{\alpha }_1,{\beta }_1 }}}\) is defined by (14). We also introduce the seminorms

For any \(\displaystyle u,~v\in C(\varLambda )\), a discrete and a continuous inner product are defined, respectively, by

The first lemma gives error estimates for the interpolation polynomial and for the Gauss-Jacobi quadrature formula.

Lemma 2

Let \(\displaystyle v\in H^{m}_{w^{{\alpha }_1,{\beta }_1}}(\varLambda ),~m\ge 1\). The following error estimates hold

From [5] we have the following results concerning the Lebesgue constant.

Lemma 3

Let \(\displaystyle \{L_j\}_{j=0}^N\) be the N-th order Lagrange interpolation polynomials associated with the Jacobi collocation points \(\displaystyle \{x_i\}_{i=0}^N\). Then
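Lemma 3 concerns the Lebesgue constant \(\max_{x\in\varLambda}\sum_j |L_j(x)|\) of interpolation at the Jacobi collocation points. The sketch below estimates this quantity numerically by sampling on a uniform grid; it assumes SciPy, and the helper name is hypothetical. Sampling gives a lower bound that converges as the grid is refined. The sketch can be used to observe the slow growth with N, but it does not replace the bounds from [5].

```python
import numpy as np
from scipy.special import roots_jacobi


def lebesgue_constant(N, a1, b1, n_eval=4001):
    """Estimate max_x sum_j |L_j(x)| for interpolation at the zeros of J_{N+1}^{a1,b1}.

    The maximum is taken over a uniform grid on [-1, 1], so the value is a
    lower bound that approaches the true Lebesgue constant as n_eval grows.
    """
    nodes, _ = roots_jacobi(N + 1, a1, b1)
    xs = np.linspace(-1.0, 1.0, n_eval)
    total = np.zeros_like(xs)
    for j in range(N + 1):
        Lj = np.ones_like(xs)
        for k in range(N + 1):
            if k != j:
                Lj *= (xs - nodes[k]) / (nodes[j] - nodes[k])
        total += np.abs(Lj)
    return total.max()
```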

Lemma 4

Let \(\displaystyle \{L_j\}_{j=0}^N\) be the N-th order Lagrange interpolation polynomials associated with the Jacobi collocation points \(\displaystyle \{x_i\}_{i=0}^N\). For every bounded function v, there exists a constant C independent of v such that

Given \(r\ge 0\) and \(\kappa \in [0,1]\), \(\displaystyle {\mathscr {C}}^{r,\kappa }(\varLambda )\) will denote the space of functions whose rth derivatives are Hölder continuous with exponent \(\kappa \), endowed with the usual norm

For any function \(\displaystyle v\in {\mathscr {C}}^{r,\kappa }(\varLambda )\), we have the following density result ([20], [21]):

Lemma 5

Let r be a non-negative integer and \(\kappa \in (0,1)\). Then, there exists a constant \({{c}}_{r,\kappa }>0\) such that, for any function \(\displaystyle v\in {\mathscr {C}}^{r,\kappa }(\varLambda )\), there exists a polynomial function \({\mathscr {T}}_N v\in {\mathscr {P}}_N\) satisfying

We now need to prove the compactness of the linear weakly singular integral operator \(\displaystyle {\mathscr {M}}\), from \(C(\varLambda )\) into \(C^{0,\kappa }(\varLambda )\), defined by

for any \(\displaystyle 0<\kappa <1-\alpha <1\) and where \(\widetilde{k}\) is defined in (19).

Lemma 6

Let \(\displaystyle {\mathscr {M}}\) be defined by (33). Then, for any function \(v\in {\mathscr {C}}(\varLambda )\), there exists a positive constant C such that

where \(\Vert .\Vert _{\infty }\) is the standard norm in \(\displaystyle C(\varLambda )\).

Proof

In order to prove (34), we only need to show that \(\displaystyle {\mathscr {M}}v\) is Hölder continuous with exponent \(\displaystyle \kappa \), that is,

for \(0<\kappa <1-\alpha \). Let us analyze \(\displaystyle {\mathscr {M}}v(x_1)-\displaystyle {\mathscr {M}}v(x_2)\), with \(\displaystyle -1\le x_1< x_2\le 1.\) We have

where \(\displaystyle c^*=\sigma \left( \frac{T^{\frac{1}{\sigma }}}{2}\right) ^{\sigma (\beta -\alpha +1)}\) and

Using the variable transformation \(\varsigma =(s+1)^{\sigma }\) we obtain the following bounds for \(\displaystyle |E_1|\):

where \(\eta =\min \{1,\sigma (1-\alpha )\}\) and \(\sigma (1-\alpha )>1-\alpha \). From the last inequality, and using the facts that \(\sigma >1\), \(-1\le x_1<x_2\le 1\) and \(0<\kappa <1-\alpha ,\) we obtain

A bound for \(\displaystyle |E_2|\) can be obtained as follows

From the above inequality and by similar arguments to the ones used to bound \(\displaystyle |E_1|\), it follows that

From (35) and (36) we obtain (34). \(\square \)

We now need a result on the regularity of the kernel \(k(x,\theta )\), defined by (20).

Lemma 7

Consider (9), with \(\displaystyle \overline{f} \in C^m([0,T^{1/\sigma }]), \overline{g}\in C^m(\Delta _{T^{1/\sigma }}\times {\mathscr {D}}), m\ge 1\). Let \(\displaystyle \left\{ x_i\right\} _{i=0}^N\) be the set of the \(N+1\) zeros of the Jacobi polynomial \(\displaystyle J_{N+1}^{-\alpha ,~-\alpha }\) of degree \(N+1\). Then, we have that

Thus, there exist \(\displaystyle K_p^*>0,~ p=0,1,\ldots ,m\), and \(K^{**}\) so that

and

Proof

Let \(\displaystyle \varsigma =(\beta +1)\sigma +\alpha -1-m\). From Sect. 2, we know that the integer \(\sigma \) can be chosen to satisfy \(\displaystyle {\sigma >\frac{m}{1+\beta - \alpha }},\) which implies

Since \(\alpha \in ]0,1[\), the last inequality gives \(\varsigma \ge 0\). It is then straightforward to prove that the functions \(\displaystyle \frac{\partial ^{p}}{\partial \theta ^p}k(x_i,\theta ),~p=0,1,\ldots , m\), are continuous.

We have

and this gives (37). The inequality (38) is easily obtained by using the definition of seminorm in (27). \(\square \)

4 Convergence Analysis

In this section we analyze the convergence of the approximate solution obtained by the Jacobi collocation scheme (25) to the exact solution of the integral equation (21).

Error estimate in \(L^{\infty }\)

Theorem 1

Assume that in (1) the nonlinear function g and all its derivatives up to order m satisfy a local Lipschitz condition. In (9) let \(\displaystyle \overline{f} \in C^m([0,T^{1/\sigma }])\), \(\overline{g}\in C^m(\Delta _{T^{1/\sigma }}\times {\mathscr {D}})\), for some \(m\in \mathbb {N}\).

Let Y be the exact solution of the Volterra integral equation (18) and let \(\displaystyle U_N\) be the approximate solution of (18) obtained by the Jacobi collocation scheme (25).

Then for \(Y\in H^m_{{\omega }^{{-\alpha },{-\alpha }}}(\varLambda )\cap H^m_{{\omega }^{C}}(\varLambda )\) we have

where \({\bar{\chi }}_0,{\bar{\chi }}_1\) and \({\bar{\chi }}_2\) are given by (67) and (69) and can be bounded by constants that do not depend on N.

Proof

At the collocation points \(x=x_i\) we have \(\displaystyle U_N(x_i)=\hat{U}_N(x_i)\). Then, by subtracting (25) from (21) we obtain

Let \( \displaystyle e(x)=Y(x) - {U_{N}}(x)\), then we will have

where

Multiplying both sides of (41) by \(L_i(x)\) and summing from \(i=0\) to \(i=N \) yields

Let

and

Then, after adding and subtracting \(\displaystyle \widetilde{\varphi }(x), \displaystyle \varphi (x)\) and \(\displaystyle Y(x)\) on the right-hand side of (42), it follows that

Using the fact that the nonlinear function g satisfies a local Lipschitz condition on [0, T] we have

where

For \(\sigma >1\) the kernels \(\widetilde{k}(x,\tau )\) and \(k(x,\theta )\), defined by (19) and (20), respectively, are continuous for \(\tau \in [-1,x]\, \mathrm{and}\, \theta , x \in \varLambda \), which implies that they are bounded in their respective domains. Thus, from (45) we have

Then, using a standard Gronwall inequality we have

In what follows we bound \(\displaystyle \Vert I_j\Vert _{\infty }, j = 1, \ldots , 5\).

In order to simplify the notation, we consider two cases separately: \(\displaystyle \frac{1}{2}\le \alpha <1\) and \(\displaystyle 0<\alpha <\frac{1}{2}.\)

-

Case 1: \(\displaystyle \frac{1}{2}\le \alpha <1\)

Bound for \(\Vert I_1\Vert _{\infty }\)

In order to bound \(\Vert I_1\Vert _{\infty }\) we use a result from [5]. Let \(I_N^CY\in {\mathscr {P}}_N\) be the interpolant of Y at any of the three families of Chebyshev-Gauss points. Then, from [5] we have

where \(\displaystyle w^{C}(x)=w^{-\frac{1}{2},-\frac{1}{2}}(x)\) is the Chebyshev weight function.

Noting that \(I_N^{-\alpha ,-\alpha }p(x)=p(x),\quad \forall ~ p\in {\mathscr {P}}_N\) and by adding and subtracting \(\displaystyle I_N^CY\), we obtain, for \(\frac{1}{2}< \alpha <1\),

Using Lemma 3 and inequality (52) leads to

Thus

Bound for \(\displaystyle \Vert I_2\Vert _{\infty }\)

Recall from (41) that

Hence, by using (29) and (31), we have

where \(\displaystyle K^{**}\) is defined in Lemma 7.

Combining (54) with Lemma 3 yields

Bound for \(\displaystyle \left\| I_3\right\| _{\infty }\)

Let us consider the operator \({\mathscr {M}}\) defined by (33) with \(\mu =-\alpha \) and \(\displaystyle v(x)= g(U_{N}(x))-I_N^{-\alpha ,-\alpha }g(U_{N}(x))\).

From Lemma 6 the function \(\displaystyle {\mathscr {M}}v\) is Hölder continuous with exponent \(\displaystyle 0<\kappa <1-\alpha \). Then \(\displaystyle {\mathscr {M}}v \in {\mathscr {C}}^{0,\kappa }(\varLambda ) \), and from Lemma 5 there exists a constant \(\displaystyle c_{0,\kappa }\) and a polynomial function \(\displaystyle {\mathscr {T}}_N({\mathscr {M}}v) \in {\mathscr {P}}_N\) such that (32) is valid, thus we obtain

From Lemma 6, with \(0<\kappa <1-\alpha \), it follows

By using the same procedure as in the bounding of \(\Vert I_1\Vert _{\infty }\) we can obtain the following bound

By using the definition of seminorm (27) we have

Since the nonlinear function g and its derivatives of orders \(\displaystyle 1,2,\ldots ,m\) satisfy a Lipschitz condition, we have

Therefore, combining (58) with (57) yields

From (57), (59) and Lemma 3, we obtain

Finally, using (60) and Lemma 5 in (56), we have

Bound for \(\displaystyle \Vert I_4\Vert _{\infty }\)

Similarly to \(\Vert I_3\Vert _{\infty }\), we again consider the operator \({\mathscr {M}}\) defined by (33), with \(\mu =-\alpha \) but now with \(v(x)=g(Y(x))-g(U_{N}(x))\). Then we can rewrite \(I_4\) as follows

From (62) and Lemma 5, with \(r=0\) and \(\kappa \in (0,1-\alpha )\), we have

On the other hand, from Lemmas 3 and 6, with \(0<\kappa <1-\alpha \), and using the fact that g is Lipschitz continuous, we obtain

Bound for \(\displaystyle \Vert I_5\Vert _{\infty }\)

We have

where \(\displaystyle K=\max _{(\tau ,x)\in [-1,x]\times \varLambda }|\widetilde{k}(x,\tau )| \). From (60) we obtain

Finally, using the bounds (53), (55), (61), (64) and (65) in (51), then, for sufficiently large N, we obtain that

with \(\displaystyle \chi _0\) and \(\displaystyle \chi _1\) given by

and the desired result follows.

-

Case 2: \(\displaystyle 0<\alpha <\frac{1}{2}\)

In this case, taking into account Lemma 3 and using arguments similar to those employed for Case 1, we obtain the following estimates:

Therefore, using the above inequalities in (51) we obtain the desired result:

with \(\displaystyle {\bar{\chi }}_2\) given by

The proof of Theorem 1 is now complete. \(\square \)

Error estimate in \(L^{2}(\varLambda )\)

To obtain an error estimate in the weighted \(L^2\) norm, we need a generalized Hardy inequality with weights [8].

Lemma 8

For all measurable functions \(f\ge 0\), the generalized Hardy’s inequality

holds if and only if

Here \(\displaystyle T\) is an operator of the form

where k(x, t) is a given kernel, u and v are weight functions, and \(- \infty \le a < b \le \infty \).

We will need the following Gronwall-type inequality [15].

Lemma 9

Suppose that \(\displaystyle L\ge 0, \displaystyle 0<\alpha <1\). Let v(x) be a non-negative, locally integrable function defined on \(\varLambda \) satisfying

Then, there exists a constant \(\displaystyle C\) such that

Theorem 2

Assume that in (1) the nonlinear function g and all its derivatives up to order m satisfy a local Lipschitz condition. In (9) let \(\displaystyle \overline{f} \in C^m([0,T^{1/\sigma }])\), \(\overline{g}\in C^m(\Delta _{T^{1/\sigma }}\times {\mathscr {D}})\), for some \(m\in \mathbb {N}\).

Let Y be the exact solution of the Volterra integral equation (18) and let \(\displaystyle U_N\) be the approximate solution of (18) obtained by the Jacobi collocation scheme (25). Then, for \(Y\in H^m_{{\omega }^{{-\alpha },{-\alpha }}}(\varLambda )\cap H^m_{{\omega }^{C}}(\varLambda )\) we have

where \(\rho _0,\rho _1\) and \(\rho _2\) are given by (81), (82) and (83), respectively, and can be bounded by constants that do not depend on N.

Proof

Using the generalization of Gronwall’s inequality (see Lemma 9) in (45), we obtain

with \(\displaystyle I_1,~I_2,~I_3,~I_4\) and \(\displaystyle I_5\) defined by (46), (47), (48), (49) and (50), respectively. Then

Now, by using Hardy’s inequality (70), we will have the following bound

Bound for \(\displaystyle {\left\| I_1 \right\| _{{w^{-\alpha ,-\alpha }}}}\)

From (28) it follows

Bound for \(\displaystyle {\left\| I_2 \right\| _{{w^{-\alpha ,-\alpha }}}}\)

From (55), (54) and Lemma 4 we obtain

with \(K^{**}\) defined by (38).

Bound for \(\displaystyle {\left\| I_3\right\| _{{w^{-\alpha ,-\alpha }}}}\)

Let \(\displaystyle v(x)=g(U_N(x))-I_N^{-\alpha ,-\alpha }g(U_N(x))\). Similarly to the arguments used for the infinity norm, for \(\alpha \in [\frac{1}{2},1)\), we use (60) and Lemma 3 to obtain

On the other hand, if \(\alpha \in (0,\frac{1}{2})\), from Lemma 3 it follows that

Bound for \(\displaystyle {\left\| I_4\right\| _{{w^{-\alpha ,-\alpha }}}}\)

By similar arguments to the ones used to bound \(\displaystyle {\left\| I_3\right\| _{{w^{-\alpha ,-\alpha }}}}\), but now with \(\displaystyle v(x)=g(Y(x))-g(U_N(x))\), we obtain

Bound for \(\displaystyle {\left\| I_5\right\| _{{w^{-\alpha ,-\alpha }}}}\)

By using the inequalities (28) and (59), it follows that

with \(K_0^*\) defined by (37). Then, using (75), (76), (77), (79) and (80) in (74), we obtain, for sufficiently large N, that

with \(\rho _0, \rho _1\) given by

On the other hand, for \(\alpha \in (0,\frac{1}{2})\), using (75), (76), (78), (79) and (80) in (74), for sufficiently large N, we have

with \(\displaystyle \rho _2\) given by

The proof of Theorem 2 is complete. \(\square \)

5 Numerical Results

The Jacobi collocation method has been applied to three examples on the interval [0, T], with \(T=1\). The numerical results in the tables, together with the semi-logarithmic error graphs, illustrate the performance of the method. In the first two examples the exact solution is not known. To compute the absolute errors \( |Y(x)-U_N(x) |=|\bar{y}(t)-U_N(t)|\), with \(t=T^{\frac{1}{\sigma }}\frac{x+1}{2}\), we have therefore taken the approximation \(U_N(t)\) obtained by the Jacobi collocation method with \(N=32\) as the exact solution \(\bar{y}(t)\). To estimate the \(L^{\infty }\) error, we have computed the absolute error at the points \(x_i= - 1 + 2\frac{i - 1}{1000},\, i=1,\ldots ,1001\).
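The error measures described above can be sketched as follows. The 1001-point grid is the one given in the text. The text does not say how the weighted \(L^2\) norm was evaluated, so computing it with a high-order Gauss-Jacobi rule (64 points here) is an assumption. The argument `exact` stands for the reference solution, as a callable on \([-1,1]\); for Examples 1 and 2 this would be the \(N=32\) approximation evaluated through its interpolant. The helper names are hypothetical.

```python
import numpy as np
from scipy.special import roots_jacobi


def lagrange_eval(nodes, values, pts):
    """Evaluate sum_i values[i] * L_i(pts) using the product form of the Lagrange basis."""
    out = np.zeros_like(pts, dtype=float)
    for i in range(nodes.size):
        Li = np.ones_like(pts, dtype=float)
        for j in range(nodes.size):
            if j != i:
                Li *= (pts - nodes[j]) / (nodes[i] - nodes[j])
        out += values[i] * Li
    return out


def collocation_errors(exact, nodes, values, a1, b1, n_quad=64):
    """Return (max error on the 1001-point grid, weighted L2 error in the omega^{a1,b1} norm)."""
    grid = -1.0 + 2.0 * np.arange(1001) / 1000.0     # x_i = -1 + 2(i-1)/1000, i = 1, ..., 1001
    err_inf = np.max(np.abs(exact(grid) - lagrange_eval(nodes, values, grid)))
    xq, wq = roots_jacobi(n_quad, a1, b1)            # assumption: high-order Gauss-Jacobi rule
    e = exact(xq) - lagrange_eval(nodes, values, xq)
    err_l2 = np.sqrt(np.sum(wq * e ** 2))
    return err_inf, err_l2
```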

The first example is given by Lighthill’s equation [17].

Example 1

In [10] it is shown that y is smooth away from zero. Near the origin it admits the following series solution:

for \(z \in [0,R)\), where R is a positive number satisfying \(R < 0.070784\). By using the variable transformation \(\displaystyle x = {s^{3}}\), and then setting \(z= t^{3}\), we obtain

where \(\displaystyle \overline{y}(t)=y(t^{3})\). The solution of the transformed equation (86) is then smooth near the origin, and therefore the Jacobi collocation method can be applied to the new equation.
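For Example 1, the effect of the substitution on the kernel can be written out explicitly using \(\alpha=2/3\), \(\beta=1/3\) and \(g(y)=y^4\) from Sect. 1. This is a worked restatement in the notation of (1), with \(z=t^3\):

```latex
\[
x=s^{3},\quad dx=3s^{2}\,ds,\quad x^{1/3}=s
\;\Longrightarrow\;
\int_0^{z}\frac{x^{1/3}}{(z-x)^{2/3}}\,y^{4}(x)\,dx
=3\int_0^{t}\frac{s^{3}}{(t^{3}-s^{3})^{2/3}}\,\overline{y}^{\,4}(s)\,ds
=3\int_0^{t}\frac{s^{3}}{(t-s)^{2/3}\,(t^{2}+ts+s^{2})^{2/3}}\,\overline{y}^{\,4}(s)\,ds .
\]
```

Here \(\sigma(1+\beta-\alpha)=3\cdot\frac{2}{3}=2\) is an integer. This agrees with the rule \(\sigma=q\) for \(1+\beta-\alpha=p/q=2/3\).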

Example 2

In this case it can be shown that \(y(t) \sim t^{5/6}\) for t near the origin (cf. [1]). By using the variable transformation \( x = {s^6}\) and setting \(z = {t^6}\), then (87) can be rewritten as

where \(\displaystyle \overline{y}(t)= y(t^6).\)

Example 3

This example has been taken from [2] and the exact solution of (89) is \(\displaystyle y(z) = \sqrt{z} \). Thus, using the variable transformation \(\displaystyle x = {s^2}\) and setting \( z = {t^2}\), then (89) is transformed into

with \(\displaystyle \overline{y}(t) = y({t^2}) =t.\)

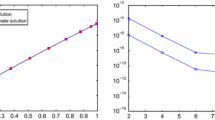

The results in Table 1 illustrate the performance of the Jacobi collocation method applied to equations (84) and (86). As expected, the values show a large improvement when the method is applied to the transformed equation, whose solution is smooth. Fig. 1 plots the logarithms of the errors in the \(L^{\infty }\) and \(L^2\) norms against N, the degree of the approximating polynomial (that is, \(N+1\) collocation points). The results confirm the exponential rate of convergence of the method for equation (86).

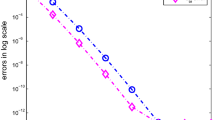

In order to obtain approximate solutions to the equations (87) and (89), the Jacobi collocation method has been applied to their respective transformed equations: (88) (with \(\displaystyle \alpha _1=\beta _1=-\frac{1}{2}\)) and (90) (with \(\displaystyle \alpha _1=\beta _1=-\frac{3}{4}\)). Table 2 shows the errors of the approximate solutions of (88) and (90) in \(L^{\infty }\) and weighted \(L^2\) norms. In Fig. 2, for Examples 2 and 3, we have plotted the logarithm of the absolute errors in both \(L^{\infty }\) and \(L^2\) norms, versus N. Again the exponential rate of convergence is observed for both nonlinear problems.

6 Conclusions

In this work we have considered a spectral Jacobi-collocation approximation for a class of nonlinear Volterra integral equations defined by (1), which has recently been introduced in [1]. When the underlying solutions of the VIEs have a nonsmooth behaviour at the origin, we first use an appropriate change of the independent variable in order to obtain an equivalent equation with smooth solution. Then the proposed method is applied to the transformed equation. We have provided a convergence analysis of the method in the weighted \(L^2\) and \(L^{\infty }\) norms and numerical results were presented to confirm the theoretical prediction of the exponential rate of convergence.

References

Seyed Allaei, S., Diogo, T., Rebelo, M.: Analytical and computational methods for a class of nonlinear singular integral equations (Submitted)

Baratella, P.: A Nyström interpolant for some weakly singular nonlinear Volterra integral equations. J. Comput. Appl. Math. 237, 542–555 (2013)

Brunner, H.: Collocation Methods for Volterra Integral and Related Functional Equations. Cambridge University Press, Cambridge (2004)

Chambré, P.L.: Nonlinear heat transfer problem. J. Appl. Phys. 30, 1683–1688 (1959)

Canuto, C., Hussaini, M.Y., Quarteroni, A., Zang, T.A.: Spectral Methods: Fundamentals in Single Domains. Springer-Verlag, Berlin (2006)

Chambré, P.L., Acrivos, A.: Chemical surface reactions in laminar boundary layer flows. J. Appl. Phys. 27, 1322 (1956)

Chen, Y., Li, X., Tang, T.: A note on Jacobi spectral-collocation methods for weakly singular Volterra integral equations with smooth solutions. J. Comput. Math. 31, 47–56 (2013)

Chen, Y., Tang, T.: Spectral methods for weakly singular Volterra integral equations with smooth solutions. J. Comput. Appl. Math. 233, 938–950 (2009)

Chen, Y., Tang, T.: Convergence analysis of the Jacobi spectral-collocation methods for Volterra integral equations with a weakly singular kernel. Math. Comput. 79, 147–167 (2010)

Diogo, T., Ma, J., Rebelo, M.: Fully discretized collocation methods for nonlinear singular Volterra integral equations. J. Comput. Appl. Math. 247, 84–101 (2013)

Diogo, T., McKee, S., Tang, T.: Collocation methods for second-kind Volterra integral equations with weakly singular kernels. Proc. R. Soc. Edinb. 124A, 199–210 (1994)

Diogo, T., Lima, P.M., Rebelo, M.S.: Comparative study of numerical methods for a nonlinear weakly singular Volterra integral equation. HERMIS J. 7, 1–20 (2006)

Elnagar, G.N., Kazemi, M.: Chebyshev spectral solution of nonlinear Volterra–Hammerstein integral equations. J. Comp. Appl. Math. 76, 147–158 (1996)

Guo, H., Cai, H., Zhang, X.: A Jacobi-collocation method for second kind Volterra integral equations with a smooth kernel, Abstr. Appl. Anal. 2014, (2014)

Li, X., Tang, T.: Convergence analysis of Jacobi spectral Collocation methods for Abel–Volterra integral equations of second-kind. J. Front. Math. China. 7, 69–84 (2012)

Li, X., Tang, T., Xu, C.: Numerical solutions for weakly singular Volterra integral equations using Chebyshev and Legendre pseudo-spectral Galerkin methods. J. Sci. Comput. doi:10.1007/s10915-015-0069-5

Lighthill, J.M.: Contributions to the theory of the heat transfer through a laminar boundary layer. Proc. R. Soc. Lond. 202(A), 359–377 (1950)

Mann, W.R., Wolf, F.: Heat transfer between solids and gases under nonlinear boundary conditions. Quart. Appl. Math. 9, 163–184 (1951)

Padmavally, K.: On a non-linear integral equation. J. Math. Mech. 7, 533–555 (1958)

Ragozin, D.L.: Polynomial approximation on compact manifolds ans homogeneous spaces. Trans. Am. Math. Soc. 150, 41–53 (1970)

Ragozin, D.L.: Polynomial approximation on spheres manifolds and projective spaces. Trans. Am. Math. Soc. 162, 157–170 (1971)

Rebelo, S.M., Diogo, T.: A hybrid collocation method for a nonlinear Volterra integral equation with weakly singular kernel. J. Comput. Appl. Math. 234, 2859–2869 (2010)

Shen, J., Tang, T., Wang, L.: Spectral Methods Algorithms. Analysis and Applications. Springer-Verlag, Berlin (2011)

Tang, T., Xu, X., Chen, J.: On spectral methods for Volterra type integral equations and the convergence analysis. J. Comput. Math. 26, 825–837 (2008)

Vainikko, G.: Cordial Volterra integral equations 1. Numer. Funct. Anal. Optim. 30, 1145–1172 (2009)

Vainikko, G.: Spline collocation-interpolation method for linear and nonlinear cordial Volterra integral equations. Numer. Funct. Anal. Optim. 32, 83–109 (2011)

Xie, Z., Li, X., Tang, T.: Convergence analysis of spectral Galerkin methods for Volterra type integral equations. J. Sci. Comput. 53, 414–434 (2012)

Yang, Y., Chen, Y., Huang, Y., Yang, W.: Convergence analysis of Legendre collocation methods for nonlinear Volterra type integro equations. Adv. Appl. Math. Mech. 7, 74–88 (2013)

Acknowledgments

This work was partially supported by Fundação para a Ciência e a Tecnologia (Portuguese Foundation for Science and Technology), through the projects Pest-OE/MAT/UI0822/2014 and PTDC/MAT/101867/2008. The research of the first author (S. Seyed Allaei) was also co-financed by the Hong Kong Research Grants Council (RGC Project HKBU 200113 and 1369648). The work of the third author was also partially supported by the FCT Project UID/MAT/00297/2013 (Centro de Matemática e Aplicações). The first author would like to thank Professor Hermann Brunner for his valuable suggestions and constructive discussions.

Cite this article

Allaei, S.S., Diogo, T. & Rebelo, M. The Jacobi Collocation Method for a Class of Nonlinear Volterra Integral Equations with Weakly Singular Kernel. J Sci Comput 69, 673–695 (2016). https://doi.org/10.1007/s10915-016-0213-x

Keywords

- Jacobi spectral collocation method

- Nonlinear Volterra integral equation

- Weakly singular kernel

- Convergence analysis