Abstract

We focus on the convex semi-infinite program with second-order cone constraints (for short, SOCCSIP), which has wide applications such as filter design, robust optimization, and so on. For solving the SOCCSIP, we propose an explicit exchange method, and prove that the algorithm terminates in a finite number of iterations. In the convergence analysis, we do not need to use the special structure of second-order cone (SOC) when the objective or constraint function is strictly convex. However, if both of them are non-strictly convex and constraint function is affine or quadratic, then we have to utilize the SOC complementarity conditions and the spectral factorization techniques associated with Euclidean Jordan algebra. We also show that the obtained output is an approximate optimum of SOCCSIP. We report some numerical results involving the application to the robust optimization in the classical convex semi-infinite program.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The semi-infinite program (SIP) [11, 16, 29] is a constrained optimization with the feasible region defined by means of infinitely many inequality constraints. This problem has strong practical backgrounds in engineering problems such as optimal control, optimal filter design in signal processing, resource allocation in decentralized systems, decision making under competition, least square problems, equilibrium problems, and so on [12, 23, 35, 36]. However, the SIP is much more difficult than the standard nonlinear program (NLP) since the infinitely many inequality constraints have to be dealt with.

So far, many numerical algorithms have been proposed for solving SIPs (see [18, 21, 28, 30] and references therein). A typical algorithm for solving an SIP generates a sequence of finitely constrained subproblems that can be solved by some standard algorithms for NLPs. Existing methods for SIPs can be roughly divided into three types: exchange methods [19, 28], discretization methods, and reduction-based methods (see, e.g. [28, 31] and references therein). The exchange and the discretization methods are numerically expensive in general since the cost per iteration increases dramatically as the cardinality of the auxiliary problem grows. The reduction-based methods, on the other hand, require strong assumptions for global convergence, and are often conceptual methods which can be implemented in a rather simplified form merely. Hence the exchange and the discretization methods are often used only for the first stage of the solution process, whereas the reduction-based methods are typically employed only in the final stage in order to provide a higher accuracy of the solution and a better rate of convergence. Wu et al. [33] proposed an iterative method for solving KKT systems of the SIP in which they drop some redundant points at certain iterations. Qi et al. [27] and Li et al. [20] presented semismooth/smoothing Newton methods in which the index set \(\Omega \) is specified by \(\Omega =\{s\,|\,c_j(s)\le 0\,(j=1,\ldots ,r)\}\) with twice continuously differentiable functions \(c_j:\mathbb {R}^m \rightarrow \mathbb {R}\ (j=1,\ldots ,r)\). They proved that their algorithms have nice convergence properties, but those two methods cannot ensure the feasibility. For linear SIPs, Lai and Wu [19] proposed the explicit algorithm in which they solve a linear program with a finite index set \(E_k\). They dropped out redundant points in \(E_k\) at each iteration and only kept active points ensuring \(|E_k|\le n\) for each k, and hence the algorithm is very efficient in saving the computational time.

In this paper, we focus on the following convex semi-infinite program with “second-order cone constraints” (SOCCSIP):

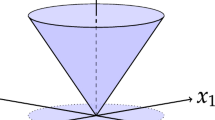

where \(f:\mathbb {R}^n\rightarrow \mathbb {R}\) is continuously differentiable and convex, \(c:\mathbb {R}^{n+m}\rightarrow \mathbb {R}\) is continuously differentiable and convex with respect to x, \(\Omega \subseteq \mathbb {R}^m\) is a given compact set playing a role of index set, and \(\mathcal{K}\) is the Cartesian product of several second order cones (SOCs), i.e., \(\mathcal{K}=\mathcal{K}^{n_1}\times \cdots \times \mathcal{K}^{n_m}\) with \(n=n_1+\cdots +n_m\) and

In the remainder of the paper, the Euclidean norm \(\Vert \cdot \Vert _2\) is denoted as \(\Vert \cdot \Vert \).

The SOCCSIP can be applied to many practical problems. One possible application is a robust optimization problem [2–4, 26] with spherical or ellipsoidal uncertainty. Since the typical example will be given in Sect. 5, we omit the details here. Another application is the finite impulse response (FIR) filter design problem. Let \(h_1,h_2,\ldots ,h_{2N}\in \mathbb {R}\) be the coefficients (impulse response) of a FIR filter with length 2N. Then the frequency response function \(H:[0,2\pi ]\rightarrow {\mathbb {C}}\) is defined as \(H(\omega ):=\sum _{k=1}^{2N}h_ke^{-j(k-1)\omega }\), where \(j:=\sqrt{-1}\). Suppose that the filter coefficients have symmetric structure, i.e., \(x_k:=h_k=h_{2N+1-k}\) for \(k=1,\dots ,N\). According to the calculation steps in [22, Sect. 3.3], we obtain \(H(\omega )=e^{-j({N}-\frac{1}{2})}\tau (\omega )^{\top }x\) with \(x:=(x_1,x_2,\ldots ,x_{N})^{\top }\) and \( \tau (\omega ):=2 (\cos ({N}-\frac{1}{2})\omega ,\, \cos ({N}-\frac{3}{2})\omega ,\ldots , \cos \frac{1}{2}\omega )^{\top }. \) We also have \(|H(\omega )|=|\tau (\omega )^{\top }x|\) since \(|e^{-j({N}-\frac{1}{2})}|=1\). Now, we aim to design the filter as follows: (i) we let the filter magnitude \(|H(\omega )|\) be upper-bounded by \(\beta >0\) in the stopband \(\omega \in [\omega _s,\pi ]\); (ii) we let \(|H(\omega )|\) be as close as possible to 1 in logarithmic scale in the passband \(\omega \in [0,\omega _p]\). Then, the FIR filter design problem is formulated as

which can be reformulated equivalently as

by introducing the auxiliary variables \(u,v\in \mathbb {R}\) and \(y\in \mathbb {R}^3\). (More detailed reformulation techniques are found in [22]). This problem is certainly of the form SOCCSIP (1.1).

Since \(x\in \mathcal{K}^n\Leftrightarrow u^\top x\ge 0\quad (\forall u\in U)\) with \(U=\{u\in \mathbb {R}^n\,|\,u_1=\Vert (u_2,\ldots ,u_n)\Vert =1\}\), the SOC constraint can be rewritten equivalently by an infinite number of linear constraints. Thus, SOCCSIP (1.1) can be cast as a standard convex SIP and existing SIP solvers can be applied. However, this approach is not sensible due to the following two aspects.

-

(i)

The dimension of the index set increases from m to \(n+m\). (When \(\mathcal{K}=\mathcal{K}^n\), the index set of the equivalent convex SIP becomes \(\Omega \oplus U\)).

-

(ii)

Some nice structures of the SOC such as the self-duality is destroyed, and hence the Euclidean Jordan algebra cannot be applied.

Indeed, the numerical results in [13] point out that the SOC constraint should not be handled as infinitely many linear constraints, but should be handled in a direct manner, especially when the dimension of the SOC is large.

The main goal of the paper is to design an explicit exchange method for solving SOCCSIP (1.1) and study its convergence properties. The algorithm inherits Lai and Wu’s explicit exchange technique [19], but we also introduce the relaxed scheme in which a maximization problem with respect to \(s\in \Omega \) need not be solved in each iteration. (It suffices to find some \(s\in \Omega \) such that a certain criterion with small \(\eta >0\) is satisfied. This scheme is also installed in the algorithms proposed in [13, 37]). When either of f or \(c(\cdot ,s)\) is strictly convex, we can obtain a finite convergence result without using the special structure of SOCs (Sect. 4.2). Therefore, even when both of f and \(c(\cdot ,s)\) are non-strictly convex, we can have the “approximate optimum” by solving the perturbed SOCCSIP in which the objective function is replaced by \(f(x)+\varepsilon \Vert x\Vert ^2\) with a very small \(\varepsilon >0\) (Sect. 4.4). However, without using such a regularization technique, we can have the finite convergence result when \(c(\cdot ,s)\) is affine or quadratic (Sect. 4.3). In this case, we have to utilize the SOC complementarity conditions and the spectral factorization techniques associated with Euclidean Jordan algebra. In the classical studies on the cutting plane or the exchange type methods (see [18, 21, 23, 28, 32] and references therein), the convergence properties were analyzed in a componentwise manner. However, such analyses does not make sense for SOCCSIP anymore since the SOC does not have componentwisely independent structure.

Also, we have to mention the related works [13, 25, 37] published recently. The topics and the ideas studied in those papers are similar to our work in some parts. However, they are different in other parts, which are summarized as follows.

-

When f and \(c(\cdot ,s)\) are affine, SOCCSIP (1.1) reduces to the SOCLSIP studied in [13]. However, the results for SOCLSIP cannot be applied to SOCCSIP (1.1) directly since, in case of SOCCSIP, we have to evaluate the residual values \(f(x^{k+1})-f(x^k)-\nabla _{x}f(x^k)^{\top }(x^{k+1}-x^k)\) and \(c(x^{k+1},s)-c(x^k,s)-\nabla _{x}c(x^k,s)^{\top }(x^{k+1}-x^k)\) in each iteration, which are absent when f and \(c(\cdot ,s)\) are affine.

-

In [25], the authors study the regularized explicit exchange method for solving SIP with infinitely many conic constraints. However, they analyze the convergence property of the algorithm only for the problems whose constraint function is affine. (See Sects. 3 and 4 in [25]). On the other hand, we consider more general cases. Moreover, the regularization technique is not installed in our algorithm when \(c(\cdot ,s)\) is affine or quadratic.

-

In [37], the authors propose the explicit exchange method, in which they introduce the finite index set \(\Omega _0\subset \Omega \) whose elements are never dropped throughout the iterations. We also use the same technique in the algorithm. However, [37] only focuses on the case where either f or \(c(\cdot ,s)\) is strictly convex, whereas we study the case where both f and \(c(\cdot ,s)\) are non-strictly convex.

This paper is organized as follows. In Sect. 2, we give some preliminaries needed for the later analyses. In Sect. 3, we develop an explicit exchange method for solving SOCCSIP (1.1). In Sect. 4, we establish the convergence analysis of the proposed algorithm. In Sect. 5, we give some numerical results.

2 Preliminaries

In this section, we give some fundamental knowledge on SOCCSIP (1.1) and the SOC which will be necessary in the subsequent sections.

Throughout the paper, we suppose that SOCCSIP (1.1) satisfies the following assumption.

Assumption A

(i) The optimum set of SOCCSIP (1.1) is nonempty and compact. (ii) It holds \(\inf \{f(x)\,|\,x\in \mathcal{K}\}<\inf \{f(x)\,|\,x\in \mathcal{K},\,c(x,s)\le 0\;(\forall s\in \Omega )\}\). (iii) There exists an \({\overline{x}}\in \mathcal{K}\) such that \(c({\overline{x}},s)<0\) for all \(s\in \Omega \).

This assumption is expected to hold in most cases. Assumption A (i) obviously holds when the optimum set is a singleton. Assumption A (ii) claims that there exists at least one index \(\overline{s}\in \Omega \) such that the inequality constraint \(c(x,\overline{s})\le 0\) is strictly active at the optimum. Therefore, if this assumption does not hold, then the infinite constraint \(c(x,s)\le 0\ (\forall s\in \Omega )\) in SOCCSIP (1.1) does not make sense anymore. Assumption A (iii) is nothing more than the well-known Slater condition. Under this assumption we have the following theorem, which can be proved by using [5, Proposition 2.3.1], [1, Lemma 3.1], and the convergence theorem for the discretization method [28, Chap. 7].

Theorem 2.1

Suppose that Assumptions A (i) and A (ii) hold. Then, there exists \(\Omega _0=\{s_1^0,\ldots ,s_{m_0}^0\}\subset \Omega \) such that the following statements hold:

-

f is level-bounded on the set \(\mathcal{K}\cap \left\{ x\,\big |\,c(x,s^0_i)\le 0\ (i=1,2,\ldots ,m_0)\right\} \).

-

\(\inf \left\{ f(x)\bigm |x\in \mathcal{K}\right\} \,<\, \inf \left\{ f(x)\,\big |\,x\in \mathcal{K},\ c(x,s^0_i)\le 0\ (i=1,2,\ldots ,m_0)\right\} \).

We next give some properties on the SOC. We introduce the spectral factorization for a single SOC \(\mathcal{K}^\ell \) in Euclidean Jordan algebra [9, 10]. For any vector \(y:=(y_1,\tilde{y})\in \mathbb {R}\times \mathbb {R}^{\ell -1}\) with \(\ell \ge 2\), its spectral factorization is defined by

where \(\lambda _i(y)\in \mathbb {R}\) and \(v_i(y)\in \mathbb {R}^\ell \) \((i=1,2)\) are the spectral values and vectors, respectively, defined by

Here, \(w\in \mathbb {R}^{\ell -1}\) is an arbitrary vector with \(\Vert w\Vert =1\). It is obvious that

and

Now, we study the relation between the complementarity on SOCs and the spectral factorization. The vectors \(x\in \mathbb {R}^n\) and \(z\in \mathbb {R}^n\) are said to satisfy the second-order cone complementarity if

It is easily seen that (2.2) holds if and only if

where \(x_j\) and \(z_j\) denote the Cartesian subvectors of x and z, respectively, i.e.,

Moreover, by [13, Prop. 2.3], we have

3 The Explicit Exchange Method for SOCCSIP

In this section, we propose an explicit exchange method for solving SOCCSIP (1.1) and give some fundamental properties. In each iteration of the algorithm, we solve a finitely constrained convex program as a subproblem. For a finite set \(E=\{s_1,\ldots ,s_m\} \subset \Omega \), let \(\mathrm{CSOCP}(E)\) denote the finitely constrained convex second-order cone program defined as

Then, the first-order optimality condition of \(\mathrm{CSOCP}(E)\) is given by

where \(\nu (s_j) \,\,(j=1,\ldots ,m)\) denotes the Lagrange multiplier corresponding to the constraint \(c(x,s_j) \le 0\). For more details on the optimality conditions, see e.g., [6, 7]. Actually, (3.1) reduces to a monotone second-order cone complementarity problem (SOCCP), which can be solved by existing algorithms [8, 10, 14, 15, 24]. Nonlinear second-order cone program such as \(\mathrm{CSOCP}(E)\) is also studied by some researchers [17, 34], but they are still immature.

The concrete scheme of the algorithm is written as follows.

Algorithm 1

- Step 0. :

-

Find a finite index set \(\Omega _0=\{s_1^0,\ldots ,s_{m_0}^0\}\) satisfying Theorem 2.1, and let \(E_0\) be such that \(\Omega _0\subset E_0\subset \Omega \) and \(|E_0|<\infty \). Solve \(\mathrm{CSOCP}(E_0)\) to obtain its optimum \(x^0\). Choose a small number \(\eta >0\) and set \(k:=0\).

- Step 1. :

-

Find an \(s_{\mathrm{new}}^k \in \Omega \) such that

$$\begin{aligned} c\left( x^k,s_{\mathrm{new}}^k\right) > \eta . \end{aligned}$$(3.2)If such an \(s_{\mathrm{new}}^k\) does not exist, i.e., \(\max _{s\in \Omega }c(x^k,s)\le \eta \), then stop. Otherwise, let

$$\begin{aligned} {\overline{E}}_{k+1}:=E_k \cup \{s_{\mathrm{new}}^k\}. \end{aligned}$$ - Step 2. :

-

Solve \(\mathrm{CSOCP}({\overline{E}}_{k+1})\) to obtain its optimum \(x^{k+1}\) and the Lagrange multipliers \(\{\nu _{k+1}(s)| s \in {\overline{E}}_{k+1}\}\).

- Step 3. :

-

Let

$$\begin{aligned} {E}_{k+1}:=\Omega _0\cup \left\{ s\in {\overline{E}}_{k+1}\setminus \Omega _0\bigm | \nu _{k+1}(s)>0\right\} . \end{aligned}$$Let \(k:=k+1\) and go to Step 1.

In Step 1, it is also possible to choose multiple elements satisfying (3.2). Although we merely deal with the single-point exchange scheme in the following analyses, they are also applicable to multiple exchange type algorithms. In Step 2, \(\mathrm{CSOCP}({\overline{E}}_{k+1})\) can be solved by using an existing method. Step 3 is to remove the constraints that are inactive at the optimum \(x^{k+1}\) and corresponding to \(t\in {\overline{E}}_{k+1}\setminus \Omega _0\). Here, we note that \(x^{k+1}\) also solves \(\mathrm{CSOCP}({E}_{k+1})\). Moreover, the sequence \(\{x^k\}\) is bounded since we have \(\Omega _0\subset {\overline{E}}_{k+1}\) for all k. (See Proposition 4.1 given later).

Next, we define some notations for convenience. For a finite set \(E=\{s_1,\ldots ,s_m\}\subset \Omega \), we denote the feasible set and the optimal value of \(\mathrm{CSOCP}(E)\) by \(\mathcal{F}(E)\subset \mathbb {R}^n\) and \(V(E)\in [-\infty ,+\infty )\), respectively, i.e.,

Moreover, we denote the optimal value of SOCCSIP (1.1) by \(V^*\in \mathbb {R}\), i.e.,

Let \(\{x^k\}\) and \(\{\nu _k(s)\}\) be the sequences generated by Algorithm 1, and \(\{z^k\}\) be defined as

Then, the complementarity slackness condition (3.1) yields

Moreover, by using the spectral factorization for Cartesian subvectors \(x^k_j\) and \(z^k_j\) (see (2.4)), we define \({\hat{x}}^k_{ij}\), \(\hat{z}^k_{ij}\in \mathbb {R}\) and \(\hat{e}^k_{ij}\in \mathbb {R}^{n_j}\) for each k as follows:

-

When \(n_j=1\),

$$\begin{aligned} {\hat{x}}^k_{1j}:={\hat{x}}^k_{2j}:=\frac{1}{2}x^k_j,\quad \hat{z}^k_{1j}:=\hat{z}^k_{2j}:=\frac{1}{2}z^k_j,\quad \hat{e}^k_{1j}:=\hat{e}^k_{2j}:=1. \end{aligned}$$(3.6) -

When \(\Big [n_j\ge 2\) and \(k=1\Big ]\) or \(\Big [n_j\ge 2\), \(k\ge 2\), and \(\Vert \sqrt{2}v_1(x^k_j)-\hat{e}^{k-1}_{1j}\Vert \le \Vert \sqrt{2}v_1(x^k_j)-\hat{e}^{k-1}_{2j}\Vert \Big ]\),

$$\begin{aligned} \begin{array}{l} {\hat{x}}^k_{1j}:=\lambda _1(x^k_j)/\sqrt{2},\quad {\hat{x}}^k_{2j}:=\lambda _2(x^k_j)/\sqrt{2},\quad \hat{z}^k_{1j}:=\lambda _2(z^k_j)/\sqrt{2},\quad \hat{z}^k_{2j}:=\lambda _1(z^k_j)/\sqrt{2},\\ \hat{e}^k_{1j}:=\sqrt{2}v_1(x^k_j)=\sqrt{2}v_2(z^k_j),\quad \hat{e}^k_{2j}:=\sqrt{2}v_2(x^k_j)=\sqrt{2}v_1(z^k_j). \end{array}\nonumber \\ \end{aligned}$$(3.7) -

When \(n_j\ge 2\), \(k\ge 2\), and \(\Vert \sqrt{2}v_1(x^k_j)-\hat{e}^{k-1}_{1j}\Vert >\Vert \sqrt{2}v_1(x^k_j)-\hat{e}^{k-1}_{2j}\Vert \),

$$\begin{aligned} \begin{array}{l} {\hat{x}}^k_{1j}:=\lambda _2(x^k_j)/\sqrt{2},\quad {\hat{x}}^k_{2j}:=\lambda _1(x^k_j)/\sqrt{2},\quad \hat{z}^k_{1j}:=\lambda _1(z^k_j)/\sqrt{2},\quad \hat{z}^k_{2j}:=\lambda _2(z^k_j)/\sqrt{2},\\ \hat{e}^k_{1j}:=\sqrt{2}v_2(x^k_j)=\sqrt{2}v_1(z^k_j),\quad \hat{e}^k_{2j}:=\sqrt{2}v_1(x^k_j)=\sqrt{2}v_2(z^k_j). \end{array}\nonumber \\ \end{aligned}$$(3.8)

Then, we have

for each k. For such factorizations, we have the following proposition

Proposition 3.1

[13, Prop. 3.4] Let \(x_j^k\) and \(z_j^k\) be factorized as (3.9). Then, for each \(i=1,2\), \(j=1,\ldots ,m\), and \(k\ge 1\), the following statements hold.

-

(a)

\(\max ({\hat{x}}^k_{ij},\hat{z}^k_{ij})\ge 0\) and \(\min ({\hat{x}}^k_{ij},\hat{z}^k_{ij})=0\).

-

(b)

(i) \(\Vert \hat{e}^k_{ij}\Vert =1\). (ii) \(\hat{e}^k_{ij}\in {\mathrm{bd}\,}\mathcal{K}^{n_j}\) and \((\hat{e}^k_{1j})^{\top }\hat{e}^k_{2j}=0\) if \(n_j\ge 2\)

-

(c)

\((\hat{e}^k_{ij})^{\top }\hat{e}^{k+1}_{ij}\ge 1/2\).

We also define

Since f and \(c(\cdot ,s)\) are continuously differentiable and convex, we have

4 Convergence Analysis

In this section, we show that the proposed algorithm terminates in a finite number of iterations under some mild conditions. Furthermore, we prove that the last output is sufficiently close to the optimal solution of SOCCSIP (1.1) if the criterion value is sufficiently close to zero.

4.1 Some Technical Propositions

In this subsection, we give some technical propositions that are important and convenient for analyzing the convergence of Algorithm 1.

The following proposition shows the boundedness of the generated sequence. The proof can be obtained easily since Theorem 2.1 guarantees the compactness of \(\{x\,|\,f(x)\le V^*\}\cap \mathcal{F}(\Omega _0)\supset \{x^k\}\), where \(V^*\) is defined by (3.3).

Proposition 4.1

The sequence \(\{x^k\}\) generated by Algorithm 1 is bounded, i.e., there exists \(M>0\) such that \(\Vert {x^k}\Vert \le M\) for all k.

The following proposition evaluates the increment of the optimal value of \(\mathrm{CSOCP}(\overline{E}_k)\) in each iteration.

Proposition 4.2

For all \(k\ge 0\), we have

Proof

By the definitions of \(d^k\), \(z^k\), \(F_k\) and \(P_k(s)\), we have

where the first equality follows from (3.5). Thus we have (4.1).

Next, we show the last two equalities. By the definitions of \(d^k\), \(z^{k+1}\), \(G_k\) and \(Q_k(s)\), we have

where the first equality follows from (3.5) with \(k:=k+1\), the fifth equality follows from \({\overline{E}}_{k+1}=E_k\cup \{s_{\mathrm{new}}^k\}\) and \(\nu _{k+1}(s)=0\) for any \(s\in {\overline{E}}_{k+1}\setminus E_{k+1}\), and the last equality holds since \(\nu _{k+1}(s_{\mathrm{new}}^k)\) \(c(x^{k+1},s_{\mathrm{new}}^k)=0\). Thus we have (4.2) and (4.3). \(\square \)

Note that we have \(F_k\ge 0\), \(\nu _k(s)\ge 0\), \(c(x^{k+1},s)\le 0\), and \(P_k(s)\ge 0\) for any \(s\in E_k\). Moreover, \(z^k\in \mathcal{K}\) and \(x^{k+1}\in \mathcal{K}\) entail \((z^k)^{\top }x^{k+1}\ge 0\). These inequalities and (4.1) lead us to the following corollary.

Corollary 4.1

The sequence of optimal values \(\{f(x^k)\}\) of \(\{\mathrm{CSOCP}(E_k)\}\) is monotonically nondecreasing, i.e.,

The following proposition shows that the distance between \(x^k\) and \(x^{k+1}\) never converges to 0.

Proposition 4.3

Let \(\{x^k\}\) be the sequence generated by Algorithm 1. Then, there exists \(d_{\min }>0\) such that \(\Vert x^k-x^{k+1}\Vert \ge d_{\min }\) for all k.

Proof

Note that function \(c(\cdot ,s_{\mathrm{new}}^k)\) is locally Lipschitzian since it is convex and \(c(x,s_{\mathrm{new}}^k)<\infty \) for any \(x\in \mathbb {R}^n\). Also, we have \(c(x^k,s_{\mathrm{new}}^k)>\eta \) and \(c(x^{k+1},s_{\mathrm{new}}^k)\le 0\) for all k. Thus, noticing the boundedness of \(\{x^k\}\) and \(\Omega \), we have

where \(L>0\) is the Lipschitzian constant. Hence, letting \(d_{\min }:=\eta /L>0\), we obtain the result. \(\square \)

The following two propositions show that the sequences \(\{\sum _{s\in E_k}\nu _k(s)\}\) and \(\{\Vert z^k\Vert \}\) are bounded, and \(\sum _{s\in E_k}\nu _k(s)\) is positive away from 0.

Proposition 4.4

Let \(\{x^k\}\) and \(\{\nu _k\}\) be the sequences generated by Algorithm 1, and \(\{z^k\}\) be defined by (3.4). Then, there exists \(M>0\) such that \(\Vert z^k\Vert \le M\) and \(\sum _{s\in E_k}\nu _k(s)\le M\) for all k.

Proof

Let \({\overline{x}}\in \mathcal{K}\) and \(\beta >0\) be chosen so that \(c({\overline{x}},s)\le -\beta \) for any \(s\in \Omega \). (Such a vector and a positive number exist from Assumption A). Then, we have

where the first inequality follows from \((z^k)^{\top }{\overline{x}}\ge 0\) and (3.5), the second inequality is due to the convexity of \(c(\cdot ,s)\), and the last inequality follows from \(c({\overline{x}},s)\le -\beta \). Hence, we have \(\sum _{s\in E_k}\nu _k(s)\le \nabla f(x^k)^{\top }({\overline{x}}-x^k)/\beta \), which implies the boundedness of \(\{\sum _{s\in E_k}\nu _k(s)\}\) since \(\nabla f\) is continuous and \(\{x^k\}\) is bounded. The boundedness of \(\{\Vert z^k\Vert \}\) can be easily shown by the definition of \(z^k\) and the boundedness of \(\{\sum _{s\in E_k}\nu _k(s)\}\). \(\square \)

Proposition 4.5

Let \(\{x^k\}\) and \(\{\nu _k\}\) be the sequences generated by Algorithm 1. Then, there exists \(\alpha >0\) such that \(\sum _{s\in E_k}\nu _k(s)\ge \alpha \) for all k.

Proof

Assume that the statement does not hold for contradiction. Then, Algorithm 1 does not terminate finitely, and \(\liminf _{k\rightarrow \infty }\sum _{s\in E_k}\nu _k(s)=0\). Since \(\{x^k\}\) is bounded from Proposition 4.1, there exists \(K\subseteq \{1,2,\ldots \}\) such that \({\overline{x}}:=\lim _{k\rightarrow \infty ,k\in K}x^k\) and \(\lim _{k\rightarrow \infty ,k\in K}\sum _{s\in E_k}\nu _k(s)=0\). Also, we have \(\lim _{k\rightarrow \infty ,k\in K}z^k=\nabla f({\overline{x}})\) from (3.4) and the boundedness of \(\{\nabla _{x}c(x^k,s)\}\). Thus, by (3.5), we have \({\overline{x}}\in \mathcal{K}\), \(\nabla f({\overline{x}})\in \mathcal{K}\) and \({\overline{x}}^{\top }\nabla f({\overline{x}})=0\), which imply \(f({\overline{x}})=\min \{f(x)\,|\,x\in \mathcal{K}\}\).Footnote 1 Hence, by Theorem 2.1 and \(\Omega _0\subset {\overline{E}}_k\), we must have \(f({\overline{x}})<V(\Omega _0)\le V({\overline{E}}_k)=f(x^k)\). However, this contradicts \(f(x^k)\le f(x^{k+1})\le \cdots \le f({\overline{x}})\). \(\square \)

4.2 Finite Termination for Strictly Convex Case

When f or \(c(\cdot ,s)\) is strictly convex, we can readily obtain the finite termination as follows.

Assumption B

At least one of the following statements holds: (a) f is strictly convex; (b) \(c(\cdot ,s)\) is strictly convex for any \(s\in \Omega \).

Theorem 4.1

Suppose that Assumption B holds. Then, Algorithm 1 terminates in a finite number of iterations.

Proof

We can prove the theorem by using Corollary 4.1 and the argument similar to the proof of [37, Thm. 3.1]. (Here, we do not use the special structure of the SOC). \(\square \)

4.3 Finite Termination Without Strict Convexity

In this section, we discuss the finite termination property of Algorithm 1 without assuming the strict convexity on f or \(c(\cdot ,s)\). We first consider the case (Case 1) where the constraint function \(c(\cdot ,s)\) is affine or quadratic. Then, we consider the more general case (Case 2).

Case 1: Affine or Quadratic Constraint Function

Now, we first suppose that the following assumption holds.

Assumption C

The constraint function \(c(\cdot ,s)\) satisfies either of the following two conditions:

-

(a)

\(c(\cdot ,s)\) is affine for any \(s\in \Omega \);

-

(b)

c is quadratic with respect to x, i.e., it is given as

$$\begin{aligned} c(x,s):= & {} x^\top M(s)x+2q(s)^\top x+r(s), \end{aligned}$$where \(M:\Omega \rightarrow \mathbb {R}^{n\times n}\), \(q:\Omega \rightarrow \mathbb {R}^n\) and \(r:\Omega \rightarrow \mathbb {R}\) are continuous, and \(M(s)\in \mathbb {R}^{n\times n}\) is symmetric and positive semidefinite for any \(s\in \Omega \). Moreover, it holds that \(q(s)^\top \xi \ne 0\) for any \(s\in \Omega \) and \(\xi \in \mathbb {R}^n\setminus \{0\}\) with \(\xi ^\top M(s)\xi =0\).

Then, we provide two lemmas below, which play crucial roles in the finite convergence analysis appearing later.

Lemma 4.1

Suppose that either of Assumption C (a) or C (b) holds. Then, there exists a \(\mu >0\) such that, for all k,

Proof

When Assumption C (a) holds, we have \(\nabla _{x}c(x^{k+1},s_{\mathrm{new}}^k)^{\top }(x^k-x^{k+1}) = c(x^{k},s_{\mathrm{new}}^k)-c(x^{k+1},s_{\mathrm{new}}^k)\) since \(c(\cdot ,s_{\mathrm{new}}^k)\) is affine. Moreover, we have \(c(x^{k},s_{\mathrm{new}}^k)>\eta \) and \(c(x^{k+1},s_{\mathrm{new}}^k)\le 0\) by Algorithm 1. Therefore, by letting \(\mu :=\eta \), we readily have (4.4).

Next we consider the case where Assumption C (b) holds. Let \(s^k\in E_k\) be an arbitrary element with \(\nu _{k}(s^k)>0\). Let \(\xi ^k\in \mathbb {R}^n\) be any vector such that \(\Vert \xi ^k\Vert =1\) and \(\nabla _{x}c({x^k},s^k)^\top \xi ^k=2(M(s^k){x^k}+q(s^k))^\top \xi ^k=0\). Then we have

where the last equality follows since \(\nabla _{x}c({x^k},s^k)^\top \xi ^k=0\) and \(c({x^k},s^k)=0\) from \(\nu _{k}(s^k)>0\).

We first show that there exists \(\delta '>0\) such that

for all k. Suppose for contradiction that there does not exist such an \(\delta '>0\). Then, we must have \(\liminf _{k\rightarrow \infty }(\xi ^k)^\top M(s^k)\xi ^k=0\). Since \(\{{x^k}\}\), \(\{\xi ^k\}\) and \(\{s^k\}\) are bounded and M(s) is continuous, there exists \(K\subset \{0,1,\ldots \}\) such that \(\lim _{k\rightarrow \infty ,k\in K}{x^k}={\overline{x}}\), \(\lim _{k\rightarrow \infty ,k\in K}\xi ^k=\overline{\xi }\), \(\lim _{k\rightarrow \infty ,k\in K}s^k=\overline{s}\), and \(\overline{\xi }^\top M(\overline{s})\overline{\xi }=0\). Notice that \(\overline{\xi }^\top M(\overline{s})\overline{\xi }=0\) implies \(M(\overline{s})\overline{\xi }=0\). Moreover, we have \(\nabla _{x}c({\overline{x}},\overline{s})^\top \overline{\xi }=0\) since \(\nabla _{x}c({x^k},s^k)^\top \xi ^k=0\) for all k. We thus have \(0=\nabla _{x}c({\overline{x}},\overline{s})^\top \overline{\xi }=2(M(\overline{s}){\overline{x}}+q(\overline{s}))^\top \overline{\xi }=q(\overline{s})^\top \overline{\xi }\). However, this contradicts the assumption.

Next, we show that there exists \(\delta ''>0\) such that

for all k. Let \(\mathcal{P}_k:=\big \{x\bigm |\nabla _{x}c({x^k},s^k)^\top (x-{x^k})\big \}=0\), \(\tilde{x}^{k+1}\) be the Euclidean projection of \(x^{k+1}\) onto \(\mathcal{P}_k\), and \(\theta _k\) be the angle between \(x^{k+1}-{x^k}\) and \(\tilde{x}^{k+1}-{x^k}\). Notice that we always have \(0\le \theta _k\le \pi /2\) since \((x^{k+1}-{x^k})^\top (\tilde{x}^{k+1}-{x^k})=\left( (\tilde{x}^{k+1}-{x^k})-(\tilde{x}^{k+1}-x^{k+1})\right) ^\top (\tilde{x}^{k+1}-{x^k})=\Vert \tilde{x}^{k+1}-{x^k}\Vert ^2\), where the last equality follows from \((\tilde{x}^{k+1}-x^{k+1})\perp (\tilde{x}^{k+1}-{x^k})\). If \(\theta _k>\pi /4\), then we have \(-\nabla _{x}c({x^k},s^k)^\top (x^{k+1}-{x^k})=\Vert \nabla _{x}c({x^k},s^k)\Vert \Vert x^{k+1}-{x^k}\Vert \cos (\pi /2-\theta _k)>\gamma d_{\min }/\sqrt{2}\), where \(d_{\min }>0\) is defined in Proposition 4.3 and \(\gamma >0\) is the positive number such that

for all k.Footnote 2 If \(\theta _k\le \pi /4\), then we have \(\Vert \tilde{x}^{k+1}-{x^k}\Vert =\Vert x^{k+1}-{x^k}\Vert \cos \theta _k\ge d_{\min }/\sqrt{2}\). Hence, letting \(\xi ^k:=(\tilde{x}^{k+1}-{x^k})/\Vert \tilde{x}^{k+1}-{x^k}\Vert \) and \(\tau _k:=\Vert \tilde{x}^{k+1}-{x^k}\Vert \), we have

where the last equality follows since \(c({x^k},s^k)=0\) and \(\nabla _{x}c({x^k},s^k)^\top \xi ^k=0\), and the inequality is due to (4.5) and \(\tau _k=\Vert \tilde{x}^{k+1}-{x^k}\Vert \ge d_{\min }/\sqrt{2}\). Since \(c(x^{k+1},s^k)\le 0\) and c is locally Lipschitzian, there exists \(L>0\) such that \(d_{\min }^2\delta '/2\le c(\tilde{x}^{k+1},s^k)-c(x^{k+1},s^k)\le L\Vert \tilde{x}^{k+1}-x^{k+1}\Vert \), that is, \(\Vert \tilde{x}^{k+1}-x^{k+1}\Vert \ge d_{\min }^2\delta '/(2L)\). So we have

where \(\gamma >0\) is a positive number given by (4.7).

Finally, we show that (4.4) holds. Notice that

where the first inequality is due to the convexity of f, the second inequality follows from (4.6) and \((z^k)^\top {x^k}=0\le (z^k)^\top x^{k+1}\), and the last inequality is due to Proposition 4.5. We thus have

where the first inequality is due to (4.8), \((z^{k+1})^\top x^{k+1}=0\le (z^{k+1})^\top {x^k}\), and the definition of \(Q_k(s)\), and the last inequality follows from \(\nu _{k+1}(s)\ge 0\), \(Q_k(s)\ge 0\), \(c({x^k},s)\le 0\) and \(\nu _{k+1}(s)c(x^{k+1},s)=0\). By Proposition 4.4, we have \(\nu _{k+1}(s_{\mathrm{new}}^k)\le M\). Hence, dividing both sides of (4.9) by \(\nu _{k+1}(s_{\mathrm{new}}^k)>0\), we obtain

which implies (4.4) with \(\mu :=\alpha \delta ''/M\). \(\square \)

Lemma 4.2

Let \(\theta :\mathbb {R}^n\rightarrow \mathbb {R}\) be an arbitrary continuously differentiable convex function, and \(x,\,y\in \mathbb {R}^n\) be arbitrary vectors. Then we have

Proof

The above formula holds evidently when \(x=y\). Also, if we have \((\Rightarrow )\), then \((\Leftarrow )\) holds automatically by swapping x for y. Therefore, we only show \((\Rightarrow )\) with \(x\ne y\).

Let x and y be arbitrary vectors such that \(x\ne y\) and \(\theta (y)-\theta (x)-\nabla \theta (x)^{\top }(y-x)=0\). Choose \(\alpha \in (0,1)\) arbitrarily. Then, we have

which implies

and \(\theta ((1-\alpha )x+\alpha y)-\theta (x)-\nabla \theta (x)^{\top }((1-\alpha )x+\alpha y-x)=0\) since \(\theta \) is convex. Hence, we have

where the third equality follows from (4.10) with \(\alpha :=1-t\). This completes the proof. \(\square \)

To show the finite iteration of the algorithm, we further introduce the following assumption.

Assumption D

(strict complementarity and activeness) There exists a small number \(\delta >0\) such that the following statements hold for each k:

(i) \(\max ({\hat{x}}_{ij}^k,\hat{z}_{ij}^k)\ge \delta \); (ii) \(\max (\nu _k(s),-c(x^k,s))\ge \delta \ \ (\forall s\in E_k)\); (iii) \(\nu _{k+1}(s_{\mathrm{new}}^k)\ge \delta \).

Statements (i) and (ii) claim that the strict complementarity should be satisfied in (3.5) in the sense of \(\delta >0\). Due to the complementarity, we always have \(\min (\nu _k(s),-c(x^k,s))=\min ({\hat{x}}_{ij}^k,\hat{z}_{ij}^k)=0\). Statement (iii) implies that the newly added constraint is sufficiently active.

Now, by using the aforementioned assumption and lemma, we provide the theorem for the finite iteration of Algorithm 1.

Theorem 4.2

Suppose that Assumptions C and D holds. Then, Algorithm 1 terminates in a finite number of iterations.

Proof

Suppose to the contrary that Algorithm 1 does not finitely terminate. Then, by Corollary 4.1 we have

which implies

Hence, each term in (4.1) also converges to 0 due to its nonnegativity. Let \(E^+_k:=\{s\in E_k\,|\,\nu _k(s)>0\}=\{s\in E_k\,|\,\nu _k(s)\ge \delta \}\), and \(s_{\mathrm{max}}^k:=\mathop {\mathrm{argmax}}\nolimits _{s\in E^+_k}Q_k(s)\). Moreover, noticing the boundedness of \(\{x^k\}\) and \(\Omega \), let \(({\overline{x}},{\overline{x}}_+,\overline{s}_{\mathrm{max}})\in \mathbb {R}\times \mathbb {R}\times \Omega \) be an arbitrary accumulation point of \(\{(x^k,x^{k+1},s_{\mathrm{max}}^k)\}\). Then, there exists an index set \(K\subseteq \{0,1,2,\ldots \}\) such that \(\lim _{k\rightarrow \infty ,k\in K}(x^k,x^{k+1},s_{\mathrm{max}}^k)=({\overline{x}},{\overline{x}}_+,\overline{s}_{\mathrm{max}})\). Since we have Proposition 4.3, it must hold \({\overline{x}}\ne {\overline{x}}_+\).

We first show that, for each \(s\in \Omega _0\), there exists \(\bar{k}\) such that either

Fix \(s\in \Omega _0\) arbitrarily. Then, by Assumption D (ii), we must have either \(\limsup _{k\rightarrow \infty }\nu _k(s)=0\) or \(\limsup _{k\rightarrow \infty }\nu _k(s)\ge \delta \). If \(\limsup _{k\rightarrow \infty }\nu _k(s)=0\), then we obviously have \(\nu _k(s)=0\) for all k sufficiently large. If \(\limsup _{k\rightarrow \infty }\nu _k(s)\ge \delta \), then there exists \(K'\subset \{1,2,\ldots \}\) such that \(|K'|=\infty \) and \(\nu _k(s)\ge \delta \) for all \(k\in K'\). Since \(\nu _k(s)c(x^{k+1},s)\) converges to 0, we have an \(\varepsilon \in (0,\delta ^2)\) and \(\bar{k}'\ge \hat{k}\) such that \(0\le -\nu _k(s)c(x^{k+1},s)<\varepsilon \) for all \(k\ge \bar{k}'\). Now, choose an arbitrary \(\bar{k}\ge \bar{k}'\) such that \(\nu _{\bar{k}}(s)\ge \delta \). Then, we have \(0\le -c(x^{\bar{k}+1},s)<\varepsilon /\delta <\delta \), which implies \(c(x^{\bar{k}+1},s)=0\) and \(\nu _{\bar{k}+1}(s)\ge \delta \) from Assumption D(ii). We thus obtain (4.12) recursively.

We next show

We readily have \(\lim _{k\rightarrow \infty }\sum _{s\in E_k}\nu _{k+1}(s)c(x^k,s)=0\) since (4.12) implies that either \(\nu _{k+1}(s)\) or \(c(x^k,s)\) is 0 for all k sufficiently large. Since \(\lim _{k\rightarrow \infty }F_k=0\), we have

Hence, by Lemma 4.2, we have

Since \({\overline{x}}\) and \({\overline{x}}_+\) are arbitrary accumulation points, the above equality implies \(\lim _{k\rightarrow \infty }G_k=0.\) Also, since \(\lim _{k\rightarrow \infty }\sum _{s\in E_k}\) \(\nu _k(s)P_k(s)=0\), \(P_k(s)\ge 0\), \(s_{\mathrm{max}}^k\in E^+_k\), and \(\nu _k(s)\ge \delta \) for all \(s\in E^+_k\), we have

Hence, by Lemma 4.2, we have \(\lim _{k\rightarrow \infty ,k\in K}Q_k(s_{\mathrm{max}}^k)=0\). Now, by Proposition 4.4, there exists \(M>0\) such that \(\sum _{s\in E^+_k}\nu _{k+1}(s)\le \sum _{s\in {\overline{E}}_{k+1}}\nu _{k+1}(s)=\sum _{s\in E_{k+1}}\nu _{k+1}(s)\le M\) for all k. Moreover, \(\nu _{k+1}(s)=0\) for all \(s\in E_k\setminus E^+_k\) and sufficiently large k since we have (4.12) and \(E_k\setminus E^+_k\subset \Omega _0\). We thus have

which yields \(\lim _{k\rightarrow \infty ,k\in K}\sum _{s\in E_{k}}\nu _{k+1}(s)Q_k(s)=0\). Since \({\overline{x}}\), \({\overline{x}}_+\) and \(\overline{s}_{\mathrm{max}}\) are arbitrary accumulation points, we have \(\lim _{k\rightarrow \infty }\sum _{s\in E_{k}}\nu _{k+1}(s)Q_k(s)=0\).

Now, choose a sufficiently small number \(\varepsilon >0\) arbitrarily. By Lemma 4.1, we have

Hence, by (4.1) and (4.3) together with (4.11), (4.13) and (4.14), we have some positive integer \(L=L(\varepsilon )\) such that

for all \(k\ge L\). Choose \(k\ge L\) arbitrarily, and let \(\mathcal{I}^k_1\) and \(\mathcal{I}^k_2\) be defined as

Then, we note that \(\mathcal{I}^k_1\cup \mathcal{I}^k_2=\{1,2\}\times \{1,\ldots ,m\}\), and

from Proposition 3.1(a) and Assumption D (i). Let \((i',j')\in \mathcal{I}^k_2\) be chosen arbitrarily.Footnote 3 Then we have

where the second inequality is due to the nonnegativity of \({\hat{x}}_{ij}^{k+1}\), \(\hat{z}_{ij}^k\) and \((\hat{e}_{ij}^{k+1})^{\top }\hat{e}_{ij}^k\), and the last inequality follows from Proposition 3.1(c) and \((i',j')\in \mathcal{I}^k_2\). Since \(\varepsilon >0\) can be chosen arbitrarily small and we have (4.16), the above inequality means \({\hat{x}}_{i'j'}^{k+1}=0\). Hence, we have \(\mathcal{I}^{k}_2\subset \mathcal{I}^{k+1}_2\).

Now, by (4.15), we have

which implies \(\mathcal{I}^{k+1}_2\setminus \mathcal{I}^k_2\ne \emptyset \). Since \(k\ge L\) can be chosen arbitrarily, it must hold

However, this contradicts the boundedness of \(\{|\mathcal{I}^k_2|\}\). Thus, Algorithm 1 must terminate in a finite number of iterations. \(\square \)

Case 2: More General Constraint Function

When the constraint function \(c(\cdot ,s)\) is neither affine nor quadratic, we may consider the following perturbed problem instead of the original SOCCSIP:

where \(\varepsilon >0\) is a very small constant. Since the objective function of SOCCSIP\({}_\varepsilon \) is strictly convex, the finite convergence result in Sect. 4.2 can be applied. In this case, it is important to see that, if we choose a sufficiently small \(\varepsilon >0\), then the optimum of SOCCSIP\({}_\varepsilon \) is sufficiently close to the original SOCCSIP optimum. The following theorem provides the positive answer for that.

Theorem 4.3

Let \(x^*_\varepsilon \) be the unique optimum of SOCCSIP\({}_\varepsilon \). Let X be the optimum set of the original SOCCSIP. Then we have \(\lim _{\varepsilon \searrow 0}\mathrm{dist}(x^*_\varepsilon ,X)=0\).

Proof

Let \(\mathcal{S}:\mathbb {R}\rightarrow 2^{\mathbb {R}^n}\) be the set-valued mapping such that \(\mathcal{S}(\varepsilon )\) is the solution set of SOCCSIP\({}_\varepsilon \). Then we have \(\mathcal{S}(\varepsilon )=\{x^*_\varepsilon \}\) for all \(\varepsilon >0\) and \(\mathcal{S}(0)=X\). Since it is known that the optimal set mapping \(\mathcal{S}\) is upper semi-continuous, any accumulation point of \(\mathcal{S}(\varepsilon )\) is contained in \(\mathcal{S}(0)\). Thus we have \(\lim _{\varepsilon \searrow 0}\mathrm{dist}(x^*_\varepsilon ,X)=0\). \(\square \)

4.4 Approximation Analysis for Obtained Solution

So far, we have shown the finite termination property of Algorithm 1 via the aforementioned theorems. Nevertheless, these theorems would be meaningless if the obtained solution is far from the optimum of SOCCSIP (1.1). The following theorem guarantees that if \(\eta >0\) is sufficiently close to 0, then the last output of Algorithm 1 is also close to the optimal solution of SOCCSIP (1.1).

Theorem 4.4

Suppose that Algorithm 1 terminates in a finite number of iterations. Let \(k^*(\eta )\) be the number of iterations in which Algorithm 1 terminates. Then, \(\lim _{\eta \rightarrow 0}\mathrm{dist}(x^{k^*(\eta )},\mathcal{S})=0\).

Proof

Let \(h:\mathbb {R}^n\rightarrow \mathbb {R}\), \(X\subset \mathbb {R}^n\), and \(\mathcal{S}_\eta \subset \mathbb {R}^n\) be defined by

Then, h is continuous and convex, X is closed and convex, and \(\mathcal{S}_0\) coincides with the solution set of SOCCSIP (1.1). Moreover, since \(f(x^{k^*(\eta )})\le V^*\), it follows \(x^{k^*(\eta )}\in \mathcal{S}_\eta \) for any \(\eta >0\). We can show the remainder of the proof in a way analogous to [13, Thm. 3.4]. \(\square \)

Remark

In Step 1 of Algorithm 1, we may also choose \(l\ (\ge 2)\) different points \(\{s^k_1,\ldots ,s^k_l\}\) such that \(c(x^k,s_i^k)>\eta \) for \(i=1,\ldots ,l\) with \({{\overline{E}}}_{k+1}:=E_k\cup \{s^k_1,\ldots ,s^k_l\}.\) For such a multiple explicit exchange method, Theorems 4.2–4.4 can be shown by using analogous techniques.

5 Numerical Results

In this section, we report some numerical results. We implement Algorithm 1 by Matlab 7.10.0 (R2010a) and run the experiments on a computer with Pentium (R) CPUs 3.19GHz and 3.20GHz with 0.99MB RAM. Throughout the experiments, we set \(\eta :=10^{-6}\) and \(E_0:=\Omega _0\). In Step 1, we find an \(s_{\mathrm{new}}^k\in \Omega \) with \(c(x^k,s_{\mathrm{new}}^k)>\eta \) as follows. We first test \(N\,(\approx 100)\) grid pointsFootnote 4 \(\tilde{s}_1,\ldots ,\tilde{s}_{N}\in \Omega \) to find \(\overline{s}^k:=\mathrm {argmax}_{i=1,2,\ldots ,N\,}c(x^k,\tilde{s}_i)\). If \(c(x^k,\overline{s}^k)>\eta \), then we set \(s_{\mathrm{new}}^k:=\overline{s}^k\). Otherwise, we solve the constrained maximization problem: “maximize \(c(x^k,s)\) subject to \(s\in \Omega \)” by means of fmincon solver in Matlab Optimization Toolbox with the initial point \(\overline{s}^k\). In Step 2, we solve \(\mathrm{CSOCP}({\overline{E}}_{k+1})\) by using the SOCCP reformulation technique together with the regularized smoothing Newton method [15]. In Step 3, we relax the criterion \(\nu _{k+1}(s)>0\) to \(\nu _{k+1}(s)>10^{-6}\). We stop the iteration of Algorithm 1 when \(\max \{c(x^k,s)|s\in \Omega \}\le \eta \). For all test problems, we checked that the required assumptions are satisfied.

5.1 Experiment 1 (Solving SOCCSIPs with Various Choices of Parameters)

Let \(p:\mathbb {R}^2\rightarrow \mathbb {R}\), \(q:\mathbb {R}^2\rightarrow \mathbb {R}^n\) and \(r:\mathbb {R}^2\rightarrow \mathbb {R}\) be defined as

where \(e\in \mathbb {R}^n\) denotes the identity element with respect to \(\mathcal{K}\). (For example, \(e=(1,0,0,0,1,0,0)^\top \) when \(\mathcal{K}=\mathcal{K}^4\times \mathcal{K}^3\)). Then, we solve the following SOCCSIP:

where \(\beta >0\) is a given constant, and \(A\in \mathbb {R}^{n\times n}\) and \(M\in \mathbb {R}^{n\times n}\) are positive semidefinite symmetric matrices. Function f is convex, but is not strictly convex when \({\mathrm{rank}}(A)<n\). Also c is convex with respect to x, but is not strictly convex when \({\mathrm{rank}}(M)<n\). Therefore, we replace the objective function by \(f(x)+10^{-10}\Vert x\Vert ^2\) so that the finite convergence is obtained theoretically. Matrices A and M are defined as \(A:=PP^\top \) and \(M:=QQ^\top \), where P and Q are respectively \((n\times r_a)\)- and \((n\times r_m)\)-dimensional matrices whose components are randomly chosen from \([-1,1]\). Since P and Q are randomly generated, we almost always have \({\mathrm{rank}}(A)=\min (n,r_a)\) and \({\mathrm{rank}}(M)=\min (n,r_m)\). Also, each component of vector b is randomly chosen from \([-1,1]\). In applying Algorithm 1, we set \(\Omega _0:=\{0\}\) since \(\mathcal{K}\cap \{x\,|\,c(x,0)\le 0\}\) is compact. SOCCSIP (5.1) has a Slater point since \(c(0,s)=r(s)<0\) for all \(s\in \Omega \).

First, we solve SOCCSIP (5.1) with various choices of constant \(\beta >0\). We solve 12 problem instances, each of which has different values of A, M and b. For all instances, we set \(\mathcal{K}=\mathcal{K}^{10}\), \(r_a=6\) and \(r_m=8\). Since A and M are rank-deficient, functions f and \(c(\cdot ,s)\) are not strictly convex. We show the obtained results in Table 1, in which \(\lambda _1\) and \(\lambda _2\) denote the spectral values defined by (2.1) of the obtained solutions \(x^*\), \(\sharp \)ite denotes the number of iterations, cpu(s) denotes the CPU time in seconds, and \(E^{\mathrm{final}}_k\setminus \Omega _0\) denotes the final output of \(E_k\) except \(\Omega _0\). Notice that the number of \(\sharp \text{ ite }\) does not count the first subproblem \(\mathrm{CSOCP}(E_0)\). Therefore, Algorithm 1 actually solves \(\sharp \text{ ite }+1\) CSOCPs for each instance. The rows with \(\sharp \text{ ite }=0\) (Problems 4 and 9) imply that Algorithm 1 finds the SOCCSIP optimum \(x^*(=x^0)\) in Step 0, and there exists no \(s_{\mathrm{new}}^0\in \Omega \) with \(c(x^0,s_{\mathrm{new}}^0)>\eta \). In other words, the convex constraint corresponding to \(s=(0,0)^\top \) is active at the solution \(x^*\). We can also see that, when \(\beta =0.1\) (Problems 1–3), the algorithm finds the solution \(x^*\) with \(k=1\), and the final active index is \(s=(-0.1\pi ,0.1\pi )^\top \). Notice that \(\Omega \) is expressed as a two-dimensional square, and \((-0.1\pi ,0.1\pi )^\top \) is one of its vertices. Since Algorithm 1 checks the values of \(c(x^k,s)\) at all the vertices in Step 1, we will find the SOCCSIP optimum very soon if the final active index is located at the vertex of \(\Omega \). On the contrary, for Problems 5–8 and 10–12, the final active indices are not located at the vertices of \(\Omega \).Footnote 5 This may be the main reason why Problems 5–8 and 10–12 need more iterations and cpu time than Problems 1–3. This tendency seems to be more noticeable as \(\beta \) becomes larger.

Next, we solve SOCCSIP (5.1) with various choices of the Cartesian structure \(\mathcal{K}\). We consider 9 different Cartesian structures, and solve 100 problems for each \(\mathcal{K}\). We therefore solve 900 problems in total. For all problems, we set \(r_a=r_m=0.8n\) and \(\beta =1\). Since A and M are rank-deficient, functions f and \(c(\cdot ,s)\) are not strictly convex. We give the obtained results in Table 2, in which \(\lambda _i^{\min }\) and \(\lambda _i^{\max }\) \((i=1,2)\) denote the maximum and minimum of the spectral values (2.1) among all Cartesian subvectors \(x^*_1,\ldots ,x^*_m\) in 100 problems for each \(\mathcal{K}\).Footnote 6 (In case of \(\mathcal{K}=(\mathcal{K}^1)^{10}=\mathbb {R}^{10}_+\), we only give the values for \(\lambda _1\)). Also, \(\lambda _i^{\mathrm{zero}}\) denotes the frequency that the spectral values become 0, and \(\sharp \)ite and cpu(s) are the average values among the 100 trials for each \(\mathcal{K}\). From the table, we can observe that the number of iterations does not change so much even when the dimension n of the variables or the number m of sub-SOCs increases. However, CPU time increases quite a bit as n becomes larger. This implies that the computational cost for solving each subproblem (CSOCPs solved in Steps 0 and 2) becomes more expensive as n increases. Also, we can see that the spectral value \(\lambda _1\) often becomes 0, which implies that each subvector \(x^*_j\) is located on the boundary of the SOC \(\mathcal{K}^{n_j}\). When \(\mathcal{K}=(\mathcal{K}^1)^{10}=\mathbb {R}^{10}_+\), approximately 50 % of obtained \(\lambda _1\)s are greater than 0, but it is not surprising since, in this case, each subvector \(x^*_j\) coincides with the j-th scalar component of vector \(x^*\), and \(\lambda _1(x^*_j)>0\) implies \(x^*_j>0\). When \(\mathcal{K}=(\mathcal{K}^2)^{5}\), we still have more than 20 % positive \(\lambda _1\)s. It is also convincing since the dimension of each SOC is small \((=2)\) and the problems are generated randomly. In other seven cases with \(\mathcal{K}=\mathcal{K}^{10}\), \((\mathcal{K}^{20})^{5},\ldots ,\mathcal{K}^{200}\), we always have \(\lambda _1(x^*_j)=0\) and \(\lambda _2(x^*_j)>0\), which means that all the subvectors of \(x^*\) are located on the boundary of SOCs.

Finally, we solve SOCCSIP (5.1) with various degrees of rank deficiency of matrices A and M. For all problems, we set \(\mathcal{K}=\mathcal{K}^{20}\times \mathcal{K}^{30}\), \(\beta =1\), and \(r:=r_a=r_m\), and choose 7 different values for r. We solve 100 problems for each r, and hence solve 700 problems in total. Note that we usually have \({\mathrm{rank}}(A)={\mathrm{rank}}(M)=\min (50,r)\). Therefore, f and \(c(\cdot ,s)\) are not strictly convex when \(r<50\), and are (almost always) strictly convex when \(r\ge 50\). We give the obtained results in Table 3, in which \(\lambda _i^{\min }\), \(\lambda _i^{\max }\) and \(\lambda _i^{\mathrm{zero}}\) are defined analogously to the previous experiment, and \(\sharp \)ite and cpu(s) are the average of 100 problems for each r. As the table shows, we need a large number of iterations when r is small, i.e., matrices A and M have high rank-deficiency. On the other hand, when \(r=50\) and 100, i.e., f and \(c(\cdot ,s)\) are strictly convex, we obtain the solution in a very small number of iterations. Especially, when \(r=100\), we often obtain the SOCCSIP optimum in the initial step with \(k=0\). Also we can observe that, when r is small, the value of \(\lambda _2\) sometimes becomes 0, which implies that the optimal solution of SOCCSIP (5.1) is \(x^*=0\). Actually, the above-mentioned features generally depend on the structure of each problem, but in many cases the ill-posedness of a problem seems to be relevant to the rank deficiency of certain matrices involved in the problem.

5.2 Experiment 2 (Application to Robust Optimization)

The robust optimization [2] is one of distribution-free methodologies for handling problems with uncertain data. We usually assume that the uncertain data belong to some set, and try to solve another optimization problem called robust counterpart (RC), which is composed with taking the worst possible case into consideration.

Algorithm 1 is also applicable to the robust optimization for convex semi-infinite programs (CSIPs). Consider the following uncertain CSIP:

where \(\Omega :=[-1,1]\) and \(c(x,s):=x^\top \Xi (s)x+\eta (s)^\top x+\zeta (s)\) with

Moreover, \(\hat{b}\in \mathbb {R}^n\) is an uncertain vector such that \(\hat{b}=b+\delta _b\), where \(b=(-3,\,4,\,2,\,-4,\,1)^\top \) is the nominal value of \(\hat{b}\), and \(\delta _b\) is the error term belonging to a certain set \(\mathcal{B}\). Then, the RC of CSIP (5.2) is written as

Now, suppose that \(\mathcal{B}\) is a closed sphere with radius \(\rho \), that is, \(\mathcal{B}:=\{\delta _b\,|\,\Vert \delta _b\Vert \le \rho \}\). Then, the objective function of RC (5.3) can be calculated as \(\max _{\delta _b\in \mathcal{B}}(b+\delta _b)^\top x\) \(=b^\top x+\max \{\delta _b^\top x\,|\Vert \delta _b\Vert \) \(\le \rho \}=b^\top x+\rho \Vert x\Vert \). Therefore, by introducing an auxiliary variable \(u\in \mathbb {R}\), RC (5.3) can be rewritten equivalently as

which is of the form SOCCSIP (1.1) with \(x:={u\atopwithdelims ()x}\) and \(\mathcal{K}:=\mathcal{K}^{n+1}\).

Table 4 shows the obtained results on SOCCSIP (5.4) with various choices of \(\rho \). In the table, \(u^{*,\rho }\) and \(x^{*,\rho }\) are the solutions of SOCCSIP (5.4), \(\lambda _1\) and \(\lambda _2\) are the spectral values for \({u^{*,\rho }\atopwithdelims ()x^{*,\rho }}\), and \(\sharp \)ite and cpu(s) denote the number of iterations and CPU time in seconds for Algorithm 1, respectively. As the table shows, the solution of RC (5.3) moves continuously as the radius \(\rho \) of \(\mathcal{B}\) varies. Also, for all cases, we have \(\lambda _1=0\), i.e., \(u^{*,\rho }=\Vert x^{*,\rho }\Vert \), and the algorithm finds the solution in a small number of iterations and CPU time.

Next, we investigate the distribution of the functional values to observe the actual effect of the robust optimization. We generate 10,000 sample vectors \(\delta _b^1,\delta _b^2,\ldots ,\delta _b^{10000}\in \mathbb {R}^5\) for the error term \(\delta _b\). Each \(\delta _b^i\) is defined as \(\delta _b^i:=\gamma _i v^i/\Vert v^i\Vert \), where \(\gamma _i\in \mathbb {R}\) and each component of \(v^i\in \mathbb {R}^5\) independently follow the normal distribution with mean 0 and deviation 1.5. Since the normal distribution contains \(95\,\%\) of the values within 2 standard deviations of the mean, we will have \(\Vert \delta _b^i\Vert \le 3\) with \(95\,\%\) probability. To make the functional values more intuitive, we add a positive constant \((=18)\) to the objective function, that is, we check the value of \(f_i(x^{*,\rho }):=(b+\delta _b^i)^\top x^{*,\rho }+18\) for each i and \(\rho \). The obtained results are summarized in Table 5, where the columns of ‘best’, ‘mean’ and ‘worst’ denote the minimum, average, and maximum of \(f_i(x^{*,\rho })\) among \(i=1,2,\ldots ,10,000\) for each \(\rho \), respectively. The column of \([6.5,\,7)\) denotes the number of times that we had \(6.5\le f_i(x^{*,\rho })<7\) among 10, 000 sample vectors of \(\delta _b^i\). (Other columns are similar). From the table, we can see that the values of ‘worst’ is smaller as \(\rho \) becomes larger, though it is opposite in the columns of ‘best’ and ‘mean’. This means that the robust optimization may have disadvantage under average or lucky situations, but the serious damage can be avoided or reduced even when an unlucky situation occurs. Since we applied the normal distribution to generate the sample error vectors, we seldom encountered the unlucky situations such as \(f_i(x^{*,\rho })>8.5\). However, if we apply another type of distribution, we may encounter such an undesirable situation more often, and the robust optimization can be more important.

Notes

Function f and cone \(\mathcal{K}\) are convex. Moreover, the KKT condisions of the problem “min f(x) s.t. \(x\in \mathcal{K}\)” can be written as \(x\in \mathcal{K}\), \(\nabla f(x)\in \mathcal{K}\) and \(x^{\top }\nabla f(x)=0\).

Assumption A (iii) and Proposition 4.1 yield \(0<-c({\overline{x}},\overline{s}_{\max })\le c({x^k},s^k)-c({\overline{x}},s^k)\le \nabla _{x}c({x^k},s^k)^\top ({x^k}-{\overline{x}})\le \Vert \nabla _{x}c({x^k},s^k)\Vert (M+\Vert {\overline{x}}\Vert )\), where \(\overline{s}_{\max }:={\mathrm{argmax}}_{s\in \Omega }c({\overline{x}},s)\). Therefore, we can choose \(\gamma :=-c({\overline{x}},\overline{s}_{\max })/(M+\Vert {\overline{x}}\Vert )>0\).

When \(\mathcal{I}^{k}_2=\emptyset \), we immediately obtain the desired result \(\mathcal{I}^{k}_2\subseteq \mathcal{I}^{k+1}_2\).

When \(\Omega =[l_s,u_s]\subset \mathbb {R}\), we test the 101 points \(l_s+i(u_s-l_s)/100\) with \(i=0,1,\ldots ,100\). When \(\Omega =[l_s,u_s]^2\subset \mathbb {R}^2\), we test the 121 points \((l_s+i(u_s-l_s)/10,\,l_s+j(u_s-l_s)/10)^\top \) with \(i=0,1,\ldots ,10\) and \(j=0,1,\ldots ,10\).

For Problem 7, the final active index is located in the interior of \(\Omega \), and for other six problems (5, 6, 8, 10, 11, 12), they are located on the non-vertex boundary.

For example, when \(\mathcal{K}=(\mathcal{K}^{\ell })^{m}\), we have m subvectors \(x^*_1,\ldots ,x^*_{m}\in \mathbb {R}^{\ell }\) for the optimum \(x^*\). Therefore, if the obtained solutions of the 100 problems are \(x^{*,1},x^{*,2},\ldots ,x^{*,100}\), then we have \(\lambda _i^{\max }:=\max \big \{\lambda _i(x^{*,p}_j)\bigm |(j,p)\in \{1,2,\ldots ,m\}\times \{1,2,\ldots ,100\}\big \}\) and \(\lambda _i^{\min }:=\min \big \{\lambda _i(x^{*,p}_j)\bigm |(j,p)\in \{1,2,\ldots ,m\}\times \{1,2,\ldots ,100\}\big \}\) for each \(i=1,2\).

References

Auslender, A., Goberna, M.A., López, M.A.: Penalty and smoothing methods for convex semi-infinite programming. Math. Oper. Res. 34, 303–319 (2009)

Ben-Tal, A., El Ghaoui, L., Nemirovski, A.: Robust Optimization. Princeton University Press, Princeton (2009)

Ben-Tal, A., Nemirovski, A.: Robust convex optimization. Math. Oper. Res 23, 769–805 (1998)

Ben-Tal, A., Nemirovski, A.: Robust solutions of uncertain linear programs. Oper. Res. Lett. 25, 1–13 (1999)

Bertsekas, D.P., Nedić, A., Ozdaglar, A.E.: Convex Analysis and Optimization. Athena Scientific, Belmont (2003)

Bonnans, J.F., Ramírez, H.: Perturbation analysis of second-order cone programming problems. Math. Progr. 104, 205–227 (2005)

Bonnans, J.F., Shapiro, A.: Perturbation analysis of optimization problems. Springer, New York (2000)

Chen, X.D., Sun, D., Sun, J.: Complementarity functions and numerical experiments for second-order-cone complementarity problems. Comput. Optim. Appl. 25, 39–56 (2003)

Faraut, J., Korányi, A.: Analysis on Symmetric Cones. Clarendon Press, New York (1994)

Fukushima, M., Luo, Z.-Q., Tseng, P.: Smoothing functions for second-order cone complementarity problems. SIAM J. Optim. 12, 436–460 (2001)

Goberna, M.A., López, M.A.: Linear semi-infinite programming: an updated survey. Eur. J. Oper. Res. 143, 390–405 (2002)

Gonzaga, C., Polak, E., Trahan, R.: An improved algorithm for optimization problems with functional inequality constraints. IEEE Trans. Autom. Control 25, 49–54 (1980)

Hayashi, S., Wu, S.-Y.: An explicit algorithm for linear semi-infinite programming problem with second-order cone constraints. SIAM J. Optim. 20, 1527–1546 (2009)

Hayashi, S., Yamaguchi, T., Yamashita, N., Fukushima, M.: A matrix splitting method for symmetric affine second-order cone complementarity problems. J. Comput. Appl. Math. 175, 335–353 (2005)

Hayashi, S., Yamashita, N., Fukushima, M.: A combined smoothing and regularization method for monotone second-order cone complementarity problems. SIAM J. Optim. 15, 593–615 (2005)

Hettich, R., Kortanek, K.O.: Semi-infinite programming: theory, methods, and applications. SIAM Rev. 35, 380–429 (1993)

Kato, H., Fukushima, M.: An SQP-type algorithm for nonlinear second-order cone programs. Optim. Lett. 1, 129–144 (2007)

Kortanek, K., No, H.: A central cutting plane algorithm for convex semi-infinite programming problems. SIAM J. Optim 3, 901–918 (1993)

Lai, H.C., Wu, S.-Y.: On linear semi-infinite programming problems: an algorithm. Numer. Funct. Anal. Optim. 13, 287–304 (1992)

Li, D., Qi, L., Tam, J., Wu, S.-Y.: A smoothing newton method for semi-infinite programming. J. Glob. Optim. 30, 169–194 (2004)

Lin, C.-J., Fang, S.-C., Wu, S.-Y.: An unconstrained convex programming approach to linear semi-infinite programming. SIAM J. Optim. 8, 443–456 (1998)

Lobo, M.S., Vandenberghe, L., Boyd, S., Lebret, H.: Applications of second-order cone programming. Linear Algebra Appl. 284, 193–228 (1998)

López, M., Still, G.: Semi-infinite programming. Eur. J. Oper. Res. 180, 491–518 (2007)

Narushima, Y., Sagara, N., Ogasawara, H.: A smoothing Newton method with Fischer-Burmeister function for second-order cone complementarity problems. J. Optim. Theory Appl. 149, 79–101 (2011)

Okuno, T., Hayashi, S., Fukushima, M.: A regularized explicit exchange method for semi-infinite programs with an infinite number of conic constraints. SIAM J. Optim. 22, 1009–1028 (2012)

Özmen, A., Weber, G.-W., Batmazb, I., Kropat, E.: RCMARS: robustification of CMARS with different scenarios under polyhedral uncertainty set. Commun. Nonlinear Sci. Numer. Simul. 16, 4780–4787 (2011)

Qi, L., Wu, S.-Y., Zhou, G.: Semismooth newton for solving semi-infinite programming problems. J. Glob. Optim. 27, 215–232 (2003)

Reemtsen, R., Görner, S.: Numerical methods for semi-infinite programming: a survey. In: Reemtsen, R., Rückmann, J. (eds.) Semi-Infinite Programming, pp. 195–275. Kluwer Academic Publishers, Boston (1998)

Reemtsen, R., Rückmann, J.: Semi-Infinite Programming. Kluwer Academic Publishers, Boston (1998)

Stein, O., Still, G.: Solving semi-infinite optimization problems with interior point techniques. SIAM J. Control Optim. 42, 769–788 (2003)

Still, G.: Discretization in semi-infinite programming: the rate of convergence. Math. Progr. 91, 53–69 (2001)

Wu, S.-Y., Fang, S.-C.: Solving convex programs with infinitely linear constraints by a relaxed cutting plane method. Comput. Math. Appl. 38, 23–33 (1999)

Wu, S.-Y., Li, D.H., Qi, L., Zhou, G.: An iterative method for solving KKT system of the semi-infinite programming. Optim. Methods Softw. 20, 629–643 (2005)

Yamashita, H., Yabe, H.: A primal-dual interior point method for nonlinear optimization over second-order cones. Optim. Methods Softw. 24, 407–426 (2009)

Yuan, Y.X.: Recent advances in numerical methods for nonlinear equations and nonlinear least squares. Numer. Algebra Control Optim. 1, 15–34 (2011)

Yuan, Y.X.: A trust region algorithm for Nash equilibrium problems. Pac. J. Optim. 7, 125–138 (2011)

Zhang, L., Wu, S.-Y., López, M.A.: A new exchange method for convex semi-infinite programming. SIAM J. Optim. 20, 2959–2977 (2010)

Author information

Authors and Affiliations

Corresponding author

Additional information

This research was supported in part by JSPS KAKENHI Grant Number 26330022, and by National Center for Theoretical Sciences, Taiwan.

Rights and permissions

About this article

Cite this article

Hayashi, S., Wu, SY. & Zhang, L. Computation Algorithm for Convex Semi-infinite Program with Second-Order Cones: Special Analyses for Affine and Quadratic Case. J Sci Comput 68, 573–595 (2016). https://doi.org/10.1007/s10915-015-0149-6

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10915-015-0149-6