Abstract

Sparse tensor best rank-1 approximation (BR1Approx), which is a sparsity generalization of the dense tensor BR1Approx, and is a higher-order extension of the sparse matrix BR1Approx, is one of the most important problems in sparse tensor decomposition and related problems arising from statistics and machine learning. By exploiting the multilinearity as well as the sparsity structure of the problem, four polynomial-time approximation algorithms are proposed, which are easily implemented, of low computational complexity, and can serve as initial procedures for iterative algorithms. In addition, theoretically guaranteed approximation lower bounds are derived for all the algorithms. We provide numerical experiments on synthetic and real data to illustrate the efficiency and effectiveness of the proposed algorithms; in particular, serving as initialization procedures, the approximation algorithms can help in improving the solution quality of iterative algorithms while reducing the computational time.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In the big data era, people often face intrinsically multi-dimensional, multi-modal and multi-view data-sets that are too complex to be processed and analyzed by traditional data mining tools based on vectors or matrices. Higher-order tensors (hypermatrices) naturally can represent such complex data sets. Tensor decomposition tools, developed for understanding tensor-based data sets, have shown the power in various fields such as signal processing, image processing, statistics, and machine learning; see the surveys [4,5,6, 8, 16, 29].

In high dimensional data-sets, another structure that cannot be ignored is the sparsity. Sparsity tensor examples come from clustering problems, online advertising, web link analysis, ranking of authors based on citations [17, 24, 26, 31, 32], and so on. Therefore, recent advances incorporate sparsity into tensor decomposition models and tensor-based statistics and machine learning problems [1, 3, 21, 26, 30, 32, 35, 38]; just to name a few. As pointed out by Allen [1], introducing sparsity into tensor problems is desirable for feature selection, for compression, for statistical consistency, and for better visualization and interpretation of the data analysis results.

In (dense) tensor decomposition and related tensor models and problems, the best rank-1 approximation (BR1Approx) is one of the most important and fundamental problems [10, 28]. Just as the dense counterpart, the sparse tensor BR1Approx serves as a keystone in the computation of sparse tensor decomposition and related sparse tensor models [1, 26, 30, 32, 35]. Roughly speaking, the sparse tensor BR1Approx is to find a projection of a given data tensor onto the set of sparse rank-1 tensors in the sense of Euclidean distance. This is equivalent to maximizing a multilinear function over both unit sphere and \(\ell _0\) constraints; mathematical models will be detailed in Section 2. Such a problem also closely connects to the sparse tensor spectral norm defined in [30, 32].

For (dense) tensor BR1Approx, several methods have been proposed; e.g., power methods [10, 18], approximation algorithms [12, 13, 39], and convex relaxations [15, 25, 37]. For sparse matrix BR1Approx, solution methods have been studied extensively in the context of sparse PCA and sparse SVD [36, 40]; see, e.g., iterative methods [20, 36], approximation algorithms [2, 11], and semidefinite relaxation [9]; just to name a few. For sparse tensor BR1Approx, in the context of sparse tensor decomposition, Allen [1] first studied models and iterative algorithms based on \(\ell _1\) regularization in the literature, whereas Sun et al. [32] developed \(\ell _0\)-based models and algorithms, and analyzed their statistical performance. Wang et al. [35] considered another \(\ell _1\) regularized model and algorithm, which is different from [1]. In the study of co-clustering, Papalexakis et al. [26] proposed alternating minimization methods for nonnegative sparse tensor BR1Approx.

For nonconvex and NP-hard problems, approximation algorithms are nevertheless encouraged. However, in the context of sparse tensor BR1Approx problems, little attention was paid to this type of algorithms. To fill this gap, by fully exploiting the multilinearity and sparsity of the model, we develop four polynomial-time approximation algorithms, some extending their matrix or dense tensor counterparts; in particular, the last algorithm, which is the most efficient one, is even new when reducing to the matrix or dense tensor cases. The proposed algorithms are easily implemented, and the computational complexity is not high: the most expensive execution is, if necessary, to only compute the largest singular vector pairs of certain matrices. Therefore, the algorithms are able to serve as initialization procedures for iterative algorithms. Moreover, for each algorithm, we derive theoretically guaranteed approximation lower bounds. Experiments on synthetic as well as real data show the usefulness of the introduced algorithms.

The rest of this work is organized as follows. Sect. 2 introduces the sparse tensor BR1Approx model. Approximation algorithms are presented in Sect. 3. Numerical results are illustrated in Sect. 4. Section 5 draws some conclusions.

2 Sparse tensor best rank-1 approximation

Throughout this work, vectors are written as \(({\mathbf {x}},{\mathbf {y}},\ldots )\), matrices correspond to \((A,B,\ldots )\), and tensors are written as \((\mathcal {A}, \mathcal {B}, \cdots )\). \({\mathbb {R}}^{n_1\times \cdots \times n_d}\) denotes the space of \(n_1\times \cdots \times n_d\) real tensors. For two tensors \({\mathcal {A}},{\mathcal {B}}\) of the same size, their inner product \(\langle {\mathcal {A}},{\mathcal {B}}\rangle \) is given by the sum of entry-wise product. The Frobenius norm of \({\mathcal {A}}\) is defined by \(\Vert {\mathcal {A}}\Vert _F = \langle {\mathcal {A}},{\mathcal {A}}\rangle ^{1/2}\). \(\circ \) denotes the outer product; in particular, for \({\mathbf {x}}_j\in {\mathbb {R}}^{n_j}\), \(j=1,\ldots ,d\), \({\mathbf {x}}_1\circ \cdots \circ {\mathbf {x}}_d\) denotes a rank-1 tensor in \( \mathbb R^{n_1\times \cdots \times n_d} \). \( \left\| {\mathbf {x}} \right\| _0 \) represents the number of nonzero entries of a vector \({\mathbf {x}}\).

Given \({\mathcal {A}} \in \mathbb R^{n_1\times \cdots \times n_d} \) with \(d\ge 3\), the tensor BR1Approx consists of finding a set of vectors \({\mathbf {x}}_1,\ldots ,{\mathbf {x}}_d\), such that

When \({\mathcal {A}}\) is sparse, it may be necessary to also investigate the sparsity of the latent factors \({\mathbf {x}}_j\), \(1\le j\le d\). Assume that the true sparsity level of each latent factors is known a prior, or can be estimated; then the sparse tensor BR1Approx can be modeled as follows [32]:

where \(1\le r_j\le n_j\) are positive integers standing for the sparsity level. Allen [1] and Wang et al. [35] proposed \(\ell _1\) regularized models for sparse tensor BR1Approx problems.

Since \({\mathbf {x}}_j\)’s are normalized in (2.2), we have

minimizing which with respect to \(\lambda \) gives \(\lambda = \left\langle {\mathcal {A}} , {\mathbf {x}}_1\circ \cdots \circ {\mathbf {x}}_d\right\rangle \), and so

Due to the multilinearity of \( \left\langle {\mathcal {A}} , {\mathbf {x}}_1\circ \cdots \circ {\mathbf {x}}_d\right\rangle \), \( \left\langle {\mathcal {A}} , {\mathbf {x}}_1\circ \cdots \circ {\mathbf {x}}_d\right\rangle ^2\) is maximized if and only if \( \left\langle {\mathcal {A}} , {\mathbf {x}}_1\circ \cdots \circ {\mathbf {x}}_d\right\rangle \) is maximized. Thus (2.2) can be equivalently recast as

This is the main model to be focused on. When \(r_j=n_j\), (2.3) boils down exactly to the tensor singular value problem [19]. Thus (2.2) can be regarded as a sparse tensor singular value problem.

When \(r_j=n_j\), (2.3) is already NP-hard in general [14]; on the other hand, when \(d=2\) and \({\mathbf {x}}_1={\mathbf {x}}_2\), it is also NP-hard [22]. Therefore, we may deduce that (2.3) is also NP-hard, whose NP-hardness comes from two folds: the multilinearity of the objective function, and the sparsity constraints. In view of this, approximation algorithms for solving (2.3) are necessary.

In the rest of this work, to simplify notations, we denote

In addition, we also use the following notation to denote the partial gradients of \({\mathcal {A}}{\mathbf {x}}_1 \cdots {\mathbf {x}}_d\) with respect to \({\mathbf {x}}_j\):

For example, \(({\mathcal {A}}{\mathbf {x}}_2\cdots {\mathbf {x}}_d)_{i_1} = \sum ^{n_2,\ldots ,n_d}_{i_2=1,\ldots ,i_d=1}{\mathcal {A}}_{i_1i_2\cdots i_d}{\mathbf {x}}_{2,i_2}\cdots {\mathbf {x}}_{d,i_d}\), where we write \({\mathbf {x}}_j := [x_{j,1},\ldots ,x_{j,n_j}]^\top \). The partial Hessian of \({\mathcal {A}}{\mathbf {x}}_1\cdots {\mathbf {x}}_d\) with respect to \({\mathbf {x}}_{d-1}\) and \({\mathbf {x}}_d\) is denoted as:

with \(({\mathcal {A}}{\mathbf {x}}_1\cdots {\mathbf {x}}_{d-2})_{i_{d-1},i_d} = \sum ^{n_1,\ldots ,n_{d-2}}_{i_1=1,\ldots ,i_{d-2}=1}{\mathcal {A}}_{i_1\cdots i_{d-1}i_d} {\mathbf {x}}_{1,i_1}\cdots {\mathbf {x}}_{d-2,i_{d-2}}\).

3 Approximation algorithms and approximation bounds

Four approximation algorithms are proposed in this section, all of which admit theoretical lower bounds. We first present some preparations used in this section. For any nonzero \(\mathbf {a}\in {\mathbb {R}}^n\), \(1\le r\le n\), denote \( \left[ \mathbf {a} \right] ^{\downarrow ,r} \in {\mathbb {R}}^n\) as a truncation of \({\mathbf {a}}\) as

In particular, if \( a_{i_1}\), \( a_{i_2}\), \( a_{i_3},\ldots \) are respectively the r-, \((r+1)\)-, \((r+2)\)-, \(\ldots \) largest entries (in magnitude) with \(i_1<i_2<i_3<\cdots \), and \(| a_{i_1}|=| a_{i_2}| = |a_{i_3}| = \cdots \), then we set \( \left[ {\mathbf {a}} \right] ^{\downarrow ,r} _{i_1}= a_{i_1}\) and \( \left[ {\mathbf {a}} \right] ^{\downarrow ,r} _{i_2} = \left[ {\mathbf {a}} \right] ^{\downarrow ,r} _{i_3}=\cdots =0\). Thus \( \left[ {\mathbf {a}} \right] ^{\downarrow ,r} \) is uniquely defined. We can see that \( \left[ {\mathbf {a}} \right] ^{\downarrow ,r} \) is a best r-approximation to \({\mathbf {a}}\) [20, Proposition 4.3], i.e.,

It is not hard to see that the following proposition holds.

Proposition 3.1

Let \({\mathbf {a}}\in {\mathbb {R}}^n\), \({\mathbf {a}}\ne 0\) and let \({\mathbf {a}}^0 = \left[ {\mathbf {a}} \right] ^{\downarrow ,r} / \Vert \left[ {\mathbf {a}} \right] ^{\downarrow ,r} \Vert \) with \(1\le r\le n\). Then

Let \(\lambda _{\max }(\cdot )\) denote the largest singular value of a given matrix. The following lemma is important.

Lemma 3.1

Given a nonzero \(A\in {\mathbb {R}}^{m\times n}\), with \(({\mathbf {y}},{\mathbf {z}})\) being the normalized singular vector pair corresponding to \(\lambda _{\max }(A)\). Let \({\mathbf {z}}^0 = \left[ {\mathbf {z}} \right] ^{\downarrow ,r} / \Vert \left[ {\mathbf {z}} \right] ^{\downarrow ,r} \Vert \) with \(1\le r\le n\). Then there holds

Proof

From the definition of \({\mathbf {z}}\), we see that \( \left\| A {\mathbf {z}} \right\| = \lambda _{\max }(A)\) and \(A^\top A{\mathbf {z}} = \lambda _{\max }^2(A){\mathbf {z}}\). Therefore,

where the last inequality follows from Proposition 3.1, the definition of \({\mathbf {z}}^0 \), and that \( \left\| {{\mathbf {z}}} \right\| =1\). This completes the proof. \(\square \)

3.1 The first algorithm

To illustrate the first algorithm, we denote \({\mathbf {e}}_j^{i_j}\in {\mathbb {R}}^{n_j}\), \(1\le i_j\le n_j\), \(j=1,\ldots ,d\), as standard basis vectors in \({\mathbb {R}}^{n_j}\). For example, \({\mathbf {e}}_2^1\) is a vector in \({\mathbb {R}}^{n_2}\) with the first entry being one and the remaining ones being zero. Denote \(\varvec{r}:=(r_1,\ldots ,r_d)\); without loss of generality, we assume that \(r_1\le \cdots \le r_d\).

It is clear that \({\mathcal {A}}{\mathbf {e}}_1^{i_1}\cdots {\mathbf {e}}_{d-1}^{i_{d-1}}\)’s are mode-d fibers of \({\mathcal {A}}\). For the definition of fibers, one can refer to [16]; following the Matlab notation we have \({\mathcal {A}}(i_1,\ldots ,i_{d-1},:) = {\mathcal {A}}{\mathbf {e}}_1^{i_1}\cdots {\mathbf {e}}_{d-1}^{i_{d-1}}\). Algorithm A is a straightforward extension of [2, Algorithm 1] for sparse symmetric matrix PCA to higher-order tensor cases. Intuitively, the first two steps of Algorithm A enumerate all the mode-d fibers of \({\mathcal {A}}\), such that the select one admits the largest length with respect to its largest \(r_d\) entries (in magnitude). \({\mathbf {x}}^0_d\) is then given by the normalization of this fiber. Then, according to (3.4), the remaining \({\mathbf {x}}^0_j\) are in fact obtained by sequentially updated as

We consider the computational complexity of Algorithm A. Computing \({\mathcal {A}}{\mathbf {e}}_1^{i_1}\cdots {\mathbf {e}}_{d-1}^{i_{d-1}}\) takes \(O(n_d)\) flops, while it takes \(O(n_d\log (n_d))\) flops to compute \( \left[ {\mathcal {A}}{\mathbf {e}}_1^{i_1}\cdots {\mathbf {e}}_{d-1}^{i_{d-1}} \right] ^{\downarrow ,r} \) by using quick-sort. Thus the first two steps take \(O(n_1\cdots n_d\log (n_d) + n_d\log (n_d))\) flops. Computing \({\mathbf {x}}^0_j,j=1,\ldots ,d-1\) respectively takes \(O(\prod ^j_{k=1}n_k \cdot n_d+n_j\log (n_j) )\) flops. Thus the total complexity can be roughly estimated as \(O(\prod ^d_{j=1}n_j\cdot \log (n_d) + \sum ^{d}_{j=1}n_j\log (n_j))\).

We first show that Algorithm A is well-defined.

Proposition 3.2

If \({\mathcal {A}}\ne 0\) and \(({\mathbf {x}}^0_1,\ldots ,{\mathbf {x}}^0_d)\) is generated by Algorithm A, then \({\mathbf {x}}^0_j\ne 0\), \(1\le j\le d\).

Proof

Since \({\mathcal {A}}\) is a nonzeros tensor, there exists at least one fiber that is not identically zero. Therefore, the definition of \(\bar{{\mathbf {x}}}^0_d\) shows that \(\bar{{\mathbf {x}}}^0_d\) is not identically zero, and hence \({\mathbf {x}}^0_d\). We also observe that \( \left\langle {\mathcal {A}}{\mathbf {e}}_1^{\bar{i}_1}\cdots {\mathbf {e}}_{d-2}^{\bar{i}_{d-2}}{\mathbf {x}}^0_d , {\mathbf {e}}^{\bar{i}_{d-1}}_{d-1} \right\rangle ={\mathcal {A}}{\mathbf {e}}_1^{\bar{i}_1}\cdots {\mathbf {e}}_{d-1}^{\bar{i}_{d-1}}{\mathbf {x}}^0_d = \Vert {\mathcal {A}}{\mathbf {e}}_1^{\bar{i}_1}\cdots {\mathbf {e}}_{d-1}^{\bar{i}_{d-1}} \Vert >0\), which implies that \({\mathcal {A}}{\mathbf {e}}_1^{\bar{i}_1}\cdots {\mathbf {e}}_{d-2}^{\bar{i}_{d-2}}{\mathbf {x}}^0_d \ne 0\), and so \({\mathbf {x}}^0_{d-1}\ne 0\). (3.5) shows that \({\mathcal {A}}{\mathbf {e}}^{\bar{i}_1}_1\cdots {\mathbf {e}}^{\bar{i}_{d-2}}_{d-2}{\mathbf {x}}^0_{d-1}{\mathbf {x}}^0_{d} \ge {\mathcal {A}}{\mathbf {e}}_1^{\bar{i}_1}\cdots {\mathbf {e}}_{d-1}^{\bar{i}_{d-1}}{\mathbf {x}}^0_d >0\). Similarly, we can show that \({\mathbf {x}}^0_{d-2}\ne 0,\ldots ,{\mathbf {x}}^0_1\ne 0\). \(\square \)

We next first present the approximation bound when \(d=3\). The bound extends that of [2] to higher-order cases.

Theorem 3.1

Denote \(v^{\mathrm{opt}}\) as the optimal value of (2.3) when \(d=3\).

Let \(({\mathbf {x}}^0_1,{\mathbf {x}}^0_2,{\mathbf {x}}^0_3)\) be generated by Algorithm A. Then it holds that

Proof

Denote \(({\mathbf {x}}^*_1,{\mathbf {x}}^*_2,{\mathbf {x}}^*_3)\) as a maximizer of (2.3). By noticing that \( \left\| {\mathbf {x}}^*_1 \right\| _0 \le r_1\) and \({\mathcal {A}}{\mathbf {x}}^*_1{\mathbf {x}}^*_2{\mathbf {x}}^*_3=\sum _{\{i_1: x^*_{1,i_1}\ne 0\}}^{n_1} x^*_{1,i_1} \cdot \left( {\mathcal {A}}{\mathbf {e}}_1^{i_1} {\mathbf {x}}^*_2{\mathbf {x}}^*_3 \right) \); recalling that we write \({\mathbf {x}}_j := [x_{j,1},\ldots ,x_{j,n_j}]^\top \), we have

where the first inequality uses the Cauchy-Schwartz inequality, and the last equality follows from \( \left\| {\mathbf {x}}^*_1 \right\| =1\). In the same vein, we have

Assume that \( \left| {\mathcal {A}}{\mathbf {e}}_1^{\hat{i}_1}{\mathbf {e}}_2^{\hat{i}_2}{\mathbf {x}}^*_3 \right| = \max _{i_1,i_2} \left| {\mathcal {A}}{\mathbf {e}}_1^{i_1}{\mathbf {e}}_2^{i_2}{\mathbf {x}}^*_3 \right| \); denote

(3.4) shows that \( \left| {\mathcal {A}}{\mathbf {e}}_1^{\hat{i}_1}{\mathbf {e}}_2^{\hat{i}_2}{\mathbf {x}}^*_3 \right| \le \left| {\mathcal {A}}{\mathbf {e}}_1^{\hat{i}_1}{\mathbf {e}}_2^{\hat{i}_2}\hat{{\mathbf {x}}}_3 \right| \). By noticing the definition of \({\mathbf {x}}^0_3\), we then have

Finally, recalling the definitions of \({\mathbf {x}}^0_1\) and \({\mathbf {x}}^0_2\) and combining the above pieces, we arrive at

as desired. \(\square \)

In the same spirit of the proof of Theorem 3.1, for general \(d\ge 3\), one can show that

Theorem 3.2

Denote \(v^{\mathrm{opt}}\) as the optimal value of the problem (2.3).

Let \(({\mathbf {x}}^0_1,\ldots ,{\mathbf {x}}^0_d)\) be generated by Algorithm A for a general d-th order tensor \({\mathcal {A}}\). Then it holds that

3.2 The second algorithm

The second algorithm is presented as follows.

The main difference from Algorithm A mainly lies in the first step, where Algorithm A requires to find the fiber with the largest length with respect to the largest \(r_3\) entries (in magnitude), while the first step of Algorithm B looks for the matrix \({\mathcal {A}}{\mathbf {e}}_1^{\bar{i}_1}\cdots {\mathbf {e}}_{d-2}^{\bar{i}_{d-2}} \in {\mathbb {R}}^{n_{d-1}\times n_d}\) with the largest spectral radius among all \({\mathcal {A}}{\mathbf {e}}_1^{i_1}\cdots {\mathbf {e}}_{d-2}^{i_{d-2}}\). Algorithm B combines the ideas of both Algorithms 1 and 2 of [2] and extends them to higher-order tensors. When reducing to the matrix case, our algorithm here is still different from [2], as we find sparse singular vector pairs, while [2] pursues sparse eigenvectors of a symmetric matrix.

The computational complexity of Algorithm B is as follows. Computing the largest singular value of \({\mathcal {A}}{\mathbf {e}}_1^{i_1}\cdots {\mathbf {e}}_{d-2}^{i_{d-2}}\) takes \(O(\min \{ n_{d-1}^2 n_d, n_{d-1} n_d^2 \} )\) flops in theory. Computing \({\mathbf {x}}^0_d\) takes \(O(n_d\log (n_d))\) flops. Thus the first two steps take \(O(n_1\cdots n_{d-2}\min \{ n_{d-1}^2 n_d, n_{d-1} n_d^2 \} + n_d\log (n_d))\) flops. The flops of the third step are the same as those of Algorithm A. As a result, the total complexity is dominated by \(O(n_1\cdots n_{d-2}\min \{ n_{d-1}^2 n_d, n_{d-1} n_d^2 \}+ \sum ^d_{j=1}n_j\log (n_j))\).

Algorithm B is also well-defined as follow.

Proposition 3.3

If \({\mathcal {A}}\ne 0\) and \(({\mathbf {x}}^0_1,\ldots ,{\mathbf {x}}^0_d)\) is generated by Algorithm B, then \({\mathbf {x}}^0_j\ne 0\), \(1\le j\le d\).

Proof

The definition of \((\bar{i}_1,\ldots , \bar{i}_{d-2})\) shows that the matrix \( {\mathcal {A}}{\mathbf {e}}_1^{\bar{i}_1}\cdots {\mathbf {e}}_{d-2}^{\bar{i}_{d-2}} \ne 0\), and hence \(\bar{{\mathbf {x}}}_d\ne 0\) and \({\mathbf {x}}^0_d\ne 0\). We also observe from step 2 that \(\bar{{\mathbf {x}}}_d = {\mathcal {A}}{\mathbf {e}}_1^{\bar{i}_1}\cdots {\mathbf {e}}_{d-2}^{\bar{i}_{d-2}} \bar{{\mathbf {x}}}_{d-1}/ \Vert {\mathcal {A}}{\mathbf {e}}_1^{\bar{i}_1}\cdots {\mathbf {e}}_{d-2}^{\bar{i}_{d-2}} \bar{{\mathbf {x}}}_{d-1} \Vert \), and so

implying that \( {\mathcal {A}}{\mathbf {e}}_1^{\bar{i}_1}\cdots {\mathbf {e}}_{d-2}^{\bar{i}_{d-2}}{\mathbf {x}}^0_d\ne 0\), and so \({\mathbf {x}}^0_{d-1} = \left[ {\mathcal {A}}{\mathbf {e}}_1^{\bar{i}_1}\cdots {\mathbf {e}}_{d-2}^{\bar{i}_{d-2}}{\mathbf {x}}^0_d \right] ^{\downarrow ,r_{d-1}} \ne 0\). Similar arguments apply to show that \({\mathbf {x}}^0_{d-2} \ne 0,\ldots ,{\mathbf {x}}^0_1\ne 0\) then. \(\square \)

To analyze the approximation bound, we need the following proposition.

Proposition 3.4

Let \(\bar{i}_1,\ldots ,\bar{i}_{d-2}\) and \((\bar{{\mathbf {x}}}_{d-1},\bar{{\mathbf {x}}}_d)\) be defined in Algorithm B. Then it holds that

Proof

The result holds by noticing the definition of \(({\mathbf {e}}^{\bar{i}_1}_1,\ldots ,{\mathbf {e}}^{\bar{i}_{d-2}}_{d-2},\bar{{\mathbf {x}}}_{d-1},\bar{{\mathbf {x}}}_d)\), and the additional sparsity constraints in the right-hand side of the inequality. \(\square \)

We first derive the approximation bound with \(d=3\) as an illustration.

Theorem 3.3

Let \(v^{\mathrm{opt}}\) be defined as that in Theorem 3.1 when \(d=3\), and let \(({\mathbf {x}}^0_1,{\mathbf {x}}^0_2,{\mathbf {x}}^0_3)\) be generated by Algorithm B. Then it holds that

Proof

Denote \(({\mathbf {x}}^*_1,{\mathbf {x}}^*_2,{\mathbf {x}}^*_3)\) as a maximizer to (2.3). We have

where the first inequality is the same as (3.6), while Proposition 3.4 gives the last one. Now denote \(A:= {\mathcal {A}}{\mathbf {e}}_1^{\bar{i}_1}\in {\mathbb {R}}^{n_2\times n_3}\). Our remaining task is to show that

Recalling the definition of \((\bar{{\mathbf {x}}}_2,\bar{{\mathbf {x}}}_3)\), we have \(\lambda _{\max }(A) = \bar{{\mathbf {x}}}_2^\top A\bar{{\mathbf {x}}}_3\). Lemma 3.1 tells us that

on the other hand, since

it follows from Proposition 3.1 that

combining which with (3.9) gives (3.8). Finally, (3.8) together with (3.7) and the definition of \({\mathbf {x}}^0_1\) yields

as desired. \(\square \)

The following approximation bound is presented for general order \(d\ge 3\).

Theorem 3.4

Let \(({\mathbf {x}}^0_1,\ldots ,{\mathbf {x}}^0_d)\) be generated by Algorithm B. Then it holds that

Proof

Similar to (3.7), we have

Similar to the proof of (3.8), we can obtain

\(\square \)

3.3 The third algorithm

We begin with the illustration from third-order tensors. We will employ the Matlab function reshape to denote tensor folding/unfolding operations. For instance, given \({\mathcal {A}}\in \mathbb R^{n_1\times \cdots \times n_d} \), \({\mathbf {a}} = \texttt {reshape}({\mathcal {A}}, \prod ^d_{j=1}n_j,1)\) means the unfolding of \({\mathcal {A}}\) to a vector \({\mathbf {a}}\) in \({\mathbb {R}}^{\prod ^d_{j=1}n_j}\), while \({\mathcal {A}} = \texttt {reshape}({\mathbf {a}},n_1,\ldots , n_d)\) means the folding of \({\mathbf {a}}\) back to \({\mathcal {A}}\).

Different from Algorithm B, Algorithm C0 is mainly based on a series of computing leading singular vector pairs of certain matrices. In fact, Algorithm C0 generalizes the approximation algorithm for dense tensor BR1Approx [13, Algorithm 1 with DR 2] to our sparse setting; the main difference lies in the truncation of \(\bar{{\mathbf {x}}}_j\) to obtain the sparse solution \({\mathbf {x}}^0_j\). In particular, if no sparsity is required, i.e., \(r_j=n_j,j=1,2,3\), then Algorithm C0 boils down essentially to [13, Algorithm 1 with DR 2]. The next proposition shows that Algorithm C0 is well-defined.

Proposition 3.5

If \({\mathcal {A}}\ne 0\) and \(({\mathbf {x}}^0_1,\ldots ,{\mathbf {x}}^0_d)\) is generated by Algorithm C0, then \({\mathbf {x}}^0_j\ne 0\), \(1\le j\le 3\).

Proof

It is clear that \(\bar{{\mathbf {x}}}_1\ne 0\), \({\mathbf {x}}^0_1\ne 0\), and \(\bar{\mathbf{w}}_1\ne 0\) due to that \(A_1\ne 0\). We have \( \left\langle {\mathbf {x}}^0_1 , A_1\bar{\mathbf{w}}_1\right\rangle = \left\langle {\mathbf {x}}^0_1 , \bar{{\mathbf {x}}}_1\right\rangle \Vert A_1\bar{\mathbf{w}}_1 \Vert >0\), and so \(A_1^\top {\mathbf {x}}^0_1 \ne 0\), and \(A_2\ne 0\). Similar argument shows that \({\mathbf {x}}^0_2\ne 0\) and \({\mathbf {x}}^0_3\ne 0\). \(\square \)

Due to the presence of the truncation, deriving the approximation bound is different from that of [13]. In particular, we need Lemma 3.1 to build bridges in the analysis. The following relation

is also helpful in the analysis, where \(\otimes \) denotes the Kronecker product [16].

Theorem 3.5

Let \(v^{\mathrm{opt}}\) be defined as that in Theorem 3.1 when \(d=3\), and let \(({\mathbf {x}}^0_1,{\mathbf {x}}^0_2,{\mathbf {x}}^0_3)\) be generated by Algorithm C0. Then it holds that

Proof

From the definition of \(A_1\), \(\bar{{\mathbf {x}}}_1\) and \(\bar{\mathbf{w}}_1\), we see that

Therefore, to prove the approximation bound, it suffices to show that

To this end, recalling Lemma 3.1 and the definition of \({\mathbf {x}}^0_1\) (which is a truncation of the leading left singular vector of \(A_1\)), we obtain

Since \(A_2 = \texttt {reshape}(A^\top _1{\mathbf {x}}^0_1,n_2,n_3)\), it holds that \( \left\| A_2 \right\| _F = \left\| A^\top _1{\mathbf {x}}^0_1 \right\| \). Using again Lemma 3.1 and recalling the definition of \({\mathbf {x}}^0_2\) (which is a truncation of the leading left singular vector of \(A_2\)), we get

where the second inequality follows from the relation between the spectral norm and the Frobenius norm of a matrix. Finally, Proposition 3.1 and the definition of \({\mathbf {x}}^0_3\) gives that

where the equality follows from (3.10). Combining the above relation with (3.12) and (3.13) gives (3.11). This completes the proof. \(\square \)

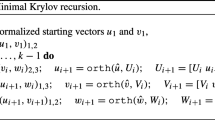

When extending to d-th order tensors, the algorithm is presented as follows.

Remark 3.1

In step 2, by noticing the recursive definition of \(A_j\), one can check that \(A_j\) is in fact the same as \({\texttt {\texttt {reshape}}}({\mathcal {A}}{\mathbf {x}}_1^0\cdots {\mathbf {x}}_{j-1}^0,n_j,\prod ^d_{k=j+1}n_k)\), where \({\mathcal {A}}{\mathbf {x}}^0_1\cdots {\mathbf {x}}^0_{j-1}\) is regarded as a tensor of size \(n_{j}\times \cdots \times n_d\) with \( \left( {\mathcal {A}}{\mathbf {x}}^0_1\cdots {\mathbf {x}}^0_{j-1} \right) _{i_j\cdots i_d} = \sum ^{n_1,\ldots , n_{j-1}}_{i_1=1,\ldots ,i_{j-1}=1} {\mathcal {A}}_{i_1\cdots i_{j-1} i_j\cdots i_d} ({\mathbf {x}}^0_1)_{i_1}\cdots ({\mathbf {x}}^0_{j-1})_{i_{j-1}} \).

The computational complexity of the first step is \(O(n_1^2 n_2\cdots n_d+n_1\log (n_1))\), where \(O(n_1^2 n_2\cdots n_d)\) comes from solving the singular value problem of an \(n_1\times \prod ^d_{j=2}n_j\) matrix. In the second step, for each j, computing \(A^\top _{j-1}{\mathbf {x}}^0_{j-1} \) requires \(O(n_{j-1}\cdots n_d)\) flops; computing the singular value problem requires \(O(n^2_j n_{j+1}\cdots n_d )\) flops; thus the complexity is \(O(n_{j-1}\cdots n_d + n^2_j n_{j+1}\cdots n_d + n_j\log (n_j))\). The third step is \(O(n_{d-1}n_d + n_d\log (n_d))\). Thus the total complexity is dominated by \(O(n_1\prod ^d_{j=1}n_j + \sum ^d_{j=1}n_j\log (n_j))\), which is the same as Algorithm B in theory.

Similar to Proposition 3.5, we can show that Algorithm C is well-defined, i.e., if \({\mathcal {A}}\ne 0\), then \({\mathbf {x}}^0_j\ne 0\), \(1\le j\le d\). The proof is omitted.

Concerning the approximation bound, similar to (3.14), one has

where the second equality comes from Remark 3.1 that \({\mathcal {A}}{\mathbf {x}}^0_1\cdots {\mathbf {x}}^0_{d-2} = A_{d-1}\) (up to a reshaping) and the inequality is due to Proposition 3.1. Analogous to (3.13), one can prove the following relation:

Based on the above relations and (3.12), for order \(d\ge 3\), we present the approximation bound without proof.

Theorem 3.6

Let \(({\mathbf {x}}^0_1,\ldots ,{\mathbf {x}}^0_d)\) be generated by Algorithm C. Then it holds that

3.4 The fourth algorithm

Algorithm C computes \(\bar{{\mathbf {x}}}_j\) from \(A_j\) via solving singular value problems. When the size of the tensor is huge, this might be time-consuming. To further accelerate the algorithm, we propose the following algorithm, which is similar to Algorithm C, while it obtains \(\bar{{\mathbf {x}}}_j\) without solving singular value problems. Denote \(A^k\) as the k-th row of a matrix A. The algorithm is presented as follows:

It is clear from the above algorithm that computing \(\bar{{\mathbf {x}}}_j\) only requires some matrix-vector productions, which has lower computational complexity than computing singular vectors. Numerical results presented in the next section will show the efficiency and effectiveness of this simple modification.

In Algorithm D, the first step needs \(O(n_1\cdots n_d + n_1\log (n_1))\) flops; in the second step, for each j, the complexity is \(O(n_{j-1}\cdots n_d + n_j\log (n_j))\); the third step is \(O(n_{d-1}n_d + n_d\log (n_d))\). Thus the total complexity is dominated by \(O(n_1\cdots n_d + \sum ^d_{j=1}n_j\log (n_j))\), which is lower than that of Algorithm C, due to the SVD-free computation of \(\bar{{\mathbf {x}}}_j\)’s.

Reducing to the dense tensor setting, i.e., \(r_j=n_j\) for each j, Algorithm D is even new for dense tensor BR1Approx problems; when \(d=2\), similar ideas have not been applied to approximation algorithms for sparse matrix PCA/SVD yet.

The next proposition shows that Algorithm D is well-defined, whose proof is quite similar to that of Proposition 3.5 and is omitted.

Proposition 3.6

If \({\mathcal {A}}\ne 0\) and \(({\mathbf {x}}^0_1,\ldots ,{\mathbf {x}}^0_d)\) is generated by Algorithm D, then \({\mathbf {x}}^0_j\ne 0\), \(1\le j\le d\).

The approximation bound analysis essentially relies on the following lemma.

Lemma 3.2

Given \(A\in {\mathbb {R}}^{m\times n}\), with \(A^{\tilde{k}}\) being the row of A having the largest magnitude. Let \(\mathbf{w}=(A^{\tilde{k}})^\top / \Vert (A^{\tilde{k}})^\top \Vert \), \({\mathbf {x}} = A\mathbf{w}\), and \({\mathbf {x}}^0 = \left[ {\mathbf {x}} \right] ^{\downarrow ,r} / \Vert \left[ {\mathbf {x}} \right] ^{\downarrow ,r} \Vert \) with \(1\le r\le m\). Then there holds

Proof

We have

where the second inequality follows from that \(\mathbf{w}\) is normalized, and the last one comes from Proposition 3.1. We also have from the definition of \({\mathbf {x}}\) that

where the last inequality comes from the definition of \(A^{\tilde{k}}\). Combining the above analysis gives the desired result. \(\square \)

Theorem 3.7

Let \(({\mathbf {x}}^0_1,\ldots ,{\mathbf {x}}^0_d)\) be generated by Algorithm D. Then it holds that

Proof

As the discussions above Theorem 3.6 we get \({\mathcal {A}}{\mathbf {x}}^0_1\cdots {\mathbf {x}}^0_d \ge \sqrt{ \frac{r_d}{n_d} } \left\| A^\top _{d-1} {\mathbf {x}}^0_{d-1} \right\| \). Using Lemma 3.2, for \(2\le j\le d-1\), we have

In particular, from step 1 of the algorithm, \( \left\| A^\top _1{\mathbf {x}}^0_1 \right\| \ge \sqrt{\frac{r_1}{n_1^2}} \left\| A_1 \right\| _F = \sqrt{\frac{r_1}{n_1^2}} \left\| {\mathcal {A}} \right\| _F \). It is clear that \( \left\| {\mathcal {A}} \right\| _F \ge \lambda _{\max }(A_1) \ge v^\mathrm{opt}\). Combining the analysis gives the desired results. \(\square \)

Before ending this section, we summarize the approximation ratio and computational complexity of the proposed algorithms in Table 1. For convenience we set \(r_1=\cdots =r_d\) and \(n_1=\cdots = n_d\).

Concerning the approximation ratio \({\mathcal {A}}{\mathbf {x}}^0_1\cdots {\mathbf {x}}^0_d/v^{\mathrm{opt}}\), we see that if r is a constant, then the ratio of Algorithm A is also a constant, while those of other algorithms rely on n. This is the advantage of Algorithm A, compared with other algorithms. When \(d=2\), the ratios of Algorithms A and B are respectively \(1/\sqrt{r}\) and r/n, which coincide with their matrix counterparts; see, [2, Algorithms 1 and 2]. We also observe that Algorithm B generalizes the approximation algorithm and bound for dense tensor BR1Approx in [39, Theorem 5.1]: If sparsity is not required, i.e., \(r=n\), the bound of Algorithm B boils down to those of [39, Theorem 5.1]. For Algorithm C, when \(r=n\), or r is proportional to n up to a constant, the ratio reduces to \( O(1/\sqrt{ n^{d-2}})\), which recovers that of [13, Algorithm 1]. Although the ratio of Algorithm D is worse than that of Algorithm C with an additional factor \(1/\sqrt{n}\), we shall also observe that the numerator of the bound of Algorithm D is \( \left\| {\mathcal {A}} \right\| _F \), while that of Algorithm C is \(\lambda _{\max }(A_1)\) (see Algorithm C for the definition of \(A_1\)), where the latter is usually much smaller than the former. Note that two randomized approximation algorithms as initializations were proposed in [32, Algorithms 3 and 4] (see its arXiv version), where the performance analysis was considered on structured tensors. It would be also interesting to study their approximation bound for general tensors. Concerning the computational complexity, we see that Algorithm D admits the lowest one in theory. This is also confirmed by our numerical observations that will be presented in the next section. Note that if the involved singular value problems in Algorithm C are solved by the Lanczos algorithm at k steps [7] where k is a user-defined parameter, then the computational complexity is \(O(k n^d + dn\log (n))\).

Finally, We discuss that whether the approximation bounds are achieved. We only consider the cases that \(r_j<n_j\). We first consider Algorithm C and take \(d=3\) as an example. In (3.13), achieving \(\lambda _{\max }(A_2)=\frac{\Vert A_2\Vert _F}{\sqrt{n_2}}\) requires that \(\mathrm{rank}(A_2)=n_2\) (assuming that \(n_2\le n_3\)) and all the singular values are the same. If these are true, then \(\Vert A_2^{\top }{\mathbf {x}}_2^0\Vert > \sqrt{ \frac{r_2}{n_2} }\lambda _{\max }(A_2)\).Footnote 1 Thus the bound of Algorithm C may not be tight. The approximation ratio of Algorithm D relies on Lemma 3.2. However, there do not exist matrices achieving the approximation ratio \(\sqrt{\frac{r}{m^2}}\) in Lemma 3.2, implying that the bound of Algorithm D may not be tight. For Algorithm A, if step 3 is not executed (use \({\mathbf {e}}_1^{\bar{i}_1}, \ldots , {\mathbf {e}}_{d-1}^{\bar{i}_{d-1}}, {\mathbf {x}}_d^0\) obtained in step 2 as the output), then the bound is tight: Consider \({\mathcal {A}}\in {\mathbb {R}}^{n_1\times n_2\times n_3}\) with \(n_1=n_2=n_3=n\) as an all-one tensor and let \(1< r_1=r_2=r_3 = r<n\); then \(v^{\mathrm{opt}} = r^{\frac{3}{2}}\). The generated feasible point is \({\mathbf {x}}^0_j = {\mathbf {e}}^1_j\), \(j=1,2\), and \(x^0_3\) is the vector whose first r entries are \(\frac{1}{\sqrt{r}}\) and the remaining ones are zero. Then \({\mathcal {A}}{\mathbf {x}}^0_1{\mathbf {x}}^0_2{\mathbf {x}}^0_3/v^\mathrm{opt} = \frac{1}{r}\), achieving the bound. For Algorithm B, in case that \(d=3\) and if \({\mathbf {x}}_1^0\) is not updated by step 3 (use \({\mathbf {e}}_1^{\bar{i}_1}\) obtained in step 2 as the output), then the bound can be tight: Consider \({\mathcal {A}}\in {\mathbb {R}}^{4\times 4\times 4}\), with \({\mathcal {A}}(i,:,:) = A:= \left[ {\begin{matrix} 0&{}1&{}0&{}1\\ 0&{}1&{}0&{}1\\ 1&{} 0&{}1&{}0\\ 1&{} 0&{}1&{}0\\ \end{matrix}}\right] \), \(i=1,\ldots ,4\), and let \(r_1=r_2=r_3=2\). Then \(v^{\mathrm{opt}}= 2\sqrt{2}\).Footnote 2 Applying Algorithm B to \({\mathcal {A}}\) yields \(\bar{i}_1=1\), with \(\bar{{\mathbf {x}}}_3=[1~1~1~1]^{\top }/2\); then \({\mathbf {x}}_3^0= [1~1~0~0]^{\top }/\sqrt{2}\), and \({\mathbf {x}}_2^0={\mathbf {x}}_3^0\). Finally, \( {{\mathcal {A}}{\mathbf {e}}_1^1{\mathbf {x}}_2^0{\mathbf {x}}_3^0 }/{v^{\mathrm{opt}}}= \frac{1}{2\sqrt{2}} = \sqrt{\frac{r^2}{n^2 r}}\). In summary, the reason that why the bounds cannot be achieved is that although we derive the worst-case inequalities in every step of the analysis, after putting them together, the final approximation bounds might not be tight. How to improve them still need further research.

4 Numerical experiments

We evaluate the proposed algorithms in this section on synthetic and real data. All the computations are conducted on an Intel i7 CPU desktop computer with 16 GB of RAM. The supporting software is Matlab R2019a. Tensorlab [33] is employed for basic tensor operations.

Performance of approximation algorithms We first compare Algorithms A, B, C, and D on solving (2.3). The tensor is given by

where \({\mathbf {x}}_{j,i}\in {\mathbb {R}}^{n_j}\), \(i=1,\ldots ,R\), \(j=1,\ldots ,d\), and we let \(R=10\). Here the vectors are first randomly drawn from the normal distribution, and then \(sr = 70\%\) of the entries are randomly set to be zero. We set \(d=3,4\), and let \(n_1=\cdots =n_d=n\) with n varying from 5 to 100. For each case, we randomly generated 50 instances, and the averaged results are presented. \(r_j = \lfloor (1-sr)n_j\rfloor \) for each j in (2.3). As a baseline, we also randomly generate feasible points \(({\mathbf {x}}^\mathrm{random}_1,\ldots ,{\mathbf {x}}^\mathrm{random}_d)\) and evaluate its performance. On the other hand, we easily see that

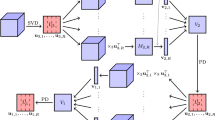

is an upper bound for problem (2.3), where \(A_{(j)} = \texttt {reshape}({\mathcal {A}},n_j,\prod ^d_{k\ne j}n_k)\) denotes the j-mode unfolding of \({\mathcal {A}}\). Thus we also evaluate \(v^\mathrm{ub}\). The results are depicted in Fig. 1, where the left panels show the curves of the objective values \({\mathcal {A}}{\mathbf {x}}^{0}_1\cdots {\mathbf {x}}^0_d\) versus n of different algorithms, whose colors are respectively cyan (Algorithm A), magenta (Algorithm B), blue (Algorithm C), and red (Algorithm D); the curves of the random value \({\mathcal {A}}{\mathbf {x}}^\mathrm{random}_1\cdots {\mathbf {x}}^\mathrm{random}_d\) is in black with hexagram markers, while the curve of the upper bounds \(v^\mathrm{ub}\) is in black with diamond markers. The right ones plot the curve of CPU time versus n.

From the left panels, we observe that the objective values generated by Algorithms A, B, C, and D are similar, where Algorithm C performs better; Algorithm D performs the second when \(d=4\), and it is comparable with Algorithm B when \(d=3\); Algorithm A gives the worst results, which may be that Algorithm A does not explore the structure of the problem as much as possible. We also observe that the objective values of all the algorithms are quite close to the upper bound (4.16), which demonstrates the effectiveness of the proposed algorithms. In fact, the ratio of \(\frac{{\mathcal {A}}{\mathbf {x}}^0_1\cdots {\mathbf {x}}^0_d}{v^\mathrm{ub}}\) is in (0.7, 1), which is far better than the approximation ratios presented in Sect. 3. This implies that at least for this kind of tensors, the approximation ratios might be independent of the size of the tensor. The value \({\mathcal {A}}{\mathbf {x}}^\mathrm{random}_1\cdots {\mathbf {x}}^\mathrm{random}_d\) is close to zero (the curve almost coincides with the x-axis). Concerning the computational time, Algorithms D is the most efficient one, confirming the theoretical results in Table 1. Algorithm C is the second efficient one. Algorithms A and B do not perform well compared with Algorithms C and D, although their computational complexity is similar in theory. The reason may be because the first two algorithms require for-loop operations, which is time-consuming in Matlab.

Comparisons of Algorithms A, B, C, and D for solving (2.3) with fixed n and sparsity ratio varying from 10 to \(90\%\). Figure 2a: \({\mathcal {A}}\in {\mathbb {R}}^{100\times 100\times 100}\); Fig. 2b: \({\mathcal {A}}\in {\mathbb {R}}^{100\times 100\times 100\times 100}\). Left panels: the objective values \({\mathcal {A}}{\mathbf {x}}^0_1\cdots {\mathbf {x}}^0_d\) versus sparsity ratio; right panels: CPU time

We then consider fix \(n=100\) and vary the sparsity ratio sr from 10 to \(90\%\) of \({\mathcal {A}}\) in (4.15), and compare the performance of the four proposed algorithms. \(r_j\) in (2.3) is set to \(\lfloor (1-sr) n_j\rfloor \) correspondingly. The results of the objective values \({\mathcal {A}}{\mathbf {x}}^0_1\cdots {\mathbf {x}}^0_d\) together with the upper bound are depicted in the left panels of Fig. 2, from which we still observe that all the algorithms are close to the upper bound (4.16); among them, Algorithm C is still slightly better than the other three, followed by Algorithms B and D. The CPU time is plotted in the right panels of Fig. 2, which still shows that Algorithm D is the most efficient one. In fact, in average, Algorithm D is 5 times faster than Algorithm C, which ranks the second, and is about 80 times faster than Algorithm B, which is the slowest one.

Overall, comparing with Algorithms A and B that find the solutions fiber by fiber, or slide by slide, Algorithm C admits hierarchical structures that take the whole tensor into account, and so it can explore the structure of the data tensor better. This may be the reason why Algorithm C is better than Algorithms A and B in terms of the effectiveness. When compared with Algorithm D, Algorithm C computes each \({\mathbf {x}}^0_j\) “optimally” via SVD, while Algorithm D computes \({\mathbf {x}}^0_j\) “sub-optimally” but more efficiently; this explains why Algorithms C performs better than Algorithm D. Concerning the efficiency, Algorithms A and B require for-loop operations, which is known to be slow in Matlab. This leads to that although the algorithms have similar computational complexity in theory (Algorithms B and C), after implementation, their performances are quite different.

Performance of approximation plus iterative algorithms In this part, we first use approximation algorithms to generate \(({\mathbf {x}}^0_1,\ldots ,{\mathbf {x}}^0_d)\), and then use it as an initializer for iterative algorithms. The goal is to see if approximation algorithms can help in improving the solution quality of iterative algorithms. The iterative algorithm used for solving problem (2.3) is simply an alternating maximization method (termed AM in the sequel) with the scheme

for \(j=1,\ldots ,d\) and \(k=1,2,\ldots \). The stopping criterion used for AM is \(\max _j\{ \left\| {\mathbf {x}}_j^{k+1} -{\mathbf {x}}_j^k \right\| \}\le 10^{-5}\), or \(k\ge 2000\). We employ Algorithms C and D in this part, and denote the approximation plus iterative algorithms as Algorithm C + AM and D + AM in the sequel. As a baseline, we also evaluate AM initialized by randomly generated feasible point \(({\mathbf {x}}^\mathrm{random}_1,\ldots ,{\mathbf {x}}^\mathrm{random}_d)\), which is generated the same as the previous part. This algorithm is denoted as Random + AM. The data tensors are also (4.15), where 50 instances are randomly generated for each n. \(r_j=\lfloor 0.3n_j\rfloor \). The objective values \({\mathcal {A}}{\mathbf {x}}^\mathrm{out}_1\cdots {\mathbf {x}}^\mathrm{out}_d\) and CPU time for third- and fourth-order tensors with n varying from 10 to 100 are plotted in Fig. 3a, b, where \(({\mathbf {x}}^\mathrm{out}_1,\ldots ,{\mathbf {x}}^\mathrm{out}_d)\) is the output of AM. Here the CPU time counts both that of approximation algorithms and AM. Figure 3c depicts the number of iterations of AM initialized by different strategies. Algorithm C + AM is in blue, D + AM is in red, and Random + AM is in black.

In terms of objective value, we see from Fig. 3a, b that Algorithm C + AM performs the best, while Algorithm D + AM is slightly worse. Both of them are better than Random + AM, demonstrating that approximation algorithms can indeed help in improving the solution quality of iterative algorithms. Considering the efficiency, Algorithm D + AM is the best among the three, followed by Random + AM. This further demonstrates the advantage of approximation algorithms. In fact, from Fig. 3c, we can see that both Algorithm C and D can help in reducing the number of iterations of AM. However, as we have observed in the previous part, Algorithm C is more time-consuming than Algorithm D, leading to that Algorithm C + AM is the slowest one.

We also try to use Algorithms C and D to initialize AM for \(\ell _1\) regularized model [1]:

where \(\rho _j=0.2\) is set in the experiment. The algorithms are denoted as Algorithm C + AM (\(\ell _1\)), Algorithm D + AM (\(\ell _1\)) and Random + AM (\(\ell _1\)). The results are plotted in Fig. 4, from which we observe similar results as those of Fig. 3; in particular, the efficiency of approximation algorithms plus AM (\(\ell _1\)) is significantly better than Random + AM (\(\ell _1\)), and their number of iterations is much more stable.

Overall, based on the observations of this part, compared with random initializations, iterative algorithms initialized by approximation solutions generated by approximation algorithms is superior both in terms of the solution quality and the running time.

Sparse tensor clustering Tensor clustering aims to cluster matrix or tensor samples into their underlying groups: for N samples \({\mathcal {A}}_i \in \mathbb R^{n_1\times \cdots \times n_d} ,i = 1,\dots ,N\) with \(d\ge 2\) and given clusters \(K\ge 2\), a clustering \(\psi (\cdot )\) is defined as a mapping \(\psi :{\mathbb {R}}^{n_1\times \dots \times n_d}\rightarrow \{1,\dots ,K\}\). Several tensor methods have been proposed for tensor clustering; see, e.g., [26, 31, 32]. Usually, one can first perform a dimension reduction to the samples by means of (sparse) tensor decomposition, and then use classic methods such as K-means to the reduced samples for clustering. Here, we use a deflation method for tensor decomposition, including the proposed approximation algorithms as initialization procedures for AM as subroutines. The whole method for tensor clustering is presented in Algorithm STC, which has some similarities to [31, Algorithm 2]. We respectively use STC (A), STC (B), STC (C), STC (D) to distinguish AM involved in STC initialized by different approximation algorithms. We also denote STC (Random) as AM involved in STC with random initializations.

Denote \(\psi _0\) as the true clustering mapping and let |S| represent the cardinality of a set S. The following metric is commonly used to measure clustering performance [34]:

Synthetic data This experiment is similar to [31, Sect. 5.2]. Consider N sparse matrix samples \(\{A_i\in \mathbb {R}^{n_1\times n_2},i = 1,\dots ,N\}\) with \(n_1=n_2=20\) as follows:

where \(\Sigma ={\mathbf {v}}{\mathbf {v}}^{\top } \in {\mathbb {R}}^{4\times 4}\) with \({\mathbf {v}} = [1,-1,0.5,-0.5]^{\top }\), and \({\mathbf {0}}\) is a zero matrix of size \(12\times 12\). We stack these samples into a third order tensor \(\mathcal {T}\in \mathbb {R}^{n_1\times n_2 \times N}\) with \(\mathcal T(:,:,i)=A_i\). We apply STC (A), STC (B), STC (C), STC (D), and the vanilla K-means to the tensor \(\mathcal T^* = \frac{\mathcal T}{||\mathcal T||_F} + \sigma \frac{\mathcal E}{||\mathcal E||_F}\) where \(\mathcal E\) is a noisy tensor and \(\sigma \) is the noise level. Here vanilla K-means stands for directly applying K-means to the vectorizations of \(A_i\)’s without tensor methods. We vary the sample number \(N = \{20,40\}\) and noise level \(\sigma = \{0.1,0.5,0.9\}\), and set \(\mu = 0.5\). To select parameters, we apply a similar Bayesian Information Criterion as [1]: For a set of parameter combinations defined as the cartesian product of the set \( \mathfrak {R}\) of given ranks and the sets \( \mathfrak {r}\) of tuning parameters, namely, \( \mathfrak {R}\times \mathfrak {r} = \{(R,\varvec{r})|R\in \mathfrak {R},\varvec{r}\in \mathfrak {r}\}\), we select \(( R^*, {\varvec{r}^*})=\arg \min _{\mathfrak {R}\times \mathfrak {r}} BIC(R,\varvec{r})\) with

where \( {\alpha }_l\) and \({\mathbf {x}}^l_j\) are computed by Algorithm STC.

For tuning procedure, we set \( \mathfrak {R} = \{4,6\}\) and \(\mathfrak {r}= \{(7,7,N),(8,8,N)\}\). For the number K of clusters, we employ the widely used evalclusters function in Matlab which evaluate each proposed number of clusters in a set \(\{K_1,\cdots ,K_m\}\) and select the smallest number of clusters satisfying \(Gap(K)\ge Gap(K+1) - SE(K+1),\) where Gap(K) is the gap value for the clustering solution with K clusters, and \(SE(K + 1)\) is the standard error of the clustering solution with \(K + 1\) clusters. The results are reported in Table 2 averaged over 50 instances in each case, where bold means that the cluster error is the lowest among all the methods.

Table 2 shows that the cluster error of STC with any approximation algorithm is smaller than that of the vanilla K-means in all cases and is even zero in some case when the noise level is not high, where the best one is STC (C), followed by STC (D). This shows the accuracy and robustness of our method. Considering the CPU time, among the four tensor methods, STC (D) is the most efficient one, followed by STC (C), while STC (B) needs more time than the other three. However, the computational time of tensor methods is not as good as that of the vectorized K-means, which is because of the increasing cost of the tuning procedure via (4.18) with a pre-specified set of parameter combinations.

Real data We test the clustering performance of STC (D), STC (Random), and the vanilla K-means on Columbia Object Image Library COIL-20 [23] for image clustering. The data contains 20 objects viewed from varying angles and each image is of size \(128\times 128\). The images are in grayscale, and the background of the objects is black, resulting in that the images can be seen as sparse matrices. In this experiment, we consider \(K = \{5, 6, 7, 8, 9, 10\}\) objects and pick up 36 images from each object for clustering, giving \(\mathcal T\in {\mathbb {R}}^{128\times 128\times 36K}\). We still tune parameters of Algorithm STC via (4.18) and the evalclusters function in Matlab, and set \( \mathfrak {R} = \{40,45\}\) and \(\mathfrak {r}= \{(75,75,36K),(80,80,36K)\}\). The experiment has been repeated 20 times for each K, and the averaged results are shown in Table 3.

Table 3 shows that the cluster error of tensor methods is still better than that of the vanilla K-means, while STC (D) is slightly better than STC (Random). On the other hand, the computational time of STC (D) is less than that of STC (Random). However, it still needs more time than that of the vanilla K-means. There are two possible reasons for this phenomenon: first, it takes more time to tune parameters via (4.18); and second, our approach deals directly with data tensors instead of reshaping them into vectors, which preserves their intrinsic structures but naturally increases the computational complexity at the same time.

Overall, tensor methods armed with an approximation algorithm to generate good initializers show its ability to better exploring the data structure for clustering, and it would be interesting to further improve the efficiency.

5 Conclusions

Sparse tensor BR1Approx problem can be seen as a sparse generalization of the dense tensor BR1Approx problem and a higher-order extension of the matrix BR1Approx problem. In the literature, little attention was paid to approximation algorithms for sparse tensor BR1Approx. To fill this gap, four approximation algorithms were developed in this work, which are of low computational complexity, easily implemented, and all admit theoretical guaranteed approximation bounds. Some of the proposed algorithms and the associated aproximation bounds generalize their matrix or dense tensor counterparts, while Algorithm D, which is the most efficient one, is even new when reducing to the matrix or dense tensor cases. Numerical experiments on synthetic as well as real data showed the effectiveness and efficiency of the developed algorithms; in particular, we observed that compared with random initializations, our algorithms can improve the performance of iterative algorithms for solving the problem in question. Possible future work is to design algorithms with better approximation ratio; following [12, Theorem 4.3], we conjecture that the best ratio might be \(O(\sqrt{\prod ^{d}_{j=1}\frac{r_j}{n_j}} \cdot \sqrt{\prod ^{d-2}_{j=1} \frac{\ln n_j }{ n_j} } )\). On the other hand, Qi defined and studied the best rank-1 approximation ratio of a tensor space [27]; it would be interesting to extend this notion to the sparse best rank-1 approximation setting and study its bounds.

Notes

Otherwise, from the analysis of Lemma 3.1, \(\Vert A_2^{\top } {{\mathbf {x}}_2^0}\Vert = \sqrt{ \frac{r_2}{n_2} }\lambda _{\max }(A_2)\) if and only if 1) \(A_2^{\top }\bar{{\mathbf {x}}}_2 = \alpha A_2^{\top }{\mathbf {x}}_2^0\) for some \(\alpha \in {\mathbb {R}}\), and 2) \( \left\langle \bar{{\mathbf {x}}}_2 , {\mathbf {x}}_2^0\right\rangle = \sqrt{ \frac{r_2}{n_2} }\). 2) holds if and only if every entry of \(\bar{{\mathbf {x}}}_2\) takes the same value, which together with \(r_2<n_2\) implies that \(\bar{{\mathbf {x}}}_2-\alpha {\mathbf {x}}_2^0\ne 0\); however, this and 1) lead to \(\mathrm{rank}(A_2)<n_2\), deducing a contradiction.

Due to the structure of \({\mathcal {A}}\), \({\mathcal {A}}{\mathbf {x}}_1{\mathbf {x}}_2{\mathbf {x}}_3 = \left\langle {\mathbf {e}} , {\mathbf {x}}_1\right\rangle \cdot {\mathbf {x}}_2^{\top }A{\mathbf {x}}_3\) with \({\mathbf {e}} = [1~1~1~1]^{\top }\). It is clear that \({\mathbf {x}}_1^*=\frac{1}{\sqrt{2}}[ 1~ 1~ 0~ 0]^{\top }\). Denote \({\mathbf {x}}_2^*:={\mathbf {x}}_1^*\), and \({\mathbf {x}}_3^*:=\frac{1}{\sqrt{2}}[0~1~0~1]^{\top }\). Since \(({\mathbf {x}}_2^*)^{\top }A{\mathbf {x}}_3^* = \lambda _{\max }(A) = 2\), \(({\mathbf {x}}_2^*,{\mathbf {x}}_3^*)\) is a maximizer to \(\max _{\Vert {\mathbf {x}}_i\Vert =1,\Vert {\mathbf {x}}_i\Vert _0\le 2,i=2,3}{\mathbf {x}}_2^{\top }A{\mathbf {x}}_3\), and so \(({\mathbf {x}}_1^*,{\mathbf {x}}_2^*,{\mathbf {x}}_3^*)\) is a maximizer to (2.3), with \({\mathcal {A}}{\mathbf {x}}_1^*{\mathbf {x}}_2^*{\mathbf {x}}_3^*=2\sqrt{2}\).

References

Allen, G.I.: Sparse higher-order principal components analysis. In: International Conference on Machine Learning, pp. 27–36 (2012)

Chan, S.O., Papailliopoulos, D., Rubinstein, A.: On the approximability of sparse PCA. In: Conference on Learning Theory, pp. 623–646 (2016)

Chi, E.C., Kolda, T.G.: On tensors, sparsity, and nonnegative factorizations. SIAM J. Matrix Anal. Appl. 33(4), 1272–1299 (2012)

Cichocki, A.: Era of big data processing: a new approach via tensor networks and tensor decompositions (2014). arXiv:1403.2048

Cichocki, A., Mandic, D., De Lathauwer, L., Zhou, G., Zhao, Q., Caiafa, C., Phan, H.A.: Tensor decompositions for signal processing applications: from two-way to multiway component analysis. IEEE Signal Process. Mag. 32(2), 145–163 (2015)

Comon, P.: Tensors: a brief introduction. IEEE Signal Process. Mag. 31(3), 44–53 (2014)

Comon, P., Golub, G.H.: Tracking a few extreme singular values and vectors in signal processing. Proc. IEEE 78(8), 1327–1343 (1990)

Comon, P., Luciani, X., De Almeida, A.: Tensor decompositions, alternating least squares and other tales. J. Chemometr. 23(7–8), 393–405 (2009)

d’Aspremont, A., El Ghaoui, L., Jordan, M.I., Lanckriet, G.R.G.: A direct formulation for sparse PCA using semidefinite programming. SIAM Rev. 49(3), 434–448 (2007)

De Lathauwer, L., De Moor, B., Vandewalle, J.: On the best rank-\(1\) and rank-(\({R}_1,{R}_2,\ldots,{R}_n\)) approximation of higer-order tensors. SIAM J. Matrix Anal. Appl. 21, 1324–1342 (2000)

d’Aspremont, A., Bach, F., El Ghaoui, L.: Approximation bounds for sparse principal component analysis. Math. Progam. 148(1–2), 89–110 (2014)

He, S., Jiang, B., Li, Z., Zhang, S.: Probability bounds for polynomial functions in random variables. Math. Oper. Res. 39(3), 889–907 (2014)

He, S., Li, Z., Zhang, S.: Approximation algorithms for homogeneous polynomial optimization with quadratic constraints. Math. Program. Ser. B 125, 353–383 (2010)

Hillar, C.J., Lim, L.H.: Most tensor problems are NP-hard. J. ACM 60(6), 45:1–45:39 (2013)

Jiang, B., Ma, S., Zhang, S.: Tensor principal component analysis via convex optimization. Math. Program. Ser. A 150, 423–457 (2015)

Kolda, T.G., Bader, B.W.: Tensor decompositions and applications. SIAM Rev. 51, 455–500 (2009)

Kolda, T.G., Bader, B.W., Kenny, J.P.: Higher-order web link analysis using multilinear algebra. In: Fifth IEEE International Conference on Data Mining, pp. 242–249. IEEE (2005)

Kolda, T.G., Mayo, J.R.: Shifted power method for computing tensor eigenpairs. SIAM J. Matrix Anal. Appl. 32(4), 1095–1124 (2011)

Lim, L.H.: Singular values and eigenvalues of tensors: a variational approach. In: 2005 1st IEEE International Workshop on Computational Advances in Multi-Sensor Adaptive Processing, vol. 1, pp. 129–132 (2005)

Luss, R., Teboulle, M.: Conditional gradient algorithms for rank-one matrix approximations with a sparsity constraint. SIAM Rev. 55(1), 65–98 (2013)

Madrid-Padilla, O.H., Scott, J.: Tensor decomposition with generalized lasso penalties. J. Comput. Graph. Stat. 26(3), 537–546 (2017)

Magdon-Ismail, M.: NP-hardness and inapproximability of sparse PCA. Inf. Process. Lett. 126, 35–38 (2017)

Nene, S.A., Nayar, S.K., Murase, H.: Columbia object image library (COIL-20). Technical Report CUCS-005-96 (1996). https://www.cs.columbia.edu/CAVE/software/softlib/coil-20.php

Ng, M.K.P., Li, X., Ye, Y.: Multirank: co-ranking for objects and relations in multi-relational data. In: Proceedings of the 17th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 1217–1225 (2011)

Nie, J., Wang, L.: Semidefinite relaxations for best rank-1 tensor approximations. SIAM J. Matrix Anal. Appl. 35(3), 1155–1179 (2014)

Papalexakis, E.E., Sidiropoulos, N.D., Bro, R.: From K-means to higher-way co-clustering: multilinear decomposition with sparse latent factors. IEEE Trans. Signal Process. 61(2), 493–506 (2012)

Qi, L.: The best rank-one approximation ratio of a tensor space. SIAM J. Matrix Anal. Appl. 32(2), 430–442 (2011)

Qi, L., Chen, H., Chen, Y.: Tensor Eigenvalues and Their Applications, vol. 39. Springer, Berlin (2018)

Sidiropoulos, N.D., De Lathauwer, L., Fu, X., Huang, K., Papalexakis, E.E., Faloutsos, C.: Tensor decomposition for signal processing and machine learning. IEEE Trans. Signal Process. 65(13), 3551–3582 (2017)

Sun, W.W., Li, L.: Store: sparse tensor response regression and neuroimaging analysis. J. Mach. Learn. Res. 18(1), 4908–4944 (2017)

Sun, W.W., Li, L.: Dynamic tensor clustering. J. Am. Stat. Assoc. 114(528), 1894–1907 (2019)

Sun, W.W., Lu, J., Liu, H., Cheng, G.: Provable sparse tensor decomposition. J. R. Stat. Soc. Ser. B 79(3), 899–916 (2017)

Vervliet, N., Debals, O., Sorber, L., Van Barel, M., De Lathauwer, L.: Tensorlab 3.0 (2016). http://www.tensorlab.net

Wang, J.: Consistent selection of the number of clusters via crossvalidation. Biometrika 97(4), 893–904 (2010)

Wang, Y., Dong, M., Xu, Y.: A sparse rank-1 approximation algorithm for high-order tensors. Appl. Math. Lett. 102, 106140 (2020)

Witten, D.M., Tibshirani, R., Hastie, T.: A penalized matrix decomposition, with applications to sparse principal components and canonical correlation analysis. Biostatistics 10(3), 515–534 (2009)

Yang, Y., Feng, Y., Huang, X., Suykens, J.A.K.: Rank-1 tensor properties with applications to a class of tensor optimization problems. SIAM J. Optim. 26(1), 171–196 (2016)

Zhang, A., Han, R.: Optimal sparse singular value decomposition for high-dimensional high-order data. J. Am. Stat. Assoc. 114(528), 1708–1725 (2019)

Zhang, X., Qi, L., Ye, Y.: The cubic spherical optimization problems. Math. Comput. 81(279), 1513–1525 (2012)

Zou, H., Hastie, T., Tibshirani, R.: Sparse principal component analysis. J. Comput. Graph. Stat. 15(2), 265–286 (2006)

Acknowledgements

We thank the editor and the anonymous reviewers for their insightful comments and suggestions that helped improve this manuscript. This work was supported by the National Natural Science Foundation of China Grants 11801100 and 12171105, the Fok Ying Tong Education Foundation Grant 171094, and the Innovation Project of Guangxi Graduate Education Grant YCSW2020055. The datasets generated during and/or analysed during the current study are available from [23], and from the corresponding author on reasonable request.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Mao, X., Yang, Y. Several approximation algorithms for sparse best rank-1 approximation to higher-order tensors. J Glob Optim 84, 229–253 (2022). https://doi.org/10.1007/s10898-022-01140-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10898-022-01140-4