Abstract

This paper proposes a joint decomposition method that combines Lagrangian decomposition and generalized Benders decomposition, to efficiently solve multiscenario nonconvex mixed-integer nonlinear programming (MINLP) problems to global optimality, without the need for explicit branch and bound search. In this approach, we view the variables coupling the scenario dependent variables and those causing nonconvexity as complicating variables. We systematically solve the Lagrangian decomposition subproblems and the generalized Benders decomposition subproblems in a unified framework. The method requires the solution of a difficult relaxed master problem, but the problem is only solved when necessary. Enhancements to the method are made to reduce the number of the relaxed master problems to be solved and ease the solution of each relaxed master problem. We consider two scenario-based, two-stage stochastic nonconvex MINLP problems that arise from integrated design and operation of process networks in the case study, and we show that the proposed method can solve the two problems significantly faster than state-of-the-art global optimization solvers.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Global optimization is a field of mathematical programming devoted to obtaining global optimal solutions; and it has over the years found enormous applications in Process Systems Engineering (PSE). Mixed-integer nonlinear programs are global optimization problems where some decision variables are integer while others are continuous. Discrete decisions and nonconvex nonlinearities introduce combinatorial behavior for such problems [1, 2]. Various applications of mixed-integer nonlinear programming for PSE systems include natural gas network design and operation [3], gasoline blending and scheduling problems [4], expansion of chemical processes [5], reliable design of software [6, 7], pump network problem [8, 9], chemical process design synthesis [10], planning of facility investments for electric power generation [11], etc.

As adopted for mixed-integer linear programing (MILP), branch-and-bound has been employed for global optimization of nonconvex mixed-integer nonlinear programs (MINLP) [2, 12, 13]. The method entails systematically generating lower and upper bounds of the optimal objective function value over subdomains of the search space. The lower bounds can be generated via convex relaxations (such as McCommick relaxations [14]) or Lagrangian relaxation (or called Lagrangian decomposition) [15,16,17]. Ways of generating multipliers for the Lagrangian subproblem exist, including subgradient methods [18], cutting plane methods [15], and the Dantzig–Wolfe master problem (also known the restricted Lagrangian master problem) [19, 20].

Branch-and-bound based strategies can be improved by incorporation of domain reduction techniques. Domain reduction entails eliminating portions of the feasible domain based on feasibility and optimality. Bound tightening or contraction [21], range reduction [22] and generation of cutting planes [23] are different domain reduction strategies that have been successful in solving nonconvex problems [7]. In bound contraction, the variable bounds are shrunk at every iteration by solving bound contraction subproblems [21]. In range reduction, the bounds on the variables are shrunk based on simple calculations using Lagrange multiplier information [22]. For cutting planes generation, Lagrangian relaxation information provides cuts that is used to cut-off portion of the feasible domain that does not contain the global optimum [24]. Current state-of-the-art commercial deterministic global optimization solvers embody branch-and-bound and enhancements such as tighter convex relaxations and domain reduction techniques, such as the Branch-And-Reduce Optimization Navigator (BARON) [2] and Algorithms for coNTinuous/Integer Global Optimization of Nonlinear Equations (ANTIGONE) [25]. They do provide rigorous frameworks for global optimization of Problem (P0).

Branch-and-bound based methods have been successful for global optimization, mostly for small to medium sized problems. However, when the size of the problem becomes large, the branch-and-bound steps needed for convergence can be prohibitively large. A typical example of large-scale nonconvex MINLP is the following multiscenario optimization problem:

where \(x_0\) links s subparts of the model that are indexed by \(\omega \), and it is called linking variable in the paper. We assume that at least one of the functions \(f_{0,\omega }: X_0 \rightarrow {\mathbb {R}}\), \(f_{\omega }: V_{\omega } \rightarrow {\mathbb {R}}\), \(g_{0,\omega }: X_0 \rightarrow {\mathbb {R}}^m\) , \(g_{\omega }: V_{\omega } \rightarrow {\mathbb {R}}^m\) or one of the sets \(X_0\) and \(V_{\omega }\) is nonconvex, so Problem (P0) is a nonconvex MINLP, or a nonconvex nonlinear program (NLP) if no integer variables are involved. Clearly, (P0) is a large-scale problem when s is large. Problem (P0) has attracted more and more attention over the last 20 years in the field of PSE [26]. It usually arises from scenario-based two-stage stochastic programming [27, 28], for which \(x_0\) represents the first stage decisions that are made before the uncertainty is realized and \(v_\omega \) represents second-stage decisions that are made after the uncertainty is revealed in scenario \(\omega \). Functions \(f_{0,\omega }\) and \(f_\omega \) represent probability times costs associated with \(x_0\) and \(v_{\omega }\) for every scenario \(\omega \). Problem (P0) can also arise from integrated system design and operation problems which consider system operation over multiple time periods (but without uncertainties), such as for energy polygeneration plants [29] and electrical power distribution networks [30]). In this case, \(x_0\) represents system design decisions and \(x_\omega \) represents system operational decisions for time period (or scenario) \(\omega \), and \(f_{0,\omega }\) and \(f_{\omega }\) represent frequency of occurrence of time period \(\omega \) times investment cost and operational cost, respectively. In this paper, we focus on how to efficiently solve Problem (P0) to global optimality, rather than how to generate scenarios and probabilities for stochastic programming or the time periods and their occurrence frequencies for multiperiod optimization.

It is well-known that Problem (P0) has a decomposable structure that could be exploited for efficient solution. Benders decomposition (BD) [31] (known as L-shaped method in the stochastic programming literature [27, 28]) is one class of decomposition methods applied for MILPs. Geoffrion [32] generalized BD into Generalized Benders Decomposition (GBD), for solving convex MINLPs. Li et al. developed a further extension, called Nonconvex Generalized Benders Decomposition [4], for solving nonconvex MINLPs, but this method can guarantee global optimality only if the linking variable is fully integer. Karuppiah and Grossmann applied a Lagrangian decomposition-based scheme to solve Problem (P0) [33]; in order to guarantee convergence to a global optimum, explicit branch-and-bound of linking variables is needed. They also presented bound contraction as an optional scheme in their Lagrangian-based branch-and-bound strategy. Shim et al. [34] proposed a method that combines Lagrangian decomposition and BD together with branch-and-bound (to ensure convergence), in order to solve a class of bilevel programs with an integer program in the upper-level and a complementarity problem in the lower-level. A more recent algorithm combining NGBD and Lagrangian decomposition was proposed by Kannan and Barton [35], and this algorithm also requires explicit branch-and-bound for convergence.

Efforts have been taken to achieve better computational efficiency by combining classical decomposition methods. In 1983, Van Roy proposed a cross decomposition method that combines Lagrangian decomposition and Benders decomposition [19] to solve MILP problems which do not have non-linking integer variables. Since then, a number of extensions and variants of cross decomposition have been developed [20, 36,37,38,39,40]. All of these methods require that no nonconvexity comes from non-linking variables as otherwise finite convergence cannot be guaranteed.

The performance of branch-and-bound based solution methods depends heavily on the branching and node selection strategies, but what the best strategies are for a particular problem are usually unknown. In addition, branching and node selection strategies are not able to fully exploit the problem structure. Therefore, the goal of this paper is to develop a new decomposition method for global optimization of Problem (P0), which does not require explicit branch-and-bound. The new decomposition method was inspired by cross decomposition, and it follows a similar algorithm design philosophy, combining primarily generalized Benders decomposition and Lagrangian decomposition. However, its decomposition procedure is rather different in many details due to the nonconvexity it has to deal with, so we do not call it cross decomposition, but a new name joint decomposition. To the best of our knowledge, this is the first decomposition method that can solve Problem (P0) to global optimality without explicitly performing branch-and-bound (but the solution of nonconvex subproblems requires branch-and-bound based solvers).

The remaining part of the article is organized as follows. In Sect. 2, we give a brief introduction to generalized Benders decomposition and Lagrangian decomposition, using a reformulation of Problem (P0). Then in Sect. 3, we present the basic joint decomposition algorithm and the convergence proof. Section 4 discusses enhancements to the basic joint decomposition algorithm, including domain reduction and use of extra convex relaxation subproblems. The joint decomposition methods are tested with two case study problems adapted from the literature, and the simulation results demonstrate the effectiveness and the computational advantages of the methods. The article ends with concluding remarks in Sect. 6.

2 Problem reformulation and classical decomposition methods

In order to bring up the joint decomposition idea, we reformulate Problem (P0) and briefly discuss how the reformulated problem can be solved via classical GBD and LD methods. The reformulation starts by separating the convex part and the nonconvex part of the problem, and it ends up in the following form:

where set \(X_\omega \subset {\mathbb {R}}^{n_x}\) is convex, set \(Y_{\omega } \subset {\mathbb {R}}^{n_y}\) is nonconvex, and set \(x_0 \subset {\mathbb {R}}^{n_0}\) can be either convex or nonconvex. The first group of equations in (P) are nonanticipativity constraints (NACs) [17, 24, 41], where matrix \(H_\omega \in {\mathbb {R}}^{n_0} \times {\mathbb {R}}^{n_{x}}\) selects from \(x_{\omega }\) the duplicated \(x_0\) for scenario \(\omega \). The details of transforming (P0) to (P) are provided in “Appendix A”.

\(x_0\) and \(y_\omega \) are the two reasons why Problem (P) is difficult to solve. Linking variables \(x_0\) couple different subparts of the model and they cause nonconvexity if set \(X_0\) is nonconvex. Variables \(y_{\omega }\) cause nonconvexity due to the nonconvexity of set \(Y_\omega \). If the values of \(x_0\) and \(y_{\omega }\) are fixed, the problem will be much easier to solve. Therefore, in this paper we call \(x_0\) and \(y_{\omega }\)complicating variables. In order to distinguish the two sets of variables, we also call \(x_0\)linking variables, and \(y_{\omega }\)non-linking complicating variables. We also call \(x_\omega \)non-complicating variables.

The classical GBD method can be used to solve Problem (P) by treating \(x_0\) and \(y_{\omega }\) as complicating variables, while the LD method can be used to solve Problem (P) by dualizing NACs so that \(x_0\) no long links different scenarios. In the next two subsections we briefly introduce GBD and LD for Problem (P), and we make the following assumptions for Problem (P) for convenience of discussion.

Assumption 1

\(X_0\), \(X_{\omega }\) and \(Y_{\omega }\) for all \(\omega \in \{1,\ldots ,s\}\) are non-empty and compact.

Assumption 2

After fixing \((x_0, y_1, \cdots , y_s )\) to any point in \(X_0 \times Y_1 \times \cdots \times Y_s\), if Problem (P) is feasible, it satisfies Slater condition.

Assumption 1 is a mild assumption, as for most real-world applications, the variables are naturally bounded and the functions involved are continuous. If a discontinuous function is involved, it can usually be expressed with continuous functions and extra integer variables. Assumption 2 ensures strong duality of convex subproblems that is required for GBD. If this assumption is not satisfied for a problem, we can treat the non-complicating variables that fail the Slater condition to be complicating variables, so that after fixing all complicating variables the Slater condition is satisfied.

2.1 Generalized Benders decomposition

At each GBD iteration l, fixing the complicating variables \(x_0=x_0^{(l)}\), \(y_{\omega }=y_{\omega }^{(l)}\) (\(\forall \omega \in \{1,\ldots ,s\}\)) results in an upper bounding problem that can be decomposed into the following Benders primal subproblem for each scenario \(\omega \):

\(obj_{\text {BPP}_\omega ^l}\) is the optimal objective value of (\(\hbox {BPP}_{\omega }^{(l)}\)). For convenience, we indicate the optimal objective value of a problem in the above way for all subproblems discussed in this paper. Obviously, \(\sum _{\omega =1}^{s}obj_{\text {BPP}_\omega ^{(l)}}\) represents an upper bound for Problem (P). If (\(\hbox {BPP}_{\omega }^{(l)}\)) is infeasible for one scenario, then solve the following Benders feasibility subproblem for each scenario \(\omega \):

where \({z}_{1,\omega }^+\), \({z}_{1,\omega }^-\), and \({z}_{2,\omega }\) are slack variables. Note that (\(\hbox {BFP}_{\omega }^{(l)}\)) is always feasible according to Assumption 1. Solution of (\(\hbox {BFP}_{\omega }^{(l)}\)) provides a feasibility cut (that is described below), which prevents the generation of the same infeasible \(x_0^{l}\) and \(y_\omega ^{(l)}\) [42].

At the same iteration, the following Benders relaxed master problem is solved to yield a lower bound for Problem (P):

where \(\mu _\omega ^{(l)}\) includes Lagrange multipliers for the first group of constraints in Problem (\(\hbox {BPP}_{\omega }^{(l)}\)) or (\(\hbox {BFP}_{\omega }^{(l)}\)), and \(\lambda _\omega ^{(l)}\) includes Lagrange multipliers for the second group of constraints in Problem (\(\hbox {BPP}_{\omega }^{(l)}\)) or (\(\hbox {BFP}_{\omega }^{(l)}\)). Set \(T^{(l)}\) includes indices of Benders iterations at which only (\(\hbox {BPP}_{\omega }^{(l)}\)) is solved, and set \(S^{(l)}\) includes indices of Benders iterations at which (\(\hbox {BFP}_{\omega }^{(l)}\)) is solved. Note that Problem (\(\hbox {BRMP}^{(l)}\))) is used in the multicut BD or GBD, which is different from the one used in the classical single cut BD or GBD. The multicut version of the Benders master problem is known to be tighter than the single cut version [43, 44], so it is considered in this paper.

Remark 1

The finite convergence property of GBD is stated and proved in [32]. In Sect. 3, we will provide more details in the context of our new decomposition method.

Remark 2

For (P), the relaxed master problem (\(\hbox {BRMP}^{(l)}\)) can still be very difficult as its size grows with the number of scenarios. However, if most variables in (P) are non-complicating variables, the size of (\(\hbox {BRMP}^{(l)}\)) is much smaller than that of (P), and then (\(\hbox {BRMP}^{(l)}\)) is much easier to solve than (P).

2.2 Lagrangian decomposition

We start discussing LD from the Lagrangian dual of Problem (P) that is constructed by dualizing the NACs of the problem:

where \(obj_{\text {LS}}(\pi _1, \cdots ,\pi _s)\) is the optimal objective value of the following Lagrangian subproblem with given \((\pi _1, \cdots ,\pi _s)\):

Due to weak duality, Problem (DP) or any Lagrangian subproblem is a lower bounding problem for Problem (P). Typically, the LD method is incorporated in a branch-and-bound framework that only needs to branch on linking variables \(x_0\) to guarantee convergence to an \(\epsilon \)-optimal solution. At each branch-and-bound node or LD iteration k, a set of multipliers \((\pi _1^k, \cdots , \pi _s^k)\) are selected to construct a Lagrangian subproblem for (DP), and this subproblem can be naturally decomposed into \(s+1\) subproblems, i.e.,

and

for all \(\omega \in \{1,\cdots , s\}\). Let \(obj_{LS^k}\) be the optimal objective value of the Lagrangian subproblem, then \(obj_{\text {LS}^k}=\sum _{\omega =1}^{s}obj_{{LS}_\omega ^k} +obj_{{LS_0}^k}\). Clearly, \(obj_{\text {LS}^k} \le obj_{\text {DP}}\) always holds. If \((\pi _1^k, \cdots , \pi _s^k)\) happens to be an optimal solution of (DP), then \(obj_{\text {LS}^k}=obj_{\text {DP}}\).

The upper bounds in the LD methods are typically generated by fixing \(x_0\) to certain values. At each iteration k, an upper bounding problem, or called primal problem, is constructed via fixing \(x_0=x_0^k\) (which may be the solution of (\(\hbox {LS}_0^k\))), and this problem can be separated into s primal subproblem in the following form:

Let \(obj_{{PP}^k}\) be the optimal objective value of the primal problem, then \(obj_{{PP}^k}=\sum _{\omega =1}^sobj_{\text {PP}_\omega ^k}\).

For generation of multipliers, we take the idea from Dantzig–Wolfe decomposition, which is essentially a special LD method. Consider the convex hull of nonconvex set \(Y_\omega \):

where \(y_{\omega }^{[i]}\) denotes a point in \(Y_{\omega }\) that is indexed by i. The index set I may need to be an infinite set for \({\tilde{Y}}_{\omega }\) being the convex hull. Replace \(Y_\omega \) with its convex hull for all \(\omega \) in (P), then we get the following Dantzig–Wolfe master problem, or called primal master problem in this paper:

Clearly, Problem (PMP) is a relaxation of Problem (P), and it is either fully convex or partially convex (as set \(X_0\) can still be nonconvex). At LD iteration k, the following restriction of (PMP) can be solved:

where index set \({I^{k}} \subset I\) is finite. \({I^{k}}\) may consist of indices of \(y_\omega \) that are generated in the previously solved primal problems and Lagrangian subproblems. Replacing set I with set \(I^k\) is a restriction operation, so (\(\hbox {RPMP}^k\)) is a restriction of (PMP). Since (PMP) is a relaxation of (P), (\(\hbox {RPMP}^k\)) is neither a relaxation nor a restriction of (P), so it does not yield an upper or a lower bound of (P). The role of (\(\hbox {RPMP}^k\)) in joint decomposition is to generate multipliers for NACs to construct a Lagrangian subproblem, and to generate \(x_0^{k+1}\) to construct (\(\hbox {PP}^{k+1}_{\omega }\)). Problem (\(\hbox {RPMP}^k\)) can be solved by an optimization solver or by GBD.

Actually, we can construct a different Lagrangian dual of Problem (P) by dualizing both the NACs and the second group of constraints in the problem, as what we do for GBD in the last subsection. However, this Lagrangian dual is not as tight as Problem (DP) (as stated by the following proposition), so it is not preferred for a LD method. The following proposition follows from Theorem 3.1 of [17] and its proof is omitted here.

Proposition 1

Consider the following Lagrangian dual of Problem (P):

where

The duality gap of (DP) is no larger than the duality gap of (DP2).

3 The joint decomposition method

3.1 Synergizing LD and GBD

In the LD method described in the last section, at each iteration the subproblems to be solved are much easier than the original problem (P), as either the size of the subproblem is independent of number of scenarios, such as (\(\hbox {PP}_{\omega }^k\)), (\(\hbox {LS}_0^k\)), and (\(\hbox {LS}_{\omega }^k\)), or the subproblem is a MILP or convex MINLP that can be solved by existing optimization solvers or by GBD relatively easily, such as (\(\hbox {RPMP}^k\)). However, without branching on the linking variables \(x_0\), LD cannot guarantee finding a global solution, and we do not always know how to exploit the problem structure to efficiently branch on \(x_0\) and whether the branching can be efficient enough.

On the other hand, GBD can find a global solution, but it requires solving the nonconvex relaxed master problem (\(\hbox {BRMP}^{(l)}\)) at each iteration. The size of (\(\hbox {BRMP}^{(l)}\)) may be much smaller than the size of (P) if most variables in (P) are non-complicating variables, but (\(\hbox {BRMP}^{(l)}\)) can still be difficult to solve, especially considering that it needs to be solved at each iteration and its size grows with the number of iterations.

Therefore, there may be a way to combine LD and GBD, such that we solve as many LD subproblems and Benders primal subproblems as possible (as they are relatively easy to solve), but avoid solving many difficult Benders relaxed master problems (\(\hbox {BRMP}^{(l)}\)). This idea is similar to the one that motivates cross decomposition [19], but it leads to very different subproblems and a very different algorithmic procedure. The subproblems are very different, because for problem (P), we prefer dualizing only NACs in LD in order to achieve the smallest possible dual gap (according to Proposition 1), but we have to dualize both the NACs and the second group of constraints in GBD. In addition, due to the different nature of the subproblems, the order in which the subproblems are solved and how often the problems are solved are different. Therefore, we do not name the proposed method cross decomposition, but call it joint decomposition (JD).

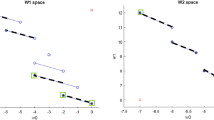

Figure 1 shows the basic framework of JD. Each JD iteration includes one LD iteration part, as indicated by the solid lines, and possibly one GBD iteration, as indicated by the dashed lines. In a JD iteration, the GBD iteration is performed only when the LD iteration improves over the previous LD iteration substantially. The GBD iteration is same to the one described in the last section, except that the relaxed master problem (\(\hbox {BRMP}^{(l)}\)) includes more valid cuts (which will be described later). The LD iteration is slightly different from the one described in the last section. One difference is that, after solving (\(\hbox {PP}_{\omega }^k\)) at LD iteration k, a Benders primal problem (\(\hbox {BPP}^k\)) is constructed using \(x_0^k\) (which is used for constructing (\(\hbox {PP}_{\omega }^k\))) and \((y_{1}, \cdots , y_{s})\) (which is from the optimal solution of (\(\hbox {PP}_{\omega }^k\))). The (\(\hbox {BPP}^k\)) is solved to generate a Benders cut that can be added to (\(\hbox {BRMP}^{(l)}\)). The other difference is that (\(\hbox {RPMP}^k\)), (\(\hbox {LS}_0^k\)), (\(\hbox {LS}_{\omega }^k\)) (decomposed from (\(\hbox {LS}^k\))) slightly differ from the ones described in the last section, and they will be described later.

Remark 3

The JD method requires that all subproblems can be solved using an existing optimization solver within reasonable time. If this requirement is not met, then JD does not work, or we have to further decompose the difficult subproblems into smaller, solvable subproblems.

3.2 Feasibility issues

According to Assumption 1, a subproblem in JD either has a solution or is infeasible. Here we explain how JD handles infeasibility of a subproblem.

First, if a lower bounding problem (\(\hbox {LS}^k\)) or (\(\hbox {BRMP}^{(l)}\)) is infeasible, then the original problem (P) is infeasible and JD can terminate.

Second, if (\(\hbox {BPP}^k\)) or (\(\hbox {BPP}^{(l)}\)) is infeasible, then JD will solve the corresponding Benders feasibility problem (\(\hbox {BFP}^k\)) or (\(\hbox {BFP}^{(l)}\)) to yield a feasibility cut. If (\(\hbox {BFP}^k\)) or (\(\hbox {BFP}^{(l)}\)) is infeasible, then (P) is infeasible and JD can terminate.

Third, if (\(\hbox {PP}_{\omega }^k\)) is infeasible, then JD will solve a feasibility problem that “softens” the second group of constraints: and this problem can be separated into s subproblems as follows:

If (\(\hbox {FP}_{\omega }^k\)) is infeasible for one scenario \(\omega \), then (P) is infeasible and JD can terminate. If (\(\hbox {FP}_{\omega }^k\)) is feasible for all scenarios, then JD can construct and solve a feasible Benders feasibility problem (\(\hbox {BFP}^k\)) to yield a Benders feasibility cut for (\(\hbox {BRMP}^{(l)}\)).

Finally, problem (\(\hbox {RPMP}^k\)) can actually be infeasible if none of the \((y^{[i]}_1, \cdots , y^{[i]}_s)\) in the problem is feasible for the original problem (P). To prevent this infeasibility, we can generate a point \(({\hat{y}}_1, \cdots , {\hat{y}}_s)\) that is feasible for (P), by solving the following initial feasibility problem:

Problem (IFP) is not naturally decomposable over the scenarios, but it can be solved by JD. When solving (IFP) using JD, the restricted primal master problem (\(\hbox {RPMP}^k\)) must have a solution (according to Assumption 1).

3.3 The tightened subproblems

The relaxed master problem described in Sect. 2 can be tightened with the solutions of previously solved subproblems in JD. The tightened problem, called joint decomposition relaxed master problem, can be written as:

where the index set \(R^k=\{1, \cdots , k \}\), UBD is the current best upper bound for (P), and LBD is the current best lower bound for (P).

Proposition 2

Problem (\(\hbox {JRMP}^{(l)}\)) is a valid lower bounding problem for Problem (P).

Proof

Since it is already known that Problem (\(\hbox {BRMP}^{(l)}\)) is a valid lower bounding problem and UBD and LBD are valid upper and lower bounds, we only need to prove that the cuts from Lagrangian subproblems together with the Benders optimality cuts do not exclude an optimal solution. Let \(obj_\text {P}\) be the optimal objective value of (P), then

where

On the one hand, \(\forall \pi ^i_{\omega }, i \in R^k\),

On the other hand,

where \(v_{\omega } (x_0, y_{\omega })=\min \{c^T_{\omega }x_{\omega }:x_0=H_{\omega }x_{\omega }, \; A_{\omega } x_{\omega } + B_{\omega } y_{\omega } \le 0 \}\). From weak duality, \(\forall j \in T^{(l)}\),

Therefore, \(\forall y_{\omega } \in Y_{\omega }\),

Equations (1)–(2) indicate that the cuts from Lagrangian subproblems together with the Benders optimality cuts do not exclude an optimal solution of (P). \(\square \)

For convenience, we call the cuts from the Lagrangian subproblems, Lagrangian cuts. The Benders cuts and the Lagrangian cuts in (\(\hbox {JRMP}^{(l)}\)) imply that, \(\forall i \in R^k\),

Therefore, from each iteration i we can construct the following valid cut

Since \(x_0=x_{\omega }\) in the original problem, the above cut is also valid if \(x_0\) is replaced with \(x_{\omega }\). Consequently, problems (\(\hbox {LS}_0^k\)), (\(\hbox {LS}_{\omega }^k\)), (\(\hbox {RPMP}^k\)) can be enhanced with the constraint as:

Note that the index set \(I^k\) includes indices for all constant points \(y^{[i]}_\omega \) in Problem (\(\hbox {RPMP}^k\)), and the constant points \(y^{[i]}_\omega \) come from all previously solved PP, FP, LS and JRMP.

3.4 The basic joint decomposition algorithm

Table 1 shows the basic JD algorithm. As described in Sect. 3.1, a JD iteration always include a LD iteration and sometimes a GBD iteration as well. We index JD and LD iterations using k and GBD iterations using l. Whether a GBD iteration is performed at JD iteration k depends on whether LD iteration k improves over LD iteration \(k-1\) substantially, i.e., whether \(obj_{{LS}^{k}} \ge obj_{{LS}^{k-1}} + \epsilon \). In the first JD iteration, subproblem (\(\hbox {PP}_\omega \)) is constructed by fixing \(x_0\) to its initial value, and in any subsequent JD iteration, (\(\hbox {PP}_\omega \)) is constructed by fixing \(x_0\) to the optimal value of \(x_0\) for (RPMP) in the previous JD iteration. Note that the solution of (\(\hbox {LS}_0\)) is only used for constructing a valid lower bound, and the \(x_0\) value in the solution of (\(\hbox {LS}_0\)) is not used for constructing (\(\hbox {PP}_\omega \)). The JD algorithm has the following property.

Proposition 3

The JD algorithm shown in Table 1 cannot perform an infinite number of LD iterations between two GBD iterations.

Proof

The initial point \((x_0^1, y_1^{[1]}, \cdots , y_s^{[1]})\) that are feasible for Problem (P) can lead to a finite upper bound UBD. According to Assumption 1, all Lagrangian subproblems are bounded, so between two GBD iterations, the first LD iteration leads to a finite \(obj_{LS}\), and the subsequent LD iterations increase \(obj_{LS}\) by at least \(\epsilon >0\) (because otherwise a GBD iteration has to be performed). Therefore, in a finite number LD iterations either \(obj_{LS}\) exceeds \(UBD-\epsilon \) and the algorithm terminates with an \(\epsilon \)-optimal solution, or a GBD iteration is performed. This completes the proof. \(\square \)

Remark 4

If an initial feasible point for Problem (P) is not known, the initial feasibility problem (IFP) can be solved to get a feasible point for (P) or verify that Problem (P) is infeasible (when the optimal objective value of Problem (IFP) is positive). Note that it is easy to find a feasible point of Problem (IFP).

In the JD algorithm, we use k to index both a JD iteration and a LD iteration, as every JD iteration includes one LD iteration. We use l (together with ’()’) to index a GBD iteration, and usually \(l < k\) because not every JD iteration includes one GBD iteration. We use i (together with ’[]’) to index the columns generated for constructing Problem (\(\hbox {RPMP}^k\)). Next, we establish the finite convergence property of the JD algorithm.

Proposition 4

If set \(X_{\omega }\) is polyhedral \(\forall \omega \in \{1, \cdots , s\}\), the JD algorithm shown in Table 1 cannot perform an infinite number of GBD iterations.

Proof

In this case, the GBD part of the algorithm reduces to BD, and BD is known to have finite termination property [31, 42]. The finite termination property results from:

-

(a)

The Benders master problem (\(\hbox {BRMP}^{(l)}\)) (and therefore \(\hbox {JRMP}^{(l)}\) as well) requires only a finite number of Benders cuts to equal Problem (P), due to linear duality theory;

-

(b)

A same Benders cut cannot be generated twice before the optimality gap is closed.

\(\square \)

Proposition 5

If \(X_0 \times Y_1 \times \cdots \times Y_s\) is a finite discrete set, the JD algorithm shown in Table 1 cannot perform an infinite number of GBD iterations.

Proof

This result comes from the fact that a point in \(X_0 \times Y_1 \times \cdots \times Y_s\) cannot be generated twice before the optimality gap is closed. For more details readers can see Theorem 2.4 of [32]. \(\square \)

Proposition 6

The JD algorithm shown in Table 1 cannot include an infinite number of GBD iterations at which the Benders primal problem BPP is feasible.

Proof

A similar proposition has been proved in the context of GBD in [32] (as Theorem 2.5). The central idea of the proof can be used here for JD.

Suppose the JD algorithm includes an infinite number of GBD iterations at which the Benders primal problem BPP is feasible. Let superscript (n) index these GBD iterations, \(\{(\eta _0^{(n)},x_0^{(n)},y_{1}^{(n)},\ldots ,y_{s}^{(n)})\}\) be the sequence of optimal solutions of JRMP and \(\{(\mu _\omega ^{(n)},\lambda _\omega ^{(n)})\}\) be the sequence of dual solutions of BPP. Since \(\{\eta _0^{(n)}\}\) is nondecreasing and is bounded from above, so a subsequence of it converges to a finite value, say \(\eta _0^*\). Due to the compactness of \(X_0\), \(Y_1, \cdots , Y_s\), a subsequence of \(\{(x_0^{(n)},y_{1}^{(n)},\ldots ,y_{s}^{(n)})\}\), say, \(\{(x_0^{(n_i)},y_{1}^{(n_i)},\ldots ,y_{s}^{(n_i)})\}\), converges to \((x_0^*,y_{1}^*,\ldots ,y_{s}^*) \in X_0 \times Y_1 \times \cdots \times Y_s\). Solving BPP in this subsequence of GBD iterations can be viewed as point-to-set mappings from points in \(X_0 \times Y_1 \times \cdots \times Y_s\) to the relevant Lagrange multiplier sets. From Lemma 2.1 of [32] and Assumption 2, such a mapping is uniformly bounded in some open neighborhood of the point it maps from. Let such open neighborhood of \((x_0^*,y_{1}^*,\ldots ,y_{s}^*)\) be \(N(x_0^*,y_{1}^*,\ldots ,y_{s}^*)\), then \(\exists t\) such that \(\forall n_i > t\), \((x_0^{(n_i)},y_{1}^{(n_i)},\ldots ,y_{s}^{(n_i)}) \in N(x_0^*,y_{1}^*,\ldots ,y_{s}^*)\), and then the relevant subsequence of Lagrange multipliers is bounded, which must contain a subsequence converging to \(\{\mu _\omega ^\star ,\lambda _\omega ^\star \}\). Therefore, there exists a subsequence of \(\{(\eta _0^{(n)},x_0^{(n)},y_{1}^{(n)},\ldots ,y_{s}^{(n)},\mu _\omega ^{(n)},\lambda _\omega ^{(n)})\}\), say, \(\{(\eta _0^{(m)},x_0^{(m)},y_{1}^{(m)},\ldots ,y_{s}^{(m)},\mu _\omega ^{(m)},\lambda _\omega ^{(m)})\}\), which converges to \(\{(\eta _0^*,x_0^*,y_{1}^*,\ldots ,y_{s}^*,\mu _\omega ^*,\lambda _\omega ^*)\}\).

Consider any GBD iteration \(m>1\) in this convergent subsequence. Let UBD and LBD be the upper and lower bounds after this GBD iteration, then

and that the JD algorithm does not terminate after GBD iteration m implies

therefore

According to how JRMP is constructed,

Equations (3) and (4) imply that

However, when m is sufficiently large, \(y_{\omega }^{(m)}-y_{\omega }^{(m-1)}\) and \(x_0^{(m)} - x_0^{(m-1)}\) are sufficiently close to 0 while \(\mu _{\omega }^{(m-1)}\) and \(\lambda _\omega ^{(m-1)}\) are sufficiently close to limit points \(\mu _\omega ^*\) and \( \lambda _\omega ^*\), so the right-hand-side of Eq. (5) is a positive value (as \(\epsilon >0\)). This contradiction implies that the JD algorithm cannot include an infinite number of GBD iterations at which BPP is feasible. \(\square \)

Theorem 1

With an initial feasible point, the JD algorithm shown in Table 1 terminates in a finite number of iterations with an \(\epsilon \)-optimal solution, if one the following three conditions is satisfied:

-

(a)

Set \(X_{\omega }\) is polyhedral \(\forall \omega \in \{1, \cdots , s\}\).

-

(b)

Set \(X_0 \times Y_1 \times \cdots \times Y_s\) is finite discrete.

-

(c)

There are only a finite number of GBD iterations at which the Benders primal problem BPP is infeasible.

Proof

From Proposition 3, the JD algorithm can only include a finite number of LD iterations. From Propositions 4 and 5, when condition (a) or (b) is satisfied, the JD algorithm can only include a finite number of BD iterations. From Proposition 6, the JD algorithm can only have a finite number of GBD iterations at which the Benders primal problem BPP is feasible, and together with condition (c), it implies that the JD algorithm can only include a finite number of BD iterations. Therefore, if one of the three conditions is satisfied, the JD algorithm can only include a finite number LD and BD iterations before termination.

On the other hand, according to Proposition 2, the JD algorithm never excludes an optimal solution. This together with the termination criterion ensures that the solution returned is \(\epsilon \)-optimal. \(\square \)

Remark 5

Condition (c) in Theorem 1 is actually not a restrictive condition, because we can always “soften” the complicating constraints in Problem (P) (i.e., penalize the violation of these constraints in the objective function) so that Problem (\(\hbox {BPP}_{\omega }^{(l)}\)) is always feasible.

4 Enhancements to joint decomposition

The solution of Problem (\(\hbox {JRMP}^{(l)}\)) is the bottleneck of the JD algorithm, even considering that the problem is solved only when necessary. Problem (\(\hbox {JRMP}^{(l)}\)) is challenging due to two major reasons. One is that the number of complicating variables in Problem (\(\hbox {JRMP}^{(l)}\)) is dependent on the number of scenarios, so the size of Problem (\(\hbox {JRMP}^{(l)}\)) is large (although smaller than the original problem). The other is that the number of constraints in the problem grows with the JD iteration; in other words, Problem (\(\hbox {JRMP}^{(l)}\)) becomes more and more challenging as JD progresses. In this section, we introduce two ways to mitigate the difficulty in solving Problem (\(\hbox {JRMP}^{(l)}\)):

-

1.

To solve a convex relaxation of Problem (\(\hbox {JRMP}^{(l)}\)) before solving Problem (\(\hbox {JRMP}^{(l)}\)). If the solution of the convex relaxation can improve the lower bound, then skip solving Problem (\(\hbox {JRMP}^{(l)}\)).

-

2.

To perform domain reduction iteratively in JD in order to keep reducing the ranges of the complicating variables. This way, the convex relaxation of Problem (\(\hbox {JRMP}^{(l)}\)) is progressively tightened and Problem (\(\hbox {JRMP}^{(l)}\)) itself does not become much harder as the algorithm progresses.

In addition, domain reduction for the complicating variables can make other nonconvex JD subproblems easier, including Problems (\(\hbox {LS}_{\omega }^k\)) and (\(\hbox {PP}_{\omega }^k\)). Domain reduction for the linking variables can also tighten the Lagrangian relaxation gap [41]; in extreme cases, the Lagrangian relaxation gap can diminish and there is no need to solve Problem (\(\hbox {JRMP}^{(l)}\)) in JD to close the optimality gap. Note that we do not perform domain reduction for non-complicating variables, because normally reducing ranges on these variables do not help much to tighten convex relaxations and ease the solution of nonconvex subproblems.

4.1 Convex relaxation and domain reduction

The convex relaxation of Problem (\(\hbox {JRMP}^{(l)}\)) is a valid lower bounding problem for Problem (\(\hbox {JRMP}^{(l)}\)) and consequently for Problem (P) as well. It can be written as:

Here \({\hat{X}}_0\) and \({\hat{Y}}_{\omega }\) denote the convex relaxations of \({X}_0\) and \({Y}_{\omega }\). Let \(obj_{{JRMPR}^{(l)}}\) be the optimal objective of Problem (\(\hbox {JRMPR}^{(l)}\)).

Since Problem (\(\hbox {JRMPR}^{(l)}\)) is also a valid convex relaxation of Problem (P), the solution of Problem (\(\hbox {JRMPR}^{(l)}\)) can be exploited to eliminate the parts of variable ranges that cannot include an optimal solution of Problem (P), using marginal based domain reduction method. This method was first proposed in [22] (and it was called range reduction therein). The following proposition lays the foundation of marginal based domain reduction for complicating variables \(y_\omega \) in JD, which results directly from Theorem 2 in [22].

Proposition 7

Consider the following bounds on \(y_{\omega ,j}\) (\(\forall \omega \in \{1, \cdots , s\}, \;\; \forall j \in \{1, \cdots , n_y\}\)):

whose Lagrange multipliers obtained at the solution of Problem (\(\hbox {JRMPR}^{(l)}\)) are \(u_{\omega ,j}\), \(v_{\omega ,j}\). Let \({\mathbb {J}}^{(l)}_{1,\omega }\) include indices of upper bounds whose \(u_{\omega ,j}\) are nonzero, and \({\mathbb {J}}^{(2)}_{1,\omega }\) include indices of lower bounds whose \(v_{\omega ,j}\) are nonzero, then the following constraints do not exclude an optimal solution of (P):

The following proposition states a similar result for the linking variables \(x_0\):

Proposition 8

Consider the following bounds on \(x_{0,j}\) (\(\forall j \in \{1, \cdots , n_0\}\)):

whose Lagrange multipliers obtained at the solution of Problem (\(\hbox {JRMPR}^{(l)}\)) are \(u_{0,j}\), \(v_{0,j}\). Let \({\mathbb {J}}^{(l)}_{1,0}\) include indices of upper bounds whose \(u_{0,i}\) are nonzero, and \({\mathbb {J}}^{(l)}_{2,0}\) include indices of lower bounds whose \(v_{0,i}\) are nonzero, then the following constraints do not exclude an optimal solution of (P):

According to Propositions 7 and 8, the bounds of nonconvex and linking variables can be updated via the following range reduction calculation:

where \(G^{(l)}=UBD-obj_{{RMPCR}^{(l)}}\).

The effectiveness of marginal based domain reduction relies on how many bounds are active, the magnitude of Lagrange multipliers of active bounds at the solution of \(\hbox {JRMPR}^{(l)}\), and how often \(\hbox {JRMPR}^{(l)}\) is solved. In order to achieve effective domain reduction more consistently, we also introduce optimization based domain reduction in JD. Optimization based domain reduction, or called bound contraction or bound tighening [21, 45], is to maximize or minimize a single variable over a convex relaxation of the feasible set of the original problem. For example, if we are to estimate the upper bound of a linking variable \(x_{0,j}\) at JD iteration k, we can solve the following optimization problem:

The third group of constraints in Problem (\(\hbox {ODRStd}_i^k\)) utilizes the known upper bound of (P) to tighten the convex relaxation, but it cannot be included in Problem (\(\hbox {ODRStd}_i^k\)) when UBD is not available (e.g., before a feasible solution of (P) is known). We now index sets \(X_{0}\), \({\hat{Y}}_{\omega }\) with the JD iteration number k, as these sets may change after the domain reduction calculations.

Problem (\(\hbox {ODRStd}_i^k\)) represents the standard optimization based domain reduction formulation, but it can be further enhanced in the JD algorithm, via the incorporation of valid cuts derived from other JD subproblems. First, we can add the following constraint:

This constraint is redundant in the classical branch-and-bound based global optimization, as LBD is obtained via convex relaxation as well. In JD, LBD is obtained via Lagrangian subproblems and JD relaxed master problems, which may be tigher than convex relaxations of the original problem, so this constraint may enhance Problem (\(\hbox {ODRStd}_i^k\)). Second, we can include constraints (*) (that are derived from Problem (\(\hbox {JRMP}^{(l)}\))). Therefore, we can write the enhanced optimization based domain reduction formulation as:

If we are to estimate an upper bound, then Problem (\(\hbox {ODR}_i^k\)) is a maximization problem; otherwise, Problem (\(\hbox {ODR}_i^k\)) is a minimization problem.

Although Problem (\(\hbox {ODR}_i^k\)) is convex, it can have a very large size because its size grows with the number of scenarios. Therefore, we proposed to solve Problem (\(\hbox {ODR}_i^k\)) for \(x_0\) but not for \(y_\omega \). Actually, we can see in the case study section that optimization based domain reduction is time consuming even when we only solve Problem (\(\hbox {ODR}_i^k\)) for \(x_0\).

4.2 The enhanced joint decomposition method

Figure 2 shows the framework of the JD method that includes solving convex relaxation, Problem (\(\hbox {JRMPR}^{(l)}\)), bound tightening for \(x_0\) and the domain reduction calculations. In this framework, optimization based domain reduction is performed at the beginning of the algorithm and in every LD iteration (right before the solution of nonconvex Lagrangian subproblems). Convex relaxation, Problem (\(\hbox {JRMPR}^{(l)}\)) is solved before solving Problem (\(\hbox {JRMP}^{(l)}\)), and after solving Problem (\(\hbox {JRMPR}^{(l)}\)), marginal based domain reduction is performed. Problem (\(\hbox {JRMP}^{(l)}\)) is not solved if Problem (\(\hbox {JRMPR}^{(l)}\)) can improve the lower bound significantly; this strategy can postpone solving Problem (\(\hbox {JRMP}^{(l)}\)) to a later time, so that the ranges of \(x_0\) can be reduced as much as possible when a Problem (\(\hbox {JRMP}^{(l)}\)) has to be solved. The detailed algorithm for the enhanced JD is shown in Table 2.

Theorem 2

The decomposition algorithm described in Table 2 terminates in a finite number of steps with an \(\epsilon \)-optimal solution of Problem (P), if one the following three conditions is satisfied:

-

(a)

Set \(X_{\omega }\) is polyhedral \(\forall \omega \in \{1, \cdots , s\}\).

-

(b)

Set \(X_0 \times Y_1 \times \cdots \times Y_s\) is finite discrete.

-

(c)

There are only a finite number of GBD iterations at which the Benders primal problem BPP is infeasible.

Proof

This can be proved by showing that, solving Problem (\(\hbox {JRMPR}^{(l)}\)) in every GBD iteration in JD and including domain reduction calculations do not invalidate the finite termination to an \(\epsilon \)-optimal solution.

First, we can show that there cannot be an infinite number of GBD iterations at which Problem (\(\hbox {JRMPR}^{(l)}\)) is solved but Problem (\(\hbox {JRMP}^{(l)}\)) is not solved. Consider a GBD iteration at which Problem (\(\hbox {JRMPR}^{(l)}\)) is solved but Problem (\(\hbox {JRMP}^{(l)}\)) is not solved, then Problem (\(\hbox {JRMPR}^{(l)}\)) is not unbounded (because otherwise Problem (\(\hbox {JRMP}^{(l)}\)) needs to be solved) and the lower bound LBD is finite. The upper bound UBD is also finite (because an initial feasible solution exists). Therefore, it is not possible that LBD can be improved by \(\epsilon >0\) for an infinite number of GBD iterations, so there cannot be an infinite number of GBD iterations at which Problem (\(\hbox {JRMPR}^{(l)}\)) is solved but Problem (\(\hbox {JRMP}^{(l)}\)) is not solved. According to the proof of Theorem 1, JD can only include a finite number of LD iterations, and a finite number of GBD iterations at which Problem (\(\hbox {JRMP}^{(l)}\)) is solved, if one of the three listed conditions are satisfied.

Second, domain reduction reduces the ranges of \(x_{0}\) and \(y_{1},\ldots ,y_{s}\) but does not exclude any optimal solution from the reduced ranges. So the Lagrangian relaxation problems and JD relaxation master problems are still valid lower bounding problems and they cannot cut off any optimal solution. \(\square \)

5 Case studies

The purpose of the case studies is to demonstrate the potential computational advantages of the proposed joint decomposition method for problems exhibiting the decomposable structure of (P0), especially when off-the-shelf solvers cannot effectively exploit the problem structure. We consider two case study problems here, which are both scenario-based two-stage stochastic nonconvex MINLPs arising from integrated design and operation under uncertainty.

5.1 Case study problems

Case Study A—This problem is a variant of the stochastic Haverly pooling problem [3], which was originally developed based on the classical Haverly pooling problem [46, 47]. Figure 3 shows the superstructure of the pooling system to be developed. The circles denote four sources that supply intermediate gasoline products with different sulfur percentages and costs, the ellipse denotes a blender (or called a pool) at which some intermediate products can be blended, and the rectangles denote product sinks at which the final products are blended. The goal of optimization is to minimize the negative profit of the system by determining: (1) Whether the pool and the two product sinks are to be developed in the system; (2) The capacities of the sources and the pipelines. The stochastic pooling model of the problem can be found in “Appendix B”. Two uncertain parameters, percentage of sulfur in source 4 and upper limit on the demand at sink 1, were considered. They were assumed to follow independent normal distributions, with means of 2.5 and 180 and standard deviations of 0.08 and 10, respectively. Other parameters used in the problem can be found in [3]. For this problem, \(x_0\) contains 3 binary variables and 13 continuous variables, \(x_\omega \) contains 7s continuous variables and \(y_\omega \) contains 14s continuous variables, where s stands for the total number of scenarios. In the case study, each uncertain parameter was sampled for 5, 6, 7, 8, 9 and 10 scenario values, via the sampling rule described in [3], and this led to problem instances with 25, 36, 49, 64, 81 and 100 scenarios.

Case Study B—This problem is a variant of the Sarawak Gas Production System (SGPS) design problem [48], and the original form of the design problem appeared in [3]. Figure 4 shows the superstructure of the SGPS system under consideration, where the circles represent gas fields (sources), ellipses represent offshore gas platforms (pools) at which gas flows from different gas fields are mixed and split, rectangles represent onshore liquefied natural gas (LNG) plants (product terminals). Symbols with solid lines represent the part of the system that is already developed, and symbols with dashed lines represent the superstructure of the part of the system that needs to be designed in the problem. The goal of optimization is to maximize expected net present value while satisfying specifications for gas qualities at the LNG plants in the presence of uncertainty. There are two uncertain parameters, i.e., the quality of \(\hbox {CO}_2\) at gas field M1 and upper limit on the demand at LNG plant 2. They were assumed to follow independent normal distributions with means of 3.34% and 2155 Mmol/day and standard deviations of 1% and 172.5 Mmol/day, respectively. In the case study, each uncertain parameter was sampled for 5, 6, 7, 8, 9 and 10 scenario values, via the same sampling rule described in [3], which led to problem instances with 25, 36, 49, 64, 81 and 100 scenarios. The problem was also formulated following the new stochastic pooling model provided in “Appendix B”. In the resulting formulation, \(x_0\) contains 5 binary variables and 29 continuous variables. The 5 binary variables are to determine whether gas fields HL, SE, M3, M1 and JN are to be developed, and the 29 continuous variables are the capacities of other units to be developed. \(x_\omega \) contains 8s variables and \(y_\omega \) contains 85s variables, where s stands for the total number of scenarios.

5.2 Solution approaches and implementation

The case studies were run on a virtual machine allocated with a 3.2GHz CPU. The virtual machine ran Linux operating system (Ubuntu 16.04) with 6 GB of memory. Four solution approaches were compared in the case studies: Monolith, GBD, JD1, JD2. Monolith refers to solving the problem using an off-the-shelf, general-purpose global optimization solver, GBD refers to generalized Benders decomposition, JD1 refers to the basic JD algorithm, and JD2 refers to the enhanced JD algorithm. The case study problems and the subproblems required in GBD, JD1 and JD2 were all modeled on GAMS 24.7.4 [49], but GBD, JD1 and JD2 algorithms were programmed on MATLAB 2014a [50]. Data exchange between MATLAB and GAMS was realized via GAMS GDXMRW facility [51].

The monolith approach solved the problems using three global optimization solvers, i.e., ANTIGONE 1.1 [25], BARON 16.8 [2], SCIP 3.2 [52]. ANTIGONE 1.1 and BARON 16.8 adopted CONOPT 3 [53] as its NLP solver and CPLEX 12.6 [54] as its LP/MILP solver, and SCIP 3.2 used its default solvers for the subproblems. GBD, JD1 and JD2 solved the problems by using CPLEX 12.6 for the LP/MILP subproblems and ANTIGONE 1.1 for the nonconvex NLP/MINLP subproblems.

The construction of Problems (\(\hbox {ODR}_i^k\)) and (\(\hbox {JRMPR}^{(l)}\)) in JD2 requires the convex relaxation of nonconvex sets \(X_0\) and \(Y_\omega \). In the case studies, \(X_0\) was a mixed integer set defined by linear constraints, and it was relaxed into a polyhedral set via continuous relaxation. \(Y_\omega \) was a nonconvex continuous set defined with bilinear functions, and it was relaxed into a polyhedral set via standard McCormick relaxation [14]. The relative and absolute termination tolerances for Case Study A were set to \(10^{-3}\), and for Case study B were set to \(10^{-2}\). GBD, JD1 and JD2 started with all design decisions being 0 (which is a trivial feasible solution for both case study problems).

During the execution of JD1 and JD2, large computing overhead may be incurred due to frequent model generation in GAMS and data exchange between GAMS and MATLAB. So both “Total solver time” and “Total run time” were recorded for the simulation studies, which refer to the total time for the subproblem solvers to solve each individual subproblem and the wall time for the entire solution procedure, respectively. The computing overhead could have been significantly reduced if JD1 and JD2 had been implemented using general-purpose programming languages, such as C++. For the monolith approach, the computing overhead was much less, as seen from the results in the next subsection.

5.3 Results and discussion

Summary of the results for case study A is presented on Tables 3, 4, 5 and 6. Table 3 shows the results for the monolith approach using the three global optimization solvers. It can be seen that ANTIGONE was the fastest among the three solvers, but its solution time increased quickly with the problem size. BARON could also solve small problem instances quickly, but it could not find the desired \(10^{-3}\)-optimal solution (i.e., a solution with a relative gap no larger than \(0.1\%\)) for larger problem instances within the 1 h run time limit. SCIP was the slowest of the three solvers; but unlike BARON, it happened to find the \(10^{-3}\)-optimal solution within 1 h for all problem instances (but could not verify the optimality for large problem instances). Table 4 shows that GBD could not find a nontrivial feasible solution for any problem instance within the 1 h time limit (and the zero objective value is from the initial trivial solution). On the other hand, Tables 4 and 5 show that both JD1 and JD2 could solve all problem instances fairly quickly. JD1 was not as fast as ANTIGONE or BARON for small problem instances, but its solution time increased more slowly than that of ANTIGONE or BARON. This was primarily because the number of JD1 iterations did not vary much with the number of scenarios. The nonconvex relaxed master problem (\(\hbox {JRMP}^{(l)}\)) was the major contributor to JD1 solution time, and sometimes it dominated the solution time (as in the 64 scenario case). In JD2 where the relaxation of (\(\hbox {JRMP}^{(l)}\)) (i.e., (\(\hbox {JRMPR}^{(l)}\))) is solved, the number of (\(\hbox {JRMP}^{(l)}\)) needed to be solved was significantly reduced, and each (\(\hbox {JRMP}^{(l)}\)) was much easier to solve due to extensive domain reduction. The price for reducing the (\(\hbox {JRMP}^{(l)}\)) solution time was the time spent on optimization based domain reduction \(\hbox {ODR}^k\), but the resulting total solution time still decreased for most cases, so JD2 generally outperformed JD1 and it scaled with the number of scenarios in a more consistent way. Note that Tables 4 and 5 do not include the times to solve easy LP and MILP subproblems like Problem (\(\hbox {BPP}_{\omega }^{(l)}\)), (\(\hbox {BFP}_{\omega }^{(l)}\)), (\(\hbox {LS}_0^k\)) and (\(\hbox {JRMPR}^{(l)}\)), because those times were very small compared to the total solution time.

Tables 7, 8, 9 and 10 present the results for case study B. ANTIGONE actually found the desired \(10^{-2}\)-optimal solution, but it cannot reduce the gap to \(1\%\) within the 24 h run time limit; for the 25-scenario instance, it mistakenly terminated before the run time limit without reducing the gap to \(1\%\). BARON had the similar problem; it obtained the \(10^{-2}\)-optimal solution for most problem instances but could not reduce the gap to \(1\%\) for any problem instance. SCIP performed better than ANTIGONE and BARON for case study B, but it could only solve the 25 scenario and 36 scenario problem instances successfully. Table 8 shows that GBD could not return a nontrivial feasible solution within the 24 h time limit. Table 9 shows that JD1 achieved an optimal solution for the 36 and 64 scenario cases, but it could not close the optimality gap within the 24 h time limit for the 25 and 49 scenario cases, and it suffered from insufficient memory for the 81 and 100 scenario cases. Either the insufficient solution time limit or the insufficient memory problem results from the fact that subproblem (\(\hbox {JRMP}^{(l)}\)) is too difficult if its domain is not sufficiently reduced according to the information from previous solved subproblems. Table 7 shows that JD2 solved all problem instances successfully, and its solution time scaled well with the number of scenarios. This is because the total number of JD2 iterations did not vary significantly with the number of scenarios, and the times for \(\hbox {JRMP}^{(l)}\) and domain reduction did not increase greatly with the number of scenarios. It can be seen that for this problem, domain reduction, primarily (\(\hbox {ODR}_i^k\)), dominated the total solution time, so a more efficient way to perform domain reduction could have been able to effectively reduce the solution time. This case study problem indicates that, general-purpose global optimization solvers may not be able to effectively exploit the structure of a complex nonconvex MINLP and solve the problem efficiently enough, and this is when one might consider the use of a tailored decomposition strategy like the one proposed in this paper.

6 Concluding remarks

Two joint decomposition methods, JD1, and JD2, are developed in this paper for efficient global optimization of Problem (P). JD1 is a basic joint decomposition approach, which follows the notions of classical decomposition methods as well as convex relaxation, in order to solve (P) via solving a sequence of relatively easy subproblems. JD2 is an enhanced version of JD1 that integrates several domain reduction techniques. It has been proved that both methods can terminate in a finite number of iterations with an \(\epsilon \)-optimal solution if some mild conditions are satisfied.

We considered two case study problems that come from integrated design and operation under uncertainty, in order to demonstrate the potential computational advantages of joint decomposition. For the first problem which is smaller and easier, both JD1 and JD2 outperformed state-of-the-art global solvers when the number of scenarios was large, and JD2 generally outperformed JD1. For the second problem which is larger and more difficult, JD2 outperformed state-of-the-art global solvers and JD1 (which could not close the gap for most cases). The case study results indicate that, when joint decomposition can effectively exploit the problem structure, the total number of iterations it requires does not increase significantly with the number of scenarios, and consequently the solution time increases slowly with the problem size compared to the general-purpose global optimization solvers. On the other hand, like all decomposition methods, joint decomposition uses existing solvers to solve its subproblems, so its computational performance does rely on the advances in general-purpose local and global optimization solvers.

In this paper, we only consider domain reduction for the linking variables in \(x_0\). In the future, we will also consider domain reduction for some key non-linking complicating variables in \(y_\omega \) that influence the convergence rate the most, and investigate how to find out these key variables. This can effectively tighten the convex relaxation of Problem (\(\hbox {JRMP}^{(l)}\)), and therefore reduce the number of \(\hbox {JRMP}^{(l)}\) to be solved and accelerate the solution of each \(\hbox {JRMP}^{(l)}\). In addition, in the current JD method subproblem (\(\hbox {RPMP}^k\)) is used for generating Lagrangian multipliers (as well as \(x_0\)), but this subproblem may be time-consuming when a large number of JD iterations is required. In the future, we would like to use a subgradient based method (such as a bundle method [55]) to generate Lagrangian multipliers in the JD framework, and assess the performance of this method in comparison to the (\(\hbox {RPMP}^k\)) approach.

References

Floudas, C.A.: Nonlinear and Mixed-Integer Optimization: Fundamentals and Applications. Oxford University Press, Oxford (1995)

Tawarmalani, M., Sahinidis, N.V.: Global optimization of mixed-integer nonlinear programs: a theoretical and computational study. Math. Program. 99, 563–591 (2004)

Li, X., Armagan, E., Tomasgard, A., Barton, P.I.: Stochastic pooling problem for natural gas production network design and operation under uncertainty. AIChE J. 57, 2120–2135 (2011)

Li, X., Tomasgard, A., Barton, P.I.: Nonconvex generalized Benders decomposition for stochastic separable mixed-integer nonlinear programs. J. Optim. Theory Appl. 151, 425–454 (2011)

Sahinidis, N., Grossmann, I.: Convergence properties of generalized Benders decomposition. Comput. Chem. Eng. 15(7), 481–491 (1991)

Berman, O., Ashrafi, N.: Optimization models for reliability of modular software systems. IEEE Trans. Softw. Eng. 19, 1119–1123 (1993)

Floudas, C.A., Pardalos, P.M., Adjiman, C.S., Esposito, W.R., Gumus, Z., Harding, S.T., Klepeis, J.L., Meyer, C.A., Schweiger, C.A.: Handbook of Test Problems for Local and Global Optimization. Kluwer Academic Publishers, Dordrecht (1999)

Adjiman, C.S., Androulakis, I.P., Floudas, C.A.: Global optimization of mixed-integer nonlinear problems. AIChE J. 46(9), 1769–1797 (2000)

Westerlund, T., Pettersson, F., Grossmann, I.E.: Optimization of pump configurations as a MINLP problem. Comput. Chem. Eng. 18(9), 845–858 (1994)

Duran, M., Grossmann, I.: A mixed-integer nonlinear programming algorithm for process systems synthesis. AIChE J. 32, 592–606 (1986)

Bloom, J.: Solving an electricity generating capacity expansion problem by generalized benders’ decomposition. Oper. Res. 31, 84–100 (1983)

Falk, J., Soland, R.: An algorithm for seperable nonconvex programming problems. Manag. Sci. 15, 550–569 (1969)

Soland, R.: An algorithm for seperable nonconvex programming problems II: nonconvex constraints. Manag. Sci. 17, 759–772 (1971)

McCormick, G.P.: Computability of global solutions to factorable nonconvex programs: part I—convex underestimating problems. Math. Program. 10, 147–175 (1976)

Fisher, M.: Lagrangian relaxation methods for solving integer programming problems. Manag. Sci. 27, 1–18 (1981)

Barnhart, C., Johnson, E.: Branch and price: column generation for solving huge integer programs. Oper. Res. 46, 316–329 (1998)

Guignard, M., Kim, S.: Lagrangean decomposition: a model yielding stronger lagrangean bounds. Math. Program. 39, 215–228 (1987)

Held, M., Wolfe, P., Crowder, H.: Validation of subgradient optimization. Math. Program. 6, 62–88 (1974)

Van Roy, T.J.: Cross decomposition for mixed integer programming. Math. Program. 25(1), 46–63 (1983)

Ogbe, E., Li, X.: A new cross decomposition method for stochastic mixed-integer linear programming. Eur. J. Oper. Res. 256, 487–499 (2017)

Zamora, J., Grossmann, I.E.: A branch and contract algorithm for problems with concave univariate, binear and linear fractional terms. J. Glob. Optim. 14, 217–249 (1999)

Ryoo, H.S., Sahinidis, N.V.: A branch-and-reduce approach to global optimization. J. Glob. Optim. 8, 107–138 (1996)

Misener, R., Floudas, C.A.: A framework for globally optimizing mixed-integer signomial programs. J. Optim. Theory Appl. 161, 905–932 (2014)

Karuppiah, R., Grossmann, I.E.: A Lagrangean based branch-and-cut algorithm for global optimization of nonconvex mixed-integer nonlinear programs with decomposable structures. J. Glob. Optim. 41, 163–186 (2008)

Misener, R., Floudas, C.A.: ANTIGONE: algorithms for continuous/integer global optimization of nonlinear equations. J. Glob. Optim. 59, 503–526 (2014)

Sahinidis, N.: Optimization under uncertainty: state-of-the-art and opportunities. Comput. Chem. Eng. 28, 971–983 (2004)

Birge, J., Louveaux, F.: Introduction to Stochastic Programming. Springer, New York (2010)

Van Slyke, R.M., Wets, R.: L-shaped linear programs with applications to optimal control and stochastic programming. SIAM J. Appl. Math. 17, 638–663 (1969)

Chen, Y., Adams, T.A., Barton, P.I.: Optimal design and operation of static energy polygeneration systems. Ind. Eng. Chem. Res. 50(9), 5099–5113 (2011)

Frank, S.M., Rebennack, S.: Optimal design of mixed AC–DC distribution systems for commercial buildings: a nonconvex generalized benders decomposition approach. Eur. J. Oper. Res. 242(3), 710–729 (2015)

Benders, J.: Partitioning procedures for solving mixed-variables programming problems. Numer. Math. 4, 238–252 (1962)

Geoffrion, A.M.: Generalized Benders decomposition. J. Optim. Theory Appl. 10(4), 237–260 (1972)

Karuppiah, R., Grossmann, I.: A Lagrangean based branch-and-cut algorithm for global optimization of nonconvex mixed-integer nonlinear programs with decomposable structures. J. Glob. Optim. 41, 163–186 (2008)

Shim, Y., Fodstad, M., Gabriel, S.A., Tomasgard, A.: A branch-and-bound method for discretely-constrained mathematical programs with equilibrium constraints. Ann. Oper. Res. 210(1), 5–31 (2013)

Kannan, R.: Algorithms, analysis and software for the global optimization of two-stage stochastic programs. Ph.D. thesis, Massachusetts Institute of Technology (2018)

Van Roy, T.J.: A cross decomposition algorithm for capacitated facility location. Oper. Res. 34, 145–163 (1986)

Holmberg, K.: On the convergence of cross decomposition. Math. Program. 47, 269–296 (1990)

Holmberg, K.: Mean value cross decomposition applied to integer programming problems. Eur. J. Oper. Res. 97, 124–138 (1997)

Deep, N., Shahidehpour, S.: Cross decomposition for multi-area optimal reactive power planning. IEEE Trans. Power Syst. 8, 1539–1544 (1993)

Mitra, S., Garcia-Herreros, P., Grossmann, I.E.: A cross-decomposition scheme with integrated primal-dual multi-cuts for two-stage stochastic programming investment planning problems. Math. Program. 157(1), 95–119 (2016)

Carøe, C.C., Schultz, R.: Dual decomposition in stochastic integer programming. Oper. Res. Lett. 24(1–2), 37–45 (1999)

Lasdon, L.: Optimization Theory for Large Systems, 1st edn. Macmillian, Toronto (1970)

Birge, J., Louveaux, F.V.: A multicut algorithm for two-stage stochastic linear programs. Eur. J. Oper. Res. 34(3), 384–392 (1988)

Ogbe, E., Li, X.: Multicolumn-multicut cross decomposition for stochastic mixed-integer linear programming. Comput. Aided Chem. Eng. 37, 737–742 (2015)

Maranas, C., Floudas, C.A.: Global optimization in generalized geometric programming. Comput. Chem. Eng. 21(4), 351–369 (1997)

Haverly, C.: Studies of the behaviour of recursion for the pooling problem. ACM SIGMAP Bull. 25, 29–32 (1978)

Haverly, C.: Behaviour of recursion model—more studies. ACM SIGMAP Bull. 26, 22–28 (1979)

Selot, A., Kuok, L.K., Robinson, M., Mason, T.L., Barton, P.I.: A short-term operational planning model for natural gas production systems. AIChE J. 54(2), 495–515 (2008)

Brook, A., Kendrick, D., Meeraus, A.: Gams, a user’s guide. SIGNUM Newsl. 23(3–4), 10–11 (1988)

MATLAB, version 8.3 (R2014a) The MathWorks Inc., Natick, Massachusetts (2014)

Ferris, M., Dirkse, S., Ramakrishnan, J.: GDXMRW: Interfacing GAMS and MATLAB. http://research.cs.wisc.edu/math-prog/matlab.html (2016). Accessed August

Achterberg, T.: SCIP: solving constraint integer programs. Math. Program. Comput. 1(1), 1–41 (2009)

Drud, A.S.: CONOPT—a large-scale GRG code. ORSA J. Comput. 6, 207–216 (1994)

IBM: IBM ILOG CPLEX OPTIMIZER: high-performance mathematical programming engine (2014)

Kiwiel, K.C.: A proximal-projection bundle method for Lagrangian relaxation, including semidefinite programming. SIAM J. Optim. 17(4), 1015–1034 (2006)

Gatzke, E.P., Tolsma, J.E., Barton, P.I.: Construction of convex relaxations using automated code generation technique. Optim. Eng. 3, 305–326 (2002)

Acknowledgements

The authors are grateful to the discovery grant (RGPIN 418411-13) and the collaborative research and development grant (CRDPJ 485798-15) from Natural Sciences and Engineering Research Council of Canada (NSERC).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Reformulation from (P0) to (P)

From Problem (P0), We first separate the convex part and the nonconvex part of the problem. Specifically, let \(v_{\omega }=(v_{c,\omega },v_{nc,\omega })\), where \(v_{c,\omega }\) includes variables that are only involved in convex functions and restricted by convex constraints/sets, and \(v_{nc,\omega }\) includes the variables that are involved in a nonconvex function and/or restricted by a nonconvex constraint/set. In addition, we introduce duplicate variables \(v_{0,1},\ldots ,v_{0,s}\) for variable \(x_0\), to express the relation among all scenarios using NACs. We then rewrite Problem (P0) as:

In the above formulation, set \(X_0 \subset {\mathbb {R}}^{n_0}\) is either convex or nonconvex, set \(V_{c,\omega } \subset {\mathbb {R}}^{n_c}\) is convex, set \(V_{nc,\omega } \subset {\mathbb {R}}^{n_{nc}}\) is either convex or nonconvex. Functions \(f_{c,\omega }: V_{c,\omega } \rightarrow {\mathbb {R}}\) and \(g_{c,\omega }: V_{c,\omega } \rightarrow {\mathbb {R}}^{m_{c}}\) are convex. Functions \(f_{nc,\omega }: V_{nc,\omega } \rightarrow {\mathbb {R}}\), \(g_{nc,\omega }: V_{nc,\omega } \rightarrow {\mathbb {R}}^{m_{nc}}\), \(f_{0,\omega }\), and \(g_{0,\omega }\) are either convex or nonconvex. Set \({\hat{X}}_0 \in {\mathbb {R}}^{n_0}\) is a convex relaxation of \(X_0\) (and it is same to \(X_0\) if \(X_0\) is convex). The restriction \(z_{0,\omega } \in {\hat{X}}_{0}\) is actually redundant with the presence of NACs; however, it tightens the problem when the NACs are dualized. Note that in order to generate a convex relaxation of \(X_0\), extra variables may be introduced [56], so the dimension of the relaxation may be larger than that of \(X_0\). Here \({\hat{X}}_0\) can be understood as the projection of the relaxation set on the \({\mathbb {R}}^{n_0}\) space. For simplicity of notation, in this paper we always express a convex relaxation (of a set or a function) on the original variable space and do not explicitly show the extra variables needed for constructing the relaxation.

Define new variables \(t_\omega \), \(\alpha _{c,\omega }\), \(\alpha _{nc,\omega }\), \(\beta _{c,\omega }\), \(\beta _{nc,\omega }\), such that Problem (A.1) can be written as:

Define \(x_\omega =(v_{0,\omega }, v_{c,\omega }, t_\omega , \alpha _{c,\omega }, \beta _{c,\omega })\), \(y_\omega =(v_{nc,\omega }, \alpha _{nc,\omega }, \beta _{nc,\omega })\), then the above formulation can be written as the following Problem (P):

where the matrices

and the sets

The “0” and “I” in the matrices represent zero and identity matrices, and their dimensions are conformable to the relevant variables. According to the convexity/nonconvexity of the functions and the sets stated before, set \(X_\omega \) is convex and set \(Y_\omega \) is nonconvex.

Appendix B: The stochastic pooling problem with mixed-integer first-stage decisions

The two-stage stochastic pooling problem from Li et al. [3] is modified here to address continuous design (first-stage) decisions. The nomenclature used in [3] is adopted to describe the model, in which the scenarios are indexed by h (rather than \(\omega \)).

In the modified model, the design decisions on sources, pools, product terminals, denoted by \(y_i^{{\mathrm{S}}}\), \(y_j^{{\mathrm{P}}}\), \(y_k^{{\mathrm{T}}}\), can be continuous, integer, or mixed integer. If \(y_i^S \in \{0,1\}\), then the design decision is to determine whether source i is to be developed, and the related parameter \(Z_{i}^{{\mathrm{UB}}}\) represents the fixed capacity of the source. If \(y_i^S\) is continuous and \(y_i^{{\mathrm{S}}} \in [0,1]\), then it is a capacity design decision, specifically it represents the ratio of source i capacity to the maximum allowed capacity of the source (denoted by \(Z_{i}^{{\mathrm{UB}}}\)). The design decisions on the pipelines among sources, pools, and terminals are all continuous, denoted by \(y_{i,j}^{{\mathrm{SP}}}\), \(y_{i,k}^{{\mathrm{ST}}}\), \(y_{j,j^-}^{{\mathrm{PP}}}\), \(y_{j,k}^{{\mathrm{PT}}} \in [0,1]\). They represents the ratios of the pipeline capacities to the maximum allowed capacities (denoted by \(F^{{\mathrm{SP,UB}}}_{i,j}\), \(F^{{\mathrm{ST,UB}}}_{i,k}\), \(F^{{\mathrm{PP,UB}}}_{j,j^-}\), \(F^{{\mathrm{PT,UB}}}_{j,k}\)).

All design and operational decision variables are nonnegative, and we do not impose other lower bounds on these variables in order to simplify discussion. The new stochastic pooling model consists primarily of three submodels, for the sources, pools, and product terminals, respectively.

1.1 Model for the sources

The following group of constraints (B.1) represents the submodel for the sources. Equations (B.1a–B.1c) are same to Eqs. (12–14) in [3], except that the lower flow bounds are not imposed. Equations (B.1d–B.1f) are developed in place of the topology constraints Eqs. (15–16) (which are invalid for continuous design decisions). Equations (B.1d–B.1e) limit the capacity of a pipeline by the capacity of the source it connects. If \(y_i^{{\mathrm{S}}}=0\), then there cannot exist a pipeline connecting it, in other words, the capacity of a pipeline connecting it has to be zero. Equation (B.1f) requires that the total capacity of all pipelines connecting to a source should be no less than the capacity of the source. This is to ensure enough pipeline capacity to move all materials generated in the source to other parts of the system in real-time.

1.2 Model for the pools

The following group of constraints (B.2) represents the submodel for the pools. Euations (B.2a–B.1e) are same to Eqs. (17–21) in [3], except that the lower flow bounds are not imposed. Equations (B.2f–B.2k) are developed in place of the topology constraints (23–26) in [3]. The interpretation of Eqs. (B.2f–B.2k) is similar to that of Eqs. (B.1d–B.1f) and therefore omitted.

1.3 Model for the product terminals

The following group of constraints (B.3) represents the submodel for the terminals. Equations (B.3a–B.3b) are same to Eq. (27–28) in [3], except that the lower flow bounds and content bounds are not imposed. Again, Eqs. (B.3c–B.3e) are developed in place of the old topology constraints that are invalid for continuous design decisions (i.e., Eqs. (23–26) in [3]).

The modified stochastic pooling model can be stated as:

The objective can be negative net present value, or negative annualized profit, as specified in [3].

Rights and permissions

About this article

Cite this article

Ogbe, E., Li, X. A joint decomposition method for global optimization of multiscenario nonconvex mixed-integer nonlinear programs. J Glob Optim 75, 595–629 (2019). https://doi.org/10.1007/s10898-019-00786-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10898-019-00786-x