Abstract

In this article, we introduce an inertial projection and contraction algorithm by combining inertial type algorithms with the projection and contraction algorithm for solving a variational inequality in a Hilbert space H. In addition, we propose a modified version of our algorithm to find a common element of the set of solutions of a variational inequality and the set of fixed points of a nonexpansive mapping in H. We establish weak convergence theorems for both proposed algorithms. Finally, we present numerical experiments that show the efficiency and advantage of the inertial projection and contraction algorithm.


1 Introduction

Let H be a real Hilbert space with the inner product \(\langle \cdot ,\cdot \rangle \) and let \(C \subseteq H\) be a nonempty closed convex set.

In this article, we are concerned with the classical variational inequality, which is to find a point \(x^*\in C\) such that

$$\begin{aligned} \langle f(x^*),x-x^*\rangle \ge 0,\quad \forall x\in C, \end{aligned}\tag{1}$$

where \(f : H \rightarrow H\) is a mapping. This problem arises in many areas, such as partial differential equations, optimal control, optimization, mathematical programming and other nonlinear problems (see, for example, [1] and the references therein).

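It is well known that if f is L-Lipschitz continuous and \(\eta \)-strongly monotone on C, i.e.,

$$\begin{aligned} \Vert f(x)-f(y)\Vert \le L\Vert x-y\Vert ,\quad \forall x,y\in C, \end{aligned}$$

and

$$\begin{aligned} \langle f(x)-f(y),x-y\rangle \ge \eta \Vert x-y\Vert ^2,\quad \forall x,y\in C, \end{aligned}$$

where \(L>0\) and \(\eta >0\) are the Lipschitz and strong monotonicity constants, respectively, then the variational inequality (1) has a unique solution. Recently, Zhou et al. [2] weakened the Lipschitz continuity assumption to hemicontinuity. However, if f is merely L-Lipschitz continuous and monotone on C, i.e.,

$$\begin{aligned} \langle f(x)-f(y),x-y\rangle \ge 0,\quad \forall x,y\in C, \end{aligned}$$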
but not \(\eta \)-strongly monotone, then the variational inequality (1) may fail to have a solution. We refer the readers to [2] for counterexamples.

Several iterative methods have been proposed and analyzed for solving the variational inequality (1). The simplest one is the following projection method, which can be seen as an extension of the projected gradient method for optimization problems:

$$\begin{aligned} x^{k+1}=P_C(x^k-\tau f(x^k)) \end{aligned}\tag{2}$$

for each \(k\ge 1\), where \(\tau \in (0,\frac{2\eta }{L^2})\) and \(P_C\) denotes the metric (least distance) projection onto C. The projection method converges provided that the mapping f is L-Lipschitz continuous and \(\eta \)-strongly monotone. By using a counterexample, Yao et al. [3] showed that the projection method may diverge if the strong monotonicity assumption is relaxed to plain monotonicity.
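The following Python sketch runs the projection method (2) on a small test problem of our own choosing (it is not taken from this article): an affine map \(f(x)=Mx+q\) with a symmetric positive definite matrix M, which is L-Lipschitz with L the largest eigenvalue of M and \(\eta \)-strongly monotone with \(\eta \) the smallest, over the box \(C=[-10,10]^2\). The names `projection_method`, `M` and `q` are illustrative assumptions.

```python
import numpy as np


def projection_method(f, proj_C, x0, tau, tol=1e-10, max_iter=10_000):
    """Projection method (2): x^{k+1} = P_C(x^k - tau * f(x^k))."""
    x = np.asarray(x0, dtype=float)
    for k in range(1, max_iter + 1):
        x_new = proj_C(x - tau * f(x))
        # Stop once consecutive iterates are numerically indistinguishable.
        if np.linalg.norm(x_new - x) <= tol:
            return x_new, k
        x = x_new
    return x, max_iter


# Illustrative strongly monotone problem (an assumption, not from the article).
M = np.array([[3.0, 1.0], [1.0, 2.0]])  # symmetric positive definite
q = np.array([-1.0, 1.0])


def f(x):
    return M @ x + q


def proj_box(x):
    # Metric projection onto C = [-10, 10]^2 is componentwise clipping.
    return np.clip(x, -10.0, 10.0)


eigs = np.linalg.eigvalsh(M)   # ascending eigenvalues of the symmetric matrix M
eta, L = eigs[0], eigs[-1]     # strong monotonicity and Lipschitz constants
tau = eta / L**2               # lies in the admissible interval (0, 2*eta/L^2)

x_sol, iters = projection_method(f, proj_box, [5.0, -5.0], tau)
```

For this instance the unconstrained zero of f, \(-M^{-1}q=(0.6,-0.8)\), lies in C, so it is the solution of (1).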

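To avoid the strong monotonicity assumption, Korpelevich [4] proposed the extragradient method:

$$\begin{aligned} y^k&=P_C(x^k-\tau f(x^k)),\\ x^{k+1}&=P_C(x^k-\tau f(y^k)) \end{aligned}\tag{3}$$

for each \(k\ge 1\), which converges if f is monotone and L-Lipschitz continuous and \(\tau \in (0,1/L)\).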
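As a hedged illustration, the sketch below applies the extragradient method (3) to the rotation \(f(x_1,x_2)=(x_2,-x_1)\), which is monotone (indeed \(\langle f(x)-f(y),x-y\rangle =0\)) and 1-Lipschitz but not strongly monotone. The test problem, the box \(C=[-10,10]^2\), the step \(\tau =0.5\in (0,1/L)\) and the function names are our own assumptions; the solution of (1) for this instance is the origin.

```python
import numpy as np


def extragradient(f, proj_C, x0, tau, tol=1e-10, max_iter=10_000):
    """Korpelevich's extragradient method (3): two projections onto C per iteration."""
    x = np.asarray(x0, dtype=float)
    for k in range(1, max_iter + 1):
        y = proj_C(x - tau * f(x))        # predictor step
        x_new = proj_C(x - tau * f(y))    # corrector step evaluates f at y
        if np.linalg.norm(x_new - x) <= tol:
            return x_new, k
        x = x_new
    return x, max_iter


def rotation(x):
    # Monotone and 1-Lipschitz, but not strongly monotone.
    return np.array([x[1], -x[0]])


def proj_box(x):
    return np.clip(x, -10.0, 10.0)        # C = [-10, 10]^2


x_eg, iters_eg = extragradient(rotation, proj_box, [1.0, 1.0], tau=0.5)
```

For this rotation, every step of the projection method (2) that does not touch the box multiplies \(\Vert x^k\Vert \) by \(\sqrt{1+\tau ^2}\), so its iterates move away from the origin, whereas the extragradient iterates contract toward it.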

Note that the extragradient method requires two projections onto C in each iteration. Computing the projection onto a closed convex set C amounts to solving a minimum distance problem; if C is a general closed convex set, this may require a prohibitive amount of computation time.

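To our knowledge, there are two kinds of methods to overcome this difficulty. The first one is the subgradient extragradient method developed by Censor et al. [5, 6]:

$$\begin{aligned} y^k&=P_C(x^k-\tau f(x^k)),\\ T_k&=\{w\in H:\langle x^k-\tau f(x^k)-y^k,w-y^k\rangle \le 0\},\\ x^{k+1}&=P_{T_k}(x^k-\tau f(y^k)) \end{aligned}\tag{4}$$

for each \(k\ge 1\). This method replaces the second projection onto C in the extragradient method by a projection onto a specific constructible subgradient half-space \(T_k\), onto which the projection has a closed form. The second one is the projection and contraction method studied by several authors [7,8,9,10]:

$$\begin{aligned} y^k&=P_C(x^k-\tau f(x^k)),\\ x^{k+1}&=x^k-\gamma \beta _k d(x^k,y^k) \end{aligned}\tag{5}$$

for each \(k\ge 1\), where \(\gamma \in (0,2),\)

$$\begin{aligned} d(x^k,y^k)&:=(x^k-y^k)-\tau (f(x^k)-f(y^k)),\\ \varphi (x^k,y^k)&:=\langle x^k-y^k,d(x^k,y^k)\rangle ,\\ \beta _k&:=\frac{\varphi (x^k,y^k)}{\Vert d(x^k,y^k)\Vert ^2}. \end{aligned}$$

The projection and contraction method needs only one projection onto C in each iteration and has a computational advantage over the extragradient and subgradient extragradient methods (see [9]).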
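The sketch below implements the projection and contraction method (5) in its standard form, with \(d(x^k,y^k)=(x^k-y^k)-\tau (f(x^k)-f(y^k))\), \(\varphi (x^k,y^k)=\langle x^k-y^k,d(x^k,y^k)\rangle \) and \(\beta _k=\varphi (x^k,y^k)/\Vert d(x^k,y^k)\Vert ^2\). The rotation test problem \(f(x_1,x_2)=(x_2,-x_1)\) on \(C=[-10,10]^2\) (solution: the origin), \(\tau =0.5\) and \(\gamma =1.5\) are illustrative choices of ours. Note that only one projection onto C is computed per iteration.

```python
import numpy as np


def projection_contraction(f, proj_C, x0, tau, gamma=1.5, tol=1e-10, max_iter=10_000):
    """Projection and contraction method (5); one projection onto C per iteration."""
    x = np.asarray(x0, dtype=float)
    for k in range(1, max_iter + 1):
        y = proj_C(x - tau * f(x))
        d = (x - y) - tau * (f(x) - f(y))       # d(x^k, y^k)
        dd = float(np.dot(d, d))
        if dd == 0.0:
            # With tau < 1/L, d = 0 forces y = x, and then y solves (1).
            return y, k
        beta = float(np.dot(x - y, d)) / dd     # beta_k = phi(x^k, y^k) / ||d||^2
        x_new = x - gamma * beta * d            # contraction step, gamma in (0, 2)
        if np.linalg.norm(x_new - x) <= tol:
            return x_new, k
        x = x_new
    return x, max_iter


def rotation(x):
    return np.array([x[1], -x[0]])              # monotone, 1-Lipschitz


def proj_box(x):
    return np.clip(x, -10.0, 10.0)              # C = [-10, 10]^2


x_pc, iters_pc = projection_contraction(rotation, proj_box, [1.0, 1.0], tau=0.5)
```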

Inertial type algorithms originate from the heavy ball method (an implicit discretization) of a second-order (in time) dynamical system [11, 12], whose main feature is that the next iterate is defined by making use of the previous two iterates. Recently, there has been growing interest in studying inertial type algorithms. Some recent references are inertial forward-backward splitting methods for certain separable nonconvex optimization problems [13], strongly convex problems [14, 15] and inertial dynamics methods [16, 17].

For finding the zeros of a maximally monotone operator, Bot and Csetnek [18] proposed the so-called inertial hybrid proximal-extragradient algorithm, which combines inertial type algorithms and hybrid proximal-extragradient algorithms (see, e.g. [19, 21]) and includes the following algorithm as a special case (see, e.g. [22]):

The algorithm (6) can be viewed as the projection algorithm (2) with inertial effects and also as a bounded perturbation of the projection algorithm (2) (see, e.g., [20]). The authors showed that the algorithm (6) converges weakly to a solution of the variational inequality (1) provided that \((\alpha _k)\) is nondecreasing with \(\alpha _1 = 0\), \(0\le \alpha _k\le \alpha \), and \(0<\underline{c}\le c_k\le 2\gamma \sigma ^2\), for \(\alpha ,\sigma \ge 0\) such that \(\alpha (5+4\sigma ^2)+\sigma ^2<1\).

Very recently, Dong et al. [22] introduced the following algorithm:

for each \(k\ge 1\), where \(\{\alpha _k\}\) is nondecreasing with \(\alpha _1=0\) and \(0\le \alpha _k\le \alpha <1\) for each \(k\ge 1\) and \(\lambda ,\sigma ,\delta >0\) are such that

and

where L is the Lipschitz constant of f.

In this paper, we study an inertial projection and contraction algorithm and analyze its convergence in a Hilbert space H. We also present a modified inertial projection and contraction algorithm for approximating a common element of the set of solutions of a variational inequality and the set of fixed points of a nonexpansive mapping in H. Finally, we present numerical examples to illustrate the efficiency and advantage of the inertial projection and contraction algorithm.

2 Preliminaries

In the sequel, we use the following notation:

(1) \(\rightharpoonup \) for weak convergence and \(\rightarrow \) for strong convergence;

(2) \(\omega _w(x^k) = \{x : \exists x^{k_j}\rightharpoonup x\}\) denotes the weak \(\omega \)-limit set of \(\{x^k\}\).

We need some results and tools in a real Hilbert space H which are listed as lemmas below.

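Recall that, in a Hilbert space H,

$$\begin{aligned} \Vert \lambda x+(1-\lambda )y\Vert ^2=\lambda \Vert x\Vert ^2+(1-\lambda )\Vert y\Vert ^2-\lambda (1-\lambda )\Vert x-y\Vert ^2 \end{aligned}\tag{8}$$

for all \(x, y\in H\) and \(\lambda \in {\mathbb {R}}\) (see Corollary 2.14 in [23]).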
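Identity (8) holds for every real \(\lambda \), not only for \(\lambda \in [0,1]\). In inertial methods it is typically applied with \(\lambda =1+\alpha _k\) to the extrapolated point \(w=x^k+\alpha _k(x^k-x^{k-1})=(1+\alpha _k)x^k-\alpha _kx^{k-1}\), which gives, for any u,

$$\begin{aligned} \Vert w-u\Vert ^2=(1+\alpha _k)\Vert x^k-u\Vert ^2-\alpha _k\Vert x^{k-1}-u\Vert ^2+\alpha _k(1+\alpha _k)\Vert x^k-x^{k-1}\Vert ^2. \end{aligned}$$

The Python snippet below is a quick numerical sanity check of both forms on random vectors; the random data and function names are illustrative.

```python
import numpy as np


def identity_gap(x, y, lam):
    """Left side minus right side of identity (8); ~0 for every real lam."""
    lhs = np.linalg.norm(lam * x + (1 - lam) * y) ** 2
    rhs = (lam * np.linalg.norm(x) ** 2 + (1 - lam) * np.linalg.norm(y) ** 2
           - lam * (1 - lam) * np.linalg.norm(x - y) ** 2)
    return lhs - rhs


def inertial_gap(x_k, x_prev, u, alpha):
    """Identity (8) with lam = 1 + alpha, applied to w = x_k + alpha (x_k - x_prev)."""
    w = x_k + alpha * (x_k - x_prev)
    lhs = np.linalg.norm(w - u) ** 2
    rhs = ((1 + alpha) * np.linalg.norm(x_k - u) ** 2
           - alpha * np.linalg.norm(x_prev - u) ** 2
           + alpha * (1 + alpha) * np.linalg.norm(x_k - x_prev) ** 2)
    return lhs - rhs


rng = np.random.default_rng(0)
x, y, u = rng.standard_normal((3, 6))    # three random vectors in R^6
gaps = [identity_gap(x, y, lam) for lam in (-0.7, 0.3, 1.4)]
inertial = inertial_gap(x, y, u, alpha=0.4)
```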

Definition 2.1

Let \(B : H\rightarrow 2^H\) be a point-to-set operator defined on a real Hilbert space H. B is called a maximal monotone operator if B is monotone, i.e.,

$$\begin{aligned} \langle u-v,x-y\rangle \ge 0 \end{aligned}$$

for all \(x,y\in H\), \(u\in B(x)\) and \(v\in B(y)\), and the graph G(B) of B,

$$\begin{aligned} G(B):=\{(x,u)\in H\times H: u\in B(x)\}, \end{aligned}$$

is not properly contained in the graph of any other monotone operator.

It is clear that a monotone mapping B is maximal if and only if, for any \((x, u) \in H \times H\), if \(\langle u-v,x-y\rangle \ge 0\) for all \((y, v) \in G(B)\), then \( u\in B(x)\).

Lemma 2.1

(Goebel and Kirk [24]) Let C be a closed convex subset of a real Hilbert space H and \(T : C \rightarrow C\) be a nonexpansive mapping such that \(Fix(T)\ne \emptyset \). If a sequence \(\{x^k\}\) in C is such that \(x^k\rightharpoonup z\) and \(x^k - T x^k \rightarrow 0\), then \(z = T z\).

Lemma 2.2

Let K be a nonempty closed convex subset of a real Hilbert space H and \(P_K\) be the (metric or nearest point) projection from H onto K (i.e., for \(x\in H\), \(P_Kx\) is the only point in K such that \(\Vert x-P_Kx\Vert =\inf \{\Vert x-z\Vert : z \in K\}\)). Then, for any \(x \in H\) and \(z\in K\), \(z=P_Kx\) if and only if there holds the relation:

$$\begin{aligned} \langle x-z,y-z\rangle \le 0 \end{aligned}$$

for all \(y\in K\).
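Lemma 2.2 gives a practical certificate for a computed projection. The Python sketch below uses the closed-form projections onto a closed ball and onto a box (the two kinds of sets C that appear in the numerical experiments) and checks that \(\langle x-P_Kx,y-P_Kx\rangle \le 0\) on random sample points \(y\in K\), while the ball's center, which is not \(P_Kx\) for the chosen x, violates the inequality at some sample points. The seed, sample sizes and function names are illustrative assumptions.

```python
import numpy as np


def proj_ball(x, center, radius):
    """Metric projection onto the closed ball {z : ||z - center|| <= radius}."""
    v = x - center
    n = np.linalg.norm(v)
    return x.copy() if n <= radius else center + (radius / n) * v


def proj_box(x, lo, hi):
    """Metric projection onto the box {z : lo <= z <= hi}: componentwise clipping."""
    return np.clip(x, lo, hi)


def max_gap(x, z, samples):
    """max over sample points y of <x - z, y - z>; by Lemma 2.2 this is <= 0 if z = P_K x."""
    return max(float(np.dot(x - z, y - z)) for y in samples)


rng = np.random.default_rng(1)
dim = 4
center, radius = np.ones(dim), 2.0
x = np.array([5.0, -3.0, 0.5, 4.0])

# Random sample points inside the ball and inside the box [-1, 1]^dim.
dirs = rng.standard_normal((200, dim))
dirs /= np.linalg.norm(dirs, axis=1, keepdims=True)
ball_pts = center + radius * rng.uniform(0.0, 1.0, size=(200, 1)) * dirs
box_pts = rng.uniform(-1.0, 1.0, size=(200, dim))

gap_ball = max_gap(x, proj_ball(x, center, radius), ball_pts)
gap_box = max_gap(x, proj_box(x, -1.0, 1.0), box_pts)
gap_wrong = max_gap(x, center, ball_pts)   # the center is not P_K x for this x
```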

Lemma 2.3

(see [11]) Let \(\{\varphi _k\}\), \(\{\delta _k\}\) and \(\{\alpha _k\}\) be sequences in \([0, +\infty )\) such that, for each \(k\ge 1\),

$$\begin{aligned} \varphi _{k+1}\le \varphi _k+\alpha _k(\varphi _k-\varphi _{k-1})+\delta _k,\quad \sum _{k=1}^{\infty }\delta _k<+\infty , \end{aligned}$$

and there exists a real number \(\alpha \) with \( 0\le \alpha _k\le \alpha <1\) for all \(k\ge 1\). Then the following hold:

(i) \(\sum _{k=1}^{\infty }[\varphi _k-\varphi _{k-1}]_+<+\infty \), where \([t]_+= \max \{t,0\};\)

(ii) there exists \(\varphi ^*\in [0, +\infty )\) such that \(\lim _{k\rightarrow +\infty }\varphi _k=\varphi ^*.\)

Lemma 2.4

(see [23], Lemma 2.39) Let C be a nonempty subset of H and \(\{x^k\}\) be a sequence in H such that the following two conditions hold:

(i) for all \(x\in C\), \(\lim _{k\rightarrow \infty }\Vert x^k-x\Vert \) exists;

(ii) every sequential weak cluster point of \(\{x^k\}\) is in C.

Then the sequence \(\{x^k\}\) converges weakly to a point in C.

3 The inertial projection and contraction algorithm

In this section, we present the inertial projection and contraction algorithm and analyze its convergence.

For a mapping \(f : H\rightarrow H\), we introduce the following algorithm:

Algorithm 3.1

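Choose initial guesses \(x^0,x^1\in H\) arbitrarily. Calculate the \((k+1)\)th iterate \(x^{k+1}\) via the formula:

$$\begin{aligned} w^k&=x^k+\alpha _k(x^k-x^{k-1}),\\ y^k&=P_C(w^k-\tau f(w^k)),\\ x^{k+1}&=w^k-\gamma \beta _k d(w^k,y^k) \end{aligned}\tag{9}$$

for each \(k\ge 1\), where \(\gamma \in (0,2)\), \(\tau >0\) and

$$\begin{aligned} \beta _k:=\frac{\varphi (w^k,y^k)}{\Vert d(w^k,y^k)\Vert ^2}, \end{aligned}$$

where

$$\begin{aligned} \varphi (w^k,y^k):=\langle w^k-y^k,d(w^k,y^k)\rangle ,\quad d(w^k,y^k):=(w^k-y^k)-\tau (f(w^k)-f(y^k)), \end{aligned}\tag{10}$$

and \(\{\alpha _k\}\) is nondecreasing with \(\alpha _1=0,\) \(0\le \alpha _k\le \alpha <1,\) and \(\sigma ,\delta >0\) are such that

If \(y^k=w^k\) or \(d(w^k,y^k)=0\), then \(x^{k+1}\) is a solution of the variational inequality (1) (see Lemma 3.1 below) and the iterative process stops; otherwise, we set \(k:=k + 1\) and go on to (9) to evaluate the next iterate \(x^{k+2}\).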
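A minimal Python sketch of Algorithm 3.1, assuming the iteration \(w^k=x^k+\alpha _k(x^k-x^{k-1})\), \(y^k=P_C(w^k-\tau f(w^k))\), \(x^{k+1}=w^k-\gamma \beta _k d(w^k,y^k)\) with \(\beta _k=\varphi (w^k,y^k)/\Vert d(w^k,y^k)\Vert ^2\). The parameter condition (11) is not reproduced here, so the inertial schedule \(\alpha _1=0\), \(\alpha _k=0.1\) for \(k\ge 2\) (nondecreasing and bounded by some \(\alpha <1\)) is a hypothetical choice, as is the test problem: the rotation \(f(x_1,x_2)=(x_2,-x_1)\) on \(C=[-10,10]^2\), whose solution is the origin, with \(\tau =0.5<1/L\) and \(\gamma =1.5\). As in Lemma 3.1, the loop stops when \(d(w^k,y^k)=0\); otherwise it stops when consecutive iterates are within a tolerance.

```python
import numpy as np


def inertial_pc(f, proj_C, x0, x1, tau, alphas, gamma=1.5, tol=1e-10, max_iter=10_000):
    """Sketch of the inertial projection and contraction iteration (Algorithm 3.1).

    alphas(k) returns the inertial parameter alpha_k (nondecreasing, alpha_1 = 0).
    """
    x_prev = np.asarray(x0, dtype=float)
    x = np.asarray(x1, dtype=float)
    for k in range(1, max_iter + 1):
        w = x + alphas(k) * (x - x_prev)          # inertial extrapolation
        y = proj_C(w - tau * f(w))                # the only projection onto C
        d = (w - y) - tau * (f(w) - f(y))         # d(w^k, y^k)
        dd = float(np.dot(d, d))
        if dd == 0.0:                             # Lemma 3.1: x^{k+1} = y^k solves (1)
            return y, k
        beta = float(np.dot(w - y, d)) / dd       # beta_k = phi(w^k, y^k) / ||d||^2
        x_next = w - gamma * beta * d
        if np.linalg.norm(x_next - x) <= tol:
            return x_next, k
        x_prev, x = x, x_next
    return x, max_iter


def rotation(x):
    return np.array([x[1], -x[0]])       # monotone, 1-Lipschitz


def proj_box(x):
    return np.clip(x, -10.0, 10.0)       # C = [-10, 10]^2


def alpha_schedule(k):
    return 0.0 if k == 1 else 0.1        # hypothetical: alpha_1 = 0, then constant


x_ipc, iters_ipc = inertial_pc(rotation, proj_box, [1.0, 1.0], [1.0, 1.0], tau=0.5,
                               alphas=alpha_schedule)
```

The inertial parameter cannot be chosen arbitrarily in \([0,1)\): for this particular linear example one can check that a constant \(\alpha _k=0.3\) gives the unconstrained iteration a characteristic root of modulus greater than one.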

To discuss the convergence of the algorithm (9), we assume the following conditions.

Condition 3.1

The solution set of (1), denoted by SOL(C, f), is nonempty.

Condition 3.2

The mapping f is monotone on H.

Condition 3.3

The mapping f is Lipschitz continuous on H with the Lipschitz constant \(L>0\).

Lemma 3.1

Assume \(0<\tau < 1/L.\) If \(y^k=w^k\) or \(d(w^k,y^k)=0\) in (9), then \(x^{k+1}\in SOL(C,f).\)

Proof

From Conditions 3.2 and 3.3, it follows that

Similarly, we can show

So, \(d(w^k,y^k)=0\) if and only if \(y^k=w^k\). From (9), we have

When \(d(w^k,y^k)=0\), by (9) and (10), one has \(x^{k+1}=y^k\) and

which with Lemma 2.2 yields \(x^{k+1}\in SOL(C,f)\). This completes the proof. \(\square \)

Remark 3.1

From Lemma 3.1, we see that if the algorithm (9) terminates after finitely many iterations, then the last iterate is a solution of the variational inequality (1). So, in the rest of this section, we assume that the algorithm (9) does not terminate after finitely many iterations and hence generates an infinite sequence \(\{x^k\}\).

The following lemma is crucial for the proof of our convergence theorem.

Lemma 3.2

Let \(\{x^k\}\) be the sequence generated by (9) and let \(0<\tau < 1/L.\) Assume \(d(w^k,y^k)\ne 0.\) If \(u \in SOL(C,f )\), then, under Conditions 3.1, 3.2 and 3.3, we have the following:

(i) $$\begin{aligned} \Vert x^{k+1}-u\Vert ^2\le \,\Vert w^k-u\Vert ^2-\frac{2-\gamma }{\gamma }\Vert x^{k+1}-w^k\Vert ^2; \end{aligned}\tag{12}$$

(ii) $$\begin{aligned} \Vert w^k-y^{k}\Vert ^2\le \frac{1+\tau ^2L^2}{[(1-\tau L)\gamma ]^2}\Vert x^{k+1}-w^k\Vert ^2. \end{aligned}\tag{13}$$

Proof

(i) From the Cauchy-Schwarz inequality and Condition 3.3, it follows

Using Condition 3.2, we have

Combining (14) and (15), we obtain


By the definition of \(x^{k+1}\), we have

It follows that

By the definition of \(y^k\) and Lemma 2.2, we have

From Condition 3.2, it follows that

Since \(u \in SOL(C,f )\) and \(y^k\in C,\) it follows from (1) that

Combining (18) and (22), we obtain

Substituting (23) into (17) and using \(\beta _k=\frac{\varphi (w^k,y^k)}{\Vert d(w^k,y^k)\Vert ^2}\), we have

Again, using the definition of \(x^{k+1}\), we have

Combining (24) and (25), we obtain (12).

(ii) From (25) and (16), it follows that

which with (14) yields

This completes the proof. \(\square \)

Theorem 3.1

Assume that Conditions 3.1, 3.2 and 3.3 hold and let \(0<\tau < \frac{1}{L}.\) Then the sequence \(\{x^k\}\) generated by (9) converges weakly to a solution of the variational inequality (1).

Proof

Fix \(u \in SOL(C,f )\). Applying (8), we have

Hence, from (12), it follows that

We also obtain

where \(\rho _k:=\frac{2}{2\alpha _k+\delta \gamma }.\) Combining (27) and (28), we have

where

since \(\alpha _k\rho _k<1\) and \(\gamma \in (0,2).\) Again, taking into account the choice of \(\rho _k\), we have

and, from (30), it follows that

This completes the proof. \(\square \)

In the following, we apply some techniques from [12, 25] adapted to our problems. Define the sequences \(\{\varphi _k\}\) and \(\{\xi _k\}\) by

for all \(k\ge 1\), respectively. Using the monotonicity of \(\{\alpha _k\}\) and the fact that \(\varphi _k \ge 0\) for all \(k \in {\mathbb {N}},\) we have

Employing (29), we have

Now, we claim that

Indeed, by the choice of \(\rho _k\), we have

By using (30), we have

where the last inequality follows by using the upper bound for \(\gamma \) in (11). Hence the claim in (33) is true.

Thus it follows from (32) and (33) that

The sequence \(\{\mu _k\}\) is nonincreasing and the bound for \(\{\alpha _k\}\) delivers

It follows that

where we notice that \(\xi _1=\varphi _1\ge 0\) (due to the relation \(\alpha _1=0\)). Combining (34) and (35), we have

which shows that

Thus we have \(\lim _{k\rightarrow \infty }\Vert x^{k+1}-x^k\Vert =0.\) By (9), we have

So, we have \(\lim _{k\rightarrow \infty }\Vert x^{k+1}-w^k\Vert =0.\) From (13), it follows that

Next, we prove the weak convergence of \(\{x^k\}\) by using Opial's result given in Lemma 2.4. For arbitrary \(u\in SOL(C,f),\) by (29), (31), (36) and Lemma 2.3, we derive that \(\lim _{k\rightarrow \infty }\Vert x^{k}-u\Vert \) exists (taking into account also that \(\alpha _k\rho _k<1\) in (29)). Hence \(\{x^k\}\) is bounded.

Now, we only need to show \(\omega _w(x^k)\subseteq SOL(C,f).\) Due to the boundedness of \(\{x^k\}\), it has at least one weak accumulation point. Let \({\hat{x}}\in \omega _w(x^k)\). Then there exists a subsequence \(\{x^{k_i}\}\) of \(\{x^k\}\) which converges weakly to \({\hat{x}}\). Since \(\Vert w^k-x^k\Vert =\alpha _k\Vert x^k-x^{k-1}\Vert \le \Vert x^k-x^{k-1}\Vert \rightarrow 0\) and \(\Vert w^k-y^k\Vert \rightarrow 0\), the subsequences \(\{w^{k_i}\}\) and \(\{y^{k_i}\}\) also converge weakly to \({\hat{x}}.\)

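Finally, we show that \({\hat{x}}\) is a solution of the variational inequality (1). Let

$$\begin{aligned} A(v):={\left\{ \begin{array}{ll} f(v)+N_C(v), & v\in C,\\ \emptyset , & v\notin C, \end{array}\right. } \end{aligned}\tag{38}$$

where \(N_C(v)\) is the normal cone of C at \(v\in C\), i.e.,

$$\begin{aligned} N_C(v):=\{w\in H:\langle w,v-y\rangle \ge 0,\ \forall y\in C\}. \end{aligned}$$

It is known that A is a maximal monotone operator and \(A^{-1}(0) = SOL(C,f)\). If \((v,w) \in G(A)\), then we have \(w-f(v) \in N_C(v)\) since \(w\in A(v) = f(v) + N_C(v)\). Thus it follows that

$$\begin{aligned} \langle w-f(v),v-y\rangle \ge 0 \end{aligned}$$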
for all \(y\in C\). Since \(y^{k_i}\in C\), we have

On the other hand, by the definition of \(y^k\) and Lemma 2.2, it follows that

and, consequently,

Hence we have

which implies

Taking the limit as \(i\rightarrow \infty \) in the above inequality, we obtain

Since A is a maximal monotone operator, it follows that \({\hat{x}}\in A^{-1}(0) = SOL(C,f)\). This completes the proof.\(\square \)

Remark 3.2

(1) \(\gamma \in (0,2)\) is a relaxation factor in Algorithm 3.1 and in the projection and contraction algorithm (5). In the proofs of their main results on the projection and contraction algorithm (5), Cai et al. [10] explained why a suitable relaxation factor \(\gamma \in [1, 2)\) can yield faster convergence. However, our convergence analysis of Algorithm 3.1 does not identify such a suitable relaxation factor \(\gamma \).

(2) Moudafi [26] posed the open problem of investigating, theoretically as well as numerically, the best choices of the inertial parameter \(\alpha _k\) for accelerating convergence. Since then, there has been little progress, except for some special problems. Beck and Teboulle [27] introduced the well-known FISTA, an inertial version of ISTA, for solving linear inverse problems. They proved that FISTA has a global convergence rate of \(O(1/k^2)\) in terms of function values, while the global rate of convergence of ISTA is \(O(1/k)\). The inertial parameter \(\alpha _k\) in FISTA is chosen as

$$\begin{aligned} \alpha _k=\frac{t_k-1}{t_{k+1}}, \end{aligned}$$

where \(t_1=1\) and

$$\begin{aligned} t_{k+1}=\frac{1+\sqrt{1+4t_k^2}}{2}. \end{aligned}$$

Chambolle and Dossal [28] took

$$\begin{aligned} t_k=\frac{k+a-1}{a}, \end{aligned}\tag{39}$$

where \(a>2\), and showed that with \(t_k\) chosen as in (39) FISTA has a better property, namely the convergence of the sequence of iterates.
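The two parameter rules in Remark 3.2(2) can be compared directly. The Python sketch below (function names are illustrative) computes \(\alpha _k=(t_k-1)/t_{k+1}\) for the Beck and Teboulle recursion and for the Chambolle and Dossal choice (39); for the latter the ratio simplifies to \(\alpha _k=(k-1)/(k+a)\). Both sequences start at \(\alpha _1=0\), are nondecreasing and tend to 1, whereas Algorithm 3.1 assumes the uniform bound \(\alpha _k\le \alpha <1\).

```python
import math


def fista_alphas(n):
    """alpha_k = (t_k - 1) / t_{k+1}, k = 1..n, with t_1 = 1 and
    t_{k+1} = (1 + sqrt(1 + 4 t_k^2)) / 2 (Beck and Teboulle)."""
    t = [1.0]
    for _ in range(n):
        t.append((1.0 + math.sqrt(1.0 + 4.0 * t[-1] ** 2)) / 2.0)
    # t[0] holds t_1, so t[k - 1] is t_k and t[k] is t_{k+1}.
    return [(t[k - 1] - 1.0) / t[k] for k in range(1, n + 1)]


def chambolle_dossal_alphas(n, a=3.0):
    """alpha_k = (t_k - 1) / t_{k+1} with t_k = (k + a - 1) / a and a > 2, as in (39)."""
    if a <= 2:
        raise ValueError("the Chambolle-Dossal rule requires a > 2")

    def t(k):
        return (k + a - 1.0) / a

    return [(t(k) - 1.0) / t(k + 1) for k in range(1, n + 1)]


fista = fista_alphas(50)
cd = chambolle_dossal_alphas(50, a=3.0)
```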

4 The modified projection and contraction algorithm and its convergence analysis

Let \(S:H\rightarrow H\) be a nonexpansive mapping and denote by Fix(S) its fixed point set, i.e.,

$$\begin{aligned} Fix(S):=\{x\in H: Sx=x\}. \end{aligned}$$
Next, we present a modified projection and contraction algorithm to find a common element of the set of solutions of the variational inequality and the set of fixed points of the nonexpansive mapping S as follows:

Algorithm 4.1

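Choose initial guesses \(x^0,x^1\in H\) arbitrarily. Calculate the \((k+1)\)th iterate \(x^{k+1}\) via the formula:

$$\begin{aligned} w^k&=x^k+\alpha _k(x^k-x^{k-1}),\\ y^k&=P_C(w^k-\tau f(w^k)),\\ x^{k+1}&=(1-\mu _k)w^k+\mu _kS(w^k-\gamma \beta _k d(w^k,y^k)) \end{aligned}\tag{40}$$

for each \(k\ge 1\), where \(\gamma \in (0,2),\) \(\tau >0\) and

$$\begin{aligned} \beta _k:=\frac{\varphi (w^k,y^k)}{\Vert d(w^k,y^k)\Vert ^2}, \end{aligned}$$

where

$$\begin{aligned} \varphi (w^k,y^k):=\langle w^k-y^k,d(w^k,y^k)\rangle ,\quad d(w^k,y^k):=(w^k-y^k)-\tau (f(w^k)-f(y^k)), \end{aligned}\tag{41}$$

and \(\{\alpha _k\}\) is nondecreasing with \(\alpha _1=0,\) \(0\le \alpha _k\le \alpha <1,\) and \(\sigma ,\delta >0\) are such that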

Now, we assume the following condition:

Condition 4.1

\(Fix(S)\cap SOL(C,f)\ne \emptyset \).
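A minimal Python sketch of Algorithm 4.1, assuming the update \(x^{k+1}=(1-\mu _k)w^k+\mu _kS(w^k-\gamma \beta _k d(w^k,y^k))\) with \(w^k\), \(y^k\), \(\beta _k\) and \(d(w^k,y^k)\) formed as in Algorithm 3.1. Everything else is an illustrative assumption: f is the rotation \((x_2,-x_1)\) on \(C=[-10,10]^2\) (so SOL(C, f) is the origin), the nonexpansive mapping S is the metric projection onto the closed ball of radius 4 centered at (1, 0), so Fix(S) is that ball and Condition 4.1 holds, \(\mu _k=0.5\), and \(\alpha _1=0\), \(\alpha _k=0.1\) afterwards. When \(d(w^k,y^k)=0\) the sketch sets \(\beta _k=0\), so that the intermediate point equals \(w^k\).

```python
import numpy as np

S_CENTER = np.array([1.0, 0.0])   # hypothetical ball defining S; it contains the origin
S_RADIUS = 4.0


def modified_inertial_pc(f, proj_C, S, x0, x1, tau, alphas, mus, gamma=1.5,
                         tol=1e-10, max_iter=10_000):
    """Sketch of Algorithm 4.1: inertial PC step followed by a relaxed step with S."""
    x_prev = np.asarray(x0, dtype=float)
    x = np.asarray(x1, dtype=float)
    for k in range(1, max_iter + 1):
        w = x + alphas(k) * (x - x_prev)               # inertial extrapolation
        y = proj_C(w - tau * f(w))
        d = (w - y) - tau * (f(w) - f(y))
        dd = float(np.dot(d, d))
        beta = float(np.dot(w - y, d)) / dd if dd > 0.0 else 0.0
        t = w - gamma * beta * d                       # PC step; equals w when d = 0
        x_next = (1.0 - mus(k)) * w + mus(k) * S(t)    # relaxed step with S
        if np.linalg.norm(x_next - x) <= tol:
            return x_next, k
        x_prev, x = x, x_next
    return x, max_iter


def rotation(x):
    return np.array([x[1], -x[0]])


def proj_box(x):
    return np.clip(x, -10.0, 10.0)


def proj_S_ball(x):
    # Metric projection onto the ball: nonexpansive, and Fix(S) is the ball itself.
    v = x - S_CENTER
    n = np.linalg.norm(v)
    return x if n <= S_RADIUS else S_CENTER + (S_RADIUS / n) * v


x_mod, iters_mod = modified_inertial_pc(
    rotation, proj_box, proj_S_ball, [1.0, 1.0], [1.0, 1.0], tau=0.5,
    alphas=lambda k: 0.0 if k == 1 else 0.1, mus=lambda k: 0.5)
```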

Set \(t^k:=w^k-\gamma \beta _k d(w^k,y^k)\) for each \(k\ge 1\). Then we have

$$\begin{aligned} x^{k+1}=(1-\mu _k)w^k+\mu _kSt^k. \end{aligned}\tag{42}$$
Remark 4.1

From Lemma 3.1, if \(d(w^k,y^k)=0\) in (40), then \(y^k=w^k.\) Using the definition of \(t^k\) and (41), we have \(w^k=t^k\) when \(d(w^k,y^k)=0\).

Following the lines of the proof of Lemma 3.2, we obtain the following lemma:

Lemma 4.1

Let \(\{x^k\}\) be the sequence generated by (40) and let \(0<\tau < 1/L.\) Assume \(d(w^k,y^k)\ne 0.\) If \(u \in SOL(C,f )\), then, under Conditions 3.2, 3.3 and 4.1, we have the following:

(i) $$\begin{aligned} \Vert t^{k}-u\Vert ^2\le \Vert w^k-u\Vert ^2-\frac{2-\gamma }{\gamma }\Vert w^k-t^k\Vert ^2; \end{aligned}\tag{43}$$

(ii) $$\begin{aligned} \Vert w^k-y^{k}\Vert ^2\le \frac{1+\tau ^2L^2}{[(1-\tau L)\gamma ]^2}\Vert w^k-t^k\Vert ^2. \end{aligned}\tag{44}$$

Remark 4.2

From Remark 4.1, (43) and (44) in Lemma 4.1 still hold when \(d(w^k,y^k)=0.\)

Theorem 4.1

Assume that Conditions 3.2, 3.3 and 4.1 hold. Let \(0<\tau < \frac{1}{L}\) and \(\{\mu _k\}\subset [c,d]\) for some \(c,d\in (0,1).\) Then the sequence \(\{x^k\}\) generated by (40) converges weakly to a point \(u^* \in Fix(S)\cap SOL(C,f )\).

Proof

By (28), we have

where we denote \(\rho _k=\frac{1}{\alpha _k+\delta \mu _k}.\) Let \(u\in Fix(S)\cap SOL(C,f ).\) From (26), it follows that

Using (8), (42), Lemma 4.1(i) and Remark 4.2, we have

Combining (45), (46) and (47), we obtain

where

since \(\alpha _k\rho _k<1\) and \(\mu _k\in (0,1).\) Again, taking into account the choice of \(\rho _k\), we have

and, from (49), it follows that

Following along the lines of Theorem 3.1, we obtain

Thus we have \(\lim _{k\rightarrow \infty }\Vert x^{k+1}-x^k\Vert =0.\) From (48), (50), \(\alpha _k\rho _k<1\) and Lemma 2.3, we can show that \(\lim _{k\rightarrow \infty }\Vert x^{k}-u\Vert \) exists for arbitrary \(u\in Fix(S)\cap SOL(C,f).\) Hence \(\{x^k\}\) is bounded. By (37), we have \(\sum _{k=1}^{\infty }\Vert x^{k+1}-w^k\Vert ^2<+\infty \) and so

By (47), we have \(\sum _{k=1}^{\infty }\Vert t^k-w^k\Vert ^2<+\infty \) and so

Using (44), we have

Since \(\{x^k\}\) is bounded, it has a subsequence \(\{x^{k_i}\}\) which converges weakly to a point \({\hat{x}}\). By (51)–(52), the subsequences \(\{w^{k_i}\}\) and \(\{t^{k_i}\}\) also converge weakly to \({\hat{x}}\).

Now, we show that \({\hat{x}} \in Fix(S) \cap SOL(C, f)\). Define the operator A as in (38). By using arguments similar to those used in the proof of Theorem 3.1, we can show that

It is now left to show that \({\hat{x}} \in Fix(S)\). To this end, it follows from (42) that

which with (51) implies that

Using (52), we obtain

By Lemma 2.1, we obtain \({\hat{x}}\in Fix(S).\) Now, again by arguments similar to those used in the proof of Theorem 3.1, we can show that the sequence \(\{x^k\}\) converges weakly to \({\hat{x}}\in Fix(S)\cap SOL(C, f)\). This completes the proof.\(\square \)

Remark 4.3

Note that we need to restrict \(\gamma \) in the algorithm (9) for the variational inequality, whereas we only need to restrict \(\{\mu _k\}\) in the algorithm (40).

5 Numerical experiments

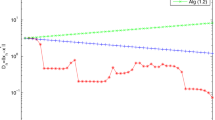

In order to evaluate the performance of the proposed algorithm, we present numerical experiments on the variational inequality (1). In this section, we provide two examples to compare the inertial projection and contraction algorithm with the projection and contraction algorithm, the inertial extragradient algorithm and the extragradient algorithm.

Example 5.1

Let \(f:{\mathbb {R}}^2 \rightarrow {\mathbb {R}}^2\) be defined by

We claim that f is Lipschitz continuous and strongly monotone. Therefore, the variational inequality (1) has a unique solution, and (0, 0) is this solution.

Firstly, we show that f is Lipschitz continuous. Take arbitrary \(z_1=(x_1,y_1)\in {\mathbb {R}}^2\) and \(z_2=(x_2,y_2)\in {\mathbb {R}}^2\). Then

where the last inequality comes from

for any \(x,y\in {\mathbb {R}}.\) Similarly, we have

Combining (53) and (55), we obtain that

where the inequality comes from the relation \((a+b)^2\le 2(a^2+b^2)\) for any \(a,b\in {\mathbb {R}}\). From (56), we get that f is Lipschitz continuous with \(L=\sqrt{26}\).

Next we verify that f is strongly monotone. It is easy to get

where the inequality follows from (54). Hence, f is 1-strongly monotone.

Let \(C=\{x\in {\mathbb {R}}^2\,|\,e_0\le x\le e_1\}\), where \(e_0=(-10,-10)\) and \(e_1=(10,10)\). Take the initial point \(x_0=(1,10)\in {\mathbb {R}}^2\) and \(\tau =1/(2L)\). Since (0, 0) is the unique solution of the variational inequality (1), we use \(\Vert x^k\Vert \le 10^{-8}\) as the stopping criterion.

Fig. 1 Comparison of the number of iterations of the inertial projection and contraction algorithm (iPCA) with the projection and contraction algorithm (PCA), the inertial extragradient algorithm (iEgA) and the extragradient algorithm (EgA) for Example 5.1

Fig. 2 Comparison of the number of iterations of the inertial projection and contraction algorithm (iPCA) with the projection and contraction algorithm (PCA), the inertial extragradient algorithm (iEgA) and the extragradient algorithm (EgA) for Example 5.2

Example 5.2

Let \(f: {\mathbb {R}}^n \rightarrow {\mathbb {R}}^n\) be defined by \(f(x)=Ax+b\), where \(A=Z^TZ\), \(Z=(z_{ij})_{n\times n}\) and \(b=(b_i)\in {\mathbb {R}}^n\), and the entries \(z_{ij}\in [1,100]\) and \(b_i\in [-100,0]\) are generated randomly.

It is easy to verify that f is L-Lipschitz continuous and \(\eta \)-strongly monotone with \(L=\max (eig(A))\) and \(\eta =\min (eig(A))\). Take arbitrary \(x_1,x_2\in {\mathbb {R}}^n.\) Firstly, we have

On the other hand,

where the third equality comes from the symmetry of the matrix A and the inequality follows from the symmetry and the positive definiteness of the matrix A. Since Z is randomly generated, it is nonsingular with probability one; therefore, \(A=Z^TZ\) is positive definite.
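A short NumPy sketch of the constants in Example 5.2. The random seed, the use of uniform sampling and the helper name `check_constants` are our own assumptions. The sketch generates Z and b as described, forms \(A=Z^TZ\), reads L and \(\eta \) off the extreme eigenvalues of the symmetric matrix A, sets \(\tau =1/(1.05L)\), and spot-checks the Lipschitz and strong monotonicity inequalities on random pairs of points.

```python
import numpy as np

rng = np.random.default_rng(2018)          # arbitrary seed, for reproducibility only
n = 100
Z = rng.uniform(1.0, 100.0, size=(n, n))   # z_ij in [1, 100]
b = rng.uniform(-100.0, 0.0, size=n)       # b_i in [-100, 0]
A = Z.T @ Z                                # symmetric; positive definite when Z is nonsingular

eigs = np.linalg.eigvalsh(A)               # real eigenvalues of a symmetric matrix, ascending
eta, L = eigs[0], eigs[-1]                 # eta = min(eig(A)), L = max(eig(A))
tau = 1.0 / (1.05 * L)                     # step size used in Example 5.2


def f(x):
    return A @ x + b


def check_constants(trials=200, rtol=1e-9):
    """Spot-check ||f(x1)-f(x2)|| <= L||x1-x2|| and <f(x1)-f(x2), x1-x2> >= eta||x1-x2||^2."""
    for _ in range(trials):
        x1, x2 = rng.standard_normal(n), rng.standard_normal(n)
        dx, df = x1 - x2, f(x1) - f(x2)
        slack = rtol * L * np.dot(dx, dx)  # allowance for floating-point rounding
        if np.linalg.norm(df) > L * np.linalg.norm(dx) * (1 + rtol):
            return False
        if np.dot(df, dx) < eta * np.dot(dx, dx) - slack:
            return False
    return True
```

Because A is symmetric, `numpy.linalg.eigvalsh` returns its real eigenvalues in ascending order, so `eigs[0]` and `eigs[-1]` are \(\eta \) and L.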

Let \(C:=\{x\in {\mathbb {R}}^n\,|\, \Vert x-d\Vert \le r\}\), where the center \(d\in {\mathbb {R}}^n\) and the radius r are chosen randomly. Take the initial point \(x_0=(c_i)\in {\mathbb {R}}^n\), where each \(c_i\in [0,1]\) is generated randomly. Set \(n=100\) and \(\tau =1/(1.05L)\). Although the variational inequality (1) has a unique solution, it is difficult to compute it exactly. So we use \(D_k :=\Vert x^{k+1}-x^k\Vert \le 10^{-5}\) as the stopping criterion.

Take \(\gamma =1.5\) in the inertial projection and contraction algorithm, and the projection and contraction algorithm. Take \(\alpha _k=0.4\) in the inertial projection and contraction algorithm and the inertial extragradient algorithm. Choose \(\lambda _k=0.6\) in the inertial extragradient algorithm.

Figures 1 and 2 illustrate that, in terms of the number of iterations, the inertial projection and contraction algorithm is more efficient than the projection and contraction algorithm, the inertial extragradient algorithm and the extragradient algorithm.

6 Conclusions

In this paper, we introduce a new inertial projection and contraction algorithm by incorporating inertial terms into the projection and contraction algorithm, which does not need the summability condition for the sequence. Weak convergence is established under suitable assumptions, and numerical results confirm the effectiveness of the proposed algorithm.

References

[1] Noor, M.A.: Some developments in general variational inequalities. Appl. Math. Comput. 152, 199–277 (2004)

[2] Zhou, H., Zhou, Y., Feng, G.: Iterative methods for solving a class of monotone variational inequality problems with applications. J. Inequal. Appl. 2015, 68 (2015)

[3] Yao, Y., Marino, G., Muglia, L.: A modified Korpelevich’s method convergent to the minimum-norm solution of a variational inequality. Optimization 63, 559–569 (2014)

[4] Korpelevich, G.M.: The extragradient method for finding saddle points and other problems. Ekon. Mat. Metody 12, 747–756 (1976)

[5] Censor, Y., Gibali, A., Reich, S.: The subgradient extragradient method for solving variational inequalities in Hilbert space. J. Optim. Theory Appl. 148, 318–335 (2011)

[6] Censor, Y., Gibali, A., Reich, S.: Strong convergence of subgradient extragradient methods for the variational inequality problem in Hilbert spaces. Optim. Methods Softw. 26, 827–845 (2011)

[7] He, B.S.: A class of projection and contraction methods for monotone variational inequalities. Appl. Math. Optim. 35, 69–76 (1997)

[8] Sun, D.F.: A class of iterative methods for solving nonlinear projection equations. J. Optim. Theory Appl. 91, 123–140 (1996)

[9] Dong, Q.L., Yang, J., Yuan, H.B.: The projection and contraction algorithm for solving variational inequality problems in Hilbert spaces. J. Nonlinear Convex Anal. (to appear)

[10] Cai, X., Gu, G., He, B.: On the \(O(1/t)\) convergence rate of the projection and contraction methods for variational inequalities with Lipschitz continuous monotone operators. Comput. Optim. Appl. 57, 339–363 (2014)

[11] Alvarez, F.: Weak convergence of a relaxed and inertial hybrid projection-proximal point algorithm for maximal monotone operators in Hilbert spaces. SIAM J. Optim. 14, 773–782 (2004)

[12] Alvarez, F., Attouch, H.: An inertial proximal method for maximal monotone operators via discretization of a nonlinear oscillator with damping. Set-Valued Anal. 9, 3–11 (2001)

[13] Ochs, P., Chen, Y., Brox, T., Pock, T.: iPiano: Inertial proximal algorithm for non-convex optimization. SIAM J. Imaging Sci. 7, 1388–1419 (2014)

[14] Ochs, P., Brox, T., Pock, T.: iPiasco: inertial proximal algorithm for strongly convex optimization. J. Math. Imaging Vis. 53, 171–181 (2015)

[15] Attouch, H., Peypouquet, J., Redont, P.: A dynamical approach to an inertial forward–backward algorithm for convex minimization. SIAM J. Optim. 24(1), 232–256 (2014)

[16] Attouch, H., Chbani, Z., Peypouquet, J., Redont, P.: Fast convergence of inertial dynamics and algorithms with asymptotic vanishing viscosity. Math. Program. (to appear)

[17] Attouch, H., Chbani, Z.: Fast inertial dynamics and FISTA algorithms in convex optimization, perturbation aspects (2016). arXiv:1507.01367

[18] Bot, R.I., Csetnek, E.R.: A hybrid proximal-extragradient algorithm with inertial effects. Numer. Funct. Anal. Optim. 36, 951–963 (2015)

[19] Svaiter, B.F.: A class of Fejér convergent algorithms, approximate resolvents and the hybrid proximal-extragradient method. J. Optim. Theory Appl. 162, 133–153 (2014)

[20] Jin, W., Censor, Y., Jiang, M.: Bounded perturbation resilience of projected scaled gradient methods. Comput. Optim. Appl. 63, 365–392 (2016)

[21] Solodov, M.V., Svaiter, B.F.: A hybrid approximate extragradient-proximal point algorithm using the enlargement of a maximal monotone operator. Set-Valued Anal. 7, 323–345 (1999)

[22] Dong, Q.L., Lu, Y.Y., Yang, J.: The extragradient algorithm with inertial effects for solving the variational inequality. Optimization 65, 2217–2226 (2016)

[23] Bauschke, H.H., Combettes, P.L.: Convex Analysis and Monotone Operator Theory in Hilbert Spaces. Springer, Berlin (2011)

[24] Goebel, K., Kirk, W.A.: Topics in Metric Fixed Point Theory, Cambridge Studies in Advanced Mathematics, vol. 28. Cambridge University Press, Cambridge (1990)

[25] Bot, R.I., Csetnek, E.R., Hendrich, C.: Inertial Douglas–Rachford splitting for monotone inclusion problems. Appl. Math. Comput. 256, 472–487 (2015)

[26] Moudafi, A., Oliny, M.: Convergence of a splitting inertial proximal method for monotone operators. J. Comput. Appl. Math. 155, 447–454 (2003)

[27] Beck, A., Teboulle, M.: A fast iterative shrinkage–thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2(1), 183–202 (2009)

[28] Chambolle, A., Dossal, Ch.: On the convergence of the iterates of the fast iterative shrinkage/thresholding algorithm. J. Optim. Theory Appl. 166, 968–982 (2015)

Acknowledgements

The authors express their thanks to the reviewers, whose constructive suggestions led to improvements in the presentation of the results.


Additional information

The first author was supported by National Natural Science Foundation of China (No. 61379102) and Open Fund of Tianjin Key Lab for Advanced Signal Processing (No. 2016ASP-TJ01), the second author was supported by Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Science, ICT and Future Planning (2014R1A2A2A01002100) and the third author was supported by Tianjin Research Program of Application Foundation and Advanced Technology (No. 15JCQNJC04400).


Cite this article

Dong, Q.L., Cho, Y.J., Zhong, L.L. et al. Inertial projection and contraction algorithms for variational inequalities. J Glob Optim 70, 687–704 (2018). https://doi.org/10.1007/s10898-017-0506-0


DOI: https://doi.org/10.1007/s10898-017-0506-0

Keywords

- Inertial type algorithm

- Extragradient algorithm

- Variational inequality

- Projection and contraction algorithm