Abstract

Motivated by robust (non-convex) quadratic optimization over convex quadratic constraints, in this paper, we examine minimax difference of convex (dc) optimization over convex polynomial inequalities. By way of generalizing the celebrated Farkas’ lemma to inequality systems involving the maximum of dc functions and convex polynomials, we show that there is no duality gap between a minimax DC polynomial program and its associated conjugate dual problem. We then obtain strong duality under a constraint qualification. Consequently, we present characterizations of robust solutions of uncertain general non-convex quadratic optimization problems with convex quadratic constraints, including uncertain trust-region problems.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this paper, we consider the minimax difference of convex optimization problem,

where \(h:{\mathbb {R}}^{n}\rightarrow {\mathbb {R}}\) is a convex function\(,\ f_{j}: {\mathbb {R}}^{n}\rightarrow {\mathbb {R}},\,j=1,\ldots ,l\ \) and \(g_{i}:{\mathbb {R}} ^{n}\rightarrow {\mathbb {R}},\ i=1,2,\ldots ,m\) are convex polynomials. The minimax model \((\mathrm{P})\) was motivated by robust optimization of uncertain (not necessarily convex) quadratic functions, \(\langle x,Ax\rangle +\langle a,x\rangle +\alpha \), over a convex set \(K\), where the data \( (A,a,\alpha )\) is assumed to be uncertain and it belongs to an uncertainty set \(U\). As an illustration, consider the simple case, where the uncertainty set \(U\) is given by the convex hull of the set \(\{(A_{j},a_{j},\alpha _{j}):\ j=1,2,\ldots ,l\},\,A_{j}\) are \(n\times n\) symmetric matrices, \( a_{j}\in {\mathbb {R}}^{n}\) and \(\alpha _{j}\in {\mathbb {R}}\). The worst-case solution of the uncertain quadratic minimization is obtained by solving the minimax problem

It is a minimax dc-optimization problem of the form \({\mathrm{(P)}}\),

where \(\rho =\max \{0,\max _{j=1,\ldots ,l}\{-\gamma _{\min }(A_{j})\}\},\,\gamma _{\min }(A_{j})\) is the least eigenvalue of \(A_{j},\,A_{j}+\rho I_{n}\) is positive semidefinite, \(f_{j}(x):=\langle x,(A_{j}+\rho I_{n})x\rangle +\langle a_{j},x\rangle +\alpha _{j}\), for \(j=1,\ldots ,l\) and \(h(x)=\rho \langle x,x\rangle \) are convex polynomials.

Minimax optimization of this form arises, for instance, when solving uncertain quadratic minimization problems with convex quadratic constraints, such as uncertain trust-region problems by robust optimization (see Sect. 5). Moreover, the model \({\mathrm{(P)}}\) includes the minimax polynomial optimization problems, examined recently in [13, 15], where \(h=0\) and classes of DC-optimization problems which have been extensively studied in the literature (see [4–6, 8, 9, 16] and other references therein).

We make the following key contributions to global non-convex optimization.

-

(i)

We establish a generalization of the Farkas lemma by obtaining a complete dual characterization of the implication

$$\begin{aligned} g_{i}(x)\le 0\quad i=1,\ldots ,m\Rightarrow \max _{j=1,\ldots ,l}f_{j}(x)-h(x)\ge 0 \end{aligned}$$in terms of conjugate functions without any qualifications. A numerical example is given to show that such a qualification-free generalization may fail when the functions \(g_i\)’s are not polynomials. Consequently, we also obtain a form of S-procedure [18] for non-convex quadratic systems. For a recent survey of generalizations of the Farkas lemma, see [7] and S-procedure, see [18].

-

(ii)

As an application of our generalized Farkas’ lemma, we show that there is no duality gap between \((\mathrm{P})\) and its associated conjugate dual problem, which, in particular, establishes that there is no duality gap between a dc polynomial program and its associated standard conjugate dual program. We also obtain strong duality between the problems under a normal cone constraint qualification, instead of the commonly used closed cone epigraph constraint qualification (see [5, 6, 8, 11, 12, 16]). We then derive necessary and sufficient global optimality conditions for classes of problems including fractional programming problems.

-

(iii)

Finally, we present complete dual characterizations of robust solutions of various classes of non-convex quadratic optimization problems in the face of data uncertainty in the objective as well as constraints. They include uncertain trust-region problems and nonconvex quadratic problems with convex quadratic constraints. For related recent results, see [14, 15], where conditions for robust solutions were given for minimax convex quadratic problems or classes of non-convex problems with no uncertainty in the objective function. The DC approach presented in this work allowed us to treat general non-convex quadratic objective functions.

The outline of the paper is as follows. In Sect. 2, we present basic definition and properties of convex polynomials and conjugate functions. In Sect. 3, we present a generalized Farkas’s lemma and its corresponding results for quadratic systems. In Sect. 4, we present duality results for the minimax DC-optimization problems \({{\mathrm{(P)}}}\) and their applications to fractional programming problems involving convex polynomials. In Sect. 5, we derive robust solution characterizations of various classes of quadratic optimization problems.

2 Preliminaries

We begin this section by fixing notation and preliminaries of convex sets, functions and polynomials. Throughout this paper, \({\mathbb {R}} ^{n}\) denotes the Euclidean space with dimension \(n\). The inner product in \( {\mathbb {R}}^{n}\) is defined by \(\langle x,y\rangle :=x^{T}y\) for all \(x,y\in {\mathbb {R}}^{n}\). The nonnegative orthant of \({\mathbb {R}}^{n}\) is denoted by \( {\mathbb {R}}_{+}^{n}\) and is defined by \({\mathbb {R}}_{+}^{n}:=\{(x_{1},\ldots ,x_{n})\in {\mathbb {R}}^{n}\ :\ x_{i}\ge 0\}\). We say that the set \(A\) is convex whenever \(\mu a_{1}+(1-\mu )a_{2}\in A\) for all \(\mu \in [0,1],\,a_{1},a_{2}\in A\). The convex hull of the set \(A\) is denoted by \({\mathrm{co}}(A)\). A function \(f:{\mathbb {R}}^{n}\rightarrow {\mathbb {R}}\) is said to be convex if for all \(\mu \in [0,1]\),

for all \(x,y\in {\mathbb {R}}^{n}\). The function \(f\) is said to be concave whenever \(-f\) is convex. The epigraph of a function \(f\), denoted by epi\(f\), is defined by \(\mathrm{epi}f:=\{(x,\alpha )\in {\mathbb {R}}^{n}\times {\mathbb {R}}\ |\ f(x)\le \alpha \}\).

The conjugate of a convex function \(f:{\mathbb {R}}^{n}\rightarrow {\mathbb {R}}\) is defined by, for any \(u\in {\mathbb {R}}^{n},\,f^{*}(u)=\sup _{x\in {\mathbb {R}}^{n}}\left\{ u^{T}x-f(x)\right\} \) and, for any \(x\in {{\mathbb {R}}} ^{n},\,f^{**}(x)=\sup _{u\in {\mathbb {R}}^{n}}\left\{ u^{T}x-f^{*}(x)\right\} \), respectively. If \(f:{\mathbb {R}}^{n}\rightarrow {\mathbb {R}}\) is convex, then \(f=f^{**}\). For details, see [17, 19, 21]. The domain of \(f^{*}\), denoted by \(\mathrm{dom}f^{*}\), is defined by \(\mathrm{dom}f^{*}:=\{u\in {\mathbb {R}}^{n}|f^{*}(u)<\infty \}\). The positive semi-definiteness of an \(n\times n\) matrix \(B\), denoted by \( B\succeq 0\), is defined by \(\langle x,Bx\rangle \ge 0\), for each \(x\in {\mathbb {R}}^{n}\). If \(f_{1}\) and \(f_{2}:{\mathbb {R}}^{n}\rightarrow {\mathbb {R}}\) are convex functions, then for \(u\in {\mathbb {R}}^{n}\),

For details, see [17, 19, 21].

The following useful result of convex polynomial systems was given in [2] and will play an important role later in the paper.

Lemma 2.1

[2] Let \(f_{0},f_{1},\ldots ,f_{m}\) be convex polynomials on \({\mathbb {R}}^{n}\). Let \(C:=\{x\in {\mathbb {R}}^{n}:f_{i}(x)\le 0,\ i=1,\ldots ,m\}\ne \emptyset \). Suppose that \(\inf _{x\in C}f_{0}(x)>-\infty \). Then, \(\arg \min _{x\in C}f_{0}(x)\ne \emptyset \).

The next lemma, given first in [13], follows easily from Lemma 2.1.

Lemma 2.2

Let \(f_{0},f_{1},\ldots ,f_{m}\) be convex polynomials on \({\mathbb {R}}^{n}\). Then the set,

is closed and convex.

Proof

Clearly, as \(f_{i}\)’s are convex functions, \(\Omega \) is a convex set. Let \(\{y_{0}^{k},y_{1}^{k},\ldots ,y_{m}^{k}\}\subset \Omega \) and \( (y_{0}^{k},y_{1}^{k},\ldots ,y_{m}^{k})\rightarrow (y_{0},y_{1},\ldots ,y_{m})\) as \( k\rightarrow \infty \). By the definition of \(\Omega \), for each \(k\), there exists \(x^{k}\in {\mathbb {R}}^{n}\) such that \(f_{i}(x^{k})\le y_{i}^{k}\). Now, consider the optimization problem

Then, \((\mathrm{P}_{0})\) is a convex polynomial optimization problem and \( 0\le \inf (\mathrm{P}_{0})\le \sum _{i=0}^{m}(y_{i}^{k}-y_{i})^{2}\ \ \rightarrow 0\), as \(k\rightarrow \infty \). Hence, \(\inf (\mathrm{P}_{0})=0\) and by Lemma 2.1, \(\inf (\mathrm{P}_{0})\) is attained, and so, there exists \( x^{*}\in {\mathbb {R}}^{n}\) such that \(f_{i}(x^{*})\le y_{i}\). Thus, \( (y_{0},y_{1},\ldots ,y_{m})\in \Omega \) and \(\Omega \) is closed.

The following example shows that Lemma 2.2 may fail when all the functions involved in \(\Omega \) are not convex polynomials.

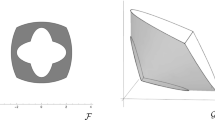

Example 2.1

Let \(f_{1}(x_{1},x_{2})=\sqrt{ x_{1}^{2}+x_{2}^{2}}-x_{1}\) and \(f_{2}(x_{1},x_{2})=1-x_{2}\). Then, \(f_{1}\) is not a polynomial. Define the set \(C\) by

Then, for each integer \(k\ge 1,(y_{1}^{k},y_{2}^{k})=(\sqrt{k^{2}+1} -k,0)\in C\) as \((f_{1}(k,1),f_{2}(k,1))\le (\sqrt{k^{2}+1}-k,0)\), and that \( (y_{1}^{k},y_{2}^{k})=(\sqrt{k^{2}+1}-k,0)=(\frac{1}{\sqrt{k^{2}+1}+k} ,0)\rightarrow (0,0)\) as \(k\rightarrow \infty \). However, \((0,0)\notin C\) because the system

has no solution. Therefore, \(C\) is not closed. \(\square \)

3 Generalized Farkas’ lemma

In this section, we present a generalization of Farkas’ lemma and a corresponding result for quadratic inequality systems, called \(S\)-procedure [18]. The generalized Farkas’ lemma holds without any regularity condition. Note that the simplex in \({\mathbb {R}}^{l}\) is given by \(\Delta =\left\{ \delta \in {\mathbb {R}}_{+}^{l}:\sum _{i=1}^{l}\delta _{i}=1\right\} \).

Theorem 3.1

(Generalized Farkas’ lemma) Let \( f_{j}:{\mathbb {R}}^{n}\rightarrow {\mathbb {R}},\ j=1,\ldots ,l,\,g_{i}:{\mathbb {R}} ^{n}\rightarrow {\mathbb {R}},\,i=1,2,\ldots ,m\) be convex polynomials and \(h: {\mathbb {R}}^{n}\rightarrow {\mathbb {R}}\) be a convex function. Let \(K:=\{x\in {\mathbb {R}}^{n}:g_{i}(x)\le 0,\ i=1,\ldots ,m\}\) be non-empty. Then, the following statements are equivalent:

-

(i)

\(g_{i}(x)\le 0\,\ i=1,\ldots ,m\Rightarrow \max _{j=1,\ldots ,l}f_{j}(x) -h(x) \ge 0;\)

-

(ii)

\((\forall u\in \mathrm{\mathrm{dom}}h^{*},\ \epsilon >0)\ \left( \exists \lambda \in {\mathbb {R}}_{+}^{m},\ \mu \in \Delta ,\ v\in {\mathbb {R}}^{n}\right) :\)

Proof

\(\mathrm{[(ii)]}\,\Rightarrow \mathrm{(i)}\)] Note first, by the definition of a conjugate function, that \(\mathrm{(ii)}\) is equivalent to the condition that, for any \(u\in \mathrm{\mathrm{dom}}h^{*},\ \epsilon >0\), there exist \(\lambda \in {\mathbb {R}}_{+}^{m},\ v\in {\mathbb {R}}^{n}\) and \(\mu \in \Delta \) such that

which shows that for any \(x\in {\mathbb {R}}^{n}\),

Let \(x\in K\). Then, \(h^{*}(u)-\langle u,x\rangle +\left( \sum _{j=1}^{l}\mu _{j}f_{j}\right) (x)+\epsilon \ge 0\), as \(\sum _{i=1}^{m}\lambda _{i}g_{i}(x) \le 0\). So, for any \(u\in \mathrm{\mathrm{dom}}h^{*}\) and \(\epsilon >0\), there exists \(\mu \in \Delta \) such that for any \(x\in K\):

Moreover, \(\max _{j=1,\ldots ,l}f_{j}(x)=\max _{\delta \in \Delta }\delta _{j}f_{j}(x)\ge \sum _{j=1}^{l}\mu _{j}f_{j}(x)\). Hence, for any \(u\in \mathrm{\mathrm{dom}}h^{*},\,\epsilon >0,\,\max _{j=1,\ldots ,l}f_{j}(x)+ \epsilon \ge \langle u,x\rangle -h^{*}(u)\) and so, \( \max _{j=1,\ldots ,l}f_{j}(x)\ge \sup _{u\in \mathrm{\mathrm{dom}}h^{*}}\left\{ \langle u,x\rangle -h^{*}(u)\right\} =h(x)\). Thus (i) holds.

[\(\hbox {(i)}\)] \(\Rightarrow \mathrm{(ii)}\)] Assume that \(\mathrm{(i)}\) holds. Let \(u\in \mathrm{\mathrm{dom}}h^{*}\). Then, from \(\mathrm{(i)}\), for each \(x\in K\),

So, for each \(\epsilon >0,\,\max _{j=1,\ldots ,l}\ \left\{ f_{j}(x)+h^{*}(u)-\langle u,x\rangle \right\} +\epsilon >0\). For \(j\in \{1,\ldots ,l\}\), let \( \phi _{j}(x)=\ f_{j}(x)+h^{*} (u)-\langle u,x\rangle +\epsilon \), for \( x\in {\mathbb {R}}^{n}\). Define a set \(C\) by

Since \(\phi _{j},\,j=1,\ldots ,l\) and \(g_{i},\,i=1,\ldots ,m\) are convex polynomials, \(C\) is a convex set. By Lemma 2.2, \(C\) is closed.

Since \(\max _{j}\phi _{j}(\cdot )>0\), we have \(0\notin C\). By the strong separation theorem ([21, Theorem1.1.5]), there exist \((\gamma _{1},\ldots ,\gamma _{l},v_{1},\ldots ,v_{m})\ne 0,\ \alpha \in {\mathbb {R}},\ \delta _{0}>0\) such that for all \((y,z)\in C\),

Since \(C+{\mathbb {R}}_{+}^{m+l}\subset C\), it follows that \(\gamma _{j}\ge 0,\ j=1,\ldots ,l\) and \(v_{i}\ge 0,\ i=1,\ldots ,m\) and for any \((y,z)\in C\),

As \(K\ne \emptyset \), there exists \(x_{0}\in {\mathbb {R}}^{n}\) such that \( g_{i}(x_{0})\le 0,\ i=1,\ldots ,m\) and so, if \((\gamma _{1},\ldots ,\gamma _{l})=0, \) then \(0\le \alpha <0\). This is a contradiction. So, \(0\le (\gamma _{1},\ldots \gamma _{l})\ne 0\).

Let \(\lambda _{i}=\frac{v_{i}}{\gamma _{1}+\ldots +\gamma _{l}}\ge 0,\ i=1,\ldots ,m \) and \(\mu _{i}=\frac{\gamma _{i}}{\gamma _{1}+\ldots +\gamma _{l}} \ge 0,\ i=1,\ldots ,m\). Then, \(\sum _{j=1}^{l}\mu _{j}=1\) and \(\sum _{j=1}^{l}\mu _{j}\phi _{j}(x)+\sum _{i=1}^{m}\lambda _{i}g_{i}(x)>0\), for any \(x\in {\mathbb {R}}^{n}\), because \((\phi _{1}(x),\ldots ,\phi _{l}(x),\ g_{1}(x),\ldots ,g_{m}(x))\in C\) for each \(x\in {\mathbb {R}}^{n}\). It shows that

So, for each \(u\in \mathrm{dom}h^{*}\) and for each \(\epsilon >0\), there exist \(\lambda \in {\mathbb {R}}_{+}^{m}\) and \(\mu \in \Delta \) such that, for each \(x\in {\mathbb {R}}^{n}\),

which gives us that

On the other hand, as \(f_{j},\,j=1,\ldots ,l\) and \(g_{i},\,i=1,\ldots ,m\) are real-valued convex functions, by (1), for \(u\in \mathrm{dom}h^{*}\), there exists \(v\in {\mathbb {R}}^{n}\) such that

So, we obtain,

Hence (ii) holds. \(\square \)

The following example shows that generalized Farkas’s lemma may not hold for convex constraint systems, \(g_{i}(x)\le \,0,i=1,\ldots ,m\), that are not polynomials.

Example 3.1

(Failure of generalized Farkas’s lemma for non-polynomial convex constraint systems) Let \(f_{1}(x_1,x_2)=1-x_{2},\, f_{2}(x_1,x_2)=-x_{1},\,h(x_1,x_2)=x_{2}^{2}\) and \(g_{1}(x_1,x_2)=\sqrt{x_{1}^{2}+x_{2}^{2}} -x_{1}\). Then,

Moreover, \(\{x\in {\mathbb {R}}^{2}:g_{1}(x)\le 0\}={\mathbb {R}}_{+}\times \{0\}\) and so, \(\max \{f_{1}(x),f_{2}(x)\}-h(x)\ge 0\) for each \(x\in {\mathbb {R}} _{+}\times \{0\};\) thus, the implication

holds. Now, we show that the statement (ii):

fails. To see this, let \(u=(0,0)\in \mathrm{dom}h^{*}\). Then, \(h^{*}(0,0)=0,\, \sum \nolimits _{j=1}^{2}\mu _{j}f_{j}(x)=\mu _{1}(-x_{2})+\mu _{2}(-x_{1})+\mu _{1}\) and \((\sum \nolimits _{j=1}^{2}\mu _{j}f_{j})^{*}(v)=\sup _{x\in {\mathbb {R}}^{2}}\{v_{1}x_{1}+v_{2}x_{2}+\mu _{1}x_{2}+\mu _{2}x_{1}-\mu _{1}\} \). Moreover,

For \(\lambda =0\),

For \(\lambda >0,\,(\lambda g_{1})^{*}(\mu _{2},\mu _{1})=\sup _{x\in {\mathbb {R}}^{2}}\{\mu _{2}x_{1}+\mu _{1}x_{2}-\lambda \sqrt{ x_{1}^{2}+x_{2}^{2}}+\lambda x_{1}\}=(\lambda ||x||)^{*}(\mu _{2}+\lambda ,\mu _{1})\). Since \(\mu _{2}+\mu _{1}=1\), we get that

Hence, for \(u=0\), and \(\epsilon >0\), for any \(\lambda \ge 0\), we can see that \((\lambda g_{1})^{*}(-v)=+\infty \), whenever \(v=(-\mu _{2},-\mu _{1})\), and \((\sum \nolimits _{j=1}^{2}\mu _{j}f_{j})^{*}(v)=+\infty \), whenever \(v\ne (-\mu _{2},-\mu _{1})\), and so (ii) of Theorem 3.1 doesn’t hold. \(\square \)

The following example verifies our generalized Farkas’ lemma, involving convex polynomial constraint systems.

Example 3.2

(Convex polynomial constraint systems and generalized Farkas’ lemma) Let \(f_{1}(x)=x_{1}^{8}+x_{1}^{2},\, f_{2}(x)=x_{2}^{2}-1,\,h(x)=\sqrt{x_{1}^{2}+x_{2}^{2}},\, g_{1}(x)=(x_{1}-1)^{2}+x_{2}^{2}-1,\,g_{2}(x)=x_{1}\) and \( g_{3}(x)=x_{1}+x_{2}\). Then \(f_{1},\ f_{2},\,g_{1},\,g_{2},\ g_{3}\) are convex polynomials, \(h\) is convex, but is not a polynomial function. Moreover, \(h^{*}(u)=\delta _{B}(u)\), where \(\delta _{B}\) is the indicator function of the unit ball \(B\) in \({\mathbb {R}}^{2}\).

Let us consider \(\mathrm{(i)}\) and \(\mathrm{(ii)}\) as in the previous theorem. We can see that \(K:=\{x\in {\mathbb {R}}^{2}:g_{i}(x)\le 0,\ i=1,2,3\}=\{(0,0)\}\). Then \(\mathrm{(i)}\) holds.

Let \(d(x,u,\lambda ):=\mu _{1}f_{1}(x)+\mu _{2}f_{2}(x)+\sum _{i=1}^{3}\lambda _{i}g_{i}(x)+h^{*}(u)-\langle u,x\rangle \). Then,

Thus, \(d(x,u,\lambda )= \mu _{1}x_{1}^{8}+x_{1}^{2}(\mu _{1}+\lambda _{1})+x_{1}(\lambda _{2}+\lambda _{3}-2\lambda _{1}-u_{1})+\left( \lambda _{1}+1-\mu _{1}\right) x_{2}^{2}+(\lambda _{3}-u_{2})x_{2}+\mu _{1}-1\).

For \(\lambda _{1}>0\), we get

By the above calculation, for any \(u\in {\mathbb {R}}^{2},\epsilon >0\), taking \(\mu _{1}=1\) and

we get that \(-\left( \frac{\lambda _{2}+\lambda _{3}-2\lambda _{1}-u_{1}}{2 \sqrt{\lambda _{1}+\mu _{1}}}\right) ^{2}-\left( \frac{\lambda _{3}-u_{2}}{2 \sqrt{\lambda _{1}+1-\mu _{1}}}\right) ^{2}+\mu _{1}-1\ge -\epsilon \) and hence

So, we have,

which is equivalent to

for some \(v\in {\mathbb {R}}^{2}\) by (1). It verifies \(\mathrm{(ii)}\). \(\square \)

As a consequence of Theorem 3.1 we derive a form of the S-procedure for a quadratic system without any qualification. The S-procedure is a commonly used technique in stability analysis of nonlinear systems [18] and is a relaxation strategy for solving a quadratic inequality system or a quadratic optimization problem via a linear matrix inequality relaxation. Recall that, for a matrix \(C\), the least eigenvalue of \(C\) is denoted by \( \gamma _{\min }(C)\).

Theorem 3.2

(S-Procedure) Let \(B_{1},\ldots ,B_{m}\) be symmetric and positive semidefinite \(n\times n\) matrices, \( a_{1},\ldots ,a_{l},b_{1},\ldots ,b_{m}\in {\mathbb {R}}^{n}\) and \(\alpha _{1},\ldots ,\alpha _{l},\beta _{1},\ldots ,\beta _{m}\in {\mathbb {R}}\). Let \( A_{1},\ldots ,A_{l}\) be symmetric \(n\times n\) matrices. Assume that \(\gamma _{\min }(A_{j})<0\) for some \(j\in \{1,\ldots ,l\}\). Let \(\rho =\max _{j=1,\ldots ,l}\{-\gamma _{\min }(A_{j})\}\). Then the following statements are equivalent:

-

(i)

\(\langle x,B_{i}x\rangle +\langle b_{i},x\rangle +\beta _{i}\le 0,\ i=1,\ldots ,m\ \Rightarrow \max _{j=1,\ldots ,l}\langle x,A_{j}x\rangle +\langle a_{j},x\rangle +\alpha _{j}\ge 0;\ \)

-

(ii)

\((\forall u\in {\mathbb {R}}^{n},\ \epsilon >0)\ \left( \exists \lambda \in {\mathbb {R}}_{+}^{m},\ \mu \in \Delta \right) \ \ \ \)

Proof

Note that for any \(j=1,\ldots ,l\) we can write [11]

Then for any \(j\in \{1,\ldots ,l\},\,A_{j}+\rho I_{n}\) is positive semidefinite. Let \(f_{j}(x)=\langle x,(A_{j}+\rho I_{n})x\rangle +\langle a_{j},x\rangle +\alpha _{j}\) for \(j=1,\ldots ,l\) and \(h(x)=\rho ||x||_{2}^{2}\). Thus, \(f_{j},~j=1,\ldots ,l\) and \(h\) are convex polynomials. We have \( h^{*}(u)=\frac{||u||^{2}}{4\rho }\) for any \(u\in {\mathbb {R}}^{n}\). Then, the conclusion follows from Theorem 3.1 by noting that

which is equivalent to for any \(u\in \mathrm{\mathrm{dom}}h^{*}\) and \(\epsilon >0\) there exist \(\lambda \in {\mathbb {R}}_{+}^{m}\) and \(\mu \in \Delta \) such that

\(\square \)

A special case of Theorem 3.1, where \(l=1\) is given in the following corollary which gives us a form of Farkas’ lemma for different convex polynomials.

Corollary 3.1

(Qualification-free DC Farkas Lemma) Let \(h,\,f,\ g_{i}:{\mathbb {R}}^{n}\rightarrow {\mathbb {R}},\, i=1,2,\ldots ,m\) be convex polynomials. Let \(K:=\{x\in {\mathbb {R}} ^{n}:g_{i}(x)\le 0,\ i=1,\ldots ,m\}\) be non-empty. Then the following statements are equivalent:

-

(i)

\(g_{i}(x)\le 0,\ i=1,\ldots ,m\ \Rightarrow \ f(x)\ge h(x);\)

-

(ii)

\((\forall u\in \mathrm{\mathrm{dom}}h^{*},\ \epsilon >0)\ \left( \exists \lambda \in {\mathbb {R}}_{+}^{m},\ v\in {\mathbb {R}}^{n}\right) \ \ \ h^{*}(u)-f^{*}(v)-(\sum _{i=1}^{m}\lambda _{i}g_{i})^{*}(u-v)+\epsilon \ge 0\).

Proof

Applying Theorem 3.1 with \(l=1\) and \(f_{1}=f \), we see that \(\Delta =\{1\}\) and the statement \((ii)\) of Theorem 3.1 collapses to the statement that \((\forall u\in \mathrm{dom}\, h^{*},\ \epsilon >0)\ \left( \exists \lambda \in {\mathbb {R}}_{+}^{m},\ \mu \in \{1\},\ v\in {\mathbb {R}} ^{n}\right) :\)

and so the conclusion follows. \(\square \)

When \(h=0\) in Theorem 3.1, we obtain a version of Farkas’s lemma for convex polynomials, given in [15].

Corollary 3.2

[15] Let \(f_{j},\ g_{i}:{\mathbb {R}}^{n}\rightarrow {\mathbb {R}},\, j=1,\ldots ,l\) and \(i=1,2,\ldots ,m\) be convex polynomials. Let \(K:=\{x\in {\mathbb {R}}^{n}:g_{i}(x)\le 0,\ i=1,\ldots ,m\}\) be non-empty. Then the following statements are equivalent:

-

(i)

\(g_{i}(x)\le 0,\ i=1,\ldots ,m\ \Rightarrow \ \max _{j=1,\ldots ,l}f_{j}(x)\ge 0;\)

-

(ii)

\((\forall \epsilon >0)\ \left( \exists \lambda \in {\mathbb {R}} _{+}^{m},\ \mu \in \Delta \right) :\sum _{j=1}^{l}\mu _{j}f_{j}(\cdot )+\sum _{i=1}^{m}\lambda _{i}g_{i}(\cdot )+\epsilon \ge 0\).

Proof

Let \(h=0\). Then, \(\mathrm{dom}h^{*}=\{0\}\) and Theorem 3.1 gives us that (i) is equivalent to the statement that for each \( \epsilon >0\), there exist \(\lambda \in {\mathbb {R}}_{+}^{m},\ \mu \in \Delta \) and \(v\in {\mathbb {R}}^{n}\) such that

Hence, by the conjugate duality Theorem (see [10]), we obtain that

Hence, if (i) holds then (ii) also holds. The converse implication \(\mathrm{(ii)\Rightarrow (i)}\) follows by construction. \(\square \)

4 Duality and minimax optimization

4.1 Duality

In this subsection, we present duality results for the minimax DC optimization problem

where \(h:{\mathbb {R}}^{n}\rightarrow {\mathbb {R}}\) is a convex function\(,\ f_{j}: {\mathbb {R}}^{n}\rightarrow {\mathbb {R}},\,j=1,\ldots ,l\ \) and \(g_{i}:{\mathbb {R}} ^{n}\rightarrow {\mathbb {R}},\ i=1,2,\ldots ,m\) are convex polynomials. The conjugate dual problem associated with \({\mathrm{(P)}}\) is given by

We first show that there is no duality gap between problems (P) and (D).

Theorem 4.1

(Zero duality gaps & minimax DC polynomial programs) Let \(f_{j}:{\mathbb {R}}^{n}\rightarrow {\mathbb {R}},\ j=1,\ldots ,l\), let \(g_{i}: {\mathbb {R}}^{n}\rightarrow {\mathbb {R}},\,i=1,\ldots ,m\) be convex polynomials and \(h:{\mathbb {R}}^{n}\rightarrow {\mathbb {R}}\) be a convex function. Let \( K=\{x\in {\mathbb {R}}^{n}:g_{i}(x)\le 0,\ i=1,\ldots ,m\}\ne \emptyset \). Then,

Proof

Let \(x\in K\). Then, by the definition of conjugate of \(h\),

As \(x\in K\), for each \(\lambda \in {\mathbb {R}}_{+}^{m},\, \sum _{i=1}^{m}\lambda _{i}g_{i}(x)\le 0\) and so, for each \(\mu \in \Delta \),

It then follows from (5) and (6) that for \(x\in K\),

Now, by the definition of a conjugate function, we get that

The last equation is obtained by the sum-conjugate formula (1). Therefore,

which shows that

To see the reverse inequality, we may assume, without loss of generality, that \(\inf {\mathrm{(P)}} > -\infty \), otherwise the conclusion follows immediately. Since \(K\ne \emptyset \), we have \(\overline{t}:=\inf \mathrm{(P)}\in {\mathbb {R}}\). Then, as \(\max _{j=1,\ldots ,l}f_{j}(x)-\left( h(x)+ \overline{t}\right) \ge 0\) for all \(x\in K\), by Theorem 3.1 we get that for any \(\epsilon >0,\,u\in \mathrm{dom}h^{*}\), there exist \( \lambda \in {\mathbb {R}}_{+}^{m},\mu \in \Delta ,\ v\in {\mathbb {R}}^{n}\) such that

Consequently

Since the above inequality holds for any \(\epsilon >0\), passing to the limit we obtain the desired inequality, which concludes the proof. \(\square \)

The following example shows that zero duality gap between (P) and (D) may not hold for convex constraints, \(g_{i}(x)\le \,0,i=1,\ldots ,m\), that are not polynomials.

Example 4.1

(Non-zero-duality gaps for (P) with non-polynomial convex constraints) Consider the problem

where \(f_{1}(x)=1-x_{2},\,f_{2}(x)=-x_{1},\,h(x)=x_{2}^{2}\) and \(g_{1}(x)= \sqrt{x_{1}^{2}+x_{2}^{2}}-x_{1}\). Then, clearly, \(\inf (\mathrm{EP}_{1})=1\). On the other hand,

As we saw in Example 3.1, \(h^{*}(0,0)=0\) and

For \(\lambda =0\),

For \(\lambda >0,\,(\lambda g_{1})^{*}(\mu _{2},\mu _{1})=+\infty \). Therefore,

which shows that

\(\square \)

We use the same constraint system as in Example 3.2 to verify that the zero-duality gap property of a minimax DC program.

Example 4.2

(Verifying zero-duality gap for a minimax DC program). Consider the following example

From Example 3.2, we can see that

Note that \(||u||_{*}\le 1\), for \(u=(u_{1},u_{2})\). Then, \( u_{1},u_{2}\in [-1,1]\). Taking \(\mu _{1}=1,\,\lambda _{2}=2\lambda _{1},\,\lambda _{3}=|u_{2}|\) and \(\lambda _{1}\rightarrow +\infty \), we get

\(\square \)

A special case of Theorem 4.1 where \(l=1\) shows that there is no duality gap between a difference of convex program and its associated dual program whenever \(f,\,h\) and \(g_i\)’s are convex polynomials.

Corollary 4.1

(Zero duality gaps for DC polynomial programs) Let \(h,\,f,\ g_{i}:{\mathbb {R}}^{n}\rightarrow R,\,i=1,2,\ldots ,m\) be convex polynomials. Let \(K:=\{x\in {\mathbb {R}}^{n}:g_{i}(x)\le 0,\ i=1,\ldots ,m\}\) be non-empty. Then,

Proof

Applying Theorem 4.1 with \(l=1\) and \(f_{1}=f\), we see that \(\Delta =\{1\}\) and then the conclusion easily follows. \(\square \)

Under the additional assumption that \(N_{K}(x)=\bigcup _{\lambda _{i}\ge 0,\lambda _{i}g_{i}(x)=0}\{\sum _{i=1}^{m}\lambda _{i}\nabla g_{i}(x)\}\), we obtain the following strong duality theorem for \(({\mathrm{P}})\), where \( N_{K}(x)\) is the normal cone in the sense that \(N_{K}(x)=\{w\in {\mathbb {R}} ^{n}: \langle w,y-x \rangle \le 0,\ \forall y\in K\}\).

Theorem 4.2

(Strong Duality) Let \(f_{j}:{\mathbb {R}} ^{n}\rightarrow {\mathbb {R}},\ j=1,\ldots ,l,\,g_{i}:{\mathbb {R}}^{n}\rightarrow {\mathbb {R}},\,i=1,\ldots ,m\) be convex polynomials and \(h:{\mathbb {R}} ^{n}\rightarrow {\mathbb {R}}\) be a convex function. Let \(K=\{x\in {\mathbb {R}} ^{n}:g_{i}(x)\le 0,\ i=1,\ldots ,m\}\ne \emptyset \). Assume that \(\inf ({ \mathrm{P}})\) is finite, and \(N_{K}(x)=\bigcup _{\lambda _{i}\ge 0,\lambda _{i}g_{i}(x)=0}\{\sum _{i=1}^{m}\lambda _{i}\nabla g_{i}(x)\}\), for each \( x\in K\). Then

Proof

Let \(f:=\max _{j=1,\ldots ,l}f_{j}\). Using the basic properties of conjugate function of \(h\), we have

Let \(u\in \mathrm{dom} h^{*}\). As \(\inf ({\mathrm{P}})\) is finite, \(\inf _{x\in K}\left\{ f(x)+h^{*}(u)-\langle u,x\rangle \right\} \) is also finite; thus,

is finite, where \(\Delta =\left\{ \delta \in {\mathbb {R}}_{+}^{l}:\sum _{j=1}^{l}\delta _{j}=1\right\} \). By the convex-concave minimax Theorem,

is finite, and so, there exists \(\overline{\mu }\in \Delta \) such that \(\inf _{x\in K}\left\{ \sum _{j=1}^l\overline{\mu }_jf_j(x)+h^{*}(u)-\langle u,x\rangle \right\} \) is finite. Now, by Lemma 2.1, \(\sum _{j=1}^l\overline{\mu }_jf_j(x)+h^{*}(u)-\langle u,x\rangle \) attains its infimum. Then, there exists \(\overline{x} _{u}\in K\) such that \(\sum _{j=1}^l\overline{\mu }_jf_j(\overline{x}_{u})+h^{*}(u)-\langle u,\overline{x} _{u}\rangle =\inf _{x\in K}\left\{ \sum _{j=1}^l\overline{\mu }_jf_j(x)\right. \left. +h^{*}(u)-\langle u,x\rangle \right\} \). The standard necessary optimality condition at \( \overline{x}_{u}\) gives us that \(0\in \sum _{j=1}^{l}\overline{\mu }_{j}\nabla f_{j}(\overline{x}_{u})-u+N_{K}(\overline{x}_{u})\). Employing the assumed normal cone constraint qualification, we can find \(\overline{\lambda } _{i}\ge 0,i=1,2,\ldots ,m\) such that

Since \(\sum _{j=1}^{l}\overline{\mu }_{j}f_{j}(\cdot )-\langle u,\cdot \rangle +\sum _{i=1}^{m}\overline{\lambda }_{i}g_{i}(\cdot )\) is convex, for all \(x\in {\mathbb {R}}^{n}\)

Then,

On the other hand, applying Theorem 4.1 by replacing \(f_{j}(\cdot )\) by \(\widetilde{f_{j}}(\cdot ):=f_{j}(\cdot )+h^{*}(u)-\langle u,\cdot \rangle \) and \( h(\cdot )\) by \(\widetilde{h}(\cdot )=0\) gives us

Note that the equality in (8) follows by Fenchel’s duality. As \(f( \overline{x}_{u})=\sum _{j=1}^{l}\overline{\mu }_{j}f_{j}(\overline{x}_{u})\) and \(\inf _{x\in K}\max _{j=1,\ldots ,l}\widetilde{f_{j}}(x)=f(\overline{x} _{u})+h^{*}(u)-\langle u,\overline{x}_{u}\rangle \), it follows from (7) and (8) that

So, we have

Hence, by the definition of conjugate function and (1)

As this is true for each \(u\in \mathrm{dom}h^{*}\),

\(\square \)

In the special case of \((P)\) where \(l=1\), Theorem 4.2 yields that

whenever the normal cone constraint qualification, \(N_{K}(x)=\bigcup _{ \lambda _{i}\ge 0,\lambda _{i}g_{i}(x)=0}\{\sum _{i=1}^{m}\lambda _{i}\nabla g_{i}(x)\}\), holds.

Note that the normal cone constraint qualification of Theorem 4.2 is guaranteed by the epigraph constraint qualification that the convex cone, \(\bigcup _{\lambda \in {\mathbb {R}}_{+}^{m}}\mathrm{epi} (\sum _{i=1}^{m}\lambda _{i}g_{i})^{*}\), is closed. The epigraph constraint qualification has extensively been used to study convex as well as difference of convex optimization problems. For details, see [5, 6, 8, 11, 12, 16]. Note also that if the so-called Slater condition is satisfied in the sense that there exists \(x_{0}\in {\mathbb {R}}^{n}\) such that \(g_{i}(x_{0})<0\) for all \(i=1,\ldots ,m\), then both the epigraph constraint qualification and the normal cone constraint qualification hold. For details, see [12] and [10, Section 2.2].

We obtain a global optimality characterization directly from Theorem 4.2 under the normal cone constraint qualification.

Corollary 4.2

(Global optimality characterization) Let \( f_{j}:{\mathbb {R}}^{n}\rightarrow {\mathbb {R}},\ j=1,\ldots ,l,\,g_{i}:{\mathbb {R}} ^{n}\rightarrow {\mathbb {R}},\,i=1,\ldots ,m\) be convex polynomials and \(h: {\mathbb {R}}^{n}\rightarrow {\mathbb {R}}\) be a convex function. Let \(K=\{x\in {\mathbb {R}}^{n}:g_{i}(x)\le 0,\ i=1,\ldots ,m\}\ne \emptyset \). Assume that \( \inf ({\mathrm{P}})\) is finite, and \(N_{K}(x)=\bigcup _{\lambda _{i}\ge 0,\lambda _{i}g_{i}(x)=0}\{\sum _{i=1}^{m}\lambda _{i}\nabla g_{i}(x)\}\) for each \(x\in K\). A feasible point \(\overline{x}\in K\) is a solution of \( {\mathrm{(P)}}\) if and only if, for any \(u\in \mathrm{dom}h^{*}\), there exist \(\lambda \in {\mathbb {R}}_{+}^{m}\) and \(\mu \in \Delta \) such that

Proof

Note first from Theorem 4.2 that

Using (1) and the definition of a conjugate function, we get that

[\(\Rightarrow \)] Assume that \(\overline{x}\in K\) is a solution of \(\mathrm{(P)}\). As \(\max _{j=1,\ldots ,l}f_{j}(\overline{x})-h(\overline{x})=\inf \mathrm{(P)}\), from (11), we have for any \(u\in \mathrm{dom}h^{*}\), there exist \(\lambda \in {\mathbb {R}}_{+}^{m}\) and \(\mu \in \Delta \), such that for all \(x\in {\mathbb {R}}^{n}\),

and so \(h^{*}(u)+\sum _{j=1}^{l}\mu _{j}f_{j}(\cdot )+\sum _{i=1}^{m}\lambda _{i}g_{i}(\cdot )-\langle u,\cdot \rangle -(\max _{j=1,\ldots ,l}f_{j}(\overline{x})-h(\overline{x}))\ge 0\). Hence, (10) holds.

[\(\Leftarrow \)] Conversely, let \(\overline{x}\in K\). Assume that for any \(u\in \mathrm{dom}h^{*}\), there exist \(\lambda \in {\mathbb {R}} _{+}^{m}\) and \(\mu \in \Delta \) such that (10) holds. Then, for any \( u\in \mathrm{dom}h^{*}\),

By (11),

which shows that \(\overline{x}\) is a solution of \(\mathrm{(P)}\). \(\square \)

We now give an example verifying our global optimality characterization.

Example 4.3

Consider the problem

Let \(f_{1}(x_{1},x_{2})=x_{1}^{8}+x_{1}x_{2}+x_{1}^{2}+x_{2}^{2},\, f_{2}(x_{1},x_{2})=x_{1}^{2}-3,\,f(x_1, x_2)=\max _{j=1,2} f_j (x_1, x_2 ),\,h(x_{1},x_{2})=x_{1}^{2}+x_{2}^{2},\, g_{1}(x_{1},x_{2})=x_{1}^{2}+x_{2}^{2}+4x_{1}+3\) and \( g_{2}(x_{1},x_{2})=x_{2}\). Then \(f_{1},\ f_{2},\ h,\ g_{1},\ g_{2}\) are convex polynomials. Moreover, \(h^{*}(u_{1},u_{2})=\frac{1}{4} (u_{1}^{2}+u_{2}^{2})\). We can see that \(\overline{x}=(-1,0)\) is a solution of \((\mathrm{EP}_{3})\) and \(\inf \mathrm{(EP}_{3}\mathrm{)}=1\).

Let \(\overline{\lambda }=(4,1),\,\overline{\mu }_{1}=1\). Then, for any \( u\in {\mathbb {R}}^{2}\) and \(x\in {\mathbb {R}}^{2}\),

So, for each \(u\in \mathrm{dom}h^{*}\)

It verifies the optimality condition of Corollary 4.2. \(\square \)

4.2 Minimax fractional programs

In this subsection, we derive global optimality characterizations for fractional programs, involving convex polynomials.

Corollary 4.3

Let \(f_{j},\,\ j=1,\ldots ,l\) and \(g_{i}:{\mathbb {R}}^{n}\rightarrow {\mathbb {R}},\ i=1,2,\ldots ,m\) be convex polynomials. Assume that \(f_{j}(x)>0\) over the feasible set \(K:=\left\{ x\in {\mathbb {R}}^{n}:g_{i}(x)\le 0,\ \ i=1,\ldots ,m\right\} ,\,K\ne \emptyset \) and the Slater condition holds. Let \( \overline{x}\in K\) and \(\overline{t}=\max _{j=1,\ldots ,l}\frac{f_{j}(\overline{x} )}{||\overline{x}||+1}\). Then, \(\overline{x}\) is a solution of

if and only if, for any \(u\in {\mathbb {R}}^{n},\,||\frac{u}{\overline{t}} ||_{*}\le 1\), there exist \(\lambda \in {\mathbb {R}}_{+}^{m}\), and \(\mu \in \Delta \) such that

Proof

As \(\overline{t}=\max _{j=1,\ldots ,l}\frac{f_{j}(\overline{x})}{ ||\overline{x}||+1},\ \overline{t}=\inf (\mathrm{FP}_{1})\in {\mathbb {R}}_{+}\), if and only if,

Let \(h(x)=||x||+1\). Then,

By Corollary 4.2, \(\overline{x}\in K\) is a solution of (12), if and only if, for any \(u\in \mathrm{dom}(\overline{t}h)^{*}\), there exist \(\lambda \in {\mathbb {R}}_{+}^{m}\) and \(\mu \in \Delta \) such that

Thus, \(\overline{x}\in K\) is a solution of \((\mathrm{FP}_{1})\) if and only if, for any \(u\in \mathrm{dom}(\overline{t}h)^{*}\), there exist \(\lambda \in {\mathbb {R}}_{+}^{m}\) and \(\mu \in \Delta \) such that \(\sum _{j=1}^{l}\mu _{j}f_{j}(\cdot )+\sum \nolimits _{i=1}^{m}\lambda _{i}g_{i}(\cdot )- \overline{t}-\langle u,\cdot \rangle \ge 0\). \(\square \)

Now, consider the minimax fractional quadratic programs of the form

where \(A_{1},\ldots ,A_{l},B_{1},\ldots ,B_{m}\) are symmetric and positive semidefinite \(n\times n\) matrices, \(a_{1},\ldots ,a_{l},\,b_{1},\ldots ,b_{m}\in {\mathbb {R}}^{n}\) and \(\alpha _{1},\ldots ,\alpha _{l},\,\beta _{1},\ldots ,\beta _{m}\in {\mathbb {R}}\). The matrix \(C\) is \(n\times n\) symmetric and positive definite, \(c\in {\mathbb {R}}^{n}\) and \(\delta \in {\mathbb {R}}\). As before, we assume that \(K:=\{x\in {\mathbb {R}}^{n}:\langle x,B_{i}x\rangle +\langle b_{i},x\rangle +\beta _{i}\le 0,\ i=1,\ldots ,m\}\ne \emptyset \).

Corollary 4.4

For problem \((\mathrm{FP}_{2})\), assume that \(f_{j}(x)=\langle x,A_{j}x\rangle +\langle a_{j},x\rangle +\alpha _{j}>0,\,h(x)=\langle x,Cx\rangle +\langle c,x\rangle +\delta >0\ \)over the feasible set \(K\) and the Slater condition holds. Assume that \(C\) is positive definite. Let \( \overline{x}\in K\) and \(\overline{t}=\max _{j=1,\ldots ,l}\left\{ \frac{\langle \overline{x},A_{j}\overline{x}\rangle +\langle a_{j},\overline{x}\rangle +\alpha _{j}}{\langle \overline{x},C\overline{x}\rangle +\langle c,\overline{ x}\rangle +\delta }\right\} \). Then, \(\overline{x}\) is a solution of \(( \mathrm{FP}_{2})\), if and only if, \(\forall u\in {\mathbb {R}}^{n},\,\exists \lambda \in {\mathbb {R}}_{+}^{m},\ \mu \in \Delta \) such that

Proof

Note that, \(\inf (\mathrm{FP}_{2})=\overline{t}\in {\mathbb {R}}\), if and only if, \(\inf (\mathcal {P}_{\overline{t}})=0\), where

Let \(\widehat{f}_{j}(x)=\left[ \langle x,A_{j}x\rangle +\langle a_{j},x\rangle +\alpha _{j}-\overline{t}\left( \langle c,x\rangle +\delta \right) \right] ,\,j=1,\ldots ,l\) and \(\widehat{h}(x)=\overline{t}\langle x,Cx\rangle \). We have \(\widehat{h}^{*}(u)=\frac{1}{4\overline{t}} \langle u,C^{-1}u\rangle \).

Then, \(\overline{x}\) is a solution of \((\mathcal {P}_{\overline{t}})\), if and only if, by Corollary 4.2, for any\(~u\in {\mathbb {R}}^{n}\), there exist\(~\lambda \in {\mathbb {R}}_{+}^{m}\) and \(\mu \in \Delta \) such that

which is equivalent to

\(\square \)

5 Robust solutions of uncertain quadratic problems

In this section, we present characterizations of robust solutions of classes of quadratic optimization problems under data uncertainty.

We begin with a quadratic problem where the data of both the constraints and the objective functions are uncertain. Note that for a \(\left( n\times n\right) \) matrix \(\Omega ,\ \,||\Omega ||_{\mathrm{spec}}\) is the spectral norm of \(\Omega \) and is defined by \(||\Omega ||_{\mathrm{spec}}=\sqrt{ \lambda _{\max }(\Omega ^{T}\Omega )}\). Consider the quadratic problem

where the data \((B_{i},b_{i},\beta _{i})\in S^{n}\times {\mathbb {R}}^{n}\times {\mathbb {R}},\,i=1,\ldots ,m\) are uncertain and belong to the spectral norm uncertainty set

\(\delta _{i}>0\), and \((A,a,\alpha )\in S^{n}\times {\mathbb {R}}^{n}\times {\mathbb {R}}\) is uncertain and it belongs to the uncertainty set

\(\omega =\sum _{j=1}^{p}\tau _{j}v^{j},\ \tau \in \Delta _{p}\) for some \(l,\ p\in \mathbb {N},\,v^{1},\ldots ,v^{p}\in {\mathbb {R}}_{+}^{l}\) and \((A_k, a_k, \alpha _k ) \in S^{n}\times {\mathbb {R}}^{n}\times {\mathbb {R}},\,k=1,\ldots ,l\).

The robust counterpart of \((\mathrm{QP})\), that finds a worst-case solution of \(\mathrm{(QP)}\), is

Note that the constraints are enforced for all \((B_{i},b_{i},\beta _{i})\) in the uncertainty set \(\mathcal {W}_{i},\ i=1,\ldots ,m\). Following robust optimization [3, 14], an optimal solution of the robust counterpart of an uncertain problem is a robust solution of the uncertain problem.

Let \(K:=\{x\in {\mathbb {R}}^{n}:\langle x,B_{i}x\rangle +2\langle b_{i},x\rangle \ +\beta _{i}\le 0,\ \forall (B_{i},b_{i},\beta _{i})\in \mathcal {W}_{i},\ i=1,\ldots ,m\}\).

Theorem 5.1

(Characterization of Robust Solutions) For problem \((\mathrm{QP })\), assume that for \(i=1,\ldots ,m,\,\overline{B}_{i}\) is positive semidefinite and the Slater condition holds. Let \(\widehat{A} _{j}=A_{0}+\sum _{k=1}^{l}v_{k}^{j}A_{k},\,\widehat{a}_{j}=a_{0}+ \sum _{k=1}^{l}v_{k}^{j}a_{k}\) and \(\widehat{\alpha }_{j}=\alpha _{0}+\sum _{k=1}^{l}v_{k}^{j}\alpha _{k}\) for \(j=1,\ldots ,p\).

Let \(\rho =\max _{j=1,\ldots ,p}\{-\gamma _{\min }(\widehat{A}_{j})\}\). Assume that \(\gamma _{\min }(\widehat{A}_{j})<0\) for some \(j\in \{1,\ldots \), \(p\}\). Then \(x^{*}\in K\) is a robust global minimizer of \(\mathrm{(QP),}\) if and only if, for any \(u\in {\mathbb {R}}^{n}\), there exist \(\lambda \in {\mathbb {R}}_{+}^{m}\), and \(\mu \in \Delta \) such that

where \(\overline{t}=\max _{(A,a,\alpha )\in \mathcal {V}}\langle x^{*},Ax^{*}\rangle +\langle a,x^{*}\rangle \ +\alpha .\ \)

Proof

We first note that for each \(x\in {\mathbb {R}}^{n}\)

Since \(\widehat{A}_{j}=A_{0}+\sum _{k=1}^{l}v_{k}^{j}A_{k},\ \widehat{a} _{j}=a_{0}+\sum _{k=1}^{l}v_{k}^{j}a_{k}\) and \(\widehat{\alpha }_{j}=\alpha _{0}+\sum _{k=1}^{l}v_{k}^{j}\alpha _{k}\), we have

Note also that \(K\subseteq \{x\in {\mathbb {R}}^{n}:\langle x,\left( \overline{B }_{i}+\delta _{i}I_{n}\right) x\rangle +2\langle \overline{b}_{i},x\rangle \ +\overline{\beta }_{i}+\delta _{i}\le 0,\ i=1,\ldots ,m\}\) because \(\left( \overline{B}_{i}+\delta _{i}I_{n},\overline{b}_{i},\overline{\beta } _{i}+\delta _{i}\right) \in \mathcal {W}_{i},\ i=1,\ldots ,m\).

On the other hand, it is easy to check that, for each \((B_{i},b_{i},\beta _{i})\in \mathcal {W}_{i},\ \)all the eigenvalues of the matrix \(\left( \begin{array}{l@{\quad }l} \overline{B}_{i}+\delta _{i}I_{n} &{} \overline{b}_{i} \\ \overline{b}_{i}^{T} &{} \overline{\beta }_{i}+\delta _{i} \end{array} \right) -\left( \begin{array}{l@{\quad }l} B_{i} &{} b_{i} \\ b_{i}^{T} &{} \beta _{i} \end{array} \right) \) are non-negative, and so, \(\left( \begin{array}{l@{\quad }l} \overline{B}_{i}+\delta _{i}I_{n} &{} \overline{b}_{i} \\ \overline{b}_{i}^{T} &{} \overline{\beta }_{i}+\delta _{i} \end{array} \right) -\left( \begin{array}{l@{\quad }l} B_{i} &{} b_{i} \\ b_{i}^{T} &{} \beta _{i} \end{array} \right) \) is a positive semidefinite matrix. Hence,

This shows that \(\{x\in {\mathbb {R}}^{n}:\langle x,\left( \overline{B} _{i}+\delta _{i}I_{n}\right) x\rangle +2\langle \overline{b}_{i},x\rangle \ + \overline{\beta }_{i}+\delta _{i}\le 0,\ i=1,\ldots ,m\}\subseteq K\). Thus, \( K=\{x\in {\mathbb {R}}^{n}:\langle x,\left( \overline{B}_{i}+\delta _{i}I_{n}\right) x\rangle +2\langle \overline{b}_{i},x\rangle \ +\overline{ \beta }_{i}+\delta _{i}\le 0,\ i=1,\ldots ,m\}\).

Using (14) and the fact that \(K=\{x\in {\mathbb {R}}^{n}:\langle x,\left( \overline{B}_{i}+\delta _{i}I_{n}\right) x\rangle +2\langle \overline{b}_{i},x\rangle \ +\overline{\beta }_{i}+\delta _{i}\le 0,\ i=1,\ldots ,m\}\), the problem \((\mathrm{RQP})\) can be written as

The quadratic function \(\langle x,\widehat{A}_{j}x\rangle +\langle \widehat{a }_{j},x\rangle \ +\widehat{\alpha }_{j}\) can be written as \(\langle x,\left( \widehat{A}_{j}+\rho I_{n}\right) x\rangle +\langle \widehat{a}_{j},x\rangle \ +\widehat{\alpha }_{j}\ -\rho ||x||^{2}\) where \(\langle x,\left( \widehat{A }_{j}+\rho I_{n}\right) x\rangle +\langle \widehat{a}_{j},x\rangle \ + \widehat{\alpha }_{j}\) is convex for any \(j=1,\ldots ,p\) and \(\rho =\max _{j=1,\ldots ,p}\{-\gamma _{\min }(\widehat{A}_{j})\}\). By our assumption, \( \rho \,>0\). Now, the problem \((\mathrm{RQP})\) becomes

By Corollary 4.2, \(x^{*}\in K\) is a solution of (15) if and only if for any \(u\in {\mathbb {R}}^{n}\), there exist \( \lambda \in {\mathbb {R}}_{+},\mu \in \Delta \) such that

as required.

Conversely, assume for any \(u\in {\mathbb {R}}^{n}\), there exist \(\lambda \in {\mathbb {R}}_{+},\mu \in \Delta \) relation (13) holds. The linear matrix inequality (13) can be equivalent to relation (16). Hence, \(x^{*}\) is a solution of problem in (15) and then, \(x^{*}\) is a robust global minimizer of \((\mathrm{QP})\). \(\square \)

Let us consider the uncertain trust region problem as a special case of problem \((\mathrm{QP})\)

where the data \(\left( A,a,\alpha \right) \) is uncertain and it belongs to the uncertainty set \(\mathcal {V}\), which is given by

\(l,\ p\in \mathbb {N}\) and \(v^{1},\ldots ,v^{p}\in {\mathbb {R}}_{+}^{l}\). We assume that \(s>0\) and \(x_{0}\in {\mathbb {R}}^{n}\).

The robust counterpart, that finds a worst-case solution of \(\mathrm{(TP)}\), is

Corollary 5.1

(Uncertain Trust-Region Problem) For problem \((\mathrm{TP})\) with data uncertainty \(\mathcal {V}\) as above, let \(\widehat{A}_{j}=A_{0}+ \sum _{k=1}^{l}v_{k}^{j}A_{k},\,\widehat{a}_{j}=a_{0}+ \sum _{k=1}^{l}v_{k}^{j}a_{k}\) and let \(\widehat{\alpha }_{j}=\alpha _{0}+\sum _{k=1}^{l}v_{k}^{j}\alpha _{k}\) for \(j=1,\ldots ,p\). Let \(\rho =\max _{j=1,\ldots ,p}\{-\gamma _{\min }(\widehat{A}_{j})\}\).

-

(i)

If \(\rho >0\), then a feasible point \(x^{*}\) of \((\mathrm{TP})\) is a robust global minimizer of \((\mathrm{TP})\) if and only if, for any \(u\in {\mathbb {R}}^{n}\), there exist \(\lambda \in {\mathbb {R}}_{+}\) and \(\mu \in \Delta _{p}\) such that

$$\begin{aligned} \left( \begin{array}{l@{\quad }l} {2}\left[ \sum \nolimits _{j=1}^{p}\mu _{j}\widehat{A}_{j}+\left( \rho {+\lambda }\right) {I}_{n}\right] &{} \left[ \sum \nolimits _{j=1}^{p}\mu _{j}\widehat{a}_{j} {-u-2\lambda x}_{0}\right] \\ \left[ \sum \nolimits _{j=1}^{p}\mu _{j}\widehat{a}_{j}{-u-2\lambda x}_{0}\right] ^{T} &{} 2\left[ {\lambda }\left( ||x_{0}||^{2}-s\right) +\frac{||u||^{2} }{4\rho }{-}\overline{t}+\sum \nolimits _{j=1}^{p}\mu _{j}\widehat{\alpha }_{j}\right] \end{array} \right) \succeq 0, \end{aligned}$$where \(\overline{t}=\max _{(A,a,\alpha )\in \mathcal {V}}\langle x^{*},Ax^{*}\rangle +\langle a,x^{*}\rangle \ +\alpha .\ \)

-

(ii)

If \(\rho \le 0\), then a feasible point \(x^{*}\) of \(( \mathrm{TP})\) is a robust global minimizer of \((\mathrm{TP})\) if and only if, there exist \(\lambda \in {\mathbb {R}}_{+}\) and \(\mu \in \Delta _{p}\) such that

$$\begin{aligned} \left( \begin{array}{l@{\quad }l} {2}\left[ \sum \nolimits _{j=1}^{p}\mu _{j}\widehat{A}_{j}+{\lambda I}_{n}\right] &{} \left[ \sum \nolimits _{j=1}^{p}\mu _{j}\widehat{a}_{j}{-2\lambda x}_{0}\right] \\ \left[ \sum \nolimits _{j=1}^{p}\mu _{j}\widehat{a}_{j}{-2\lambda x}_{0}\right] ^{T} &{} 2\left[ {\lambda }\left( ||x_{0}||^{2}-s\right) +\sum \nolimits _{j=1}^{p}\mu _{j} \widehat{\alpha }_{j}{-}\overline{t}\right] \end{array} \right) \succeq 0, \end{aligned}$$where \(\overline{t}=\max _{(A,a,\alpha )\in \mathcal {V}}\langle x^{*},Ax^{*}\rangle +\langle a,x^{*}\rangle \ +\alpha .\)

Proof

Note as before that for each \(x\in {\mathbb {R}}^{n}\)

Then, the problem \((\mathrm{RP})\) becomes

and the Slater condition holds.

-

(i)

Assume that \(\rho >0\). Let

$$\begin{aligned} \mathcal {W=}\left\{ (B,b,\beta )\in S^{n}\times {\mathbb {R}}^{n}\times {\mathbb {R}}:\left\| \left( \begin{array}{l@{\quad }l} B &{} b \\ b^{T} &{} \beta \end{array} \right) -\left( \begin{array}{l@{\quad }l} I &{} - x_{0} \\ - x_{0}^{T} &{} ||x_{0}||^{2}-s^{2} \end{array} \right) \right\| _{\mathrm{spec}}\le 0\right\} \end{aligned}$$The constraint \(||x-x_{0}||\le s\) is equivalent to the quadratic constraint \(\langle x,B_{1}x\rangle +2\langle b_{1},x\rangle \ +\beta _{1}\le 0\), where \(B_{1}=I,\,b_{1}=-x_{0}\) and \(\beta _{1}=||x_{0}||^{2}-s^{2}\). Applying the previous theorem with \(m=1\), we obtain the required condition characterizing the robust global minimizer.

-

(ii)

If \(\rho \le 0\), then \(\gamma _{\min }(A_{j})\ge 0\) for all \(j=1,\ldots ,p\). Let \(f_{j}(x)=\langle x,\widehat{A}_{j}x\rangle +\langle \widehat{a}_{j},x\rangle \ +\widehat{\alpha }_{j}\), and \(h(x)=0\). Then \( f_{j} \) is convex. By Corollary 4.2, the conclusion follows\(.\square \)

A special case of \((\mathrm{TP})\) occurs when \(\left( A,a,\alpha \right) \in \mathcal {V}_{1}:=\mathrm{co}\{\left( A_{j},a_{j},\alpha _{j}\right) \in S^{n}\times {\mathbb {R}}^{n}\times {\mathbb {R}},\ j=1,\ldots ,p\}\), where co denotes the convex hull. The robust counterpart of \((\mathrm{TP})\) in this case is

Corollary 5.2

(Polytope uncertainty) For the problem \((\mathrm{TP})\) with the data uncertainty \(\mathcal {V}_{1}\), let \(\overline{t}=\,\inf \mathrm{(TP)}\) and let \(\rho =\max \{-\gamma _{\min }(A_{j}):j=1,\ldots ,p\}\).

-

(i)

If \(\rho >0\), then a feasible point \(x^{*}\) of \(\mathrm{(TP)}\) is a robust global minimizer of \(\mathrm{(TP)}\), if and only if, for any \(u\in {\mathbb {R}}^{n}\), there exist \(\lambda \in {\mathbb {R}}_{+}\) and \(\mu \in \Delta _{p}\) such that

$$\begin{aligned} \left( \begin{array}{l@{\quad }l} {2}\left[ \sum \nolimits _{j=1}^{p}\mu _{j}A_{j}+\left( \rho { +\lambda } \right) {I}_{n}\right] &{} \left[ \sum \nolimits _{j=1}^{p}\mu _{j}a_{j}{ -u-2\lambda x}_{0}\right] \\ \left[ \sum \nolimits _{j=1}^{p}\mu _{j}a_{j}{-u-2\lambda x}_{0}\right] ^{T} &{} 2 \left[ {\lambda }\left( ||x_{0}||^{2}-s\right) {+}\frac{ ||u||^{2}}{4\rho }+\sum \nolimits _{j=1}^{p}\mu _{j}\alpha _{j}{-}\overline{ {t}}\right] \end{array} \right) \succeq 0, \end{aligned}$$where \(\overline{t}=\max _{(A,a,\alpha )\in \mathcal {V}_{1}}\langle x^{*},Ax^{*}\rangle +\langle a,x^{*}\rangle \ +\alpha \).

-

(ii)

If \(\rho \le 0\), then a feasible point \(x^{*}\) of \( \mathrm{(TP)}\) is a robust global minimizer of \(\mathrm{(TP)}\), if and only if, there exist \(\lambda \in {\mathbb {R}}_{+}\) and \(\mu \in \Delta _{p}\) such that

$$\begin{aligned} \left( \begin{array}{l@{\quad }l} {2}\left[ \sum \nolimits _{j=1}^{p}\mu _{j}A_{j}+{\lambda I}_{n}\right] &{} \left[ \sum \nolimits _{j=1}^{p}\mu _{j}a_{j}{-2\lambda x}_{0}\right] \\ \left[ \sum \nolimits _{j=1}^{p}\mu _{j}a_{j}{-2\lambda x}_{0}\right] ^{T} &{} 2 \left[ {\lambda }\left( ||x_{0}||^{2}-s\right) {+} \sum \nolimits _{j=1}^{p}\mu _{j}\alpha _{j}{ -}\overline{{t}}\right] \end{array} \right) \end{aligned}$$where \(\overline{t}=\max _{(A,a,\alpha )\in \mathcal {V}_{1}}\langle x^{*},Ax^{*}\rangle +\langle a,x^{*}\rangle \ +\alpha .\ \)

Proof

Note that for each \(x\in {\mathbb {R}}^{n}\), we have

The conclusion follows from the same line of arguments as in the proof of the previous Corollary. \(\square \)

We give an example illustrating Corollary 5.2(i).

Example 5.1

Consider the uncertain problem

where \(a\) is uncertain and it belongs to the uncertainty set \(\mathcal {V=} [-1,1]\). Its robust counterpart is given by

Then the problem \(\mathrm{(EP}_{4}\mathrm{)}\) can be written as \(\inf _{x\in K}\max \{-x^{2}+x,\ -x^{2}-x\}=\inf _{x\in K}\max \{x,\ -x\}-x^{2}\). We can check that \(\inf \mathrm{(EP}_{4}\mathrm{)}=0\).

Let \(h(x)=x^{2}\), we have \(h^{*}(u)=\frac{u^{2}}{4}\) and let

Then \(d\) can be written as

Hence, for any \(u\in {\mathbb {R}}^{2}\), there exist \(\lambda \in {\mathbb {R}} _{+} \) and \(\mu \in \Delta \) such that \(d(x,u,\lambda ,\mu )\ge 0\), which is equivalent to the linear matrix inequality of Corollary 5.2 (i).

References

An, L.T.H., Tao, P.D.: The DC (difference of convex functions) programming and DCA revisited with DC models of real world non-convex optimization problems. Ann. Oper. Res. 133, 23–46 (2005)

Belousov, E.G., Klatte, D.: A Frank–Wolfe type theorem for convex polynomial programs. Comp. Optim. Appl. 22, 37–48 (2002)

Ben-Tal, A., El Ghaoui, L., Nemirovski, A.: Robust Optimization. Princeton Series in Applied Mathematics. Princeton University Press, Princeton, NJ (2009)

Bot, R., Hodrea, I., Wanka, G.: Some new Farkas-type results for inequality systems with DC functions. J. Glob. Optim. 39, 595608 (2007)

Dinh, N., Nghia, T.T.A., Vallet, G.: Closedness condition and its applications to DC programs with convex constraints. Optimization 59(3–4), 541–560 (2010)

Dinh, N., Vallet, G., Nghia, T.T.A.: Farkas-type results and duality for DC programs with convex constraints. J. Convex Anal. 15, 235–262 (2008)

Dinh, N., Jeyakumar, V.: Farkas’ lemma: three decades of generalizations for mathematical optimization. TOP 22(1), 1–22 (2014)

Fang, D.H., Li, C., Yang, X.Q.: Stable and total Fenchel duality for DC optimization problems in locally convex spaces. SIAM J. Optim. 21(3), 730–760 (2011)

Harada, R., Kuroiwa, D.: Lagrange-type duality in DC programming. J. Math. Anal. Appl. 418, 415–424 (2014)

Hiriart-Urruty, J.-B., Lemaréchal, C.: Convex Analysis and Minimization Algorithms. I. Fundamentals, vol. 305. Springer, Berlin (1993)

Horst, R., Thoai, N.V.: DC programming: overview. J. Optim. Theory Appl. 103, 1–43 (1999)

Jeyakumar, V., Lee, G.M.: Complete characterization of stable Farkas’ lemma and cone-convex programming duality. Math. Program. 114, 335–347 (2008)

Jeyakumar, V., Li, G.: A new class of alternative theorems for SOS-convex inequalities and robust optimization. Appl. Anal. 94(1), 56–74 (2015)

Jeyakumar, V., Li, G.: Robust solutions of quadratic optimization over single quadratic constraint under interval uncertainty. J. Glob. Optim. 55(2), 209–226 (2013)

Jeyakumar, V., Vicente-Pérez, J.: Dual semidefinite programs without duality gaps for a class of convex minimax programs. J. Optim. Theory Appl. 162(3), 735–753 (2014)

Martinez-Legaz, J.E., Volle, M.: Duality in DC programming: the case of several DC constraints. J. Math. Anal. Appl. 237, 657–671 (1999)

Penot, J.-P.: Calculus Without Derivatives, Graduate Texts in Mathematics, vol. 266. Springer, New York (2013)

Polik, I., Terlaky, T.: A survey of the S-lemma. SIAM Rev. 49, 371–418 (2007)

Rockafellar, R.T.: Convex Analysis. Princeton University Press, Princeton, NJ (1970)

Toland, J.F.: Duality in nonconvex optimization. J. Math. Anal. Appl. 66, 399–415 (1978)

Zalinescu, C.: Convex Analysis in General Vector Spaces. World Scientific, Singapore (2002)

Author information

Authors and Affiliations

Corresponding author

Additional information

The authors are grateful to the referees for their comments and suggestions which have contributed to the final preparation of the paper. Research of the first author was partially supported by a grant from the Australian Research Council, whereas the second author was supported by Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (NRF-2013R1A1A2005378).

Rights and permissions

About this article

Cite this article

Jeyakumar, V., Lee, G.M. & Linh, N.T.H. Generalized Farkas’ lemma and gap-free duality for minimax DC optimization with polynomials and robust quadratic optimization. J Glob Optim 64, 679–702 (2016). https://doi.org/10.1007/s10898-015-0277-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10898-015-0277-4