Abstract

Eliciting and responding to student thinking are high-leverage practices that have powerful implications for student learning. However, they are difficult to enact effectively, particularly for novices, and more research is needed to understand how teacher education can support teachers in developing these skills. This study examined ways prospective elementary teachers (PSTs) responded to unanticipated incorrect student solutions to high-demand problem-solving tasks and how their responses changed over a 6-week field experience embedded in a practice-based mathematics methods course. Data were collected in 6 weekly cycles of planning (written plans), enactment (video of problem-solving sessions with students), and reflection (written reflections on video). Problem-solving task implementations were analyzed using cognitive demand and math-talk frameworks. Of the three collaborating PST groups, two groups improved at responding to unanticipated incorrect solutions, but these two groups also developed a tendency to shut down anticipated solutions. The third group showed no patterns in their responses to unanticipated incorrect solutions, but did maintain the cognitive demand when responding to anticipated solutions. I present a case of one group of collaborating PSTs who made improvements in responding to unanticipated incorrect solutions in terms of the pedagogical moves they employed, cognitive demand associated with their responses, and how their responses changed over time. Implications for teacher education are discussed.

One of the central goals of mathematics instruction is for students to develop a conceptual understanding of mathematics and of the norms for constructing, defending, and questioning mathematical arguments [Blanton et al. 2001; National Council of Teachers of Mathematics (NCTM) 1989, 1991, 2000, 2006; National Research Council (NRC) 2001; Peressini and Knuth 1998]. The most recent mathematics curriculum standards call for students to “construct viable arguments and critique the reasoning of others” [National Governors’ Association and Council of Chief State School Officers (NGA and CCSSO) 2010, p. 6]. Engaging students in representing, articulating, analyzing, evaluating, and justifying their and others’ thinking about mathematical ideas places heavy demands on teachers (Ball 1993, 1997; Baxter and Williams 1996; Brendefur and Frykholm 2005; Brodie 2010; Nicol 1999; Sherin 2002b) and requires two fundamental prerequisite skills: eliciting student thinking and leveraging that thinking to address important mathematical concepts.

This article presents a portion of the results of a larger study that focused on developing prospective elementary teachers’ (PSTs) ability to support pairs of elementary children in reasoning and making sense of mathematics by developing, explaining, and justifying conjectures. Specifically, in this study, I examined how PSTs’ pedagogical moves to elicit and respond to children’s thinking influenced cognitive demand and how planning influenced their task implementations during a 6-week field experience. Several layers of planning activities built into the study’s design were intended to prepare the PSTs for children’s typical solutions and approaches to the tasks. I believed that being able to anticipate children’s thinking would better enable PSTs to respond to that thinking in ways that did not lower the cognitive demand of the task (Smith and Stein 2011). Despite the study’s focus on anticipating solutions, the PSTs still encountered thinking that they had not anticipated while planning, we had not discussed in class, or they had not already seen in the field. This article addresses the research question of how PSTs responded to unanticipated incorrect solutions to problem-solving tasks during a field experience focused on implementing high-demand tasks in ways that focused on children’s thinking. I highlight results of one PST group as an example of participants that improved at responding to a particular type of solution: those that were both unanticipated and incorrect. I discuss pedagogical moves PSTs employed in responding to unanticipated incorrect solutions and the levels of cognitive demand associated with their responses.

Background

In reviewing literature, I contrast student–teacher interactions of the typical mathematics classroom with those advocated by standards documents (NCTM 2000, 2014; NGA and CCSSO 2010; NRC 2001) that have been shown to promote students’ conceptual understanding (e.g., Cobb et al. 1992; Kazemi and Stipek 2001; Lampert 1990; Stein et al. 2000; Yackel and Cobb 1996). I then discuss the skills teachers need, questioning and attending to student thinking, the difficulties of enacting these skills, and research in preparing prospective and practicing teachers to elicit and respond to student thinking.

In the most prevalent mode of student–teacher interaction, the teacher initiates with a question (often focused on procedures) that is followed by short student responses which are then quickly evaluated by the teacher for correctness (Mehan 1979). While an initiation–response–evaluation (IRE) model of communication may keep students attentive, it fails to engage them in reasoning or constructing arguments. In what Knuth and Peressini (2001) term dialogic discourse, the speaker and listener generate meaning through shared dialogue, the “zig-zag of discovery” (Lakatos 1976, p. 42). Dialogic discourse is consistent with recommendations of standards documents and differs from the IRE model in several key ways. Teacher questions press for meaning, requiring that students explain and justify their ideas, not just describe a procedure (Kazemi and Stipek 2001). In lieu of praising or correcting answers, teachers explore student solutions by eliciting their thinking with further questioning and set up students to judge the validity of their own solutions (Boaler and Brodie 2004; Crespo 2000).

Questioning to elicit student thinking

Developing effective teacher questioning is key to moving away from an IRE model. Questions need to explore both mathematics content and children’s thinking about mathematics. Teacher questions have the power to enhance children’s arguments that justify their work, generalize their strategies to other problems, and extend their thinking by inviting students to compare their work to that of others (Martino and Maher 1999). Sahin (2007) has found that while a wealth of research on questioning exists, very little has focused on guiding or probing questions that explored students’ thinking. In her study of PSTs’ challenges in working with students, Nicol (1999) employed a “question–listen–respond” model and found the teacher’s response after listening to a student needed to connect to the students’ thinking. In making their thinking public, students wrestle with important mathematical ideas and misconceptions. Teachers then gain insight into student thinking and are able to extend it to new contexts (Chapin et al. 2003, 2013; Pirie and Schwarzenberger 1988). Boaler and Brodie (2004) identified nine types of teacher questions and found that teachers using traditional curricula almost exclusively asked questions that gathered information or led students through a specific procedure, similar to those used in an IRE model. Though teachers in Boaler and Brodie’s (2004) study who used reform curricula also posed information gathering questions, they additionally posed a variety of other types of questions that elicited and explored student thinking.

Using student thinking as a centerpiece of mathematics instructional practice has been shown to provide students access to significant learning opportunities (Carpenter et al. 1999; Franke et al. 2007, 2009; Kazemi and Stipek 2001). Teachers who were engaged in a professional development program focused on children’s mathematical thinking had students who reported a greater understanding of mathematics, and this was borne out by test score gains from teachers’ previously low-achieving students (Carpenter et al. 1989). In describing their construct of professional noticing of children’s mathematical thinking, Jacobs et al. (2010) discuss three interrelated skills: attending to, interpreting, and responding to children’s thinking. In comparing 131 practicing and prospective teachers who represented four levels of experience with children’s thinking, Jacobs et al. (2010) found that those with more teaching experience were more likely to attend to and be able to interpret student thinking, but teaching experience alone did not account for teachers’ abilities to respond to student thinking. Only teachers involved in at least 2 years of professional development focused on children’s thinking provided evidence of being better able to respond to student thinking.

Challenges of attending to student thinking

In being “intellectually honest to both mathematics and the child,” (Ball 1993, p. 377) teachers must maintain the rigor of the mathematics while supporting and attending to student thinking (Sherin 2002a). One of the underlying assumptions of student-centered pedagogy is that the teacher often does not explicitly model procedures. Maintaining the cognitive demand of mathematical tasks (e.g., requiring students develop their own strategies rather than imitate the teacher’s strategy) is essential to ensure that students grapple with challenging mathematics (Stein et al. 2008). Teachers, however, persist in their reliance on a question–answer–evaluate model (Boaler and Brodie 2004). Leading questions reduce a worthwhile task to the execution of procedures prompted by the teacher.

Crespo (2000) noted that, “For prospective teachers the idea of listening to students is not obvious” (p. 156). PSTs in her study focused on the correctness of student work and not the thinking underlying the work; incorrect solutions were corrected and correct solutions were praised, not explored. Teachers also struggled to follow up on student thinking after an initial inquiry about their strategies (Franke et al. 2009). Moyer and Milewicz (2002) found that PSTs relied on a series of short recall questions and leading questions that guided students to the answers, only questioned incorrect responses, and used general questions that did not address the specifics of a child’s work. Nicol (1999) found that prospective teachers experienced tension between asking questions that elicited student thinking and asking questions that led students through the teacher’s way of thinking to a correct answer. Listening to students’ thinking was overwhelmed by listening for what the prospective teachers expected to hear, and responding to student thinking left prospective teachers with a sense of loss of authority.

Teachers also struggle to respond to incorrect or incomplete solutions (Mewborn and Huberty 1999; Nicol 1999). In an IRE model, an incorrect response typically prompts an evaluation from the teacher in the form of a correction. Yet a contradiction between a student solution and a given parameter of a problem can lead to students analyzing and evaluating their own and others’ work, and by turning a student’s attention to incorrect solutions, the teacher provides the opportunity for students to understand why solutions are invalid (Staples and Colonis 2007).

Attending to student thinking is a teaching skill that can be learned (Franke et al. 2009; Jacobs et al. 2010), and extensive work has been undertaken in helping prospective and practicing teachers learn to notice, interpret, and respond to children’s mathematical thinking. Van Es and Sherin (2008, 2010) and Leatham et al. (2015) have studied using video with prospective and practicing teachers to help them learn to notice and interpret children’s mathematical thinking. They found that both groups showed improvement in noticing and interpreting student thinking and that for practicing teachers this increased attention to student thinking was evident in instruction as well. Nicol (1999) found that with concentrated support and reflection over a one-semester methods course, prospective teachers were able to improve in asking questions that attended to student thinking.

Rationale

Eliciting and responding to student thinking is a “high-leverage practice,” one that is essential for novices to know and be able to carry out on their first day of teaching (Ball and Bass 2000) and that has a substantial payoff for student learning (Ball et al. 2009). However, it can be challenging for teachers to attend to student thinking, especially prospective teachers who have less experience with children’s mathematical thinking. Because it is a teaching skill that can be learned (Franke et al. 2009; Jacobs et al. 2010), research needs to examine how teacher education might support prospective teachers in developing these skills. Work has been undertaken in helping prospective and practicing teachers learn to notice, interpret, and respond to children’s mathematical thinking. Van Es and Sherin (2008, 2010) have studied using video with prospective and practicing teachers to help them learn to notice, interpret, and respond to children’s mathematical thinking. They found that both prospective and practicing teachers showed improvement in noticing and interpreting student thinking and that for practicing teachers this increased attention to student thinking was evident in instruction as well. Nicol (1999) found that with concentrated support and reflection over a one-semester methods course, prospective teachers were able to improve in asking questions that attended to student thinking.

These can be considered examples of practice-based teacher education (Ball and Forzani 2009; Feiman-Nemser 2001; Hiebert and Morris 2012; Lampert 2010), where teachers learn in, from, and for practice. Practice-based teacher education offers a means of developing PSTs’ skills in high-leverage practices through “repeated opportunities for novices to practice carrying out the interactive work of teaching” (Ball and Bass 2000, p. 503). Decomposing teaching into specific high-leverage practices that can be “articulated, studied, and rehearsed” (Sleep and Boerst 2012, p. 1039) helps to make practices accessible to novices. I designed my study to help PSTs zoom in on a particular high-leverage practice (eliciting and responding to student thinking) in ways that can be “articulated” by modeling and discussing practices for engaging students in high-demand tasks, and “studied and rehearsed” by enacting the same tasks over multiple weeks and reflecting on that work to improve task implementations. My study took place with pairs of students outside of the typical classroom setting, where the complexities of classroom practice (managing the curricular, social, and disciplinary demands) could be minimized. Because weak content knowledge can limit teacher ability to decipher and interpret student thinking (Sherin 2002b; Yackel 2002), PSTs in this study were chosen specifically for their strong content knowledge.

Situated within a practice-based mathematics teaching methods course, with an integrated field experience and a pedagogical focus of facilitating discussions, my study builds on work developing practice-based teacher education and makes an initial foray into describing prospective teachers’ growth in a practice-based setting. The goals and activities of the course were explicitly aimed at preparing for, practicing for, and reflecting on the work done in the field with pairs of elementary students. The work undertaken by my PSTs centered on creating and analyzing representations of practice (Crespo et al. 2011) and employed a cycle of planning, enactment, and reflection similar to the process used by Kazemi et al. (2010). In teaching this course over previous semesters, I learned several things about difficulties my prospective teachers experienced while doing challenging mathematics with young children that informed the design of this study. They only developed tasks that involved performing routine procedures or word problems. When provided cognitively demanding tasks, they spent minimal time planning and then lacked the experience to develop in-the-moment responses. Similar to Ding and Carlson’s (2013) findings, when given guidelines for lesson planning, they only considered superficial aspects of the tasks and the teacher’s actions, not the students. Thus, in this study, I asked PSTs to hypothesize potential solutions, both correct and incorrect, and consider how they would respond.

Framework

Hufferd-Ackles et al. (2004) present a detailed framework for studying teacher development in specific aspects of facilitating discourse. Their work is based on a year-long study in a whole-class setting of one teacher working to build a math-talk community, where all participants, both teacher and students, work together using discourse to engage all students in serious mathematical work. The math-talk framework (see Appendix 4 for revised framework) provides four levels (0–3) that range from a strictly teacher-directed lecture-based instruction to instruction where student ideas, explanations, and judgments drive classroom activity. Within each level, four components of math-talk (questioning, explaining mathematical thinking, source of ideas, and responsibility for learning) are described. Because this framework breaks discourse into these four components, it is helpful for comparing specific teacher moves to other features of classroom interactions. The framework’s attention to teacher and student responses makes it appropriate for examining the relationship between the two. The multi-level nature of this framework makes it appropriate for studying teacher changes over time.

However, to fit the context of this study, I made some key changes to the framework and how it was used. First, the math-talk framework (originally developed in a whole-class setting with an experienced teacher) did not translate smoothly to the context of two teachers working with two students. Thus, I modified the descriptions of the levels to fit the context of two teachers working with two students (e.g., “Teacher is physically at the board, usually chalk in hand, telling and showing students how to do math,” was modified to, “Teacher shows how to solve or tells correct answers or appropriate strategies.”). Second, as novices, PSTs often only made small changes each week that did not translate to a change in level of math talk; they often straddled the fence, providing evidence of parts of each of two adjacent levels. Using the same process Hufferd-Ackles et al. (2004) described to create their original framework, I added mid-levels (0.5, 1.5, 2.5) to describe PST actions that did not fit cleanly into only one level (for more detail on this process, see Hallman-Thrasher 2011; see Appendix 4 for the revised framework). Third, Hufferd-Ackles et al. (2004) generally found that the four components of math talk developed together (e.g., a Level 2 teacher was a Level 2 in all four components). However, as novices, the PSTs in this study were less able than an experienced teacher to maintain consistency across all components. Hence, their implementations provided evidence of different levels in each component of the math-talk framework, and so each component of math talk was coded with its own level (e.g., a Level 2 in questioning, but only a Level 1 in explaining).
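For readers who want to organize similar codes, the revised math-talk coding described above can be represented as one level per component, drawn from the seven allowed values (0 to 3 in half-level steps). The sketch below is only an illustration of that data structure, not the instrument used in the study; the identifier names and the levels given for the last two components in the example are assumptions made for demonstration.

```python
# Illustrative sketch only: identifier names are hypothetical, not from the study.
# Allowed levels in the revised framework: whole levels 0-3 plus mid-levels.
MATH_TALK_LEVELS = (0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0)

# The four components of math talk named in the framework.
COMPONENTS = (
    "questioning",
    "explaining_mathematical_thinking",
    "source_of_ideas",
    "responsibility_for_learning",
)


def validate_coding(coding):
    """Check that every component has exactly one allowed level; return a copy."""
    missing = [c for c in COMPONENTS if c not in coding]
    if missing:
        raise ValueError(f"missing components: {missing}")
    for component, level in coding.items():
        if component not in COMPONENTS:
            raise ValueError(f"unknown component: {component}")
        if level not in MATH_TALK_LEVELS:
            raise ValueError(f"invalid level {level} for {component}")
    return dict(coding)


def is_uniform(coding):
    """True when all four components sit at the same level."""
    return len(set(coding.values())) == 1


# Example: Level 2 in questioning but Level 1 in explaining (as in the text);
# the remaining two values are invented for illustration.
example = validate_coding({
    "questioning": 2.0,
    "explaining_mathematical_thinking": 1.0,
    "source_of_ideas": 1.5,
    "responsibility_for_learning": 1.5,
})
```

Coding each component separately makes it possible to record an implementation that is uneven across components, which a single overall level would hide.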

In assessing the PSTs’ implementation of problem-solving tasks, I also wanted to associate math talk with opportunities for student learning. Thus, I also relied on the mathematical task analysis guide which categorizes a task’s cognitive demand (Stein et al. 2000), “the cognitive processes in which students actually engage as they go about working on the task” (Stein et al. 1996, p. 461). To gauge cognitive demand, Stein et al. (1996) use two levels, low and high, and within each level provide two categories that describe the types of thinking students use to complete a task. Low-level cognitive demand tasks may be classified as memorization (recall of memorized fact) or procedures without connections (execute known procedures with no attention to the concepts they embody). High-level cognitive demand tasks may be classified as procedures with connections (focused on the ways procedures connect to one another or to mathematical concepts) and doing mathematics (synthesize knowledge in non-routine ways to explore and understand concepts). Different goals for a lesson dictate which type of task is appropriate (e.g., if a teacher wants to engage students in reasoning and constructing arguments, she would use a high-level task). Stein et al. (2000) also developed the mathematical task framework which describes the trajectory of a task as it moves through several phases: the task as it is written, the task as the teacher sets it up, and the task as implemented with students. The transitions between phases represent critical points where the intended cognitive demand of a task could change.

My use of cognitive demand differs from Stein et al.’s (2000) work in two important ways: (1) my use of cognitive demand as a scale and how I mapped the descriptions of the four cognitive demand categories to the context of this study and (2) how I used cognitive demand to analyze task implementations. First, Stein and colleagues’ categories of cognitive demand were not intended to be used hierarchically. High-level tasks require more cognitive effort from students in terms of thinking and reasoning than do low-level tasks, but within high or low, one type of task was not superior to the other. A doing mathematics task was not better than a procedures with connections task; they were different types of tasks, both requiring high cognitive effort from students, but serving different instructional goals. In this study, where the goal was to press elementary students to reason, make sense of mathematics, and justify their solutions to non-routine, high-demand tasks, it was appropriate to treat the categories of cognitive demand as hierarchical levels. PSTs used doing mathematics tasks (so classified because children did not have a set of known algorithms to use in solving them) and through planning activities, methods course discussions, and modeling by methods course instructors were provided strategies for extending the tasks in ways that maintained the cognitive demand at a doing mathematics level. In my study, what distinguished a doing mathematics task from a procedures with connections task was that the former required inventing and justifying a strategy whereas the latter “suggest[ed], implicitly or explicitly, pathways to follow” that connected to an underlying concept (Smith and Stein 2011, p. 16). Therefore, I decided that a doing mathematics level of implementation required more cognitive effort than procedures with connections and, hence, was a higher level of cognitive demand than procedures with connections.
Memorization tasks involve “previously learned facts, rules, formulas, or definitions” (Smith and Stein 2011, p. 16). In the context of the elementary school mathematics of my study, what students most likely had memorized that related to the tasks were basic facts. Hence, when PSTs turned the high-demand task implementation into a series of basic facts questions or yes/no questions about the task or the student’s work, I classified this as memorization and, because it was so far from the intended goal of the task implementation, it was the lowest level of cognitive demand. When PSTs broke the task into a suggested series of known procedures and failed to explore the concepts underlying those procedures, the implementation was procedures without connections. Because procedures without connections required more cognitive effort than stating memorized facts, I considered a procedures without connections level of implementation a higher cognitive demand than memorization. Thus, in my study, the levels of cognitive demand were ordered from lowest to highest as memorization, procedures without connections, procedures with connections, and doing mathematics.

Second, Stein et al. (2000) assigned a single cognitive demand category to an entire implementation, using the guideline of which category best described what more than half of the students were doing more than half the time. Using a single level did not work for this study for two reasons. First, working with only two students meant that applying the “more than half the students” guideline meant either both students had to be putting in the same amount of cognitive effort or I was unable to code the implementation. Even using only a ‘half the students’ guideline ignored important work and contributions of the other student. Second, I also found that novice teachers changed the cognitive demand at many points throughout a session and those changes were important. I wanted a more fine-grained analysis to capture what was happening to cognitive demand as PSTs employed particular pedagogical moves. Thus, I chunked the video transcripts into segments whose start was defined by the introduction of a change in student thinking (see data analysis for more detail on this process) and coded each segment with its own level of cognitive demand.
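The two adaptations described above (treating the four categories as an ordered scale and coding each transcript segment separately) can be illustrated with a short sketch. This is a minimal illustration under stated assumptions rather than the analysis procedure used in the study: the class and function names are hypothetical, the numeric values only encode the ordering, and the sample session is invented.

```python
from dataclasses import dataclass
from enum import IntEnum


class CognitiveDemand(IntEnum):
    """Categories ordered lowest to highest, as in this study's adaptation.
    The integers only encode order; they are not scores from the study."""
    MEMORIZATION = 1
    PROCEDURES_WITHOUT_CONNECTIONS = 2
    PROCEDURES_WITH_CONNECTIONS = 3
    DOING_MATHEMATICS = 4


@dataclass
class Segment:
    """One transcript segment, opened by a change in student thinking."""
    description: str
    demand: CognitiveDemand


def is_high_demand(level):
    """High-level demand covers the two upper categories."""
    return level >= CognitiveDemand.PROCEDURES_WITH_CONNECTIONS


def demand_changes(segments):
    """List (previous, next) pairs where the coded demand changes
    between consecutive segments."""
    return [
        (a.demand, b.demand)
        for a, b in zip(segments, segments[1:])
        if a.demand != b.demand
    ]


# Invented session for illustration only.
session = [
    Segment("student proposes own strategy", CognitiveDemand.DOING_MATHEMATICS),
    Segment("teacher asks a string of basic-fact questions", CognitiveDemand.MEMORIZATION),
    Segment("student justifies a revised strategy", CognitiveDemand.DOING_MATHEMATICS),
]
changes = demand_changes(session)
```

Coding at the segment level keeps every rise and fall in demand visible, whereas a single code for the whole session would have recorded only the dominant category.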

Methods

Context

The study was conducted in conjunction with the field experience associated with a mathematics teaching methods course for early childhood (grade K-5) education majors at a large university in the southeastern United States. Children’s mathematical learning was the first course in a required two-course sequence that focused on counting, number sense, basic operations with whole and rational numbers, and making sense of standard arithmetic algorithms. Some key goals of the course were to become aware of children’s mathematical thinking, how it differs from adult thinking, and how attending to children’s thinking might impact teaching practices. The central feature of the course was an 8-week field experience in which the class, together with me (also the course instructor) and a teaching assistant, made weekly visits to a local elementary school. This study was conducted only during the last 6 weeks of the field experience. The first 2 weeks of the experience focused on developing a rapport with the students and completing a case study that explored the mathematical thinking of a single student.

In the field experience, I assigned PSTs to teams of three, and each team worked with pairs of fifth-grade students: Two PSTs interacted with the two students, while the third PST made a video record of the session. The tasks PSTs presented to the students were not connected to students’ daily classroom mathematics lessons. This was an intentional part of the design of this field experience to ensure PSTs were not responsible for covering any particular instructional objectives, and the students’ regular classroom teachers had no responsibility for observing or monitoring the PSTs’ work. This arrangement allowed the PSTs freedom to work at the pace that was set by their students and to maintain student-centered dialogue without the pressure of being held accountable for content coverage. In order to help the PSTs begin to understand how children think mathematically, I directed them to allow the students to approach tasks in their own way and to use effective questioning techniques and talk moves (e.g., Boaler and Brodie 2004; Chapin et al. 2003, 2013; Kazemi and Stipek 2001; Mewborn and Huberty 1999; Nicol 1999; Smith and Stein 2011) to elicit student thinking and promote discussion. The goal I set forth to the PSTs was to use strategies we discussed in the methods course to elicit the students’ thinking, understand students’ thinking, and connect students’ thinking to the mathematical concepts underlying the problem-solving task. In class, we specifically worked on questions that required explaining how or why a process worked and that pressed for a complete and clear explanation. To help elicit thinking, we focused on asking how questions, revoicing student ideas, and directing students’ attention to specific parts of their representations to help them articulate and clarify their ideas. We discussed that incorrect solutions were opportunities for exploring student thinking, and asking questions about them could help students identify their own errors or misconceptions. 
Because explaining and justifying solutions were a main goal of our work, I stressed the importance of questioning correct solutions as well. To help PSTs (and later their students) see connections among different solutions, I also asked them to solve a task they had already completed in a different way, or prompted them to execute a classmate’s strategy and compare it with their own. These were teaching practices the PSTs had read about and discussed in class and that I modeled during class so they could experience these strategies as learners themselves. Our course and their work with students in the field experience emphasized helping students articulate their thinking and using that thinking as a basis for solving tasks.

Participants

Of 30 PSTs in the class, I selected 12 for participation in this study, all of whom agreed to participate, and I assigned them to teams of 3 PSTs each. Due to absences, one participant was actively involved in only one session of enactment, and for the rest of the study her teammates operated as a group of 2. I discarded this participant’s incomplete data and analyzed her group’s data as if they were a team of 2. One of the PST groups (Group G) failed to complete all aspects of data collection in the manner I specified (during the first block, they did not consistently do the assigned tasks with students, and in the second block 4 of their 6 students repeated tasks they’d already done) and hence was also dropped from the study. Therefore, the final structure of the participants was one group of 2 PSTs and 2 groups of 3 PSTs each.

I chose PSTs based on their strong mathematics and communication skills and openness to teaching mathematics in ways that were different from their own elementary school experiences. I assessed these traits based on their written class assignments, contributions to class discussion, and an individual interview in which they completed and explained their work on a problem-solving task similar to what they would later enact with elementary students. The fifth-grade students with whom the PSTs worked were selected by their teachers for having average mathematics performance. Of the 30 teacher-selected students, 13 agreed to be a part of this study.

Data collection

I collected data in two 3-week blocks. Each block focused on a set of 5–10 tasks, which in their written form were high demand. They all involved non-routine problem-solving with each set organized around a different problem-solving strategy: generalize and explain patterns, make organized lists, work backward, reason algebraically, and reason deductively. Generalize and explain patterns tasks required students to identify that a pattern existed, make a conjecture as to what the pattern was, explain why that pattern occurred, and generalize that pattern to any case. Making organized lists tasks asked students to construct and count a number of combinations while ensuring they accounted for all possibilities without double counting. Often making an organized list was the most efficient strategy for these tasks (though not one children typically attempted initially). Working backward tasks had an unknown starting amount and a known ending amount, and multiple steps were needed to find the starting amount from the ending amount. While these tasks could all be solved via working backward, it was expected that students would have different approaches (e.g., guess and check) and that PSTs should be prepared to manage these approaches. Algebraic reasoning tasks involved solving for two unknowns, and, like the working backward tasks, PSTs were to prepare for several approaches. Deductive reasoning tasks included logic problems and problems in which students had to draw conclusions based on given statements.

Because this field experience was not connected to particular K-5 classroom content, in semesters past, PSTs viewed the tasks as random without understanding how they might relate to content covered in their students’ K-5 mathematics lessons. To help PSTs see mathematical connections among tasks, I grouped them in these sets. Grouping them by strategy also served as a reminder to PSTs that tasks within a set were linked by a common strategy or concept. The intention was not to require students use that strategy, but to ultimately help students connect their thinking to an underlying mathematical concept. Within each set, I selected 2 focus tasks that I required PSTs to enact with students (see Appendix 1). The focus tasks were at a doing mathematics level of cognitive demand because the students did not have available to them known algorithms for solving the tasks. Both focus and non-focus tasks were chosen because they had a low-entry, high-exit threshold; a student with any level of background knowledge could easily start the tasks, even if only through a guess and check strategy, and to complete them students experienced important mathematical concepts that went beyond the K-5 curriculum. The tasks also provided opportunities for multiple solution approaches and, hence, opportunities to discuss those approaches. Having enacted the tasks myself and observed PSTs enacting the focus tasks with K-5 students in the past, I was familiar with K-5 students’ approaches, which allowed me to better support my PSTs in anticipating their students’ thinking. The non-focus tasks also allowed the PSTs to gain more practice with a particular concept with which they themselves might struggle and provided them additional tasks to enact with students if time permitted.

For the first 3-week block, each PST group enacted 2 assigned focus tasks with a different pair of elementary students each week. In the second 3-week block, I assigned each PST group a new set of problem-solving tasks and, accordingly, 2 new focus tasks to enact. Each PST group was assigned 4 tasks over the 6 weeks, but the groups could not all be assigned the same 4 tasks without having students repeat tasks. To ensure that no students repeated tasks during the study, not all PST groups were assigned the same set of tasks for each 3-week block. In addition, some weeks, some groups were only able to complete one of their focus tasks (see Table 1 for each group’s task sets, focus tasks, and students, denoted Cx). The repetition of tasks within each block allowed PSTs to use what they learned about students’ strategies on a task to inform their work with a new pair of students on the same task.

Within each week of both 3-week blocks, the PSTs engaged in a cycle of planning, enactment, and reflection modeled on the work of Kazemi et al. (2010) (see Table 2). Planning data for each 3-week block included one task dialogue, one activity plan, and two revisions of that plan for each individual PST. Modified from Crespo et al.’s (2011) work, in a task dialogue, I suggested three or four possible solutions to each of the tasks and the PSTs had to create a hypothetical teacher–student dialogue that might follow each solution (see Appendix 2 for sample assignment, see Appendix 3 for portion of completed assignment). I provided feedback on the task dialogue, which PSTs then used to develop an activity plan for how they would implement the tasks. In planning for the first week of a 3-week block, PSTs wrote an activity plan in which they posited possible student solutions and how they would respond to those solutions. In this activity plan, they had to suggest specific hints, questions, and teacher moves they would implement to help a student who (1) did not know how to start, (2) had an incorrect approach, (3) had a nearly correct solution, and (4) had a correct solution and needed to be further challenged. The activity plan served as a reference to help them as they worked with students. I provided feedback on their plans prior to task implementation, and each week they were able to revise plans by adding new student strategies they observed and refining their responses to student strategies. I also provided feedback on their plan revisions each week.

For each weekly enactment, two PSTs in each group worked with a pair of students, while the third video recorded. Within each group, the PSTs decided how to share leading the task discussions, and this role changed from week to week depending on the needs and personalities of their students and the PSTs’ expertise on particular tasks. Generally, both students were given time to work alone first, and then one or two PSTs supported them in collaborating on each task while the third PST video recorded. At times, groups allowed students to work individually for part or all of a session, and during these times one PST worked with each student while the third alternated video recording between the two student–PST pairs. In these instances, the off-camera pair was audio recorded. In the group of only two PSTs, one recorded while the other took charge of implementing the tasks. In all groups, there were instances of the recording PST interjecting from behind the camera to help out. These video records of PSTs’ weekly sessions comprised the enactment data. Reflection data were collected in the form of PSTs’ weekly written reflections, in which they analyzed their video to determine what students understood, how well or poorly they made use of talk moves to elicit and respond to students and to support students in explaining and justifying their work, and what changes they could make to future enactments. Each PST in a group wrote her own reflection and then read and made written comments on the other group members’ reflections. This paper focuses on analysis of enactment video data, with planning data used to determine which student solutions were anticipated by PSTs and reflection data used to confirm or disconfirm findings.

Data analysis

I only analyzed data for those tasks that were implemented for all weeks of a 3-week block by a group and that were being attempted for the first time by students (see Table 1). Thus, I eliminated data on the 6 Numbers Task for Group H and data on the Tickets and the Phone Club Tasks for Group J because these groups did not implement these tasks all 3 weeks of the block. I chose to eliminate Group I’s data on the Crayons Task because they spent the vast majority of their time each week focusing on the Puppies Task.

I analyzed both planning and enactment data using levels of cognitive demand in the mathematics task analysis guide (Stein et al. 2000) and the revised math talk (Hufferd-Ackles et al. 2004) framework (see Appendix 4 for revised Math Talk and Appendix 5 for cognitive demand guidelines). For each dialogue of a task dialogue assignment, I assigned a level of cognitive demand and a level for each component of math talk. I did the same for each of the four sections of an activity plan (helping a child start, helping a child with an incorrect approach, helping a child with a nearly correct solution, and helping a child with a correct solution who needed to be challenged further).

As discussed earlier, in analyzing the video enactment data I struggled to assign a single level of cognitive demand or a single level of categories of math talk to a given task enactment: Within a single enactment, there often were moments of low and high demands and math talk. Applying only one level of cognitive demand to describe a single enactment of a problem-solving task did not capture the ways the demand and math talk changed within that one enactment. Thus, to analyze the enactment data, I chunked the video transcripts into segments. The first segment of an enactment represented the PSTs’ setup of the task. Each segment thereafter represented a PST (or several PSTs) responding to student thinking (a solution, strategy, idea, or a lack of solution). Often PSTs responded to no solution situations; either a student was stuck and asked for help or a student stalled out and a PST decided to intervene. Each time a student introduced a new solution, strategy, or idea, or had no solution and a PST intervened, this defined the start of a new segment. I then coded each segment of the video enactment data with one of the four (0–3) levels of cognitive demand (see Appendix 5) and with one of the seven levels of the modified version of the math-talk framework (see Appendix 4). Thus, every segment of video enactment data was coded five times: with a level for cognitive demand, and a level for each of the questioning, explaining, responsibility, and ideas components of math talk. Cognitive demand (Stein et al. 2000) allowed me to assess the quality of the mathematics made available to the students in the implementation of the problem-solving tasks and to analyze how PSTs and students engaged in (or planned to engage in) that mathematics. Math talk (Hufferd-Ackles et al. 2004) allowed me to determine which teacher moves to elicit and respond to student thinking were associated with which levels of cognitive demand.

Looking across the analysis, I sought to explore what might account for the fluctuations in cognitive demand of segments within a single enactment. The correctness of a student’s solution or strategy did not fully account for the differences in cognitive demand that I observed within any given enactment (i.e., cognitive demand was not consistently high or low for an incorrect versus correct response). I then categorized each segment to indicate whether a student’s response was anticipated by the PSTs. I considered a child’s response anticipated if it was a response that (1) we had discussed in class, (2) the PSTs had addressed in their task dialogues or plans, or (3) the PSTs had encountered with students in a prior week of the study. Because PSTs prepared for how to respond to no solution situations, I also considered no response as anticipated. Over the full 6 weeks, comparing the cognitive demand against PSTs’ anticipation of what they were responding to helped to explain some of the fluctuations in the cognitive demand. The results presented here address PSTs’ responses to unanticipated incorrect student solutions.
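To make the coding scheme concrete, the following is a minimal Python sketch of how one coded segment and the anticipation rule could be recorded. It is an illustration only and not the instrument used in the study: the class name `Segment`, its field names, and the assignment of the four cognitive demand levels to the numbers 0–3 are assumptions (the actual level definitions are in Appendices 4 and 5), and the example segment is hypothetical rather than drawn from the data.

```python
from dataclasses import dataclass

# ASSUMPTION: this ordering of the four cognitive demand levels onto 0-3 is
# illustrative; the study's own guidelines are given in its Appendix 5.
COGNITIVE_DEMAND = {
    0: "memorization",
    1: "procedures without connections",
    2: "procedures with connections",
    3: "doing mathematics",
}

# The four math-talk components named in the text.
MATH_TALK_COMPONENTS = ("questioning", "explaining", "responsibility", "ideas")


@dataclass
class Segment:
    """One coded segment of an enactment transcript (hypothetical layout)."""
    number: int
    cognitive_demand: int              # key into COGNITIVE_DEMAND
    math_talk: dict                    # component name -> coded level
    no_solution: bool = False          # student stuck or stalled
    discussed_in_class: bool = False   # criterion (1)
    in_dialogue_or_plan: bool = False  # criterion (2)
    seen_in_prior_week: bool = False   # criterion (3)

    def is_anticipated(self) -> bool:
        # No-solution situations count as anticipated because PSTs planned for them.
        return (self.no_solution or self.discussed_in_class
                or self.in_dialogue_or_plan or self.seen_in_prior_week)

    def code_count(self) -> int:
        # One cognitive demand code plus one code per math-talk component.
        return 1 + len(self.math_talk)


# Hypothetical segment: an unanticipated response coded at the lowest levels.
example = Segment(number=99, cognitive_demand=0,
                  math_talk={c: 0 for c in MATH_TALK_COMPONENTS})
print(example.code_count(), example.is_anticipated())
```

Keeping the three anticipation criteria as separate fields, rather than a single flag, would let an analyst later ask which source of anticipation (class discussion, written plans, or prior weeks) was in play for a given segment.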

Results

Of the 3 groups, both Group I and Group H improved at responding to unanticipated incorrect solutions in ways that maintained high cognitive demand. Group H also developed a tendency to shut down anticipated incorrect solutions, whereas Group I developed a tendency to shut down any anticipated solutions. In Group J, each PST responded to students in different ways so that there was no overall pattern in how they responded to different types of solutions. First, I provide a brief excerpt of results for Groups I and J. Next, I present in detail the case of Group H which provides a rich example of PSTs who made improvements in maintaining high cognitive demand when responding to unanticipated incorrect solutions while shutting down anticipated incorrect solutions.

Group I

Group I improved at maintaining high cognitive demand for unanticipated incorrect solutions, but lowered it for anticipated solutions. In Week 1, when they implemented the Cupcakes Task, they responded to a series of unanticipated incorrect solutions (red dots in Fig. 1) in ways that lowered the cognitive demand. They only achieved high cognitive demand when responding to anticipated correct solutions. C7 interpreted the statement of the task to mean she had 4 and 6 boxes of vanilla and chocolate cupcakes, respectively (Segment 3). Dana corrected her, “the chocolate ones are grouped into boxes and they put 6 in a box.” C7 persisted in thinking there were only 10 boxes total, and the PSTs restated the correction several times (Segments 4 and 5). When her partner, C5, chose a random number of boxes as a starting point (a valid guess and check strategy), the PSTs posed a series of 10 short-answer computation questions that involved recall of basic facts (Segments 6 and 7): “How many boxes of vanilla do you have here? How many cupcakes would that be total? How many boxes have you used?” Dana continued the memorization level of cognitive demand by concluding for the students that there were too many cupcakes (Segment 8). When the students started making correct adjustments to the number of boxes, Dana briefly relented in her overly directive interventions (Segment 9). But as the students’ adjustments moved them further from the correct solution, Dana quickly interjected again with another series of computation questions and suggested strategies (Segment 10). Only after students presented a correct solution did she elevate the cognitive demand to doing mathematics by asking about other solutions (Segment 11), “Why didn’t it [other combinations of boxes] work?” Dana then pushed for a justification of the correct solution (Segment 12), “I know you know. I just want to know, why did this work, having 7 vanilla [boxes] and 5 chocolate [boxes]?” and then continued to press for an explanation, “Do you think there always needs to be less chocolate cupcakes than vanilla?”

In Week 5, in their second implementation of the Puppies Task, Group I encountered a long series of unanticipated incorrect solutions (related to confusing when to double versus add in working backward, seen in red dots of Segments 3, 4, 6–8, 10, 113–115, 117, 118, 120–123, 125 in Fig. 1). Alice lowered the cognitive demand to memorization by using leading questions and repeating the same question four times, “Would we add them or not add them?” in an effort to get the student to stop adding (Segments 113–117). Dana intervened to help raise the demand to procedures with connections by trying to decipher and follow the student’s thinking: She clarified the student’s thinking by asking what specific numbers in his solution represented, how to map back to the original task, and what justified each calculation of halving, doubling, or adding (Segments 118–123). Alice began to adopt Dana’s practices (Segment 125), asking the student why he thought he should sum the numbers. The student persisted with his incorrect solution over seven more segments, until time ran out. Even though the student did not reach a correct solution during this time, Dana and Alice tried to understand his thinking and did not attempt to route him through their solution strategy. Their Week 6 implementation of the Puppies Task shows that Dana and Alice responded to anticipated solutions by lowering the cognitive demand. When one of their students quickly found a solution through guess and check, they led the student through a working backward strategy modeled with unifix cubes in which the only cognitive effort required of the student was to count up the cubes at each successive step.

Group J

Group J showed no patterns in the cognitive demand for incorrect unanticipated solutions. Though they spent more time on a single task than other groups (usually only completing one task in a session), more time did not consistently translate to high cognitive demand. Regardless of correctness or whether solutions were anticipated, Erica consistently lowered the cognitive demand to memorization by drawing conclusions for her students and using basic recall questions and leading questions such as, “We have a big number here and a big number here, do you think that is why?” On the other hand, Rene and Megan worked to maintain or raise the cognitive demand, frequently pressing for clear and complete explanations, asking for justification of incorrect and correct solutions, and requiring students to verify their own work. When students achieved a correct (and usually anticipated) solution, they often prompted them to explain their approaches to one another or for one student to assist the other in finding a correct solution, achieving a procedures with connections or doing mathematics level of cognitive demand (blue dots of Segments 49, 50, 52, 76, 80, 81, 83, 128 in Fig. 2). When C7 was stuck on finding another solution to 6 Numbers, Rene turned her attention to her partner’s idea (Segment 46), “C5 was saying…the side can’t equal less than 9? Wasn’t that what you were saying?” She then prompted C5 to explain to C7, and Megan followed up with five more prompts to help C5 clarify his explanation (Segment 47), including “Say it again,” “What do you mean by that?”, and “What do you mean by they’re too high?”

However, in both the 6 Numbers and Cupcakes Tasks, they could not consistently achieve high cognitive demand in responding to unanticipated incorrect solutions (red dots of Segments 19, 25, 30, 40, 51, 64, 77, 78, 113, 121, 129 in Fig. 2). In several instances, they shut down unanticipated incorrect solutions (Segments 19, 64, 113, and 121) by verifying students’ work, and using vague or leading questions to redirect the student toward a correct solution. For example, Megan suggested strategies through her questions (Segment 121), “Each stick represents a box, right?” and “So do you want to count them again?” When the students failed to pick up on her hints, she persisted with a vague prompt to change student thinking, “Are you sure?” and Rene interjected more directively with “if you want to…put it back where it was a second ago.”

Group H

Group H implemented the 12 Pennies and Clock 6s Tasks in the first 3-week block and the Phone Club Task in the second. In Week 1, with 12 Pennies, this group lowered the cognitive demand when presented with unanticipated incorrect solutions by correcting a mistake and restating the task (Casey) or explaining that if the order of the three piles mattered, each unique combination could be rearranged in six ways (Kate). In Week 2, they maintained or raised the cognitive demand in response to an unanticipated incorrect solution and did so by allowing a student to test a faulty hypothesis (Nadia) and asking for explanation and justification (Kate). They also raised the demand in response to a correct anticipated solution by posing a similar question that changed the conditions of the task (Casey). In Week 3, they did not encounter any unanticipated incorrect solutions. However, they did make strides in coordinating the participation of their two students by requiring them to explain strategies to one another.

I focus the detailed analysis on their remaining two tasks: Clock 6s (Weeks 1–3) and Phone Club (Weeks 4–6). In the following section, I share details of two teaching episodes from Group H. In Week 1 of the Clock 6s Task, they lowered the demand in response to unanticipated incorrect solutions, and once they anticipated these solutions, in Weeks 2 and 3 they worked to head them off before they could arise. The Week 1 episode serves as an example of how they initially struggled to respond to unanticipated incorrect student solutions in ways that maintained high cognitive demand. However, in Week 4, with the Phone Club Task they explored students’ confusions and provided opportunities to work through them. The Week 4 episode illustrates ways they improved in maintaining high cognitive demand when responding to unanticipated incorrect student solutions. For each episode, I discuss the mathematics of the task and typical student approaches, the unanticipated incorrect solutions the PSTs encountered, and my analysis of the PSTs’ responses to the unanticipated incorrect solutions.

Week 1 episode

In solving the Clock 6s Task, I wanted PSTs to focus their work with students on developing a systematic way of solving the task (e.g., making an organized list) and discussing the possible patterns in the solution. It was not important that students were able to find all 36 solutions, but it was important that they identified patterns that helped them find solutions and that they could identify how their pattern would indicate that all solutions had been found. There are many ways to organize the solutions, and each way makes particular patterns evident (Fig. 3). A typical student solution involved randomly generating a list of times that met the criteria of the task, but missing several solutions or groups of solutions (often those with four digits, or all possible permutations of a single time). In their planning, Group H was prepared to help a student organize a random list of solutions, to discuss ways of permuting one solution to find others, to find solutions using four digits instead of only three, and to make connections between the task and sums of 6. However, they were not prepared to manage the incorrect solutions presented by C3 (in Fig. 4). Next, I describe C3’s solutions and the PSTs’ responses and then share my analysis of the episode.
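The count of 36 solutions can be checked with a short enumeration. The Python sketch below rests on assumptions about the task statement, which is given in Appendix 1 rather than here: it takes the clock to be a 12-hour digital display (hours 1 through 12, no leading zero on the hour), does not distinguish a.m. from p.m., and treats a solution as any displayed time whose digits sum to 6. The function name `clock_sixes` is introduced only for illustration.

```python
def clock_sixes(target=6):
    """List 12-hour digital clock times whose digits sum to `target`.

    ASSUMPTION: times are displayed as h:mm or hh:mm with hours 1-12,
    and a.m./p.m. are not counted separately.
    """
    times = []
    for hour in range(1, 13):
        for minute in range(60):
            label = f"{hour}:{minute:02d}"
            digit_sum = sum(int(ch) for ch in label if ch.isdigit())
            if digit_sum == target:
                times.append(label)
    return times


solutions = clock_sixes()
# Split by number of digits, since four-digit times were often missed.
three_digit = [t for t in solutions if len(t) == 4]   # e.g. "3:30"
four_digit = [t for t in solutions if len(t) == 5]    # e.g. "12:12"

print(len(solutions))                      # 36
print(len(three_digit), len(four_digit))   # 21 15
```

Under these assumptions the enumeration agrees with the 36 solutions stated above. Because the loop runs hour by hour, the output is itself one kind of organized list: the number of solutions per hour falls from 6 to 1 across hours 1 through 6 and from 6 to 4 across hours 10 through 12.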

C3 created several representations (shown in Fig. 4) that the PSTs, Nadia and Kate, had not anticipated, starting with an analog clock and thinking about the minute hand of the clock passing by the number 6 on the clock face. The PSTs moved quickly to shut down this line of thinking, with both Kate and Nadia intervening with firm “no’s” immediately after C3 explained her diagram. Though Nadia reread the question and directed C3 to identify what is meant by sum of 6, C3 persisted in referring to “how many times it [the clock hand] goes through 6” for another 3 segments of the episode (Segments 22–25). After C3 admitted she was unsure what to do, a scenario for which the PSTs had a prepared hint, they suggested she make a list of sums of 6 (Segment 26). At this point, C3 offered a second unanticipated incorrect solution: listing the numbers 6 and 12 (Segment 27), perhaps indicating that she had interpreted their idea as multiples of 6 or adding 6s. Nadia explained that they were looking for answers in the form of “blank plus blank equals 6.” When C3 appeared to finally understand what the question was asking by suggesting 6 plus 0, both Nadia and Kate eagerly pushed her to “make that into a time.” Though their leading hints helped her produce the first correct answer of 6:00, C3’s next answer of “3 o’clock plus 3 o’clock” indicated she still did not comprehend the meaning of the task (Segment 28). Kate decided this student needed more explicit instructions about how to solve the task:

You’re not adding the times. Look, look for that, that would be 3:30 right? Because 3 plus 3 plus 0 equals 6. So, you’re not adding the times together. You’re just looking at any time of the day you look at the clock and those numbers add up to six. Ok? Does that make sense?

With these guidelines and immediate verification of her next solutions from Nadia and Kate, C3 began generating correct solutions. For the rest of the episode, Nadia and Kate were managing a situation for which they were prepared, a random list of solutions that they could help a child organize to explore patterns.

Kate and Nadia launched the Clock 6s Task at a procedures without connections level of cognitive demand (Segment 21 in Figs. 5, 6). But when C3 clung to her digital clock representation (Segment 23), cognitive demand changed to a memorization level and remained at that level throughout the segments of the episode where PSTs responded to unanticipated incorrect solutions (see Fig. 5). The cognitive demand was only elevated to procedures without connections or procedures with connections after C3 produced more correct solutions, and Kate and Nadia were managing student thinking that fit the parameters of their plans (Segment 31). Kate was then able to elevate the demand by exploring patterns in the list C3 created (Segments 33 and 34). Zooming in on the segments of the episode that involved unanticipated incorrect solutions (Fig. 6) allowed for a careful examination of the components of math talk to which Kate and Nadia attended. Figure 6 shows the levels of the components of math talk during the initial portion of the teaching episode in which the student produced mostly unanticipated incorrect solutions. No components of the math talk even reached the first level, indicating a strongly teacher-centered dialogue with directive teacher questions, minimal student explanation, and responsibility for generating and evaluating ideas resting solely with the teacher.

Week 4 episode

To show the changes in how Group H responded to unanticipated incorrect solutions, I share details of a task implementation from Week 4 (Fig. 7). Like the Clock 6s Task, I wanted PSTs to focus students’ attention on patterns in the task. However, with the Phone Club Task I also wanted them to help students discover and articulate a pattern for a general case.

The Phone Club Task was presented in two parts, with the first intended to help students gain an understanding of the task. Part I was generally straightforward and solved by drawing a picture. Part I gave the PSTs an opportunity to help the students notice two keys to successfully solving the task: making sure that each member of the phone club was connected to all the others and having a systematic method to count the “strings.” The second part of the task asked students to think about the task in reverse: given the number of strings needed, find how many people they would connect. A typical initial student response was to try to draw a picture with 28 strings and then add people. Students also made random guesses (usually too high) at the number of people and then added strings. They often alternated between adding strings and people haphazardly, stopping as soon as they reached 28 strings, regardless of whether all people were connected as the task described.

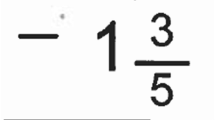

In their planning, this group of PSTs intended to encourage students to work up from four people while asking students questions about patterns as the number of people increased. This was a common approach the PSTs used when they were first introduced to the task and it usually led to noticing that if n people were in the club, then the number of strings was equal to the sum of the first n − 1 counting numbers. A less common approach for the PSTs and their students was to think multiplicatively about the task: Each of the n people must have one fewer string connected to him than there are people in the club (n − 1 strings), which gives n(n − 1) strings; because each string connects two people, n(n − 1) double counts the strings, and thus n people are connected by n(n − 1)/2 strings. However, in Week 4, their first implementation of Phone Club, they managed a series of C1’s incorrect, unanticipated solutions (Fig. 8). Next, I describe C1’s solutions and the PSTs’ responses and then share my analysis of the episode.
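The two approaches described above can be compared numerically. The Python sketch below is illustrative only: the function names are hypothetical, and it assumes, as the task description suggests, that every pair of club members is joined by exactly one string. It computes the string count both additively and multiplicatively, and then works Part II in reverse for 28 strings.

```python
def strings_by_sum(n):
    """Additive approach: 1 + 2 + ... + (n - 1) strings for n people."""
    return sum(range(1, n))


def strings_by_product(n):
    """Multiplicative approach: n(n - 1) double counts, so halve it."""
    return n * (n - 1) // 2


def people_for(strings, limit=100):
    """Part II in reverse: find n such that n people need `strings` strings.

    Returns None if no whole number of people up to `limit` fits.
    """
    for n in range(1, limit + 1):
        if strings_by_product(n) == strings:
            return n
    return None


# The two approaches agree for every club size checked.
assert all(strings_by_sum(n) == strings_by_product(n) for n in range(1, 50))

for n in range(4, 9):
    print(n, strings_by_product(n))   # 4 6, 5 10, 6 15, 7 21, 8 28

print(people_for(28))                 # 8
```

The printed table shows the increase growing by one with each added person (4, 5, 6, 7), which is the sum-of-counting-numbers pattern, and it shows that 28 strings connect 8 people.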

C1’s initial idea was that any number of strings will connect two fewer people than there are strings (Segment 117). Not only is this an unanticipated incorrect solution, it is worded in reverse of the way most of the PSTs presented it: number of people as a function of number of strings instead of number of strings as a function of number of people. Rather than shut down this oddball solution as they had before, Nadia asked C1 why she might think that. When C1 responded (Segment 119), “Because it was 4 [people] and 6 [strings]. So maybe it could be…10 and then 12,” Nadia pressed for further clarification about what the numbers 10 and 12 represented and Casey intervened to ask, “What is there 2 less of?” By seeking this clarification, they were attending to this wording issue and ensuring they could follow the student’s way of thinking (4 people need 6 strings, so 10 people need 12 strings). Though the student had an incorrect strategy, the PSTs were willing to take the time to try to understand the reasoning behind it and, rather than correct her, they encouraged testing her hypothesis. Both PSTs encouraged C1 to test the “2 less” hypothesis, which led to C1’s next unanticipated incorrect representation for 26 people and 28 strings (Segments 120, 121). Again, there was an added layer of complexity to her solution: The idea that 28 strings connected 26 people was incorrect and accurately representing this idea with a diagram as she planned would be overwhelming. The PSTs allowed her to continue executing her plan until she said, “This is going to be confusing.” Whereas in the past they might have curtailed C1’s work at this point, Kate asked the two students to explain their strategies to one another. 
To further prompt C1 to reevaluate her plan, Kate zeroed in on specific language C1 used, “You said it’s going to be really confusing, why is it going to be really confusing?” When C1 stated it would produce a lot of strings to keep track of, Nadia clarified that only one person was connected to the other 25 and additional lines were needed to complete the representation.

C1 began to abandon this idea and seemed about to start a typical strategy of randomly applying an operation to numbers in the task, in this instance 4 times 6 (Segment 122). Kate ignored this suggestion and directed C1 back to her “2 less” hypothesis, asking her to test whether 5 people could be connected with 7 strings. When C1 incorrectly drew her diagram, she, by coincidence, happened to get the same answer her incorrect hypothesis predicted (Segments 123–125). Nadia intervened to help her correct the diagram and, once it was corrected, she offered a useful hint that moved C1’s work forward and prevented compulsive recounting of lines: “What I do when I am counting is I make a little mark on each line after I’ve counted it just to make sure you don’t count it twice.” Once C1 recognized that 5 people need 10 strings, not 7, she looked for another pattern, suggesting, “Maybe you add 4 each time?” In response to this unanticipated incorrect solution (Segments 126, 127), the PSTs again asked the pair to share their solutions with each other in the hope that C1 would adopt the more successful sum of counting numbers strategy of her partner. Once she tried to test her “add 4” strategy, C1 began to see the decreasing pattern her partner noticed and produced a correct representation. She connected the first person to the other 5 people, then added 4 lines to connect the second person to everyone and paused; as before, her drawing was too complicated. Her pen started moving in the air, drawing in imaginary lines for the third person. At this point, she began listing numbers beside her picture and abandoned her drawn representation entirely. She recognized that 6 people need 15 strings and then, continuing to look for a pattern as people increased, noted, “There was 5 and 10 and then there was 10 and 15.” This unanticipated solution needed clarification and Kate interjected to help her clarify:

the 10 and 15 were two different amounts of strings, right, but you were thinking about—are you thinking in terms of people and strings or are you thinking about the different number of strings as you add more people?

C1 went on to test for seven people and then identified a correct pattern: the number of strings added for each additional person was one more than what was added for the previous number of people.

Cognitive demand only reached a doing mathematics level when the PSTs were managing unanticipated student work (red dots of Segments 113, 116–122, 126, 128, 129 in Fig. 9). In the instances where anticipated student solutions cropped up, the cognitive demand immediately dropped. Though at the end of the session C1 could clearly describe the pattern to the PSTs, they did not ask her to articulate why this pattern appeared; they missed the opportunity for her to justify this discovery fully and thus lowered the cognitive demand for this portion of the session. Examining the components of math talk, levels of explaining mathematical thinking and responsibility for learning tracked closely to the levels of cognitive demand (Fig. 10). This indicates that the way the PSTs maintained high cognitive demand was by eliciting students’ explanations of strategies and justifications of their ideas. Further, during most of the session, they pressed for full explanations and did not just blindly accept any explanation that was offered. The PSTs also rarely evaluated either student’s conjectures. Whereas in the past they had often given positive or negative feedback on a student’s ideas, in this session they frequently asked students to test their conjectures and to draw their own conclusions from those tests. They were able to achieve Level 2 for these components by asking students to explain their thinking to one another and holding them responsible for determining the validity of one another’s ideas.

Discussion

Group H

There are some important differences between Group H’s Week 1 and Week 4 enactments. In Week 4, the PSTs were able to maintain higher cognitive demand when presented with unanticipated incorrect solutions than in Week 1. Yet in Week 4, they lowered the cognitive demand when the student work was something they had anticipated and for which they were prepared. In concert with this change in cognitive demand, the levels of math-talk components improved when PSTs responded to unanticipated incorrect student solutions and declined in response to anticipated solutions. Consistent with Henningsen and Stein’s (1997) factors that influenced the cognitive demand of implementations, PSTs in my study maintained demand by using questioning to scaffold student work and by pressing for clear and complete explanations and justifications of ideas. They lowered demand by taking an answer-oriented approach that reduced challenging tasks to a series of small questions. The PSTs were less likely to rely on student self-monitoring to maintain the demand, as PSTs often kept the authority for validating work and drawing conclusions with themselves, not students.

For unanticipated incorrect student solutions, PSTs achieved a doing mathematics level of cognitive demand. Yet every time a student’s work took a turn that the PSTs had anticipated, they lowered the cognitive demand, at times even to the memorization level. The way PSTs responded to anticipated incorrect solutions in Week 4 paralleled the way they responded to a student’s correct solutions: as something they could check off as observed, which meant they were ready to move to the next thing. They did not treat anticipated solutions as objects of inquiry that required further questioning to be fully understood. Their response to anticipated work was also similar to the way they responded when correctly solving a mathematics task for themselves. Much in the way they were satisfied to arrive at a correct solution, when a student did something a PST anticipated, it may have served as confirmation that their planning was complete and correct. This was the opposite of what was seen in the Week 1 results.

In responding to unanticipated incorrect student solutions, PSTs made improvements in several aspects of attending to student thinking that align with the components of math-talk. Even early in the study, PSTs used questioning to elicit student strategies, and, as the study progressed, they also asked follow-up questions to help students clarify their thinking. In particular, when presented with unanticipated incorrect student work, they attended carefully to students’ language, often embedding a student’s exact wording in their questions to students. Early in the study, when students presented unanticipated incorrect solutions, the PSTs asked leading questions (“What does sum that mean? It means we’re adding, right?”) or stated a correct approach or idea for the student to adopt (“So why don’t we start with writing down numbers that add up to 6?”). With experience, they learned to refrain from correcting students and instead asked them to test their own conjectures and draw conclusions based on that work. They shifted the source of ideas and responsibility for validating ideas to the students.

Another key difference from Week 1 to Week 4 was the use of two different tasks, each with its own underlying concept (Clock 6s from the organized list tasks and Telephone Club from the explaining and generalizing patterns tasks). Though it could be argued the improvements reported here resulted in part from the change in task, I assert that the fact that PSTs were able to improve particular pedagogical practices across two dissimilar tasks strengthens the claim that they improved in responding to unanticipated incorrect solutions. Seeing improvement across multiple implementations of the same task (which also occurred) can in part be explained by PSTs becoming more familiar with the content and potential solutions of a task. Improvement across the two dissimilar tasks shows that the PSTs were able to transfer pedagogical skills across different mathematics content; they were not confined by the task. Examining each task the first time it was implemented, when unanticipated solutions were most likely to occur, ensured that PSTs were equally unfamiliar with the two tasks. The unanticipated incorrect solutions of Phone Club were more complex (complicated drawings of the phone club as compared to relatively straightforward misconceptions about vocabulary, clocks, digits, and sums). A PST who had not improved in responding to unanticipated solutions independent of task would have struggled more in managing the unanticipated incorrect solutions to Phone Club than Clock 6s, not less. Extending their improvement to a new mathematical context shows the improvement is not dependent on familiarity with the task.

Groups I and J

Similar to Group H, Group I maintained or raised the demand when responding to unanticipated incorrect solutions and lowered the demand when responding to anticipated solutions (correct or incorrect). Group J, on the other hand, presented a different group dynamic. Despite the interventions in the study designed to help PSTs in responding to students, one PST, Erica, consistently lowered the demand. The other two members of Group J, Megan and Rene, responded in ways more consistent with existing research (Smith and Stein 2011) and the premise of this study: They were better able to maintain or raise the cognitive demand in response to anticipated solutions. Their responses to student solutions they had not anticipated varied: At times, they were able to maintain the demand by encouraging students to attend to one another’s work and pressing for justification. At other times, they took responsibility for learning from their students by verifying and correcting solutions and shifted the source of ideas away from students by using leading questions and suggesting strategies.

Factors influencing PST responses

It is important to note that not all PSTs improved consistently in maintaining the cognitive demand (Rene and Megan) or improved at all (Erica) in responding to unanticipated incorrect solutions. Group J did not always agree on how best to respond to student thinking (correct or not). Therefore, they spent part of their planning and reflection effort debating how to respond, rather than hypothesizing and analyzing student thinking and carefully crafting responses. The other two groups tended to have a common vision of what responses were appropriate, even if they at times struggled to provide appropriate responses in-the-moment. Though all three groups made strides in helping students explore and explain their ideas, they all experienced difficulty in helping students question or leverage an incorrect idea toward a more productive strategy. Group J in particular often encouraged students with correct solutions to explain their ideas to students who had incorrect solutions or inefficient strategies, thus limiting one student’s autonomy. The struggles of all three groups in using student thinking to inform their next moves indicate that PSTs did not treat student ideas as objects of inquiry.

The task dialogue was intended to help PSTs treat student ideas as objects to be explored. The purpose of the task dialogue assignment was for PSTs to develop a hypothesis about what a student might be thinking and design a question that would test that hypothesis. The problematic aspect of the task dialogue for the PSTs was to hypothesize how a student might respond to the question in a way that refuted the PSTs’ hypothesis. Instead, PSTs often wrote their dialogues so that their hypothesis was always correct. The assignment proved helpful in allowing prospective teachers to practice how to elicit thinking, but it did not give them an opportunity to challenge or redirect thinking in ways that maintained the cognitive demand. This may help explain why the PSTs struggled with responding to unanticipated solutions.

As the study progressed, Groups I and H began to understand they were expected to explore student thinking and seemed to be more comfortable doing so. When students presented an incorrect idea that was familiar to the PSTs, they seemed to interpret this as getting their planning “right.” In reflections, PSTs often expressed excitement when a student did something they had planned for or did something they had seen another student do. In such instances, they tended to use their planning documents as a script to funnel students in a particular direction. The PSTs may have assumed they understood the mathematical thinking underlying familiar solutions and hence were not motivated to explore them. To some degree, they perceived anticipated student solutions as proof their planning was accurate and unanticipated solutions as an opportunity to show they could try to understand the student. The goal of the course and field work, repeatedly expressed to PSTs as understanding children’s thinking, may have influenced their tendency to shut down anticipated solutions. When presented with unanticipated solutions, PSTs may have viewed them as an opportunity to learn something new about students’ thinking and, hence, as worthy of further exploration. PSTs, in fact, may have treated plans as a list of potential solutions to check off once observed and their revisions to plans as collections of novel student solutions.

I should also point out that PSTs in this study were purposefully selected for their strong content knowledge. Given the importance of content knowledge to navigating uncertainties of teaching (Ball and Bass 2000), it is reasonable to speculate that their strong content knowledge may explain why some were able to become comfortable exploring students’ mathematics when it was unfamiliar. It is possible that, had PSTs with weaker content knowledge been included in the study, results would have shown a higher demand when solutions were anticipated and PSTs did not have the added stress of making in-the-moment pedagogical decisions while also trying to unpack their mathematical understandings of tasks.

Implications for teacher education

A major premise of this study was that if PSTs could anticipate children’s thinking, they could thoughtfully craft responses in advance which would be better than those developed in-the-moment of teaching. Instead, I found that two of the three groups shut down student solutions that they had anticipated (hence lowering the cognitive demand) and improved at maintaining high demand when responding to incorrect solutions that they had not anticipated. Though I tried to minimize instances of PSTs encountering student solutions they had not anticipated, responding to unanticipated incorrect student work in-the-moment of teaching provided the PSTs opportunities to deepen their understanding of responding to students and provided me with authentic opportunities to assess their developing teaching skill. In these situations, they improved at eliciting student thinking, interpreting that thinking and, to a lesser extent, coordinating work between two students. Analyzing PST responses to unanticipated incorrect student work can also provide insight for mathematics teacher educators in supporting our PSTs in these situations.

Early in the study, PSTs used planning documents as a script and PSTs were more comfortable engaging in students’ mathematics when the students’ activities fell within the parameters of their plan. This trend continued for Group J throughout the study and indicates the importance of planning for novices: to help them think through how to respond to a student before having to do so under the pressure of real-time teaching. However, as the study progressed, for some PSTs the plans took on a role of record keeper, amassing a collection of strategies that PSTs understood. It may be that the role of planning changes as teachers gain more experience with students’ mathematics. Thus, mathematics teacher educators should give more attention to planning, not less. Following the ideas of Ball and Bass (2000), it may be helpful to parse planning into more manageable components, to provide specific targeted support in particular aspects of planning (Ding and Carlson 2013), and to change the aspects of planning on which prospective teachers focus over time as they gain more experience with student thinking and enacting high-demand tasks. For example, as teachers become comfortable with how to elicit student explanations (a relatively accessible task for novices in my study), they could then work on coordinating participation between students or connecting and sequencing different student strategies, tasks which proved more challenging for my PSTs.

This study provides an existence proof that prospective teachers can improve (and in a relatively short time) at eliciting and responding to student thinking. However, it also points out that prospective teachers need concentrated and targeted practice with repeated opportunities to rehearse and refine this skill. The field experience in which these PSTs engaged was carefully structured to support their learning. Every aspect of this field experience (planning, reflection, and the work with students) and its university course counterpart focused specifically on learning to facilitate discussions. It was also a concentrated experience in that it eliminated distractors (e.g., classroom management, conflicting K-12 school and university goals, the need to cover particular content) and focused on several essential elements of supporting students in reasoning and making sense of mathematics: implementing a high-demand task, questioning both correct and incorrect student solutions and strategies, and eliciting student explanations and justifications.

Concluding remarks