Abstract

Jaya algorithm (JAYA) is a recently developed metaheuristic algorithm for global optimization problems. JAYA has a very simple structure and only needs the essential population size and terminal condition for solving optimization problems. However, JAYA is easy to get trapped in the local optimum for solving complex global optimization problems due to its single learning strategy. Motivated by this disadvantage of JAYA, this paper presents an improved JAYA, named comprehensive learning JAYA algorithm (CLJAYA), for solving engineering design optimization problems. The core idea of CLJAYA is the designed comprehensive learning mechanism by making full use of population information. The designed comprehensive learning mechanism consists of three different learning strategies to improve the global search ability of JAYA. To investigate the performance of CLJAYA, CLJAYA is first evaluated by the well-known CEC 2013 and CEC 2014 test suites, which include 50 multimodal test functions and eight unimodal test functions. Then CLJAYA is employed to solve five real-world engineering optimization problems. Experimental results demonstrate that CLJAYA can achieve better solutions for most test problems than JAYA and the other compared algorithms, which indicates the designed comprehensive learning mechanism is very effective. In addition, the source code of the proposed CLJAYA can be loaded from https://www.mathworks.com/matlabcentral/fileexchange/82134-the-source-code-for-cljaya.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Engineering optimization is an attractive and challenging field of study. An engineering design problem usually includes the following components: objective function, design variables, feasible solutions and constrained conditions. The feasible solutions are the set of all possible values of the design variables. Solving an engineering optimization problem is to find the best solution meeting the constrained conditions from a large of feasible solutions by an optimization technique. Various numerical optimization methods have been proposed to solve engineering optimization problems. Numerical methods usually require substantial gradient information and are sensitive to the initial solutions (Cheng and Prayogo 2014; Eskandar et al. 2012; Liu et al. 2019). In fact, most real-world engineering optimization problems are very complex, whose objective functions usually have more than one local optimum. Gradient search in these problems is difficult and unstable(Cheng and Prayogo 2014; Lee and Geem 2004). Thus these numerical methods may easily get trapped in the local optima for complex engineering optimization problems(Eskandar et al. 2012). Given the drawbacks of the numerical methods, it is necessary for researchers to design simple and efficient optimization methods for real-world engineering optimization problems. Metaheuristic algorithms are developed under this background.

Briefly, metaheuristic methods commonly operate by combing some defined rules and randomness to simulate natural phenomena (Lee and Geem 2005). From the inspiration source, the reported metaheuristic algorithms can be broadly classified into the following four categories:

-

Evolutionary algorithms. The inspiration source of these algorithms is biological evolution. Differential evolution (Storn and Price 1997) and genetic algorithm (Holland 1975) are two typical members of such algorithms. Differential evolution and genetic algorithm perform the search tasks by simulating some processes of biological evolution.

-

Swarm intelligence algorithms. These algorithms mimic some behavior of animals and plants in nature, such as foraging behavior in particle swarm optimization (Kennedy and Eberhart 1995) and hunting mechanism of grey wolves in grey wolf optimizer (Mirjalili et al. 2014).

-

Physics-based algorithms. These algorithms are inspired from some physical phenomenon in real life, such as the law of gravity in gravitational search algorithm (Rashedi et al. 2009) and the water cycle process and how rivers and streams flow to the sea in water cycle algorithm (Eskandar et al. 2012).

-

Human-related algorithms. These algorithms are inspired from human activity, such as the artificial nervous networks in neural network algorithm (Sadollah et al. 2018) and the teaching activities in teaching–learning-based optimization (Rao et al. 2011).

Although many metaheuristic algorithms have been successfully applied to solve a lot of real-world engineering optimization problems, there remains a need for developing simple and efficient metaheuristic algorithms without any effort for fine tuning initial parameters due to the following two reasons:

-

Most reported metaheuristic algorithms all need special parameters. The parameters of metaheuristic algorithms consist of common parameters and special parameters. Every metaheuristic algorithm needs common parameters, such as population size and stopping criterion (e.g. the maximum number of function evaluations, the maximum number of iterations or the defined threshold value). The parameters reflecting the characteristics of algorithms can be called special parameters, such as differential amplification factor and crossover probability in differential evolution (Storn and Price 1997), discovery probability in cuckoo search (Yang and Deb 2014), and cognitive factor and social factor in particle swarm optimization (Kennedy and Eberhart 1995). The major drawbacks of metaheuristic algorithms with special parameters can be summarized as follows: (1) it is very hard task to set the optimal values of these parameters for unknown optimization problems; (2) different optimization problems usually need different optimal values for these parameters to get the optimal solutions. Given the two drawbacks, the applications of metaheuristic algorithms with special parameters will be restricted. To overcome the two drawbacks, developing metaheuristic algorithms without special parameters is a very efficient method.

-

There is a very important theory in the optimization field, which is called the No Free Lunch (NFL) theorem(Wolpert and Macready 1997). According to NFL theorem, a metaheuristic algorithm may obtain very promising results on a set of optimization problems while it may show poor performance on another set of optimization problems. In other words, no single metaheuristic algorithm is suitable for solving all optimization problems. Thus, more studies are very necessary for researcher to develop new optimization algorithms for solving different types of real-world engineering optimization problems.

Motivated by the mentioned reasons, this work reports a new metaheuristic method without special parameter, named comprehensive learning Jaya algorithm (CLJAYA), for solving engineering design optimization problems. CLJAYA is an improved version of Jaya algorithm (JAYA)(Rao 2016), which is aiming at enhancing the global search ability of JAYA by the designed comprehensive learning mechanism with three different learning strategies. Learning strategy-I in CLJAYA inherits the feature of JAYA. Learning strategy-II introduces the current mean solution to increase the chance of JAYA to escape from the local optima. Learning strategy-III is guided by the current best solution to accelerate the convergence speed of JAYA. Obviously, compared with JAYA, CLJAYA can use population information more efficiently to generate the next generation population. To sum up, the contributions of this work are presented as follows:

-

A novel optimization algorithm called CLJAYA algorithm is proposed.

-

A comprehensive learning mechanism consisting of three different learning strategies is built.

-

CLJAYA is evaluated by the well-known CEC 2013 and CEC 2014 test suites.

-

CLJAYA is employed to solve five real-world engineering design optimization problems.

The rest of this paper is organized as follows: Sect. 2 presents the brief introduction of JAYA. CLJAYA is described in Sect. 3. CLJAYA is checked by CEC 2013 and CEC 2014 test suites in Sect. 4. CLJAYA is used for solving five real-world engineering optimization problems in Sect. 5. Finally, conclusions and further work are made in Sect. 6.

Jaya algorithm

JAYA has a very simple learning strategy to perform the search process, which can be stated as follows. Let \({\mathbf{X}}\) is a population consisting of \(N\) individuals, i.e. \({\mathbf{X}}{ = }\left[ {{\mathbf{x}}_{1} ,{\mathbf{x}}_{2} ,{\mathbf{x}}_{3} , \ldots ,{\mathbf{x}}_{N} } \right]\). Assume there are \(D\) considered variables for the given problem, i.e. \({\mathbf{x}}_{i} { = }\left\{ {{\mathbf{x}}_{i,1} ,{\mathbf{x}}_{i,2} ,{\mathbf{x}}_{i,3} , \ldots ,{\mathbf{x}}_{i,D} } \right\}\), \(i = 1,2,3, \ldots ,N\). In JAYA, the position of the ith individual can be updated by

where \(\kappa_{1}\) and \(\kappa_{2}\) are two random numbers between 0 and 1 subject to uniform distribution, \({\mathbf{v}}_{i}\) is the candidate position of the ith individual, \({\mathbf{x}}_{{{\text{BEST}},j}}\) is the value of the jth variable in the current best individual, and \({\mathbf{x}}_{{{\text{WORST}},j}}\) is the value of the jth variable in the current worst individual. According to the authors of JAYA(Rao 2016), the second and third terms on right-hand side of Eq. (1) indicate the tendency of the solution \({\mathbf{x}}_{i}\) to move closer to the best solution and avoid the worst solution, respectively. To find the optimal solution with a fast speed, the final position of the ith individual at this iteration is selected from the candidate position \({\mathbf{v}}_{i}\) and \({\mathbf{x}}_{i}\), which can be expressed as

The proposed CLJAYA

This section presents the proposed CLJAYA in detail. The framework of CLJAYA is shown in Fig. 1. As can be seen from Fig. 1, updating population in CLJAYA is completed by the designed comprehensive learning mechanism with three different learning strategies. Thus, we first introduce the motivation of the designed comprehensive learning mechanism in “Motivation of CLJAYA” section. Then, the comprehensive learning of CLJAYA is given in The implementation of CLJAYAThe implementation of CLJAYA” section.

Motivation of CLJAYA

JAYA has two drawbacks that may result in its weak ability of avoiding the local optimum, which can be summarized as follows in detail:

-

JAYA doesn’t make full use of population information. As shown in Eq. (1), JAYA has only one learning strategy, which employs the current best solution and the current worst solution to guide the search direction of the population. Thus once the current best individual is trapped into a local optimum, the other individuals will be attracted to approach this local optimum gradually based on Eq. (1). This case will cause the loss of population diversity. Therefore, it is very difficult for the population to escape from the local optimum.

-

The effectiveness of the search operator in JAYA may be tempered in solving optimization problems with search space with positive numbers. In Eq. (1), the absolute value symbol is very critical in keeping population diversity. Generally, the values of the design variables of the real-world engineering optimization problems are more than 0, which means the absolute value symbol is invalid for solving these problems. That is, Eq. (1) can be rewritten as

$$ {\mathbf{v}}_{i,j} = {\mathbf{x}}_{i,j} + \kappa_{1} \times ({\mathbf{x}}_{{{\text{BEST}},j}} - {\mathbf{x}}_{i,j} ) - \kappa_{2} \times ({\mathbf{x}}_{{{\text{WORST}},j}} - {\mathbf{x}}_{i,j} ),i = 1,2,3, \ldots ,N,j = 1,2,3, \ldots ,D $$(3)Note that there are the following two risks in Eq. (3): (1) if the ith individual is equal to the current optimal individual, the second term on right-hand side of Eq. (3) is 0, which is of no help to search better solution; (2) if the ith individual is equal to the current worst individual, the third term on right-hand side of Eq. (3) is 0, which also does nothing to find better solution. Obviously, when the mentioned two cases happen, the search ability of JAYA will be reduced.

The above mentioned two disadvantages of JAYA motivate us to design an improved version of JAYA with better global search ability.

The implementation of CLJAYA

Given the disadvantages of the learning strategy in JAYA, a comprehensive learning mechanism consisting of three different learning strategies is built to improve the global search ability of JAYA. The three different learning strategies can be described as:

-

Learning strategy-I. This learning strategy is based on the current optimal individual and the current worst individual, which inherits the feature of JAYA and can be denoted as

$$ {\mathbf{v}}_{i,j} { = }{\mathbf{x}}_{i,j} + \varphi_{1} \times ({\mathbf{x}}_{{{\text{BEST}},j}} - \left| {{\mathbf{x}}_{i,j} } \right|) - \varphi_{2} \times ({\mathbf{x}}_{{{\text{WORST}},j}} - \left| {{\mathbf{x}}_{i,j} } \right|) $$(4)where \(\varphi_{1}\) and \(\varphi_{2}\) are two random numbers subject to standard normal distribution. Here, it should be pointed out that Eq. (4) uses two random number (i.e. \(\varphi_{1}\) and \(\varphi_{2}\)) with standard normal distribution while Eq. (1) employs two random numbers (i.e. \(\kappa_{1}\) and \(\kappa_{2}\)) with uniform distribution. Compared with random numbers with uniform distribution, random numbers with standard normal distribution have the larger amplitude of variation, which can extend the search space of the individual. Thus, Eq. (4) has more chance to find better solutions than Eq. (1).

-

Learning strategy-II. As an effective indicator of evaluating population distribution, the mean position of the current population has been employed by many optimization algorithms (Cheng and Jin 2015; Rao et al. 2012) to improve their search ability due to the following reason. With the increasing of iteration times, most individuals have gathered around the current optimal individual to perform the local exploitation. The rest few individuals (lagged individuals) are away from the current optimal individual, which perform the task of global exploration. In the search process, the mean position of the current population is always moving. Thus once the population is trapped into local optimum, the lagged individuals guided by the mean position of the current population can have more chance to escape from the local optimum. Given this, the learning strategy-II is designed based on the current optimal individual and the mean position of the current population, which can be defined as

$$ {\mathbf{v}}_{i,j} \,{ = }\,{\mathbf{x}}_{i,j} + \varphi_{3} \times ({\mathbf{x}}_{{{\text{BEST}},j}} - \left| {{\mathbf{x}}_{i,j} } \right|) - \varphi_{4} \times ({\mathbf{M}} - \left| {{\mathbf{x}}_{i,j} } \right|) $$(5)where \(\varphi_{3}\) and \(\varphi_{4}\) are two random numbers subject to standard normal distribution, and \({\mathbf{M}}\) is the mean position of the current population that can be written as

$$ {\mathbf{M}}\,{ = }\,\frac{1}{N}\sum\limits_{i = 1}^{N} {{\mathbf{x}}_{i} } $$(6)Note that \(\varphi_{3}\) and \(\varphi_{4}\) in Eq. (5) play the same role with \(\varphi_{1}\) and \(\varphi_{2}\) in Eq. (4).

-

Learning strategy-III. To accelerate the convergence speed, the current optimal individual is considered as a leader in CLJAYA, which can be expressed as

$$ {\mathbf{v}}_{i,j}\, { = }\,{\mathbf{x}}_{i,j} + \varphi_{5} \times ({\mathbf{x}}_{{{\text{BEST}},j}} - {\mathbf{x}}_{i,j} ) + \varphi_{6} \times ({\mathbf{x}}_{p,j} - {\mathbf{x}}_{q,j} ) $$(7)where \(\varphi_{5}\) and \(\varphi_{6}\) are two random numbers between 0 and 1 subject to uniform distribution, and \(p\) and \(q(p \ne q \ne i)\) are two random integers between 1 and \(N\). In addition, considering the case where \({\mathbf{x}}_{i}\) is the current best solution, the second term on right-hand side of Eq. (7) is 0. Thus a random perturbed term is added to Eq. (7) to avoid this case.

Learning strategy-I, learning strategy-II, and learning strategy-III have the same importance for CLJAYA and they should be assigned the same selected probability. Given this, the designed comprehensive learning mechanism can be indicated as

where \(p_{{{\text{switch}}}}\) is called switch probability and it is uniformly distributed on the interval from 0 to 1.

The built comprehensive learning mechanism as shown in Eq. (8) is the core idea of CLJAYA. Note that there are no any extra parameters in the built mechanism, which indicates the proposed CLJAYA still inherits the advantages of JAYA, i.e. simple structure and only needs essential parameters. In addition, like JAYA, the population \({\mathbf{X}}\) is initialized by

where \(\chi\) is a random number between 0 and 1 subject to the uniform distribution. Figure 2 shows the flow chart of the proposed CLJAYA.

Applications of CLJAYA on numerical optimization

In this section, the performance of CLJAYA on CEC 2013 and CEC 2014 test suites is checked by comparing with six state-of-the-art metaheuristic algorithms.

As listed in Tables 1–2, the solved test suites have been widely used to test the performance of many metaheuristic algorithms (Li et al. 2015; K.S. and Murugan 2017; Tanweer et al. 2016; Xiang et al. 2019; Zhang et al. 2019). CEC 2013 test suite consists of five unimodal functions (F1–F5), 15 simple multimodal functions (F6–F20) and eight composition functions (F21–F28). CEC2014 test suite includes three unimodal functions (F29–F31), 13 simple multimodal functions (F32–F44) and 14 hybrid functions (F45–F58). Compared with unimodal functions, multimodal functions with more than one local optimal solutions are more complex. Note that composition functions in CEC 2013 test suite and hybrid functions in CEC 2014 test suite are also multimodal functions. That is, 23 of 28 functions in CEC 2013 test suite and 27 of 30 functions in CEC 2014 test suite are multimodal functions. Therefore, the two test suites are very suitable for testing the performance of CLJAYA in solving complex optimization problems. In addition, the detailed information for the two test suites can be found in https://www.ntu.edu.sg/home/EPNSugan/.

CLJAYA is compared with six state-of-the-art metaheuristic algorithms to validate the competitive performance of CLJAYA. The selected algorithms are closely associated with CLJAYA in terms of parameters, which include JAYA, teaching–learning-based optimization (TLBO) (Rao et al. 2012), neural network algorithm (NNA) (Sadollah et al. 2018), grey wolf optimizer (GWO)(Mirjalili et al. 2014), whale optimization algorithm (WOA) (Mirjalili and Lewis 2016) and sine cosine algorithm (SCA) (Mirjalili 2016). JAYA is the basis of our proposed CLJAYA. TLBO is a recently proposed metaheuristic algorithm, which is inspired by the traditional teaching method in the classroom. NNA is one of the latest metaheuristic algorithms and its motivation is the artificial neural networks and biological nervous systems. When solving optimization problems, JAYA, NNA and TLBO need the same parameters (i.e. population size and terminal condition) with CLJAYA. GWO, WOA and SCA are inspired by the hunting behavior of grey wolves, the social behavior of humpback whales and the sine cosine theory, respectively. Although the required parameters (i.e. population size and terminal condition) of GWO, WOA and SCA are the same with CLJAYA, there are control parameters related to the terminal condition in the three algorithms. These control parameters can be found in the corresponding references.

In order to make a fair comparison, population size and the maximum number of function evaluations for CLJAYA and the compared algorithms were set to 20 and 300,000, respectively. In addition, every algorithm for every test function was executed 50 independent runs and then the mean absolute error (MEAN) and standard variance (STD) were recorded. The results are presented in Tables 3 and 6. MEAN can be defined by

where \(R_{{\text{N}}}\) is the number of independent runs, \({\mathbf{x}}_{{{\text{Best}},i}}\) is the obtained optimal solution at the \(i{\text{th}}\) run, and \({\mathbf{x}}^{*}\) is the real optimal solution. Besides, Wilcoxon signed-rank test is employed to determine whether there are significance differences between the results obtained by CLJAYA and the compared algorithms on CEC 2013 and CEC 2014 test suites. More specifically, the mean results achieved from 50 independent runs for each algorithm are subjected to this statistical test with a level of significance α = 0.05. Tables 5 and 7 show the results produced by Wilcoxon signed-rank test. In Tables 5 and 7, symbol ‘ + ’ indicates that with 95% certainty the null hypothesis is rejected (p value < 0.05) and CLJAYA outperforms the compared algorithm; symbol ‘−’ means that the null hypothesis is rejected and CLJAYA is inferior to the compared algorithm; symbol ‘ = ’ demonstrates there is no statistical different between CLJAYA and the compared algorithm (p value ≥ 0.05).

Benchmark problem set I: CEC 2013 test suite

Table 3 presents the statistical results obtained by CLJAYA and the compared algorithms on CEC 2013 test suite. According to Table 3, CLJAYA can offer the best solutions on nearly half of functions, i.e. F1, F3, F5, F6, F10, F11, F12, F13, F14, F15, F19 and F21. GWO shows a strong competitiveness, which can obtain the best solutions on eight functions, i.e. F7, F9, F16, F18, F24, F25, F26 and F28. Moreover, TLBO, NNA and WOA can find the optimal solutions on two (i.e. F2 and F4), five (i.e. F17, F20, F22, F23 and F27) and one (i.e. F8) functions, respectively.

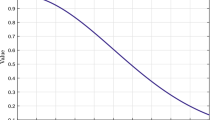

Besides, SCA and JAYA cannot achieve the optimal solutions on any functions. Based on MEAN from Table 3, Table 4 displays the sorted results obtained by all applied algorithms on CEC2013 test suite according to “tied rank”(Rakhshani and Rahati 2017). Moreover, according to Table 4, Fig. 3 shows the average rank of the applied algorithms. Based on Fig. 3, the applied algorithms can be sorted from best to worst in the following order: CLJAYA, NNA, TLBO, GWO, JAYA, WOA and SCA.

Table 5 displays the Wilcoxon signed-rank test results on CEC 2013 test suite. From Table 5, CLJAYA have a significant advantage over WOA, SCA and JAYA, which can offer better solutions than WOA, SCA and NNA on 23, 25 and 24 functions, respectively. Moreover, NNA, GWO and TLBO is superior to CLJAYA on six (i.e. F15, F16, F19, F23, F25 and F26), nine (i.e. F7, F9, F15, F17, F23, F24, F25, F26, F27 and F28) and two (F4 and F25) functions, respectively. But CLJAYA outperforms NNA, GWO and TLBO on 14 (i.e. F1, F2, F3, F4, F5, F6, F7, F9, F10, F11, F12, F13, F18 and F20), 14 (i.e. F1, F2, F3, F4, F5, F6, F10, F11, F12, F14, F18, F19, F21 and F28) and 15 (i.e. F1, F3, F5, F6, F7, F9, F10, F11, F12, F13, F14, F17, F18, F19 and F28) functions, respectively.

To observe the impact of the designed comprehensive learning mechanism on convergence performance of JAYA, Fig. 4 shows several convergence curves obtained by JAYA and CLJAYA on CEC 2013 test suite. The selected functions are F1, F2, F9, F10, F11, F12, F13, F14, F15, F17, F18, F20, F21, F22, F23, F24, F27 and F28. Obviously, 16 of 18 selected functions are complex multimodal functions. As shown in Fig. 4, CLJAYA can find better solutions with faster speed compared with JAYA on these functions, which demonstrates the designed comprehensive learning mechanism can enhance the ability of JAYA to escape from the local optimum.

Benchmark problem set II: CEC 2014 test suite

The statistical results achieved by CLJAYA and the compared algorithms on CEC 2014 test suite are shown in Table 6. From Table 6, CLJAYA can obtain the best solutions on 11 functions, i.e. F29, F30, F32, F34, F35, F36, F37, F38, F41, F43, and F47. TLBO also shows excellent global search ability, which can offer the best solutions on nine functions, i.e. F31, F45, F46, F48, F49, F50, F51, F53 and F58. NNA, GWO, WOA, and SCA can get the best solutions on four (i.e. F33, F40, F54 and F57), five (i.e. F34, F44, F52, F55 and F56), and one (i.e. F42), respectively. SCA and JAYA can’t obtain the best solutions on any functions. According to MEAN from Table 6, Table 7 shows the sorted results of “tied rank” obtained by all applied algorithms on CEC2014. Moreover, according to Table 7, Fig. 5 gives the average rank of the applied algorithms. As can be seen from Fig. 5, the applied algorithms can be sorted from best to worst in the following order: TLBO, CLJAYA, NNA, GWO, WOA, JAYA and SCA. Although TLBO is the best of the applied algorithms, CLJAYA and TLBO are very close in terms of the average rank, which means CLJAYA and TLBO have the similar performance on CEC 2014 test suite.

The results produced by Wilcoxon signed-rank test for CLJAYA and the compared algorithms on CEC 2014 test suite are displayed in Table 8. According to Table 8, CLJAYA is far superior to NNA, WOA, SCA and JAYA, which can find better solutions than NNA, WOA, SCA and JAYA on 21, 21, 25 and 25 functions, respectively. Moreover, GWO and TLBO can offer better solutions than CLJAYA on seven (i.e. F39, F40, F44, F52, F55, F56 and F57) and 12 (i.e. F30, F31, F39, F44, F45, F46, F48, F52, F53, F56, F57 and F58) functions, respectively. Note that CLJAYA can beat GWO and TLBO on 19 (i.e. F29, F30, F31, F32, F33, F35, F36, F37, F38, F42, F43, F45, F46, F47, F48, F49, F51, F53 and F54) and 11 (i.e. F32, F33, F34, F35, F36, F37, F38, F43, F47, F51 and F54) functions, respectively.

In addition, Fig. 6 shows several convergence curves obtained by JAYA and CLJAYA on CEC2014 test suite to test the effectiveness of the designed comprehensive learning mechanism. The selected functions consist of two unimodal functions (i.e. F29 and F30) and sixteen multimodal functions (i.e. F32, F33, F34, F35, F36, F37, F38, F39, F40, F41, F42, F44, F47, F52, F53 and F55). From Fig. 6, CLJAYA is superior to JAYA on these functions in terms of solution quality and convergence speed. That is, the designed comprehensive learning mechanism is very effective for enhancing the ability of JAYA escaping from the local optimum.

Discussion for the effectiveness of the improved strategies

In this section, we discuss the effectiveness of the improved strategies based on the experimental results obtained by CLJAYA on CEC 2013 and CEC 2014 test suites.

In order to improve the global search ability of JAYA for complex optimization problems, three learning strategies are designed in CLJAYA. Learning strategy-I is similar with JAYA. In learning strategy-II, mean position is introduced to enhance the chance of CLJAYA to escape from local optima. Learning-strategy-III is to accelerate the convergence speed of CLJAYA, which is guided by the current best solution. In order to study the performance of CLJAYA for complex optimization problems, CEC 2013 and CEC 2014 test suites are employed. Note that the two test suites include 50 multimodal functions and eight unimodal functions, which are very suitable for checking the performance of CLJAYA in solving complex optimization problems.

According to MEAN shown in Tables 3 and 6, JAYA only outperforms CLJAYA on F8, F16, F25, F26, F56, F57 and F58. CLJAYA can beat JAYA on the rest 51 test functions. In addition, Figs. 3 and 4 present the convergence curves obtained by JAYA and CLJAYA for more than 60% of test functions. Based on Fig. 3 and 4, CLJAYA shows better convergence performance than JAYA on these test functions in terms of convergence speed and solution quality. Besides, according to Fig. 3 and 4, JAYA tends to premature convergence while CLJAYA shows strong ability of escaping from the local optima for solving complex optimization problems. Obviously, benefiting from the designed learning strategies, CLJAYA is significant superior to JAYA for the used two test suites in terms of overall optimization performance. More specifically, the designed learning strategies can make full use of population information including the current best solution, the current worst solution, the current mean solution and some current random solutions, which is very helpful for keeping population diversity and enhancing the global search ability of CLJAYA.

Based on the above discussion, the improved strategies introduced to JAYA are very successful and achieve the expected effect.

Applications of CLJAYA on constrained engineering optimization

In this section, CLJAYA is employed to solve five practical engineering design optimization problems. Section 4 has demonstrated the effectiveness of the improved strategies. That is, the improved strategies can significantly enhance the global search ability of JAYA for solving complex optimization problems, which lays a good foundation for using CLJAYA to solve practical complex engineering optimization problems. This section is divided into two parts. Section 5.1 shows the mathematical model of constrained engineering problems and the used mechanism addressing the constrained conditions. Section 5.2 presents the experimental results obtained by CLJAYA for five practical constrained engineering optimization problems.

The mathematical model of constrained engineering problems

Although there are many different types of engineering optimization problems in the real world, their mathematical models all can be formulated as follows:

where the objective function is defined by \(f\left( {\mathbf{x}} \right)\) and \({\mathbf{x}} = \left( {x_{1} ,x_{2} , \ldots ,x_{D} } \right)^{T}\) is a one-dimensional vector of \(D\) variables.\(l_{i}\) and \(u_{i}\) are the lower and upper limits of the ith variable, respectively.\( \, h_{t} ({\mathbf{x}})\) and \( \, g_{k} ({\mathbf{x}})\) are the tth equality constraint and kth inequality constraint, respectively.\(m\) and \(n\) are the number of equality constraints and inequality constraints, respectively. Moreover, although Eq. (11) describes the minimization problem, the maximization problem can be transformed into minimization one as \(- f\left( {\mathbf{x}} \right)\). A major barrier in solving a constrained engineering optimization problem is how to handle equality constraints and inequality constraints of the given problem. We transform the constraint optimization problem into an unconstrained optimization problem by the penalty function approach as done in (Gandomi et al. 2011; Gandomi et al. 2013a, b), which can be expressed as

where

where \(\eta_{i} \left( {1 \le \eta_{i} } \right)\) and \(\xi_{j} \left( {0 \le \xi_{j} } \right)\) are penalty factors, and \(P\) is the total penalty function. \(H_{i} ({\mathbf{x}})\) and \(G_{j} ({\mathbf{x}})\) are the penalty functions of the ith equality constraint and the jth inequality constraint, respectively. The penalty factors (\(\eta_{i}\) and \(\xi_{j}\)) should be large enough based on the specific optimization problem (Gandomi et al. 2011), which are set to 10e20 in our experiments. As can be seen from Eqs. (12–14), if the ith equality constraint is met,\(H_{i} ({\mathbf{x}})\) is equal to 0 and has no contribution to \(P\); if the ith equality constraint is violated,\(H_{i} ({\mathbf{x}})\) will significantly increase and has a significant impact on \(P\). This phenomenon can also happen in \(G_{j} ({\mathbf{x}})\).

Experiential results on constrained engineering problems

In this section, CLJAYA is used to solve five constrained engineering optimization problems, i.e. welded beam design problem, tension/compression spring design problem, speed reducer design problem, three-bar truss design problem and car impact design problem. In order to show the superiority of CLJAYA for these problems, the obtained results by CLJAYA are compared with those of JAYA and recently reported results. In addition, population size of JAYA and CLJAYA was set to 20 for all test cases. In addition, for every test case, JAYA and CLJAYA were executed 50 independent runs and then the worst value, the mean value, the best value and the standard variance were obtained as shown in Tables 9, 10, 11, 12 and 13. In the five tables, “Worst”, “Mean”, “Best”, “STD”, “NFEs” and “NA” stand for the worst value, the mean value, the best value, the standard variance, the number of function evaluations and not available, respectively.

Case 1: Welded beam design problem

This is a classical engineering design optimization problem, which was proposed by Coello (Coello 2000a, b). Solving this problem is to design a welded beam with the minimum cost. The formula of this problem can be found in Appendix A.1. The optimization constraints of this problem are shear stress \((\tau )\), bucking load \((P_{c} )\), bending stress in the beam \((\theta )\) and deflection rate \((\delta )\). The design variables of this problem consist of the height of the bar \(t(x_{1} )\), the thickness of the weld \(h(x_{2} )\), the length of the bar \(l(x_{3} )\) and the thickness of the bar \(b(x_{4} )\).

Table 9 shows the results for the welded beam design problem obtained by CAEP (Coello and Becerra 2004), CPSO (Krohling and Coelho 2006), HPSO (Amirjanov 2006), SC (Ray and Liew 2003), DE (Lampinen 2002), PSO-DE (Liu et al. 2010), MGA (Coello 2000a, b), WCA (Eskandar et al. 2012), QS (Zhang et al. 2018), NDE (Mohamed 2018), TLNNA(Zhang et al. 2020), DPSO (Liu et al. 2019), MHS-PCLS (Yi et al. 2019), εDE-HP (Yi et al. 2020), hHHO-SCA (Kamboj et al. 2020), IAFOA (Wu et al. 2018), MRFO (Zhao et al. 2020), EO (Faramarzi et al. 2020), I-ABC greedy (Sharma and Abraham 2020), UFA (Brajević and Ignjatović 2019), JAYA and CLJAYA. In Table 9, if one algorithm can get the smallest Best, which means this algorithm can obtain a better solution to design the welded beam than the compared algorithms. According to Table 9, CAEP, HPSO, PSO-DE, QS, NDE, TLNNA, MHS-PCLS, εDE-HP, MRFO, UFA, I-ABC greedy and CLJAYA can find the best solution, i.e.1.724852. Note that CLJAYA only consumes 5,000 function evaluations. However, the number of function evaluations consumed by CAEP, HPSO, PSO-DE, QS, NDE, TLNNA, MHS-PCLS, εDE-HP, MRFO and I-ABC greedy is 50,020, 81,000, 66,600, 20,000, 8,000, 9,000, 10,000, 20,000, 30,000, and 14,500 respectively. Obviously, CLJAYA has a significant advantage over CAEP, HPSO, PSO-DE, QS, NDE, TLNNA, MHS-PCLS, εDE-HP, MRFO and I-ABC greedy in terms of computational efficient. In addition, UFA only needs 2,000 function evaluations, which is more efficient than CLJAYA. Besides, CLJAYA is superior to JAYA on Best, Worst, Mean and STD, which means CLJAYA is more suitable for solving the welded beam design problem compared with JAYA.

Case 2: Tension/compression spring design problem

The tension/compression spring design problem is introduced in (Arora 1989). The goal of this problem is to minimize the weight of a tension/compression spring. This problem includes three design variables, i.e. the wire diameter \(p\)\((x_{1} )\), the mean coil diameter \(D\) \((x_{2} )\) and the number of active coils \(d\)\((x_{3} )\). Moreover, four constraints need to be considered. The formula of this problem can be found in Appendix A.2.

Table 10 presents the results for the tension/compression spring design problem obtained by GA2 (Coello 2000a, b), GA3 (Coello and Mezura Montes 2002), CPSO (Krohling and Coelho 2006), HPSO (Liu et al. 2010), PSO (Liu et al. 2010), CAEP (Coello and Becerra 2004), DE (Lampinen 2002), DEDS (Zhang et al. 2008), HEAA (Wang et al. 2009), PSO-DE (Liu et al. 2010), PVS(Savsani and Savsani 2016), QS (Zhang et al. 2018), NDE (Mohamed 2018), TLNNA (Zhang et al. 2020), DPSO (Liu et al. 2019), MHS-PCLS (Yi et al. 2019), εDE-HP (Yi et al. 2020), hHHO-SCA (Kamboj et al. 2020), IAFOA (Wu et al. 2018), MRFO (Zhao et al. 2020), EO(Faramarzi et al. 2020), DSLC-FOA (Du et al. 2018), JAYA, UFA, I-ABC greedy and CLJAYA. In Table 10, if one algorithm can achieve the smallest Best, which means this algorithm can offer a better solution to design the tension/compression spring than the compared algorithms. From Table 10, DEDS, HEAA, PSO-DE, PVS, QS, NDE, TLNNA, DPSO, MHS-PCLS, εDE-HP, IAFOA, EO, UFA, I-ABC greedy and CLJAYA achieve the optimal objective function value, i.e. 0.012665. The consumed number of function evaluations for DEDS, HEAA, PSO-DE, PVS, QS, NDE, TLNNA, DPSO, MHS-PCLS, εDE-HP, IAFOA, EO and CLJAYA are 24,000, 24,000, 24,950, 8,000, 8,000, 24,000, 18,000, 30,000, 10,000, 20,000, 40,000, 15,000 and 6,000, respectively. Obviously, CLJAYA can find the optimal solution with a faster speed compared with DEDS, HEAA, PSO-DE, PVS, QS, NDE, TLNNA, DPSO, MHS-PCLS, εDE-HP, IAFOA and EO. Note that, UFA and I-ABC greedy can consume fewer function evaluations than CLJAYA. In addition, JAYA is inferior to CLJAYA on all considered four indicators.

Case 3: Speed reducer design problem

As a common engineering design problem, the goal of this problem is to minimize the weight of speed reducer subject to constraints on bending stress of the gear teeth, surface stress, transverse deflections of the shafts, and stresses in the shafts(Brajević and Ignjatović 2019). This problem has seven design variables: the face width \(b(x_{1} )\), module of teeth \(m(x_{2} )\), number of teeth in the pinion \(z(x_{3} )\), length of the first shaft between bearings \(l_{1} (x_{4} )\), length of the second shaft between bearings \(l_{2} (x_{5} )\), and the diameter of first \(d_{1} (x_{6} )\) and second shafts \(l_{2} (x_{7} )\). Moreover, 11 constraints are included in the problem. Note that, it should be pointed out that this problem has two versions. The only difference between the two versions is that the limits of the variable \(x_{5}\)(Brajević and Ignjatović 2019; Savsani and Savsani 2016). The variable \(x_{5}\) lies between 7.3 and 8.3 for the first version while it ranges between 7.8 and 8.3. This experiment is to solve the first version. The formula of this problem can be found in Appendix A.3.

Table 11 displays the results obtained by SC (Ray and Liew 2003), PSO-DE (Liu et al. 2010), DELC (Wang et al. 2009), DEDS (Zhang et al. 2008), HEAA(Wang et al. 2009), FFA (Baykasoğlu and Ozsoydan 2015), MBA (Sadollah et al. 2013), CSA (Askarzadeh 2016), PVS(Savsani and Savsani 2016), WCA (Eskandar et al. 2012), QS (Zhang et al. 2018), NDE (Mohamed 2018), TLNNA (Zhang et al. 2020), DPSO (Liu et al. 2019), MHS-PCLS (Yi et al. 2019), εDE-HP (Yi et al. 2020), hHHO-SCA (Kamboj et al. 2020), IAFOA(Wu et al. 2018), MRFO (Zhao et al. 2020), UFA, I-ABC greedy, JAYA and CLJAYA. In Table 11, one algorithm with the smallest Best can give a better solution to design the speed reducer than the compared algorithms. As presented in Table 11 I-ABC greedy is the best, which can find the optimal solution, i.e. 2994.471032. In addition, DELC, DEDS, WCA, QS, PVS, NDE, TLNNA, εDE-HP, UFA, JAYA and CLJAYA can find the same optimal solution, i.e. 2994.471066. Note that CLJAYA is superior to JAYA on the rest indicators including Worst, Mean and STD, which indicates CLJAYA has a better comprehensive performance than JAYA for speed reducer design problem.

Case 4: Three-bar truss design problem

The goal of this problem is to minimize the volume of a statistically loaded three-bar truss subject to stress constraints on each of the truss members by adjusting cross sectional areas \((x_{1} ,x_{2} )\). Moreover, three constraints also need to be taken into account. The formula of this problem can be found in Appendix A.4.

Table 12 displays the results for the three-bar truss design problem obtained by SC (Ray and Liew 2003), PSO-DE (Liu et al. 2010), DSS-MDE (Zhang et al. 2008), MBA(Sadollah et al. 2013), DEDS (Zhang et al. 2008), HEAA (Wang et al. 2009), WCA (Eskandar et al. 2012), MFO (Mirjalili 2015), MVO(Mirjalili et al. 2016), GOA (Saremi et al. 2017), SFO (Shadravan et al. 2019), PRO (Samareh Moosavi and Bardsiri 2019), hHHO-SCA (Kamboj et al. 2020), JAYA and CLJAYA. In Table 12, one algorithm with the smallest Best can find a better solution to design the three-bar truss than the compared algorithms. As can be seen from Table 12, PSO-DE, DSS-MDE, DEDS, HWAA, WCA, CLJAYA can offer the optimal solution. By observing Table 12, PSO-DE, DSS-MDE, DEDS and HEAA consume more than 10,000 function evaluations while CLJAYA only consumes 5,000 function evaluations. Moreover, WCA shows strong competitiveness with 5,250 function evaluations. Note that CLJAYA is slight superior to WCA in terms of convergence speed. Besides, CLJAYA outperforms JAYA on Worst, Mean, Best and STD.

Case 5: Car impact design problem

The design problem of car side impact is stated by Gu et al. (Gu et al. 2001). On the foundation of European Enhanced Vehicle-Safety Committee procedures, a car is exposed to a side-impact (Gandomi et al. 2011). The goal of this case is to minimize the weight related to nine influence parameters including thickness of B-Pillar inner, B-Pillar reinforcement, floor side inner, cross members, door beam, door beltline reinforcement and roof rail \(\left( {x_{1} { - }x_{7} } \right)\), materials of B-Pillar inner and floor side inner \(\left( {x_{8} { - }x_{9} } \right)\) and barrier height and hitting position \(\left( {x_{10} { - }x_{11} } \right)\). Moreover, ten inequality constraints associated with the car side impact design problem need to be considered. The formula of this problem can be found in Appendix A.5.

Table 13 gives the best solutions obtained by PSO (Gandomi et al. 2013a, b), DE (Gandomi et al. 2013a, b), GA (Gandomi et al. 2013a, b), FA (Gandomi et al. 2011), CS (Gandomi et al. 2013a, b), TLBO (Huang et al. 2015), TLCS (Huang et al. 2015), MHS-PCLS (J. Yi et al. 2019), JAYA and CLJAYA. In Table 13, the algorithm with the smallest weight can find the better solution to the car impact design problem compared with the other algorithms. From Table 13, CS achieves the optimal solution. Note that DE, FA and CLJAYA can offer very competitive solutions.

Conclusions and further work

This paper presents an improved Jaya algorithm called comprehensive learning Jaya algorithm (CLJAYA) for solving engineering optimization problems. The proposed CLJAYA has a very simple structure and only depends on the essential population size and terminal condition for solving optimization problems. In CLJAYA, the designed comprehensive learning mechanism with three different learning strategies is introduced to enhance its ability of escaping from the local optimum. In order to investigate the effectiveness of the improved strategies, CLJAYA is used to solve CEC 2013 test suite, CEC 2014 test suite and five challenging engineering optimization problems. In addition, CEC 2013 and CEC 2014 test suits have 58 test functions. Note that 50 of 58 test functions are multimodal functions. Besides, the used five engineering optimization problems need to meet the given complex constraints. Thus, these test cases are very suitable for examining the performance of CLJAYA for complex optimization problems. The experimental results prove the superiority of CLJAYA in solving complex optimization problems by comparing with JAYA and some state-of-the-art algorithms in terms of solution quality and computational efficiency. In other words, the designed strategies for improving JAYA are very effective.

Further work will focus on the following two aspects. On the one hand, CLJAYA is a metaheuristic algorithm without special parameters and has a great potential to be widely used. Thus, we intend to use CLJAYA to solve more practical engineering optimization problems, such as flexible job-shop scheduling problem and vehicle routing problem with time windows. On another hand, we discuss the advantages of metaheuristic algorithms without special parameters in this work. However, most of the reported metaheuristic algorithms have special parameters. Note that developing metaheuristic algorithms without special parameters has not been regarded highly by researchers. Thus, we will develop more metaheuristic algorithms without special parameters to solve different types of optimization problems in the future research.

Abbreviations

- \({\mathbf{X}}\) :

-

Population

- \(N\) :

-

Population size

- \(D\) :

-

Dimension

- \({\mathbf{x}}_{i}\) :

-

The position of the ith individual

- \({\mathbf{v}}_{i}\) :

-

The candidate position of the ith individual

- \({\mathbf{x}}_{{{\text{BEST}},j}}\) :

-

The jth variable of the best individual

- \({\mathbf{x}}_{{{\text{WORST}},j}}\) :

-

The jth variable of the worst individual

- \({\mathbf{M}}\) :

-

The mean position of the population

- \(p_{{{\text{switch}}}}\) :

-

Switch probability

- \(p,q\) :

-

Integers between 1 and \(N\)

- \(\kappa_{1} ,\kappa_{2} ,\varphi_{5} ,\varphi_{6} ,\chi\) :

-

Random numbers between 0 and 1 with uniform distribution

- \(\varphi_{1} ,\varphi_{2} ,\varphi_{3} ,\varphi_{4}\) :

-

Random numbers with standard normal distribution

- \({\mathbf{L}}\) :

-

The lower boundary of the variables

- \({\mathbf{U}}\) :

-

The upper boundary of the variables

- \(F_{{{\text{current}}}}\) :

-

The current number of function evaluations

- \(F_{\max }\) :

-

The maximum number of function evaluations

- \(R_{N}\) :

-

The number of independent runs

- \({\mathbf{x}}^{*}\) :

-

The real optimal solution

- \(h_{t}\) :

-

The tth equality constraint

- \(g_{k}\) :

-

The kth inequality constraint

- \(m\) :

-

The number of equality constraints

- \(n\) :

-

The number of inequality constraints

- \(P({\mathbf{x}})\) :

-

The total penalty function

- \(H_{i} ({\mathbf{x}})\) :

-

The penalty function of the ith equality constraint

- \(G_{j} ({\mathbf{x}})\) :

-

The penalty function of the jth inequality constraint

- \(\eta_{i}\) :

-

The penalty factor of the penalty function of the ith equality constraint

- \(\xi_{j}\) :

-

The penalty factor of the penalty function of the jth inequality constraint

- MEAN:

-

Mean absolute error

- STD:

-

Standard variance

- NNA:

-

Neural network algorithm

- GWO:

-

Grey wolf optimizer

- WOA:

-

Whale optimization algorithm

- SCA:

-

Sine cosine algorithm

- JAYA:

-

Jaya algorithm

- TLBO:

-

Teaching–learning-based optimization

- CLJAYA:

-

Comprehensive learning Jaya algorithm

References

Amirjanov, A. (2006). The development of a changing range genetic algorithm. Computer Methods in Applied Mechanics and Engineering, 195(19), 2495–2508. https://doi.org/10.1016/j.cma.2005.05.014.

Arora, J. S. (1989). Introduction to optimum design. New York: McGraw-Hill.

Askarzadeh, A. (2016). A novel metaheuristic method for solving constrained engineering optimization problems: Crow search algorithm. Computers & Structures, 169, 1–12. https://doi.org/10.1016/j.compstruc.2016.03.001.

Baykasoğlu, A., & Ozsoydan, F. B. (2015). Adaptive firefly algorithm with chaos for mechanical design optimization problems. Applied Soft Computing, 36, 152–164. https://doi.org/10.1016/j.asoc.2015.06.056.

Brajević, I., & Ignjatović, J. (2019). An upgraded firefly algorithm with feasibility-based rules for constrained engineering optimization problems. Journal of Intelligent Manufacturing, 30(6), 2545–2574. https://doi.org/10.1007/s10845-018-1419-6.

Cheng, M.-Y., & Prayogo, D. (2014). Symbiotic organisms search: A new metaheuristic optimization algorithm. Computers & Structures, 139, 98–112. https://doi.org/10.1016/j.compstruc.2014.03.007.

Coello, C. A. C. (2000). Constraint-handling using an evolutionary multiobjective optimization technique. Civil Engineering Systems, 17(4), 319–346.

Coello, C. A. C., & Becerra, R. L. (2004). Efficient evolutionary optimization through the use of a cultural algorithm. Engineering Optimization, 36(2), 219–236.

Coello Coello, C. A. (2000). Use of a self-adaptive penalty approach for engineering optimization problems. Computers in Industry, 41(2), 113–127. https://doi.org/10.1016/S0166-3615(99)00046-9.

Coello Coello, C. A., & Mezura Montes, E. (2002). Constraint-handling in genetic algorithms through the use of dominance-based tournament selection. Advanced Engineering Informatics, 16(3), 193–203. https://doi.org/10.1016/S1474-0346(02)00011-3.

Du, T.-S., Ke, X.-T., Liao, J.-G., & Shen, Y.-J. (2018). DSLC-FOA : Improved fruit fly optimization algorithm for application to structural engineering design optimization problems. Applied Mathematical Modelling, 55, 314–339. https://doi.org/10.1016/j.apm.2017.08.013.

Eskandar, H., Sadollah, A., Bahreininejad, A., & Hamdi, M. (2012). Water cycle algorithm – A novel metaheuristic optimization method for solving constrained engineering optimization problems. Computers & Structures, 110–111, 151–166. https://doi.org/10.1016/j.compstruc.2012.07.010.

Faramarzi, A., Heidarinejad, M., Stephens, B., & Mirjalili, S. (2020). Equilibrium optimizer: A novel optimization algorithm. Knowledge-Based Systems, 191, 105190. https://doi.org/10.1016/j.knosys.2019.105190.

Gandomi, A. H., Yang, X.-S., & Alavi, A. H. (2011). Mixed variable structural optimization using Firefly Algorithm. Computers & Structures, 89(23), 2325–2336. https://doi.org/10.1016/j.compstruc.2011.08.002.

Gandomi, A. H., Yang, X.-S., & Alavi, A. H. (2013a). Cuckoo search algorithm: a metaheuristic approach to solve structural optimization problems. Engineering with Computers, 29(1), 17–35. https://doi.org/10.1007/s00366-011-0241-y.

Gandomi, A. H., Yang, X.-S., Alavi, A. H., & Talatahari, S. (2013b). Bat algorithm for constrained optimization tasks. Neural Computing and Applications, 22(6), 1239–1255. https://doi.org/10.1007/s00521-012-1028-9.

Gu, L., Yang, R., Tho, C.-H., Makowski, M., Faruque, O., & Li, Y. (2001). Optimization and robustness for crashworthiness of side impact. International journal of vehicle design, 26(4), 348–360.

Holland, J. H. (1975). Adaptation in natural and artificial systems: An introductory analysis with applications to biology, control, and artificial intelligence. Oxford, England: U Michigan Press.

Huang, J., Gao, L., & Li, X. (2015). An effective teaching-learning-based cuckoo search algorithm for parameter optimization problems in structure designing and machining processes. Applied Soft Computing, 36, 349–356. https://doi.org/10.1016/j.asoc.2015.07.031.

Li, J., Zhang, J., Jiang, C., & Zhou, M. (2015). Composite particle swarm optimizer with historical memory for function optimization. IEEE Transactions on Cybernetics, 45(10), 2350–2363. https://doi.org/10.1109/TCYB.2015.2424836.

Kamboj, V. K., Nandi, A., Bhadoria, A., & Sehgal, S. (2020). An intensify Harris Hawks optimizer for numerical and engineering optimization problems. Applied Soft Computing, 89, 106018. https://doi.org/10.1016/j.asoc.2019.106018.

Kennedy, J., & Eberhart, R. (1995). Particle swarm optimization. In Proceedings of ICNN’95-International Conference on Neural Networks (Vol. 4, pp. 1942–1948). IEEE.

Krohling, R. A., & dos Santos Coelho, L. (2006). Coevolutionary particle swarm optimization using gaussian distribution for solving constrained optimization problems. IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), 36(6), 1407–1416. https://doi.org/10.1109/TSMCB.2006.873185.

K.S., S. R., & Murugan, S. (2017). Memory based Hybrid Dragonfly Algorithm for numerical optimization problems. Expert Systems with Applications, 83, 63–78. https://doi.org/10.1016/j.eswa.2017.04.033.

Lampinen, J. (2002). A constraint handling approach for the differential evolution algorithm. In Proceedings of the 2002 Congress on Evolutionary Computation. CEC’02 (Cat. No.02TH8600) (Vol. 2, pp. 1468–1473 vol.2). https://doi.org/10.1109/CEC.2002.1004459

Lee, K. S., & Geem, Z. W. (2004). A new structural optimization method based on the harmony search algorithm. Computers & Structures, 82(9), 781–798. https://doi.org/10.1016/j.compstruc.2004.01.002.

Lee, K. S., & Geem, Z. W. (2005). A new meta-heuristic algorithm for continuous engineering optimization: harmony search theory and practice. Computer Methods in Applied Mechanics and Engineering, 194(36), 3902–3933. https://doi.org/10.1016/j.cma.2004.09.007.

Liu, H., Wang, Y., Tu, L., Ding, G., & Hu, Y. (2019). A modified particle swarm optimization for large-scale numerical optimizations and engineering design problems. Journal of Intelligent Manufacturing, 30(6), 2407–2433. https://doi.org/10.1007/s10845-018-1403-1.

Liu, H., Cai, Z., & Wang, Y. (2010). Hybridizing particle swarm optimization with differential evolution for constrained numerical and engineering optimization. Applied Soft Computing, 10(2), 629–640. https://doi.org/10.1016/j.asoc.2009.08.031.

Mirjalili, S. (2015). Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowledge-Based Systems, 89, 228–249. https://doi.org/10.1016/j.knosys.2015.07.006.

Mirjalili, S. (2016). SCA: A Sine Cosine Algorithm for solving optimization problems. Knowledge-Based Systems, 96, 120–133. https://doi.org/10.1016/j.knosys.2015.12.022.

Mirjalili, S., & Lewis, A. (2016). The whale optimization algorithm. Advances in Engineering Software, 95, 51–67. https://doi.org/10.1016/j.advengsoft.2016.01.008.

Mirjalili, S., Mirjalili, S. M., & Hatamlou, A. (2016). Multi-Verse Optimizer: a nature-inspired algorithm for global optimization. Neural Computing and Applications, 27(2), 495–513. https://doi.org/10.1007/s00521-015-1870-7.

Mirjalili, S., Mirjalili, S. M., & Lewis, A. (2014). Grey Wolf Optimizer. Advances in Engineering Software, 69, 46–61. https://doi.org/10.1016/j.advengsoft.2013.12.007.

Mohamed, A. W. (2018). A novel differential evolution algorithm for solving constrained engineering optimization problems. Journal of Intelligent Manufacturing, 29(3), 659–692. https://doi.org/10.1007/s10845-017-1294-6.

Rakhshani, H., & Rahati, A. (2017). Snap-drift cuckoo search: A novel cuckoo search optimization algorithm. Applied Soft Computing, 52, 771–794. https://doi.org/10.1016/j.asoc.2016.09.048.

Cheng, R., & Jin, Y. (2015). A competitive swarm optimizer for large scale optimization. IEEE Transactions on Cybernetics, 45(2), 191–204. https://doi.org/10.1109/TCYB.2014.2322602.

Rao, R. (2016). Jaya: A simple and new optimization algorithm for solving constrained and unconstrained optimization problems. International Journal of Industrial Engineering Computations, 7(1), 19–34.

Rao, R. V., Savsani, V. J., & Vakharia, D. P. (2011). Teaching–learning-based optimization: A novel method for constrained mechanical design optimization problems. Computer-Aided Design, 43(3), 303–315. https://doi.org/10.1016/j.cad.2010.12.015.

Rao, R. V., Savsani, V. J., & Vakharia, D. P. (2012). Teaching–learning-based optimization: An optimization method for continuous non-linear large scale problems. Information Sciences, 183(1), 1–15. https://doi.org/10.1016/j.ins.2011.08.006.

Rashedi, E., Nezamabadi-pour, H., & Saryazdi, S. (2009). GSA: A gravitational search algorithm. Special Section on High Order Fuzzy Sets, 179(13), 2232–2248. https://doi.org/10.1016/j.ins.2009.03.004.

Ray, T., & Liew, K. M. (2003). Society and civilization: An optimization algorithm based on the simulation of social behavior. IEEE Transactions on Evolutionary Computation, 7(4), 386–396. https://doi.org/10.1109/TEVC.2003.814902.

Sadollah, A., Bahreininejad, A., Eskandar, H., & Hamdi, M. (2013). Mine blast algorithm: A new population based algorithm for solving constrained engineering optimization problems. Applied Soft Computing, 13(5), 2592–2612. https://doi.org/10.1016/j.asoc.2012.11.026.

Sadollah, A., Sayyaadi, H., & Yadav, A. (2018). A dynamic metaheuristic optimization model inspired by biological nervous systems: Neural network algorithm. Applied Soft Computing, 71, 747–782. https://doi.org/10.1016/j.asoc.2018.07.039.

Samareh Moosavi, S. H., & Bardsiri, V. K. (2019). Poor and rich optimization algorithm: A new human-based and multi populations algorithm. Engineering Applications of Artificial Intelligence, 86, 165–181. https://doi.org/10.1016/j.engappai.2019.08.025.

Saremi, S., Mirjalili, S., & Lewis, A. (2017). Grasshopper optimisation algorithm: Theory and application. Advances in Engineering Software, 105, 30–47. https://doi.org/10.1016/j.advengsoft.2017.01.004.

Savsani, P., & Savsani, V. (2016). Passing vehicle search (PVS): A novel metaheuristic algorithm. Applied Mathematical Modelling, 40(5), 3951–3978. https://doi.org/10.1016/j.apm.2015.10.040.

Shadravan, S., Naji, H. R., & Bardsiri, V. K. (2019). The sailfish optimizer: A novel nature-inspired metaheuristic algorithm for solving constrained engineering optimization problems. Engineering Applications of Artificial Intelligence, 80, 20–34. https://doi.org/10.1016/j.engappai.2019.01.001.

Sharma, T. K., & Abraham, A. (2020). Artificial bee colony with enhanced food locations for solving mechanical engineering design problems. Journal of Ambient Intelligence and Humanized Computing, 11(1), 267–290. https://doi.org/10.1007/s12652-019-01265-7.

Storn, R., & Price, K. (1997). Differential evolution–a simple and efficient heuristic for global optimization over continuous spaces. Journal of global optimization, 11(4), 341–359.

Tanweer, M. R., Suresh, S., & Sundararajan, N. (2016). Dynamic mentoring and self-regulation based particle swarm optimization algorithm for solving complex real-world optimization problems. Information Sciences, 326, 1–24. https://doi.org/10.1016/j.ins.2015.07.035.

Wang, Y., Cai, Z., Zhou, Y., & Fan, Z. (2009). Constrained optimization based on hybrid evolutionary algorithm and adaptive constraint-handling technique. Structural and Multidisciplinary Optimization, 37(4), 395–413. https://doi.org/10.1007/s00158-008-0238-3.

Wolpert, D. H., & Macready, W. G. (1997). No free lunch theorems for optimization. IEEE Transactions on Evolutionary Computation, 1(1), 67–82. https://doi.org/10.1109/4235.585893.

Wu, L., Liu, Q., Tian, X., Zhang, J., & Xiao, W. (2018). A new improved fruit fly optimization algorithm IAFOA and its application to solve engineering optimization problems. Knowledge-Based Systems, 144, 153–173. https://doi.org/10.1016/j.knosys.2017.12.031.

Xiang, Z., Ji, D., Zhang, H., Wu, H., & Li, Y. (2019). A simple PID-based strategy for particle swarm optimization algorithm. Information Sciences, 502, 558–574. https://doi.org/10.1016/j.ins.2019.06.042.

Yang, X.-S., & Deb, S. (2014). Cuckoo search: recent advances and applications. Neural Computing and Applications, 24(1), 169–174. https://doi.org/10.1007/s00521-013-1367-1.

Yi, J., Li, X., Chu, C.-H., & Gao, L. (2019). Parallel chaotic local search enhanced harmony search algorithm for engineering design optimization. Journal of Intelligent Manufacturing, 30(1), 405–428. https://doi.org/10.1007/s10845-016-1255-5.

Yi, W., Gao, L., Pei, Z., Lu, J., & Chen, Y. (2020). ε Constrained differential evolution using halfspace partition for optimization problems. Journal of Intelligent Manufacturing. https://doi.org/10.1007/s10845-020-01565-2.

Zhang, J., Xiao, M., Gao, L., & Pan, Q. (2018). Queuing search algorithm: A novel metaheuristic algorithm for solving engineering optimization problems. Applied Mathematical Modelling, 63, 464–490. https://doi.org/10.1016/j.apm.2018.06.036.

Zhang, K., Huang, Q., & Zhang, Y. (2019). Enhancing comprehensive learning particle swarm optimization with local optima topology. Information Sciences, 471, 1–18. https://doi.org/10.1016/j.ins.2018.08.049.

Zhang, M., Luo, W., & Wang, X. (2008). Differential evolution with dynamic stochastic selection for constrained optimization. Nature Inspired Problem-Solving, 178(15), 3043–3074. https://doi.org/10.1016/j.ins.2008.02.014.

Zhang, Y., Jin, Z., & Chen, Y. (2020). Hybrid teaching–learning-based optimization and neural network algorithm for engineering design optimization problems. Knowledge-Based Systems, 187, 104836. https://doi.org/10.1016/j.knosys.2019.07.007.

Zhao, W., Zhang, Z., & Wang, L. (2020). Manta ray foraging optimization: An effective bio-inspired optimizer for engineering applications. Engineering Applications of Artificial Intelligence, 87, 103300. https://doi.org/10.1016/j.engappai.2019.103300.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Competing interest

The authors declare that there is no conflict of interests regarding the publication of this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A

Appendix A

A.1 welded beam design problem

A.2 Tension/compression spring design problem

A.3 Speed reducer design problem

A.4 Three-bar truss design problem

A.5 Car impact design problem

Rights and permissions

About this article

Cite this article

Zhang, Y., Jin, Z. Comprehensive learning Jaya algorithm for engineering design optimization problems. J Intell Manuf 33, 1229–1253 (2022). https://doi.org/10.1007/s10845-020-01723-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10845-020-01723-6