Abstract

Scholarship on faculty and student perceptions of plagiarism is plagued by a vast, scattered constellation of perspectives, context, and nuance. Cultural, disciplinary, and institutional subtleties, among others, in how plagiarism is defined and how perspectives about it are tested obfuscate consensus about how students and faculty perceive and understand plagiarism, and about what can or should be done about those perspectives. However, there is clear consensus that understanding how students and faculty perceive plagiarism is foundational to mitigating and preventing plagiarism. This study takes up the challenge of investigating its own institution’s student and faculty perspectives on plagiarism by testing whether students and instructors differentiate between different kinds or genres of plagiarism, and measuring differences in their perception of seriousness or severity of those genres. Using a device modified from the ‘plagiarism spectrum’ published by Turnitin®, the researchers implemented a campus-wide survey of faculty and student perceptions, and analyzed the data using two different methodologies to ensure results triangulation. This study demonstrates that both students and faculty clearly differentiate the severity between kinds of plagiarism, but do not agree on the specific rank or order of their severity. Further, this study’s novel methodology is demonstrated as valuable for use by other academic institutions to investigate and understand their cultures of plagiarism.

Introduction

Plagiarism is a near ubiquitous “cross-disciplinary issue” (Sutherland-Smith 2005) in academia, ever threatening to erode higher education’s fundamental ethics and value (Hamlin et al. 2013; Josien and Broderick 2013). Violations of academic ethics such as plagiarism and cheating frustrate academic institutions, and even lead wider society to question the value of higher education and its conferred degrees. Still, academic integrity violations proliferate, despite the development, use, and spread of plagiarism detection software, formal codes of conduct, strict penalties, and preventative campaigns. Indeed, plagiarism’s plight is perhaps more acute and widespread now than ever before, with the internet’s digital information economy making it increasingly easy to perpetrate, perhaps to the point of becoming nearly uncontrollable (Eret and Ok 2014).

However, despite this gravity, clear solutions are clouded by equally wide and competing perceptions of plagiarism itself. In spite of wide and thriving bodies of scholarship on plagiarism (see Sutherland-Smith 2014 for a quality review of plagiarism research’s many discourses), the lack of shared definitions or even basic assumptions about what constitutes plagiarism at the cultural, national, and institutional levels, let alone the instructor or student levels, paints a complex, often contradictory picture of how plagiarism is perceived. There are so many competing and intertwining perspectives about what plagiarism is that identifying and navigating pragmatic or actionable remedies represents a dizzying effort. Louw’s (2017) lamenting endnote touches on this problematic well,

It is an ironic situation … that it is impossible to read or reference all the plagiarism statements of the many universities in the world… A second irony is that precious few of the university websites with information on plagiarism actually mention where that information comes from (p. 134)

Similarly, the mundane cultural, national, institutional, and individual differences inherent to the plagiarism perspective research community make the implications of its collective results very difficult to discern. There are so many different perspectives on just what plagiarism is and what about it is important that discerning what can be done about it on an institutional level is a herculean task.

In the hopes of cutting through such fog, this study tests the perception of plagiarism among the students and instructors of a single university to understand whether and how both groups identify and differentiate between kinds or forms of plagiarism, and whether and how they perceive the severity of those kinds or forms. Given the great variance in how plagiarism is defined, exemplified, tested, and measured in scholarship, this study seeks an institutionally specific, contextualized, internally valid means of understanding how plagiarism is perceived. Such an internally-contextualized understanding, this study argues, represents the best chance at developing pragmatic responses.

Literature Review

Contrary Assumptions

Varying cultural assumptions about intellectual property are a well-noted source of confusion over how plagiarism is perceived and understood. As Heckler and Forde (2015) observe, “perceptions of plagiarism are culturally conditioned” (p. 73). Yet, the general body of plagiarism perception research is usually couched in Western contexts (Hayes and Intron 2005). Even slight variations in basic cultural assumptions about and styles of teaching and learning muddy perceptions of plagiarism. For example, Hayes and Intron (2005) describe how non-Western students’ familiarity with textbook and recall-based teaching approaches, rather than those requiring critical review of multiple sources, leads to confusion over what counts as plagiarism, and how it is measured. Viewed from the perspective of fundamental cultural preconceptions, the privileging of Western definitions of plagiarism represents a clear problematic that virtually ensures dissensus and confusion on an international scale.

Perceptions about what constitutes plagiarism also vary across academic disciplines. Studies of plagiarism from disciplines like Engineering (Yeo 2007), Nursing (Wilkinson 2009), Computer Science (Razera et al. 2010), Psychology (Gullifer and Tyson 2010), and Business (Walker 2010) take a relatively unproblematized view of plagiarism. This perspective understands plagiarism in terms of academic dishonesty or academic ethics violations, defining it along the lines of “knowingly presenting the work or property of another person as one’s own, without appropriate acknowledging or referencing” (Yeo 2007 p. 1). This general definition frames plagiarism squarely in terms of clear academic dishonesty where its perpetration constitutes an explicit violation of structural norms, rules, and ethics; and adjudicated as such.

However, in some contexts like Technical Writing and Communication or Professional Writing, common disciplinary and workplace practices challenge notions of single-authorship and clear source attribution inherent in many articulations of plagiarism (Logie 2005; Reyman 2008). Howard (2007) evidences similar tensions by describing the similarities between the influence of the print revolution and the current escalating importance of the Internet on notions of intellectual property and reproduction. Further, Howard’s work on “patchwriting” (1992) suggests that overly simplistic ideas of plagiarism overlook the nuanced and often scaffolded ways students learn to write academically. These disciplinary practices and contexts share an undercurrent of collaborative learning and collective production that demand more careful and critical reflection about what it means to plagiarize.

Competing Research

Such differences in cultural and disciplinary assumptions are reflected in an array of plagiarism definitions, taxonomies, and examples used in perception-focused research. In practice, plagiarism perception research tends to define and exemplify plagiarism by using similarity detection systems, developing original definitions and devices, borrowing other studies’ definitions and devices, or by mixing the aforementioned strategies.

One community of plagiarism perception scholarship, represented by studies like Cheema et al. (2011) and Ison (2012), makes use of “similarity detection” software scores and reports like those offered by Turnitin.com to define and measure plagiarism. Ison’s (2012) study of plagiarism in dissertations utilized Turnitin.com’s “similarity reports” as the key artifacts for analysis. By subjecting published Ph.D. dissertations to modified Turnitin.com “similarity index score” tests (controlled for quotes, definitions, and inter-university material), Ison (2012) used a 10% score threshold to define and mark plagiarism in their study. Some studies, like Cheema et al. (2011), cite third-party resources like https://www.plagiarism.org/, a Turnitin.com managed web resource (Turnitin 2017), to define and differentiate kinds of plagiarism. Other, similarly focused studies do not operationalize similarity detection systems to strictly define plagiarism, nor employ them in study devices, but rather focus on interrogating and testing their fundamental premises. Louw (2017), for example, crafts a compelling central argument challenging similarity detection as an adequate means of identifying or defining plagiarism. Louw (2017) overlaps the “categories of plagiarism” offered in Turnitin.com’s 2012 white paper (iParadigm 2012) with Bloom’s taxonomies of learning to demonstrate the problematic similarities and contest the simple logic that measuring similarity in word use between sources provides an accurate measurement of plagiarism.

A large community of studies employs original survey taxonomies and devices to operationalize plagiarism, typically with custom-made examples or scenarios (Brimble and Stevenson-Clarke 2005; Yeo 2007; Childers and Bruton 2016; Heckler and Forde 2015; Watson et al. 2014). Brimble and Stevenson-Clarke’s (2005) study of Australian student and faculty perception measures plagiarism simply and unproblematically in terms of academic dishonesty with 20 custom scenarios describing acts ranging from “plagiarism to cheating on examinations” (p. 29). Watson et al. (2014) also produce scenario statements such as, “[y]ou pay for a topic (research) paper from an on-line source and submit it as your own work” (p. 131) for students to evaluate on Likert-type scales. Similarly, Childers and Bruton (2016) incorporate feedback from “Library Science, Education, English, and Statistics” (p. 7) staff to develop pairings of an ‘original passage’ and an ‘example of plagiarism’ for students to evaluate in terms of how strongly they feel the ‘example of plagiarism’ represents plagiarism. Childers and Bruton’s (2016) examples “represent a range of non-verbatim citation issues, from inadequately cited cases of patch writing to instances involving the mere reuse of ideas,” (p. 7) including two examples with “substantial amounts of copied text” (p. 7), three examples being drawn from publicized academic cases, and three modified from ‘other sources.’ Other studies like Yeo (2007), citing the kinds of contextual differences inherent in defining plagiarism cited above, explicitly acknowledge the design of their survey devices and plagiarism example scenarios as originating from their own expertise and experience. Alternatively, some original device studies, like Heckler and Forde (2015), use original, open-ended questionnaires. In the case of Heckler and Forde (2015), students are posed open-ended questions about examples from a course textbook assignment, “designed to elicit students’ cultural perspectives and understandings of plagiarism” (p. 64), and participants are prompted for their own definitions of plagiarism for evaluation.

A smaller community of studies utilizes definitions and examples of plagiarism from previous research on plagiarism perception (Löfström et al. 2017; Razera et al. 2010; Ramzan et al. 2012). Within the ‘borrowing’ sub-group, studies like Razera et al. (2010) utilize entire survey devices from previous scholarship; in their case, the questionnaire utilized in Henriksson (2008, as cited in Razera et al. 2010), modified for University context and English to Swedish translation. Others, like Löfström et al. (2017), produce original examples matching schema produced in other studies; in their case, a taxonomy for plagiarism from Walker’s (2010) review of plagiarism in a New Zealand university’s international business courses, and the questionnaire from Löfström and Kupila (2013).

As noted above, international plagiarism perception research tends to rely on Western intellectual property and academic integrity definitions when defining and representing plagiarism in studies. Ramzan et al.’s (2012) work across multiple Pakistani universities defines plagiarism using Pritchett’s (2010, as cited in Ramzan et al. 2012) questionnaire borrowed from an American dissertation. Similarly, Hu and Lei’s (2015) study of Chinese university student perceptions of plagiarism relies on examples of plagiarism, “typically regarded as improper in Anglo American academia” (p. 239) in its survey device. Even studies with significantly more integrated multicultural definitions of plagiarism (Khoshsaligheh et al. 2017; Amiri and Razmjoo 2016; Rezanejad and Rezaei 2013) tend to borrow and rely on decontextualized foreign definitions of plagiarism. For example, Khoshsaligheh et al.’s (2017) study of Iranian students depends on a survey device amalgamated from, “recent empirical studies both in international and Iranian context as well as seminal texts on research ethics, various possible instances of plagiarism and other examples of academic dishonesty were collected to create a pool from which the items of the questionnaire could be collected” (p. 130).

Another notable community of scholarship amalgamates aspects from the aforementioned communities. Cheema et al. (2011), for example, blend an original questionnaire “based on the items regarding the perceptions of the students about plagiarism, terminologies about plagiarism, types of plagiarism and penalties of plagiarism (sic.)” (p. 668), with definitions from the Turnitin.com subsidiary plagiarism.org, and reference to Pakistan’s Higher Education Commission policies in the construction and execution of their study. While Louw (2017) focuses much of their review, discussion, and results on Turnitin.com plagiarism definitions and metrics, their survey device is ultimately a questionnaire developed with “disciplinary office, staff at the university writing lab and lecturers in the faculty of Arts to ensure content validity” and “gathered information on a number of areas related to plagiarism” (p. 124). Walker’s (2010) survey device, itself borrowed by Löfström et al. (2017) and others, represents a fusion of approaches to defining and exemplifying plagiarism. Walker (2010) subjected five years of assignments from a 200 level international business course to Turnitin.com ‘similarity report’ scans. Using a threshold of 20% “similarity,” Walker (2010) analyzed the Turnitin.com reports according to their “subjective choice,” and directly notes, “there was no precedent, except the experience of the researcher as a lecturer and marker” as an inherent limitation. Still, Walker (2010) cites the work of Park (2003, as cited in Walker 2010) and Warn (2006, as cited in Walker 2010) as influencing the final taxonomy.

Conflicting Results

Given the diversity in how researchers define and exemplify plagiarism in their work, the body of plagiarism perception research hardly seems to study the same basic subject. There is little wonder, then, that the plagiarism perception research community produces a wide spectrum of results. In studies focused on student perceptions of plagiarism, results are particularly varied and often contradictory. Some studies’ results demonstrate confusion and ignorance about what constitutes plagiarism (Gullifer and Tyson 2010). On the other hand, Heckler and Forde’s (2015) investigation into whether or not students know the meaning of plagiarism showed unanimous agreement on definitions, and suggest their results may indicate students reaching a kind of “saturation point on plagiarism education”. Still others split the difference. Childers and Bruton’s (2016) study into what students would or would not identify as plagiarism, “demonstrated significance in regard to how students view different types of potential plagiarism,” (p. 7) and yet concludes that, “students may have more sensitivity to recommended practices than is often claimed in academic literature” (p. 13).

Findings also differ on how seriously students and instructors perceive plagiarism. While Razera et al.’s (2010) study of student and instructor perceptions about what counted as plagiarism found strongly shared understandings, Wilkinson’s (2009) study into students’ and instructors’ perceptions of various aspects of plagiarism found no clearly shared definitions. Similarly, Brimble and Stevenson-Clarke (2005) found students and instructors demonstrated significant divergence in their perceptions of plagiarism’s seriousness, with “staff perceiving the seriousness to be greater than the students” in each exemplary case (p. 32). Research into how students more precisely define or rank different kinds of plagiarism also yields complex results. Yeo’s (2007) work shows that students rank “cut and paste” kinds of plagiarism as the “worst,” followed by “copy an assignment” kinds, with “copy text” kinds rounding out the top three most severely ranked categories. Walker’s (2010) study of student submissions over a five-year time period showed that students most often committed “sham” acts of plagiarism, followed by “verbatim,” then “purloin.”

Finally, studies of non-Western students’ perceptions of plagiarism in Pakistan (Cheema et al. 2011; Ramzan et al. 2012) and China (Hu and Lei 2015) that utilize US intellectual and academic property standards to define plagiarism in their survey devices report general confusion about what constitutes plagiarism in practice (Cheema et al. 2011; Hu and Lei 2015; Ramzan et al. 2012). Even studies that explicitly and purposefully employ more multicultural definitions and examples of plagiarism (Amiri and Razmjoo 2016; Khoshsaligheh et al. 2017; Rezanejad and Rezaei 2013) report superficial, inaccurate, and/or inconsistent awareness or perceptions of plagiarism among students.

Consensus on the Issue

The wide spectrum of results detailing how academic faculty and students perceive plagiarism makes charting out pragmatic courses of action difficult. With such variation in research results and conclusions, academic administration and faculty seeking to address, mitigate, or prevent plagiarism are hard-pressed to identify the right choices for their contexts. There are, thankfully, also bright points of consensus in plagiarism perception research. Plagiarism perception’s scholarly conversations tend to agree that students and instructors need clear, shared definitions (Camara et al. 2017; Cheema et al. 2011; Curtis et al. 2018; Gullifer and Tyson 2010; Hayes and Intron 2005; Löfström et al. 2017; Louw 2017; Ramzan et al. 2012; Reyman 2008; Sutherland-Smith 2005; Watson et al. 2014; Wilkinson 2009; Yeo 2007). Towards that goal, a growing community of scholars advocate for a reassessment of baseline academic assumptions about plagiarism to better account for the shifting complexity and nuance of writing in the digital information economy (Gullifer and Tyson 2010; Reyman 2008; Sutherland-Smith 2005; Vie 2013). Plagiarism perception research convincingly demonstrates that fostering shared perceptions of plagiarism requires training for instructors, and education for students that are consistent with scholarship (Cheema et al. 2011; Louw 2017; Watson et al. 2014; Wilkinson 2009; Yeo 2007), and supported by institutional policies that are more visible and widely communicated (Ramzan et al. 2012).

Despite the dizzying, even confusing variance in how plagiarism is defined, studied, and perceived, its body of scholarship speaks clearly to the need for researchers to investigate their own institution’s culture of plagiarism. To develop faculty training, student education, and institutional policies that effectively mitigate, and ultimately deter or prevent, plagiarism, researchers should focus on how plagiarism is perceived in the context of their microcosms. By charting their own microcosm of plagiarism perception against the larger constellation of plagiarism perception research, scholars can better understand their institution’s position relative to its academic community, and contribute to a more holistic view of plagiarism’s many dimensions.

Research Question and Purpose

Understanding from the research described above that plagiarism is not a black-and-white issue, but rather a nebula of acts defined along varying spectrums, this study picks up the challenge of untangling the knot of plagiarism’s perception in our own institution’s context. This study’s primary goals are to test whether students and instructors differentiate between different kinds or genres of plagiarism, and to measure differences between how instructors and students perceive the seriousness or severity of those genres. After analysis, this study’s results are intended to inform targeted education and training for faculty and students that addresses the genres of plagiarism perceived to be the most severe, and genres with the greatest discrepancy in severity perceptions.

Materials and Methods

Study Design

This study’s design is largely adapted from iParadigm’s (the parent company of Turnitin.com) The plagiarism spectrum: Instructor insights into the 10 types of plagiarism (2012) white paper. Based on “a study of thousands of plagiarized papers” (iParadigm 2012 p. 3), the white paper describes 10 unique types or genres of plagiarism, “that comprise the vast majority of unoriginal work in student papers” (iParadigm 2012 p. 3). The iParadigm white paper offers definitions for each of its genres; ratings of each genre’s frequency and severity; and examples of each genre, compared side-by-side with “original text” (see Fig. 1).

Fig. 1 An example of Turnitin.com’s “Find – Replace” plagiarism genre (iParadigm 2012)

Although unable to obtain permission to directly reuse iParadigm’s original survey instrument, the research team was granted permission to adapt the white paper’s taxonomy as needed, with proper citation. Emulating the iParadigm white paper’s 10 genres, original text from one of the authors’ previous scholarship was manipulated in approximation of the research team’s interpretation of each genre. Like iParadigm’s (2012) genres, ours fall on a spectrum ranging from text copied completely from an original source without citation (iParadigm’s “Clone”, our “Case A”) to a mixture of new text and sparse copying, with some correct citations (Turnitin.com’s “Retweet,” our “Case F”) (iParadigm 2012). Also like iParadigm’s (2012) spectrum, this study’s case examples include passages with various degrees of text copied verbatim; passages with various degrees of text copied verbatim, accompanied by citations of various degrees of correctness; and passages with various degrees of text copied verbatim, combined with text from third-party sources (not the original source example text or “new” text in the plagiarism example) reused through either verbatim quote or paraphrase. See Appendix for a full sample of this study’s plagiarism case examples.

The iParadigm spectrum was adapted for use because of its common use among the sample institution’s faculty and students. Turnitin.com similarity scans and reports are an institutional staple, widely used across the Campus as a plagiarism detection mechanism. Campus students and faculty are very likely to share experience interacting with Turnitin.com reports on their submitted assignments, regardless of major or degree program; therefore, operationalizing a Turnitin.com product ensured some degree of familiarity with the survey device’s fundamental structure. Further, given Sutherland-Smith’s (2005) findings that even faculty perspectives on plagiarism do not clearly match institutional definitions or policies, the iParadigm spectrum may serve as a more familiar reference for all respondents. Though Turnitin.com certainly holds a vested commercial interest in promoting and maintaining its defined ‘spectrum of plagiarism,’ the authors included substantial modification based on their own professional pedagogical and scholarly experiences in order to ensure originality and reduce bias. For example, in addition to manufacturing completely original case examples, all identifying iParadigm titles and severity metrics have been removed. Finally, the potential bias of iParadigm’s ‘plagiarism spectrum’ is mitigated by this study’s focus on comparing student and faculty perspectives, not their perspectives relative to a given standard. Like the work of Childers and Bruton (2016), our interest does not lie in comparing how well students and faculty perceive plagiarism in relation to a particular standard, only in how respondents evaluate plagiarism. Since this study analyzes the comparison of faculty and student perceptions about variations of plagiarism and their severity, with no reference to iParadigm’s assigned genres or severity metrics, the inspiring artifact and nuanced construction of the device are of secondary concern.

In each plagiarism genre example, text copied and reused from the original without alteration is displayed in red. New, ‘altered’ text is highlighted in yellow (Fig. 2). Plagiarism example text representing material paraphrased from other sources without citation is highlighted in blue (Fig. 2). Plagiarism example text signifying material copied verbatim from other sources without citation is represented by lorem ipsum text, highlighted in blue (Fig. 2). Captions describing the schema of text colors and highlights are offered beneath each plagiarism example (Fig. 2).

Participant Population and Recruitment

The study sample population was the online (Worldwide) campus of Embry-Riddle Aeronautical University. This mid-sized private university has two residential campuses, and one online campus. The online campus serves more than 22,000 students throughout the United States and abroad. As one of the earliest, and highest ranked online universities, this stable structure served as an ideal population for this research study.

Participants were recruited using college and department Email distribution lists. The initial recruitment Email provided full disclosure, including project name, purpose, participant eligibility, risks and benefits of participation, privacy, and the ability to opt out. A SurveyMonkey link to the survey was provided in the Email.

Compliance with Ethical Standards

As approved by the Institutional Review Board, all participants had to be at least 18 years old, a resident of the United States, and either a student in a degree program, or faculty member (full time or adjunct). The research falls in the “exempt” category as defined in the provisions stated in 45 Code of Federal Regulations 46, Subpart A (Common Rule). Exempt research is research with human subjects that generally involves no more than minimal risk.

Each respondent received a written description of the project and gave informed consent for participation in this study. The informed consent page appeared prior to the respondents starting the survey. Respondents had to select ‘AGREE’ to continue with the survey. If the respondent chose ‘DISAGREE’, the survey closed and the respondent was not allowed to participate.

The risks of participating in this study were minimal, no more than those of everyday life. None of the questions asked were intended to have the respondent recall an unpleasant, traumatic, or emotional memory. The respondents did not receive any personal benefit.

Participant information was anonymous and not even the researchers could match data with names. Individual participant information and all data resulting from this study were protected. No personal information was collected other than basic demographic descriptors. The online survey system (SurveyMonkey) did not save IP addresses or any other identifying data. No one other than the researchers had access to any responses. If a participant chose to ‘opt-out’ during the research process, no collected data was used in the study. All survey responses were treated confidentially and stored on a password-protected, unencrypted hard drive on a computer.

Sample

The sample (N = 375) was collected over a 12-week period in 2018. Participants self-selected to participate based on the original Email invitation and agreeing to the informed consent. The worldwide campus consists of three colleges (business, arts and science, and aeronautics) with good responses from each college (41%, 24%, and 35% respectively). The breakdown by respondent type was 29% faculty (N = 109) and 71% students (N = 266). Further, 49% of the students were undergraduate students (N = 184), which included associates or bachelor degrees, and 51% were master level graduate students (N = 191). The only other demographic information collected was the degree name.

Collection Instrument

The survey was implemented in SurveyMonkey using the ten plagiarism genres (A through J) and demographic information previously described. Participants were asked to compare each genre to all the other genres in a pairwise fashion. The case pairs are illustrated in Fig. 3. It was assumed that the result would be independent of the order of the text. For example, placing block A on the left and block B on the right of the survey is the same as placing block B on the left and block A on the right (Fig. 3). Participants are prompted to “compare the original statement to options A and B in the given question” (Fig. 3). After each case comparison’s examples, participants are asked, “When compared to the original statement, which of the above options is a more severe, blatant, or problematic example of academically dishonest writing?” (Fig. 3). Participants are given the choice to select, “A,” “B,” or “Equal” (Fig. 3). Next, participants are asked to “[r]ate the severity of your choice relative to the other option,” using the options: “Equal,” “Concerning,” “Problematic,” “Very Problematic,” “Most Problematic” (Fig. 3). Finally, participants are given a write-in area for “Additional comments.”

Cases were randomly entered into the survey instrument to avoid patterns and minimize respondent fatigue. Because symmetry was assumed, the survey comprised 45 pairwise questions (one for each unordered pair of the ten cases), plus the demographic questions. Because question order was random, it was not necessary for an individual to complete 100% of the survey for the data to be of use. The survey instrument and datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request.
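The question count follows from the symmetry assumption: with ten cases and order ignored, there are 10 × 9 / 2 = 45 unordered pairs. A minimal sketch of that enumeration is given here; the variable names are illustrative and are not taken from the survey instrument.

```python
from itertools import combinations

# The ten case labels used in the survey (A through J).
cases = list("ABCDEFGHIJ")

# combinations() yields each unordered pair once, which matches the
# assumption that (A, B) and (B, A) are the same question.
pairs = list(combinations(cases, 2))

print(len(pairs))  # 45
```

Without the symmetry assumption, every ordered pair would need its own question, which doubles the count to 90.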

Analysis and Results

Statistical Analysis

This plagiarism perception analysis used two different questions for each pairwise question and two different analysis methods to ensure results triangulation. No participants responded to the survey’s third, open-ended question, thus no data from this category was included in analysis. The pairwise comparison selections were the primary data set used for analysis below, and the severity ratings were used to account for the equal selection, and to confirm the answer provided in the statement comparison. Analysis of the rating data focused on the left, right, equal comparison and did not use the results of the Likert question since results from the first question provided statistically significant and consistent results.

Each possible combination of pairwise selections was analyzed for statistical significance using the Chi-Square test since the data was the categorical type (Heiman 2006). Since this study demanded many comparisons, the Bonferroni correction was used. The Bonferroni correction is used when there are multiple hypotheses being tested at once to reduce the chance of having a non-significant result become significant (Drew et al. 2007). The process is to take the level of significance divided by the total number of tests (McDonald 2014). The Bonferroni correction changes the level of significance from .05 to an appropriate lower level of significance, protecting against familywise errors.

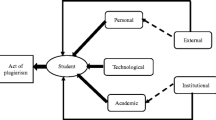

Analytic Hierarchy Process (AHP) was also applied to establish rank orders of genre case examples. AHP is a structured technique for analyzing complex decisions and determining the rank order of results when decision makers answer questions with respect to pairwise comparison of two objectives or outcomes, while allowing the decision maker to be inconsistent in answering questions on comparisons. This flexibility makes AHP a preferable method over other rank ordering methods (Evans 2016). AHP can be used to model unstructured problems in social, economic, and management sciences (Saaty 1978). To rank the alternatives in AHP, the following steps are used:

- Develop a hierarchy of factors representing the decision situation, as shown in Fig. 4
- Perform pairwise comparisons at each level and section of the hierarchy
- Form a square influence matrix, A
- Find λmax, the largest value of λ that solves the characteristic equation |A − λI| = 0
- Determine the local weights for each factor in a section of the hierarchy
- Determine the global weight for each alternative on each lowest-level criterion in the hierarchy
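The eigenvalue and local-weight steps can be sketched in a few lines of Python. The example below uses power iteration to approximate the principal eigenvector of a small reciprocal comparison matrix, normalizes it into local weights, and estimates λmax. The 3 × 3 matrix is hypothetical and is not drawn from the study data. The consistency index, (λmax − n)/(n − 1), is a standard AHP diagnostic that the steps above do not mention, so it is included here only as an assumption about how inconsistency might be checked.

```python
def ahp_weights(A, iterations=100):
    """Approximate the principal eigenvector of a positive square matrix
    by power iteration. Returns (weights summing to 1, lambda_max)."""
    n = len(A)
    w = [1.0 / n] * n
    for _ in range(iterations):
        Aw = [sum(A[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(Aw)
        w = [x / total for x in Aw]
    # Estimate lambda_max as the average ratio (A w)_i / w_i.
    Aw = [sum(A[i][j] * w[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(Aw[i] / w[i] for i in range(n)) / n
    return w, lambda_max

# Hypothetical reciprocal matrix: entry [i][j] says how much more severe
# item i is judged than item j, and entry [j][i] is its reciprocal.
A = [
    [1.0, 2.0, 4.0],
    [0.5, 1.0, 2.0],
    [0.25, 0.5, 1.0],
]

weights, lambda_max = ahp_weights(A)
n = len(A)
consistency_index = (lambda_max - n) / (n - 1)

print([round(w, 3) for w in weights])
print(round(lambda_max, 3), round(consistency_index, 3))
```

This example matrix is perfectly consistent, so λmax equals the matrix size and the consistency index is zero. Inconsistent answers push λmax above n.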

In this study the hierarchy has three levels, with the top level as “Rank Types of Plagiarism by Severity” (Fig. 4). The second level has one cell for each faculty or student participant. It is assumed that each participant’s response is equally important. In other words, all of the local weights at level 2 are equal. The third level has types of plagiarism connected to each of the nodes at the second level.

The A matrix for each participant is generated from that participant’s pairwise comparisons between the plagiarism types.
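The text does not specify how survey answers were converted into matrix entries, so the following Python sketch rests on labeled assumptions. A "more severe" selection is mapped to a hypothetical ratio of 3, "Equal" to 1, and unanswered pairs default to 1. Local weights are approximated with the row geometric mean, a common shortcut used here in place of the eigenvalue calculation. Because every participant is weighted equally at level 2, the global weight of each genre is the simple average of the participants' local weights. Only three genres and two invented participants are used to keep the example short.

```python
import math

GENRES = ["A", "B", "C"]  # shortened for illustration; the study used A through J
PREFERENCE = 3.0          # hypothetical ratio for "more severe"; not from the study

def build_matrix(answers):
    """answers maps (left, right) -> 'left', 'right', or 'equal'."""
    n = len(GENRES)
    idx = {g: k for k, g in enumerate(GENRES)}
    # Assumption: unanswered pairs are treated as equal (entry of 1).
    A = [[1.0] * n for _ in range(n)]
    for (left, right), choice in answers.items():
        i, j = idx[left], idx[right]
        value = {"left": PREFERENCE, "right": 1.0 / PREFERENCE, "equal": 1.0}[choice]
        A[i][j] = value
        A[j][i] = 1.0 / value  # keep the matrix reciprocal
    return A

def local_weights(A):
    """Row geometric mean approximation of the AHP priority vector."""
    gm = [math.prod(row) ** (1.0 / len(row)) for row in A]
    total = sum(gm)
    return [g / total for g in gm]

def global_weights(matrices):
    """Equal participant weights reduce to a simple average."""
    per_participant = [local_weights(A) for A in matrices]
    n = len(matrices[0])
    return [sum(w[k] for w in per_participant) / len(per_participant) for k in range(n)]

# Two invented participants.
participants = [
    {("A", "B"): "left", ("A", "C"): "left", ("B", "C"): "left"},
    {("A", "B"): "left", ("A", "C"): "left", ("B", "C"): "equal"},
]

matrices = [build_matrix(a) for a in participants]
weights = global_weights(matrices)
ranking = sorted(GENRES, key=lambda g: -weights[GENRES.index(g)])

print(ranking)
```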

Results

Chi-Square Test Results

In this study, there were 184 p values obtained from the initial Chi-Square tests. With the Bonferroni correction, the significance threshold became .00027 (.05/184). All possible combinations of responses were then assessed for significance. The results indicated which combinations of responses were significant.

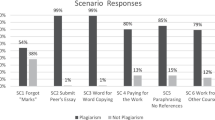

The significant and non-significant results for each example were counted (Table 1). Writing example A had N = 12 statistically significantly higher results, more than any other example, along with N = 2 statistically significantly lower results and N = 4 non-significant results. Writing example E had N = 11 statistically significantly lower results, N = 1 higher result, and N = 6 non-significant results. Writing example F had N = 15 statistically significantly lower results, N = 0 higher results, and N = 3 non-significant results. Writing example J had N = 11 statistically significantly lower results, N = 2 higher results, and N = 5 non-significant results.

Out of 270 different possible results, there were 31 occasions where student severity perception rating results were significantly higher (or more severe) than the faculty’s perception. There was one instance where the faculty result was statistically significant while the students’ result was not. Overall, the students were stricter in their perception of plagiarism severity compared with faculty perception. Figures 5 and 6 show the Chi-Square test results for students and faculty for each plagiarism genre. The figures’ cells show all possible combinations of each genre. In Fig. 5, purple-shaded cells indicate a significant difference compared to the faculty’s non-significance, and the green-shaded cell indicates an exception. In Fig. 6, green-shaded cells indicate faculty’s non-significance, with one exception in purple where the faculty members indicated the genre was significant compared to the students’ non-significance.

Analytical Hierarchy Process Results

Following the steps in the AHP approach, the global weight of each alternative is calculated as shown in Tables 2 and 3 below. Plagiarism types are ranked in the decreasing order of priority.

Discussion

The results of this study’s analysis suggest that the sample institution’s students and instructors do perceive differences in severity between the example genres, and generally agree on the qualities of severe plagiarism, but less so on the qualities of less severe plagiarism. The triangulation of statistical analysis and AHP shows general agreement between students and instructors on both the most and least severe example genres. Both methods show students and faculty identifying genre A as the most blatant or severe genre of plagiarism, and genres E, F, and J essentially tied for being the least blatant or severe. AHP adds an additional dimension of priority, showing that students and instructors identified genres B and C as similarly high in severity or blatancy as example A, and genre I as similarly low in severity or blatancy as genres E, F, and J. AHP indicates respondents perceived the remaining genres (D, G, and H) as somewhere in the middle of the spectrum: not quite as severe as A, B, or C, but more severe than I, E, F, and J.

Genre A was identified by both student and faculty respondents as the most blatant or severe example of plagiarism (Fig. 7). Genre A’s text is entirely copied from the original without change or citation. Faculty respondents identified B and C as the second and third, respectively, most blatant or severe genres of plagiarism (Fig. 8). Genre B’s text is almost entirely copied from the original without citation, with only four words changed. Genre C’s text is very heavily copied from the original without citation, with a handful of words or phrases changed. Neither genre B nor genre C uses new text, proper citations, or text borrowed from other sources with or without proper citation. Student respondents identified G and D as the second and third, respectively, most blatant or severe genres of plagiarism (Fig. 9). Genre G’s text is entirely either copied from the original without change or citation, or copied from another source without citation. Genre D’s text comprises a fairly even mix of text copied from the original without change or citation, and original text. Student respondents identified genres C and B as their fourth and fifth most blatant or severe examples of plagiarism, respectively.

Genres I, E, F, and J were identified by student and instructor respondents as the least blatant or severe examples of plagiarism, and share the general trait of including a significant amount of new text, or text borrowed from other sources with proper citation (Fig. 10). Genre I’s text is largely copied from the original without change or citation, but does include two sentences that borrow text from other sources with proper citation. Genre E’s text is largely original, but includes a modest amount of text copied from the original without change, used as quotes but lacking proper citation. Genre F’s text is largely original and utilizes text copied from the original with proper citations, as well as a light amount of text copied from the original without change or proper citation – only a few words or phrases. Genre J’s text is almost entirely copied from the original without change, but cited correctly, with one word of new text.

Like the “most blatant or severe” hierarchy, the “least blatant or severe” hierarchy also exhibited some dissonance between student and faculty perceptions. While students identified genres I, J, E, and F as their least problematic, blatant, or severe genres of plagiarism, respectively, faculty identified I, F, E, and J as their least problematic, blatant, or severe genres of plagiarism.

In the middle of the spectrum, students and faculty shared mixed perceptions. Students perceived examples C, B, and H as their fourth, fifth, and sixth most severe or blatant genres of plagiarism, respectively. Faculty, however, perceived examples H, G, and D as their fourth, fifth, and sixth most blatant genres of plagiarism, respectively. Of these “middle three” selections, genre H represents the greatest differentiation: it was students’ sixth ranked most problematic genre and instructors’ fourth. Genre H features an even mix of text copied directly from the original without change or citation and material paraphrased from another source without citation, as well as a handful of new words. AHP analysis also identified a notable difference in student and faculty perception of genres B and G. As noted above, while faculty ranked B as their second most problematic, blatant, or severe genre example, students ranked B as their fifth most problematic, blatant, or severe. Genre B’s text is almost entirely copied from the original without citation, with only four words changed. Faculty perceived G as their fifth most problematic, blatant, or severe genre, while students ranked it as their second most problematic, blatant, or severe. Genre G’s text is entirely either copied from the original without change or citation, or copied from another source without citation.

Conclusions

The body of plagiarism perception research makes a strong argument that actionable insight into how to combat and mitigate plagiarism requires a clear understanding of an institution’s specific plagiarism culture. Given the deep and wide nuance permeating how plagiarism is perceived across cultures, disciplines, and studies, generalizable research is fraught with problems of external validity. The present study was conducted to develop a more finite, clear vision of how plagiarism is perceived among students and faculty within the specific context of a single university’s online campus. This study’s results provide compelling, statistically significant evidence that faculty and students from the sample university do perceive meaningful differences in the blatancy or severity between different kinds of plagiarism. This evidence supports a shaded, nuanced vision of what constitutes plagiarism. A more nuanced understanding of the university’s culture of plagiarism would be a valuable tool in developing more tailored, responsive institutional definitions and policies.

Both analyses show student and faculty respondents perceive examples of plagiarism with a significant amount of text that is copied from an original source without change or citation as the most problematic, severe, or blatant kinds or genres of plagiarism. These results support Yeo’s (2007) observations that students viewed direct copying and “cut and paste” forms of plagiarism as most severe. Further, AHP analysis demonstrates student and faculty respondents generally perceive examples of plagiarism with lighter amounts of text copied from an original source without change, or which included incorrect citations, as less problematic, severe, or blatant. Similar to Wilkinson (2009) and Löfström et al. (2017), this study confirms that students and faculty can share similar, even overlapping perceptions of plagiarism. However, unlike Heckler and Forde’s (2015) work, this campus’ faculty and students did not share unanimous perceptions. This study’s results also support Yeo’s (2007) and Childers and Bruton’s (2016) observations that students are capable of understanding plagiarism in a nuanced manner, where various kinds of plagiarism activities are understood on various levels of intensity or severity. These results further echo Heckler and Forde’s (2015) observations that students do view plagiarism with a more nuanced eye than frequently credited, and are capable of making a variety of value judgements about different kinds or manifestations of plagiarism.

However, these general results are somewhat complicated by disagreement between student and faculty perceptions of which specific genre example was less problematic, severe, or blatant than others. As noted in “Discussion,” students and instructors only shared complete perception agreement on the severity of genre example A, ranked on aggregate as the most problematic, severe, or blatant instance of plagiarism across respondents. Students and instructors ultimately failed to share perceptions on the ranking of any other unique genre example. In terms of the “most severe” rankings, students perceived example genres of plagiarism that included mixtures of original text and text copied from other sources without citations as very problematic, blatant, or severe (ranking these genre examples in their top three most blatant or severe). Instructors’ perceptions of the most problematic, blatant, or severe were focused on example genres that included only the least amount of new, original text. Although both students and faculty selected example I (a mix of text copied from the original without change or citation, and text used from other sources with proper citations) as their fourth least severe or blatant genre, their respective fifth, sixth, and last choices were reversed. Students perceived examples of plagiarism that included text that was copied unchanged from the original, but for which some attribution and correct citations were provided, as less problematic than examples that included mixes of new text and text copied from the original used as quotes with citations (incorrect or correct). Faculty perceived largely the opposite, ranking the use of text copied entirely from the original with attribution and citations as more severe than examples that included original text and text copied from the original without change as quotes with correct or incorrect citations. Like Löfström et al. (2017), this study demonstrates that while students and faculty may share similarities in general perceptions, they may still perceive notable differences in the ordering and valuing. These mixed perceptions are similar to those observed by Razera et al. (2010) and Louw (2017), where student and faculty perceptions were similar but ultimately lacked consensus. This study’s results demonstrating differences in plagiarism severity perception also support the findings of Brimble and Stevenson-Clarke (2005), though not to the same degree. Unlike Brimble and Stevenson-Clarke’s (2005) findings, students and faculty at the sample institution disagreed on only a few of the genre examples rather than every one.

Taken together, this study confirms that both students and faculty perceive plagiarism along a gray-scale rather than as a black-and-white practice. Students and faculty both perceive differences in the severity of plagiarism based on the amount of text copied from original or other sources, the amount of original text, and the use of attributions and citations. This study also observes notable differences in how students and faculty perceive specific examples. These differences confirm the plagiarism perception research community’s larger observations that students and faculty ultimately view plagiarism differently. These mixed findings confirm a basic truth about the perception of plagiarism: there are no silver bullets. How and why students and faculty perceive plagiarism remains steeped in a deep, complex milieu of cultural, national, and institutional influences. Despite the complexity of those influences, however, this study’s intra-institutional focus gives it powerful leverage to help understand the interior culture of plagiarism. In this way, like other plagiarism perception research (Bouville 2008; Cheema et al. 2011; Hayes and Introna 2005; Wilkinson 2009; Louw 2017; Gullifer and Tyson 2010; Sutherland-Smith 2005; Watson et al. 2014; Yeo 2007), this study’s results represent both a clarifying look into a unique culture of plagiarism perception, and the cornerstones for further investigation into and mitigation of that culture. Beyond the specific application of this study’s results and conclusions, the work described herein should encourage plagiarism researchers to seek out and investigate their own institutions’ cultures of plagiarism. How plagiarism is perceived may be far too enmeshed a knot to ever be untangled at a notable level of generalizable validity, but careful investigation into the nuance of a specific culture of perception can provide meaningful, internally valid, and therefore actionable insights.

Limitations

The present study faced several noteworthy limitations in terms of sampling and the survey device. Firstly, this study only sampled students and instructors from the University’s online campus, one of three. While the strength of this study’s data and results demonstrates that it is an appropriate sampling of that campus, surveying one of the three campuses may not accurately represent the University’s larger plagiarism culture. The other two campuses’ residential student populations may embody different perceptions of plagiarism not covered in this study.

Secondly, some participants noted confusion or frustration about the survey device. The complexity of the pairwise comparisons and the formatting of plagiarism examples represented points of confusion noted by several participants. One participant noted they “didn’t understand the text presented and the options below,” and described not truly understanding the format until well into the survey. Another participant cited an inability to complete the survey due to the use of lorem ipsum text to represent plagiarism example text copied verbatim from other sources without citation, noting they were unable to read or understand that particular text. Participants also expressed frustration over the length of the survey device. One participant directly complained that the survey “… just wouldn’t end!” Confusion over the survey format and frustration over the survey’s length may have limited participation in the study, or caused participants to quit without completing the entire survey. However, as noted above, because the survey utilized a random question order, it was not necessary for an individual to complete the entire survey to produce usable data. Therefore, although noteworthy, this limitation is partly mitigated, as indicated by the statistically significant results obtained from the sample.

Finally, a disconnect between University policies and definitions and the study’s definitions and examples could represent a source of confusion among participants. This study does not explicitly utilize University plagiarism policies and definitions to represent plagiarism in the survey device. The sampled University Campus’s “Student Handbook” defines plagiarism in the following terms:

Plagiarism is presenting the ideas, words, or products of another as one’s own and violations include, but are not limited to:

- The use of any source to complete academic assignments without proper acknowledgement of the source;
- Submission of work without appropriate documentation or quotation marks;
- The use of part or all of written or spoken statements derived from sources, such as textbooks, the Internet, magazines, pamphlets, speeches, or oral statements;
- The use of part or all of written or spoken statements derived from files maintained by individuals, groups or campus organizations;
- Resubmission of previously submitted work without formal quotation;
- The use of a sequence of ideas, arrangement of material, or pattern of thought of someone else, even though you express such processes in your own words. (ERAU 2017).

However, the survey device and its examples of plagiarism do not draw explicitly from this definition, nor do they rely on the specific examples given. Instead, as noted in “Methods,” the study’s examples of plagiarism are derived from Turnitin.com’s The plagiarism spectrum: Instructor insights into the 10 types of plagiarism (2012) white paper. As noted in “Background,” studies in the perception of plagiarism frequently rely on university definitions and policies in their study devices as a means of ensuring participant familiarity. By not explicitly associating this study’s device with University policies and definitions, a measure of dissonance may have been introduced if participants were not able to clearly associate the study’s given examples of plagiarism with the terms of the University’s definition and its examples. However, no participant noted this issue, and none of the complaints received speak to this particular problem.

Future Research

On a smaller, internally valid scale, future research into the university’s perceptions of plagiarism should focus on developing a deeper, qualitative analysis. This study’s survey device did include an opportunity for participants to provide a text explanation for their judgements, but none of the 375 respondents added text to any of the 45 questions (representing over 16,000 opportunities). Given the significance of the data collected in this study and the mixed perceptions revealed in analysis, a qualitative study through interviews or focus groups could add depth and nuance that would help parse out the student and faculty rationales supporting their judgements.

Methodologically, the use of AHP to rank the plagiarism types fits well in this research as it provides a simplified structure of a decision process. The approach is flexible when it comes to inconsistency in the responses provided by the decision maker. In other words, the methodology only requires preference information, which is not that difficult to gather from a decision maker, whereas a multi-attribute value function assessment expects detailed information concerning the decision maker’s preferences. In addition, AHP and other outranking methods provide a complete ranking, whereas multi-attribute value function assessment may not. Multi-attribute value function assessment nonetheless has its own advantages and could serve as an extension to this research, although the approach requires the decision maker to think much harder when comparing the criteria. Another big advantage of multi-attribute value function assessment is that when a new alternative comes into consideration, it can be placed in the overall ranking with other alternatives without repeating the entire procedure, which would be necessary in AHP or similar approaches.

For the future, additional qualitative data analysis using AHP and other outranking methods would be valuable in triangulating plagiarism perceptions and evaluating plagiarism culture. First, although responses to the Likert question were collected, the analysis described in this paper did not use them, since results from the first question provided statistically significant and consistent results. Additional analysis using the Likert data, compared against the results of the analysis already conducted on this data set, might yield additional insight into this study question. Survey devices of future research might also provide a “not plagiarism” response option when soliciting perspectives on kinds or degree of plagiarism to accommodate a wider spectrum of cultural and disciplinary contexts. Such an option might capture compelling data from respondents who do not view case examples in terms of plagiarism or academic dishonesty.

On an externally valid scale, this study confirms a fundamental truism of plagiarism and perceptions about it: institutional cultures of plagiarism are unique and unlikely to conglomerate into a single macro-culture. This truism fundamentally limits any one study’s external validity. However, the strength of this study’s internal validity suggests that, taken together as a research community, the collection of plagiarism perception research does combine into a generalizable, meaningful patchwork. Plagiarism researchers are therefore called to investigate their own institution’s perceptions and culture of plagiarism not only for the internal value of such work in addressing institutional problems, but also to enhance the patchwork so as to help create a broader, more holistic vision of how plagiarism is understood and perceived across institutions.

Notes

iParadigms’ The plagiarism spectrum: Instructor insights into the 10 types of plagiarism (2012) claims to have utilized “both higher and secondary education instructors to take a measure of how prevalent and problematic these [examples] of plagiarism are among their students” (p. 9) in their rankings. However, no further descriptions or details of the study’s population and sample, data collection methods, datasets, or analysis methods are provided.

References

Amiri, F., & Razmjoo, S. A. (2016). On Iranian EFL undergraduate students’ perceptions of plagiarism. Journal of Academic Ethics, 14(2), 115–131.

Bouville, M. (2008). Plagiarism: Words and ideas. Science and Engineering Ethics, 14(3), 311–322.

Brimble, M., & Stevenson-Clarke, P. (2005). Perceptions of the prevalence and seriousness of academic dishonesty in Australian universities. The Australian Educational Researcher, 32(3), 19–44.

Camara, S. K., Eng-Ziskin, S., Wimberley, L., Dabbour, K. S., & Lee, C. M. (2017). Predicting students’ intention to plagiarize: An ethical theoretical framework. Journal of Academic Ethics, 15(1), 43–58.

Cheema, Z. A., Mahmood, S. T., Mahmood, A., & Shah, M. A. (2011). Conceptual awareness of research scholars about plagiarism at higher education level: Intellectual property right and patent. International Journal of Academic Research, 3(1), 666-671.

Childers, D., & Bruton, S. (2016). “Should it be considered plagiarism?” student perceptions of complex citation issues. Journal of Academic Ethics, 14(1), 1–17.

Curtis, G. J., Cowcher, E., Greene, B. R., Rundle, K., Paull, M., & Davis, M. C. (2018). Self-control, injunctive norms, and descriptive norms predict engagement in plagiarism in a theory of planned behavior model. Journal of Academic Ethics, (16), 1–15.

Drew, C. J., Hardman, M. L., & Hosp, J. L. (2007). Designing and conducting research in education. Thousand Oaks: Sage.

ERAU. (2017). ERAU Worldwide Student Handbook 2018–2019. [pamphlet]. Daytona Beach: ERAU.

Eret, E., & Ok, A. (2014). Internet plagiarism in higher education: Tendencies, triggering factors, and reasons among teacher candidates. Assessment & Evaluation in Higher Education, 39(8), 1002–1016.

Evans, G. W. (2016). Multiple criteria decision analysis for industrial engineering: Methodology and applications. Boca Raton: CRC Press.

Gullifer, J., & Tyson, G. A. (2010). Exploring university students' perceptions of plagiarism: A focus group study. Studies in Higher Education, 35(4), 463–481.

Hamlin, A., Barky, C., Powell, G., & Frost, J. (2013). A comparison of university efforts to contain academic dishonesty. Journal of Legal, Ethical and Regulatory Issues, 16(1), 35–46.

Hayes, N., & Introna, L. D. (2005). Cultural values, plagiarism, and fairness: When plagiarism gets in the way of learning. Ethics & Behavior, 15(3), 213–231.

Heckler, N. C., & Forde, D. R. (2015). The role of cultural values in plagiarism in higher education. Journal of Academic Ethics, 13(1), 61–75.

Heiman, G. W. (2006). Basic statistics for the behavioral sciences (5th ed.). Boston: Houghton Mifflin.

Howard, R. M. (1992). Plagiarism and the postmodern professor. Journal of Teaching Writing, 11(2), 233–245.

Howard, R. M. (2007). Understanding “internet plagiarism”. Computers and Composition, 24(1), 3–15.

Hu, G., & Lei, J. (2015). Chinese university students’ perceptions of plagiarism. Ethics & Behavior, 25(3), 233–255.

iParadigms, LLC. (2012). The plagiarism spectrum: Instructor insights into the 10 types of plagiarism (version 0512). Turnitin. www.turnitin.com

Ison, D. (2017). Academic misconduct and the Internet. In D. Velliaris (Ed.), Handbook of Research on Academic Misconduct in Higher Education (pp. 82-111). Hershey, PA.

Ison, D. C. (2012). Plagiarism among dissertations: Prevalence at online institutions. Journal of Academic Ethics, 10(3), 227–236.

Josien, L., & Broderick, B. (2013). Cheating in higher education: The case of multi-methods cheaters. Academy of Educational Leadership Journal, 17(3), 93–105.

Khoshsaligheh, M., Mehdizadkhani, M., & Keyvan, S. (2017). Severity of types of violations of research ethics: Perception of Iranian Master’s students of translation. Journal of Academic Ethics, 15(2), 125–140.

Löfström, E., & Kupila, P. (2013). The instructional challenges of student plagiarism. Journal of Academic Ethics, 11(3), 231–242.

Löfström, E., Huotari, E., & Kupila, P. (2017). Conceptions of plagiarism and problems in academic writing in a changing landscape of external regulation. Journal of Academic Ethics, 15(3), 277–292.

Logie, J. (2005). Cut and paste: Remixing composition pedagogy for online workspaces. In K. St. Amant, & P. Zemliansky (Eds.), Internet-Based Workplace Communications: Industry and Academic Applications (pp. 299-316). IGI Global.

Louw, H. (2017). Defining plagiarism: Student and staff perceptions of a grey concept. South African Journal of Higher Education, 31(5), 116–135.

McDonald, J.H. (2014). Handbook of biological statistics (3rd ed.). Baltimore, MD: Sparky House Pub.

Ramzan, M., Munir, M. A., Siddique, N., et al. (2012). Awareness about plagiarism amongst university students in Pakistan. Higher Education, 64, 73.

Razera, D., Verhagen, H., Pargman, T. C., & Ramberg, R. (2010, June). Plagiarism awareness, perception, and attitudes among students and teachers in Swedish higher education—A case study. In 4th International plagiarism conference–towards an authentic future. Northumbria University in Newcastle Upon Tyne-UK (pp. 21-23).

Reyman, J. (2008). Rethinking plagiarism for technical communication. Technical Communication, 55(1), 61–67.

Rezanejad, A., & Rezaei, S. (2013). Academic dishonesty at universities: The case of plagiarism among Iranian language students. Journal of Academic Ethics, 11(4), 275–295.

Saaty, T. L. (1978). Modeling unstructured decision problems—The theory of analytical hierarchies. Mathematics and Computers in Simulation, 20(3), 147–158.

Sutherland-Smith, W. (2005). Pandora's box: Academic perceptions of student plagiarism in writing. Journal of English for Academic Purposes, 4(1), 83–95.

Sutherland-Smith, W. (2014). Legality, quality assurance and learning: Competing discourses of plagiarism management in higher education. Journal of Higher Education Policy and Management, 36(1), 29–42.

Turnitin, LLC. (2017). Plagiarism.org. Retrieved from https://www.plagiarism.org/

Vie, S. (2013). A pedagogy of resistance toward plagiarism detection technologies. Computers and Composition, 30(1), 3–15.

Walker, J. (2010). Measuring plagiarism: Researching what students do, not what they say they do. Studies in Higher Education, 35(1), 41–59.

Watson, G., Sottile, J., & Liang, J. G. (2014). What is cheating? Student and faculty perception of what they believe is academically dishonest behavior. Journal of Research in Education, 24(1), 120–134.

Wilkinson, J. (2009). Staff and student perceptions of plagiarism and cheating. International Journal of Teaching and Learning in Higher Education, 20(2), 98–105.

Yeo, S. (2007). First-year university science and engineering students’ understanding of plagiarism. Higher Education Research & Development, 26(2), 199–216.

Appendix

Survey Instrument

Cite this article

Denney, V., Dixon, Z., Gupta, A. et al. Exploring the Perceived Spectrum of Plagiarism: a Case Study of Online Learning. J Acad Ethics 19, 187–210 (2021). https://doi.org/10.1007/s10805-020-09364-3

DOI: https://doi.org/10.1007/s10805-020-09364-3