Abstract

The solution of a singular two-point boundary value problem is usually not sufficiently smooth at one or both endpoints of the interval, which makes the problem difficult to solve numerically. In this paper, an algorithm is designed to recognize the singular behavior of the solution and then solve the equation efficiently. First, the singular problem is transformed into a Fredholm integral equation of the second kind via Green’s function. Second, the truncated fractional series of the solution about the singularity is derived by Picard iteration combined with a series expansion of the nonlinear function. Third, a suitable variable transformation is performed using the known singular information of the solution, so that the solution of the transformed equation is sufficiently smooth. Fourth, the Chebyshev collocation method is applied to the transformed equation to obtain an approximate solution with high precision. Fifth, the convergence of the collocation method is analyzed in weighted Sobolev spaces for linear singular equations. Sixth, numerical examples confirm the effectiveness of the algorithm. Finally, as applications, the Thomas–Fermi equation and the Emden–Fowler equation are solved accurately by the method.


1 Introduction

In this paper, we explore the following nonlinear singular two-point boundary value problem

\[ u''(x)+\frac{\alpha }{x}u'(x)=f(x,u(x)),\qquad 0<x\le 1, \tag{1.1}\]

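where f(x, u) may be weakly singular at \(x=0\) and is continuously differentiable with respect to u. We consider two kinds of boundary conditions

\[ u(0)=A,\qquad \sigma u(1)+\gamma u'(1)=B, \tag{1.2}\]

and

\[ u'(0)=0,\qquad \sigma u(1)+\gamma u'(1)=B. \tag{1.3}\]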

We note that Eq. (1.1) is called weakly singular when \(0<\alpha <1\) and strongly singular when \(\alpha \ge 1\) [1]. For the weakly singular case, we can impose all kinds of boundary conditions, but for the strongly singular case, we can only impose \(u'(0)=0\) at the left boundary [2]. For a more general second-order singular differential equation, the existence and uniqueness of the solution have been explored by Kiguradze and Shekhter [3].

Nonlinear singular differential equations of the form (1.1) occur frequently in many areas of science and engineering [4, 5], such as gas dynamics, chemical reactions, atomic calculations, and physiological studies. Famous models include the Emden–Fowler equation [6, 7] and the Lane–Emden equation [8], which arise in mathematical physics and chemical physics, and the Thomas–Fermi equation, which describes the electron distribution in an atom [7, 9,10,11,12]. In mathematics, linear or nonlinear elliptic equations with spherical symmetry naturally reduce, in spherical coordinates, to singular differential equations (1.1) with boundary condition (1.3) [13].

The solution of a singular differential equation is usually not sufficiently smooth at the singularity [14], which degrades the accuracy of standard numerical methods. Jamet [2] proved this for the standard three-point finite difference scheme on a uniform mesh applied to the singular two-point boundary value problem (1.1) with Dirichlet boundary conditions when \(0<\alpha <1\). Ciarlet et al. [15] reached a similar conclusion for a suitable Rayleigh–Ritz–Galerkin method. By constructing an integral identity for this kind of singular two-point boundary value problem, Chawla and Katti [16] proposed a three-point difference method that is of second order under appropriate conditions. Following this integral identity, many finite difference methods with second- or fourth-order accuracy were constructed for weakly or strongly singular differential equations; see, for example, [1, 17,18,19,20,21]. Another treatment of the singularity was proposed by Gustafsson [22], who first wrote the series solution in a neighborhood of the singularity and then employed standard difference schemes on the remaining part of the interval; for further developments of this approach, see [23, 24]. Recently, Roul et al. [25] designed a high-order compact finite difference method for a general class of nonlinear singular boundary value problems with Neumann and Robin boundary conditions. Several collocation methods, including cubic or quartic B-splines, Jacobi interpolation, and Chebyshev series, have also been used to solve singular differential equations numerically [26,27,28,29,30,31,32,33,34,35,36,37]. As stated by Russell and Shampine [31], collocation with piecewise polynomial functions is effective for singular problems with smooth solutions, even though the coefficients are singular. For problems with non-analytic solutions, however, the accuracy deteriorates [36].
Hence, such problems require modifications: for instance, using nonpolynomial bases in the collocation space [34], removing the singularity by splitting the interval into two subintervals [26], or using graded meshes in the finite element method [38]. It is worth mentioning that Huang et al. [30] derived the convergence order of Legendre and Chebyshev collocation methods for a singular differential equation (the linear case of (1.1) with \(\alpha =1\)), and Guo et al. [33] developed the convergence theory of Jacobi spectral methods for differential equations with singularities at both endpoints.

Besides numerical methods, many semi-analytical methods have been developed to obtain approximate series solutions of singular problems, for example, the homotopy perturbation method (HPM) [39], the homotopy analysis method (HAM) [40,41,42,43], and the (improved) Adomian decomposition method (ADM) [44, 45]. These methods transform the singular differential equation into a Fredholm integral equation using Green’s function and then derive recursive schemes based on HPM, HAM, or ADM. We note that the transformed integral equations can also be discretized by numerical methods [46].

The first step in solving a singular differential equation effectively is to describe the singular behavior of the solution at the singularity. A useful tool is the Puiseux series, a generalization of Taylor’s series that may contain negative and fractional exponents and logarithms [47,48,49]. In [50], such series are called psi-series. If a Puiseux series or psi-series contains only fractional exponents, it is also called a fractional Taylor’s series [51, 52]. For the singular differential equation (1.1) with Dirichlet boundary conditions and \(0<\alpha <1\), Zhao et al. [53] first derived the series expansion of the solution about zero, which contains a parameter, and then designed an augmented compact finite volume method to solve the equation. In this paper, we consider the equation with mixed boundary conditions for \(0\le \alpha <\infty \). We aim to explore the singular behavior of the solution at \(x=0\) by deriving the fractional Taylor’s expansion of the solution about zero. The main idea is to first transform the differential equation (1.1) into an equivalent Fredholm integral equation and then obtain the fractional series expansion via Picard iteration. The key point is to expand the nonlinear function f(x, u(x)) about \(x=0\) by symbolic computation. The resulting series expansion contains an unknown parameter, which is essentially determined by the right boundary condition. Since the series expansion may not converge at \(x=1\), we resort to other methods, such as the Chebyshev collocation method [30, 54,55,56,57], to solve the singular differential equation. Nevertheless, the series expansion helps us find a suitable variable transformation under which the solution of (1.1) becomes sufficiently smooth, even though the transformed equation is still singular.
For the transformed equation, the Chebyshev collocation method yields an accurate numerical solution, provided that the function values near the singularity are evaluated with small roundoff errors. Once the numerical values at the nodes are obtained, their interpolant gives an accurate approximation of the unknown parameter in the series expansion.

As stated above, methods for solving singular differential equations usually fall into two categories: series expansion methods and numerical methods. Series expansion methods recognize the singular behavior of the solution automatically when generating the series solution, but they are usually time-consuming. Numerical methods are usually fast, but they cannot recognize the singular features of the solution, so special techniques are needed to treat the singularity. The method in this paper combines the advantages of both classes. Our series expansion method differs from ADM, HPM, and HAM: what we obtain is exactly the truncated series of the solution about zero, which accurately reflects the singular behavior of the solution. We also optimize the iterative procedure so that the series expansion is computed efficiently. Based on this series expansion, it is easy to determine a variable transformation that makes the solution sufficiently smooth. For the transformed differential equation, traditional numerical methods such as finite difference, finite element, and finite volume methods can all be used effectively. In other words, we develop a general technique that allows existing numerical methods to be applied effectively to singular problems. In this paper, we use only the Chebyshev collocation method to obtain highly accurate numerical solutions of singular differential equations.

The outline of the paper is as follows. In Sect. 2, we derive the truncated fractional series of the solution about zero via Picard iteration for the equivalent Fredholm integral equation. In Sect. 3, we first transform the singular differential equation into one with a smooth solution based on the series expansion, and then discretize the transformed differential equation by the Chebyshev collocation method. In Sect. 4, we discuss the convergence of the Chebyshev collocation method for the corresponding linear equation. In Sect. 5, typical examples show that the series expansion algorithm is feasible and that the Chebyshev collocation method is accurate for these nonlinear singular differential equations. In Sect. 6, we apply the method to the Thomas–Fermi equation and the Emden–Fowler equation over a finite interval and derive the fractional series expansions of the solution about the two endpoints. We end with a concise conclusion in Sect. 7.

2 The singular series expansion for the solution about zero

In this section, we consider the nonlinear singular differential equation (1.1) with the boundary condition (1.2) or (1.3). To guarantee the existence and uniqueness of the solution, we need to impose some additional conditions on the function f(x, u), which will be given later. In the following, we first transform the singular two-point boundary value problem into an equivalent Fredholm integral equation of the second kind using Green’s function and then formulate the finite-term truncation of the fractional Taylor’s series of the solution about \(x=0\).

For the boundary value problem (1.1), (1.2), by introducing the Green’s function [44]

we can obtain an equivalent Fredholm integral equation

For the boundary value problem (1.1), (1.3), by introducing the Green’s function [45]

or

we can also obtain an equivalent Fredholm integral equation

Remark 1

Under the assumptions for \(\sigma \) and \(\gamma \) in (1.2) and (1.3), it is easy to show that the Green’s functions defined by (2.1), (2.3), and (2.4) are all nonpositive for \(x,\xi \in [0,1]\).

Next, we derive the fractional Taylor’s expansion of u(x) about \(x=0\) in the form

\[ u(x)=A+a_0x^{1-\alpha }+\sum _{j=1}^{\infty }a_jx^{\alpha _j}. \tag{2.6}\]

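Suppose that f(x, u) is infinitely differentiable with respect to u and possesses the following Taylor’s expansion about \(u=A\):

\[ f(x,u)=\sum _{i=0}^{\infty }\frac{1}{i!}\frac{\partial ^i f(x,A)}{\partial u^i}(u-A)^i. \tag{2.7}\]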

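We further assume that \({\partial ^i f(x,A)}/{\partial u^i}\), \(i=0,1,\ldots \), possess the following fractional Taylor’s expansions about \(x=0\):

\[ \frac{\partial ^i f(x,A)}{\partial u^i}=\sum _{j=1}^{\infty }\eta _{i,j}x^{\rho _{i,j}},\qquad i=0,1,\ldots . \tag{2.8}\]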

Here, we impose an additional condition on \(\rho _{i,j}\), namely \(\rho _{0,1}\le \rho _{1,1}\le \rho _{2,1}\le \cdots \). Substituting (2.6) and (2.8) into (2.7), we can show that f(x, u(x)) possesses the series expansion

\[ f(x,u(x))=\sum _{j=1}^{\infty }c_jx^{\beta _j}. \tag{2.9}\]

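Since we only need a finite-term series expansion of u(x) about \(x=0\), we further write the expansions of u(x) and f(x, u(x)), respectively, as

\[ u(x)=A+a_0x^{1-\alpha }+\sum _{j=1}^{M}a_jx^{\alpha _j}+r_{u,M}(x), \tag{2.10}\]

\[ f(x,u(x))=\sum _{j=1}^{M}c_jx^{\beta _j}+r_{f,M}(x), \tag{2.11}\]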

where M is a suitably large integer, the remainders are \(r_{u,M}(x)=x^{\alpha _{M+1}}z_{u,M}(x)\) (\(\alpha _{M+1}>\alpha _{M}\)) and \(r_{f,M}(x)=x^{\beta _{M+1}}z_{f,M}(x)\) (\(\beta _{M+1}>\beta _{M}\)), and both \(z_{u,M}(x)\) and \(z_{f,M}(x)\) are continuous on [0, 1]. We note that the coefficient \(a_0\) in (2.10) is a free parameter to be determined for the problem (1.1), (1.2), whereas it vanishes for the problem (1.1), (1.3), in which A is the free parameter to be determined.

First, we discuss the problem (1.1) with (1.2). Substituting (2.10) and (2.11) into (2.2), we obtain

Using the Green’s function (2.1), a straightforward computation shows

In view of Remark 1, the last term on the right-hand side of (2.12) is estimated by

where \(C_{f,M}=\max _{x\in [0,1]}|z_{f,M}(x)|\). The estimate (2.13) means that the last integral on the right-hand side of (2.12) generates none of the preceding terms except possibly a term of the form \(x^{1-\alpha }\). Hence, matching the exponents and coefficients of like powers of x on both sides of (2.12) yields

Second, we consider the problem (1.1), (1.3). Since \(a_0=0\) in (2.10) for this problem, we have from (2.5)

For the case \(\alpha \ne 1\), by substituting the Green’s function (2.3) into (2.15), we can obtain

For the case \(\alpha =1\), substituting the Green’s function (2.4) into (2.15) yields

Obviously, the above equation coincides with (2.16) when \(\alpha =1\) is substituted. Hence, we only consider the general case (2.16) in the following. For (2.16), we further assume that \(\beta _1>\alpha -1\), since \(u'(0)=0\). Matching the exponents and coefficients of like powers of x on both sides of (2.16) yields

Comparing (2.14) with (2.17), we see that the singular expansions of u(x) about \(x=0\) are very similar for the two boundary conditions \(u(0)=A\) (\(0\le \alpha <1\)) and \(u'(0)=0\).
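The term-by-term matching behind (2.14) and (2.17) rests on how the operator of (1.1) acts on single powers of x. The short computation below is our own illustration rather than a transcription of those formulas: a power \(x^{\beta+2}\) is mapped to a multiple of \(x^{\beta}\), while \(1\) and (for \(\alpha\ne 1\)) \(x^{1-\alpha}\) are annihilated, which is why A and \(a_0\) remain free.

```latex
\begin{aligned}
\mathcal{L}u &:= u''+\frac{\alpha}{x}\,u',\\
\mathcal{L}\,x^{\beta+2} &= (\beta+2)(\beta+1)\,x^{\beta}+\alpha(\beta+2)\,x^{\beta}
  = (\beta+2)(\beta+1+\alpha)\,x^{\beta},\\
\mathcal{L}\,1 &= 0,\qquad
\mathcal{L}\,x^{1-\alpha} = (1-\alpha)(-\alpha)\,x^{-1-\alpha}+\alpha(1-\alpha)\,x^{-1-\alpha} = 0 .
\end{aligned}
```

Consequently, a term \(c_jx^{\beta_j}\) of (2.11) is matched by \(a_jx^{\alpha_j}\) with \(\alpha_j=\beta_j+2\) and \(a_j=c_j/[(\beta_j+2)(\beta_j+1+\alpha)]\), as long as this denominator does not vanish.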

From (2.14) and (2.17), we see that the key to deriving the singular expansion of u(x) about \(x=0\) is to determine the exponents \(\beta _j\) and the coefficients \(c_j\) of the truncated fractional series of f(x, u(x)) in (2.11). This can be done by symbolic computation, for instance, with the Series command of Mathematica. For nonlinear equations, we use Picard iteration to recover \(\beta _j\) and \(c_j\) step by step. Since \(a_0\) in (2.12) and A in (2.16) cannot be determined from these equations, we treat them as free parameters during the iteration. Concretely, we have the following series expansion algorithm.

Step 1: Let \(u_0(x)=A+a_0 x^{1-\alpha }\) for the problem (1.1), (1.2), where \(a_0\) is a free parameter to be determined; or let \(u_0(x)=A\) for the problem (1.1), (1.3), where A is a free parameter to be determined.

Step 2: For \(j=1,2,\ldots ,M\), compute the fractional series expansion of \(f(x,u_{j-1}(x))\) about \(x=0\) by symbolic computation. We assume that

\[ f(x,u_{j-1}(x))=\sum _{k=1}^{j}c_kx^{\beta _k}+r_{f,j}(x), \tag{2.18}\]

where \(r_{f,j}(x)=x^{\beta _{j+1}}z_{f,j}(x)\) (\(\beta _{j+1}>\beta _{j}\)) is the remainder and the coefficients \(c_k\), \(k=1,2,\ldots ,j\), depend on \(a_0\) (for boundary condition (1.2)) or A (for boundary condition (1.3)). Then let

and repeat for \(j+1\). Finally, we obtain the truncated series

\[ u_M(x)=A+a_0x^{1-\alpha }+\sum _{j=1}^{M}a_jx^{\alpha _j}. \tag{2.20}\]
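Steps 1 and 2 can be imitated without a computer algebra system in a restricted setting. The sketch below is hypothetical and is not the authors' Pesbvp function: it assumes that f is a polynomial in u whose coefficients are finite fractional power series, \(f(x,u)=\sum_i q_i(x)u^i\), fixes the free parameter (A, or A and \(a_0\)) to a trial number, and replaces each term \(c\,x^{\beta}\) of \(f(x,u_{j-1}(x))\) by the particular solution \(c\,x^{\beta+2}/[(\beta+2)(\beta+1+\alpha)]\) of \(u''+(\alpha/x)u'=c\,x^{\beta}\). The iteration stops once the truncated series no longer changes, which mirrors the stabilization described in Theorem 1.

```python
from fractions import Fraction

# A truncated fractional power series is stored as {exponent: coefficient},
# with exponents and coefficients kept as exact Fractions.

def series_add(p, q):
    out = dict(p)
    for e, c in q.items():
        out[e] = out.get(e, 0) + c
    return {e: c for e, c in out.items() if c != 0}


def series_mul(p, q, cutoff):
    """Product of two series, discarding exponents larger than cutoff."""
    out = {}
    for e1, c1 in p.items():
        for e2, c2 in q.items():
            if e1 + e2 <= cutoff:
                out[e1 + e2] = out.get(e1 + e2, 0) + c1 * c2
    return {e: c for e, c in out.items() if c != 0}


def invert_term_by_term(f, alpha):
    """Particular solution of u'' + (alpha/x) u' = sum c x^b, one power at a time.

    Raises ZeroDivisionError in resonant cases (logarithmic terms), which
    this sketch does not handle.
    """
    return {b + 2: c / ((b + 2) * (b + 1 + alpha)) for b, c in f.items()}


def picard_series(q, alpha, u0, cutoff, max_iter=100):
    """Picard iteration u_j = u0 + L^{-1} f(x, u_{j-1}) for f(x,u) = sum_i q[i](x) u^i.

    u0 holds the free part: {0: A} for u'(0)=0, or {0: A, 1 - alpha: a0} for u(0)=A.
    Assumes all exponents in the q[i] exceed -2, so truncating f at cutoff - 2 is exact.
    """
    u = dict(u0)
    for _ in range(max_iter):
        f, power = {}, {Fraction(0): Fraction(1)}  # power runs through u^0, u^1, ...
        for qi in q:
            f = series_add(f, series_mul(qi, power, cutoff - 2))
            power = series_mul(power, u, cutoff)
        new_u = series_add(u0, invert_term_by_term(f, alpha))
        new_u = {e: c for e, c in new_u.items() if e <= cutoff}
        if new_u == u:  # no term changed: the truncated series has stabilized
            return u
        u = new_u
    raise RuntimeError("series did not stabilize; increase max_iter")
```

With \(f(x,u)=\frac94(x^{-1/2}+x)u\), \(\alpha=1\) and \(A=1\), the returned coefficients are \(1/k!\) at the exponents \(3k/2\), i.e. the expansion of \(\mathrm{e}^{x^{3/2}}\); one can check by differentiation that \(4\mathrm{e}^{x^{3/2}}\), the exact solution quoted in Example 1, solves this equation.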

Theorem 1

Suppose that f(x, u) is infinitely differentiable with respect to u and that \({\partial ^i f(x,A)}/{\partial u^i}\), \(i=0,1,\ldots \), possess the fractional Taylor’s expansions with respect to x in (2.8), where \(A=u(0)\). Assume further that in (2.9), \(\beta _1=\rho _{0,1}>-1\) for the problem (1.1), (1.2), and \(\beta _1=\rho _{0,1}>\alpha -1\) for the problem (1.1), (1.3). Then, for \(k = 1, 2, \ldots , j\), the exponents \(\beta _k\) and the coefficients \(c_k\) in the fractional series expansion of \(f(x,u_{j-1}(x))\) do not change as j increases.

Proof

Expanding \(f(x, u_j(x))\) in a Taylor series with respect to u about \(u_{j-1}(x)\) and using (2.8), we obtain from (2.18) and (2.19)

where the exponents of x in the omitted part are all greater than \(2-\alpha +\beta _j+\rho _{1,1}\). By our assumptions on \(\rho _{1,k}\), we have

For the problem (1.1) with (1.2), since \(0 \le \alpha < 1\) and \(\beta _1>-1\), we know \(2-\alpha +\beta _1+\beta _j > \beta _j\). For the problem (1.1) with (1.3), since \(\beta _1>\alpha -1\), we obtain \(2-\alpha +\beta _1+\beta _j>1+\beta _j >\beta _j\). Therefore, we can conclude that \(\beta _k\) and \(c_k\), \(k = 1, 2, \ldots , j\) do not change as j increases for these two different boundary conditions. The proof is complete. \(\square \)

Theorem 2

Under the conditions imposed on f(x, u) in Theorem 1, \(u_M(x)\) given in (2.20) is exactly the truncated fractional Taylor’s series of u(x) about \(x=0\), except that it contains an unknown parameter: \(a_0\) for the problem (1.1), (1.2), or A for the problem (1.1), (1.3) (in which case \(a_0=0\)).

Proof

We only prove the case for the problem (1.1), (1.2). Define

then combining (2.12) and (2.18)–(2.20) with Theorem 1 yields

Further, the estimate (2.13) implies

for suitably small x since \(\beta _{M+1}\rightarrow \infty \) by our assumption. Then letting \(M\rightarrow \infty \) in (2.22) yields

By choosing \(a_0\) such that the coefficient of \(x^{1-\alpha }\) equals zero, we conclude that \(e_M(x)\rightarrow 0\) as \(M\rightarrow \infty \) for sufficiently small x. Hence, letting \(M\rightarrow \infty \) in (2.21), we see that \(u_\infty (x)=A+a_0x^{1-\alpha }+\sum _{j=1}^{\infty }a_jx^{\alpha _j}\) is the series solution u(x) about \(x=0\), and consequently \(u_M(x)\) is exactly the truncated fractional Taylor’s series of u(x) about \(x=0\). A similar argument applies to the problem (1.1), (1.3). The proof is complete. \(\square \)

Remark 2

Theorems 1 and 2 give conditions on f(x, u) under which the boundary value problem has a unique series solution: f(x, A) has an expansion of the form (2.8), and \(\beta _1>-1\) for the problem (1.1), (1.2) or \(\beta _1>\alpha -1\) for the problem (1.1), (1.3). These conditions are easy to check, since \(f(x, A)= \eta _{0,1} x^{\beta _1}+\cdots \).

Remark 3

At the beginning of this section, we assumed that u(x) possesses the fractional Taylor’s expansion (2.6). The proof of Theorem 2 shows that, under the assumptions on f(x, u), the Picard iteration indeed generates this expansion, so assumption (2.6) is justified.

Remark 4

The truncated series expansion \(u_M(x)\) contains an unknown parameter, \(a_0\) for the left boundary condition \(u(0)=A\) or A for \(u'(0)=0\), which should be determined by the right boundary condition \(\sigma u(1)+\gamma u'(1)=B\). As is well known, a truncated Taylor expansion is very accurate near the center of expansion, but its error may grow rapidly away from it, and the same holds for \(u_M(x)\). Although these parameters cannot be determined accurately from the right boundary condition unless the series converges rapidly at \(x=1\), the expansion \(u_M(x)\) at least reveals the singular behavior of the solution, which helps us design high-precision numerical methods for singular differential equations.

Remark 5

We have written a Mathematica function Pesbvp[f,\(\alpha \),M,A,a0] to implement the series expansion algorithm, where f is the given function f(x, u), M is the maximal exponent of the series to be recovered, and a0 and A are the undetermined parameters for the boundary conditions \(u(0)=A\) (in which case A is known) and \(u^\prime (0)=0\), respectively.

Remark 6

If f(x, u) is singular at \(x=1\), we can also use the method in this section to derive the series expansion for u(x) about \(x=1\).

3 Chebyshev collocation method

The results in Sect. 2 reveal that the singular equation (1.1) with boundary condition (1.2) or (1.3) generally has a solution that is not sufficiently smooth, which reduces the accuracy of high-order collocation methods applied directly to it. In this section, we show that this difficulty can be overcome effectively by a suitable smoothing transformation.

From the truncated fractional power series \(u_M(x)\) in (2.20), we can reasonably assume that \(u(x)=A+\sum _{j=0}^M a_j x^{\beta \rho _j}+\cdots \), where \(0<\beta \le 1\) and \(\rho _j\), \(j=0,1,\ldots ,M\), are all integers. Hence, the variable transformation \(t=x^{\beta }\) makes the solution sufficiently smooth in t. Letting \(s=2t-1\) and \(v(s)=u(x)\), we can transform Eq. (1.1) into
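Choosing \(\beta\) from the computed exponents can be automated. The helper below is a hypothetical sketch, not part of the authors' code: it takes the exponents produced by the series expansion (as exact fractions) and returns the largest \(\beta\le 1\) such that every exponent, together with 1, is an integer multiple of \(\beta\). Including 1 is our own choice, made so that integer powers also stay polynomial in t and \(\beta\le 1\) holds automatically.

```python
from fractions import Fraction
from math import gcd


def smoothing_exponent(exponents):
    """Largest beta <= 1 such that 1 and every exponent are integer multiples of beta."""
    beta = Fraction(1)  # start from 1 so that integer powers stay polynomial in t
    for e in exponents:
        e = Fraction(e)
        if e == 0:
            continue
        # gcd of the positive rationals p/q and r/s equals gcd(p*s, r*q) / (q*s)
        num = gcd(beta.numerator * e.denominator, abs(e.numerator) * beta.denominator)
        beta = Fraction(num, beta.denominator * e.denominator)
    return beta


# Exponents 3/2, 3, 9/2, ... give beta = 1/2, i.e. t = sqrt(x) and s = 2*sqrt(x) - 1.
print(smoothing_exponent([Fraction(3, 2), 3, Fraction(9, 2)]))
```

For exponents of the form \(3k/2\), as in Example 1, this yields \(\beta=1/2\), the transformation \(s=2\sqrt{x}-1\) used there.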

where

Meanwhile, the boundary condition (1.2) is converted to

and the boundary condition (1.3) is converted to

We note that Eq. (3.1) is still singular, but its solution is sufficiently smooth. In order to obtain an accurate numerical solution, we solve it by the Chebyshev collocation method [54,55,56,57].
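The origin of the coefficient \(\kappa\) that reappears in Sect. 4 can be seen by carrying out the change of variables directly. The sketch below assumes the form \(u''+(\alpha/x)u'=f(x,u)\) of (1.1); the right-hand side is written out explicitly here and is not claimed to match the paper's notation \(p(s)\) term by term.

```latex
\begin{aligned}
&t=x^{\beta},\quad s=2t-1,\quad v(s)=u(x),\qquad \frac{ds}{dx}=2\beta x^{\beta-1},\\
&u'(x)=2\beta x^{\beta-1}\,v'(s),\qquad
 u''(x)=4\beta^{2}x^{2\beta-2}\,v''(s)+2\beta(\beta-1)\,x^{\beta-2}\,v'(s),\\
&u''+\frac{\alpha}{x}\,u'=4\beta^{2}x^{2\beta-2}\,v''(s)+2\beta(\alpha+\beta-1)\,x^{\beta-2}\,v'(s)=f(x,u),\\
&\Longrightarrow\quad
v''(s)+\frac{\kappa}{1+s}\,v'(s)=\frac{x^{2-2\beta}}{4\beta^{2}}\,f\big(x,v(s)\big),
\qquad \kappa=\frac{\alpha+\beta-1}{\beta},\quad x=\Big(\frac{1+s}{2}\Big)^{1/\beta},
\end{aligned}
```

where the last line follows by dividing by \(4\beta^2x^{2\beta-2}\) and using \(x^{-\beta}=2/(1+s)\). For example, \(\alpha=1\) and \(\beta=1/2\) give \(\kappa=1\) and the right-hand side \(x\,f(x,u)\), while \(\beta=1-\alpha\) (for \(0\le\alpha<1\)) gives \(\kappa=0\).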

We first divide the interval \([-1,1]\) into n subintervals and select as nodes the Chebyshev–Gauss–Lobatto points \(s_j=\cos \left( \pi j/n\right) \), \(j=0,1,\ldots ,n\), which are the extrema of the Chebyshev polynomial \(T_n(s)=\cos (n\arccos s)\) on \([-1,1]\). Then, for v(s), \(s\in [-1,1]\), we construct the Lagrange interpolating polynomial \(v_n(s)\) of degree n on the nodes \(s_j\), \(j=0,1,\ldots ,n\):

\[ v_n(s)=\sum _{j=0}^{n}v(s_j)\,l_j(s), \tag{3.4}\]

where the Lagrange basis functions \(l_j(s)\) read

\[ l_j(s)=\prod _{k=0,\,k\ne j}^{n}\frac{s-s_k}{s_j-s_k},\qquad j=0,1,\ldots ,n.\]

Finally, replacing v(s) in (3.1) with \(v_n(s)\) and requiring the resulting equation to hold at the interior collocation points, we obtain the collocation scheme

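In the following, we show that (3.5) is equivalent to a system of nonlinear equations. Differentiating the Lagrange interpolant (3.4) m times, we have

\[ v_n^{(m)}(s)=\sum _{j=0}^{n}v(s_j)\,l_j^{(m)}(s).\]

Letting \(s=s_i\), we obtain

\[ v_n^{(m)}(s_i)=\sum _{j=0}^{n}l_j^{(m)}(s_i)\,v(s_j),\qquad i=0,1,\ldots ,n. \tag{3.6}\]

By introducing the notation

\[ V=\big (v(s_0),\ldots ,v(s_n)\big )^{\mathrm T},\qquad V_n^{(m)}=\big (v_n^{(m)}(s_0),\ldots ,v_n^{(m)}(s_n)\big )^{\mathrm T},\qquad D^{(m)}=\big (l_j^{(m)}(s_i)\big )_{i,j=0}^{n},\]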

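the formula (3.6) is equivalent to \(V_n^{(m)}=D^{(m)}V\), where \(D^{(m)}\) is called a differentiation matrix, which plays an important role in the collocation method. When \(m=1\), we denote \(D^{(1)}\) by D, whose entries are given explicitly by [54]

\[ D_{ij}=\begin{cases} \dfrac{\widetilde{c}_i}{\widetilde{c}_j}\,\dfrac{(-1)^{i+j}}{s_i-s_j}, & i\ne j,\\[2mm] -\dfrac{s_j}{2(1-s_j^2)}, & i=j=1,2,\ldots ,n-1,\\[2mm] \dfrac{2n^2+1}{6}, & i=j=0,\\[2mm] -\dfrac{2n^2+1}{6}, & i=j=n,\end{cases}\]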

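where \(\widetilde{c}_i=1\) \((i=1,2,\ldots , n-1)\) and \(\widetilde{c}_0=\widetilde{c}_n=2\). Moreover, \(D^{(m)}=D^{m}\) for \(m>1\): the derivative of a polynomial of degree at most n is again a polynomial of degree at most n and is therefore reproduced exactly by interpolation at the \(n+1\) nodes, so differentiating m times amounts to applying D m times.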
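A direct transcription of the explicit entries of D for the Chebyshev–Gauss–Lobatto nodes [54] into Python might look as follows. It is a sketch with plain lists to stay dependency-free, and the function names are ours. Because derivatives of polynomials of degree at most n are reproduced exactly, applying D (or D twice) to nodal values of such a polynomial should return the nodal values of its first (or second) derivative up to rounding.

```python
import math


def cheb_diff_matrix(n):
    """Return CGL nodes s_j = cos(pi j / n) and the matrix D with D[i][j] = l_j'(s_i)."""
    s = [math.cos(math.pi * j / n) for j in range(n + 1)]
    c = [2.0 if j in (0, n) else 1.0 for j in range(n + 1)]  # c~_0 = c~_n = 2
    D = [[0.0] * (n + 1) for _ in range(n + 1)]
    for i in range(n + 1):
        for j in range(n + 1):
            if i != j:  # off-diagonal entries
                D[i][j] = (c[i] / c[j]) * (-1) ** (i + j) / (s[i] - s[j])
    for j in range(1, n):  # interior diagonal entries
        D[j][j] = -s[j] / (2.0 * (1.0 - s[j] ** 2))
    D[0][0] = (2 * n * n + 1) / 6.0  # corner entries
    D[n][n] = -D[0][0]
    return s, D


def apply(D, values):
    """Nodal values of the derivative of the interpolant with the given nodal values."""
    return [sum(d * v for d, v in zip(row, values)) for row in D]


# Check on p(s) = s^5 - 2 s^2 with n = 8: D gives p' and D applied twice gives p''.
s, D = cheb_diff_matrix(8)
p = [x ** 5 - 2 * x ** 2 for x in s]
dp = apply(D, p)
d2p = apply(D, dp)
```

In floating point, many implementations instead set each diagonal entry to minus the sum of the off-diagonal entries in its row, which tends to be more accurate for large n; for moderate n, as used here, the closed form is adequate.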

Denote the solution of (3.5) by \(v_j\), \(j=0,1,\ldots ,n\). Using the differentiation matrices, (3.5) can be converted to a system of nonlinear equations

where the parameters \(\widetilde{c}_+\), \(\widetilde{\alpha }_{0j}\), \(\widetilde{c}_-\), \(\widetilde{\alpha }_{nj}\) are evaluated by

via the boundary condition (3.2). Then

Similarly, for the problem (3.1) with the boundary condition (3.3), we have

where

with the parameters \(\widetilde{c}_+\), \(\widetilde{\alpha }_{0j}\), \(\widetilde{c}_-\), \(\widetilde{\alpha }_{nj}\) being defined by

The nonlinear system (3.7) or (3.9) can be solved by Newton’s method from an initial guess; then (3.8) or (3.10) is used to compute \(v_0\) and \(v_n\). We now discuss how to choose the initial values for Newton’s method. For the first boundary condition, we can use the known conditions \(u(0)=A\), \(u(1)=B\) to construct a linear interpolant that provides the initial values. For the other boundary conditions, we first use the condition \(\sigma u(1)+ \gamma u'(1)=B\) to determine the parameter \(a_0\) or A in (2.20) for \(u_M(x)\), and then evaluate the resulting \(u_M(x)\) to obtain the initial values. As mentioned in Remark 4, this technique does not determine the parameter accurately, but it is sufficient for generating initial values for the iteration. In practical computation, the initial values can also simply be generated randomly. Since the collocation method is highly accurate, we use machine precision (\(2.22045\times 10^{-16}\)) as the stopping criterion for Newton’s method.
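The following sketch shows Newton's method applied to a collocation system of this type, under stated assumptions. The transformed equation is written as \(v''+\frac{\kappa}{1+s}v'=F(s,v)\) (F is our notation for the right-hand side), the right boundary condition is taken as Dirichlet \(v(1)=v_R\), and the left condition is taken as \(v'(-1)=0\), our own stand-in for the transformed condition \(u'(0)=0\). Rows 0 and n of the system carry the boundary conditions directly instead of eliminating \(v_0\), \(v_n\) as in (3.7)–(3.10), and a slightly looser tolerance than machine precision is used. The test problem is the transformed form (\(\beta=1/2\), hence \(\kappa=1\)) of \(u''+u'/x=\frac94(x^{-1/2}+x)u\), whose solution \(4\mathrm{e}^{x^{3/2}}\) matches the exact solution quoted in Example 1; its right-hand side becomes \(\frac94(t+t^4)v\) with \(t=(1+s)/2\).

```python
import math


def cheb(n):
    """CGL nodes s_j = cos(pi j / n) and the first-order differentiation matrix D."""
    s = [math.cos(math.pi * j / n) for j in range(n + 1)]
    c = [2.0 if j in (0, n) else 1.0 for j in range(n + 1)]
    D = [[0.0] * (n + 1) for _ in range(n + 1)]
    for i in range(n + 1):
        for j in range(n + 1):
            if i != j:
                D[i][j] = (c[i] / c[j]) * (-1) ** (i + j) / (s[i] - s[j])
    for j in range(1, n):
        D[j][j] = -s[j] / (2.0 * (1.0 - s[j] ** 2))
    D[0][0] = (2 * n * n + 1) / 6.0
    D[n][n] = -D[0][0]
    return s, D


def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def solve(A, b):
    """Gaussian elimination with partial pivoting (works on a copy of A and b)."""
    m = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for k in range(m):
        p = max(range(k, m), key=lambda r: abs(M[r][k]))
        M[k], M[p] = M[p], M[k]
        for r in range(k + 1, m):
            factor = M[r][k] / M[k][k]
            for col in range(k, m + 1):
                M[r][col] -= factor * M[k][col]
    x = [0.0] * m
    for k in range(m - 1, -1, -1):
        x[k] = (M[k][m] - sum(M[k][j] * x[j] for j in range(k + 1, m))) / M[k][k]
    return x


def collocate(F, dF, kappa, v_right, n, tol=1e-10, max_iter=20):
    """Newton's method for v'' + kappa/(1+s) v' = F(s, v), v(1) = v_right, v'(-1) = 0."""
    s, D = cheb(n)
    D2 = [[sum(D[i][k] * D[k][j] for k in range(n + 1)) for j in range(n + 1)]
          for i in range(n + 1)]  # D^(2) = D^2
    # 1 + s_i = 2 sin^2(pi (n - i) / (2n)) avoids cancellation near s = -1
    one_plus_s = [2.0 * math.sin(math.pi * (n - i) / (2 * n)) ** 2 for i in range(n + 1)]
    v = [v_right] * (n + 1)  # crude constant initial guess
    for _ in range(max_iter):
        Dv, D2v = matvec(D, v), matvec(D2, v)
        R = [0.0] * (n + 1)
        J = [[0.0] * (n + 1) for _ in range(n + 1)]
        R[0], J[0][0] = v[0] - v_right, 1.0  # s_0 = 1: v(1) = v_right
        R[n], J[n] = Dv[n], D[n][:]          # s_n = -1: v'(-1) = 0
        for i in range(1, n):                # interior collocation points
            w = kappa / one_plus_s[i]
            R[i] = D2v[i] + w * Dv[i] - F(s[i], v[i])
            J[i] = [D2[i][j] + w * D[i][j] for j in range(n + 1)]
            J[i][i] -= dF(s[i], v[i])
        dv = solve(J, [-r for r in R])
        v = [a + b for a, b in zip(v, dv)]
        if max(abs(d) for d in dv) <= tol * max(1.0, max(abs(a) for a in v)):
            break
    return s, v


g = lambda s: 2.25 * ((1 + s) / 2 + ((1 + s) / 2) ** 4)
s, v = collocate(lambda s, v: g(s) * v, lambda s, v: g(s), 1.0, 4 * math.e, 18)
err = max(abs(vi - 4 * math.exp(((1 + si) / 2) ** 3)) for si, vi in zip(s, v))
```

Since the node \(s_n=-1\) corresponds to \(x=0\), the last computed value v[-1] is the approximation of \(A=u(0)\).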

Using the computed values \((s_i,v_i)\), \(i=0,1,\ldots ,n\), we construct the Lagrange interpolant \(v_n(s)=\sum _{j=0}^{n}v_j l_j(s)\). Letting \(\widetilde{l}_j(x)= l_j(2x^{\beta }-1)\), we obtain \(u_n(x)=\sum _{j=0}^{n}v_j\widetilde{l}_j(x)\), which is the interpolating approximation of u(x) based on the Chebyshev–Gauss–Lobatto points. Expanding \(u_n(x)\) about \(x=0\), we can also determine the unknown parameter \(a_0\) or A in the truncated series expansion \(u_M(x)\) with high precision.
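Evaluating \(u_n(x)\) through the Lagrange form above is numerically delicate for larger n. A common alternative, which we assume here rather than take from the paper, is the barycentric formula, whose weights for Chebyshev–Gauss–Lobatto points are \((-1)^j\), halved at the two endpoints. The sketch below (hypothetical helper names) builds \(u_n(x)=v_n(2x^{\beta}-1)\); for the boundary condition (1.3), \(u_n(0)=v_n\) is then directly the estimate of the parameter A.

```python
import math


def cgl_interpolant(values):
    """Return a function evaluating the CGL interpolant with the given nodal values.

    Uses the barycentric formula with weights w_j = (-1)^j, halved at j = 0 and j = n.
    """
    n = len(values) - 1
    s = [math.cos(math.pi * j / n) for j in range(n + 1)]
    w = [(-1.0) ** j * (0.5 if j in (0, n) else 1.0) for j in range(n + 1)]

    def v_n(x):
        num = den = 0.0
        for sj, wj, vj in zip(s, w, values):
            if x == sj:  # exactly at a node: return the nodal value
                return vj
            t = wj / (x - sj)
            num += t * vj
            den += t
        return num / den

    return v_n


def u_n(values, beta):
    """u_n(x) = v_n(2 x**beta - 1): the interpolant in the original variable x."""
    v_n = cgl_interpolant(values)
    return lambda x: v_n(2.0 * x ** beta - 1.0)
```

The barycentric form costs O(n) per evaluation and is known to be numerically stable for Chebyshev-type points, which is why it is a natural choice for sampling \(u_n(x)\) near \(x=0\).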

Remark 7

Although the solution of (3.1) is smooth, the equation is still singular. To avoid the loss of significant digits through cancellation, the singular parts must be treated carefully. For instance, when evaluating \(p(s_i)\) in (3.1), we should rewrite it in a form that avoids subtracting nearly equal numbers.
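As an illustration of the kind of cancellation Remark 7 refers to (not a reproduction of the authors' rewriting of \(p(s_i)\)), the snippet compares two ways of forming \(1+s_i\) for the Chebyshev–Gauss–Lobatto nodes \(s_i=\cos(\pi i/n)\): directly, and through the identity \(1+\cos\theta=2\sin^2((\pi-\theta)/2)\). Near \(s=-1\) the quantity \(1+s_i\) is tiny, and forming it as \(1+\cos(\pi i/n)\) loses relative accuracy, whereas the sine form does not. The helper name is ours.

```python
import math


def one_plus_cgl_node(i, n):
    """1 + s_i for s_i = cos(pi i / n), via 1 + cos(theta) = 2 sin^2((pi - theta) / 2)."""
    return 2.0 * math.sin(math.pi * (n - i) / (2 * n)) ** 2


n = 10_000
naive = 1.0 + math.cos(math.pi * (n - 1) / n)  # adds two nearly opposite numbers
stable = one_plus_cgl_node(n - 1, n)           # no cancellation
print(naive, stable, abs(naive - stable) / stable)
```

The relative discrepancy printed in the last column is the accuracy lost by the naive form; it grows as the node approaches \(s=-1\).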

4 Convergence analysis

The collocation method is simple to implement, but its convergence analysis is not. Canuto, Quarteroni, Shen, et al. [55,56,57] established the framework for analyzing the convergence of collocation methods in weighted Sobolev spaces, and Huang et al. [30] and Guo et al. [33] carried out convergence analyses of spectral collocation methods for singular differential equations. In this section, we consider only the linear case of Eq. (3.1) with homogeneous Dirichlet boundary conditions, which we rewrite as

where \(\kappa = \frac{\alpha +\beta -1}{\beta }\) (\(0\le \alpha <1\)) and \(\widetilde{g}=(1+s)g(s)\) is sufficiently smooth about \(s\in [-1,1]\).

Denote by \(v_n(s)\) the collocation solution of (4.1) corresponding to the Chebyshev–Gauss–Lobatto points \(s_i\), \(i=0,1,\ldots ,n\); then

where the Lagrange interpolating polynomial \(v_n(s)\) of degree n reads

\[ v_n(s)=\sum _{j=0}^{n}v_j\,l_j(s),\]

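and \(v_j\) is the approximation of \(v(s_j)\). Denote

\[ \varPhi _{n,0}=\{\phi \in \mathcal {P}_n:\ \phi (-1)=\phi (1)=0\},\]

where \(\mathcal {P}_n\) is the space of polynomials of degree less than or equal to n. With the Chebyshev weight function \(\omega (s)=(1-s^2)^{-1/2}\), the Chebyshev–Gauss–Lobatto quadrature formula holds [55]:

\[ \int _{-1}^{1}p(s)\,\omega (s)\,ds=\sum _{i=0}^{n}p(s_i)\,\omega _i,\qquad \forall p\in \mathcal {P}_{2n-1}, \tag{4.3}\]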

where \(\omega _0=\omega _n=\frac{\pi }{2n}\), \(\omega _i=\frac{\pi }{n}, \ i=1,2,\dots , n-1\).
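The exactness degree \(2n-1\) of the Chebyshev–Gauss–Lobatto rule is what turns the discrete sums into integrals in the argument that follows. A small sketch (our own helper name) makes this concrete: with \(\int_{-1}^{1}s^4(1-s^2)^{-1/2}ds=3\pi/8\), the rule with \(n=3\) (exact up to degree 5) reproduces this value, while \(n=2\) (exact only up to degree 3) returns \(\pi/2\).

```python
import math


def cgl_quadrature(p, n):
    """Chebyshev-Gauss-Lobatto approximation of the integral of p(s) (1 - s^2)^(-1/2) on [-1, 1]."""
    total = 0.0
    for i in range(n + 1):
        w = math.pi / (2 * n) if i in (0, n) else math.pi / n  # omega_0 = omega_n = pi/(2n)
        total += w * p(math.cos(math.pi * i / n))
    return total


exact = 3 * math.pi / 8                         # integral of s^4 against the Chebyshev weight
good = cgl_quadrature(lambda s: s ** 4, 3)      # degree 4 <= 2n - 1 = 5: exact
too_small = cgl_quadrature(lambda s: s ** 4, 2)  # degree 4 > 2n - 1 = 3: not exact
```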

For any \(\phi (s)\in \varPhi _{n,0}\), multiplying the equation in (4.2) by \((1+s_i)\omega _i \phi (s_i)\), summing over \(i=1,\ldots ,n-1\), and noting that \( \phi (s_0)=\phi (s_n)=0\), we have

Noting that \(v_n(s)\) is a polynomial of degree n, we know from (4.3) that Eq. (4.4) is equivalent to

which, after integrating the first integral in (4.5) by parts, yields

By introducing the following notations:

Eq. (4.6) is written as

Analogously, the boundary value problem (4.1) can be transformed to

Then, subtracting (4.9) from (4.10) gives the error equation

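Generally, it is convenient to estimate the error \(v-v_n\) in weighted Sobolev spaces. Denote \(I=(-1,1)\), and let \(\omega ^{(\gamma ,\delta )}(s)=(1-s)^\gamma (1+s)^\delta \) be the Jacobi weight. For this weight, we define the weighted \(L^2\) space

\[ L^2_{\omega ^{(\gamma ,\delta )}}(I)=\{v:\ v \text{ is measurable on } I \text{ and } \Vert v\Vert _{\omega ^{(\gamma ,\delta )}}<\infty \}\]

with inner product and norm

\[ (u,v)_{\omega ^{(\gamma ,\delta )}}=\int _I u(s)v(s)\,\omega ^{(\gamma ,\delta )}(s)\,ds,\qquad \Vert v\Vert _{\omega ^{(\gamma ,\delta )}}=(v,v)^{1/2}_{\omega ^{(\gamma ,\delta )}}.\]

For any integer \(m\ge 0\), we set

\[ H^m_{\omega ^{(\gamma ,\delta )}}(I)=\{v:\ \partial _s^k v\in L^2_{\omega ^{(\gamma ,\delta )}}(I),\ 0\le k\le m\}\]

with norm and semi-norm

\[ \Vert v\Vert _{m,\omega ^{(\gamma ,\delta )}}=\Big (\sum _{k=0}^{m}\Vert \partial _s^kv\Vert ^2_{\omega ^{(\gamma ,\delta )}}\Big )^{1/2},\qquad |v|_{m,\omega ^{(\gamma ,\delta )}}=\Vert \partial _s^mv\Vert _{\omega ^{(\gamma ,\delta )}}.\]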

We also set \(H^1_{\omega ^{(\gamma ,\delta )},0}(I)=\{v\in H^1_{\omega ^{(\gamma ,\delta )}}(I)|\ v(-1)=v(1)=0\}\). When \(\gamma =\delta =-\frac{1}{2}\), the Jacobi weight \(\omega ^{(\gamma ,\delta )}(s)\) reduces to the Chebyshev weight \(\omega (s)=(1-s^2)^{-1/2}\), and the notation for the spaces and norms is simplified accordingly. In the following, we provide some useful lemmas.

Lemma 1

([33], Lemma 3.7) If \(\rho \le \gamma +2\), \(\tau \le \delta +2\), and \(-1<\rho ,\tau <1\), then for any \(v\in H^1_{\omega ^{(\rho ,\tau )},0}(I)\), we have \(\Vert v\Vert _{\omega ^{(\gamma ,\delta )}}\le c |v|_{1,\omega ^{(\rho ,\tau )}}\), where c is a positive constant.

Lemma 2

([30], Lemma 3.6) For \(v,\phi \in H^1_{\omega ,0}(I)\), we have for \(a_\omega (v,\phi )\) defined in (4.7)

Lemma 3

For \(v,\phi \in H^1_{\omega ,0}(I)\), we have for \(b_\omega (v,\phi )\) defined in (4.7)

Proof

From the definition of \(b_\omega (v,\phi )\), noting that \(v(-1)=v(1)=0\), we know

As for (4.15), by the Cauchy–Schwarz inequality, we have

In Lemma 1, taking \(\gamma =-\frac{1}{2}\), \(\delta =-\frac{3}{2}\), \(\rho =-\frac{1}{2}\), \(\tau =\frac{1}{2}\) implies

Hence, (4.15) holds. The proof is complete. \(\square \)

Lemma 4

([33], Lemma 3.16) For the orthogonal projection \(P^0_{n,\gamma ,\delta }\): \(H_{\omega ^{(\gamma ,\delta )},0}^1(I)\rightarrow \varPhi _{n,0}\) defined by \(((v-P^0_{n,\gamma ,\delta }v)^\prime ,\phi ^\prime )_{\omega ^{(\gamma ,\delta )}}=0\), \(v\in H_{\omega ^{(\gamma ,\delta )},0}^1(I)\), \(\forall \phi \in \varPhi _{n,0}\), there holds

where \(c>0\) is a constant and the definition of the norm \(\Vert v\Vert _{m,\omega ^{(\gamma ,\delta )},*}\) can be found in [33].

Lemma 5

([56, 57]) For all \(\phi \in \varPhi _{n,0}\) and \(v\in H_{\omega }^m(I)\), \(m\ge 1\), there holds

In Lemma 1, by taking \(\gamma =\delta =-\frac{1}{2}\), \(\rho =-\frac{1}{2}\), \(\tau =\frac{1}{2}\), we know \(\Vert \phi \Vert _{\omega }\le c |\phi |_{1,\omega ^{(-1/2,1/2)}}\) for \(\phi \in \varPhi _{n,0}\). Hence

Now we state the convergence result for the Chebyshev collocation method. In this section, \(C_1\), \(C_2\), and c denote generic positive constants, which may take different values in different places.

Theorem 3

Suppose that \(v(s)\in H^1_{\omega ,0}(I)\cap H^m_{\omega ^{(-1/2,1/2)}}(I)\) is the solution of the singular differential equation (4.1) (\(0\le \alpha <1\)) and \(v_n(s)\in \varPhi _{n,0}\) is the collocation solution of (4.1) corresponding to \(n+1\) Chebyshev–Gauss–Lobatto points. If \(\kappa =(\alpha +\beta -1)/\beta \) satisfies \(-\frac{1}{2}\le \kappa \le \frac{1}{2}\) (or, equivalently, \(\frac{1}{2}\beta \le 1-\alpha \le \frac{3}{2}\beta \)), then there exist two positive constants \(C_1\), \(C_2\) such that the error \(v-v_n\) is estimated by

where \(\widetilde{g}=(1+s)g(s)\) is sufficiently smooth.

Proof

For any \(\psi \in \varPhi _{n,0}\), we rewrite the error equation (4.11) as

Taking \(\phi =\psi -v_n\) and using (4.13), (4.15), (4.17), we have

From (4.12) and (4.14), we know when \(-\frac{1}{2}\le \kappa \le \frac{1}{2}\)

Combining (4.19) with (4.20) yields

Taking \(\psi =P^0_{n,-1/2,1/2}v\) defined in Lemma 4, we know

Further using the triangle inequality

and Lemma 4, we know (4.18) holds. The theorem is proved. \(\square \)

Theorem 3 shows that the accuracy of the collocation method depends on the smoothness of the solution and of the source term. To increase the accuracy of the scheme, it is therefore necessary to perform a smoothing transformation as in Sect. 3. In this section, we have discussed only a special linear case with \(0\le \alpha <1\); this case includes the most important choice \(\beta =1-\alpha \), which corresponds to \(\kappa =0\). The other cases are left for future work.
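The equivalence of the two conditions on \(\kappa\) in Theorem 3 follows by multiplying through by \(\beta>0\) and rearranging; writing it out also shows which pairs \((\alpha,\beta)\) are covered.

```latex
-\tfrac12\le\kappa=\frac{\alpha+\beta-1}{\beta}\le\tfrac12
\;\Longleftrightarrow\;
-\tfrac{\beta}{2}\le\alpha+\beta-1\le\tfrac{\beta}{2}
\;\Longleftrightarrow\;
\tfrac{\beta}{2}\le 1-\alpha\le\tfrac{3\beta}{2}\qquad(\beta>0).
```

For instance, \(\beta=1\) (no transformation) gives \(\kappa=\alpha\), so the theorem then covers only \(0\le\alpha\le\frac12\), whereas \(\beta=1-\alpha\) gives \(\kappa=0\) for every \(0\le\alpha<1\).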

Remark 8

As stated in [33], the semi-norm \(|v|_{1,\omega ^{(-1/2,1/2)}}\) is a norm of the space \(H^1_{\omega ^{(-1/2,1/2)},0}(I)\), which is equivalent to the norm \(\Vert v\Vert _{1,\omega ^{(-1/2,1/2)}}\). Hence, (4.18) also holds for this norm.

5 Numerical examples

In this section, we present three examples to show the performance of the proposed method. All experiments are performed in Mathematica on a laptop with an Intel Core i5-6200U CPU (2.30 GHz) and 8 GB RAM.

Example 1

Solve the following linear strongly singular two-point boundary value problem using our method, the Adomian decomposition method (ADM) [45], and the optimal homotopy analysis method (OHAM) [41]

Its exact solution is \(u(x)=4\mathrm {e}^{x^{3/2}}\).

In this example, \(\alpha =1\), but \(g(x)=\frac{9}{4}\left( \sqrt{x}+x^2\right) \) involves the term \(\sqrt{x}\). Hence, the solution is not sufficiently smooth at \(x=0\). Applying the series expansion method, we obtain the truncated fractional power series of u(x) about \(x=0\) with the parameter \(A=u(0)\), denoted by

from which we see that the transformation \(s=2\sqrt{x}-1\) makes the solution infinitely smooth in the new variable s. Discretizing the transformed equation (3.1) by the Chebyshev collocation method with \(n=18\), we obtain the fractional Lagrange interpolant

Comparing this approximate solution with \(u_p(x)\), we find that the parameter in the fractional series expansion \(u_p(x)\) is \(A=4\), which leads to

As a comparison, we also solve this example using ADM and OHAM. Since Eq. (5.1) is equivalent to

the Adomian iteration reads

from which the m-th approximate solution of ADM is \(u_m^{\mathrm {adm}}(x)=\sum _{i=0}^m y_i(x)\). We note that the parameter A is determined by the right boundary condition. A direct computation shows

The OHAM is based on the integral equation (2.5). By introducing an optimal parameter h, the OHAM reads

from which the m-th approximate solution of OHAM is \(u_m^{\mathrm {oham}}(x)=\sum _{i=0}^m y_i(x)\). The parameter h is determined by minimizing the error

When \(m=6\), we obtain the optimal \(h=-0.394885\) and the approximate solution

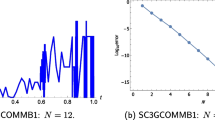

We note that, when \(h=-1\), the OHAM reduces to the improved Adomian decomposition method [45], which does not converge for this example. To compare the accuracy of these approximations, we plot their absolute errors on a logarithmic scale in Fig. 1.

Fig. 1. The absolute errors of the approximate solutions to the exact solution in Example 1

It can be seen from Fig. 1 that the Chebyshev collocation method with the smoothing transformation achieves the highest accuracy, even though the highest power of x in \(u_{tc}(x)\) is only half of those in the other two approximations, while the OHAM has the lowest accuracy in this example. Since the standard OHAM converges slowly, Roul and Madduri [42] designed a domain decomposition OHAM to accelerate its convergence. As expected, the series expansion \(u_{p}(x)\) approximates u(x) with high precision only on a small interval near \(x=0\); as x moves from 0 toward 1, its accuracy gradually decreases. We also recorded the CPU time of each method. For our method, the total CPU time for generating \(u_p(x)\) and computing \(u_{tc}(x)\) is only 0.03125 s, whereas the ADM and OHAM require 8.04688 s and 99.6719 s, respectively. Hence, our method is much faster than the other two. Although the iteration procedures of the ADM and OHAM are very simple, they are time-consuming because many integrals in their iterations are evaluated analytically by symbolic computation.
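To make the ADM side of this comparison concrete, the iteration for Example 1 can be sketched in Python. Since Eq. (5.1) and the Adomian recursion are not reproduced above, the sketch assumes that (5.1) reads \((xu')'=g(x)u\) with \(u'(0)=0\) and \(u(1)=4\mathrm {e}\); this form is an assumption, chosen because the stated exact solution \(4\mathrm {e}^{x^{3/2}}\) and g(x) satisfy it. For a linear right-hand side, the Adomian polynomials reduce to \(g\,y_i\), so \(y_0=A\) and \(y_{i+1}(x)=\int _0^x \frac{1}{t}\int _0^t g(\tau )y_i(\tau )\,\mathrm {d}\tau \,\mathrm {d}t\). Instead of a computer algebra system, each term is kept as an exact sum of rational powers of x; the helper names (`apply_K`, `adm_shape`, `evaluate`) are hypothetical.

```python
import math
from fractions import Fraction as F

# g(x) = 9/4 (x^(1/2) + x^2), stored as {exponent: coefficient}
G = {F(1, 2): F(9, 4), F(2): F(9, 4)}


def apply_K(y):
    """Return K y with (K y)(x) = int_0^x (1/t) int_0^t g(tau) y(tau) dtau dt.

    y is a finite sum of powers {p: c}; each product term c*gc*tau^(p+e) is
    integrated twice (with the 1/t weight in between) to c*gc*x^q / q^2, q = p+e+1.
    """
    out = {}
    for p, c in y.items():
        for e, gc in G.items():
            q = p + e + 1
            out[q] = out.get(q, F(0)) + gc * c / q**2
    return out


def adm_shape(m):
    """phi_m = 1 + K1 + ... + K^m 1, so that u_m^adm(x) = A * phi_m(x) in this linear case."""
    term = {F(0): F(1)}          # y_0 / A = 1
    total = dict(term)
    for _ in range(m):
        term = apply_K(term)     # y_{i+1} / A = K (y_i / A)
        for p, c in term.items():
            total[p] = total.get(p, F(0)) + c
    return total


def evaluate(y, x):
    return sum(float(c) * x ** float(p) for p, c in y.items())


m = 6
phi = adm_shape(m)
A = 4.0 * math.e / evaluate(phi, 1.0)      # right boundary condition u(1) = 4e fixes A
err = max(abs(A * evaluate(phi, x) - 4.0 * math.exp(x**1.5)) for x in (0.25, 0.5, 0.75, 1.0))
print(f"m = {m}: A = {A:.8f}, max sampled error = {err:.1e}")
```

Because \(u_m^{\mathrm {adm}}=A\,\phi _m\) is linear in A here, the right boundary condition fixes A by a single division; for a nonlinear f(x, u), this step would instead require solving an algebraic equation for A.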

Example 2

Solve the following nonlinear singular two-point boundary value problem:

Its exact solution is \(u(x)=\left( \arcsin (1-x)\right) ^2\).

For this example, f(x, u) is clearly weakly singular at \(x=0\). Even so, we can still use the Mathematica series expansion function Pesbvp[f,1/2,10,A,\(a_0\)] to obtain

Based on this series expansion, we choose the smoothing transformation \(s=2\sqrt{x}-1\). By applying the Chebyshev collocation method with \(n=20\) to the transformed equation (3.1), we can obtain the Chebyshev interpolation

which is an approximate solution to u(x). Since both \(u_{tc}(x)\) and \(u_p(x)\) are accurate for small x, matching them near \(x=0\) gives \(a_{0}=-4.442882938175524\) in the series expansion \(u_p(x)\). Thus

For comparison, we apply the Chebyshev collocation method (taking \(n=20\)) directly to solve the problem (5.2) and obtain an approximate solution

The absolute errors of the approximate solutions \(u_{tc}(x)\), \(u_{p}(x)\), and \(u_{c}(x)\) are plotted in Fig. 2 on a logarithmic scale.

Fig. 2. The absolute errors of the approximate solutions to the exact solution in Example 2

It can be seen from Fig. 2 that the approximate solution \(u_{c}(x)\) has low accuracy, with errors of order \(10^{-1}\). After the smoothing transformation, \(u_{tc}(x)\) is highly accurate, with errors of order \(10^{-13}\). In addition, the fractional power series \(u_{p}(x)\) is a good approximation to u(x) for \(x\le 0.25\), but it gradually loses accuracy as x tends to 1.
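The matching step used above to fix \(a_0\) can be sketched in a few lines. The sketch is illustrative only: it uses the exact solution \(u(x)=(\arcsin (1-x))^2\) as a stand-in for the collocation solution \(u_{tc}(x)\), and it assumes that the expansion starts as \(u_p(x)=A+a_0x^{1/2}+O(x)\), which is consistent with the exact solution (it gives \(A=\pi ^2/4\) and \(a_0=-\sqrt{2}\,\pi \approx -4.4428829381584\), in agreement with the value of \(a_0\) reported above to about ten significant digits). In the variable \(t=\sqrt{x}\), the function \(u(t^2)\) is smooth on [0, 1], so a Chebyshev interpolant in t can simply be evaluated and differentiated at \(t=0\). The function name `u_in_t` and the degree 30 are hypothetical choices.

```python
import numpy as np
from numpy.polynomial import Chebyshev


def u_in_t(t):
    # v(t) = u(t^2) with u(x) = arcsin(1 - x)^2, the exact solution of Example 2
    return np.arcsin(1.0 - t**2) ** 2


# Chebyshev interpolant of v on t in [0, 1] (sampled at first-kind Chebyshev points);
# v is analytic there, so the interpolant converges geometrically
v = Chebyshev.interpolate(u_in_t, 30, domain=[0.0, 1.0])

# u = A + a0 * t + O(t^2) in t = sqrt(x), hence A = v(0) and a0 = v'(0)
A_est = v(0.0)
a0_est = v.deriv()(0.0)
print(A_est, np.pi**2 / 4)
print(a0_est, -np.pi * np.sqrt(2.0))
```

In the actual algorithm, `u_in_t` would be replaced by the collocation solution written in the smoothed variable.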

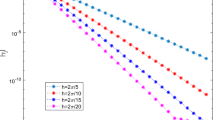

We further explore the convergence of the Chebyshev collocation method for the transformed equation with different n; the results are shown in Table 1, where \(E_n=\max _{0\le x\le 1}|u(x)-u_{tc}(x)|\) denotes the maximal absolute error and the order is defined by \(\log E_n/\log E_{2n}\). Table 1 shows that the order is about 0.5, which indicates \(E_n=O\left( \exp (-\theta n)\right) \) for some \(\theta >0\): if \(E_n\approx C\mathrm {e}^{-\theta n}\), then \(\log E_n/\log E_{2n}=(\log C-\theta n)/(\log C-2\theta n)\rightarrow \frac{1}{2}\) as \(n\rightarrow \infty \). Rubio et al. [58] refer to this behavior as exponential convergence. Note that only algebraic convergence is analyzed in Sect. 4. In addition, the CPU time increases only slowly as n is doubled.
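The order defined above can be computed as follows. This sketch does not reproduce the collocation errors of Table 1; as a stand-in, it measures on a fine grid the maximal error of Chebyshev interpolation of the exact solution of Example 2 written in the variable \(s=2\sqrt{x}-1\), for the illustrative degrees 4, 8, and 16, and then forms \(\log E_n/\log E_{2n}\). Ratios near 1/2 indicate exponential convergence, whereas algebraic decay \(E_n=Cn^{-p}\) would drive the ratio toward 1. The helper names are hypothetical.

```python
import numpy as np
from numpy.polynomial import Chebyshev


def u_exact(x):
    return np.arcsin(1.0 - x) ** 2


def v_of_s(s):
    # transformed solution v(s) = u(x) with x = ((s + 1) / 2)^2, i.e. s = 2*sqrt(x) - 1
    return u_exact(((s + 1.0) / 2.0) ** 2)


s_fine = np.linspace(-1.0, 1.0, 2001)


def max_error(n):
    p = Chebyshev.interpolate(v_of_s, n)        # degree-n interpolant on [-1, 1]
    return np.max(np.abs(p(s_fine) - v_of_s(s_fine)))


degrees = [4, 8, 16]
errors = [max_error(n) for n in degrees]
for n, e_n, e_2n in zip(degrees, errors, errors[1:]):
    order = np.log(e_n) / np.log(e_2n)
    print(f"n = {n:2d}   E_n = {e_n:.2e}   log(E_n)/log(E_2n) = {order:.3f}")
```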

Example 3

Solve the nonlinear strongly singular two-point boundary value problem

with the exact solution \(u(x)=\cos \left( \pi x^{4/3}/2\right) \).

This example is a strongly singular problem. By means of the proposed series expansion method, we obtain the truncated fractional series expansion for u(x) about \(x=0\)

Hence, we choose the smoothing transformation \(s=2x^{2/3}-1\). By applying the Chebyshev collocation method with \(n=20\) to the new equation, we obtain the Lagrange interpolant

From this approximate solution, we find the parameter \(A=1\) in the series expansion \(u_p(x)\), which leads to

We can also directly solve Eq. (5.3) using the Chebyshev collocation method (taking \(n=20\)) to obtain an approximate solution \(u_c(x)\). The absolute errors of the three approximate solutions \(u_{tc}(x)\), \(u_{p}(x)\), and \(u_{c}(x)\) to the exact solution u(x) are plotted in Fig. 3.

Fig. 3. The absolute errors of the approximate solutions to the exact solution in Example 3

We can see from Fig. 3 that the approximate solution \(u_{c}(x)\) attains an accuracy of only about \(10^{-6}\), because the exact solution is only twice continuously differentiable on [0, 1]. With the smoothing transformation, \(u_{tc}(x)\) achieves an accuracy of about \(10^{-14}\). For this example, the series expansion \(u_{p}(x)\) is highly accurate for \(x\le 0.2\), but it becomes less accurate as \(x\rightarrow 1\).
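The gap between \(u_c(x)\) and \(u_{tc}(x)\) is already visible at the level of interpolation. Near \(x=0\), \(u(x)=1-\frac{\pi ^2}{8}x^{8/3}+\cdots \), so u is \(C^2\) but not \(C^3\) on [0, 1], whereas in the variable \(s=2x^{2/3}-1\) one has \(x^{4/3}=((s+1)/2)^2\), and the solution becomes the entire function \(\cos \left( \pi ((s+1)/2)^2/2\right) \). The sketch below interpolates the exact solution (it does not solve Eq. (5.3)) and compares degree-20 Chebyshev interpolants in x and in s; the function names are hypothetical.

```python
import numpy as np
from numpy.polynomial import Chebyshev


def u_exact(x):
    return np.cos(np.pi * x ** (4.0 / 3.0) / 2.0)


def v_of_s(s):
    # u expressed in s = 2 x^(2/3) - 1, i.e. x = ((s + 1) / 2)^(3/2);
    # then x^(4/3) = ((s + 1) / 2)^2, so v(s) = cos(pi * ((s + 1) / 2)^2 / 2) is entire
    return np.cos(np.pi * ((s + 1.0) / 2.0) ** 2 / 2.0)


n = 20
p_x = Chebyshev.interpolate(u_exact, n, domain=[0.0, 1.0])   # direct, in x
p_s = Chebyshev.interpolate(v_of_s, n)                        # transformed, in s on [-1, 1]

x_fine = np.linspace(0.0, 1.0, 4001)
s_fine = 2.0 * x_fine ** (2.0 / 3.0) - 1.0
err_x = np.max(np.abs(p_x(x_fine) - u_exact(x_fine)))
err_s = np.max(np.abs(p_s(s_fine) - u_exact(x_fine)))
print(f"direct in x: {err_x:.1e}   transformed in s: {err_s:.1e}")
```

Both interpolants use 21 points; only the variable in which the solution is sampled differs.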

The three examples in this section demonstrate that the Chebyshev collocation method can be applied directly to singular two-point boundary value problems, but the resulting approximation is inaccurate because of the low regularity of the exact solution u(x) at \(x=0\). The smoother the solution, the higher the accuracy of the Chebyshev collocation method. The proposed series decomposition method accurately describes the singular behavior of the solution through the fractional series expansion, from which a suitable smoothing transformation is chosen so that the solution of the transformed equation is sufficiently smooth. For the transformed equation, the accuracy of the Chebyshev collocation method improves significantly. In addition, the Lagrange interpolant of the Chebyshev collocation solution determines the unknown parameter \(a_0\) or A in the fractional series expansion with high precision. In most cases, the fractional series expansion is highly accurate only in a neighborhood of \(x=0\) and gradually loses accuracy away from this point.
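The workflow summarized above (series expansion, smoothing transformation, Chebyshev collocation) can be sketched for the linear Example 1. This is a minimal sketch under stated assumptions, not the authors' implementation: it assumes that Eq. (5.1) reads \((xu')'=g(x)u\) with \(u'(0)=0\) and \(u(1)=4\mathrm {e}\) (a form consistent with the stated exact solution and g(x)), and it collocates the transformed differential equation directly at Chebyshev–Gauss–Lobatto points, which may differ from the formulation used in Sect. 3. With \(s=2\sqrt{x}-1\), write \(x=r^2\), \(r=(s+1)/2\in [0,1]\), and \(v(r)=u(r^2)\); since \((xu')'=(v''+v'/r)/4\), the problem becomes \(rv''+v'=9(r^2+r^5)v\), \(v'(0)=0\), \(v(1)=4\mathrm {e}\), whose solution \(v(r)=4\mathrm {e}^{r^3}\) is entire. The helper names are hypothetical.

```python
import numpy as np


def cheb(N):
    """Chebyshev-Gauss-Lobatto points xi_j = cos(pi j / N), j = 0..N, and the
    first-order differentiation matrix D (classical construction, as in
    Trefethen's "Spectral Methods in MATLAB")."""
    xi = np.cos(np.pi * np.arange(N + 1) / N)
    c = np.hstack([2.0, np.ones(N - 1), 2.0]) * (-1.0) ** np.arange(N + 1)
    X = np.tile(xi, (N + 1, 1)).T            # X[i, j] = xi[i]
    dX = X - X.T                             # dX[i, j] = xi[i] - xi[j]
    D = np.outer(c, 1.0 / c) / (dX + np.eye(N + 1))
    D -= np.diag(D.sum(axis=1))              # diagonal entries from the negative row sums
    return D, xi


def solve_transformed_example1(N=16):
    """Collocate r v'' + v' = 9 (r^2 + r^5) v on r in [0, 1] with v'(0) = 0, v(1) = 4e."""
    D, xi = cheb(N)
    r = (xi + 1.0) / 2.0                     # r[0] = 1, r[N] = 0
    Dr = 2.0 * D                             # chain rule: d/dr = 2 d/dxi
    A = r[:, None] * (Dr @ Dr) + Dr - np.diag(9.0 * (r**2 + r**5))
    b = np.zeros(N + 1)
    A[0, :] = 0.0                            # row at r = 1: Dirichlet condition v(1) = 4e
    A[0, 0] = 1.0
    b[0] = 4.0 * np.e
    A[N, :] = Dr[N, :]                       # row at r = 0: regularity condition v'(0) = 0
    v = np.linalg.solve(A, b)
    return r, v


r, v = solve_transformed_example1(16)
x = r**2                                     # back to the original variable x = ((s + 1)/2)^2
print("max nodal error:", np.max(np.abs(v - 4.0 * np.exp(x**1.5))))
```

In this sketch, the row at \(r=0\) could equally be the collocated equation itself, because at \(r=0\) the equation reduces to \(v'(0)=0\).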

6 Applications to the Thomas–Fermi equation and Emden–Fowler equation

In this section, we apply our method to the Thomas–Fermi equation and the Emden–Fowler equation on a finite interval. We first consider the Thomas–Fermi equation, which models the potentials and charge densities in atoms [7, 9, 10, 11, 12]. The equation is

There are three kinds of boundary conditions of interest for this equation [9, 10]. Here, we only consider the ion case

Using the Mathematica function Pesbvp[f,0,10,1,a0], we obtain the series expansion of the solution u(x) about \(x=0\) with an undetermined parameter \(a_0\)

Next, we discuss the asymptotic behavior of u(x) about \(x=1\). Since \(u(1)=0\), we can reasonably let \(u(x)=(1-x)^\beta w(x)\), where \(\beta >0\) and \(w(1)=b_0\ne 0\). Substituting it into (6.1) yields

Noting that \(w(x)=b_0+(1-x)^{\beta _1}g(x)\), \(\beta _1>0\), we can deduce that the third term on the left-hand side of (6.4) must vanish, which means \(\beta =1\). Hence, Eq. (6.4) becomes

Obviously, f(x, w) is infinitely differentiable with respect to w at \(w=b_0\). Hence, w(x) possesses the fractional power series about \(x=1\). Substituting \(w(x)=b_0+b_1(1-x)^{\gamma _1}+\cdots \) into (6.5), we can deduce \(\gamma _1=\frac{5}{2}\), which means we can impose a right boundary condition \(w^\prime (1)=0\) for Eq. (6.5). Hence, we can obtain the series expansion of w(x) about \(x=1\) by using our decomposition method for Eq. (6.5) at \(x=1\), denoted by \(w_p(x)\). Further by letting \(u_{p,r}(x)=(1-x)w_p(x)\), we have

where \(b_0\) is a parameter to be determined.

Remark 9

Historically, many researchers have studied series expansions of the solution of the Thomas–Fermi equation. As early as 1930, Baker [59] obtained the series expansion (6.3) for the Cauchy problem of the Thomas–Fermi equation. Hille [10] proved the convergence of this series, obtained its radius of convergence, and also discussed the series expansion (6.6). Here, we reproduce these series expansions with our method.

From (6.3) and (6.6), we see that the solution u(x) is not smooth at either endpoint. However, the variable substitution \(t=2\sqrt{1-\sqrt{1-x}}-1\) in (6.1) makes the solution \(v(t)=u(x)\), \(t\in [-1,1]\), sufficiently smooth. Correspondingly, Eq. (6.1) with boundary condition (6.2) is transformed to

By taking \(n=20\) in the Chebyshev collocation method, we obtain an approximate solution to the transformed Thomas–Fermi equation (6.7). Substituting \(t=2\sqrt{1-\sqrt{1-x}}-1\) back, we obtain

In order to show the accuracy of the approximate solution, we introduce an error function

which is plotted in Fig. 4 on a logarithmic scale. The figure also shows the error \(e_c(x)\) (dashed line) of the approximation obtained by applying the Chebyshev collocation method directly to the original Thomas–Fermi equation (6.1). Clearly, the approximation computed after the transformation is more accurate than the direct Chebyshev collocation solution, which further confirms the effectiveness of the smoothing transformation.

By expanding \(u_{tc}(x)\) as fractional power series about \(x=0\) and \(x=1\), respectively, we find \(a_0=-1.9063841613597106\) in (6.3) and \(b_0=0.8365830238318072\) in (6.6). We can then plot the errors \(|u_{tc}(x)-u_{p,l}(x)|\) and \(|u_{tc}(x)-u_{p,r}(x)|\) on a logarithmic scale, as shown in Fig. 5.

It can be seen from Fig. 5 that the collocation solution \(u_{tc}(x)\) agrees with the series expansions \(u_{p,l}(x)\) and \(u_{p,r}(x)\) as \(x\rightarrow 0\) and \(x\rightarrow 1\), respectively. Hence, it is a good approximation to the solution of the Thomas–Fermi equation (6.1) with boundary condition (6.2) over the whole interval [0, 1].
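The substitution \(t=2\sqrt{1-\sqrt{1-x}}-1\) used above can be checked numerically. With \(r=(t+1)/2\), it inverts to \(x=1-(1-r^2)^2=r^2(2-r^2)\), so \(\sqrt{1-x}=1-r^2\) and \(\sqrt{x}=r\sqrt{2-r^2}\) are both smooth functions of t on [−1, 1]. Assuming, as \(\gamma _1=\frac{5}{2}\) suggests, that (6.3) and (6.6) proceed in powers of \(x^{1/2}\) and \((1-x)^{1/2}\), all of their terms therefore become smooth in t. The sketch below (hypothetical helper names) verifies these identities and the round trip on a grid.

```python
import numpy as np


def t_of_x(x):
    # smoothing substitution t = 2*sqrt(1 - sqrt(1 - x)) - 1, mapping [0, 1] onto [-1, 1]
    return 2.0 * np.sqrt(1.0 - np.sqrt(1.0 - x)) - 1.0


def x_of_t(t):
    # inverse map: with r = (t + 1)/2, r^2 = 1 - sqrt(1 - x), so x = 1 - (1 - r^2)^2
    r = (t + 1.0) / 2.0
    return 1.0 - (1.0 - r**2) ** 2


t = np.linspace(-1.0, 1.0, 201)
r = (t + 1.0) / 2.0
x = x_of_t(t)

# the half-integer powers become smooth functions of t:
#   sqrt(1 - x) = 1 - r^2        and        sqrt(x) = r * sqrt(2 - r^2)
print(np.max(np.abs(np.sqrt(1.0 - x) - (1.0 - r**2))))
print(np.max(np.abs(np.sqrt(x) - r * np.sqrt(2.0 - r**2))))
print(np.max(np.abs(t_of_x(x) - t)))        # round trip t -> x -> t
```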

Now we consider the Emden–Fowler equation, which can be supplemented with initial or boundary conditions [6, 7, 12, 43]. Here, we discuss only the first boundary value problem, which reads [6]

where \(\alpha \) and \(\rho \) are real numbers satisfying \(0<\alpha <1\), \(\rho >0\). Obviously, this equation has the trivial solution \(u=0\); here, we seek a positive solution. By introducing the variable transformation \(y=x^{1-\alpha }\) and letting \(v(y)=u(x)\), we obtain

where \(\mu =(\alpha +\theta )/(1-\alpha )\). Here, we further require that \(\mu \ge 0\). Now we discuss the asymptotic behavior of v(y) near \(y=0\) and \(y=1\). Since \(v(0)=0\), it is reasonable to assume that \(v(y)=y^\beta w_l(y)\), where \(\beta >0\) and \(w_l(0)=a_0>0\). Substituting it into (6.11) yields

The fact \(w_l(0)=a_0>0\) implies \(w_l(y)=a_0+y^{\gamma _1}g(y)\), where \(\gamma _1>0\), g(y) is continuous over [0, 1] and \(g(0)\ne 0\). Substituting this expression of \(w_l(y)\) into (6.13) yields

For Eq. (6.14), the only term involving \(y^{-2}\) (the smallest exponent of y) must vanish; hence \(\beta =1\). Further, since the coefficient of the term involving \(y^{\gamma _1-2}\) is nonzero (\(\gamma _1(\gamma _1+1)>0\), \(g(0)\ne 0\)), we must set \(\gamma _1-2=\mu +\rho -1\Rightarrow \gamma _1=\mu +\rho +1\) so that the terms involving \(y^{\gamma _1-2}\) cancel. Since \(\gamma _1=\mu +\rho +1>1\), we can impose a left boundary condition \(w_l^\prime (0)=0\) on Eq. (6.14). Finally, we obtain a boundary value problem from (6.13)

Analogously, we can show \(v(y)=(1-y) w_r(y)\) and \(w_r(y)\) satisfies

Obviously, (6.15) and (6.16) are of the standard form considered in Sect. 2, although (6.16) is singular at the right boundary \(y=1\). Hence, we can generate the series expansions of \(w_l(y)\) and \(w_r(y)\) about \(y=0\) and \(y=1\), respectively, by the algorithm in Sect. 2. In the following, we show that the exponents of the series expansion of v(y) about \(y=0\) for the problem (6.11), (6.12) can be determined directly from (2.14). From the above deduction, we can suppose that \(v(y)=a_0 y+\sum _{j=1}^{\infty }a_j y^{\alpha _j}\). Substituting \(v_0(y)=a_0 y\) into (2.18), we find \(\beta _1=\mu +\rho \), and hence \(\alpha _1=2+\mu +\rho \). Further, letting \(v_1(y)=a_0 y+a_1 y^{\alpha _1}\), the binomial expansion of \(v_1(y)^\rho \) implies \(\beta _2=1+2(\mu +\rho )\), which gives \(\alpha _2=3+2(\mu +\rho )\). Recursively, we conclude that \(\alpha _j=j+1+j(\mu +\rho )\), \(j=1,2,\ldots \). By similar arguments, the exponents of the series expansion of v(y) about \(y=1\) have the form \(\{1\}\cup \{i+j \rho \}\) \((i\ge 2, j=1,2,\ldots ,i-1)\). We consider only the case in which both \(\mu \) and \(\rho \) are rational. Let \(\mu +\rho ={\xi _0}/{\eta _0}\) and \(\rho ={\xi _1}/{\eta _1}\), where \(\xi _0\), \(\eta _0\) are coprime and \(\xi _1\), \(\eta _1\) are coprime. Then the variable substitution \(t=\left( 1-(1-y)^{1/\eta _1}\right) ^{1/\eta _0}\) makes the solution of Eq. (6.11) sufficiently smooth; letting \(w(t)=v(y)\), we obtain

where

Choosing \(\alpha =\frac{1}{2}\), \(\theta =-\frac{1}{3}\), and \(\rho =\frac{1}{3}\) in (6.9), we have \(\mu =\frac{1}{3}\) and \(\mu +\rho =\frac{2}{3}\), so that \(\eta _0=\eta _1=3\) in (6.17). Applying the Chebyshev collocation method with \(n=20\) to Eq. (6.17) with boundary condition (6.18), we obtain the approximate solution

which is plotted in Fig. 6.
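The exponent bookkeeping above can be carried out with exact rational arithmetic. The sketch below (hypothetical helper names) computes \(\mu =(\alpha +\theta )/(1-\alpha )\), the denominators \(\eta _0\) and \(\eta _1\), the first exponents \(\alpha _j=j+1+j(\mu +\rho )\) of v(y) about \(y=0\), and the substitution \(t=\left( 1-(1-y)^{1/\eta _1}\right) ^{1/\eta _0}\) with its inverse \(y=1-(1-t^{\eta _0})^{\eta _1}\), for the parameters \(\alpha =\frac{1}{2}\), \(\theta =-\frac{1}{3}\), \(\rho =\frac{1}{3}\) used above. Near \(y=0\) one has \(y\approx \eta _1t^{\eta _0}\), so \(y^{\alpha _j}\) behaves like \(t^{\eta _0\alpha _j}\), which should be an integer power of t. Note that this t runs over [0, 1]; an additional affine map to [−1, 1] would be needed before Chebyshev collocation.

```python
from fractions import Fraction as F


def emden_fowler_setup(alpha, theta, rho, count=4):
    """mu, eta0, eta1 and the first exponents of v(y) about y = 0 (rational inputs)."""
    mu = (alpha + theta) / (1 - alpha)
    if mu < 0:
        raise ValueError("the construction requires mu >= 0")
    s = mu + rho                          # s = xi0 / eta0 in lowest terms
    eta0, eta1 = s.denominator, rho.denominator
    # v(y) = a0 y + sum_j a_j y^(alpha_j) with alpha_j = j + 1 + j (mu + rho)
    exponents = [F(1)] + [j + 1 + j * s for j in range(1, count)]
    return mu, eta0, eta1, exponents


def t_of_y(y, eta0, eta1):
    # smoothing substitution t = (1 - (1 - y)^(1/eta1))^(1/eta0), mapping [0, 1] onto [0, 1]
    return (1.0 - (1.0 - y) ** (1.0 / eta1)) ** (1.0 / eta0)


def y_of_t(t, eta0, eta1):
    # inverse map: y = 1 - (1 - t^eta0)^eta1
    return 1.0 - (1.0 - t**eta0) ** eta1


mu, eta0, eta1, exps = emden_fowler_setup(F(1, 2), F(-1, 3), F(1, 3))
print(mu, eta0, eta1, [str(e) for e in exps])     # prints: 1/3 3 3 ['1', '8/3', '13/3', '6']
# near y = 0, y^alpha_j ~ t^(eta0 * alpha_j); check that these powers are integers
print(all((e * eta0).denominator == 1 for e in exps))
```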

Finally, Fig. 7 shows, on a logarithmic scale, the absolute errors of the approximate solutions obtained by applying the Chebyshev collocation method to (6.17) (\(e_{tc}(x)\), solid line) and to (6.9) (\(e_{c}(x)\), dashed line), which shows that the smoothing transformation improves the accuracy significantly. Here, the error \(e_{tc}(x)\) is defined by

Remark 10

The solutions of the Thomas–Fermi equation and the Emden–Fowler equation are not sufficiently smooth at either endpoint. For such problems, we perform a smoothing transformation that reflects the singular behavior of the solution at both endpoints, so that the transformed solution is sufficiently smooth over the whole interval. Hence, the Chebyshev collocation method can solve these problems effectively and with high accuracy.

7 Conclusions

The nonlinear singular two-point boundary value problem is an important model with wide applications in many branches of mathematics, physics, and engineering. Since its solution is not sufficiently smooth at one or both endpoints of the interval, standard numerical algorithms achieve only low accuracy. In this paper, we design a simple method to recover the truncated fractional series expansion of the solution about the endpoint, which accurately describes the singular behavior of the solution. Although the series expansion involves an undetermined parameter, the singular structure of the solution is known. After a simple variable transformation, the solution becomes sufficiently smooth, and the Chebyshev collocation method can then solve the transformed differential equation effectively, as supported for linear problems by the convergence analysis. Numerical examples illustrate the high efficiency of the algorithm and show that the accuracy improves significantly compared with applying the Chebyshev collocation method directly to the original differential equation. The method can also handle problems with singularities at both endpoints. As applications, the Thomas–Fermi equation and the Emden–Fowler equation on a finite interval are solved with high accuracy by the proposed algorithm.

References

El-Gebeily MA, Abu-Zaid IT (1998) On a finite difference method for singular two-point boundary value problems. IMA J Numer Anal 18:179–190

Jamet P (1970) On the convergence of finite-difference approximations to one-dimensional singular boundary-value problems. Numer Math 14:355–378

Kiguradze IT, Shekhter BL (1988) Singular boundary-value problems for ordinary second-order differential equations. J Math Sci 43:2340–2417

Lin SH (1976) Oxygen diffusion in a spherical cell with nonlinear oxygen uptake kinetics. J Theor Biol 60:449–457

Gray BF (1980) The distribution of heat sources in the human head-theoretical considerations. J Theor Biol 82:473–476

Wong JSW (1975) On the generalized Emden–Fowler equation. SIAM Rev 17:339–360

Pikulin SV (2019) The Thomas–Fermi problem and solutions of the Emden–Fowler equation. Comput Math Math Phys 59:1292–1313

Boyd JP (2011) Chebyshev spectral methods and the Lane–Emden problem. Numer Math Theor Meth Appl 4:142–157

Hille E (1969) On the Thomas–Fermi equation. Proc Natl Acad Sci USA 62:7–10

Hille E (1970) Some aspects of the Thomas–Fermi equation. J Anal Math 23:147–170

Amore P, Boyd JP, Fernández FM (2014) Accurate calculation of the solutions to the Thomas–Fermi equations. Appl Math Comput 232:929–943

Flagg RC, Luning CD, Perry WL (1980) Implementation of new iterative techniques for solutions of Thomas–Fermi and Emden–Fowler equations. J Comput Phys 38:396–405

Eriksson K, Thomée V (1984) Galerkin methods for singular boundary value problems in one space dimension. Math Comput 42:345–367

Bender CM, Orszag SA (1999) Advanced mathematical methods for scientists and engineers. Springer, New York

Ciarlet PG, Natterer F, Varga R (1970) Numerical methods of high-order accuracy for singular nonlinear boundary value problems. Numer Math 15:87–99

Chawla MM, Katti CP (1982) Finite difference methods and their convergence for a class of singular two point boundary value problems. Numer Math 39:341–350

Chawla MM, Katti CP (1985) A uniform mesh finite difference method for a class of singular two-point boundary value problems. SIAM J Numer Anal 22:561–565

Chawla MM (1987) A fourth-order finite difference method based on uniform mesh for singular two-point boundary-value problems. J Comput Appl Math 17:359–364

Han GQ, Wang J, Hayami K, Xu YS (2000) Correction method and extrapolation method for singular two-point boundary value problems. J Comput Appl Math 126:145–157

Abu-Zaid IT, El-Gebeily MA (1994) A finite-difference method for the spectral approximation of a class of singular two-point boundary value problems. IMA J Numer Anal 14:545–562

Kumar M, Aziz T (2006) A uniform mesh finite difference method for a class of singular two-point boundary value problems. Appl Math Comput 180:173–177

Gustafsson B (1973) A numerical method for solving singular boundary value problems. Numer Math 21:328–344

Kanth ASVR, Reddy YN (2003) A numerical method for singular two point boundary value problems via Chebyshev economizition. Appl Math Comput 146:691–700

Kadalbajoo MK, Aggarwal VK (2005) Numerical solution of singular boundary value problems via Chebyshev polynomial and B-spline. Appl Math Comput 160:851–863

Roul P, Goura VMKP, Agarwal R (2019) A compact finite difference method for a general class of nonlinear singular boundary value problems with Neumann and Robin boundary conditions. Appl Math Comput 350:283–304

Khuri S, Sayfy A (2010) A novel approach for the solution of a class of singular boundary value problems arising in physiology. Math Comput Model 52:626–636

De Hoog FR, Weiss R (1978) Collocation methods for singular boundary value problems. SIAM J Numer Anal 15:198–217

Burkotová J, Rachunková I, Weinmüller EB (2017) On singular BVPs with nonsmooth data: convergence of the collocation schemes. BIT 57:1153–1184

Auzinger W, Koch O, Weinmüller EB (2005) Analysis of a new error estimate for collocation methods applied to singular boundary value problems. SIAM J Numer Anal 42:2366–2386

Huang WZ, Ma HP, Sun WW (2003) Convergence analysis of spectral collocation methods for a singular differential equation. SIAM J Numer Anal 41:2333–2349

Russell RD, Shampine LF (1975) Numerical methods for singular boundary value problems. SIAM J Numer Anal 12:13–36

El-Gamel M, Sameeh M (2017) Numerical solution of singular two-point boundary value problems by the collocation method with the Chebyshev bases. SeMA 74:627–641

Guo BY, Wang LL (2001) Jacobi interpolation approximations and their applications to singular differential equations. Adv Comput Math 14:227–276

Doedel EJ, Reddien GW (1984) Finite difference methods for singular two-point boundary value problems. SIAM J Numer Anal 21:300–313

Kumar D (2018) A collocation scheme for singular boundary value problems arising in physiology. Neural Parallel Sci Comput 26:95–118

Babolian E, Hosseini MM (2002) A modified spectral method for numerical solution of ordinary differential equations with non-analytic solution. Appl Math Comput 132:341–351

Roul P, Thula K (2018) A new high-order numerical method for solving singular two-point boundary value problems. J Comput Appl Math 343:556–574

Schreiber R (1980) Finite element methods of high-order accuracy for singular two-point boundary value problems with nonsmooth solutions. SIAM J Numer Anal 17:547–566

Roul P, Warbhe U (2016) A novel numerical approach and its convergence for numerical solution of nonlinear doubly singular boundary value problems. J Comput Appl Math 296:661–676

Roul P, Biswal D (2017) A new numerical approach for solving a class of singular two-point boundary value problems. Numer Algorithms 75:531–552

Roul P (2019) A fast and accurate computational technique for efficient numerical solution of nonlinear singular boundary value problems. Int J Comput Math 96:51–72

Roul P, Madduri H (2018) A new highly accurate domain decomposition optimal homotopy analysis method and its convergence for singular boundary value problems. Math Meth Appl Sci 41:6625–6644

Singh R (2019) Analytic solution of singular Emden–Fowler-type equations by Green’s function and homotopy analysis method. Eur Phys J Plus 134(583):1–17

Singh R, Kumar J, Nelakanti G (2013) Numerical solution of singular boundary value problems using Green’s function and improved decomposition method. J Appl Math Comput 43:409–425

Singh R, Kumar J (2014) An efficient numerical technique for the solution of nonlinear singular boundary value problems. Comput Phys Commun 185:1282–1289

Cen ZD (2006) Numerical study for a class of singular two-point boundary value problems using Green’s functions. Appl Math Comput 18:310–316

Wang TK, Liu ZF, Zhang ZY (2017) The modified composite Gauss type rules for singular integrals using Puiseux expansions. Math Comput 86:345–373

Wang TK, Zhang ZY, Liu ZF (2017) The practical Gauss type rules for Hadamard finite-part integrals using Puiseux expansions. Adv Comput Math 43:319–350

Wang TK, Gu YS, Zhang ZY (2018) An algorithm for the inversion of Laplace transforms using Puiseux expansions. Numer Algorithms 78:107–132

Hemmi MA, Melkonian S (1995) Convergence of psi-series solutions of nonlinear ordinary differential equations. Can Appl Math Q 3:43–88

Wang TK, Li N, Gao GH (2015) The asymptotic expansion and extrapolation of trapezoidal rule for integrals with fractional order singularities. Int J Comput Math 92:579–590

Liu ZF, Wang TK, Gao GH (2015) A local fractional Taylor expansion and its computation for insufficiently smooth functions. E Asian J Appl Math 5:176–191

Zhao TJ, Zhang ZY, Wang TK (2021) A hybrid asymptotic and augmented compact finite volume method for nonlinear singular two point boundary value problems. Appl Math Comput 392:125745

Shen J, Tang T (2006) Spectral and high-order methods with applications. Science Press, Beijing

Shen J, Tang T, Wang LL (2011) Spectral methods: algorithms, analysis and applications. Springer, New York

Canuto C, Hussaini MY, Quarteroni A, Zang TA (1988) Spectral methods in fluid dynamics. Springer, New York

Quarteroni A, Valli A (2008) Numerical approximation of partial differential equations. Springer, New York

Rubio G, Fraysse F, Vicente J, Valero E (2013) The estimation of truncation error by \(\tau \)-estimation for Chebyshev spectral collocation method. J Sci Comput 57:146–173

Baker EB (1930) The application of the Fermi–Thomas statistical model to the calculation of potential distribution in positive ions. Phys Rev 36:630–647

Acknowledgements

The authors are very grateful to the editors and anonymous referees for their valuable comments and suggestions.


This project is supported by the National Natural Science Foundation of China (Grant No. 11971241), the Program for Innovative Research Team in Universities of Tianjin (Grant No. TD13-5078), and 2017-Outstanding Young Innovation Team Cultivation Program of Tianjin Normal University (Grant No. 135202TD1703).


Cite this article

Wang, T., Liu, Z. & Kong, Y. The series expansion and Chebyshev collocation method for nonlinear singular two-point boundary value problems. J Eng Math 126, 5 (2021). https://doi.org/10.1007/s10665-020-10077-0


Keywords

- Chebyshev collocation method

- Convergence analysis

- Emden–Fowler equation

- Fractional series expansion

- Green’s function

- Nonlinear singular two-point boundary value problem

- Thomas–Fermi equation