Abstract

Understanding program code is a complicated endeavor. As a result, studying code comprehension is also hard. The prevailing approach for such studies is to use controlled experiments, where the difference between treatments sheds light on factors which affect comprehension. But it is hard to conduct controlled experiments with human developers, and we also need to find a way to operationalize what “comprehension” actually means. In addition, myriad different factors can influence the outcome, and seemingly small nuances may be detrimental to the study’s validity. In order to promote the development and use of sound experimental methodology, we discuss both considerations which need to be applied and potential problems that might occur, with regard to the experimental subjects, the code they work on, the tasks they are asked to perform, and the metrics for their performance. A common thread is that decisions that were taken in an effort to avoid one threat to validity may pose a larger threat than the one they removed.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Code comprehension is a major element of software development. According to Robert Martin, developers read 10 times more code than they write (Martin 2009). In one survey, 95% of developers said understanding code was an important part of their job, and a large majority said they do it every day (Cherubini et al. 2007). Most developers also agree that understanding code written by others is hard. As researchers, we are interested in exactly what makes it hard, and what can be done about it.

Controlled experiments are at the heart of research on code comprehension (Weissman 1974; Siegmund 2016). In such experiments the participants are asked to perform a programming task based on some code. The task is crafted so that performing it successfully requires the code to be understood. By measuring the effort and success of performing the task, one can therefore obtain some information on the difficulty of understanding the code. Repeating the measurements on modified code or using various tools then sheds light on the effect of code features and tools on program comprehension.

While the general framework of code comprehension experiments is well known, there are many variations in the details. This is a natural result of the combination of the many decisions that have to be taken:

-

One has to select the code on which the subjects will work. The code often reflects the nature of the study, e.g. using a certain style of identifiers if the effect of such styles on comprehension is the focus of the study. However, many other attributes of the code may also affect the comprehension process. It is therefore important to select code that does not introduce threats to the validity of the study.

-

One has to select the tasks to be performed. The tasks are supposed to require understanding, but what does “understanding” mean? A particular risk is that subjects may be able to find shortcuts and perform the task without actually understanding the code, thereby undermining the whole experiment.

-

One has to select the metrics by which performance will be measured. Different metrics may actually measure different things, and reflect different aspects of the difficulties in understanding code — or some factor that is unrelated to understanding the code.

-

One also has to select the subjects themselves. A much-cited threat is the use of students as experimental subjects. But when are students indeed a problem, and when can they be used safely? And is the student/professional dichotomy indeed the correct one to be concerned about?

Most work on the methodology of empirical software engineering focuses on experimental design, statistical tests, and reporting guidelines (Basili et al. 1986; Juristo and Moreno 2001; Wohlin et al. 2012; Shull et al. 2008; Jedlitschka and Pfahl 2005; Siegmund et al. 2021). But it is also important to get the domain-specific core features right (Brooks 1980). For program comprehension our focus will be on general attributes of the code, the tasks, and the measurements. We will discuss the manipulations that are part of a specific research agenda only so far as they interact with such attributes. Our goal is to review the choices that have been made in experimental studies, and the threats to validity involved in them. This should be considered as a basis for discussion, not a comprehensive listing. Hopefully this will encourage additional work on the methodological aspects of code comprehension research.

Some previous work in this domain includes the following. In an early work Brooks identified four factors which affect comprehension: what the program does, the program text, the programmer’s task, and individual differences (Brooks 1983). These foreshadow some of our observations on the code, the task, and the experimental subjects. Littman et al. defined understanding at a somewhat higher level of abstraction (Littman et al. 1987). According to them, understanding a program comprises knowing the objects the program manipulates and the actions it performs, as well as its functional components and the causal interactions between them. Note that this description relates to systems, and not to smaller elements of code.

To the best of our knowledge the only empirical evaluation of methods to measure code comprehension was conducted by Dunsmore and Roper more than 20 years ago (Dunsmore and Roper 2000). Their conclusion was that tasks performed using mental simulation of the code provided the best results, and that a simple question regarding perceived comprehension was also a good indicator. However, these results are of only a preliminary nature.

Siegmund et al. report on their experiences with conducting controlled experiments on program comprehension, placing an emphasis on the need to control for programming experience (Siegmund et al. 2013). Perhaps the closest to our work is Siegmund and Schumann’s review of confounding parameters in program comprehension (Siegmund and Schumann 2015). This includes a catalog of 39 factors that may influence the results of program comprehension studies. Many of them have parallels in our discussion. However, we place greater focus on the considerations involved in the technical aspects of the experiment, such as the code used and the tasks performed: for example, Siegmund and Schumann spend only one paragraph on the task, saying it may be a confounding factor, while we devote a whole section to considerations in selecting a task and how this interacts with levels of understanding the code. At the same time, many of the factors identified by Siegmund and Schumann, especially considerations involving the experimental subjects, are not repeated here. As a result the two papers largely complement each other. Another close paper is Oliveira et al. (2020). This paper presents a literature survey of code readability and understanding, with an emphasis on the tasks performed and the metrics used to assess understanding. It then relates them to a taxonomy of learning. Our focus is narrower: we perform an in-depth analysis of the factors involved in only the “understanding” level of the taxonomy, and on concrete activities performed by developers.

Finally, von Mayrhauser and Vans (1995) and Storey (2005) emphasize the theoretical underpinnings of program comprehension research. The most-often cited cognitive models of code comprehension are the top-down model (Brooks 1983) and the bottom-up model (Shneiderman and Mayer 1979). Another distinction is between the systematic strategy and the as-needed strategy (Littman et al. 1987). While this is obviously important and worthy, our work is focused on the more technical aspects of making the experimental observations in the first place.

The following sections review issues related to the code, the task, the metrics, and the experimental subjects. In each the pertinent considerations are listed first, and then the potential pitfalls. This paper is an extended version of a paper from the 29th International Conference on Program Comprehension (Feitelson 2021). The extensions added to this version fall into two categories. First, the discussions of many of the points made throughout the paper have been fleshed out. The original conference version naturally suffered from space limitations, while in the present version it was possible to present the arguments more fully and give more examples. In addition, several figures were added. Second, a few considerations and pitfalls that were completely missing in the original version have been added. A checklist summarizing the main points that need to be attended to in conducting a code comprehension study has also been added.

2 The code

Experiments on code comprehension necessarily start with code. But finding suitable code is not easy. Things to consider are the scope of the code, its level of difficulty so it will be challenging enough but not too hard for an experiment, and whether to use real code or write code specifically for the experiment. Pitfalls include the danger of misleading code on the one hand, or code that will give the task away on the other hand, including the danger of using well-known code that may be recognized, and problems with obfuscating variable and function names and how the code is presented.

2.1 Considerations

2.1.1 Code scope

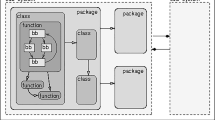

A central question regarding the code to use in a program comprehension study is how much code to use. There is a wide spectrum of options: a short snippet of a few lines, a method, a complete class, a package, or a full system.

The main consideration in favor of a limited scope is in cases where such a scope corresponds to the focus of the study. For example, when investigating the effect of the names of parameters on the understanding of a function, it is natural to use complete functions (Avidan and Feitelson 2017). If investigating control structures, focused snippets containing a single program element reduce confounding effects. For example, this was done by Ajami et al. in a study that found differences in understanding loops that count up and loops that count down (Ajami et al. 2019). An additional consideration is that a limited scope allows for a manageable experiment, for example not extending beyond a single hour of the experimental subject’s time and sometimes as short as 10 or 15 minutes.

Scope is also an important confounding factor (Gil and Lalouche 2017). If you want to compare two constructs, and one requires more lines of code than the other, should differences in performance be interpreted as resulting from the constructs or from the amount of code involved? This has no good solution, as artificially padding the shorter version may compromise the integrity of the code and cause a confounding effect worse than the difference in length. However, if the longer code turns out to be beneficial, this strengthens the results (Jbara and Feitelson 2014).

If the focus is on understanding as it is done during real development, e.g. to fix a reported bug, a large volume of code should be used. Ideally, the whole system should be available, just as it would be in a real-world setting (Abbes et al. 2011). This is important since understanding a full system is quite different from understanding a limited amount of code (Levy and Feitelson 2021). Brooks suggests that this difficulty is due to the software’s myriad possible states (Brooks 1987).

In the past something that passes for a full system could involve relatively little code, thereby enabling practical experiments on “complete systems”. For example, in the mid 1980s Littman et al. used a 250-line, 14-subroutine Fortran program that maintains a database of personnel records. Today such a volume more realistically represents a single class. As a result, experiments often make compromises. For example, a bug fixing task may skip the stage of locating the relevant code in the system, and focus only on the actual fix of the function in which the bug occurs.

The alternative is to conduct large scale experiments. For example, Wilson et al. asked graduate students to implement change requests in programs comprising about 100 Kloc, 800 classes, and 500 files (Wilson et al. 2019). Sjøberg et al. suggest that realistic experiments should be based on hiring professional programmers for relatively long periods of days to months (Sjøberg et al. 2002; Sjøberg et al. 2003). In such a setting, subjects can work in a realistic environment, including having access to all the relevant code. This is important because comprehension—like development—is an incremental process. It takes time and accumulates, and short experiments cannot evaluate this. At best they can focus on a well-defined single step.

Another alternative is to observe professionals during their work. This approach was taken by von Mayrhauser and Vans, who analyzed maintenance sessions of professionals working on large-scale full systems. For example, in one paper they report in detail on a 2-hour session devoted to porting client programs to a new operating system (von Mayrhauser and Vans 1996), and in another they report on two 2-hour sessions, one fixing a bug and the other searching for the location to insert new code (von Mayrhauser et al. 1997).

2.1.2 Code difficulty

Probably the most important characteristic of code used in an experiment is that the code should be appropriate for the task and the subjects. It should not be too easy and not too hard.

As an example of easy code, consider Fig. 1 (Listing 1 from Busjahn et al. (2015)). This listing comprises 22 lines of code. It defines a class Vehicle with a constructor and a method, followed by a main function that creates a Vehicle object and calls the method. The method increments the vehicle’s speed, subject to not going over a maximal value. So essentially all this code does is to increment an integer. Whether this is a problem depends on its use in the experiment. The original experiment was to use an eye tracker to follow the code reading order, so very simple code is a suitable base case. But using such code in a comprehension study would probably actually measure the ability to find the one line that does something.

Example of trivial code. (Ⓒ2015 IEEE. Reprinted, with permission, from Busjahn et al. (2015))

As an example of hard code consider Fig. 2 (Fig. 3 of Beniamini et al. (2017)). This is 11 lines long, comprising an initialized array and a function. The array is a lookup table. The function calculates the number of 1 bits in an input buffer by using the top and bottom halves of each byte as indexes to the lookup table and summing. While short, this code is non-trivial due to the use of bitwise operations to manipulate array accesses.

Example of difficult code using bit operations to index an array. (Ⓒ2017 IEEE. Reprinted, with permission, from Beniamini et al. (2017))

Example of mechanistic abbreviations. (Reprinted by permission from Springer Nature from Hofmeister et al. (2019), Ⓒ2019)

The issue of difficulty is closely associated with code complexity. Unfortunately the term “complexity” is used in three different meanings in the context of software:

-

The simplest, which we emphasize here, is code complexity. This is a direct property of the code—as text which expresses a set of instructions—that makes it hard to understand. For example, McCabe suggested that the number of branch points in a function is a measure of such complexity (McCabe 1976), and Dijkstra claimed that goto statements are especially harmful (Dijkstra 1968). However, more recent research has questioned whether such code metrics indeed predict comprehension difficulty (Denaro and Pezzè 2002; Nagappan et al. 2006; Feigenspan et al. 2011; Nagappan et al. 2015; Gil and Lalouche 2017).

-

A completely different issue is the conceptual complexity of the software. This is what Brooks calls “essential complexity”, and is what makes software development hard (Brooks 1987). Naturally it may also affect code comprehension studies.

-

the third meaning is algorithmic or computational complexity, as in the number of steps required to perform some computation. This is unrelated to our interests here.

As noted above, code difficulty may be related to the use of specific code constructs — such as goto or bitwise operations. Pointers have also been observed to be hard to master (Orso et al. 2001; Spolsky 2005). More generally, code smells and anti-patterns can make code harder to understand (Sharma and Spinellis 2018; Abbes et al. 2011; Politowski et al. 2020). All these obviously justify being studied, but should probably be avoided when they are not the focus of the study.

Assessing whether code is of suitable difficulty is hard, because this issue interacts with the subjects. For example, if subjects don’t know about bitwise operations, code using such operations becomes impenetrable. A similar problem occurs when understanding the code requires specific domain knowledge. This needs to be checked in pilot studies and during subject recruitment.

2.1.3 Code source

The considerations regarding what code to use depend on the type of experiment being conducted. When style or tools are being investigated, the code should be “representative” of code in general. However, given the vast amount of code that exists—much of which is closed source—it is unrealistic to try to create a statistically representative sample of code. But we can at least use some sample of real code.

Volumes of real code are now freely available in open source repositories. An unanswered question is whether this is also representative of proprietary code. There are dissenting opinions on which approach produces better code (and by implication, also clearer code) (Paulson et al. 2004; Raghunathan et al. 2005). Proponents of open source cite Linus’s law, and claim that open source is better due to being subject to review by multiple users (Raymond 2000). Alternatively, proprietary code has been claimed to be better because it is more managed in terms of testing and documentation.

One major concern with using real code is that the code was written by people who know what it is for. So the code may rely on implicit domain knowledge or reflect unknown assumptions and constraints. If experimental subjects in code comprehension experiments lack this knowledge, they will be unable to understand the code. A possible solution is to use code from utility libraries (e.g. performing array or string operations) (Avidan and Feitelson 2017), or to otherwise ensure that domain knowledge is not required.

The quest for self-contained code may suggest the use of functions that perform some computation that is completely devoid of any context. A good source for such functions is web sites with programming exercises for job interviews, such as leetcode.com. The advantage of such sites is that hundreds of exercises are available, often with dozens of solutions for each. However, many of the problems are unrealistic, for example implementing a contrived complicated rule to distribute candy among children. It is highly unlikely that anyone would actually be required to write such code except in a coding exercise. As a result understanding the code can be difficult simply because it does not have a clear purpose and does not make sense. It is therefore recommended to vet candidate codes carefully, and use only codes for realistic problems.

In experiments focused on particular features of the code, using real code may provide only imperfect examples, and at the same time it may introduce unwanted confounding factors. It may therefore be necessary to write code snippets specifically for the experiment to better control the different treatments. For example, Ajami et al. wrote code snippets with exactly the same functionality using different programming constructs, to investigate the effect of these constructs on understanding (Ajami et al. 2019). Note, however, that different treatments can also be based on real code. Abbes et al. used 6 large systems that contained specific antipatterns in an experiment on the effect of these antipatterns on comprehension (Abbes et al. 2011). To create the alternative treatment they refactored the systems to remove the antipatterns, without changing the rest of the design.

An extreme case is using randomly-generated code (Hollmann and Hanenberg 2017). This is by definition devoid of meaning, which raises the question of what we are asking subjects to understand. It can perhaps be used to study very technical aspects of reading, as a way to separate them from the effect of semantics. For example, Stefik and Siebert used randomly-generated keywords as a baseline (like a placebo) for studying the effect of syntax on understanding (Stefik and Siebert 2013).

In any case, regardless of the source of the code, one should ensure that it compiles and runs correctly. Few things are more embarrassing than having subjects in an experiment point out a bug (where this is not part of the experiment).

2.2 Pitfalls

Even when all the considerations are taken into account, problems with the code can threaten the validity of the study.

2.2.1 Misleading code

Perhaps the biggest problem is unintentionally misleading code. If the code is misleading, subjects may make mistakes not because of the studied effect but because they were misled. Gopstein et al. have identified 15 coding practices that may be misleading (Gopstein et al. 2017). Examples include using an assignment as a value, using short-circuit logic for control flow (in A||B, if A is true B is not evaluated), or presenting literals in an unnatural encoding like octal. Scalabrino et al. suggest code consistency as another attribute which affects readability and understanding (Scalabrino et al. 2016). This refers to the consistent use of terms in variable names and in comments. In other work, Scalabrino et al. define a metric for the deceptiveness of code based on the discord between perceived comprehension and actual comprehension (Scalabrino et al. 2021).

A major factor in misleading code appears to be names. Arnaoudova et al. call this “linguistic antipatterns”, e.g. when a variable’s name does not match its type, or when its plurality does not match its use (Arnaoudova et al. 2016). A simple example appears in Fig. 3 (Listing 4 of Hofmeister et al. (2019)). This includes an array named str, and a line int len = str.Length. But contrary to what might be expected, str is not a string. Rather, “str” is an abbreviation for “start”, and is used to denote the initial array of integers passed to a function that will change it. To further confuse matters, the array that will be appended to str is called end, a name that may be more suitable for the final result.

A striking example of the effect of misleading names was given by Avidan and Feitelson (2017). In a study about variable naming they used 6 real functions from utility libraries. Each function was presented either as it was originally written, or with variable names changed to a, b, c, etc. in order of appearance. The unexpected result was that in 3 of the functions there was no significant difference in the time to understand the different versions, and moreover, several subjects made mistakes — and all the mistakes were in the versions with the original names. The conclusion was that the names were misleading, to the degree of being worse than meaningless names like consecutive letters of the alphabet. But presumably the original developers did not intentionally choose misleading names. So the real threat is that names that look OK to the experiment designer would turn out to be misleading for the experimental subjects.

A more insidious example comes from Soloway and Ehrlich’s seminal paper on programming knowledge (Soloway and Ehrlich 1984). The code samples shown in Fig. 1 of that paper were meant to investigate the rule that “a variable’s name should reflect its function”. This was done by writing code that calculates the maximum or the minimum of a set of input numbers. The experimental subjects’ task was to insert the correct relation symbol (< or >) in the expression comparing the result so far with each new input.

But in the version calculating the minimum, the code used the name max instead of min (Fig. 4). This left the initialization to a large number Footnote 1 instead of to 0 as the only clue that the code actually calculates the minimum; if one assumes that the code calculates the maximum, as the variable name suggests, such an initialization would be erroneous. In other words, the experiment did not present its subjects with a situation in which the name does not reflect its function — it presented them with a downright contradiction. And this contradiction pitted a central variable name against a not so prominent initialization. Subjects would have to be especially diligent to get this right.

Example of misleading code. The task was to decide whether to put a < or a > in the box. (Ⓒ1984 IEEE. Reprinted, with permission, from Soloway and Ehrlich (1984))

2.2.2 Recognized code

The opposite of misleading code is easily recognized code. This may occur if textbook examples are used, e.g. a well-known sorting algorithm. Using such code may end up measuring how well-versed subjects are in the cannon of programming examples. This also applies to many of the problems available on web sites with job-interview programming exercises.

It may be tempting to nevertheless use a well-known example but modify it in some way. This is a dangerous practice, as modifying such code risks turning it into misleading code, because subjects who recognize it expect the conventional unaltered functionality. For example, altering the initialization or termination of a canonical for loop leads to a large increase in the errors made in interpreting what it does (Ajami et al. 2019).

2.2.3 Code structure giveaways

Code used in experiments should be realistic, in the sense that it could have been written in the context of a real project. Code written specifically for experiments sometimes violates this requirement. For example, it should not include parts that do not make sense, especially if such unnecessary additions may give the experiment away.

An example is shown in Fig. 5 (Fig. 1 in Schankin et al. (2018)). This is a class that converts variable names in under_score style to camelCase style. The class contains two helper functions, to lowercase the first letter of a word and to capitalize it. The point of the experiment is to notice that lowercase is called instead of capitalize, which is an error. But in fact there is no reason for the lowercase function to exist at all, because the class as presented supports only one-way conversion. And in the original (erroneous) code capitalize is dead code that is never called, which may provide a hint.

Example of problematic code. The task was to find the bug, which is that the wrong function is called. (Republished with permission of ACM, from Schankin et al. (2018), Ⓒ2018)

Another example is when the code allows the answer to be guessed. For example, Sharif and Maletic studied name recall using a multiple-choice question with the following options: fill_pathname, full_mathname, full_pathname, and full_pathnum (Sharif and Maletic 2010). The distractors were selected to be as similar as possible to the original name. But the first of them contains a verb, and is therefore more likely to be a function name. Two others contain unlikely word conjugations, mathname and pathnum. This leaves only one option which makes sense as a variable name, and indeed this is the correct answer.

2.2.4 Problematic code presentation

A potential problem in presenting code in the context of comprehension studies is what to do with comments and descriptive names. For example, header comments and method names are specifically designed to allow readers to understand what a function does without reading its code. Leaving them intact may therefore undermine an experiment where subjects are supposed to deduce just that. But given that they normally exist, removing them creates an unnatural situation. Thus it is suggested that this be done only in experiments using short code segments, where the focus justifies using an unrealistic setting. If a large body of code is used, names and comments should probably be retained. Likewise, in experiments where the task is not to understand what the code does there is no problem. An example is superficial debugging tasks, as explained below in Section 3.1.6.

As a side note, care should be taken that the comments do not directly interact with the task. For example, Fig. 1 in a paper by Buse and Weimer (2010) shows a short code snippet preceded by the comment “this is hard to read”, from an experiment where subjects are asked to assess readability. Providing such overt hints regarding the expected answer should be avoided.

A more delicate issue is how to handle mathematical and logical expressions. For example, should one rely on operator precedence, or use parentheses to clarify the order of evaluation? Again, if this is not the issue being studied, the best approach is probably to make it as unobtrusive as possible. Any effort that subjects spend on understanding expressions, and any mistakes they make, dilute the results that the experiment was designed to produce. In practical terms, this means to make the expressions as simple and obvious as possible, including by using parentheses.

More generally, all aspects of code readability affect its comprehension. If they are not the issue being studied (e.g. Oman and Cook 1990), they should be controlled. When using real code it may be tempting to present the code as it was written. But if the original code is not laid out properly, or uses an idiosyncratic style, this could introduce a confounding effect. A good practice is to use an IDE’s default indentation and syntax highlighting, as inconsistent presentation leads to cognitive load and therefore constitutes a confounding factor (Fakhoury et al. 2020). Methods within a class should be listed in calling order, meaning that called methods are placed after methods that call them (Geffen and Maoz 2016).

Another aspect of code presentation is coding style. Fashions change, and different people write in different styles. A mismatch between the writing style and the preferences (or experience) of the experimental subject may cause bias and a confounding effect. Two things can be done to reduce such problems. First, adopt the style that matches the prevailing culture (e.g. snake_case for Python but CamelCase for Java). Second, be consistent and use the same style throughout.

2.2.5 Variable naming side-effects

Variable names and function names are instrumental for comprehension, and in many cases they provide the main clues regarding what the code is about. In the context of comprehension studies this may be undesirable, so the names have to be stripped of meaning. Some of the ways that this has been done are problematic:

-

Simple options are using arbitrary strings (asdf, qetmji) or unrelated words (superman, purple). These are distracting, and may be useful only in relation to extreme research questions on reading or the possible detrimental effect of extremely bad (distracting) names. They should not be used if this is not the research issue.

-

Another approach is to use obfuscation, e.g. by applying a simple letter-exchange cipher (Siegmund et al. 2017). This leads to names that are long and distracting non-words. For example, the function name ‘countSameCharsAtSamePosition’ can change into ‘ecoamKayiEoaikAmKayiEckqmqca’. Long names like this that differ in just a couple of letters may become very hard to distinguish.

Note too that obfuscation may deeply affect how subjects perform tasks. Variable names convey meaning, and thus enable a measure of top-down comprehension. Siegmund et al. therefore used obfuscated variable names to force subjects to use bottom-up comprehension based on the syntax (Siegmund et al. 2014; Siegmund et al. 2017). Such an effect may happen with problematic code also when this is not intentional.

Alternative better ways to obfuscate variable names are the following:

-

One option is to use words that represent the technical use of the variables, but do not reveal the intent — exactly the opposite of what we usually try to do. For example, one could use num1 and num2 instead of base and exponent in a function that calculates a power (Siegmund et al. 2014).

-

Perhaps the simplest and most straightforward approach is to just use consecutive letters of the alphabet in order of appearance (Avidan and Feitelson 2017; Hofmeister et al. 2019). Note that this is different from using the first letter of the “good” name, as that may still convey information (Beniamini et al. 2017).

A related case is when names are abbreviated to see the effect of such abbreviations. This is sometimes done in a mechanical manner in an attempt to be more scientific and reduce the reliance on individual judgment. For example a possible approach is to concatenate the first 3 consonants in the name (as shown in Fig. 3 above) (Hofmeister et al. 2019). However, this may lead to unnatural or misleading names, such as str for start or rsl for result. Consequently the gain in rigorosity may come at the expense of reduced validity. It is better to use judgment rather than a mechanical approach, e.g. allowing res for result and the 4-letter conc for concatenate. To reduce the danger of mistakes in judgment, the abbreviations can be derived independently by two people, and then compared.

2.2.6 Inappropriate code for the task

Importantly, the task subjects are required to perform and the code must be compatible. For example, when studying whether indentation aids comprehension, one needs a task that depends on the block structure of the code. Otherwise indentation is indeed not an important feature, and the results will show that it does not matter. But this would be wrong, because maybe indentation does indeed matter for another task — for example, one that is related to navigation and identification of code blocks.

For example, Miara et al. conducted a study on indentation using 102-line long code with a main and two functions, and a maximal nesting level of 3 (Miara et al. 1983). The result was that nesting had some effect, based on questions such as whether all variables were global, which require the definition of variables at the beginning of functions to be identified (the study is from 1983 and the code was written in Pascal). Many years later Bauer et al. replicated this study, but the code used was 17-line single functions with a maximal nesting of 2, and the task was to anticipate what the program would print (Bauer et al. 2019). In this setting indentation was not found to be important, but maybe the reason was that the code structure was too simple.

3 The task

If we focus on comprehension per se, experiments on code comprehension are a sort of challenge-response game. The experimenter challenges the subject to understand some code. A subject that claims to have achieved such understanding must prove it by performing some task. It is therefore vital that the task really reflect comprehension. In a sense, the task defines what “comprehension” means. The main considerations are therefore what level of comprehension is reflected by each task. And the main pitfall is that the link between the task and the understanding might be compromised, e.g. if subjects can guess the correct answer without actually understanding the code.

3.1 Considerations

In real life, program comprehension is rarely an end in itself. Rather, comprehension is a prerequisite to performing some programming task, such as fixing a bug or adding a feature. So experiments can use such tasks directly.

Alternatively, one can consider comprehension itself. In this case the main consideration in selecting the task to perform is that the task reflects the level of understanding which is at the focus of the experiment. Should the subjects just know the variables and data structures? Maybe the behavior at runtime? Or perhaps also the underlying algorithms? The following subsections detail several such possible levels. We name them using the straightforward dictionary meaning of different words. Note, however, that this is not universal, and over the years some of these names and others have been used in various non-compatible ways.

It is also interesting to consider the relationship of code comprehension to reading natural language texts, and to the terminology used there. Being able to read at all depends on the legibility of the text — that the letters stand out clearly. This is more a matter of design (e.g. fonts and color contrast) than of reading. Reading is commonly referred to as combining the acts of identifying letters and words and gleaning the meaning conveyed by them. While this terminology is not universal (for example, Smith and Taffler advocate distinguishing between “reading” and “understanding” (Smith and Taffler 1992)), it is often also adopted in studies on reading code. For example, the first sentence in Buse and Weimer (2010) is “we define readability as a human judgment of how easy a text is to understand”. But code is actually somewhat different from text. For example, the difficulty of text can be approximated based on simple metrics like sentence lengths and word lengths (DuBay 2004; Smith and Taffler 1992), but at the same time text may be ambiguous (which may be used to advantage in both prose and poetry). In code such metrics are not very meaningful, and the semantics are unique and well defined. Our goal is to describe how levels of the semantics relate to levels of reading the code. The relationship between the different levels is shown in Fig. 6.

3.1.1 Recognition task (tokens and structure)

The most basic level is just recognizing the elements of the code, such as tokens and structure. Note that this has two facets. Recognizing tokens is a localized task. It is aided by programming practices such as surrounding the assignment operator with spaces, and by IDE options such as colorizing keywords. Recognizing structure is a more global issue, which has a strong influence on navigation in the code and on the findability of key elements in it (Oman and Cook 1990). In addition to colorized keywords, this is aided by practices such as consistent indentation.

The above considerations indicate what type of tasks may be used to assess recognition. Such tasks include:

-

Find a certain word, e.g. the use of a variable.

-

Identify nesting of constructs, e.g. the most deeply nested one.

-

Verify whether two expressions have the same syntactic structure.

But studies that focus on mere recognition are few, such as those targeting notation or syntax highlighting (Oman and Cook 1990; Purchase et al. 2002; Hollmann and Hanenberg 2017; Hannebauer et al. 2018). In addition, disrupted layout has been used as a control and to force subjects to employ bottom-up comprehension (Siegmund et al. 2017).

3.1.2 Parsing task (understand syntax)

The next level up is to be able to parse the code. This shows that you are able to understand the syntax: what are legal expressions, and what their relations may be. Example tasks can include

-

Find the type of a variable (in a typed language).

-

Find a syntax error in a function.

-

At a larger scale, draw a basic UML class diagram of a project, or compare a UML diagram with code that is supposed to implement it. “Basic” here means without some details that require deeper understanding, such as using type inference and defining the cardinality of associations.

Note that these tasks do not require any understanding of what the code does. This is intentional, as such understanding is detailed in subsequent higher-level tasks. It is sometimes claimed that this level of understanding is not very important or interesting in itself, as syntax issues are typically delegated to a compiler. It is therefore not commonly used in comprehension experiments, and when it is, it may be used as a control, to show the difference between understanding syntax and semantics (e.g. Siegmund et al. 2014; Schankin et al. 2018).

3.1.3 Interpretation task (local semantics)

While parsing requires understanding the structure of the code, interpretation requires understanding the semantics of the individual instructions. Tasks which reflect the ability to interpret code include

-

Find what the code prints for a certain input. This can be done by simulating the execution one instruction at a time, much like an interpreter would.

-

Answer simple questions about the code. For example, Pennington suggested using questions on the program’s control flow (will the last record be counted?) and data flow (does the value of variable a affect that of b?), its states (will c have a certain value after the loop?), and specific operations (is d initialized to 0?) (Pennington 1987).

-

Write tests that provide statement coverage or branch coverage. This only requires one to understand individual condition statements.

-

Identify and remove dead code which will never be executed (e.g. a function that is not called, or a condition that is always false).

-

Draw a UML sequence diagram. To do so one just needs to understand which functions call each other.

Interpretation is on the verge of “real” understanding. On the one hand, one can hand-simulate the execution of code and figure out what it will print without forming a general understanding of what the code actually does. But this is nevertheless appropriate for very short snippets of code comprising a single control block and nothing else. For example, Ajami et al. use this for comparisons of different formulations of a predicate used in an if construct (Ajami et al. 2019).

On the other hand, there are cases where it is actually easier to figure out what the code does rather than to simulate its execution. A case in point is code with loops, especially when many iterations are performed. For example, Hannebauer et al. suggest this is the case in an experiment they perform on code that implements bubble sort (Hannebauer et al. 2018, Figure 2).

Interpretation tasks are quite popular in comprehension experiments, because they are easy to create and to check: you just compare the given answer to the known correct answer. However, one must carefully consider the details to determine whether an answer based on tracing the execution is likely, and whether this affects the validity of the experimental results.

3.1.4 Comprehension task (global semantics)

Comprehension is understanding the underlying concepts of the code, and grasping its functionality in abstract terms. This is the general goal of code comprehension. The difference between comprehending semantics and parsing syntax is real. fMRI studies show that comprehension tasks activate different parts of the brain than syntax-related tasks — parts related to working memory, attention, and language processing (Siegmund et al. 2014).

The most common way to assess comprehension is to ask questions about the program. Specific questions and tasks which are thought to reflect comprehension are:

-

After reading and understanding the code, answer a question about the expected output for a given input without seeing the code again — that is, without the ability to simulate its execution (this assumes the code is non-trivial and cannot be remembered easily).

-

Describe the functionality of the code. More concretely, this can be achieved in several ways:

-

Ask subjects to suggest a suitable meaningful name for a function.

-

Ask subjects to summarize the purpose of the code.

-

Ask subjects to add documentation to the code, for example header comments for functions.

-

More formally, ask subjects to articulate the contract of a function or API: what are the preconditions and postconditions when using it (Meyer 1992).

-

-

Describe the flow of the code, namely how it transforms its input into its output. Or more generally, perform a code summarization task. Answers to such questions must be carefully analyzed to ascertain that they indeed reflect comprehension. For example, saying that the code loops over all numbers smaller than the input and checks for cases where they divide the input number with no remainder may be precise, but it is just a technical description of the code. True comprehension is to say that the code checks whether the input number is a prime.

-

Answer questions about specific elements of the code, for example the purpose of a certain variable, or why a certain function is called. Note however that such questions do not necessarily assess global understanding.

-

Write a test suite for a function. As this requires the expected results to be given, it shows you know what the tested code does. A comprehensive test suite specifically shows understanding of semantic corner cases.

-

Another possible task is to explain the limitations of a function or API — when should it be used, and when can’t it be used. This is related to the question of writing the contracts for functions mentioned above.

It is advisable to use questions of several types, so as to cover different aspects of understanding the code (Pennington 1987; Cook et al. 1984). But in many cases, assessing the answers given to comprehension tasks is not easy (as discussed in Section 4.1.2 below). Therefore other tasks which assess comprehension indirectly are sometimes used. Such options are described in the following subsections.

3.1.5 Code completion or recall task

A common exercise when learning foreign languages is “fill in the blanks” (the so-called cloze test): the students are given a text with some parts missing, and need to complete them either on their own or using a list of options, based on their understanding of the text and of how different options fit in. This can also be done with code (Cook et al. 1984). For example, Soloway and Ehrlich used this to study the effect of a mismatch between a variable’s name and its function (Soloway and Ehrlich 1984), and Hannebauer et al. used it to study the understanding of an inheritance hierarchy (Hannebauer et al. 2018). However, finding the right balance between trivial cases and misleading cases appears to be hard. As noted above, Soloway and Ehrlich’s code was misleading. Hannebauer et al’s is trivial: subjects were requested to replace the XXXXX in the declaration Mother x = new XXXXX() with one of the options Father, Mother, or Daughter. This can obviously be done without ever looking at the code.

A rather different type of task is to read the code, understand it, and then try to recall it from memory. Obviously this is limited to reasonably short codes, e.g. up to 20–30 lines long. The motivation for this task is the seminal work of Simon and Chase, which showed that expert chess players can easily memorize meaningful chess positions, but are not good at memorizing random placements of chess pieces (Simon and Chase 1973). Hence the memorization interacts with identification of meaning.

Shneiderman conducted an experiment based on this approach more than 40 years ago (Shneiderman 1977), concluding that better recall indeed correlates with better comprehension (as measured by the ability to make modifications to the code). McKeithen et al. also showed that expert programmers are better able to recall semantically meaningful program code (McKeithen et al. 1981). However, this type of task is rather far removed from what programmers actually do, and perhaps for this reason does not seem to be popular.

3.1.6 Correction task (white-box)

Of the different types of maintenance (Lientz et al. 1978), corrective maintenance (fixing bugs) is the one most often used to test understanding. Moreover, Dunsmore et al. have found that perceived comprehension indeed correlates with finding bugs (Dunsmore et al. 2000). But not all bugs reflect the same level of understanding. One needs to distinguish technical bug fixing (e.g. finding and correcting a null pointer dereference (Levy and Feitelson 2021), a method declared private instead of public (Hannebauer et al. 2018), or a syntax error) from a semantic error (such as calling the wrong helper function (Schankin et al. 2018) or using a wrong index into an array (Hofmeister et al. 2019)). Finding technical errors is more at the level of interpretation than comprehension — it can be done by scanning the code superficially without any deep understanding of the whole. Syntax errors may be irrelevant, as they should be caught by the compiler (but nevertheless they are sometimes used, e.g. (Hannebauer et al. 2018)). Only semantic errors reflect real comprehension.

An important question is exactly what bugs to inject. Two classifications were suggested by Basili and Selby (1987). The first is a distinction between errors of omission and errors of commission. This is an important distinction, because with commission the subjects can see the error, but for omission they need to notice that something is missing — which depends on a preconception of what the code is trying to do. The second classification lists six types: initialization, control, computation, interface, data, and cosmetic. Using such classifications helps reduce confounding effects that may be due to a specific type of bugs. They were used for example by Juristo et al. (2012) and Jbara and Feitelson (2014).

An important consideration is whether to ask only for the correction of the bug given the location where it occurs, or to also require subjects to locate the bug (Pearson et al. 2017). The first option is more focused on understanding the details of the given code. The second mixes this with achieving an overall view of how the code is structured and how responsibilities are distributed across modules and functions. This is a different level of understanding that should be assessed separately, for example by asking where to look for the bug rather than asking to fix it.

3.1.7 Extension or modification task (large scale white-box)

Most of the tasks outlined above are suitable for short code snippets, a function, or perhaps a class. Some of them, e.g. correction tasks, can also apply to larger software systems. Extension and modification of software can also be done on a single function, but usually the minimal relevant scope is a class, and the common scope in real-life situations is a module or a complete system.

A few examples are given by Wilson et al. who use large-scale projects of 78 and 100 KLoC to study adding new features (Wilson et al. 2019). This enables them to study not only the change itself, but also the process of finding where in the code the change must be made. If the task asks only to change a given function, it misses the steps of zeroing in on the correct location to make the change, and the evaluation of the impact that the change may have on other parts of the system (Rajlich and Wilde 2002). Whether this is a problem depends on whether you consider it part of comprehending the system.

Importantly, writing code as in code extension or modification tasks is different from reading code as in comprehension tasks. Krueger et al. show using fMRI studies that writing activates areas in the right hemisphere of the brain, associated, inter alia, with planning and spatial cognition (Krueger et al. 2020). So it seems that in these tasks, while comprehension is needed, it is not the main activity. They may therefore be less suitable as tasks that reflect comprehension.

Moreover, there are additional levels of comprehension which may be required to correctly change code but are hard to attain and to measure. Levy and Feitelson identify two such levels beyond the usual black-box/white-box dichotomy (Levy and Feitelson 2021):

-

“Out-of-the-box” comprehension refers to subtle interactions of the code under study with other parts of the system, e.g. as may be required for extreme optimizations.

-

“Unboxable” comprehension is the appreciation of the underlying assumptions and considerations involved in developing the code, which may not be directly reflected in the code at all.

3.1.8 Use task (black-box)

Black-box is a special case of comprehension, where we are interested in using the code as opposed to understanding how it works (white-box) (Levy and Feitelson 2021). This is very common and important in real life when one needs to use third-party libraries. It also forms the basis for modularity, encapsulation, and information hiding (Parnas 1972; Parnas et al. 1985). But it is rather uncommon and not very useful in comprehension experiments. The simplest way to exhibit black-box knowledge about an API is to use it, that is to write some code that calls the API functions. In addition, one can ask about various attributes of the API:

-

Details about parameters of API functions.

-

Connections between functions, e.g. if one must be called before another is called.

-

Documented preconditions or constraints.

Note that black-box understanding is actually disconnected from the code itself — this is the essence of information hiding. It is based on documentation. But generating such knowledge requires deeper comprehension, as noted above.

3.1.9 Design-related task (abstraction)

Understanding a system is not the same as understanding a single module or a smaller piece of code. When understanding a system the focus is on understanding the structure, namely the system’s components, what are their responsibilities, and how they interact with each other (Biggerstaff 1989; Levy and Feitelson 2021). A deeper level of understanding is to understand why it is structured like this, that is, to understand the rationale for the design decisions that were taken during development.

Recovering the design of a system from its implementation is an act of reverse engineering (Chikofsky and Cross II 1990). One possible approach to achieve this is by analyzing the dynamic behavior of the system at runtime, and noting the interactions between its components (Cornelissen et al. 2009). This is different from the approaches used for other tasks listed above, which mostly focus on the static code. It may even be claimed that resorting to code execution is a way to circumvent the need to understand the code directly. However, performing tasks that affect the design do require one to contend with the code itself. Possible tasks related to understanding the design are different types of refactoring, such as the following (Fowler 2019):

-

Extract methods, that is identify blocks of code that should be made into independent methods for reuse or better structure.

-

Suggest methods that should be moved to another class.

-

Modify the inheritance hierarchy by pulling up or pushing down a field or a method, and placing them in a more appropriate class.

-

Replace a conditional behavior with using inheritance to create polymorphism.

-

Identify a common base-case and extract a superclass to represent it.

-

Extract explaining variables to improve the comprehensibility of the code (Cates et al. 2021).

While there is extensive literature about the execution of such tasks, they are not common in code comprehension studies. Possible reasons are that they require a large scope to be meaningful, which is harder to provide in a controlled experiment. It is also hard to judge the correctness of performing such tasks, as design is always also partially a matter of taste.

At a higher level of abstraction, the result of the design process is an architecture. Hence understanding the design is understanding the architecture. A possible task to show this level of understanding is then to describe the architecture. This can be expressed, for example, as defined by the 4 + 1 views suggested by Kruchten (1995). For example, in an experiment we can ask participants to draw a conceptual diagram showing relations between entities. Note, however, that in a real-life setting comprehending a system is a continuous process, and each task adds to this understanding in an incremental manner (von Mayrhauser and Vans 1995). In all likelihood such a process cannot be fully replicated in an experiment. However, design recovery may also be useful in the context of other tasks, such as debugging or adding a feature.

3.1.10 Selection of tasks

The previous subsections indicate that different tasks actually reflect different aspects of understanding. A possible way to interpret the relations between them is shown in Fig. 7. A major distinction is between factors that reflect code properties and factors that characterize the developer tasked with understanding the code. For example, style is a code property, but being able to parse and interpret code reflects knowledge of the programming language in which it is written. Thus the selection of which task to use should be predicated by what we want to study: the code or the developer. The more involved tasks, such as modifying or explaining code, typically involve both code and developer. It is then hard or impossible to claim that the task measures one or the other (Bergersen et al. 2014). In addition, challenging tasks such as adding a feature conflate comprehension with other activities such as designing and programming. This dilutes the fraction of the effort invested in comprehension, thereby reducing the accuracy of experiments with the express purpose of studying only comprehension.

To get a better picture it may be advisable to use multiple different tasks in the same study (as done e.g. by Pennington 1987). Multiple tasks of different types can illuminate different aspects of comprehension, and may expose unanticipated differences between treatments. With multiple tasks of the same type one can obtain more nuanced and accurate results, leading to better validity.

It would be good to also have independent assessments of the value of different tasks in measuring comprehension. Regrettably it seems that very little such research has been performed to date. One study is that by Dunsmore et al., in which they found a correlation between bug fixing and perceived comprehension (Dunsmore et al. 2000). But more work on this issue is required.

3.2 Pitfalls

3.2.1 Substituting opinion for measurement

The main problem with selecting a task that reflects program understanding is the classic construct validity issue: are you measuring what you set out to measure? In particular, does your task actually measure understanding at the level you are interested in?

For example, consider Buse and Weimer’s “A metric for software readability” (Buse and Weimer 2008), which—very naturally, given its title—is often cited as a reference on readability. But in the reported experiments, subjects were told to score code snippets “based on [your] estimation of readability”, where “readability is [your] judgment about how easy a block of code is to understand”. This reflects two problems. First, “readability” is a catch-all phrase which does not distinguish between different levels of understanding as delineated above. Second, using judgment as the dependent variable conflates personal opinion about what it means to understand (which could be any of the levels discussed above) with misconceptions about how easy or hard a specific code snippet is. This violates the whole concept of using a well-chosen and well-defined task to actually measure performance that depends on comprehension. However, one should acknowledge that many of the tasks listed above actually do not measure comprehension directly, but rather by proxy.

Scalabrino et al. face this issue head-on and distinguish between perceived understanding, where subjects just declare that they think they have understood a method, and actual understanding, where they correctly answer several verification questions (Scalabrino et al. 2021). However, the questions they suggested were about the meaning of a variable name, or the purpose of calling a certain function. It is debatable whether such questions indeed reflect a full understanding of the code.

3.2.2 The danger of shortcuts

The tasks in code comprehension experiments are predicated on the assumption that they can only be performed successfully if one understands the code. But as noted above, it is not necessarily true that you need to fully understand the code to predict what it will print, or to correct a bug that it contains. Furthermore, experimental subjects may be lazy (Roehm et al. 2012; Levy and Feitelson 2021). They may prefer to use an “as-needed” program comprehension strategy as an alternative to a “systematic” strategy leading to full understanding (Littman et al. 1987). So given a specific task, they might make do with comprehending only whatever is directly needed for this task (von Mayrhauser and Vans 1998). Unless this part is precisely aligned with the experimental objectives, this compromises the validity of the experiment.

When designing an experiment it is therefore of paramount importance to avoid tasks where the brunt of the work can be avoided. This is a significant threat to the premise that comprehension is a prerequisite for testing, debugging, and maintenance. Examples include cases where tasks can be done mechanically without understanding. For example, in bug fixing, finding a syntax error or finding a null pointer reference can be done without understanding what the function does. In code modification, a simple refactor like extracting a function can be done without understanding how the function works.

3.2.3 Confounding explanations

In controlled experiments one needs a control: a base-level treatment with which to compare the performance on the other treatments. This is what gives controlled experiments their explanatory power (which is why it is regrettable that there is such a limited use of controlled experiments (Sjøberg et al. 2005)).

To provide explanatory power the task has to be crisp in the sense that it strongly supports a certain interpretation. Not all tasks have this property. For example, the fill in the blanks task used by Soloway and Ehrlich is not crisp, because the variable name they used is misleading (as described above) (Soloway and Ehrlich 1984). Thus a failure to answer correctly may not be due to a problem with the conceptual model (what the experiment was supposed to check), but simply due to falling in the trap of the misleading name. Likewise, failure in recalling code verbatim from memory may identify totally wrong code or code that does not abide by conventions, not necessarily hard to understand code. Finally, failure to find a bug such as calling the wrong function may be the result of lack of attention, rather than lack of understanding.

3.2.4 The working environment

A potentially important confounding factor is the working environment in which subjects perform their task. Certain environments may include facilities that support the task and make it easier to complete. If such an environment is provided, performing the task becomes easier. Worse, having access to features that support the task may undermine the need to understand the code or affect the process of how it is understood.

Note, however, that this also depends on the subject knowing how to use the environment. Subjects who do not know how to use the required feature (or don’t know it exists) will be at a disadvantage. If some subjects know how to use these features and others do not, this becomes a confounding factor that may interfere with the results.

A possible solution to this problem is to use a reduced environment, which does not include the features that may be used to help perform the task. However, this is also problematic for subjects who are used to work in an environment which does include such support.

4 The metrics

Rajlich and Cowan suggested that the dependent variables measured in comprehension studies should be the accuracy of the answers, the response time of accurate answers, and the response time of inaccurate answers (Rajlich and Cowan 1997). Of these, the most commonly used is time to correct answer. Accuracy is also often used, especially when it is easy to assess (for example, when the task is to predict what a given code will print). The time to inaccurate answers is typically not used. An interesting question is how and whether different measurements should be combined into a single metric.

Note, however, that these metrics are actually proxies for what we are really interested in: the effort invested in understanding the code, and the difficulty of understanding the code. Recently, biophysical indicators (ranging from skin conductance through pupil size to fMRI brain activity patterns) have also been suggested as indicative of the effort expended in code comprehension. This is a potentially valuable development, but such metrics are not widely used yet.

4.1 Considerations

The main consideration regarding metrics is that they be measurable. This may interact with the task, as some tasks produce outcomes that are more measurable than others. Note that as discussed above we do not consider voicing an opinion as a measurement.

4.1.1 Imposing time limits

There are basically two approaches to measuring performance: how much one can achieve in a given time, or how long it takes to perform a given task (Bergersen et al. 2014). Most experiments on comprehension measure time for a task. This is also closer to normal working conditions. However, placing a generous time limit may be advisable to exclude subjects who experience difficulties for some reason, or subjects who do not work continuously or conscientiously.

4.1.2 Judging accuracy

If we consider comprehension experiments as a challenge-response game, the outcome of the game depends on the evaluation of the response. If the response was correct, the experimental subject has met the challenge and “wins”. But how do we know whether the response was correct? This obviously depends on the details of the task.

The easy cases are when the response is well-defined in advance, such as to identify what a given code will print (e.g. Ajami et al. 2019). In this case the answer can be checked automatically. The only reservation is that inconsequential variations (e.g. an added space) should be ignored. If multiple tasks are used, the fraction performed correctly can serve as a score.

In cases such as when the question is “what does this code do” or “give this function a meaningful name”, one needs to prepare a capacity for judging the responses. This should include

-

A key, prepared in advance, of what responses are expected to include, and how to identify and score each level of achievement. For example, in an experiment based on comprehension of a program that created a histogram of word occurrences in a text, a third of the points were given for answering that the program counts word occurrences, a third for saying that it prints each unique word, and a third for noting that it prints the number of occurrences next to each word (Miara et al. 1983).

-

Application of the key by at least two and possibly more independent judges.

-

A protocol for settling disputes, e.g. majority vote (2 of 3 judges) or conducting a joint discussion till reaching consensus.

It is also important to keep track of and report how many disagreements there were.

4.1.3 Reaction to errors

In those cases where a wrong answer can be detected automatically, e.g. when the experimental subject is required to find out what the code will print, one has to decide what to do if a wrong answer is given. A common approach is to just go on with the experiment. Possible alternatives include

-

Display a message indicating that a mistake has been made. But this may affect the rest of the experiment, either due to discouraging the subject, or due to facilitating a learning effect.

-

In addition to indicating that a mistake was made, allow the subject to try again (Hofmeister et al. 2019). This raises the questions of how to measure time. Do you include the sum of all trials? Is it fair to compare this to the time taken by someone who did not try and fail?

-

When it is expected that all subjects will succeed (which implies that correctness is not being measured), discard subjects who fail (Hofmeister et al. 2019). In other words, failure is used as an exclusion criterion.

4.1.4 Combining dimensions of performance

If both time and accuracy (correctness) are measured, the question is whether to report them separately or to combine them in some way. Combining the two metrics simplifies the analysis by making it one-dimensional. But this is justified only if they indeed reflect the same underlying concept.

Bergersen et al. suggest a crude categorical classification scheme which combines time and correctness (2011). In its simplest form, this scheme defines 3 levels of accomplishment:

-

1.

Incorrect answer.

-

2.

Correct answer, time above the median.

-

3.

Correct answer, time below the median.

If the task is made up of multiple stages, the levels first reflect the number of stages completed successfully, and if all were, the time range in which this was achieved.

Beniamini et al. suggest a continuous version of such a combination, where accomplishment is defined to be the quotient of the correctness score divided by the time (Beniamini et al. 2017). This can be interpreted as the “rate of answering correctly”. Incorrect answers are naturally included with a rate of 0. Scalabrino et al. suggest a similar formula, but use the time saved relative to the subject who took the longest to answer (Scalabrino et al. 2021). This has the disadvantage that outliers may distort the results of others.

A related question is what is the significance of time to incorrect answer? The most common approach is to ignore this data. A possible alternative is to interpret such data as instances of censoring: we know that the subject spent this much time and did not arrive at a correct answer, therefore the time needed for a correct answer would be longer. Another option is to interpret this as wasted time. If the task was something practical, like fixing a bug, a wrong fix reflects waste because the task would have to be done again. A third option is to use this as an assessment of motivation: this is how much time subjects are willing to invest (Rajlich and Cowan 1997).

4.1.5 Using direct physiological measurements

The commonly used dependent variables of time and accuracy measure the overall resulting performance when executing a task. But they rarely provide information about how this performance is achieved, e.g. what cognitive processes were used, and what were the trouble spots on which the experimental subjects stumbled. They also do not provide direct information on the effort needed to achieve the measured performance. In recent years there is an increasing use of tools that enable these factors to be studied too.

The most prominent tool in the context of code comprehension studies is eye trackers (Shaffer et al. 2015; Sharafi et al. 2015; Obaidellah et al. 2018; Bednarik et al 2020; Sharafi et al. 2020). Eye trackers enable an identification and quantification of how the experimental subjects focus on different parts of the code, and also a recording of the gaze scan path: the order in which they go over the code. This is especially useful to identify what the experimental subjects are interested in. An example is given by Jbara and Feitelson (2017). This study used eye tracking to quantify the amount of time spent looking at successive repetitions of the same basic structure. The results showed that the first instances get more attention, and were used to create quantitative models of how attention decreases with instance serial number.

The effort required to comprehend code can also be measured more directly than by the time and correctness of the comprehension. For example, changes in pupil size are known to be correlated with mental effort (Kahneman 1973), and this is measured by most eye trackers. Various other biophysical indicators for effort have also been used in relation to software engineering research (Fritz et al. 2014; Couceiro et al. 2019). In addition, subjective self- reporting can be used, e.g. based on the NASA task load index (Abbes et al. 2011).

An even deeper level of analysis of how subjects comprehend code is provided by functional magnetic resonance imaging (fMRI). This is being used in an increasing number of studies (Siegmund et al. 2014; Siegmund et al. 2017; Floyd et al. 2017; Ivanova et al. 2020; Krueger et al. 2020). fMRI identifies areas of the brain that become active when performing a task. For example, this has enabled the distinction between brain activity patterns when performing syntactic vs. semantic tasks (Siegmund et al. 2014) or reading vs. writing (Krueger et al. 2020). It has also been possible to distinguish between reading code and reading prose based on brain activity patterns (Floyd et al. 2017). Finally, thinking about manipulating data structures and about spacial rotation tasks employ the same regions in the brain (Sharafi et al. 2021).

A technically simpler alternative is to use functional near-infrared spectroscopy (fNIRS) technology (Fakhoury et al. 2020; Sharafi et al. 2021). Unlike fMRI, which is a large noisy machine in which subjects need to lie and is expensive, fNIRS is based on wearing a scalp cap and can be done sitting in front of a computer. And it provides nearly the same level of data as fMRI.

An especially interesting attribute of neuroimaging studies is that they bypass the limitation of conscious reporting. A lot of processing in the brain is done unconsciously, and therefore subjects cannot report on precisely what they had done. Techniques such as fMRI and fNIRS provide an objective glimpse into what the brain is doing, without the need for cognizant reporting, and irrespective of potential filtering and rationalization by the conscious self.

Importantly, using all the above methodologies in the context of program comprehension studies is still pretty new. Developing the methodologies and establishing best practices is therefore an ongoing effort (Bednarik and Tukiainen 2006; Sharafi et al. 2020; Sharafi et al. 2021).

4.2 Pitfalls

4.2.1 Confounding effects

Measurements are always subject to the danger of confounding effects. Many of the pitfalls noted in the previous sections may come into play when we measure the time or accuracy of code comprehension, and lead to unreliable results — namely results which do not reflect the intended aspects of code comprehension.

One straightforward effect is getting used to the experimental setting. It is apparently not uncommon that the first task or two in a sequence take longer, as the subjects learn what exactly is required of them (e.g. Fig. 8, from (Ajami et al. 2019, Figure 5)). It may therefore be better to discard the first such result(s), or use them to evaluate the participants. More generally, measurements necessarily conflate the effects of code attributes with those of the person participating in the experiment (as noted above in Section 3.1.10 and Fig. 7). If the subjects are unsuitable, e.g. is they lack appropriate experience, it would be wrong to assign their low performance to the code.

Example of distributions of time to correct answer for a sequence of questions in an experiment. (Reprinted by permission from Springer Nature from Ajami et al. (2019), Ⓒ2019)

Focusing on the metrics themselves, a special case is the relation between time and correctness. Errors by definition reflect misunderstandings. The question is whether this is due to the difficulty of the code or to misleading beacons. Evidence that time and correctness may actually reflect different concepts is given by Ajami et al. (2019). This study included a comparison of understanding a canonical for loop (for (i = 0; i<n; i++)) with variations in which the initialization, termination condition, or step are varied. The results were that loops counting down took a bit longer, while loops with abnormal initialization or termination caused more errors. The suggested interpretation was that time reflects difficulty, and the error rate reflects a “surprise factor”, namely whether the code deviates from expectations. Thus if the code contains misleading elements it may be ill-advised to combine time and correctness scores.

4.2.2 Learning and fatigue effects

One confounding effect that deserves special attention is that measurements can change during the experiment. For example, when multiple codes are used the question arises in what order to display them. Using the same order for all experimental subjects reduces variability and enhances comparisons. However, such a consistent order may cause a confounding effect due to learning, fatigue, or dropouts. For example, if unsuccessful subjects feel discouraged and drop out of the experiment, only the more successful subjects will reach the last questions (Ajami et al. 2019). In other words, a difference in performance on different codes may be the result of their placement in the sequence, rather than a result of the differences we wish to study. The common solution is to randomize the order.

4.2.3 Measurement technical issues