Abstract

Antipatterns are known as poor solutions to recurring problems. For example, Brown et al. and Fowler define practices concerning poor design or implementation solutions. However, we know that the source code lexicon is part of the factors that affect the psychological complexity of a program, i.e., factors that make a program difficult to understand and maintain by humans. The aim of this work is to identify recurring poor practices related to inconsistencies among the naming, documentation, and implementation of an entity—called Linguistic Antipatterns (LAs)—that may impair program understanding. To this end, we first mine examples of such inconsistencies in real open-source projects and abstract them into a catalog of 17 recurring LAs related to methods and attributes. Then, to understand the relevancy of LAs, we perform two empirical studies with developers—30 external (i.e., not familiar with the code) and 14 internal (i.e., people developing or maintaining the code). Results indicate that the majority of the participants perceive LAs as poor practices and therefore must be avoided—69 % and 51 % of the external and internal developers, respectively. As further evidence of LAs’ validity, open source developers that were made aware of LAs reacted to the issue by making code changes in 10 % of the cases. Finally, in order to facilitate the use of LAs in practice, we identified a subset of LAs which were universally agreed upon as being problematic; those which had a clear dissonance between code behavior and lexicon.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

There are many recognized bad practices in software development, known as code smells and antipatterns (Brown et al. 1998a; Fowler 1999). They concern poor design or implementation solutions, as for example the Blob, also known as God class, which is a large and complex class centralizing the behavior of a part of the system and using other classes simply as data holders. Previous studies indicate that APs may affect software comprehensibility (Abbes et al. 2011) and possibly increase change and fault-proneness (Khomh et al. 2012; Khomh et al. 2009). From a recent study by Yamashita and Moonen (2013) it is also known that the majority of developers are concerned about code smells.

Most often, documented bad practices deal with the design of a system or its implementation—e.g., code structure. However, there are other factors that can affect software comprehensibility and maintainability, and source code lexicon is surely one of them.

In his theory about program understanding, Brooks (1983) considers identifiers and comments as part of the internal indicators for the meaning of a program. Brooks presents the process of program understanding as a top-down hypothesis-driven approach, in which an initial and vague hypothesis is formulated—based on the programmer’s knowledge about the program domain or other related domains—and incrementally refined into more specific hypotheses based on the information extracted from the program lexicon. While trying to refine or verify the hypothesis, sometimes developers inspect the code in detail, e.g., check the comments against the code. Brooks warns that it may happen that comments and code are contradictory, and that the decision of which indicator to trust (i.e., comment or code) primarily depends on the overall support of the hypothesis being tested rather than the type of the indicator itself. This implies that when a contradiction between code and comments occur, different developers may end up trusting different indicators, and thus have different interpretations of a program.

The role played by identifiers and comments in source code understandability has been also empirically investigated by several other researchers showing that commented programs and programs containing full word identifiers are easier to understand (Shneiderman and Mayer 1975; Chaudhary and Sahasrabuddhe 1980; Woodfield et al. 1981; Takang et al. 1996; Lawrie et al. 2006, 2007).

For the reasons emerged from the above studies, researchers have developed approaches to assess the quality of source code lexicon (Lawrie et al. 2007; Caprile and Tonella 2000; Merlo et al. 2003) and have provided a set of guidelines to produce high-quality identifiers (Deissenbock and Pizka 2005). Also, in a previous work (Arnaoudova et al. 2013), we have formulated the notion of source code Linguistic Antipatterns (LAs), i.e., recurring poor practices in the naming, documentation, and choice of identifiers in the implementation of an entity and we defined a catalog of 17 types of LAs related to inconsistencies. The notion of LAs builds on those previous works. In particular, we concur with Brooks (1983) that inconsistencies may lead to different program interpretations by different programmers and some of these interpretation may be wrong, thus impairing program understanding. An incorrect initial interpretation may impact the way developers complete their tasks as their final solution will possibly be biased by this initial interpretation. This cognitive phenomenon is known as anchoring and the difficulty to move away (or to adjust) from the initial interpretation as the adjustment bias. For example, Parsons and Saunders (2004) provide evidence of the existence of the phenomenon in the context of reusing software code/design. Our conjecture is that defining a catalog of LAs will increase developer awareness of such poor practices and thus contributes to the improvement of the lexicon and program comprehension.

An example of an LA, which we have named Attribute Signature and comment are opposite, occurs in class EncodeURLTransformer of the Cocoon Footnote 1 project. The class contains an attribute named INCLUDE_NAME_DEFAULT whose comment documents the opposite, i.e., a “Configuration default exclude pattern”. Whether the pattern is included or excluded is therefore unclear from the comment and name. Another example of LA called “Get” method does not return occurs in class Compiler of the EclipseFootnote 2 project where method getMethodBodies is declared. Counter to what one would expect, the method does not either return a value or clearly indicate which of the parameters will hold the result.

To understand whether such LAs would be relevant for software developers, two general questions arise:

-

Do developers perceive LAs as indeed poor practices?

-

If this is the case, would developers take any action and remove LAs?

Indeed, although tools may detect instances of (different kinds of) bad practices, they may or may not turn out to be actual problems for developers. For example, by studying the history of projects (Raţiu et al. 2004) showed that some instances of antipatterns, e.g., God classes being persistent and stable during their life, are considered harmless.

This paper aims at empirically answering the questions stated above, by conducting two different studies. In Study I we showed to 30 developers an extensive set of code snippets from three open-source projects, some of which containing LAs, while others not. Participants were external developers, i.e., people that have not developed the code under investigation, unaware of the notion of LAs. The rationale here is to evaluate how relevant are the inconsistencies, by involving people having no bias—neither with respect to our definition of LAs, nor with respect to the code being analyzed. In Study II we involved 14 internal developers from 8 projects (7 open-source and 1 commercial), with the aim of understanding how they perceive LAs in systems they know, whether they would remove them, and how (if this is the case). Here, we first introduce to developers the definition of the specific LA under scrutiny, after which they provide their perception about examples of LAs detected in their project.

Overall, results indicate that external and internal developers perceive LAs as poor practices and therefore should be avoided—69 % and 51 % of participants in Study I and Study II, respectively. Interestingly, developers felt more strongly about certain LAs. Thus, an additional outcome of these studies was a subset of LAs that are considered to be the most problematic. In particular, we identify a subset of LAs i) that are perceived as poor practices by at least 75 % of the external developers, ii) that are perceived as poor practices by all internal developers, or iii) for which internal developers took an action to remove it. In fact, 10 % (5 out of 47) of the LAs shown to internal developers during the study have been removed in the corresponding projects after we pointed them out. There are three LAs that both external and internal developers find particularly unacceptable. Those are LAs concerning the state of an entity (i.e., attributes) and they belong to the “says more than what it does” (B) and “contains the opposite” (F) categories—i.e., not answered question (B.4), attribute name and type are opposite (F.1), and attribute signature and comment are opposite (F.2). External developers found particularly unacceptable (i.e., more than 80 % of them perceived as poor or very poor) the LAs with a clear dissonance between the code behavior and its lexicon— .i.e., get method does not return (B.3), not answered question (B.4), method signature and comment are opposite (C.2), and attribute signature and comment are opposite (F.2). The extremely high level of agreement on these LAs motivates the need for a tool pointing out these issues to developers while writing the source code.

Paper Structure

Section 2 provides an overview of the LA definitions (Arnaoudova et al. 2013) and tooling. Sections 3 describes the definition, design, and planning of the studies. In Section 4 we detail the catalog of LAs while reporting and discussing the results of the two studies. After a discussion of related work in Section 6, Section 7 concludes the paper and outlines directions for future work.

2 Linguistic Antipatterns (LAs)

Software antipatterns—as they are known so far—are opposite to design patterns (Gamma et al. 1995), i.e., they identify “poor” solutions to recurring design problems. For example, Brown’s 40 antipatterns describe the most common pitfalls in the software industry (Brown et al. 1998b). They are generally introduced by developers not having sufficient knowledge and–or experience in solving a particular problem or misusing good solutions (i.e., design patterns). Linguistic antipatterns (Arnaoudova et al. 2013) shift the perspective from source code structure towards its consistency with the lexicon.

Linguistic Antipatterns (LAs) in software systems are recurring poor practices in the naming, documentation, and choice of identifiers in the implementation of an entity, thus possibly impairing program understanding.

The presence of inconsistencies can mislead developers—they can make wrong assumptions about the code behavior or spend unnecessary time and effort to clarify it when understanding source code for their purposes. Therefore, highlighting their presence is essential for producing easy to understand code. In other words, our hypothesis is that the quality of the lexicon depends not only on the quality of individual identifiers but also on the consistency among identifiers from different sources (name, implementation, and documentation). Thus, identifying practices that result in inconsistent lexicon, and grouping them into a catalog, will increase developer awareness and thus contribute to the improvement of the lexicon.

We defined LAs and group them into categories based on a close inspection of source code examples. We started by analyzing source code from three open-source Java projects—namely ArgoUML, Cocoon, and Eclipse.

Initially, we randomly sampled a hundred files and analyzed the source code looking for examples of inconsistencies of lexicon among different sources of identifiers (i.e., identifiers from the name, documentation, and implementation of an entity). For each file, we analyzed the declared entities (methods and attributes) by asking ourselves questions such as “Is the name of the method consistent with its return type?”, “Is the attribute comment consistent with its name?”. Two of the authors of this paper were involved in the process. The set of inconsistencies examples that we found were then organized into an initial set of LAs. We iterated several times over the sampling and coding process and refine the questions based on the newly discovered examples. For example, “What is an inconsistent name for a boolean return type?”, “Is void a consistent type for method isValid?”, “What other types are inconsistent with method isValid?”, etc.

As the goal is to capture as many different lexicon inconsistencies as possible, the sampling was guided by the theory (theoretical sampling Strauss (1987)), and thus cannot be considered to be representative of the entire population of source code entities. We stopped iterating over the sampling and coding process when new examples of inconsistencies did not anymore modify the defined LAs and their categories. Also, it is important to point out that during our analyses we did not follow a thorough grounded-theory approach (Strauss 1987; Glaser 1992)—i.e., we did not measure the inter-agreement at each iteration—as the process was meant to identify possible inconsistencies for which we would gather developers’ perceptions. Thus, the agreement between the authors of this paper was a guidance rather than a requirement. Nevertheless, similarly to grounded-theory, we performed refinements to our initial categories.

Over the iterations LAs were refined, compared, and grouped into categories. Categories were modified according to the new examples of inconsistencies, i.e., some categories were combined, refined, or split to account for the newly defined LAs. For instance, we ended up with a preliminary list of 23 types LAs for which we abstracted the type of inconsistency they concern. At a very high level, all but 6 of those 23 types LAs concerned inconsistencies where i) a single entity (method or attribute) is involved, and ii) the behavior of the entity is inconsistent with what its name or related documentation (i.e., comment) suggests, as it provides more, says more, or provides the opposite. Thus, we discarded from this catalog those LAs that did not obey the above two rules. An example of a discarded LAs from the preliminary list is the practice consisting in the use of synonyms in the entity signature—e.g., method completeResults(..., boolean finished), where the term complete in the method name is synonym of finished (parameter). Similarly, we discarded the following five cases of comment inconsistencies:

-

Not documented or counter-intuitive design decision, e.g., using inheritance instead of delegation.

-

Parameter name in the comment is out of date.

-

Misplaced documentation, e.g., entity documentation exists, but it is placed in a parent class.

-

The entity comment is inconsistent across the hierarchy.

-

Not documented design pattern. Previous work has proposed approaches to document design patterns (Torchiano 2002), and has shown that design patterns’ documentation helps developers to complete maintenance tasks faster and with fewer errors (Prechelt et al. 2002).

The fact that we discarded the above practices from the current catalog does not mean that we consider them any less poor. On the contrary, we believe that further investigation in different projects may result in discovering other related poor practices that can be abstracted into new categories of inconsistencies, thus extending the current catalog.

After pruning out the six types described above, the analysis process resulted in 17 types of LA, grouped into six categories, three regarding behavior—i.e., methods—and three regarding state—i.e, attributes. For methods, LAs are categorized into methods that (A) “do more than they say”, (B) “say more than they do”, and (C) “do the opposite than they say”. Similarly, the categories for attributes are (D) “the entity contains more than what it says”, (E) “the name says more than the entity contains”, and (F) “the name says the opposite than the entity contains”. Table 1 provides a summary of the defined LAs. We further detail the LAs during the qualitative analysis of the results (Section 4.3).

We implemented the LAs detection algorithms in an offline tool, named LAPD (Linguistic Anti-Pattern Detector), for Java source code (Arnaoudova et al. 2013); for the purpose of this work, we extended the initial LAPD to analyze C ++. LAPD analyzes signatures, leading comments, and implementation of program entities (methods and attributes). It relies on the Stanford natural language parser (Toutanova and Manning 2000) to identify the Part-of-Speech of the terms constituting the identifiers and comments and to establish relations between those terms. Thus, given the identifier notVisible, we are able to identify that ‘visible’ is an adjective and that it holds a negation relation with the term ‘not’.

Finally, to identify semantic relations between terms LAPD uses the WordNet ontology (Miller 1995). Thus, we are able to identify that ‘include’ and ‘exclude’ are antonyms.

Consider for example the code shown in Fig. 1. To check whether it contains an LA of type “Get” more than accessor (A.1) LAPD first analyses the method name. As it follows the naming conventions for accessors—i.e., starts with ‘get’—LAPD proceeds and searches for an attribute named imageData of type ImageData defined in class CompositeImageDescriptor. The existence of the attribute indicates that the implementation of getImageData would be expected to satisfy the expectations from an accessor, i.e., return the value of the corresponding attribute. Thus, LAPD analyses the body of getImageData and reports the method as an example of “Get” more than accessor (A.1) as it contains a number of additional statements before returning the value of imageData. Indeed, one can note that the value of the attribute is always overridden (line 69) which is not expected from an accessor except if the value is null—as for example the Proxy and Singleton design patterns). Further details regarding the detection algorithms of LAs can be found in Appendix A.

For Java source code, we also made available an online version of LAPDFootnote 3 integrated into Eclipse as part of the Eclipse Checkstyle PluginFootnote 4. CheckstyleFootnote 5 is a tool helping developers to adhere to coding standards, which are expressed in terms of rules (checks), by reporting violations of those standards. Users may choose among predefined standards, e.g., the Sun coding conventionsFootnote 6, or define their owns. Figure 1 shows a snapshot of a code example and an LA, of type “Get” more than accessor (A.1), reported by the LAPD Checkstyle Plugin 4 detected in the example. After analyzing the entity containing the reported LA, the user may decide to resolve the inconsistency or disable the warning report for the particular entity.

3 Experimental Design

Before studying the perception of developers, it is important to study the prevalence of LAs. Section 3.1 provides details about our preliminary study on the prevalence of LAs. Next, we report the definition, design, and planning of the two studies we have conducted with external (Section 3.2) and internal (Section 3.3) developers. To report the studies we followed the general guidelines suggested by Wohlin et al. (2000), Kitchenham et al. (2002), and Jedlitschka and Pfahl (2005).

3.1 Prevalence of LAs

The goal of our preliminary study is to investigate the presence of LAs in software systems, with the purpose of understanding the relevance of the phenomenon. The quality focus is software comprehensibility that can be hindered by LAs. The perspective is of researchers interested to develop recommending systems aimed at detecting the presence of LAs and suggesting ways to avoid them. Specifically, the preliminary study aims at answering the following research question:

- RQ0: :

-

How prevalent are LAs? We investigate how relevant is the phenomenon of LAs in the studied projects.

- Experiment design: :

-

For each LA we report the occurrences in the studied projects. We also report the percentage of the programming entities in which an LA occurs with respect to the population for which the LA been defined. Finally, we report the relevance of each LA with respect to the total entity population of its kind. For example, for “Get” more than accessor (A.1) we report the the number of occurrences, the percentage of the occurrences with respect to the number of accessors, and the percentage of the occurrences with respect to all methods.

- Objects: :

-

Using convenience sampling (Shull et al. 2007), we select seven open-source Java and C++ projects Table 2 shows the projects’ characteristics.Footnote 7 We have chosen projects from various application domains, with different size, different programming language, and different number of developers.

- Data collection: :

-

For this study, we simply downloaded the source code archives of the considered system releases (Table 2), and analyzed them using the LAPD tool with the aim of identifying LAs.

Next, we report the definition, design, and planning of the two studies we have conducted with external (Section 3.2) and internal (Section 3.3) developers. Both studies were designed as online questionnaires. A replication package is available.Footnote 8 It contains (i) all the material used in the studies, i.e., instructions, questionnaires, and LA examples, and (ii) working data sets containing anonymized results for both studies. We do not disclose information about participants’ ability and experience.

3.2 Study I (SI)—External Developers

The goal of the study is to collect opinions about code snippets containing LAs from the perspective of external developers, i.e., people new to the code containing LAs—with the purpose of gaining insights about developers’ perception of LAs. The feedback of external developers will help us to understand how LAs are perceived by developers who are new to the particular code, as it is often the case when developers join a new team or maintain a large system they are not entirely familiar with. Specifically, the study aims at answering the following research questions:

- RQ1: :

-

How do external developers perceive code containing LAs? We investigate whether developers actually recognize the problem and in such case how important they believe the problem is.

- RQ2: :

-

Is the perception of LAs impacted by confounding factors? We investigate whether results of RQ1 depend on participant’s i) main programming language (for instance Java versus C ++, as the LAs were originally defined for Java), ii) occupation (i.e., professionals or students), and iii) years of programming experience.

In the following, we report details of how the study has been planned and conducted.

- Experiment design: :

-

The study was designed as an online questionnaire estimated to take about one hour for an average of two and a half minutes per code snippet. However, participants were free to take all the necessary time to complete the questionnaire. The use of an online questionnaire was preferred over in person interview, as it is more convenient for the participants. Participants were free to decide when to fill the questionnaire and in how many steps to complete it—i.e., participants may decide to complete the questionnaire in a single session or to stop in between questions and to resume later. To avoid biasing the participants, we also consider as part of the questionnaire 8 code snippets that do not contain any LA. Thus, we ask participants to analyze 25 code snippets (17 being examples of LAs, and 8 not containing any LA), and to evaluate the quality of each example comparing naming, documentation, and implementation.Ideally, we would have preferred to evaluate an equal number of code snippets with and without LAs. This, however, would have increased the required time with more than 20 min and increased the chances that participants do not complete the survey. Therefore, we decided to decrease the number of code snippets without LAs by half (compared to the number of code snippets with LAs).

We (the authors) selected examples covering the set of LAs from the analyzed projects that in our opinion are representative of the studied LAs. In particular, we used the examples from our previous work as the study was performed before the proceedings were publicly available.

For each code snippet, we formulated a specific question, trying to avoid any researcher bias on whether the practice is good or poor. Thus, for example, when showing the code snippet of method getImageData (used in Fig. 1) corresponding to the example of “Get” more than accessor, we asked participants to provide their opinion on the practice consisting of using the word “get” in the name of the method with respect to its implementation. Note that if the question does not indicate what aspect of the snippet the participants are expected to evaluate, there is a high risk that the participants evaluate an unrelated aspect—e.g., performance or memory related. However, specific questions are subject to the hypothesis guessing bias thus participants may evaluate as poor practices all code snippets as they may guess that this is what is expected. This is why inserting code snippets that do not contain LAs is a crucial part of the design. To compare the scores given by developers to code snippets that contain LAs and those that do not, we perform a Mann-Whitney test.

To minimize the order/response bias, we created ten versions of the questionnaire where the code snippets appear in a random order. Participants were randomly assigned to a questionnaire. To achieve a design as balanced as possible, i.e., equal number of participants for each questionnaire, we invited participants through multiple iterations. That is, we sent an initial set of invitations to an equal number of participants. After a couple of days, we sent a second set of invitations assigning such additional participants to the questionnaire instances that received the lowest number of responses.

- Objects: :

-

For the purpose of the study, we choose to evaluate LA instances detected and manually validated in our previous work (Arnaoudova et al. 2013). Such LAs have been detected in 3 Java software projects, namely ArgoUML Footnote 9 0.10.1 (82 KLOC) and 0.34 (195 KLOC), Cocoon Footnote 10 2.2.0 (60 KLOC), and Eclipse Footnote 11 1.0 (475 KLOC).

- Participants: :

-

Ideally, a target population—i.e., the individuals to whom the survey applies—should be defined as a finite list of all its members. Next, a valid sample is a representative sample of the target population (Shull et al. 2007). When the target population is difficult to define, non-probabilistic sampling is used to identify the sample. In this study, the target population being all software developers, it is impossible to define such list. We selected participants using convenience sampling. We invited by e-mail 311 developers from open-source and industrial projects, graduate students and researchers from the authors’ institutions as well as from other institutions. 31 developers completed the study and after a screening procedure (see Section 3.2), 30 participants remained—11 professionals, and 19 graduate students, resulting in a response rate close to 10 %—as expected (Groves et al. 2009). Participants were volunteers and they did not receive any reward for the participation to the study. We explicitly told them that anonymity of results was preserved, and so we did.

Table 3 provides information on participants’ programming experience and Fig. 2 shows their native language and the country they live in.

- Study Procedure: :

-

We did not introduce participants to the notion of LAs before the study. Instead, we informed them that the task consists of providing their opinion of code snippets.

For each code snippet—containing LAs or not—we asked participants the five questions reported in Table 4. With SI-q1 participants judge the quality of the practice on a 5-point Likert scale (Oppenheim 1992), ranging between ‘Very poor’ and ‘Very good’. The purpose of SI-q2 is to ensure that the participants provide their judgement for the practice targeted by the question. For both SI-q4 and SI-q5, we provide predefined options, to decrease the effort and ease the analysis, however we left space in the form to provide a customized answer. In addition, for each code snippet, we also allow participants to share any additional comment they would make. At any point, participants are free to decide not to answer a question by selecting the option ‘No opinion’.

- Data Collection: :

-

We collected 31 completed questionnaires. Before proceeding with the analysis, we applied the following screening procedure: For each LA we remove subjects who chose ‘No opinion’ as answer to SI-q1.

The collected answers being in nominal and ordinal scales, standard outlier removal techniques do not apply here. Thus, we first sought for inconsistent answers between questions SI-q1 and SI-q3, i.e., between the quality of the code snippet and whether an action should be undertaken. Although one may judge a code snippet as ‘Poor’ but believes that no action should be undertaken, we fear that participants providing high number of such combinations may have misunderstood the questions. We intentionally sought for participants providing high number of such combinations (> 75 %), resulting in removing one participant.

Then, we individually analyze the justification, i.e., answers of SI-q2, and we remove the answer of a participant for an LA if it is clear that the participant judge an aspect different from the one targeted by the LA. For example, when a participants are asked to give their opinion on the use of conditional sentence in comments and no conditional statement in method implementation, participant providing the following justification is removed for the particular LA: “the method name is well chosen and is well commented too”. Thus, the number of obtained answers for each kind of LA varies between 25 and 30, as it can be noticed from Fig. 5.

3.3 Study II (SII)—Internal Developers

The goal of this study is to investigate the perception of LAs from the perspective of internal developers, i.e., those contributing to the project in which LAs occur. Internal developers will provide us not only with their opinion about LAs but also with insights on the typical actions they are willing to undertake, to correct the existing inconsistencies and possibly help us to understand what causes LAs to occur. The context consists of examples of code, selected from projects to which the surveyed developers contribute. To extend the external validity of the results, for this study, we considered projects written in two different programming languages, i.e., Java and C ++. The study aims at answering the following research questions:

-

RQ3: How do internal developers perceive LAs? This research question is similar to RQ1 of Study I, however here we are interested in the perception of developers familiar with the code containing LAs, i.e., of people who contributed to it.

-

RQ4: What are the typical actions to resolve LAs? Other than the opinion on the practices described by LAs, we investigate whether developers are willing to undertake actions to correct the suggested inconsistencies.

-

RQ5: What causes LAs to occur? We are interested to understand under what circumstances LAs appear to better cope with them.

- Experiment Design: :

-

The study was designed as an online questionnaire. The number of LAs was selected so that the questionnaire requires approximately 15 min to be completed, and therefore ensures a high response rate from internal developers. As in Study I, the time was simply indicative, i.e., participants are free to take all the necessary time to complete the questionnaire. As LAs were related to methods having different size and complexity, the questionnaires contained between 5 and 6 examples, i.e., not always the same number. Thus, each participant evaluates only a subset of the LAs.We, the authors, selected examples of LAs from the analyzed projects that in our opinion are representative of the studied LAs. We selected the examples in a way to have higher diversity, i.e., so that the study includes examples of all 17 types of LAs.

- Objects: :

-

To select the projects for this study we also used a convenience sampling. We consider LAs extracted from 8 software projects, specifically 1 industrial, closed-source project, namely MagicPlan,Footnote 12 and 7 open-source projects—i.e., the projects used in the preliminary study (see Table 2). The projects have different size and belong to different domain, ranging from utilities for developers/project managers (e.g., Apache OpenMeetings, GanttProject, commitMonitor, Apache Maven) to APIs (Boost, BWAPI, and OpenCV) or mobile applications (MagicPlan). We chose more projects than in Study I, in order to obtain a larger external validity from developers belonging to different projects (including a commercial one), and in order to consider both Java and C ++ code.

- Participants: :

-

The study involved 14 developers from the projects mentioned above. As for the distribution across projects, one developer per project participated in the study, except for Boost, for which 3 developers participated, and for BWAPI, for which 4 developers participated. Such 14 developers are the respondents from an initial set of 50 ones we invited to participate, resulting in a response rate of 28 %. Invited participants were committers whose e-mails were available in the version control repository of the project. Also in this case, participants were volunteers and did not receive any reward. Similarly to the previous study, anonymity of results was preserved.

- Study Procedure::

-

We showed to participants examples of LAs detected in the system they contribute to. For each example, we first provided participants with the definition of the corresponding LA, and then we asked them to provide an opinion about the general practice—i.e., question SII-q0 “How do you consider the practice described by the above Linguistic Antipattern?”—using, again, a 5-point Likert scale. Then, we asked participants to provide indications about the specific instance of LA by asking the questions shown in Table 5.

- Data Collection: :

-

We collected responses of 14 developers regarding 47 unique examples of all types of LAs except C.2.Footnote 13 The collected answers represent 72 data points, where each data point is a unique combination of a particular example (instance) of an LA and a developer who evaluated it.

4 Developers’ Perception of LAs

In this section we present the results of our studies. First, in Section 4.1 we report the results of our preliminary study on the prevalence of LAs. Next, we present the results of the two studies on developers’ perceptions of LAs providing both quantitative (Section 4.2) and qualitative (Section 4.3) analyses.

4.1 Prevalence of LAs

-

RQ0:: How Prevalent are LAs?

Table 6 shows the number of detected instances of LAs per project and per kind of LA. Based on previous evaluation on a subset of those systems, LAPD has an average precision of 72 % (95 % confidence level and a confidence interval of ±10 %). As the goal of this work is to evaluate developers’ perception of LAs we did not re-evaluate the precision but rather manually validated a subset of the detected examples to assure that they are indeed representative of LAs.

Table 7 shows how relevant is the phenomenon in the studied projects. For each LA we report its relevance with respect to the population for which it has been defined as well as its relevance with respect to the total entity population of its kind. For example, the first row of Table 7—“Get” more than accessor (A.1)—shows that such complex accessors represent 2.65 % of the accessors and 0.05 % of all methods. By looking at the table, the percentage of LAs instances may appear rather low (Min.: 0.02 %; 1st Qu.: 0.17 %; Median: 0.26 %; Mean: 0.61 %; 3rd Qu.: 0.65 %; Max.: 3.40 %). However, in their work on smell detection using change history information (Palomba et al. 2013) provide statistics about the number of actual classes involved in 5 types of code smells in 8 Java systems; the percentages of affected classes are below 1 % for each type of smell, thus somewhat consistent with our findings—although a direct comparison is difficult (due to the different types of entities) the numbers can be taken as a rough indication. Slightly higher are the statistics provided by Moha et al. (2010) in which for 10 systems the percentage of affected classes for 4 design smells are as follows: Blob: 2.8 %, Functional Decomposition: 1.8 %, Spaghetti Code: 5.5 %, and Swiss Army Knife: 3.9 %.

Moreover, when we consider only the relevant population, the phenomenon appears to be sufficiently important to justify our interest (Min.: 0.02 %; 1st Qu.: 1.19 %; Median: 5.98 %; Mean: 16.89 %; 3rd Qu.: 19.33 %; Max.: 69.53 %).

In the rest of this section we present the results of both studies providing both quantitative (Section 4.2) and qualitative (Section 4.3) analyses.

4.2 Quantitative Analysis

Quantitative analysis pertain all RQs from RQ1 to RQ5. In RQ1 and RQ2 we report the results from the study with external developers, i.e., Study I, while in RQ3 to RQ5 we report the results from the study with internal developers, i.e., Study II.

-

RQ1: How Do External Developers Perceive Code Containing LAs?

We first analyzed the developers’ perception of examples without LAs. Figure 3 shows violin plots (Hintze and Nelson 1998) depicting the developers’ perception of examples without LAs. Violin plots combine boxplots and kernel density functions, thus providing a better indication of the shape of the distribution. The dot inside a violin plot represents the median; a thick line is drawn between the lower and upper quartiles; a thin line is drawn between the lower and upper tails. As expected, those examples are perceived as having a median ‘Good’ quality (1st quartile: ‘Neither good nor poor’, median: ‘Good’, 3rd quartile: ‘Very good’).

Figure 4 shows violin plots depicting the developers’ perception of LAs individually for each kind—having a median ‘Poor’ quality (1st quartile: ‘Poor’, median: ‘Poor’, 3rd quartile: ‘Neither good nor poor’). Mann-Whitney test indicates that the median score provided for code without LAs is significantly higher than for code with LAs (p − v a l u e<0.0001), with a large (d = 0.66) Cliff’s delta (d) effect size (Grissom and Kim 2005). Overall, if we consider all LAs, 69 % of the participants perceive LAs as ‘Poor’ or ‘Very Poor’ practices. However, as Fig. 4 shows, the perception distribution varies among different LAs. For instance, boxplots—i.e., the inner lines of violin plots—for A.3 (“Set” method returns), B.1 (Not implemented condition), B.3 (“Get” method does not return), B.4 (Not answered question), C.2 (Method signature and comment are opposite), and F.2 (Attribute signature and comment are opposite) have lower quartile at ‘Very Poor’, median at ‘Poor’, and, for all of them except B.1, higher quartile at ‘Poor’.

We also observe that the perceptions of B.6 (Expecting but not getting a collection), D.2 (Name suggests Boolean but type does not), E.1 (Says many but contains one), and F.1 (Attribute name and type are opposite) have little variability and are generally ‘Poor’.

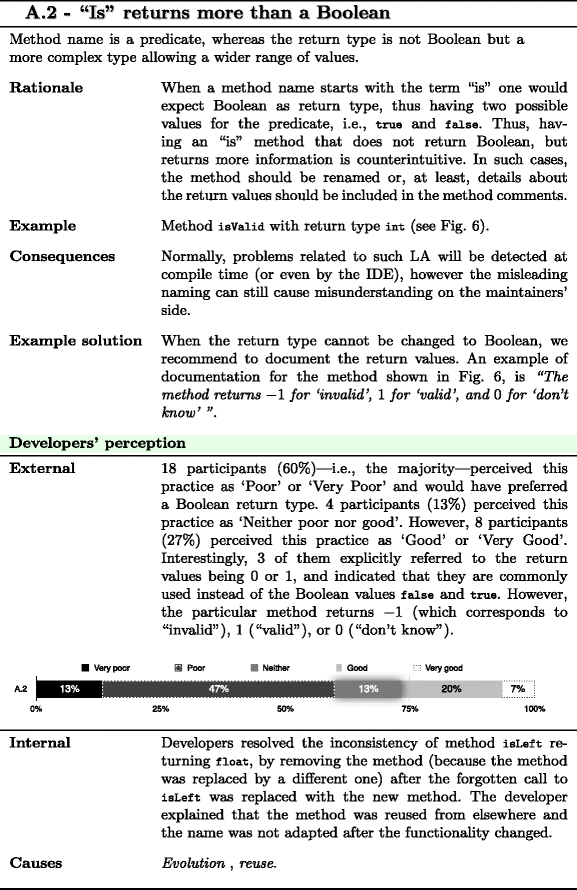

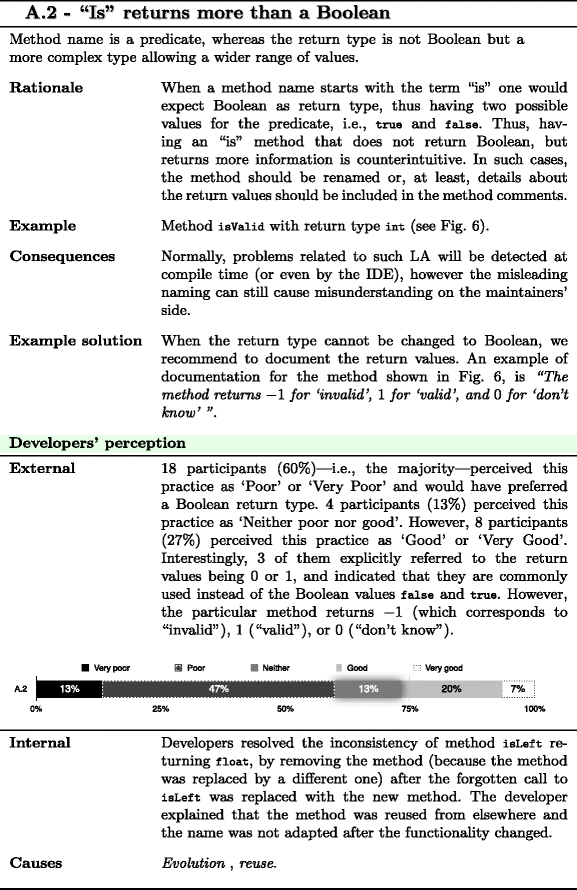

On the contrary, the most controversial LAs are A.1 (“Get more than accessor) and A.2 (“Is” returns more than a Boolean), with lower and higher quartiles being at ‘Poor’ and ‘Good’ respectively. Other controversial LAs are A.4 (Expecting but not getting single instance), B.2 (Validation method does not confirm), B.5 (Transform method does not return), C.1 (Method name and return type are opposite), and D.1 (Says one but contains many ), with lower and higher quartiles being at ‘Poor’ and ‘Neither poor nor good’ respectively.

In addition to violin plots, we show proportions of the LA perception by grouping, on the one hand, ‘Poor’ and ‘Very Poor’ judgements, and on the other hand, ‘Good’ and ‘Very Good’ ones. Results are reported in Fig. 5, where we sort LAs based on the proportion of participants that perceive them as ‘Poor’ or ‘Very Poor’. We observe that, for all but three LAs, majority of participants perceive LAs as ‘Poor’ or ‘Very Poor’. The three exceptions are A.1 (“Get” more than accessor), A.4 (Expecting but not getting single instance), and D.1 (Says one but contains many), for all of which the percentage of participants perceiving them as ‘Poor’ or ‘Very Poor’ is 36 %, 37 %, and 39 %, respectively. These are the three LAs having a median perception of ‘Neither poor nor good’ (see Fig. 4).

-

RQ2: Is the Perception of LAs Impacted by Confounding Factors?

We grouped the results of the participants according to their (i) main programming language (Java/C# or C/C++), (ii) occupation (student vs. professional), and (iii) years of programming experience (< 5 or ≥ 5 years). The grouping concerning the main programming language is motivated by the different way the languages handle Boolean expressions i.e., in C/C++ an expression returning a non-null or non-zero value is evaluated as true, whereas Java and C# do not perform this cast directly. For this reason, our conjecture is that developers who are used to C/C++ would consider acceptable that a method/attribute that should return/contain a Boolean could instead return/contain an integer.

We performed a Mann-Whitney test to compare the median perception of participants in each group. Results regarding the main programming language and the experience of participants indicate no significant difference (p-value >0.05) with a negligible Cliff’s delta effect size (d < 0.147). We obtained consistent results—i.e., no statistically significant differences when analyzing each LA separately—thus, neither the main programming language nor the experience affect the way participants perceive LAs. Only when considering LAs separately, the difference between the rating given by professionals and students is statistically significant for D.2—i.e., Name suggests Boolean but type does not, p − value=0.049, with a medium effect size (d = 0.40)—and a marginally significant for E.1—i.e., Says many but contains one, p − value = 0.053, with a medium effect size (d = −0.39). Thus, we conclude that developers’ perceptions of LAs are not impacted by their main programming language, occupation, or experience.

With the remaining three research questions we investigate the perception of LAs of internal developers—i.e., we report the results of Study II.

-

RQ3: How Do Internal Developers Perceive LAs?

Regarding the general opinion of participants (i.e., answers of SII-q0), 51 % of the times participants perceived LAs as ‘Poor’ or ‘Very poor’. This percentage is lower than the one obtained in Study I with external developers, i.e., 69 %. In our understanding—and also according to what we observed from developers’ comments (see Section 4.3)—such a decrease in the proportion may sometimes be due to the context in which LAs occur where internal developers perceive LAs as acceptable.

-

RQ4: What are the Typical Actions to Resolve LAs?

Participants would undertake an action in 56 % of the cases, and in 44 % of the cases they believe that the code should be left ‘as is’. We discuss the reasons behind these two choices—as reported by the participants—and illustrate them with examples in Section 4.3 Footnote 14.

Overall, the kind of changes that participants are willing to undertake to reduce the effect of LAs fall into one of the following (or a combination of those) categories: renaming, changeFootnote 15 in comments, and change in implementation. In 42 % of the cases, the solution involved renaming, 14 % involved a change of comments, and 11 % a change in the implementation. Resolving an LA may involve changes from the different categories.

Ten percent (5 out of 47) of the LAs shown to internal developers during the study have been removed in the corresponding projects after we pointed them out. The removed examples were instances of A.2 (“Is” returns more than a Boolean), A.3 (“Set” method returns), B.2 (Validation method does not confirm), and B.4 (Not answered question). We report the examples in the corresponding LA tables when discussing the perception of internal developers.

Clearly, one must consider that whether or not developers would actually undertake an action depends on other factors such as the potential impact on other projects, the risk of introducing a bug, and the high effort that is required (Arnaoudova et al. 2014). Sometimes, developers are reluctant to rename programming entities that belong to non-local context (e.g., public methods) or that are bound at runtime (e.g., when classes are loaded by name or methods are bound by name). We believe that some of those factors can be mitigated if LAs are pointed out as developers write source code thus, for example, removing or limiting the impact on other code entities.

-

RQ5: What Causes LAs to Occur?

Regarding the possible causes of LAs, we limit our analysis only to cases where the participants wrote the code containing the LAs and cases where they were knowledgeable of that code, e.g., because they were maintaining it. The reported causes and the number of times they occur are as follows (ordered by decreasing order of frequency):

-

1.

Evolution (8): The code was initially consistent, but at some point an inconsistency was introduced, hence causing the LA.

-

2.

Developers’ decision (7): It is a design choice or simply personal preference.

-

3.

Not enough thought (5): Developers did not carefully choose the naming when writing the code.

-

4.

Reuse (2): Code was reused from elsewhere without properly adapting the naming.

4.3 Qualitative Analysis

For each type of LA, we first briefly summarize its definition and we provide the rationale behind it. Then, we illustrate it using the example we showed to external developers (i.e., in Study I)—examples coming from real software projects— followed by possible consequences and solutions. Next, we highlight the perception of external and internal developers. Finally, we report the causes of LA introduction—when reported by the internal developers.

4.3.1 Summary of Developers’ Perception on LAs

We can summarize what we have learned from the studies on LAs perceptions as follows:

- Does more than what it says (A): :

-

Methods that do more than what they say seem to be perceived acceptable in some situations by both external and internal developers. In such situations the surveyed developers tend to infer the behavior suggested by the name. Sometimes such inference is wrong and may lead to faults. This was the case with the example of “Is” returns more than a Boolean (A.2) where 3 of the external developers wrongly mapped the return values for method isValid and assumed 2 possible return values whereas in reality there are 3.

- Says more than what it does (B): :

-

Developers are less lenient with methods that say more than what they do. LAs from this category are perceived often as unacceptable as the expectations resulting from the method’s name/documentation are not fulfilled—as for method isValid with void return type, example of Not answered question (B.4)—or it is unclear how to obtain the result—as for method getMethodBodies with void return type, example of “Get” method does not return (B.3).

- Does the opposite (C): :

-

Developers perceive as poor practices methods that do the opposite of what they say. When the inconsistency is between the method’s name and return type developers try to infer the actual behavior from the method’s comments—which they correctly did for the particular examples that we showed. However, developers are less lenient when the inconsistency is between the method’s signature and comments as they wouldn’t know which one to trust.

- Contains more than what it says (D): :

-

The surveyed developers are more lenient with attributes that contain more than what they say when they feel they need more context—e.g., for Says one but contains many (D.1) some developers explained that whether it is a poor practice would depend on the context and in the particular example the attribute declaration is not sufficient to understand the intent. Thus, developers would need to browse the source code and possibly other sources of documentation to clarity the attribute’s intent. However developers perceived as poor practice attributes whose Name suggests Boolean but type does not. We suspect that examples of LAs in this category may be more likely to increase comprehension effort.

- Says more than what it contains (E): :

-

Attributes that say more than what they contain are perceived more severely by external developers; internal developers are more lenient. Thus, although LAs in this category may impede comprehension of newcomers—they may have difficulties to infer the implicit aggregation function—it seems that developers familiar with the code simply get used to and have less issues with those LAs.

- Contains the opposite (F): :

-

Attributes that contain the opposite of what they say are perceived as poor practices by the majority of the surveyed developers, especially when the inconsistency occurs between the attribute’s signature and comments—similar to methods that do the opposite of what they say.

4.4 LAs Perceived as Particularly Poor

Based on the two studies with developers we distill a subset of 11 LAs (see Table 8) that are perceived by external (column SI) and–or internal (column SII) developers as particularly poor practices. From Study I, we consider that LAs are perceived as particularly poor when they are perceived as ‘Poor’ or ‘Very poor’ by at least 75 % of the external developers. As

proportions for Study II are not meaningful—due to the limited number of data points—we consider that LAs are perceived as particularly poor when there is a full agreement among internal developers—i.e., all internal developers perceived them as ‘Poor’ or ‘Very poor’—or when internal developers took an action to resolve them.

There are three LAs that both external and internal developers find particularly unacceptable. Those are LAs concerning the state of an entity (i.e., attributes) and they belong to the “says more than what it does” (B) and “contains the opposite” (F) categories—i.e., Not answered question (B.4), Attribute name and type are opposite (F.1), and Attribute signature and comment are opposite (F.2).

In addition, internal developers appear to be concerned with “Is” returns more than a Boolean (A.2). External developers are more lenient with this practice as only 60 % of them consider it as poor. However, we believe that A.2 may cause comprehension problems as 3 of the external developers that perceive this practice as good wrongly assumed the return values.

Finally, we observe that external developers perceive as particularly unacceptable LAs from all categories.

5 Threats to Validity

Threats to construct validity concern the relation between theory and observation, and they are mainly related to the accuracy of the measurements we performed to address our research questions. We manually validated the instances of the LAs we showed to the participants, and we selected a representative sample of the different kinds of LAs. Clearly, there is always a risk that the developer’ perception is bound to the particular instance of an LA rather than to its category. However, we limited this threat by collecting comments helping us to understand whether the LAs are indeed a general problem—which we found most of the times to be the case—or whether, instead, it depends on the context.

Regarding the measurement of the participants’ perception, we used Likert scale (Oppenheim 1992), which helps to aggregate and compare results from multiple participants.

Threats to internal validity concern factors that could have influenced our results. When asking participants to evaluate code snippets, we formulate a specific question thus possibly affecting the internal validity of the study as participants may guess the expected answer (Shull et al. 2007). To cope with this threat we also evaluate a set of examples not containing LAs and show a statistically significant difference in developers’ evaluations. In Study I, we analyzed the effect of the experience, the main programming language, and occupation of the participants. Another threat to validity is that external developers are only provided with code snippets and thus unaware of the context, i.e., the particular project that a snippet belongs to. Providing context may lead to more lenient evaluations by external developers as they may resolve the inconsistencies from other places in the code (e.g., from the way the entity is used), which could bias the perception of the practice itself. Also, as participants in Study I are external to the project, the lack of domain knowledge may have impacted their perception. We believe that this threat is limited as LAs concern general inconsistencies and thus are mainly domain independent.

Due to the limited number of data points, we did not perform any particular analysis in Study II, where we discussed results qualitatively rather than quantitatively. Our results may have been impacted by the fact that participants in Study II only validate a subset of the LAs. More data points for each LA may produce different results. More important, we have conducted the two studies to gain insights from different perspectives, i.e., both external and internal developers. A threat for Study II is that internal developers could have been more lenient with their own code. We mitigated this threat by asking them to motivate their answer and, in any case, also for Study II we found a pretty high proportion of poor/very poor perception of LAs.

As shown in Table 3 Note that the majority of the participants are native French speakers and that only for 13 % of the participants are native English speakers. However, we believe that this threat to validity is limited as our questions relate to basic grammar rules (e.g., singular/plural) and we analyze the justification for each question to ensure that the participants properly understood the question.

Threats to external validity concern the generalizability of our findings. In terms of objects, the two studies have been conducted on three and eight systems respectively. Although we cannot really ensure full diversity (Nagappan et al. 2013), as explained in Section 3, the chosen systems are pretty different in terms of size and application domain. In terms of subjects, the studies involved both students and professionals (from industry and from the open-source community), as well as developers of projects from which the LAs were detected and developers of other projects.

6 Related Work

This section discusses related work, concerning (i) the relationship between source code lexicon and software quality (Section 6.1), (ii) the identification and analysis of lexicon-related inconsistencies (Section 6.2), and (iii) empirical studies aimed at investigating developers’ perception of code smells (Section 6.3).

6.1 Role of Source Code Identifiers in Software Quality

Many authors have shown that the quality of the lexicon is an important factor for program comprehensibility.

As discussed in the introduction, Brooks (1983) considers identifiers and comments as part of the internal indicators for the meaning of a program.

Shneiderman (1977) presents a syntactic/semantic model of programmer behavior. The syntactic knowledge about a program is built through a perception process; it is precise, language dependent and easily forgettable. The semantic knowledge is built through cognition; it is language independent and concerns important concepts at different levels of details. On the basis of several experiments, Shneiderman and Mayer (1975) observed a significantly better program comprehension by subjects with commented programs. Higher number of subjects located bugs in commented programs compared to not commented programs, although the difference is not statistically significant. They argue that program comments and mnemonic identifiers simplify the conversion process from the program syntax to the program internal semantic representation.

Chaudhary and Sahasrabuddhe (1980) argue that the psychological complexity of a program—i.e., the characteristics that make a program difficult to be understood—is an important aspect of program quality. They identify several features that contribute to the psychological complexity one of which is termed “meaningfulness”. They argue that meaningful variable names and comments facilitate program understanding as they facilitate the relation between the program semantics and the problem domain. An experiment with students using different versions of FORTRAN programs—with and without meaningful names—confirms the hypothesis.

Weissman (1974a) also considers that the program form—e.g., comments, choice of identifiers, paragraphing—is a an important factor that affects program complexity, in particular suggesting that meaningless and incorrect comments can be harmful and that mnemonic names of reasonable length ease program understanding. However empirical evidence showed that comments lead to faster but more error prone hand simulation of a program (Weissman 1974a). Sheil (1981) reviews studies on the psychological research on programming, and argues that the ineffectiveness of the research in the domain is partly due to the unsophisticated experimental techniques.

Other authors have focused their attention on source code identifiers and their importance for various tasks in software engineering. Among them, Caprile and Tonella (1999,2000), Merlo et al. (2003), and Anquetil and Lethbridge (1998) show that identifiers carry important source of information and that identifiers are often the starting point for program comprehension. Deissenbock and Pizka (2005) provided guidelines for the production of high-quality identifiers. Later, Lawrie et al. (2006, 2007) performed an empirical study to assess the quality of source code identifiers, and suggest that the identification of words composing identifiers could contribute to a better comprehension.

6.2 Identifying Inconsistencies in the Lexicon

Previous studies have shown that poor source code lexicon correlates with faults (Abebe et al. 2012), and negatively affects concept location (Abebe et al. 2011).

Abebe and Tonella (2011) extract concepts and relations between concepts from program identifiers to build an ontology. They use the ontology to help developers in choosing identifiers consistent with the concepts already used in the system (Abebe and Tonella 2013). To this aims, given partially written identifiers, they suggest and rank candidate completions and replacements. We complement the above work, as Abebe and Tonella focus on the quality of the lexicon, whereas we identify inconsistencies among identifiers, source code, and comments.

De Lucia et al. (2011) proposed an approach and tool—named COCONUT—to ensure consistency between the lexicon of high-level artifacts and of source code. In their approach, the inconsistent lexicon is measured in terms of textual similarity between high-level artifacts traced to the code, and the code itself. In addition, COCONUT uses the lexicon of high-level artifacts to suggest appropriate identifiers.

Tan et al. (2007, 2011, 2012) proposed several approaches to detect inconsistencies between code and comments. Specifically, @iComment (Tan et al. 2007) detects lock- and call-related inconsistencies; the validation made by developers confirmed 19 of the detected inconsistencies. @aComment (Tan et al. 2011) detects synchronization inconsistencies related to interrupt context, and the evaluation by developers confirmed 7 previously unknown bugs. @tComment infers properties form Javadoc related to null values and exceptions; then, it generates tests cases by searching for violations of the inferred properties. Also in this case, Tan et al. reported the detected inconsistencies to the developers who indeed resolved 5 of them. Zhong et al. (2011) automatically generate specifications from API documentation concerning resource usage, namely creation, lock, manipulation, unlock, and closure. They contacted developers of the open-source projects who confirmed 5 previously unknown defects.

While the approaches described above address inconsistencies specific to certain source code aspect/implementation technology—i.e., lock/call, null values/exceptions, synchronization, and resource usage—our approach can be considered as complementary as it deals with generic naming and commenting issues that can arise in OO code, and specifically in the lexicon and comments of methods and attributes.

6.3 Developers’ Perception of Code Smells

Yamashita and Moonen (2013) performed a study—involving 85 professionals—with the aim of investigating the perception of code smells, in particular, the degree of awareness of code smells, their severity, and the usefulness of automatic tool support. Surprisingly, 23 of the participants (32 %) were not aware of such code smells. From the remaining 50 participants, i.e., those that have at least heard of anti-patterns and code smells, only 3 participants (6 %) were not concerned about the presence of code smells. 47 of the participants (94 %) were concerned at a different level—10 (20 %) were slightly concerned, 11 (22 %) were somewhat concerned, 19 (38 %) were moderately concerned, and 7 (14 %) were extremely concerned. Yamashita and Moonen performed categorical regression analysis and found that the more familiar participants are with anti-patterns and code smells, the more concerned they are. Palomba et al. (2014) also studied developers’ perceptions of code smells. They evaluated examples of 12 code smells found in 3 open-source Java projects from the perspective of 34 external and internal developers. Their results show that there are some code smells that developers do not perceive as poor practices. They also observed that for several code smells experienced developers are more concerned than less experienced developers.

We share with the above works the interest in how developers perceive poor practices. The main difference between previous work and our work is that while they evaluate practices that have been out there more than a decade, we study practices with which developers were not at all familiar with Study I or just introduced to Study II. This could be one of the reasons why a lower number of participants perceive LAs as ‘Poor’ or ‘Very Poor’—69 % and 51 % for Study I and Study II respectively—as opposed to anti-patterns and code smells—94 % when only considering participants familiar with anti-patterns and code smells. Also, while they evaluate the awareness and the concern of professionals about the code smells in general, we focus on evaluating the perception of developers of LAs i) through the mean of concrete examples thus allowing us to also investigate possible solutions, and ii) from both perspectives i.e., newcomers (Study I), and internal developers (Study II).

7 Conclusion and Future Work

This work aimed at investigating the developers’ perception of Linguistic Anti-patterns (LAs)—i.e., “poor practices in the naming, documentation, and choice of identifiers in the implementation of an entity, thus possibly impairing program understanding”—and the extent to which they suggest that such LAs need to be removed. The studies concerned a catalog of 17 types of LAs—defined in our previous work (Arnaoudova et al. 2013)—that we conjecture to be poor practices. In this paper, we rely on the opinion of developers as an indication of the quality of source code containing such poor practices with the aim of confirming or refuting our conjecture.

First, we conducted a study involving 30 external developers among graduate students and professional, i.e., people that did not participate to the development of the system in which the LAs were detected and unaware of the notion of LAs. They provided information about their perception of LAs found in 3 Java open-source projects, and the majority of them (69 %) indicated that such LAs are poor or very poor practices. Overall, developers perceived as more serious ones the instances where the inconsistency involved both method signature and comments. The perception of external developers is important as 1) it provides an indication of the difficulties that newcomers may encounter understanding code that contains LAs, and 2) it is an unbiased opinion.

In a second study we asked 14 (internal) developers of 7 open-source Java/C++ projects and one C++ commercial system to provide us their perception of LAs we found in the code of their projects. Internal developers provides us 1) with an indication whether code containing LAs is problematic even for people that are familiar with the project and 2) with insights on why LAs occurred in the code and how can they be refactored. 51 % of respondents evaluated LAs as poor or very poor practices. The percentage is lower compared to the one observed with external developers, as in some cases internal developers perceive LAs acceptable in the particular context. When asked why the LAs were possibly introduced—and developers had elements to answer—they pointed out maintenance activities—e.g., done by developers different from the original code authors—that deteriorated the lexicon quality, or lack of attention to naming conventions/comments. For a conspicuous proportion of LAs (56 %) developers highlighted that such LAs should be removed and, at the time of writing this paper, internal developers had already resolved 10 % of the cases containing LAs that we pointed out. As a result of the studies with developers, we distill a subset of LAs i) that are perceived as poor practices by at least 75 % of the external developers, ii) that are perceived as poor practices by all internal developers, or iii) for which internal developers took an action to remove it. There are three LAs that both external and internal developers agree on and perceived as particularly poor. Those are LAs concerning the state of an entity (i.e., attributes) and they belong to the “says more than what it does” (B) and “contains the opposite” (F) categories—i.e., Not answered question (B.4), Attribute name and type are opposite (F.1), and Attribute signature and comment are opposite (F.2). External developers found particularly unacceptable (i.e., more than 80 % of them perceived as poor or very poor) the LAs with a clear dissonance between code behavior and its lexicon—i.e., “Get” method does not return (B.3), Not answered question (B.4), Method signature and comment are opposite (C.2), and Attribute signature and comment are opposite (F.2). Given the extremely high level of agreement on those LAs, our results encourage the use of a recommender tool highlighting LAs, like such as the Checkstyle extension we developed and described in Section 2.

Clearly, one must consider that, whether or not developers could remove LAs also depends on the impact that this can have on the whole system. In other words, developers are less prone to remove LAs if this has a large impact on the code, as such change can be too risky. Instead, it can be more useful to point out LAs as developers write source code—e.g., on-the-fly using our Linguistic AntiPattern Detector (LAPD) Checkstyle plugin— thus removing or limiting the impact on other code entities.

Work-in-progress includes: (i) proposing automatic refactorings to resolve LAs, and (ii) performing a study involving developers using (or not) the LAPD Checkstyle plugin, with the aim of observing to what extent the recommendations will be followed, and to what extent will the code lexicon be improved.

Notes

For projects where we did not provide a version, we used version control (accessed on 31/05/2013).

None of the questionnaires containing examples of type C.2 was answered.

We do not report project names with the examples to avoid disclosing the confidentiality of the provided answers.

A change may be one or more of the following: modification, addition, or removal.

References

Abbes M, Khomh F, Guéhéneuc YG, Antoniol G (2011) An empirical study of the impact of two antipatterns, Blob and Spaghetti Code, on program comprehension. In: Proceedings of the European Conference on Software Maintenance and Reengineering (CSMR), pp 181–190

Abebe S, Tonella P (2011) Towards the extraction of domain concepts from the identifiers. In: Proceedings of the Working Conference on Reverse Engineering (WCRE), pp 77–86

Abebe S, Tonella P (2013) Automated identifier completion and replacement. In: Proceedings of the European Conference on Software Maintenance and Reengineering (CSMR), pp 263–272

Abebe SL, Haiduc S, Tonella P, Marcus A (2011) The effect of lexicon bad smells on concept location in source code. In: Proceedings of the International Working Conference on Source Code Analysis and Manipulation (SCAM), pp 125–134

Abebe SL, Arnaoudova V, Tonella P, Antoniol G, Guéhéneuc YG (2012) Can lexicon bad smells improve fault prediction? In: Proceedings of the Working Conference on Reverse Engineering (WCRE), pp 235–244

Anquetil N, Lethbridge T (1998) Assessing the relevance of identifier names in a legacy software system. In: Proceedings of the International Conference of the Centre for Advanced Studies on Collaborative Research (CASCON), pp 213–222

Arnaoudova V, Di Penta M, Antoniol G, Guéhéneuc YG (2013) A new family of software anti-patterns: Linguistic anti-patterns. In: Proceedings of the European Conference on Software Maintenance and Reengineering (CSMR), pp 187–196

Arnaoudova V, Eshkevari L, Di Penta M, Oliveto R, Antoniol G, Guéhéneuc YG (2014) Repent: Analyzing the nature of identifier renamings. IEEE Trans Softw Eng (TSE) 40(5):502–532

Brooks R (1983) Towards a theory of the comprehension of computer programs. In J Man-Machine Stud 18(6):543–554

Brown WJ, Malveau RC, Brown WH, McCormick III HW, Mowbray TJ (1998a) Anti patterns: refactoring software, architectures, and projects in crisis, 1st edn. Wiley, New York

Brown WJ, Malveau RC, HWM III, Mowbray TJ (1998b) AntiPatterns: refactoring software, architectures, and projects in crisis. Wiley, New York

Caprile B, Tonella P (1999) Nomen est omen: Analyzing the language of function identifiers. In: Proceedings of Working Conference on Reverse Engineering (WCRE), pp 112–122

Caprile B, Tonella P (2000) Restructuring program identifier names. In: Proceedings of the International Conference on Software Maintenance (ICSM), pp 97–107

Chaudhary BD, Sahasrabuddhe HV (1980) Meaningfulness as a factor of program complexity. In: Proceedings of the ACM Annual Conference, ACM, ACM ’80, pp 457–466

De Lucia A, Di Penta M, Oliveto R (2011) Improving source code lexicon via traceability and information retrieval. IEEE Trans Softw Eng 37(2):205–227

Deissenbock F, Pizka M (2005) Concise and consistent naming. In: Proceedings of the International Workshop on Program Comprehension (IWPC), pp 97–106

Fowler M (1999) Refactoring: improving the design of existing code. Addison-Wesley, MA

Gamma E, Helm R, Johnson R, Vlissides J (1995) Design patterns: elements of reusable object oriented software. Addison-Wesley, Boston

Glaser BG (1992) Basics of grounded theory analysis. Sociology Press

Grissom RJ, Kim JJ (2005) Effect sizes for research: a broad practical approach, 2nd edn. Lawrence Earlbaum Associates

Groves RM, Fowler Jr FJ, Couper MP, Lepkowski JM, Singer E, Tourangeau R (2009) Survey methodology, 2nd edn. Wiley, New York

Hintze JL, Nelson RD (1998) Violin plots: a box plot-density trace synergism. Am Stat 52(2):181–184

Jedlitschka A, Pfahl D (2005) Reporting guidelines for controlled experiments in software engineering. In: International symposium on empirical software engineering

Khomh F, Di Penta M, Guéhéneuc YG (2009) An exploratory study of the impact of code smells on software change-proneness. In: Proceedings of the working conference on reverse engineering (WCRE), pp 75–84

Khomh F, Di Penta M, Guéhéneuc YG, Antoniol G (2012) An exploratory study of the impact of antipatterns on class change- and fault-proneness. Empir Softw Eng 17(3):243–275

Kitchenham B, Pfleeger S, Pickard L, Jones P, Hoaglin D, El Emam K, Rosenberg J (2002) Preliminary guidelines for empirical research in software engineering. IEEE Trans Softw Eng (TSE) 28(8):721–734

Lawrie D, Morrell C, Feild H, Binkley D (2006) What’s in a name? a study of identifiers. In: Proceedings of the International Conference on Program Comprehension (ICPC), pp 3–12

Lawrie D, Morrell C, Feild H, Binkley D (2007) Effective identifier names for comprehension and memory. Innovations Syst Softw Eng 3(4):303–318

Merlo E, McAdam I, De Mori R (2003) Feed-forward and recurrent neural networks for source code informal information analysis. J Softw Maint 15(4):205–244

Miller GA (1995) WordNet: a lexical database for English. Commun ACM 38(11):39–41

Moha N, Guéhéneuc YG, Duchien L, Le Meur AF (2010) DECOR: a method for the specification and detection of code and design smells. IEEE Trans Softw Eng (TSE’10) 36(1):20–36

Nagappan M, Zimmermann T, Bird C (2013) Diversity in software engineering research. In: Proceedings of the joint meeting of the European Software Engineering Conference and the ACM SIGSOFT Symposium on the Foundations of Software Engineering (ESEC/FSE), pp 466–476

Oppenheim AN (1992) Questionnaire design, interviewing and attitude measurement. Pinter, London

Palomba F, Bavota G, Di Penta M, Oliveto R, De Lucia A, Poshyvanyk D (2013) Detecting bad smells in source code using change history information. In: Proceedings of the international conference on automated software engineering (ASE), pp 268–278

Palomba F, Bavota G, Penta M D, Oliveto R, Lucia A D (2014) Do they really smell bad? A study on developers’ perception of code bad smells. In: International conference on software maintenance and evolution (ICSME), p. to appear

Parsons J, Saunders C (2004) Cognitive heuristics in software engineering: applying and extending anchoring and adjustment to artifact reuse. IEEE Trans Softw Eng (TSE) 30(12):873–888

Prechelt L, Unger-Lamprecht B, Philippsen M, Tichy W (2002) Two controlled experiments assessing the usefulness of design pattern documentation in program maintenance. IEEE Trans Softw Eng (TSE) 28(6):595–606

Raţiu D, Ducasse S, Girba T, Marinescu R (2004) Using history information to improve design flaws detection. In: Proceedings of the European conference on software maintenance and reengineering (CSMR), pp 223–232

Sheil BA (1981) The psychological study of programming. ACM Comput Surv (CSUR) 13(1):101–120

Shneiderman B (1977) Measuring computer program quality and comprehension. Int J Man-Machine Stud 9(4):465–478

Shneiderman B, Mayer R (1975) Towards a cognitive model of progammer behavior, Tech Rep, vol 37. Indiana University, Bloomington

Shull F, Singer J, Sjøberg DI (eds) (2007) Guide to advanced empirical software engineering. Springer, New York

Strauss AL (1987) Qualitative analysis for social scientists. Cambridge Univsersity Press

Takang A, Grubb PA, Macredie RD (1996) The effects of comments and identifier names on program comprehensibility: an experiential study. J Program Lang 4(3):143–167

Tan L, Yuan D, Krishna G, Zhou Y (2007) /*iComment: bugs or bad comments?*/, Proceedings of the ACM SIGOPS Symposium on Operating Systems Principles (SOSP) 41(6):145–158

Tan L, Zhou Y, Padioleau Y (2011) Acomment: mining annotations from comments and code to detect interrupt related concurrency bugs. In: Proceedings of the International Conference on Software Engineering (ICSE)

Tan SH, Marinov D, Tan L, Leavens GT (2012) @tComment: Testing Javadoc comments to detect comment-code inconsistencies. In: Proceedings of the international conference on software testing, verification and validation (ICST), pp 260–269

Torchiano M (2002) Documenting pattern use in java programs. In: Proceedings of the international conference on software maintenance (ICSM), pp 230–233

Toutanova K, Manning CD (2000) Enriching the knowledge sources used in a maximum entropy part-of-speech tagger. In: Proceedings of the Joint SIGDAT conference on empirical methods in natural language processing and very large corpora (EMNLP/VLC-2000), association for computational linguistics, pp 63–70

Weissman L (1974a) Psychological complexity of computer programs: an experimental methodology. SIGPLAN Not 9(6):25–36

Weissman LM (1974b) A methodology for studying the psychological complexity of computer programs. PhD thesis

Wohlin C, Runeson P, Höst M, Ohlsson MC, Regnell B, Wesslén A (2000) Experimentation in software engineering - an introduction. Kluwer, Boston

Woodfield SN, Dunsmore HE, Shen VY (1981) The effect of modularization and comments on program comprehension. In: Proceedings of the international conference on software engineering (ICSE), pp 215–223

Yamashita A, Moonen L (2013) Do developers care about code smells? - An exploratory survey. In: Proceedings of the working conference on reverse engineering (WCRE), pp 242–251

Zhong H, Zhang L, Xie T, Mei H (2011) Inferring specifications for resources from natural language api documentation. Autom Softw Eng 18(3–4):227–261

Acknowledgments

The authors would like to thank the participants to the two studies for their precious time and effort. They made this work possible.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by: Nachiappan Nagappan

This work is an extension of our previous paper (Arnaoudova et al. 2013)

Appendix

Appendix

1.1 A Detection

- A.1 - “Get” more than accessor::

-