Abstract

During the design of complex systems, software architects have to deal with a tangle of abstract artefacts, measures and ideas to discover the most fitting underlying architecture. A common way to structure such complex systems is in terms of their interacting software components, whose composition and connections need to be properly adjusted. Along with the expected functionality, non-functional requirements are key at this stage to guide the many design alternatives to be evaluated by software architects. The appearance of Search Based Software Engineering (SBSE) brings an approach that supports the software engineer along the design process. Evolutionary algorithms can be applied to deal with the abstract and highly combinatorial optimisation problem of architecture discovery from a multiple objective perspective. The definition and resolution of many-objective optimisation problems is currently becoming an emerging challenge in SBSE, where the application of sophisticated techniques within the evolutionary computation field needs to be considered. In this paper, diverse non-functional requirements are selected to guide the evolutionary search, leading to the definition of several optimisation problems with up to 9 metrics concerning the architectural maintainability. An empirical study of the behaviour of 8 multi- and many-objective evolutionary algorithms is presented, where the quality and type of the returned solutions are analysed and discussed from the perspective of both the evolutionary performance and those aspects of interest to the expert. Results show how some many-objective evolutionary algorithms provide useful mechanisms to effectively explore design alternatives on highly dimensional objective spaces.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Software architects face some of the most difficult decisions in the earliest stages of project execution, since they must guarantee that their designs satisfy multiple quality criteria. At this point, only a few concrete items of information are known about the final system, even though its structure already has to be determined (Bosch and Molin 1999). In this scenario, the discovery of software architectures constitutes an abstract and complex task, where software engineers have to identify high-level architectural artefacts, like components and interfaces, from a prior analysis model. As a result, an architectural specification is obtained comprising the underlying system structure, which will serve throughout the software development to add later functionality, to get suitable designs or to ensure proper maintenance (Ducasse and Pollet 2009).

Along the design phase, software architects have to deal with multiple design alternatives that need to be factually evaluated in order to find those solutions that best meet the system requirements. However, characteristics like modularity and reusability clearly hamper the decision making process because of the lack of quantifiable measures that really would represent the quality of the resulting software designs. Therefore, efforts have been directed towards the definition of specific metrics at the architectural level (Narasimhan and Hendradjaya 2007; Sant’Anna et al. 2007) like those already existing and commonly accepted for more concrete artefacts (Bansiya and Davis 2002). Nevertheless, selecting the most suitable subset of metrics is a complex task usually accomplished by experts only guided by their own experience. Since many different design criteria have to be simultaneously balanced, they may affect the manner in which architectural constraints are considered to meet the software architects expectations.

The automatic evaluation of design alternatives constitutes an interesting application field for Evolutionary Computation (EC) according to the rationale used by Search Based Software Engineering (SBSE) (Harman et al. 2012), where traditional Software Engineering (SE) activities, usually performed by humans, are formulated as search problems to be solved using metaheuristics. In the last years, EC has been successfully applied to the resolution of combinatorial problems in Software Engineering like requirements selection (Durillo et al. 2011) or resource allocation in project scheduling (Luna et al. 2014). In this sense, other SE tasks have demanded the attention of more sophisticated EC approaches in order to provide a higher performance in terms of the quality of the solutions and the satisfaction of the engineer’s expectations when more than one or two objectives should be considered (Yao 2013).

In this context, a trade-off between different objectives is required, resulting in a set of equivalent solutions among which the expert will decide. Well-known multi-objective evolutionary algorithms (MOEAs) (Zhou et al. 2011), like SPEA2 (Strength Pareto Evolutionary Algorithm 2) and NSGA-II (Nondominated Sorting Genetic Algorithm II), usually arise as the first option when SE practitioners want to adopt a multi-objective approach (Sayyad and Ammar 2013). Less often, comparative studies of different MOEAs are presented with the aim of providing a further analysis of the abilities of each proposal. These studies are really useful to gain empirical evidence of the adequacy of a specific algorithm to a given problem domain (Luna et al. 2014; Zhang et al. 2013).

These studies have traditionally considered optimisation problems with 2 or 3 predefined objectives, though there exist many SE tasks whose nature clearly demands the definition of a large number of objectives. Therefore, many-objective evolutionary algorithms appear to be an interesting approach for dealing with this kind of situation (Purshouse and Fleming 2007; von Lücken et al. 2014). The current interest within the EC community in tackling highly dimensional objective spaces creates an opportunity to benefit from novel and powerful algorithms to support software design. Traditionally, many-objective approaches have been tested on benchmarks considering a continuous search space (Bader and Zitzler 2011; Yang et al. 2013; Zhang and Li 2007). For this specific kind of problems, many-objective evolutionary algorithms have shown to clearly outperform MOEAs in terms of the standard performance metrics defined within the field of multi-objective optimisation (Coello Coello et al. 2007; Zitzler et al. 2004). Only a few authors have more deeply studied the performance of many-objective approaches in real-world environments like those appearing in SE and, consequently, SBSE practitioners seem to be less familiar with this kind of algorithms. In this sense, the works presented in Kalboussi et al. (2013), Mkaouer et al. (2014), and Sayyad et al. (2013) are the first proposals that are actually focused on the application of a specific many-objective evolutionary algorithm in testing, refactoring and software product lines design activities, respectively.

The greater the number of factors required to reach architectural decisions is, the more dependent the performance, in terms of quality and feasibility, of the obtained architectural solution becomes on the selection of the most appropriate optimisation approach. Therefore, even though many-objective approaches have proven capable of providing improvements in other related fields of application, the intrinsic characteristics of each algorithm make it necessary to thoroughly study them with the aim of identifying those aspects having a greater influence on the satisfactory resolution of a given design problem. In this context, an empirical analysis allows offering a more in-depth comprehensive overview of the behaviour of different types of algorithms when dealing with a different number and sort of objectives.

In Ramírez et al. (2014), we presented an initial discussion on the performance of 5 multi- and many-objective evolutionary algorithms. This research, performed on a set of 6 different design metrics using 6 representative case studies, highlighted the suitability of this new branch of algorithms in terms of their evolutionary performance and ability to support the decision making process as the number of objectives increased. Nevertheless, we consider it necessary to broaden and deepen in different ways this previous analysis in order to get more valuable findings on the performance of different families of many-objectives algorithms applied to the automatic discovery of software architectures. Firstly, the number of problem instances has been increased to 10 real-world system specifications. Secondly, the study focuses on the maintainability of software systems, considering up to 9 metrics to represent the optimisation objectives. These design metrics quantify diverse related non-functional properties of an architectural specification. The experimental framework, which includes a detailed discussion on the existing dependencies between objectives, provides a complete study of the most suitable characteristics of the different families of algorithms to deal with the evolutionary discovery of software architectures. Amongst these families, 8 different algorithms have been chosen to carry out this empirical research. Due to the very wide range of algorithms within the multi-objective optimisation field, such an analysis has served to articulate the distinctive features of the families of algorithms that best fit into the problem domain under study.

The rest of the paper is structured as follows. Section 2 presents some background, focused on multi- and many-objective optimisation and SBSE. Section 3 introduces the conceptual framework related to software architecture design and the search problem being addressed. The evolutionary model is explained in Section 4, including the definition of the evaluation objectives. The experimental framework is described in Section 5. Section 6 presents the obtained outcomes, whereas a discussion from the perspective of the decision making process is given in Section 7. The threats to the validity are explained in Section 8. Finally, some concluding remarks are pointed out in Section 9.

2 Background

The key concepts of multi- and many-objective optimisation are introduced in this section, as well as the evolutionary algorithms used for this comparative study. Additionally, the prior application of these multiple objective approaches in the SBSE field is reviewed.

2.1 Multi- and Many-objective Evolutionary Optimisation

Solving a multi-objective optimisation problem (MOP) consists in looking for solutions characterised by a set of decision variables that can find the most optimal trade-off among a set of objective functions, which are usually in conflict with each other (Coello Coello et al. 2007). One initial approximation to address a MOP is to turn it into a single-objective problem by formulating a new objective function that calculates the weighted sum of all the objectives. Similarly, other approaches have proposed its resolution by keeping only one objective and considering the rest of them as constraints. Even though they are just simple proposals, they have handicaps like the weight selection or requiring the reformulation of the problem (Deb 2001).

Therefore, any search algorithm specifically devoted to address MOPs will deal with objectives independently, so it can return a set of optimal solutions. Each solution is determined by a different trade-off between all the objectives, in which the improvement achieved by one could imply the loss of others. This idea is formally stated by the Pareto dominance definition (Coello Coello et al. 2007) as follows: a solution a is said to dominate another solution b if a has equal or better objective values for all the objectives and a better value for at least one objective than b. If that condition cannot be satisfied by either a or b, then both solutions are referred as non-dominated, meaning that they are incomparable or equivalent. The set of non-dominated solutions constitutes the Pareto set (PS), whereas the mapping of these solutions to the objective space is called Pareto front (PF). Finding a fitting approximation to the PF is a twofold task (Deb 2001). On the one hand, a multi-objective approach is requested to find non-dominated solutions that are close to the front, which is known as the convergence to the true PF. On the other hand, the resulting PF should be properly distributed within the objective space, making a good spread of non-dominant solutions important. Having both ideas in mind, the search algorithm should be able to provide an interesting set of high-quality solutions for the decision maker to choose among.

Evolutionary algorithms (EAs) are a metaheuristic technique inspired on the principles of natural evolution that deals with a set of solutions to solve optimisation problems (Boussaïd et al. 2013). Each solution is characterised by its genotype, i.e. the structure used to encode the decision variables that need to be managed during the problem resolution. In contrast, the phenotype symbolises the real-world representation of its genotype. The algorithm begins with the random creation of a set of candidate solutions, known as population. Each solution, also called individual, is then evaluated considering how well it can solve the optimisation problem according to its fitness function. The rest of the evolution is performed by an iterative process in such a way that individuals in the population are modified in each iteration, also called generation, using genetic operators until the stopping condition, e.g. a maximum number of generations, is reached. With this purpose, some individuals are selected to act as parents, from which new solutions are generated according to the specific EC paradigm. The most commonly used genetic operators are crossover and mutation. The former is applied to create offspring tending to be similar to their parents, whereas the latter is performed to introduce diversity among offspring. New solutions have to be evaluated and compared against one another in order to decide whether they should be part of the next population of individuals. Finally, the best individual found in terms of its fitness function is returned. Notice that several paradigms have emerged from the general concept of Evolutionary Computation, such as genetic algorithms (GAs), evolution strategy (ES), evolutionary programming (EP) and genetic programming (GP) (Boussaïd et al. 2013). On the one hand, GA and GP apply both crossover and mutation. In the latter, individuals represent executable programs encoded using a tree structure instead of a fixed-length genotype as proposed by GAs. On the other hand, ES and EP do not usually implement any crossover operator, EP being characterised by the use of domain-specific encodings.

Evolutionary computation has traditionally been a way to efficiently solve complex search problems having a unique fitness function. Nevertheless, they can be also adapted to cover a multidimensional objective space. In fact, the application of EAs for the resolution of multi-objective problems, i.e. multi-objective evolutionary algorithms (Coello Coello et al. 2007), has been widely studied for the resolution of real-world applications. They work well in objective spaces with 2 or 3 objectives, in both combinatorial and continuous optimisation problems. MOEAs perform in general the same iterative process than EAs. However, a specific terminology is often used Zitzler et al. (2004), and they require some additional steps to be executed, as shown in Algorithm 1. Observe that MOEAs provide a mating selection method to pick individuals to act as parents. After the generation of offspring through a domain-specific variation mechanism, i.e. genetic operators, environmental selection is performed to select the individuals that will survive in the next generation. The former can determine an overall fitness value, which is independent from the objective values and represents a quality criterion to classify individuals, usually promoting the selection of non-dominated solutions. The latter applies some diversity preservation techniques in order to choose between equivalent solutions. In addition, an external population, called archive, can be maintained throughout the evolution containing the best found solutions. Finally, the Pareto set is returned by extracting non-dominated solutionsl from either the population or the archive. Two well-known examples of MOEAs adopting this latter structure are SPEA2 (Zitzler et al. 2001) and NSGA-II (Deb et al. 2002).

In the last decades, important efforts have been devoted to deal with the increasing complexity of MOPs, especially with those having a large number of objectives, known as many-objective optimisation problems. Although the actual difference between multi- and many-objective problems has not been clearly stated in the literature (Purshouse and Fleming 2007), some works initially considered that having more than 2 objectives can be considered as a many-objective problem (Adra and Fleming 2011). Nevertheless, most authors agree today with the idea that many-objective problems require the presence of at least 4 objectives (Deb and Jain 2014; von Lücken et al. 2014; Zhou et al. 2011). In this paper, this latter criterion is followed in order to distinguish among optimisation problems. Even so, notice that algorithms originally conceived as multi-objective have served to address many-objective problems, whereas other authors use the term multi-objective to refer to the algorithm, and the term many-objective to the optimisation problem. Here, algorithms will be categorised as prescribed by their respective authors. There are studies which conclude that proposals like SPEA2 and NSGA-II tend to decrease their performance as the number of objectives increases, at least when solving continuous optimisation benchmarks (Khare et al. 2003; Praditwong and Yao 2007). In highly dimensional objective spaces, the Pareto dominance loses the efficiency required to guide the search, since a great number of population members become non-dominated solutions. For this reason, many-objective optimisation problems require new techniques to deal with such complexity (Schutze et al. 2011; von Lücken et al. 2014).

Recently, different strategies have been applied in order to improve the performance of EAs for solving many-objective problems, including the adaptation of some already existing algorithms (Adra and Fleming 2011; Wang et al. 2014) or the design of new frameworks (Hadka and Reed 2013). Some of the proposed features are related to the modification of the dominance principle (He et al. 2014) or the inclusion of specific diversity preservation techniques (Wang et al. 2014), among others. Thus, they are usually classified in families in accordance with the selected technique (Wagner et al. 2007).

2.2 Selected Algorithms

Eight evolutionary algorithms have been selected for this comparative study. Two multi-objective evolutionary algorithms, SPEA2 and NSGA-II, are included as baseline algorithms, their original implementation being maintained. Additionally, other representative proposals, especially well-suited to deal with many-objective problems, were chosen from the different families of algorithms in accordance to the literature (von Lücken et al. 2014; Wagner et al. 2007). Notice that for this selection, algorithms could not consider any previous assumption about domain-specific elements like the encoding or the genetic operators, since both characteristics need to be configured according to the problem domain under study.

SPEA2 (Zitzler et al. 2001) assigns a fitness value based on a strength rate and a density estimator strategy. The former counts the number of dominated and non-dominated solutions considering each individual, whereas the latter uses a clustering approach to select more diverse individuals. Both values are combined and used in the mating selection. Non-dominated solutions are stored in a fixed-size archive using a truncation method if it is required, while the new population will be set using all the offspring.

NSGA-II (Deb et al. 2002) involves a sorting method in order to rank the solutions in fronts considering the dominance between them. Both this ranking and a crowding distance are used in the mating selection. During the environmental selection, individuals are progressively kept by fronts, the crowding distance being used to decide which individuals are selected when a front cannot be stored entirely. This algorithm does not define an external archive, so the non-dominated solutions are extracted from the final population.

MOEA/D (Multiobjective Evolutionary Algorithm based on Decomposition) (Zhang and Li 2007) proposes a decomposition approach where the multi-objective problem is divided into several scalar problems to be simultaneously optimised, looking for a better diversity among solutions. More specifically, MOEA/D associates each subproblem to one individual in the population by means of a weighted vector. Individuals are randomly chosen in the mating selection, whereas the neighbourhood information is determined on the weighted vectors during the environmental selection in order to match each offspring to the subproblem that it can best solve. The Tchebycheff approach is applied to evaluate individuals over their specific problem, computing its distance to a reference point. This algorithm uses an archive to keep all the non-dominated solutions found throughout the evolution process. Originally proposed to solve MOPs, MOEA/D is frequently used in many-objective problems, as well as to try out new proposals (Deb and Jain 2014; von Lücken et al. 2014; Yang et al. 2013).

A common technique to avoid the poor selection pressure of the Pareto dominance criterion is to divide the objective space into hypercubes or grids. Then, a relaxed dominance relation, usually referred as the 𝜖-dominance, is defined over the resulting landscape. Thus, solution a 𝜖-dominates solution b if a belongs to better or equal hypercubes for all the objectives and to a better hypercube for at least one objective than b. This idea is explored in 𝜖-MOEA (Deb et al. 2003), a steady-state algorithm which establishes a fixed length of the hypercubes for every objective, named 𝜖 i . In each iteration, one parent from the current population and another one from an archive of solutions are selected to generate one or more offspring. These new solutions will survive depending on the hypercubes to which they belong and those already filled with the solutions previously saved in the external population. Similarly to MOEA/D, 𝜖-MOEA was originally conceived to deal with 2 or 3 objectives, although it has turned into a reference algorithm due to its notable performance to address many-objective optimisation problems (Wagner et al. 2007; Yang et al. 2013).

GrEA (Grid-based Evolutionary Algorithm) (Yang et al. 2013) is a many-objective evolutionary algorithm based on the notion of landscape partition, though grids are here dynamically created considering the objective values in the current population. Initially, GrEA calculates some properties of the individuals based on the grids. Such information is used by the mating selection in order to obtain the most promising reproducible solutions. Inspired by NSGA-II, GrEA also ranks the population by fronts during the environmental selection, but it uses its own specific distance and diversity metrics to discard equivalent solutions. GrEA does not define an external archive, the returned solutions being extracted from the final population.

Indicator-based methods propose the use of indicators to guide the evolution process. The selected indicator can represent the preferences of the decision maker, so the evolution is heavily oriented towards a region of interest. Some popular indicator of the quality of a Pareto set approximation, e.g. the hypervolume, can be considered. This kind of algorithms has become an interesting alternative to address many-objective optimisation, since they transform the original problem into a task consisting in optimising the quality indicator (von Lücken et al. 2014). One representative approach here is IBEA (Indicator-based Evolutionary Algorithm) (Zitzler and Künzli 2004), which defines a general evolutionary algorithm where any binary indicator could be used as a fitness function. The selected measure is a key factor, since the fitness function is heavily involved in both the mating and the environmental selection procedures. For instance, the authors proposed the application of the 𝜖-indicator, representing the minimum distance by which a set of solutions should be translated in each dimension such that another set of solutions is weakly-dominated. It requires a less complex operation than hypervolume, which could be prohibitive in terms of computational effort when a large number of objectives is set. During the mating selection, binary tournaments are performed to generate the set of parents. For each tournament, two randomly selected individuals are compared with each other according to their fitness values, so the best individual is returned as parent. The worst individuals are discarded during the environmental selection.

Another relevant proposal is HypE (Hypervolume Estimation Algorithm) (Bader and Zitzler 2011), which allows the optimisation of many-objective problems replacing the exact value of the hypervolume with an estimated value based on Monte Carlo simulations. This value represents the portion of the hypervolume to be actually attributed to each solution and used to determine the winner of each binary tournament in the mating selection process. Environmental selection is based on the minimum loss of hypervolume, discarding those solutions that contribute the least to the overall hypervolume as a way to promote convergence to the most promising solutions in terms of this indicator. Neither IBEA nor HyPE makes use of an archive of non-dominated solutions.

Finally, NSGA-III (Deb and Jain 2014) is a many-objective evolutionary algorithm which looks for the improvement of the performance of its predecessor, NSGA-II, when dealing with highly dimensional objective spaces. It is classified as a reference-point-based method, being characterised by the promotion of those individuals that are close to a set of reference points supplied by the user or uniformly generated by the algorithm. In NSGA-III, the crowding distance of NSGA-II is replaced by a new method, where each individual is associated to the closest reference point. In this way, diversity is preserved attending to the survival of, at least, one representative solution for each reference point. Apart from belonging to a different family of many-objective approaches, NSGA-III has shown promising results when coping with complex real-world combinatorial SBSE problems like software refactoring (Mkaouer et al. 2014).

2.3 Multiple Objective Approaches in SBSE

Multi-objective optimisation algorithms have been widely applied in SBSE (Yao 2013), since they have demonstrated their ability to reach a trade-off between objectives, as is often the case in Software Engineering. Focusing on search-based software design (Räihä 2010), i.e. the field within SBSE that proposes the application of metaheuristics to the design and improvement of different software artefacts, some classical MOEAs have already been used. Object-oriented analysis using SPEA2 (Bowman et al. 2010), software module clustering executing the Two-Archive algorithm (Praditwong et al. 2011), or the application of NSGA-II to code refactoring (Ouni et al. 2013) represent some examples of software design activities that have been solved from a multi-objective perspective.

Within the field of search-based software design, software architecture optimisation (Aleti et al. 2013) encompasses those works devoted to automatically specifying, re-designing, discovering or deploying software architectures. Recently, these tasks have also being addressed with multi-objective evolutionary approaches. Grunske (2006) presented a first analysis of the applicability of a multi-objective strategy facing several specific non-functional requirements to the software architecture refactoring problem. In this case, no implementation was proposed.

The generation of a set of alternative architectural specifications by means of a specific multi-objective genetic algorithm is proposed in Räihä et al. (2011). Here, a structural model, specified as a class diagram representing a low level architecture, is derived from its corresponding behavioural specification, modelled in terms of sequence diagrams, which are extracted from the software requirement specification and drawn as use cases. Simultaneously, 4 design metrics related to modifiability and efficiency are optimised in the process. NSGA-II is the algorithm chosen to perform the architectural deployment in Koziolek et al. (2013), looking for a trade-off between availability, performance and cost. Here, the optimisation problem consists of the optimal allocation of components into servers, including the server configuration and component selection.

Although NSGA-II stands out as the preferred algorithm in all the SBSE fields, some comparative studies have recently appeared to empirically decide the most appropriate MOEA for a given design problem. With reference to software architecture deployment, Li et al. (2011) compares SPEA2, NSGA-II and SMS-EMOEA (S Metric Selection Evolutionary Multiobjective Optimisation Algorithm) to obtain the best allocation of software components in terms of CPU utilization, cost and latency. Further, there are some recently proposed studies related to other problem domains. For example, in Zhang et al. (2013) the performance of several variants of NSGA-II were analysed for the priorisation of requirements, considering up to 5 objectives. In Assunção et al. (2014), the authors propose a comparison between SPEA2, NSGA-II and PAES (Pareto Archived Evolution Strategy) to deal with 2 and 4 objectives in the context of software testing. Only del Sagrado et al. (2015), Durillo et al. (2011), and Luna et al. (2014) seem to consider a greater variety of metaheuristics, even though they are actually applied to bi-objective problems, like the selection of requirements or the project scheduling problem.

Classical MOEAs still are the most used algorithms in SBSE for multi-objective problems, though some of these problems really seem to require more than 2 or 3 objectives to be optimised (Sayyad and Ammar 2013). Many-objective approaches have received less attention and their applicability has been only explored in a few proposals, mainly focusing on one specific algorithm for a very certain task. In Sayyad et al. (2013) IBEA was chosen to optimise 5 design characteristics of product lines. Kalboussi et al. (2013) presented a preference-based evolutionary method to deal with 7 objectives of a testing problem, whereas Mkaouer et al. (2014) has recently proposed an interesting solution to the software refactoring, using NSGA-III to simultaneously optimise 15 objectives.

3 Software Architecture Discovery

3.1 Conceptual Framework

The ISO Std. 42010 (ISO 2011b) defines the architecture of a software system as “the fundamental concepts or properties of a system in its environment embodied in its elements, relationships, and in the principles of its design and evolution”. A software architect is in charge of conceiving a system that meets the expected functional requirements, as well as the non-functional requirements like performance or maintainability, at a high level of abstraction. A commonly used approach for developing the software architecture specification is to follow a component-based design, which advocates for the construction of independent, interrelated software artefacts, mostly conceived in favour of reuse. In a component-based architecture, a component represents a block of highly cohesive functionality, whose interactions are specified by means of provided and required interfaces (Szyperski 2002). Connectors are links between provided and required interfaces, so they tie a component that supplies some services to another component demanding them.

The tasks related to the architectural design deal with aspects like the understanding, reuse, construction, evolution, analysis and management of the software system (Garlan 2000). From the beginning, an architectural specification is not only required to provide an abstract description of the system, but also to check its consistency and conformance to quality attributes. Nevertheless, the techniques currently used to evaluate software architectures are mostly based on the expert’s opinion (Dobrica and Niemela 2002). Actually, the definition of design metrics at the architectural level is still a great challenge, where the ISO Std. 25000 (ISO 2011a) can play an important role as it can serve as a framework to provide a precise guidance on how an architectural model should be assessed.

Ideally, the architecture specification of the system should be maintained throughout the entire development process, evolving as the software does. Frequently, uncontrolled changes in the requirements or the inclusion of a new functionality are directly reflected in the most concrete artefacts, like source code, leading to out-of-date, incoherent, and useless software documentation. As a result, understanding the original architecture becomes a complicated task (Ducasse and Pollet 2009). Although diverse methods have been proposed to recover the architectural specification, most of them start with the source code, meaning that the recovery process can only be conducted once the system implementation is completed. If code is not available, the discovery of the original architecture could only be done manually in accordance to the analysis models. As this process strongly relies on the expertise of software engineers, providing semi-automatic approaches could serve to assist them, especially in exploring different design alternatives in terms of the expected quality attributes.

3.2 The Search Problem

In Ramírez et al. (2015), the discovery of software architectures is presented as a search problem, where an analysis model represented in form of a class diagram (OMG 2010) saved in XMI format provides the input information in terms of classes and their relationships (see Fig. 1). In this case, the search problem is solved by a single-objective evolutionary algorithm, which is able to generate one single candidate solution representing a high-level architectural specification in terms of components, interfaces and connectors, and depicted by a component diagram. The discovery process is guided by the following rules:

-

A component is represented by a cohesive group of classes. The search algorithm should be able to discover the groups of classes that best integrate the different functionalities of the interacting system. Relationships between components are mapped into interacting interfaces. Two constraints should be satisfied in this regard: any class should belong to only one component, and the resulting architectural specification should not contain any empty element.

-

Once the classes are divided up among components, directed relationships between pairs of classes, each belonging to a different component, would represent a candidate interface. The types of the relationships are those defined by UML 2: associations, dependencies, aggregations, compositions and generalisations. Here, two exceptional cases could cause the interface not to be created: either the navigability of the relationship is unspecified, or the relationship represents a data abstraction modelled by a generalisation. Two additional constraints should be also considered: any component should define at least one interface, and mutually dependent components, i.e. a pair of components each one providing services to the other, should not be created.

-

A connector is a link between a pair of required-provided interfaces belonging to different components.

4 Optimisation Approach for the Discovery Process

According to Aleti et al. (2013), the proposed approach can be classified as an architecture optimisation method, where a search-based algorithm is applied on a number of architectural artefacts in order to get the best trade-off between certain quality attributes. More specifically, our evolutionary model falls into the category of multi-objective approaches (dimensionality category), making use of a high-level technique, i.e. metaheuristics, to find approximate solutions (optimisation strategy). Similarly to other methods, constraint handling techniques are applied, and UML 2 is used as a notation for the architectural specification. What distinguishes our approach from other existing architecture optimisation methods are those aspects related to the problem domain, i.e. quality attributes and decision variables (degrees of freedom). The discovery of software architectures was recently proposed as a search problem in Ramírez et al. (2015), being addressed from a single-objective perspective.

The architectural specification is not constructed from scratch during the search-based discovery process. Instead of being derived from requirements, the identification of the system functional blocks and their abstract specification are carried out from an earlier analysis model. A similar approach is the decomposition process proposed by Lutz (2001), where an evolutionary algorithm is used to find the best distribution of classes into modules. This proposal is founded on clustering, so the system structure is viewed as a graph and, besides, valuable analysis information, such as the presence of abstract artefacts or the existence of different types of relationships among the classes, is omitted during the search. Nonetheless, the proposed procedure is not intended to precisely simulate what the engineer would do to build the architecture, e.g. defining interacting interfaces. Similarly, software modularisation (Praditwong et al. 2011) deals with the organisation of code into packages and modules, working at a lower level of abstraction. Although the three optimisation approaches share the idea of searching the optimal organisation of some kind of software artefacts in other units of construction, each problem defines its own objectives and constraints, leading to several differences among them regarding their respective fitness landscapes.

The elements that constitute the evolutionary algorithm addressing the discovery process are introduced next. Firstly, the encoding and the initialisation are explained. Secondly, the objective functions and the genetic operator, composed by five different mutation procedures, are presented. Crossover is not applied because the evolutionary model presented here adopts the evolutionary programming paradigm.

4.1 Encoding and Initialisation

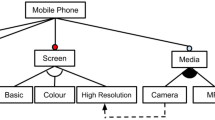

The individuals of the population represent design solutions in the form of a component-based software architecture. A simple genotype/phenotype mapping constitutes an essential element in favour of interpretability. Additionally, since no prior assumption of the architectural structure to be returned is made, a flexible encoding is also required in order to be able to manage architectural solutions having different number of components. Therefore, a tree structure is used to encode each architectural model, allowing a comprehensible decomposition of the involved software artefacts as established in the problem statement. As depicted in Fig. 2, shaded nodes stand for mandatory elements, whereas the rest of nodes vary from one individual to another, i.e. components and connectors and their internal composition, vary from one individual to another. Additional data about the input class diagram, such as the original relationships between classes and its abstractness are kept by the algorithm. Since this information does not vary from one individual to another, it is not explicitly represented in the genotype.

The initialisation process randomly generates component-based architectures for a given input analysis model. For each solution, a random number of components is chosen between a minimum and a maximum value that is configurable by the software architect. All the classes within the input class diagram are arbitrarily distributed among components. As a constraint none of these components can be empty. Interfaces and connectors are also set considering the existing relationships between classes belonging to different components. The obtained individuals do not need to strictly satisfy all the constraints related to the interaction between components, since they are not checked at this point in order to promote a quicker and more flexible initialisation step. Unfeasible individuals should be turned into feasible ones or be discarded as the evolution elapses, right after the application of the mutation operator and the selection mechanisms.

4.2 Evaluation Objectives

Nine software quality metrics are mapped into their respective objective functions, which serve to guide the evolutionary process. These metrics quantify diverse non-functional requirements related to the maintainability of the software system, which stands out as one of most important quality criteria for component-based software architectures. The software product quality model specified by the ISO Std. 25000 (ISO 2011a) defines maintainability as “the degree to which the software product can be modified”, where modifications “may include corrections, improvements or adaptation of the software to changes in the environment, and in requirements and functional specifications”. This standard document defines the sub-characteristics into which the maintainability can be decomposed to effectively measure a number of related design aspects. The specific maintainability terms applicable to the nature of the addressed problem are the following:

-

Modularity, which is defined as “the degree to which a system is composed of discrete components such that a change to one component has minimal impact on other components”.

-

Reusability, which establishes “the degree to which an asset can be used in more than one software system or in building other assets”.

-

Analysability, which determines “the degree to which the parts of the software to be modified can be identified”.

All these terms need to be translated into quantifiable metrics that will then be selectively combined to be simultaneously optimised by the evolutionary algorithm. On the basis of the ISO Std. 25000, a review of the literature was conducted to select those quality metrics that best suited an architectural specification and could be directly computed over the architectural solutions. These metrics are explained next.

Intra-modular Coupling Density (icd)

This metric was proposed by Gupta et al. (2012) and establishes a trade-off between cohesion and coupling, as shown in (1). icd is defined as the ratio between internal and external relations in the components. \(ci_{i}^{in}\) represents the number of interactions between classes inside the component i, whereas \(ci_{i}^{out}\) is the number of external relations, i.e. the number of candidate interfaces. Here, the ratio between \(ci_{i}^{in}\) and \(ci_{i}^{out}\) is weighted by the fraction of classes taking part in these relationships. Finally, icd is calculated for the overall architecture as the average of all the i c d i values, all these terms varying in [0,1].

External Relations Penalty (erp)

This metric is inspired by the Interface Violations metric (Krogmann 2010). erp computes the number of existing relationships between classes belonging to each possible pair of different components, i and j, that could not be defined as candidates interfaces (see (2)). More precisely, these relationships are those associations (as), aggregations (ag) and compositions (co) that do not explicitly specify their navigability, as well as generalisations (ge). A weighted sum (w x ) is calculated to emphasise those relations that strongly harm the overall quality of the architectural solution at the software engineer’s discretion.

Instability (ins)

The instability metric is intended to create highly independent components, leading to more separated functionality blocks. Although the concept of instability was originally defined by Martin (1994) to evaluate the stability of object-oriented designs, it can be also considered at the architectural level. In this way, the overall instability of the architecture is obtained as the average value of the instability of each single component (i n s i ) (see (3)). In order to calculate this metric, the terms a c i and e c i need to be first computed. a c i represents the afferent coupling of component i, i.e. the number of components that require its services, whereas e c i stands for the efferent coupling, i.e. the number of external components that provide their services to component i (Sant’Anna et al. 2007).

Encapsulation (enc)

This metric is inspired by the Data Access Metric proposed by Bansiya and Davis (2002), which serves to evaluate the encapsulation of one class within an object-oriented design. With the aim of adapting this concept to the architectural level, the encapsulation of each component i, e n c i , is computed as the ratio of its hidden classes, i.e. those that do not participate in any interaction with classes belonging to other components in the architecture, and the total number of contained classes. The overall encapsulation is calculated as the mean of the e n c i values (see (4)).

Critical Size (cs)

This metric, presented in Narasimhan and Hendradjaya (2007), intends to minimise the number of large components. To this end, cs counts the number of critical components with respect to their size (c c s i z e ), this value being returned by the s i z e() function and calculated as the percentage of classes comprised by the component in relation to the entire model. A threshold value is required to determine if the corresponding component would be critical or not (see (5)).

Critical Link (cl)

Similarly to cs, cl considers the number of critical components, c c l i n k , with respect to the number of their interactions within the architecture (Narasimhan and Hendradjaya 2007). As can be seen in (6), the links refer to the number of provided interfaces and a threshold should be established.

Groups/Components Ratio (gcr)

The gcr metric, presented in (7), has been adapted from the Component Packing Density (cpd) metric, defined by Narasimhan and Hendradjaya (2007). cpd determines the ratio between the number of constituents, i.e. those elements that compose the components, and the total number of components in the architecture. Here, the constituents are the groups of connected classes (# c g r o u p s) inside each component.

Abstractness (abs)

This measure (Krogmann 2010) is defined as the ratio of abstract classes inside a component, a b s i (see (8)). A global value of the abstractness distribution for a given architectural solution, abs, can be obtained by computing the mean value of abstractness for each component.

Component Balance (cb)

Reducing the presence of critical components is an important factor in software design, though it could lead to an excessive number of tiny components hampering the comprehension of the resulting system structure. cb is proposed in Bouwers et al. (2011) to quantify the balance among components, taking into consideration their respective sizes. It is based on two terms, the system breakdown (sb) and the component size uniformity (csu), as shown in (9), where csu is defined as the Gini Coefficient, i.e. a statistical measure of dispersion. The v o l u m e(c) function counts the number of classes per component c, C being the set of components in the architecture. csu varies in the range [0,1], the unity being its optimum value, meaning that all the components have the same size. sb represents a linear function in the range [0,1], which benefits the proximity to a number of components, μ, and penalises those architectures having just one or too many components (ω). Since the problem definition determines the minimum and maximum number of components to be comprised by the candidate architectures, ω should be set to this maximum value, and the theoretical minimum threshold, 1, should be replaced by the minimum number of components. Similarly, the μ value will be equal to the average value between these maximum and minimum values.

Table 1 summarises the main characteristics of the 9 objective functions, including whether they are to be minimised (min) or maximised (max), and a brief description of the design goals being promoted. Each metric is related to maintainability characteristics defined above, and the range of possible values is also provided. The symbol ∗ is used to represent that the upper bound of erp and gcr is dependent on the features of the problem instance, whilst n indicates the number of components within the architecture.

Table 2 shows the list of design goals being promoted (↑) or demoted (↓) by each metric. The symbol − represents that the corresponding metric has no influence on that goal. As can be seen, most of the trade-offs between metrics are related to the size of components and the way in which components would preferably interact with each other. For example, those metrics that look for decreasing the number of interactions between components, in terms of both interfaces and external relationships, i.e. coupling, tend to demote the internal component cohesion due to an excessive encapsulation of functionalities.

4.3 Mutation Operator

The execution of a crossover operator could produce a significant amount of unfeasible solutions, requiring the implementation of repair methods to satisfy those constraints that had been already checked during the initialisation process. It would be a time-consuming procedure, without which unfeasible individuals could be generated even late in the search, making the convergence to the feasible region of the search space considerably more difficult. Only a domain-specific mutation operator serves to explore the different architectural solutions throughout the evolutionary search (Ramírez et al. 2015). A weighted roulette is used to select one of the five proposed mutation procedures. More specifically, these procedures simulate those architectural transformations that could be applied to a given individual, i.e. the parent, to generate an offspring.

-

Add a component. A new component is added to the architecture. Creating such a new component requires extracting some classes from other components. More specifically, if a component would contain several unconnected groups of related classes, some of them could be randomly selected to create the new component. Hence, the ultimate aim of this procedure is to improve the distribution of functionalities among components.

-

Remove a component. This procedure implies that one component in the current architecture will be discarded. In this case, its inner artefacts are arbitrarily distributed among the remaining components. A component having a great number of dependencies with others is a preferable candidate to be removed.

-

Merge two components. This procedure involves the construction of a new component by taking two existing components from the current architecture. Since the aim of this procedure is to attempt to concentrate functionalities, the first selected component would be one with a large number of external dependencies. Then, a second component is selected at random.

-

Split a component. This procedure is designed with the aim of avoiding excessively large components. One component is randomly selected and split into two components, forcing an interaction between both of them to remain in the form of a new interface. More precisely, an internal relationship is chosen at random to act as the candidate interface. Next, each inner class is assigned to one component or another depending on how it relates to the interface ending, i.e. classes providing and requiring the service are separated.

-

Move a class. This procedure executes the simple movement of one class from one component to another. Notice that this procedure does not apply any change in the structure of the solution, so the transformation is done completely at random.

The roulette is dynamically built for each parent, only considering those applicable procedures in each particular case. Thus, if the initial individual is a feasible solution, the mutation procedure will always return a solution without duplicate classes or empty components. The minimum and maximum number of components within the architecture is also preserved. For example, the split a component operation cannot be executed on an individual representing an architecture that already contains the maximum number of components. The weights of the roulette can be configured by the software architect. Finally, notice that the existing constraints related to interactions between components have to be considered here. For example, if the obtained individual represents an unfeasible solution, the parent is mutated again until a feasible solution is reached or a maximum number of attempts is exceeded. In the latter situation, the unfeasible mutant individual could be considered as a valid offspring depending on a probabilistic criterion. In this sense, a dynamic threshold is set at the first generation, and will be decreased along the evolution. If a randomly generated number exceeds the current threshold, the unfeasible individual is added to the offspring pool. On the contrary, the original parent takes his position in the set of descendants.

As a way to illustrate how the mutation operator works, Fig. 3 shows the result of applying the remove component procedure over the solution presented Fig. 2. As can be observed, the second component (C o m p o n e n t2) within the original individual has been removed, and its classes randomly reallocated. In this example, class E is moved to C o m p o n e n t1, whilst class F is reallocated to C o m p o n e n t3. As a result, since classes A and E are now part of the same component, the existing interaction between them becomes internal. No more interfaces are created to link classes E and F, since the navigability of their composition is unspecified.

5 Experimental Framework

All the algorithms and the experimental framework have been coded in Java. Additionally, some public Java libraries have been used to facilitate the implementation. SDMetrics Open CoreFootnote 1 provides support for parsing XMI files, allowing the extraction of information from the analysis models created with the MagicDraw tool.Footnote 2 Preprocessing tasks and internal data structures have been implemented using the Datapro4j library.Footnote 3 The evolutionary algorithms have been written using JCLEC (Ventura et al. 2008). Experiments were run on a HPC cluster of 8 compute nodes with Rocks cluster 6.1 x64 Linux distribution, where each node comprises two Intel Xeon E5645 CPUs with 6 cores at 2.4 GHz and 24 GB DDR memory.

In this section, the comparison methodology is presented, including the performance measures used to evaluate the evolutionary algorithms. The parametrisation and set-up, as well as the problem instances used, are also introduced next.

5.1 Methodology

To assess the validity of the experiments, every algorithm has been executed 30 times with different random seeds. The 9 proposed design metrics have been combined in groups of 2, 4, 6, 8 and 9 objectives, which make a total of 256 combinations. After executing all these combinations, it is possible to analyse how the selection of a particular subset of metrics, including its cardinality, can affect both the evolutionary performance and the type of obtained architectural solutions.

Regarding the comparison study from an evolutionary perspective, two quality indicators, hypervolume (HV) and spacing (S) have been considered (Coello Coello et al. 2007). HV calculates the hyper-area covered by the Pareto set, whereas S measures the spread of the front. It should be noted that HV requires an objective space in the range [0,1], so the normalisation approach proposed in Luna et al. (2014) is applied after executing the algorithms. More specifically, a reference Pareto front (RPF) is built up considering all the solutions found after executing the 8 algorithms for each problem instance. Then, the objective values are normalised taking as reference the worst value and the best value achieved by any solution within the RPF.

Statistical tests (Arcuri and Briand 2011; Derrac et al. 2011) have served to compare the performance of the algorithms under study. The Friedman test, a non-parametric test that allows comparing multiple algorithms over different problem instances, is executed first. At a specific level of significance, 1−α, this test establishes the null hypothesis, H 0, denoting that all the algorithms perform equally well. If H 0 is rejected, i.e. significant differences are detected, a post-hoc procedure has to be carried out in order to properly determine those statistical differences between the considered algorithms. Here, the Holm test is performed when H 0 cannot be accepted, a control algorithm being compared to the rest of algorithms. Additionally, the Cliff’s Delta test has been executed to assess the magnitude of the improvements using an effect size measurement (Arcuri and Briand 2011). This test performs pairwise comparisons to determine whether such an improvement should be considered negligible, small, medium or large on the basis of specific thresholds (Romano et al. 2006).

Some other measures have been compiled as well in order to precisely state the discussion of results beyond the evolutionary performance. Hence, aspects like the execution time, the size of the Pareto set, or the set of non-dominated solutions are also scrutinised to properly analyse the behaviour of each algorithm concerning the software architect’s expectations.

5.2 Parameter Set-up

Before entering into the details of the value assignment to specific parameters of each algorithm, there are some related aspects to be addressed respecting constraint handling and mating selection. On the one hand, notice that constraint handling techniques need to be applied in different ways. A first scenario refers to the way invalid solutions are evaluated. In the case of the algorithms SPEA2, MOEA/D, GrEA, IBEA and HypE, the worst possible fitness value is assigned to every unfeasible individual. A constrained version of NSGA-II was already presented in the original work by Deb et al. (2002), in which feasible solutions were promoted according to a specific comparison method. The same approach has been adopted for 𝜖-MOEA, since this algorithm does not declare any fitness function. These two mechanisms guarantee that unfeasible individuals will not prevail over feasible individuals in any selection process.

On the other hand, regarding the mating selection phase in the case of MOEA/D, it usually takes two individuals from the neighbourhood of each member of the population to act as parents and generates a unique descendant. However, given that only one mutator operator is executed, just one neighbour is selected to generate the offspring.

The specific configuration parameters of the selected algorithms have been set up according to their respective authors, whilst the domain-specific configuration is identical in all cases. If required, some preliminary experiments have been performed to properly adapt their parameters to the specific needs of the problem domain, e.g. the hypercube lengths in 𝜖-MOEA. The final parameter set-up is shown in Table 3. Notice that different values are set for the population size and the maximum number of evaluations to deal with the growing complexity of the optimisation problem when increasing the number of objectives from 2 to 4, 6, 8 and 9. Regarding the population size, it should be noted that MOEA/D requires a uniformly distributed set of weighted vectors, whose size is controlled by the number of objectives and the parameter H. Therefore, the number of weighted vectors obtained for each number of combined objectives has determined the population size for the rest of algorithms. For the remaining parameters, they are related to the problem domain, including the minimum and maximum number of components to be considered for the architecture, the parameters required to calculate the objective functions (see Section 4.2) and the weight values related to the mutation procedures (see Section 4.3).

5.3 Problem Instances

The experimental study has been carried out over 10 diverse problem instances, each one representing a system design with different complexity in terms of both the number of classes and the interoperability among them. Table 4 provides the complete list of their respective characteristics. The number and types of relationships are enumerated in the column #Relationships, where the different types are broken down in turn as prescribed by UML 2: associations (As), dependencies (De), aggregations (Ag), compositions (Co) and generalisations (Ge). The last column (#Interfaces) indicates the number of candidate interfaces, i.e. the number those relationships whose navigability has been explicitly specified.

Most of these instances have been taken from actual real-world software systems working on diverse application domains. Only Aqualush Footnote 4 is a benchmark used for educational purposes. Some of the software specifications can be accessed from the Java Open Source Code ProjectFootnote 5, whereas Datapro4j, iCal4j Footnote 6 and Logisim Footnote 7 are available at their respective websites.

6 Analysis of Results

In this section, we analyse the results from the perspective of the evolutionary performance. More specifically, we focus on the scalability of the selected algorithms regarding the two quality indicators mentioned in Section 5.1, hypervolume and spacing.

6.1 Analysis of 2- to 4-objective Problems

Table 5 shows the overall ranking obtained by the Friedman test for each selected algorithm in terms of hypervolume. A value represents the arithmetic mean of the ranking positions determined considering all the possible combinations for the given number of objectives. Notice that the lowest values are the best. Their corresponding standard deviation is shown accordingly. Similarly, Table 6 shows the summary of the results for the spacing indicator. Complementary information about the results is reported in the Appendix.

Regarding the HV for 2-objective problems, NSGA-II, 𝜖-MOEA and HypE achieve the best ranking positions, obtaining significant differences with the rest of algorithms. On the contrary, SPEA2 and MOEA/D show a better behaviour in terms of the spacing indicator. IBEA and NSGA-III achieve good spacing values only for certain combinations of objectives, which is reflected in a higher standard deviation. Notice that simultaneously having good scores in terms of both hypervolume and spacing becomes a great challenge. Even though a multi-objective evolutionary algorithm tries to find a compromise between the convergence towards a set of non-dominated solutions and the spread of the resulting front, its specific characteristics could encourage the promotion of one criterion at the expense of the other. Specific many-objective approaches do not exhibit any superiority yet, obtaining the best ranking positions for only a few problems.

As for the combinations of metrics, it can be noted that their respective optimisation problems may imply several differences in the performance of the algorithms. Since the software architect could be interested in different aspects of the same quality criteria, e.g. several design metrics are related to modularity, the selection of the specific metrics is also an important decision with respect to the expected architectural solutions. For those problems where erp, gcr, cs or cl are jointly optimised, all the algorithms have achieved similar values in terms of both quality indicators. For example, the selection of erp and cl ends in a tie between SPEA2, NSGA-II, MOEA/D and 𝜖-MOEA. Moreover, there are no statistical differences when comparing these algorithms to GrEA and HypE, because all the algorithms obtain either a unique solution or an equivalent Pareto set. In the former case, every approach finds an optimal solution, since the objectives are not strongly opposed. In the latter case, all the algorithms have some trouble in avoiding local optima. In constrast, the combination of erp and cl metrics with icd, abs, cb or enc implies the formulation of a more complex optimisation problem, where the dominance between solutions is harder to achieve. Therefore, the resulting Pareto set will be comprised of more diverse solutions, where both objectives are satisfied in different ways.

As the number of objectives increases up to four, differences between them become more evident. It is worth noticing that SPEA2 and NSGA-II are still the best algorithms regarding spacing and hypervolume, respectively, though their rankings for the other indicator have increased. As can be seen in Tables 5 and 6, 𝜖-MOEA and NSGA-III have improved their overall rankings for both indicators, whereas the rest of algorithms do not experience great changes or their performance even decreases, as in the case of IBEA and GrEA.

MOEA/D and 𝜖-MOEA obtain the best ranking for some specific combinations of objectives. For example, MOEA/D has shown good spacing values when cs, cl or ins are considered. Similarly, 𝜖-MOEA seems to optimise certain objectives, e.g. icd, ins, abs or cb, more accurately than NSGA-II in terms of HV. These 4 objectives promote the functional distribution among components. On the contrary, if metrics like erp, gcr, enc and cl are selected to define the search problem, the number of components within the architectural solution tends to be minimised as a way to reduce their interactions. In general, 𝜖-MOEA provides a better performance than NSGA-II when the optimisation problem is more complex from a design perspective, as the latter tends to promote the discovery of an excessively monolithic architecture.

Table 7 compiles the outcomes obtained by the different algorithms in terms of their performance when a low number of objecives is considered to address the problem under study.

6.2 Analysis of 6- to 9-objective Problems

When algorithms optimising 6 or more objectives are compared, the Friedman test returns significant differences for a large number of combinations, many of them including at least one objective that promotes a design goal that tends to be demoted by the rest of objectives. Some initial tendencies observed for 4-objective optimisation problems are now clearly brought out. To illustrate this point, Fig. 4 depicts the percentage of combinations for which each algorithm reaches the best ranking for hypervolume and spacing, respectively. As can be noted, as the number of objectives increases, 𝜖-MOEA emerges as the best algorithm for HV, outperforming NSGA-II, and SPEA2 is the best alternative in terms of spacing, especially when dealing with more than 6 objectives.

As can be seen from Tables 5 and 6, SPEA2, NSGA-II, GrEA and IBEA report worse HV ranking values than those obtained for 2 and 4 objectives. On the contrary, MOEA/D, 𝜖-MOEA, HypE and NSGA-III have experienced an improvement regarding this indicator, though 𝜖-MOEA and HypE obtain competitive values when dealing with 8 and 9 objectives.

Additionally, the Cliff’s Delta test assesses that the pairwise comparisons between the algorithms are statistically significant in most objective combinations Footnote 8. For the 9-objective problem, a total of 56 test executions per quality indicator were required. In this case, differences in the magnitude of the corresponding indicator have been catalogued as large in 49 and 48 out of the total number of executions made for HV and S, respectively. On the one hand, the effect size obtained when comparing 𝜖-MOEA in terms of HV against any other algorithm is always greater than 0.7. On the other hand, regarding SPEA2, the test reports an effect size greater than 0.6 for all its comparisons regarding S, except when facing NSGA-II.

Concerning the performance of all the algorithms with more than 4 objectives, the number of objectives has a deeper impact than the set of selected metrics. As can be observed from the experimental results, the ranking values obtained by each algorithm are very similar regardless of the metric subset being optimised. A progressively decrease of the standard deviation is observed in Tables 4 and 5, whereas the number of different algorithms achieving the top ranking position is lower for 4 or 6 objectives than for 2 (see Fig. 4), even when a larger number of objective combinations was generated. Notice that the specific subset of metrics, as well as the need for a different number of objectives, relies on the software architect’s judgement. A detailed analysis on the influence of these aspects is provided in Section 7.2.

Some additional remarks can be pointed out with respect to the joint optimisation of 9 objectives. For instance, Fig. 5 shows an example of the Pareto front obtained by each algorithm for a representative problem instance, Aqualush. Notice that the objective space is normalised and all the objectives must be maximised. For the sake of readability, a maximum number of 50 solutions is depicted. The solutions have been randomly selected from the set of all the solutions generated in different executions. Those solutions reaching the global minimum and maximum value of each objective are also included in order to identify the worst and best values obtained.

If we look at how lines are distributed, it can be seen that some algorithms tend to focus their search on specific values of particular objectives, while others can reach a wider range of trade-offs among them. More specifically, SPEA2 is unable to reach a proper trade-off among all the metrics, icd, abs and cb usually being optimised worse than erp, gcr, enc and cl. On the contrary, NSGA-II shows great ability to simultaneously deal with all the objectives. In this case, a wide spectrum of solutions is returned, some of them being are able to reach the best values for several objectives. Regarding MOEA/D, most of the obtained solutions lie on intermediate ranges, gcr, cs and cl being frequently promoted to the detriment of icd and abs. This algorithm is able to obtain great diversity of solutions in terms of erp, enc and cb. Focusing on 𝜖-MOEA, it can reach competitive values for all the objectives. Its ability to provide several alternatives for icd, enc and cb is noteworthy, whereas good values are frequently reached for gcr and cl. GrEA and HypE behave similarly. Both algorithms experience difficulties to optimise abs and cb, getting only low or medium values. Besides, some solutions tend to concentrate on certain values of the design metrics, generating very similar solutions. HypE converges disproportionally towards erp, gcr and cl. Solutions returned by IBEA show very poor values for all the objectives. Even cb and cs are usually neutralised in benefit of other metrics, causing the returned solutions to be comprised by components completely unbalanced in size. Finally, NSGA-III has had problems to reach high values of icd and abs, although it is able to provide a wide range of values for erp, gcr, cs and enc.

Table 8 shows the most relevant aspects related to the behaviour of the different algorithms when dealing with highly dimensional objective spaces.

7 Discussion of Results

Due to the particular nature of the problem, further discussion of the outcomes is still required, not only from the evolutionary perspective, but also in terms of its applicability in tool support and the software architect expectations. Therefore, there are other additional aspects from the experimentation to be observed that can provide relevant information about both the architectural specification and the decision support process. With this aim, the number of non-dominated solutions, the execution time or the dependencies among metrics have been studied. These factors directly affect the number of solutions given to the software architect or the sort of returned architectural solutions.

7.1 On Applicability in Tool Support

Having a wide variety of alternative solutions to choose from is not an ideal scenario for the software architect, even more so if only a few of them could serve to meet his expectations in terms of diversity. In such a case, the number of solutions could be useless to the expert, who would be unable to handle them all during the decision process. This matter is likely to require some further external post-processing to select the most representative solutions. Figure 6 shows the distribution of returned solutions per number of objectives. More precisely, the Y axis represents the mean number of solutions found by each algorithm, i.e. the number of non-dominated solutions composing the Pareto set, considering all the possible combinations of design metrics over all the problem instances. As can be seen, the number of objectives has a clear impact on the number of solutions returned. It can be noted that SPEA2 establishes a fixed size for the external population, whereas MOEA/D and 𝜖-MOEA do not constrain the size of their archive of solutions. Since NSGA-II, GrEA, IBEA, HypE and NSGA-III do not have any external population, the maximum number of solutions is given by the corresponding population size (see Table 3).

If 2 or 4 objectives are considered, SPEA2 and NSGA-II usually obtain a number of solutions close to the maximum allowed. These algorithms also present some variability in the size of the Pareto set. NSGA-II even shows some difficulties to control such a variability. Having to optimise 6 or more objectives, SPEA2 is unable to fill its external archive with only non-dominated solutions, facing difficulties to find interesting architectures. Finding a more representative set of solutions than the one provided by SPEA2 could benefit the decision making process. NSGA-II always reaches the maximum limit allowed with difficulties to reject equivalent solutions along the search process. MOEA/D provides an excessive number of final solutions for any design problem. Besides, preserving the variety of these solutions is not guaranteed since the maximum number of solutions associated to each search direction is not restricted. The same behaviour could be expected for 𝜖-MOEA, but the use of the 𝜖-dominance criteria to manage the addition of solutions into the archive clearly serves to implicitly limit its size.

Depending on the combination of objectives, GrEA, IBEA, HypE and NSGA-III can generate as many solutions as the prefixed maximum. As the number of objectives increases, IBEA and GrEA return a lower number of solutions. In contrast, HypE and NSGA-III tend to get closer to their respective maximum limit. In this sense, many-objective approaches provide a better control than NSGA-II and MOEA/D. Nevertheless, only SPEA2 permits setting up the estimated number of solutions that should be returned after the search process. Hence, it is a useful mechanism for the expert, who might be interested in strictly determining the number of solutions that he really wants to check. Notice that GrEA, SPEA2, IBEA and 𝜖-MOEA might finish the search without generating any architectural solutions that fulfil the constraints (see Fig. 6). These algorithms have some difficulties in dealing with invalid solutions when optimising the most complex problem instances and certain combinations of objectives. Setting a larger number of iterations would be a first attempt to address this situation. The ability to handle constraints is a relevant factor with respect to its generic applicability to the decision support process.

Another important factor that may affect the usability of search-based approaches is the execution time. Figure 7 shows the scalability of the average execution time of each algorithm in relation to the number of objectives. It can be observed that dealing with many design metrics influences SPEA2, GrEA, 𝜖-MOEA and HypE. The density estimator based on clustering proposed by SPEA2, the computation of distance metrics in GrEA, and the hypervolume estimation required by HypE are operations that clearly affect the performance of these algorithms. Besides, SPEA2, GrEA and 𝜖-MOEA present some difficulties when removing invalid solutions because they have to execute a large number of attempts during the mutation phase, especially when dealing with the most complex problem instances. Since this kind of instances tends to comprise more interconnected classes, identifying independent components becomes a harder work. In this sense, software engineers should consider if getting high quality solutions could make up for the amount of time spent on the process. On the one hand, 𝜖-MOEA seems to be a better choice than HypE or NSGA-II if the architect is mostly interested in high quality solutions. On the other hand, NSGA-II might provide good enough solutions for most of the objective combinations, its execution time being steady and suitable. It is worth mentioning that the computational cost of NSGA-III has proven to be quite similar to NSGA-II, concluding that its execution time has not been significantly influenced by the adjustments proposed to deal with many-objective optimisation.

7.2 On the Influence of Metrics

Analysing whether two metrics, i.e. objectives, are highly competitive is an important factor to properly face the multi-objective optimisation, since it might determine much of the complexity of problem to be solved. Regarding the problem under study, this could lead to situations in which architects might presume beforehand that some specific metrics are closely related, mostly because they apparently arrange the architectural design in a similar way.