Abstract

Because of their complexity, taking agent-based models to the data is still an unresolved issue. In this paper we propose a method to calibrate the model parameters on real data that is based on a novel global sensitivity analysis procedure. The innovative feature of this procedure is that it makes it possible to estimate regression meta-models for the relationship between model parameters and model output without resorting to Monte Carlo simulations to eliminate the effect of randomness. This is achieved by randomly sampling both the parameters and the seed of the random number generator at the same time. If correctly specified, the meta-models can be used directly to consistently estimate the average response of the ABM to any parameter vector input by the modeler and, as a consequence, also the distance between real and simulated data. The advantage of the proposed method is twofold: it is very parsimonious in terms of computational time, and it is relatively easy to implement, being based on elementary econometric techniques.


1 Introduction

Unlike mainstream economic models, agent-based models (ABMs) still pose an unresolved problem when it comes to taking them to the data.

Generally speaking, agent-based (also called ‘multi-agent’) models are analytical frameworks used to describe and analyze complex systems populated by many heterogeneous and interacting units (i.e. the agents). The application of ABMs to economics has given birth to so-called agent-based computational economics (ACE), which is essentially the use of computer simulations to study evolving artificial economies composed of many autonomous interacting agents, such as consumers and firms (Judd and Tesfatsion 2006). Applications are countless and range from the study of single markets, such as the financial market and the labor market, to the study of an entire multi-market economy.

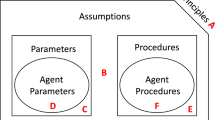

Formally speaking, ABMs are systems of stochastic difference equations that in general cannot be solved analytically because of their complexity. Once designed, therefore, an AB model must be coded as software, initialized and then simulated on a computer for a given number of time steps. From the simulations the modeler then obtains statistics that can be analyzed in order to assess the model’s properties, which in general will depend on the initial conditions, on the parameter values and on the stream of random numbers used during the simulation (for a deeper theoretical treatment see e.g. Delli Gatti et al. 2011, 2018).

An important phase of the model analysis is its empirical validation, that is, the comparison of the model with empirical data aimed at evaluating its degree of realism (Fagiolo et al. 2007). There are essentially three types of validation (Judd and Tesfatsion 2006; Bianchi et al. 2007):

1. Input or ex ante validation, ensuring that the model characteristics exogenously input by the modeler (such as agents’ behavioral equations, initial conditions, random-shock realizations, etc.) are empirically meaningful and broadly consistent with the real system being studied through the model.

2. Descriptive output validation, assessing how well the model output matches the properties of pre-selected empirical data.

3. Predictive output validation, assessing how well the model output is able to forecast properties of new data (out-of-sample forecasting).

The most common procedure is descriptive output validation, which is carried out by visually and statistically evaluating the model’s ability to replicate (qualitatively and/or quantitatively) some stylized facts, such as firm-size distributions (as in Bianchi et al. 2007, 2008) or macroeconomic co-movements (as in Delli Gatti et al. 2011). More advanced methods to validate ABMs have recently been put forth. For example, Guerini and Moneta (2017) propose comparing the causal structures implied by a structural VAR model estimated on both real and simulated data, while Lamperti (2018) introduces a new measure of divergence to quantify the similarity between the dynamics of real-world time series and the dynamics of model-generated time series.

Related and instrumental to validation is calibration (also called ‘estimation’), which is the process of choosing particular values for the model parameters in order to make the model output as realistic as possible.[1] Since ABMs are in general complicated models that cannot be solved analytically, their parameters cannot be calibrated through direct estimation, as happens for regression models. Hence, ABMs are commonly calibrated by indirect methods such as indirect inference (Gourieroux et al. 1993) and the method of simulated moments (McFadden 1989), which are special cases of the simulated minimum distance approach (Grazzini and Richiardi 2015).

Many different variants of calibration through indirect methods exist in the ACE literature, including, among others,[2] those proposed by Gilli and Winker (2003), Bianchi et al. (2007, 2008), Fabretti (2013), Recchioni et al. (2015), Grazzini and Richiardi (2015) and Lamperti (2018). In essence, however, indirect methods always consist of three phases (Richiardi 2018):

1. Choosing a set of statistics \(S=(S_1,S_2,\ldots ,S_q)'\) computable both on real data (\(S_r\)) and on simulated data (\(S_m\));[3]

2. Choosing a metric \(d(\cdot )\) to measure the distance between \(S_r\) and \(S_m\), that is, the degree of realism of the model;

3. Choosing, by simulation, the model parameters that minimize the distance \(d(S_r,S_m)\).

All three phases of the above scheme present their own issues, but the most problematic one from the computational point of view is the minimization procedure. For most ABMs, in fact, the distance cannot be minimized by solving a system of first-order conditions. Calibration would therefore require, in principle, a point-by-point exploration of the parameter space of the ABM aimed at calculating by simulation the value of the objective function \(d(S_r,S_m)\). In this way the modeler can find the “best” parameter vector, that is, the one associated with the smallest distance. If the model is simple, a complete exploration of the parameter space can be done at reasonable computational cost. For most ABMs, however, this is hardly the case, as two difficulties arise.

A first difficulty encountered during this exploration is created by the typical over-parametrization of ABMs and the ensuing high dimensionality of the parameter space. For example, if a model has just 10 relevant parameters and each parameter can take on 10 different values (a rather simplifying assumption indeed), then the parameter space is composed of \(10^{10}\) parameter vectors.

A second difficulty further complicates the search for the best parameter vector: the interference of randomness with the model output. Given the set of statistics S, the statistic \(S_m\) computed on the output of a single simulation of the model will in general be a function of the model parameters \(\theta \) belonging to the parameter space \(\Theta \), of the initial conditions \(I_0\) and of the random numbers r. Ignoring the initial conditions for simplicity (which can be done if the model is ergodic), we can write

\[ S_m = S_m(\theta ,r). \tag{1} \]

Hence, the distance \(d(\cdot )\) between real and simulated data will also be a function of the parameters and the random numbers:

\[ d = d\big(S_r,S_m(\theta ,r)\big). \tag{2} \]

Definition (2) highlights the second difficulty afflicting calibration, that is, the influence of the random numbers r on the magnitude of the distance d. The typical way of getting rid of this influence is to perform Monte Carlo simulations. Basically, the model is simulated k times with the same parameter vector but with different streams of random numbers \(r_1,\ldots ,r_k\), obtaining k statistics \(S_m(\theta ,r_j)\), so that the Monte Carlo average \({\hat{S}}_m(\theta )=k^{-1}\sum _{j=1}^{k} S_m(\theta ,r_j)\) can be taken. Finally, the distance d is computed using \({\hat{S}}_m(\theta )\), from which the influence of the random numbers has, ideally, been averaged out.
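To make the averaging step concrete, here is a minimal Python sketch under our own assumptions: `toy_statistic` is a hypothetical stand-in for one ABM run (an AR(1) process whose long-run mean equals a scalar \(\theta\)), the seed fully determines the stream r, and \(S_m\) is the time average of the simulated series. It is not the model used in this paper.

```python
import numpy as np


def toy_statistic(theta: float, seed: int, T: int = 500) -> float:
    """Hypothetical stand-in for S_m(theta, r): one 'run' of T periods.

    The stream of random numbers r is fully determined by `seed`.
    """
    rng = np.random.default_rng(seed)
    shocks = rng.standard_normal(T)
    x = np.empty(T)
    x[0] = theta
    for t in range(1, T):
        # AR(1) fluctuating around theta, so E[S_m | theta] = theta.
        x[t] = theta + 0.5 * (x[t - 1] - theta) + shocks[t]
    return float(x.mean())


def monte_carlo_average(theta: float, k: int, base_seed: int = 0) -> float:
    """S_hat_m(theta) = k^{-1} * sum_j S_m(theta, r_j) over k distinct seeds."""
    seeds = np.random.SeedSequence(base_seed).generate_state(k)
    return float(np.mean([toy_statistic(theta, int(s)) for s in seeds]))


single_run = toy_statistic(2.0, seed=1)   # noisy: depends on the stream r
mc_avg = monte_carlo_average(2.0, k=50)   # noise largely averaged out
```

Note that the Monte Carlo average costs k runs for a single \(\theta\); this per-vector cost is what multiplies with the size of the parameter space.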

Clearly, computing Monte Carlo averages for each parameter vector \(\theta \in \Theta \) is computationally demanding, if feasible at all (in the example above it would require \(k\cdot 10^{10}\) simulations of the model). Hence, in order to reduce the computational burden, approaches to calibration have been developed that explore only suitable subsets of the parameter space, where the subset can be selected endogenously via optimization procedures based on search algorithms (Nocedal and Wright 1999 is a good reference to the vast literature on this topic). Because of the typical complexity of ABMs, however, a major drawback of search algorithms is that they can easily get stuck in local solutions. In other words, the solution depends on the starting point of the search. Hence, this approach may still require many simulations of the model, because the researcher needs to repeat the search from different starting points. For instance, Recchioni et al. (2015) is a recent application of a gradient-based search algorithm to the calibration of a financial agent-based model requiring thousands of simulations. Another strategy adopted to avoid a complete exploration of the parameter space is to employ genetic algorithms, as for instance in Fabretti (2013). Genetic algorithms, however, are also computationally demanding.

A different approach to calibration that avoids simulating a computational model (including ABMs) over the whole parameter space is based on the concept of meta-modeling. Meta-modeling, mainly used in sensitivity analysis, is the process of approximating an unknown, complicated relationship between input factors (the parameters \(\theta \)) and model output (the statistics \(S_m\)) with a simpler one of known shape (Saltelli et al. 2008, ch. 5). In this paper we present a novel calibration method based on the global sensitivity analysis procedure proposed by Chen and Desiderio (2018, 2020), which belongs precisely to the meta-modeling approach. Our method requires the estimation of an auxiliary regression meta-model (the model of a model, also called ‘emulator’) for the statistics of interest, obtained by running the ABM only for some parameter vectors. Once estimated, the meta-model can be used to calculate approximated statistics \({\hat{S}}_m\) for the whole parameter space without further simulations of the original computational model. This approach bears a strong resemblance to indirect methods and consists of four phases:

1. Choosing a set of statistics \(S=(S_1,S_2,\ldots ,S_q)'\) computable both on real data (\(S_r\)) and on simulated data (\(S_m\));

2. Choosing and estimating a meta-model \(S_m=MM(\theta )\) for the relationship between the model parameters \(\theta \) and \(S_m\);

3. Choosing a metric \(d(\cdot )\) to measure the distance between \(S_r\) and the fitted values \({\hat{S}}_m\) of the meta-model;

4. Choosing those model parameters that minimize the distance \(d(S_r,{\hat{S}}_m)\).

The advantage of such a method is that the ABM is simulated only for a limited number of parameter vectors, in phase 2, in order to estimate the meta-model. Calibration procedures based on meta-modeling such as ours are therefore intended to increase the speed of the calibration process at the expense of its accuracy, because the statistics \(S_m\) are approximated by the fitted values of the meta-model. Hence, calibration through meta-modeling makes particular sense for computationally demanding large-scale ABMs, in which case some accuracy may well be sacrificed in exchange for a gain in speed.

To our knowledge, there are only three examples of calibration through meta-modeling in agent-based economics: Salle and Yildizoglu (2014), Barde and van der Hoog (2017) and Bargigli et al. (2020), all basically sharing the same methodology. The novelty introduced by our method, and also its essential difference from the one used in the above-mentioned works, is its sampling strategy for the parameter vectors, which eliminates the noise caused by the random numbers without resorting to Monte Carlo replications. In this way the computational burden is greatly reduced. Moreover, our sampling strategy is very simple and can in principle be applied to any other technique based on meta-modeling. Another important feature of the method is its simplicity of implementation, as it is based on simple econometric techniques that are within the average economist’s reach.

The paper continues as follows: the calibration method is explained in Sect. 2, and in Sect. 3 it is applied, as an example, to calibrate two parameters of the ABM presented in Chen and Desiderio (2018, 2020). Section 4 concludes.

2 The Calibration Method

Let us suppose that we have already chosen the set of statistics S and the metric \(d(\cdot )\), and that the statistic \(S_m\) computed on the output of a single simulation of the agent-based model is a function of the model parameters \(\theta \in \Theta \) and random numbers r. Hence, our goal is to find the vector \(\theta ^*\) that minimizes the distance given by Eq. (2).

Doing this first requires a crucial intermediate step, namely the estimation of an auxiliary regression meta-model:

\[ S_m = f(\theta ;\beta ) + u, \tag{3} \]

where \(f(\cdot )\) is a linear regression function depending on the meta-parameters \(\beta \), which measure the partial effect of the model parameters \(\theta \) on \(S_m\).[4]

In principle, the regression error u may depend both on the stream of random numbers and on the model parameters. For simplicity, we assume that the error does not depend on \(\theta \). This assumption is equivalent to assuming that the meta-model (3) is correctly specified, and it can be tested using a regression specification error test such as Ramsey’s RESET. If the hypothesis of correct specification is rejected, model (3) can always be augmented with non-linear functions of \(\theta \) until a satisfactory meta-model is found. Hence, in the following we suppose that the error u depends only on the stream of random numbers r:

\[ S_m = f(\theta ;\beta ) + u(r). \tag{4} \]
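A minimal sketch of Ramsey’s RESET in its common F-test form, written with NumPy only: regress \(S_m\) on the meta-model regressors, add powers of the fitted values, and test their joint significance. The simulated data, the choice of powers 2 and 3, and the function names are our own illustrative assumptions; a p-value can be obtained from the F distribution, e.g. with `scipy.stats.f.sf(f_stat, q, df_denom)`.

```python
import numpy as np


def ols(X, y):
    """OLS coefficients and residuals via least squares."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta, y - X @ beta


def reset_test(X, y, max_power=3):
    """Ramsey RESET F-statistic: add fitted**2 ... fitted**max_power to X."""
    n, k = X.shape
    beta, resid_r = ols(X, y)
    fitted = X @ beta
    powers = np.column_stack([fitted**p for p in range(2, max_power + 1)])
    _, resid_u = ols(np.column_stack([X, powers]), y)
    q = powers.shape[1]
    rss_r, rss_u = resid_r @ resid_r, resid_u @ resid_u
    df_denom = n - k - q
    f_stat = ((rss_r - rss_u) / q) / (rss_u / df_denom)
    return f_stat, q, df_denom


# Illustrative data: the true average response is quadratic in theta.
rng = np.random.default_rng(42)
theta = rng.uniform(0.0, 1.0, size=200)
s_m = 1.0 + 2.0 * theta + 3.0 * theta**2 + rng.normal(0.0, 0.1, size=200)

X_linear = np.column_stack([np.ones_like(theta), theta])  # misspecified
X_quadratic = np.column_stack([X_linear, theta**2])       # correctly specified
f_linear, _, _ = reset_test(X_linear, s_m)
f_quadratic, _, _ = reset_test(X_quadratic, s_m)
```

A large F for the linear specification signals misspecification; augmenting the regressors with \(\theta^2\) brings the statistic back into the range expected under correct specification.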

The convenience of representation (4) is that it splits the statistic \(S_m\) into two parts: one that depends on the random numbers (\(u(r)\)) and one that does not (\(f(\theta ;\beta )\)). Moreover, if \(Cov(\theta ,r)=0\) we have \(E(S_m|\theta )=f(\theta ;\beta )\), that is, the meta-model \(f(\theta ;\beta )\) is the average value of \(S_m\) for a given \(\theta \). In other words, and this is the crucial point we want to stress, if \(Cov(\theta ,r)=0\) the meta-model \(f(\theta ;\beta )\) represents exactly the average response of the ABM to the parameter vector \(\theta \), which is precisely what the researcher wants to obtain when resorting to Monte Carlo simulations aimed at eliminating the noise generated by the random numbers.
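The step from \(S_m=f(\theta ;\beta )+u(r)\) to \(E(S_m|\theta )=f(\theta ;\beta )\) can be spelled out as follows. This is our own expansion: it assumes, as is standard, that any nonzero mean of \(u\) is absorbed into the intercept of \(f\), so that \(E[u(r)]=0\), and it uses the fact that r is drawn independently of \(\theta \). Strictly speaking, zero covariance alone does not guarantee the third equality; mean independence of \(u(r)\) from \(\theta \), which random seeding delivers, does.

```latex
\begin{aligned}
E(S_m \mid \theta) &= E\big(f(\theta;\beta) + u(r) \mid \theta\big) \\
                   &= f(\theta;\beta) + E\big(u(r) \mid \theta\big) \\
                   &= f(\theta;\beta) + E\big(u(r)\big) && \text{($r$ drawn independently of $\theta$)} \\
                   &= f(\theta;\beta)                   && \text{($E[u(r)] = 0$)}
\end{aligned}
```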

In order to estimate the meta-model, we generate n vectors \(\theta _i\) randomly sampled from the parameter space \(\Theta \) and n randomly selected streams of random numbers \(r_i\). Then, for each vector \(\theta _i\) and stream \(r_i\) we simulate the agent-based model once, obtaining n values \(S_m(i)\) of the statistic of interest. In practice, whenever we run a simulation of the agent-based model for a given \(\theta _i\) to generate the corresponding \(S_m(i)\), we randomly choose the seed of the random number generator. Using the samples of \(S_m\) and of the parameters \(\theta \), we can then estimate, for instance by OLS, the relationship

\[ S_m(i) = f(\theta _i;\beta ) + u_i, \qquad i=1,\ldots ,n, \tag{5} \]

obtaining estimated meta-parameters \({\hat{\beta }}\), from which we can compute the sample regression function

\[ {\hat{S}}_m = f(\theta ;{\hat{\beta }}). \tag{6} \]

Clearly, these estimates will depend on the unobserved sequences of random numbers. However, the central point of our approach is that we treat the numbers \(r_i\) in Eq. (5) simply as omitted explanatory variables influencing \(S_m(i)\) through the error term. Since the stream \(r_i\) was selected at random, it is necessarily uncorrelated with the regressors \(\theta _i\), which are therefore also uncorrelated with the error \(u_i\). Hence, under the assumption that the regression model (5) is correctly specified, the OLS estimators \({\hat{\beta }}\) applied to it are consistent for \(\beta \) as \(n\rightarrow +\infty \).

In addition, since \(Cov(\theta _i,r_i)=0\) for each observation i, Eq. (6) is nothing other than a consistent estimate of the expectation of \(S_m\) conditional on the parameter vector, that is

\[ \mathrm{plim}_{n\rightarrow +\infty }\, {\hat{S}}_m(\theta ) = E(S_m|\theta ) = f(\theta ;\beta ). \tag{7} \]

Once the meta-model is estimated we can therefore use it to directly compute the average value of the statistic \(S_m\) for every parameter vector \(\theta _j \in \Theta \) without additional simulations.
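The following sketch, under our own assumptions, illustrates the claim that one run per sampled \(\theta_i\), each with a randomly drawn seed, is enough for OLS to recover the average response. `toy_run` is a hypothetical one-parameter stand-in for the ABM with a known mean response \(1+2\theta-\theta^2\), so the quadratic meta-model is correctly specified by construction.

```python
import numpy as np


def toy_run(theta, seed, T=500):
    """Hypothetical stand-in for one ABM run: S_m(theta, r) with r set by `seed`.

    An AR(1) fluctuating around mu(theta) = 1 + 2*theta - theta**2, so the true
    average response is known and the meta-model can be checked against it.
    """
    rng = np.random.default_rng(seed)
    mu = 1.0 + 2.0 * theta - theta**2
    x, total = mu, 0.0
    for e in rng.standard_normal(T):
        x = mu + 0.5 * (x - mu) + e
        total += x
    return total / T


rng = np.random.default_rng(2024)
n = 400
theta = rng.uniform(0.0, 1.0, size=n)   # random parameter draws
seeds = rng.integers(0, 2**32, size=n)  # random seed per run, independent of theta
s_m = np.array([toy_run(t, int(s)) for t, s in zip(theta, seeds)])  # ONE run per theta_i

X = np.column_stack([np.ones(n), theta, theta**2])
beta_hat, *_ = np.linalg.lstsq(X, s_m, rcond=None)

# Fitted meta-model vs. true average response on a grid, with no further runs.
grid = np.linspace(0.0, 1.0, 101)
fitted = np.column_stack([np.ones_like(grid), grid, grid**2]) @ beta_hat
true_mean = 1.0 + 2.0 * grid - grid**2
max_error = np.max(np.abs(fitted - true_mean))
```

No \(\theta\) is ever simulated twice, yet the fitted curve tracks the average response over the whole grid; increasing `n` tightens the fit, in line with the consistency argument above.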

Finally, for each parameter vector \(\theta _j\in \Theta \) and statistic \({\hat{S}}_m(\theta _j)\) we can calculate the distance

\[ d(\theta _j) = d\big(S_r,{\hat{S}}_m(\theta _j)\big) \tag{8} \]

and use it to rank all the vectors. At this point, the vector corresponding to the smallest distance \(d(\theta _j)\) will be our estimate of the “best” vector \(\theta ^*\).

To make things even simpler, we suggest searching for the best vector directly among the n vectors \(\theta _i\) used to estimate the meta-model. This is legitimate because the n vectors are randomly sampled and therefore provide a fair coverage of the whole parameter space \(\Theta \).

In summary, the procedure can be described by the following algorithm:

Algorithm 1

1. We randomly sample n vectors \(\theta _i \in \Theta \).

2. For each \(\theta _i\) we run the ABM for T periods. Before each simulation the seed of the random number generator is randomly selected.

3. On the output of each simulation i we calculate the statistics \(S_m(i)\).

4. Using the samples \(\{\theta _i\}^n_{i=1}\) and \(\{S_m(i)\}^n_{i=1}\) we estimate Eq. (5) by OLS, obtaining the sample regression function \({\hat{S}}_m\) (Eq. 6).

5. For each parameter vector \(\theta _j\in \Theta \) we compute the fitted value \({\hat{S}}_m(\theta _j)\), which is the estimate of the average response of the ABM to the input \(\theta _j\).

6. Using the fitted values and the real data \(S_r\), for each vector \(\theta _j\) we determine the distance \(d(\theta _j)=d(S_r,{\hat{S}}_m(\theta _j))\).

7. After ranking all the vectors, we choose the one associated with the minimum distance \(d(\theta _j)\).
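A compact Python sketch of Algorithm 1 follows. Everything model-specific is an assumption made for illustration: `toy_abm` is a hypothetical stand-in returning two statistics for \(\theta=(\lambda,h_{\xi})\), the bounds of \(\Theta\) are inferred from the corner points mentioned in Sect. 3.1 rather than taken from Table 1, \(\Theta\) is discretized into a 100 × 100 grid, the meta-model is a quadratic with a pairwise interaction, and the distance is Euclidean.

```python
import numpy as np

# Assumed bounds of Theta = (lambda, h_xi); Table 1 is not reproduced here.
LAMBDA_BOUNDS = (0.1, 0.9)
H_BOUNDS = (0.005, 0.2)


def toy_abm(theta, seed, T=500):
    """Hypothetical stand-in for one ABM run returning S_m = (growth, inflation)."""
    lam, h = theta
    rng = np.random.default_rng(seed)
    growth = 0.02 - 0.01 * lam + 0.05 * h + 0.01 * rng.standard_normal(T)
    inflation = 0.01 + 0.2 * lam * h + 0.01 * rng.standard_normal(T)
    return np.array([growth.mean(), inflation.mean()])


def features(theta):
    """Regressors [1, lam, h, lam^2, h^2, lam*h], one row per parameter vector."""
    lam, h = np.atleast_2d(theta).T
    return np.column_stack([np.ones_like(lam), lam, h, lam**2, h**2, lam * h])


def calibrate(simulate, s_real, n=200, grid_size=100, rng_seed=0):
    """Steps 1-7 of Algorithm 1 for a generic `simulate(theta, seed)` callable."""
    rng = np.random.default_rng(rng_seed)
    # Step 1: sample n parameter vectors uniformly from Theta.
    thetas = np.column_stack([rng.uniform(*LAMBDA_BOUNDS, size=n),
                              rng.uniform(*H_BOUNDS, size=n)])
    # Steps 2-3: one run per theta_i, each with a freshly drawn random seed.
    seeds = rng.integers(0, 2**32, size=n)
    s_sim = np.array([simulate(t, int(s)) for t, s in zip(thetas, seeds)])
    # Step 4: OLS; column j of beta_hat is the meta-model for statistic j.
    X = features(thetas)
    beta_hat, *_ = np.linalg.lstsq(X, s_sim, rcond=None)
    # Step 5: fitted average response on a grid covering Theta.
    lam_g, h_g = np.meshgrid(np.linspace(*LAMBDA_BOUNDS, grid_size),
                             np.linspace(*H_BOUNDS, grid_size))
    grid = np.column_stack([lam_g.ravel(), h_g.ravel()])
    s_hat = features(grid) @ beta_hat
    # Steps 6-7: Euclidean distance to the real statistics; pick the minimum.
    best_grid = grid[np.argmin(np.linalg.norm(s_hat - s_real, axis=1))]
    # Shortcut suggested in the text: search only among the n sampled vectors.
    best_sample = thetas[np.argmin(np.linalg.norm(X @ beta_hat - s_real, axis=1))]
    return best_grid, best_sample, beta_hat


# "Real" statistics chosen to equal the toy model's mean response at (0.5, 0.1).
s_real = np.array([0.02, 0.02])
best_grid, best_sample, beta_hat = calibrate(toy_abm, s_real)
```

Swapping `toy_abm` for a wrapper around an actual ABM, and `features` for a richer specification if a RESET test rejects, leaves the rest of the procedure unchanged.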

3 An Example

As a purely illustrative example, in this section we apply the proposed calibration method to the agent-based model of Chen and Desiderio (2018, 2020), to which we refer the reader for details.

In that model a closed economy is populated by firms, households and one commercial bank. The households supply labor, buy consumption goods and hold deposits at the bank. The firms demand labor, produce and sell consumption goods, demand bank loans and hold deposits. The bank receives deposits and extends loans to firms. In particular, firms with open vacancies raise their offered wage by a stochastic percentage in order to attract workers. This stochastic percentage is parametrized by an upper bound \(h_{\xi }\). In addition, the bank extends credit to firms according to a “lending attitude” parameter \(\lambda \) (the higher \(\lambda \), the lower the credit supply).

Suppose now that we want to calibrate the parameters \(\lambda \) and \(h_{\xi }\) on real data, so that \(\theta =(\lambda ,h_{\xi })\), with the corresponding parameter space \(\Theta \) given by Table 1.[5] Moreover, suppose that the statistics S are the average growth rate of real GDP and the average inflation rate, and that the metric \(d(\cdot )\) is simply the Euclidean distance.[6] Then we have to specify the meta-model (4) for the two statistics, for which we choose a quadratic model with a pairwise interaction term:

\[ S_m = \beta _0 + \beta _1\lambda + \beta _2 h_{\xi } + \beta _3\lambda ^2 + \beta _4 h_{\xi }^2 + \beta _5\lambda h_{\xi } + u(r). \tag{9} \]
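For concreteness, the regressors of a quadratic meta-model with a pairwise interaction and the Euclidean metric can be written as below. This is a sketch under our own conventions: the column order [1, λ, h_ξ, λ², h_ξ², λh_ξ] is our choice and may not match the layout of Table 2, and the function names are hypothetical.

```python
import numpy as np


def quadratic_interaction_design(lam, h_xi):
    """Regressors [1, lambda, h_xi, lambda^2, h_xi^2, lambda*h_xi] (column order is our choice)."""
    lam = np.asarray(lam, dtype=float)
    h_xi = np.asarray(h_xi, dtype=float)
    return np.column_stack([np.ones_like(lam), lam, h_xi, lam**2, h_xi**2, lam * h_xi])


def euclidean_distance(s_real, s_hat):
    """d(S_r, S_hat_m) for S = (GDP growth, inflation), computed row by row."""
    diff = np.asarray(s_hat, dtype=float) - np.asarray(s_real, dtype=float)
    return np.linalg.norm(diff, axis=-1)


# Two corner points of Theta as an example input.
X = quadratic_interaction_design([0.1, 0.9], [0.005, 0.2])
# With one estimated coefficient vector per statistic stacked as columns of
# beta_hat, S_hat = X @ beta_hat and d = euclidean_distance(s_real, S_hat).
```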

At this point, we randomly sample \(n=200\) vectors \(\theta _i\) (with \(n=100\) the results are quite similar), and for each of them we run the ABM for 500 time steps, randomly choosing the seed of the random number generator at every run. From each simulation we take the statistics \(S_m(i)\) and estimate by OLS the two meta-models reported in Table 2. Finally, we compute the estimate \({\hat{S}}_m\) over the whole parameter space \(\Theta \) and, with it, the distance \(d(S_r,{\hat{S}}_m)\), which we report in Fig. 1. The resulting estimate of the minimum-distance vector \(\theta ^*\) turns out to be \((\lambda ,h_{\xi })=(0.1,0.005)\). Notice that if we searched only among the 200 vectors used to estimate the meta-models, we would obtain a fairly close solution, \((\lambda ,h_{\xi })=(0.1242,0.016)\).

3.1 Discussion

The merit of our approach is its speed and ease of implementation, obtained, however, at the price of a loss of accuracy caused by the use of approximated simulated statistics. So, is the solution found the real minimum-distance vector? To answer this question one would have to run Monte Carlo simulations over the whole parameter space \(\Theta \), which is not feasible.[7] Thus, in Table 3 we report both estimated and actual distances for only 6 selected points: our solution (0.1, 0.005), the near-solution (0.1242, 0.016) found among the points used to estimate the meta-models, the other three corner points of \(\Theta \), and its middle point (0.5, 0.1).

Among those points, the smallest actual distance is achieved at the near-solution (0.1242, 0.016), while the solution (0.1, 0.005) is also outperformed by the middle point. Nonetheless, the solution found is still far better than the other three corner points, in line with the ranking obtained using the estimated distance. In addition, the estimated distance for the point (0.9, 0.2) is very close to the actual one.

The above outcome is only a partial failure of the procedure, for three reasons. First, the procedure was still able to identify a solution belonging to an area of the parameter space where the distance is lower, as signaled by the actual distance associated with the near-solution (0.1242, 0.016) and with several other nearby points that we tried (which we do not report). Second, the solution depends heavily on the quality of the meta-models employed to approximate the statistics. Table 2 shows that their fit (as measured by the \(R^2\)) is not particularly good, especially for the GDP growth rate. Hence, choosing a better meta-model by adding more non-linear functions of the parameters would probably increase the accuracy of the procedure. Last but not least, the method is intended to reduce the computational time of calibration, and in this respect it is very successful. Whether the modeler should prefer speed or accuracy clearly depends on the circumstances: if the ABM is simple, a more accurate calibration procedure may be preferred, but if the computational model takes hours to simulate, then a faster albeit less precise procedure such as ours could be the best choice.

3.2 Robustness

As a robustness check, we now briefly compare our method with the closely related approach followed by Salle and Yildizoglu (2014), Barde and van der Hoog (2017) and Bargigli et al. (2020) (SBB, henceforth), which differs from ours mainly in three respects: (1) the meta-model employed (SBB use the kriging method); (2) the object approximated by the meta-model; (3) the sampling method used to estimate the meta-model. As the innovative feature of our calibration method is the sampling strategy employed to estimate the meta-model, below we focus on the last point. At the end, we also touch briefly upon the second point.[8]

The sampling strategy used by SBB is the so-called nearly-orthogonal Latin hypercube (NOLH) approach, devised to reduce the computational effort of meta-model estimation (Cioppa 2002; Cioppa and Lucas 2007). This method makes it possible to construct very small samples from the parameter space that nonetheless have very good space-filling properties and mitigate the problem of multicollinearity. To make a comparison, we therefore re-estimate model (9) using the NOLH sample for two variables taken from the spreadsheet available in Sanchez (2005). This sample involves only 17 observations \(\theta _i\) of the parameter space given in Table 1. Table 4 reports the results, which are quite different from the estimates obtained through our method. In principle, we cannot tell whether these estimates are better than ours. Hence, we repeat the estimation using the same NOLH sample but, to refine the quality of the simulated data, for each of the 17 observations \(\theta _i\) we run 20 Monte Carlo replications to eliminate the influence of randomness. Table 5 shows that, with these more precise data, the estimated coefficients are now much closer to those obtained by our method (cf. Table 2).[9] However, to obtain similar results we had to run the agent-based model \(17\cdot 20=340\) times (against the 200 runs used with our method). In addition, our approach is sounder from a theoretical point of view: since we use random samples, we can invoke basic econometric theory to support the validity of our OLS estimates, whereas nothing can be said about the statistical properties of the OLS estimates obtained using the NOLH sample. All of this suggests that, at least in the limited context of this example, the NOLH approach to meta-model estimation is not better than ours.

Finally, we briefly consider the second point above. SBB, in fact, use a meta-model to approximate not the model statistics but the distance metric. Hence, as an additional robustness check, we follow this route by estimating meta-model (9) to approximate the distance. Basically, this means that we have \({\hat{d}}(S_r,S_m)\) instead of \(d(S_r,{\hat{S}}_m)\). The estimated distance surface thus obtained (which we do not report) is very similar to Fig. 1, and the resulting minimum-distance vector turns out to be (0.1, 0.1035), with an estimated distance of 0.0028. However, after running 50 MC replications of the ABM for this parameter vector, the actual distance turns out to be 0.0062, higher than the actual distance obtained at the near-solution (0.1242, 0.016) and not much lower than the actual distance relative to (0.1, 0.005). Thus, using a meta-model directly for the distance did not improve our prediction of the best parameter vector. Nonetheless, whether the meta-model should be used for the statistics or for the distance is a topic that deserves further attention.
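One reason the two routes can give different answers is that they target different objects. Because the Euclidean norm is convex, Jensen’s inequality gives \(E[d(S_r,S_m)|\theta ]\ge d(S_r,E[S_m|\theta ])\): a meta-model fitted to single-run distances approximates the expected distance of a noisy run, not the distance of the average response. The toy sketch below is entirely our own construction (scalar statistic, \(E(S_m|\theta )=\theta \), Gaussian noise, quadratic OLS meta-models in place of kriging); it illustrates the effect and is not a replication of the SBB procedure or of the figures reported above.

```python
import numpy as np

rng = np.random.default_rng(7)
n, s_real, noise_sd = 500, 0.5, 0.5

# Hypothetical toy model: one run yields S_m = theta + u(r), so E(S_m | theta) = theta.
theta = rng.uniform(0.0, 1.0, size=n)
s_sim = theta + rng.normal(0.0, noise_sd, size=n)

X = np.column_stack([np.ones(n), theta, theta**2])
grid = np.linspace(0.0, 1.0, 1001)
X_grid = np.column_stack([np.ones_like(grid), grid, grid**2])

# Route 1 (this paper): meta-model for the statistic, then d(S_r, S_hat_m).
beta_s, *_ = np.linalg.lstsq(X, s_sim, rcond=None)
d_route1 = np.abs(s_real - X_grid @ beta_s)

# Route 2 (distance-targeting, OLS instead of kriging): meta-model for single-run distances.
beta_d, *_ = np.linalg.lstsq(X, np.abs(s_real - s_sim), rcond=None)
d_route2 = X_grid @ beta_d

theta_route1 = grid[np.argmin(d_route1)]
theta_route2 = grid[np.argmin(d_route2)]
```

Here route 1’s minimum distance is close to zero near \(\theta =0.5\), whereas route 2’s fitted minimum sits, in expectation, near \(0.5\sqrt{2/\pi }\approx 0.40\), the mean absolute value of the noise. By symmetry, the expected location of route 2’s minimum is also \(\theta =0.5\) in this toy, so the difference shows up in the level of the estimated distance rather than in its argmin.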

4 Conclusive Remarks

Taking agent-based models to the data, whether for prediction or merely for validation, is a phase that still poses many challenges to the researcher. In particular, no consensus has emerged thus far on which parameter calibration method should be used. This is essentially due to the complexity of ABMs, which requires enormous computational efforts and is very likely to cause search algorithms to get stuck in local solutions. Moreover, none of these methods is easy to implement.

In this paper we have proposed a new method to calibrate the parameters on real data that is based on the global sensitivity analysis procedure introduced by Chen and Desiderio (2018, 2020). This method consists in estimating regression meta-models for the relationship between model parameters and simulated statistics using relatively few simulations. The central point of the method is that, if correctly specified, the meta-models can be used directly to consistently estimate the average response of the ABM to any parameter vector input by the modeler and, as a consequence, also the distance between real and simulated data. The novelty of our method with respect to other meta-modeling approaches is the way the parameter vectors are sampled. The trick lies in randomly sampling both the parameters and the seed of the random number generator at the same time, which eliminates the effect of randomness without resorting to Monte Carlo simulations. The advantage of the proposed method is twofold: it is very parsimonious in terms of computational time, and it is relatively easy to implement, being based on elementary econometric techniques. We point out, however, that our procedure is intended to increase the speed of the calibration process at the expense of its accuracy and that, consequently, it makes more sense when applied to computationally demanding large-scale ABMs, for which the gain in speed is well worth the loss in accuracy.

The validity of our method relies on the crucial assumption embodied in Eq. (4), that is, the correct functional form specification of the meta-models. If this assumption does not hold, the method may lead to very misleading conclusions, but we remark that correct functional form specification is an issue for all techniques based on meta-modeling. However important, the correct specification of the model can be easily tested, and the researcher is free to choose any functional form to find a good fit between parameter vectors and simulated statistics. Moreover, misspecification is the only concern for the researcher, as there are no other sources of endogeneity that could afflict the meta-models.

There are many other issues that we have not touched upon, such as the choice of the statistics S and the estimation technique.

As for the statistics, we think that the researcher should preferably use those that are more sensitive to the ABM parameters. To discover which statistics are most influenced by the parameters, a preliminary global sensitivity analysis may be performed using the very same method proposed in this paper. At any rate, the statistics should at least be ergodic and stationary within a given run of the ABM. The choice of the statistics, however, is a problem common to any calibration procedure based on indirect methods. The same applies to the choice of the distance metric.

As for the estimation technique, we deem OLS adequate. Since the parameters \(\theta _i\) and the streams of random numbers \(r_i\) are randomly sampled, in fact, no serial correlation exists among the n simulations. Heteroskedasticity may be present, however, and FGLS may be considered to improve efficiency. The well-known drawback of FGLS, however, is that it works better than OLS only if the heteroskedasticity is correctly specified, which is rarely the case in practice. To increase efficiency, a seemingly unrelated regressions (SUR) approach may also be used if correlation exists among the statistics S. Finally, nonparametric techniques may be used to increase estimation precision (at the expense of higher computational costs, though). This is left for future research.
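A minimal two-step FGLS sketch follows, assuming (our choice, not the paper’s) a multiplicative heteroskedasticity model \(Var(u_i|\theta _i)=\exp (x_i'\gamma )\), estimated by regressing the log of squared OLS residuals on the meta-model regressors. As the text warns, any efficiency gain depends on this variance model being right; the illustrative data are simulated so that it is.

```python
import numpy as np


def fgls(X, y):
    """Two-step FGLS under Var(u_i | x_i) = exp(x_i' gamma); returns (beta_ols, beta_fgls)."""
    beta_ols, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta_ols
    # Auxiliary regression of log squared residuals. Its intercept is biased
    # (E[log chi2_1] != 0), but a constant rescaling of all weights leaves WLS unchanged.
    gamma, *_ = np.linalg.lstsq(X, np.log(resid**2), rcond=None)
    inv_sd = np.exp(-0.5 * (X @ gamma))
    # Weighted least squares: divide each observation by its estimated std. deviation.
    beta_fgls, *_ = np.linalg.lstsq(X * inv_sd[:, None], y * inv_sd, rcond=None)
    return beta_ols, beta_fgls


rng = np.random.default_rng(3)
n = 1000
theta = rng.uniform(0.0, 1.0, size=n)
sd = 0.5 * np.exp(-1.0 + 1.5 * theta)  # noise variance grows with theta
s_m = 1.0 + 2.0 * theta + sd * rng.standard_normal(n)
X = np.column_stack([np.ones(n), theta])
beta_ols, beta_fgls = fgls(X, s_m)
```

Both estimators are consistent here; FGLS only changes the weighting of the observations, which is why a misspecified variance model can make it worse than OLS.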

Data Availability

Data are available from the authors upon request.

Code Availability

Codes are available from the authors upon request.

Notes

Some authors distinguish between calibration and estimation (e.g. Grazzini and Richiardi 2015), but to us the difference is more conceptual than factual.

The relevant information needs not to be necessarily summarized by statistics, also entire time series and cross-sectional data can be used.

For clarity’s sake we will suppose that \(S_m\) is a scalar. If \(S_m\) is multidimensional, then a meta-model needs to be estimated for each element of \(S_m\).

The type of discretization used for the parameter space is another issue we do not consider here.

As real data \(S_r\) we used U.S. quarterly macro data retrieved from FRED dataset.

Running the ABM for 500 time steps on our computer takes approximately 10 s. Hence, 50 MC replications for all the 10000 points of \(\Theta \) would require about 1390 computer-hours.

We do not consider the first point because our sampling strategy can be applied to the estimation of any meta-model, including the one used by SBB.

When these coefficients are used to estimate the distance, the best vector is (0.1646, 0.005), whose real distance is essentially the same as that of the vector (0.1, 0.005).
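Selecting the best parameter vector from the estimated distance can be sketched as a grid search over the fitted meta-model. This is a minimal illustration under stated assumptions: the full quadratic meta-model in two parameters, the squared Euclidean distance and the grid are all hypothetical choices, and the coefficients in the usage test are made up rather than taken from the paper.

```python
import numpy as np
from itertools import product

def features(theta):
    """Hypothetical full quadratic meta-model regressors in two parameters."""
    t1, t2 = theta[..., 0], theta[..., 1]
    return np.stack([np.ones_like(t1), t1, t2, t1**2, t2**2, t1 * t2], axis=-1)

def best_on_grid(betas, S_r, grid1, grid2):
    """Return the grid point minimizing the estimated distance, and that distance.

    betas : (6, m) meta-model coefficients, one column per statistic
    S_r   : (m,) statistics computed on the real data
    """
    grid = np.array(list(product(grid1, grid2)))  # (G, 2) candidate parameter vectors
    S_hat = features(grid) @ betas               # (G, m) estimated average ABM output
    d = ((S_hat - S_r) ** 2).sum(axis=1)         # squared Euclidean distance to real data
    k = int(np.argmin(d))
    return grid[k], d[k]
```

Because each evaluation is a matrix product rather than an ABM run, the whole grid can be scanned at negligible cost, which is the point of using the meta-model in place of Monte Carlo replications.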

References

Barde, S., & van der Hoog, S. (2017). An empirical validation protocol for large-scale agent-based models. University of Kent, School of Economics Discussion Papers.

Bargigli, L., Riccetti, L., Russo, A., & Gallegati, M. (2020). Network calibration and metamodeling of a financial accelerator agent-based model. Journal of Economic Interaction and Coordination, 15(2), 413–440.

Bianchi, C., Cirillo, P., Gallegati, M., & Vagliasindi, P. A. (2007). Validating and calibrating agent-based models: A case study. Computational Economics, 30, 245–264.

Bianchi, C., Cirillo, P., Gallegati, M., & Vagliasindi, P. A. (2008). Validation in agent-based models: An investigation on the CATS model. Journal of Economic Behavior and Organization, 67, 947–964.

Chen, S., & Desiderio, S. (2018). Computational evidence on the distributive properties of monetary policy. Economics - The Open-Access, Open-Assessment E-Journal, 12(2018–62), 1–32.

Chen, S., & Desiderio, S. (2020). Job duration and inequality. Economics - The Open-Access, Open-Assessment E-Journal, 14(2020–9), 1–26.

Cioppa, T., & Lucas, T. (2007). Efficient nearly orthogonal and space-filling Latin hypercubes. Technometrics, 49(1), 45–55.

Delli Gatti, D., Desiderio, S., Gaffeo, E., Gallegati, M., & Cirillo, P. (2011). Macroeconomics from the bottom-up. Milan: Springer.

Delli Gatti, D., Fagiolo, G., Gallegati, M., Richiardi, M. G., & Russo, A. (2018). Agent-based models in economics: A toolkit. Cambridge: Cambridge University Press.

Fabretti, A. (2013). On the problem of calibrating an agent-based model for financial markets. Journal of Economic Interaction and Coordination, 8(2), 277–293.

Fagiolo, G., Guerini, M., Lamperti, F., Moneta, A., & Roventini, A. (2017). Validation of agent-based models in economics and finance. LEM Working Paper Series, Scuola Superiore Sant’Anna, Pisa.

Fagiolo, G., Moneta, A., & Windrum, P. (2007). A critical guide to empirical validation of agent-based models in economics: Methodologies, procedures, and open problems. Computational Economics, 30, 195–226.

Gilli, M., & Winker, P. (2003). A global optimization heuristic for estimating agent-based models. Computational Statistics and Data Analysis, 42, 299–312.

Gourieroux, C., Monfort, A., & Renault, E. (1993). Indirect inference. Journal of Applied Econometrics, 8, 85–118.

Grazzini, J., & Richiardi, M. (2015). Estimation of ergodic agent-based models by simulated minimum distance. Journal of Economic Dynamics and Control, 51, 148–165.

Guerini, M., & Moneta, A. (2017). A method for agent-based models validation. Journal of Economic Dynamics and Control, 82, 125–141.

Judd, K., & Tesfatsion, L. (Eds.). (2006). Handbook of computational economics II: Agent-based models. Amsterdam: North-Holland.

Lamperti, F. (2018). Empirical validation of simulated models through the GSL-div: An illustrative application. Journal of Economic Interaction and Coordination, 13, 143–171.

Lux, T., & Zwinkels, R. (2018). Empirical validation of agent-based models. In C. Hommes & B. LeBaron (Eds.), Handbook of computational economics (Vol. 4, pp. 437–488). Available at SSRN: https://ssrn.com/abstract=2926442 or https://doi.org/10.2139/ssrn.2926442.

McFadden, D. (1989). A method of simulated moments for estimation of discrete response models without numerical integration. Econometrica, 57(5), 995–1026.

Nocedal, J., & Wright, S. J. (1999). Numerical optimization. Berlin: Springer.

Recchioni, M. C., Tedeschi, G., & Gallegati, M. (2015). A calibration procedure for analyzing stock price dynamics in an agent-based framework. Journal of Economic Dynamics and Control, 60, 1–25.

Richiardi, M. G. (2018). Estimation of agent-based models. In D. Delli Gatti, G. Fagiolo, M. G. Richiardi, & A. Russo (Eds.), Agent-based models in economics: A toolkit. Cambridge: Cambridge University Press.

Salle, I., & Yildizoglu, M. (2014). Efficient sampling and meta-modeling for computational economic models. Computational Economics, 44(4), 507–536.

Saltelli, A., Ratto, M., Andres, T., Campolongo, F., Cariboni, J., Gatelli, D., et al. (2008). Global sensitivity analysis. The primer. Hoboken, NJ: Wiley.

Sanchez, S. M. (2005). Work smarter, not harder: Guidelines for designing simulation experiments. In M. E. Kuhl, N. M. Steiger, F. B. Armstrong, & J. A. Joines (Eds.), Proceedings of the 2005 winter simulation conference. Software available at http://harvest.nps.edu/linkedfiles/nolhdesigns_v4.xls.

Acknowledgements

We gratefully acknowledge the financial support of the Natural Science Foundation of Guangdong Province, under Grant No. 2018A030310148. We also thank the Editor and two anonymous referees for helpful comments, and all the participants in the IWcee19-VII International Workshop on Computational Economics and Econometrics at CNR, Rome, 3–5 July 2019.

Funding

This work was funded by the Natural Science Foundation of Guangdong Province, under Grant No. 2018A030310148.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Chen, S., Desiderio, S. A Regression-Based Calibration Method for Agent-Based Models. Comput Econ 59, 687–700 (2022). https://doi.org/10.1007/s10614-021-10106-9

DOI: https://doi.org/10.1007/s10614-021-10106-9