Abstract

This study proposes a decomposition-ensemble based carbon price forecasting model, which integrates ensemble empirical mode decomposition (EEMD) with local polynomial prediction (LPP). The EEMD method is used to decompose carbon price time series into several components, including some intrinsic mode functions (IMFs) and one residue. Motivated by the fully local characteristics of a time series decomposed by EEMD, we adopt the traditional LPP and regularized LPP (RLPP) to forecast each component. This led to two forecasting models, called the EEMD-LPP and EEMD-RLPP, respectively. Based on the fine-to-coarse reconstruction principle, an auto regressive integrated moving average (ARIMA) approach is used to forecast the high frequency IMFs, and LPP and RLPP is applied to forecast the low frequency IMFs and the residue. The study also proposes two other forecasting models, called the EEMD-ARIMA-LPP and EEMD-ARIMA-RLPP. The empirical study results showed that the EEMD-LPP and EEMD-ARIMA-LPP outperform the two other models. Furthermore, we examine the robustness and effects of parameter settings in the proposed model. Compared with existing state-of-art approaches, the results demonstrate that EEMD-ARIMA-LPP and EEMD-LPP can achieve higher level and directional predictions and higher robustness. The EEMD-LPP and EEMD-ARIMA-LPP are promising approaches for carbon price forecasting.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Global climate change has become a serious global challenge in recent decades. To address this escalating climate change problem, the European Union (EU) launched the EU Emissions Trading Scheme (ETS) in January 2005 (Ellerman and Buchner 2007). China has been the largest carbon emission emitter since 2009, contributing almost 25% of total worldwide emissions (Qin et al. 2017). The ETS has had a global impact on emission reductions, with the goals set out in the Paris Agreement. In December 19, 2017, China formally launched an initial national carbon trading market. The first phase of the market covers power generation; the new cap is approximately twice the size of the EU’s carbon market and ten times the size of California’s cap and trade system in the United States.Footnote 1

The emergence of ETS has transformed carbon emission permits into a new asset class, called “carbon emission allowances” (Neuhoff et al. 2006; Hintermann 2010). Like other commodities, carbon emission allowances can be bought and sold in the carbon markets. Corporations, organizations, and investors can trade both the spot and future of emission allowances. The establishment of an appropriate ETS has become an important issue for China to allow it to effectively compete in global carbon finance and achieve sustainable development (Lo 2016; Jotzo and Löschel 2014). Accurate carbon price forecasting has been recognized as an effective way to formulate relevant policies for carbon emissions trading and to reduce the risk of carbon assets in the carbon markets (Koop and Tole 2013; Zhu et al. 2015). As such, carbon price forecasting is critically important for governments and traders in the carbon trading market. Carbon price is affected by many factors, including short-term market fluctuations, the effects of significant trend breaks, and long-term trends (Benz and Trück 2009; Wei et al. 2010; Tang et al. 2017; Chen et al. 2013). The carbon price time series is nonlinear, non-stationary, and is highly volatile. This makes accurately forecasting carbon prices a significant challenge (Feng et al. 2011; Zhu and Wei 2013).

Existing carbon price forecasting studies can be divided into two categories. The first category can be interpreted as multivariate models, which model the relationship between carbon price and factors (García-Martos et al. 2013; Chevallier 2009, 2010, 2011a, b; Fezzi and Bunn 2009). The second category is univariate models, also known as the time series forecasting approach. These models generally include two kinds of methods: the traditional econometric method (Byun and Cho 2013; Benz and Trück 2009) and artificial intelligence (AI) method (Zhu and Wei 2013; Fan et al. 2015; Atsalakis 2016). Because it is difficult to determine the appropriate factors affecting carbon price, time series forecasting approach received widely concerns. In the time series forecasting approach, the decomposition-ensemble framework is becoming increasingly popular. This framework first decomposes the complex time series into several components that have simple structures, which are more easily forecasted. These components are individually forecasted and then we ensemble the results to obtain the final forecasting result (Yu et al. 2008; Zhang et al. 2015; Zhu et al. 2016).

Decomposition-ensemble forecasting models have been shown to perform better than the single econometric and AI model (Yu et al. 2008; Tang et al. 2011). Over the past decades, some decomposition-ensemble approaches have been proposed to forecast the carbon price. Zhu et al. (2016) proposed a novel decomposition-ensemble paradigm, which incorporates least square support vector machine (LSSVM), auto regressive integrated moving average (ARIMA), and particle swarm optimization. Sun et al. (2016) proposed a combined forecasting model based on variational mode decomposition and spiking neural networks to forecast carbon price.

Lately, empirical mode decomposition (EMD), invented by Huang et al. (1998), decomposes a raw non-linear and non-stationary signal into a set of intrinsic mode functions (IMFs) and one residue, without leaving the time domain. EMD is easy to implement and offers the ability to estimate subtle changes in frequency (Huang et al. 2011). The decomposition scale of EMD depends on the characteristics of the signal itself and does not require a priori basic function. EMD is based on local extreme points: cubic spline interpolation, based on the local extreme point signal envelope to strike down the average envelope (Chen et al. 2006). Therefore, EMD is a fully local and self-adaptive approach, and can decompose the local characteristics of the raw time series into several fluctuations and trend items. EMD-based forecasting models have been widely adopted within the forecasting research community (Yu et al. 2008; Xiong et al. 2013; Chen et al. 2012; Ren et al. 2015). Considering the characteristics discomposed by EMD, combining EMD-based decomposition with a local forecasting method, which can capture the local characteristics of a time series, is a novel attempt to improve forecasting performance.

The local polynomial prediction (LPP), developed by Farmer and Sidorowichl (1988), works effectively by analyzing the local characteristics of a time series in the frame of nonlinear dynamics. LPP displays promising forecasting performance with fast speed in low dimensional and smoothing problems (Regonda et al. 2005; Lu 2002; Beran et al. 2002; Su and Li 2015). Similar to AI model, the forecasting process of LPP is also based on the technique of phase space reconstruction (Packard et al. 1980; Takens 1981). LPP does not require a long time series and it can effectively model different scales of nonlinearity in different period of time series because of using the localization method (Farmer and Sidorowich 1988; Fan and Yao 2008). The decomposition feature of EMD motivated us to investigate the forecasting ability of LPP and RLPP approaches under the EMD framework. This study proposes a novel carbon price forecasting model integrated with the promising and relatively simple LPP approach. The ensemble EMD (EEMD), developed by Wu and Huang (2009) to overcome the mode mixing effect in EMD, is used to decompose the original carbon price time series. The LPP approach is used to individually forecast each component. The obtained model is called EEMD-LPP in this study.

It is worth noting that the parameters of the LPP are typically estimated using ordinary least squares (OLS), which can have large variance under certain conditions. Regularization techniques can modify the OLS solution towards better predictions (Kugiumtzis et al. 1998). In this study, a well-known regularization technique, namely ridge regression, is used to estimate the LPP (RLPP) parameters. A new forecasting model, called the EEMD-RLPP, is developed. The EEMD-RLPP model applies the EEMD method as a decomposition tool and uses RLPP as forecasting tool. Selecting the optimal model for each component can improve forecasting performance in the decomposition-ensemble framework. Some hybrid models have been developed based on this idea (Zhang et al. 2015; Zhu et al. 2016). The empirical results show that hybrid models demonstrate satisfactory performance. We integrate the ARIMA, which has a strong capability of modeling short-term memory and random process, with LPP and RLPP to develop two new models: EEMD-ARIMA-LPP and EEMD-ARIMA-RLPP. Based on the fine-to-coarse reconstruction principle developed by Zhang et al. (2008), the ARIMA approach is used to forecast the high frequency IMFs, whereas LPP and RLPP are used to forecast the low frequency IMFs and the residue.

The features of this study are represented as follows: (1) Motivated by the fully local characteristics of a time series decomposed by EEMD, we first integrate the LPP and RLPP approach with the EEMD method for carbon price forecasting. (2) Based on the fine-to-coarse reconstruction principle, we combined LPP and RLPP with ARIMA to forecast different components according to the components’ characteristics. (3) Experimental results demonstrate the effectiveness of the proposed approach for carbon price forecasting. (4) Under the decomposition-ensemble framework, we analyzed the robustness of the related parameters and how the parameters affect the forecasting result in the proposed model.

The rest of this study is organized as follows: Sect. 2 describes the methods, including EEMD, LPP, and ARIMA. Section 3 presents the proposed EEMD-based forecasting models in detail. Section 4 presents the experimental study, mainly including data sources, evaluation criteria, experimental results, and comparisons with state of the art approaches. Concluding remarks are drawn in Sect. 5.

2 Methods

2.1 EEMD

EEMD was developed to overcome the mode mixing problem of EMD through performing the EMD over an ensemble of the data plus Gaussian white noise (Wu and Huang 2009). EEMD is considered to be a significant improvement over the EMD method. EEMD can decompose a complex signal into finite IMFs and one residue that have simple pattern and stationary fluctuation based on the local characteristic time scale of the signal.

For an original time series \( y_{0} (t)\,(t = 1,2, \ldots ,n) \), where \( n \) is the length of the time series. The EEMD procedures are described as follows:

- (1)

Initialize the number of ensemble (\( N \)) and the standard deviation (\( \sigma \)) of the added white noise.

- (2)

Add \( N \) random white noises \( \{ n_{1} (t),n_{2} (t), \ldots ,n_{N} (t)\} \) to the original data and produce a group of new data \( \{ y_{1} (t),y_{2} (t), \ldots ,y_{N} (t)\} \).

- (3)

Decompose every new data \( y_{i} (t)(i = 1,2, \ldots ,N) \) using EMD to obtain \( N \) groups of IMFs components and \( N \) groups of residues. The EMD method is described as follows:

- 1)

Identify all the local minima and local maxima of the a time series \( X(t)\; \).

- 2)

Connect all local extrema by the cubic spline interpolation to individually create the upper envelope \( e_{up} (t) \) and the lower envelope \( e_{low} (t) \).

- 3)

Compute the point-by-point mean value \( m_{1} (t) \) of the upper envelope and the lower envelope: \( m_{1} (t) = [e_{up} (t) + e_{low} (t)]/2 \).

- 4)

Calculate the difference between \( x(t)\; \) and \( m_{1} (t) \):\( d_{1} (t) = X(t)\; - m_{1} (t) \).

- 5)

Check the property of \( d_{1} (t) \): (1) If \( d_{1} (t) \) satisfies the conditions of an IMF, then an IMF is derived and denoted as \( c_{1} (t) \), while \( x(t)\; \) is replaced with the residue \( r_{1} (t) = x(t)\; - d_{1} (t) \); (2) if \( d_{1} (t) \) is not an IMF, replace \( x(t)\; \) with \( d(t) \) and repeat the steps 1–4 until \( d(t) \) meets the conditions of an IMF.

- 6)

Repeat steps 1)–5) until the residue \( r(t) \) cannot further extract an IMF, then the originally data \( X(t) \) can be expressed as the sum of the IMFs and the residue:

$$ X(t) = \sum\limits_{i = 1}^{v} {c_{i} (t)} + r(t) $$

- 1)

where \( v \) is the total number of IMFs, \( c_{i} (t) \) is the \( i{\text{th}} \) IMF at time \( t \).

- (4)

Average \( N \) groups of IMFs components and residue respectively to obtain the responding mean value \( \left\{ {C_{1} ,C_{2} , \ldots ,C_{n} } \right\} \) and Res, and then the original data can be expressed as:

2.2 LPP and RLPP

2.2.1 Phase Space Reconstruction

Assume an observed time series of \( x_{1} ,x_{2} , \ldots ,x_{n} \). The first step in the local prediction is phase space reconstruction. Based on the approach introduced by Packard et al. (1980) and Takens (1981), the time series can be embedded in a state space by using delay coordinates. The state vector has the following form:

In this expression, \( m \) is the embedding dimension, τ is the delay time, and T denotes the transpose. According to phase space reconstruction theory, there is a smooth map \( f:R^{m} \to R^{m} \) between the current state \( X_{t} \) and the future state \( X_{{t + T_{1} }} \). For convenience, the m-dimensional states are usually mapped into one dimensional value, such that:

for all integers t and where the forecasting time \( T_{1} \) and \( \tau \) are also assumed to be integers.

2.2.2 LPP

Farmer and Sidorowich (1988) first used local approximation to fit the functional relationship between the state vectors and to compare the forecasting capability between different order approximations. The most general form for a dth degree \( m \)-dimensional polynomial is as follows:

where \( \sum\limits_{j = 1}^{m} {i_{j} \le d} \). The empirical results show that the local quadratic polynomial, i.e. the second order approximation, performs better in most cases (Farmer and Sidorowich 1988). This expression is described as:

where \( T_{1} \) is the forecasting step, \( m \) is the embedding dimension, τ is the delay time, and \( a_{ij} \) are coefficients. Unlike global approximation methods, such as the artificial neural network (ANN) and support vector machine, which use global data to model the smooth map. LPP estimates the function coefficient by partitioning the embedding space into the \( k \) nearest neighbors \( \left\{ {X_{r} } \right\}\;(r = 1,2, \ldots ,k) \). These neighbors are identified by computing the distance between the current state vector \( X_{r} \) and its preceding delay vectors with an imposed metric \( \left\| \cdot \right\| \) (i.e. the \( k \) state vector \( X_{r'} \) with an \( r^{'} < r \) that minimizes \( \left\| {X_{r} - X_{r'} } \right\| \)), and using OLS to linearly fit a LPP model and predict the future value. The key of local approximation is to correctly select the local neighborhood size; this provides sufficient points to make the local parameter fitting stable while adding more points would not lead to significant improvements. To ensure solution stability, the number of neighbors \( k \) is consistently larger than embedding dimension \( m \) (Farmer and Sidorowich 1987).

2.2.3 RLPP

A typical and well-known regularization technique is ridge regression, proposed by Hoerl and Kennard (1970). This technique balances the bias and the variance of the regression by introducing a regularization term based on least squares, as follows:

In this expression, \( \omega_{j} \) is the coefficient and \( \lambda \) is the penalty parameter, which plays a vital role in controlling the bias of the regression, while being able to be specified by users. Here, we combine the LPP with the ridge regression method, i.e. using the ridge regression to fit the LPP to develop a regularized LPP (RLPP), defined as:

The penalty parameter \( \lambda \) can be determined by trial and error. The coefficients \( a_{ij} (i = 0, \ldots ,m,j = 1, \ldots ,m) \) can be estimated by nearest neighbor points.

2.2.4 Procedures of LPP and RLPP

For a time series \( x_{1} ,x_{2} , \ldots ,x_{n} \), to predict the future value \( x_{{n + T_{1} }} \), the LPP and RLPP procedures are as follows:

- (1)

Determine the embedding dimension \( m \), delay time \( \tau \), and the nearest neighbors number k (and the penalty parameter \( \lambda \) when using RLPP).

- (2)

Calculate the distance of delay vector \( X_{n} \) from other vectors \( X_{j} \), \( 1 + (m - 1)\tau \le j \le n - T_{1} \), in the state space. For computational efficiency, we use the maximum norm (i.e. \( \left\| {A - B} \right\| = \left\| {a_{1} - b_{1} ,a_{2} - b_{2} , \ldots ,a_{m} - b_{m} } \right\| = \hbox{max} (\left| {a_{1} - b_{1} } \right|,\left| {a_{2} - b_{2} } \right|, \ldots ,\left| {a_{m} - b_{m} } \right|) \) to measure the distance between state vectors.

- (3)

Rank the distance \( d_{j} \), identify the \( k \) nearest neighbors \( X_{j1} ,X_{j2} , \ldots ,X_{jk} \), and fit a model of the form:

In these expressions, the parameters \( a_{ij} (i = 0, \ldots ,m,j = 1, \ldots ,m) \) of LPP are computed using the ordinary least squares. The RLPP is fitted using ridge regression.

- (4)

Use the fitted model to estimate a \( T_{1} \) step ahead forecast \( \hat{x}_{{n + T_{1} }} \) for the vector \( X_{n} \).

2.3 ARIMA

Theoretically, ARIMA is the most general class of models for forecasting a time series. The ARIMA is a generalization of an autoregressive moving average (ARMA) (Box et al. 2015). ARMA is generally applied to stationary series. ARIMA can be applied in non-stationarity time series using an initial differencing, which is used to eliminate the non-stationarity. An ARIMA (\( p,d,q \)) typically consists of three parts: autoregression (order \( p \)), difference (order \( d \)), and moving average MA (order \( q \)). This can be expressed as:

In this expression, \( \nabla = (1 - B) \), B refers to the backward shift operator for \( B(x_{t} ) = x_{t - 1} \); \( x_{t} \) is the observation data at time t; c is the constant; p and q are the numbers of autoregressive and moving average terms in the ARIMA, respectively; \( \varphi_{1} ,\varphi_{2} , \ldots ,\varphi_{p} \) and \( \theta_{1} ,\theta_{2} , \ldots ,\theta_{q} \) are the autoregressive and the moving average parameters to be estimated, respectively; \( \varepsilon_{t} \) is the random error at time \( t \); and \( \varepsilon_{t} \sim\,N(0,\sigma^{2} ) \).

3 Proposed EEMD-Based Carbon Price Forecasting Model

There are three main steps in a typical decomposition-ensemble model: (1) decomposition of the original complicated series; (2) individual prediction for each component; and (3) aggregating the forecasting result of all components to generate a final forecasting result of the original time series. Based on the general framework of the “Divide-and-Conquer” principle, we developed four different hybrid models based on the EEMD method to forecast the nonlinear, non-stationary carbon price time series.

3.1 Model 1: EEMD-LPP

The EEMD-LPP forecasting model generally consists of the following three steps:

Step 1 The original carbon price time series is first decomposed by EEMD method into \( n \) IMFs and one residue series, denoted as \( {\text{Res}} \).

Step 2 The LPP is used to model the extracted IMF components and residue, and to individually forecast each component.

Step 3 Simple addition has been shown to be an effective aggregation method (Tang et al. 2012). As such, the forecasting result of all extracted IMF components and the residue are added, to generate a final forecasting result of the original time series.

3.2 Model 2: EEMD-RLPP

The main step of EEMD-RLPP model is like the EEMD-LPP, except for in Step 2, where the RLPP is used as an individual forecasting tool to forecast the extracted IMF components and residue, instead of using LPP.

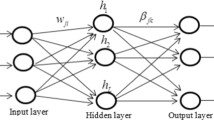

Figure 1 illustrates the methodological procedures associated with Models 1 and 2.

3.3 Model 3: EEMD-ARIMA-LPP

Unlike EEMD-LPP and EEMD-RLPP, EEMD-ARIMA-LPP incorporates the ARIMA and the LPP to forecast different components, based on their data characteristics. The IMFs can be divided into two parts based on their frequency (Zhang et al. 2008): (1) High frequency parts (HFs), which are characterized by highly fluctuations and low amplitude, reflecting the information of normal market fluctuations which have a short-term impact on the carbon price; (2) Low frequency parts (LFs), characterized by low fluctuations, expressing the effects of significant trend breaks, with a medium-term impact on carbon price. The residue drives the major long-term trends in carbon price. Here, we use the fine-to-coarse reconstruction algorithm proposed by Zhang et al. (2008) to identify the HFs and LFs of the carbon price to determine the forecasting model for each component. The identification process is as follows:

- (1)

Compute the mean value \( \bar{s}_{i} \) of the cumulative sum series \( s_{i} = \sum\nolimits_{p = 1}^{i} {IMF_{i} } \;(i = 1,2, \ldots ,n) \) from \( IMF_{1} \) to \( IMF_{i} \), where \( n \) is the total number of IMFs.

- (2)

Apply a t test to determine from which \( IMF_{i} \) the mean value \( \bar{s}_{i} \) most significantly departs from zero. The significance level α is generally selected as 0.05.

- (3)

If \( \bar{s}_{i} \) start to deviate from zero, then \( IMF_{1} \) to \( IMF_{i} \) are identified as HFs, and the remaining \( IMFs \) are identified as LFs

The ARIMA model has a strong ability to model a short-term memory and random process. This makes it suitable for forecasting HFs. The LPP is used to forecast the LF components and the residue. The procedures of the EEMD-ARIMA-LPP forecasting model can be summarized as follows:

Step 1 Decompose the original carbon price time series using EEMD.

Step 2 Identify the HFs and LFs of the extracted IMF components.

Step 3 Use the ARIMA to forecast each component of HFs and use the LPP to forecast each LF component and the residue.

Step 4 Add the forecasting result of all IMF components and the residue to obtain the final forecasting result of the original time series.

3.4 Model 4: EEMD-ARIMA-RLPP

The main step of EEMD-RLPP model is like the step of the EEMD-ARIMA-LPP model, except for Step 3, where the RLPP is used to forecast each LF component and the residue instead of LPP.

Figure 2 summarizes the procedures associated with proposed Models 3 and 4.

4 Experimental Study

4.1 Data

The European Climate Exchange (ECX) is the largest carbon market under the EU ETS. The ECX includes spot, futures, and options of the EU allowance (EUA) and Certified Emission Reduction (CER), which has the maximum trading volume of EUA. In this study, two daily carbon EUA future prices with maturity dates in December 2014 (Dec 14) and December 2015 (Dec 15) form the ECX. These were selected as the experimental data, and are freely available from the Intercontinental Exchange (ICE) website (http://www.theice.com). For Dec 14, the daily trading data cover the period from September 28, 2010 to December 15, 2014, excluding public holidays, for a total of 1079 observations. For Dec 15, the trading data is from November 29, 2011 to December 14, 2015, excluding public holidays, for a total of 1035 observations.

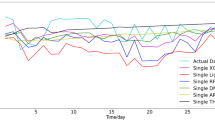

Figure 3 shows the time series curves of Dec 14 and Dec 15, and demonstrates that carbon prices have highly uncertain, nonlinear, and complicated characteristics. For the convenience of LPP and RLPP modeling and optimal parameter searches, the experimental data are divided into three subsets: the training set, the validation set, and the testing set (70%, 15% and 15% respectively). The training set and the validation set are used to find the best parameters using trial and error, where \( \lambda \in (0,1] \)\( m \in [1,10] \), \( \tau \in [1,4] \) and \( k \in \left[ {2m + 1,L} \right] \), \( m \), \( \tau \), \( k \) are integers. In contrast, λ is presented in terms of decimals and L denotes the length of training set after phase space reconstruction. Then, the testing set is used to verify the validity of the newly proposed models using the previously identified parameters. Table 1 reports the divided sample of Dec 14 and Dec 15.

4.2 Evaluation Criteria

To measure the forecasting ability of the hybrid models, two main criteria are used to evaluate the level forecasting and directional forecasting. First, the root mean squared error (RMSE) is selected as the evaluation criterion of level forecasting, defined as:

where \( x(t) \) is the actual value, \( \hat{x}(t) \) is the predicted value, and n is the number of predictions. Smaller RMSE values are associated with a more accurate model.

Second, the directional prediction statistic (\( D_{stat} \)) is used to measure the directional forecasting performance, expressed as:

In this expression, \( a_{t} = 1 \) if \( [x(t + 1) - x(t)] * [\hat{x}\left( {t + 1} \right) - x\left( t \right)] \ge 0 \); otherwise, \( a_{t} = 0 \). The greater the value of \( D_{stat} \), the more accurate the prediction is.

To further compare the predictive accuracy of different models from a statistical perspective, the Diebold–Mariano (DM) statistic is introduced to test the statistical significance of the forecasting difference (Diebold and Mariano 2002). Assuming \( e_{t}^{1} \) and \( e_{t}^{2} \) are two sets of forecast errors, generated by two different forecasting models respectively, the DM test is based on the loss differential \( d_{t} = e_{t}^{1} - e_{t}^{2} \). In this study, the loss function is set to mean the absolute percentage error (MAPE), defined as:

4.3 Decomposing Carbon Price Using EEMD and Identifying HFs and LFs

Two important EEMD parameters must be established before it can be used: the number of the ensemble \( N \) and the amplitude of white noise \( \sigma \). Referring to previous studies, this study set \( N \) as 100 and \( \sigma \) as 0.1 times the standard deviation of each series (Zhang et al. 2008). Figure 4 shows the decomposition result of Dec 14 and Dec 15.

Figure 4 shows the extracted IMFs for Dec 14 and Dec 15 experienced changing frequency and amplitudes. These IMFs were arranged from high frequency to low frequency. The fine-to-coarse reconstruction algorithm was applied to identify HFs and LFs, with a significance level set at 0.05. Table 2 presents the results, which show that the \( \overline{{S_{i} }} \) of Dec 14 started to significantly deviate from 0 at the point i = 6. Therefore IMF1 to IMF5 belonged to HFs, whereas IMF6 to IMF9 were associated with LFs. For Dec 15, the \( \overline{{S_{i} }} \) started to significantly deviate from 0 at the point \( i = 4 \), illustrating that IMF1 to IMF3 were HFs, and the IMF4–IMF 9 were LFs.

4.4 Experimental Results and Analysis

To study the forecasting abilities of the proposed models with other widely used forecasting models, the EEMD-LSSVM, EEMD-ARIMA, and EEMD-ANN served as benchmark models. For LSSVM, the Gaussian RBF kernel function was chosen; the values of the parameters sigma squared and gamma were set using the grid-search method (Fan et al. 2016). For ANN, the hidden nodes were set to 7, and the number of input neurons was determined using autocorrelation and partial correlation analyses (Yu et al. 2016). For ARIMA, the parameters were selected based on the Akaike’s information criterion (AIC) (Wang et al. 2015).

All components used one-step-ahead forecasting; results were aggregated as the final forecasted value of the original series. The ARIMA method was realized using EViews 8.0 statistical software; LSSVM and ANN were implemented through programming using the Matlab R2012b platform; and the LPP and RLPP were implemented through programming using the Python 2.7 platform. Table 3 illustrates the optimal parameters of the proposed four models for the two carbon future prices forecasting. Tables 4 and 5 compare model performances in terms of RMSE and \( D_{stat} \); Table 6 reports the results of the DM test.

In terms of level accuracy measured by RMSE, EEMD-LPP achieved the best level accuracy on Dec 14; EEMD-LPP-ARIMA performed better on Dec 15. In all, EEMD-LPP and EEMD-ARIMA-LPP outperformed the other five models. Under the decomposition-ensemble framework, LPP generated better forecasts than any other single model when EEMD was used as the decomposition method. Applying ARIMA to forecast HFs may not necessarily improve the forecasting result; this is because LPP may also perform well on HFs. EEMD-RLPP and EEMD-ARIMA-RLPP showed poor level accuracy on Dec 14 and Dec 15. This may be mainly because the components decomposed by EEMD have a simple pattern, stationary fluctuation, and less noisy data. A regularization technique introduces more bias in the forecasting process, increasing the forecasting error.

Regarding the direction forecasting accuracy measured by \( D_{stat} \), Table 5 shows that the EEMD-ARIMA-LPP model performed best on Dec 14, followed by EEMD-LPP. On Dec 15, these two models performed the best. Of the other models, EEMD-ARIMA achieved a higher value of \( D_{stat} \). Compared with level accuracy, EEMD-RLPP and EEMD-ARIMA-RLPP performed satisfactory on direction accuracy. This implies that level accuracy is not necessarily endowed with high directional prediction accuracy. In all, EEMD-LPP and EEMD-ARIMA-LPP were the most accurate and also achieved the highest directional accuracy. This indicates the two models have similar powerful forecasting capability.

Based on the DM test result shown in Table 6, at a 5% significance level, the EEMD-LPP-ARIMA and EEMD-LPP model performed better than other comparative models on both Dec 14 and Dec 15, except EEMD-LPP-ARIMA outperforms EEMD-LSSVM on Dec 14 at a 10% significance level. Additionally, there is no statistically significant difference between EEMD-LPP and EEMD-ARIMA-LPP. EEMD-RLPP and EEMD-ARIMA-RLPP were inferior to EEMD-LPP and EEMD-ARIMA-LPP, and were also inferior to EEMD-ARIMA and EEMD-LSSVM on Dec 15 at a confidence level of 99%. This illustrates a less than satisfying performance by the proposed EEMD-RLPP and EEMD-ARIMA-RLPP model. The introduction of regularization techniques did not improve the forecasting ability of LPP under the EEMD method.

The forecasting results show that among the proposed four models, EEMD-LPP and EEMD-ARIMA-LPP generated the most accurate forecasts. These two models achieved similar forecasting performance, indicating that LPP performs well when forecasting HFs and LFs. EEMD-RLPP and EEMD-RLPP-ARIMA did not perform effectively, and were inferior to the benchmark EEMD-LSSVM, EEMD-ARIMA model.

The components decomposed using the EEMD were endowed with a simpler pattern and more stable fluctuations. After the phase space reconstruction, LPP more precisely approximated the hidden smooth map between the state vectors compared to the complex AI techniques. In this way, EEMD-LPP model could achieve better forecasting result. Compared to the traditional ARIMA method, LPP modeled different scales of nonlinearity in different period because of localization, leading to better performance when forecasting LFs and residue. Introducing regularization techniques to estimate LPP parameters introduces more bias, lowering the forecasting accuracy. Thus, RLPP may not effectively forecast the components decomposed by EEMD. Figure 5 shows the difference between the actual values and each one-step ahead predicted values. The predicted values were generally close to their actual values, further demonstrating the forecasting ability of EEMD-LPP and EEMD-ARIMA-LPP model.

4.5 Robustness of Parameters

The experimental results above show there are different parameter settings for different components in the LPP method. The optimal parameter settings of each component in LPP are obtained using trial and error, creating challenges for the real-world applications of the proposed model. As such, we focused on finding a robust and generalized parameter combination for all components and investigated how the parameters of the LPP method affect the forecasting result.

Here, all components are assigned the same parameters i.e. same embedding dimension \( m \), delay time τ, and nearest neighbors \( k \) to create individual forecasts. To examine the generalizability of the experimental parameter combination, an orthogonal design (OD) is used to study the effect of multi-factors and multi-level problems (Fang and Wang 1994). Here, we applied the \( L_{9} (3^{4} ) \) orthogonal array for the experiment design; the levels of the three parameters were as follows: \( m \in \{ 2,4,6\} \), \( \tau \in \{ 1,2,3\} \), \( k \in \left\{ {0.4n,0.5n,0.6n} \right\} \), where \( n \) denotes the length of the training data. Details about the orthogonal array are provided in Fang and Wang (1994) and Qin et al. (2015). Table 7 shows the \( L_{9} (3^{4} ) \) orthogonal array; Table 8 shows the experimental results of these parameter combinations for the two series. Table 9 illustrates the range analysis of the orthogonal experiment in terms of the average rank for both series.

Tables 7, 8, and 9 show that the combination (m = 4, τ = 1 and k = 0.4n) achieves the best average result of the two series. Furthermore, we compared the experimental results obtained by the combination with the parameters obtained using trial and error. Table 10 shows the results; column “T&E” in this table denotes the parameter combination selected by trial-and-error. The differences between the two combinations of the three parameters are extremely small. The optimal combination (m = 4, τ = 1 and k = 0.4n) is slightly better than the combination obtained using trial and error method in some cases. There may be an over-fitting phenomenon when using trial and error to obtain the best parameters. Thus, the proposed EEMD-LPP mode is shown to be robust for the three parameters.

4.6 Comparisons of the State of Art Approaches

In order to further investigate and demonstrate the superiority of the proposed EEMD-LPP and EEMD-ARIMA-LPP model, we compare the forecasting accuracy of the proposed models with the state of the art carbon price forecasting models. Based on data availability, we compared the proposed models and a newly adaptive multi-scale ensemble learning paradigm proposed by Zhu et al. (2016). For a fair comparison, we used the same historical observations for training and testing to obtain an objective comparison result and the LPP parameters are selected as the experiential parameter mentioned above. The comparison results are illustrated in Table 11.

Table 11 shows that EEMD-LPP and EEMD-ARIMA-LPP are better than the EEMD-HLT-Σ model with respect to both level and directional forecasting. This further demonstrates the superiority of the proposed EEMD-LPP and EEMD-ARIMA-LPP and the effectiveness of the experiential parameter combination.

5 Concluding Remarks

To improve the forecasting performance of carbon price time series, which have highly non-linear and non-stationary characteristics, we proposed four hybrid forecasting models based on the principle of “Divide-and-Conquer.” The proposed models use the popular and effective EEMD method as decomposition tool. To investigate the forecasting capability of these newly proposed models, three widely used forecasting models were used as benchmarks: EEMD-SVR, EEMD-ARIMA, and EEMD-ANN. The empirical study shows that EEMD-LPP and EEMD-ARIMA-LPP model statistically outperform other models with higher forecasting accuracy with respect to both level and directional forecasting. The EEMD-LPP and EEMD-ARIMA-LPP are promising to forecast carbon prices; in contrast, EEMD-RLPP and EEMD-RLPP-ARIMA do not perform effectively. This may mainly be because the components decomposed using the EEMD method have stationary fluctuations and less noisy data. Thus, introducing regularization techniques to estimate LPP parameters may introduce more bias and lower the forecasting accuracy. Additionally, several experiments were conducted to analyze the robustness of the parameters in LPP. We further compared the proposed EEMD-LPP and EEMD-ARIMA-LPP model with state-of-the-art approaches using the same historical observations. The experimental results further demonstrated the effectiveness of the proposed EEMD-LPP and EEMD-ARIMA-LPP models. In our future work, we are interested in extending the proposed approach to a multi-step-ahead model.

Notes

References

Atsalakis, G. S. (2016). Using computational intelligence to forecast carbon prices. Applied Soft Computing,43, 107–116.

Benz, E., & Trück, S. (2009). Modeling the price dynamics of CO2 emission allowances. Energy Economics,31(1), 4–15.

Beran, J., Feng, Y., Ghosh, S., & Sibbertsen, P. (2002). On robust local polynomial estimation with long-memory errors. International Journal of Forecasting,18(2), 227–241.

Box, G. E., Jenkins, G. M., Reinsel, G. C., & Ljung, G. M. (2015). Time series analysis: Forecasting and control. Hoboken: Wiley.

Byun, S. J., & Cho, H. (2013). Forecasting carbon futures volatility using GARCH models with energy volatilities. Energy Economics,40, 207–221.

Chen, Q., Huang, N., Riemenschneider, S., & Xu, Y. (2006). A B-spline approach for empirical mode decompositions. Advances in Computational Mathematics,24(1), 171–195.

Chen, C. F., Lai, M. C., & Yeh, C. C. (2012). Forecasting tourism demand based on empirical mode decomposition and neural network. Knowledge-Based Systems,26, 281–287.

Chen, X., Wang, Z., & Wu, D. D. (2013). Modeling the price mechanism of carbon emission exchange in the European Union emission trading system. Human and Ecological Risk Assessment: An International Journal,19(5), 1309–1323.

Chevallier, J. (2009). Carbon futures and macroeconomic risk factors: A view from the EU ETS. Energy Economics,31(4), 614–625.

Chevallier, J. (2010). Volatility forecasting of carbon prices using factor models. Economics Bulletin,30(2), 1642–1660.

Chevallier, J. (2011a). A model of carbon price interactions with macroeconomic and energy dynamics. Energy Economics,33(6), 1295–1312.

Chevallier, J. (2011b). Evaluating the carbon-macroeconomy relationship: Evidence from threshold vector error-correction and Markov-switching VAR models. Economic Modelling,28(6), 2634–2656.

Diebold, F. X., & Mariano, R. S. (2002). Comparing predictive accuracy. Journal of Business & economic statistics,20(1), 134–144.

Ellerman, A. D., & Buchner, B. K. (2007). The European Union emissions trading scheme: Origins, allocation, and early results. Review of Environmental Economics and Policy,1(1), 66–87.

Fan, X., Li, S., & Tian, L. (2015). Chaotic characteristic identification for carbon price and an multi-layer perceptron network prediction model. Expert Systems with Applications,42(8), 3945–3952.

Fan, L., Pan, S., Li, Z., & Li, H. (2016). An ICA-based support vector regression scheme for forecasting crude oil prices. Technological Forecasting and Social Change,112, 245–253.

Fan, J., & Yao, Q. (2008). Nonlinear time series: Nonparametric and parametric methods. Berlin: Springer.

Fang, K.-T., & Wang, Y. (1994). Number-theoretic methods in statistics. NewYork: Chapman & Hall.

Farmer, J. D., & Sidorowich, J. J. (1987). Predicting chaotic time series. Physical Review Letters,59(8), 845.

Farmer, J. D., & Sidorowichl, J. J. (1988). Exploiting chaos to predict the future and reduce noise. In Evolution, learning and cognition (pp. 277–330). Singapore: World Scientific Publishing Co Pte Ltd.

Feng, Z. H., Zou, L. L., & Wei, Y. M. (2011). Carbon price volatility: Evidence from EU ETS. Applied Energy,88(3), 590–598.

Fezzi, C., & Bunn, D. W. (2009). Structural interactions of European carbon trading and energy prices. The Journal of Energy Markets,2(4), 53–69.

García-Martos, C., Rodríguez, J., & Sánchez, M. J. (2013). Modelling and forecasting fossil fuels, CO2 and electricity prices and their volatilities. Applied Energy,101, 363–375.

Hintermann, B. (2010). Allowance price drivers in the first phase of the EU ETS. Journal of Environmental Economics and Management,59(1), 43–56.

Hoerl, A. E., & Kennard, R. W. (1970). Ridge regression: Biased estimation for nonorthogonal problems. Technometrics,12(1), 55–67.

Huang, N. E., Chen, X., Lo, M. T., & Wu, Z. (2011). On Hilbert spectral representation: A true time-frequency representation for nonlinear and nonstationary data. Advances in Adaptive Data Analysis,3(01n02), 63–93.

Huang, N. E., Shen, Z., Long, S. R., Wu, M. C., Shih, H. H., Zheng, Q., et al. (1998). The empirical mode decomposition and the Hilbert spectrum for nonlinear and non-stationary time series analysis. In Proceedings of the royal society of London A: Mathematical, physical and engineering sciences (Vol. 454, No. 1971, pp. 903–995). The Royal Society.

Jotzo, F., & Löschel, A. (2014). Emissions trading in China: Emerging experiences and international lessons. Energy Policy,75, 3–8.

Koop, G., & Tole, L. (2013). Forecasting the European carbon market. Journal of the Royal Statistical Society: Series A (Statistics in Society),176(3), 723–741.

Kugiumtzis, D., Lingjærde, O. C., & Christophersen, N. (1998). Regularized local linear prediction of chaotic time series. Physica D: Nonlinear Phenomena,112(3–4), 344–360.

Lo, A. Y. (2016). Challenges to the development of carbon markets in China. Climate Policy,16(1), 109–124.

Lu, Z. Q. (2002). Local polynomial prediction and volatility estimation in financial time series. In A. S. Soofi & L. Cao (Eds.), Modelling and forecasting financial data. Studies in computational finance (Vol. 2, pp. 115–135). Boston, MA: Springer.

Neuhoff, K., Martinez, K. K., & Sato, M. (2006). Allocation, incentives and distortions: The impact of EU ETS emissions allowance allocations to the electricity sector. Climate Policy,6(1), 73–91.

Packard, N. H., Crutchfield, J. P., Farmer, J. D., & Shaw, R. S. (1980). Geometry from a time series. Physical Review Letters,45(9), 712.

Qin, Q., Cheng, S., Zhang, Q., Wei, Y., & Shi, Y. (2015). Multiple strategies based orthogonal design particle swarm optimizer for numerical optimization. Computers & Operations Research,60, 91–110.

Qin, Q., Liu, Y., Li, X., & Li, H. (2017). A multi-criteria decision analysis model for carbon emission quota allocation in China’s east coastal areas: Efficiency and equity. Journal of Cleaner Production,168, 410–419.

Regonda, S., Rajagopalan, B., Lall, U., Clark, M., & Moon, Y. I. (2005). Local polynomial method for ensemble forecast of time series. Nonlinear Processes in Geophysics,12(3), 397–406.

Ren, Y., Suganthan, P. N., & Srikanth, N. (2015). A comparative study of empirical mode decomposition-based short-term wind speed forecasting methods. IEEE Transactions on Sustainable Energy,6(1), 236–244.

Su, L., & Li, C. (2015). Local prediction of chaotic time series based on polynomial coefficient autoregressive model. Mathematical Problems in Engineering, 2015, 901807.

Sun, G., Chen, T., Wei, Z., Sun, Y., Zang, H., & Chen, S. (2016). A carbon price forecasting model based on variational mode decomposition and spiking neural networks. Energies,9(1), 54.

Takens, F. (1981). Detecting strange attractors in turbulence. In D. Rand & L. S. Young (Eds.), Dynamical systems and turbulence, Warwick 1980. Lecture notes in mathematics (Vol. 898, pp. 366–381). Berlin: Springer.

Tang, B. J., Gong, P. Q., & Shen, C. (2017). Factors of carbon price volatility in a comparative analysis of the EUA and sCER. Annals of Operations Research,255(1–2), 157–168.

Tang, L., Wang, S., & Yu, L. (2011). EEMD-LSSVR-based decomposition-and-ensemble methodology with application to nuclear energy consumption forecasting. In Computational sciences and optimization (CSO), 2011 fourth international joint conference on (pp. 589–593). IEEE.

Tang, L., Yu, L., Wang, S., Li, J., & Wang, S. (2012). A novel hybrid ensemble learning paradigm for nuclear energy consumption forecasting. Applied Energy,93, 432–443.

Wang, W. C., Chau, K. W., Xu, D. M., & Chen, X. Y. (2015). Improving forecasting accuracy of annual runoff time series using ARIMA based on EEMD decomposition. Water Resources Management,29(8), 2655–2675.

Wei, Y. M., Wang, K., Feng, Z. H., & Cong, R. (2010). Carbon finance and carbon market: Models and empirical analysis. Beijing: Science Press.

Wu, Z., & Huang, N. E. (2009). Ensemble empirical mode decomposition: A noise-assisted data analysis method. Advances in Adaptive Data Analysis,1(01), 1–41.

Xiong, T., Bao, Y., & Hu, Z. (2013). Beyond one-step-ahead forecasting: Evaluation of alternative multi-step-ahead forecasting models for crude oil prices. Energy Economics,40, 405–415.

Yu, L., Dai, W., & Tang, L. (2016). A novel decomposition ensemble model with extended extreme learning machine for crude oil price forecasting. Engineering Applications of Artificial Intelligence,47, 110–121.

Yu, L., Wang, S., & Lai, K. K. (2008). Forecasting crude oil price with an EMD-based neural network ensemble learning paradigm. Energy Economics,30(5), 2623–2635.

Zhang, X., Lai, K. K., & Wang, S. Y. (2008). A new approach for crude oil price analysis based on empirical mode decomposition. Energy Economics,30(3), 905–918.

Zhang, J. L., Zhang, Y. J., & Zhang, L. (2015). A novel hybrid method for crude oil price forecasting. Energy Economics,49, 649–659.

Zhu, B., Shi, X., Chevallier, J., Wang, P., & Wei, Y. M. (2016). An adaptive multiscale ensemble learning paradigm for nonstationary and nonlinear energy price time series forecasting. Journal of Forecasting,35(7), 633–651.

Zhu, B., Wang, P., Chevallier, J., & Wei, Y. (2015). Carbon price analysis using empirical mode decomposition. Computational Economics,45(2), 195–206.

Zhu, B., & Wei, Y. (2013). Carbon price forecasting with a novel hybrid ARIMA and least squares support vector machines methodology. Omega,41(3), 517–524.

Acknowledgements

This paper is partly supported by the National Natural Science Foundation of China (Nos. 71874070, 71871146 and 71402103), the MOE (Ministry of Education in China) Project of Humanities and Social Science (No. 18YJA630090).

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Qin, Q., He, H., Li, L. et al. A Novel Decomposition-Ensemble Based Carbon Price Forecasting Model Integrated with Local Polynomial Prediction. Comput Econ 55, 1249–1273 (2020). https://doi.org/10.1007/s10614-018-9862-1

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10614-018-9862-1