Abstract

We propose a new randomized method for solving systems of nonlinear equations, which can find sparse solutions or solutions under certain simple constraints. The scheme only takes gradients of component functions and uses Bregman projections onto the solution space of a Newton equation. In the special case of euclidean projections, the method is known as nonlinear Kaczmarz method. Furthermore if the component functions are nonnegative, we are in the setting of optimization under the interpolation assumption and the method reduces to SGD with the recently proposed stochastic Polyak step size. For general Bregman projections, our method is a stochastic mirror descent with a novel adaptive step size. We prove that in the convex setting each iteration of our method results in a smaller Bregman distance to exact solutions as compared to the standard Polyak step. Our generalization to Bregman projections comes with the price that a convex one-dimensional optimization problem needs to be solved in each iteration. This can typically be done with globalized Newton iterations. Convergence is proved in two classical settings of nonlinearity: for convex nonnegative functions and locally for functions which fulfill the tangential cone condition. Finally, we show examples in which the proposed method outperforms similar methods with the same memory requirements.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

We consider a constrained nonlinear system of equations

where \(f:D \subset \mathbb {R}^d \rightarrow \mathbb {R}^n \) is a nonlinear differentiable function and \(C\subset D \subset \mathbb {R}^d\) is a nonempty closed convex set. Let \(S \subset C\) be the set of solutions of (1). Our aim is to design an iterative method which approximates a solution of (1) and in each step uses first-order information of just a single component function \(f_i\).

The idea of our method is as follows. Given an appropriate convex function \(\varphi :\mathbb {R}^d\rightarrow \mathbb {R}\cup \{+\infty \}\) with

our method computes the Bregman projection w.r.t. \(\varphi \) onto the solution set of the local linearization of a component function \(f_i\) around the current iterate \(x_k\). Here, the underlying distance is the Bregman distance defined by

where \(x^*\) is a subgradient of \(\varphi \) at x. That is, the method we study is given by

with

where \(i_k \in \{1,\ldots , n\}\) and \(x_k^*\) is in the subgradient \(\partial \varphi (x_k).\) Since one can show that Bregman projections are always contained in \(\textrm{dom}\ \partial \varphi \), the condition (2) guarantees that \(x_k\in C\) holds for all k and hence, if the \(x_k\) converge, they converge to a point in C.

In order for the Bregman projection \(x_{k+1}\) to exist, we need that the hyperplanes \(H_{k}\) have nonempty intersection with \(\textrm{dom}\ \varphi \). Proposition 2.3 below will show that the slightly stronger condition

is sufficient for existence and uniqueness of the Bregman projection under regularity assumptions on \(\varphi \).

If (5) is violated, we propose to compute a relaxed projection, which is always defined and inspired by the recently proposed mSPS method [23].

1.1 Related work and our contributions

1.1.1 Nonlinear Kaczmarz method and Sparse Kaczmarz

In the pioneering work by Stefan Kaczmarz [35], the idea of solving systems of equations by cycling through the separate equations and solving them incrementally was first executed on linear systems in finite dimensional spaces, an approach which is known henceforth as Kaczmarz method. In this conceptually simple method, an update is computed by selecting one equation of the system according to a rule that may be random, cyclic or adaptive, and computing an orthogonal projection onto its solution space, which is given by a hyperplane.

Recently, two completely different extensions of the Kaczmarz method have been developed. One idea was to transfer the method to systems with nonlinear differentiable functions by considering its local linearizations: In each step k, an equation \(i_k\) is chosen and the update \(x_{k+1}\) is defined as the orthogonal projection

It is easy to check that this update can be computed by

This method was studied under the names Sketched Newton-Raphson [62] or Nonlinear Kaczmarz method [58]. Convergence was shown for two kinds of mild nonlinearities, namely star convex functions [62] and functions which obey a local tangential cone condition [58].

A different kind of extension of the Kaczmarz method has been proposed by [39]. Here, the notion of projection was replaced by the (more general) Bregman projection, giving rise to the ‘sparse’ Kaczmarz method, which can find sparse solutions of the system. The method has been further extended to inconsistent systems [54], accelerated by block averaging [57] and investigated as a regularization method in Banach spaces [33]. But so far only linear systems have been addressed.

The present article unifies these two generalizations, that is, we study the case of nonlinearity and general Bregman projections onto linearizations and derive convergence rates in the two aforementioned nonlinear settings. We also demonstrate that instead of sparsity, the proposed method is able to handle simple constraints such as simplex constraints as well.

1.1.2 Stochastic Polyak step size (SPS)

One popular method for solving the finite-sum problem \(\min \frac{1}{n}\sum _{i=1}^n \ell _i(x)\) is stochastic gradient descent (SGD), which is defined by the update \(x_{k+1} = x_k - \gamma _k\nabla \ell _{i_k}(x_k)\). It is still a challenging question if there exist good choices of step sizes which are adaptive in the sense that no hyperparameter tuning is necessary. In this context, the stochastic Polyak step size (SPS)

was proposed in [38], where \(c>0\) is a fixed constant and \(\hat{\ell }_i = \inf \ell _i\). It was shown that the iterates of this method converge for convex lower bounded functions \(f_i\) for which the interpolation condition holds, meaning that there exists \({\hat{x}}\in \mathbb {R}^d\) with \( \ell _i({\hat{x}}) = \hat{\ell }_i \) for all \(i=1,\ldots ,n\). This assumption is strong, but can be fulfilled e.g. by modern machine learning applications such as non-parametric regression or over-parametrized deep neural networks [40, 64]. We cover these assumptions with our framework as a special case by requiring that the functions \(f_i\) in (1) are nonnegative, which is clear by setting \(f_i=\ell _i-\hat{\ell }_i\). The SPS method applied to \(\ell _1,...,\ell _n\) then coincides with the Nonlinear Kaczmarz method applied to \(f_1,...,f_n\).

1.1.3 Mirror descent and SPS

For incorporating additional constraints or attraction to sparse solutions into SGD, a well-known alternative to projected SGD is the stochastic mirror descent method (SMD) [3, 44, 65], which is defined by the update

Here, \(\varphi \) is a convex function with additional properties which will be refined later on, which is then called the distance generating function (DGF), \(x_k^*\) is a subgradient of \(\varphi \) at \(x_k\) and \(D_\varphi \) is the Bregman distance associated to \(\varphi \). We demonstrate that our proposed method can be reinterpreted as mirror descent with a novel adaptive step size in case that the \(f_i\) are nonnegative. Moreover, for \(\varphi (x)=\frac{1}{2}\Vert x\Vert _2^2\), we obtain back the SGD method with the stochastic Polyak step size. For general \(\varphi \), computing the step size requires the solution of a convex one-dimensional minimization problem. This is a similar situation as in the update of the stochastic dual coordinate ascent method [56], a popular stochastic variance reduced method for minimizing regularized general linear models.

The two recent independent works [23] and [60] propose to use the stochastic Polyak step size from SGD in mirror descent. This update has the advantage that it is relatively cheap to compute. However, we prove that for convex functions, our proposed method takes bigger steps in terms of Bregman distance towards the solution of (1).

We generalize the step size from [23] to the case in which the functions \(f_i\) are not necessarily nonnegative and employ this update as a relaxed projection whenever our iteration is not defined. We compare our proposed method with the method which always performs relaxed projections in our convergence analysis and experiments. As an additional contribution, we improve the analysis for the method in [23] for the case of smooth strongly convex functions \(f_i\) (Theorem 4.16).

Finally, our method is by definition scaling-invariant in the sense that a multiplicative change \(\varphi \mapsto \alpha \varphi \) of the DGF \(\varphi \) with a constant \(\alpha >0\) does not affect the method. To the best of our knowledge, this is the first mirror descent method which has this property.

1.1.4 Bregman projection methods

The idea of using Bregman projections algorithmically dates back to the seminal paper [11], which proposed to solve the feasibility problem

for convex sets \(C_i\) by iterated Bregman projections onto the sets \(C_i\). This idea initiated an active line of research [1, 4,5,6, 15,16,17,18, 36, 50] with applications in fields such as matrix theory [21], image processing [19, 20, 46] and optimal transport [9, 37]. We can view problem (1) as a feasibility problem by setting \(C_i=\{x\mid f_i(x)=0\}\). Our approach to compute Bregman projections onto linearizations has already been proposed for the case of convex inequalities \(C_i=\{x\mid f_i(x)\le 0\}\) under the name outer Bregman projections [13, 14]. Convergence of this method was studied in general Banach spaces. Obviously, the two problems coincide for convex nonnegative functions \(f_i\). However, to the best of our knowledge, convergence rates have been given recently only in the case that the \(C_i\) are hyperplanes [36]. In this paper, we derive rates in the space \(\mathbb {R}^d\). Also, we extend our analysis to the nonconvex setting for equality constraints.

1.1.5 Bregman–Landweber methods

There are a couple of works in inverse problems, typically studied in Banach spaces, which already incorporate Bregman projections into first-order methods with the aim of finding sparse solutions. Bregman projections were combined with the nonlinear Landweber iteration the first time in [55]. Later, [10] employed Bregman projections for \(L_1\)- and TV-regularization. A different nonlinear Landweber iteration with Bregman projections for sparse solutions of inverse problems was investigated in [41]. All of these methods use the full Jacobian Df(x) in each iteration. In [32, 34], a deterministic Kaczmarz method incorporating convex penalties was proposed which performs a similar mirror update as our method, but with a different step size which does not originate from a Bregman projection. The apparently closest related method to our proposed one was recently suggested in [28], where the step size was calculated as the solution of a quite similar optimization problem, which is still different and also does not come with the motivation of a Bregman projection.

1.1.6 Sparse and Bregman–Newton methods

Finally, since our proposed method can be seen as a stochastic first-order Newton iteration, we briefly point out that a link of Newton’s method to topics like Bregman distances and sparsity has already been established in the literature. Iusem and Solodov [30] introduced a regularization of Newton’s method by a Bregman distance. Nesterov and Doikov [22] continued this work by introducing an additional nonsmooth convex regularizer. Polyak and Tremba [48] proposed a sparse Newton method which solves a minimum norm problem subject to the full Newton equation in each iteration, and presented an application in control theory [49].

1.2 Notation

For a set \(S\subset \mathbb {R}^d\), we write its interior as \(S^\circ \), its closure as \(\overline{S}\) and its relative interior as \(\textrm{ri}(S)\). The Cartesian product of sets \(S_i\subset \mathbb {R}^d\), \(i=1,...,m\), is written as  . The set \(\textrm{span}(S)\) is the linear space generated by all elements of S. We denote by \(\mathbb {1}_d\) the vector in \(\mathbb {R}^d\) with constant entries 1. For two vectors \(x,y\in \mathbb {R}^d\), we express the componentwise (Hadamard) product as \(x\cdot y\) and the componentwise logarithm and exponential as \(\log (x)\) and \(\exp (x)\). For a given norm \(\Vert \cdot \Vert \) on \(\mathbb {R}^d\), by \(\Vert \cdot \Vert _*\) we denote the corresponding dual norm, which is given as

. The set \(\textrm{span}(S)\) is the linear space generated by all elements of S. We denote by \(\mathbb {1}_d\) the vector in \(\mathbb {R}^d\) with constant entries 1. For two vectors \(x,y\in \mathbb {R}^d\), we express the componentwise (Hadamard) product as \(x\cdot y\) and the componentwise logarithm and exponential as \(\log (x)\) and \(\exp (x)\). For a given norm \(\Vert \cdot \Vert \) on \(\mathbb {R}^d\), by \(\Vert \cdot \Vert _*\) we denote the corresponding dual norm, which is given as

2 Basic notions and assumptions

We collect some basic notions and results as well as our standing assumptions for problem (1).

2.1 Convex analysis and standing assumptions

Let \(\varphi :\mathbb {R}^d\rightarrow \overline{\mathbb {R}}:=\mathbb {R}\cup \{+\infty \}\) be convex with

We also assume that \(\varphi \) is lower semicontinuous, i.e. \(\varphi (x) \le \liminf _{y\rightarrow x} \varphi (y)\) holds for all \(x\in \mathbb {R}^d\), and supercoercive, meaning that

The subdifferential at a point \(x\in \textrm{dom}\ \varphi \) is defined as

An element \(x^*\in \partial \varphi (x)\) is called a subgradient of \(\varphi \) at x. The set of all points x with \(\partial \varphi (x)\ne \emptyset \) is denoted by \(\textrm{dom}\ \partial \varphi \). Note that the relative interior of \(\textrm{dom}\ \varphi \) is a convex set, while \(\textrm{dom}\ \partial \varphi \) may not be convex, for a counterexample see [52, p.218]. In general, convexity of \(\varphi \) guarantees the inclusions \(\textrm{ri}\ \textrm{dom}\ \varphi \subset \textrm{dom}\ \partial \varphi \subset \textrm{dom}\ \varphi \). For later purposes, we require that \( \textrm{dom}\ \partial \varphi = \textrm{ri}\ \textrm{dom}\ \varphi , \) which will be fulfilled in all our examples. We further assume that \(\varphi \) is essentially strictly convex, i.e. strictly convex on \(\textrm{ri}\ \textrm{dom}\ \varphi \). (In general, this property only means strict convexity on every convex subset of \(\textrm{dom}\ \partial \varphi \).). The convex conjugate (or Fenchel-Moreau-conjugate) of \(\varphi \) is defined by

The function \(\varphi ^*\) is convex and lower semicontinuous. Moreover, the essential strict convexity and supercoercivity imply that \(\textrm{dom}\ \varphi ^*=\mathbb {R}^d\) and \(\varphi ^*\) is differentiable, since \(\varphi \) is essentially strictly convex and supercoercive, see [7, Proposition 14.15] and [52, Theorem 26.3].

The Bregman distance \(D_\varphi ^{x^*}(x,y)\) between \(x,y\in \textrm{dom}\ \varphi \) with respect to \(\varphi \) and a subgradient \(x^*\in \partial \varphi (x)\) is defined as

Using Fenchel’s equality \(\varphi ^*(x^*)=\langle x^*,x\rangle - \varphi (x)\) for \(x^*\in \partial \varphi (x)\), one can rewrite the Bregman distance with the conjugate function as

If \(\varphi \) is differentiable at x, then the subdifferential \(\partial \varphi (x)\) contains the single element \(\nabla \varphi (x)\) and we can write

The function \(\varphi \) is called \(\sigma \)-strongly convex w.r.t. a norm \(\Vert \cdot \Vert \) for some \(\sigma >0\), if for all \(x,y\in \textrm{dom}\ \partial \varphi \) it holds that \(\frac{\sigma }{2}\Vert x-y\Vert ^2\le D_\varphi ^{x^*}(x,y)\).

In conclusion, we require the following standing assumptions for problem (1):

Assumption 1

-

(i)

The set C is nonempty, convex and closed.

-

(ii)

It holds that \(\varphi :\mathbb {R}^d\rightarrow \overline{\mathbb {R}}\) is essentially strictly convex, lower semicontinuous and supercoercive.

-

(iii)

The function \(\varphi \) fulfills that \(\overline{\textrm{dom}\ \partial \varphi } = C\) and \(\textrm{dom}\ \partial \varphi = \textrm{ri}\ \textrm{dom}\ \varphi \).

-

(iv)

For each \(x\in \textrm{dom}\ \varphi \) and each sequence \(x_k\in \textrm{dom}\ \partial \varphi \) with \(x_k^*\in \partial \varphi (x_k)\) and \(x_k\rightarrow x\) it holds that \(D_\varphi ^{x_k^*}(x_k,x)\rightarrow 0\).

-

(v)

The function \(f:D\rightarrow \mathbb {R}^n\) is continuously differentiable with \(D\supset C\).

-

(vi)

The set of solutions S of (1) is non-empty, that is \(S:= C\cap f^{-1}(0)\ne \emptyset \).

2.2 Bregman projections

Definition 2.1

Let \(E\subset \mathbb {R}^d\) be a nonempty convex set, \(x\in \textrm{dom}\ \partial \varphi \) and \(x^*\in \partial \varphi (x)\). Assume that \(E\cap \textrm{dom}\ \varphi \ne \emptyset \). The Bregman projection of x onto E with respect to \(\varphi \) and \(x^*\) is the point \(\Pi _{\varphi , E}^{x^*}(x)\in E\cap \textrm{dom}\ \varphi \) such that

Existence and uniqueness of the Bregman projection is guaranteed if \(E\cap \textrm{dom}\ \varphi \ne \emptyset \) by our standing assumptions due to the fact that the function \(y\mapsto D_\varphi ^{x^*}\big (x, y\big )\) is lower bounded by zero, coercive, lower semicontinuous and strictly convex. For the standard quadratic \(\varphi =\frac{1}{2}\Vert \cdot \Vert _2^2\), the Bregman projection is just the orthogonal projection. Note that if \(E\cap \textrm{dom}\ \varphi =\emptyset \), then for all \(y\in E\) it holds that \(D_\varphi ^{x^*}(x,y)=+\infty \).

The Bregman projection can be characterized by variational inequalities, as the following lemma shows.

Lemma 2.2

( [39]) A point \(z\in E\) is the Bregman projection of x onto E with respect to \(\varphi \) and \(x^*\in \partial \varphi (x)\) if and only if there exists \(z^*\in \partial \varphi (z)\) such that one of the following conditions is fulfilled:

-

(i)

\(\langle z^*-x^*,z-y\rangle \le 0 \qquad \text {for all } y\in E,\)

-

(ii)

\(D_\varphi ^{z^*}(z,y) \le D_\varphi ^{x^*}(x,y) - D_\varphi ^{x^*}(x,z) \qquad \text {for all } y\in E.\)

We consider Bregman projections onto hyperplanes

and halfspaces

and analoguously we define \(H^\ge (\alpha ,\beta )\).

The following proposition shows that the Bregman projection onto a hyperplane can be computed by solving a one-dimensional dual problem under a qualification constraint. We formulate this one-dimensional dual problem under slightly more general assumptions than previous versions, e.g. we neither assume smoothness of \(\varphi \) (as e.g. [5, 6, 11, 17, 21]) nor strong convexity of \(\varphi \) (as in [39]).

Proposition 2.3

Let \(\varphi \) fulfill Assumption 1(ii). Let \(\alpha \in \mathbb {R}^d\setminus \{0\}\) and \(\beta \in \mathbb {R}\) such that

Then, for all \(x\in \textrm{dom}\ \partial \varphi \) and \(x^*\in \partial \varphi (x)\), the Bregman projection \(\Pi _{\varphi , H(\alpha ,\beta )}^{x^*}(x)\) exists and is unique. Moreover, the Bregman projection is given by

where \(x_+^* = x^* - {\hat{t}}\alpha \in \partial \varphi (x_+)\) and \({\hat{t}}\) is a solution to

Proof

The assumptions guarantee that \(\varphi ^*\) is finite and differentiable on the full space \(\mathbb {R}^d\). We already know that the Bregman projection \(x_+\) exists and is unique. Fermat’s condition applied to the projection problem \(\min _{y\in H(\alpha ,\beta )} D_\varphi ^{x^*}(x,y)\) states that

where the indicator function \(\iota _M:\mathbb {R}^d\rightarrow \overline{\mathbb {R}}\) is defined by

Applying subdifferential calculus [52, Theorem 23.8], where we make use of the fact that \(H(\alpha ,\beta )\) is a polyhedral set, we conclude that \(x_+\in \textrm{dom}\ \partial \varphi \) and

where we used the fact that it holds \(\partial \iota _{H(\alpha ,\beta )} = \textrm{span}(\{\alpha \})\) on \(H(\alpha ,\beta )\). Using subgradient inversion \((\partial \varphi )^{-1} = \nabla \varphi ^*\), we arrive at the identity

with some \({\hat{t}}\in \mathbb {R}\). Inserting this equation into the constraint \(\langle x_+,\alpha \rangle = \beta \), we conclude that \({\hat{t}}\) minimizes (8). \(\square \)

3 Realizations of the method

To solve problem (1), we propose the following method. In each step, we randomly pick a component equation \(f_{i_k}(x)=0\) and consider the set of zeros of its linearization around the current iterate \(x_k\). This set is just the hyperplane

where

For later purposes, we also consider the halfspace

As the update \(x_{k+1}\), we now propose to take the Bregman projection of \(x_k\) onto the set \(H_k\) using Proposition 2.3, which is possible if

The update is then given by \(x_{k+1}^* = x_k^*-t_{k,\varphi }\nabla f_{i_k}(x_k)\) and \(x_{k+1}=\nabla \varphi ^*(x_{k+1}^*)\) with

Note that, although the Bregman projection \(x_{k+1}\) is unique, \(t_{k,\varphi }\) might not be unique. If (10) is not fulfilled, we define an update inspired from [23] by setting \(x_{k+1} = \nabla \varphi ^*(x_k^*-t_{k,\sigma }\nabla f_{i_k}(x_k))\) with the Polyak-like step sizeFootnote 1

with some norm \(\Vert \cdot \Vert _*\) and some constant \(\sigma >0\). We will refer to the resulting update as the relaxed projection. We note that it is always defined and gives a new point \(x_{k+1}\in \textrm{dom}\ \partial \varphi \). However, \(x_{k+1}\) does not lie in \(H_k\): Indeed, if it would lie in \(H_k\cap \textrm{dom}\ \partial \varphi \), this would contradict the assumption that (10) is not fulfilled. In [23], the similar step size \(t = \sigma \frac{f_{i_k}(x_k) - \inf _{i_k} f_{i_k}}{c\Vert \nabla f_{i_k}(x_k)\Vert _*^2}\) with some constant \(c>0\) was proposed for minimization with mirror descent under the name mirror-stochastic Polyak step size (mSPS). Both the projection and relaxed projection guarantee that \(x_{k+1}\in \textrm{dom}\ \varphi \) and deliver a new subgradient \(x_{k+1}^*\) for the next update. The steps are summarized in Algorithm 1 below.

As an alternative method, we also consider the method which always chooses the step size \(t_{k,\sigma }\) from (12).

Note that the problem (11) is convex and one-dimensional and can be solved with the bisection method, if \(\varphi ^*\) is a \(C^1\)-function, or (globalized) Newton methods, if \(\varphi ^*\) is a \(C^2\)-function, see Appendix A. In Examples 3.2, 3.3, 3.4 and 3.5, we show how to implement the steps of Algorithm 1 for typical constraints.

Remark 3.1

(Choice of \(\sigma \) in Algorithm 1 and Algorithm 2) In this paper, we focus on the case that \(\varphi \) is a strongly convex function. In this setting, we propose to choose the parameter \(\sigma \) in Algorithm 1 and Algorithm 2 as the modulus of strong convexity, since in this case our theorems in Sect. 3 guarantee convergence.

Example 3.2

(Unconstrained case and sparse Kaczmarz) In the unconstrained case \(\textrm{dom}\ \varphi = \mathbb {R}^d\), condition (10) is always fulfilled whenever \(\nabla f_{i_k}(x_k)\ne 0\).

For \(\varphi (x) = \frac{1}{2}\Vert x\Vert _2^2\), we obtain back the nonlinear Kaczmarz method [43, 58, 62]

For the function

Assumptions 1(i-iv) are fulfilled and it holds that \(\varphi ^*(x) = \tfrac{1}{2} \Vert S_\lambda (x)\Vert _2^2\) with the soft-shrinkage function

Hence, in this case lines 9, 11 and 12 of Algorithm 1 read

For affine functions \(f_{i}(x) = \langle a^{(i)},x\rangle - b_{i}\) with \(a^{(i)}\in \mathbb {R}^d\), \(b_{i}\in \mathbb {R}\), Algorithm 1 has been studied under the name Sparse Kaczmarz method and converges to a sparse solution of the linear system \(f(x)=0\), see [39, 53]. The update with \(t_k\) from (11) is also called the Exact step Sparse Kaczmarz method. The linesearch problem can be solved exactly with reasonable effort, as \(\varphi ^*\) is a continuous piecewise quadratic function with at most 2d discontinuities. The corresponding solver is based on a sorting procedure and has complexity \({\mathcal {O}}(d\log (d))\), see [39] for details.

Example 3.3

(Simplex constraints) We consider the probability simplex

The restriction of the negative entropy function

fulfills Assumption 1(i-iv) and is 1-strongly convex with respect to \(\Vert \cdot \Vert _1\) due to Pinsker’s inequality, see [25, 47] and [8, Example 5.27]. We have that

We can characterize condition (10) easily as follows: The hyperplane \(H(\alpha ,\beta )\) intersects \(\textrm{dom}\ \partial \varphi =\Delta ^{d-1}_+\) if and only if

-

\(\alpha = \beta \mathbb {1}_d\) or

-

there exist \(r,s\in \{1,..., d\}\) with \(\alpha _r<\beta <\alpha _s\).

This condition is quickly established by the intermediate value theorem and can be easily checked during the method. When verifying the condition in practice, in case of instabilities one may consider the restricted index set

for some positive \(\delta \).

The Bregman distance induced by \(\varphi \) is the Kullback–Leibler divergence for probability vectors

The convex conjugate of \(\varphi \) is the log-sum-exp-function \( {\varphi }^*(p) = \log \big (\sum \limits _{i=1}^d e^{p_i}\big ). \) Since \(\varphi \) is differentiable, the steps of Algorithm 1 can be rewritten by substituting \(x_k^*\) by \(\nabla \varphi (x_k)=1+\log (x_k)\). Denoting the ith component of an iterate \(x_{l}\) by \(x_{l,i}\), lines 9, 11 and 12 of Algorithm 1 read

where multiplication and exponentiation of vectors are understood componentwise. The method (16) is known with the name exponentiated gradient descent or entropic mirror descent, provided that \(t_k\) is nonnegative. We claim that our proposed step size \(t_{k,\varphi }\) is new. Note that some convex polyhedra, such as \(\ell ^1\)-balls, can be transformed to \(\Delta ^{d'-1}\) for some \(d'\in \mathbb {N}\) by writing a point as a convex combination of certain extreme points [23].

Example 3.4

(Cartesian products of constraints) For \(i\in \{1,...,m\}\), let \(\varphi _i\) be a DGF for \(C_i\subset D_i\subset \mathbb {R}^{d_i}\) fulfilling Assumption 1(i-iv) and let  fulfill Assumption 1(v-vi). Then

fulfill Assumption 1(v-vi). Then

is a DGF for  fulfilling Assumption 1(i-iv) with

fulfilling Assumption 1(i-iv) with

Denoting the ith component of an iterate \(x^{(*)}_l\) by \(x^{(*)}_{l,i}\), the lines 9, 11 and 12 of Algorithm 1 for \(i\in \{1,...,m\}\) read as follows:

where \(\nabla _i\) stands for the gradient w.r.t. the ith block of variables.

Finally, we give a suggestion which constant \(\sigma \) and norm \(\Vert \cdot \Vert _\infty \) should be used in Algorithm 2/ line 10 in Algorithm 1. Let us assume that \(\varphi \) is \(\sigma _i\)-strongly convex w.r.t. a norm \(\Vert \cdot \Vert _{(i)}\) on \(\mathbb {R}^{d_i}\). Then, the function \(\varphi \) is \(\sigma \)-strongly convex with \(\sigma =\min _{i=1,...,m}\sigma _i\) w.r.t. the mixed norm

Indeed, for all x, y with \(x_i,y_i\in \mathbb {R}^{d_i}\) it holds that

A quick calculation using Cauchy-Schwarz’ inequality shows that the dual norm of \(\Vert \cdot \Vert \) is given by

where \(\Vert \cdot \Vert _{(i,*)}\) is the dual norm of \(\Vert \cdot \Vert _i\) on \(\mathbb {R}^{d_i}\). Hence, we recommend to use Algorithm 2 with (17) and \(\sigma =\min \limits _{i=1,...,m}\sigma _i\), if \(\varphi _i\) is \(\sigma _i\)-strongly convex w.r.t. \(\Vert \cdot \Vert _{(i)}\).

Example 3.5

(Two-fold Cartesian product of simplex constraints) As a particular instance of Example 3.4 we consider the 2-fold product of the probability simplex \(C_i=\Delta ^{d-1}\), \(i\in \{1,2\}\) with \(\varphi _i=\varphi \) from Example 3.3. The properties from Assumption 1 are inherited from the \(\varphi _i\). We denote the iterates of Algorithm 1 by \(x_k,y_k\in \Delta ^{d-1}\) and address its components by \(x_{k,i}, y_{k,i}\) for \(i=1,...,d\). Similar to Example 3.3, the steps of the method can be rewritten as

where \(\nabla _x\) stands for the gradient w.r.t. x and \(\nabla _y\) for the gradient w.r.t. y. Also here, we can give a characterization of condition (10): For \(\alpha =(\alpha _1, \alpha _2)\) with \(\alpha _1, \alpha _2\in \mathbb {R}^d\) and \(\beta \in \mathbb {R}\), the hyperplane \(H(\alpha ,\beta )\) intersects \(\textrm{dom}\ \partial \varphi = \Delta _+^{d+1}\times \Delta _+^{d+1}\) if and only if for \((i,j)=(1,2)\) or \((i,j)=(2,1)\) one of the following conditions is fulfilled:

-

\(\alpha _i = c\mathbb {1}_d\) with some \(c\in \mathbb {R}\) and \(\alpha _j= (\beta -c)\mathbb {1}_d\),

-

\(\alpha _i = c\mathbb {1}_d\) with some \(c\in \mathbb {R}\) and there exist \(r, s\in \{1,...,d\}\) with \(\alpha _{j,r}< \beta - c < \alpha _{j,s}\) or

-

\(\big ] \min \alpha _i, \max \alpha _i \big [ \ \cap \ \big ] \beta - \max \alpha _j, \beta - \min \alpha _j \big [ \ \ne \ \emptyset \).

To prove this condition, we can invoke Proposition 2.3 which states that (10) is fulfilled if and only if the objective function g from (8) has a minimizer. Next, we note that, for each \(c\in \mathbb {R}\), the objective in (18) can be rewritten as

and a case-by-case analysis shows that g has a minimizer if and only if one of the above assertions is fulfilled. Note that also the here discussed condition can be easily checked during the method. We remind that, in case of instabilities one may consider the restricted index set

for some positive \(\delta \). As derived in Example 3.4, in Algorithm 2/ line 10 of Algorithm 1 we use \(\sigma =1\) and \(\Vert u^*\Vert _* = \sqrt{\Vert u_1^*\Vert _\infty ^2 + \Vert u_2^*\Vert _\infty ^2}\).

4 Convergence

In this section we do the convergence analysis of Algorithm 1.

At first, we characterize fixed points of Algorithm 1 and Algorithm 2 and provide necessary lemmas for the subsequent analysis. In Sect. 4.1, we prove that for nonnegative star-convex functions \(f_i\), condition (10) is always fulfilled and the step size (11) is better than the relaxed step size (12) in the sense that it results in an iterate with a smaller Bregman distance to all solutions of (1). Finally, we present convergence results for Algorithm 1 for this setting. In Sect. 4.2, we prove convergence in a second setting, namely in the case that the functions \(f_i\) fulfill a local tangential cone condition as in [34, 41, 58].

As a first result, we determine the fixed points of Algorithm 1. The proposition states in particular that, in the unconstrained case \(\textrm{dom}\ \varphi =\mathbb {R}^d\), fixed points are exactly the stationary points of the least-squares function \(\Vert f(x)\Vert _2^2\).

Proposition 4.1

Let Assumption 1 hold and let \(x\in \textrm{dom}\ \partial \varphi \) and \(x^*\in \partial \varphi (x)\). The pair \(\big (x,x^*\big )\) is a fixed point of Algorithm 1 if and only if for all \(i\in \{1,...,n\}\) it holds that \(f_i(x) = 0\) or \(\nabla f_i(x)=0\).

Proof

If \(f_i(x)=0\) or \(\nabla f_i(x)=0\) holds for all \(i\in \{1,...,n\}\), then \((x,x^*)\) is a fixed point by definition of the steps.

Next, we assume that \(x\in \textrm{dom}\ \partial \varphi \) is a fixed point of Algorithm 1 and \(\nabla f_i(x)\ne 0\). First, assume that condition (10) is not fulfilled, then the update for \(x^*\) shows that \(t_{k,\sigma }=0\), since \(\nabla f_i(x)\ne 0\), and hence, \(f_i(x)=0\). Finally, we assume that condition (10) holds. Then, from Proposition 2.3 we know that Algorithm 1 computes the Bregman projection \(x = \Pi ^{x^*}_{\varphi ,H}(x)\) with

But this means that \(x\in H\) and hence, \(f_i(x) = 0\) holds also in this case. \(\square \)

The following fact will be useful in the convergence analysis. It shows that Algorithm 1 performs a mirror descent step whenever \(f_i(x)>0\), and a mirror ascent step whenever \(f_i(x)<0\).

Lemma 4.2

Consider the kth iterate \(x_k\) of Algorithm 1 and consider the case that \(f_{i_k}(x_k)\ne 0\) and \(\nabla f_{i_k}(x_k)\ne 0\). Let Assumption 1 hold. Then, the step size \(t_k\) in Algorithm 1 fulfills

Proof

If condition (10) is not fulfilled, the assertion is clear by definition of the step size. Next, we assume that (10) holds. Then, the function

is minimized by \(t_{k,\varphi }\). (Note that the expression is indeed fully determined by \(i_k\) and \(x_k^*\) by the fact that \(x_k=\nabla \varphi ^*(x_k^*\))). We compute

Since \(g_{i_k,x_k^*}\) is convex, its derivative is monotonically increasing and it vanishes at \(t_{k,\varphi }\). Since it holds \(f_{i_k}(x_k)\ne 0\) by assumption, we conclude that \(t_{k,\varphi }\) and \(f_{i_k}(x_k)\) have the same sign. \(\square \)

To exploit strong convexity and smoothness, we will use the following.

Lemma 4.3

If \(\varphi :\mathbb {R}^d\rightarrow \mathbb {R}\) is proper, convex and lower semicontinuous, then the following statements are equivalent:

-

(i)

\(\varphi \) is \(\sigma \)-strongly convex w.r.t. \(\Vert \cdot \Vert \).

-

(ii)

For all \(x,y\in \mathbb {R}^d\) and \(x^*\in \partial \varphi (x)\), \(y^*\in \partial \varphi (y)\),

$$\begin{aligned} \langle x^*-y^*, x-y\rangle \ge \sigma \Vert x-y\Vert ^2. \end{aligned}$$ -

(iii)

The function \(\varphi ^*\) is \(\tfrac{1}{\sigma }\)-smooth w.r.t. \(\Vert \cdot \Vert _*\).

Proof

See [63, Corollary 3.5.11 and Remark 3.5.3]. \(\square \)

Lemma 4.4

If \(\varphi :\mathbb {R}^d\rightarrow \mathbb {R}\) is convex and lower semicontinuous, then the following statements are equivalent:

-

(i)

\(\varphi \) is L-smooth w.r.t. a norm \(\Vert \cdot \Vert \),

-

(ii)

\(\varphi (y) \le \varphi (x) + \langle \nabla \varphi (x),y-x\rangle + \frac{L}{2} \Vert x-y\Vert ^2\) for all \(x,y\in \mathbb {R}^d\),

-

(iii)

\(\langle \nabla \varphi (y) - \nabla \varphi (x),y-x\rangle \le L\Vert x-y\Vert ^2\) for all \(x,y\in \mathbb {R}^d\).

Proof

See [63, Corollary 3.5.11 and Remark 3.5.3]. \(\square \)

4.1 Convergence for nonnegative star-convex functions

In this subsection we assume in addition that the functions \(f_i\) are either nonnegative and star-convex or affine.

Definition 4.5

([45]) Let \(f:D\rightarrow \mathbb {R}\) be differentiable. We say that f is called star-convex, if the set \(\mathop {\mathrm {\arg \!\min }}\limits f\) is nonempty and for all \(x\in D\) and \({\hat{x}}\in \mathop {\mathrm {\arg \!\min }}\limits f\) it holds that

Moreover, we call f strictly star-convex, if the above inequality is strict, and \(\mu \)- strongly star-convex relative to \(\varphi \), if for all \(x\in D\), \(x^*\in \partial \varphi (x)\) and \({\hat{x}}\in \mathop {\mathrm {\arg \!\min }}\limits f\) it holds that

We recall that the first assumption of nonnegativity and star-convexity covers two settings:

-

Minimizing a sum-of-terms

$$\begin{aligned} \min \frac{1}{n} \sum _{i=1}^n f_i(x)\qquad \text {s.t. } x\in C, \end{aligned}$$(21)under the interpolation assumption

$$\begin{aligned} \text { }\exists x: \quad x\in \bigcap _{i=1}^n \mathop {\mathrm {\arg \!\min }}\limits f_i|_C, \end{aligned}$$(22)where \(f_i\) is a star-convex function with known optimal value \({\hat{f}}_i\) on C. Under the interpolation assumption every point in the intersection on the right hand side of (22) is a solution to (21).

Furthermore, we will construct a sequence which converges to this intersection point by applying Algorithm 1 to the nonnegative function \({\tilde{f}}\) where \({\tilde{f}}_i = f_i|_C - {\hat{f}}_i\). When \(n=1\), we cover the setting of mirror descent for the problem

$$\begin{aligned} \min f(x) \quad \text {s.t. } x\in C \end{aligned}$$(23)with known optimal value \({\hat{f}}\).

-

Systems of nonlinear equations

$$\begin{aligned} f(x) = 0 \qquad \text {s.t. } x\in C \end{aligned}$$with star-convex component functions \(f_i\), where we apply Algorithm 1 to \(f_i^+ = \max (f_i,0)\). Note that \(f_i^+\) is not differentiable only at points x with \(f_i(x)=0\), which is anyway checked during the method.

Precisely, we will use the following assumption.

Assumption 2

For each \(f_i\) one of the following conditions is fulfilled:

-

(i)

\(f_i\) is nonnegative and star-convex and it holds that \(f_i^{-1}(0) \cap \textrm{dom}\ \partial \varphi \ne \emptyset \),

-

(ii)

\(f_i\) is nonnegative and strictly star-convex or

-

(iii)

\(f_i\) is affine.

The first theorem states that Algorithm 1 always computes nonrelaxed Bregman projections under Assumption 2 outside of the fixed points.

Theorem 4.6

Let \(\big (x_k,x_k^*\big )\) be given by Algorithm 1 and consider the case that \(f_{i_k}(x)\ne 0\) and \(\nabla f_{i_k}(x)\ne 0\). Let Assumptions 1 and 2 hold true. Then, the hyperplane \(H_k\) separates \(x_k\) and \(f_{i_k}^{-1}(0)\), the condition

holds and the Bregman projection of \(x_k\) onto \(H_k\) is defined, namely it holds that

In particular, Algorithm 1 always chooses the step size \(t_k=t_{k,\varphi }\) from (11).

Proof

For \(x\in D\) we define the affine function

We consider the cases (i) and (ii) from Assumption 2 first. Here we have that \(\ell _{x_k}(x_k)=f_{i_k}(x_k)> 0\) and for all \({\hat{x}}\in f_{i_k}^{-1}(0)\), star-convexity of \(f_{i_k}\) shows that \(\ell _{x_k}({\hat{x}}) \le 0\). This means that the hyperplane \(H_k\) separates \(x_k\) and \(f_{i_k}^{-1}(0)\). By the intermediate value theorem there exists \(x^{\lambda } = \lambda x_k + (1-\lambda ){\hat{x}}\) for some \(\lambda \in [0,1[\) such that \(\ell _{x_k}(x^{\lambda })=0\). Now let us assume that Assumption 2(i) holds, so we can choose \({\hat{x}}\in \textrm{dom}\ \partial \varphi \) with \(f_{i_k}({\hat{x}})=0\). By Assumption 1(iii) it holds that \(\textrm{dom}\ \partial \varphi =\textrm{ri}\ \textrm{dom}\ \varphi \), which is a convex set and hence, \(x^{\lambda }\in \textrm{dom}\ \partial \varphi \). This proves that the claimed condition (10) is fulfilled and the update in Algorithm 1 computes a Bregman projection onto \(H_k\) by Proposition 2.3. In case of Assumption 2(ii) we have that \(\ell _{x_k}({\hat{x}})<0\) and therefore it even holds that \(\lambda \in ]0,1[\). Assumption 1(iii) guarantees that \({\hat{x}} \in \overline{\textrm{dom}\ \varphi }\) and \(x_k\in \textrm{dom}\ \partial \varphi = \textrm{ri}\ \textrm{dom}\ \varphi \). Hence, we have \(x^{\lambda }\in \textrm{ri}\ \textrm{dom}\ \varphi \) by [52, Theorem 6.1], which again implies that \(x^{\lambda }\in \textrm{dom}\ \partial \varphi \) by Assumption 1(iii). This proves that condition (10) is fulfilled in this case, too.

Under Assumption 2(iii) it holds that \(\ell _x = f_{i_k}\) for all \(x\in D\). This already implies that \(H_k\) separates \(x_k\) and \(f_{i_k}^{-1}(0)\). Condition (10) is fulfilled by the assumption that \(f_{i_k}^{-1}(0)\ne \emptyset \) and so, the update computes the claimed Bregman projection by Proposition 2.3 also in this case. \(\square \)

As an immediate consequence, we see that Algorithm 1 is stable in terms of Bregman distance.

Corollary 4.7

If \({\hat{x}}\in S\) is a solution to (1) and the assumptions from Theorem 4.6 hold true, then it holds that

Proof

By Theorem 4.6, \(x_{k+1}\) is the Bregman projection of \(x_k\) onto \(H_{k}\) with respect to \(x_k^*\). If \(f_{i_k}(x_k)=0\), then \(x_k\) is a fixed point by Proposition 4.1and the statement holds trivially. Next, assume that \(f_{i_k}(x_k)>0\). Then by Lemma 4.2, we have that \(t_k > 0\). As \(x_{k+1}\in H\), we have that \(\langle \nabla f_{i_k}(x_k), x_{k+1}-x_k\rangle =0\). We conclude for all \(y \in H_k^\le \) that

which by Lemma 2.2 shows that \(x_{k+1} = \Pi _{\varphi ,H^\le _k}^{x_k^*}(x_k)\). As \({\hat{x}}\in H^\le _k\), the claim follows from Lemma 2.2(ii). An analoguous argument shows the claim in the case \(f_{i_k}(x_k)<0\). \(\square \)

Next, we prove that the exact Bregman projection moves the iterates closer to solutions of (1) than the relaxed projections, where the distance is in the sense of the used Bregman distance (see Theorem 4.11). To that end, for \((x_k,x_k^*)\) from Algorithm 1 we define an update with variable step size

Lemma 4.8

Let \(\big (x_k,x_k^*\big )\) be given by Algorithm 1 and consider \((x_{k+1}(t),x_{k+1}^*(t))\) from (24) for some \(t\in \mathbb {R}\). Let Assumption 1 hold true. Then, for all \(x\in \mathbb {R}^d\) it holds that

Proof

Rewriting the Bregman distance as in (7) shows that

\(\square \)

Proposition 4.9

Let \(\big (x_k,x_k^*\big )\) and \(t_k\) be given by Algorithm 1 and consider \(\big (x_{k+1}(t),x_{k+1}^*(t)\big )\) from (24) for some \(t\in \mathbb {R}\). Let Assumption 1 hold true. Then, for all \(x\in \mathbb {R}^d\) it holds that

Proof

Note that \(t_k\) equals \(t_{k,\varphi }\) from (11), since condition (10) is fulfilled by Theorem 4.6. Hence, the optimality property (11) shows that for any t we have that

Lemma 4.8 then shows that for any x it holds that

We use the definitions of \(\beta _k\), \(x_{k+1}(t)\) and \(x_{k+1}^*(t)\) and get

\(\square \)

In order to draw a conclusion from Proposition 4.9, we relate the step sizes \(t_{k,\varphi }\) from (11) and \(t_{k,\sigma }\) from (12). We already know by Lemma 4.2 that both step sizes have the same sign. The next lemma gives upper and lower bounds for \(t_{k,\varphi }\) with respect to \(t_{k,\sigma }\) under additional assumptions on \(\varphi \).

Lemma 4.10

Let \(\big (x_k,x_k^*\big )\) be the iterates from Algorithm 1 and let Assumption 1 hold true. We consider \(t_{k,\varphi }\) and \(t_{k,\sigma }\) from (11) and (12) and the function \(g_{i_k,x_k^*}\) from (19).

-

(i)

If \(\varphi \) is \(\sigma \)-strongly convex w.r.t. \(\Vert \cdot \Vert \), then \(g_{i_k,x_k^*}\) is \(\frac{\Vert \nabla f_{i_k}(x_k)\Vert _*^2}{\sigma }\)-smooth and

$$\begin{aligned} |t_{k,\varphi }| \ge \sigma \frac{ |f_{i_k}(x_k)| }{\Vert \nabla f_{i_k}(x_k)\Vert _*^2} = |t_{k,\sigma }|. \end{aligned}$$(25) -

(ii)

If \(\varphi \) is M-smooth w.r.t. \(\Vert \cdot \Vert \), then \(g_{i_k,x_k^*}\) is \(\frac{\Vert \nabla f_{i_k}(x_k)\Vert _*^2}{M}\)-strongly convex and

$$\begin{aligned} |t_{k,\varphi }| \le M\frac{|f_{i_k}(x_k)|}{\Vert \nabla f_{i_k}(x_k)\Vert _*^2} = \frac{M}{\sigma } \cdot |t_{k,\sigma }|. \end{aligned}$$(26)

Proof

For \(s,t\in \mathbb {R}\) with \(s<t\) it holds that

-

(i)

If \(\varphi \) is \(\sigma \)-strongly convex w.r.t. \(\Vert \cdot \Vert \), then \(\varphi ^*\) is \(\frac{1}{\sigma }\)-smooth w.r.t. \(\Vert \cdot \Vert _*\) by Lemma 4.3(iv). Hence, by Lemma 4.4(iii) we can estimate

$$\begin{aligned} 0 \le g_{i_k,x_k^*}'(t)-g_{i_k,x_k^*}'(s) \le \frac{\Vert (t-s)\nabla f_{i_k}(x_k)\Vert _*^2}{\sigma \cdot (t-s)} = \frac{\Vert \nabla f_{i_k}(x_k)\Vert _*^2}{\sigma } \cdot (t-s), \end{aligned}$$which proves by the same lemma that \(g_{i_k,x_k^*}\) is \(\frac{\Vert \nabla f_{i_k}(x_k)\Vert _*^2}{\sigma }\)-smooth. Hence, (25) follows by choosing \(t=\max (t_{k,\varphi },0)\), \(s=\min (t_{k,\varphi },0)\) and inserting (20).

-

(ii)

Here, by Lemma 4.3 and the Fenchel-Moreau-identity \(\varphi =\varphi ^{**}\), the function \(\varphi ^*\) is \(\frac{1}{M}\)-strongly convex w.r.t. \(\Vert \cdot \Vert _*\). Using Lemma 4.3(ii) we can estimate

$$\begin{aligned} g_{i_k,x_k^*}'(t)-g_{i_k,x_k^*}'(s) \ge \frac{\Vert (t-s)\nabla f_{i_k}(x_k)\Vert _*^2}{M\cdot (t-s)} = \frac{\Vert \nabla f_{i_k}(x)\Vert _*^2}{M} \cdot (t-s) \end{aligned}$$which shows that \(g_{i_k,x_k^*}\) is \(\frac{\Vert \nabla f_{i_k}(x_k)\Vert _*^2}{M}\)-strongly convex. Inequality (26) then follows as in (i).

\(\square \)

Theorem 4.11

Let \(\big (x_k,x_k^*\big )\) and \(t_k\) be given by Algorithm 1. Let \(t\in [0,t_k]\) and let \(\big (x_{k+1}(t),x_{k+1}^*(t)\big )\) be as in (24). Let Assumptions 1-2 hold true and assume that \(f_{i_k}(x_k)>0\). Then for every solution \({\hat{x}}\in S\) it holds that

If \(\varphi \) is \(\sigma \)-strongly convex, the inequality holds as well for \(t=t_{k,\sigma }\).

Proof

We recall that \(t_k\) equals \(t_{k,\varphi }\) from (11), since condition (10) is fulfilled by Theorem 4.6. By Lemma 4.2 we have that \(t_k>0\). Since \(f_{i_k}\) is star-convex and \({\hat{x}}\in S\), it holds that

The statement now follows from Proposition 4.9. The theorem applies in particular to \(t=t_{k,\sigma }\) if \(\varphi \) is \(\sigma \)-strongly convex, as Lemma 4.10(i) ensures that \(0<t_{k,\sigma }\le t_{k,\varphi }\). \(\square \)

For mirror descent or stochastic mirror descent under interpolation, Theorem 4.11 tells that a choice of a smaller step size than \(t_{k,\varphi }\) results in a larger distance to solutions \({\hat{x}}\) of problem (1) in Bregman distance.

The following lemma is the key element of our convergence analysis.

Lemma 4.12

Let \(\big (x_k,x_k^*\big )\) be the iterates of either Algorithm 1 or Algorithm 2. Let Assumptions 1 and 2 hold true and assume that \(\varphi \) is \(\sigma \)-strongly convex w.r.t. a norm \(\Vert \cdot \Vert \). Then for every solution \({\hat{x}}\in S\) it holds that

Proof

We bound the right-hand side in Lemma 4.8 from above for \(t\in \{t_{k,\varphi },t_{k,\sigma }\}\). As \(\varphi \) is \(\sigma \)-strongly convex, \(\varphi ^*\) is \(\frac{1}{\sigma }\)-smooth by Lemma 4.3(iii). Hence, by Lemma 4.4(ii), for all \(t\in \mathbb {R}\) we can estimate that

Minimizing the right hand side over \(t\in \mathbb {R}\) gives \({\hat{t}} = \sigma \frac{ f_{i_k}(x_k)}{\Vert \nabla f_{i_k}(x_k)\Vert _*^2} = t_{k,\sigma }\) and

By optimality of \(t_{k,\varphi }\) we have that

for all \(t\in \mathbb {R}\). Hence, we have shown that

holds for \(t\in \{t_{k,\sigma },t_{k,\varphi }\}\). If Assumption 2(i) or 2(ii) are fulfilled, Lemma 4.2 and star-convexity of \(f_{i_k}\) show that

Under Assumption 2(iii), we have equality in (30). Inserting this inequality into Lemma 4.8, we obtain the claimed bound

where we used (7) in the last step.

\(\square \)

We can now establish almost sure (a.s.) convergence of Algorithm 1. The expectations are always taken with respect to the random choice of the indices. Sometimes we also take conditional expectations conditioned on choices of indices in previous iterations, which we will indicate explicitly.

Theorem 4.13

Let Assumptions 1-2 hold true and assume that \(\varphi \) is \(\sigma \)-strongly convex w.r.t. a norm \(\Vert \cdot \Vert \). Then it holds that

and we have the rate

with some constant \(c>0\). Moreover, the iterates \(x_k\) of Algorithm 1 converge a.s. to a random variable whose image is contained in the solution set S.

Proof

By \(\sigma \)-strong convexity of \(\varphi \) and Lemma 4.12, we have that

holds for all \(k\in \mathbb {N}\) and \({\hat{x}}\in S\). Hence, the sequence \(x_k\) is bounded and we have

with some constant \(M>0\). Inserting this into (28) gives that for all \(l\in \mathbb {N}\) it holds

Taking conditional expectation w.r.t. \(i_0,...,i_{l-1}\), we obtain

By rearranging and using the tower property of conditional expectation, we conclude that

The convergence rate now follows with \(c=2\cdot M\cdot D_{\varphi }^{x_0^*}(x_0,{\hat{x}})\) for any \({\hat{x}}\in S\) by averaging over \(l=0,...,k\) and telescoping.

Next, we prove the a.s. iterate convergence. Using (31) gives that

The Robbins-Siegmund Lemma [51] proves that \(f(x_k)\rightarrow 0\) holds with probability 1. Along any sample path in \(\{f(x_k)\rightarrow 0\}\), due to boundedness of the sequence \(x_k\), there exists a subsequence \(x_{k_l}\) converging to some point x. By continuity, we have that \(f(x)=0\) and hence, \(x\in S\). Due to Assumption 1(iv), it holds that \(D_\varphi ^{x_{k_l}^*}(x_{k_l}, x)\rightarrow 0\) and since \(D_\varphi ^{x_k^*}(x_k,{\hat{x}})\) is a decreasing sequence in k for \({\hat{x}}\in S\) by Lemma 4.12, we conclude that \(D_\varphi ^{x_{k}^*}(x_{k}, x)\rightarrow 0.\) Finally, strong convexity of \(\varphi \) implies that \(x_k\rightarrow x\). \(\square \)

If the functions \(f_i\) have Lipschitz continuous gradient, we can derive a sublinear rate for the \(\ell _1\)-kind loss \(\mathbb {E}\big [\min _{l=1,...,k} \sum _{i=1}^n p_i f_i(x_l)\big ]\), which coincides with the rate in [23, Theorem 4]. Note that without this assumption, Jensen’s inequality gives the asymptotically slower rate

for some constant \(c>0\). We will need the following lemma.

Lemma 4.14

[38, Lemma 3] Let \(\varphi \) be \(\sigma \)-strongly convex w.r.t. \(\Vert \cdot \Vert \). Moreover, let the functions \(f_i\) be L-smooth w.r.t. \(\Vert \cdot \Vert \). Then it holds that

Theorem 4.15

Let Assumptions 1-2 hold true and assume that \(\varphi \) is \(\sigma \)-strongly convex w.r.t. a norm \(\Vert \cdot \Vert \) and all functions \(f_i\) are L-smooth w.r.t. \(\Vert \cdot \Vert \). Then the iterates \(x_k\) of Algorithm 1 fulfill that

Proof

Combining Lemma 4.12 and Lemma 4.14 yields that

The assertion now follows as in the proof of Theorem 4.13 by taking expectation and telescoping. \(\square \)

For strongly star-convex functions \(f_i\), we can prove a linear convergence rate, where we recover the contraction factor from [23, Theorem 3]. Moreover, we can even improve this factor for smooth \(\varphi \).

Theorem 4.16

Let Assumptions 1-2 hold true and assume that \(\varphi \) is \(\sigma \)-strongly convex w.r.t. a norm \(\Vert \cdot \Vert \) and all functions \(f_i\) are L-smooth w.r.t. \(\Vert \cdot \Vert \). Moreover, assume that \(\overline{f}:=\sum _{i=1}^d p_if_i\) is \(\mu \)-strongly star-convex w.r.t. S relative to \(\varphi \). Then there exists an element \({\hat{x}} \in S\) such that the iterates \(x_k\) of Algorithm 1 converge to \({\hat{x}}\) at the rate

Moreover, if \(\varphi \) is M-smooth, it holds that

Proof

By Lemma 4.14 we have that

Taking expectation and using the assumption of relative strong convexity, we obtain that

The first convergence rate then follows by the steps in Lemma 4.12 and Theorem 4.15, replacing (30) by the above inequality. Finally, let \(\varphi \) be additionally M-smooth. Using that \(\nabla \overline{f}({\hat{x}})=0\), we can further bound

\(\square \)

Since the proofs of Theorems 4.13, 4.15 and 4.16 rely on Lemma 4.12, they also hold for Algorithm 2.

4.2 Convergence under the local tangential cone condition

Inspired by [58], we consider functions fulfilling the so-called tangential cone condition, which was introduced in [29] as a sufficient condition for convergence of the Landweber iteration for solving ill-posed nonlinear problems.

Definition 4.17

A differentiable function \(f:D\rightarrow \mathbb {R}\) fulfills the local tangential cone condition (\(\eta \)-TCC) on \(U\subset D\) with constant \(0<\eta <1\), if for all \(x,y\in U\) it holds that

Under this condition, we are able to formulate a variant of Lemma 4.12 and derive corresponding convergence rates. Precisely, we will assume the following.

Assumption 3

There exist a point \({\hat{x}}\in S\) and constants \(\eta \in ]0,1[\) and \(r>0\) such that each function \(f_i\) fulfills \(\eta \)-TCC w.r.t. \(\eta \) on

Lemma 4.18

Let Assumptions 1 and 3 hold true and assume that \(\varphi \) is \(\sigma \)-strongly convex w.r.t. a norm \(\Vert \cdot \Vert \). Let \({\hat{x}}\in S\). Then, the iterates of Algorithm 1 fulfill

if one of the following conditions is fulfilled:

-

(i)

\(t_k=t_{k,\sigma }\), \(\eta <\frac{1}{2}\) and \(\tau = \sigma \big (\frac{1}{2}-\eta \big )\),

-

(ii)

\(t_k=t_{k,\varphi }\), \(\varphi \) is additionally M-smooth w.r.t. \(\Vert \cdot \Vert \), \(\eta < \frac{\sigma }{2\,M}\) and \(\tau = \sigma \big (\frac{1}{2}-\eta \frac{M}{\sigma }\big ).\)

In particular, if \(x_0\in B_{r,\varphi }({\hat{x}})\), then in both cases we have that \(x_k\in B_{r,\varphi }({\hat{x}})\) for all \(k\in \mathbb {N}\).

Proof

For (i), by definition of \(t_{k,\sigma }\) and \(\eta \)-TCC we have that

The first convergence rate then follows by the steps in Lemma 4.12, replacing (30) by the above inequality. For (ii), using Lemma 4.10(ii) and the fact that \(f_{i_k}\) fulfills \(\eta \)-TCC, we estimate

so that the assertion follows as in (i). \(\square \)

The condition on \(\eta \) in (i) is the classical condition for Landweber methods (see e.g. [29, Theorem 3.8]) and is also required in the work [58], which studies Algorithm 1 for \(\varphi (x)=\frac{1}{2}\Vert x\Vert _2^2\) under \(\eta \)-TCC. The constant \(\tau \) in (ii) can not be greater than \(\tau \) in (i), as it holds that \(\sigma \le M\).

Theorem 4.19

Let Assumption 1 hold and let \(\varphi \) be \(\sigma \)-strongly convex. Moreover, let Assumption 3 hold with some \(\eta >0\) and \({\hat{x}}\in S\) and let \(x_0\in B_{r,\varphi }({\hat{x}})\).

-

(i)

If \(\eta <\frac{1}{2}\), then the iterates \(x_k\) of Algorithm 2 converge a.s. to a random variable whose image is contained in the solution set \(S\cap B_{r,\varphi }({\hat{x}})\) and it holds that

$$\begin{aligned} \mathbb {E}\big [\min _{l=1,...,k} \sum _{i=1}^n p_i \big (f_i(x_l)\big )^2\big ] \le \frac{C \cdot D_\varphi ^{x_0^*}(x_0,{\hat{x}})}{\sigma \big (\frac{1}{2}-\eta \big ) k}. \end{aligned}$$ -

(ii)

Let \(\varphi \) be additionally M-smooth and \(\eta < \frac{\sigma }{2\,M}\). Assume that \(x_k\) are the iterates of Algorithm 1 and the condition \(H_k\cap \textrm{dom}\ \varphi \ne \emptyset \) is fulfilled for all k. Then the \(x_k\) converge a.s. to a random variable whose image is contained in the solution set \(S\cap B_{r,\varphi }({\hat{x}})\) and it holds that

$$\begin{aligned} \mathbb {E}\big [\min _{l=1,...,k} \sum _{i=1}^n p_i \big (f_i(x_l)\big )^2\big ] \le \frac{C \cdot D_\varphi ^{x_0^*}(x_0,{\hat{x}})}{\sigma \big (\frac{1}{2}-\eta \frac{M}{\sigma }\big ) k}. \end{aligned}$$

Proof

By Lemma 4.18, the \(x_k\) stay in \(B_{r,\varphi }({\hat{x}})\). The statements now follow as in Theorem 4.13 by invoking Lemma 4.18 instead of Lemma 4.12. \(\square \)

Finally, we can give a local linear convergence rate under the additional assumption that the Jacobian has full column rank. For \(\varphi (x)=\frac{1}{2}\Vert x\Vert _2^2\), in part (i) of the theorem we recover the result from [58, Theorem 3.1] as a special case. In both Theorem 4.19 and Theorem 4.20, unfortunately we obtain a more pessimistic rate for Algorithm 1 compared to Algorithm 2, as the \(\tau \) in (ii) is upper bounded by the \(\tau \) in (i).

Theorem 4.20

Let Assumption 1 hold true and let \(\varphi \) be \(\sigma \)-strongly convex and M-smooth. Let Assumption 3 hold with some \(\eta >0\) and \({\hat{x}}\in S\) and let \(x_0\in B_{r,\varphi }({\hat{x}})\). Moreover, assume that the Jacobian Df(x) has full column rank for all \(x\in B_{r,\varphi }({\hat{x}})\) and \(p_{\min }=\min _{i=1,...,n} p_i>0\). We set

-

(i)

If \(\eta <\frac{1}{2}\), then the iterates \(x_k\) of Algorithm 2 fulfill that

$$\begin{aligned} \frac{\sigma }{2}\mathbb {E}\big [\Vert x_k-{\hat{x}}\Vert _2^2] \le \mathbb {E}\big [D_\varphi (x_{k},{\hat{x}})\big ] \le \Big ( 1- \frac{ \sigma \big (\frac{1}{2}-\eta \big )p_{\min }}{M(1+\eta )^2\kappa _{\min }^2} \Big )^k \mathbb {E}\big [D_\varphi (x_0,{\hat{x}})\big ]. \end{aligned}$$(33) -

(ii)

Let \(\varphi \) be additionally M-smooth and let \(\eta < \frac{\sigma }{2\,M}\). Assume that \(x_k\) are the iterates of Algorithm 1 and the condition \(H_k\cap \textrm{dom}\ \varphi \ne \emptyset \) is fulfilled for all k. Then it holds that

$$\begin{aligned} \frac{\sigma }{2}\mathbb {E}\big [\Vert x_k-{\hat{x}}\Vert _2^2] \le \mathbb {E}\big [D_\varphi (x_{k},{\hat{x}})\big ] \le \Big ( 1- \frac{ \sigma \big (\frac{1}{2}-\eta \frac{M}{\sigma }\big )p_{\min }}{(1+\eta )^2\kappa _{\min }^2} \Big )^k \mathbb {E}\big [D_\varphi (x_0,{\hat{x}})\big ]. \end{aligned}$$(34)

For the proof, as in [58] we use the following auxiliary lemma.

Lemma 4.21

Let \(a_1,...,a_n\ge 0\) and \(b_1,...,b_n>0\). Then it holds that

Proof

Since \(a_{i},b_{i}\ge 0\) it holds that

\(\square \)

Proof of Theorem 4.20

By Assumption 3 and the fact that \(f({\hat{x}})=0\), we can estimate

In all cases of the assumption, inserting the above estimate we respectively conclude that

Taking expectation and using Lemma 4.21 as well as the definition of \(\kappa _{\min }\), we conclude that

\(\square \)

5 Numerical experiments

In this section, we evaluate the performance of the NBK method. In the first experiment we used NBK to find sparse solutions with the nonsmooth DGF \(\varphi (x) = \frac{1}{2}\Vert x\Vert _2^2+\lambda \Vert x\Vert _1\) for unconstrained quadratic equations, that is, with \(C=\mathbb {R}^d\). Next, we employed the negative entropy DGF over the probability simplex \(C=\Delta ^{d-1}\) to solve simplex-constrained linear equations as well as the left-stochastic decomposition problem, a quadratic problem over a product of probability simplices with applications in clustering [2]. All the methods were implemented in MATLAB on a macbook with 1,2 GHz Quad-Core Intel Core i7 processor and 16 GB memory. Code is available at https://github.com/MaxiWk/Bregman-Kaczmarz.

5.1 Sparse solutions of quadratic equations

As the first example, we considered multinomial quadratic equations

with \(A^{(i)}\in \mathbb {R}^{d\times d}\), \(b^{(i)}\in \mathbb {R}^d\), \(c^{(i)}\in \mathbb {R}\) and \(i=1,...,n\). We investigated if Algorithm 1 (NBK method) and Algorithm 2 (rNBK method) are capable of finding a sparse solution \({\hat{x}}\in \mathbb {R}^d\) by using the DGF \(\varphi (x)=\lambda \Vert x\Vert _1+\frac{1}{2}\Vert x\Vert _2^2\) and tested both methods against the euclidean nonlinear Kaczmarz method (NK). As it holds \(\textrm{dom}\ \varphi = \mathbb {R}^d\), it is always possible to choose the step size \(t_{k,\varphi }\) from (11) in the NBK method. Moreover, the step size can be computed exactly by a sorting procedure, as \(\varphi ^*\) is a continuous piecewise quadratic function, see Example 3.2. In order to guarantee existence of a sparse solution, we chose a sparse vector \({\hat{x}}\in \mathbb {R}^d\), sampled the data \(A^{(i)}, b^{(i)}\) randomly with entries from the standard normal distribution and set

In all examples, the nonzero part of \({\hat{x}}\) and the initial subgradient \(x_0^*\) were sampled from the standard normal distribution. The initial vector \(x_0\) was computed by \(x_0=\nabla \varphi ^*(x_0^*) = S_\lambda (x_0^*)\).

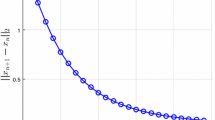

From the updates it is evident that computational cost per iteration is cheapest for the NK method, slightly more expensive for the rNBK method and most expensive for the NBK method. To examine the case \(d<n\), we chose \(A^{(i)}\sim {\mathcal {N}}(0,1)^{500\times 500}\) for \(i=1,...,1000\), \({\hat{x}}\) with 25 nonzero entries and \(\lambda =10\) and performed 20 random repeats. Figure 1 shows that the NBK method clearly outperforms the other two methods in this situation, even despite the higher cost per iteration.

Figure 2 illustrates that in the case \(d>n\), both the NBK method and the rNBK method can fail to converge or converge very slowly.

Experiment with quadratic equations, \((n,d) = (1000,500)\), \({\hat{x}}\) with 50 nonzero entries, 20 random repeats. Left: plot of residual \(\Vert f(x_k)\Vert _2\), right: plot of distance to solution \({\hat{x}}\), both over computation time. Thick line shows median over all trials, light area is between min and max, darker area indicates 25th and 75th quantile

Experiment with quadratic equations, \((n,d) = (50,100)\), \({\hat{x}}\) with 5 nonzero entries, 50 random repeats, plot of residual \(\Vert f(x_k)\Vert _2\) against computation time. Left: \(\lambda =2\), right: \(\lambda =5\). Thick line shows median over all trials, light area is between min and max, darker area indicates 25th and 75th quantile

5.2 Linear systems on the probability simplex

We tested our method on linear systems constrained to the probability simplex

That is, in problem (1) we chose \(f_i=\langle a_i,x\rangle - b_i\) with \(D=\mathbb {R}^d\) and viewed \(C=\Delta ^{d-1}\) as the additional constraint. For Algorithm 1, we used the simplex-restricted negative entropy function from Example 3.3, i.e. we set

We know from Example 3.3 that \(\varphi \) is 1-strongly convex w.r.t. the 1-norm \(\Vert \cdot \Vert _1\). Therefore, as the second method we considered the rNBK iteration given by Algorithm 2 with \(\sigma =1\) and \(\Vert \cdot \Vert _*=\Vert \cdot \Vert _\infty \). As a benchmark, we considered a POCS (orthogonal projection) method which computes an orthogonal projection onto a row equation, followed by an orthogonal projection onto the probability simplex, see Algorithm 3 listed below. We note that in [36, Theorem 3.3] it has been proved that the distance of the iterates of the POCS method and the NBK method to the set of solutions on \(\Delta ^{d-1}_+\) decays with an expected linear rate, if there exists a solution in \(\Delta ^{d-1}_+\). Theorem 4.13 shows at least a.s. convergence of the iterates towards a solution for all three methods.

We note that it holds \(\nabla f_{i_k}(x)=a_{i_k}\) for all x and \(\beta _k = b_{i_k}\) in the NBK method. If problem (35) has a solution, then condition (10) is fulfilled in each step of the NBK method, so the method takes always the step size \(t_k=t_{k,\varphi }\) from the exact Bregman projection. For the projection onto the simplex in Algorithm 3, we used the pseudocode from [59], see also [12, 24, 26].

Alternating euclidean projections (POCS method) for (35)

In our experiments we noticed that in large dimensions, such as \(d\ge 100\), solving the Bregman projection (11) up to a tolerance of \(\epsilon =10^{-9}\) takes less than half as much computation time as the simplex projection. As these two are the dominant operations in these methods, the NBK updates are computationally cheaper than the NK updates in the high dimensional setting. However, the examples will show that convergence quality of the methods depends on the distribution of the entries of A. All methods were observed to converge linearly.

In the following experiments, we took different choices of A and set the right-hand side to \(b=A{\hat{x}}\) with a point \({\hat{x}}\) drawn from the uniform distribution on the probability simplex \(\Delta ^{d-1}\). All methods were initialized with the center point \(x_0=(\frac{1}{d},..., \frac{1}{d}).\)

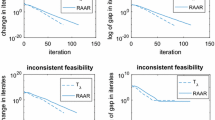

For our first experiment, we chose standard normal entries \(A\sim {\mathcal {N}}(0,1)^{n\times d}\) with \((n,d) = (500,200)\) and \((n,d)=(200,500)\). Figure 3 shows that in this setting, the POCS method achieves much faster convergence in the overdetermined case \((n,d) = (500,200)\) than the NBK method, whereas both methods perform roughly the same in the underdetermined case \((d,n) = (200,500)\). The rNBK method is considerably slower than the other two methods, which shows that the computation of the \(t_{k,\varphi }\) step size for NBK pays off.

In our second experiment, we built up the matrix from uniformly distributed entries \(A\sim {\mathcal {U}}([0,1])^{n\times d}\) and \(A\sim {\mathcal {U}}([0.9,1])^{n\times d}\) with \((n,d)=(200,500)\). The results are summarized in Fig. 4. For the Kaczmarz method it has been observed in practice that so called ’redundant’ rows of the matrix A deteriorate the convergence of the method [31]. This effect can also occur with the POCS method, as it also relies on euclidean projections. Remarkably, we can see that this is not the case for the NBK method and it clearly outperforms the POCS method and the rNBK method. This in particular shows that the multiplicative update used in both the rNBK method and the NBK method is not enough to overcome the difficulty of redundancy- to achieve fast convergence, it must be combined with the appropriate step size which is used by the proposed NBK method.

Finally, we illustrate the effect of the accuracy \(\epsilon \) in step size computation for the NBK method. We chose \(A\sim U([0,1])^{n\times d}\) and \(\epsilon =10^{-9}\) and compared with the larger tolerance \(\epsilon =10^{-5}\). Figure 5 shows that, with \(\epsilon =10^{-5}\), the residual plateaus at a certain threshold. In contrast with \(\epsilon =10^{-9}\), the residual does not plateau, and despite the more costly computation of the step size, the NBK method is still competitive with respect to time. Hence, for the problem of linear equations over the probability simplex we recommend to solve the step size problem up to high precision.

Experiment with linear equations on the probability simplex, plot of relative residuals averaged over 50 random examples against iterations (k) and computation time. Left column: \(A\sim {\mathcal {N}}(0,1)^{500\times 200}\), right column: \(A\sim \mathcal N(0,1)^{200\times 500}\). Thick line shows median over all trials, light area is between min and max, darker area indicates 25th and 75th quantile

Experiment with linear equations on the probability simplex, plot of relative residuals averaged over 50 random examples against iterations (k) and computation time. Left column: \(A\sim {\mathcal {U}}([0,1])^{200\times 500}\), right column: \(A\sim {\mathcal {U}}([0.9,1])^{200\times 500}\). Thick line shows median over all trials, light area is between min and max, darker area indicates 25th and 75th quantile

Experiment with linear equations on the probability simplex, plot of relative residuals averaged over 50 random examples against computation time. In both examples, \(A\sim \mathcal U([0,1])^{200\times 500}\). Left: \(\epsilon =10^{-9}\), right: \(\epsilon =10^{-5}\) in NBK method. Thick line shows median over all trials, light area is between min and max, darker area indicates 25th and 75th quantile

5.3 Left stochastic decomposition

The left stochastic decomposition (LSD) problem can be formulated as follows:

where

is the set of left stochastic matrices and \(A\in \mathbb {R}^{r\times m}\) is a given nonnegative matrix. The problem is equivalent to the so-called soft-K-means problem and hence has applications in clustering [2]. We can view (36) as an instance of problem (1) with component equations

and \(C=L^{r\times m}\cong \left( \Delta ^{r-1}\right) ^m\), where \(X_{:,i}\) denotes the ith column of X. For Algorithm 1 we chose the DGF from Example 3.4 with the simplex-restricted negative entropy \(\varphi _i=\varphi \) from Example 3.3. Since \(f_{i,j}\) depends on at most two columns of X, Algorithm 1 acts on \(\Delta ^{r-1}\) or \(\Delta ^{r-1}\times \Delta ^{r-1}\) in each step. Therefore, we applied the steps from Example 3.3 in the first case, and from Example 3.5 in the second case.

We compared the performance of Algorithms 1 and 2 to a projected nonlinear Kaczmarz method given by Algorithm 4. Here, by \(X_{k,:,i}\) we refer to the ith column of the kth iterate matrix. In all examples, we set \(A={\hat{X}}^T {\hat{X}}\), where the columns of \({\hat{X}}\) were sampled according to the uniform distribution on \(\Delta ^{r-1}\).

Projected nonlinear Kaczmarz method (PNK) for (36)

We observed that Algorithm 1 (NBK method) gives the fastest convergence, if r is not much smaller than m, see Fig. 6 and Fig. 7. In both experiments, we noticed that condition (10) was actually fulfilled in each step, but checking did not show a notable difference in performance. The most interesting setting for clustering is that r is very small and m is large, as r is the number of clusters [2]. However, it appears unclear if the NBK or the PNK method is a better choice for this problem size, as Fig. 8 shows. In this experiment, condition (10) was not always fulfilled in the NBK method and we needed to employ the globalized Newton method together with an additional condition to the step size approximation, see Appendix for details. Finally, we can again see that Algorithm 2 is clearly outperformed by the other two methods in all experiments.

Experiment ’Left stochastic decomposition problem’ with \(r=100, m=50\). Residuals \(\Vert f(X^{(k)})\Vert _2\) averaged over 50 random examples against outer iterations k (left) and computation time (right). Thick line shows median over all trials, light area is between min and max, darker area indicates 25th and 75th quantile

Experiment ’Left stochastic decomposition problem’ with \(r=50, m=100\), plot of residuals \(\Vert f(X^{(k)})\Vert _2\) averaged over 50 random examples against iterations (left) and computation time (right). Thick line shows median over all trials, light area is between min and max, darker area indicates 25th and 75th quantile

Experiment ’Left stochastic decomposition problem’ with \(r=3, m=100\), residuals \(\Vert f(X_k)\Vert _2\) against computation time. Left and right: Two random examples with different convergence behavior. Thick line shows median over all trials, light area is between min and max, darker area indicates 25th and 75th quantile

6 Conclusions and further research

We provided a general Bregman projection method for solving nonlinear equations, where each iteration needs only to sample one equation to make progress towards the solution. As such, the cost of one iteration scales independently of the number of equations. Our method is also a generalization of the nonlinear Kaczmarz method which allows for additional simple constraints or sparsity inducing regularizers. We provide two global convergence theorems under different settings and find a number of relevant experimental settings where instantiations of our method are efficient.

Convergence for non-strongly convex distance generating functions \(\varphi \), as well as a suitable scope of \(\sigma \) in this setting, has so far not been explored.

Our work also opens up the possibility of incorporating more structure into SGD type methods in the interpolation setting as has been done in [33] for the linear case. In this setting each \(f_i(x)\) is a positive loss function over the ith data point. If we knew in addition that some of the coordinates of x are meant to be positive, or that x is a discrete probability measure, then our nonlinear Bregman projection methods applied to the interpolation equations would provide new adaptive step sizes for stochastic mirror descent. Further venues for exploring would be to relax the interpolation equations, say into inequalities [27], and applying an analogous Bregman projections to incorporate more structure. We will leave this to future work.

Data availability

We do not analyze or generate any datasets, because our work proceeds within a theoretical and mathematical approach. However, the code that generates the figures in this article can be found at https://github.com/MaxiWk/Bregman-Kaczmarz.

Change history

05 April 2024

A Correction to this paper has been published: https://doi.org/10.1007/s10589-024-00570-y

Notes

The typical setting in convergence analysis will be that \(\varphi \) is \(\sigma \)-strongly convex with respect to a norm \(\Vert \cdot \Vert \), and \(\Vert \cdot \Vert _*\) will be its dual norm.

References

Alber, Y., Butnariu, D.: Convergence of Bregman projection methods for solving consistent convex feasibility problems in reflexive Banach spaces. J. Optim. Theory Appl. 92(1), 33–61 (1997)

Arora, R., Gupta, M.R., Kapila, A., Fazel, M.: Similarity-based clustering by left-stochastic matrix factorization. J. Mach. Learn. Res. 14(1), 1715–1746 (2013)

Azizan, N., Lale, S., Hassibi, B.: Stochastic mirror descent on overparameterized nonlinear models. IEEE Trans. Neural Netw. Learn. Systems 33(12), 7717–7727 (2021)

Bauschke, H.H., Borwein, J.M., Combettes, P.L.: Bregman monotone optimization algorithms. SIAM J. Control. Optim. 42(2), 596–636 (2003)

Bauschke, H.H., Borwein, J.M., et al.: Legendre functions and the method of random Bregman projections. J. Convex Anal. 4(1), 27–67 (1997)

Bauschke, H.H., Combettes, P.L.: Iterating Bregman retractions. SIAM J. Optim. 13(4), 1159–1173 (2003)

Bauschke, H.H., Combettes, P.L.: Convex analysis and monotone operator theory in Hilbert spaces, vol. 408. Springer, Berlin (2011)

Beck, A.: First-order methods in optimization. SIAM (2017)

Benamou, J.-D., Carlier, G., Cuturi, M., Nenna, L., Peyré, G.: Iterative Bregman projections for regularized transportation problems. SIAM J. Sci. Comput. 37(2), A1111–A1138 (2015)

Boţ, R.I., Hein, T.: Iterative regularization with a general penalty term-theory and application to L1 and TV regularization. Inverse Prob. 28(10), 104010 (2012)

Bregman, L.M.: The relaxation method of finding the common point of convex sets and its application to the solution of problems in convex programming. USSR Comput. Math. Math. Phys. 7(3), 200–217 (1967)

Brucker, P.: An O(n) algorithm for quadratic knapsack problems. Oper. Res. Lett. 3(3), 163–166 (1984)

Butnariu, D., Iusem, A.N., Zalinescu, C.: On uniform convexity, total convexity and convergence of the proximal point and outer Bregman projection algorithm in Banach spaces. J. Convex Anal. 10(1), 35–62 (2003)

Butnariu, D., Resmerita, E.: The outer Bregman projection method for stochastic feasibility problems in Banach spaces. In: Studies in computational mathematics, vol. 8, pp. 69–86. Elsevier, Amsterdam (2001)

Butnariu, D., Resmerita, E.: Bregman distances, totally convex functions, and a method for solving operator equations in Banach spaces. In Abstract and Applied Analysis, volume 2006. Hindawi, (2006)

Censor, Y., Elfving, T., Herman, G.: Averaging strings of sequential iterations for convex feasibility problems. In: Studies in computational mathematics, vol. 8, pp. 101–113. Elsevier, Amsterdam (2001)

Censor, Y., Lent, A.: An iterative row-action method for interval convex programming. J. Optim. Theory Appl. 34(3), 321–353 (1981)

Censor, Y., Reich, S.: Iterations of paracontractions and firmaly nonexpansive operators with applications to feasibility and optimization. Optimization 37(4), 323–339 (1996)

Cetin, A.E.: Reconstruction of signals from Fourier transform samples. Signal Process. 16(2), 129–148 (1989)

Cetin, A.E.: An iterative algorithm for signal reconstruction from bispectrum. IEEE Trans. Signal Process. 39(12), 2621–2628 (1991)

Dhillon, I.S., Tropp, J.A.: Matrix nearness problems with Bregman divergences. SIAM J. Matrix Anal. Appl. 29(4), 1120–1146 (2008)

Doikov, N., Nesterov, Y.: Gradient regularization of Newton method with Bregman distances. arXiv preprint arXiv:2112.02952, (2021)

D’Orazio, R., Loizou, N., Laradji, I., Mitliagkas, I.: Stochastic mirror descent: convergence analysis and adaptive variants via the mirror stochastic Polyak stepsize. arXiv preprint arXiv:2110.15412, (2021)

Duchi, J., Shalev-Shwartz, S., Singer, Y., Chandra, T.: Efficient projections onto the \(\ell _1\)-ball for learning in high dimensions. In: Proceedings of the 25th international conference on Machine learning, pp. 272–279, (2008)

Fedotov, A.A., Harremoës, P., Topsoe, F.: Refinements of Pinsker’s inequality. IEEE Trans. Inf. Theory 49(6), 1491–1498 (2003)

Gafni, E.M., Bertsekas, D.P.: Two-metric projection methods for constrained optimization. SIAM J. Control. Optim. 22(6), 936–964 (1984)

Gower, R. M., Blondel, M., Gazagnadou, N., Pedregosa, F.: Cutting some slack for SGD with adaptive Polyak stepsizes. arXiv:2202.12328 (2022)

Gu, R., Han, B., Tong, S., Chen, Y.: An accelerated Kaczmarz type method for nonlinear inverse problems in Banach spaces with uniformly convex penalty. J. Comput. Appl. Math. 385, 113211 (2021)

Hanke, M., Neubauer, A., Scherzer, O.: A convergence analysis of the Landweber iteration for nonlinear ill-posed problems. Numer. Math. 72(1), 21–37 (1995)

Iusem, N.A., Solodov, V.M.: Newton-type methods with generalized distances for constrained optimization. Optimization 41(3), 257–278 (1997)

Jarman, B., Yaniv, Y., Needell, D.: Online signal recovery via heavy ball Kaczmarz. arXiv preprint arXiv:2211.06391 (2022)

Jin, Q.: Landweber-Kaczmarz method in Banach spaces with inexact inner solvers. Inverse Prob. 32(10), 104005 (2016)

Jin, Q., Lu, X., Zhang, L.: Stochastic mirror descent method for linear ill-posed problems in banach spaces. Inverse Prob. 39(6), 065010 (2023)

Jin, Q., Wang, W.: Landweber iteration of Kaczmarz type with general non-smooth convex penalty functionals. Inverse Prob. 29(8), 085011 (2013)

Kaczmarz, S.: Angenäherte Auflösung von Systemen linearer Gleichungen. Bull. Internat. Acad. Polon. Sci. Lettres A, pp. 355–357 (1937)

Kostic, V., Salzo, S.: The method of Bregman projections in deterministic and stochastic convex feasibility problems. arXiv preprint arXiv:2101.01704 (2021)

Lin, T., Ho, N., Jordan, M.: On efficient optimal transport: an analysis of greedy and accelerated mirror descent algorithms. In: International conference on machine learning, pp. 3982–3991. PMLR (2019)

Loizou, N., Vaswani, S., Laradji, I. H., Lacoste-Julien, S.: Stochastic Polyak step-size for SGD: An adaptive learning rate for fast convergence. In: International Conference on Artificial Intelligence and Statistics, PMLR pp. 1306–1314 (2021)

Lorenz, D.A., Schöpfer, F., Wenger, S.: The linearized Bregman method via split feasibility problems: analysis and generalizations. SIAM J. Imag. Sci. 7(2), 1237–1262 (2014)

Ma, S., Bassily, R., Belkin, M.: The power of interpolation: Understanding the effectiveness of SGD in modern over-parametrized learning. In: International conference on machine learning, pp 3325–3334. PMLR (2018)

Maaß, P., Strehlow, R.: An iterative regularization method for nonlinear problems based on Bregman projections. Inverse Prob. 32(11), 115013 (2016)

Mishchenko, K.: Regularized Newton method with global \( {O} (1/k^2) \) convergence. arXiv preprint arXiv:2112.02089 (2021)

Nedić, A.: Random algorithms for convex minimization problems. Math. Program. 129(2), 225–253 (2011)