Abstract

A block decomposition method is proposed for minimizing a (possibly non-convex) continuously differentiable function subject to one linear equality constraint and simple bounds on the variables. The proposed method iteratively selects a pair of coordinates according to an almost cyclic strategy that does not use first-order information, allowing us not to compute the whole gradient of the objective function during the algorithm. Using first-order search directions to update each pair of coordinates, global convergence to stationary points is established for different choices of the stepsize under an appropriate assumption on the level set. In particular, both inexact and exact line search strategies are analyzed. Further, linear convergence rate is proved under standard additional assumptions. Numerical results are finally provided to show the effectiveness of the proposed method.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Block coordinate descent methods, also known as block decomposition methods, are algorithms that iteratively update a suitably chosen subset of variables, usually referred to as working set, trough an appropriate optimization step. Numerous variants of block coordinate descent methods have been proposed in the literature that essentially differ from each other in two aspects: the working set selection and the optimization step (see, e.g., [31] and the references therein for an overview of block coordinate descent methods in unconstrained optimization). In the last decades, block coordinate descent methods gained great popularity, especially to address large structured problems, such as those arising in machine learning, where classical algorithms may not be so efficient and, sometimes, even not applicable for computational reasons. Moreover, block coordinate descent methods are well suited for parallelization, allowing to exploit modern computer architectures.

In this paper, we are concerned with the minimization of a continuously differentiable function subject to one linear equality constraint and simple bounds on the variables. Many relevant problems can be formulated in this way, such as, e.g., Support Vector Machine training, the continuous quadratic knapsack problem, resource allocation problems, the page rank problem, the Chebyshev center problem and the coordination of multi-agent systems.

In the literature, most of the block coordinate descent methods proposed for this class of problems use gradient information to identify a subset of variables that violate some optimality condition and guarantee, once updated, a certain decrease in the objective function [1, 9, 11, 13, 14, 24, 26]. Other methods select variables in order to satisfy an appropriate descent condition with a decreasing tolerance [12], or follow a Gauss–Seidel (cyclic) strategy [16]. Different working set selection rules, based on sufficient predicted descent, have also been studied in [30]. Moreover, a Jacobi-type algorithm has been devised in [15] and a class of parallel decomposition methods for quadratic objective functions has been proposed in [18].

In addition to the above algorithms, different versions of random coordinate descent methods have been proposed in [19,20,21, 25, 28], characterized by the fact that the working set is randomly chosen from a given probability distribution. From a theoretical point of view, random coordinate descent methods have good convergence properties, given in expectation, and they turn out to be efficient also in practice. In particular, since random coordinate descent methods do not use first-order information to choose the working set, the whole gradient of the objective function does not need to be computed during the iterations, leading to good performances when the objective function has cheap partial derivatives.

In our method, a working set of two coordinates is iteratively chosen according to the following almost cyclic strategy: one coordinate is selected in a cyclic manner, while the other one is obtained by considering the distance of each variable from its nearest bound in some points produced by the algorithm. We see that this working set selection rule does not use first-order information. So, similarly as in random coordinate descent methods, the whole gradient of the objective function does not need to be computed during the algorithm and high efficiency is still achieved when the partial derivatives of the objective function are cheap. Anyway, differently from random coordinate descent methods, the proposed algorithm has deterministic convergence properties.

More in detail, once a pair of coordinates is selected in the working set, our algorithm performs a minimization step by moving along a first-order search direction with a certain stepsize. We first give a general condition on the stepsize that guarantees global convergence to stationary points, under the assumption that every point of the level set has at least one component strictly between the lower and the upper bound. Note that this assumption is automatically satisfied in many cases: e.g., when the feasible set is the unit simplex, or when at least one variable has no bounds. Then, we describe some practical ways to compute the stepsize, considering different classes of objective functions: the Armijo line search can be used for general non-convex objective functions, overestimates of the Lipschitz constant can be used for objective functions with Lipschitz continuous gradient, and the exact line search can be used when the objective function is strictly convex.

We also show that the proposed method converges linearly under standard additional assumptions. In particular, two different results are given: asymptotic linear convergence rate is proved when there are finite bounds on some (or all) of the variables, while non-asymptotic linear convergence rate is proved when there are no bounds on the variables.

Lastly, experimental simulations performed on different classes of test problems show promising results of the proposed algorithm in comparison with other block coordinate descent methods.

The rest of the paper is organized as follows. In Sect. 2, we introduce the notation and recall some preliminary results. In Sect. 3, we present the algorithm and carry out the convergence analysis. In Sect. 4, we describe some practical line search strategies. In Sect. 5, we analyze the convergence rate of the algorithm. In Sect. 6, we show the numerical results. Finally, we draw some conclusions in Sect. 7.

2 Preliminaries and notation

Let us introduce the notation. Given a vector \(x \in {\mathbb {R}}^n\), we indicate by \(x_i\) the ith entry of x. We denote by \(e_i \in {\mathbb {R}}^n\) the vector made of all zeros except for the ith entry that is equal to 1. Given a function \(f :{\mathbb {R}}^n \rightarrow {\mathbb {R}}\), the gradient of f is indicated by \(\nabla f\), the ith partial derivative of f is indicated by \(\nabla _i f\) and the Hessian matrix of f is indicated by \(\nabla ^2 f\). The derivative of a function \(f :{\mathbb {R}}\rightarrow {\mathbb {R}}\) is denoted by \(\dot{f}\). The Euclidean norm of a vector \(x \in {\mathbb {R}}^n\) is indicated by \(||x||\).

Throughout the paper, we focus on the following singly linearly constrained problem with lower and upper bounds on the variables:

where \(f :{\mathbb {R}}^n \rightarrow {\mathbb {R}}\) is a continuously differentiable function, \(b \in {\mathbb {R}}\) and, for all \(i = 1,\ldots ,n\), we have \(l_i < u_i\), with \(l_i \in {\mathbb {R}}\cup \{-\infty \}\) and \(u_i \in {\mathbb {R}}\cup \{\infty \}\). By slight abuse of standard mathematical notation, we allow variable bounds to be infinite.

Note that every problem of the form

with \(\omega :{\mathbb {R}}^n \rightarrow {\mathbb {R}}\), \(b\in {\mathbb {R}}\) and \(a_i \ne 0\), \({\bar{l}}_i < {\bar{u}}_i\), \(i = 1,\ldots ,n\), can be rewritten as in (1) via the following variable transformation: \(x_i = a_i s_i\), \(i = 1,\ldots ,n\), thus considering the objective function \(f(x) {:}{=} \omega \bigl ((x_1/a_1), \ldots , (x_n/a_n)\bigr )\) and setting the lower and the upper bound on x according to the above transformation.

From now on, we denote the feasible set of problem (1) by \({\mathcal {F}}\). Throughout the paper, we assume that \({\mathcal {F}}\ne \emptyset \). Moreover, the terms variable and coordinate will be used interchangeably to indicate each \(x_i\), \(i=1,\ldots ,n\).

Finally, let us recall the following characterization of stationary points of problem (1), which can be easily derived from KKT conditions.

Proposition 1

A feasible point \(x^*\) of problem (1) is stationary if and only if there exists \(\lambda ^* \in {\mathbb {R}}\) such that, for all \(i = 1,\ldots ,n\),

3 The almost cyclic 2-coordinate descent (AC2CD) method

In this section, we present the algorithm for solving problem (1) and we analyze its convergence properties to stationary points.

3.1 Description of the algorithm

The proposed almost cyclic 2-coordinate descent (AC2CD) method is a block decomposition method that iteratively performs a minimization step with respect to a working set of two coordinates, chosen by an almost cyclic strategy (note that two is the smallest number of variables that can be updated to maintain feasibility). A remarkable feature of AC2CD is that the working set selection rule does not use first-order information, allowing us not to compute the whole gradient of the objective function during the algorithm.

To describe the proposed method, we have to distinguish between outer iterations, indicated by the integer k, and inner iterations, indicated by the pair of integers (k, i), where \(k = 0,1,\ldots \) and \(i = 1,\ldots ,n\). Each outer iteration k starts with a feasible point, denoted by \(x^k\), and has n inner iterations \((k,1),\ldots ,(k,n)\). Each inner iteration (k, i) starts with a feasible point, denoted by \(z^{k,i}\), and produces the successive (feasible) \(z^{k,i+1}\) by performing a minimization step with respect to a suitably chosen pair of coordinates. Outer and inner iterations are linked by the following relation:

Namely, each cycle of inner iterations \((k,1),\ldots ,(k,n)\) starts from \(x^k\) and returns the successive \(x^{k+1}\).

To be more specific, given any \(x^k\) produced by the algorithm, first we choose a variable index \(j(k) \in \{1,\ldots ,n\}\) (as to be described later). Then, in the cycle of inner iterations \((k,1),\ldots ,(k,n)\), we adopt the following rule to choose the working set: one variable index is selected in a cyclic manner, following an arbitrary order with no repetition, while the second variable index remains fixed and equal to j(k). Since only one variable index is selected in a cyclic manner, we name this working set selection rule almost cyclic.

From now on, for every inner iteration (k, i) we denote by \(p^k_i\) the variable index of the working set that is selected in a cyclic manner. So, each minimization step is performed with respect to the two coordinates \(z^{k,i}_{p^k_i}\) and \(z^{k,i}_{j(k)}\). In this minimization step, we compute the first-order search direction \(d^{k,i} = [\nabla _{j(k)} f(z^{k,i}) - \nabla _{p^k_i} f(z^{k,i})](e_{p^k_i} - e_{j(k)})\). Clearly, we have \(d^{k,i}_h = 0\) for all \(h \in \{1,\ldots ,n\}{\setminus }\{p^k_i,j(k)\}\). Then, we set

where \(\alpha ^{k,i} \in {\mathbb {R}}\) is a feasible stepsize (which will be described later on).

Now, we explain how to choose the index j(k) at the beginning of an outer iteration k. Roughly speaking, \(x^k_{j(k)}\) must be “sufficiently far” from its nearest bound. Formally, for each index \(h \in \{1,\ldots ,n\}\), let us define the operator \(D_h :{\mathcal {F}}\rightarrow [0,\infty ) \cup \{\infty \}\) that takes as input a feasible point x and returns the distance of \(x_h\) from its nearest bound:

So, for a given \(x^k\), we choose j(k) as any index that satisfies

where \(\tau \in (0,1]\) is a fixed parameter and

In other words, the distance between \(x^k_{j(k)}\) and its nearest bound must be greater than or equal to a certain fraction (equal to \(\tau \)) of the maximum distance between each component of \(x^k\) and its nearest bound.

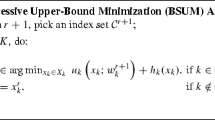

In Algorithm 1, we report the scheme of AC2CD. Hereinafter, we indicate

as they are defined at steps 6 and 7 of Algorithm 1. Note that

i.e., \(d^{k,i}\) is a descent direction at \(z^{k,i}\) if \(g^{k,i} \ne 0\) (equivalently, every nonzero \(d^{k,i}\) is a descent direction at \(z^{k,i}\)).

Remark 1

For the sake of simplicity, within each outer iteration k of AC2CD we consider n inner iterations, but they actually are \(n-1\), since no pair of coordinates is updated when \(p^k_i = j(k)\).

Now, let us focus on the computation of the stepsize \(\alpha ^{k,i}\) (step 8 of Algorithm 1). First, for any inner iterate \(z^{k,i}\) we define \({\bar{\alpha }}^{k,i}\) as the largest feasible stepsize along the direction \(d^{k,i}\). Since the equality constraint is clearly satisfied by the choice of \(d^{k,i}\), by simple calculations we obtain

In particular, the choice to define \({\bar{\alpha }}^{k,i} = 0\) when \(g^{k,i} = 0\) is for convenience of exposition (and without loss of generality), since it implies that \({\bar{\alpha }}^{k,i} = 0\) if \(d^{k,i} = 0\) (equivalently, \(d^{k,i} \ne 0\) if \({\bar{\alpha }}^{k,i} > 0\)).

A general rule for the computation of the stepsize \(\alpha ^{k,i}\) is stated in the following Stepsize Condition 1 (SC 1). As to be shown, this rule can be easily satisfied in practice by different line search strategies and guarantees global convergence to stationary points under an appropriate assumption on the level set.

Let us spend a few words on the meaning of SC 1. Point (i) requires that every inner iterate is feasible and does not increase the objective value. Point (ii) is a condition usually needed for convergence of block decomposition methods, requiring that the distance of two successive inner iterates goes to zero. Finally, point (iii) requires that the directional derivatives converge to zero over appropriate subsequences when, for sufficiently large k, the largest feasible stepsizes are bounded from below by a positive constant over the same subsequences.

Let us conclude this section by stating the following lemma, which will be useful in the sequel. It shows that, if points (i)–(ii) of SC 1 are satisfied, then every limit point of \(\{x^k\}\) is a limit point of \(\{z^{k,i}\}\) for every fixed \(i = 1,\ldots ,n\).

Lemma 1

Let \(\{x^k\}\) be a sequence of points produced by AC2CD that satisfies points (i)–(ii) of SC 1, and let \(\{x^k\}_{K \subseteq \{0,1,\ldots \}}\) be a subsequence converging to \({\bar{x}}\). Then,

Proof

For \(i = 1\) the result is trivial, since \(z^{k,1} = x^k\) for all k. For any \(i \in \{2,\ldots ,n\}\), we have \(z^{k,i} - z^{k,1} = \sum _{t=1}^{i-1} z^{k,t+1} - z^{k,t}\), and then

By continuity of f, we have \(\{f(x^k)\}_K \rightarrow f({\bar{x}})\). By point (i) of SC 1, we also have \(f(x^{k+1}) = f(z^{k,n+1}) \le f(z^{k,n}) \le \ldots \le f(z^{k,1}) = f(x^k)\) for all \(k \ge 0\), and then, \(\displaystyle {\lim _{k \rightarrow \infty } f(x^k) = f({\bar{x}})}\). Therefore, using point (ii) of SC 1, we can write \(\displaystyle {\lim _{k \rightarrow \infty }||z^{k,t+1} - z^{k,t}||} = 0\) for all \(t = 1,\ldots ,n\). Combining this relation with (9), we get

Moreover, \(||z^{k,i} - {\bar{x}}|| = ||z^{k,i} - x^k + x^k - {\bar{x}}|| \le ||z^{k,i} - x^k|| + ||x^k - {\bar{x}}||\). Then, the result is obtained by combining this inequality with (10) and the fact that \(\{x^k\}_K \rightarrow {\bar{x}}\). \(\square \)

3.2 Convergence to stationary points

In this subsection, we are concerned with the convergence analysis of AC2CD.

For a given starting point \(x^0\), let us first define the level set

To prove global convergence of AC2CD to stationary points, we need the following assumption, which requires that every point of \({\mathcal {L}}^0\) has at least one component strictly between the lower and the upper bound.

Assumption 1

\(\forall \, x \in {\mathcal {L}}^0, \, \exists \, i \in \{1,\ldots ,n\} :x_i \in (l_i,u_i)\).

Let us discuss the role played by this assumption, when combined with SC 1, in the convergence analysis of AC2CD. First, it guarantees that, for every outer iteration \(k \ge 0\), at least one pair of coordinates can be updated if and only if \(x^k\) is non-stationary. Indeed, every \(x^k\) remains in \({\mathcal {L}}^0\) by point (i) of SC 1 and, by the rule used to choose the index j(k), if Assumption 1 holds we have

So, from the stationarity conditions (2), it is straightforward to verify that a variable index \(p^k_i\) exists such that \(\nabla f(z^{k,i})^T d^{k,i} < 0\) with \({\bar{\alpha }}^{k,i} > 0\) if and only if \(x^k\) is non-stationary, that is, at least one pair of coordinates can be updated during the inner iterations if and only if \(x^k\) is non-stationary.

Second, as to be shown in the proof of Theorem 1, if Assumption 1 holds we can prove that \(\{\nabla _{j(k)} f(x^k)\}\) converges, over certain subsequences, to the KKT multiplier \(\lambda ^*\) defined as in (2). So, for sufficiently large k, we can measure the stationarity violation for each coordinate \(x^k_h\) by \(\nabla _h f(x^k) - \nabla _{j(k)} f(x^k)\) and guarantee that, at the limit, each coordinate satisfies (2).

Let us also remark that Assumption 1 is automatically satisfied in many cases, for example when \({\mathcal {F}}\) is the unit simplex, or when at least one variable has no bounds, that is, if an index \(i \in \{1,\ldots ,n\}\) exists such that \(l_i = -\infty \) and \(u_i = \infty \). Further, in [14], where the same assumption is used, it is shown that, for the Support Vector Machine training problem, this assumption is satisfied if the smallest eigenvalue of \(\nabla ^2 f\) is sufficiently large and the starting point \(x^0\) is such that \(f(x^0)<0\) (see Appendix B in [14] for more details).

Now, we are ready to show that, under Assumption 1, AC2CD converges to stationary points.

Theorem 1

Let Assumption 1 hold and let \(\{x^k\}\) be a sequence of points produced by AC2CD that satisfies SC 1. Then, every limit point of \(\{x^k\}\) is stationary for problem (1).

Proof

Let \(x^*\) be a limit point of \(\{x^k\}\) and let \(\{x^k\}_{K \subseteq \{0,1,\ldots \}}\) be a subsequence converging to \(x^*\). From the instructions of the algorithm, \(x^*\) is a feasible point. Moreover, from Lemma 1 we can write

By continuity of f, we have that \(\{f(x^k)\}_K\) converges to \(f(x^*)\). Since \(\{f(x^k)\}\) is monotonically non-increasing (by point (i) of SC 1) we have that \(\{f(x^k)\}\) converges to \(f(x^*)\). Therefore, by point (ii) of SC 1 it follows that

Using the fact that the set of indices \(\{1,\ldots ,n\}\) is finite, there exist an index \({\hat{\jmath }} \in \{1,\ldots ,n\}\) and a further infinite subsequence, that we still denote by \(\{x^k\}_K\) without loss of generality, such that

We first want to show that a real number \(\rho > 0\) exists such that

To this extent, by Assumption 1 we have that an index \({\bar{h}} \in \{1,\ldots ,n\}\) exists such that \(x^*_{{\bar{h}}} \in (l_{{\bar{h}}},u_{{\bar{h}}})\). So, using the operator \(D_h\) defined as in (3), a positive real number \(\rho \) exists such that \(D_{{\bar{h}}}(x^*) \ge (4/\tau ) \rho \), where \(\tau \in (0,1]\) is the parameter defined at step 0 of Algorithm 1, used to compute j(k). Since \(\{x^k\}_K \rightarrow x^*\), by continuity of \(D_h\) we have that \(D_{{\bar{h}}}(x^k) \ge (2/\tau ) \rho \) for all sufficiently large \(k \in K\). Using the definition of \(D^k\) given in (5), we obtain

By the rule used to choose the index j(k), we can write

The above relation, combined with (12), implies that \(D_{{\hat{\jmath }}}(z^{k,i}) \ge \rho \), \(i = 1,\ldots ,n\), for all sufficiently large \(k \in K\). Equivalently, (13) holds.

Now, we want to show that the stationarity conditions (2) are satisfied at \(x^*\) with

Reasoning by contradiction, assume that this is not true. Then, there exists an index \({\hat{t}} \in \{1,\ldots ,n\}{\setminus }\{{\hat{\jmath }}\}\) that violates (2). Using again the fact that the set of indices \(\{1,\ldots ,n\}\) is finite, there also exist an index \({\hat{\imath }} \in \{1,\ldots ,n\}\) and a further infinite subsequence, that we still denote by \(\{x^k\}_K\) without loss of generality, such that \(p^k_{{\hat{\imath }}}\) is constant and equal to \({\hat{t}}\) for all \(k \in K\). Since also j(k) is constant (and equal to \({\hat{\jmath }}\)) for all \(k \in K\), we thus obtain

By (11) and the continuity of \(\nabla f\), it follows that \(\{\nabla f(z^{k,i})\}_K\) is bounded for all \(i = 1,\ldots ,n\). So, a real number T exists such that

Therefore,

Since we have assumed \({\hat{t}}\) to violate (2) with \(\lambda ^*\) defined as in (14), one of the following three cases must hold.

-

(i)

\(x^*_{{\hat{t}}} \in (l_{{\hat{t}}}, u_{{\hat{t}}})\) and \(|\nabla _{{\hat{\jmath }}} f(x^*)-\nabla _{{\hat{t}}} f(x^*)| > 0\). Taking into account (11), a real number \(\zeta > 0\) exists such that

$$\begin{aligned} \min \{z^{k,{\hat{\imath }}}_{{\hat{t}}} - l_{{\hat{t}}}, u_{{\hat{t}}} - z^{k,{\hat{\imath }}}_{{\hat{t}}}\} \ge \zeta , \quad&\forall \, \text {sufficiently large } k \in K, \end{aligned}$$(16)$$\begin{aligned} |g^{k, {\hat{\imath }}}| = |\nabla _{{\hat{\jmath }}} f(z^{k, {\hat{\imath }}}) - \nabla _{{\hat{t}}} f(z^{k, {\hat{\imath }}})| \ge \zeta , \quad&\forall \, \text {sufficiently large } k \in K. \end{aligned}$$(17)From (13), (15), (16), (17) and the definition of \({\bar{\alpha }}^{k,i}\) given in (8), we obtain

$$\begin{aligned} {\bar{\alpha }}^{k,{\hat{\imath }}} \ge \min \Bigl \{\frac{\zeta }{2T}, \frac{\rho }{2T}\Bigl \} > 0, \quad \forall \, \text {sufficiently large } k \in K. \end{aligned}$$Therefore, by point (iii) of SC 1 we get

$$\begin{aligned} 0 = \lim _{\begin{array}{c} k \rightarrow \infty \\ k \in K \end{array}} \nabla f(z^{k,{\hat{\imath }}})^T d^{k,{\hat{\imath }}} = \lim _{\begin{array}{c} k \rightarrow \infty \\ k \in K \end{array}} -\bigl [\nabla _{{\hat{\jmath }}} f(z^{k,{\hat{\imath }}})-\nabla _{{\hat{t}}} f(z^{k,{\hat{\imath }}})\bigr ]^2, \end{aligned}$$where the second equality follows from (7). We thus obtain a contradiction with (17).

-

(ii)

\(x^*_{{\hat{t}}} = l_{{\hat{t}}}\) and \(\nabla _{{\hat{t}}} f(x^*) < \nabla _{{\hat{\jmath }}} f(x^*)\). Taking into account (11), we have

$$\begin{aligned} u_{{\hat{t}}} - z^{k,{\hat{\imath }}}_{{\hat{t}}} \ge \frac{u_{{\hat{t}}}-l_{{\hat{t}}}}{2}, \quad \forall \, \text {sufficiently large } k \in K, \end{aligned}$$(18)and a real number \(\zeta > 0\) exists such that

$$\begin{aligned} g^{k, {\hat{\imath }}} = \nabla _{{\hat{\jmath }}} f(z^{k, {\hat{\imath }}}) - \nabla _{{\hat{t}}} f(z^{k, {\hat{\imath }}}) \ge \zeta , \quad \forall \, \text {sufficiently large } k \in K. \end{aligned}$$(19)From (13), (15), (18), (19) and the definition of \({\bar{\alpha }}^{k,i}\) given in (8), we obtain

$$\begin{aligned} {\bar{\alpha }}^{k,{\hat{\imath }}} \ge \min \Bigl \{\frac{u_{{\hat{t}}}-l_{{\hat{t}}}}{4T}, \frac{\rho }{2T}\Bigl \} > 0, \quad \forall \, \text {sufficiently large } k \in K. \end{aligned}$$Therefore, by point (iii) of SC 1 we get

$$\begin{aligned} 0 = \lim _{\begin{array}{c} k \rightarrow \infty \\ k \in K \end{array}} \nabla f(z^{k,{\hat{\imath }}})^T d^{k,{\hat{\imath }}} = \lim _{\begin{array}{c} k \rightarrow \infty \\ k \in K \end{array}} -\bigl [\nabla _{{\hat{\jmath }}} f(z^{k,{\hat{\imath }}})-\nabla _{{\hat{t}}} f(z^{k,{\hat{\imath }}})\bigr ]^2, \end{aligned}$$where the second equality follows from (7). We thus obtain a contradiction with (19).

-

(iii)

\(x^*_{{\hat{t}}} = u_{{\hat{t}}}\) and \(\nabla _{{\hat{t}}} f(x^*) > \nabla _{{\hat{\jmath }}} f(x^*)\). This is a verbatim repetition of the previous case, which again leads to a contradiction.

We can thus conclude that \(x^*\) is a stationary point. \(\square \)

4 Computation of the stepsize

In this section, we describe some practical ways to compute the stepsize in order to satisfy SC 1. We consider different classes of objective functions: general non-convex functions, those with Lipschitz continuous gradient and strictly convex functions.

4.1 General non-convex objective functions

When the objective function is non-convex, a common way to compute the stepsize is using an inexact line search. Here, we consider the Armijo line search, which is now described. Given any inner iterate \(z^{k,i}\) and the direction \(d^{k,i}\), first we choose a trial feasible stepsize \({\varDelta }^{k,i}\) and then we obtain \(\alpha ^{k,i}\) by a backtracking procedure. In particular, we set

where c is the smallest nonnegative integer such that

and \(\delta \in (0,1)\), \(\gamma \in (0,1)\) are fixed parameters.

For what concerns the choice of \({\varDelta }^{k,i}\), first it must be feasible, that is, \({\varDelta }^{k,i} \le {\bar{\alpha }}^{k,i}\). Moreover, as to be shown in the proof of the following proposition, \({\varDelta }^{k,i}\) must be bounded from above by a finite positive constant to satisfy point (ii) of SC 1. Namely, we require that \({\varDelta }^{k,i} \le A^{k,i}\), where \(A^{k,i}\) is any scalar satisfying \(0< A_l \le A^{k,i} \le A_u < \infty \), with \(A_l\) and \(A_u\) being fixed parameters. In conclusion, in the Armijo line search we set \({\varDelta }^{k,i} = \min \{{\bar{\alpha }}^{k,i}, A^{k,i}\}\). In the next proposition, we show that a stepsize computed in this way satisfies SC 1.

Proposition 2

Let \(\delta \in (0,1)\), \(\gamma \in (0,1)\) and \(0< A_l \le A_u < \infty \). Then, SC 1 is satisfied by computing, at every inner iteration (k, i), the stepsize \(\alpha ^{k,i}\) as in (20), where c is the smallest nonnegative integer such that (21) holds, \({\varDelta }^{k,i} = \min \{{\bar{\alpha }}^{k,i}, A^{k,i}\}\) and \(A^{k,i} \in [A_l,A_u]\).

Proof

We first observe that, at every inner iteration (k, i), there exists a nonnegative integer c satisfying (21), since, from (7), we have \(\nabla f(z^{k,i})^T d^{k,i} < 0\) for all \(d^{k,i} \ne 0\).

Point (i) of SC 1 immediately follows from (21) and the fact that \(\alpha ^{k,i} \le {\varDelta }^{k,i}\), with \({\varDelta }^{k,i}\) feasible. Now, we prove point (ii) of SC 1. We first show that a real number \(\sigma > 0\) exists such that, for all \(k \ge 0\), we have

From (20) and (21), for all \(k \ge 0\) we can write

Using (7), we obtain

Moreover, \(z^{k,i+1}-z^{k,i} = \alpha ^{k,i} g^{k,i} (e_{p^k_i} - e_{j(k)})\), and then, \(||z^{k,i+1}-z^{k,i}||^2 = 2 (\alpha ^{k,i})^2 (g^{k,i})^2\). Using this equality in (24), and recalling that \(\alpha ^{k,i} \le A_u < \infty \), we have that

Therefore, (22) holds with \(\sigma = \gamma /(2A_u)\) for all \(k \ge 0\). So, point (ii) of SC 1 is satisfied by combining (22) with the fact that \(f(x^{k+1}) = f(z^{k,n+1}) \le f(z^{k,n}) \le \cdots \le f(z^{k,1}) = f(x^k)\) for all \(k \ge 0\).

Now, we prove that also point (iii) of SC 1 holds. Let \(\{z^{k,{\hat{\imath }}}\}_K\), \({\hat{\imath }}\), \({\hat{\jmath }}\) and \({\bar{\alpha }}^{k,{\hat{\imath }}}\) be defined as in point (iii) of SC 1. Rearranging the terms in (23), we can write

Moreover, since \(\{z^{k,{\hat{\imath }}}\}_K\) converges and \(f(x^{k+1}) = f(z^{k,n+1}) \le f(z^{k,n}) \le \ldots \le f(z^{k,1}) = f(x^k)\) for all \(k \ge 0\), by continuity of f we have that \(\{f(x^k)\}\) converges, implying that

Combining (25) and (26), we obtain

Proceeding by contradiction, we assume that it does not hold that

Since \(\{z^{k,{\hat{\imath }}}\}_K\) converges, then it is bounded and, by continuity of \(\nabla f\) and the definition of the direction \(d^{k,i}\), also \(\bigl \{\nabla f(z^{k,{\hat{\imath }}})\bigr \}_K\) and \(\{d^{k,{\hat{\imath }}}\}_K\) are bounded. So, if (28) does not hold, there exist further infinite subsequences, that we still denote by \(\{z^{k,{\hat{\imath }}}\}_K\), \(\bigl \{\nabla f(z^{k,{\hat{\imath }}})\bigr \}_K\) and \(\{d^{k,{\hat{\imath }}}\}_K\) without loss of generality, such that

and

with \(\eta > 0\). From (27) and (30), we get

Since \(A^{k,{\hat{\imath }}} \ge A_l > 0\) for all \(k \ge 0\) and \({\bar{\alpha }}^{k,{\hat{\imath }}} \ge \xi /2 > 0\) for all sufficiently large \(k \in K\), using the definition of \({\varDelta }^{k,{\hat{\imath }}}\) we obtain

Consequently, by (31), an outer iteration \({\bar{k}} \in K\) exists such that

The above relation implies that (21) is satisfied with \(c > 0\) for all \(k \ge {\bar{k}}\), \(k \in K\). Therefore,

By the mean value theorem, we can write

where \(\displaystyle {\beta ^{k,{\hat{\imath }}} = z^{k,{\hat{\imath }}} + \theta ^{k,{\hat{\imath }}} \frac{\alpha ^{k,{\hat{\imath }}}}{\delta } d^{k,{\hat{\imath }}}}\) and \(\theta ^{k,{\hat{\imath }}} \in (0,1)\). Using (32) and (33), we obtain

Since \(\theta ^{k,{\hat{\imath }}} \in (0,1)\), and taking into account (29) and (31), it follows that \(\{\beta ^{k,{\hat{\imath }}}\}_K \rightarrow {\tilde{z}}\). So, passing to the limit in (34), we have that \(\nabla f({\tilde{z}})^T {\tilde{d}} \ge \gamma \nabla f({\tilde{z}})^T {\tilde{d}}\). Using (30), we obtain \(-\eta \ge -\gamma \eta \), contradicting the fact that \(\eta > 0\) and \(\gamma \in (0,1)\). Then, point (iii) of SC 1 holds. \(\square \)

4.2 Objective functions with Lipschitz continuous gradient

In this subsection, we consider the case where \(\nabla f\) is Lipschitz continuous over \({\mathcal {F}}\) with constant L. Namely, we assume that

First, let us recall a result due to Beck [1], which will be useful in the sequel.

Lemma 2

Let us assume that \(\nabla f\) is Lipschitz continuous over \({\mathcal {F}}\) with constant L. For any point \(z \in {\mathcal {F}}\) and any pair of indices \(i,j \in \{1,\ldots ,n\}\), define the function

where \(I_{i,j,z}\) is the interval that comprises the feasible stepsizes. Namely,

Then, every \({{\dot{\phi }}}_{i,j,z}\) is Lipschitz continuous over \(I_{i,j,z}\) with constant \(L_{i,j} \le 2L\), that is,

Proof

See Sect. 3 in [1]. \(\square \)

Now, let \({\bar{L}}_{i,j}\) be some positive overestimates of \(L_{i,j}\), with \(L_{i,j}\) being the Lipschitz constants defined in Lemma 2. Namely,

We will show that these overestimates can be used to compute, in closed form, a stepsize satisfying SC 1. In particular, for a given fixed parameter \(\gamma \in (0,1)\), at every inner iteration (k, i) we can set

As to be pointed out in the proof of the following proposition, this stepsize can be seen as a particular case of the Armijo stepsize defined in Proposition 2. Note also that, since \(L_{i,j} \le 2L\), every positive overestimate of 2L can be used in (38).

Proposition 3

Let us assume that \(\nabla f\) is Lipschitz continuous over \({\mathcal {F}}\) with constant L and let \(\gamma \in (0,1)\). Then, SC 1 is satisfied by computing, at every inner iteration (k, i), the stepsize \(\alpha ^{k,i}\) as in (38).

Proof

Let \(i,j \in \{1,\ldots ,n\}\) be any pair of indices and consider inequality (36). Observing that \(0 \in I_{i,j,z}\) for every feasible z, and using known results on functions with Lipschitz continuous gradient (see, e.g., [23]), we can write

Since \({\dot{\phi }}_{i,j,z}(t) = \nabla _i f\bigl (z+t(e_i-e_j)\bigr )-\nabla _j f\bigl (z+t(e_i-e_j)\bigr )\), it follows that

To prove the assertion, it is sufficient to show that \(\alpha ^{k,i}\), defined as in (38), is a particular case of the Armijo stepsize defined in Proposition 2. To this extent, we can set \(A^{k,i} = 2(1-\gamma )/ {\bar{L}}_{p^k_i,j(k)}\) (all these quantities are positive and finite) and, by (38), we obtain \(\alpha ^{k,i} = {\varDelta }^{k,i}\), with \({\varDelta }^{k,i}\) defined as in Proposition 2. So, all we need is to prove that (21) holds with \(c=0\) for this choice of \({\varDelta }^{k,i}\). Namely, we want to show that

Since \({\varDelta }^{k,i} \le {\bar{\alpha }}^{k,i}\), and taking into account the definition of \(d^{k,i}\) given in (6), we have that

that is, \({\varDelta }^{k,i} g^{k,i} \in I_{p^k_i,j(k),z^{k,i}}\) by definition (35). Therefore, using (39) with i and j replaced by \(p^k_i\) and j(k), respectively, \(z = z^{k,i}\) and \(t = {\varDelta }^{k,i} g^{k,i}\), we obtain

where the equality follows from (7). Since \(0 \le {\varDelta }^{k,i} \le 2(1-\gamma )/{\bar{L}}_{p^k_i,j(k)}\), we get (40). \(\square \)

Remark 2

As appears from the proof of Proposition 3, the stepsize given in (38) satisfies SC 1 by using, for every pair of indices \(i,j \in \{1,\ldots ,n\}\), a positive constant \({\bar{L}}_{i,j} \ge L_{i,j}\), where it is sufficient that \(L_{i,j}\) satisfies (39). In particular, a case of interest is when we have a (possibly non-convex) separable objective function \(f(x) = \sum _{i=1}^n f_i(x_i)\) where each \(f_i :{\mathbb {R}}\rightarrow {\mathbb {R}}\) has a Lipschitz continuous derivative with constant \(L_i\). In this case, Proposition 3 holds even with \({\bar{L}}_{i,j}\) replaced by \({\bar{L}}_i + {\bar{L}}_j\) in (38), where \(\bar{L}_i\) and \(\bar{L}_j\) are two positive overestimates of \(L_i\) and \(L_j\), respectively. This follows from the fact that, in this case, (39) holds even with \(L_{i,j}\) replaced by \(L_i + L_j\), since, by Lipschitz continuity of \(\dot{f}_1,\ldots ,\dot{f}_n\), we have

Now, let us analyze the case where the objective function is quadratic of the following form: \(f(x) = \frac{1}{2} x^T Q x - q^T x\), with \(Q \in {\mathbb {R}}^{n \times n}\) symmetric and \(q \in {\mathbb {R}}^n\). Denoting by \(Q_{i,j}\) the element of Q in position (i, j), we have (again from [1])

So, at every inner iteration (k, i), we can easily obtain (a positive overestimate of) \(L_{p^k_i,j(k)}\) in order to compute the stepsize \(\alpha ^{k,i}\) as in (38).

Moreover, if \((d^{k,i})^T Q d^{k,i} > 0\) for a given direction \(d^{k,i}\), we can write

It follows that, when \((d^{k,i})^T Q d^{k,i} > 0\), we can set \(\gamma = 1/2\), \({\bar{L}}_{p^k_i,j(k)} = L_{p^k_i,j(k)}\) and the stepsize given in (38) is the exact stepsize, i.e., it is the feasible minimizer of \(f(z^{k,i} + \alpha d^{k,i})\) with respect to \(\alpha \).

Vice versa, if \((d^{k,i})^T Q d^{k,i} \le 0\) for a given direction \(d^{k,i}\), we can exploit the fact that the greatest objective decrease along \(d^{k,i}\) is achieved by setting \(\alpha ^{k,i}\) as large as possible, since

So, we can set \(\alpha ^{k,i} = \min \{{\bar{\alpha }}^{k,i}, A_u\}\), with \(0< A_u < \infty \) being a (large) fixed parameter. Using (41), it is easy to see that also this stepsize is a particular case of the Armijo stepsize defined in Proposition 2 (indeed, for every nonnegative value of \({\varDelta }^{k,i}\), the Armijo condition (21) is satisfied by \(c = 0\)).

4.3 Strictly convex objective functions

Let us consider a strictly convex objective function. In this case, we can satisfy SC 1 by computing, at every inner iteration (k, i), the stepsize \(\alpha ^{k,i}\) by an exact line search, that is,

To ensure that the above minimization is well defined at every inner iteration, we assume that \({\mathcal {L}}^0\) is compact.

Proposition 4

Let us assume that f is strictly convex and \({\mathcal {L}}^0\) is compact. Then, SC 1 is satisfied by computing, at every inner iteration (k, i), the stepsize \(\alpha ^{k,i}\) as in (42).

Proof

Point (i) of SC 1 immediately follows from the definition of \(\alpha ^{k,i}\). Since \(f(x^{k+1}) = f(z^{k,n+1}) \le f(z^{k,n}) \le \cdots \le f(z^{k,1}) = f(x^k)\) and each \(z^{k,i}\) lies in the compact set \({\mathcal {L}}^0\), it follows that \(\{f(x^k)\}\) converges. So, to prove point (ii) of SC 1 we have to show that \(\displaystyle {\lim _{k \rightarrow \infty } ||z^{k,i+1}-z^{k,i}|| = 0}\), \(i = 1,\ldots ,n\). Arguing by contradiction, assume that this is not true. Then, there exist a real number \(\rho > 0\), an index \({\hat{\imath }} \in \{1,\ldots ,n\}\) and an infinite subsequence \(\{z^{k,{\hat{\imath }}}\}_{K \subseteq \{0,1,\ldots \}}\) such that \(||z^{k,{\hat{\imath }}+1} - z^{k,{\hat{\imath }}}|| \ge \rho \) for all \(k \in K\). Since every point \(z^{k,i}\) lies in the compact set \({\mathcal {L}}^0\), there also exist a further infinite subsequence, that we still denote by \(\{z^{k,{\hat{\imath }}}\}_K\) without loss of generality, and two distinct points \(z', z'' \in {\mathbb {R}}^n\) such that

As \(\alpha ^{k,i}\) is obtained by an exact line search, we can write

where the second inequality follows from the convexity of f and the last inequality follows from the fact that \(f(z^{k,{\hat{\imath }}+1}) \le f(z^{k,{\hat{\imath }}})\). Moreover, since \(\{f(x^k)\}\) converges, a real number \({\bar{f}}\) exists such that

By continuity of the objective function, we can write

So, passing to the limit in (44), we obtain \({\bar{f}} \le f\Bigl (\dfrac{z'+z''}{2}\Bigr ) \le {\bar{f}}\), that is, \(f\Bigl (\dfrac{z'+z''}{2}\Bigr ) = {\bar{f}}\). Adding to the left-hand side of this equality the two quantities \(\Bigl (\dfrac{1}{2} {\bar{f}} - \dfrac{1}{2} f(z')\Bigr )\) and \(\Bigl (\dfrac{1}{2} {\bar{f}} - \dfrac{1}{2} f(z'')\Bigr )\), that are both equal to zero, we get

contradicting the fact that f is strictly convex and \(z' \ne z''\). Then, point (ii) of SC 1 holds.

Finally, point (iii) of SC 1 can be proved by the same arguments used in the proof of Proposition 2 for the Armijo stepsize, just observing that the objective decrease achieved by the exact line search is greater than or equal to the one achieved by the Armijo line search. \(\square \)

5 Convergence rate analysis

In this section, we show that the convergence rate of AC2CD is linear under standard additional assumptions.

The key to prove linear convergence rate of AC2CD is to show a relation between the points produced by AC2CD and the points produced by the classical coordinate descent method applied to an equivalent transformed problem. Then, linear convergence rate follows from the well known properties of the classical coordinate descent method proved by Luo and Tseng [17] and by Beck and Tetruashvili [2].

In particular, here we give two results. The first one is more general and states that, eventually, \(\{f(x^k)\}\) converges linearly to the optimal value of problem (1), that is, \(C \in [0,1)\) exists such that \(f(x^{k+1})-f(x^*) \le C [f(x^k)-f(x^*)]\) for all sufficiently large k, with \(x^*\) being the optimal solution of problem (1). The second result is for the case where there are no bounds on the variables and establishes a non-asymptotic linear convergence rate, that is, the above inequality holds for every \(k \ge 0\).

Let us start by showing the general result. First, we need a specific rule to compute the index j(k) in order to ensure that it remains constant from a certain outer iteration \({\hat{k}}\). In particular, we initialize AC2CD with \(\tau \in (0,1)\) (step 0 of Algorithm 1) and, from a certain \(k \ge 1\), we adopt the following rule: \(j(k) = j(k-1)\) if this choice satisfies (4), otherwise j(k) is set equal to any index h such that \(D_h(x^k) = D^k\), where \(D_h\) and \(D^k\) are the operators given in (3) and (5), respectively. Namely,

We can state the following intermediate lemma.

Lemma 3

Let Assumption 1 hold and let \(\tau \in (0,1)\). Let \(\{x^k\}\) be a sequence of points produced by AC2CD, where j(k) is computed as in (46) from a certain \(k \ge 1\). Let us also assume that \(\lim _{k \rightarrow \infty } \{x^k\} = x^* \in {\mathbb {R}}^n\).

Then, there exist a variable index \({\bar{\jmath }}\) and an outer iteration \({\hat{k}}\) such that \(j(k) = {\bar{\jmath }}\) for all \(k \ge {\hat{k}}\). Moreover, \(x^*_{{\bar{\jmath }}} \in (l_{{\bar{\jmath }}}, u_{{\bar{\jmath }}})\).

Proof

Let \(j^* \in {{{\,\mathrm{Argmax}\,}}}_{h = 1,\ldots ,n} D_h(x^*)\). From Assumption 1, we have \(D_{j^*}(x^*)> 0\).

First, we prove that there exist a variable index \({\bar{\jmath }}\) and an outer iteration \({\hat{k}}\) such that \(j(k) = {\bar{\jmath }}\) for all \(k \ge {\hat{k}}\). Arguing by contradiction, assume that this is not true. Since the set of indices \(\{1,\ldots ,n\}\) is finite, there exist two indices \(j_1,j_2 \in \{1,\ldots ,n\}\) and two infinite subsequences \(\{x^k\}_{K_1}\) and \(\{x^k\}_{K_2}\) such that

Since j(k) is computed as in (46) from a certain \(k \ge 1\), it follows that

By continuity of the operator \(D_h\), we can write

Combining these two inequalities, and recalling that \(D_{j^*}(x^*) > 0\), we obtain

This is contradiction, since \(D_{j^*}(x^*) \ge D_{j_2}(x^*)\) and \(\tau \in (0,1)\). So, a variable index \({\bar{\jmath }}\) and an outer iteration \({\hat{k}}\) exist such that \(j(k) = {\bar{\jmath }}\) for all \(k \ge {\hat{k}}\).

To prove that \(x^*_{{\bar{\jmath }}} \in (l_{{\bar{\jmath }}},u_{{\bar{\jmath }}})\), assume by contradiction that \(D_{{\bar{\jmath }}} (x^*) = 0\). Since j(k) is computed as in (46) from a certain \(k \ge 1\) and \(j(k) = {\bar{\jmath }}\) for all \(k \ge {\hat{k}}\), for all sufficiently large k we have \(D_{{\bar{\jmath }}}(x^k) \ge \tau D^k \ge \tau D_{j^*}(x^k)\). By continuity of the operator \(D_h\), we obtain

which leads to a contradiction, since \(D_{j^*}(x^*) > 0\) and \(\tau \in (0,1)\). Therefore, \(x^*_{{\bar{\jmath }}} \in (l_{{\bar{\jmath }}},u_{{\bar{\jmath }}})\). \(\square \)

Now, we are ready to show that, eventually, \(\{f(x^k)\}\) converges linearly to \(f(x^*)\) under the following assumption.

Assumption 2

It holds that

-

f is strictly convex twice continuously differentiable over \({\mathbb {R}}^n\);

-

the optimal solution of problem (1), denoted by \(x^*\), exists;

-

\(\nabla ^2 f(x^*) \succ 0\).

Note that Assumption 2 implies that \({\mathcal {L}}^0\) is compact (see Lemma 9.1 in [29]). Therefore, if also Assumption 1 holds and SC 1 is satisfied, then \(\{x^k\}\) converges to the optimal solution \(x^*\). As a further consequence, under Assumption 2 we can compute the stepsize by an exact line search to satisfy SC 1 (see Proposition 4).

Theorem 2

Let Assumption 1 and 2 hold, and let \(\tau \in (0,1)\). Let \(\{x^k\}\) be a sequence of points produced by AC2CD, where j(k) is computed as in (46) from a certain \(k \ge 1\) and the stepsize is computed as indicated in Proposition 4.

Then, a real number \(C \in [0,1)\) and an outer iteration \({\bar{k}}\) exist such that

Proof

Without loss of generality, we assume that \(n>1\) (otherwise the feasible set is either empty or a singleton). Let \({\bar{\jmath }}\) and \({\hat{k}}\) be the variable index and the outer iteration defined in Lemma 3, respectively. Namely, \(j(k) = {\bar{\jmath }}\) for all \(k \ge {\hat{k}}\). To simplify the notation, without loss of generality we assume that

Let us consider the following variable transformation:

Equivalently, we can write

where, recalling that \({\bar{\jmath }} = n\),

Note that the columns of M are linearly independent and, for every point \(x \in {\mathbb {R}}^n\) such that \(\sum _{i=1}^n x_i = b\), the linear system \(x = M y + w\) has a unique solution given by \(y_i = x_i\), \(i = 1,\ldots ,n-1\).

Now, since \(x^*_n \in (l_n,u_n)\) (by Lemma 3) and f is strictly convex, in problem (1) we can remove the bound constraints on the variable \(x_n\) and \(x^*\) is still the unique optimal solution. Consequently, by defining the function \(\psi :{\mathbb {R}}^{n-1} \rightarrow {\mathbb {R}}\) as \(\psi (y) {:}{=} f\bigl (M y + w\bigr )\), we can recast problem (1) as follows:

We observe that, for every feasible point y of problem (51), the corresponding x obtained by (49) satisfies both the equality constraint \(\sum _{i=1}^n x_i = b\) and the bound constraints \(l_i \le x_i \le u_i\), \(i = 1,\ldots ,n-1\) (the bound constraints on \(x_n\) are ignored for the reasons explained above).

An optimal solution \(y^*\) of problem (51) exists, is unique and is given by \(y^*_i = x^*_i\), \(i = 1,\ldots ,n-1\). Indeed, this \(y^*\) clearly satisfies \(x^* = M y^* + w\) and \(\psi (y^*) = f(x^*)\). So, if a feasible point \({\tilde{y}} \ne y^*\) of problem (51) existed such that \(\psi ({\tilde{y}}) \le \psi (y^*)\), it would mean that \(M {\tilde{y}} + w \ne M y^* + w\) (since the columns of M are linearly independent) and \(f(M {\tilde{y}} + w) \le f(M y^* + w) = f(x^*)\). But this is not possible, since \(M {\tilde{y}}\) would be feasible for problem (1) after removing the bound constraints on \(x_n\), and we said above that \(x^*\) is still the unique optimal solution of problem (1) even if we remove the bound constraints on \(x_n\).

Now, for any \(k \ge {\hat{k}}\) and for any \(i = 1,\ldots ,n\), let us define \(y^{k,i}\) as the unique vector [feasible for problem (51)] satisfying

which is given by

For all \(k \ge {\hat{k}}\), without loss of generality we can consider only the first \(n-1\) inner iterations in AC2CD. Indeed, by (47) we are assuming that \(p^k_n = {\bar{\jmath }}\) for all \(k \ge {\hat{k}}\), and then, no pair of coordinates is updated in the inner iteration (k, n) for all \(k \ge {\hat{k}}\) (see Remark 1). Let us consider any inner iteration (k, i), with \(k \ge {\hat{k}}\) and \(i \in \{1,\ldots ,n-1\}\). We want to show that

In other words, (54) says that moving \(z^{k,i}\) along \(d^{k,i}\) with a certain stepsize \(\alpha \) corresponds, in the y space, to moving the \(p^k_i\)th coordinate of \(y^{k,i}\) along \(-\nabla _{p^k_i} \psi (y^{k,i})\) with the same stepsize \(\alpha \), keeping all the other coordinates of \(y^{k,i}\) fixed. To prove that (54) holds, we use again the fact that, for all \(\alpha \in {\mathbb {R}}\), the linear system \(z^{k,i} + \alpha d^{k,i} = M y + w\) has a unique solution y given by \(y_h = z^{k,i}_h + \alpha d^{k,i}_h\), \(h = 1,\ldots ,n-1\). Then, (54) is equivalent to writing

Since \(d^{k,i}_h = 0\) for all \(h \notin \{p^k_i, {\bar{\jmath }}\}\), and we are assuming that \({\bar{\jmath }} = n\), (55b) immediately follows from (53). To obtain (55a), we use (53) again to write \(y^{k,i}_{p^k_i} = z^{k,i}_{p^k_i}\). Thus, we only need to show that \(d^{k,i}_{p^k_i} = -\nabla _{p^k_i} \psi (y^{k,i})\). This follows from the relation \(\nabla \psi (y) = M^T \nabla f(My + w)\), that, combined with (52) and the definition of M given in (50), yields to

Therefore, (55) holds, implying that (54) holds too. Using \(\alpha = \alpha ^{k,i}\) in (55), for all \(k \ge {\hat{k}}\) we can write

Using (53) (with i replaced by \(i+1\)) in the above relations, for all \(k \ge {\hat{k}}\) we obtain

We see that, for all \(k \ge {\hat{k}}\), the vectors \(y^{k,1}, \ldots , y^{k,n}\) are the same that would be generated by a coordinate descent algorithm applied to problem (51). In particular, for all \(k \ge {\hat{k}}\), every \(y^{k+1,1}\) is obtained from \(y^{k,1}\) by selecting one coordinate at a time by a Gauss-Seidel (or cyclic) rule and moving it with a certain stepsize.

Now we want to show that, for all sufficiently large k, each stepsize \(\alpha ^{k,i}\) is the same that would be obtained by performing an exact line search in the updating scheme (56), applied to problem (51). First observe that there exists \({\tilde{k}} \ge {\hat{k}}\) such that \(z^{k,i}_{{\bar{\jmath }}} \in (l_{{\bar{\jmath }}},u_{{\bar{\jmath }}})\), \(i = 1,\ldots ,n\), for all \(k \ge {\tilde{k}}\), as \(x^*_{{\bar{\jmath }}} \in (l_{{\bar{\jmath }}},u_{{\bar{\jmath }}})\) and \(\{z^{k,i}\} \rightarrow x^*\), \(i = 1,\ldots ,n\). Therefore, since an exact line search is used in AC2CD, for all \(i = 1,\ldots ,n\) we can write

In other words, for all \(k \ge {\tilde{k}}\), the constraints \(z^{k,i}_{{\bar{\jmath }}} + \alpha d^{k,i}_{{\bar{\jmath }}} \in [l_{{\bar{\jmath }}}, u_{{\bar{\jmath }}}]\) with respect to \(\alpha \) are not necessary for the computation of the stepsize in AC2CD. Combining (57) with (54), we get that, for all \(k \ge {\tilde{k}}\), each \(\alpha ^{k,i}\) is the optimal solution of the following one-dimensional problem:

In particular, the last bound constraints in (58) follow from those in (57), using (55a). Therefore, for all \(k \ge {\tilde{k}}\), each \(\alpha ^{k,i}\) is the stepsize that would be obtained by performing an exact line search in the coordinate descent scheme (56), applied to problem (51).

So, according to the results established by Luo and Tseng [17], eventually \(\{\psi (y^{k,1})\}\) converges linearly to the optimal value of problem (51) if the following three conditions hold: (i) \(\psi \) is strictly convex twice continuously differentiable over \({\mathbb {R}}^{n-1}\), (ii) the optimal solution \(y^*\) of problem (51) exists, (iii) \(\nabla ^2 \psi (y^*) \succ 0\). The second point has already been proved before, while the other two points follow by combining Assumption 2 with the fact that the columns of M are linearly independent and \(x^* = My^* + w\). We conclude that a real number \(C \in [0,1)\) and an outer iteration \({\bar{k}} \ge {\tilde{k}}\) exist such that

Since \(\psi (y^*) = f(x^*)\), \(\psi (y^{k,1}) = f(z^{k,1}) = f(x^k)\) and \(\psi (y^{k+1,1}) = f(z^{k+1,1}) = f(x^{k+1})\), we get the result. \(\square \)

Now, we focus on the case where there are no bounds on the variables, i.e., \(l_i = -\infty \) and \(u_i = \infty \) for all \(i = 1,\ldots ,n\). In this case, a non-asymptotic linear convergence rate can be achieved by using the stepsize defined in Proposition 3 (with \(\gamma = 1/2\)). To obtain this result, we need the following assumption.

Assumption 3

It holds that

-

f is strongly convex over \({\mathbb {R}}^n\) with constant \(\mu \);

-

\(\nabla f\) is Lipschitz continuous over \({\mathbb {R}}^n\) with constant L;

-

the optimal solution of problem (1), denoted by \(x^*\), exists.

Also in this case, we need to maintain the index j(k) fixed throughout the algorithm. In particular, now we require j(k) to be equal to any index \({\bar{\jmath }} \in \{1,\ldots ,n\}\) for all \(k \ge 0\) (note that any choice of \({\bar{\jmath }}\) is acceptable, since there are no bounds on the variables).

Theorem 3

Let us assume that there are no bounds on the variables in problem (1), i.e., \(l_i = -\infty \) and \(u_i = \infty \) for all \(i = 1,\ldots ,n\), and let Assumption 3 hold. Given any index \({\bar{\jmath }} \in \{1,\ldots ,n\}\), let \(\{x^k\}\) be a sequence of points produced by AC2CD, where \(j(k) = {\bar{\jmath }}\) for all \(k \ge 0\) and the stepsize is computed as indicated in Proposition 3, with \(\gamma = 1/2\).

Then, a real number \(C \in [0,1)\) exists such that

In particular, considering the constants \(L_{i,j}\) defined in Lemma 2 and the constants \({\bar{L}}_{i,j}\) used for the stepsize computation, we have

where \(\displaystyle {{\bar{L}}^{\text {min}}_{{\bar{\jmath }}} {:}{=} \min _{i \ne {\bar{\jmath }}} {\bar{L}}_{i,{\bar{\jmath }}}}\,\) and \(\displaystyle {\,{\bar{L}}^{\text {max}}_{{\bar{\jmath }}} {:}{=} \max _{i \ne {\bar{\jmath }}} {\bar{L}}_{i,{\bar{\jmath }}}}\).

Proof

Following the same line of arguments as in the proof of Theorem 2, without loss of generality we assume that \(n>1\) and that (47) holds for all \(k \ge 0\). Then, we consider the variable transformation \(x = My + w\) given in (48), (49) and (50) to define the function \(\psi :{\mathbb {R}}^{n-1} \rightarrow {\mathbb {R}}\) as \(\psi (y) {:}{=} f\bigl (M y + w\bigr )\). We obtain that problem (1) is equivalent to the following problem (which is now unconstrained since there are no bounds on the variables):

An optimal solution \(y^*\) of problem (61) exists, is unique and is given by \(y^*_i = x^*_i\), \(i = 1,\ldots ,n-1\), satisfying \(x^* = M y^* + w\) and \(\psi (y^*) = f(x^*)\). Moreover, for any \(k \ge 0\) and for any \(i = 1,\ldots ,n\), let us define \(y^{k,i}\) as in (52)–(53). We have that (56) holds for all \(k \ge 0\). Namely, for all \(k \ge 0\), every \(y^{k+1,1}\) is obtained from \(y^{k,1}\) by selecting one coordinate at a time by a Gauss-Seidel (or cyclic) rule and moving it with a certain stepsize.

Now, let us consider the stepsize \(\alpha ^{k,i}\). By hypothesis, it is computed as described in Proposition 3, with \(\gamma = 1/2\), using the constants \(L_{i,j}\) and \({\bar{L}}_{i,j}\) as they are defined in Lemma 2 and in (37), respectively. Since there are no bounds on the variables, for every \(d^{k,i} \ne 0\) we have \({\bar{\alpha }}^{k,i} = \infty \). Then,

We want to show that \(L_{1,{\bar{\jmath }}}, \ldots , L_{n-1,{\bar{\jmath }}}\) are the coordinatewise Lipschitz constants of \(\nabla \psi \) over \({\mathbb {R}}^{n-1}\). Namely, for every \(i = 1,\ldots ,n-1\) and for all \(y \in {\mathbb {R}}^{n-1}\), we want to show that

where \(y(\alpha ) \in {\mathbb {R}}^{n-1}\) is defined as follows:

Recalling that \({\bar{\jmath }} = n\) and the definition of M given in (50), first observe that \(\nabla _i \psi (y) = \nabla f\bigl (M y + w\bigr )^T (e_i - e_{{\bar{\jmath }}})\) and \(\nabla _i \psi \bigl (y(\alpha )\bigr ) = \nabla f\bigl (M y(\alpha ) + w\bigr )^T (e_i - e_{{\bar{\jmath }}}) = \nabla f\bigl (M y + w + \alpha (e_i - e_{{\bar{\jmath }}})\bigr )^T (e_i - e_{{\bar{\jmath }}})\). Thus,

Considering the functions \(\phi _{i,j,z}\) defined in Lemma 2, we have that \({\dot{\phi }}_{i,j,z}(\alpha ) = \nabla f\bigl (z+\alpha (e_i-e_j)\bigr )^T (e_i - e_j)\). Therefore, from Lemma 2 we get

and then (62) holds.

So, for all \(k \ge 0\) and all \(i=1,\ldots ,n-1\) such that \(d^{k,i} \ne 0\), each stepsize \(\alpha ^{k,i}\) is the reciprocal of an overestimate of \(L_{p^k_i, {\bar{\jmath }}}\), where \(L_{p^k_i, {\bar{\jmath }}}\) is the \(p^k_i\)th coordinatewise Lipschitz constant of \(\nabla \psi \). According to the results stated by Beck and Tetruashvili [2] (see Theorem 3.9 in [2]), \(\{\psi (y^{k,1})\}\) has a non-asymptotic linear convergence rate if the following three conditions hold: (i) \(\psi \) is strongly convex over \({\mathbb {R}}^{n-1}\), (ii) \(\nabla \psi \) is Lipschitz continuous over \({\mathbb {R}}^{n-1}\), (iii) the optimal solution \(y^*\) of problem (61) exists. The third point has already been proved before. The first point follows from Assumption 2 and the fact that the columns of M are linearly independent. The second point follows from the fact that \(L_{1,{\bar{\jmath }}}, \ldots , L_{n-1,{\bar{\jmath }}}\) are the coordinatewise Lipschitz constants of \(\nabla \psi \) over \({\mathbb {R}}^{n-1}\). In particular, using known results (see Lemma 2 in [22]) and the fact that \({\bar{\jmath }} = n\), we can write

Therefore, a real number \(C \in [0,1)\) exists such that

Then, we get (59) by using the fact that \(\psi (y^*) = f(x^*)\), \(\psi (y^{k,1}) = f(z^{k,1}) = f(x^k)\) and \(\psi (y^{k+1,1}) = f(z^{k+1,1}) = f(x^{k+1})\).

Now, we prove (60). First observe that, by Theorem 3.9 in [2], the constant C appearing in (64) is given by

where \(\mu ^{\psi }\) and \(L^{\psi }\) are the strong convexity constant of \(\psi \) over \({\mathbb {R}}^{n-1}\) and the Lipschitz constant of \(\nabla \psi \) over \({\mathbb {R}}^{n-1}\), respectively, while \({\bar{L}}^{\text {min}}_{{\bar{\jmath }}}\) and \({\bar{L}}^{\text {max}}_{{\bar{\jmath }}}\) are defined as in the assertion of the theorem. Using (63), we can upper bound \(L^{\psi }\) with \(\sum _{i \ne {\bar{\jmath }}} L_{i,{\bar{\jmath }}}\). Therefore, to obtain (60), we only have to show that

i.e., we have to show that \(\psi \) is strongly convex over \({\mathbb {R}}^{n-1}\) with constant \(\mu \). Using the fact that f is strongly convex over \({\mathbb {R}}^n\) with constant \(\mu \), for any \(y', y'' \in {\mathbb {R}}^{n-1}\) and for all \(\theta \in [0,1]\) we can write

Denoting by \(\lambda _{\text {min}}(M^T M)\) the smallest eigenvalue of \(M^T M\), we also have \(||M (y'-y'')||^2 = (y'-y'')^T M^T M (y'-y'') \ge \lambda _{\text {min}} (M^T M) ||y' - y''||^2\), and then,

Note that \(M^T M\) has all entries equal to 1, except for those on the diagonal that are equal to 2. Namely,

where \(I_{(n-1) \times (n-1)}\) is the \((n-1)\) dimensional identity matrix and \(1_{(n-1) \times (n-1)}\) is the \((n-1) \times (n-1)\) matrix made of all ones. It follows that \(\lambda _{\text {min}}(M^T M) = 1 + 0 = 1\), that, combined with (66), implies that \(\psi \) is strongly convex over \({\mathbb {R}}^{n-1}\) with constant \(\mu \). Then, (65) holds and the result is obtained. \(\square \)

Let us conclude this section by discussing the relation between AC2CD and the classical coordinate descent method. As appears from the proofs of Theorem 2 and Theorem 3, this relation is crucial to obtain linear convergence rate of AC2CD and it also provides further insight into the proposed method.

Indeed, for every \(k \ge 0\), a cycle of inner iterations \((k,1), \ldots , (k,n)\) in AC2CD can be seen as a cycle of iterations of the classical coordinate descent method, with cyclic selection rule, applied to an equivalent transformed problem. In particular, for every \(k \ge 0\) we can use the variable transformation given in (48), (49) and (50), with \({\bar{\jmath }}\) replaced by j(k), to define the function \(\psi (y) {:}{=} f\bigl (M y + w\bigr )\) and recast (1) as an equivalent problem. Note that, in absence of any information on j(k), the resulting equivalent transformed problem

-

can change from k to \(k+1\), as j(k) can change;

-

has bound constraints \(l_i \le y_i \le u_i\), \(i \in \{1,\ldots ,n\}{\setminus }\{j(k)\}\), plus the constraints \(l_{j(k)} \le b - \sum _{i \ne j(k)} y_i \le u_{j(k)}\), where the latter follow from the constraints \(l_{j(k)} \le x_{j(k)} \le u_{j(k)}\) in (1).

Then, in the proofs of Theorems 2 and 3, we obtain a linear convergence rate by exploiting the rule used to compute j(k) that, together with other standard assumptions, guarantees that \(j(k) = {\bar{\jmath }}\) for all \(k \ge {\hat{k}}\) and \(x^*_{{\bar{\jmath }}} \in (l_{{\bar{\jmath }}},u_{{\bar{\jmath }}})\) (for problems with no bounds on the variables, we have \({\hat{k}} = 0\)). In this way, for all sufficiently large k, the equivalent transformed problem remains the same and the constraints \(l_{j(k)} \le b - \sum _{i \ne j(k)} y_i \le u_{j(k)}\) can be ignored. It also follows that quickly identifying the index \({\bar{\jmath }}\) in problems with finite bounds on the variables may benefit the algorithm. Then, it may be useful combining AC2CD with some strategies to predict which variables are at the bounds in the final solution, such es, e.g., those proposed in [6, 8, 11, 27].

Finally, the approach of transforming (1) into an equivalent simply constrained problem has some connections with the method proposed by Bertsekas in [3] for solving problems with linear inequality constraints. In particular, at every iteration, the algorithm proposed in [3] selects a subset of n indices that comprises all those corresponding to the binding constraints. Then, assuming linear independence of these constraints, a linear variable transformation is used to obtain a new problem with a first block of box constraints, plus a second block of general linear inequality constraints which are not binding at the current point and can be ignored for the computation of the search direction, obtained by a Newton strategy. So, the main difference between AC2CD and the method proposed in [3] is that, for every \(k \ge 0\), we perform a cycle of inner iterations to update one coordinate at a time in the equivalent transformed problem.

6 Numerical results

In this section, we present the numerical results of AC2CD on some structured problems where the computation of the partial derivatives of the objective function is cheap with respect to the computation of the whole gradient. The codes were written in Matlab (version R2017b) and the experiments were run on an Intel(R) Core(TM) i7-7500U with 16 GB RAM memory.

First, we considered the Chebyshev center problem and the linear support vector machine (SVM) training problem, which both can be written as

for some \(Q \in {\mathbb {R}}^{m \times n}\), \(q \in {\mathbb {R}}^n\), \(l_i \in {\mathbb {R}}\cup \{-\infty \}\) and \(u_i \in {\mathbb {R}}\cup \{\infty \}\), \(i = 1,\ldots ,n\). On these convex quadratic problems, we compared AC2CD with the following block decomposition methods:

-

Random Coordinate Descent (RCD) [21], which, at every iteration, randomly selects two distinct variables from a given probability distribution and updates them by minimizing a quadratic model of the objective function;

-

Maximal Violating Pair (MVP) [9, 26], which, at every iteration, selects the two variables that most violate the stationarity conditions (2) and moves them by using an appropriate stepsize that, in our case, is computed by an exact line search.

Note that RCD, like AC2CD, does not need to compute the whole gradient of the objective function for choosing the working set. On the other hand, MVP exploits a Gauss-Southwell (or greedy) strategy which requires to calculate the whole vector \(\nabla f(x^k)\) at each iteration k.

Then, we focused on problems with strongly convex objective function and no bounds on the variables, for which non-asymptotic linear convergence rate of AC2CD has been proved in Sect. 5. In this case, we compared our algorithm with two versions of RCD that were proposed in [20] for problems of the following form:

where each \(f_i :{\mathbb {R}}\rightarrow {\mathbb {R}}\) is convex and has a Lipschitz continuous derivative with constant \(L_i > 0\). In our experiments, we considered the same test problems used in [20, 32], which are of the form of (68), with strongly convex \(f_i\), \(i = 1,\ldots ,n\).

Lastly, we considered the following non-convex problems:

for some \(Q \in {\mathbb {R}}^{m \times n}\), \(D \in {\mathbb {R}}^{m \times m}\) and \(q \in {\mathbb {R}}^n\), choosing D as a diagonal matrix so that \(Q^T D Q\) is indefinite. On these problems, AC2CD is still compared with RCD (whose analysis for the non-convex case can be found in [25]) and MVP with exact line search (whose analysis for non-convex problems over the unit simplex can be found in [4], just observing that, for this class of problems, MVP coincides with the so called pairwise Frank-Wolfe method).

Finally, to have a fair comparison between AC2CD and RCD, in the following results we consider an outer iteration of RCD as made of n inner iterations, each of them involving a minimization step with respect to a pair of coordinates.

6.1 Implementation issues

In this subsection, we describe some implementation details concerning

-

the computation of \(\{p^k_1,\ldots ,p^k_n\}\) at step 3 of Algorithm 1,

-

the computation of the derivatives of the objective function in each algorithm,

-

the termination criterion used in each algorithm.

As regards the first point, a permutation \(\{p^k_1,\ldots ,p^k_n\}\) of \(\{1,\ldots ,n\}\) was randomly computed at the beginning of every outer iteration k of AC2CD (it is known that, in coordinate descent methods with cyclic selection rules, periodically shuffling the ordering of the variables may speed up convergence in practice [7, 31]).

For what concerns the derivatives computation, this operation is straightforward for problem (68), given the separable structure of the objective function. More attention is needed for problems (67) and (69), since, due to the possibly excessive dimension of Q, in these problems the Hessian matrices \(Q^T Q\) and \(Q^T D Q\) were not stored. For what concerns problems (67), using some ideas from [10, 21] we introduced the vector \(r(x) {:}{=} Qx\), updating it during the iterations of each considered algorithm, so that

where \(Q_i\) is the ith column of Q. We see that computing any partial derivative has a cost \({\mathcal {O}}(m)\) and we have an extra cost \({\mathcal {O}}(2m)\) to update the vector r(x) (since the considered algorithms can move two variables at a time). These costs can also reduce when Q is sparse. Similarly, for what concerns problems (69), we introduced the vector \(r(x) {:}{=} DQx\) and (70) still holds (in this case we have a cost \({\mathcal {O}}(3m)\) to update r(x), due to the presence of the diagonal matrix D).

It is worth observing that, in AC2CD and RCD, both variables included in the working set at the beginning of an inner iteration may be at the lower or at the upper bound. In this case, the minimization step is not performed in practice (since the two variables cannot be moved), and then, the partial derivatives of the objective function do not need to be computed. So, by inserting a simple check in the scheme of AC2CD and RCD, we avoided to compute the partial derivatives of the objective function when not necessary.

For what concerns the termination criterion used in AC2CD, let us first rewrite the stationarity conditions (2) in an equivalent form:

Exploiting some ideas from [10], the termination criterion used in AC2CD tries to approximately satisfy the above relation without the need of computing the whole gradient of the objective function, in order to preserve efficiency. To this extent, at the beginning of every outer iteration k we first set \(G^k_{\text {min}} = \infty \) and \(G^k_{\text {max}} = -\infty \). Then, at every inner iteration (k, i) we update \(G^k_{\text {min}}\) and \(G^k_{\text {max}}\) as follows: for each variable \(z^{k,i}_h\) included in the working set, if \(z^{k,i}_h < u_h\) then we update \(G^k_{\text {min}} \leftarrow \min \{G^k_{\text {min}},\nabla _h f(z^{k,i})\}\), and if \(z^{k,i}_h > l_h\) then we update \(G^k_{\text {max}} \leftarrow \max \{G^k_{\text {max}},\nabla _h f(z^{k,i})\}\). So, AC2CD was stopped at the end of the first outer iteration k satisfying

where \(\epsilon \) is a scalar that was set to \(10^{-1}\). Since \(\nabla _{p^k_i} f(z^{k,i})\) and \(\nabla _{j(k)} f(z^{k,i})\) are not computed when both \(z^{k,i}_{p^k_i}\) and \(z^{k,i}_{j(k)}\) are at the lower or at the upper bound, for the reasons explained above, we also added a final check after that (71) is satisfied, evaluating all those components of \(\nabla f(x^k)\) that were skipped, if any.

For the convex problems considered in our experiments, we used the final objective value \(f^{\text {AC2CD}}\) returned by AC2CD as benchmark for termination of RCD and MVP. Namely, RCD and MVP were stopped when they produced a point \(x^k\) satisfying

where \(\nu \) is a scalar that was set to \(10^{-6}\). Clearly, a termination criterion based on (72) cannot be used when the objective function is non-convex, since the algorithms may converge to different stationary points. So, for the non-convex problems (69), the same termination criterion used in AC2CD was also used in RCD (recall that we consider an outer iteration of RCD as made of n inner iterations), while MVP, that computes \(\nabla f(x^k)\) at each iteration k, was stopped when it produced a point \(x^k\) such that \(\min _{i=1,\ldots ,n} \{\nabla _i f(x^k)\} - \max _{i :x_i>0} \{\nabla _i f(x^k)\} \ge -10^{-1}\).

6.2 Chebyshev center

Given a finite set of vectors \(v^1,\ldots ,v^n \in {\mathbb {R}}^m\), the Chebyshev center problem consists in finding the smallest ball that encloses all the given points. It arises in many fields, such as mechanical engineering, biology, environmental science and computer graphics (see [33] and the references therein for more details). The Chebyshev center problem can be formulated as the following convex standard quadratic problem:

Nine synthetic data sets were created by randomly generating each component of the samples \(v^1,\ldots ,v^n\) from a standard normal distribution, using \(n = 40{,}000, 60{,}000, 80{,}000\) and \(m = 0.01 n, 0.05 n, 0.1 n\) for every fixed n. The nine data sets are listed below:

-

(i)

\(n = 40{,}000\), \(m = 400\);

-

(ii)

\(n = 40{,}000\), \(m = 2000\);

-

(iii)

\(n = 40{,}000\), \(m = 4000\);

-

(iv)

\(n = 60{,}000\), \(m = 600\);

-

(v)

\(n = 60{,}000\), \(m = 3000\);

-

(vi)

\(n = 60{,}000\), \(m = 6000\);

-

(vii)

\(n = 80{,}000\), \(m = 800\);

-

(viii)

\(n = 80{,}000\), \(m = 4000\);

-

(ix)

\(n = 80{,}000\), \(m = 8000\).

For each data set, a randomly chosen vertex of the unit simplex was used as starting point. In AC2CD, for all \(k \ge 1\) the index j(k) was computed as in (46), with \(\tau = 0.9\) (for \(k = 0\) we set \(j(k) \in {{{\,\mathrm{Argmax}\,}}}_{h=1,\ldots ,n} D_h(x^k)\)), and the stepsize was computed as described in the last part of Sect. 4.2 for quadratic objective functions, with \(\gamma = 1/2\) and \(A_u = 10^{12}\). In RCD, an \({\mathcal {O}}(1)\) procedure was used at each inner iteration to randomly choose, from a uniform distribution, the pair of distinct variables to be updated. In particular, this procedure first randomly generates a real number r from a uniform distribution on \((0,\frac{n(n-1)}{2})\), and then sets \(i = 1 + \bigl \lfloor (\sqrt{1+8\lfloor r \rfloor }+1)/2 \bigr \rfloor \) and \(j = 1 + \bigl \lfloor \lfloor r \rfloor - (i-2)(i-1)/2 \bigr \rfloor \), where \(\lfloor \cdot \rfloor \) denotes the floor operation.

In Table 1, we report the results for each algorithm in terms of final objective value, number of (outer) iterations and CPU time in seconds. To analyze how fast the objective function decreases in the three considered algorithms, in Fig. 1 we plot the normalized optimization error \(E^k\) versus the CPU time. Coherently with the termination criterion used in the experiments, \(E^k\) is computed as the left-hand side of (72).

Normalized optimization error (y axis) versus CPU time in seconds (x axis) for AC2CD, RCD and MVP on Chebyshev center problems. The y axis is in logarithmic scale and, for each algorithm, the normalized optimization error is computed as the left-hand side of (72)

We see that, on all the considered data sets, AC2CD outperforms RCD both in CPU time, by a factor between 2 and 14, and in the number of outer iterations, by a factor between 60 and 247. Looking at Fig. 1 more in detail, we observe that AC2CD and RCD are comparable in the first iterations, but AC2CD is able to compute a solution with higher precision in a smaller amount of time. In comparison with MVP, we observe that AC2CD is slightly slower on the data sets with \(m = 0.01n\), i.e., data sets (i), (iv) and (vii) . On all the other data sets, on average AC2CD is more than 2 times faster than MVP in CPU time.

6.3 Linear SVM

Linear Support Vector Machine [5] is a popular technique for data classification, which aims at separating a given set of samples by a hyperplane. Formally, let \(v^1,\ldots ,v^n \in {\mathbb {R}}^m\) be a finite set of vectors and \(a^1,\ldots ,a^n \in \{-1,+1\}\) be the corresponding labels. To train a linear SVM, we can solve the following convex quadratic problem:

where C is a positive parameter, set to 1 in our experiments. As mentioned in Sect. 2, the above problem can be easily rewritten as in (1).

Eight data sets were downloaded from the LIBSVM [6] webpage https://www.csie.ntu.edu.tw/~cjlin/libsvmtools/datasets. They are listed below:

-

(i)

gisette (\(n = 6000\), \(m = 5000\));

-

(ii)

rcv1 (\(n = 20{,}242\), \(m = 47{,}236\));

-

(iii)

a9a (\(n = 32{,}561\), \(m = 123\));

-

(iv)

w8a (\(n = 49{,}749\), \(m = 300\));

-

(v)

ijcnn1 (\(n = 49{,}990\), \(m = 22\));

-

(vi)

real sim (\(n = 72{,}309\), \(m = 20{,}958\));

-

(vii)

webspam (\(n = 350{,}000\), \(m = 254\));

-

(viii)

covtype (\(n = 581{,}012\), \(m = 54\)).

For each data set, we used as starting point a vector made of all zeros except for two randomly chosen variables that were set strictly between the lower and the upper bound. In AC2CD, the index j(k) and the stepsize were computed as described before for the Chebyshev center problem. In RCD, the working set was randomly chosen at each inner iteration by the same \({\mathcal {O}}(1)\) procedure used for the Chebyshev center problem.

The results for each algorithm are reported in Table 2 in terms of final objective value, number of (outer) iterations and CPU time in minutes. On the first six data sets, which have less than \(10^5\) samples, we see that MVP has the lowest CPU time, but AC2CD still outperforms RCD. In particular, on these problems AC2CD is on average faster than RCD by a factor of almost 5 in CPU time and by a factor larger than 11 in the number of outer iterations. On the two largest data sets, having more than \(10^5\) samples, AC2CD achieves the best performances. In particular, considering the CPU time, on data set (vii) AC2CD is more than 42 times faster than RCD and more than 4 times faster than MVP, while on data set (viii) AC2CD is about 15 times faster than RCD and about 13 times faster than MVP.

6.4 Problems with no bounds on the variables

The last convex test problems in our experiments are of the form of (68). We considered the same class of objective functions used in [20, 32], that is,

where \(a_i > 0\), \(i=1,\ldots ,n\), and \(b_i\), \(c_i\), \(d_i\)\(\in {\mathbb {R}}\), \(i=1,\ldots ,n\). It is possible to show that each \(f_i\) is strongly convex with constant \(a_i\) and has a Lipschitz continuous derivative with constant \(L_i = a_i + (1/4)b_i^2\) (see [20, 32]).

Six artificial problems were created. The first three have the following dimension: (i) \(n = 5000\), (ii) \(n = 10{,}000\), (iii) \(n = 20000\), with \(a_i\) randomly generated from a uniform distribution on (0, 15) and \(b_i\), \(c_i\), \(d_i\) randomly generated from a uniform distribution on \((-\,15, 15)\). The last three problems have the same dimension as the previous ones, i.e., (iv) \(n = 5000\), (v) \(n = 10{,}000\), (vi) \(n = 20{,}000\), but with \(a_i\) randomly generated from a uniform distribution on (0, 2), \(b_i\) randomly generated from a uniform distribution on \((-\,2, 2)\) and \(c_i\), \(d_i\) randomly generated from a uniform distribution on \((-\,10, 10)\).

In all of these problems, the zero vector was used as starting point. In AC2CD, the index j(k) was maintained fixed for all \(k \ge 0\) and was chosen as an element of the set \({{{\,\mathrm{Argmax}\,}}}_{i=1,\ldots ,n} 1/L_i\), while the stepsize was computed as described in Proposition 3, with \(\gamma = 1/2\) and \({\bar{L}}_{i,j}\) replaced by \(L_i + L_j\), \(i,j = 1,\ldots ,n\) (see Remark 2).

Our algorithm was compared with two versions of RCD proposed in [20], using blocks made of two variables and different probability distributions (studied in [20]), which are now described. Denoting by \(p_{ij}\) the probability to select a pair of distinct variable indices (i, j) at any inner iteration of RCD, we first used a uniform distribution, i.e., all \(p_{ij}\) have the same value, and then we used probabilities that depend on the Lipschitz constants, i.e., each \(p_{ij}\) is equal to \((L_i^{-1} + L_j^{-1}) / \sum _{\begin{array}{c} h,t = 1,\ldots ,n \\ h \ne t \end{array}} (L_h^{-1} + L_t^{-1})\). We name the two resulting algorithms RCD\(_{\text {unif}}\) and RCD\(_{\text {Lips}}\), respectively. More in detail, to choose the working set at any inner iteration, in RCD\(_{\text {unif}}\) we used the same \({\mathcal {O}}(1)\) procedure described before for the Chebyshev center problem, while in RCD\(_{\text {Lips}}\) we used the random generator proposed by Nesterov in [22], adjusted for our purposes. It requires to randomly generate one real number from a uniform distribution on (0, 1) and perform \({\mathcal {O}}(\ln (\frac{n(n-1)}{2}))\) operations on some vectors (whose preliminary definition has a cost that has not been included in the final statistics).

In Table 3, we report the results for each algorithm in terms of final objective value, number of outer iterations and CPU time in seconds. We see that, on the first three problems, RCD\(_{\text {unif}}\) achieves the best performances: in terms of CPU time it is faster than AC2CD by a factor of about 1.5, but AC2CD is almost 13 times faster than RCD\(_{\text {Lips}}\) on average. On the last three problems, in terms of CPU time AC2CD outperforms both RCD\(_{\text {unif}}\) and RCD\(_{\text {Lips}}\) by an average factor of about 2 and 15, respectively. Moreover, we observe that the number of outer iterations of AC2CD and RCD\(_{\text {Lips}}\) is similar on all the considered problems, but the amount of time needed to converge is remarkably different, with AC2CD being much faster. This is due to the procedure used in RCD\(_{\text {Lips}}\) to randomly generate, at each inner iteration, a pair of variable indices from a Lipschitz-dependent probability distribution.

6.5 Non-convex problems

To test how AC2CD works when the objective function is non-convex, we finally considered problems of the form of (69). Each problem was created by the following procedure: first the elements of Q and those of q were randomly generated from a standard normal distribution and from a uniform distribution on (0, 1), respectively; then the diagonal elements of D were set to 1, except for a prefixed number of them that were randomly chosen and set to negative values randomly generated from a uniform distribution on \((-\,1,0)\).

More in detail, we generated three problems by fixing \(n = m = 7000\) and considering a number of negative diagonal elements of D equal to 0.35m, 0.5m and 0.65m, respectively. The three problems are summarized below:

-

(i)

\(n = m = 7000\), \(n_{\text {neg}} = 2450\), \(n_{\text {pos}} = 4550\), \(\lambda _{\text {min}} = -\,9.19 \cdot 10^3\), \(\lambda _{\text {max}} = 2.18 \cdot 10^4\);

-

(ii)

\(n = m = 7000\), \(n_{\text {neg}} = 3500\), \(n_{\text {pos}} = 3500\), \(\lambda _{\text {min}} = -\,1.13 \cdot 10^4\), \(\lambda _{\text {max}} = 1.90 \cdot 10^4\);

-

(iii)

\(n = m = 7000\), \(n_{\text {neg}} = 4550\), \(n_{\text {pos}} = 2450\), \(\lambda _{\text {min}} = -\,1.30 \cdot 10^4\), \(\lambda _{\text {max}} = 1.60 \cdot 10^4\);