Abstract

This paper presents a sequence of deferred correction (DC) schemes built recursively from the implicit midpoint scheme for the numerical solution of general first order ordinary differential equations (ODEs). It is proven that each scheme is A-stable, satisfies a B-convergence property, and that the correction on a scheme DC2j of order 2j of accuracy leads to a scheme DC2j + 2 of order 2j + 2. The order of accuracy is guaranteed by a deferred correction condition. Numerical experiments with standard stiff and non-stiff ODEs are performed with the DC2, ..., DC10 schemes. The results show a high accuracy of the method. The theoretical orders of accuracy are achieved together with a satisfactory stability.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In [10, 20], Gustafsson and Kress introduced a new version of deferred correction (DC) strategy for the numerical solution of linear systems of ordinary differential equations (ODE) [10] and initial boundary value problems [20], under a monotonicity condition. Numerical experiments with one-dimensional linear parabolic and hyperbolic equations were performed and showed that the method is effective (orders 2, 4 and 6 of accuracy are achieved). We propose to extend the method from [10, 20] to the time-discretization of more general time-evolution partial differential equations (PDEs). In this paper, we restrict to the case of the initial value problem (IVP)

where the unknown u is from [0, T] into a Banach space X, \(u_0\) is a given data and F is a sufficiently differentiable function such that u exists and is sufficiently differentiable. The main objective is to show the properties of the numerical method (consistency, stability, convergence and order of accuracy). A complete analysis of the DC method applied to reaction-diffusion equations leads to an arbitrary high order and unconditionally stable method (see [18]).

The DC method is used to improve the order of accuracy of numerical methods of lower order. This method is explored by many authors, e.g. [1, 2, 6, 7, 10, 12, 21, 23]. The method in [6] is an application of iterative deferred correction (IDC). The authors proved that an asymptotic improvement of order p can be accomplished, from a scheme of order p, at each step of the IDC procedure, provided suitable finite difference operators are employed. Numerical experiments are performed with the IDC applied to the trapezoidal rule, Taylor-2 and Adams-Bashforth of order 2. The results are promising even though they point out some difficulties of the proposed algorithms: inaccuracy for “large” time step and no asymptotic improvement for high levels of correction. The approaches in [1, 2, 7, 10, 12, 21] are quite similar and consist in a linear perturbation of a low order scheme. However, solving stiff problems (problems extremely hard to solve by standard explicit methods [25]) is a challenge unfavorable for these methods. In particular, the method in [21], concerning a highly accurate solver for stiff ODEs, requires sufficiently small time steps for moderately stiff problems while convergence is reduced to order 2 for “very stiff” problems.

Our schemes are based on nonlinear perturbations (corrections) of the implicit midpoint rule and inherit the A-stable property of the trapezoidal rule [5] at any stage of the correction. Starting from an approximation \(\left\{ u^{2,n}\right\} _{n=0}^N\) of the exact solution u by the implicit midpoint rule on a uniform partition \(0=t_0<t_1<\cdots < t_N=T\) of [0, T], at the stage \(j=1,2,\ldots \) of the correction we obtain an approximation \(\left\{ u^{2j+2,n}\right\} _{n=0}^N\) of u, expected to be of order \(2j+2\) of accuracy, on the same partition. Each approximate solution \(\left\{ u^{2j,n}\right\} _{n=0}^N\) to be corrected is subject to a deferred correction condition (DCC) which guarantees the improvement of the order of accuracy. We prove that if \(\left\{ u^{2j,n}\right\} _{n=0}^N\) satisfies the DCC and its correction \(\left\{ u^{2j+2,n}\right\} _{n=0}^N\) converges to u at the discrete points \(0=t_0<t_1<\cdots < t_N=T\) (or is simply bounded, when X is finite dimensional) then \(\left\{ u^{2j+2,n}\right\} _{n=0}^N\) approximates u with order \(2j+2\). Moreover, provided the function F is Lipschitz with respect to its second variable or satisfies a one-sided Lipschitz condition, each \(\left\{ u^{2j,n}\right\} _{n=0}^N\) satisfies the DCC and then converges with order 2j of accuracy, for arbitrary positive integer j. We also prove that each DC scheme involving \(\left\{ u^{2j,n}\right\} _{n=0}^N\) is B-stable. The theory is illustrated by numerical tests, for the schemes of order 2, 4, ..., 10.

The paper is organized as follows: in Sect. 2 we recall some basic results from finite difference approximations and present the DC schemes; Sect. 3 deals with the consistency of the method; the analysis of convergence and order of accuracy together with a B-convergence result are given in Sect. 4; absolute stability is proved in Sect. 5, and Sect. 6 is devoted to numerical experiments.

2 Deferred correction schemes for the implicit midpoint rule

We suppose that \(\displaystyle F\in C^{2p+2}\left( [0,T]\times X,X \right) \), for a positive integer p, so that (1.1) has a unique solution \(\displaystyle u\in C^{2p+3}\left( [0,T],X \right) \). We simply denote by \(\Vert \cdot \Vert \), the norm in the Banach space X. For a time step \(k>0\), we denote \(t_n=nk\) and \(t_{n+1/2}=(n+1/2)k\), for each integer n. This implies that \(t_0=0\). We consider the time steps k such that \(0=t_0<t_1<\cdots < t_N=T\) is a partition of [0, T], for a non-negative integer N. The centered, forward and backward difference operators D, \(D_+\) and \(D_-\), respectively, related to k and applied to u, are defined as follows:

and

The average operator is denoted by E:

The composition of \(D_+\) and \(D_-\) is defined recursively. They commute, that is \((D_+D_-)u(t_n)=(D_-D_+)u(t_n)=D_-D_+u(t_n)\), and satisfy the identities

and

for each integer \(m\ge 1\) such that \(0\le t_{n-m-1}\le t_{n+m}\le T\). We have the estimate

provided \([t_{n-m_2},t_{n+m_1}] \subset [0,T]\) and \(m_1+m_2\le 2p+3\) (see [15, p. 249] or [17]).

If \(\left\{ u^n \right\} _n\) is a sequence of approximation of u at the discrete points \(t_n\), the finite difference operators apply to \(\left\{ u^n \right\} _n\), and we define

and

From the centered finite difference approximation (see [17, Thm 5] or [3, 4, 13]) we have

and

for each integer \(j=1,2,\ldots ,p\). These approximations lead to the schemes

The schemes (2.6) are multi-steps and prone to stability restrictions. We resort to DC method to transform them into a sequence of one step schemes as follows: For \(j=0\), we have the implicit midpoint rule

For \(j\ge 1\),

The scheme (2.8)–(2.9) has unknowns \(u^{2j+2,n}\), \(n=1,2,\ldots ,N\), and is deduced from (2.6) by substituting the unknown \(u^n\) under the summation symbols by \(u^{2j,n}\). The index 2j indicates that \(\left\{ u^{2j,n}\right\} _n\) is expected to be an approximation of the exact solution u with order 2j of accuracy. We call the schemes (2.8)–(2.9) Deferred Correction of order \(2j+2\) for the implicit midpoint rule, denoted DC2j+2.

Remark 2.1

The scheme (2.8)–(2.9), for \(n=1,2,3,\ldots ,j\), should involve unknowns \(u^{2j,-1}, \ldots , u^{2j,-j}\) which represent approximate solutions of (1.1) at the discrete points \(t=-k,\ldots ,-jk\), respectively. To avoid those approximations for \(t<0\), we propose the following scheme which is efficient for the computation of \(u^{2j+2,1},\ldots , u^{2j+2,j}\), using only points within the solution interval [0, T].

The finite difference operator in (2.10) are related to the time step \(k_j=k/(2j+1)\). The approximations \(\left\{ \overline{u}^{2j,m}\right\} _m\) and \(\left\{ u^{2j,n}\right\} _n\) are computed from the same scheme, (2.7) or (2.8)–(2.9), but for the time steps \(k_j\) and k, respectively. The scheme (2.10) results from the finite difference approximations

and

where \(t_n=\tau _0<\tau _1<\ldots <\tau _{2j+1}=t_{n+1}\), with \(\tau _m=t_n+mk_j\), for \(m=1, 2,\ldots , 2j+1\). Table 1 gives the coefficients \({c}^j_i\) for \(j=1,2,3,4\).

Remark 2.2

Each \(u^{2j+2,n+1}\), \(n\ge j\), can be obtained by solving iteratively the system

where x is the unknown, and \(a_n^j\) and \(b_n^j\) are constants depending on \(u^{2j+2,n}\) and \(u^{2j,n+1+j},u^{2j,n+j},\ldots ,u^{2j,n-j}\). The total number of vectors (in the solution space X) stored for the computation of \(u^{2j+2,n+1}\) is \(j^2+3j+1\): \(u^{2j+2,n}\) and the \(u^{2i,q}\), for \(i=1,2,\ldots ,j\), and \(n+(j-i+1)(j+i)/2-2i \le q\le n+1+(j-i+1)(j+i)/2\).

Remark 2.3

From Remark 2.2, only the implicit midpoint rule, DC2, and the DC schemes of the form (2.10)–(2.11) used at startup are implicit Runge-Kutta (RK) methods. Starting with DC4, all the DC2j methods of the form (2.8)–(2.9) are not RK methods. For instance, \(u^{4,n+1}\) depends on \(u^{4,n}\) and some of the \(u^{2,i}\), which \(u^{2,i}\) evolve independently and are not stages computed from \(u^{4,n}\). As we will see in Sect. 5, the analysis of A-stability, in particular the proof of lemma 5.2, shows that it is impossible to write a recurrence \(u^{2j+2,n+1}=R(z)\,u^{2j+2,n}\) from (2.8) when \(j\ge 1\), as one would get by applying any RK method to Dahlquist equation. This is the main ingredient behind the A-stability of our DC2j methods independently of the order of accuracy.

3 Deferred correction condition (DCC)

In this section we give a sufficient condition for the scheme (2.8)–(2.9) to achieve order \(2j+2\) of accuracy. Hereafter, the letter C will denote any constant independent from k, and that can be calculated explicitly in terms of known quantities. The exact value of C may change. We have the following definition:

Definition 3.1

(Deferred Correction Condition) Let u be the exact solution of the Cauchy problem (1.1). Given a positive integer j, a sequence \(\left\{ u^{2j,n}\right\} _{n=0}^N\) of approximations of u, at the discrete points \(0=t_0<\cdots <t_N=T\), is said to satisfy the Deferred Correction Condition (DCC) for the implicit midpoint rule if \(\left\{ u^{2j,n}\right\} _{n=0}^N\) approximates u with order 2j of accuracy, and we have

for \(n=1,2,\ldots ,N-2\) and \(k\le k_0\), where \(k_0>0\) is fixed and C is a constant independent from k.

Remark 3.1

Condition (3.1) is equivalent to

and

for \(n=j,j+1,\ldots , N-j\). This is due to the transform

and a similar transform for \(k^i\left( D_+D_-\right) ^iD\left( u^{2j,n+1/2}-u(t_{n+1/2}) \right) \).

We have the following result:

Theorem 3.1

Let u be the exact solution of (1.1) and \(\left\{ u^{2j,n}\right\} _{n=0}^N\), \(j=1,\dots ,p\), a sequence of approximations of u satisfying DCC for the implicit midpoint rule. Let \(\left\{ u^{2j+2,n}\right\} _{n=0}^N\) be the solution of (2.8)–(2.9) built from \(\left\{ u^{2j,n}\right\} _{n=0}^N\). We suppose that \(u^{2j+2,1},\ldots ,u^{2j+2,j}\) are given and satisfy

where C is a constant independent from k. Furthermore, we suppose that one of the following four conditions holds:

-

(i)

F is Lipschitz with respect to the second variable x: there exists \(\mu \ge 0\) such that

$$\begin{aligned} \Vert F(t,x)-F(t,y)\Vert \le \mu \Vert x-y\Vert ,~~\forall (t,x,y)\in [0,T]\times X\times X. \end{aligned}$$(3.5) -

(ii)

X is finite dimensional, and \(\left\{ u^{2j+2,n}\right\} _{n=0}^N\) remains close to u in the sense that there exists \(M>0\) such that

$$\begin{aligned} \Vert u^{2j+2,n}-u(t_n) \Vert \le M,~~ \text{ for } \text{ each } ~n=0,1,\ldots ,N. \end{aligned}$$(3.6) -

(iii)

X is infinite dimensional, and \(\left\{ u^{2j+2,n}\right\} _n\) converges to the exact solution u.

-

(iv)

X is a Hilbert space with inner product \(\left( .,. \right) \), and F satisfies the following so-called one-sided Lipschitz condition, with a one-sided Lipschitz constant \(\beta \in \mathbb {R}\):

$$\begin{aligned} \left( F(t,x)-F(t,y),x-y\right) \le \beta \Vert x-y\Vert ^2, ~~\forall (t,x,y)\in [0,T]\times X\times X. \end{aligned}$$(3.7)Then \(\left\{ u^{2j+2,n}\right\} _n\) approximates u with order \(2j+2\) of accuracy, that is

$$\begin{aligned} \Vert u^{2j+2,n}-u(t_n)\Vert \le Ck^{2j+2},~~ \text{ for } \text{ each } ~n=0,1,\ldots ,N, \end{aligned}$$(3.8)where C is a constant depending only on j, T, DCC, a Lipschitz constant on F and the derivatives of u up to order \(2j+3\), for time steps k sufficiently small.

Proof

-

1.

First we consider the case where the function \(F=F(t,x)\) is Lipschitz with respect to the second variable x. Combining (1.1) and (2.8), we obtain the identity

$$\begin{aligned} \begin{aligned}&D\varTheta ^{2j+2,n+1/2} =\sigma ^{2j+2,n+1/2}+ (\varLambda ^j -\varGamma ^j)D\left( u^{2j,n+1/2}-u(t_{n+1/2})\right) \\&+F\left( t_{n+1/2}, \widehat{u}^{2j+2,n+1 }-\varGamma ^j \widehat{u}^{2j,n+1}\right) -F\left( t_{n+1/2}, \widehat{u}(t_{n+1})-\varGamma ^j \widehat{u}(t_{n+1})\right) , \end{aligned} \end{aligned}$$(3.9)where \(\varLambda ^j\) and \(\varGamma ^j\) are finite difference operators defined for arbitrary integer \(j \ge 1\) by

$$\begin{aligned} \varLambda ^ju(t_n) =\sum _{i=1}^jc_{2i+1}k^{2i}(D_+D_-)^i u(t_n), \end{aligned}$$and

$$\begin{aligned} \varGamma ^j u(t_n)=\sum _{i=1}^jc_{2i}k^{2i}(D_+D_-)^i u(t_n), \end{aligned}$$provided \(u(t_{n\pm i})\) exists for \(i=0,1,2,\ldots ,j\). We have defined

$$\begin{aligned} \varTheta ^{2j+2,n}=\left( u^{2j+2,n}-u(t_{n})\right) -\varGamma ^j \left( u^{2j,n}-u(t_n)\right) , \end{aligned}$$(3.10)and

$$\begin{aligned} \begin{aligned} \sigma ^{2j+2,n+1/2}&=\left[ u'(t_{n+1/2})-Du(t_{n+1/2})+\varLambda ^j Du(t_{n+1/2})\right] \\&-\left[ F(t_{n+1/2},u(t_{n+1/2}))-F( t_{n+1/2}, \widehat{u}(t_{n+1})-\varGamma ^j \widehat{u}(t_{n+1}))\right] . \end{aligned} \end{aligned}$$From (2.4) we have

$$\begin{aligned} \left\| u'(t_{n+1/2})-Du(t_{n+1/2})+\varLambda ^jD u(t_{n+1/2}) \right\| \le Ck^{2j+2}, \end{aligned}$$and, since F is differentiable and u is sufficiently regular, we deduce from the mean value theorem and the approximation (2.5) that

$$\begin{aligned} \left\| F(t_{n+1/2},u(t_{n+1/2}))-F( t_{n+1/2}, \widehat{u}(t_{n+1})-\varGamma ^j \widehat{u}(t_{n+1})) \right\| \le Ck^{2j+2}, \end{aligned}$$for each \(n=0,1,\ldots ,N\), where C is a constant depending only on j, T, a Lipschitz constant from F and the derivatives of u up to order \(2j+3\). The last two inequalities imply that

$$\begin{aligned} \left\| \sigma ^{2j+2,n+1/2}\right\| \le Ck^{2j+2}. \end{aligned}$$(3.11)Since the sequence \(\left\{ u^{2j,n}\right\} _n\) satisfies DCC, from Remark 3.1 we have

$$\begin{aligned} \left\| \left( \varLambda ^j -\varGamma ^j\right) D\left( u^{2j,n+1/2}-u(t_{n+1/2})\right) \right\| \le Ck^{2j+2}. \end{aligned}$$(3.12)From the Lipschitz condition on F we have

$$\begin{aligned} \begin{aligned}&\left\| F\left( t_{n+1/2}, \widehat{u}^{2j+2,n+1 }-\varGamma ^j \widehat{u}^{2j,n+1}\right) -F\left( t_{n+1/2}, \widehat{u}(t_{n+1})-\varGamma ^j \widehat{u}(t_{n+1})\right) \right\| \\ {}&\le \mu \Vert \widehat{\varTheta }^{2j+2,n+1}\Vert . \end{aligned} \end{aligned}$$(3.13)Substituting inequalities (3.11)–(3.13) in the identity (3.9), we deduce that

$$\begin{aligned} \Vert D\varTheta ^{2j+2,n+1/2} \Vert \le Ck^{2j+2}+\mu \Vert \widehat{\varTheta }^{2j+2,n+1}\Vert , \end{aligned}$$and it follows from the triangle inequality that

$$\begin{aligned} \Vert \varTheta ^{2j+2,n+1} \Vert \le C\frac{k^{2j+3}}{2-\mu k}+\frac{2+\mu k}{2-\mu k}\Vert \varTheta ^{2j+2,n} \Vert , \end{aligned}$$for \(0\le \mu k <2\). We then deduce by induction on n that

$$\begin{aligned} \Vert \varTheta ^{2j+2,n} \Vert \le C\frac{1}{2-\mu k}\left( \frac{2+\mu k}{2-\mu k}\right) ^{n-j-1} k^{2j+2}+\left( \frac{2+\mu k}{2-\mu k}\right) ^{n-j}\Vert \varTheta ^{2j+2,j} \Vert . \end{aligned}$$(3.14)From hypothesis (3.4) and the DCC we have

$$\begin{aligned} \Vert \varTheta ^{2j+2,j} \Vert \le \Vert u^{2j+2,j}-u(t_j)\Vert + \left\| \varGamma ^j(u^{2j,j}-u(t_j))\right\| \le Ck^{2j+2}, \end{aligned}$$(3.15)where C is a constant independent from k. Moreover, the sequence \(\left\{ \left( \frac{2+\mu k}{2-\mu k}\right) ^{n} \right\} _n \) is bounded above by \(\exp (2\mu T/(2-\varepsilon ))\), for \(0\le \mu k \le \varepsilon <2\). Whence

$$\begin{aligned} \Vert \varTheta ^{2j+2,n} \Vert \le Ck^{2j+2}. \end{aligned}$$Finally, by the triangle inequality, identity (3.10) and DCC, we have

$$\begin{aligned} \Vert u^{2j+2,n}-u(t_n)\Vert \le \Vert \varTheta ^{2j+2,n} \Vert +\left\| \varGamma ^j (u^{2j,n}-u(t_n))\right\| \le Ck^{2j+2}, \end{aligned}$$where C is a constant depending only on j, T, the DCC constant, \(\mu \) and the derivatives of u up to order \(2j+3\).

-

2.

Suppose that \(\left\{ u^{2j+2,n} \right\} _{n=0}^N \) satisfies (3.6) and X is finite dimensional. We can write

$$\begin{aligned} \begin{aligned} F&\left( t_{n+1/2}, \widehat{u}^{2j+2,n+1 }-\varGamma ^j \widehat{u}^{2j,n+1}\right) -F\left( t_{n+1/2}, \widehat{u}(t_{n+1})-\varGamma ^j \widehat{u}(t_{n+1})\right) \\&= \int _0^1d_uF\left( t_{n+1/2},\widehat{u}(t_{n+1})-\varGamma ^j \widehat{u}(t_{n+1})+s \widehat{\varTheta }^{2j+2,n+1} \right) \left( \widehat{\varTheta }^{2j+2,n+1} \right) ds. \end{aligned} \end{aligned}$$From (3.6) and the DCC there exists \(k_1>0\) such that \(0<k\le k_1\le k_0\) implies

$$\begin{aligned} \Vert \widehat{\varTheta }^{2j+2,n+1} \Vert \le M+Ck^{2j+2}\le M+1. \end{aligned}$$On the other hand, we have

$$\begin{aligned} \Vert \widehat{u}(t_{n+1})-\varGamma ^j \widehat{u}(t_{n+1})\Vert =\left\| \widehat{u}(t_{n+1})-\sum _{i=1}^j\sum _{l=0}^{2i}(-1)^{l}c_{2i}{{2i}\atopwithdelims (){l}}u(t_{n+i-l} )\right\| \le R_{j+1}, \end{aligned}$$(3.16)where

$$\begin{aligned} R_{j+1}: =(j+1)\max _{0\le t\le T}\Vert u(t)\Vert \ge \left( 1+\sum _{i=1}^j2^{2i}|c_{2i}| \right) \max _{0\le t\le T}\Vert u(t)\Vert . \end{aligned}$$(3.17)It follows (3.13) for

$$\begin{aligned} \mu =\sup _{0\le t\le T,\Vert x\Vert \le M+R_{j+1}+1}\left\| d_xF(t,x) \right\| . \end{aligned}$$Since F is differentiable and the set \(\left\{ x\in X: \Vert x\Vert \le M+R_{j+1}+1 \right\} \) is compact in the finite dimensional linear space X, the supremum exists and is finite. The theorem is then deduced from the case (i).

-

3.

If \(\left\{ u^{2j+2,n}\right\} _n\) converges to the exact solution u, taking the DDC and the finite difference formula (2.5) into account, we have

$$\begin{aligned} \left( \widehat{u}(t_{n+1})-\varGamma ^j \widehat{u}(t_{n+1})+s \widehat{\varTheta }^{2j+2,n+1}\right) -u(t_{n+1/2})\rightarrow 0, \text{ as } k\rightarrow 0, \text{ for } 0\le s\le 1. \end{aligned}$$It follows from the continuity of \(u \mapsto d_uF(t,u)\) that there exists \(0<k_2\le k_0\) such that \(0< k\le k_2\) implies

$$\begin{aligned} \begin{aligned} \Vert d_uF(t_{n+1/2},&\widehat{u}(t_{n+1})-\varGamma \widehat{u}(t_{n+1})+\tau \widehat{\varTheta }^{2j+2,n+1} ) \Vert \le 1+\max _{0\le t\le T}\Vert d_uF\left( t,u(t)\right) \Vert . \end{aligned} \end{aligned}$$The theorem, in this case, follows by taking \(\mu = 1+\max _{0\le t\le T}\Vert d_uF\left( t,u(t)\right) \Vert \) in (i).

-

4.

Here we consider the case where X is a Hilbert space and F satisfies the monotonicity condition (3.7). Then, taking the inner product of the identity (3.9) with \(\widehat{\varTheta }^{2j+2,n+1}\), we deduce the inequality

$$\begin{aligned} \begin{aligned} \left( D\varTheta ^{2j+2,n+1/2},\widehat{\varTheta }^{2j+2,n+1} \right) \le \left( \sigma ^{2j+2,n+1/2} ,\widehat{\varTheta }^{2j+2,n+1}\right) +\beta \Vert \widehat{\varTheta }^{2j+2,n+1}\Vert ^2 \\\left( (\varLambda ^j -\varGamma ^j)D(u^{2j,n+1/2}-u(t_{n+1/2})),\widehat{\varTheta }^{2j+2,n+1}\right) \end{aligned} \end{aligned}$$(3.18)since, according to (3.7), we have

$$\begin{aligned} \begin{aligned} \left( F\left( t_{n+1/2}, \widehat{u}^{2j+2,n+1 }-\varGamma \widehat{u}^{2j,n+1}\right) -F\left( t_{n+1/2}, \widehat{u}(t_{n+1})-\varGamma \widehat{u}(t_{n+1})\right) ,\widehat{\varTheta }^{2j+2,n+1}\right) \\ \le \beta \left\| \widehat{\varTheta }^{2j+2,n+1}\right\| ^2. \end{aligned} \end{aligned}$$Inequalities (3.11)–(3.12) together with the Cauchy-Schwartz inequality yield

$$\begin{aligned} \left| \left( \sigma ^{2j+2,n+1/2},\widehat{\varTheta }^{2j+2,n+1}\right) \right| \le Ck^{2j+2}\Vert \widehat{\varTheta }^{2j+2,n+1}\Vert , \end{aligned}$$and

$$\begin{aligned} \left| \left( (\varLambda ^j -\varGamma ^j)D(u^{2j,n+1/2}-u(t_{n+1/2})),\widehat{\varTheta }^{2j+2,n+1}\right) \right| \le Ck^{2j+2}\Vert \widehat{\varTheta }^{2j+2,n+1}\Vert , \end{aligned}$$where C is a constant depending only on j, T, a Lipschitz constant on F and the derivatives of u up to order \(2j+3\). Substituting the last three inequalities into (3.18), we obtain

$$\begin{aligned} \left( D\varTheta ^{2j+2,n+1/2},\widehat{\varTheta }^{2j+2,n+1} \right) \le Ck^{2j+2}\Vert \widehat{\varTheta }^{2j+2,n+1}\Vert +\beta \Vert \widehat{\varTheta }^{2j+2,n+1}\Vert ^2 , \end{aligned}$$and we deduce from the identity

$$\begin{aligned} \left( D\varTheta ^{2j+2,n+1/2},\widehat{\varTheta }^{2j+2,n+1} \right) =\frac{1}{2k}\left( \Vert \varTheta ^{2j+2,n+1}\Vert ^2-\Vert \varTheta ^{2j+2,n}\Vert ^2 \right) \end{aligned}$$and the inequality

$$\begin{aligned} \Vert \widehat{\varTheta }^{2j+2,n+1}\Vert \le \frac{1}{2}\left( \Vert \varTheta ^{2j+2,n+1}\Vert +\Vert \varTheta ^{2j+2,n}\Vert \right) \end{aligned}$$that

$$\begin{aligned} \Vert \varTheta ^{2j+2,n+1}\Vert \le C\frac{k^{2j+3}}{2-\beta k}+\frac{2+\beta k}{2-\beta k}\Vert \varTheta ^{2j+2,n} \Vert . \end{aligned}$$The conclusion follows from the case (i), for \(-2\le \beta k<2\).

\(\square \)

Remark 3.2

Theorem 3.1 shows that the correction may be applied for any other scheme satisfying DCC.

4 Convergence and order of accuracy

The aim of this section is to prove the following theorem:

Theorem 4.1

Let \(u\in C^{2p+3}\left( [0,T],X \right) \) be the exact solution of the problem (1.1). Suppose that one of the four conditions (i)–(iv) of Theorem 3.1 holds, with condition (ii) or (iii) holding for all \(j=0, 1, \ldots , p+1\). Then each sequence \(\left\{ u^{2j,n}\right\} _{n=0}^N\), \(j=1, 2, \ldots , p+1\), solution of the scheme (2.7) or (2.8)–(2.9), approximates u with order 2j of accuracy. Furthermore, we have the estimate

for \(m=0,1,\ldots ,p-j\) and \(n=m+j-1,m+j,\ldots , N-j-m\), where C is a constant depending only on p, T, and the derivatives of u and F up to order \(2m+2j+1\) and \(2m+2j-1\), respectively.

To prove this theorem we need Theorem 3.1 and the the following lemma:

Lemma 4.1

Let \(\left\{ u^{2,n}\right\} _{n=0}^N\) be the solution of the scheme (2.7). Suppose that one of the conditions (i), (iii) or (iv) of Theorem 3.1 holds, or \(\left\{ u^{2,n}\right\} _{n=0}^N\) is bounded in the sense of the condition (ii) of this theorem. Then \(\left\{ u^{2,n}\right\} _{n=0}^N\) approximates u with order 2 of accuracy, and we have the inequality

for \(m=0,1,\ldots ,p\) and \(n=m,m+1,\ldots , N-m-1\), where C is a constant depending only on p, T, and the derivatives of u and F up to order \(2m+3\) and \(2m+1\), respectively.

Proof

(Proof of Lemma 4.1) For the sake of simplification we suppose that \(F=F(x)\). The general case can be handled by transforming (1.1) to an autonomous system. From the hypotheses of the Lemma, Theorem 3.1 implies that \(\left\{ u^{2,n}\right\} _{n=0}^N\) approximates u with order two of accuracy:

where C is a constant depending only on T, F and the derivatives of u up to order 3. To establish (4.2) we proceed by induction on the integer \(m=0,1,\ldots ,p\).

-

1.

Inequality (4.2) for \(m=0\).

As in Theorem 3.1, we combine (1.1) and (2.7) and deduce the identity

$$\begin{aligned} D\varTheta ^{2,n+1/2}=\left[ F\left( \widehat{u}^{2,n+1}\right) -F\left( \widehat{u}(t_{n+1})\right) \right] +\sigma ^{2,n+1/2}, \end{aligned}$$(4.4)where

$$\begin{aligned} \varTheta ^{2,n}=u^{2,n}-u(t_n), \end{aligned}$$and

$$\begin{aligned} \sigma ^{2,n+1/2}=\left[ u'(t_{n+1/2})-Du(t_{n+1/2})\right] -\left[ F\left( u(t_{n+1/2}\right) -F\left( \widehat{u}(t_{n+1})\right) \right] . \end{aligned}$$From Taylor’s formula with integral remainder and the estimate (2.3), there exists a function g such that

$$\begin{aligned} \sigma ^{2,n+1/2} =k^2g(t_{n+1}), \end{aligned}$$with

$$\begin{aligned} \Vert D_+^{m_1}D_-^{m_2} g(t_{n+1})\Vert \le C, ~ \text{ for } ~ m_2-1\le n\le N-m_1-1, \end{aligned}$$(4.5)for each nonnegative integers \(m_1\) and \(m_2\) such that \(m_1+m_2\le 2p\), where C is a constant depending only on T, F, and the derivatives of u up to order \(m_1+m_2+3\). We can write

$$\begin{aligned} F\left( \widehat{u}^{2,n+1}\right) -F\left( \widehat{u}(t_{n+1})\right) = \int _0^1dF\left( K^{n+1}_1\right) (\widehat{\varTheta }^{2,n+1})d\tau _1, \end{aligned}$$where

$$\begin{aligned} K^{n+1}_1=\widehat{u}(t_{n+1})+\tau _1 \widehat{\varTheta }^{2,n+1}. \end{aligned}$$The last identities substituted into (4.4) yield

$$\begin{aligned} D\varTheta ^{2,n+1/2}=\int _0^1dF\left( K^{n+1}_1\right) (\widehat{\varTheta }^{2,n+1})d\tau _1+k^2g(t_{n+1}). \end{aligned}$$(4.6)Proceeding as in Theorem 3.1, we deduce from (4.3) and the regularity of u that

$$\begin{aligned} \left\| \int _0^1dF\left( K^{n+1}_1\right) (\widehat{\varTheta }^{2,n+1})d\tau _1\right\| \le C\Vert \widehat{\varTheta }^{2,n+1}\Vert . \end{aligned}$$Therefore, taking the norm on both sides of (4.6), we deduce by the triangle inequality and the inequalities (4.3) and (4.5), for \(m_1=m_2=0\), that

$$\begin{aligned} \Vert D\varTheta ^{2,n+1/2}\Vert \le C\Vert \widehat{\varTheta }^{2,n+1}\Vert +k^2\Vert g(t_{n+1})\Vert \le Ck^2, \end{aligned}$$(4.7)where C is a constant depending only on T and the derivatives of u and F up to order 3 and 1, respectively. The last inequality combined with (4.3) implies that (4.2) holds for \(m=0\).

-

2.

Here we are going to prove that inequality (4.2) remains true for \(m+1\), assuming that it holds for an arbitrary integer m such that \(0\le m\le p-1\).

We apply \(\left( D_+D_-\right) ^{m}D_+\) to (4.6) and obtain

$$\begin{aligned} \left( D_+D_-\right) ^{m+1}\varTheta ^{2,n+1}=\left( D_+D_-\right) ^{m}D_+ h(t_{n+1}) +k^2\left( D_+D_-\right) ^{m}D_+g(t_{n+1}), \end{aligned}$$(4.8)where we set

$$\begin{aligned} h(t_{n+1})=\int _0^1dF\left( K^{n+1}_1\right) (\widehat{\varTheta }^{2,n+1})d\tau _1. \end{aligned}$$The main difficulty is to bound \(\left( D_+D_-\right) ^{m}D_+ h(t_{n+1})=D_+^{2m+1}h(t_{n+1-m})\). We have

$$\begin{aligned} D_+h(t_{n})= & {} \int _0^1dF\left( K^{n+1}_1\right) (D_+\widehat{\varTheta }^{2,n})d\tau _1 +\int _0^1\int _0^1d^2F\left( K^{n}_2\right) \left( D_+K_1^{n},\widehat{\varTheta }^{2,n}\right) d\tau _1d\tau _2, \\ D^2_+h(t_{n})= & {} \int _0^1dF(K^{n+2}_1)(D^2_+\widehat{\varTheta }^{2,n})d\tau _1+\int _0^1\int _0^1d^2F(K^{n+1}_2)( D_+K_1^{n+1},D_+\widehat{\varTheta }^{2,n})d\tau ^2\\&+\int _0^1\int _0^1d^2F(K^{n+1}_2)( D_+^2K_1^{n},\widehat{\varTheta }^{2,n+1})d\tau ^2\\&+\int _0^1\int _0^1d^2F(K^{n+1}_2)( D_+K_1^{n},D_+\widehat{\varTheta }^{2,n})d\tau ^2\\&+\int _0^1\int _0^1\int _0^1d^3F\left( K^{n}_3\right) \left( D_+K_2^n, D_+K_1^{n},\widehat{\varTheta }^{2,n}\right) d\tau ^3, \end{aligned}$$where \(d\tau ^i =d\tau _1\ldots d\tau _i\), and

$$\begin{aligned} K_{i+1}^{n}=K^n_{i}+\tau _{i+1}(K_{i}^{n+1}-K_{i}^{n})=K_1^n+\sum _{l=1}^i \sum _{2\le i_1<\cdots <i_l \le i+1}\tau _{i_1}\cdots \tau _{i_l}k^lD_+^lK_1^n. \end{aligned}$$(4.9)It follows the general formula

$$\begin{aligned} \begin{aligned} D_+^qh(t_n)=\sum _{i=1}^{q+1}\sum _{|\alpha _i|=q} L^{n,q}_{i,\alpha _i} , \text{ for } q=1,2,\ldots ,2p+1, \text{ and } n\le N-q, \end{aligned} \end{aligned}$$(4.10)where \(\alpha _i=(\alpha _i^1,\ldots ,\alpha _i^{i-1},\alpha _i^i) \in \left\{ 1,2,\ldots , q\right\} ^{i-1}\times \left\{ 0,1,\ldots , q-i+1\right\} \), and \(L^{n,q}_{i,\alpha _i}\) is a linear combination, with properly chosen coefficients, of the quantities

$$\begin{aligned} L^{n,q}_{i,\alpha _i,\beta _i} =\int _{[0,1]^i}d^iF(K_i^{n+q+1-i})\left( D_+^{\alpha _i^{i-1}}K_{i-1}^{n+\beta _{i}^{i-1}},\ldots ,D_+^{\alpha ^1_{i}}K_{1}^{n+\beta _i^1},D_+^{\alpha _i^i}\widehat{\varTheta }^{2,n+\beta _i^i}\right) d\tau ^i, \end{aligned}$$where \(\beta _i=(\beta _i^1,\ldots ,\beta _i^{i-1},\beta _i^i) \in \left\{ 1,2,\ldots , q\right\} ^{i-1}\times \left\{ 0,1,\ldots , q-i+1\right\} \) with \(\beta _i^l+\alpha _i^{l}\le q-l+1\), for \(l=1, \ldots , i\). From (4.9) and (4.3) we have

$$\begin{aligned} K_i^{n+1}=u(t_{n+1/2})+O(k), \text{ for } i=1,2,\ldots ,2p+2, \end{aligned}$$and we deduce that there exists \(k_3>0\) such that \(0<k\le k_3\) implies

$$\begin{aligned} \left\| d^i F\left( K_{i}^{n} \right) \right\| \le C_i,\; \text{ for } i=1,2,\ldots ,2p+2, \text{ and } n=0,1,\ldots ,N-i+1, \end{aligned}$$(4.11)where \(C_i\) is a constant depending only on \(k_3\), T, and the derivatives of u and F up to order 3 and i, respectively. From the inductions hypothesis (4.2) and inequality (2.3) we have

$$\begin{aligned} \Vert D^r_+K_{i}^{n}\Vert \le C, \text{ for } 1\le r\le i\le 2m+3,1\le n\le N-i-r+1, \end{aligned}$$(4.12)and

$$\begin{aligned} \Vert D_+^r \widehat{\varTheta }^{2,n}\Vert \le Ck^2, \text{ for } 1\le r\le 2m+1,1\le n\le N-r, \end{aligned}$$(4.13)where C is a constant depending only on m, T, and the derivatives of u and F up to order \(r+2\) and r, respectively. Each \(L^{n,q}_{i,\alpha _i,\beta _i}\) being multilinear continuous, we deduce from (4.11)–(4.13) and the relation \(\beta _i^l+\alpha _i^{l}\le q-l+1\), for \(l=1,\ldots ,i\), that

$$\begin{aligned} \Vert L^{n,q}_{i,\alpha _i,\beta _i}\Vert \le Ck^2 , \text{ for } 1\le i\le q+1\le 2m+2, \, n\le N-q. \end{aligned}$$It follows by the triangle inequality that (4.10) for \(q=2m+1\) yields

$$\begin{aligned} \Vert \left( D_+D_-\right) ^{m}D_+ h(t_{n+1}) \Vert =\left\| D_+^{2m+1}h(t_{n+1-m})\right\| \le Ck^2, \end{aligned}$$for \(n=m,m+1,\ldots , N-(m+1)-1\), where C is a constant depending only on p, T, and the derivatives of u and F up to order \(2m+4\) and \(2m+2\), respectively . Passing to the norm in identity (4.8), we deduce from (4.5) and the last inequality that

$$\begin{aligned} \Vert \left( D_+D_-\right) ^{m+1}\varTheta ^{2,n+1} \Vert \le Ck^2. \end{aligned}$$(4.14)Otherwise, applying \(D_-\) to (4.8), inequalities (4.11)–(4.13) and (4.14) yield

$$\begin{aligned} \Vert \left( D_+D_-\right) ^{m+1} h(t_{n+1}) \Vert =\left\| D_+^{2m+2}h(t_{n-m})\right\| \le Ck^2, \end{aligned}$$for \(n=m,m+1,\ldots , N-(m+1)-1\), where C is a constant depending only on p, T, and the derivatives of u and F up to order \(2m+5\) and \(2m+3\), respectively. Therefore, passing to the norm in the identity obtained by applying \(D_-\) to (4.8), we deduce from (4.8) and the last inequality that

$$\begin{aligned} \Vert D_-\left( D_+D_-\right) ^{m+1}\varTheta ^{2,n+1} \Vert \le Ck^2, \end{aligned}$$(4.15)for \(n=m,m+1,\ldots , N-(m+1)-1\), with the constant C depending only on p, T, and the derivatives of u and F up to order \(2m+5\) and \(2m+3\), respectively. Inequalities (4.14) and (4.15) imply that the induction hypothesis is also true for \(m+1\), and we deduce that (4.2) is true for each integer \(m=0,1,\ldots ,p\).

\(\square \)

Proof

(Proof of Theorem 4.1) We proceed by induction on \(j=1,2,\ldots ,p+1\). The case \(j=1\) is immediate from Lemma 4.1. Suppose that \(\left\{ u^{2j,n}\right\} _n^N\) approximates u with order 2j of accuracy and satisfies (4.1), for an arbitrary j such that \(j\le p\). We are going to prove that \(\left\{ u^{2j+2,n}\right\} _n^N\) approximates u with order \(2j+2\) of accuracy and (4.1) holds substituting j by \(j+1\).

From the induction hypothesis, \(\left\{ u^{2j,n}\right\} _{n}\) satisfies DCC. Because \(\left\{ u^{2j,n}\right\} _{n}\) and \(\left\{ \overline{u}^{2j,m}\right\} _m\) are computed from the same scheme DC2j, but for different time steps, \(\left\{ \overline{u}^{2j,m}\right\} _m\) also satisfies DCC. Therefore, as in 3.14, Theorem 3.1 applied to the approximation \(\left\{ u^{2j+2,n}\right\} _{n=0}^j\), built from \(\left\{ \overline{u}^{2j,m}\right\} _m\), yields

where

According to the DCC and the condition \(u^{2j+2,0}=u(t_0)=u_0\), we have

By the triangle inequality and the DCC, the last two inequalities yield

From the DCC on \(\left\{ u^{2j,n}\right\} _n\) and the inequality (4.16), Theorem 3.1 again implies that \(\left\{ u^{2j+2,n}\right\} _{n=0}^N\) approximates the exact solution u with order \(2j+2\) of accuracy. Therefore, it is enough to establish (4.1) for \(j+1\), \(j\le p\). To this end we rewrite identity (3.9) as follows

with

where \(\varTheta ^{2j+2,n}\) and \(\sigma ^{2j+2,n+1/2}\) are as in Theorem 3.1. Proceeding as in Lemma 4.1 and taking the finite difference formulae (2.4) and (2.5) into account, we can write

where

C is a constant depending only on p, T, and the derivatives of u and F. According to the inequality (4.1) from the induction hypothesis, we may write

where

Therefore, writing (4.17) as follows

with

the induction hypothesis and the reasoning from Lemma 4.1, substituting the functions h and g, respectively, by H and G, \(\widehat{\varTheta }^{2,n+1}\) by \(\widehat{\varTheta }^{2j+2,n+1}\), and \(k^2\) by \(k^{2j+2}\), yields

for \(m=0,1,\ldots ,p-j\) and \(n=m+j-1,m+j,\ldots , N-j-m\), where C is a constant depending only on p, T, and the derivatives of u and F up to order \(2(m+j+1)+1\) and \(2(m+j)+1\), respectively. Inequality (4.1) holds for \(\left\{ u^{2j+2,n}\right\} _n \) by the triangle inequality from the last inequality. \(\square \)

We end this section by the following corollary that gives an important convergence property of the DC method. This property is useful for a time-stepping method to solve stiff and large dimensional differential equations arising from the space discretization of time-dependent PDEs.

Corollary 4.1

Suppose that the function F is from \(\mathbb {R}^s\rightarrow \mathbb {R}^s\), for a positive integer s, and satisfies the one-sided Lipschitz condition (3.7). Then, each approximate solution \(\left\{ u^{2j,n}\right\} _{n=0}^N\) from DC2j satisfies the inequality

where C is a constant independent from any global Lipschitz constant on F, and either \(k_0=2/\beta \) for \(\beta >0\) or \(k_0=+\infty \) for \(\beta \le 0\).

Proof

From the regularity assumption on F and u and the one sided-Lipschitz condition, we deduce from Theorem 4.1 that each \(\left\{ u^{2j,n}\right\} _{n=0}^N\), \(j=1,2,\ldots \), satisfies DCC. Therefore, inequality (4.18) is immediate from the part 4 of Theorem 3.1. The constant C depends only on the derivatives of u up to order \(2j+1\) and, according to (3.16)–(3.17) and the mean value theorem, on the bound of the Jacobian \(F_y\) on the compact set \([0,T]\times \left\{ y\in \mathbb {R}^s : |y|\le R_j \right\} \). \(\square \)

Remark 4.1

The convergence property satisfied by the schemes DC2j in Corollary 4.1 is in fact B-convergence (see, e.g., [9, 19]) since the constant C of the global error in (4.18) is independent from any global Lipschitz constant of the function F. Nevertheless, since in the definition of B-convergence the constant C depends on high order derivatives of the exact solution u, the identity

can make any requirement on the independence of the constant C with respect to \(F_u\) somewhat artificial. The numerical test on Bernoulli ODE in Sect. 6 gives an application of Corollary 4.1.

Remark 4.2

From part 4 of the proof of Theorem 3.1, the global error for an approximate solution by a DC2j+2 method, \(j=0,1,2,\ldots \), of the IVP (1.1) under the one-sided Lipschitz condition (3.7) takes the form

whenever \(-2\le \beta k<2\). The constant C depends on the derivatives of the function F up to order \(2j+2\) and can be very large in magnitude. However, if \(\beta <0\) and k is not necessarily small, the factor \(\left( \frac{2+\beta k}{2-\beta k}\right) ^{n}\) sharply decreases with n, so that \(C\left( \frac{2+\beta k}{2-\beta k}\right) ^{n}<<1\), leading to accurate approximate solutions. As the time step k gets larger, \(\frac{2+\beta k}{2-\beta k}\) gets smaller and accuracy occurs from the first iterations. Nevertheless, when k is very small, \(\left( \frac{2+\beta k}{2-\beta k}\right) ^{n}\) is close to 1 for smaller values of n, so that the global error bound of our DC methods reduces to \(C\,k^{2j+2}\) shortly after startup. This occurs when k is in the asymptotic region \(k\mu <2\), where \(\mu \) is the global Lipschitz constant of F, \(\mu \) large. For such small k, non B-convergent methods can also be used and may be competitive with our B-convergent DC methods, at least during this time interval following startup. This situation will be illustrated with the Bernoulli equation in Sect. 6.

5 Absolute stability

In this section we prove the absolute stability of the DC schemes. The notion of absolute stability is introduced by Dahlquist [5] to characterize methods able to solve stiff ODEs. Considering the following IVP,

where \(\lambda \) is a complex number, we have the following definition (see [5, 22]):

Definition 5.1

A numerical method is said to be absolutely stable if the corresponding solution for the problem (5.1) for fixed \(k>0\) and some \(Re(\lambda )<0\) is such that

The region of absolute stability of a numerical method is defined as the subset of the complex plane

If \(\mathcal {A}\cap \mathbb {C}_-=\mathbb {C}_-\), \(\mathbb {C}_-=\left\{ \lambda \in \mathbb {C} : Re(\lambda )<0 \right\} \), the numerical method is said to be A-stable.

Before establishing absolute stability results for the deferred correction schemes (2.7) and (2.8)–(2.9), we recall the following result.

Lemma 5.1

(See [27, formula (6)]) Let \(P_m\) be a polynomial of degree m in one variable. Then the sum \(\sum _{i=0}^nP_m(i)\) is a polynomial of degree \(m+1\) in the variable n.

Lemma 5.2

Suppose that \(F(t,u)=\lambda u\) and \(u_0=1\) in the initial value problem (1.1), where \(\lambda \) is a complex number with negative real part (\(\lambda \in \mathbb {C}_-\)). Then the corresponding approximate solutions from the schemes (2.7) and (2.8)–(2.9) can be written as follows

where \(P_j(n)\) is a polynomial of degree j in the variable n.

Proof

We suppose that \(\lambda k \ne -2\), otherwise we trivially have \(u^{2j,n+1}=0\), for \(n\ge j\). Since \(F(t,u)=\lambda u\), we can rewrite (2.8) as follows

where, according to formulae (2.1) and (2.2), we have

and

Combining the last three identities, we deduce that

where \(\alpha _{j,i}\) is affine in \(\lambda k\). Under the hypothesis of the lemma, (2.7) matches the trapezoidal rule, and we have

that is (5.4) is true for \(j=0\). Suppose that (5.4) holds for an arbitrary integer \(j\ge 0\). From (5.5) we have

with \(n\ge j+2\), and, substituting each \(u^{2j+2,n+1+j-i}\) by the formula given by the induction hypothesis (5.4), we deduce that

where

It follows that

It is clear that \(Q_{j}(n)\) is a polynomial of degree j in the variable n as \(P_j(n)\). Therefore, according to the Lemma 5.1, \(\sum _{i=j+2}^{n}Q_{j}(i)\) is a polynomial of degree \((j+1)\) in the variable n. Whence,

where

is a polynomial of degree \(j+1\) in the variable n. We then deduce by induction that the lemma is true for arbitrary non-negative integer j. \(\square \)

Theorem 5.1

Each of the deferred correction schemes (2.7) and (2.8)–(2.9) is A-stable.

Proof

From Lemma 5.2 we have, for \(Re(\lambda k)<0\),

since, under the condition \(Re(\lambda k)<0\), we have \(\left| \frac{2+\lambda k}{2-\lambda k}\right| <1\). \(\square \)

6 Numerical experiments

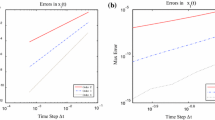

In this section we evaluate the accuracy and order of convergence of the schemes \(DC2, DC4, \ldots , DC10\), implemented using the Scilab programming language. The starting values are computed using the scheme (2.10)–(2.11).

We choose six standard problems for the evaluation. The first problem concerns B-convergence by considering a Bernoulli equation. The second problem is about long term integration with an oscillatory solution of large amplitude. The four other problems are about stiffness. The third and fourth problems (B5 modified and E5, respectively) both involve complex eigenvalues of negative real parts, where the imaginary parts of the eigenvalues for the third problem have larger magnitudes while those from the fourth problem have smaller magnitudes. The fifth problem (Robertson) is nonlinear and stiff with real negative eigenvalues, and it also addresses B-convergence. The sixth problem is the van der Pol oscillator, which is stiff with arbitrary complex eigenvalues.

The first three problems have analytic solutions. For problems (6.4), (6.5) and (6.6) that do not have an analytic solution, we consider a small time step such that the approximate solutions with \(DC6, \ldots , DC10\) are almost identical [to machine precision for problem (6.5)], and we choose one of the approximate solutions as reference solution.

For solutions \(u=(u_1,\ldots ,u_d)~:~[0,T] \rightarrow \mathbb {R}^d\), \(1\le d\le 6\), the absolute error on the approximate solutions \(\left\{ u^{2j,n} \right\} _{0\le n\le N} \), \(1\le j\le 5\), is computed with the norm

For very large N we extract solutions at \(2\times 10^6\) or \(3\times 10^6\) discrete times evenly spread over the interval [0, T].

For a comparison of accuracy, we implement in Scilab the backward differentiation formulae (BDF) of order 2, 4 and 6, and the explicit Runge-Kutta (RK) of order 4. The implemented BDF are run with exact starting values for the first three problems that have analytic solutions, while for problems four and five the starting values are provided by the function stiff (implementing BDF with adaptive steps) of the solver ode from Scilab. For the van der Pol oscillator, the comparison of our DC methods is done only with the solutions from stiff and rkf from the solver ode. For each of the problems, we give a table of absolute errors and orders of convergence for pairs of two consecutive time steps, for the approximate solutions with the DC methods. We denote by \(k_{max}\) the maximal time step allowed to compute an approximate solution with the solver stiff or rkf (see [8] for a discussion on maximal time steps).

6.1 Bernoulli differential equation

Table 2 gives the absolute error and the order of convergence for each pair of consecutive time steps, in the case of DC, BDF and RK4 methods. The dash for RK4 indicates that the method is unstable for the corresponding time steps.

This problem addresses B-convergence since the function F is one-sided Lipschitz with \(\beta =-0.1\), when positive solutions are considered. The problem is strongly nonlinear with the magnitude of derivatives of the right hand side function F exponentially increasing with the order of the derivatives. Such derivatives of large magnitude generally limit the accuracy of high order methods that are not B-convergent. In fact, B-convergence provides at least two main advantages to time-stepping methods. First, when \(\beta <0\), the error estimate for our B-convergent methods remains valid for large \(k>0\), as stated in our Corollary 4.1, and the error can be small even for relatively large time step k, as discussed in Remark 4.2. As seen in Table 2, DC methods provide accurate approximate solutions for large time steps, and their accuracy increases with the order of the method. However, the order of convergence of the DC methods is suboptimal in this range of time steps. BDF methods are stable for large time steps, but they are less accurate than their corresponding DC methods (except for BDF2). As expected, RK4 is unstable for \(k\ge k_0 \approx 2.03\times 10^{-3}\).

A second main advantage of B-convergence is the relatively small size of the error constant that depends on \(\beta \) (among others) instead of the potentially much larger two-side Lipschitz constant \(\mu \), see Remarks 4.1 and 4.2. This should result in lower error when comparing a B-convergent method with a non B-convergent method of the same order for the same time step, in the range of small time steps. Table 2 suggests that the error constants are, respectively, about 10, 100, 1000 smaller for DC2, DC4, DC6 compared to BDF2, BDF4, BDF6 methods. Of course, care is needed when doing such crude comparisons of errors. For instance, some non B-convergent method may happen to be competitive on a specific problem for very small time steps, as discussed in Remark 4.2. For the smallest time steps, RK4 is more accurate than DC4 and any of the BDF methods, but as expected DC6-10 achieve better accuracy.

Finally, we note that DC4 and DC6 almost achieve their proper order for \(k\le 5\times 10^{-6}\), and the order of convergence of DC8 and DC10 are not observed since these methods quickly achieve machine accuracy.

6.2 Oscillatory problem [14]

The exact solution is \(u(t)=e^{\lambda \sin (t)}\). The original problem is set with \(\lambda =1\) in [14]. The author in [16] solved this problem with Runge-Kutta methods of orders 4 and 8, for \(\lambda =2\) and \(T=2580\pi \), to “illustrate the need of higher order methods when a long-term integration problem is considered”. Table 3 gives the absolute error and the order of convergence for each pair of consecutive time steps. The BDF methods are run only for the smallest time step. The solvers rkf and stiff use adaptive time stepping with a maximal time step \(k_{max}=0.1\) and tolerances \(rtol=100\times atol=10^{-10}\).

The magnitude of the exact solution \(u(t)=e^{10\sin (t)}\) of the modified oscillatory problem is large, resulting in a relatively large absolute error obtained by the DC schemes (absolute errors of about \(10^{-7}\) is possible for a good choice of stepsize). Moreover, the long term integration influences the accuracy of these schemes since they achieve absolute errors of about \(10^{-9}\) when the solution interval is reduced to [0, 1000]. Nevertheless, each DC scheme converges with its proper order. The DC methods are considerably more accurate than standard methods (both with fixed and variable stepsizes) which are inaccurate for this problem. For instance, for BDF2 and rkf, the solutions remain bounded with bounds close to the maximal amplitude of the exact solution but the phase of the oscillation is completely wrong.

6.3 Problem B5 modified [8], stiff with complex eigenvalues of negative real parts and larger (in magnitude) imaginary parts

This problem, originally set with \(\alpha =100\), is an illustration of ODEs resulting from a semi-discretization by finite element methods of parabolic PDEs [26]. We choose \(\alpha =5000\) to make the problem a little more difficult. Table 4 gives the absolute errors for the first component of the approximate solutions which is similar for the second component. The absolute errors for the others components quickly achieve machine precision. The solvers stiff and rkf are run with \(k_{max}=2\times 10^{-5}\) and \(atol=10\times rtol=10^{-15}\).

The imaginary parts of the Jacobian eigenvalues of the modified B5 problem are large. Even though the real parts of the eigenvalues are negative, we observe that smaller time steps are required by DC schemes to obtain accurate approximations. DC schemes achieve their proper order of convergence, but BDF methods perform better for this problem than DC schemes.

6.4 Problem E5 [8], stiff with complex eigenvalues of negative real parts and smaller (in magnitude) imaginary parts

A reference solution is computed with DC10 for \(k= 10^{-3}\). The solution of this problem has small magnitude in \([1.618\times 10^{-3},1.76\times 10^{-3}]\times [0,1.46\times 10^{-10}]\times [0,8.27\times 10^{-12}]\times [0,1.38\times 10^{-10}]\) and the eigenvalues of the Jacobian matrix dF(y) along the solution curve belong to the region \([-20490,3.68\times 10^{-12}]\times [-9.17\times 10^{-5},9.17\times 10^{-5}]\) of the complex plane. Table 5 gives the absolute errors and order of convergence for the four components of the approximate solutions. For BDF, RK4 and stiff, the absolute errors are provided only for the first component. The absolute error on the other components is smaller by 2 (RK4) to 5 (stiff) orders of magnitude, as we should expect from the magnitude of the solution components. The implemented BDF methods are run with starting values deduced from the solver stiff. The implemented RK4 is unstable for time steps \(k\ge 2\times 10^{-4}\), and the absolute error is reported for \(k=10^{-4}\) in Table 5. The solver stiff is run with \(k_{max}=10^{-3}\) and \(rtol=10^8\times atol=10^{-15}\).

Imaginary parts of eigenvalues for the problem E5 are smaller, and larger time steps allow DC schemes to produce very accurate approximations, compared to the modified B5 problem. DC schemes perform better for this problem than BDF methods. They achieve their proper order of convergence but on a relatively small range of time steps, for higher order DC methods, since the solution is already very accurate for large time steps.

6.5 Robertson (1966) [11], stiff with real negative eigenvalues

This is one of the three problems considered as stiffest in [11]. We compute a reference solution with DC10 for the time step \(k=1/6000\). The solution belongs to the region \([1.78\times 10^{-2},1.00]\times [0,3.58\times 10^{-5}]\times [0,0.983]\) and the eigenvalues of the Jacobian dF(y) along the solution curve belong to \([-9825.744,0]\). Table 6 gives absolute errors and orders of convergence of DC methods for each component of the solution. For other methods, we give only the maximal errors on the three components of the approximate solutions. The solver stiff is run with \(k_{max}=1/600\) and \(rtol=100\times atol=10^{-15}\). The solver rkf fails in solving this problem for various tolerances and \(k_{max}\), and Scilab reported: “it is likely that rkf45 is inefficient for solving this problem”. The implemented BDF methods are run with starting values deduced from the solver stiff using the preceding tolerances.

The Robertson problem is stiff and addresses B-convergence since its Jacobian matrix has real negative eigenvalues with some having large magnitude. For this problem, DC schemes produce accurate approximate solutions even for large time steps, and high order DC methods can be avoided (DC6 is enough). The convergence is slow for \(k>1/300\), but faster convergence happens for k in the asymptotic region (\(k<1/300\)). The DC schemes perform better than BDF methods at equal order and time step. A comparison of the errors for \(k=1/600\) suggests that the error constants might be 3 to 5 orders of magnitude smaller for DC than BDF methods.

6.6 van der Pol oscillator [8, 24], stiff with arbitrary complex eigenvalues

This problem was initially proposed for \(T=1\) and \(\mu =5\) in [8]. The actual version results from a suggestion by Shampine [24]. We compute a reference solution with DC8 for \(k=1.875\times \times 10^{-6}\). The solution belong to the region \([-2, 2.000073]\times [-1323.04,1231.35]\) of the real plane and the eigenvalues along the solution curve belong to the region \([-3000.29,1123.17]\times [-1158.48,1158.48]\) of the complex plane. Table 7 gives the absolute errors and orders of convergence. For rkf and stiff, we use \(k_{max}=7.5\times 10^{-5}\) and \(rtol=10\), \(atol=10^{-16}\).

The van der Pol oscillator is stiff and the solution has a large magnitude. DC6 and DC8 reached their order of convergence. This shows that the DC strategy works well in spite of the fact that DC2 and DC4 would require much smaller time steps to produce reasonably accurate solutions. The order of convergence for DC10 is not observed, though the solutions obtained are accurate.

6.7 Discussion of the numerical results

In general, a careful assessment of the proof of Theorem 3.1 points out to the fact that, for a system with complex eigenvalues \(\lambda =\lambda _1+i\lambda _2\), we only need a time step k such that \(k\,\max \left\{ \lambda _1,|\lambda _2|\right\} <2\) for a good accuracy (faster convergence happens when \(- \lambda _1>>|\lambda _2|\)). These situations are well illustrated by the test cases of Sects. 6.3 and 6.4, where the required time step for accuracy is much smaller for modified B5 than E5. However, time steps k such that \(k\mu \simeq k|\lambda |<2\), \(\mu \simeq \displaystyle \max _{0\le t\le T}\Vert d_uF\left( t,u(t)\right) \Vert \), is necessary for an asymptotic convergence with proper order. For example, in the case of the Bernoulli equation we have \(\lambda \simeq -20{,}000.1<0\) and \(\mu =20{,}000.1\). Large time steps provide accurate approximations (as expected from B-convergent methods), but asymptotic convergences are observed only for \(k\mu <2\).

For the computational effort of the DC methods, we recall that to compute an approximate solution on discrete points \(0=t_0<t_1<\cdots <t_N=T\), DC2 solves N nonlinear systems while DC2j, \(j\ge 2\), solves \(j\times N\) systems. In the case of the Bernoulli equation, for example, DC10 achieves the maximal error of about \(1.1\times 10^{-11}\) by solving approximately \(5\times 10^6\) nonlinear systems while the maximal absolute error for DC2 is about \(8.9\times 10^{-7}\) for \(N=5\times 10^6\). We did not report any CPU time since our code is written in Scilab, an interpreted language. All methods that we implemented are consequently interpreted, while rkf and stiff provided with Scilab are compiled. Nevertheless, the main burden in implicit time-stepping solvers is the resolution of nonlinear systems, and we have shown that higher order DC methods give the most accurate approximations by solving fewer systems of equations. This gives a clue on the CPU time required and the efficiency of these methods. High order DC methods should be competitive in situations where using fully implicit methods is unavoidable.

7 Conclusions

We have presented a new approach of deferred correction methods for the numerical solution of general first order ordinary differential equations. Proofs for consistency, order of convergence and stability of the method are given, which rely on a recursive argument using a new deferred correction condition. The numerical experiments comply with the theory and show a high accuracy of the method and its satisfactory A-stable property and B-convergence. Globally, each DC scheme reaches its proper order of convergence and applies to any category of problem, providing accurate approximations for time steps not necessarily small. The accuracy of the DC schemes increases with the level of correction.

References

Auzinger, W.: Defect correction methods. In: Engquist, B. (ed.) Encyclopedia of Applied and Computational Mathematics, pp. 323–332. Springer, Berlin (2015)

Christlieb, A., Ong, B., Qiu, J.M.: Integral deferred correction methods constructed with high order Runge–Kutta integrators. Math. Comput. 79, 761–783 (2010)

Chung, T.: Computational Fluid Dynamics, 2nd edn. Cambridge University Press, Cambridge (2010)

Dahlquist, G., Björck, A.K.: Numerical Methods in Scientific Computing, vol. I. SIAM, Philadelphia (2008)

Dahlquist, G.G.: A special stability problem for linear multistep methods. Nordisk Tidskr. Informationsbehandling (BIT) 3, 27–43 (1963)

Daniel, J.W., Pereyra, V., Schumaker, L.L.: Iterated deferred corrections for initial value problems. Acta Cient. Venezolana 19, 128–135 (1968)

Dutt, A., Greengard, L., Rokhlin, V.: Spectral deferred correction methods for ordinary differential equations. BIT 40, 241–266 (2000)

Enright, W.H., Hull, T., Lindberg, B.: Comparing numerical methods for stiff systems of O.D.E:s. BIT 15, 10–48 (1975)

Frank, R., Schneid, J., Ueberhuber, C.W.: The concept of B-convergence. SIAM J. Numer. Anal. 18(5), 753–780 (1981)

Gustafsson, B., Kress, W.: Deferred correction methods for initial value problems. BIT 41, 986–995 (2001)

Hairer, E., Wanner, G.: Solving Ordinary Differential Equations. II. Stiff and Differential-Algebraic Problems, vol. 14. Springer, Berlin (1991)

Hansen, A.C., Strain, J.: On the order of deferred correction. Appl. Numer. Math. 61, 961–973 (2011)

Hildebrand, F.B.: Introduction to Numerical Analysis. McGraw-Hill, New York (1974)

Hull, T.E., Enright, W.H., Fellen, B.M., Sedgwick, A.E.: Comparing numerical methods for ordinary differential equations. SIAM J. Numer. Anal. 9, 603–637 (1972)

Isaacson, E., Keller, H.B.: Analysis of Numerical Methods. Wiley, New York (1966)

Karouma, A.: A class of contractivity preserving Hermite–Birkhoff–Taylor high order time discretization methods. Ph.D. thesis, Université d’Ottawa/University of Ottawa (2015)

Koyaguerebo-Imé, S.C.E., Bourgault, Y.: Finite difference and numerical differentiation: General formulae from deferred corrections. arXiv preprint arXiv:2005.11754 (2020)

Koyaguerebo-Imé, S.C.R., Bourgault, Y.: Arbitrary high-order unconditionally stable methods for reaction-diffusion equations via deferred correction: Case of the implicit midpoint rule. arXiv:2006.02962v2 (2020)

Kraaijevanger, J.: B-convergence of the implicit midpoint rule and the trapezoidal rule. BIT 25(4), 652–666 (1985)

Kress, W., Gustafsson, B.: Deferred correction methods for initial boundary value problems. J. Sci. Comput. 17(1–4), 241–251 (2002)

Kushnir, D., Rokhlin, V.: A highly accurate solver for stiff ordinary differential equations. SIAM J. Sci. Comput. 34, A1296–A1315 (2012)

Quarteroni, A., Sacco, R., Saleri, F.: Numerical Mathematics, vol. 37, 2nd edn. Springer, Berlin (2007)

Schild, K.H.: Gaussian collocation via defect correction. Numer. Math. 58, 369–386 (1990)

Shampine, L.F.: Evaluation of a test set for stiff ODE solvers. ACM Trans. Math. Softw. 7, 409–420 (1981)

Spijker, M.N.: Stiffness in numerical initial-value problems. J. Comput. Appl. Math. 72, 393–406 (1996)

Stewart, K.: Avoiding stability-induced inefficiencies in BDF methods. J. Comput. Appl. Math. 29, 357–367 (1990)

Tuenter, H.: The Frobenius problem, sums of powers of integers, and recurrences for the Bernoulli numbers. J. Number Theory 117, 376–386 (2006)

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Antonella Zanna Munthe-Kaas.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The authors would like to acknowledge the financial support of the Discovery Grant Program of the Natural Sciences and Engineering Research Council of Canada (NSERC) and a scholarship to the first author from the NSERC CREATE program “Génie par la Simulation”.

Rights and permissions

About this article

Cite this article

Koyaguerebo-Imé, SC.E.R., Bourgault, Y. Arbitrary high order A-stable and B-convergent numerical methods for ODEs via deferred correction. Bit Numer Math 62, 139–170 (2022). https://doi.org/10.1007/s10543-021-00875-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10543-021-00875-y

Keywords

- Ordinary differential equations

- High order time-stepping methods

- Deferred correction

- A-stability

- B-convergence