Abstract

Trajectory generation for biped robots is highly complex because of the challenges posed by real-world uneven terrain. To address this complexity, this paper proposes a data-driven Gait model that can handle continuously changing conditions. Data-driven approaches are used to incorporate the relationships between the joints. Deep learning methods are therefore employed to develop seven different data-driven models, namely DNN, LSTM, GRU, BiLSTM, BiGRU, LSTM+GRU, and BiLSTM+BiGRU. The dataset used for training the Gait model consists of walking data from 10 able-bodied subjects on continuously changing inclines and speeds. The objective function incorporates the standard error of the inter-subject mean trajectory, which guides the Gait model away from closely following the high-variance points in the gait cycle and thereby helps to provide a smooth and continuous gait cycle. The results show that the proposed Gait models outperform the traditional finite state machine (FSM) and Basis models in terms of mean and maximum error summary statistics. In particular, the LSTM+GRU-based Gait model provides the best performance among the data-driven models.

1 Introduction

Several types of mobile robots have been studied and designed in the literature (Singh et al., 2021; Kim et al., 2021; Gao et al., 2021; Burman and Kumar, 2021), which can be categorized by their way of locomotion in different environments and applications: (a) land-based: legged or wheel-type, (b) water-based, and (c) air-based. The main focus of this paper is on land-based mobile robots, and particularly humanoid/bipedal robots that have recently attracted the attention of researchers and industry professionals. This is not only due to their human-like shape, but also their ability to provide efficient and robust walking in uneven terrain, jumping, or running (Wang et al., 2014; Aoi and Tsuchiya, 2011). However, the primary focus of research on bipedal robotics is to achieve human-like walking stability with robustness to disturbances in complex real-world environments (Hosseinmemar et al., 2019; Kwon and Park, 2009; Yamaguchi and Takanishi, 1997; Morris et al., 2019; Doerschuk et al., 2002). The locomotion stability of these robots is hindered by unfavorable conditions such as ground surface inclination or obstacles in the path (Kim et al., 2021; Hildebrandt et al., 2019; Kuffner et al., 2002), making it a great challenge to produce a gait that can ensure stable walking. The main focus of this work is to deal with the generation of a kinematic trajectory for walking on varied ground slopes at multiple speeds.

In the literature, several successful strategies have been developed for trajectory generation, such as the inverted pendulum (Kim et al., 2020; Razavi et al., 2019; Liu et al., 2021; Sun et al., 2021; Kormushev et al., 2019), the linear inverted pendulum (Huang and Yeh, 2019; Kim et al., 2018; Li et al., 2013; Lee et al., 2016), LSTM pattern generation (Li et al., 2020), multi-objective meta-heuristic optimization (Juang and Yeh, 2017), and fuzzy control (Li et al., 2010). However, these generated trajectories are only applicable to laboratory environments due to issues such as high energy consumption, poor robustness in the presence of disturbances, and difficulty in finding new trajectories in emergency situations. Some authors have applied learning-based algorithms to generate reference trajectories that have been successfully tested in real-world environments, demonstrating good adaptability to disturbances and irregular terrains (Castillo et al., 2022; Siekmann et al., 2021).

According to the literature, some researchers have used real human locomotion kinematic datasets collected under different conditions as reference trajectories (Holgate et al., 2009; Wang et al., 2021; Liu et al., 2020; Wang et al., 2018; Zhou et al., 2017). This is because human trajectories are optimal and stable in nature. A common strategy from gait analysis, known as a finite state machine (FSM), has been employed to approximate the continuation of multiple tasks and phases (Simon et al., 2014). The strategy is based on dividing the gait cycle into multiple phases and designing separate controllers for each phase. A high-level topology then selects the suitable controller from the set based on the classifier algorithm output, which indicates the phase of the gait cycle. However, as the number of activities increases, so does the number of controllers required, and designing them demands a level of expertise that is hard to find. Therefore, authors have used the Discrete Fourier Transform (DFT)-based Gait model to parametrize the continuous kinematic trajectory as a function of the phase variable and tasks (Quintero et al., 2018; Embry et al., 2018). Likewise, authors have developed the Basis model, where the phase variable plays an important role (Embry et al., 2016). The phase variable is a special signal that monotonically increases with the gait cycle, and the tasks variable is a stacked vector that consists of ground slope and speed. This Gait model helps in predicting the joint kinematic angle for many combinations of speed and incline (Embry et al., 2016). However, the presented model could not capture the relationship between the joints (knee, hip, and ankle), as it has three separate setups for predicting each joint angle individually. Since there is a strong relationship between the joints (Huang et al., 2021), it is necessary to develop a coupled model that can predict the joint trajectories simultaneously while taking the joint relationships into account.

Data-driven models such as deep neural networks, long short-term memory, gated recurrent neural networks, etc., can be employed to find the relationship between the features (Yang et al., 2021; Shrestha and Mahmood, 2019). In our case, the features are the knee, ankle, and hip joint angles. In previous works (Singh et al., 2021, 2022), authors have developed machine learning and deep learning models to capture the important relationship between the joints. However, the developed models were confined to flat ground with one speed. Additionally, the choice of the objective function for training the data-driven model is not straightforward because it deeply impacts the prediction performance. It has been shown in the literature that mean absolute error outperforms mean squared error for the prediction ability of deep neural networks (Qi et al., 2020). Therefore, a new objective function has been developed, taking inspiration from previous studies in the literature (Embry et al., 2016; Qi et al., 2020).

This study proposes using data-driven deep learning approaches to model the kinematics of joint trajectories using locomotion data with continuously changing inclines and speeds. The dataset consists of locomotion data from 10 able-bodied individuals with continuously changing inclines (-10 to +10 degrees) and speeds (0.8 to 1.2 m/s). A new objective function is also proposed that incorporates the effect of the standard error from the mean trajectory that needs to be followed. This incorporation helps the model avoid high-variance points in the dataset. This loss function is used to train the data-driven models. The impact of changing speed with continuously varying inclines and a monotonically increasing phase variable is also studied by developing wire-frames. Overall, the major contributions of this study are:

- Data-driven Gait models are proposed for continuous parametrization of the kinematic trajectory as a function of the stacked tasks vector and the monotonically increasing phase variable.
- A new objective function, which incorporates the standard error of the inter-subject mean trajectory from the data, is designed for training the proposed Gait models.
- A comparative analysis of the developed Gait models, based on the mean and maximum error statistics obtained from prediction, is briefly discussed for two different cases.
- The impact of changing inclines with the phase variable is studied in detail.
- Wire-frames are also presented from the proposed Gait models to find the suitable model for biped robot gait trajectory generation.

This research work is organized as follows: Sect. 2 presents the proposed methodology, which includes the data description, gait model, and objective function. Then, Sect. 3 explains the results and discussion, which includes the model parameter settings, performance evaluation index, prediction analysis, and the impact of the objective function on the performance of the proposed model. Finally, Sect. 4 concludes the work.

2 Proposed methodology

This section discusses the proposed methodology for designing the data-driven Gait model that predicts the joint kinematic trajectory angle on continuously varying ground slope and speed. The proposed methodology has three components: (a) data description and preprocessing, (b) development of the data-driven model, and (c) implementation of the predicted trajectory wire-frame surfaces in the policy for the control of a biped robot/prosthetic leg. The main focus of this section is on component (b). A schematic flow chart of the proposed methodology is presented in Fig. 1.

2.1 Data formulation and pre-processing

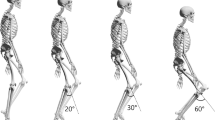

The kinematic locomotion dataset used in this paper was obtained from the IEEE data port provided by Embry et al. (2018). The dataset was produced by capturing the walking patterns of ten subjects using a ten-camera motion system at 100 Hz. Each subject was asked to walk for twenty-seven combinations of speed and incline (known as tasks and denoted by \(\theta _{j}\) with \(j=1,2,\dots ,27\)), varying at regular intervals of 0.2 m/s and 2.5 degrees within the ranges of 0.8 m/s to 1.2 m/s and -10 degrees to +10 degrees, respectively. Each gait cycle consists of 150 time steps for each of the twenty-seven combinations. The obtained dataset was then filtered using a 10th order Weltering filter to produce smooth gait cycle trajectories. Afterward, the dynamic plug-in model based on the Newington-Helen Hayes model was applied to the filtered gait cycle. The resulting output is the kinematic and kinetic dataset [45]. Here, a plug-in gait lower body model is used to calculate the joint angles. The output angles from the model for all joints are evaluated from the YXZ Cardan angles. Cardan angles are evaluated by comparing the relative orientations of the parent and child segments of the joint. For example, the knee angle is evaluated using the femur and untorsioned tibia segments. The resulting joint angles are relative to each other: the ankle angle is relative to the knee angle, the knee angle is relative to the hip angle, and the hip angle is relative to the pelvis angle (which is absolute with respect to the laboratory coordinates). Outliers were removed if they were more than three standard deviations away from the mean trajectory \(\overline{X}_{\phi _i,\theta _j}\) at every instant i \(\in \) (1, 150) in each stride.
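The three-standard-deviation outlier rule described above can be sketched as follows. This is a minimal illustration, not the authors' preprocessing code: the function name `remove_outlier_strides`, the use of the population standard deviation, and the choice to drop a whole stride when any of its points violates the bound are all assumptions.

```python
import statistics

def remove_outlier_strides(strides, n_sigma=3.0):
    """Drop strides that leave the n_sigma band around the mean trajectory.

    strides: list of strides for one task, each a list of joint angles
             sampled at the same instants (150 per stride in the paper).
    Assumption: a stride is discarded entirely if any instant is an outlier.
    """
    n_points = len(strides[0])
    # Mean and standard deviation across strides at every instant i.
    means = [statistics.fmean([s[i] for s in strides]) for i in range(n_points)]
    stds = [statistics.pstdev([s[i] for s in strides]) for i in range(n_points)]
    kept = []
    for s in strides:
        inside = all(abs(s[i] - means[i]) <= n_sigma * stds[i]
                     for i in range(n_points))
        if inside:
            kept.append(s)
    return kept

# Toy usage: 19 identical two-point strides plus one stride with a spike.
toy = [[10.0, 20.0] for _ in range(19)] + [[10.0, 100.0]]
print(len(remove_outlier_strides(toy)))  # 19
```

With few strides, a single outlier inflates the standard deviation enough to hide itself, so the rule is only meaningful when many strides are available per task.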

2.2 Gait model designing

This section discusses the procedure for designing the Gait model and loss function. The Gait model \(q(\phi _i,\theta _j)\) is essentially a parametrization function of a normalized gait cycle \(\phi _i\) for i \(\in \) (0, 1) with task \(\theta _{j}\). The key assumption is that the dataset obtained from the previous step is continuous and periodic and changes smoothly with the tasks (Macaluso et al., 2021). The proposed Gait model is developed using data-driven approaches. In this study, seven data-driven models have been used to develop the Gait model for predicting kinematic joint trajectories. These models are: (a) Deep Neural Network (DNN), (b) Long Short-Term Memory (LSTM), (c) Gated Recurrent Units (GRU), (d) Bidirectional LSTM (BiLSTM), (e) Bidirectional GRU (BiGRU), (f) LSTM+GRU, and (g) BiLSTM+BiGRU.

2.2.1 Data-driven models

Deep Neural Network (DNN) (Canziani et al., 2016) is a type of neural network with multiple hidden layers between the input and output layers. This type of neural network is inspired by the human brain and requires more data to train itself to provide better accuracy. It establishes a non-linear relationship between inputs and outputs by processing the training dataset. The number of layers helps in deriving better high-level logic from the given inputs. A DNN consists of interconnected neural units that are stacked on top of each other, creating a complex structure. It uses fewer parameters to tune manually, making it easier to generate better logic. Therefore, the network has reusable codes that help in generating better results. The prediction model for the DNN is given by (1),

where \(R_n\), \(W^n\), and \(b_n\) are the function, weights, and bias of layer n, respectively.

Long Short-Term Memory (LSTM) (Greff et al., 2016) is a type of RNN with three additional gates: input, forget, and output. These gates control the flow of information within the model. The input gate controls the input values that enter the memory cell, while the forget gate filters the important values from the memory cell. The output gate controls the output value from the memory cell. LSTM can memorize the time dependency of data by looking back at past values over the long run, which helps build better predictive logic. It also controls the addition of new information into the memory cells. LSTM has an additional advantage in that it helps to deal with the vanishing and exploding gradient problem that exists in RNNs. The mathematical expression for the prediction model using LSTM is presented by (2),

where \(R_n\), \(W_n\), and \(b_n\) are the function, weight, and bias of layer n, respectively; \(O_g\), \(I_g\), and \(F_g\) are the output gate, input gate, and forget gate, respectively; \(h_{t-1}\) is the input value from the previous hidden layer; and relu and tanh are activation functions.

Gated Recurrent Units (GRU) (Dey et al., 2017) are a simplified variant of the LSTM unit with two gates: the reset and update gates. The reset gate controls the flow of information, while the update gate controls the update in values of weight and bias. GRU has a hidden state for controlling the flow of information. Essentially, it has an uncontrolled exposure of the content of memory cells from the previous step, while LSTM has control over the exposure of content. GRU controls the output values of the layer but does not control the addition of new knowledge in the memory cells. The major advantage of GRU is that it takes less training time compared to LSTM. It can easily capture different time dependencies over short time spans. Therefore, the performance of GRU is better than that of LSTM if long text and short data in sequence are available, and vice versa. The expression for the prediction model is presented by (3),

where \(R_n\), \(W_n\), and \(b_n\) are the function, weight, and bias of layer n, respectively; \(I_g\) and \(F_g\) are the input gate and forget gate, respectively; \(h_{t-1}\) is the input value from the previous hidden layer; and relu and tanh are activation functions.

Bidirectional LSTM (BiLSTM) (Huang et al., 2015) is an extension of LSTM with an additional bidirectional layer. Due to its bidirectional nature, the weights and biases are trained using both forward and backward propagation, with the training data flowing alternately in both directions. This layer captures the relationship between the different features available in the dataset. This is an improvement over the traditional LSTM, which uses only forward propagation for logic building. The mathematical expression is presented by (4),

Bidirectional GRU (BiGRU) (Dong, 2018) is a GRU layer with an additional bidirectional layer. It is a sequence processing model that takes input through forward and backward propagation alternately. BiGRU is better than a normal GRU because it has feed-forward and back-propagation for better logic creation regarding the relationship between the features. It has only input and forget gates, similar to GRU. Thus, the forward and backward feeding of inputs helps it to produce better weight and bias values for each input feature. Mathematically, it is given by (5),

A combination of the LSTM and GRU models yields the LSTM+GRU model. It combines LSTM's ability to capture the relationship in a long dataset with short text and GRU's ability to find the relationship in a short dataset with long text. Therefore, it performs better for both types of datasets. It averages the predicted values of the LSTM and GRU models. Similarly, a combination of BiLSTM and BiGRU yields the BiLSTM+BiGRU model, which leverages the bidirectional nature and the LSTM+GRU property to predict the output. It also yields the average of the predicted values obtained from the respective models. However, BiLSTM+BiGRU performs better than LSTM+GRU only if more training data is available. Both of these models combine the advantage of the memory element and the conventional method, which is evident in the results and discussion section.
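The averaging step of the combined models can be illustrated with a short sketch. It assumes that each base model has already produced a list of predicted [knee, hip, ankle] angle triples for the same inputs; the function name `average_predictions` and the toy numbers are hypothetical and only show the element-wise mean.

```python
def average_predictions(pred_a, pred_b):
    """Element-wise mean of two models' predictions.

    pred_a, pred_b: lists of [knee, hip, ankle] predictions, one row per
    time step, produced by two already-trained models (e.g. LSTM and GRU).
    """
    return [[(a + b) / 2.0 for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(pred_a, pred_b)]

# Toy usage with made-up angles (degrees) for a single time step.
lstm_out = [[10.0, 20.0, 30.0]]
gru_out = [[20.0, 40.0, 50.0]]
print(average_predictions(lstm_out, gru_out))  # [[15.0, 30.0, 40.0]]
```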

2.2.2 Objective function

This section presents a comparative analysis of the mean squared error and mean absolute error functions, together with their mathematical expressions, which are employed in the literature for training models based on multivariate regression. Afterward, the rationale behind choosing the new loss function for training the data-driven model is discussed.

Lipschitz continuity (Mangasarian and Shiau, 1987; Gouk et al., 2021): A function g is said to be \(\alpha \)-Lipschitz for all variables x, y \(\in \) \(\mathbb {R}^n\) if it satisfies (6) \(\forall \) h \(\ge \) 1,

Mean absolute error (Coyle and Lin, 1988): It quantifies the mean absolute difference between the actual and predicted vectors of M elements, A \(=\) \(\{x_1, x_2, \dots , x_M\}\) and \(A^*\) \(=\) \(\{y_1, y_2, \dots , y_M\}\) respectively. Mathematically, it is represented by (7),

where, \(||.||_1\) is the \(L_1\) norm.

Mean squared error (Allen, 1971; Chai and Draxler, 2014): It quantifies the quadratic difference between the actual and predicted vectors of M elements, A \(=\) \(\{x_1, x_2, \dots , x_M\}\) and \(A^*\) \(=\) \(\{y_1, y_2, \dots , y_M\}\) respectively. Mathematically, it is represented by (8),

where, \(||.||_2\) is the \(L_2\) norm.

Based on the Lipschitz continuity condition (6), it can be easily proved that the MAE loss function is 1-Lipschitz, whereas the MSE loss function is not Lipschitz continuous. It has been proved in the literature that if a function is Lipschitz continuous, then it can upper bound the estimated regressor error from the Empirical Rademacher Complexity (Qi et al., 2020). It is suggested that a Laplacian distribution-based loss function, which relates to MAE, can provide better prediction than a Gaussian-based loss function, which relates to MSE. This is true only if the variance-related terms are the same in both expressions (Chai et al., 2019).
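The contrast between the two losses can be checked numerically. The sketch below uses the standard definitions of MAE and MSE averaged over M elements, which is an assumption about the exact normalization used here; the helper `slope` is a hypothetical name for the difference quotient that a Lipschitz constant has to bound.

```python
def mae(actual, predicted):
    """Mean absolute error over M paired values."""
    return sum(abs(x - y) for x, y in zip(actual, predicted)) / len(actual)

def mse(actual, predicted):
    """Mean squared error over M paired values."""
    return sum((x - y) ** 2 for x, y in zip(actual, predicted)) / len(actual)

def slope(loss, e1, e2):
    """Difference quotient |loss(e1) - loss(e2)| / |e1 - e2| of a per-sample loss."""
    return abs(loss(e1) - loss(e2)) / abs(e1 - e2)

# The absolute error never changes faster than the error itself (1-Lipschitz).
print(slope(abs, 1.0, 5.0))                    # 1.0
# The squared error changes faster and faster as the error grows,
# so no single constant bounds its slope.
print(slope(lambda e: e * e, 10.0, 11.0))      # 21.0
print(slope(lambda e: e * e, 100.0, 101.0))    # 201.0
```

The growing slope of the squared error is what makes MSE sensitive to large residuals, which is the behaviour the Lipschitz argument formalizes.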

The mathematical expression of MAE in terms of the variance at every point in the stride is (9),

The mathematical expression of MSE in terms of the variance at every point in the stride is (10),

As per the literature, the MSE loss function can outperform the MAE-based loss function if the expected error follows a Gaussian distribution and enough samples are available for training (Chai and Draxler, 2014). In this paper, few training samples are available. Therefore, the authors have modified the loss function by integrating the MAE and MSE. It is used for tuning the weights of the data-driven model. The objective function is the square of the error between the actual and predicted values divided by the standard error of the real values at every point in the stride. Since the impact of the error differs at each point of the stride, it is incorporated through the SE term in the expression. Mathematically, it is defined by (11),

It provides better precision than the two other loss functions, which is validated in Sect. 3. Additionally, it also helps the model to avoid following the values with a high variance error in the training dataset, which is our assumption for providing a smooth and continuous trajectory, as shown in Fig. 2.
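A minimal sketch of a standard-error-weighted squared loss is given below to make the weighting idea concrete. It follows the verbal description only: the averaging over points, the small `eps` guard against division by zero, and the names `standard_error` and `se_weighted_loss` are assumptions and may differ from the exact form of (11).

```python
import math
import statistics

def standard_error(samples):
    """Standard error of the mean: sample std divided by sqrt(n)."""
    return statistics.stdev(samples) / math.sqrt(len(samples))

def se_weighted_loss(actual, predicted, se, eps=1e-8):
    """Mean of squared errors, each divided by the standard error at that point.

    actual, predicted: values at every point of the stride.
    se: standard error of the inter-subject mean at the same points.
    eps: assumed numerical guard, not part of the paper's description.
    """
    terms = [(x - y) ** 2 / (s + eps) for x, y, s in zip(actual, predicted, se)]
    return sum(terms) / len(terms)

# Same unit error at two points; the high-SE point contributes four times less.
print(se_weighted_loss([1.0, 1.0], [2.0, 2.0], [0.5, 2.0], eps=0.0))  # 1.25
```

Because points with a large standard error are divided by a large number, errors there are penalized less, which matches the stated aim of not forcing the model through high-variance points.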

2.2.3 Bayesian optimizer

Bayesian optimization is a powerful technique for finding the global optimum in a small number of steps (Pon and KK, 2021). It starts from a prior belief about the objective function and updates that belief through sampling to obtain a posterior belief that is better than the previous one. The model used for approximating the objective function is known as the surrogate model. In our case, the Gaussian process regressor is used as the surrogate model. The sampling area is determined using the acquisition function, which helps to improve the current estimates by reducing the variance in the observations. The basic steps for Bayesian optimization using the Keras tuner are as follows:

1. Build the surrogate model that minimizes the objective function, the mean square error is chosen as the objective function that directly relates to the hyper-parameters.
2. Different hyper-parameters are sampled based on variance.
3. Evaluate the true objective using the hyper-parameters sampled from step 2.
4. Update the surrogate model based on the minimum error.
5. Steps 2 to 4 are repeated till the optimum solution is obtained.
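The loop above can be sketched in plain Python with a small Gaussian process surrogate. This is an illustrative toy and not the Keras tuner implementation: the RBF kernel, its length scale, the lower-confidence-bound acquisition rule, the one-dimensional candidate grid, and all function names are assumptions chosen to keep the example self-contained.

```python
import math

def rbf(a, b, length=0.2):
    """Squared-exponential kernel between two scalar hyper-parameter values."""
    return math.exp(-0.5 * ((a - b) / length) ** 2)

def cholesky(A):
    """Lower-triangular Cholesky factor of a symmetric positive-definite matrix."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L

def solve_lower(L, b):
    """Forward substitution for L z = b."""
    z = []
    for i in range(len(b)):
        z.append((b[i] - sum(L[i][k] * z[k] for k in range(i))) / L[i][i])
    return z

def solve_upper_t(L, z):
    """Back substitution for L^T a = z."""
    n = len(z)
    a = [0.0] * n
    for i in reversed(range(n)):
        a[i] = (z[i] - sum(L[k][i] * a[k] for k in range(i + 1, n))) / L[i][i]
    return a

def gp_posterior(xs, ys, x_new, noise=1e-4):
    """Posterior mean and standard deviation of a zero-mean GP at x_new."""
    n = len(xs)
    K = [[rbf(xs[i], xs[j]) + (noise if i == j else 0.0) for j in range(n)]
         for i in range(n)]
    L = cholesky(K)
    alpha = solve_upper_t(L, solve_lower(L, ys))
    k_star = [rbf(x_new, x) for x in xs]
    mean = sum(k * a for k, a in zip(k_star, alpha))
    v = solve_lower(L, k_star)
    var = max(rbf(x_new, x_new) - sum(t * t for t in v), 0.0)
    return mean, math.sqrt(var)

def bayes_opt(objective, candidates, n_init=3, n_iter=10, kappa=2.0):
    """Minimize objective over a candidate grid with a GP surrogate."""
    step = max(1, len(candidates) // n_init)
    xs = candidates[::step][:n_init]            # initial samples
    ys = [objective(x) for x in xs]
    for _ in range(n_iter):
        remaining = [c for c in candidates if c not in xs]
        if not remaining:
            break

        def lcb(c):
            # Acquisition: low predicted error or high uncertainty is attractive.
            mean, std = gp_posterior(xs, ys, c)
            return mean - kappa * std

        x_next = min(remaining, key=lcb)        # step 2: sample
        xs.append(x_next)
        ys.append(objective(x_next))            # step 3: evaluate true objective
        # Step 4 is implicit: the surrogate is refit on xs, ys next iteration.
    best = min(range(len(xs)), key=lambda i: ys[i])
    return xs[best], ys[best]

# Toy usage: a made-up validation error as a function of one hyper-parameter.
grid = [i / 20 for i in range(21)]
best_x, best_y = bayes_opt(lambda h: (h - 0.3) ** 2, grid)
print(best_x, best_y)
```

In practice the objective would be the validation error of a trained model, and each evaluation is expensive, which is why the surrogate is consulted to decide where to sample next.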

3 Results and discussion

This section presents the performance evaluation of the proposed models using the mean and maximum error statistic indices. In this study, the models are trained and tested using the human locomotion dataset, which is derived from walking data for different combinations of speed and incline. Firstly, the reasoning behind the single multi-output model, the parameter settings, and the prediction evaluation methods are briefly discussed. Afterward, the prediction analysis of the developed models for two cases is discussed. Lastly, the significant findings are provided, which include the impact of the losses on model performance, the dispersion of error in both cases, and the wire-frame prediction analysis.

3.1 Model’s performance settings

In this study, the proposed models use a three-input, three-output configuration. There are two rationales behind this configuration: (a) the relationship between the knee, hip, and ankle joint trajectories is captured efficiently, and (b) the input features speed and incline are constant for one complete gait cycle, while the time increases at each step during that gait cycle. The model is not able to predict the output well without the three-output configuration, because a single output makes it difficult for the model to form an appropriate relationship between the three inputs and the one output; in that case, the model predicts a sloppy gait cycle that increases with the value of the time. In contrast, when the model was given three outputs for three input values, it was able to deduce a good relationship between inputs and outputs. This allows the training algorithm to capture the relationship between the three joints, i.e., hip, knee, and ankle, effectively, and makes it possible to use a single model to predict the gait cycle trajectories at the knee, hip, and ankle.

The dataset consists of twenty-seven tasks formed from various combinations of speed and incline. The values of all three inputs (speed, incline, and time) were normalized between 0 and 1. Then, the dataset is split into training and testing sets of two different sizes, which form the two cases. The sizes and combinations of training and testing sets for both cases are explained in the following sections. For models other than the DNN, the input is reshaped into a 3-D array. The normalization formula for the speed, incline, and time is given by (12),

Here, \(\phi \) is the normalised gait cycle, \(\theta \) is the normalised incline, S is the normalised speed, t is the time step, T is the total time, i is the incline in the range (-10 to +10), I is the total incline gap, v is the walking speed in the range (0.6 to 1.4), and V is the total speed gap.
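The normalization of the three inputs can be sketched as below. Since the exact form of (12) is not reproduced here, min-max scaling to the 0 to 1 range is an assumption consistent with the description; the function name `normalize_inputs` and its default ranges, taken from the symbol definitions above, are illustrative.

```python
def normalize_inputs(t, T, incline, speed,
                     incline_range=(-10.0, 10.0), speed_range=(0.6, 1.4)):
    """Scale time step, incline, and speed to the range 0..1.

    t, T: current time step and total number of time steps in the gait cycle.
    incline: ground slope in degrees; speed: walking speed in m/s.
    Assumption: min-max scaling using the total incline and speed gaps.
    """
    phi = t / T
    theta = (incline - incline_range[0]) / (incline_range[1] - incline_range[0])
    s = (speed - speed_range[0]) / (speed_range[1] - speed_range[0])
    return phi, theta, s

# Mid-cycle, level ground, 1 m/s: all three inputs land near the middle of 0..1.
print(normalize_inputs(75, 150, 0.0, 1.0))
```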

The layer configuration applied to all the models is (3, 3, [128, 512, 16]). The activation function used for the hidden layers is relu, while linear is used for the output layer. The hyperparameters were tuned using the Bayesian optimizer. The optimizer gave 500, \(10^{-2}\), and 10 as the optimized values for the number of epochs, learning rate, and batch size, respectively. In order to quantify the performance of the proposed models, performance indices inspired by Embry et al. (2016) are chosen. They measure how closely the model can predict the untrained tasks. The error between the mean trajectory found from the experiments, \(\overline{X}_{\phi _i,\theta _j}\), and the predicted kinematic trajectory \(q(\phi _i,\theta _j)\) for tasks \(\theta _j\) is defined by (13),

For every task, the maximum error is evaluated by taking the largest error encountered in a gait cycle. Then, the mean and maximum of these per-task maximum errors over all tasks are calculated and used to quantify the model performance.
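The two summary indices can be computed as in the following sketch. It assumes the point-wise error is the absolute difference between the mean experimental trajectory and the prediction, which may differ from the exact form of (13); the function names and the toy task labels are hypothetical.

```python
def max_error_per_task(mean_traj, predicted):
    """Largest absolute error encountered within one gait cycle."""
    return max(abs(x - q) for x, q in zip(mean_traj, predicted))

def summarize(mean_by_task, pred_by_task):
    """Mean and maximum, over all tasks, of the per-task maximum error."""
    per_task = [max_error_per_task(mean_by_task[k], pred_by_task[k])
                for k in mean_by_task]
    return sum(per_task) / len(per_task), max(per_task)

# Toy usage with two made-up tasks of three points each (degrees).
mean_by_task = {"task_a": [0.0, 10.0, 20.0], "task_b": [0.0, 5.0, 10.0]}
pred_by_task = {"task_a": [1.0, 12.0, 19.0], "task_b": [0.0, 9.0, 10.0]}
print(summarize(mean_by_task, pred_by_task))  # (3.0, 4.0)
```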

3.2 Prediction analysis

3.2.1 Case 1

The dataset is split in a 52:48 ratio into training and testing sets. Overall, fourteen and thirteen task combinations are selected for training and testing, respectively. Tables 1 and 2 present the training dataset for all data-driven models, denoted by \(\checkmark \); the blank entries are used for testing.

Table 3 presents the maximum error obtained from all proposed models for knee, hip, and ankle joint trajectories. Every entry shows the maximum error encountered in the predicted gait cycle. Here, the bold values represent the combinations used for testing, whereas the normal values represent those used for training. Tables 4 and 5 present the mean and maximum error statistics for the proposed models, which are extracted from Table 3. LSTM and GRU obtained approximately 40% less error than the DNN model. Both models also outperform the BiLSTM, and on the testing dataset they outperform the BiGRU as well. However, LSTM+GRU provides better statistics than LSTM and GRU individually in both training and testing. This is because LSTM+GRU is suitable for all types of datasets, as discussed in Sect. 2. Due to the limited data availability, BiLSTM+BiGRU could not perform better than the LSTM+GRU model.

It is inferred from the above discussion that the precision of all models is comparable for our dataset. The performance of LSTM+GRU suggests that it is the best option for generating the gait cycle trajectory compared to the other models. It works best in capturing the main relationship between the input and output of the time series dataset.

3.2.2 Case 2

From Table 2, it can be inferred that two combinations, namely speeds of 0.8 m/s and 1 m/s at inclines of \(7.5^\circ \) and \(10^\circ \) respectively, give the highest maximum error, which affected the precision of all data-driven models. To increase the precision, these two combinations of speed and incline are included in the training dataset. Thus, the overall training and testing datasets are in a ratio of 60:40. Overall, sixteen and eleven task combinations are selected for training and testing, respectively. Table 2 presents the training dataset for all data-driven models, denoted by \(\checkmark \); the blank entries are used for testing.

Table 4 reports the maximum error obtained by the predicted trajectories from each model for the twenty-seven combinations of speed and incline. The normal values represent the error obtained for prediction on the training dataset, and the bold values represent the error on the testing dataset. Every value in the table is the maximum error encountered in the gait cycle predicted by the data-driven model.

Table 4 is summarized in Table 6, which gives the overall statistics of the mean and maximum error obtained from all proposed models for all tasks. It shows that the performance of all models is significantly improved by moving the worst-performing task combinations of case 1 into the training set of case 2. In most instances, LSTM+GRU outperforms all other models in this case as well. All models are also compared with previous work in the literature, i.e., the Basis and FSM models (Embry et al., 2016). The chosen parameter settings for both of these models are the same as in this study. The comparison shows that the proposed model outperforms the Basis and FSM models by approximately 20–50 \(\%\) for the knee, hip, and ankle trajectories on both the training and testing datasets. It is inferred from the above results that the LSTM+GRU model provides higher precision than all other models. Therefore, it is recommended to use LSTM+GRU for providing the reference trajectories.

3.3 Discussion

Figures 3 and 4 present sample trajectory predictions of the knee, hip, and ankle joint angles for both cases at 1 m/s speed and \(0^\circ \) incline, for all data-driven models. They show that the LSTM+GRU model provides a smooth trajectory and accurately follows the actual gait cycle. Therefore, it is recommended to use the combination of LSTM and GRU for real-time gait generation to provide references to a biped robot.

Table 7 shows the overall statistics of prediction accuracy for all models. It indicates that the mean and maximum error in case II are lower than in case I for all models. This is because the training dataset used for case II includes the worst-performing incline and speed data of case I (i.e., \(7.5^\circ \) incline at speed 0.8 m/s and \(10^\circ \) incline at speed 0.8 m/s).

Table 8 shows the impact of the different loss functions in terms of the mean and maximum error statistics for the DNN model only. It shows that the proposed loss function outperforms DNN-MAE by more than approximately 20% in most cases. In addition, the DNN model trained with the proposed loss outperforms DNN-MSE by large margins. This validates the theoretical background developed in the objective function section.

A comparative analysis of trajectory prediction for all developed models is shown in Figs. 5 and 6. One hundred and fifty points of the normalized incline and gait are used to make the wire-frame plots. Trajectories are predicted for 1 m/s speed. Among the basic models (DNN, LSTM, GRU, BiLSTM, and BiGRU) shown in Fig. 5, the BiLSTM model performs well because it captures the relationship of speed and incline better than the other basic models. The predicted trajectory from the model changes smoothly from +\(10^\circ \) to -\(10^\circ \). Firstly, the magnitude of the angle decreases from +\(10^\circ \) to \(0^\circ \) and then increases from \(0^\circ \) to -\(10^\circ \) incline. As per Fig. 6, the combination of LSTM+GRU provides smooth trajectory prediction and also outperforms the BiLSTM model in smooth prediction for all joint angles. The performance of the LSTM+GRU model is better than that of the other models because it can memorize the directionality variations in the inclination slope more accurately. The combined structure of LSTM+GRU helps in capturing the changes in the training joint angle trajectory data caused by speed and incline variation.

4 Conclusion

This work presented the parametrization of the kinematic dataset using data-driven models. The dataset employed for training includes the walking data of 10 able-bodied subjects on a treadmill with varying speeds and inclines. A novel loss function is proposed for tuning the weights of the data-driven models. The loss function incorporates the standard error of the inter-subject mean trajectory and the squared difference between the actual and predicted values at each point in the gait cycle. The hyper-parameters of the model are optimized using the Bayesian optimizer (provided in the Keras tuner library). The superiority of the proposed loss function is shown by comparing it with two standard loss functions, i.e., mean absolute error (MAE) and mean squared error (MSE). Afterward, the performance of the proposed model is validated on two cases. Overall, the combination of LSTM and GRU outperforms all other models in terms of the statistical mean and maximum error indices. The wire-frame is also predicted for both the untrained and trained tasks over 150 \(\times \) 150 time points. Finally, the impact of varying speeds with inclines is also discussed. It is recommended to use the LSTM+GRU model for predicting smooth joint trajectories for a biped robot or prosthetic leg.

As future work, the authors will apply meta-heuristic optimization approaches to tune the model parameters, and reinforcement learning to deal with non-periodic walking. Additionally, inputs such as the ground slope, which are obtained from external sensors, can carry measurement uncertainty. This uncertainty can degrade the model predictions and will be investigated in future research.

Code availability

Not applicable.

Abbreviations

- DNN: Deep neural network
- LSTM: Long short-term memory
- GRU: Gated recurrent units
- BiLSTM: Bidirectional LSTM
- BiGRU: Bidirectional GRU
- COMBO BI: BiLSTM + BiGRU
- FSM: Finite state machine
- MAE: Mean absolute error
- MSE: Mean squared error
- SE: Standard error

References

Allen, D. M. (1971). Mean square error of prediction as a criterion for selecting variables. Technometrics, 13(3), 469–475. https://doi.org/10.1080/00401706.1971.10488811

Aoi, S., & Tsuchiya, K. (2011). Generation of bipedal walking through interactions among the robot dynamics, the oscillator dynamics, and the environment: Stability characteristics of a five-link planar biped robot. Autonomous Robots, 30(2), 123–141.

Burman, V., & Kumar, R. (2021). Wheel robot review. Innovations in cyber physical systems (pp. 781–791). Singapore: Springer.

Canziani, A., Paszke, A., & Culurciello, E. (2016). An analysis of deep neural network models for practical applications. arXiv preprint arXiv:1605.07678.

Castillo, G. A., Weng, B., Zhang, W., & Hereid, A. (2022). Reinforcement learning-based cascade motion policy design for robust 3D bipedal locomotion. IEEE Access, 10, 20135–20148.

Chai, T., & Draxler, R. R. (2014). Root mean square error (RMSE) or mean absolute error (MAE)? Arguments against avoiding RMSE in the literature. Geoscientific Model Development, 7(3), 1247–1250. https://doi.org/10.5194/gmd-7-1247-2014

Chai, L., Du, J., Liu, Q. F., & Lee, C. H. (2019). Using generalized Gaussian distributions to improve regression error modeling for deep learning-based speech enhancement. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 27(12), 1919–1931. https://doi.org/10.1109/TASLP.2019.2935803

Coyle, E. J., & Lin, J. H. (1988). Stack filters and the mean absolute error criterion. IEEE Transactions on Acoustics, Speech, and Signal Processing, 36(8), 1244–1254. https://doi.org/10.1109/29.1653

Dey, R., & Salem, F. M. (2017). Gate-variants of gated recurrent unit (GRU) neural networks. In 2017 IEEE 60th international midwest symposium on circuits and systems (MWSCAS), IEEE (pp. 1597–1600).

Doerschuk, P. I., Nguyen, V., & Li, A. (2002). A scalable intelligent takeoff controller for a simulated running jointed leg. Applied Intelligence, 17(1), 75–87. https://doi.org/10.1023/A:1015731916039

Dong, Y. (2018). A survey on neural network-based summarization methods. arXiv:1804.04589.

Embry, K. R., Villarreal, D. J., & Gregg, R. D. (2016). A unified parameterization of human gait across ambulation modes. In 38th annual international conference of the IEEE engineering in medicine and biology society, IEEE (pp. 2179–2183).

Embry, K., Villarreal, D., Macaluso, R., & Gregg, R. (2018). The effect of walking incline and speed on human leg kinematics, kinetics, and EMG. IEEE Dataport. https://doi.org/10.21227/gk32-e868

Embry, K. R., Villarreal, D. J., Macaluso, R. L., & Gregg, R. D. (2018). Modeling the kinematics of human locomotion over continuously varying speeds and inclines. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 26(12), 2342–2350. https://doi.org/10.1109/TNSRE.2018.2879570

Gao, Y., Wei, W., Wang, X., Li, Y., Wang, D., & Yu, Q. (2021). Feasibility, planning and control of ground-wall transition for a suctorial hexapod robot. Applied Intelligence, 51, 1–19. https://doi.org/10.1007/s10489-020-01955-2

Gouk, H., Frank, E., Pfahringer, B., & Cree, M. J. (2021). Regularisation of neural networks by enforcing lipschitz continuity. Machine Learning, 110(2), 393–416. https://doi.org/10.1007/s10994-020-05929-w

Greff, K., Srivastava, R. K., Koutník, J., Steunebrink, B. R., & Schmidhuber, J. (2016). LSTM: A search space odyssey. IEEE Transactions on Neural Networks and Learning Systems, 28(10), 2222–2232. https://doi.org/10.1109/TNNLS.2016.2582924

Hildebrandt, A. C., Schwerd, S., Wittmann, R., Wahrmann, D., Sygulla, F., Seiwald, P., & Buschmann, T. (2019). Kinematic optimization for bipedal robots: A framework for real-time collision avoidance. Autonomous Robots, 43(5), 1187–1205.

Holgate, M. A., Sugar, T. G., & Bohler, A. W. (2009). A novel control algorithm for wearable robotics using phase plane invariants. In 2009 IEEE international conference on robotics and automation, IEEE (pp. 3845–3850).

Hosseinmemar, A., Baltes, J., Anderson, J., Lau, M. C., Lun, C. F., & Wang, Z. (2019). Closed-loop push recovery for inexpensive humanoid robots. Applied Intelligence, 49(11), 3801–3814. https://doi.org/10.1007/s10489-019-01446-z

Huang, Z., Xu, W., & Yu, K. (2015). Bidirectional LSTM-CRF models for sequence tagging. arXiv:1508.01991.

Huang, C. F., & Yeh, T. J. (2019). Anti slip balancing control for wheeled inverted pendulum vehicles. IEEE Transactions on Control Systems Technology, 28(3), 1042–1049. https://doi.org/10.1109/TCST.2019.2891522

Huang, B., Xiong, C., Chen, W., Liang, J., Sun, B. Y., & Gong, X. (2021). Common kinematic synergies of various human locomotor behaviours. Royal Society Open Science, 8(4), 210161. https://doi.org/10.1098/rsos.210161

Juang, C. F., & Yeh, Y. T. (2017). Multiobjective evolution of biped robot gaits using advanced continuous ant-colony optimized recurrent neural networks. IEEE Transactions on Cybernetics, 48(6), 1910–1922. https://doi.org/10.1109/TCYB.2017.2718037

Kim, J. H., Lee, J., & Oh, Y. (2018). A theoretical framework for stability regions for standing balance of humanoids based on their LIPM treatment. IEEE Transactions on Systems, Man, and Cybernetics: Systems, 50(11), 4569–4586. https://doi.org/10.1109/TSMC.2018.2855190

Kim, D., Jorgensen, S. J., Lee, J., Ahn, J., Luo, J., & Sentis, L. (2020). Dynamic locomotion for passive-ankle biped robots and humanoids using whole-body locomotion control. The International Journal of Robotics Research, 39(8), 936–956. https://doi.org/10.1177/0278364920918014

Kim, N., Jeong, B., & Park, K. (2021). A novel methodology to explore the periodic gait of a biped walker under uncertainty using a machine learning algorithm. Robotica. https://doi.org/10.1017/S0263574721000424

Kim, K., Spieler, P., Lupu, E. S., Ramezani, A., & Chung, S. J. (2021). A bipedal walking robot that can fly, slackline, and skateboard. Science Robotics, 6(59), eabf8136. https://doi.org/10.1126/scirobotics.abf8136

Kormushev, P., Ugurlu, B., Caldwell, D. G., & Tsagarakis, N. G. (2019). Learning to exploit passive compliance for energy-efficient gait generation on a compliant humanoid. Autonomous Robots, 43(1), 79–95.

Kuffner, J. J., Kagami, S., Nishiwaki, K., Inaba, M., & Inoue, H. (2002). Dynamically-stable motion planning for humanoid robots. Autonomous Robots, 12(1), 105–118.

Kwon, O., & Park, J. H. (2009). Asymmetric trajectory generation and impedance control for running of biped robots. Autonomous Robots, 26(1), 47–78.

Lee, Y., Hwang, S., & Park, J. (2016). Balancing of humanoid robot using contact force/moment control by task-oriented whole body control framework. Autonomous Robots, 40(3), 457–472.

Li, T. H. S., Su, Y. T., Lai, S. W., & Hu, J. J. (2010). Walking motion generation, synthesis, and control for biped robot by using PGRL, LPI, and fuzzy logic. IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), 41(3), 736–748. https://doi.org/10.1109/TSMCB.2010.2089978

Li, Z., Tsagarakis, N. G., & Caldwell, D. G. (2013). Walking pattern generation for a humanoid robot with compliant joints. Autonomous Robots, 35(1), 1–14.

Li, T. H. S., Kuo, P. H., Cheng, C. H., Hung, C. C., Luan, P. C., & Chang, C. H. (2020). Sequential sensor fusion-based real-time LSTM gait pattern controller for biped robot. IEEE Sensors Journal, 21(2), 2241–2255. https://doi.org/10.1109/JSEN.2020.3016968

Liu, C., Zhang, T., Liu, M., & Chen, Q. (2020). Active balance control of humanoid locomotion based on foot position compensation. Journal of Bionic Engineering, 17(1), 134–147.

Liu, J., Chen, H., Wensing, P. M., & Zhang, W. (2021). Instantaneous capture input for balancing the variable height inverted pendulum. IEEE Robotics and Automation Letters, 6(4), 7421–7428. https://doi.org/10.1109/LRA.2021.3097074

Macaluso, R., Embry, K., Villarreal, D. J., & Gregg, R. D. (2021). Parameterizing human locomotion across quasi-random treadmill perturbations and inclines. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 29, 508–516. https://doi.org/10.1109/TNSRE.2021.3057877

Mangasarian, O. L., & Shiau, T. H. (1987). Lipschitz continuity of solutions of linear inequalities, programs and complementarity problems. SIAM Journal on Control and Optimization, 25(3), 583–595. https://doi.org/10.1137/0325033

Morris, K. J., Samonin, V., Baltes, J., Anderson, J., & Lau, M. C. (2019). A robust interactive entertainment robot for robot magic performances. Applied Intelligence, 49(11), 3834–3844. https://doi.org/10.1007/s10489-019-01565-7

Pon, MZ, KK, KP. (2021). Hyperparameter tuning of deep learning models in keras. Sparklinglight Transactions on Artificial Intelligence and Quantum Computing (STAIQC), 1(1), 36–40. https://doi.org/10.55011/staiqc.2021.1104

Qi, J., Du, J., Siniscalchi, S. M., Ma, X., & Lee, C. H. (2020). On mean absolute error for deep neural network based vector-to-vector regression. IEEE Signal Processing Letters, 27, 1485–1489. https://doi.org/10.1109/LSP.2020.3016837

Quintero, D., Villarreal, D. J., Lambert, D. J., Kapp, S., & Gregg, R. D. (2018). Continuous-phase control of a powered knee-ankle prosthesis: Amputee experiments across speeds and inclines. IEEE Transactions on Robotics, 34(3), 686–701. https://doi.org/10.1109/TRO.2018.2794536

Razavi, H., Faraji, S., & Ijspeert, A. (2019). From standing balance to walking: A single control structure for a continuum of gaits. The International Journal of Robotics Research, 38(14), 1695–1716. https://doi.org/10.1177/0278364919875205

Shrestha, A., & Mahmood, A. (2019). Review of deep learning algorithms and architectures. IEEE Access, 7, 53040–53065. https://doi.org/10.1109/ACCESS.2019.2912200

Siekmann, J., Godse, Y., Fern, A., & Hurst, J. (2021). Sim-to-real learning of all common bipedal gaits via periodic reward composition. In 2021 IEEE international conference on robotics and automation (ICRA) (pp. 7309–7315).

Simon, A. M., Ingraham, K. A., Fey, N. P., Finucane, S. B., Lipschutz, R. D., Young, A. J., & Hargrove, L. J. (2014). Configuring a powered knee and ankle prosthesis for transfemoral amputees within five specific ambulation modes. PloS One, 9(6), e99387. https://doi.org/10.1371/journal.pone.0099387

Singh, B., Gupta, V., & Kumar, R. (2021). Probabilistic modeling of human locomotion for biped robot trajectory generation. In 2021 IEEE 8th Uttar Pradesh section international conference on electrical electronics and computer engineering (UPCON) (pp. 1–6).

Singh, B., Kumar, R., Singh, V. P., et al. (2021). Reinforcement learning in robotic applications: A comprehensive survey. Artificial Intelligence Review. https://doi.org/10.1007/s10462-021-09997-9

Singh, B., Vijayvargiya, A., & Kumar, R. (2022). Kinematic modeling for biped robot gait trajectory using machine learning techniques. Journal of Bionic Engineering, 19, 355–369. https://doi.org/10.1007/s42235-021-00142-4

Sun, Y., Tang, H., Tang, Y., Zheng, J., Dong, D., Chen, X., & Luo, J. (2021). Review of recent progress in robotic knee prosthesis related techniques: Structure, actuation and control. Journal of Bionic Engineering, 18(4), 764–785.

Vicon Motion Systems Ltd. (2018). Vicon nexus reference guide. https://docs.vicon.com/display/Nexus26/Vicon+Nexus+Reference+Guide

Wang, L., Liu, Z., Chen, C. P., & Zhang, Y. (2014). Interval type-2 fuzzy weighted support vector machine learning for energy efficient biped walking. Applied Intelligence, 40(3), 453–463. https://doi.org/10.1007/s10489-013-0472-2

Wang, Z., He, B., Zhou, Y., Yuan, T., Xu, S., & Shao, M. (2018). An experimental analysis of stability in human walking. Journal of Bionic Engineering, 15(5), 827–838.

Wang, K., Ren, L., Qian, Z., Liu, J., Geng, T., & Ren, L. (2021). Development of a 3D printed bipedal robot: Towards humanoid research platform to study human musculoskeletal biomechanics. Journal of Bionic Engineering, 18(1), 150–170.

Yamaguchi, J. I., & Takanishi, A. (1997). Development of a leg part of a humanoid robot-development of a biped walking robot adapting to the humans’ normal living floor. Autonomous Robots, 4(4), 369–385.

Yang, W., Tan, R. T., Wang, S., Fang, Y., & Liu, J. (2021). Single image deraining: From model-based to data-driven and beyond. IEEE Transactions on Pattern Analysis and Machine Intelligence, 43(11), 4059–4077. https://doi.org/10.1109/TPAMI.2020.2995190

Zhou, C., Wang, X., Li, Z., & Tsagarakis, N. (2017). Overview of gait synthesis for the humanoid COMAN. Journal of Bionic Engineering, 14(1), 15–25.

Funding

The authors declare that no funds, grants, or other support were received during the preparation of this manuscript.

Author information

Contributions

Mr. Bharat Singh contributed to the development of the methodology, the analysis, and the drafting of this paper by carrying out a thorough study of the literature on the mentioned topics. He also contributed a comparison of the various gait modeling techniques. Mr. Suchit Patel contributed to the development of the gait model. Mr. Ankit Vijayvargiya contributed to the analysis of the results and provided suggestions for developing the gait models. Prof. Rajesh Kumar contributed to this work by supervising all aspects related to the methodology, analysis, and drafting. All authors read and approved the final manuscript.

Ethics declarations

Conflicts of interest

All authors certify that they have no affiliations with or involvement in any organization or entity with any financial interest or non-financial interest in the subject matter or materials discussed in this manuscript.

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Consent to publication

Not applicable.

About this article

Cite this article

Singh, B., Patel, S., Vijayvargiya, A. et al. Data-driven gait model for bipedal locomotion over continuous changing speeds and inclines. Auton Robot 47, 753–769 (2023). https://doi.org/10.1007/s10514-023-10108-6

DOI: https://doi.org/10.1007/s10514-023-10108-6