Abstract

Bayesian network classifier (BNC) allows efficient and effective inference under condition of uncertainty for classification, and it depicts the interdependencies among random variables using directed acyclic graph (DAG). However, learning an optimal BNC is NP-hard, and complicated DAGs may lead to biased estimates of multivariate probability distributions and subsequent degradation in classification performance. In this study, we suggest using the entropy function as the scoring metric, and then apply greedy search strategy to improve the fitness of learned DAG to training data at each iteration. The proposed algorithm, called One\(+\) Bayesian Classifier (O\(^{+}\)BC), can represent high-dependence relationships in its robust DAG with a limited number of directed edges. We compare the performance of O\(^{+}\)BC with other six state-of-the-art single and ensemble BNCs. The experimental results reveal that O\(^{+}\)BC demonstrates competitive or superior performance in terms of zero-one loss, bias-variance decomposition, Friedman and Nemenyi tests.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The study on data mining has long been an active research domain in artificial intelligence and machine learning due to its theoretical and practical significance. By analyzing data and performing inductive reasoning on the basis of data mining, researchers expect to extract valuable knowledge and information from data intelligently and automatically [1,2,3,4,5,6,7]. Among numerous data mining algorithms, the Bayesian networks (BNs) [8, 9] designed for inference or the Bayesian network classifiers (BNCs) [10,11,12] for classification encode the probability distribution over a set of random variables using directed acyclic graph (DAG). The DAG qualitatively describes the dependencies (or independencies) among random variables in the form of directed edges (or independent nodes), and then it quantitatively factorizes the joint probability into the product of a set of conditional probabilities.

However, how to learn a BNC that can fit data well is NP-hard [13,14,15], and the study of restricted BNC has attracted great attention especially after Naive Bayes (NB) [16, 17] is ranked as one of the top ten data mining algorithms. Restricted BNC takes the topology of NB as the skeleton, and an effective and feasible approach to structure learning is adding augmented edges to relax the attribute independence assumption of NB. The newly added directed edges should help make the multivariate probability distribution encoded in the local topology approximate the true one. However, each edge in the DAG, e.g., \(X_i\rightarrow X_j\), is commonly measured by conditional mutual information (CMI) \(I(X_i;X_j|Y)\) [18]. Thus CMI measures the significance of one single edge rather than the fitness of the learned topology to data.

High-dependence topology can help the learned BNC model complex multivariate probability distributions. To achieve excellent generalization and classification performance, the learned DAG should be robust and simple, and can represent high-dependence relationships with a limited number of directed edges. Some researchers proposed to use \(I(X_i;X_j|Y)\) to measure high-dependence relationships by implicitly assuming that the parent attributes impact on the children attribute independently rather than jointly [19]. Thus the learned topology is suboptimal and sometimes high-dependence BNC even performs poorer than one-dependence BNC.

Given the topology \(\mathcal {B}\) learned from training data \(\mathcal {D}\), the optimal value of joint probability should correspond to the maximum value of the log likelihood (LL) function \(LL(\mathcal {B}|\mathcal {D})\) or the minimum value of the entropy function \(H(X,Y|\mathcal {B},\mathcal {D})\) [17], where X and Y respectively denote the predictive attribute set and class variable. Since entropy is commonly applied to measure the disorder or randomness, if it is introduced as the scoring function for measuring the uncertainty of the learned topology, then directed edges will have causal semantics and the topology robustness will be retained when 1-dependence topology is scaled up to represent high-order dependencies. The variation in training data or noise will not make the classification performance of the learned robust BNC vary greatly. The main contributions of this paper are described as follows,

-

We use entropy based scoring criteria to measure the fitness of learned topology to training data, and then a novel greedy search strategy is introduced to learn high-dependence maximum weighted spanning tree from data. During the learning procedure, the newly added augmented edge should help improve the topology robustness at each iteration. The operations applied include edge addition and edge reversal. The learned \( n-1\) directed edges in the topology of \(\mathcal {B}\) can represent from 1-dependence to arbitrary k-dependence relationships.

-

The experimental study proves the effectiveness of the proposed entropy function on measuring dependencies and the feasibility of the application of greedy search strategy on labeled data. The proposed O\(^{+}\)BC demonstrates competitive or superior classification performance in terms of zero-one loss, bias, variance, Friedman and Nemenyi test, and it can handle different domains with distinctive properties.

The rest of the paper is structured as follows. Section 2 reviews relevant theoretical background and research work. Section 3 outlines the basic idea and details of the proposed O\(^+\)BC. Section 4 shows the experimental results of O\(^+\)BC and its comparison with six state-of-the-art BNCs. Finally, Section 5 concludes the paper.

2 Background theory and related research work

2.1 Directed acyclic graph

A succinct summary of the symbols used in this paper are listed in Table 1.

The topology of BN graphically represents the dependencies in the form of DAG, which encodes the probability distributions learned from data. Given a finite set \(X = \{ X_1, \ldots , X_n\}\) of discrete random variables, a BN for \(X \) contains two components, i.e., \(\mathcal {G}\) and \(\Theta \). \(\mathcal {G}\) is the learned DAG with vertices corresponding to the random variables and edges representing direct dependencies between the variables. \(\Theta \) represents the set of probability distributions that quantifies the DAG. A BN \(\mathcal {B}\) defines the joint probability distribution as follows,

For restricted BNC, the class variable Y is considered as the root node of DAG or the common parent of all the variables in \(X \). The main objective of BNC learning [20] is to build DAG for representing the dependency relationships among these variables, and then compute the posterior probability of the class given any configuration of the attributes, \(P(y |{\textbf {x}} )\).

2.2 Semi-naive Baysian classifiers

NB [21] is the simplest BNC without any directed edges between predictive attributes in its fixed topology. The high-confidence estimates of the 1-order conditional probability \(P(y |x_i)\) for each attribute help NB exhibit surprisingly competitive classification accuracy, and it performs significantly better than other more sophisticated BNCs on numerous datasets especially when there exists no correlation between attributes [11, 17, 22,23,24]. Semi-naive Baysian classifiers (SNBs) [25] take the network topology of NB as the skeleton and then relax the independence assumption by adding augmented edges. The SNBs can be grouped according to the maximum number of parent attributes.

Friedman et al. [17] proposed to fully mine one- dependence relationships from data and the resulting algorithm is the Tree-augmented naive Bayesian network (TAN) (see Fig.1(a)). Each attribute in the framework of maximum weighted spanning tree (MWST) can have no more than one parent attribute and all attributes are required to point outward from the randomly selected root attribute. This alleviates NB’s independence assumption to some extent and reduces the search space at the expense of the reasonability of directionality. Considering the random selection of root attribute, Jiang et al. [26] suggested to learn an ensemble rather an individual TAN by taking each attribute as the potential root attribute in turn. Based on statistical n-gram language modeling, Feng et al. [27] proposed to employ Markov chain to model adjacent attribute dependencies.

Sahami [28] further extended NB to represent arbitrary k-dependence relationships. The resulting algorithm called k-dependence Bayesian classifier or KDB for simplicity (see Fig.1(b)) allows every attribute to be conditioned to at most k parent attributes. Sahami argued that a BNC would be expected to obtain optimal Bayesian accuracy given enough data and large k. KDB also disregards the identification of directionality. KDB compares mutual information (MI) \(I(X_i;Y)\) [29] to sort the attributes in descending order, and only when \(i<j\) holds the directed edge \(X_i\rightarrow X_j\) possibly exists. To achieve the necessary efficiency and remove memory size as a bottleneck, Ana et al. [19] proposed to apply leave-one-out cross validation (LOOCV) to select attribute subset and appropriate parameter k. Then semi-supervised KDB (SSKDB) algorithm [30] applied heuristic search strategy to learn BNCs that can work jointly in the framework of semi-supervised learning. Jiang et al. [31] proposed to use MI as the weighting metric to assign weights to SPODE members in AODE. Duan et al. [32] considered the difference between SPODE members in AODE, and the weighting metric may vary greatly from instance to instance.

2.3 The scoring metrics and directionality identification

Information-theoretic metrics, e.g., AIC, BIC, MDL and MML [33,34,35,36,37], can measure the degree of fitness of a DAG to training data with distinct characteristics, thus the learned simple and robust topology may achieve excellent generalization and classification performance. From the perspective of information theory, the learned topology aims to minimize the conditional entropy of each variable given its parents and then greedy search strategy provides a feasible approach to find the parents that can give as much information as possible about this variable [38].

The MDL scoring function \(\textrm{MDL}(\mathcal {B}|\mathcal {D})\) is asymptotically correct as the size of training data increases since the learned probability distribution may approximate the true one [39], and it represents the combined length of the network description as follows [17],

where \(|\mathcal {B}|\) denotes the number of parameters for \(\mathcal {B}\). Thus the first term can be considered as a constant given \(\mathcal {B}\). Given the joint probability distribution \(P_\mathcal {B}\) over the N instances \(\{d_1,\cdots , d_N \}\) appearing in the training data \(\mathcal {D}\), the second term in (2), i.e., \(LL (\mathcal {B}|\mathcal {D})\), is commonly applied to measured the quality of \(\mathcal {B}\) and can be factorized into the following form,

Maximizing the log likelihood function \(LL (\mathcal {B}|\mathcal {D})\) is equivalent to minimizing the entropy function \(H (X,Y|\mathcal {B},\mathcal {D})\) [17], and (3) turns to be

where

The entropy function corresponding to NB is

Researchers commonly apply greedy search strategy and add augmented edges between attributes for the obvious computational reasons. The learning procedure starts with the topology of NB and successively applies local operations to maximize the score until a local minima is reached. The difference between (4) and (6), i.e., \(\sum _{i=1}^nI(X_i;\Pi _i|Y)= H (X,Y|\mathrm{{NB},\mathcal {D}})-H (X,Y|\mathcal {B},\mathcal {D})\), measures the quality of the conditional dependencies between attributes mined from data. Due to the symmetry form of CMI, we need to make rule to sort the attributes explicitly or implicitly, and then determine the directionality of the edge \(X_i- X_j\) or which one is the parent for attribute pair \(\{X_i,X_j\}\).

3 Learning robust Bayesian network classifier with simple topology

For restricted BNCs, as shown in Fig. 2 conditional independence \(\mathcal {B}_{ind}\) and conditional dependence \(\mathcal {B}_{de}\) are the two basic local topologies. The CMI is commonly used to measure the significance of conditional dependence between attribute pair, and it can be factorized in the form of entropy functions as follows [40],

where

Thus the underlying essence of \(I(X_i;X_j|Y)\) is to evaluate the extent to which \(\mathcal {B}_{de}\) is more reasonable than \(\mathcal {B}_{ind}\), whereas \(I(X_i;X_j|Y)\) cannot directly measure the extent to which \(\mathcal {B}_{de}\) fits data [41]. Furthermore, the aim to learn the topology of BNC is to explore complex multivariate probability distributions from data. Although the 2-dependence topology shown in Fig. 3(a) and the 1-dependence topology shown Fig. 3(b) have the same skeleton, considering the impact of attribute \(X_k\), the significance of \(X_i\rightarrow X_j\) and \(X_j\rightarrow X_i\) may vary greatly. Thus \(I(X_i;X_j|Y)\) measures the significance of one single edge whereas the entropy function \(H (X,Y|\mathcal {B},\mathcal {D})\) in (4) measures the fitness of the learned topology to data, and the latter is considered more appropriate to be the scoring function for measuring the robustness of the learned topology.

3.1 The initial topology of O\(^+\)BC with two nodes

For high-dependence BNCs, the number of directed edges or dependency relationships increases as the topology complexity increases, and the learned high dimensional probability distributions may fit data better especially while dealing with large dataset. In contrast, TAN allows at most one parent for every attribute node, and its 1-dependence topology cannot represent high dimensional probability distributions, thus TAN commonly demonstrates superior performance while dealing with small or medium sized datasets [42]. To prove the effectiveness of the scoring function in learning high-dependence topology with a limited number of directed edges, the proposed algorithm O\(^+\)BC also takes \(n-1\) directed edges in the topology as TAN. We will clarify the basic idea in detail in the following discussion.

O\(^+\)BC initializes the network topology of \(\mathcal {B}\) as an empty topology, and then applies local search operations to add attribute \(X_i\) and the edges connecting to it to the topology at each iteration. During the learning procedure, the edges connecting to \(X_i\) should help achieve local optimal solution, or more precisely, the minimum value of \(H (X,Y|\mathcal {B},\mathcal {D})\). The operations applied include edge addition and edge reversal. Assuming that initially there are two attribute nodes \(\{X_1, X_2\}\) and the class node Y in the topology, as shown in Fig. 4 the directed edge between \(X_1\) and \(X_2\) may be either \(X_1\rightarrow X_2\) or \(X_2 \rightarrow X_1\). The entropy functions corresponding to one-dependence BNCs \(\mathcal {B}_{12}\) and \(\mathcal {B}_{21}\) are respectively

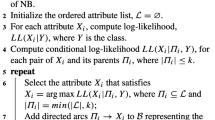

Thus \(H(X_1,X_2,Y)=H(\mathcal {B}_{12})= H(\mathcal {B}_{21})\) always holds, and the topologies for \(\mathcal {B}_{12}\) and \(\mathcal {B}_{21}\) are both reasonable and we can randomly select one of them as the candidate one-dependence topology of O\(^+\)BC. The corresponding pseudo codes are shown as follows,

However, the number of bits encoded in the undirected edge \(X_i-\{X_j,X_k\}\) may be different from that encoded in \(\{X_i,X_j\}-X_k\) for high-dependence BNC. Thus while dealing with three or more attributes, it is difficult or even an NP-hard problem to capture all appropriate correlations between attributes [43] and simultaneously differentiate between the parent attributes and the children attributes.

3.2 High-dependence maximum weighted spanning tree

To transform undirected tree to directed one, TAN requires that the root node point outwards, and this rule helps simplify the learning procedure whereas restricts the learning flexibility. We argue that the resulting one-dependence topology may be suboptimal since it fails to consider other possible transformations. The \(n-1\) directed edges can represent from 1-dependence to arbitrary k-dependence relationships. Since the structure learning complexity increases exponentially with k, we set \(k=2\) in the following discussion and experimental study.

The augmented edges pointing to or from the newly added attribute should help minimize the entropy function at each iteration. For example, if we need to add one more attribute node, e.g., \(X_3\), to the initial one-dependence topology of O\(^+\)BC learned by Algorithm 1, then the attribute set in the topology includes \(\{X_1, X_2,X_3\}\). The undirected edge between \(X_3\) and \(\{X_1, X_2\}\) may be either \(X_3-X_1\) or \(X_3-X_2\), and the resulting four directed topologies are shown in Fig. 5. The corresponding entropy functions are respectively

Four types of directed topologies with three attribute nodes, including a) the topology with directed edge \(X_2\rightarrow X_3\), b) the topology with directed edge \(X_3\rightarrow X_2\), c) the topology with directed edge \(X_1\rightarrow X_3\) and d) the topology with directed edge \(X_3\rightarrow X_1\)

The topologies for \(\mathcal {B}_{23}\), \(\mathcal {B}_{13}\) and \(\mathcal {B}_{31}\) are one-dependence, and only the topology for \(\mathcal {B}_{32}\) is two-dependence since \(X_2\) has two parent attributes \(\{X_1,X_3\}\). Due to the restriction in structure complexity, k-dependence BNC can select for each attribute no more than k parents attributes. Suppose that \(H(X_1,X_2,X_3,Y|\mathcal {B}_{32})\) \(<H(X_1,X_2,X_3,Y|\mathcal {B}_{23})\) \(<H(X_1,X_2,X_3,Y|\mathcal {B}_{13})=H(X_1,X_2,X_3,Y|\mathcal {B}_{31})\) holds, then the topology for \(\mathcal {B}_{32}\) is the optimal one among all, whereas the topology for \(\mathcal {B}_{23}\) is the optimal one among one-dependence topologies. The description above gives an example to illustrate the learning procedure after \(X_3\) is selected as the next attribute. In practice, each attribute other than \(\{X_1,X_2\} \) in the attribute list will be tested to check if it is the right one to be added. So we need to test the directed edge connecting to the candidate attribute, and finally select the augmented topology which is the most helpful for improving \(H (X,Y|\mathcal {B},\mathcal {D})\). Thus we apply a greedy search to iteratively add the attributes and corresponding directed edges to the topology. The algorithm terminates until all the attributes are included in the topology of \(\mathcal {B}\). The learning procedure of adding a new directed edge can be summarized as shown in Algorithm 2. The O\(^+\)BC algorithm is illustrated in Fig. 6 and described in Algorithm 3.

Given a testing instance \({\textbf {x}} \), O\(^+\)BC calculates the joint probability distribution and assigns \(y^*\) to \({\textbf {x}} \) using

At training time, O\(^+\)BC generates a three-dimensional table of co-occurrence counts for each pair of attribute values and each class value, which needs \(O(Nn^2)\) time. O\(^+\)BC selects two attributes to build an initial model by computing \(H(X_i,X_j,Y)\) in (9), which has a time complexity of \(O(m(nv)^2)\). Assuming that each attribute can take a maximum of k parent attributes, O\(^+\)BC then iteratively selects the attributes and the corresponding directed edges and adds them to the topology by calculating the corresponding entropy function \(H(\mathcal {B})\) in (4). Hence, the corresponding time complexity is \(O(m(nv)^{k+1})\). At classification time, O\(^+\)BC calculates the joint probability distribution in (11) for classification and it only requires O(mnk) time.

4 Experimental study

For comparison study, the state-of-the-art BNCs described as follows run on 44 benchmark datasets from the UCI machine learning repository [44]. Table 2 provides detailed characteristics of these datasets. The missing values for numerical attribute and nominal attribute are respectively replaced by means or modes from the available data. The MDL [45] discretization method is applied to handle numerical attributes for each dataset.

-

TAN [17], which extends NB by representing the one-dependence relationships in the form of MWST.

-

SKDB [19], which extends KDB and uses LOOCV to select the best parameter k.

-

AODE-SR [46], which eliminates generalizations at classification time by using subsumption resolution.

-

WAODE-MI [31], which assigns weight to each SPODE by using MI as the weighting metric.

-

IWAODE [32], which defines weights for each SPODE by using instance-based weighting metric.

Each algorithm is tested for 10 rounds on 44 datasets using the 10-fold cross-validation method.

The Win/Draw/Loss (W/D/L) records are used to perform statistical comparisons, and Tables 3, 4 and 5 summarize the number of datasets on which the proposed O\(^+\)BC obtains better, similar or worse outcomes relative to the alternative in terms of zero-one loss, bias and variance. The detailed experimental results are respectively given in Tables 7 – 9 in the Appendix. We assess a difference as significant if the outcome of a one-tailed binomial sign test is less than 0.05 [46, 47].

4.1 Zero-one loss

Zero-one loss (ZOL) is a common loss function to measure the classification accuracy. Table 3 provides statistical W/D/L records in terms of ZOL to show the comparison of O\(^+\)BC against its competitors.

We can see in Table 3 that O\(^+\)BC achieves the best classification performance among all the BNCs. For example, O\(^+\)BC enjoys significant advantages over TAN (19/22/3) and SKDB (22/17/5), since O\(^+\)BC could represent high-dependence relationships and achieve the tradeoff between data fitness and topology complexity. Among ensemble BNCs, WATAN represents the same undirected dependency relationships in its TAN members, and weighted AODEs don’t differentiate significant dependencies from insignificant ones due to its unrealistic independence assumptions. Thus O\(^+\)BC outperforms WATAN (18/24/2), AODE-SR (20/22/2), WAODE-MI (16/25/3) and IWAODE (19/23/2).

To further describe the advantage of O\(^+\)BC, Fig. 7 shows scatter plots of the experimental results in terms of ZOL, with each point corresponding to one dataset. The three dotted lines in black, blue and red respectively correspond to \(Y=1.1 *X\), \(Y=X\) and \(Y=0.9 *X\), which respectively denote that O\(^+\)BC performs significantly better, equally well or significantly poorer than its competitor in terms of ZOL. As shown in Fig. 7, O\(^+\)BC enjoys significant advantages over TAN, WATAN, SKDB, AODE-SR, WAODE-MI and IWAODE on 11, 11, 16, 10, 9 and 11 datasets, respectively. And O\(^+\)BC performs significantly poorer than TAN, WATAN, SKDB, AODE-SR, WAODE-MI and IWAODE only on 1, 1, 4, 1, 2 and 2 datasets, respectively. These illustrative results prove the effectiveness of our proposed O\(^+\)BC.

4.2 Bias and variance

The bias-variance decomposition [48] of ZOL provides further insights into the analysis of classification performance. Bias measures the resulting systematic error of the learner for describing the decision boundary, and variance measures the sensitivity of the learner to random variation in the training data.

As shown in Table 4, O\(^+\)BC obtains significantly better bias compared to other learners. The 0-dependence topology for NB requires only one pass through the data. The implicit independence assumptions make NB fail to represent high-dependence relationships. The numbers of directed edges in DAG for TAN and each member of WATAN are the same, and the same topology skeleton makes them fit training data to the same extent. Thus O\(^+\)BC performs much better than TAN (17/23/4) and WATAN (18/22/4) in terms of bias. SKDB and weighted AODE can represent more dependency relationships, whereas complex topology with insignificant dependencies may bias the estimate of probability distributions. Thus O\(^+\)BC also outperforms SKDB (19/16/9), AODE-SR (20/19/5), WAODE-MI (18/21/5) and IWAODE (24/16/4).

Variance-wise, the weighting metrics and subsumption resolution can help AODE-SR, WAODE-MI and IWAODE finely tune the probability estimates whereas their independence assumptions reduce the sensitivity to variation in training data. Thus as shown in Table 5, they demonstrate significant advantages over O\(^+\)BC in terms of variance. TAN constructs MWST to represent one-dependence relationships between attributes, and WATAN uses weighting metric to mitigate the negative effect caused by random selection of the root attribute. High-dependence topology corresponds to high-order probability distributions and relatively high risk of overfitting. Thus O\(^+\)BC demonstrates significant advantages over TAN (38/2/4), WATAN (38/2/4) and SKDB (39/2/3).

4.3 Friedman and Nemenyi test

The Friedman test [49] rank the classification performance of these BNCs in terms of ZOL, bias and variance. The difference in average ranks under the null-hypothesis can help statistically investigate the differences among BNCs. As shown in Table 6, the average ranks of ZOL, bias and variance are respectively 4.4278, 3.3790 and 74.0856. Based on the significance level \(\alpha = 0.05\) and the degree of freedom \((7-1)\times (44-1) = 258\), the critical value for Friedman test is 2.1338, thus we can reject the null-hypothesis and there exist significant difference among the compared algorithms.

Since the Friedman test can only conclude whether there exists difference in metrics for evaluating the classification performance of the compared algorithms, we apply the Nemenyi test [50] as a “follow-up test” to find out which algorithms have statistical differences in their performance. Figure 8 depicts the experimental results of 7 algorithms using the Nemenyi test on ZOL, bias and variance. The BNCs and the matching average ranks are respectively represented by the left line and the parallel right line. If the difference is greater than the critical difference (CD) [51], the algorithm with lower average rank is statistically better than the algorithm with higher average rank. The algorithms will be connected if the differences among them are not significant. The higher position corresponds to lower rank and better performance. The CD value for \(\alpha = 0.05\) is 1.3582 for 44 datasets and 7 algorithms.

In Fig. 8(a), the Nemenyi test differentiates O\(^+\)BC from other BNCs and the analysis reveals that O\(^+\)BC achieves the lowest average ZOL rank followed by WATAN (3.7159), TAN (3.8068), WAODE-MI (4.0341). The differences in ZOL ranks between TAN, WAODE-MI, IWAODE, AODE-SR and SKDB are small, ranging from 3.8068 to 5.0795. Figs. 8(b) and (c) graphically show the compared results of the Nemenyi test on bias and variance. From Fig. 8(b), the bias rank obtained by O\(^+\)BC (3.1023) is the lowest followed by WATAN (3.6136) and SKDB achieves the third lowest mean bias rank (3.8182). Although WAODE-MI, AODE-SR and IWAODE can fully represent the conditional dependencies due to ensemble learning, some conditional independencies or weak dependencies may be introduced into the topology of committee members. Thus WAODE-MI, AODE-SR and IWAODE achieve higher mean bias ranks than other BNCs (4.2841, 4.2841 and 4.8750 respectively). Variance-wise, as shown in Fig. 8(c), the top three ranked algorithms are IWAODE (1.9886), AODE-SR (2.4545) and WAODE-MI (2.6250), with no significant difference in performance among them due to their fixed structures. O\(^+\)BC achieves lower average variance rank (3.8523) when compared to WATAN (5.5455) and SKDB (5.6932). TAN has the highest mean variance rank (5.8409) since it encodes all significant dependency relationships in one topology.

4.4 Comparision of running time

We will compare the averaged training and classification time in milliseconds of 7 algorithms on 44 UCI benchmark datasets in this subsection. Each bar in Fig. 9 shows the logarithmic average running time of the corresponding algorithm in 10-fold cross validation experimental study. As shown in Fig. 9(a), AODE-SR and IWAODE require the least training time among all the algorithms, since they need no structure learning for training. In contrast, WAODE-MI needs additional time to calculate MI as the attribute weights. TAN utilizes CMI to construct MWST. WATAN constructs n TAN classifiers using n MWSTs. SKDB needs to select attribute subset and parameter k. O\(^+\)BC uses information-theoretic metrics (i.e., conditional entropy) to order attributes and determine the directions of the edges and thus it needs the most training time against other algorithms.

As shown in Fig. 9(b), WATAN uses a weighted average learning method at classification time thus it needs time to calculate the weights of MWSTs. AODE-SR and IWAODE spend more time than WAODE-MI since AODE-SR checks all attribute-value pairs for generalization relationships and IWAODE needs to compute the weighting metrics for each testing instance during classification. TAN, SKDB and O\(^+\)BC are single BNCs, thus they need less time to compute the joint probability for classification.

5 Conclusion

The magnificent bloom of the machine learning area enhances the development of Bayesian learning. Bayesian network can not only qualitatively describe the implicit knowledge hidden in data in the form of DAG, but it can also quantitatively measure the fitness to data in the form of factorized joint probability. In this paper, we prove theoretically the reasonableness and feasibility of the application of entropy function as the scoring function, and the experimental results demonstrate the efficiency and effectiveness of the greedy search strategy. The proposed algorithm O\(^+\)BC allows to build high-dependence topology with a limited number of dependency relationships, and it delivers lower ZOL and bias than single learners (e.g., TAN, SKDB) and ensemble learners (e.g., WATAN, AODE-SR, WAODE-MI and IWAODE) at the cost of relatively high variance. The potential directions for future research include the study on how to scale up O\(^+\)BC to represent complex multivariate distributions and learn instantiated O\(^+\)BC to represent distinct data characteristics hidden in different instances.

Data Availability

The data that support the findings of this study are available from the corresponding author, upon reasonable request.

References

Fayyad U, Piatetsky-Shapiro G, Smyth P (1996) From data mining to knowledge discovery in databases. Artif Intell 17(3):37–37

Yukselturk E, Ozekes S, Türel YK (2014) Predicting dropout student: an application of data mining methods in an online education program. Eur J Open, Dist E-learning 17(1):118–133

Wang L, Xie Y, Pang M, Wei J (2022) Alleviating the attribute conditional independence and I.I.D. assumptions of averaged one-dependence estimator by double weighting. Knowl-Based Syst 250:109078

Wu H, Yan G, Xu D (2014) Developing vehicular data cloud services in the IoTenvironment. IEEE Trans Ind Inform 10(2):1587–1595

Peña-Ayala A (2014) Educational data mining: a survey and a data mining-based analysis of recent works. Expert Syst Appl 41(4):1432–1462

Jiang L, Zhang L, Yu L, Wang D (2019) Class-specific attribute weighted naive Bayes. Pattern Recognit 88:321–330

Ren Y, Wang L, Li X, Peng M, Wei J (2022) Stochastic optimization for bayesian network classifiers. Appl Intell 52(13):15496–15516

Pearl J (1988) Probabilistic reasoning in intelligent systems: networks of Plausible Inference. Morgan Kaufmann

Wang L, Zhang S, Mammadov M, Li K, Zhang X (2021) Semi-supervised weighting for averaged one-dependence estimators. Appl Intell 52(4):4057–4073

Zhang H, Petitjean F, Buntine W (2020) Bayesian network classifiers using ensembles and smoothing. Knowl Inf Syst 62(9):3457–3480

Jiang L, Li C, Wang S, Zhang L (2016) Deep feature weighting for naive Bayes and its application to text classification. Eng Appl Artif Intell 52:26–39

Kong H, Shi X, Wang L (2021) Averaged tree-augmented onedependence estimators. Appl Intell 51(7):4270–4286

Zhang H, Jiang L, Li C (2022) Attribute augmented and weighted naive Bayes. Sci China Inf Sci 65(12):222101

Chickering DM (1996) Learning Bayesian networks is NP-complete. Learn Data: Artif Intell Stat V:121-130

Wang L, Zhou J, Wei J, Pang M, Sun M (2022) Learning causal Bayesian networks based on causality analysis for classification. Eng Appl Artif Intell 114:105212

Jiang L, Zhang H, Cai Z (2008) A novel Bayes model: hidden naive bayes. IEEE Trans Knowl Data Eng 21(10):1361–1371

Friedman N, Geiger D, Goldszmidt M (1997) Bayesian network classifiers. Mach Learn 29(2):131–163

Cover TM, Thomas JA (2006) Elements of Information Theory. Wiley-Interscience

Martínez AM, Webb GI, Chen S, Zaidi NA (2012) Scalable learning of Bayesian network classifiers. J Mach Learn Res 17(1):1515–1549

Zhao X, Yan H, Hu Z, Du D (2022) Deep spatio-temporal sparse decomposition for trend prediction and anomaly detection in cardiac electrical conduction. IISE Trans Healthc Syst Eng 12(2):150–164

Jiang L, Zhang L, Li C, Wu J (2019) A correlation-based feature weighting filter for naive Bayes. IEEE Trans Knowl Data Eng 31:201–213

Langley P, Iba W, Thompson K (1992) An analysis of Bayesian classifiers. In: Proceedings of AAAI conference on artificial intelligence, pp 223–228

Pang Y, Zhao X, Hu J, Yan H, Liu Y (2022) Bayesian spatio-temporal graph transformer network (b-star) for multi-aircraft trajectory prediction. Knowl-Based Syst 249:108998

Domingos P, Pazzani M (1997) On the optimality of the simple Bayesian classifier under zero-one loss. Mach Learn 29(2):103–130

Kononenko I (1991) Semi-naive Bayesian classifier. In: Machine learning-EWSL-91: European working session on learning porto, pp 206–219

Jiang L, Cai Z, Wang D, Zhang H (2012) Improving Tree augmented Naive Bayes for class probability estimation. Knowl-Based Syst 26:239–245

Peng F, Schuurmans D, Wang S (2004) Augmenting naive bayes classifiers with statistical language models. Inf Retr 7(3–4):317–345

Sahami M (1996) Learning limited dependence Bayesian classifiers. In: Proceedings of the second international conference on knowledge discovery and data mining, pp 335–338

Shannon CE (2001) A mathematical theory of communication. ACM SIGMOBILE Mob Comput Commun Rev 5(1):3– 55

Wang L, Zhang X, Li K, Zhang S (2022) Semi-supervised learning for k-dependence Bayesian classifiers. Appl Intell 52(4):3604–3622

Jiang L, Zhang H, Cai Z, Wang D (2012) Weighted average of one-dependence estimators. J Exp Theor Artif Intell 24(2):219– 230

Duan Z, Wang L, Chen S, Sun M (2020) Instance-based weighting filter for superparent one-dependence estimators. Knowl-Based Syst 203:106085

Akaike H (1974) A New Look at the Statistical Model Identification. IEEE Trans Autom Control 19:716–723

Schwarz G (1978) Estimating the dimension of a model. Ann Stat 6:461–465

Suzuki J (1999) Learning Bayesian belief networks based on the MDL principle: an efficient algorithm using the branch and bound technique. IEICE Trans Inf Syst 82(2):356–367

Korb KB, Nicholson AE (2004) Bayesian artificial intelligence. Chapman and Hall

Kong H, Wang L (2023) Flexible model weighting for one-dependence estimators based on point-wise independence analysis. Pattern Recognit 139:109473

Sun H (2020) Simultaneous material microstructure classification and discovery using acoustic emission signals. Arizona State University

Heckerman D (1998) A tutorial on learning Bayesian networks. Springer, Netherlands

Liu Y, Wang L, Mammadov M, Chen S, Wang G, Qi S, Sun M (2021) Hierarchical independence thresholding for learning Bayesian network classifiers. Knowl-Based Syst 212:106627

Zhao X, Yan H, Liu Y (2021) Hierarchical tree-based sequential event prediction with application in the aviation accident report. In: 2021 IEEE 37th international conference on data engineering (ICDE), pp 1925–1930

Wang L, Chen S, Mammadov M (2018) Target learning: a novel framework to mine significant dependencies for unlabeled data. In: Proceedings of the 22nd Pacific-Asia conference on knowledge discovery and data mining, pp 06–117

Pang Y, Zhao X, Yan H, Liu Y (2021) Data-driven trajectory prediction with weather uncertainties: a Bayesian deep learning approach. Transp Res C: Emerg Technol 130:103326

Bache K, Lichman M, UCI machine learning repository, Available online: https://archive.ics.uci.edu/ml/datasets.html

Fayyad U, Irani K (1993) Multi-interval discretization of continuous-valued attributes for classification learning. In: Proceedings of the 13th international joint conference on artificial intelligence, pp 1022–1029

Zheng F, Webb GI, Suraweera P, Zhu L (2012) Subsumption resolution: an efficient and effective technique for semi-naive Bayesian learning. Mach Learn 87(1):93–125

Fisher RA (1970) Statistical methods for research workers. Breakthroughs in statistics: Methodology and distribution 66–70

Kohavi R, Wolpert DH (1996) Bias plus variance decomposition for zero-one loss functions. In: Proceedings of the 13th international conference on machine learning, pp 275–283

Friedman M (1937) The use of ranks to avoid the assumption of normality implicit in the analysis of variance. J Am Stat Assoc 32(200):675–701

Nemenyi PB (1963) Distribution-free multiple comparisons, Princeton University

Demšar J (2006) Statistical comparisons of classifiers over multiple datasets. J Mach Learn Res 7:1–30

Acknowledgements

This work is supported by the National Key Research and Development Program of China (No.2019YFC1804804), Open Research Project of The Hubei Key Laboratory of Intelligent Geo-Information Processing (No.KLIGIP-2021A04), and the Scientific and Technological Developing Scheme of Jilin Province (No.20200201281JC).

Author information

Authors and Affiliations

Contributions

Lanni Wang: Conceptualization, Validation, Visualization, Writing - original draft. Limin Wang: Methodology, Supervision, Writing - review & editing, Funding acquisition. Lu Guo: Formal analysis, Project administration. Qilong Li: Software, Investigation. Xiongfei Li: Writing - review & editing, Validation.

Corresponding author

Ethics declarations

Competing Interest

The authors declare that they have no conflict of interest.

Ethical and Informed Consent for Data Used

This study does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, L., Wang, L., Guo, L. et al. Exploring complex multivariate probability distributions with simple and robust bayesian network topology for classification. Appl Intell 53, 29799–29817 (2023). https://doi.org/10.1007/s10489-023-05098-y

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-023-05098-y