Abstract

Several studies have shown the excellent performance of deep learning in image segmentation. Usually, this benefits from a large amount of annotated data. Medical image segmentation is challenging, however, since there is always a scarcity of annotated data. This study constructs a novel deep network for medical image segmentation, referred to as asymmetric U-Net generative adversarial networks with multi-discriminators (AU-MultiGAN). Specifically, the asymmetric U-Net is designed to produce multiple segmentation maps simultaneously and use the dual-dilated blocks in the feature extraction stage only. Further, the multi-discriminator module is embedded into the asymmetric U-Net structure, which can capture the available information of samples sufficiently and thereby promote the information transmission of features. A hybrid loss by the combination of segmentation and discriminator losses is developed, and an adaptive method of selecting the scale factors is devised for this new loss. More importantly, the convergence of the proposed model is proved mathematically. The proposed AU-MultiGAN approach is implemented on some standard medical image benchmarks. Experimental results show that the proposed architecture can be successfully applied to medical image segmentation, and obtain superior performance in comparison with the state-of-the-art baselines.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Medical image segmentation is one of the most important tasks in biological image processing and analysis. Its purpose is to segment the parts of a medical image with some special implications and extract related features, thereby assisting doctors in diagnosis and pathology research. Previous approaches to medical image segmentation were often based on traditional methods, such as support vector machines (SVMs) [1] and random forests (RF) [2], which generally demanded manual features in advance [3, 4]. These traditional methods often create problems in terms of efficiency and subjectivity. Naturally, it is necessary to explore advanced segmentation algorithms for medical images.

In recent years, deep learning, owing to its powerful and automatic feature extraction capability, has been widely used in image processing and computer vision, such as image reconstruction [5, 6], image classification [7], object detection [8], etc. This technique has also been extensively employed in image segmentation [9, 10]. A fully convolutional network (FCN) in [11] was the first image segmentation approach to perform end-to-end image segmentation. Subsequently, Badrinarayanan et al. [12] improved upon FCN to develop a novel architecture named SegNet. SegNet consists of a 13 layer deep encoder network, which extracts spatial features from the image. A corresponding 13 layer deep decoder network upsamples the feature maps to predict the segmentation masks. And a series of DeepLap model in [13] performed semantic segmentation using dilated convolutions and employed the VGG [14] as a feature extractor to raise the depth of the network.

Despite these approaches have made tremendous successes in image segmentation, a major drawback of the convolutional neural network (CNN) architectures is that they require massive volumes of training data [15,16,17]. Unfortunately, in the context of medical images, the situation is always scarcity of labeled images due to the fact that the annotation process is time-consuming and prone to errors. Therefore, developing a novel architecture of medical diagnosis on small samples is of practical significance.

CNN has shown great promise in medical image segmentation recently. This mainly attributes to the development of U-Net [9]. The structure of U-Net is quite similar to SegNet, comprising an encoder and a decoder network. The corresponding layers of the encoder and decoder network are connected by skip connections, which allows efficient information flow and performs well when sufficiently large datasets are not available. Simultaneously, in order to avoid the over-fitting problem caused by the lack of data, the author also proposes a data enhancement method to expand the data in the data pre-processing stage.

Subsequently, several modified versions about skip connections of U-Net have emerged. Drozdzal et al. [18] employed both long and short skip connections to enhance the information flow for biomedical image segmentation. Yu et al. [19] raised a novel combination of residual connections (ConvNet), which can greatly improve the segmentation performance of the proposed network by enhancing the information propagation both locally and globally. Zhou et al. [20] presented nested U-Net structures for medical image segmentation by using short-skip and long-skip connections to link shallow and deep features. Furthermore, Zhuang et al. [21] fused skip connections and residual blocks to acquire more information flow paths on the segmentation task for blood vessels in retinal images. All the approaches for changing the skip connections increase information flow. These tricks perform well when sufficiently large datasets are not available.

Almost all previous works have been designed for a certain kind of medical image model. However, the objects of interest are of irregular and different scales in most cases, which images may originate from various modalities. Therefore, a network should be robust enough to analyze objects at different scales. Various deformable modules on the U-Net have become popular to settle this problem. Oktay et al. [22] proposed a novel attention gate model for two large computed tomography abdominal datasets that automatically learned to focus on target structures of varying shapes and sizes. Gu et al. [23] devised a context extractor in a traditional encoder-decoder structure to capture more high-level information. Moreover, Alom et al. [24] embedded the recurrent convolution module into U-Net. Ibtehaz et al. [17] presented an inception-like block to reconcile the features learned from the image at different scales. In [25], a large kernel encoder-decoder network with deep multiple atrous convolution is proposed, where the use of this network can capture multi-scale contexts by enlarging the valid receptive field. However, image segmentation requires dense pixel-level labeling. A common property across all CNN architectures is that all label variables are predicted independently from each other [26].

Generative adversarial network (GAN) can make the model achieve better results from a distribution perspective by introducing a discriminator, which solves the problem of inconsistent distribution between different data domains [27]. By making the discriminator unable to distinguish data from two different domains, it indirectly leads them to belong to the same distribution. In [26], the image segmentation approach based on GAN has been explored to reinforce spatial contiguity in the output label maps. In medical image segmentation, there have been several types of researches on using U-Net and GAN. These works usually regard medical image segmentation as the process of generating segmentation for samples and introduce a discriminator to fit the generated segmentation distribution to the real segmentation distribution. Dong et al. [28] designed a model called U-Net generative adversarial network (U-Net-GAN), which jointly trained a set of U-Nets as generators and fully convolutional networks as discriminators to implement multimodel segmentation. For segmenting the tumor in breast ultrasound images, Negi et al. [29] used Residual-Dilated-Attention-Gate-UNet as the generator, which serves as a segmentation module. Then the Wasserstein GAN algorithm was employed to stabilize training. However, these approaches involve iterative training between the generator and single discriminator. In fact, it is important for each recovery segmentation in the decoder to increase information flow from high-level semantic information in small sample problems.

To solve the above-mentioned problems, we propose a novel segmentation model for small-sample medical images, referred to as asymmetric U-Net generative adversarial network with multi-discriminators (AU-MultiGAN). More specifically, AU-MultiGAN jointly trains an asymmetric U-Net as generators and multi-discriminators to implement the medical image segmentation tasks. The novelty of the proposed model architecture is twofold. First, the construction of the asymmetric U-Net generates multiple results of different sizes from distinct upsampling levels. Before upsampling, the features of different receptive fields are extracted through multiple proposed dual-dilated block to obtain higher-level semantic information. Second, a multi-discriminator module is designed for improving sample utilization and increasing information flow from the high-level semantic information. The multi-discriminators by employing a discriminator for each upsampling layer of generating the segmentation achieve deep supervision. Furthermore, a hybrid loss is designed for imbalanced sample issues and an adaptive parameter selection method is proposed for this loss. Here, the hybrid loss includes both discriminator and segmentation losses, in which the segmentation loss consists of FocalLoss [30] and the reconstruction loss. The discriminator loss adopts the form of the mean square error. Such a combined loss can possess various functions, such as dealing with the imbalance class, matching the generated segmentation with the real segmentation, and stability training. In addition, we conduct a theoretical and experimental analysis of the proposed method. The experimental results on benchmark datasets indicate that the proposed AU-MultiGAN can be successfully applied to medical image segmentation in small sample cases and it is more effective than the state-of-the-art baselines.

The main contributions of this study can be summarised as follows.

-

A multi-discriminator deep network, which mainly embeds the multi-discriminator modules into the asymmetric U-Net in order to utilize the information of samples sufficiently and thereby enhance the information flow of features, is devised to overcome the small sample issue in image segmentation tasks.

-

A hybrid loss is proposed that integrates discriminator loss with segmentation loss. This new hybrid loss can not only balance the intra-classes of samples but also keep consistent with the generated and real segmentation maps. Further, an adaptive selection method on the scale factors for this hybrid loss is designed.

-

The theoretical convergence of the proposed method is discussed and analyzed rigorously.

The remainder of this paper is organised as follows. Section 2 lists some notations that are used throughout the text. Section 3 details the AU-MultiGAN. Experimental results are presented and analysed in Section 4. The conclusion of the study is provided in Section 5, and the mathematical proofs of the convergence of the proposed model are presented in the final Appendix.

2 Notations

This section lists some notations used in this study. Let Rm×n be the set of real numbers with m × n dimensions. For a matrix, X ∈Rm×n, we denote its elements as xij(i = 1,2,…,m, j = 1,2,…,n) and call \(\phi _{1}({x}) = \frac {1}{1+\mathrm {e}^{-{x}}} (x\in \mathbf {R})\) as the logistic sigmoidal function and \(\phi _{2}(x_{ij})=\frac {\mathrm {e}^{x_{ij}}}{{\sum }_{i,j}\mathrm {e}^{x_{ij}}} (x_{ij}\in \mathbf {R})\) as the softmax function. \(\sigma (x)=\max \limits (0,x)(x\in \mathbf {R})\) denotes as the rectified linear unit (ReLU).

We use ψ(⋅) to represent max pooling, τ(⋅) to denote a random neuron discard operation (dropout), and ξ(⋅) to express pixelshuffle [31]. Moreover, [X, Y] represents a concatenation operator for X and Y; \(p_{_{\boldsymbol {Y}_{i}}}\), \(p_{_{\boldsymbol {X}}}\), and \(p_{g_{i}}\) are the distributions of label, sample, and the generated segmentation, respectively; \(p_{_{\boldsymbol {Y}_{i}}}(\cdot )\) and \(p_{g_{i}}(\cdot )\) represents the probability density functions of \(p_{_{\boldsymbol {Y}_{i}}}\) and \(p_{g_{i}}\), respectively, where i is the discriminator index.

For readability, all the above-mentioned symbols are listed in Table 1.

3 Method

In this section, we first explain the architecture of the proposed network, then describe the training strategy, and finally analyse the convergence for the proposed algorithm.

3.1 Architectural design

The proposed network includes two major parts: a asymmetric U-Net and a multi-discriminator module, as shown in Fig. 1. The asymmetric U-Net is regarded as a generator for segmentation problems, containing a dual-dilated block, bottleneck block, decoder block, and classifier block. In contrast to the U-Net, the main differences in the asymmetric U-Net are reflected in two aspects. One aspect is that a dual-dilated block is designed for each convolutional layer in the encoder. The other is that all level classifier blocks are followed by segmented images for each up-sampling feature extraction stage of the decoder. The multi-discriminator corresponds to the multi-level output of the asymmetric U-Net, and the multiple discriminators are uniform in structure. The description of the architecture is given in Sections 3.2 and 3.3 in further detail.

3.2 Asymmetric U-net

Segmentation tasks can be regarded as a generation of segmented images. The asymmetric U-Net is a generator and employs the dual-dilated blocks in the feature extraction stage to obtain more abundant information, whereas just one convolution branch is used in the process of generating segmentation maps. Concretely, let X ∈Rm×n be the input of the asymmetric U-Net. The image that is segmented at the i th level in the asymmetric U-Net is denoted by \(G_{i}(\boldsymbol {X};\theta _{i})(i=1,2\dots , k)\), where 𝜃i is the parameter of generator Gi. For simplicity, we often omit 𝜃i and write Gi(X;𝜃i) as Gi(X) or \({\boldsymbol {Y}}_{i}^{\prime }\). Specifically, we can write Gi(X) as a matrix form, \(\left (g_{js}^{(i)}\right )_{m_{i}\times n_{i}}\), where \(g_{js}^{(i)}\) represents the pixel of Gi(X) at coordinates (j, s). In addition, mi = m/(2i− 1) and ni = n/(2i− 1) denote the length and width of Gi(X), respectively. Further, Gi(X) can be computed as

where B(⋅) is the bottleneck block, and Fi(⋅),Hi(⋅), and Ci(⋅)(i = 1,2,…,k) represent the dual-dilated, decoder, and classifier blocks in the i th level, respectively. Details of these blocks are provided as follows.

-

1)

Dual-Dilated Block. First, the features are extracted from different receptive fields by using two branches of 3 × 3 convolutions with different dilated rates. The dilated rate is set to 1 and 2. The operation is equivalent to adopting a 3 × 3 convolution and a 5 × 5 convolution with fewer parameters. Subsequently, we use a 3 × 3 convolution to further extract features and fuse the distinct features. The encoder in the proposed network consists of k dual-dilated blocks. For each Fi(i = 1,2,…,k), we employ max pooling for the output features from Fi− 1 in advance, as the input features, \(\bar {\boldsymbol {F}}_{i}\), are expected to be at different scales. Let

$$ \bar{\boldsymbol{F}}_{i} = \psi(F_{i}(\bar{\boldsymbol{F}}_{i-1})), i=1,2,\ldots,k $$(2)Then, \(\bar {\boldsymbol {F}}_{i-1}\) is the input feature of Fi(⋅); in particular, \(\bar {\boldsymbol {F}}_{0}=\boldsymbol {X}\) and \(\hat {\boldsymbol {F}}_{i} = F_{i}(\bar {\boldsymbol {F}}_{i-1})\) are the output features of Fi(⋅). This strategy can provide abundant information for the discriminator at the lower level.

-

2)

Bottleneck Block. The function of the bottleneck block is to process the lower-level features in the asymmetric U-Net. Only τ(⋅) is expanded in relation to a dual-dilated block. The remarkable role of this additional operation is to avoid over-fitting. From the block, the high-level features, O, are specifically obtained for sample X.

-

3)

Decoder Block. To correspond with the encoder, the decoder adopts k decoder blocks as well. In parallel, the features in the decoder block require dissimilar scales because the input features Zi for each Hi(i = 1,2,…,k) are the concatenation of \(\bar {\boldsymbol {H}}_{i+1}\) and \(\hat {\boldsymbol {F}}_{i}\), i.e.,

$$ \boldsymbol{Z}_{i} = [\bar{\boldsymbol{H}}_{i+1}, \hat{\boldsymbol{F}}_{i}],i=1,\ldots,k, $$(3)where

$$ \bar{\boldsymbol{H}}_{i}=\xi(H_{i}(\boldsymbol{Z}_{i}))(i=2,3,\ldots,k)\ \text{and} \ \bar{\boldsymbol{H}}_{k+1}=\xi(\boldsymbol{O}) $$(4)denote the upsampling features through pixelshuffle for the output from Hi. Consequently, for the given features \(\bar {\boldsymbol {H}}_{i+1}(i=1,\ldots ,k)\), the first phase in Hi(⋅) involves putting the upsampling results from a low level and the shallow features in the same level dual-dilated block together according to (3). Next, two convolutional layers are exploited to handle the features from the concatenate maps:

$$ H_{i}(\boldsymbol{Z}_{i})=\sigma(\boldsymbol{W}_{H_{i}}^{2}*\sigma(\boldsymbol{W}_{H_{i}}^{1}*\boldsymbol{Z}_{i}+\boldsymbol{b}_{H_{i}}^{1})+\boldsymbol{b}_{H_{i}}^{2}),i=1,\ldots,k, $$(5)where \(\boldsymbol {W}_{H_{i}}^{j}\) (j = 1,2) expresses a 3 × 3 convolution kernel with a dilated rate of 1 in module Hi. The decoder block is designed for processing high-abstract features and restoring the size of the image.

-

4)

Classifier Block. The classifier block aims to obtain segmented images. To this end, we apply a sigmoidal function ϕ1 after two convolutional layers to limit the value between 0 and 1, described as

$$ C_{i}(\bar{\boldsymbol{C}}_{i}) = \phi_{1} (\sigma(\boldsymbol{W}_{C_{i}}^{2} * \sigma(\boldsymbol{W}_{C_{i}}^{1} * \bar{\boldsymbol{C}}_{i} + \boldsymbol{b}_{C_{i}}^{1}) + \boldsymbol{b}_{C_{i}}^{2})), $$where \(\boldsymbol {W}_{C_{i}}^{1}\) and \(\boldsymbol {W}_{C_{i}}^{2}\) denote the 3 × 3 convolution kernel and the 1 × 1 convolution kernel with a dilated rate of 1 in modules Ci(i = 1,...,k), respectively; \(\bar {\boldsymbol {C}}_{i}=H_{i}(\boldsymbol {Z}_{i})\); the output of Ci(⋅)(i = 1,…,k) can be regarded as a segmented image defined by (1).

3.3 Multi-discriminator mechanism

The asymmetric U-Net is a generator for producing multi-scale segmentation and the decoder contributes k outputs in different scales, as mentioned earlier. Accordingly, k discriminators are used to train k tasks of the generator. The role of the discriminator is to receive the segmentation generated from the asymmetric U-Net and correspondingly output a confidence value. Here, \(D_{i}(G_{i}(\boldsymbol {X});\beta _{i})(i=1,2\dots , k)\) denotes the confidence value of the i th segmentation Gi(X), where βi is the parameter of discriminator Di. For simplicity, we omit βi and write Di(Gi(X);βi) as Di(Gi(X)). The confidence value represents the probability that the input segmentation is a real segmentation label. For segmentation tasks, it is natural that the generated segmentation is expected to be similar to the real segmentation label, and it is similar for the generative tasks. Therefore, it is feasible to transfer generative ideas to segmentation tasks. In applications, the structures of all discriminators Di(i = 1,…,k) is the same. Specifically, five 4 × 4 convolutions are followed by average pooling and the softmax function ϕ2(⋅). Here, the softmax function is used to produce confidence values in the discriminators. A schematic diagram of the dual-dilated block is shown in Fig. 1.

3.4 Hybrid loss and its adaptive scale factor selection

This subsection firstly describes two different losses, the segmentation loss and the discriminator loss, and then builds a combination loss for the proposed AU-MultiGAN model.

-

1)

Segmentation Loss. An intuitive idea for the segmentation task is to minimize the pixel-wise loss between the inputs and the segmented ones, which can be modelled as

$$ L_{\text{seg}}(G_{i}) = L_{\text{FL}}(G_{i}) + L_{\text{re}}(G_{i}), $$(6)where

$$ \begin{array}{@{}rcl@{}} L_{\text{FL}}(G_{i}) &=& \mathbb{E}_{\boldsymbol{X}\sim p_{_{\boldsymbol{X}}}}\sum\limits_{j,s}[-\alpha(1-g_{js}^{(i)})^{\gamma}]\log(g_{js}^{(i)}), \end{array} $$(7)$$ \begin{array}{@{}rcl@{}} L_{\text{re}}(G_{i}) &=& \mathbb{E}_{\boldsymbol{X}\sim p_{_{\boldsymbol{X}}}}[\|\boldsymbol{Y}_{i}-G_{i}(\boldsymbol{X})\|_{1}]. \end{array} $$(8)Here, Yi(i = 1,2,…,k) denotes the real segmented image; LFL(Gi) is referred to as FocalLoss, dealing with the imbalance classes; 0 ≤ α ≤ 1 and γ ≥ 0 are hyperparameters. In particular, if γ = 1 and α = 1, then LFL(Gi) is the binary cross-entropy loss. When α = 0, the segmentation loss only contains the reconstruction loss Lre(Gi), which ensures that the generated segmentation Gi(X) ends up matching closely with Yi. Segmentation loss guides the generator to realize a segmentation task.

-

2)

Discriminator Loss. It is well known that the loss (8) may lead to the missing of high-frequency information and blur segmentation results. To generate more realistic results, a least square-based GAN loss is introduced, defined as

$$ \begin{array}{@{}rcl@{}} L_{\text{GAN}}(G_{i},D_{i}) &=& \mathbb{E}_{\boldsymbol{Y}_{i}\sim p_{_{\boldsymbol{Y}_{i}}}}\left[\frac{1}{2}(D_{i}(\boldsymbol{Y}_{i})-1)^{2}\right]\\ &&+\mathbb{E}_{\boldsymbol{X}\sim p_{_{\boldsymbol{X}}}}\left[\frac{1}{2}{D_{i}^{2}}(G_{i}(\boldsymbol{X}))\right]. \end{array} $$(9) -

3)

Hybrid Loss. The objective function for the proposed AU-MultiGAN is obtained by combining the segmentation loss (6) and the discriminator loss (9):

$$ L(G_{i},D_{i})=L_{\text{GAN}}(G_{i},D_{i})+\lambda_{1}L_{\text{FL}}(G_{i})+\lambda_{2}L_{\text{re}}(G_{i}), $$(10)where i = 1,2,…,k, and λ1,λ2 > 0 are scale factors. In the following discussion, the key is the optimisation of the hybrid loss:

$$ \underset{G_{i}, D_{i}}{\min} L(G_{i},D_{i}). $$(11)We adopt the alternate iteration method to solve this problem. Firstly, we optimize discriminator Di for a fixed Gi, i.e.

$$ D_{i}^{*} = \arg\underset{D_{i}}{\min} L_{\text{GAN}} (G_{i},D_{i}), $$(12)and then, we optimize generator Gi for a fixed \(D_{i}^{*}\):

$$ G_{i}^{*} = \arg\underset{G_{i}}{\min} L_{\text{GAN}}(G_{i},D_{i}^{*})+\lambda_{1}L_{\text{FL}}(G_{i})+\lambda_{2}L_{\text{re}}(G_{i}). $$(13) -

4)

Adaptive Scale Factor Selection. To solve the optimisation problem (13) with scale factor λ1 and λ2, the conventional method is to set the parameter values by using manual empirical selection. This manual selection method is always inefficient. Once the selection is inappropriate, it may yield poor results. Hence, we design an adaptive method to select the scale factors. Denote \(L(G_{i},\lambda _{1},\lambda _{2}) = L_{\text {GAN}}(G_{i}, D_{i}^{*}) + \lambda _{1}L_{\text {FL}}(G_{i}) + \lambda _{2}L_{\text {re}}(G_{i})\). Then, the function must be optimised as a conditional extremum problem. Fortunately, it can be converted to a Lagrange duality problem [32]:

$$ \underset{\lambda_{1},\lambda_{2}>0}{\max} \underset{G_{i}}{\min} L(G_{i},\lambda_{1},\lambda_{2}). $$(14)Consequently, following (13), the method of gradient ascent can be adopted to update the scale factors λ1 and λ2:

where η is the learning rate and is a fixed constant selected empirically, which is discussed in Section 4.1; the partial derivatives can be written as \(\frac {\partial L(G_{i}^{*},\lambda _{1},\lambda _{2})}{\partial \lambda _{1}} = L_{\text {FL}}(G_{i}^{*})\) and \(\frac {\partial L(G_{i}^{*},\lambda _{1},\lambda _{2})}{\partial \lambda _{2}}=L_{\text {re}}(G_{i}^{*})\).

Overall, the whole training process of AU-MultiGAN is listed in Algorithm 1.

3.5 Theoretical analysis

This subsection gives the convergence analysis for the proposed method. The main theoretical result is summarised in Theorem 1, and its proof is provided in the Appendix. As a preparation for the analysis, two lemmas are given. Their mathematical proofs are also provided in the Appendix. Specifically, the optimal discriminator Di for any given generator Gi is considered in Lemma 1, and Lemma 2 indicates that when the discriminator loss achieves the value \(\frac {1}{4}\), \(p_{g_{i}} = p_{_{\boldsymbol {Y}_{i}}}\) holds for all i(i = 1,2,…,k).

Lemma 1

For a fixed Gi, the optimal discriminator Di is

for all i = 1,2,…,k.

Lemma 2

For all i = 1,2,…,k, \(p_{g_{i}}=p_{_{\boldsymbol {Y}_{i}}}\) if and only if the discriminator loss achieves value \(\frac {1}{4}\).

Theorem 1

Assume that Gi and Di have sufficient capacity. If at each step of Algorithm 1, the discriminator loss reaches value \(\frac {1}{4}\), and \(p_{g_{i}}\) is updated to improve the criterion

for all i = 1,2,…,k, then \(p_{g_{i}}\) converges to \(p_{_{\boldsymbol {Y}_{i}}}\).

4 Experiments

In this section, a series of experiments are conducted to illustrate the performance of the AU-MultiGAN for small-sample medical image segmentation task. The experiments are carried out in a Python 3.6 environment running on a double NVIDIA GTX 1080 GPU and an Intel(R), Xeon(R) W-2123 CPU @ 3.60 GHz with 64 GB main memory.

4.1 Experiments setup

Datasets

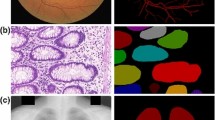

We choose the different medical imaging modalities to evaluate the proposed segmentation framework. The datasets are compiled from six databases: ISBI2009 [33], ISBI2012 [34, 35], ISBI2014 [36, 37], DRIVE [38], ISIC [39, 40], and CVC-ClinicDB [41]. These datasets contain various types of medical images, such as cell data, digital eye masks, dermoscopy image and endoscopy image. Meanwhile, these datasets can also be classified into different types of medical images segmentation tasks, such like cell contour segmentation, cell nuclei segmentation, organizational segmentation in several different situations and retinal vessel detection. In these six datasets, there is an underlying commonality for four datasets (ISBI2009, ISBI2012, ISBI2014, and DRIVE) is that the amount of annotated data is small. In order to test and verify the proposed method can be applied to many types of medical images, we randomly select a subset of other two datasets according to the scale of ISBI2012 as new mini-batch datasets in our experiment. To sum up, the details of each dataset are listed in Table 2.

Evaluation

Four indices, including dice coefficient (Dice), intersection over union (IoU), accuracy, and sensitivity, are adopted to comprehensively assess the performance of the segmentation. The detail definitions can be described as follows.

-

The Dice [42] between two binary pixels can be written as

$$ \text{Dice} = \frac{2{\sum}_{i,j}^{m,n} \hat{y}_{ij}y_{ij}}{{\sum}_{i,j}^{m,n}\hat{y}_{ij}^{2}+{\sum}_{i,j}^{m,n}y_{ij}^{2}}, $$(18)where \(\hat {y}_{ij}\) and yij denote the pixels of the predicted binary segmented image and the ground truth binary map at coordinates (i, j), respectively.

-

A similarity measure related to Dice referred to as the IoU [43] can be defined as

$$ \text{IoU} = \frac{{\sum}_{i,j}^{m,n}\hat{y}_{ij}y_{ij}}{{\sum}_{i,j}^{m,n}\hat{y}_{ij}^{2}+{\sum}_{i,j}^{m,n}y_{ij}^{2}-{\sum}_{i,j}^{m,n}\hat{y}_{ij}y_{ij}}. $$(19) -

The accuracy (Acc) describes the proportion of correctly classified samples to the total number of samples and can be represented as

$$ \text{Acc}=\frac{TP+TN}{TP+TN+FP+FN}, $$(20)where TP, TN, FP and FN are the number of true positives, true negatives, false positives and false negatives, respectively.

-

The sensitivity (Sen) also called recall calculates the proportion of positives (TP) that are correctly predicted to all positives in the true label image. This metric can be written as

$$ \text{Sen} = \frac{TP}{TP+FN}. $$(21)

It is worth mentioning that in the binary image segmentation problem, the Sen metric only considers the proportion of the generated segmentation map that is correctly predicted in the real segmentation map. Even if there are many noises or outliers in the generated segmentation map, it does not affect the value of this metric. The main reason is that these noises or outliers are always false positives (FP), which is not included in the denominator part of the formula (21). Therefore, it is generally unreasonable. In contrast, Acc, Dice, and IoU have fully considered the true and the false prediction in the real segmentation map. Based on theses analyses, we mainly focus on the three indices Acc, Dice, and IoU when comparing with other methods. The index Sen is given as a reference item.

Implementation details

All methods are implemented without data augmentation. The 5-fold cross-validation is adopted in all the experiments. Furthermore, the learnable weight parameters of the asymmetric U-Net and the multi-discriminator are optimised by using the adaptive moment estimation (Adam) method with a learning rate of 4 × 10− 3. Also, we set the hyperparameters α = 0.25, γ = 1, and k = 2, respectively. A discussion of these parameters is presented concretely in Section 4.3.

4.2 Comparison results

In this subsection, we compare the proposed method with seven methods based on the U-Net architecture as the baseline models, including U-Net [9], Unet+ + [20], CE-Net [23], LadderNet [21], R2U-Net [24], Attention U-Net [22], and MultiResUnet [17]. Simultaneously, two traditional methods, SVM [1] and RF [2], are also used in this paper. Next, we will analyze the experimental results from both quantisation and vision.

The quantitative results for different datasets are listed in Tables 3, 4, 5, 6, 7 and 8. Bold represents the best performance. It can be observed that the proposed method has a great improvement on the Dice, IoU, and Acc for almost all types of datasets when comparing with other methods, although it is not the best performance on the index Sen. But this is reasonable as explained in Section 4.1. Specially, for ISBI2009, ISBI2012, ISBI2014, DRIVE, and CVC-ClinicDB(small), the proposed method achieves the best performances on the Dice, IoU, and Acc over all the baseline models. Further, for ISIC(small) dataset, the effectiveness of the proposed AU-MultiGAN method surpasses those of the existing methods except for MultiResUnet according to Table 8. One possible reason for failure is that the parameter number of MultiResUnet are more larger than our framework. This is generally unfair. To this end, we reduce the parameter number for MultiResUnet to a situation similar to the proposed method. The related results are recorded in Table 9. Obviously, the proposed AU-MultiGAN method can obtain the improvement with roughly 0.75%, 1.1%, and 2.1% for Dice, IoU, and Acc in comparison with MultiResUnet with the same magnitude. These good performances largely benefit from the fact that the proposed multi-discriminator module and the use of dual-dilated block can guide the generator to capture more favorable information and thereby improve the segmentation performance. To sum up, the above-mentioned analyses indicate the superiority of the proposed method.

Moreover, from the view of visual effects, we present segmentation results of some representative images for these different datasets. The corresponding visual images are displayed in Figs. 2, 3, 4, 5, 6 and 7. It can be observed that our method performs well on various modalities of datasets. For example, Fig. 2 shows the result of ISBI2009, where some images of this dataset are with bright objects that are almost indistinguishable from the actual nuclei. Specially, the input image is polluted by small particles that are not actual cell nuclei. But the AU-MultiGAN method can segment the images reliably and acquire perfect segmentation in the regions of interest in comparison with other approaches. Similarly, we can see that the proposed method have obtain clearer boundaries or textures compared with other methods from Figs. 3–5, corresponding to the datasets ISBI2012, ISBI2014 and DRIVE, respectively. Moreover, for the ISIC and CVC-ClinicDB datasets, the segmentation tasks in Figs. 6 and 7 are more difficult. It seems that all the baselines have unsatisfactory results. However, most regions of the proposed framework are segmented accurately when comparing with other methods, although it still has few wrong segmentation part. These effective visual results are likely to depend on the proposed multi-discriminator module and dual-dilated block, which contribute to produce useful features in the deep model.

In summary, the proposed method can achieve superior performance in comparison with the baselines from both quantitative and visual results.

4.3 Discussion

-

1)

The impact of hyperparameters α andγ. Adaptive selecting the scale factors α and γ is generally a challenging work. Here, we take the ISBI2012 dataset as an example. Table 10 depicts the effects of different values of α and γ in a relatively wide. As can be seen, the best results are in the case of α = 0.25 and γ = 1. Consequently, we set them empirically.

Table 10 Results on different scale factors for the ISBI2012 dataset -

2)

Choice of the discriminators number k. We will discuss the influence of different number of discriminators k to determine the sizes in the overall network. To this end, we complete a set of comparative experiments with k = {1,2,3,4} on the ISBI2012 dataset, as shown in Table 11. It is easy to see that the performance of our framework is optimal when k = 2. Consequently, we select this value empirically.

Table 11 AU-MultiGAN with various k values for the ISBI2012 dataset -

3)

Effectiveness of the multi-discriminator mechanism and the dual-dilated block. We consider the influence of the multi-discriminator mechanism and the dual-dilated block. To this end, some comparison experiments are carried out, taking the ISBI2012 dataset as an example. The baselines include four cases, i.e. only the asymmetric U-Net called MultiUnet, only the single discriminator model referred to as SingleGAN, the single-scale U-Net with a multi-discriminator named UGAN, and the proposed AU-MultiGAN. The results are presented in Table 12. Clearly, we can see that the multi-discriminator mechanism is effective when compared with SingleGAN and MultiUnet. Further, the dual-dilated block of the proposed method is also useful in comparison with UGAN, which use a single-scale block. These demonstrate that the multi-discriminator and dual-dilated block are of importance in the proposed method.

Table 12 Effectiveness of the multi-discriminator mechanism and the dual-dilated block -

4)

Ablation study of the hybrid loss. We discuss the effectiveness of the proposed hybrid loss through three groups of experiments on the ISBI2012 dataset as an example. The first one only consider the discriminator loss LGAN denoted by AU-MultiGAN (LGAN). The second case embeds the Focal Loss into the discriminator loss LGAN, referred to as AU-MultiGAN(LGAN + λ1LFL). The last one is the proposed method. The corresponding results are listed in Table 13. It can be seen that the proposed method outperforms than other two cases. This verifies the effectiveness of the hybrid loss and may balance the intra-classes of samples so that keeping consistent with the generated and real segmentation maps.

Table 13 Ablation study of the hybrid loss -

5)

Convergence of AU-MultiGAN. In Fig. 8, we plot the curves of the segmentation loss in each epoch on the first four datasets in Table 2. It can be seen that for all cases, the proposed model attains convergence quickly. This can be attributed to the synergy between the multi-discriminator mechanism and batch normalisation. These imply that the proposed AU-MultiGAN method are likely to obtain superior results in fewer training epochs and are consistent with the theoretical results.

-

6)

Analysis of model size. We give the parameters for different methods. The results are recorded in Table 14. As can be seen, the parameter number of the proposed method is smaller than all the baselines, demonstrating that the proposed method is a lightweight framework.

Table 14 The model sizes of the proposed method and all the baselines

5 Conclusion

We present a novel method based on GAN for medical image segmentation with small samples by designing multiple adversarial networks, referred to as AU-MultiGAN. This framework mainly contains an asymmetric U-Net module and a multi-discriminator module. The former is designed to produce multiple segmentation maps. Further, the multi-discriminator module is embedded into the asymmetric U-Net structure, capturing the available information of samples sufficiently and thereby promote the information transmission. Also, a hybrid loss is developed and an adaptive method of selecting the scale factors is designed. Simultaneously, the convergence of the proposed method is proved mathematically. Experimental results demonstrate that the effectiveness of proposed method surpasses the existing baselines.

There is scope for further research in this task. For example, it is feasible to introduce a more sophisticated architecture that can adapt to few-shot segmentation. In addition, to extend our work for the selection of hyperparameters, meta-learning [44] may be a viable approach.

References

Barkana BD, Saricicek I, Yildirim B (2017) Performance analysis of descriptive statistical features in retinal vessel segmentation via fuzzy logic, ANN, SVM, and classifier fusion. Knowledge-Based Syst 118:165–176. https://doi.org/10.1016/j.knosys.2016.11.022

Mitra J, Bourgeat P, Fripp J, Ghose S, Rose S, Salvado O, Connelly A, Campbell B, Palmer S, Sharma G et al (2014) Lesion segmentation from multimodal MRI using random forest following ischemic stroke. Neuroimage 98:324–335. https://doi.org/10.1016/j.neuroimage.2014.04.056

Litjens G, Kooi T, Bejnordi BE, Setio AAA, Ciompi F, Ghafoorian M, Van Der Laak JA, Van Ginneken B, Sánchez CI (2017) A survey on deep learning in medical image analysis. Med Image Anal 42:60–88. https://doi.org/10.1016/j.media.2017.07.005

Yu H, Yang Z, Tan L, Wang Y, Sun W, Sun M, Tang Y (2018) Methods and datasets on semantic segmentation: a review. Neurocomputing 304:82–103. https://doi.org/10.1016/j.neucom.2018.03.037

Cao F, Liu H (2019) Single image super-resolution via multi-scale residual channel attention network. Neurocomputing 358:424–436. https://doi.org/10.1016/j.neucom.2019.05.066

Zhang J, Gu Y, Tang H, Wang X, Kong Y, Chen Y, Shu H, Coatrieux J (2020) Compressed sensing MR image reconstruction via a deep frequency-division network. Neurocomputing 384:346–355. https://doi.org/10.1016/j.neucom.2019.12.011

Cao F, Guo W (2020) Cascaded dual-scale crossover network for hyperspectral image classification. Knowledge-Based Syst 189:105122. https://doi.org/10.1016/j.knosys.2019.105122

Li Z, Dong M, Wen S, Hu X, Zhou P, Zeng Z (2019) Clu-cnns: object detection for medical images. Neurocomputing 350:53–59. https://doi.org/10.1016/j.neucom.2019.04.028

Ronneberger O, Fischer P, Brox T (2015) U-net: convolutional networks for biomedical image segmentation. In: Proceedings of international conference on medical image computing and computer-assisted intervention. Springer, pp 234–241. https://doi.org/10.1007/978-3-319-24574-4_28

Fang L, Wang X, Wang L (2020) Multi-modal medical image segmentation based on vector-valued active contour models. Inf Sci 513:504–518. https://doi.org/10.1016/j.ins.2019.10.051

Long J, Shelhamer E, Darrell T (2015) Fully convolutional networks for semantic segmentation. In: Proceedings of IEEE conference on computer vision and pattern recognition. IEEE, pp 3431–3440. https://doi.org/10.1109/TPAMI.2016.2572683

Badrinarayanan V, Kendall A, Cipolla R (2017) Segnet: a deep convolutional encoder-decoder architecture for image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence 39(12):2481–2495. https://doi.org/10.1109/TPAMI.2016.2644615

Wang N, Zhang Z, Xiao J, Cui L (2019) DeepLap: a deep learning based non-specific low back pain symptomatic muscles recognition system. In: 2019 16th annual IEEE international conference on sensing, communication, and networking (SECON). IEEE, pp 1–9. https://doi.org/10.1109/SAHCN.2019.8824868

Simonyan K, Zisserman A (2015) Very deep convolutional networks for large-scale image recognition. In: International conference on learning representations

Roy AG, Siddiqui S, Pölsterl S, Navab N, Wachinger C (2020) ‘Squeeze & excite’ guided few-shot segmentation of volumetric images. Med Image Anal 59:101587. https://doi.org/10.1016/j.media.2019.101587

Cui H, Wei D, Ma K, Gu S, Zheng Y (2020) A unified framework for generalized low-shot medical image segmentation with scarce data. IEEE Trans Med Imaging. https://doi.org/10.1109/TMI.2020.3045775

Ibtehaz N, Rahman MS (2020) MultiResUNet: rethinking the U-Net architecture for multimodal biomedical image segmentation. Neural Networks 121:74–87. https://doi.org/10.1016/j.neunet.2019.08.025

Drozdzal M, Vorontsov E, Chartrand G, Kadoury S, Pal C (2016) The importance of skip connections in biomedical image segmentation: 179–187. https://doi.org/10.1007/978-3-319-46976-8_19

Yu L, Yang X, Chen H, Qin J, Heng PA (2017) Volumetric ConvNets with mixed residual connections for automated prostate segmentation from 3D MR images. In: Proceedings of thirty-first AAAI conference on artificial intelligence. ACM, pp 66–72

Zhou Z, Siddiquee MMR, Tajbakhsh N, Liang J (2018) Unet++: a nested U-Net architecture for medical image segmentation. In: Proceedings of deep learning in medical image analysis and multimodal learning for clinical decision support. Springer, pp 3–11. https://doi.org/10.1007/978-3-030-00889-5_1

Zhuang J (2018) LadderNet: multi-path networks based on U-Net for medical image segmentation. arXiv:1810.07810

Oktay O, Schlemper J, Folgoc LL, Lee M, Heinrich M, Misawa K, Mori K, McDonagh S, Hammerla NY, Kainz B et al (2018) Attention U-Net: learning where to look for the pancreas. In: Proceedings of medical imaging with deep learning

Gu Z, Cheng J, Fu H, Zhou K, Hao H, Zhao Y, Zhang T, Gao S, Liu J (2019) CE-Net: context encoder network for 2D medical image segmentation. IEEE Trans Med Imaging 38 (10):2281–2292. https://doi.org/10.1109/TMI.2019.2903562

Alom MZ, Yakopcic C, Hasan M, Taha TM, Asari VK (2019) Recurrent residual U-Net for medical image segmentation. J Med Imaging 6(1):014006. https://doi.org/10.1117/1.JMI.6.1.014006

Zhang C, Shu H, Yang G, Li F, Wen Y, Zhang Q, Dillenseger JL, Coatrieux JL (2020) HIFUNet: multi-class segmentation of uterine regions from MR images using global convolutional networks for HIFU surgery planning. IEEE Trans Med Imaging 39(11):3309–3320. https://doi.org/10.1109/TMI.2020.2991266

Luc P, Couprie C, Chintala S, Verbeek J (2016) Semantic segmentation using adversarial networks. In: NIPS workshop on adversarial training

Xie H, Lei H, Zeng X, He Y, Chen G, Elazab A, Yue G, Wang J, Zhang G, Lei B (2020) Amd-gan: attention encoder and multi-branch structure based generative adversarial networks for fundus disease detection from scanning laser ophthalmoscopy images. Neural Networks 132:477–490. https://doi.org/10.1016/j.neunet.2020.09.005

Dong X, Lei Y, Wang T, Thomas M, Tang L, Curran WJ, Liu T, Yang X (2019) Automatic multiorgan segmentation in thorax CT images using U-net-GAN. Medical Physics 46(5):2157–2168. https://doi.org/10.1002/mp.13458

Negi A, Raj ANJ, Nersisson R, Zhuang Z, Murugappan M (2020) RDA-UNET-WGAN: an accurate breast ultrasound lesion segmentation using wasserstein generative adversarial networks. Arabian Journal for Science and Engineering 45:6399– 6410

Lin TY, Goyal P, Girshick R, He K, Dollár P (2017) Focal loss for dense object detection. In: Proceedings of ieee international conference on computer vision, pp 2980–2988. https://doi.org/10.1109/iccv.2017.324

Shi W, Caballero J, Huszár F, Totz J, Aitken AP, Bishop R, Rueckert D, Wang Z (2016) Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In: Proceedings of IEEE conference on computer vision and pattern recognition. pp 1874–1883. https://doi.org/10.1109/CVPR.2016.207

De Haan L, Ferreira A (2007) Extreme value theory: an introduction. Springer Science & Business Media

Coelho LP, Shariff A, Murphy RF (2009) Nuclear segmentation in microscope cell images: A hand-segmented dataset and comparison of algorithms. In: Proceedings of IEEE international symposium on biomedical imaging: from nano to macro. IEEE, pp 518–521. https://doi.org/10.1109/ISBI.2009.5193098

Arganda-Carreras I, Turaga SC, Berger DR, Cireşan D, Giusti A, Gambardella LM, Schmidhuber J, Laptev D, Dwivedi S, Buhmann JM et al (2015) Crowdsourcing the creation of image segmentation algorithms for connectomics. Front Neuroanat 9:142. https://doi.org/10.3389/fnana.2015.00142

Cardona A, Saalfeld S, Preibisch S, Schmid B, Cheng A, Pulokas J, Tomancak P, Hartenstein V (2010) An integrated micro-and macroarchitectural analysis of the Drosophila brain by computer-assisted serial section electron microscopy. PLoS Biol 8(10):1000502. https://doi.org/10.1371/journal.pbio.1000502

Lu Z, Carneiro G, Bradley AP, Ushizima D, Nosrati MS, Bianchi AG, Carneiro CM, Hamarneh G (2016) Evaluation of three algorithms for the segmentation of overlapping cervical cells. IEEE J Biomed Health Inform 21(2):441–450. https://doi.org/10.1109/JBHI.2016.2519686

Lu Z, Carneiro G, Bradley AP (2015) An improved joint optimization of multiple level set functions for the segmentation of overlapping cervical cells. IEEE Trans Image Process 24(4):1261–1272. https://doi.org/10.1109/TIP.2015.2389619

Staal JJ, Abramoff M, Niemeijer M, Viergever M, van Ginneken B (2004) Drive: digital retinal images for vessel extraction. IEEE Trans Med Imaging 23(4):501–509

Codella NC, Gutman D, Celebi ME, Helba B, Marchetti MA, Dusza SW, Kalloo A, Liopyris K, Mishra N, Kittler H et al (2018) Skin lesion analysis toward melanoma detection: A challenge at the 2017 international symposium on biomedical imaging (ISBI), hosted by the international skin imaging collaboration (ISIC). In: Proceedings of IEEE 15th international symposium on biomedical imaging, pp 168–172. https://doi.org/10.1109/ISBI.2018.8363547

Tschandl P, Rosendahl C, Kittler H (2018) The ham10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Scientific Data 5(1):1–9. https://doi.org/10.1038/sdata.2018.161

Bernal J, Sánchez FJ, Fernández-Esparrach G, Gil D, Rodríguez C, Vilariño F (2015) Wm-dova maps for accurate polyp highlighting in colonoscopy: Validation vs. saliency maps from physicians. Computerized Medical Imaging and Graphics 43:99–111. https://doi.org/10.1016/j.compmedimag.2015.02.007

Fausto M, Nassir N, Seyed-Ahmad A (2016) V-Net: fully convolutional neural networks for volumetric medical image segmentation. In: Proceedings of 2016 fourth international conference on 3D vision. IEEE, pp 565–571. https://doi.org/10.1109/3DV.2016.79

Hassan T, Akram MU, Werghi N, Nazir N (2020) Rag-fw: A hybrid convolutional framework for the automated extraction of retinal lesions and lesion-influenced grading of human retinal pathology. IEEE J Biomed Health Inform 24(99):1–1. https://doi.org/10.36227/techrxiv.11877879.v1

Shu J, Xie Q, Yi L, Zhao Q, Zhou S, Xu Z, Meng D (2019) Meta-weight-net: learning an explicit mapping for sample weighting. In: Advances in neural information processing systems, pp 1919–1930

Boyd S, Boyd SP, Vandenberghe L (2004) Convex optimization. Cambridge University Press, Cambridge

Acknowledgments

This work was supported by the National Natural Science Foundation of China under grant 61672477, and the Zhejiang Provincial Natural Science Foundation of China under grant LZ20F030001.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A

Appendix A

1.1 A.1 Proof of Lemma 1

For a given generator Gi, the training criterion for the sub-discriminator Di(i = 1, 2,…,k) is to minimize the discriminator loss, LGAN(Gi,Di). Let

By the formula in (22), the optimisation problem of the sub-discriminator can be transformed into a least squares problem:

It achieves the minimum at \(\frac {p_{_{\boldsymbol {Y}_{i}}}(\boldsymbol {Y}_{i})}{p_{_{\boldsymbol {Y}_{i}}}(\boldsymbol {Y}_{i})+p_{g_{i}}(\boldsymbol {Y}_{i})} (i=1,2,\ldots ,k)\) in [0, 1]. This completes the proof.

1.2 A.2 Proof of Lemma 2

For all i(i = 1, 2,…,k), if the relation \(p_{g_{i}}=p_{_{\boldsymbol {Y}_{i}}}\) is satisfied, then \(D_{i}^{*}=\frac {1}{2}\) is calculated by (16). Hence,

To see that \(\frac {1}{4}\) is the best possible value of \(\underset {D_{i}}{\min \limits } L_{\text {GAN}}(G_{i},D_{i})\), reached only for \(p_{g_{i}}=p_{_{\boldsymbol {Y}_{i}}}\), we observe

where the relationship between \(D_{i}^{*2}(\boldsymbol {Y}_{i})\), the label distribution \(p_{_{\boldsymbol {Y}_{i}}}\) and the generated segmentation distribution \(p_{g_{i}}\) are obtained in Lemma 1. Here, we introduce this relationship into the above formula.

where χ2 is the Pearson χ2 divergence and \(\chi ^{2}(p_{_{\boldsymbol {Y}_{i}}}\) \(+p_{g_{i}}\|2p_{_{\boldsymbol {Y}_{i}}})\) denotes the simplified representation of \({\int \limits }_{\boldsymbol {Y}_{i}} \left [\frac {(2p_{_{\boldsymbol {Y}_{i}}}(\boldsymbol {Y}_{i}) - (p_{_{\boldsymbol {Y}_{i}}}(\boldsymbol {Y}_{i}) + p_{g_{i}}(\boldsymbol {Y}_{i})))^{2}}{p_{_{\boldsymbol {Y}_{i}}} (\boldsymbol {Y}_{i}) + p_{g_{i}}(\boldsymbol {Y}_{i})}\right ]d\boldsymbol {Y}_{i}\). Thus, the results of (26) achieve the value \(\frac {1}{4}\) when \(p_{_{\boldsymbol {Y}_{i}}}\) and \(p_{g_{i}}\) are equal. We have shown that \(L_{\text {GAN}}=\frac {1}{4}\) and that the only solution is \(p_{g_{i}}=p_{_{\boldsymbol {Y}_{i}}}\). Thus, the asymmetric U-Net can perfectly replicate the distribution of the real segmented image. This completes the proof.

1.3 A.3 Proof of Theorem 1

Consider \(L_{\text {GAN}}(G_{i},D_{i})=\mathrm {V}(p_{g_{i}},D_{i})\) as a function of \(p_{g_{i}}\), as done in the above criterion (17), in which \(\mathrm {V}(p_{g_{i}},D_{i})\) is the criterion. Note that \(\mathrm {V}(p_{g_{i}},D_{i})\) is convex on \(p_{g_{i}}\). The inf-derivatives of an infimum of convex functions are the derivative of the function at the point where the minimum is attained. This is equivalent to computing a gradient descent update for \(p_{g_{i}}\) at the optimal Di, given the corresponding Gi. From [45], \(\inf _{D_{i}}L_{\text {GAN}}(G_{i},D_{i})\) is convex on \(p_{g_{i}}\). Moreover, \(\inf _{D_{i}}L_{\text {GAN}}(G_{i},D_{i})\) takes the value \(\frac {1}{4}\) as proven in Lemma 2; therefore, with sufficiently small updates of \(p_{g_{i}}\), \(p_{g_{i}}\) converges to \(p_{_{\boldsymbol {Y}_{i}}}(i=1,2,\ldots ,k)\). This completes the proof.

Rights and permissions

About this article

Cite this article

Wang, Y., Ye, H. & Cao, F. A novel multi-discriminator deep network for image segmentation. Appl Intell 52, 1092–1109 (2022). https://doi.org/10.1007/s10489-021-02427-x

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-021-02427-x