Abstract

Salient object detection has witnessed rapid progress, despite most existing methods still struggling in complex scenes, unfortunately. In this paper, we propose an efficient framework for salient object detection based on distribution-edge guidance and iterative Bayesian optimization. By considering color, spatial, and edge information, a discriminative metric is first constructed to measure the similarity between different regions. Next, boundary prior embedded with background scatter distribution is utilized to yield the boundary contrast map, and then a contour completeness map is derived through a wholly closed shape of the object. Finally, the above both maps are jointly integrated into an iterative Bayesian optimization framework to obtain the final saliency map. Results from an extensive number of experimentations demonstrate that the promising performance of the proposed algorithm against the state-of-the-art saliency detection methods in terms of different evaluation metrics on several benchmark datasets.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Saliency detection is concentrated on detecting the most attractive objects in an image. Recently, this area has witnessed rapid progress. As a preprocessing procedure, automatic saliency detection has been widely used in a variety of computer vision tasks such as image segmentation [1], object recognition [2], compression [3], image retrieval [4], de-blurring [5], and others.

Several saliency models have been proposed in the past years. Due to the lack of a uniform definition of salient objects, most salient object detection methods are based on effective assumptions. Contrast prior is one of the most popular principles adopted by various kinds of models from either a local or global view [12, 13]. Essentially, local contrast-based methods [14] prefer to detect the high-frequency information such as edges, failing to pop out the salient holistic object, as shown in Fig. 1b. On the contrary, global contrast-based methods can locate the salient object while the performance of these methods is limited in such scenarios when the foreground regions are complex and with diverse appearance, as shown in Fig. 1c [13].

To address the limitation of the contrast cue, boundary prior is also applied to detect salient regions, where the image boundary areas are looked upon as background [15,16,17]. For example, Yang et al. [15] generated the saliency of image regions via manifold ranking, in which the regions on four boundary sides are treated as queries. However, such methods are fragile and limited when the object appears on the image boundary. To overcome this limitation, several improvement mechanisms have been proposed. Lu et al. [18] proposed an effective method based on dense and sparse reconstruction using the background templates. Xia et al. [19] exploited corner information together with the convex hull to extract background seeds. Li et al. [9] removed the top 30% border pixels with a considerable color difference among a border set. Wang et al. [20] dropped the untrustworthy superpixels with sharp edge out of the border set to obtain the reliable background regions. Zhou et al. [21] used diffusion-based techniques on the proposed sparse graph. All of them perform well in easy cases, but they still struggle in complex scenes for two reasons: 1) the distinctiveness of their calculation is confined to the border set instead of the entire image; 2) their constructed similarity metric is weak, as shown in Fig. 1d-e.

Furthermore, it is insufficient for saliency detection based on simple boundary prior, especially when the image has an intricate background structure or the salient object has a similar appearance with non-salient. Accordingly, a variety of methods have been proposed. Some researchers committed to conducting a robust discriminative matrix based on high-level information to enhance the difference between object and background. Lee et al. [22] concatenated encoded low-level distance map and high-level features to compute saliency. Hou et al. [23] introduced short connections to the Holistically-Nested Edge Detector (HED), which can effectively combine rich multi-scale features to identify the whole salient object and accurately capture its boundary. Liu et al. [24] proposed Deeply-supervised Nonlinear Aggregation (DNA) to make better use of the complementary information on various side-outputs by using a nonlinear side-output prediction aggregation. Even though these models show promising performance on benchmark datasets, they need to collect large hand-labeled images, yet expensive to set up the learning framework. On the contrary, based on the boundary prior, different propagation models have been put forward to improve the visual quality of saliency maps. Li et al. [10] estimated the saliency via regularized random walks ranking, Jiang et al. [11] formulated saliency detection based on Markov absorption probabilities on an image graph model, and Yu et al. [25] presented a cross-diffusion process for salient object detection. However, they still suffer from a saliency overestimation of the background when the background is clustered, and the similarity metric is inefficient. Besides, they may not be able to highlight salient objects fully and may even produce erroneous results, because the graph-based approaches ignore the overall consistency between different regions of the salient object, as depicted in Fig. 1f and g.

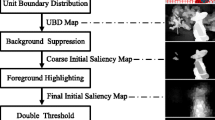

In this paper, we propose a novel saliency detection method, which is a hybrid of background scatter and foreground contour completeness. The pipeline of the proposed method is depicted in Fig. 2. First, we derive superpixels from an edge guided segmentation. Different from previous methods for the extraction of a robust background, we consider it from the global view. Namely, the background scatter of the whole image is introduced to remove the foreground noise from the border set (BS) by setting different thresholds for different boundaries, so then the solid boundary background (BG) can be achieved. Based on the boundary prior, a boundary contrast saliency map is then generated. Besides, with the help of the BG together with the boundary contrast saliency map, a contour completeness map can be produced by considering the expectation of times of the regions being activated in a hierarchical segmentation space. Finally, we refine the integrated result to obtain a more smooth and accurate saliency map based on an iterative Bayesian optimization framework. Comprehensively, the main contributions of this proposed research lie in the following aspects:

-

(1)

We proposed a novel model for saliency detection via background scatter and foreground contour completeness. A selective mechanism for robust background nodes is presented based on background scatter. To maintain the completeness of the salient object, the contour completeness saliency map is derived via the completely closed shape of the object.

-

(2)

An iterative Bayesian optimization framework is proposed to achieve improved performance by optimizing the integration result of background-based saliency map and contour completeness saliency map into a more favorable result.

-

(3)

Extensive experiments and comprehensive analysis are conducted on four public datasets that demonstrate the effectiveness of the proposed method against state-of-the-art saliency detection methods.

The work in this paper is a substantial extension of our preliminary study titled “A hybrid of background scatter and foreground contour completeness for salient object detection” [26] (referred to as BSFC). Three-fold extensions are made upon BSFC in this paper, namely algorithm, framework, and experiment. Specifically, this paper proposes to additionally develop a discriminative metric to measure the similarity between different nodes, which can improve the effectiveness of the proposed algorithm (as demonstrated in Section 2.1). Secondly, we improve the original framework by adding a refinement step, which can highlight the foreground while suppressing the background (as demonstrated in Section 2.5). Lastly, we conducted more plentiful experiments by comparing with more state-of-the-art methods and implementing the more comprehensive and in-depth analysis of the algorithm.

2 The proposed approach

The remainder of the proposed algorithm is organized as follows. First, a mechanism for extracting robust boundary background regions based on scatter prior is proposed. Next, two saliency cue maps (boundary contrast map and contour completeness map) are generated via two different computational schemes, and lastly, refinement to obtain the final saliency map is proposed.

2.1 Background scatter

The structured random forest edge detector [27] to derive the probability of boundary (PB) is adopted, so then superpixels are obtained by thresholding an ultrametric contour map (UCM) based on PB. Let us define the initial segmentation \(P_{0} =\{R_{i}\}\) and the number of regions is K0. Based on the superpixels segmentation result, an undirected weighted graph G =< V,E > is constructed, where V is the set of superpixels, and E is weighted by an affinity matrix.

Boundary prior is a handy cue that has been widely used in saliency detection that assumes the image boundary as background regions. However, it may lead to adverse effects if we directly use all border nodes as background when the object appears on the border. Accordingly, we perform boundary analysis to exclude salient superpixels on the four boundaries of the image. In an image, the salient regions usually represent a similar color and compact spatiality as well, while non-salient regions generally show a different appearance and loose scatter around. Hence, we introduce the scatter prior measured by spatial variance that takes the complete image as the research object instead of the border set (BS). In detail, it is defined as below:

Herein, si and ρi indicate the position and the weighted mean position of superpixel i, respective; N = K0 represents the number of superpixels; normalize(x) is a function that normalizes x to [0,1]; wij is the edge weight used to measure the similarity between different nodes. At this point, a joint metric taking the color difference, spatial distance, and edge information into consideration is adopted to compute the edge weight wij. The following aspects are considered:

-

(1)

According to the cognitive property of color similarity, image regions with similar colors usually belong to the same class.

-

(2)

According to spatial proximity property, spatially adjacent image regions are likely to share the same label.

-

(3)

In some cases, using the edge map can be better to highlight the outline between foreground and background than (1) and (2), as shown in Fig. 3.

Effects of edge-weight. (a) Input; (b) Only using color affinity matrix; (c) Only using spatial distance affinity matrix; (d) Using both color and spatial distance affinity matrix; (d) Edge detection by [27]; (e) The proposed affinity matrix

Based on the above considerations, the proposed edge weight is formulated as:

where σw controls the strength of weight between a pair of nodes, σw = 0.1 is empirically set, dc(i,j), ds(i,j), and de(i,j) represents the color difference, spatial distance, and contour magnitude, respectively. The color difference dc(i,j) is defined:

where |Ri − Rj| denotes the Euclidean distance between superpixel i and j in CIELab color space. For spatial distance ds(i,j), we combine geodesic distance and sine spatial distance to measure the distance between superpixel i and j:

where xi, yi, Ix and Iy refer to the x- and y-coordinates of the region i and image, respectively. The calculation mechanism can realize the connection of the regions with the same structure well in an arbitrary shape and range. de(i,j) is defined as the accumulated weight along the shortest path on the graph, i.e.

where the weight of graph edge eij is assigned as the strength of image edge between i and j in terms of UCM.

As shown in Table 1, it is noted that there are different probabilities of the object appearing at different boundaries. Accordingly, we choose different threshold values for different sides instead of setting a single value, and a robust boundary background set BG can be obtained from the border set BS. As shown in Fig. 4, the proposed method is superior to other methods.

2.2 Boundary contrast map

According to the boundary prior, a saliency map can be obtained by measuring the contrast with the robust background BG. However, superpixels in the background may only be similar to a portion of the border set rather than all of the background nodes. Therefore, it is unwise to measure the saliency value of a superpixel by counting all the differences between corresponding superpixel and the background seeds. To solve it, several methods have been proposed in previous works [10, 28]. In this paper, we put forward a distinctive way of measuring the boundary contrast map. First, the K − means algorithm is applied to divide the set BG into K clusters (empirically set K = 3). For each cluster k, we compute the mean color \(\bar {c}_{k}^{m}, m \in \{L,a,b,Lab\}\) in CIELab color space and the color covariance matrix V ark. Also, for each superpixel, we select one of the K clusters that have the minimum difference from the superpixel. Then the saliency of superpixel Ri can be obtained based on the Mahalanobis distance:

and

where g represents the index of the cluster that has the smallest difference with Ri; (w,h) indicates a pixel in superpixel Ri; |Ri| is the cardinality of Ri; \(P_{R_{i}}\) provides the proportion of superpixel Ri compared with the whole image. Then the background contrast saliency map SB can be achieved by normalizing the obtained saliency values to [0,1]. Fig. 5 shows the results of SB. It can be seen that SB can highlight the salient regions.

Boundary contrast map and contour completeness map. From left to right: (a) Input; (b)SB; (c) Contour completeness map without processing [29]; (d) SC; (e) Ground-truth

2.3 Contour completeness map

Boundary contrast map SB in collaboration with BG works well, whereas in some complex scenes. As is shown in the second example of Fig. 5b, relying solely on the boundary prior might lead to highlight the background regions erroneously. Generally, the salient object has a well-defined closed boundary [30]. As a consequence, the contour completeness cue is introduced to facilitate the salient object detection.

A hierarchical segmentation Pξ can be obtained by setting different ξ ∈ [0,ξN] for the derived hierarchical UCM. In this paper, we compute the expectation of times for which a region is superimposed in the whole hierarchical segmentation space to determine whether a region has a closed outline or not in segmentation Pξ = {Ri}. That is, the more times the holistic homogeneous regions are activated, the more salient they are. An indicator map Q at level ξ is defined:

where \(P_{\xi }^{in} =\{R_{i}|R_{i}\cap BG=\emptyset \}\) is the set of inner regions, \(P_{\xi }^{out} =P_{\xi } \setminus P_{\xi }^{in}\) is the set of outer regions. Different from [29], we adopt BG instead of BS in \(P_{\xi }^{in}\). The rationale behind this is that it may yield a bad result if we adopt BS in \(P_{\xi }^{in}\) when the salient objects connect to the boundary (see the third row in Fig. 5d).

Because the accuracy of the saliency map is sensitive to the number of superpixels, the problem of an optimal threshold selection needs to be solved in thresholding segmentation. However, it is difficult for us to obtain an optimal threshold value by computing directly. To handle the problem, we first introduce saliency weights to assign a different probability to each pixel belonging to be the salient object. Here, we adopt the saliency value of SB as the weights of each pixel, defined as s. By statistically averaging the expectation of the entire hierarchical segmentation space, we obtain the contour completeness saliency map:

where ξ obeys a uniform distribution with the probability density function Pξ. Besides, we compute the saliency map at different resolutions to further deal with the problem that salient objects are likely to appear at different scales, so then they are resized into the standard size and integrated to retrieve the strong map SC, as shown in Fig. 5e. From observations, we note that the proposed method can work well in the cases where salient regions appear at the boundary.

2.4 Integration

The saliency maps measured by boundary contrast and contour completeness are complementary to each other: the former prefers to highlight the object while the latter aims to suppress noise. Thus, effective integration is a wise operation. Although previous works adopt linear combinations to fuse individual saliency maps using weight for simplicity, it is not feasible because such processing will either weaken the suppression of background noise or interfere with foreground detection.

In order to better achieve the purpose of seeking common ground while reserving differences, we have designed a fine-grained fusion method. The mathematical definition of the fusion mechanism can be expressed as follows:

Here Υ{x} = 1 if x is true, and Υ{x} = 0 otherwise. S∗ is a saliency vector by binarizing a saliency map with a threshold calculated from S using the algorithm in [31]. Figure 6d demonstrates that the integration map is more accurate than the saliency map of Fig. 6b and c in the detection of foreground and background, which further proves the effectiveness of the proposed integration method.

2.5 Iterative Bayesian optimization framework

In order to optimize the integration results, an iterative Bayesian optimization framework is proposed. According to Bayesian inference, posterior probability \(P(\varTheta _{k},k\in \{F,B\}|x_{i})\) representing the probability that region xi belongs to the salient ΘF (background ΘB) can be computed as:

where \(P(x_{i}|\varTheta _{k},k\in \{F,B\})\) is a conditional probability. P(ΘF) and P(ΘB) = 1- P(ΘF) are the prior probabilities of being salient and background, respectively. In this work, we set the fusion result SI as the initial prior probability, i.e., P(ΘF) = SI and P(ΘB)= 1-SI. How to calculate the conditional probability will be described in detail as follows.

The key to the method based on the Bayesian framework is to obtain conditional probability. The current conventional method is to obtain a conditional probability by calculating the proportion of each quantized feature in a binary image. However, a simple thresholding operation may mislead further inferences, and it is also challenging to get a reliable threshold. Therefore, a soft approach is proposed based on iterative optimization to solve the problem. We define the probability of each region as the weighted average of all the saliency values. The conditional probabilities can be defined by [32]:

f(z) is an enhancement function that makes the difference between foreground and background more apparent while keeping the saliency value of superpixel near the threshold (Th). The parameter 𝜃i is mainly to make sure that f(z) can pass through three defined coordinate points ((0, 0), (1, 1) and (Th, Th)), and τ = 4. In this paper, we get the optimal threshold Th by size prediction and feature weight:

where \({S}^{i}_{T}\)(\({B}^{i}_{T}\)) and \({W}^{f}_{T}\)(\({W}^{B}_{T}\)) correspond to the mean value and the weight of the foreground (background) histogram in channel i of opponent color space [33], respectively. T denotes the percentage of the potential salient regions, and we set T ∈ [0.1,0.5]. The rationality of this hypothesis lies in that the size proportion of salient objects is usually in a range from 0.05 to 0.6 [34]. ρi is feature weight, which is measured by computing its separating power based on variance ratio.

where ϕ is a constant used to prevent the numerator and denominator from being 0. \({\Gamma }={\sum }_{i}\rho _{i}\). Lg(i) is the log-likelihood of foreground and background, where s(i) and b(i) denote the discrete probability distributions of foreground and background, respectively.

Based on the above formulas, we can get \(P(\varTheta _{F}|x_{i})\). In order to optimize the results, we iteratively re-assign the prior function with P(ΘF) = \(P(\varTheta _{F}|x_{i})\) and the feature weights ρi with new ρi. To eliminate the disconnectedness and small fragments and improve the accuracy of detection, we utilize the smooth result of \(P(\varTheta _{F}|x_{i})\) to re-assign the prior function in the experiment. Herein, we adopt the guided filter [35] to smooth \(P(\varTheta _{F}|x_{i})\), which can well preserve the integrity of the edge of the object. Finally, we denote Fal(t), t = {t0,t1,⋯ ,tn} as the saliency map corresponding to various iterations (t), and Fal(t0) indicates the saliency map without iteration. Figure 6e-f show the result refined by the proposed iterative Bayesian optimization framework. Our model can always highlight the full salient objects while suppressing the background, which illustrates the effectiveness of our system.

3 Experimental results

In this paper, we extensively present comparison of the proposed algorithm against 15 state-of-the-art saliency detection methods that includes BFS [20], BL [36], BSCA [28], BSFC [26], FCB [37], CGV [38], GS [8], HCC [29], LPS [9], MR [15], MB [39], MILPS [34], RCRR [40], SF [41], SRD [42] on the ASD [6], DUT-OMRON [15], SOD [43], and ECSSD [44] datasets. For fair evaluation purposes, either the results provided by the original authors be directly used or execute their own implementations through the source codes publicly available. Admittedly, compared with the traditional methods, saliency methods using deep learning such as MDF [45] and ILS [46] can achieve better performance, although they require a large amount of training data. Considering that this paper is mainly focused on unsupervised learning, the proposed method is solely compared to traditional saliency methods.

3.1 Evaluation metrics

To compare the performance, several popular evaluation metrics are used in our experiments, which include the precision-recall curve (PR), average precision (AP), F- measure, overlapping ratio (OR) and mean absolute error (MAE).

We first use the Precision-Recall (PR) curves to evaluate the performance of the proposed method. For each saliency map, binary maps are generated by binarizing a saliency map with fixed thresholds varying from 0 to 255, and the PR curves are obtained by comparing the ground truth with binary maps.

For saliency detection, precision, and recall are often required to be high, though these two indicators are usually negatively correlated. To comprehensively assess the salient object detection model, the weighted harmonic mean of precision (P) and recall (R) called F-measure is calculated, given as:

where β2 is set to 0.3 to emphasize precision [13]. Since a high recall rate can easily be obtained by detecting the complete image as the salient object, it is not possible to guarantee such accuracy, unfortunately.

Noting that the above metrics mainly focus on the probability of salient pixels being correctly detected and ignore the effects of correct assignment of non-salient pixels, and therefore OR and MAE are adopted to address these issues. MAE represents the measure of the similarity between saliency map S and ground-truth G [41]: MAE = mean(|S − G|). OR is defined as the overlapping ratio between the segmented object mask S′ and ground truth G: OR = |S′∩ G|/|S ∪ G|, where S′ can be obtained by binarization of S with an adaptive threshold, i.e., twice the mean values of S as in [47].

3.2 Quantitative results

We extensively present a comparison of the proposed algorithm against 15 recently proposed algorithms on four datasets, and the experimental results are summarized in Fig. 7 and Table 2. The results show that, in most cases, the algorithm ranks first or second in different evaluation indicators on the test datasets.

Specially, we can observe that our model achieves the best performance in terms of AP (0.9372, 0.8158, 0.7848), F-measure (0.9299, 0.7851, 0.7171), and OR (0.85159, 0.58105, 0.44333) on ASD, ECSSD, and SOD datasets. For the challenging DUT-OMRON dataset, our method performs the highest F-measure (0.669) and OR (0.48892), and the second-best MAE (0.11734) with a minor margin (0.00259) to the best results HCC (0.11475). Besides, except for the MAE on the SOD dataset, all indicators of the algorithm on other datasets are better than BSFC. Besides, our method can achieve the highest precision and recall simultaneously, which ensures that the results obtained by our method are closer to their ground truth, and the proposed method is more applicable to practical applications. Furthermore, our method achieves relatively superior performance compared to the other methods for PR curves on four datasets.

The above quantitative comparisons show that the proposed method consistently outperforms all the compared state-of-the-art approaches on various scenes.

3.3 Qualitative results

We provide some saliency maps of the proposed algorithm and other thirteen state-of-the-art algorithms in Fig. 8. From the results, we can see that the saliency maps generated by our algorithm are of the best similarity to the ground truth. For images with a single object, in the first two rows, our method can ultimately retain salient objects while background noise is well suppressed simultaneously. When an image has complex background structures, the proposed algorithm can still obtain favorable results with a less noisy background. For example, in the 3rd and 4th rows, our saliency maps can uniformly pop out the foreground object of the images, but other algorithms fail to extract the salient object from the scattered background. Also, when salient objects and backgrounds share a similar appearance, our algorithm can detect salient regions accurately. At the same time, other methods either fail to identify the salient objects or incorrectly include background regions into detection results, as shown in the 5th and 6th two rows. Furthermore, as shown in the 7th and 8th two rows, our results perform well and can preserve the completeness while other methods are a weakness when salient objects are on the boundaries, which conclusively prove our high robustness of background selection. All the above results demonstrate the robustness and the effectiveness of our method in highlighting salient objects and restraining the background regions.

3.4 Analysis of our algorithm

-

(1) Effectiveness of the proposed boundary prior map To demonstrate the effectiveness of the proposed boundary prior map, we compute the PR curves and quantitative results of F-measure for boundary prior map on the ASD dataset. Different background seeds are received by three methods (BFS [20], MR [15], and LPS [9]) and construct their corresponding boundary-based saliency maps. The resulting curves and quantitative results are shown in Fig. 9a, where the dotted line shows the performance of each method using their own boundary set while the solid line provides the performance using our boundary nodes. Also, the red line shows the performance of our boundary contrast map. As shown by these curves, the proposed method outperforms those competitive methods. Yet, the performance of three saliency models has also been conspicuously improved, illustrating the effectiveness of the selection of boundary nodes and the computation of the background-based saliency map.

-

(2) Validation of components in contour completeness map Since both the robust boundary background set BG and saliency weights are introduced into the contour completeness map, we further examine the effectiveness of each component for the contour completeness map. Herein, we consider different components for comparative analysis, including:

-

(a)

contour completeness map based on BS without saliency weights (SCBS),

-

(b)

contour completeness map based on BG and without saliency weights (SCBG),

-

(c)

contour completeness map based on BS with saliency weights (SCBSW),

-

(d)

contour completeness map based on BG with saliency weights (SCBGW).

Fig. 9 Precision-recall curves and quantitative results of F-measure to measure the effectiveness of our algorithm. (a) Evaluation of our background-based saliency map on the ASD dataset; (b) Evaluation of our contour completeness saliency map on the SOD dataset; (c) Evaluation of each component in the proposed algorithm on the SOD dataset.

The resulting curves on SOD and the corresponding quantitative results are shown in Fig. 9b. We note that the performance of SCBG (SCBGW) is higher than the performance of SCBS (SCBSW) since the former takes the issue of the object connecting to boundary into consideration while the latter directly use all boundary nodes as background, effectively demonstrating the effectiveness of BS in improving the contour completeness map. Besides, we observe that the performance of SCBSW (SCBGW) is higher than the performance of SCBS (SCBG), due to the recent introduction of saliency weights that assign a different probability to each pixel belonging to be a salient object. Furthermore, Compared with SCBS, SCBG, and SCBSW, SCBGW achieves the best performance, which conclusively proves the necessity of considering the two indexes (BG and saliency weights) in the calculation of the contour completeness map.

-

(a)

-

(3) Examination of refinement Since the system optimization adopts an iterative mechanism, we first verify the stability of the system. To this end, we compute the performance of the proposed method on a different step of iteration with both PR curve and F-measures on two datasets (SOD and ECSSD), as shown in Fig. 10. We note that the system obtains a stable performance with only three steps of iterations (i.e., Fal(t3)), since the performance of the proposed system cannot further be improved by increasing the steps of iterations. Furthermore, to validate the effectiveness of the refinement, a comparison between the final result and other steps from both quantitative and qualitative aspects are processed and shown in Fig. 9c, where the quantitative comparison results of the saliency refinement mechanism on the SOD dataset present performance enhancement of the saliency map. Also shown in Fig. 6e and f, the salient object can be highlighted uniformly, and the background can be suppressed effectively due to the refinement, which qualitatively verifies the effectiveness of the refinement mechanism.

3.5 Running time

The execution time test is conducted on a 64-bit PC with Intel Core i5-4460 CPU @ 3.20GHz and 8G memory. All codes for the experimentation testings are provided by the corresponding authors and executed without any change in MATLAB R2015a with C++ mex implementation, and the average running time is computed on the ASD dataset.

We selected several competitive accuracy methods or those akin to the proposed algorithm, and the results are shown in Table 3. It is significantly faster than LPS, RR, and BFS, even though being slower than MR and GS, the proposed method still outperforms them both considering the overall evaluation performance. Therefore, it can be attained that the proposed algorithm can achieve a reasonable balance between accuracy and efficiency.

3.6 Limitation and analysis

Although the proposed method can perform well in most of the cases, there remain some challenging scenarios that cannot accurately extract the complete salient objects. The last two rows of Fig. 8 show some failure cases. When an image contains multiple salient objects in complex scenes, the proposed method may not work well in highlighting all objects in an image due to the difference and diversity of multi-objects in terms of size and position. However, it is also tricky for state-of-art saliency detection methods. For an image with a complex background structure and small differences between object and background, it is inadequate for our method to achieve the satisfactory detection results by the proposed low-level and mid-level features. Hence, it is an excellent choice to design an even more discriminative similarity metric by incorporating the traditional low-level features and the high-level, in-depth learning features. Besides, the study on the reliability of edge and the selection of an optimal segmentation threshold can improve the performance of saliency detection as well.

4 Conclusions and future work

In this paper, we present an efficient salient object detection algorithm via background scatter and foreground contour completeness. To accurately extract robust background, background scatter is proposed and designed, and the corresponding boundary contrast map can be obtained via boundary prior. Besides, contour completeness embedded with boundary contrast map can be applied together with robust boundary nodes, to generate contour completeness saliency map, so finally, an optimization function is proposed to highlight salient objects uniformly. Experimental results not only demonstrate the superior performance of the proposed method but also the right balance between accuracy and computation cost.

As future work, we investigate efficient methods that incorporate high-level features with the support of deep learning to achieve higher performance. Taking into consideration that image depth information plays an essential role in saliency detection and challenging to collect image depth information via camera, ways how to use non-camera methods to obtain image depth information and improve saliency detection in also be investigated and validated.

References

Bai X, Wang W (2014) Saliency-svm: an automatic approach for image segmentation. Neurocomputing 136:243–255

Ren Z, Gao S, Chia L-T, Tsang IW-H (2014) Region-based saliency detection and its application in object recognition. IEEE Trans Circuits Syst Video Technol 24(5):769–779

Hadizadeh H, Bajic IV (2014) Saliency-aware video compression. IEEE Trans Image Process 23(1):19–33

Gao X, Shi X, Zhang G, Jin L, Liao M, Li KC, Li C (2018) Progressive Image Retrieval With Quality Guarantee Under MapReduce Framework. IEEE Access 6:44685–44697

Li Y, Lu H, Li KC, Kim H, Serikawa S (2018) Non-uniform de-scattering and de-blurring of underwater images. Mob Netw Appl 23(2):352–362

Achanta R., Hemami S., Estrada F., Susstrunk S. (2009) Frequency-tuned salient region detection. In: IEEE conference on computer vision and pattern recognition, pp. 1597–1604

Margolin R., Tal A., Zelnik-Manor L. (2013) What makes a patch distinct. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 1139–1146

Wei Y., Wen F., Zhu W., Sun J. (2012) Geodesic saliency using background priors. In: Proceedings of the IEEE conference on european conference on computer vision, pp. 29–42

Li H, Lu H, Lin Z, Shen X, Price B (2015) Inner and inter label propagation: salient object detection in the wild. IEEE Trans Image Process 24(10):3176–3186

Li C., Yuan Y., Cai W., Xia Y., Dagan Feng D. (2015) Robust saliency detection via regularized random walks ranking. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 2710–2717

Sun J, Lu H, Liu X (2015) Saliency region detection based on markov absorption probabilities. IEEE Trans Image Process 24(5):1639–1649

Cheng M-M, Warrell J, Lin W-Y, Zheng S, Vineet V, Crook N (2013) Efficient salient region detection with soft image abstraction. In: Proceedings of the IEEE international conference on computer vision, pp. 1529–1536

Cheng M-M, Mitra NJ, Huang X, Torr PH, Hu S-M (2015) Global contrast based salient region detection. IEEE Trans Patt Anal Mach Intell 37(3):569–582

Itti L, Koch C, Niebur E (1998) A model of saliency-based visual attention for rapid scene analysis. IEEE Trans Patt Anal Mach Intell 20(11):1254–1259

Yang C, Zhang L, Lu H, Ruan X, Yang M (2013) Saliency detection via graph-based manifold ranking. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 3166–3173

Jiang H, Wang J, Yuan Z, Wu Y, Zheng N, Li S (2013) Salient object detection: A discriminative regional feature integration approach. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 2083–2090

Gong C, Tao D, Liu W, Maybank SJ, Fang M, Fu K, Yang J (2015) Saliency propagation from simple to difficult. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 2531–2539

Lu H, Li X, Zhang L, Ruan X, Yang M. -H. (2016) Dense and sparse reconstruction error based saliency descriptor. IEEE Trans Image Process 25(4):1592–1603

Zhang H, Xia C, Gao X (2017) Robust saliency detection via corner information and an energy function. IET Comput Vis 11(6):379–388

Wang J, Lu H, Li X, Tong N, Liu W (2015) Saliency detection via background and foreground seed selection. Neurocomputing 152:359–368

Zhou L, Yang Z, Zhou Z, Hu D (2017) Salient region detection using diffusion process on a two-layer sparse graph. IEEE Trans Image Process 26(12):5882–5894

Lee G, Tai Y-W, Kim J (2016) Deep saliency with encoded low level distance map and high level features. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 660–668

Hou Q, Cheng M-M, Hu X, Borji A, Tu Z, Torr PH (2019) Deeply supervised salient object detection with short connections. IEEE Trans Patt Anal Mach Intell 41(4):815–828

Liu Y, Fan D-P, Nie G-Y, Zhang X, Petrosyan V, Cheng M-M Dna: Deeply-supervised nonlinear aggregation for salient object detection, arXiv:1903.12476

Yu J-G, Gao C, Tian J (2016) Collaborative multicue fusion using the cross-diffusion process for salient object detection. J Opt Society Am A Opt Image Sci Vis 33(3):404–415

Xia C, Zhao Q, Zhang S, Gao X (2019) A hybrid of background scatter and foreground contour completeness for salient object detection. In: International conference on applications and techniques in cyber security and intelligence, pp. 1266–1275

Dollár P, Zitnick CL (2013) Structured forests for fast edge detection. In: Proceedings of the IEEE international conference on computer vision, pp. 1841–1848

Qin Y, Lu H, Xu Y , Wang H (2015) Saliency detection via cellular automata. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 110–119

Liu Q, Hong X, Zou B, Chen J, Chen Z, Zhao G (2017) Hierarchical contour closure-based holistic salient object detection. IEEE Trans Image Process 26(9):4537–4552

Jiang H, Wang J, Yuan Z, Liu T, Zheng N , Li S (2011) Automatic salient object segmentation based on context and shape prior. In: Proceedings of the British Machine Vision Conference, pp. 9

Otsu N (1979) A threshold selection method from gray-level histograms. IEEE Trans Syst Man Cybern 9 (1):62–66

Huo S, Zhou Y, Xiang W, Kung S (2018) Semisupervised learning based on a novel iterative optimization model for saliency detection. IEEE Trans Neural Netw Learn Syst (99) pp. 1–17

Frintrop S, Werner T, Martin Garcia G (2015) Traditional saliency reloaded: A good old model in new shape. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 82–90

Huang F, Qi J, Lu H, Zhang L, Ruan X (2017) Salient object detection via multiple instance learning. IEEE Trans Image Process 26(4):1911–1922

He K, Sun J, Tang X (2013) Guided image filtering. IEEE Trans Patt Anal Mach Intell (6) pp. 1397–1409

Tong N, Lu H, Ruan X, Yang M-H (2015) Salient object detection via bootstrap learning. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 1884–1892

Liu GH, Yang JY (2018) Exploiting color volume and color difference for salient region detection. IEEE Trans Image Process 28(1):6–16

Yang KF, Li H, Li CY, Li YJ (2016) A unified framework for salient structure detection by contour-guided visual search. IEEE Trans Image Process 25(8):3475–3488

Zhang J, Sclaro S, Lin Z, Shen X, Price B, Mech R (2015) Minimum barrier salient object detection at 80 fps. In: Proceedings of the IEEE international conference on computer vision, pp. 1404–1412

Yuan Y, Li C, Kim J, Cai W (2017) D. F Reversion correction and regularized random walk ranking for saliency detection. IEEE Trans Image Process 27(3):1311–1322

Perazzi F, Krähenbühl P, Pritch Y (2012) A. Hornung, Saliency filters: Contrast based filtering for salient region detection. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 733–740

Zhou L, Yang Z, Yuan Q, Zhou Z, Hu D (2015) Salient region detection via integrating diffusion-based compactness and local contrast. IEEE Trans Image Process 24(11):3308–3320

Martin D, Fowlkes C, Tal D, Malik J (2001) A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In: Proceedings of the IEEE international conference on computer vision, pp. 416–423

Yan Q, Xu L, Shi J, Jia J (2013) Hierarchical saliency detection. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 1155–1162

Li G, Yu Y (2016) Visual saliency detection based on multiscale deep cnn features. IEEE Trans Image Process 25(11):5012– 5024

Wang L, Lu H, Wang Y, Feng M, Wang D, Yin B, Ruan X (2017) Learning to detect salient objects with image-level supervision. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 136–145

Li X, Li Y, Shen C, Dick AR, van den Henge A (2013) Contextual hypergraph modeling for salient object detection. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 3328–3335

Acknowledgements

This work was supported by University-level Key projects of Anhui University of science and technology (Grant No. QN2019102, QN2017208), China Postdoctoral Science Foundation (Grant No. 2019M660149), National Natural Science Foundation of China (Grant No. 61806006), Natural Science Research Project of Colleges and Universities in Anhui Province (Grant No. KJ2018A0083).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Xia, C., Gao, X., Li, KC. et al. Salient object detection based on distribution-edge guidance and iterative Bayesian optimization. Appl Intell 50, 2977–2990 (2020). https://doi.org/10.1007/s10489-020-01691-7

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-020-01691-7