Abstract

In this paper, the blocking flow shop problem is considered. An exact algorithm for solving the blocking flow shop problem is developed by means of the bounded dynamic programming approach. The proposed algorithm is tested on several well-known benchmark instances. Computational results show that the presented algorithm outperforms all the state-of-the-art exact algorithms known to the author. Additionally, the optimality is proven for 26 previously open instances. Furthermore, we provide new lower bounds for several benchmark problem sets of Taillard requiring a relatively short CPU time.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The permutation flow shop problem is a special case of the flow shop problem where n jobs have to be processed on m machines. The objective is to minimize the time at which all jobs are completed. If there does not exist any storage space between machines, then the problem is called the blocking flow shop problem denoted by \(Fm|block|C_{\max }\). This problem will be considered in the present paper. For the two machine case, Reddi and Ramamoorthy (1972) presented a polynomial algorithm which gives an exact solution. However, for \(m>2\), the \(Fm|block|C_{\max }|\) problem is strongly NP-hard as it is shown by Hall and Sriskandarajah (1996) using a result from Papadimitriou and Kanellakis (1980).

The majority of research is focussed on developing the heuristics. We will highlight only the most important papers. Grabowski and Pempera (2007) developed a tabu search method for the \(Fm|block|C_{\max }\) problem. However, there were not done further investigations of the tabu search approach. Wang et al. (2010) proposed a novel hybrid discrete differential evolution algorithm. Ribas et al. (2011) presented an iterated greedy (IG) algorithm. Lin and Ying (2013) developed a revised artificial immune system algorithm (AIS) that is a stochastic computational technique. This technique combines the features of the artificial immune system with the annealing process of simulated annealing algorithms. Pan et al. (2013) developed a memetic algorithm. By means of this algorithm, 75 out of 120 best-known solutions for Taillard benchmark instances were improved. Zhang et al. (2015) proposed an algorithm that combines the variable neighborhood and simulated annealing approach. Tasgetiren et al. (2015) developed an extremely effective populated local search algorithm. The idea is to learn some unknown parameters through a differential evolution based on the work of Tasgetiren et al. (2013). Ultimately, 90 out of 120 best-known solutions for Taillard benchmark instances were improved showing the effectiveness of the proposed algorithm. Recently, Tasgetiren et al. (2017) presented highly efficient iterated greedy algorithms. Also, speed-up methods are used to obtain a better performance of the algorithms. Computational results show that the iterated greedy algorithms proposed by Tasgetiren et al. (2017) outperform all the state-of-the-art heuristics currently reported in the literature.

Several articles stress the necessary of developing exact procedures for the \(Fm|block|C_{\max }\) problem, e.g. Lin and Ying (2013). However, only a few exact methods have been given for the \(Fm|block|C_{\max }\) problem. The simplest way is to enumerate all permutations. However, this method is not practical even for problems of small size. Ronconi (2005) proposed an exact branch and bound (B&B) algorithm. This algorithm provides lower and upper bounds for several sets of benchmark instances. Companys and Mateo (2007) proposed the LOMPEN algorithm. This algorithm is based on the double branch and bound method. The idea is to apply simultaneously B&B algorithms to direct and reverse instances and then to create links and data exchanges between both processes. Companys and Ribas (2011) proved the optimality for only one Taillard \(20\times 10\) type benchmark instance using the LOMPEN algorithm. Bautista et al. (2012) proposed the bounded dynamic programming (BDP) algorithm. This method combines features of dynamic programming with features of B&B. The BDP algorithm is proposed as a heuristic. However, this algorithm can easily be interpreted as the exact algorithm.

The dynamic programming approach has also been developed for the job shop scheduling problem. This problem is another variant of the shop scheduling. Gromicho et al. (2012) [a corrigendum on this paper by van Hoorn et al. (2016)] proposed the DP algorithm with a complexity proven to be exponentially lower than exhaustive enumeration. Recently, van Hoorn (2016) provided the BDP algorithm that extends the previous version of the DP algorithm for the job shop.

The objective of the present paper is to develop the exact procedure to solve the \(Fm|block|C_{\max }\) problem. Main contributions of this paper can be summarized as follows. We improve the BDP approach. Computational results confirm that our version of the BDP algorithm significantly outperforms the base version proposed by Bautista et al. (2012). Furthermore, computational results show that our version of the BDP algorithm outperforms all the afore mentioned state-of-the-art exact algorithms. Finally, we show that the presented BDP algorithm can be successfully applied for lower bound calculations.

This paper is organized as follows. Section 2 presents a problem description and basic notations. In Sect. 3, we describe lower bounds that are used in the present work. Section 4 proposes our version of BDP. Computational results are given in Sect. 5. Finally, concluding remarks and some possible directions for future research are described in Sect. 6.

2 Basic notations

The basic notations used in this paper are summarized in Table 1.

In the permutation flow shop problem, n jobs have to be processed in m machines in the same order, from machine 1 to machine m. However, this order is not known. The processing time associated with a specific machine \(k\in {\mathcal {M}}=\{1,\ldots ,m\}\) and a specific job \(i\in {\mathcal {J}}=\{1,\ldots ,n\}\) is denoted by \(p_{i,k}\). These times are non-negative. The objective is to find a permutation \(\pi \) of n jobs such that the maximum completion time (the time when all jobs are completed) is minimal. However, if there does not exist any storage capacity amongst machines, a job cannot leave the machine while the next machine is not free. In this case, the permutation flow shop problem becomes the blocking flow shop problem which is denoted by \(Fm|block|C_{\max }\).

Let S be a set of scheduled jobs and let \(\pi \in \Pi (S)\) be the job permutation associated with S. Here \(\Pi (S)\) stands for the set of all job permutations associated with S. Let

be the vector consisting of departure times \(e_k(\pi )\) on machine \(k\in {\mathcal {M}}\) and let

be the vector consisting of the heads \(r_k(\pi )\) for each machine k. These heads can be interpreted as lower bounds for the starting time of any job in machine k. By defining the head \(r_k(\pi )\) we take into account that no job can be processed simultaneously by two or more machines. Define time lags \(\ell _i(k_1,k_2)\) between machines \(k_1\) and \(k_2\) (\(k_1<k_2\)) for each job i in the following way:

The head \(r_{k}(\pi )\) is defined as

where \(\overline{S}={\mathcal {J}}\setminus S\) and

From 2 it follows that \(e_k(\pi )\le r_k(\pi )\) for all \(k\in {\mathcal {M}}\), \(S\subset {\mathcal {J}}\), and \(\pi \in \Pi (S)\).

We study an example in order to explain the concepts of heads. In this example we have five jobs and five machines. The sequence \(\pi =(4,5)\) is depicted in Fig. 1. Thus, \(\overline{S}=\{1,2,3\}\). Processing times of remaining jobs are given in Table 2.

Figure 1 shows that

for \(k\in \{2,3,4\}\). The heads \(r_{i,k}\) for \(i\in \{1,2,3\}\) and \(k\in {\mathcal {M}}\) are given in Table 2. These heads are calculated using (2). Values in bold correspond to those \(r_{j,k}\) that are minimal among all \(r_{i,k}\) with \(i\in \overline{S}\).

We define dominance ‘\(\prec \)’ and equivalence ‘\(\equiv \)’ relations between job permutations \(\pi ^1,\pi ^2\in \Pi (S)\) in the following way:

In (3), Bautista et al. (2012) used \(e(\pi )\) instead of \(r(\pi )\) to define the dominance and equivalence. However, our version of the DP can also discard additional job permutations. It is shown by the following theorem as well as by the counterexample illustrated in Fig. 2:

Theorem 1

Let \(S\subset {\mathcal {J}}\), \(S\ne {\mathcal {J}}\), and let \(\pi ^1,\pi ^2\in \Pi (S)\). If \(e_k(\pi ^1)\le e_k(\pi ^2)\) for all \(k\in {\mathcal {M}}\), then \(\pi ^1\prec \pi ^2\) or \(\pi ^1\equiv \pi ^2\).

Proof

We will prove that

for all \(i\in \overline{S}\) and \(k\in {\mathcal {M}}\). Then the statement

follows from (1)

Fix \(i\in \overline{S}\) and set \(k=1\). Then \(r_{i,k}(\pi ^1)=e(\pi ^1)\) and \(r_{i,k}(\pi ^2)=e(\pi ^2)\) from which it follows that (4) holds for \(k=1\).

Assume that (4) holds for all \(k\in \{1,\ldots ,h\}\) where \(h\in \{1,\ldots ,m-1\}\). Then we prove that (4) holds also for \(k=h+1\). Using the assumptions formulated in the theorem we have

from which it follows that (4) holds. Since i is fixed, then by recursion it can be obtained that (4) is true for all \(i\in \overline{S}\) and \(k\in {\mathcal {M}}\). \(\square \)

Now a simple example will be given. This example shows that \(\pi ^1\equiv \pi ^2\), whereas the statement

is not true.

Processing times are given in Table 3. Select \(S = \{1,2\},\; \pi ^1 =(1,2),\; \pi ^2=(2,1)\). Then from Table 3 it can be seen that \(\pi ^1\equiv \pi ^2\). On the other hand,

3 Lower bounds for bounded dynamic programming algorithm

The performance of the BDP algorithm is strongly dependent on the quality of lower bounds. In this section, we will briefly describe lower bounds used in the current work. These LB are based on the optimal makespan of one or two machines.

Let \(S\subset {\mathcal {J}}\) (\(S\ne {\mathcal {J}}\)) and \(\pi \in \Pi (S)\) be given. The lower bound \(LB^{(0)}\) was used by Bautista et al. (2012). Here,

where the tails \(q_{i,k}\) are obtained as follows:

The first lower bound used in the current work is a slight modification of \(LB_k^{(0)}\) where \(e_{k}(\pi )\) in (5) is replaced by \(r_k(\pi )\), i.e.

Hence, a valid lower bound for \(\pi \) is

The lower bound \(LB^{(0)}\) will be used only for an illustrative example. The lower bound \(LB^{(1)}(\pi )\) can be computed in \(O(m\cdot \overline{n})\) time.

The second lower bound used in the present work is based on two machines. Denote by \(C^{k_1,k_2}(\pi )\) the optimal makespan of the two machine scheduling problem with time lags \(F2|\ell _i(k_1,k_2)|C_{\max }\). This makespan is computed for the machine pair \((k_1,k_2)\) where \(k_1<k_2\). Lenstra et al. (1977) showed that \(C^{k_1,k_2}\) can be obtained by applying Johnson rule to \(F2||C_{\max }\) with processing times \((\ell _i(k_1,k_2),p_{i,k_2}+\ell _i(k_1,k_2)-p_{i,k_1})\). In this case the artificial job 0 is added with the following characteristics:

Then

where \(1\le k_1 < k_2 \le m\). The resulting lower bound is

Computing \(LB_{k_1,k_2}^{(2)}(\pi )\) in (8) requires the complexity \(O(\overline{n}\log \overline{n})\). However, the complexity can be reduced by pre-calculating the order of tails at the beginning of the algorithm. Therefore, applying Johnson rule to \(F2||C_{\max }\) will require only \(O(\overline{n})\). Thus, the computation of \(LB^{(2)}\) in (9) leads to the complexity \(O(m^2\cdot \overline{n})\)

Consider now the machine pair \((k,k+1)\) where \(1\le k<m\). Gilmore and Gomory (1964) algorithm can be applied for the \(F2|block|C_{\max }\) case since this problem can be reduced to the travelling salesman problem (TSP) with \(\overline{n}+1\) nodes. In this case the following parameters for the artificial job 0 are used:

Thus,

where \(C^{k}(\pi )\) is obtained using the Gilmore and Gomory algorithm to the \(F2|block|C_{\max }\) including the artificial job 0. Finally,

The Gilmore and Gomory algorithm requires sorting \(\overline{n}\) jobs that cannot be initialized at the beginning of the algorithm. Therefore, the complexity is \(O(\overline{n}\cdot \log \overline{n})\) in order to calculate \(LB_{k}^{(3)}(\pi )\) (see (12)). Finally, looping through all machines requires the overall complexity \(O(m\cdot \overline{n}\log \overline{n}))\) to calculate the lower bound \(LB^{(3)}(\pi )\) in (13).

Consider now the example that illustrates the calculation of lower bounds. Benchmark instance car1 with processing times depicted in Table 4 is chosen and the job permutation \(\pi =(8,5,4,2,3,6)\) is taken. Table 5 shows that \(LB_5^{(1)}(\pi )>LB_5^{(0)}(\pi )\) since \(r_5(\pi )\) is greater than \(e_5(\pi )\). This leads to the greater overall lower bound \(LB^{(1)}(\pi )>LB^{(0)}(\pi )\). Further, \(LB_{k_1,k_2}^{(2)}(\pi )>LB^{(1)}(\pi )\) for several machine pairs \((k_1,k_2)\) as can be seen in Tables 5 and 6. Therefore, the second lower bound

Finally, the third lower bound

is greater than \(LB^{(2)}(\pi )\) because the Gilmore and Gomory TSP algorithm for machine \(k=4\) gives a better makespan than the Johnson algorithm, which ignores the blocking constraint.

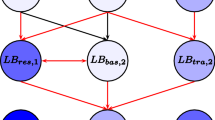

Figures 3 and 4 illustrate how the lower bounds \(LB^{(2)}(\pi )\) and \(LB^{(3)}(\pi )\) are obtained. In Fig. 3 it is shown that job \(j=9\) on machine \(k=5\) is delayed (i.e. starts later than the completion time of job on machine 4) because of the inequality \(r_5(\pi )-r_4(\pi )>p_{9,4}\). This case demonstrates the usefulness of artificial job 0 with parameters defined in (7). The Gilmore and Gomory algorithm gives different job order than that of the Johnson algorithm because the latter ignores the blocking constraint. In addition, the use of artificial job 0 with parameters defined in (10) strengthens the lower bound \(LB^{(3)}(\pi )\), see Fig. 4 for the explanation. Summary of the lower bounds is given in Table 7. This example shows that the values of all four lower bounds may differ.

4 Bounded dynamic programming algorithm

Let \(\Pi (S)\) be a set consisting of all job permutations associated with S and let Z(S) be a subset of \(\Pi (S)\). We formulate the following generalized Bellman equation for the \(Fm|block|C_{\max }\) problem:

Here, \(\mathop {\hbox {Min}}\limits A\subset A\) stands for the set of all minimal elements according to ‘\(\prec \)’ and ‘\(\equiv \)’. It means that for all \(\pi \in \mathop {\hbox {Min}}\limits A\) there does not exist another element that dominates \(\pi \) or is equivalent to \(\pi \). The solution of the blocking flow shop problem is \(Z({\mathcal {J}})\). Note that for the solution \(Z({\mathcal {J}})\) of system (14)–(16) the property \(|Z({\mathcal {J}})|=1\) holds. Thus, \(e_m(\pi )\) (\(\pi \in Z({\mathcal {J}})\)) is the optimal makespan of the given \(Fm|block|C_{\max }\) problem. The state space of the dynamic programming algorithm can be reduced by discarding those job permutations \(\pi \) for which \(LB(\pi )\ge UB\). In addition, if \(|Z|_t>H\) (i.e. the number of the job permutations with a size t is greater than a fixed window width H), then a sub-problem can be solved. Let \(Z_1(S)\) be another subset of \(\Pi (S)\). Then the following Bellman equation is formulated for \(Z_1\):

where \(t_0=t-1\) and \(T\subset \{S\subset {\mathcal {J}}:|S|=t_0\}\). Note that if \(t_0=1\) and \(T=\{\{i\}|i\in {\mathcal {J}}\}\), then the Bellman equation (14)–(16) is equivalent to the Bellman equation (17)–(19) for a sub-problem.

Algorithm 2 presents the dynamic programming algorithm based on the Bellman equation. This algorithm creates \(Z({\mathcal {J}})\). If it is necessary, then T is also created.

Now we briefly describe Algorithm 2, which solves the \(Fm|block|C_{\max }\) problem to optimality. Initially, n job permutations, which consist of a single job, are constructed. Then a loop through stages \(t=1\) to \(n-2\) has been done (line 1.2). For each stage t, we loop through all subsets S of size t (line 1.3) and for each chosen subset S, we loop through all job permutations \(\pi \in Z(S)\) (line 1.4). These job permutations are expanded with job i, which is not scheduled. If there does not exist another job permutation that dominates \(\pi \oplus i\) or is equivalent to \(\pi \oplus i\), then the lower bound of \(\pi \oplus i\) is estimated. Finally, if

then \(\pi \oplus i\) is included in \(Z(S\cup \{i\})\). In addition, all job permutations that are dominated by \(\pi \oplus i\) are removed from \(Z(S\cup \{i\})\).

If the size of \(Z(S\cup \{i\})\) becomes too large, then the memory can be exceeded. A typical termination criterion can be \(|Z|_{t+1}>H\), i.e. the number of job permutations that are stored in new stage \(t+1\) becomes greater than a fixed constant. In general, H depends on m, n, and the computer system requirements. The procedure BDP is recursively executed (line 1.14) and we go to the next stage by setting \(t=t+1\) since there does not exist \(S\subset {\mathcal {J}}\) with \(|S|=t\). When the for loop is ended (line 1.2), the optimal makespan \(Opt=e_m(\pi )\) (\(\pi \in Z({\mathcal {J}})\)) is found. However, if \(Z({\mathcal {J}})\) is empty, then the optimal makespan is equal to UB. Generally speaking, \(UB_0\) can be replaced by the general bound \(B_0\). If the final UB is not less than \(B_0\), then the BDP algorithm will prove that \(B_0\) is the lower bound of the given blocking flow shop instance.

The major changes of the BDP against the algorithm given by Bautista et al. (2012) are summarized below.

-

1.

The given algorithm is proposed as an exact algorithm instead of heuristics.

-

2.

Bautista et al. (2012) used only the lower bound \(LB^{(0)}\). In this work tighter lower bounds \(LB^{(1)}\), \(LB^{(2)}\), and \(LB^{(3)}\) are used. These lower bounds have been described in Sect. 3.

-

3.

The head \(r(\pi )\) (see equation (1)) instead of the vector of the departure times \(e(\pi )\) is used. It can be expected that the total number of job permutations |Z| will be reduced in this case. In addition, the lower bounds \(LB^{(h)}\), \(h\in \{1,2,3\}\), can be strengthened if \(r_k(\pi )>e_k(\pi )\) for at least one machine \(k\in {\mathcal {M}}\).

-

4.

The value \(|Z|_{t+1}\) can grow too large. Therefore the procedure BDP is recursive and the termination criterion is included to avoid memory overreaching (lines 1.11–1.14). Therefore, the given algorithm is theoretically able to solve any instance without running out of the given amount of memory. Note that when the sup-problem (line 1.14) has been solved, then the upper bound UB can be improved.

-

5.

We have formulated the Bellman equations (14)–(16) and (17)–(19) for the \(Fm|block|C_{\max }\) problem that has not been done before.

Now we inspect an example to illustrate Algorithm 2. Bechmark instance car7 with processing times given in Table 8 is chosen.

Initially, we set \(UB=6888\) and \(H=13\). The DP algorithm returns the optimal schedule \(\pi _{Opt}=(4,5,2,6,7,3,1)\) with

Hence, the optimal makespan is \(Opt=e_7(\pi _{Opt})=6788\). Table 9 shows that the value \(|Z|_3\) exceeds the window width \(H=13\). Therefore, the sub-problem has to be solved with the initial condition

In this case the set T used in equation (17)) is equal to \(\{\{4,6\},\{4,7\},\{1,5\},\{3,5\}\}\). For the sub-problem \(|Z_1|_5=0\) since the lower bound is greater than UB for all extensions of \(\pi =(4,7,3,2)\).

An unanswered question remains about the effective calculation of r. Let \(\pi \) be a given job permutation with \(|\pi |<n\). The vector of departure times \(e(\pi \oplus i)\) can be recursively obtained from \(e(\pi )\) using the following recurrence equation:

with the artificial departure times

An index i in (20) denotes the job given in Algorithm 1. Clearly, the computation of e requires the complexity O(m) in the worst case. However, the calculation of r is more expensive requiring the worst case complexity \(O(nm^2)\). Since \(r_k\ge e_k\) for all \(k\in {\mathcal {M}}\), we can efficiently obtain r in practice using Algorithm 3.

The effectiveness of Algorithm 3 lies in the principle that the use of (1) can be avoided as well as the further search through the set \({\mathcal {J}}\) if job j satisfying \(r_{j,k}(\pi )=e_k(\pi )\) (line 3.6) is found.

We continue to inspect the previously described example (see Tables 4 and 5). Table 10 demonstrates the application of Algorithm 3. For example, if jobs are selected in increasing order of the job numbers, then it is sufficient to calculate \(r_{1,3}(\pi )\) and \(r_{1,4}(\pi )\) in order to obtain \(r_{3}(\pi )\) and \(r_{4}(\pi )\) respectively. However, when \(k=2\) or \(k=5\), it is necessary to calculate \(r_{i,k}\) for all \(i\in \{1,7,9,10,11\}\) since \(r_k(\pi )>e_k(\pi )\) for \(k\in \{2,5\}\).

5 Computational results

We implement the BDP algorithm in C++ programming environment and compiled it with Microsoft Visual Studio. Windows 64 bit operating system with 8 GB RAM memory and 2.8 GHz CPU was used. Several sets of benchmark instances are used: Taillard (1993), Reeves (1995), Heller (1960). The number of jobs is 20 or 50. The number of machines goes from 5 to 20. The effectiveness of the proposed algorithm will be analysed in terms of CPU time, \(|Z_{\max }|\), and |Z| where

is the total number of job permutations and

is the maximum number of job permutations stored at one stage. The value \(|Z|_t\) in (21) stands for the number of job permutations stored at t, i.e.

The termination criterion (line 1.11 in Algorithm 1) depends on the problem and is set taking into account the system limits. In practice, the limit of \(|Z_{\max }|\) is taken as \(4.05\cdot 10^7\) or \(4.1\cdot 10^7\) for \(20\times 20\) type benchmark instances. The sub-problem should be solved when the termination criterion is met (line 1.14 in Algorithm 1).

Table 11 reports the results of the improved BDP algorithm with \(UB_0=BKS\) in Algorithm 2. An asterisk in Table 11 refers to the case when it is previously proven that the best-known solution (BKS) is equal to the optimal makespan, see Companys and Ribas (2011). All remaining BKS are, in general, not optimal.

Table 11 shows that the optimality is proven for all inspected benchmark instances with \(n=20\). Unfortunately, our CPU times cannot be compared with those that are obtained by Companys and Ribas (2011) since the authors did not report the corresponding CPU times. Furthermore, the optimality is proven for 13 out of 14 instances of type \(20\times 10\) and for all \(20\times 15\) and \(20\times 20\) type instances that has not been done before. All \(20\times 10\) type instances were solved within a CPU time less than 30 min except for the instance reC07 that required the CPU time 50 min. \(20\times 15\) type instances were solved in less than two hours. It is significantly harder to prove the optimality for \(20\times 20\) type instances as shows the CPU times that varies from 2-3 hours up to 35 hours. However, our improved BDP algorithm still cannot solve benchmark instances with at least \(n=30\) jobs in a reasonable time limit.

Note that the termination criterion is met for five instances of type \(20\times 20\). However, lines 1.11–1.14 in Algorithm 1 allow us to avoid from the memory overreaching. For instances with m equal to 10 or 5, the proposed BDP algorithm solves all instances being far away from the termination criterion as it is shown under column ‘\(|Z_{\max }|\)’ in Table 11.

Now we apply the BDP algorithm to calculate the lower bounds. We use the following strategy. We start with an initial bound \(B_0\) that is placed in Algorithm 2 by replacing \(UB_0\). Then we run the BDP algorithm. If the used bound is not lowered after the execution of the algorithm, then it is clear that the lower bound cannot be less than \(B_0\). Therefore, the current bound is increased by 10, and we rerun the BDP algorithm. The run is interrupted if there exists t with \(|Z|_t>H\) or the optimal makespan is found. The value \(\sum |Z|\) in Table 12 stands for the sum of all |Z| that is obtained from all previous bounds. The value LBroot denotes the combination of one- and two-machine based lower bound \(\max \{LB^{(1)},LB^{(2)},LB^{(3)}\}\) (see (6), (9), and (13) in Sect. 3) that is obtained at the root of the BDP algorithm, i.e. \(\pi \) is empty and \(S=\emptyset \). The window width H is set as 1000, 10000, or 100000, respectively. For instances of type \(20\times 5\) or \(20\times 10\) we also inspect the case when H is not taken into account thus solving the \(Fm|block|C_{\max }\) problem to optimality. In this case, we do not use BKS values thus obtaining the optimal makespan independently of other algorithms.

Table 12 shows that all Taillard \(20\times 5\) type instances can be solved to optimality in minutes. \(20\times 10\) type instances were solved in one hour on average. We do not try to solve \(20\times 20\) type instances to optimality since even proving the optimality takes a significant time amount.

Now we analyse lower bounds that are obtained from the BDP algorithm. Table 12 shows that the exact method is able to improve the root lower bound by a substantial margin. For \(H=1000\), CPU times are small and do not exceed eight seconds for the largest instances with \(50\times 20\). However, for the most part of instances, CPU times are less than one second. By increasing the window width to \(H=10000\), lower bounds are improved in a few seconds for most instances. Setting \(H=100000\) requires minutes for larger instances. The performance of the BDP algorithm is better if the proportion m / n is greater. If \(m/n=0.1\) (the case \(m=5\) and \(n=50\)), the benefit of the BDP algorithm is negligible for the selected H values. However, if \(m/n=1\), the BDP algorithm is more efficient and LB values are substantially improved by increasing H.

It is natural to inspect other exact algorithms. We compare our BDP algorithm with the exact branch and bound algorithm proposed by Ronconi (2005). This algorithm is denoted as RON in Table 13. CPU times given by RON are comparable with those obtained by the BDP algorithm since RON is coded using a Pentium 4 with 2.4 GHz. Results of Ronconi (2005) are reported in Grabowski and Pempera 2007; Wang et al. 2010; Lin and Ying 2013 as reference values. Ronconi (2005) presented an interesting version of the branch and bound algorithm. However, the one-machine based lower bound is used. Table 13 reports average values for several benchmark sets of Taillard. As shown in Table 13, even the root lower bound, which is based on two machines, is competitive with the final lower bound LBRON provided by RON. For the case \(20\times 20\), LBroot significantly outperforms LBRON on average. It can be observed that LB values can be significantly improved running the BDP algorithm with the fixed H. The higher is H, the better is LB on average. However, the balance between CPU times and the selected H should be respected. Choosing \(H=10000\) guarantees that almost all CPU times are less than those reported by RON. On the other hand, LB values obtained by BDP algorithm can be even drastically higher than those obtained by RON. Examples with a higher proportion m / n highlight the superiority of BDP over RON.

Table 14 reports the average CPU times using different strategies of the BDP algorithm. BDP\(_0\) stands for the results reported in Bautista et al. (2012). BDP1 and BDP2 stands for our version of the BDP algorithm. BDP1 referees to the case when \(UB=BKS\) (see Table 11), and BDP2 referees to the strategy in which the optimal makespan was obtained dynamically increasing the bound (see Table 12). Average relative percentage deviation (ARPD in Table 14) is calculated as

In Table 14, we skip the column ARPD below titles ‘BDP1’ and ‘BDP2’ since the final \(RPD=0\) for all smaller benchmark instances.

Table 14 shows that our version of the BDP algorithm drastically increases the applicability of the BDP approach for solving the \(Fm|block|C_{\max }\) problem. For \(20\times 5\) type instances, CPU times decrease by 85% compared to those of BDP\(_0\). Moreover, BDP\(_0\) fails to solve optimally any instance from Taillard sets and the ARPD value was still high. For the \(20\times 10\) case, CPU times are competitive with those reported in our work. However, the value ARPD is significantly greater than 0 and the quality of final upper bounds obtained by BDP\(_0\) is poor.

Table 14 above shows that BDP\(_1\) is remarkably faster than BDP\(_2\). Therefore, in order to solve small instances to optimality, a better strategy is to use good initial upper bound of the given instance. Several heuristics reported in the literature can obtain BKS (not proving the optimality) for smaller Taillard instances in a negligible time.

6 Conclusions

In this paper, the bounded dynamic programming (BDP) algorithm that solves the blocking flow shop problems is studied. We developed BDP for solving the blocking flow shop problem optimally. The proposed algorithm is able to obtain the optimal solution for instances with up to 400 operations (e.g. 20 jobs and 20 machines), and we report proven optimal solutions for 26 previously open benchmark instances. In addition, we provide new lower bounds for several sets of benchmark instances while requiring a relatively short CPU time.

There is still room for the improvement of the BDP algorithm. The algorithm that calculates an improved head presented in this paper could be improved thus reducing the computational expenses. Another idea could be to use some priority rules for the order of selecting job permutations from which the new job permutations are developed. The BDP approach could be applied for solving simultaneously direct and inverse instances exchanging data between them. How to do it remains an open question. The author believes that the optimal solution of the blocking flow shop problems could be obtained in a more reasonable time for benchmark instances with higher n or m (e.g. \(m=10\) and \(n=30\)) than investigated in the present work.

References

Bautista J, Cano A, Companys R, Ribas I (2012) Solving the \(Fm|block|Cmax\) problem using bounded dynamic programming. Eng Appl Artif Intell 25(6):1235–1245

Companys R, Mateo M (2007) Different behaviour of a double branch-and-bound algorithm on \(Fm|prmu|Cmax\) and \(Fm|block|Cmax\) problems. Comput Oper Res 34(4):938–953

Companys R, Ribas I (2011) New insights on the blocking flow shop problem. Best solutions update. Tech. rep., working paper

Gilmore P, Gomory R (1964) Sequencing a one state-variable machine: a solvable case of the traveling salesman problem. Oper Res 12(5):655–679

Grabowski J, Pempera J (2007) The permutation flow shop problem with blocking. A tabu search approach. Omega 35(3):302–311

Gromicho J, Van Hoorn J, Saldanha-da Gama F, Timmer G (2012) Solving the job-shop scheduling problem optimally by dynamic programming. Comput Oper Res 39(12):2968–2977

Hall N, Sriskandarajah C (1996) A survey of machine scheduling problems with blocking and no-wait in process. Oper res 44(3):510–525

Heller J (1960) Some numerical experiments for an \(M\times J\) flow shop and its decision-theoretical aspects. Oper Res 8(2):178–184

Lenstra J, Rinnooy Kan A, Brucker P (1977) Complexity of machine scheduling problems. Ann Discrec Math 1:343–362

Lin S, Ying K (2013) Minimizing makespan in a blocking flowshop using a revised artificial immune system algorithm. Omega 41(2):383–389

Pan Q, Wang L, Sang H, Li J, Liu M (2013) A high performing memetic algorithm for the flowshop scheduling problem with blocking. IEEE Trans Auto Sci Eng 10(3):741–756

Papadimitriou C, Kanellakis P (1980) Flowshop scheduling with limited temporary storage. J ACM (JACM) 27(3):533–549

Reddi S, Ramamoorthy C (1972) On the flow-shop sequencing problem with no wait in process. Oper Res Quart pp 323–331

Reeves C (1995) A genetic algorithm for flowshop sequencing. Comput Oper Res 22(1):5–13

Ribas I, Companys R, Tort-Martorell X (2011) An iterated greedy algorithm for the flowshop scheduling problem with blocking. Omega 39(3):293–301

Ronconi D (2005) A branch-and-bound algorithm to minimize the makespan in a flowshop with blocking. Ann Oper Res 138(1):53–65

Taillard E (1993) Benchmarks for basic scheduling problems. Eur J Oper Res 64(2):278–285

Tasgetiren M, Pan Q, Suganthan P, Buyukdagli O (2013) A variable iterated greedy algorithm with differential evolution for the no-idle permutation flowshop scheduling problem. Comput Oper Res 40(7):1729–1743

Tasgetiren M, Pan Q, Kizilay D, Suer G (2015) A populated local search with differential evolution for blocking flowshop scheduling problem. In: 2015 IEEE Congress on Evolutionary Computation (CEC), pp 2789–2796

Tasgetiren M, Kizilay D, Pan Q, Suganthan P (2017) Iterated greedy algorithms for the blocking flowshop scheduling problem with makespan criterion. Comput Oper Res 77:111–126

van Hoorn JJ (2016) Dynamic programming for routing and scheduling: Optimizing sequences of decisions

van Hoorn JJ, Nogueira A, Ojea I, Gromicho JA (2016) An corrigendum on the paper: solving the job-shop scheduling problem optimally by dynamic programming. Comput Oper Res

Wang L, Pan Q, Suganthan P, Wang W, Wang Y (2010) A novel hybrid discrete differential evolution algorithm for blocking flow shop scheduling problems. Comput Oper Res 37(3):509–520

Zhang C, Xie Z, Shao X, Tian G (2015) An effective VNSSA algorithm for the blocking flowshop scheduling problem with makespan minimization. In: 2015 International Conference on Advanced Mechatronic Systems (ICAMechS), IEEE, pp 86–89

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Ozolins, A. Improved bounded dynamic programming algorithm for solving the blocking flow shop problem. Cent Eur J Oper Res 27, 15–38 (2019). https://doi.org/10.1007/s10100-017-0488-5

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10100-017-0488-5