Abstract

In this paper, we prove that a \(3 \rho \)-locally maximum volume submatrix \({\hat{A}} \in {\mathbb {R}}^{r \times r}\) in the matrix \(A \in {\mathbb {R}}^{M\times N}\) can be found in \(O\left( MNr \left( \log r + \log _{\rho } r \right) \right) \) operations, and a \(\rho \)-locally maximum volume submatrix for \(\rho \leqslant 3\) can be found in \(O\left( MNr^3 \log _{\rho } r \right) \) operations. Based on these submatrices, it is possible to construct a rank revealing LU decomposition with guarantees for the approximation accuracy in spectral and Chebyshev norms.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

To quickly construct low-rank matrix approximations, it is advantageous to use CUR (or CGR) cross approximation based on a small number of columns \(C \in {\mathbb {R}}^{M \times r}\) and rows \(R \in {\mathbb {R}}^{r \times N}\) of the original matrix \(A \in {\mathbb {R}}^{M\times N}.\) Generator U is commonly chosen as \(U = {\hat{A}}^{-1},\) where \({\hat{A}} \in {\mathbb {R}}^{r\times r}\) is a submatrix at the intersection of rows R and columns C. Approximation \(C {\hat{A}}^{-1} R\) has an important interpretation: it is equivalent to an incomplete LU decomposition of the matrix A with rows and columns rearranged accordingly. In other words, it is given by the first r steps of Gaussian elimination, with the choice of initial rows and columns corresponding to \({\hat{A}}\) submatrix. In practice, \(C {\hat{A}}^{-1} R\) approximations are built using adaptive cross approximation (ACA) [1] or maxvol [2] algorithm. They also play an important role in the construction of tensor trains [3]. If the resulting submatrix turns out to be a submatrix of locally maximum volume (we will give the exact definition later), then there exist error bounds for the \(C {\hat{A}}^{-1} R\) approximation [4, 5].

Note that in many papers [4, 6, 7] the emphasis is placed on the submatrices of maximum volume. On the other hand, in order to achieve the corresponding estimates, it already suffices to find a submatrix of locally maximum volume. The difference between them is very significant, since finding a submatrix of the maximum volume or a volume close to the maximum is an NP-hard problem [8, 9]. Until now, it was not clear whether there is an algorithm that can find a submatrix \({\hat{A}} \in {\mathbb {R}}^{r \times r}\) of almost locally maximum volume in the matrix \(A \in {\mathbb {R}}^{M \times N}\) of a general form in polynomial time. In this paper, such an algorithm is presented for the first time, estimates for the number of steps are given and the accuracy of the obtained approximation is proved. Obtaining a submatrix of almost locally maximum volume allows us to construct cross and incomplete LU decompositions with guaranteed accuracy in the spectral norm and Chebyshev norms.

Before we go any further, it is important to mention, that for symmetric positive semi-definite (SPSD) matrices locally maximum volume search is much easier, as one can only consider principle submatrices. A \(\rho \)-locally maximum volume principle submatrix can be found using the strong rank revealing Cholesky factorization algorithm [10]. Since any SPSD matrix \(A \in {\mathbb {R}}^{N \times N}\) can be written in the form \(B^T B,\) \(B \in {\mathbb {R}}^{N \times N},\) the search of the locally maximum volume principle submatrix is equivalent to the search for \(N \times r\) locally maximum volume columns in B, which requires \(O \left( r \log _{\rho } r \right) \) column exchanges [11, Theorem 2]. Consequently, strong rank revealing Cholesky factorization can also be achieved in \(O \left( r \log _{\rho } r \right) \) exchanges, which require in total \(O \left( N r^2 \log _{\rho } r \right) \) operations, when using the algorithm from [10]. This complexity bound has been proven directly for SPSD matrices in [12] (Section 2.2.3).

2 Definitions

We will consider the problem of finding the submatrix \({\hat{A}}\) in terms of constructing the corresponding incomplete LU decomposition. Incomplete LU decomposition can serve as a criterion to estimate the rank of a matrix if the rows and columns are chosen appropriately. Such LU decompositions are called rank revealing LU (RRLU).

Similarly, RRQR is an abbreviation for the rank revealing QR decomposition. Strong RRQR decomposition plays an important role in constructing estimates and algorithms for RRLU decompositions.

Definition 1

Incomplete QR decomposition with column pivoting

where \(Q \in {\mathbb {R}}^{M \times M}\) is orthogonal and \(R \in {\mathbb {R}}^{r \times r}\) is upper triangular is called “rank revealing”, if

and

where \(p_1(r, N)\) and \(p_2(r, N)\) are polynomials or some functions which can be bounded by polynomials in r and N.

It is called “spectrum revealing” if also

It is called “strong” [13] if also

and

where

is the Chebyshev norm.

Similar definitions apply for RRLU.

Definition 2

Incomplete LU decomposition with row and column pivoting

where \(L_{11} \in {\mathbb {R}}^{r \times r}\) is unit lower triangular and \(U_{11} \in {\mathbb {R}}^{r \times r}\) is upper triangular is called “rank revealing”, if

and

where \(p_1(r, M, N)\) and \(p_2(r, M, N)\) are polynomials or some functions which can be bounded by polynomials in r, M and N.

It is called “spectrum revealing” [14] if also

It is called “strong” [15] if also

and

Construction of RRQR and RRLU decompositions can be considered equivalent to choosing r columns in the case of RRQR and r rows and columns in the case of RRLU, which are given by permutations of \(\Pi ,\) \(\Pi _1\) and \(\Pi _2\) respectively. From an algorithmic perspective, searching for these permutations usually takes more time than constructing RRLU or RRQR based on rows and columns, which are already given. In particular, after choosing columns, constructing an incomplete QR decomposition takes \(O \left( \text {nnz} \left( A \right) r + M r^2 \right) \) operations, where \(\text {nnz} \left( A \right) \) is the number of nonzero elements of the matrix A. The construction of the factors \(L = \left[ {\begin{array}{ll} {L_{11}} \\ {L_{21}} \end{array}} \right] \) and \(U = \left[ {\begin{array}{ll} {U_{11}}&U_{12} \end{array}} \right] \) in the incomplete LU decomposition requires only \(O \left( \left( M + N \right) r^2 \right) \) arithmetic operations (when the rows and columns, corresponding to \(L_{11}\) and \(U_{11},\) are known). Due to the linear complexity of RRLU in terms of matrix sizes, algorithms for finding a large volume submatrix are often used, which also have linear complexity [1, 2]. They do not, however, guarantee high approximation accuracy precisely because that linear complexity does not allow them to consider the entire matrix and select close to optimal rows and columns.

As will be shown, to achieve strong RRLU decomposition, it suffices to choose permutations \(\Pi _1\) and \(\Pi _2\) so that the first r rows and columns correspond to a submatrix of \(\rho \)-locally maximum volume, \(\rho = {\textrm{const}}.\) Thus, the problem of constructing a strong RRLU decomposition is reduced to finding a submatrix of \(\rho \)-locally maximum volume and proving the “strong” properties of the corresponding decomposition.

Definition 3

The volume of the matrix \({\hat{A}} \in {\mathbb {R}}^{n \times r},\) \(n \geqslant r,\) is the following quantity:

In the case of a square matrix \((n = r),\)

Definition 4

A full rank submatrix \({\hat{A}} \in {\mathbb {R}}^{m \times n}\) of a matrix \(A \in {\mathbb {R}}^{M \times N}\) is said to have \(\rho \)-locally maximum volume (hereinafter, estimates with \(\rho \geqslant 1\) are considered) if the permutation of its arbitrary i-th row and/or j-th column with any other row k and/or column l of the matrix A increases its volume by at most \(\rho \) times.

A submatrix is said to have locally maximum volume if \(\rho = 1.\)

Note that, unlike the definition in [16], one row and one column can be replaced at the same time.

Taking into account the definitions above, we can now formulate the main result of this paper.

Theorem 1

Strong RRLU decomposition of rank r of the matrix \(A \in {\mathbb {R}}^{M \times N}\) can be constructed on the basis of a submatrix of \(3 \rho \)-locally maximum volume of size \(r \times r.\) Moreover, such a submatrix can be found in \(O \left( MNr \left( \log r + \log _{\rho } r \right) \right) \) operations, and \(\rho \)-locally maximum volume submatrix with \(\rho \leqslant 3\) can be found in \(O \left( MN r^3 \log _{\rho } r \right) \) operations.

Before we move on to construct a decomposition, which satisfies Theorem 1, it is worth paying attention to other existing methods for constructing RRQR and RRLU decompositions with proven bounds on the number of steps and approximation error, since they can also be used to obtain the required spectral norm approximation accuracy.

3 Strong RRQR

We start with RRQR decomposition, since it can be used as a first step to constructing RRLU decomposition. The following theorem was proved in [13].

Theorem 2

[13] Let the submatrix \(A_1 \in {\mathbb {R}}^{M \times r}\) consisting of r columns of the matrix \(A \in {\mathbb {R}}^{M \times N}\) have \(\rho \)-locally maximum volume.

Then, based on the columns \(A_1,\) one can construct strong RRQR decomposition with

and

Moreover, a \(\rho \)-locally maximal submatrix can be found in \(O\left( \left( nnz(A)r + \left( M+N \right) r^2 \right) \left( 1 + \log _ {\rho } r \right) \right) \) operations, where nnz(A) is the number of nonzero elements in the matrix A.

The complexity bound was first proved in [17] and then improved in [11].

4 Rank revealing LU

Now we move on to constructing RRLU decomposition. Recall the main theorem from [16] and an algorithm for obtaining bounds from it, based on strong RRQR [13]. Note that an alternative algorithm suggested in [16] does not have a bound on the number of steps.

Theorem 3

[16] Let the submatrix \(A_1 \in {\mathbb {R}}^{M \times r}\) consisting of r columns of the matrix \(A \in {\mathbb {R}}^{M \times N}\) have \(\rho \)-locally maximum volume in A. Let the submatrix \({\hat{A}} \in {\mathbb {R}}^{r \times r}\) have \(\rho \)-local maximum volume in \(A_1.\)

Then, based on the columns \(A_1\) and the rows corresponding to \({\hat{A}},\) one can construct a spectrum revealing LU decomposition with

In addition,

and

where \(A_1^{\dagger }\) denotes the Moore–Penrose pseudoinverse of \(A_1.\)

Moreover, submatrices \(A_1\) and \({\hat{A}}\) can be found in \(O\left( (nnz(A)r + (M+N)r^2) \left( 1 + \log _{\rho } r \right) \right) \) operations, where nnz(A) is the number of nonzero elements of the matrix A.

The bound on the complexity follows directly from Theorem 2.

Since in [16] it was not proved that the decomposition is spectrum revealing, we prove it here. To do this, we will apply spectrum revealing estimates for RRQR twice.

Using the spectrum revealing property of QR based on the submatrix \(A_1\) of \(\rho \)-locally maximum volume (Theorem 2), we obtain the inequality

Next, columns \(A_1^T \in {\mathbb {R}}^{r \times M}\) contain the submatrix \({\hat{A}}^T \in {\mathbb {R}}^{r \times r}\) of \(\rho \)-locally of maximum volume, and the QR decomposition \(A_1^T \Pi = Q_1 \left[ R_1 \; B_1 \right] ,\) where \(Q_1 R_1 = {\hat{A}}^T,\) according to Theorem 2 has the following spectrum revealing property:

Since \({\hat{A}} = L_{11} U_{11},\) and \(A_1 = \left[ {\begin{array}{ll} {{L_{11}}} \\ {{L_{21}}} \end{array}} \right] {U_{11}},\) then, combining (6) and (5) together leads to the following inequality:

which proves the spectrum revealing property of the resulting RRLU decomposition.

The columns \(A_1\) can be found numerically using the RRQR algorithm from [13], while the submatrix \({\hat{A}} \in {\mathbb {R}}^{r \times r}\) in the already fixed columns \(A_1 \in {\mathbb {R}}^{M \times r}\) can also be found using the maxvol [2] algorithm.

Although we are not aware of an example, which shows that Theorem 3 may not provide the strong RRLU bounds (2), it can definitely violate the condition (4), which, as we will see later, is crucial for proving the bounds (2).

For example, consider the matrix

with arbitrary small \(\varepsilon \) and its rank 1 approximation, based on the Theorem 3. First, we select the locally maximum volume column, i.e., the column with the maximum length. This is always going to be the first column. Then we select \(1 \times 1\) maximum volume submatrix in this column, which is going to be the element \(1 + \varepsilon .\) It differs from the locally maximum volume submatrix in the first row by a factor arbitrarily close to \(\sqrt{N}.\) Namely, we have \(f = \sqrt{N}/(1 + \varepsilon ),\) which depends on the matrix size and thus contradicts the “strong” property (4). Nevertheless, it does not directly prevent (2), which may or may not hold.

5 Strong RRLU

As we have seen, rank revealing LU from the previous section provides reasonable approximation accuracy in the spectral norm, from which the Chebyshev norm bound can also be derived. Nevertheless, finding close to locally maximum volume submatrix not only additionally guarantees the “strong” properties (2)–(4), but also provides a much better Chebyshev norm bound, which does not depend on matrix sizes [5].

For the first time, it was proposed to search for a submatrix of locally maximum volume in the entire matrix in [15]. In addition, the following criterion was proved. Here Eqs. (8) and (9) provide the same bounds as Eqs. (3) and (4).

Lemma 1

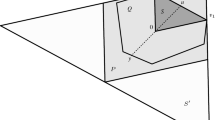

[15] Consider a matrix \(A \in {\mathbb {R}}^{M \times N}\) with the following block structure

with

and

Then submatrix \({\hat{A}} = A_{11} \in {\mathbb {R}}^{r \times r}\) has \(\rho \)-locally maximum volume if and only if the following inequalities are true :

Moreover, when the i-th row of the submatrix \({\hat{A}}\) is replaced by the k-th row of the matrix A, the volume of the submatrix is multiplied by the factor \(\left|\left( C {\hat{A}}^{-1} \right) _{ki} \right|;\) when the j-th column of the submatrix \({\hat{A}}\) is replaced by the l-th column of the matrix A, the volume is multiplied by the factor \(\left|\left( {\hat{A}}^{-1} R \right) _{jl} \right|;\) when replacing a row and a column, the volume is multiplied by the factor \(\left|{{{\left( {{{{\hat{A}}}^{ - 1}}R} \right) }_{jl}}{{\left( {C{{{\hat{A}}}^{ - 1}}} \right) }_{ki}} + {\hat{A}}_{ji}^{ - 1}{{\left( {A - C{{{\hat{A}}}^{ - 1}}R} \right) }_{ kl}}} \right|.\) Hereinafter \({\hat{A}}_{ji}^{-1}\) denotes the ji-th element of \({\hat{A}}^{-1}.\)

Finally, the following theorem was proved.

Theorem 4

[15] Based on rows \(R \in {\mathbb {R}}^{r \times N}\) and columns \(C \in {\mathbb {R}}^{M \times r}\) corresponding to submatrix \({\hat{A}} \in {\mathbb {R}}^{r \times r}\) of matrix \(A \in {\mathbb {R}}^{M \times N}\) such that

and

(it is also sufficient that \({\hat{A}}\) has \(\sqrt{\rho }\)-locally maximum volume in the entire matrix) one can construct a strong RRLU with

In [15] the result was formulated less rigorously, so we repeat its proof here.

Proof

First, we prove that

Like in Lemma 1, we can assume \({\hat{A}}\) is on the intersection of the first r rows and columns, \({\hat{A}} = A_{11},\) where the entire matrix A can be written in block form as

Then \(U_{22} = A_{22} - A_{21} A_{11}^{-1} A_{12}\) and submatrix \({\hat{A}} = A_{11}\) corresponds to the rows \(R = \left[ {\begin{array}{ll} {{A_{11}}}&{{A_{12}}} \end{array}} \right] \) and columns \(C =\left[ {\begin{array}{ll} {{A_{11}}} \\ {{A_{21}}} \end{array}} \right] .\) The error of the corresponding cross approximation is

Consider the matrix

where

Observe that

where we used condition (11). Hereinafter

denotes the spectral norm.

Then, using (13), we can write the following inequalities for the singular values, corresponding to the submatrices of D:

Since \(\textrm{rank}\hspace{2.0pt}A_{11} = r,\) the first r largest singular values of D (12) correspond to the submatrix \(ab A_{11},\) so for the rest of its singular values

Now, let us factorize matrix D as follows:

Then

Spectral norms of the block matrices can be estimated as

Similarly,

Substituting (15) and (16) into (14) leads us to the desired inequality:

Next, we prove the “spectrum revealing” property for \(p_1 \left( r, M, N \right) = p_2 \left( r, M, N \right) \):

To do that, we can use the following factorization:

Then

We again estimate the spectral norms, similar to (15) and (16):

and

Moreover,

as now we have \(i \leqslant r,\) and we have already shown that the first r singular values correspond to the submatrix \(A_{11}.\) Combining together Eqs. (18) through (21), we obtain

Equation (17) follows directly from (22). \(\square \)

However, it is still not clear how to find a submatrix of \(\rho \)-locally maximum volume fast enough. The authors themselves state in [14] that there are still no effective algorithms for its search. Let us show that it is possible to reach the bounds of Theorem 4 fairly quickly.

We will start from the initial \(r \times r\) submatrix, constructed using Gaussian elimination with complete pivoting. For our purposes, we will require the following result, which is also useful for adaptive cross approximation [18].

Lemma 2

[18] Let the rows of the submatrix \({\hat{A}} \in {\mathbb {R}}^{r \times r}\) be obtained by Gaussian elimination with partial pivoting in the columns \(C \in {\mathbb {R}}^{M \times r}.\) Then

Obviously, the same result will hold for complete pivoting. In complete pivoting, we will also have the inequality for \(\left\| {\hat{A}}^{-1} R \right\| _C \leqslant 2^{r-1}.\) Moreover, in [19] the following result was proved.

Lemma 3

[19] Let the rows \(R \in {\mathbb {R}}^{M \times r}\) and columns \(C \in {\mathbb {R}}^{r \times N}\) corresponding to submatrix \({\hat{A}} \in {\mathbb {R}}^{r \times r}\) be the first r rows and columns selected by Gaussian elimination with complete pivoting for the matrix \(A \in {\mathbb {R}}^{M \times N}.\) Then

where \(\gamma _r \leqslant 2 \sqrt{r+1} (r+1)^{\frac{\ln (r+1)}{4}}\) is the growth factor.

Corollary 1

Under the conditions of Lemma 3,

Proof

Consider the submatrix \({\bar{A}} \in {\mathbb {R}}^{(r-1) \times (r-1)},\) obtained after \(r-1\) steps of Gaussian elimination. Applying Lemma 3 to the matrix \({\hat{A}}\) (instead of A), after \(r-1\) steps (instead of r) we have

where \({\hat{R}} \in {\mathbb {R}}^{(r-1) \times r}\) and \({\hat{C}} \in {\mathbb {R}}^{r \times (r-1)}\) are rows and columns of \({\hat{A}},\) corresponding to \({\bar{A}}.\)

Then, by definition of the growth factor, we can estimate the error at the r-th step of Gaussian elimination:

\(\square \)

Combining Lemma 2 and Corollary 1, we see that Gaussian elimination produces a \(\rho \)-locally maximum volume submatrix, albeit with quite a large \(\rho .\)

Corollary 2

Let the rows \(R \in {\mathbb {R}}^{M \times r}\) and columns \(C \in {\mathbb {R}}^{r \times N}\) corresponding to submatrix \({\hat{A}} \in {\mathbb {R}}^{r \times r}\) be the first r rows and columns selected by Gaussian elimination with complete pivoting for the matrix \(A \in {\mathbb {R}}^{M \times N}.\) Then \({\hat{A}}\) is a \(\rho \)-locally maximum volume submatrix with

Proof

Comparing Lemma 2 with the conditions (8) and (9) of Lemma 1, we see that they are satisfied for \(\rho = 2^{r-1}.\) Let us now check the last condition, where we substitute the inequalities, provided by Lemma 2 and Corollary 1:

\(\square \)

Finally, we will need a result on the closeness of the volume of a submatrix of locally maximum volume to the volume of the maximum volume submatrix.

Lemma 4

Let \({\hat{A}} \in {\mathbb {C}}^{r \times r}\) be a \(\rho \)-locally maximum volume in the matrix \(A \in {\mathbb {C}}^{M \times N}.\) Let \(A_M \in {\mathbb {C}}^{r \times r}\) be a submatrix of maximum volume in A. Then

Proof

Let us start with the observation, that there exists a submatrix \({\tilde{A}} \in {\mathbb {C}}^{m \times n}\) with \(m, n \leqslant 2r,\) which contains the submatrices \({\hat{A}}\) and \(A_M.\)

According to Theorem 4, when constructing LU for \({\tilde{A}}\) based on \({\hat{A}},\)

and therefore

Taking the product over all singular values, we get

\(\square \)

We are now ready to prove the main Theorem 1. To do that, we are going to use the following Algorithm 1.

It starts from Gaussian elimination, and then exchanges rows and columns based on the best of three values \(\rho _1,\) \(\rho _2\) and \(\rho _3.\) They correspond to the estimated volume increase after exchanging a row, a column, or both a row and a column respectively. Note, that if it stops, the following inequalities are satisfied:

The first two follow directly from the fact that \(\max \left( \rho _1, \rho _2 \right) \leqslant \rho _n \leqslant \sqrt{\rho }\) (inequality on line 15 is false if “break” happened), and the third one follows from

so

From the conditions (23) we also find that the output submatrix has \(3 \rho \)-locally maximum volume. According to Lemma 1, it is enough to satisfy the conditions (8)–(10). First two follow directly from (23) and the last condition (with \(3 \rho \) in place of \(\rho \)) follows from

Algorithm 1 also does eventually stop, as the volume is multiplied at least by a factor \(\max \left( \rho _1, \rho _2, \rho _3 \right) > \sqrt{\rho } \geqslant 1\) at each iteration. Thus, it can’t visit the same submatrix twice, and there is a finite number of submatrices to check.

If we want to reach a \(\rho \)-locally maximum volume submatrix, we first run Algorithm 1 with \(\rho = \sqrt{3}\) (although any constant greater than 1 would suffice) and then improve it making exchanges following Lemma 1. According to Lemma 1, Algorithm 2 eventually outputs a \(\rho \)-locally maximum volume submatrix. And it also eventually stops for the same reason.

We now estimate the number of steps required to reach \(3 \rho \)-locally maximum volume and \(\rho \)-locally maximum volume submatrices in the following proposition.

Proposition 1

Algorithm 1 stops after \(O \left( r \left( 1 + \frac{1}{\log \rho } \right) \log r \right) \) iterations and has the total computational cost of \(O \left( MNr \left( 1 + \frac{1}{\log \rho } \right) \log r \right) .\)

Algorithm 2 performs no more than \(O \left( r \log _{\rho } r \right) \) additional iterations and has the total computational cost of \(O \left( MNr \left( 1 + \frac{r^2}{\log \rho } \right) \log r \right) .\)

Proof

The starting submatrix \(A_{(0)}\) is chosen using Gaussian elimination with complete pivoting. By Corollary 2, \(A_{(0)}\) has \(\rho _{(0)}\)-locally maximum volume with

Consider now the sequence of submatrices \({\hat{A}} = A_{(s)}\) obtained after s steps of column and/or row exchanges. According to Lemma 4,

where \(A_M \in {\mathbb {R}}^{r \times r}\) is the maximum volume submatrix.

At each step of the Algorithm 1, we make an exchange according to the following rule. We select a new row k and/or a new column l that match the value of

where we substituted the definitions of the indices \(i_1, k_1, j_2, k_2, i_3, j_3, k_3, l_3\) from the Algorithm 1.

Such a choice increases the volume by at least \(\rho '\) times, since we have chosen \(\rho ' = \max \left( \rho _1, \rho _2, \rho _3 \right) .\)

Moreover, if at the s-th step we have exactly \(\rho _{(s)}\)-locally maximum volume submatrix with \(\rho _{(s)} \geqslant 3,\) then \(\rho ' \geqslant \sqrt{\rho _{(s)}/3}.\) Indeed, if \(\max \limits _{j,l} \left|{\hat{A}}^{-1} R \right|_{jl} < \sqrt{\rho _{(s)}/3}\) and \(\max \limits _{i,k} \left|C {\hat{A}}^{-1} \right|_{ki} < \sqrt{\rho _{(s)}/3},\) then

Taking (25) into account, we get that the ratio to the maximum volume changes as

Let us denote

Substituting \(\alpha _{(s)}\) (28) into (27), we can estimate how \(\alpha _{(s)}\) changes after each step:

Thus, to reach, for instance, \(\alpha _{(s)} \leqslant 3^{1/4} < 2^r r^{\frac{2 + \ln r}{8}},\) one would need

steps. After that

Then, while \(\rho ' \geqslant \sqrt{\rho },\) the Algorithm 1 increases the volume by at least a factor of \(\sqrt{\rho }\) at every step. Since we cannot exceed the maximum volume, the algorithm requires at most

steps until termination.

Each step of the algorithm is an update of rank no greater than 2 for the submatrices \({\hat{A}},\) C, and R, since no more than one row and no more than one column is replaced. It follows that the recalculation of \(C {\hat{A}}^{-1},\) \({\hat{A}}^{-1} R\) and \(C {\hat{A}}^{-1} R\) is also of low rank and requires a total of at most O(MN) operations per step, after which the choice of indices i, j, k, l based on \(\rho '\) (26) also takes at most \(O \left( MN \right) \) operations. Given the constraints (31) and (32), the total number of steps is \(s_1 + s_2 = O \left( r \left( 1 + \frac{1}{\log \rho } \right) \log r \right) ,\) and the total computational complexity of the algorithm is \(O \left( MNr \left( 1 + \frac{1}{\log \rho } \right) \log r \right) .\) Gaussian elimination with complete pivoting takes \(O \left( M N r \right) \) operations, which does not affect the general asymptotics.

If it is necessary to search for an \(\rho \)-locally maximum volume with \(\rho \leqslant 3,\) then we can also make exchanges of rows and columns after \(3 \rho \)-locally maximum volume is reached for \(\rho = \sqrt{3}\) (which will take \(O \left( M N r \log r \right) \) operations), but then checking

which is what Algorithm 2 does.

Since in this case we guarantee an increase in volume by at least \(\rho \) times, with Lemma 4 the number of additional steps can be bounded by

However, now at each step it is required to check all \(MN r^2\) variants of the quadruple i, j, k, l, so these (at most) \(s_3\) steps are \(r^2\) times more expensive. Thus, the total cost of the search for \(\rho \)-locally maximum volume is \(O \left( MNr \left( 1 + \frac{r^2}{\log \rho } \right) \log r \right) .\) For \(\rho \leqslant 3\) it simplifies to \(O \left( MNr^3 \log _{\rho } r \right) .\) \(\square \)

Note that, as shown in [5], cross approximation based on a \(\rho \)-locally maximum volume submatrix also allows achieving high accuracy in the Chebyshev norm (which does not depend on matrix sizes). Let us formulate this fact in terms of the LU decomposition.

Theorem 5

[5] Let an incomplete LU decomposition (1) be constructed based on the submatrix \({\hat{A}} = L_{11} U_{11} \in {\mathbb {R}}^{r \times r}\) of \(\rho \)-locally maximal volume. Then

where matrix E is the error of the best approximation in the Chebyshev norm.

So, finding \(3 \rho \)-locally maximum volume submatrix also guarantees a good Chebyshev norm bound.

An algorithm, which allows to guarantee the bound (33) was recently suggested in [20]. Namely, it was shown that a factor of \(\rho \) in the Chebyshev norm can be reached without considering all \(MN r^2\) possible exchanges. Following [20, Lemma 3.15], we show that it is possible to quickly reach a factor of \(\rho \) instead of \(3 \rho \) in Chebyshev norm, even when we only guarantee to find a \(3 \rho \)-locally maximum volume submatrix. It should be noted, however, that the algorithm, described in [20], is asymptotically slower and that reaching Chebyshev norm bound does not, by itself, guarantee either “spectrum revealing” or “strong” RRLU properties (see Definition 2), which are necessary to obtain a \(\rho \)-locally maximum volume submatrix (see Theorem 4).

To guarantee the bound (33), we calculate the value \(\rho _3\) in the Algorithm 1 more efficiently, by directly finding the maximum possible volume multiplier over all i and j. We write the result as Algorithm 3.

Proposition 2

Algorithm 3 requires at most \(O \left( MNr \left( 1 + \frac{1}{\log \rho } \right) \log r \right) \) operations, guarantees the same bounds as Algorithm 1 and also allows to construct an incomplete LU decomposition, such that

Proof

In Algorithm 3, calculating \(\rho _3\) allows additionally checking the condition

for all i and j and for

If, given \(k_3\) and \(l_3,\) it is possible to find i and j such that the condition (35) is violated, then the increase in volume with the corresponding replacement is at least \(\sqrt{\rho }\) times, according to the Lemma 1. Only if this growth is higher than all other options for \(\rho '\) (26), then we make this replacement. We note right away that this will not increase the cost of the algorithm, since the cost of going through all pairs of i and j requires only \(O \left( r^2 \right) \) arithmetic operations, which does not increase the asymptotic cost of one step of the algorithm. In addition, the bound on the number of steps also does not change, as each increase in volume is not lower than before.

Now we show that when the algorithm stops, the inequality (34) holds. We argue from the contrary: if the inequality for the Chebyshev norm fails, then, in particular, it fails for those k and l where the error reaches its maximum. It is these new row \(k_3\) and new column \(l_3\) that we are checking. Consider the submatrix \({\tilde{A}} \in {\mathbb {R}}^{(r+1) \times (r+1)},\) which is the extension of the submatrix \({\hat{A}}\) by the found row \(k_3\) and column \(l_3.\) If inequality (34) is false, then

where \(\Vert {\tilde{E}}\Vert _C\) is the error of the best approximation of \({\tilde{A}}\) in Chebyshev norm, submatrices \({\tilde{L}} \in {\mathbb {R}}^{(r+1) \times r}\) and \({\tilde{U}} \in {\mathbb {R}}^{r \times (r+1)}\) of L and U correspond to the rows and columns of \(\tilde{A}.\) Then from Theorem 5 we get that the submatrix \({\hat{A}}\) does not have \(\rho \)-locally maximum volume inside \({\tilde{A}},\) and hence there is a replacement that includes only the row \(k_3\) or only the column \(l_3,\) increasing the volume by more than \(\rho \) times (which contradicts (23)), or simultaneous replacement of some row i and column j by \(k_3\) and \(l_3,\) which increases the volume by more than \(\rho \) times, which contradicts the additional check (35) that we introduced into the algorithm. This contradiction shows that after termination the inequality (34) must be satisfied. \(\square \)

6 Numerical experiments

In this section, we demonstrate the effectiveness of various methods for constructing rank revealing LU decompositions. The purpose of this chapter is to compare the proposed method for finding submatrices of locally maximum volume with other methods, which search for a large volume submatrix.

The fastest method based on volume maximization is the adaptive cross approximation (ACA) [1]. It uses an incomplete Gaussian elimination with partial pivoting. Each new row and column are chosen corresponding to the search for the maximum element in the current row/column of the error. At each step, only one row and one column are searched, and the final row is chosen as the starting row for the next step. The rows and columns thus added are used to construct the \(C {\hat{A}}^{-1} R\) decomposition, also known as the cross or skeleton [6].

As an alternative to ACA, one can also use an incomplete Gaussian elimination with the choice of the next maximum element in the entire matrix (complete pivoting). This guarantees the stability of the method, but greatly increases its computational complexity.

The maxvol algorithm [2] is more efficient, but also slightly more expensive compared to the ACA method. It allows replacing the chosen rows and columns of the submatrix. After termination, it guarantees that the found submatrix will have \(\rho \)-locally maximum volume inside its corresponding rows and columns. To limit its running time, one can directly prohibit more than r row and column swaps.

Finally, we will look at the rank revealing LU decompositions, based on Theorems 3 and 4.

Complexities and known estimates for all the above methods are shown in Table 1 for the case of a square matrix \(A \in {\mathbb {R}}^{N \times N}.\) From Theorem 3 one can derive the following bound on the Chebyshev norm

where \(E_{\textrm{best}}\) is the matrix of the lowest rank r approximation error in the Chebyshev norm.

To test these methods, we will use the RANDSVD ensemble [21], which consists of matrices of the form

where S is a fixed diagonal matrix of singular values and U, V are independent random orthogonal matrices.

Since in practice the algorithms stop after a small number of replacements even for \(\rho = 1,\) we will use \(\rho = 1,\) although the obtained estimates for the number of steps do not hold in this case.

Table 2 shows the results of rank 20 approximations of random RANDSVD matrices with singular values

It can be seen that, in order to achieve a high-quality approximation, it is usually not necessary to iterate over all rows and columns, and the faster ACA and maxvol algorithms are sufficient. At the same time, although the estimate of Theorem 3 does not imply achieving high accuracy in the Chebyshev norm, and the found submatrix may differ from the submatrix of locally maximum volume, the corresponding algorithm allows one to achieve approximations of higher accuracy and in less time than search for a locally maximum volume in the entire matrix. At the moment, we do not have a theoretical justification for this feature.

Despite the efficiency of ACA and maxvol on average, it is easy to give a lot of examples where it is necessary to consider the entire matrix to build a high accuracy approximation. Consider, for example, an arbitrary matrix with a hidden block structure

where E is some noise and \(\Pi _1\) and \(\Pi _2\) are permutations of rows and columns. In the worst case, when the starting submatrix is located entirely in one of the blocks, replacing the row or column will not allow leaving the current block and moving to another. This is due to the fact that any column in the rows corresponding to \(B_1\) submatrix will either be inside \(B_1\) or consist entirely of noise. Only the simultaneous replacement of a row and a column allows the algorithm to “see” elements from another block. Even if the initial submatrix is not entirely in one of the blocks, such a structure still significantly complicates the search for the optimal submatrix and leads to an increase in the approximation error.

We will take the blocks \(B_1\) and \(B_2\) also from the RANDSVD ensemble with singular values

and E as a random Gaussian matrix in which each element has zero mean and variance \({\mathbb {E}} \left|E_{ij}\right|^2 = 10^{-14},\) so that \(\left\| E \right\| _F \approx 10^{-4}.\) Let us again look for an approximation of rank 20 of such a matrix. The optimum in this case is to take 10 rows and columns from the submatrix \(B_1\) and 10 more from the submatrix \(B_2,\) so that the error in the spectral norm and the Frobenius norm will be about \(10^{-3}.\) The results of the same algorithms for the matrix B are presented in Table 3. As we can see, the adaptive cross and maxvol methods in this case work much worse and do not allow building an approximation with high accuracy.

Overall, the choice of the rank revealing LU decomposition algorithm depends on the requirements for the speed and accuracy of the method. Adaptive cross approximation [1] is significantly faster than all other methods, but also leads to the largest error. The maxvol algorithm [2] reduces the error several times, while maintaining the same asymptotic cost as the adaptive cross. On average, it can even outperform complete pivoting in accuracy (see Table 2). However, like the adaptive cross, it does not provide any guarantees for the accuracy of the approximation, and can partially lose its accuracy if the approximated matrix has some hidden structure. The algorithm corresponding to Theorem 3 guarantees the best accuracy in terms of the spectral norm and also shows the best results in approximation accuracy among all the given algorithms. However, the presence of the factor N in the Chebyshev norm error estimate does not make this bound very useful. The algorithm for finding a locally maximum volume, proposed in this paper, although looks a little less efficient in practice, allows for high accuracy of the approximation in the Chebyshev norm, as well as the “strong” property (Definition 2) of the resulting RRLU decomposition.

The algorithms from Tables 2 and 3 are realised in Fortran and are available in https://github.com/RodniO/Projective-volume-low-rank (file ExampleLU.f90).

7 Conclusion

The obtained estimates show that, in contrast to submatrices of almost maximum volume, the search for which is an NP-hard problem [8, 9], submatrices close to locally maximum volume can be found in polynomial time, which ensures that the various bounds that are often associated with maximum volume submatrices are satisfied. In particular, it is possible to quickly construct cross approximations with guarantees of accuracy in the Chebyshev norm and the spectral norm.

References

Bebendorf, M., Rjasanow, S.: Adaptive low-rank approximation of collocation matrices. Computing 70, 1–24 (2003). https://doi.org/10.1007/s00607-002-1469-6

Goreinov, S.A., Oseledets, I.V., Savostyanov, D.V., Tyrtyshnikov, E.E., Zamarashkin, N.L.: How to find a good submatrix. In: Matrix Methods: Theory, Algorithms, Applications, pp. 247–256 (2010). https://doi.org/10.1142/9789812836021_0015

Oseledets, I.V., Tyrtyshnikov, E.E.: TT-cross approximation for multidimensional arrays. Linear Algebra Appl. 432, 70–88 (2010). https://doi.org/10.1016/J.LAA.2009.07.024

Goreinov, S.A., Tyrtyshnikov, E.E.: The maximal-volume concept in approximation by low-rank matrices. Contemp. Math. 268, 47–51 (2001). https://doi.org/10.1090/conm/280

Goreinov, S.A., Tyrtyshnikov, E.E.: Quasioptimality of skeleton approximation of a matrix in the Chebyshev norm. Dokl. Math. 83(3), 1–2 (2011). https://doi.org/10.1134/S1064562411030355

Goreinov, S.A., Tyrtyshnikov, E.E., Zamarashkin, N.L.: A theory of pseudo-skeleton approximations. Linear Algebra Appl. 261, 1–21 (1997). https://doi.org/10.1016/S0024-3795(96)00301-1

Osinsky, A.I., Zamarashkin, N.L.: Pseudo-skeleton approximations with better accuracy estimates. Linear Algebra Appl. 537, 221–249 (2018). https://doi.org/10.1016/j.laa.2017.09.032

Çivril, A., Magdon-Ismail, M.: On selecting a maximum volume sub-matrix of a matrix and related problems. Theor. Comput. Sci. 410(47–49), 4801–4811 (2009). https://doi.org/10.1016/j.tcs.2009.06.018

Çivril, A., Magdon-Ismail, M.: Exponential inapproximability of selecting a maximum volume sub-matrix. Algorithmica 65(1), 159–176 (2013). https://doi.org/10.1007/s00453-011-9582-6

Gu, M., Miranian, L.: Strong rank revealing Cholesky factorization. Electron. Trans. Numer. Anal. 17, 76–92 (2004)

Osinsky, A.: Rectangular maximum volume and projective volume search algorithms. Preprint at arXiv:1809.02334v1 (2018)

Massei, S.: Some algorithms for maximum volume and cross approximation of symmetric semidefinite matrices. BIT Numer. Math. 62, 195–220 (2022). https://doi.org/10.1007/s10543-021-00872-1

Gu, M., Eisenstat, S.C.: Efficient algorithms for computing a strong rank-revealing QR factorization. SIAM J. Sci. Comput. 17(4), 848–869 (1996). https://doi.org/10.1137/0917055

Anderson, D.G., Gu, M.: An efficient, sparsity-preserving, online algorithm for low-rank approximation. In: Precup, D., Teh, Y.W. (eds.) Proceedings of the 34th International Conference on Machine Learning, vol. 70, pp. 156–165. PMLR, Sydney (2017)

Miranian, L., Gu, M.: Strong rank revealing LU factorizations. Linear Algebra Appl. 367, 1–16 (2003). https://doi.org/10.1016/S0024-3795(02)00572-4

Pan, C.-T.: On the existence and computation of rank revealing LU factorizations. Linear Algebra Appl. 316, 199–222 (2000). https://doi.org/10.1016/S0024-3795(00)00120-8

Boutsidis, C.: Topics in matrix sampling algorithms. PhD thesis, Rensselaer Polytechnic Institute (May 2011)

Bebendorf, M.: Approximation of boundary element matrices. Numer. Math. 86, 565–589 (2000). https://doi.org/10.1007/PL00005410

Cortinovis, A., Kressner, D., Massei, S.: On maximum volume submatrices and cross approximation for symmetric semidefinite and diagonally dominant matrices. Linear Algebra Appl. 593, 251–268 (2020). https://doi.org/10.1016/j.laa.2020.02.010

Cortinovis, A.: Fast deterministic and randomized algorithms for low-rank approximation, matrix functions, and trace estimation. PhD thesis, EPFL (June 2022)

Osinsky, A.I., Zamarashkin, N.L.: On the accuracy of cross and column low-rank maxvol approximations in average. Comput. Math. Math. Phys. 61, 786–798 (2021). https://doi.org/10.1134/S0965542521050171

Acknowledgements

The author thanks Nikolay Zamarashkin for comments that greatly improved the manuscript. The author is also thankful to Stanislav Stavtsev for providing a fast and stable code of the adaptive cross approximation algorithm. The study was supported by a grant from the Russian Science Foundation No. 22-11-35045, https://rscf.ru/project/22-11-35045/.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Osinsky, A. Polynomial time \(\rho \)-locally maximum volume search. Calcolo 60, 42 (2023). https://doi.org/10.1007/s10092-023-00536-2

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10092-023-00536-2