Abstract

The paper deals with the approximation of integrals of the type

where \({\mathrm {D}}=[-\,1,1]^2\), f is a function defined on \({\mathrm {D}}\) with possible algebraic singularities on \(\partial {\mathrm {D}}\), \({\mathbf {w}}\) is the product of two Jacobi weight functions, and the kernel \({\mathbf {K}}\) can be of different kinds. We propose two cubature rules and determine conditions under which they are stable and convergent. Throughout the paper we treat in detail the numerical approximation for kernels which can be nearly singular and/or highly oscillating, by using a bivariate dilation technique. Some numerical examples which confirm the theoretical estimates are also presented.

1 Introduction

The paper deals with the approximation of integrals of the type

where f is a sufficiently smooth function inside \({\mathrm {D}}:=[-\,1,1]^2\) with possible algebraic singularities along the border \(\partial {\mathrm {D}}\), \({\mathbf {w}}\) is the product of two Jacobi weight functions, and \({\mathbf {K}}\) is one of the following kernels

where \(0\ne \omega \in \mathbb {R}\) and g is an oscillatory smooth function with frequency \(\omega \). Moreover, we will also consider kernels which are combinations of the types \({\mathbf {K}}_1\) and \({\mathbf {K}}_2\). In what follows we will also consider

The numerical evaluation of these integrals presents difficulties for “large” \(\omega ,\) since \({\mathbf {K}}_1\) is close to being singular, \({\mathbf {K}}_2\) highly oscillates, while \({\mathbf {K}}_3\) includes both the aforesaid problematic behaviors. In all cases, for these kernels the modulus of the derivatives grows as \(\omega \) grows.

\({\mathbf {K}}_1\)-type kernels appear, for instance, in two-dimensional nearly singular BEM integrals on quadrilateral elements (see for instance [8, 13]). Highly oscillating kernels of the type \({\mathbf {K}}_2\) are useful in computational methods for oscillatory phenomena in science and engineering problems, including wave scattering, wave propagation, quantum mechanics, signal processing and image recognition (see [7] and references therein). The combination of the two aspects, i.e. integrals with nearly singular and oscillating kernels, appears for instance in the solution of problems of propagation in uniform waveguides with nonperfect conductors (see [5] and the references therein).

Here we propose a “product cubature formula” obtained by replacing the “regular” function f by a bivariate Lagrange polynomial based on a set of knots chosen to ensure the stability and the convergence of the rule. Despite the simplicity of these formulas, the computation of their coefficients is not an easy task. In the analogous univariate case, to compute the corresponding coefficients one needs to determine “modified moments” by means of recurrence relations, and to examine the stability of the latter (see for instance [6, 9, 12, 17, 20]). This approach, however, does not appear feasible for multivariate nondegenerate kernels. Here we present a single approach for computing the coefficients of the aforesaid cubature rule when \({\mathbf {K}}\) belongs to the types (2–3). This method, which we call the 2D-dilation method, is based on a preliminary “dilation” of the domain and, by suitable transformations, on the subsequent reduction of the initial integral to a sum of integrals on \({\mathrm {D}}\) again. These manipulations “relax” in some sense the “too fast” behavior of \({\mathbf {K}}\) as \(\omega \) grows. For a correct use of the 2D-dilation method, which could also be applied directly to compute integrals with kernels of the kind (2–3), we determine conditions under which the rule is stable and convergent.

Both rules have advantages and drawbacks. The product integration rule requires a smaller number of evaluations of the integrand function f, while the number of samples involved in the 2D-dilation rule increases as \(\omega \) increases. On the other hand, the product rule involves the computation of \(m^2\) coefficients, which are integrals, and for this reason its computational cost can in general be excessively high. However, as we will show in Sect. 4.2.1, this cost can be drastically reduced when the kernels present some symmetries.

We point out that many of the existing methods for the approximation of multivariate integrals are reliable for very smooth functions (see [1,2,3, 7, 19, 21] and references therein). Some of them treat degenerate kernels [18], others require changes of variable generally not adequate for weighted integrands [7, 8]. Our procedure allows one to compute nondegenerate weighted integrals, with oscillating and/or nearly singular kernels.

The paper is organized as follows. After some notation and preliminary results stated in Sect. 2, Sect. 3 contains the product cubature rule with results on the stability and convergence for a wide class of kernels. In Sect. 4 we describe the 2D-dilation rule in a general form, proving results about the stability and the rate of convergence of the error. Moreover, we give some details for computing the coefficients of the product cubature rule with kernels as in (2–3). In Sect. 5 we present some numerical examples, where our results are compared with those achieved by other methods. In Sect. 6 we suggest some criteria on the choice of the stretching parameter in the 2D-dilation formula, and in Sect. 7 we propose a test for comparing our cubature formulae with respect to their CPU time. Section 8 is devoted to the proofs of the main results. Finally, the “Appendix” provides a detailed exposition of the 2D-dilation formula introduced in Sect. 4.

2 Notations and tools

Throughout the paper the constant \(\mathcal {C}\) will be used several times, with different meanings in different formulas. Moreover, from now on we will write \(\mathcal {C} \ne \mathcal {C}(a,b,\ldots )\) in order to say that \(\mathcal {C}\) is a positive constant independent of the parameters \(a,b,\ldots \), and \(\mathcal {C} = \mathcal {C}(a,b,\ldots )\) to say that \(\mathcal {C}\) depends on \(a,b,\ldots \). Finally, if \(A,B > 0\) are quantities depending on some parameters, we write \(A \sim B,\) if there exists a constant \(0<\mathcal {C}\ne {\mathcal {C}}(A,B)\) s.t.

\(\mathbb {P}_m\) denotes the space of the univariate algebraic polynomials of degree at most m and \(\mathbb {P}_{m,m}\) the space of algebraic bivariate polynomials of degree at most m in each variable.

In what follows we use the notation \(v^{\alpha ,\beta }\) for a Jacobi weight of parameters \(\alpha ,\beta \), i.e. \(v^{\alpha ,\beta }(z):=(1-z)^\alpha (1+z)^\beta , \ z\in (-\,1,1), \ \alpha ,\beta >-\,1.\)
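As a small illustration of this notation, the Jacobi weight can be evaluated as in the following Python sketch. The function names `jacobi_weight` and `product_weight` are hypothetical, and the pairing of the exponents with the two variables in `product_weight` is our assumption about how the product weight \({\mathbf {w}}\) is assembled.

```python
def jacobi_weight(z, alpha, beta):
    """Evaluate v^{alpha,beta}(z) = (1 - z)^alpha * (1 + z)^beta on (-1, 1)."""
    if not (alpha > -1 and beta > -1):
        raise ValueError("alpha and beta must both exceed -1")
    if not (-1 < z < 1):
        raise ValueError("z must lie in the open interval (-1, 1)")
    return (1.0 - z) ** alpha * (1.0 + z) ** beta


def product_weight(x1, x2, a1, b1, a2, b2):
    """Assumed product form: v^{a1,b1}(x1) * v^{a2,b2}(x2)."""
    return jacobi_weight(x1, a1, b1) * jacobi_weight(x2, a2, b2)
```

For negative exponents the weight blows up at the corresponding endpoint, which is why the sketch rejects \(z=\pm 1\).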

Throughout the paper we set

Finally, we will denote by \(N_1^m\) the set \(\{1, 2, \dots , m\}\).

2.1 Function spaces

From now on we set

with \(\gamma _i,\delta _i \ge 0, i=1,2.\) Define

equipped with the norm

Whenever one or more of the parameters \(\gamma _1,\delta _1,\gamma _2,\delta _2\) are greater than 0, functions in \(C_{\varvec{\sigma }}\) can be singular on one or more sides of \({\mathrm {D}}.\) In the case \(\gamma _1=\delta _1=\gamma _2=\delta _2=0\) we set \(C_{\varvec{\sigma }}=C({\mathrm {D}})\).

For smoother functions, i.e. for functions having some derivatives which can be discontinuous on \(\partial {\mathrm {D}}\), for \(r\ge 1\) we introduce the following Sobolev–type space

where \(\varphi _1(x_1)=\sqrt{1-x_1^2}\), \(\varphi _2(x_2)=\sqrt{1-x_2^2}\). We equip \(W_r(\varvec{\sigma })\) with the norm

Denote by \(\displaystyle E_{m,m}(f)_{\varvec{\sigma }}\) the error of best polynomial approximation in \(C_{\varvec{\sigma }}\) by means of polynomials in \(\mathbb {P}_{m,m}\)

In [15] (see also [11]) it was proved that for any \(f\in W_r(\varvec{\sigma })\)

where \(0<{\mathcal {C}}\ne {\mathcal {C}}(m,f)\) and \(\mathcal {M}_r(f,\varvec{\sigma })\) defined in (5).

For \(f,g\in C_{\varvec{\sigma }}\), the following inequality can be easily proved

where \(M=\left\lfloor \frac{m}{2}\right\rfloor .\)

In what follows we use the notation

where \(A\subseteq {\mathrm {D}}\) will be omitted if \(A\equiv {\mathrm {D}}\).

Finally, let us denote by \(L^p_{\varvec{\sigma }}({\mathrm {D}})\), \(1\le p<\infty \) the collection of the functions \(f({\mathbf {x}})\) defined on \({\mathrm {D}}\) such that \(\Vert f\varvec{\sigma }\Vert _p=\left( \int _{{\mathrm {D}}} |f({\mathbf {x}})\varvec{\sigma }({\mathbf {x}})|^p \ d{\mathbf {x}}\right) ^\frac{1}{p}<\infty \). For \(\varvec{\sigma }\equiv 1\), \(L^p_{\varvec{\sigma }}({\mathrm {D}})=L^p({\mathrm {D}})\).

2.2 Gauss–Jacobi cubature rules

For any Jacobi weight \(u=v^{\alpha ,\beta }\), let \(\{p_m(u)\}_{m=0}^\infty \) be the corresponding sequence of orthonormal polynomials with positive leading coefficients, i.e.

and let \(\{\xi _i^{u}\}_{i=1}^m\) be the zeros of \(p_m(u)\).

From now on we set

with \(\alpha _1,\beta _1,\alpha _2, \beta _2 >-\,1.\)

Consider now the tensor-product Gauss–Jacobi rule,

where \(\xi _{i,j}^{w_1,w_2}:=(\xi _i^{w_1},\xi _j^{w_2}),\) \(\lambda _i^{w_j}\), \(i=1,\ldots ,m,\) denote the Christoffel numbers w.r.t \(w_j,\ j=1,2\) and \(\mathcal {R}_{m,m}^{\mathcal {G}}(P)=0\) for any \(P\in \mathbb {P}_{2m-1,2m-1}\).

For its remainder term, the following bound holds (see [15]).

Proposition 1

Let \(f\in C_{\varvec{\sigma }}\). Under the assumption

we have

where \(\mathcal {C}\ne {\mathcal {C}}(m,f)\).
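The exactness property of the tensor-product Gauss–Jacobi rule can be checked numerically. The sketch below is restricted, as an assumption made for simplicity, to the Chebyshev case \(w_1=w_2=v^{-\frac{1}{2},-\frac{1}{2}}\), where zeros and Christoffel numbers are known in closed form; general Jacobi parameters would need a separate routine for the nodes. The names `gauss_chebyshev` and `tensor_gauss` are hypothetical.

```python
import math


def gauss_chebyshev(m):
    """Zeros and Christoffel numbers of the m-point Gauss rule for v^{-1/2,-1/2}."""
    nodes = [math.cos((2 * i - 1) * math.pi / (2 * m)) for i in range(1, m + 1)]
    weights = [math.pi / m] * m
    return nodes, weights


def tensor_gauss(f, m):
    """Tensor-product rule: sum over i, j of lambda_i * lambda_j * f(xi_i, xi_j)."""
    nodes, weights = gauss_chebyshev(m)
    total = 0.0
    for xi, li in zip(nodes, weights):
        for xj, lj in zip(nodes, weights):
            total += li * lj * f(xi, xj)
    return total


# x1^4 * x2^2 has degree at most 2m - 1 = 5 in each variable for m = 3,
# so the rule reproduces the weighted integral 3*pi^2/16 up to rounding.
approx = tensor_gauss(lambda x1, x2: x1 ** 4 * x2 ** 2, 3)
exact = 3.0 * math.pi ** 2 / 16.0
```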

2.3 Bivariate Lagrange interpolation

Let \(\mathcal {L}_{m,m}({\mathbf {w}}, f,{\mathbf {x}})\) be the bivariate Lagrange polynomial interpolating a given function f at the grid points \(\{\xi _{i,j}^{w_1,w_2}, \ (i,j)\in N_1^m\times N_1^m\}\), i.e.

The polynomial \(\mathcal {L}_{m,m}({\mathbf {w}}, f)\in \mathbb {P}_{m-1,m-1}\) and \(\mathcal {L}_{m,m}({\mathbf {w}}, P)= P\), for any \(P\in \mathbb {P}_{m-1,m-1}\). An expression of \(\mathcal {L}_{m,m}({\mathbf {w}}, f)\) is

3 The product cubature rule

Let

\({\mathbf {w}}=w_1w_2\) defined in (8), \({\mathbf {t}}\in \mathrm {T}\) and \({\mathbf {K}}\) defined in \({\mathrm {D}}\times \mathrm {T}\). By replacing the function f in (11) with the Lagrange polynomial \(\mathcal {L}_{m,m}({\mathbf {w}}, f;{\mathbf {x}})\), we obtain the following product cubature rule

where

and

is the remainder term. About the stability and the convergence of the product rule we are able to prove the following

Theorem 1

Let \({\mathbf {w}}({\mathbf {x}})=w_1(x_1)w_2(x_2)\) be defined in (8) and \({\mathbf {t}}\in \mathrm {T}\). If there exists a \(\varvec{\sigma }\) as defined in (4) s.t. \(f\in C_{\varvec{\sigma }}\) and the following assumptions are satisfied

then we have

where \({\mathcal {C}}\ne {\mathcal {C}}(m,f)\). Moreover, the following error estimate holds true

where \({\mathcal {C}}\ne {\mathcal {C}}(m,f)\).

Remark 1

From (17) it follows that, as \(m\rightarrow \infty ,\) the error of the product rule decays at least as fast as the error of best polynomial approximation of the function f alone. This appealing speed of convergence holds under the “exact” computation of the coefficients in \({\varSigma }_{m,m}(f,{\mathbf {t}})\). Their (approximate) evaluation is however not a simple task; only for kernels having special properties can it be performed with a low computational cost. Details on the computation of the coefficients (13) for some kernels will be given in the next section.
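The structure of the product rule of this section, i.e. coefficients obtained by integrating the fundamental Lagrange polynomials against the kernel and then a weighted sum of samples of f, can be sketched in Python as follows. This is only an illustration under stated assumptions: both weights are taken to be Chebyshev weights \(v^{-\frac{1}{2},-\frac{1}{2}}\), and the coefficients are approximated by a reference Gauss–Chebyshev rule with `n_ref` points per variable, used here as a stand-in for the 2D-dilation formula. All function names are hypothetical.

```python
import math


def chebyshev_rule(m):
    """Zeros and Christoffel numbers of the m-point Gauss-Chebyshev rule."""
    nodes = [math.cos((2 * i - 1) * math.pi / (2 * m)) for i in range(1, m + 1)]
    return nodes, [math.pi / m] * m


def basis(nodes, k, x):
    """k-th fundamental Lagrange polynomial on `nodes`, evaluated at x."""
    value = 1.0
    for i, xi in enumerate(nodes):
        if i != k:
            value *= (x - xi) / (nodes[k] - xi)
    return value


def product_rule_coefficients(kernel, m, n_ref=64):
    """Approximate A[r][s] = integral of l_r(x1) l_s(x2) K(x1, x2) w(x1, x2) over D."""
    nodes, _ = chebyshev_rule(m)
    ref_nodes, ref_weights = chebyshev_rule(n_ref)
    # Tabulate every fundamental polynomial at the reference nodes once.
    table = [[basis(nodes, r, y) for y in ref_nodes] for r in range(m)]
    coeffs = [[0.0] * m for _ in range(m)]
    for p, (y1, mu1) in enumerate(zip(ref_nodes, ref_weights)):
        for q, (y2, mu2) in enumerate(zip(ref_nodes, ref_weights)):
            kv = mu1 * mu2 * kernel(y1, y2)
            for r in range(m):
                for s in range(m):
                    coeffs[r][s] += table[r][p] * table[s][q] * kv
    return nodes, coeffs


def product_rule(f, nodes, coeffs):
    """Weighted sum of the samples f(xi_r, xi_s) with the coefficients A[r][s]."""
    m = len(nodes)
    return sum(coeffs[r][s] * f(nodes[r], nodes[s])
               for r in range(m) for s in range(m))
```

Once the coefficients are available, each new function f costs only \(m^2\) evaluations. For the trivial kernel \({\mathbf {K}}\equiv 1\) the coefficients reduce to products of Christoffel numbers, which gives a simple consistency check of the sketch.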

4 The 2D-dilation formula and the computation of the product rule coefficients for some kernels

In this Section we fix our attention on the kernels

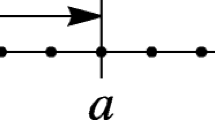

\(0\ne \omega \in \mathbb {R}, \) under the assumption that the function g is sufficiently smooth. The graphs in Figs. 1, 2, 3, 4, 5 and 6 show the behavior of some kernels of the types (18) for some choices of the parameter \(\omega \).

To compute the product rule coefficients (13), we will use the 2D-dilation formula that we describe next. Since the latter can be used in more general cases, we present the dilation formula in a general form, proving its stability and convergence under suitable assumptions. Then we apply it for computing the coefficients \(A_{r,s}({\mathbf {K}}_i,\omega ),\ i=1,2,3\) of the product rule in (12). In this regard, we will also show how to reduce the computational cost in some special cases.

4.1 The 2D-dilation formula

To evaluate integral (1), with \(p=2\) and a kernel of type (18), the main idea is to dilate the integration domain \({\mathrm {D}}\) by a change of variables in order to relax in some sense the “too fast” behavior of \({\mathbf {K}}\) when \(\omega \) grows. Then the new domain \(\varOmega \) is divided into \(S^2\) squares \(\left\{ {\mathrm {D}}_{i,j}\right\} _{(i,j)\in N_1^S\times N_1^S}\) and each integral is mapped back onto \({\mathrm {D}}\). Finally, the integrals are approximated by suitable Gauss–Jacobi rules. A “dilation” technique has already been developed for 1-dimensional unweighted integrals with a nearly singular kernel in Love’s equation [16] and for highly oscillating kernels [4].

Here we describe a dilation method for weighted bivariate integrals having nearly singular kernels, highly oscillating kernels and also for their composition. Consider integrals of the type

where \({\mathbf {K}}({\mathbf {x}},\omega )\) is one of the types in (18). Setting \(\omega _1=|\omega |^\frac{1}{2}\), by the changes of variables \(x_1=\frac{\eta }{\omega _1},\quad x_2=\frac{\theta }{\omega _1},\) we get

and choosing \(d\in \mathbb {R}^+\) s.t. \(S=\frac{ 2\omega _1}{d}\in \mathbb {N},\) we have

where \(\tau _0=\omega _1^{2}\) and

By mapping each square \(D_{i,j}\) into the unit square \({\mathrm {D}}\) and setting for \( k\in N_1^S\)

and for \((i,j)\in N_1^S\times N_1^S\)

we get

where the cubature formula \(\varPsi _{m,m}(F,\omega )\) has been obtained by applying suitable Gauss–Jacobi cubature rules to evaluate the \(S^2\) integrals in (19) and

is the remainder term. Deferring to the “Appendix” the explicit expression of \(\varPsi _{m,m}(F,\omega )\), we state now a result about the stability and the convergence of the rule.

Theorem 2

Assume \({\mathbf {w}}\) as in (8) and \({\mathbf {K}}(\cdot ,\omega )\) as in (18). Then, if there exists a \(\varvec{\sigma }\) as defined in (4) s.t. \(F\in C_{\varvec{\sigma }}\) and the following assumptions are satisfied

then

Moreover, for any \(F\in W_r(\varvec{\sigma })\) under the assumptions \(g\in C^\infty (\varOmega )\), \(\varOmega \equiv [-\omega _1,\omega _1]^2\), \(S\ge 2\),

we get

where

and \(0<{\mathcal {C}}\ne {\mathcal {C}}(F,m)\).
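The dilation, subdivision and mapping steps of Sect. 4.1 can be sketched in Python for the simplest setting. The sketch assumes \({\mathbf {w}}\equiv 1\), so that every subsquare is treated by the same Gauss–Legendre rule (the Gauss–Jacobi rule with \(\alpha =\beta =0\)); with genuine Jacobi weights the squares touching \(\partial {\mathrm {D}}\) would need different rules, which are omitted here. The nearly singular test integrand is also our own choice, picked because its exact integral is known. The names `gauss_legendre` and `dilation_rule` are hypothetical.

```python
import math


def gauss_legendre(m):
    """m-point Gauss-Legendre nodes and weights on [-1, 1], by Newton's method."""
    nodes, weights = [], []
    for i in range(1, m + 1):
        x = math.cos(math.pi * (i - 0.25) / (m + 0.5))  # classical initial guess
        for _ in range(100):
            p_prev, p = 1.0, x  # Legendre P_0(x), P_1(x)
            for k in range(2, m + 1):
                p_prev, p = p, ((2 * k - 1) * x * p - (k - 1) * p_prev) / k
            dp = m * (x * p - p_prev) / (x * x - 1.0)  # derivative P_m'(x)
            step = p / dp
            x -= step
            if abs(step) < 1e-15:
                break
        nodes.append(x)
        weights.append(2.0 / ((1.0 - x * x) * dp * dp))
    return nodes, weights


def dilation_rule(h, omega, m, d=2.0):
    """Approximate the integral of h over D = [-1, 1]^2 (unit weight assumed)."""
    omega1 = math.sqrt(abs(omega))
    S = max(1, round(2.0 * omega1 / d))  # squares per side of the dilated domain
    d = 2.0 * omega1 / S                 # adjust d so that S squares tile exactly
    half = d / 2.0
    nodes, weights = gauss_legendre(m)
    total = 0.0
    for i in range(S):
        c1 = -omega1 + (i + 0.5) * d     # centre of the square along eta
        for j in range(S):
            c2 = -omega1 + (j + 0.5) * d
            for x, wx in zip(nodes, weights):
                for y, wy in zip(nodes, weights):
                    eta, theta = c1 + half * x, c2 + half * y
                    total += wx * wy * h(eta / omega1, theta / omega1)
    # Jacobians: (d/2)^2 for each square, 1/omega1^2 for the dilation.
    return total * half * half / omega1 ** 2


omega = 400.0
h = lambda x1, x2: 1.0 / ((x1 * x1 + 1.0 / omega) * (x2 * x2 + 1.0 / omega))
exact = (2.0 * math.sqrt(omega) * math.atan(math.sqrt(omega))) ** 2
approx = dilation_rule(h, omega, m=10, d=2.0)
```

With \(d=2\) the rule uses \((mS)^2\) samples, here with \(S=20\). Choosing \(d=2\omega _1\) gives \(S=1\), i.e. a single Gauss–Legendre rule on \({\mathrm {D}}\), which is far less accurate for this integrand.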

4.2 Computation of the product rule coefficients for kernels (18)

We provide now some details for computing the coefficients in (12) when the kernels are of the types in (18), i.e.

We point out that, without loss of generality, we assume \({\mathbf {x}}_0\) to be fixed data of the problem, so that \(\mathrm {T}=\mathbb {R}\) and \({\mathbf {t}}=\omega \). By using the 2D-dilation formula (19) of degree m with \(F=\ell _{r,s}^{w_1,w_2},\) we have

About the rate of convergence of (23) we state the following

Corollary 1

Under the hypotheses of Theorem 2, for \(m>\frac{ d}{2} e^{\frac{1}{\omega _1}}\) and for \(d\ge 2\), \(\omega _1\ge 1\), the following error estimate holds

where

and \({\mathcal {C}}\ne {\mathcal {C}}(m,\omega ).\)

Following the previous work-scheme, the evaluation of the coefficient \(A_{r,s}({\mathbf {K}},\omega )\) requires \(m^4S^2\) long operations, with S increasing as \(\omega \) increases. However, as the numerical tests will show, for smooth integrand functions f the product rule gives accurate results for “small” values of m, independently of the choice of the parameter \(\omega \).

4.2.1 Cases of complexity reduction

In some cases the computational complexity can be drastically reduced. Assume \(w_1(x_1)=v^{\alpha _1,\alpha _1}(x_1)\). Slightly changing the notation set in Sect. 2, let us denote by \(\{\xi _i^{w_1}\}_{i=-M}^M,\) \(M=\left\lfloor \frac{m}{2} \right\rfloor \), the zeros of \(p_m(w_1)\), with \(\xi _0^{w_1}=0\) for m odd. Since \(w_1\) is an even weight function, \(\xi _i^{w_1}=-\xi _{-i}^{w_1},\quad i=1,2,\dots ,M\), and we have

Thus, assuming \({\mathbf {w}}({\mathbf {x}})=w_1(x_1)w_2(x_2)\), \(w_1=v^{\alpha _1,\alpha _1}\), \(w_2=v^{\alpha _2,\alpha _2}\), if \({\mathbf {K}}({\mathbf {x}},\omega )\) is symmetric through the axes \(x_1=0\) and \(x_2=0\), i.e.

it is

and the global computational cost has a reduction of \(75\%\). If in addition \(\alpha _1 = \alpha _2\), i.e. \({\mathbf {w}}=v^{\alpha _1,\alpha _1}v^{\alpha _1,\alpha _1},\) since it is also

a reduction of 87.5% is achieved.

In the case \({\mathbf {K}}({\mathbf {x}},\omega )\) is odd w.r.t. both the coordinate axes, i.e.

and \({\mathbf {w}}=v^{\alpha _1,\alpha _1}v^{\alpha _2,\alpha _2},\) it is

CC (short for Computational Complexity) has a reduction of 75%. If, in addition, \(\alpha _1 = \alpha _2\), the following additional relations hold

and CC has a reduction of 87.5%.
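The reductions quoted in this subsection can be checked by counting how many coefficients remain to be computed once symmetric ones are identified. The Python sketch below assumes that the symmetry relations link \(A_{r,s}\) with \(A_{\pm r,\pm s}\) (possibly up to sign) and, when \(\alpha _1=\alpha _2\), also with \(A_{s,r}\); this reading of the relations and the function name `independent_coefficients` are our assumptions.

```python
def independent_coefficients(m, swap_symmetry=False):
    """Count classes of index pairs (r, s) under r -> -r and s -> -s,
    optionally also under the exchange (r, s) -> (s, r)."""
    M = m // 2
    # Symmetric index set -M..M; the index 0 is present only for odd m.
    indices = [i for i in range(-M, M + 1) if i != 0 or m % 2 == 1]
    classes = set()
    for r in indices:
        for s in indices:
            a, b = abs(r), abs(s)
            if swap_symmetry and a > b:
                a, b = b, a
            classes.add((a, b))
    return len(classes)


m = 32
total = m * m                                      # 1024 coefficients
by_reflection = independent_coefficients(m)        # 256, a 75% reduction
with_exchange = independent_coefficients(m, True)  # 136, about 86.7%
```

For even m the reflections alone leave exactly \(m^2/4\) coefficients. With the exchange symmetry the count is \(M(M+1)/2\), so the reduction approaches 87.5% as m grows.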

4.3 Degenerate kernels

Whenever the kernels satisfy \({\mathbf {K}}({\mathbf {x}},\omega )=k_i(x_1,\omega )k_j(x_2,\omega )\), for \(i,j\in \{4,5\}\) where

with \(x_0\in (-\,1,1),\ \lambda \in \mathbb {R}^+,\) \(G\in C^\infty ([-\omega ,\omega ]),\) the coefficients take the form

where

Also in this case the computational effort is drastically reduced, since \(B_r(k_j,\omega ),\) \( \ j\in \{4,5\},\) can be approximated by implementing the 1-D dilation method (see [4, 16]).

5 Numerical examples

In this section we present some examples to test the cubature rule proposed in Sect. 3, comparing our results with those obtained by other methods. To be more precise, we approximate each integral by the cubature rule (12) for increasing values of m, choosing three different values of \(\omega \) and computing the coefficients in (12) via the 2D-dilation rule. In each example we also report the numerical results obtained by the Gauss–Jacobi cubature rule (shortly GJ-rule) and those achieved by the straightforward application of the 2D-dilation rule (2D-d). For the first two tests, involving nearly singular kernels \({\mathbf {K}}_1(\cdot ,\omega )\), we also provide the results obtained by the iterated \(\sinh \) transformation proposed by Johnston et al. [8] (shortly JJE-method). The integrals in Examples 3 and 4 involve oscillatory kernels of the type \({\mathbf {K}}_2(\cdot ,\omega )\). In Example 3 our results are compared with those achieved by the method proposed by Huybrechs and Vandewalle [7] (shortly HV-method), since the function f satisfies their assumptions of convergence. The last two tests involve “mixed” kernels and for them we compare our results with those achieved by the JJE-method related to the kernel \({\mathbf {K}}_1(\cdot ,\omega )\) with function f replaced by \(f{\mathbf {K}}_2(\cdot ,\omega )\).

We point out that all the computations were performed in double precision (machine epsilon \(eps \approx 2.22044e{-}16\)) and in the tables the symbol “–” means that machine precision has been achieved.

Example 1

The function \(f\in W_r(\varvec{\sigma })\) with \(\varvec{\sigma }=1\) for any \(r\ge 1.\) In Table 1 the results obtained by implementing the product rule (12) show that the machine precision is attained at \(m=16\) for any choice of \(\omega \). The 2D-d, whose results are given in Table 2, behaves similarly. The JJE-method (Table 3) also converges quickly, achieving almost satisfactory results, although it requires the Gauss–Laguerre cubature rule of order \(m=1024\) to obtain 13 digits. Finally, as expected, with the GJ-rule a progressive loss of precision is detected as \(\omega \) increases, until the results become completely wrong (Table 4).

Example 2

Also in this case the function \(f\in W_r(\varvec{\sigma })\) for any \(r\ge 1.\) In Table 5 the results obtained by implementing the product rule (12) show that the machine precision is attained for \(m=32\). In this case the value of the seminorm grows faster than in the previous example. For instance, \(\mathcal {M}_{10}(f)\sim 2.5\times 10^4\). The 2D-d, whose results are given in Table 6, behaves similarly. Here the JJE-method (Table 7) converges more slowly than in the previous example, achieving 8–9 exact digits. The changes of variables are applied to the whole integrand, including two Chebyshev weights, and this explains the poor behavior. As in the previous test, with the GJ-rule a progressive loss of precision occurs as \(\omega \) increases, up to \(\omega =10^6\), for which the values are completely wrong (Table 8).

Example 3

The function \(f\in W_r(\varvec{\sigma })\) for any \(r\ge 1,\) with \(\varvec{\sigma }=1.\) By Table 9, containing the results of the product rule (12), the machine precision is attained with \(m=16\) for \(\omega _1=10, 10^2\), while for greater values of \(\omega _1\) the convergence is slower. The behavior of the 2D-d, whose results are in Table 10, is similar; as in other examples, 1–2 final digits are lost w.r.t. the product rule \(\varSigma _{m,m}(f)\). The HV-method in Table 11 gives very good results and this is not surprising, since, according to the convergence hypotheses of the HV-method, the oscillator (\(x+y\)) and the function f are both analytic. Finally, for large \(\omega _1\), the GJ-rule gives no correct result for \(m\le 512\), achieving acceptable results only for \(m=1024\) (see Table 12).

Example 4

The function \(f\in W_{11}(\varvec{\sigma })\) for \(\sigma _1=\sigma _2=1.\) By Table 13, which contains the results of the product rule (12), the machine precision is attained with \(m=512\) for \(\omega =10^2\), while for greater values of \(\omega \) the convergence is slower, although 14 digits are still obtained. However, the results are consistent with the theoretical estimate (17) combined with (6), since the seminorm \(\mathcal {M}_{11}(f)\sim 10^{11}\). The behavior of the 2D-d, whose results are in Table 14, is similar; as in other examples, 1–2 final digits are lost w.r.t. the cubature formula \(\varSigma _{m,m}(f)\). Since the assumptions of the HV-method are not satisfied, we did not implement it. Finally, for large \(\omega \), the GJ-rule gives no correct result for \(m\le 512\), achieving acceptable results for \(m=1024\) only (see Table 15).

Example 5

The integral contains a mixed-type kernel, with \(f\in W_r(\varvec{\sigma })\) for any r. The results of the product rule (12) given in Table 16 are consistent with the theoretical estimates, since the values of the seminorms are very large. For instance for \(r=20\), it is \(\mathcal {M}_r(f)\sim 10^{18}.\) Comparing our results with those obtained with the 2D-d given in Table 17, we observe that about 2 digits are lost w.r.t. the product rule. In the absence of other procedures, we have forced the use of the JJE-method, by which for \(\omega = 100\) the results present 12 correct digits, while with larger \(\omega \) the results are completely wrong (see Table 18). However, this bad behavior is to be expected, since the oscillating factor is not covered by their method. Finally, the results in Table 19 show that the GJ-rule is unreliable for large \(\omega \).

Example 6

We conclude with a test on a mixed-type kernel. Here the function \(f\in W_7(\varvec{\sigma })\). Since the seminorm \(\mathcal {M}_r(f)\sim 6\times 10^3\), according to the theoretical estimate, 15 exact (not always significant) digits are computed for \(m=512\) (Table 20). The results are comparable with those achieved by the 2D-d (Table 21), while the GJ-rule results in Table 23, as well as those achieved by the JJE-method in Table 22, give poor approximations.

Fig. 7 Error behavior for different choices of d in Example 1

6 The choice of the parameter d

Now we want to discuss briefly how to choose the number \(S^2\) of the domain subdivisions in the 2D-dilation rule, or equivalently how to set the length d of the squares' side, since \(S=\frac{2\omega _1}{d}\). By the error estimate (22), assuming the contribution of \(\mathcal {N}_{r}(F,{\mathbf {K}})\) to be negligible and fixing the desired computational accuracy tolerance, m and S are inversely proportional. Therefore, whenever it is useful to have m as small as possible, we have to take a larger S. We point out that this behavior depends on the slower rate of convergence of the involved Gauss–Jacobi cubature rules when the “stretching” parameter S is “too small” or d is too large.

Of course, the previous considerations are not conclusive for the choice of S. However, by numerical evidence, a good “compromise” to reduce m seems to be \(S=\left\lfloor \omega _1\right\rfloor \) and therefore \(d=\frac{2\omega _1}{S}\sim 2\). To show this behavior, we plot the relative errors achieved for some values of d chosen between 2 and \(\omega _1\), for the first two numerical tests of Sect. 5 (see Figs. 7, 8).
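The suggested choice of the parameters is easy to turn into a small helper. The Python sketch below, with the hypothetical name `dilation_parameters`, computes \(S=\left\lfloor \omega _1\right\rfloor \) and \(d=\frac{2\omega _1}{S}\) for \(\omega _1=|\omega |^{\frac{1}{2}}\), together with the number \((mS)^2\) of samples of the integrand required by the 2D-dilation rule. The guard `max(1, ...)` for \(\omega _1<1\) is our addition.

```python
import math


def dilation_parameters(omega, m):
    """Return (S, d, samples) for the choice S = floor(omega1), d = 2*omega1/S."""
    omega1 = math.sqrt(abs(omega))
    S = max(1, math.floor(omega1))  # guard against S = 0 when omega1 < 1
    d = 2.0 * omega1 / S
    samples = (m * S) ** 2          # function evaluations of the 2D-dilation rule
    return S, d, samples


S, d, samples = dilation_parameters(1.0e4, 16)
```

For \(\omega =10^4\) and \(m=16\) this gives \(S=100\), \(d=2\) and \(2.56\times 10^6\) samples, which makes explicit how the cost of the 2D-dilation rule grows with \(\omega \).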

Fig. 8 Error behavior for different choices of d in Example 2

7 A comparison between product and 2D-dilation rules

We propose a comparison between the proposed rules w.r.t. the time complexity. Tables 24 and 25 contain the computational times (in seconds) obtained by implementing the product rule \(\varSigma _{m,m}(f)\) and the 2D-dilation rule \(\varPsi _{m,m}(f,\omega )\) for the integrals given in Examples 1–2 of Sect. 5. For each of them the times have been computed for \(\omega =10^2, 10^4, 10^6\), by implementing both algorithms in Matlab version R2016a, on a PC with an Intel Core i7-2600 CPU 3.40 GHz and 8 GB of memory. We point out that times related to the product formula \(\varSigma _{m,m}(f)\) include those spent for computing the coefficients \(\{A_{r,s}({\mathbf {K}},\omega )\}_{(r,s)\in N_1^m\times N_1^m}\).

As one can see, as long as m is small the timings required by the product rule are slightly longer than those required by the 2D-dilation rule. However, in Example 2, with \(m=32\) and for all the values of \(\omega \), the timings required by the product rule are slightly shorter than those required by the 2D-dilation rule. Indeed, 2D-dilation requires \((mS)^2\) samples of the integrand function f, where S increases with \(\omega \). Thus the global time strongly depends on the computing time of the function. In Example 2 the time for evaluating \(f({\mathbf {x}})=\log ^{\frac{15}{2}}(x_1+x_2+4)\) is longer than the time for computing \(f({\mathbf {x}})=e^{x_1x_2}\) in Example 1. This variability cannot happen in the product rule, where the number of function samples depends only on m and is independent of \(\omega \). In any case, since in the product rule the main effort is due to the computation of its coefficients, it is preferable to use it when the kernels present some symmetry properties, by virtue of which the number of coefficients to be computed is drastically reduced (see Sect. 4.2.1).

8 Proofs

First we recall a result needed in the proofs that follow. Let

be the m-th Fourier sum of the univariate function \(h\in L^p_{\sigma _1}((-\,1,1))\) and let \(S_{m,m}({\mathbf {w}},G)\) be the bivariate \(m-\)th Fourier sum in the orthonormal polynomial systems \(\{p_m(w_1)\}_m\), \(\{p_m(w_2)\}_m\) of a given function \(G\in L^p_{\varvec{\sigma }}({\mathrm {D}}),\) i.e.

where \(S_{m,m}({\mathbf {w}},G)\in \mathbb {P}_{m,m}\). For \(1<p<\infty \), under the assumptions (see for instance [15, p.2332])

then, for any \(f\in C_{\varvec{\sigma }}\)

Proof of Theorem 1

First we prove

which implies (16). For any fixed \({\mathbf {t}}\in \mathrm {T}\), let \(g_{m}=\operatorname {sgn}(\mathcal {L}_{m,m}({\mathbf {w}};f) {\mathbf {K}}({\mathbf {x}},{\mathbf {t}}))\). Then,

By Hölder inequality

Now, taking into account (26) and the assumptions (15)

Moreover,

and taking into account the relationship (see [14, p. 673 (14)])

it follows

Combining last inequality and (32) with (28), (27) follows. To prove (17), start from

where \(\displaystyle P_{m-1,m-1}^*({\mathbf {x}})\) is the best approximation polynomial of \(f\in C_{\varvec{\sigma }}\). By Hölder inequality and taking into account (14) and (15) it follows

Since by (27)

(17) follows combining (35), (36) with (34). \(\square \)

Proof of Theorem 2

First we prove (21). Starting from expression (38) given in the “Appendix”, we obtain the following bound:

where

and using (33), we have

Then, taking into account the assumption (20) and Proposition 1, in view of the boundedness of \({\mathbf {K}}(\cdot ,\omega )\), we can conclude

Now we prove (22). By (38) taking into account (10) and under the assumption (20) we have

Taking into account (7) we get

where

and

Since for \(h\in \{1,2\}\) and \((i,j)\in N_1^{S}\times N_1^{S}\)

we have

and therefore, taking into account (6), by (37) it follows

where

and the thesis follows. \(\square \)

Proof of Corollary 1

In order to use Theorem 2 with \(r=2m\), we have to estimate \(\mathcal {N}_{2m}(\ell _{r,s}^{w_1,w_2},{\mathbf {K}}).\) By iterating the weighted Bernstein inequality (see for instance [10, p.170])

and taking into account that under the hypotheses (15) [10, Th.4.3.3, p.274 and p.256]

with \(\mu \) defined in (25), we can conclude

Hence,

and by (22) and using

for \(m>\frac{d}{4} e^\frac{1}{\omega _1}\), the thesis follows. \(\square \)

References

1. Caliari, M., De Marchi, S., Sommariva, A., Vianello, M.: Padua2DM: fast interpolation and cubature at the Padua points in Matlab/Octave. Numer. Algorithms 56(1), 45–60 (2011)

2. Davis, P.J., Rabinowitz, P.: Methods of Numerical Integration, 2nd edn. Computer Science and Applied Mathematics. Academic Press Inc, Orlando, FL (1984)

3. Da Fies, G., Sommariva, A., Vianello, M.: Algebraic cubature by linear blending of elliptical arcs. Appl. Numer. Math. 74, 49–61 (2013)

4. De Bonis, M.C., Pastore, P.: A quadrature formula for integrals of highly oscillatory functions. Rend. Circ. Mat. Palermo (2) Suppl. 82, 279–303 (2010)

5. Dobbelaere, D., Rogier, H., De Zutter, D.: Accurate 2.5-D boundary element method for conductive media. Radio Sci. 49, 389–399 (2014)

6. Gautschi, W.: On the construction of Gaussian quadrature rules from modified moments. Math. Comput. 24, 245–260 (1970)

7. Huybrechs, D., Vandewalle, S.: The construction of cubature rules for multivariate highly oscillatory integrals. Math. Comput. 76(260), 1955–1980 (2007)

8. Johnston, B.M., Johnston, P.R., Elliott, D.: A sinh transformation for evaluating two dimensional nearly singular boundary element integrals. Int. J. Numer. Methods Eng. 69, 1460–1479 (2007)

9. Lewanowicz, S.: A fast algorithm for the construction of recurrence relations for modified moments. Appl. Math. (Warsaw) 22(3), 359–372 (1994)

10. Mastroianni, G., Milovanović, G.V.: Interpolation Processes. Basic Theory and Applications. Springer Monographs in Mathematics. Springer, Berlin (2008)

11. Mastroianni, G., Milovanović, G.V., Occorsio, D.: A Nyström method for two variables Fredholm integral equations on triangles. Appl. Math. Comput. 219, 7653–7662 (2013)

12. Mastronardi, N., Occorsio, D.: Product integration rules on the semiaxis. In: Proceedings of the Third International Conference on Functional Analysis and Approximation Theory, vol. II (Acquafredda di Maratea, 1996). Rend. Circ. Mat. Palermo (2) Suppl. No. 52, Vol. II 605–618 (1998)

13. Monegato, G., Scuderi, L.: A polynomial collocation method for the numerical solution of weakly singular and singular integral equations on non-smooth boundaries. Int. J. Numer. Methods Eng. 58, 1985–2011 (2003)

14. Nevai, P.: Mean convergence of Lagrange Interpolation. III. Trans. Am. Math. Soc. 282(2), 669–698 (1984)

15. Occorsio, D., Russo, M.G.: Numerical methods for Fredholm integral equations on the square. Appl. Math. Comput. 218, 2318–2333 (2011)

16. Pastore, P.: The numerical treatment of Love’s integral equation having very small parameter. J. Comput. Appl. Math. 236, 1267–1281 (2011)

17. Piessens, R.: Modified Clenshaw–Curtis integration and applications to numerical computation of integral transforms, Numerical integration (Halifax, N.S., 1986), NATO Advanced Science Institutes Series C, Mathematical and Physical Sciences, vol. 203, pp. 35–51. Reidel, Dordrecht (1987)

18. Serafini, G.: Numerical approximation of weakly singular integrals on a triangle. In: AIP Conference Proceedings 1776, 070011. https://doi.org/10.1063/1.4965357 (2016)

19. Sloan, I.H.: Polynomial interpolation and hyperinterpolation over general regions. J. Approx. Theory 83(2), 238–254 (1995)

20. Van Deun, J., Bultheel, A.: Modified moments and orthogonal rational functions. Appl. Numer. Anal. Comput. Math. 1(3), 455–468 (2004)

21. Xu, Y.: On Gauss–Lobatto integration on the triangle. SIAM J. Numer. Anal. 49(2), 541–548 (2011)

Acknowledgements

We want to thank the anonymous referee for the careful reading of the manuscript and for the valuable comments. We are also grateful to Professor G. Mastroianni for his helpful suggestions.

Additional information

The authors were partially supported by University of Basilicata (local funds) and by National Group of Computing Science GNCS–INDAM. The second author was supported in part by the CUC (Centro Universitario Cattolico). The Research has been accomplished within the RITA “Research ITalian network on Approximation”.

Appendix

Now we derive the expression of the cubature rule (2). Recalling the settings

we get

where

and

Then, approximating each integral by the proper Gauss–Jacobi rule depending on the couple of weight functions arising in the integral, according to the notation in (9), we get

Cite this article

Occorsio, D., Serafini, G. Cubature formulae for nearly singular and highly oscillating integrals. Calcolo 55, 4 (2018). https://doi.org/10.1007/s10092-018-0243-x

DOI: https://doi.org/10.1007/s10092-018-0243-x