Abstract

In this article, an adaptive neural network (NN) control problem is studied for nonstrict-feedback multi-input multi-output (MIMO) nonlinear systems with unmeasurable states and unknown hysteresis. First, an NN state observer is constructed to estimate the unmeasurable states. The unknown nonlinear terms are approximated online by radial basis function neural networks (RBF-NNs). Then, the explosion of complexity is addressed by dynamic surface control (DSC), which avoids repeated differentiation of the virtual control signals. Furthermore, a nonlinear gain feedback function is introduced into the backstepping design procedure to improve the dynamic performance of the closed-loop system. Meanwhile, to meet practical engineering requirements, a prescribed performance control (PPC) technique is implemented to guarantee that the tracking error converges to a preassigned region. With the proposed control scheme, all closed-loop signals are semi-globally uniformly ultimately bounded (SGUUB). Finally, simulation results demonstrate the superiority and effectiveness of the proposed controller.


1 Introduction

In recent years, the control of nonlinear systems has received considerable research attention, and numerous control strategies have been developed, such as adaptive control [1], sliding-mode control [2], and robust control [3]. Since the backstepping control strategy was proposed [4], it has become a preeminent tool for the stability analysis and control design of uncertain nonlinear systems. Notably, backstepping was first proposed for nonlinear systems with unmatched conditions, where the system nonlinearities are assumed to be known in advance. At the same time, intelligent control tools such as fuzzy logic systems (FLSs) and neural networks (NNs) have become useful for handling control problems that involve unknown nonlinear functions. Consequently, many adaptive backstepping-based NN (and fuzzy) control strategies have been developed for uncertain nonlinear systems [5,6,7,8]. Zuo [5] studied an adaptive backstepping control method for nonlinear multiagent systems using the approximation ability of FLSs. Xu et al. [6] presented a fuzzy adaptive controller for SISO strict-feedback nonlinear systems with actuator quantization and mismatched external disturbances. Soon afterwards, the results in [6] were extended to MIMO nonlinear systems in [7]. However, the computational complexity caused by repeated differentiation of the virtual control laws is unavoidable in backstepping, and it grows markedly as the system order increases. To avoid this issue, dynamic surface control (DSC) was reported, which passes the intermediate control signals through first-order filters so that these signals need not be differentiated within the backstepping framework.
Moreover, the DSC technique relaxes the smoothness requirements on the reference trajectories and the nonlinear functions. Building on the DSC approach, several DSC-based results covering both theoretical investigations and practical implementations have been developed in [9,10,11].

Not all states of practical engineering systems are easily measurable. Even when they can be measured, additional sensors are required, which increases the complexity of the control system. Therefore, it is worthwhile to investigate adaptive control strategies for nonlinear systems with unmeasurable states. To reduce the cost of the control system, a series of observer-based adaptive output-feedback control methods have been published [12,13,14,15,16,17,18,19,20]. For example, adaptive output-feedback neural control of strict-feedback nonlinear systems with input dead-zone constraints was considered in [13]. By developing an extended state observer, a novel backstepping-based adaptive prescribed control approach was proposed for hydraulic systems under full-state constraints [14]. Zhang et al. [15] proposed an adaptive formation containment control algorithm for linear multiagent systems with unmeasurable states and bounded unknown input. To estimate the unmeasured states of uncertain singular systems with unknown time-varying delay and nonlinear input, Tang et al. [16] proposed a simplified observer and an associated adaptive sliding mode control method. A fuzzy observer-based adaptive control problem was reported in [17] for nonstrict-feedback systems under function constraints. Li et al. [18] investigated learning-observer-based neural adaptive tracking of multiagent systems with quantized input. Wu et al. [19] focused on adaptive quantized tracking control of nonstrict-feedback nonlinear systems with sensor faults. In addition, based on a neural state observer and the command-filtered backstepping method, adaptive control of a pneumatic active suspension subject to sensor faults and vertical constraints was studied in [20].

On the other hand, reducing the tracking error is a long-standing yet challenging problem in tracking control [21]. To restrict the system output within desired boundaries, Bechlioulis and Rovithakis originally proposed the prescribed performance control (PPC) technique in [22], which is a powerful tool for meeting high-accuracy control requirements in various control systems. The work in [23] studied an adaptive control problem for constrained nonlinear systems using the barrier Lyapunov function method. Wang et al. [24] developed a finite-time adaptive PPC strategy for strict-feedback nonlinear systems with dynamic disturbances, actuator faults, and time-varying parameters. By utilizing FLSs and PPC, an adaptive prescribed performance control strategy was designed for nonlinear multiagent systems in [25]. Considering quantized input and tracking accuracy, a self-scrambling gain feedback controller was proposed for MIMO nonlinear systems [26]. In [27], an adaptive control technique was developed for SISO nonlinear systems with hysteresis input, which uses PPC to guarantee that the tracking error converges to a preassigned region. The authors of [28] proposed a reinforcement learning-based control algorithm with prescribed performance for an unmanned surface vehicle.

Motivated by the above observations, this paper focuses on adaptive PPC for a class of MIMO nonlinear systems with hysteresis input and unmeasurable states. Compared with existing related results, the main advantages of this article are as follows.

(1) Designing an adaptive control algorithm based on nonlinear error feedback for MIMO nonstrict-feedback nonlinear systems with unmeasurable states and unknown hysteresis is nontrivial. Moreover, compared with the results for MIMO strict-feedback (or pure-feedback) nonlinear systems [29, 30] and SISO nonlinear systems [31], the control approach developed in this paper applies to more general situations.

(2) Unlike [32,33,34], this article further considers the hysteresis input and the unmeasurable states that arise in practical engineering systems. The unmeasurable states are estimated by an NN state observer, and the effect of the unknown hysteresis is compensated by an adaptive update law.

(3) In comparison with the traditional linear feedback control methods in [35,36,37], this study employs a novel nonlinear gain function in the backstepping design, which improves the dynamic performance of the closed-loop system and whose properties facilitate the closed-loop stability analysis. Meanwhile, the explosion of complexity is addressed by DSC, and the prescribed tracking performance is guaranteed.

2 Problem formulation and preliminaries

2.1 Plant description

The plant is described in the following nonstrict-feedback form

where \(n_m, m\in N_+\), \(n_m>1\), \(m>1\), \(X=[x_1^\mathrm{T}, x_{2}^\mathrm{T}, \dots , x_m^\mathrm{T}]^\mathrm{T}\) with \(x_m=[x_{m,1}, x_{m,2}, \dots , x_{m, n_m}]^\mathrm{T}\) is the state vector, \(y_m\in \mathbb {R}\) is the system output, \(f_{m,j}(\cdot )\) is an unknown smooth nonlinear function, and \(d_{m,j}(t)\) is a continuous time-varying disturbance satisfying \(|d_{m,j}(t)|\le d_{m,j}^*\), with \(d_{m,j}^*\) an unknown constant. \(u_m(v_m)\in \mathbb {R}\) is the system input, i.e., the output of the backlash-like hysteresis, and \(v_m\in \mathbb {R}\) is the input of the backlash-like hysteresis.

Remark 1

It should be noted that the nonlinear system (1) can represent many practical systems, such as unmanned surface vehicles [28] and connected inverted pendulum systems [10]. Thus, it is of practical significance to investigate the adaptive control problem for such MIMO nonlinear systems.

Following [38], the relationship between the hysteresis input \(v_m\) and the system input \(u_m(v_m)\) is described by

where \(\varrho _m\), \(c_m\), and \(D_m\) denote the unknown parameters and \(\varrho _m>0\), \(c_m>D_m\).

Furthermore, (2) can be solved explicitly as

where \(v_{m,0}\) and \(u_{m,0}\) are the initial conditions of \(v_m\) and \(u_m\), respectively. \(h_m(v_m)\) is bounded, which satisfies \(|h_m(v_m)|\le h_m^*\) and \(h_m^*\) is the unknown bound.
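The display of (2) is not reproduced in this version. Assuming it is the widely used backlash-like model \(\dot{u}_m=\varrho _m|\dot{v}_m|(c_mv_m-u_m)+D_m\dot{v}_m\) from [38], the sketch below integrates it for a sinusoidal input with the Example 1 parameters (\(\varrho _m=1\), \(c_m=5\), \(D_m=0.5\)) and checks that \(h_m=u_m-c_mv_m\) stays bounded, in line with the bounded \(h_m(v_m)\) stated above. The input \(v(t)=2\sin t\), the step size, and the function name are illustrative assumptions.

```python
import math

def simulate_backlash(v_of_t, rho=1.0, c=5.0, D=0.5, u0=0.0, t_end=20.0, dt=1e-3):
    """Integrate du = rho*|dv|*(c*v - u) + D*dv along a sampled input v(t).

    Assumed backlash-like model of [38]; rho, c, D default to Example 1.
    """
    steps = int(round(t_end / dt))
    v_prev, u = v_of_t(0.0), u0
    vs, us = [v_prev], [u]
    for k in range(1, steps + 1):
        v = v_of_t(k * dt)
        dv = v - v_prev                                  # increment of the hysteresis input
        u += rho * abs(dv) * (c * v_prev - u) + D * dv   # Euler step taken in v
        v_prev = v
        vs.append(v)
        us.append(u)
    return vs, us

vs, us = simulate_backlash(lambda t: 2.0 * math.sin(t))
h = [u - 5.0 * v for u, v in zip(us, vs)]   # h(v) = u - c*v
```

For this discretization, each step moves \(h\) toward \(\mp (c_m-D_m)/\varrho _m\) by a convex combination whenever \(\varrho _m|\Delta v|\le 1\), so \(|h|\le (c_m-D_m)/\varrho _m=4.5\) holds exactly when the hysteresis starts from \(h=0\).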

From (3), it is easy to rewrite system (1) as the following matrix form

where

\(L_m=[l_{m,1},\dots ,l_{m,n_m}]_{n_m\times 1}^\mathrm{T}\), \(B_{m,j}=[\underbrace{0,\dots ,1}_{j},\dots ,0]_{n_m\times 1}^\mathrm{T}\), \(d_m=[d_{m,1}(t), \dots , d_{m,n_m-1}(t), d_{m,n_m}(t)+h_m(v_m)]_{n_m\times 1}^\mathrm{T}\), \(G_m=[1,\dots ,0]_{1\times n_m}\).

Some assumptions are made throughout this paper.

Assumption 1

For all \(\imath _1, \imath _2\), there exists a positive constant \(k_{m,j}\) such that the nonlinear function \(f_{m,j}(\cdot )\) satisfies the following inequality

Assumption 2

The reference signal \(y_{m,d}(t)\) and its derivatives \(\dot{y}_{m,d}(t)\) and \(\ddot{y}_{m,d}(t)\) are continuous and bounded.

Lemma 1

[39] For all \((\ell _1, \ell _2)\in \mathbb {R}^2\), the following inequality holds

where \(\hbar >0\) and \(1/p+1/q=1\) with \(q>1\) and \(p>1\).
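Lemma 1 is Young's inequality; since its display is missing here, the sketch below assumes the common form \(\ell _1\ell _2\le \frac{\hbar ^p}{p}|\ell _1|^p+\frac{1}{q\hbar ^q}|\ell _2|^q\) and checks it numerically on a small grid. The grid values are arbitrary.

```python
import itertools

def young_rhs(l1, l2, hbar, p):
    """(hbar^p/p)|l1|^p + |l2|^q/(q hbar^q), with q the conjugate exponent of p."""
    q = p / (p - 1.0)          # ensures 1/p + 1/q = 1
    return (hbar ** p / p) * abs(l1) ** p + abs(l2) ** q / (q * hbar ** q)

def check_young(values, hbars, ps):
    """True if l1*l2 <= young_rhs(...) at every grid point (up to rounding)."""
    for l1, l2, hbar, p in itertools.product(values, values, hbars, ps):
        rhs = young_rhs(l1, l2, hbar, p)
        if l1 * l2 > rhs * (1.0 + 1e-12) + 1e-12:
            return False
    return True
```

Equality is reached when \(\hbar ^p|\ell _1|^p=|\ell _2|^q/\hbar ^q\) and \(\ell _1\ell _2\ge 0\); for example \(p=q=2\), \(\hbar =1\), \(\ell _1=\ell _2=1\) gives \(1\le 1\).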

2.2 Neural network

Owing to their universal approximation property, RBF-NNs can be used to approximate unknown nonlinearities [33]. Specifically, for a continuous function \(F_{nn}(\wp )\) over a compact set \(\Omega _{\wp }\subset \mathbb {R}^q\), the NN \(W^{*\mathrm{T}}\varphi (\wp )\) can approximate it to any desired accuracy \(\xi ^*>0\), which is expressed as

where \(W^*\) denotes the ideal constant weight vector, defined as \(W^*=\arg \min _{W\in \mathbb {R}^i}[\sup _{\wp \in \Omega _\wp }|F_{nn}(\wp )-W^\mathrm{T}\varphi (\wp )|]\), \(\wp \in \Omega _\wp\) is the input vector, \(W=[W_1, \dots , W_i]^\mathrm{T}\in \mathbb {R}^i\) is the weight vector with the number of neural nodes \(i>1\), and \(\varphi (\wp )=[\varphi _1(\wp ),\dots ,\varphi _i(\wp )]^\mathrm{T}\) is the basis function vector, where each \(\varphi _q(\wp )\) is chosen as a Gaussian function of the following form

where \(\underline{\mu }_q=[\underline{\mu }_{q1},\underline{\mu }_{q2}, \cdots ,\underline{\mu }_{ql}]^\mathrm{T}\) and \(\bar{\sigma }_q\) are the center of the receptive field and the width of the Gaussian function, respectively. Furthermore, the Gaussian basis function vector satisfies \(\varphi _{m,j}^\mathrm{T}(\cdot )\varphi _{m,j}(\cdot )\le \zeta _m\) for a positive constant \(\zeta _m\).
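The Gaussian display is missing here, so the sketch below assumes the common form \(\varphi _q(\wp )=\exp (-\Vert \wp -\underline{\mu }_q\Vert ^2/\bar{\sigma }_q^2)\). It uses the Example 1 centers and width, replicates each scalar center across all input dimensions (an illustrative assumption), and evaluates \(W^\mathrm{T}\varphi\) with \(W_{1,1}(0)\) from Example 1. Because \(0<\varphi _q\le 1\), the bound \(\varphi ^\mathrm{T}\varphi \le \zeta _m\) holds with \(\zeta _m\) equal to the number of nodes.

```python
import numpy as np

def gaussian_basis(x, centers, width):
    """phi_q(x) = exp(-||x - mu_q||^2 / width^2) for each node q (assumed form).

    Each scalar in `centers` is replicated across every input dimension,
    i.e. mu_q = centers[q] * ones(dim) (illustrative assumption).
    """
    x = np.asarray(x, dtype=float)
    diffs = x[None, :] - centers[:, None]          # shape (nodes, dim)
    return np.exp(-np.sum(diffs ** 2, axis=1) / width ** 2)

# Centers and width from Example 1; x is the initial state X(0) of Example 1.
centers = np.array([-1.5, -1.0, -0.5, 0.0, 0.5, 1.0, 1.5])
phi = gaussian_basis([0.2, 0.01, -0.23, 0.0], centers, width=2.0)
W = np.array([0.2, 0.1, 0.1, 0.0, 0.1, 0.0, 0.0])   # W_{1,1}(0) from Example 1
nn_output = W @ phi                                   # W^T phi(x)
```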

3 Neural network observer

Since only the system output is directly measured, an observer is needed to estimate the unmeasurable states \(x_{m,j}\), \(j=2,\dots , n_m\), of each subsystem.

By utilizing the RBF-NNs, the following approximation result can be obtained

where \(\hat{X}\) and \(W_{m,j}\) are the estimates of X and \(W_{m,j}^*\), respectively, and \(W_{m,j}^*\) is defined as

where \(\Omega _{m,j}\) and \(\hat{U}_{m,j}\) represent compact regions of \(W_{m,j}\), X and \(\hat{X}\).

Similar to [17], the following NN state observer is designed

where \(\hat{c}_m\) is the estimate of \(c_m\).

The gain vector \(L_m\) is chosen such that \(A_m\) is Hurwitz. Then, for any given \(Q_m=Q_m^\mathrm{T}>0\), there exists a positive definite matrix \(P_m=P_m^\mathrm{T}>0\) satisfying \(A_m^\mathrm{T}P_m+P_m A_m=-Q_m\).
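The definition of \(A_m\) is not shown here; assuming the usual companion form built from \(L_m\) (first column \(-L_m\), shifted identity above the diagonal), the sketch below checks that \(A_m\) is Hurwitz and solves \(A_m^\mathrm{T}P_m+P_mA_m=-Q_m\) with NumPy only, via Kronecker vectorization. The gains \(l_{1,1}=l_{1,2}=25\) come from Example 1; \(Q_m=I\) is an illustrative choice.

```python
import numpy as np

def observer_matrix(L):
    """Assumed companion form A = [-L | [I_{n-1}; 0]] built from the gain vector L."""
    L = np.asarray(L, dtype=float)
    n = L.size
    A = np.zeros((n, n))
    A[:, 0] = -L                 # first column holds -l_1, ..., -l_n
    A[:-1, 1:] = np.eye(n - 1)   # shifted identity (chain of integrators)
    return A

def solve_lyapunov(A, Q):
    """Solve A^T P + P A = -Q using vec(AXB) = (B^T kron A) vec(X), column-major."""
    n = A.shape[0]
    I = np.eye(n)
    K = np.kron(I, A.T) + np.kron(A.T, I)
    p = np.linalg.solve(K, -Q.flatten(order="F"))
    return p.reshape((n, n), order="F")

A = observer_matrix([25.0, 25.0])            # l_{1,1} = l_{1,2} = 25 (Example 1)
Q = np.eye(2)                                # illustrative Q_m
P = solve_lyapunov(A, Q)
is_hurwitz = bool(np.all(np.linalg.eigvals(A).real < 0))
```

With these gains the characteristic polynomial is \(s^2+25s+25\), whose roots are both real and negative, so a unique \(P_m>0\) exists.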

Define the observation error as \(\tilde{x}_m=x_m-\hat{x}_m\). It satisfies

where \(\tilde{W}_{m,j}=W_{m,j}^*-W_{m,j}\), \(\xi _m=[\xi _{m,1},\dots , \xi _{m,n_m}]^\mathrm{T}\), \(\triangle F_m=[\triangle f_{m,1}, \dots , \triangle f_{m,n_m}]^\mathrm{T}\), \(\triangle f_{m,j}= f_{m,j}(X)- f_{m,j}(\hat{X})\) and \(\tilde{c}_m=c_m-\hat{c}_m\).

Consider the Lyapunov function candidate

Taking the time derivative of \(V_{m,0}\) yields

From Lemma 1, Assumption 1 and \(\varphi _{m,j}^\mathrm{T}(\hat{X})\varphi _{m,j}(\hat{X})\le \zeta _m\), one gets

where \(\xi _m^*=[\xi _{m,1}^*,\dots ,\xi _{m,n_m}^*]^\mathrm{T}\), \(d_m^*=[d_{m,1}^*,\dots ,d_{m,n_m}^*]^\mathrm{T}\).

Substituting (12) and (13) into (11) yields

where \(q_{m,0}=\lambda _{\min }(Q_m)-4-\Vert P_m\Vert ^2\sum _{j=1}^{n_m}k_{m,j}^2\) and \(M_{m,0}=\Vert P_m\Vert ^2\Vert d_m^*\Vert ^2+\Vert P_m\Vert ^2\Vert \xi _{m}^*\Vert ^2\).

4 Main results

This section presents the backstepping controller design, organized in four parts: the prescribed performance function, the nonlinear gain function and its properties, the nonlinear-gain-based adaptive NN controller designed via backstepping, and the stability analysis. The design is augmented with first-order filters to avoid the explosion of complexity and with an adaptive law to handle the hysteresis input.

4.1 Prescribed performance

Define the following smooth monotone decreasing function

where \(\mu _{0,m,1}\) is the initial value of \(\mu _{m,1}(t)\), \(\mu _{\infty ,m,1}\) is its ultimate value, and \(a_{m,1}\) is a positive constant. Clearly, \(\lim _{t\rightarrow \infty }\mu _{m,1}(t)=\mu _{\infty ,m,1}\). The prescribed steady-state and transient bounds are defined by the following constraint conditions

or

where \(0<\lambda _{m,1}\le 1\) and \(e_m(t)\) denotes the tracking error.

Furthermore, the error transformation function is selected as

where \(\iota =1\) if \(e_{m}\ge 0\) and \(\iota =0\) otherwise. \(\bar{\phi }_{m,1}(t)\) and \(\underline{\phi }_{m,1}(t)\) are chosen as follows: if \(e_m(0)\ge 0\), \(\bar{\phi }_{m,1}(t)=\mu _{m,1}(t)\) and \(\underline{\phi }_{m,1}(t)=-\lambda _{m,1}\mu _{m,1}(t)\); otherwise, \(\bar{\phi }_{m,1}(t)=\lambda _{m,1}\mu _{m,1}(t)\) and \(\underline{\phi }_{m,1}(t)=-\mu _{m,1}(t)\).
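The performance function and the sign-dependent choice of bounds can be sketched as follows. The exponential form \(\mu (t)=(\mu _0-\mu _\infty )e^{-at}+\mu _\infty\) matches the function used in Example 1; reading (16)/(17) as the strict bounds \(\underline{\phi }_{m,1}(t)<e_m(t)<\bar{\phi }_{m,1}(t)\) and taking \(\lambda _{m,1}=0.5\) are assumptions for illustration.

```python
import math

def performance_function(t, mu0=0.5, mu_inf=0.015, a=2.0):
    """mu(t) = (mu0 - mu_inf) * exp(-a t) + mu_inf; defaults follow Example 1."""
    return (mu0 - mu_inf) * math.exp(-a * t) + mu_inf

def prescribed_bounds(t, e0, lam=0.5):
    """(lower, upper) = (phi_lower(t), phi_upper(t)), selected by the sign of e(0).

    lam = 0.5 is a hypothetical value of lambda_{m,1} in (0, 1].
    """
    mu = performance_function(t)
    if e0 >= 0:
        return -lam * mu, mu
    return -mu, lam * mu

def within_bounds(t, e, e0, lam=0.5):
    """True if phi_lower(t) < e < phi_upper(t) (assumed strict form of (16)/(17))."""
    lower, upper = prescribed_bounds(t, e0, lam)
    return lower < e < upper
```

The asymmetric bound (\(\lambda _{m,1}<1\) on the side opposite to \(e_m(0)\)) limits overshoot, while \(\mu _{\infty ,m,1}\) sets the steady-state accuracy.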

Lemma 2

[40] The transformed error \(\digamma _{m,j}\) satisfies \(0<\digamma _{m,j}(t)<1\) if and only if \(\mu _{0,m,j}\), \(\mu _{\infty ,m,j}\), \(a_{m,j}\) and \(\lambda _{m,j}\) are such that (16) and (17) hold.

4.2 Nonlinear gain function

Design the following smooth nonlinear gain function:

where \(\nu >0\) and \(o>1\). \(\nu\) is the junction point between the linear and nonlinear gain terms in (19). If the variable \(\imath\) is small \((|\imath |<\nu )\), \(\Gamma (\imath )\) uses the linear part to achieve stable regulation; if \(\imath\) is large \((|\imath |>\nu )\), \(\Gamma (\imath )\) uses the nonlinear gain part to suppress aggressive inputs. Moreover, o is the damping coefficient, and the slope of the nonlinear part can be adjusted by tuning o.

The nonlinear gain function (19) has the following properties.

Property 1

The nonlinear gain function \(\Gamma (\imath )\) is continuously differentiable and monotonically increasing, and its derivative with respect to \(\imath\) satisfies

Property 2

Let \(\Gamma _f(\imath )=\Gamma _d(\imath )\cdot \imath +\Gamma (\imath )\). Then \(\Gamma _f(\imath )\) is monotonically increasing, and \(\Gamma _f(\imath )\cdot \imath \ge \Gamma (\imath )\cdot \imath\) holds, since \(\Gamma _f(\imath )\imath -\Gamma (\imath )\imath =\Gamma _d(\imath )\imath ^2\ge 0\).

Property 3

Define \(\Gamma _h(\imath )=\frac{\Gamma _f(\imath )}{\imath }\); then \(\Gamma _h(\imath )>0\) for any \(\imath \ne 0\). Define \(\Gamma _h^+\) as

As a result, if \(\imath \ne 0\), \(\Gamma _f(\imath )/\Gamma _h^+(\imath )=\imath\), and if \(\imath =0\), \(\Gamma _f(\imath )/\Gamma _h^+(\imath )=\Gamma _f(\imath )/2=\imath\).
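Equation (19) is not shown here. The stability proof in Section 4.4 evaluates \(\Gamma (s)s\) piecewise, which suggests \(\Gamma (\imath )=\imath\) for \(|\imath |\le \nu\) and \(\Gamma (\imath )=\mathrm{sgn}(\imath )[\ln (1+\ln o\cdot (|\imath |-\nu ))/\ln o+\nu ]\) for \(|\imath |>\nu\); this reconstruction is an assumption. The sketch below implements it together with \(\Gamma _d\), \(\Gamma _f\) and \(\Gamma _h^+\), using \(o=10\) and \(\nu =0.005\) from Example 1, so that Properties 1 to 3 can be checked numerically.

```python
import math

def gamma(s, nu=0.005, o=10.0):
    """Assumed nonlinear gain Gamma(s): linear for |s| <= nu, logarithmic beyond."""
    if abs(s) <= nu:
        return s
    ln_o = math.log(o)
    return math.copysign(math.log(1.0 + ln_o * (abs(s) - nu)) / ln_o + nu, s)

def gamma_d(s, nu=0.005, o=10.0):
    """Derivative dGamma/ds; equals 1 on both sides of |s| = nu (C^1 junction)."""
    if abs(s) <= nu:
        return 1.0
    return 1.0 / (1.0 + math.log(o) * (abs(s) - nu))

def gamma_f(s, nu=0.005, o=10.0):
    """Gamma_f(s) = Gamma_d(s) * s + Gamma(s) (Property 2)."""
    return gamma_d(s, nu, o) * s + gamma(s, nu, o)

def gamma_h_plus(s, nu=0.005, o=10.0):
    """Gamma_f(s)/s for s != 0 and 2 at s = 0 (Property 3)."""
    return 2.0 if s == 0 else gamma_f(s, nu, o) / s
```

Under this reading, \(\Gamma _h^+\) equals 2 for small errors and drops to about 0.83 at \(\imath =1\), which matches the large-gain/small-gain behavior described for Fig. 1.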

To illustrate the properties and advantages of the nonlinear function \(\Gamma _f(\imath )\), Fig. 1 compares linear feedback with nonlinear feedback. When the error variable \(\imath\) is small, \(\Gamma _f(\imath )\) provides a large control gain, which gives the closed-loop system a faster transient response; when \(\imath\) is large, \(\Gamma _f(\imath )\) provides a small control gain, which helps the system avoid the adverse effects of disturbances. In contrast, linear feedback provides only a constant gain, which can result in poorer dynamic performance of the closed-loop system.

Remark 2

By exploiting a large control gain for small tracking errors and a small control gain for large tracking errors, the controller proposed in this article is well suited to practical engineering applications. However, although nonlinear gain feedback achieves better dynamic performance than linear feedback, it produces composite functions, which make the stability analysis more difficult than the standard analysis based on a quadratic Lyapunov function.

Remark 3

If a traditional quadratic Lyapunov function is used, the stability of the system cannot be established. Thus, by employing Properties 1 and 2, a new Lyapunov function containing the term \(\Gamma (\imath )\imath\) is proposed in the backstepping design procedure. In addition, the time derivative of \(\Gamma (\imath )\imath\) is \(\Gamma _f(\imath )\dot{\imath }\), since \(\frac{d}{dt}[\Gamma (\imath )\imath ]=\Gamma _d(\imath )\dot{\imath }\imath +\Gamma (\imath )\dot{\imath }\), which further facilitates the stability analysis.

4.3 Controller design

Define the change of coordinates as follows

where \(\alpha _{m,j-1}\) is the virtual controller, \(\gamma _{m,j}\) is the filtered signal, and \(\varsigma _{m,j}\) is the output error of the filter, with \(j=2,\dots ,n_m\).

Step m, 1: According to (22), it yields

Consider a Lyapunov function as

where \(\delta _{m,1}>0\) is a design parameter.

Taking the time derivative of \(V_{m,1}\) along (24) yields

Based on Lemma 1, one gets

where \(\tau >0\) is a constant.

Select the intermediate controller \(\alpha _{m,1}\) and the adaptive law \(\dot{W}_{m,1}\) as

where \(m_{m,1}\), \(\epsilon _{m,1}\) and \(\sigma _{m,1}\) are positive design parameters.

Invoking (29) and (30), we deduce

where \(q_{m,1}=q_{m,0}-\frac{1}{2}\), \(M_{m,1}=M_{m,0}+\frac{1}{2}\Vert \xi _m^*\Vert ^2+\frac{1}{2}\Vert d_m^*\Vert ^2+\frac{2\zeta _m}{\tau }\Vert W_{m,1}^*\Vert ^2\).

The following filter is constructed

From (32), \(\dot{\gamma }_{m,2}=-\frac{\varsigma _{m,2}}{\varpi _{m,2}}\), which implies

where

is a continuous function with a maximum value.

Remark 4

The first-order filter (32) avoids repeated differentiation of the virtual control signal, which alleviates the computational burden. However, the first-order linear filter inevitably introduces a filtering error.
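The display of (32) is not shown here; reading it as the usual DSC filter \(\varpi _{m,2}\dot{\gamma }_{m,2}+\gamma _{m,2}=\alpha _{m,1}\) with \(\gamma _{m,2}(0)=\alpha _{m,1}(0)\) (consistent with \(\dot{\gamma }_{m,2}=-\varsigma _{m,2}/\varpi _{m,2}\) if \(\varsigma _{m,2}=\gamma _{m,2}-\alpha _{m,1}\)), the sketch below shows the filtering error mentioned in Remark 4. The signal \(\alpha (t)=\sin t\) stands in for the virtual control, and both time constants are illustrative.

```python
import math

def dsc_filter(alpha, varpi=0.01, t_end=10.0, dt=1e-4):
    """Forward-Euler simulation of varpi * dgamma/dt + gamma = alpha(t), gamma(0) = alpha(0).

    `alpha` is any callable standing in for the virtual control alpha_{m,1}(t).
    Returns the final filter state and |gamma - alpha| after every step.
    """
    gam = alpha(0.0)                     # filter initialized at the virtual control
    errors = []
    for k in range(int(round(t_end / dt))):
        t = k * dt
        varsigma = gam - alpha(t)        # filter error varsigma = gamma - alpha
        gam += dt * (-varsigma / varpi)  # dgamma/dt = -varsigma / varpi
        errors.append(abs(gam - alpha(t + dt)))
    return gam, errors

# Illustrative virtual control alpha(t) = sin(t) with two filter time constants.
_, err_slow = dsc_filter(math.sin, varpi=0.01)
_, err_fast = dsc_filter(math.sin, varpi=0.001)
```

The filtering error settles at roughly \(\varpi \cdot \max |\dot{\alpha }|\), so a smaller \(\varpi\) reduces it, at the cost of requiring a smaller integration step (here dt must stay well below \(\varpi\)).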

Step m, 2: According to (1) and (22), \(\dot{s}_{m,2}\) can be calculated as

Choose a Lyapunov function as

where \(\delta _{m,2}>0\) is a design parameter.

By Lemma 2, (33) and (34), the time derivative of \(V_{m,2}\) can be deduced as

According to Lemma 1 and Assumptions 1 and 2, one has

The intermediate control signal \(\alpha _{m,2}\) and the adaptive law \(\dot{W}_{m,2}\) are designed as

where \(m_{m,2}\), \(\epsilon _{m,2}\) and \(\sigma _{m,2}\) are positive design parameters.

From (34)–(40), one has

where \(M_{m,2}=M_{m,1}+\frac{2\zeta _m}{\tau }\Vert W_{m,2}^*\Vert ^2\)

Define the following filter

Defining \(\varsigma _{m,3}=\gamma _{m,3}-\alpha _{m,2}\), we obtain \(\dot{\gamma }_{m,3}=-\frac{\varsigma _{m,3}}{\varpi _{m,3}}\). Then, one has

where

is a continuous function with a maximum value.

Step m, j (\(3\le j\le n_m-1\)): By (1) and (22), \(\dot{s}_{m,j}\) is calculated as

where \(\hat{\bar{x}}_{m,j}=[\hat{x}_{m,1},\dots ,\hat{x}_{m,j}]^\mathrm{T}\).

Consider the Lyapunov function candidate as

where \(\delta _{m,j}>0\) is a design parameter.

Similar to Step m, 2, the following inequalities hold

Select the intermediate controller \(\alpha _{m,j}\) and the adaptive law \(\dot{W}_{m,j}\) as

where \(m_{m,j}\), \(\epsilon _{m,j}\) and \(\sigma _{m,j}\) are positive design parameters.

According to (46)–(50), it follows that

where \(M_{m,j}=M_{m,j-1}+\frac{\zeta _m}{2}\Vert W_{m,j}^*\Vert ^2\).

Define the following filter

Defining \(\varsigma _{m,j+1}=\gamma _{m,j+1}-\alpha _{m,j}\), we obtain \(\dot{\gamma }_{m,j+1}=-\frac{\varsigma _{m,j+1}}{\varpi _{m,j+1}}\). Then, it follows that

where

is a continuous function with a maximum value.

Step \(m, n_m\): According to (22), we can obtain

Define the Lyapunov function as

where \(\delta _{m,n_m}\) and \(\varepsilon _m\) are positive design parameters.

By using Lemma 1, one has

where \(\rho _m\) is a positive constant.

Select the actual control law and the adaptive laws as

where \(m_{m,n_m}\), \(c_{m,n_m}\), \(a_m\) and \(\delta _{m,n_m}\) are positive design parameters.

By using (55)–(59), one can obtain

where \(M_{m,n_m}=M_{m,n_m-1}\).

According to the relationship \(\tilde{W}_{m,j}=W_{m,j}^*-W_{m,j}\) and \(\tilde{c}_m=c_m-\hat{c}_m\), the following inequality holds

For any constants \(\Re >0\) and \(\aleph >0\), the sets \(\Omega _{y_{m,d}}=\{(y_{m,d}, \dot{y}_{m,d}, \ddot{y}_{m,d})^\mathrm{T}:y_{m,d}^2+\dot{y}_{m,d}^2+\ddot{y}_{m,d}^2\le \Re \}\) and \(\Omega _{m,j}:=\{\tilde{x}_m^\mathrm{T}P_m\tilde{x}_m+\sum _{k=1}^{j} \Gamma (s_{m,k})s_{m,k}+\sum _{k=1}^{j}(1/\delta _{m,k}) \tilde{W}_{m,k}^\mathrm{T}\tilde{W}_{m,k}+ \sum _{k=2}^{j}\varsigma _{m,k}^2\le 2\aleph \}\) are compact in \(\mathbb {R}^3\) and \(\mathbb {R}^{3j}\), respectively. Thus, \(\Omega _{y_{m,d}}\times \Omega _{m,j}\) is also compact in \(\mathbb {R}^{3+3j}\). Therefore, \(Y_{m,j}(\cdot )\) attains a maximum \(\bar{Y}_{m,j}>0\) on this set, such that \(|Y_{m,j}(\cdot )|\le \bar{Y}_{m,j}\), and the following inequality holds

Substituting (61)–(63) into (60), one obtains

where \(M_m=M_{m,n_m}+2\tau +\frac{\epsilon _m}{\varepsilon _m}c_m^{2}\).

4.4 Stability analysis

Theorem 1

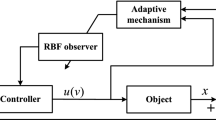

Consider the nonstrict-feedback MIMO system (1). The actual control input (57) is designed with the NN observer (7) and the adaptive laws (30), (40), (50), (58) and (59), as described in Fig. 2 and Algorithm 1. Then, all closed-loop signals are SGUUB, and the output of each subsystem tracks its reference signal.

Proof: Choose the Lyapunov function \(V=\sum _{i=1}^{m}V_i\) and calculate its time derivative; one gets

where

From Property 2, we can obtain the following inequality

which gives

where \(C=\min \left\{ C_1,C_2,\dots ,C_{n_m}\right\}\) and \(M=\sum _{k=1}^{n_m}M_k\).

Multiplying (68) by \(e^{Ct}\) and integrating it over [0, t], one obtains \(V(t)\le V(0)e^{-Ct}+\frac{M}{C}(1-e^{-Ct})\le V(0)e^{-Ct}+\frac{M}{C}\).

According to (69), the output \(y_m\) tracks the reference trajectory \(y_{m,d}\) with a small error. Specifically, if \(|s_{m,1}|\le \nu\), then \(\Gamma (s_{m,1})s_{m,1}=s_{m,1}^2\); otherwise, \(\Gamma (s_{m,1})s_{m,1}=[\frac{\ln (1-\ln o\cdot \nu +\ln o\cdot |s_{m,1}|)}{\ln o}+\nu ]|s_{m,1}|\ge \nu |s_{m,1}|\). From the definition of V, \(\Gamma (s_{m,1})s_{m,1}\le V\). Hence, \(|s_{m,1}|\le \sqrt{V(0)e^{-Ct}+\frac{M}{C}}\) if \(|s_{m,1}|\le \nu\), and \(|s_{m,1}(t)|\le \frac{V(0)e^{-Ct}}{\nu }+\frac{M}{C\nu }\) otherwise. Consequently, \(|s_{m,1}|\le \max \left\{\sqrt{V(0)e^{-Ct}+\frac{M}{C}}, \frac{V(0)e^{-Ct}}{\nu }+\frac{M}{C\nu }\right\}\). Since \(\lim _{t\rightarrow \infty }e^{-Ct}=0\), the ultimate bound is \(\limsup _{t\rightarrow \infty }|s_{m,1}|\le \max \left\{\sqrt{\frac{M}{C}}, \frac{M}{C\nu }\right\}\). Thus, the tracking error \(e_m\) can be made arbitrarily small by appropriately choosing the design parameters, which determine C and M, together with \(\nu\).
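The piecewise bound on \(s_{m,1}\) can be evaluated directly. The sketch assumes \(V(t)\le V(0)e^{-Ct}+M/C\) and returns \(\max \{\sqrt{V}, V/\nu \}\); the numbers used in the checks are arbitrary.

```python
import math

def s_bound(t, V0, C, M, nu):
    """Bound on |s_{m,1}(t)| implied by Gamma(s)s <= V(t) <= V0*exp(-C t) + M/C."""
    V = V0 * math.exp(-C * t) + M / C
    # |s| <= nu: s^2 <= V -> |s| <= sqrt(V);  |s| > nu: nu*|s| <= V -> |s| <= V/nu
    return max(math.sqrt(V), V / nu)

def ultimate_s_bound(C, M, nu):
    """Limit of s_bound as t -> infinity: max{sqrt(M/C), M/(C*nu)}."""
    return max(math.sqrt(M / C), M / (C * nu))
```

Note that \(\sqrt{V}\ge V/\nu\) exactly when \(V\le \nu ^2\), so with a small \(\nu\) such as 0.005 the \(V/\nu\) branch dominates unless \(M/C\) is below \(\nu ^2=2.5\times 10^{-5}\).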

Remark 5

Regarding the tracking error, note that if the transformed error \(\digamma _{m,1}\) is continuous with \(0<\digamma _{m,1}(0)<1\), then \(0<\digamma _{m,1}(t)<1\) for all \(t>0\) by Lemma 2. Since \(\digamma _{m,1}(t)=\frac{e_m(t)}{\phi _{m,1}(t)}\), it follows that \(|e_m(t)|<|\phi _{m,1}(t)|\). This means that the output tracking error \(e_m(t)\) never violates the range predefined by the performance function, in either the transient or the steady state.

Remark 6

Since each nonlinear function \(f_{m,j}(x_m)\) contains the whole state vector \(x_m\), system (1) is in nonstrict-feedback form, which increases the difficulty of controller design. In this paper, RBF-NNs are used to approximate \(f_{m,j}(x_m)\). Note that the whole state vector \(x_m\) then appears in the j-th step of the backstepping design, which leads to an algebraic loop problem. To overcome this obstacle, the decomposition \(W_{m,j}^{*\mathrm{T}}\varphi _{m,j}(\hat{x}_m)=W_{m,j}^{*\mathrm{T}}\varphi _{m,j}(\hat{x}_m) -W_{m,j}^{*\mathrm{T}}\varphi _{m,j}(\hat{\bar{x}}_{m,j}) +W_{m,j}^\mathrm{T}\varphi _{m,j}(\hat{\bar{x}}_{m,j})+\tilde{W}_{m,j}^\mathrm{T}\varphi _{m,j}(\hat{\bar{x}}_{m,j})\) is used in (33). With this variable separation technique, the algebraic loop problem is avoided.
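The variable separation in Remark 6 rests on the fact that the last three terms cancel because \(\tilde{W}_{m,j}=W_{m,j}^*-W_{m,j}\), so the right-hand side sums to \(W_{m,j}^{*\mathrm{T}}\varphi _{m,j}(\hat{x}_m)\). A quick numerical check with random stand-in vectors (all values hypothetical) is:

```python
import numpy as np

rng = np.random.default_rng(0)
W_star = rng.normal(size=7)      # hypothetical ideal weights W*
W_hat = rng.normal(size=7)       # hypothetical current estimate W
W_tilde = W_star - W_hat         # estimation error W~ = W* - W
phi_full = rng.random(7)         # stands in for phi(x_hat_m)
phi_partial = rng.random(7)      # stands in for phi(x_hat_bar_{m,j})

lhs = W_star @ phi_full
rhs = (W_star @ phi_full - W_star @ phi_partial
       + W_hat @ phi_partial + W_tilde @ phi_partial)
```

In the design, only \(W_{m,j}^\mathrm{T}\varphi _{m,j}(\hat{\bar{x}}_{m,j})\) enters the control law, so the virtual controller at step j no longer depends on states from later steps.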

Remark 7

Several control approaches for nonlinear systems have been reported in [6, 29, 41]. The main differences between the results in [6, 29, 41] and ours are summarized as follows. (1) The control algorithms in [6, 29, 41] address strict-feedback SISO systems, whereas we extend the setting to nonstrict-feedback MIMO systems; the nonlinear-gain-based controller developed here can also be applied to SISO systems. (2) In [42], RBF-NNs are used to approximate both the unknown packaged functions and the unknown functions, so that \(2n_m\) adaptive parameters must be estimated online, which increases the computational burden. To save computing resources, only \(n_m\) adaptive parameters are used in our controller design, which alleviates the computational complexity.

Remark 8

The parameters of the proposed controller are chosen according to the characteristics of the considered system and the stability conditions; all design parameters must be selected such that Theorem 1 holds. Their selection principles and effects on system performance are as follows. According to (65), smaller error signals \(e_m\), \(s_{m,j}\) and \(\tilde{W}_{m,j}\) may be obtained by choosing larger \(\epsilon _{m,j}\), \(\tau\) and \(\sigma _{m,j}\). Nevertheless, larger \(\epsilon _{m,j}\), \(\tau\) and \(\sigma _{m,j}\) may lead to poor transient performance and a large control input. Hence, the widely used trial-and-error method can be employed for parameter selection.

5 Simulation results

To demonstrate the effectiveness and applicability of the proposed control algorithm, this section provides two simulation examples.

Example 1

Consider the MIMO nonstrict-feedback nonlinear systems

where \(f_{1,1}(X)=1-\cos (x_{1,1}x_{1,2}x_{2,1}x_{2,2})+x_{1,1}\), \(f_{1,2}(X)=2x_{1,1}\cos (x_{1,1}x_{1,2})+x_{1,1}x_{1,2}x_{2,1}x_{2,2}e^{x_{1,2}}\), \(f_{2,1}(X)=2-\cos (x_{1,1}x_{1,2}x_{2,1}x_{2,2})+3x_{1,1}x_{1,2}x_{2,1}x_{2,2}\) and \(f_{2,2}(X)=x_{2,1}x_{2,2}e^{x_{2,2}}+x_{1,1}x_{1,2}x_{2,2}\). The reference signals are selected as \(y_{1,d}(t)=\frac{1}{2}\sin (t)+\frac{1}{2}\sin (\frac{t}{2})\) and \(y_{2,d}(t)=\frac{1}{2}\sin (t)-\frac{1}{4}\cos (2t)\). Following [38], the hysteresis parameters are selected as \(\varrho _m=1\), \(c_m=5\) and \(D_m=0.5\).
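To reproduce Example 1, the nonlinearities and reference signals listed above can be coded directly. The plant display itself is not shown here, so only \(f_{m,j}(X)\) and \(y_{m,d}(t)\) are encoded; the dictionary keys \((m,j)\) and the function names are a hypothetical convention.

```python
import math

def f_example1(x11, x12, x21, x22):
    """Nonlinearities f_{m,j}(X) of Example 1, keyed by (m, j); used only to simulate the plant."""
    p = x11 * x12 * x21 * x22            # product shared by several terms
    return {
        (1, 1): 1.0 - math.cos(p) + x11,
        (1, 2): 2.0 * x11 * math.cos(x11 * x12) + p * math.exp(x12),
        (2, 1): 2.0 - math.cos(p) + 3.0 * p,
        (2, 2): x21 * x22 * math.exp(x22) + x11 * x12 * x22,
    }

def references(t):
    """Reference signals (y_{1,d}(t), y_{2,d}(t)) of Example 1."""
    y1d = 0.5 * math.sin(t) + 0.5 * math.sin(t / 2.0)
    y2d = 0.5 * math.sin(t) - 0.25 * math.cos(2.0 * t)
    return y1d, y2d

# Evaluate at the initial state x_1(0) = [0.2, 0.01], x_2(0) = [-0.23, 0] of Example 1.
f_init = f_example1(0.2, 0.01, -0.23, 0.0)
```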

The basis function vector \(\varphi _{m,j}\) is constructed by choosing the width of the Gaussian functions and the centers of the receptive field as \(\bar{\sigma }_m=2\) and \(\underline{\mu }=[-1.5, -1, -0.5, 0, 0.5, 1, 1.5]^\mathrm{T}\). The prescribed performance function is \(\mu _{1,1}=\mu _{2,1}=(0.5-0.015)e^{-2t}+0.015\), i.e., \(\mu _{0,m,1}=0.5\), \(\mu _{\infty ,m,1}=0.015\) and \(a_{m,1}=2\). The parameters of the nonlinear gain function are selected as \(o=10\) and \(\nu =0.005\). The design parameters are chosen as \(l_{1,1}=l_{1,2}=25\), \(l_{2,1}=l_{2,2}=20\), \(\epsilon _{1,1}=\epsilon _{1,2}=1.5\), \(\epsilon _{2,1}=\epsilon _{2,2}=2.5\), \(\sigma _{1,1}=\sigma _{1,2}=1.2\), \(\sigma _{2,1}=\sigma _{2,2}=1.5\), \(\delta _{1,1}=\delta _{1,2}=1.5\), \(\delta _{2,1}=\delta _{2,2}=1.8\) and \(\tau =1\). The initial values are \(x_1(0)=[0.2, 0.01]^\mathrm{T}\), \(x_2(0)=[-0.23, 0]^\mathrm{T}\), \(\hat{x}_1(0)=[0.35, 0.35]^\mathrm{T}\), \(\hat{x}_{2}(0)=[0.18, 0.18]^\mathrm{T}\), \(\hat{c}_m(0)=0.05\), \(W_{1,1}(0)=[0.2, 0.1, 0.1, 0, 0.1, 0, 0]^\mathrm{T}\), \(W_{1,2}(0)=[0.2, 0.1, 0, 0.1, 0, 0, 0]^\mathrm{T}\), \(W_{2,1}(0)=[0.2, 0.2, 0.2, 0.2, 0.2, 0, 0]^\mathrm{T}\) and \(W_{2,2}(0)=[0.15, 0, 0.15, 0.15, 0.15, 0.15, 0]^\mathrm{T}\). Figures 3–9 illustrate the simulation results. Fig. 3 shows that the proposed control strategy drives each output to track its reference signal. Figures 4 and 5 show the tracking errors: the proposed nonlinear feedback control, linear feedback (LF)-DSC and LF-PPC all achieve similarly small tracking errors. Compared with the LF control methods, the proposed nonlinear gain feedback control keeps the tracking error within the predefined range and achieves better tracking performance.
Figures 6 and 7 show the trajectories of the states \(x_{m,1}\) and \(x_{m,2}\) together with their NN observer estimates \(\hat{x}_{m,1}\) and \(\hat{x}_{m,2}\), which track the corresponding system states. The trajectories of \(\Vert W_{m,j}\Vert ^2\) are shown in Fig. 8, and Fig. 9 shows the control signals \(u_m(v_m)\) and \(v_m\).

Example 2

Consider the tracking control problem for the CE-150 helicopter in [43] (see Fig. 10). The helicopter can be described by the following MIMO system

where the parameters are listed in Table 1, and \(I_{l_2}\) and \(I_c\) are defined as

where \(m=m_l+m_1+m_2\).

By defining \(x_{1,1}=\varphi\), \(x_{1,2}=\dot{\varphi }\), \(x_{2,1}=\theta\) and \(x_{2,2}=\dot{\theta }\), (71) can be rewritten as

where \(\vartheta _{1,2}=\frac{1}{J_1}\), \(\vartheta _{2,2}=\frac{1}{J_2}\), \(d_{1,1}(t)=d_{1,2}(t)=0\), \(d_{2,1}(t)=0.01\cos (t)\) and \(d_{2,2}(t)=0.02\sin (t)\).

The reference signals are chosen as \(y_{1,d}(t)=\sin (t)\) and \(y_{2,d}(t)=\sin (t)\). The prescribed performance function and the nonlinear gain function are the same as in Example 1. The design parameters are selected as \(l_{1,1}=l_{1,2}=15\), \(l_{2,1}=l_{2,2}=10\), \(\epsilon _{1,1}=\epsilon _{1,2}=1.2\), \(\epsilon _{2,1}=\epsilon _{2,2}=1.6\), \(\sigma _{1,1}=\sigma _{1,2}=1.8\), \(\sigma _{2,1}=\sigma _{2,2}=2\), \(\delta _{1,1}=\delta _{1,2}=1.5\), \(\delta _{2,1}=\delta _{2,2}=1.8\) and \(\tau =1\), and the initial values are \(x_1(0)=[0.25, 0]^\mathrm{T}\), \(x_2(0)=[0.1, 0]^\mathrm{T}\), \(\hat{x}_1(0)=[0.35, 0.35]^\mathrm{T}\), \(\hat{x}_{2}(0)=[0.25, 0.25]^\mathrm{T}\) and \(\hat{c}_m(0)=0.1\). Figures 11, 12, 13, 14, 15, 16 and 17 demonstrate the effectiveness and practicality of the proposed controller when applied to the helicopter system. The tracking results are shown in Fig. 11, and the tracking errors are depicted in Figs. 12 and 13. As shown in Figs. 14 and 15, the constructed observer estimates the unmeasurable states well enough to satisfy the requirements of the controller design. Figure 16 gives the curves of \(\Vert W_{m,j}\Vert ^2\). Finally, Fig. 17 shows the control signals \(u_m(v_m)\) and \(v_m\) under the hysteresis nonlinearity.

6 Conclusion

In this paper, the tracking control problem for MIMO nonlinear systems with input hysteresis and unmeasurable states has been investigated and verified through simulations. An NN observer has been constructed to estimate the unmeasurable states. A nonlinear gain function is incorporated into the backstepping design procedure, which improves the dynamic performance of the closed-loop system. Meanwhile, a novel Lyapunov function is designed so that the difficulties caused by the nonlinear gain function in the stability analysis can be handled easily. Furthermore, the algebraic loop problem is addressed by using the properties of the NNs. Combined with the DSC technique, an adaptive tracking control method is proposed, which guarantees that the closed-loop system is SGUUB and that the tracking errors converge to the prescribed bounds. Future work will address the tracking control problem by using fractional-order control and finite-time control methods.

Data Availability

No data was used for the research described in the article.

References

Zou W, Shi P, Xiang Z, Shi Y (2020) Consensus tracking control of switched stochastic nonlinear multiagent systems via event-triggered strategy. IEEE Trans Neural Netw Learn Syst 31(3):1036–1045

Zhu B, Wang Y, Zhang H, Xie X (2022) Fuzzy functional observer-based finite-time adaptive sliding-mode control for nonlinear systems with matched uncertainties. IEEE Trans Fuzzy Syst 30(4):918–932

Shi P, Sun W, Yang X (2022) RBF neural network-based adaptive robust synchronization control of dual drive gantry stage with rotational coupling dynamics. IEEE Trans Autom Sci Eng. https://doi.org/10.1109/TASE.2022.3177540

Kanellakopoulos I, Kokotovic PV, Morse AS (1991) Systematic design of adaptive controllers for feedback linearizable systems. IEEE Trans Autom Control 36(11):1241–1253

Zou W, Ahn C, Xiang Z (2021) Fuzzy-approximation-based distributed fault-tolerant consensus for heterogeneous switched nonlinear multiagent systems. IEEE Trans Fuzzy Syst 29(10):2916–2925

Xu B, Wang X, Chen W, Shi P (2021) Robust intelligent control of SISO nonlinear systems using switching mechanism. IEEE Trans Cybern 51(8):3975–3987

Xie K, Lyu Z, Liu Z, Zhang Y, Chen CL (2020) Adaptive neural quantized control for a class of MIMO switched nonlinear systems with asymmetric actuator dead-zone. IEEE Trans Neural Netw Learn Syst 31(6):1927–1941

Zhang Y, Chadli M, Xiang Z (2022) Predefined-time adaptive fuzzy control for a class of nonlinear systems with output hysteresis. IEEE Trans Fuzzy Syst. https://doi.org/10.1109/TFUZZ.2022.3228012

Zhai J, Wang H, Tao J (2023) Disturbance-observer-based adaptive dynamic surface control for nonlinear systems with input dead-zone and delay using neural networks. Neural Comput Appl 35:4027–4049

Liu X, Wu Y, Wu N, Yan H, Wang Y (2023) Finite-time-prescribed performance-based adaptive command filtering control for MIMO nonlinear systems with unknown hysteresis. Nonlinear Dyn 111:7357–7375

Sui S, Tong S (2018) Observer-based adaptive fuzzy quantized tracking DSC design for MIMO nonstrict-feedback nonlinear systems. Neural Comput Appl 30:3409–3419

Chen L, Yan B, Wang H, Shao K, Kurniawan E, Wang G (2022) Extreme-learning-machine-based robust integral terminal sliding mode control of bicycle robot. Control Eng Pract 121:105064

Zong G, Wang Y, Karimi HR, Shi K (2022) Observer-based adaptive neural tracking control for a class of nonlinear systems with prescribed performance and input dead-zone constraints. Neural Netw 147:126–135

Xu Z, Deng W, Shen H, Yao J (2022) Extended-state-observer-based adaptive prescribed performance control for hydraulic systems with full-state constraints. IEEE/ASME Trans Mechatron 27(6):5615–5625

Zhang X, Wu J, Zhan X, Han T, Yan H (2023) Observer-based adaptive time-varying formation-containment tracking for multiagent system with bounded unknown input. IEEE Trans Syst Man Cybern Syst 53(3):1479–1491

Tang X, Liu Z (2022) Sliding mode observer-based adaptive control of uncertain singular systems with unknown time-varying delay and nonlinear input. ISA Trans 128:133–143

Gao T, Li T, Liu YJ, Tong S, Sun F (2022) Observer-based adaptive fuzzy control of non-strict feedback nonlinear systems with function constraints. IEEE Trans Fuzzy Syst. https://doi.org/10.1109/TFUZZ.2022.3228319

Li Z, Liu Y, Ma H, Li H (2023) Learning-observer-based adaptive tracking control of multiagent systems using compensation mechanism. IEEE Trans Artif Intell. https://doi.org/10.1109/TAI.2023.3247550

Wu T, Yu Z, Li S (2022) Observer-based adaptive fuzzy quantized fault tolerant control of nonstrict-feedback nonlinear systems with sensor fault. IEEE Trans Fuzzy Syst. https://doi.org/10.1109/TFUZZ.2022.3216113

Ho CM, Ahn KK (2022) Observer based adaptive neural networks fault-tolerant control for pneumatic active suspension with vertical constraint and sensor fault. IEEE Trans Veh Technol. https://doi.org/10.1109/TVT.2022.3230647

Shao K, Zhang J, Wang H, Wang X, Lu R, Man Z (2021) Tracking control of a linear motor positioner based on barrier function adaptive sliding mode. IEEE Trans Ind Inf 17(11):7479–7488

Bechlioulis CP, Rovithakis GA (2008) Robust adaptive control of feedback linearizable MIMO nonlinear systems with prescribed performance. IEEE Trans Autom Control 53(9):2090–2099

Xu Z, Xie N, Shen H, Hu X, Liu Q (2021) Extended state observer-based adaptive prescribed performance control for a class of nonlinear systems with full-state constraints and uncertainties. Nonlinear Dyn 105(1):345–358

Wang H, Bai W, Zhao X, Liu PX (2022) Finite-time-prescribed performance-based adaptive fuzzy control for strict-feedback nonlinear systems with dynamic uncertainty and actuator faults. IEEE Trans Cybern 52(7):6959–6971

Zhang L, Che W, Chen B, Lin C (2022) Adaptive fuzzy output-feedback consensus tracking control of nonlinear multiagent systems in prescribed performance. IEEE Trans Cybern. https://doi.org/10.1109/TCYB.2022.3171239

Gao S, Wu C, Dong H, Ning B (2020) Control with prescribed performance tracking for input quantized nonlinear systems using self-scrambling gain feedback. Inf Sci 529:73–86

Liu Y, Zhang H, Wang Y, Ren H, Li Q (2022) Adaptive fuzzy prescribed finite-time tracking control for nonlinear system with unknown control directions. IEEE Trans Fuzzy Syst 30(6):1993–2003

Wang N, Gao Y, Zhang X (2021) Data-driven performance-prescribed reinforcement learning control of an unmanned surface vehicle. IEEE Trans Neural Netw Learn Syst 32(12):5456–5467

Sun K, Liu L, Qiu J, Feng G (2020) Fuzzy adaptive finite-time fault-tolerant control for strict-feedback nonlinear systems. IEEE Trans Fuzzy Syst 29(4):786–796

Zuo R, Dong X, Liu Y, Liu Z, Zhang W (2019) Adaptive neural control for MIMO pure-feedback nonlinear systems with periodic disturbances. IEEE Trans Neural Netw Learn Syst 30(6):1756–1767

Gao S, Dong H, Ning B (2017) Neural adaptive dynamic surface control for uncertain strict-feedback nonlinear systems with nonlinear output and virtual feedback errors. Nonlinear Dyn 90(4):2851–2867

Chen C, Liu Z, Xie K, Zhang Y, Chen CP (2017) Adaptive neural control of MIMO stochastic systems with unknown high-frequency gains. Inf Sci 418:513–530

Wang H, Chen B, Lin C, Sun Y (2016) Observer-based adaptive neural control for a class of nonlinear pure-feedback systems. Neurocomputing 171:1517–1523

Zhou J, Wen C, Zhang Y (2006) Adaptive output control of nonlinear systems with uncertain dead-zone nonlinearity. IEEE Trans Autom Control 51(3):504–511

Peng Z, Wang D, Wang J (2021) Data-driven adaptive disturbance observers for model-free trajectory tracking control of maritime autonomous surface ships. IEEE Trans Neural Netw Learn Syst 32(12):5584–5594

Li Y, Zhang J, Tong S (2022) Fuzzy adaptive optimized leader-following formation control for second-order stochastic multi-agent systems. IEEE Trans Ind Inf 18(9):6026–6037

Yue J, Liu L, Peng Z, Wang D, Li T (2022) Data-driven adaptive extended state observer design for autonomous surface vehicles with unknown input gains based on concurrent learning. Neurocomputing 467:337–347

Zhao Z, Liu Y, Zou T, Hong K, Li H (2023) Robust adaptive fault-tolerant control for a riser-vessel system with input hysteresis and time-varying output constraints. IEEE Trans Cybern 53(6):3939–3950

Cui D, Xiang Z (2022) Nonsingular fixed-time fault-tolerant fuzzy control for switched uncertain nonlinear systems. IEEE Trans Fuzzy Syst 31(1):174–183

Han SI, Lee JM (2014) Partial tracking error constrained fuzzy dynamic surface control for a strict feedback nonlinear dynamic system. IEEE Trans Fuzzy Syst 22(5):1049–1061

Tong S, Min X, Li Y (2020) Observer-based adaptive fuzzy tracking control for strict-feedback nonlinear systems with unknown control gain functions. IEEE Trans Cybern 50(9):3903–3913

Chen B, Lin C, Liu X, Liu K (2016) Observer-based adaptive fuzzy control for a class of nonlinear delayed systems. IEEE Trans Syst Man Cybern Syst 46(1):27–36

Zhao J, Tong S, Li Y (2021) Observer-based fuzzy adaptive control for MIMO nonlinear systems with non-constant control gain and input delay. IET Control Theory Appl 15(11):1488–1505

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grants 52101346, 62122046 and 61973204, and by the Shanghai Committee of Science and Technology, China, under Grant 23010500100.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Liu, X., Shi, Y., Wu, N. et al. Observer-based adaptive backstepping control for MIMO nonlinear systems with unknown hysteresis: a nonlinear gain feedback approach. Neural Comput & Applic 35, 23265–23281 (2023). https://doi.org/10.1007/s00521-023-08896-0

DOI: https://doi.org/10.1007/s00521-023-08896-0