Abstract

In this paper, we study the global asymptotic stability of fractional-order BAM neural networks, taking both time delay and impulsive effects into consideration. Based on the Lyapunov stability theorem, the fractional Barbalat's lemma and a Razumikhin-type stability theorem, we obtain stability conditions that do not depend on the specific form of the delays. Finally, two illustrative examples show that the two main results are independent of each other and demonstrate their effectiveness.


1 Introduction

As an extension of integer-order calculus, fractional-order calculus has drawn much attention from researchers. Many phenomena can be described by fractional differential equations, such as electrochemistry [1], diffusion [2], viscoelastic materials [3] and control [4]. Recently, fractional-order neural networks have been studied. Kaslik studied the stability, bifurcations and chaos of fractional-order Hopfield neural networks [5]. Ref. [6] investigated the global Mittag–Leffler stability and synchronization of a class of fractional-order memristor-based neural networks. Because signals travel between neurons at a finite speed, time delays exist in almost every neural network, and they can also affect the dynamic behavior of the network. Thus, time delays cannot be ignored in the analysis of neural networks. Time-delayed fractional-order neural networks have also been studied. For example, Chen discussed the existence, uniqueness and stability of the equilibrium point of a fractional-order delayed neural network [7]; Stamova investigated the global Mittag–Leffler stability and synchronization of impulsive fractional-order cellular neural networks with time-varying delays [8]. More results on fractional-order neural networks can be found in Refs. [9–12].

Bidirectional associative memory (BAM) neural networks have attracted many studies because of their applications in fields such as signal processing [13], image processing [14] and pattern recognition [15]. Kosko introduced BAM neural networks in 1987 [16]; they consist of neurons arranged in two layers, the U-layer and the V-layer. The neurons in one layer are fully interconnected to the neurons in the other layer, while there are no interconnections among neurons in the same layer. Stability plays an important role in the study of neural networks, since it is a prerequisite for their application, and many results on the stability of BAM neural networks have appeared in recent years. For instance, in [17], Sakthivel analyzed the stability of a class of delayed stochastic BAM neural networks with Markovian jumping parameters and impulses. The global robust stability of BAM neural networks with multiple time delays under parameter uncertainties has been studied by Feng et al. [18]. Exponential stability via impulsive control is investigated in [19] for Markovian jumping stochastic BAM neural networks with mode-dependent probabilistic time-varying delays. Ref. [20] discussed the stabilization of BAM neural networks with time-varying delays in the leakage terms by sampled-data control. Further results can be found in [21–25] and the references therein.

So far, studies of BAM neural networks have mainly focused on networks described by first-order derivatives of the states. As is well known, memory is one of the main features of BAM neural networks [16]. A particularly attractive property of fractional-order derivatives is that they are nonlocal and have weakly singular kernels; thus, their major advantage is the description of memory [26, 27]. However, only a few studies have focused on fractional-order BAM neural networks [28, 29]. Cao investigated the finite-time stability of fractional-order BAM neural networks with distributed delay in [28], and Ref. [29] studied the uniform stability of fractional-order BAM neural networks with delays in the leakage terms. Both papers [28, 29] rely mainly on inequality techniques rather than on the Lyapunov approach, whereas most stability results for integer-order BAM neural networks are obtained by constructing Lyapunov functions [17–25]. Some results on the stability of fractional-order systems via the Lyapunov function approach have been published [30–33]. Based on the Lyapunov function approach, this paper presents sufficient criteria for the asymptotic stability of fractional-order BAM neural networks.

In addition, many real-world systems are subject to sudden external disturbances that cause abrupt changes within a very short time. This phenomenon is called an impulse. Dynamic systems with impulses are neither purely continuous-time nor purely discrete-time; they combine continuous and discrete characteristics. Such short-time disturbances inevitably affect the dynamics of a system. Some results are available for integer-order impulsive network systems (see, for example, [34–36]). As noted above, time delays are also present in almost every neural network. Since delays and impulses frequently cause instability, bifurcation and chaos in neural networks, it is necessary to study their effects on network stability. Integer-order BAM neural networks with delay and impulsive effects have been studied [17, 22, 37, 38], but impulsive fractional-order BAM neural networks with time delay have not yet been investigated.

Motivated by the above discussion, we study the asymptotic stability of a class of impulsive fractional-order BAM neural networks with time delay, employing both the fractional Barbalat's lemma [33] and Razumikhin-type stability theorems [39]. We obtain stability criteria that ensure the global asymptotic stability of the equilibrium point of the system.

The rest of this paper is organized as follows. Section 2 introduces the definitions and lemmas needed to present our results. The main results on stability conditions for fractional-order BAM neural networks are presented in Sect. 3. Two examples demonstrating the effectiveness of our results are given in Sect. 4, and conclusions are drawn in Sect. 5.

2 Model description and preliminaries

In this section, some definitions and lemmas about fractional calculus are introduced, which will be used in deriving the main results. Then, the time-delayed fractional-order BAM model with impulsive effects will be introduced.

There are several definitions of the fractional derivative, such as the Riemann–Liouville (R–L), Caputo and Grünwald–Letnikov (G–L) derivatives.

The R–L fractional operator often plays an important role in the stability analysis of fractional-order systems. Moreover, the R–L derivative is continuous with respect to the order \(\alpha\) and is a natural generalization of the classical derivative [40]. Therefore, we use the R–L derivative in this paper.

Definition 1

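[4] The fractional integral of order \(\alpha\) for a function w(t) is defined as

\[
D^{-\alpha }_{t_{0},t}w(t)=\frac{1}{\Gamma (\alpha )}\int _{t_{0}}^{t}(t-s)^{\alpha -1}w(s)\,\text{d}s,
\]

where \(t\geqslant t_{0}\), \(\alpha >0\) and \(\Gamma (\cdot )\) is the Gamma function.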

Definition 2

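[4] The R–L fractional derivative of order \(\alpha\) for a continuous function x(t) is defined as follows:

\[
^{RL}D^{\alpha }_{t_{0},t}x(t)=\frac{1}{\Gamma (m-\alpha )}\frac{\text{d}^{m}}{\text{d}t^{m}}\int _{t_{0}}^{t}\frac{x(s)}{(t-s)^{\alpha -m+1}}\,\text{d}s,
\]

in which \(m-1<\alpha <m,\ m\in Z^{+}.\)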

Throughout this paper, the order \(\alpha\) of the R–L derivative satisfies \(0<\alpha <1\), i.e., \(m=1\) in Definition 2. For simplicity, we denote the R–L derivative \(^{RL}D^{\alpha }_{t_{0},t}x(t)\) by \(D^{\alpha }x(t)\). Some properties of the R–L derivative are listed in the following lemma.
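For readers who want to experiment numerically, the following is a minimal sketch of the Grünwald–Letnikov approximation of the R–L derivative. For sufficiently smooth functions the G–L and R–L derivatives coincide, so the truncated sum \(h^{-\alpha }\sum _{k}w_{k}x(t-kh)\) approximates \(D^{\alpha }x(t)\) with first-order accuracy in the step h. The function names and the default step count are our own illustrative choices, not part of the paper. The checks use two known closed forms with \(t_{0}=0\): \(D^{\alpha }t=t^{1-\alpha }/\Gamma (2-\alpha )\) and, unlike the Caputo derivative, a nonzero R–L derivative of a constant, \(D^{\alpha }1=t^{-\alpha }/\Gamma (1-\alpha )\).

```python
import math

def gl_weights(alpha, n):
    """Grunwald-Letnikov coefficients w_k = (-1)^k * binom(alpha, k), k = 0..n."""
    w = [1.0]
    for k in range(1, n + 1):
        # Standard recursion: w_k = w_{k-1} * (1 - (alpha + 1) / k)
        w.append(w[-1] * (1.0 - (alpha + 1.0) / k))
    return w

def rl_derivative_gl(x, t, alpha, t0=0.0, n=2000):
    """Approximate the R-L derivative D^alpha x(t) on [t0, t] with n G-L steps."""
    h = (t - t0) / n
    w = gl_weights(alpha, n)
    total = sum(w[k] * x(t - k * h) for k in range(n + 1))
    return total / h ** alpha

alpha = 0.5
# D^alpha t = t^(1 - alpha) / Gamma(2 - alpha); at t = 1 this is 1 / Gamma(1.5)
print(rl_derivative_gl(lambda s: s, 1.0, alpha), 1.0 / math.gamma(2.0 - alpha))
# D^alpha 1 = t^(-alpha) / Gamma(1 - alpha): nonzero, unlike the Caputo derivative
print(rl_derivative_gl(lambda s: 1.0, 1.0, alpha), 1.0 / math.gamma(1.0 - alpha))
```

The nonzero derivative of a constant is one reason the R–L setting needs care with initial conditions; the same weights reappear in the simulation sketches further below.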

Lemma 1

[4] If \(w(t), u(t)\in C^{1}[t_{0},b]\), and \(\alpha >0, \beta >0\), then

Consider the following time-delayed fractional-order BAM neural network with impulsive effects:

A BAM neural network has two layers, \(U=\{x_{1}, x_{2},\ldots ,x_{n}\}\) and \(V=\{y_{1}, y_{2},\ldots ,y_{m}\}\). Here, \(x_{i}(t)\) and \(y_{j}(t)\) denote the membrane voltages of the ith neuron in the U-layer and of the jth neuron in the V-layer, respectively; \(a_{i}>0\) and \(b_{j}>0\) denote the decay coefficients of neurons \(x_{i}\) and \(y_{j}\), respectively; \(f_{j}\) and \(g_{i}\) denote the transfer functions of the neurons; \(c_{ij}\) and \(d_{ji}\) denote the connection strengths between neurons \(x_{i}\) and \(y_{j}\); and \(I_{i}\) and \(J_{j}\) denote the external inputs of the U-layer and the V-layer, respectively. In addition, \(\Delta x_{i}(t_{k})=x_{i}(t_{k}^{+})-x_{i}(t_{k}^{-})\) and \(\Delta y_{j}(t_{k})=y_{j}(t_{k}^{+})-y_{j}(t_{k}^{-})\). The impulsive moments \(\{t_{k}|k=1,2,3,\ldots \}\) satisfy \(0\leqslant t_{0}<t_{1}<t_{2}<\ldots <t_{k}<\ldots\), with \(t_{k}\rightarrow +\infty\) as \(k\rightarrow +\infty\); moreover, \(x(t_{k}^{+})=\lim _{t\rightarrow t_{k}^{+}}x(t)\) and \(x(t_{k}^{-})=x(t_{k})\), i.e., solutions are left-continuous at the impulsive moments.

Lemma 2

[33] (Fractional Barbalat's lemma) If \(\int _{t_{0}}^{t}w(s){\text{d}}s\) has a finite limit as \(t\rightarrow +\infty\) and \(D^{\alpha }w(t)\) is bounded, then \(w(t)\rightarrow 0\) as \(t\rightarrow +\infty\), where \(0<\alpha <1\).

Lemma 3

[39] Suppose that \(\omega _1, \omega _2: R\rightarrow R\) are continuous nondecreasing functions such that \(\omega _1(s)\) and \(\omega _2(s)\) are positive for \(s>0\), \(\omega _1(0)=\omega _2(0)=0\), and \(\omega _1, \omega _2\) are strictly increasing. If there exists a continuously differentiable function \(V: R\times R^{n}\rightarrow R\) such that \(\omega _1(\parallel x(t)\parallel )\leqslant V(t,x(t))\leqslant \omega _2(\parallel x(t)\parallel )\) holds, and there exist two constants \(q>p>0\) such that, for any given \(t_0\in R\), the R–L fractional derivative of V along the solution x(t) of the R–L system \(D^{\alpha }x(t)=f(t,x(t),x(t-\tau ))\) satisfies

\[
D^{\alpha }V(t,x(t))\leqslant -qV(t,x(t))+p\sup _{-\tau \leqslant \theta \leqslant 0}V(t+\theta ,x(t+\theta ))
\]

for \(t\geqslant t_0\), then the R–L system \(D^{\alpha }x(t)=f(t,x(t),x(t-\tau ))\) is globally asymptotically stable.
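The role of the requirement \(q>p\) can be illustrated numerically. The sketch below assumes (our reading, not a quotation of [39]) that the derivative condition in Lemma 3 has the Halanay-type form \(D^{\alpha }V\leqslant -qV(t)+p\sup _{-\tau \leqslant \theta \leqslant 0}V(t+\theta )\), and integrates the scalar comparison equation \(D^{\alpha }V=-qV(t)+pV(t-\tau )\) (a pointwise delay, which is bounded by the supremum) with an explicit G–L scheme and constant initial history. The order \(\alpha =0.9\), delay \(\tau =1\), step \(h=0.01\), horizon \(T=50\) and the function names are illustrative assumptions.

```python
import numpy as np

def gl_weights(alpha, n):
    """Grunwald-Letnikov coefficients w_k = (-1)^k * binom(alpha, k), k = 0..n."""
    w = np.empty(n + 1)
    w[0] = 1.0
    for k in range(1, n + 1):
        w[k] = w[k - 1] * (1.0 - (alpha + 1.0) / k)
    return w

def comparison_solution(q, p, alpha=0.9, tau=1.0, h=0.01, T=50.0, v0=1.0):
    """Explicit G-L scheme for D^alpha V = -q V(t) + p V(t - tau), V = v0 for t <= 0."""
    n = int(round(T / h))
    d = int(round(tau / h))                       # delay measured in steps
    w = gl_weights(alpha, n)
    v = np.empty(n + 1)
    v[0] = v0
    for k in range(1, n + 1):
        lagged = v[k - 1 - d] if k - 1 - d >= 0 else v0   # constant history
        rhs = -q * v[k - 1] + p * lagged
        # h^{-alpha} * sum_{j=0}^{k} w_j V_{k-j} = rhs, solved for V_k
        v[k] = h ** alpha * rhs - w[1:k + 1] @ v[k - 1::-1]
    return v

stable = comparison_solution(q=0.6, p=0.5)     # q > p > 0
unstable = comparison_solution(q=0.5, p=0.7)   # p > q: condition violated
print(stable[-1], unstable[-1])
```

Because all G–L weights \(w_{k}\) with \(k\geqslant 1\) are negative and \(h^{\alpha }q<\alpha\) here, every coefficient in the update is nonnegative. With \(q>p\), each computed value therefore stays strictly below \(V(0)\), whereas with \(p>q\) the values are larger than in the stable run from the first step on.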

Furthermore, the transfer functions \(f_{j}, g_{i}\) and the impulsive operators satisfy the following assumptions:

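(H1) The functions \(f_{j},g_{i}\ (j=1,2,\ldots ,m;\ i=1,2,\ldots ,n)\) are Lipschitz continuous. That is, there exist positive constants \(F_{j},G_{i}\) such that

\[
|f_{j}(s_{1})-f_{j}(s_{2})|\leqslant F_{j}|s_{1}-s_{2}|,\qquad |g_{i}(s_{1})-g_{i}(s_{2})|\leqslant G_{i}|s_{1}-s_{2}|,\qquad \forall s_{1},s_{2}\in R.
\]
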
(H2) The impulsive operators \(\gamma ^{(1)}_{k}(x_{i}(t_{k}))\) and \(\gamma ^{(2)}_{k}(y_{j}(t_{k}))\) satisfy

where \(\lambda _{ik}^{(1)}\in (-2,0),(i=1,2,\ldots ,n;\quad k=1,2,\ldots )\) and \(\lambda _{jk}^{(2)}\in (-2,0), (j=1,2,\ldots ,m;\quad k=1,2,\ldots )\).
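To see why the bound \(\lambda \in (-2,0)\) is natural, suppose, purely as an illustrative assumption (the exact form of (H2) is not reproduced here), that the impulses act linearly on the deviations from the equilibrium, \(\Delta u_{i}(t_{k})=\lambda ^{(1)}_{ik}u_{i}(t_{k})\) with \(u_{i}=x_{i}-x_{i}^{*}\), and similarly \(\Delta v_{j}(t_{k})=\lambda ^{(2)}_{jk}v_{j}(t_{k})\) with \(v_{j}=y_{j}-y_{j}^{*}\). Since \(u_{i}(t_{k})=u_{i}(t_{k}^{-})\),

\[
|u_{i}(t_{k}^{+})|=|1+\lambda ^{(1)}_{ik}|\,|u_{i}(t_{k}^{-})|\leqslant |u_{i}(t_{k}^{-})|,\qquad |v_{j}(t_{k}^{+})|=|1+\lambda ^{(2)}_{jk}|\,|v_{j}(t_{k}^{-})|\leqslant |v_{j}(t_{k}^{-})|,
\]

because \(-2<\lambda <0\) implies \(|1+\lambda |<1\). Under this assumption, impulses never increase \(|u_{i}|\) or \(|v_{j}|\), which is the kind of estimate behind \(V(t_{k}^{+})\leqslant V(t_{k}^{-})\) in the proofs.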

3 Main results

In this section, we will state our main results in the following theorems.

Theorem 1

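Suppose that (H1) and (H2) hold. Then the equilibrium \((x^*,y^*)\) of system (3) is globally asymptotically stable if \(\hat{\xi }^{(1)}>0\) and \(\hat{\xi }^{(2)}>0\), where

\[
\xi ^{(1)}_{i}=a_{i}-G_{i}\sum _{j=1}^{m}|d_{ji}|,\quad \xi ^{(2)}_{j}=b_{j}-F_{j}\sum _{i=1}^{n}|c_{ij}|,\quad \hat{\xi }^{(1)}=\min _{1\leqslant i\leqslant n}\xi ^{(1)}_{i},\quad \hat{\xi }^{(2)}=\min _{1\leqslant j\leqslant m}\xi ^{(2)}_{j}.
\]
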
Proof

Shifting the equilibrium point to the origin by the transformation \(x_{i}(t)=u_{i}(t)+x_{i}^*\), \(y_{j}(t)=v_{j}(t)+y_{j}^*\), we convert Eq. (3) into:

Consider a Lyapunov function defined by

When \(t\ne t_{k}\), computing the derivative of V(t) along the solutions of system (6) by the definition of the R–L derivative and Lemma 1, we obtain

Then, for all \(t\in [t_{k-1},t_{k})\), we have

On the other hand, from (5), one has

Let \(U(t)=\sum _{i=1}^{n}\left| u_{i}(t)\right| +\sum _{j=1}^{m}\left| v_{j}(t)\right|\). Then, for all \(t\in [t_{k-1},t_{k})\),

\[
\begin{aligned}
V(t)&\leqslant -\int_{t_{k-1}}^{t}U(s)\,\text{d}s+V(t_{k-1}^{+})\leqslant -\int_{t_{k-1}}^{t}U(s)\,\text{d}s+V(t_{k-1}^{-})\\
&\leqslant -\int_{t_{k-2}}^{t}U(s)\,\text{d}s+V(t_{k-2}^{-})\leqslant \cdots \leqslant -\int_{t_{0}}^{t}U(s)\,\text{d}s+V(t_{0}).
\end{aligned}
\]

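Thus, since \(V(t)\geqslant 0\),

\[
\int_{t_{0}}^{t}U(s)\,\text{d}s\leqslant V(t_{0})-V(t)\leqslant V(t_{0}),\qquad t\geqslant t_{0}.
\]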

Letting \(t\rightarrow +\infty\), the nondecreasing function \(\int_{t_{0}}^{t}U(s)\,\text{d}s\) remains bounded above by \(V(t_{0})\), so it has a finite limit. According to Eq. (6), \(\mid D^{\alpha }u_{i}(t)\mid\) and \(\mid D^{\alpha }v_{j}(t)\mid\) are bounded. By the fractional Barbalat's lemma (Lemma 2), it follows that \(\sum _{i=1}^{n}|u_{i}(t)|\rightarrow 0\) and \(\sum _{j=1}^{m}|v_{j}(t)|\rightarrow 0\) as \(t\rightarrow +\infty\). Therefore, the equilibrium \((x^*,y^*)\) of system (3) is globally asymptotically stable. This completes the proof. \(\square\)

Corollary 1

Suppose that (H1) and (H2) hold, then the equilibrium \((x^*,y^*)\) of system (3) is globally asymptotically stable if

Proof

Simple computations show that all the conditions of Theorem 1 hold; hence, the equilibrium \((x^*,y^*)\) is globally asymptotically stable. \(\square\)

Theorem 2

Under (H1) and (H2), the equilibrium \((x^*,y^*)\) of system (3) is globally asymptotically stable if \(q>p>0\), where

and

Proof

For system (6), consider the following Lyapunov function:

When \(t\ne t_{k}\), computing the derivative of V(t) along the solutions of system (6), one has

where \(\bar{V}(t)=\sup _{t-\tau ^{*}\leqslant s\leqslant t}V(s)\).

On the other hand, by (H2), we have

From Lemma 3, the equilibrium \((x^*,y^*)\) of system (3) is globally asymptotically stable. This completes the proof. \(\square\)

Remark 1

Since the R–L derivative is continuous with respect to the order \(\alpha\), the fractional-order BAM neural network reduces to the classical first-order model when \(\alpha =1\). The proofs above show that Theorems 1 and 2 still hold in this case.

Remark 2

The sufficient conditions in Theorems 1 and 2 are independent of each other, which can be checked with the numerical examples in Sect. 4.

4 Numerical simulations

In this section, we illustrate our results with two examples.

Example 1 uses two low-dimensional cases to compare Theorems 1 and 2, and Example 2 uses a higher-dimensional network to illustrate the usefulness of our results. The algorithm used to simulate the R–L fractional-order neural networks is described in Ref. [33].
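The algorithm of Ref. [33] is not reproduced here. As a stand-in, the following minimal sketch uses a generic explicit G–L discretization and rests on several assumptions that are ours, not the paper's: the model form \(D^{\alpha }x_{i}=-a_{i}x_{i}+\sum _{j}c_{ij}f_{j}(y_{j}(t-\tau ))\), \(D^{\alpha }y_{j}=-b_{j}y_{j}+\sum _{i}d_{ji}g_{i}(x_{i}(t-\tau ))\) with zero inputs, \(f_{j}=g_{i}=\tanh\) (Lipschitz constant 1, matching \(F_{j}=G_{i}=1\)), a single constant delay \(\tau =1\), linear jumps \(x\rightarrow (1+\lambda )x\) with \(\lambda =-0.5\in (-2,0)\) at \(t=5,10,15\), \(\alpha =0.9\), step \(h=0.01\) and hand-picked initial values. The post-jump value enters the memory sum, which is one of several possible conventions. The function name `simulate_bam` is hypothetical.

```python
import numpy as np

def gl_weights(alpha, n):
    """Grunwald-Letnikov coefficients w_k = (-1)^k * binom(alpha, k), k = 0..n."""
    w = np.empty(n + 1)
    w[0] = 1.0
    for k in range(1, n + 1):
        w[k] = w[k - 1] * (1.0 - (alpha + 1.0) / k)
    return w

def simulate_bam(a, b, C, D, x0, y0, alpha=0.9, tau=1.0, h=0.01, T=20.0,
                 impulse_times=(5.0, 10.0, 15.0), lam1=-0.5, lam2=-0.5):
    """Explicit G-L scheme for the hypothetical delayed BAM model
         D^alpha x = -a * x + C @ tanh(y(t - tau)),
         D^alpha y = -b * y + D @ tanh(x(t - tau)),
    with jumps x -> (1 + lam1) x and y -> (1 + lam2) y at impulse_times."""
    a, b = np.asarray(a, float), np.asarray(b, float)
    C, D = np.asarray(C, float), np.asarray(D, float)
    n = len(a)
    steps = int(round(T / h))
    d = int(round(tau / h))                        # delay measured in steps
    w = gl_weights(alpha, steps)
    z = np.zeros((steps + 1, n + len(b)))
    z[0] = np.concatenate([x0, y0])
    jump_steps = {int(round(tk / h)) for tk in impulse_times}
    for k in range(1, steps + 1):
        x, y = z[k - 1, :n], z[k - 1, n:]
        lag = z[max(k - 1 - d, 0)]                 # constant history for t < 0
        rhs = np.concatenate([-a * x + C @ np.tanh(lag[n:]),
                              -b * y + D @ np.tanh(lag[:n])])
        memory = w[1:k + 1] @ z[k - 1::-1]         # sum_{j=1}^{k} w_j z_{k-j}
        z[k] = h ** alpha * rhs - memory
        if k in jump_steps:                        # impulsive jump at t_k
            z[k, :n] *= 1.0 + lam1
            z[k, n:] *= 1.0 + lam2
    return np.arange(steps + 1) * h, z

# Case 1 parameters of Example 1
a = [1.5, 0.5, 0.5]; b = [0.5, 1.5]
C = [[-0.05, 0.1], [0.02, 0.2], [-0.01, 1.0]]
D = [[-0.05, 0.1, 0.02], [0.7, -0.01, 0.01]]
t, z = simulate_bam(a, b, C, D, x0=[1.0, -0.8, 0.6], y0=[-0.5, 0.9])
print(np.abs(z[0]).sum(), np.abs(z[-1]).sum())
```

Plotting the columns of `z` against `t` gives time responses of the kind shown in the figures; the qualitative decay, not the exact curves, is what this sketch is meant to reproduce.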

Example 1

Case 1 Consider the impulsive fractional-order BAM neural network (3) with five neurons: three in the first field (\(n=3\)) and the other two in the second field (\(m=2\)).

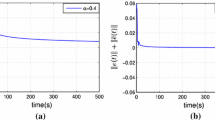

In this case, set \(a_{1}=1.5, a_{2}=0.5, a_{3}=0.5, b_{1}=0.5, b_{2}=1.5, C=[-0.05, 0.1; 0.02, 0.2; -0.01, 1], D=[-0.05, 0.1, 0.02;0.7, -0.01, 0.01]\) and \(I_{i}=J_{j}=0\) (i=1,2,3; j=1,2). Let \(F_{j}=G_{i}=1\) (i=1,2,3; j=1,2). A simple computation gives \(\xi _{1}^{(1)}=0.75, \xi _{2}^{(1)}=0.39, \xi _{3}^{(1)}=0.47\) and \(\xi _{1}^{(2)}=0.42, \xi _{2}^{(2)}=0.2\), so \(\hat{\xi }^{(1)}=0.39>0\) and \(\hat{\xi }^{(2)}=0.2>0\) and the conditions of Theorem 1 are satisfied. Hence, the equilibrium point of system (3) is globally asymptotically stable. Figures 1 and 2 show the trajectories of the variables \(x_{i}(t)\) and \(y_{j}(t)\) of system (3).

Fig. 1 Time response of state variables x(t) in system (3)

Fig. 2 Time response of state variables y(t) in system (3)

Case 2 In this case, set \(a_{1}=1.5, a_{2}=0.5, a_{3}=0.5, b_{1}=0.5, b_{2}=1.2, C=[-0.1, 0.45;0.3, 0.45;0.3, 0.4], D=[0.45, -0.45, 0.3;-0.1, -0.4, 0.45]\) and \(I_{i}=J_{j}=0\) (i=1,2,3; j=1,2). Let \(F_{j}=G_{i}=1\) (i=1,2,3; j=1,2). A simple computation gives \(\hat{c}=\hat{d}=0.5, \hat{a}=0.7\) and \(\hat{b}=0.6\), so \(q=0.6>p=0.5>0\) and the conditions of Theorem 2 are satisfied. Hence, the equilibrium point of system (3) is globally asymptotically stable. Figures 3 and 4 show the trajectories of the variables \(x_{i}(t)\) and \(y_{j}(t)\) of system (3).

Fig. 3 Time response of state variables x(t) in system (3)

Fig. 4 Time response of state variables y(t) in system (3)

Remark 3

In Case 1 of Example 1, it is easy to get that \(\hat{a}=\hat{b}=0.5, \hat{c}=1\) and \(\hat{d}=0.7\), so \(q=0.5<p=0.7\), which does not meet the criterion of Theorem 2. On the other hand, in Case 2 of Example 1, one has \(\xi _{1}^{(1)}=0.95, \xi _{2}^{(1)}=-0.35, \xi _{3}^{(1)}=-0.25\) and \(\xi _{1}^{(2)}=-0.2, \xi _{2}^{(2)}=-0.1\), so \(\hat{\xi }^{(1)}=-0.35<0\) and \(\hat{\xi }^{(2)}=-0.2<0\), which does not meet the criterion of Theorem 1. Thus, the sufficient conditions in Theorems 1 and 2 are independent of each other.
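The Theorem 1 quantities used in Case 1 and in Remark 3 can be checked mechanically. The formulas in the sketch below, \(\xi ^{(1)}_{i}=a_{i}-G_{i}\sum _{j}|d_{ji}|\) and \(\xi ^{(2)}_{j}=b_{j}-F_{j}\sum _{i}|c_{ij}|\) with \(\hat{\xi }\) the minima, are a reconstruction: we infer them because they reproduce every \(\xi\) value printed in both cases, not because they are quoted from the statement of Theorem 1. The function name `theorem1_margins` is hypothetical, and the matrices are stored as printed (C is \(n\times m\), D is \(m\times n\)).

```python
import numpy as np

def theorem1_margins(a, b, C, D, F=None, G=None):
    """Reconstructed Theorem 1 margins (an assumption, inferred from Example 1):
       xi1_i = a_i - G_i * sum_j |d_ji|,   xi2_j = b_j - F_j * sum_i |c_ij|."""
    a, b = np.asarray(a, float), np.asarray(b, float)
    absC, absD = np.abs(np.asarray(C, float)), np.abs(np.asarray(D, float))
    F = np.ones(len(b)) if F is None else np.asarray(F, float)
    G = np.ones(len(a)) if G is None else np.asarray(G, float)
    xi1 = a - G * absD.sum(axis=0)   # D is m x n: column i sums |d_ji| over j
    xi2 = b - F * absC.sum(axis=0)   # C is n x m: column j sums |c_ij| over i
    return xi1, xi2

a = [1.5, 0.5, 0.5]
case1 = theorem1_margins(a, [0.5, 1.5],
                         [[-0.05, 0.1], [0.02, 0.2], [-0.01, 1.0]],
                         [[-0.05, 0.1, 0.02], [0.7, -0.01, 0.01]])
case2 = theorem1_margins(a, [0.5, 1.2],
                         [[-0.1, 0.45], [0.3, 0.45], [0.3, 0.4]],
                         [[0.45, -0.45, 0.3], [-0.1, -0.4, 0.45]])
for name, (xi1, xi2) in [("Case 1", case1), ("Case 2", case2)]:
    print(name, xi1.round(2), xi2.round(2), "min:", xi1.min().round(2), xi2.min().round(2))
```

Case 1 yields positive minima (0.39 and 0.2), so Theorem 1 applies, while Case 2 yields negative minima (-0.35 and -0.2), matching the values reported above.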

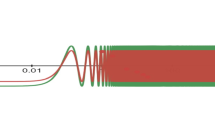

Example 2

Consider an impulsive fractional-order BAM neural network with two hundred neurons, one hundred in each field. We choose higher-dimensional matrices A, B, C and D that satisfy the conditions of Theorems 1 and 2; the other parameters are the same as in Example 1. The time responses of the state variables are shown in Figs. 5 and 6.

Remark 4

Ref. [29] investigated the uniform stability of fractional-order BAM neural networks with time delay but without impulsive effects. In contrast, this paper studies the asymptotic stability of time-delayed fractional-order BAM neural networks with impulsive effects, and the numerical simulations above are consistent with our theoretical results.

5 Conclusion

In this paper, two sufficient conditions were obtained that ensure the global asymptotic stability of impulsive fractional-order BAM neural networks with time delay. Because they are derived with the fractional Barbalat's lemma and Razumikhin-type stability theorems, the new conditions are easy to test in practice. Furthermore, the methods employed in this paper can be used to study other time-delayed fractional-order neural systems. Two examples were given to show that the two sufficient conditions are independent of each other. Finally, we point out that although there are many results on practical engineering applications of BAM neural networks, there are few on practical applications of fractional-order BAM neural networks; this will be the subject of our future work.

References

Laskin N (2000) Fractional market dynamics. Phys A 287:482–492

Metzler R, Klafter J (2000) The random walk’s guide to anomalous diffusion: a fractional dynamics approach. Phys Rep 339:1–77

Shimizu N, Zhang W (1999) Fractional calculus approach to dynamic problems of viscoelastic materials. JSME Int J Ser C 42:825–837

Sabatier J, Agrawal OP, Machado JAT (2007) Advances in fractional calculus. Springer, Dordrecht

Kaslik E, Sivasundaram S (2012) Nonlinear dynamics and chaos in fractional-order neural networks. Neural Netw 32:245–256

Chen JJ, Zeng ZG, Jiang P (2014) Global Mittag-Leffler stability and synchronization of memristor-based fractional-order neural networks. Neural Netw 51:1–8

Chen LP, Chai Y, Wu RC, Ma TD, Zhai HZ (2013) Dynamic analysis of a class of fractional-order neural networks with delay. Neurocomputing 111:190–194

Stamova I (2014) Global Mittag-Leffler stability and synchronization of impulsive fractional-order neural networks with time-varying delays. Nonlinear Dyn 77:1251–1260

Ke YQ, Miao CF (2015) Stability analysis of fractional-order Cohen–Grossberg neural networks with time delay. Int J Comput Math 92:1–12

Rakkiyappan R, Cao JD, Velmurugan G (2014) Existence and uniform stability analysis of fractional-order complex-valued neural networks with time delays. IEEE Trans Neural Netw Learn Syst 26:84–97

Yang XJ, Song QK, Liu YR, Zhao ZJ (2015) Finite-time stability analysis of fractional-order neural networks with delay. Neurocomputing 152:19–26

Bao HB, Cao JD (2015) Projective synchronization of fractional-order memristor-based neural networks. Neural Netw 63:1–9

Tavan P, Grubmuller H, Kuhnel H (1990) Self-organization of associative memory and pattern classification: recurrent signal processing on topological feature maps. Biol Cybern 64:95–105

Sharma N, Ray AK, Sharma S, Shukla KK, Pradhan S, Aggarwal LM (2008) Segmentation and classification of medical images using texture-primitive features: application of BAM-type artificial neural network. J Med Phys 33:119–126

Carpenter GA, Grossberg S (1991) Pattern recognition by self-organizing neural networks. MIT Press, Cambridge

Kosko B (1987) Adaptive bidirectional associative memories. Appl Opt 26:4947–4960

Sakthivel R, Raja R, Anthoni SM (2011) Exponential stability for delayed stochastic bidirectional associative memory neural networks with Markovian jumping and impulses. J Optim Theory Appl 150:166–187

Feng W, Yang SX, Wu H (2014) Improved robust stability criteria for bidirectional associative memory neural networks under parameter uncertainties. Neural Comput Appl 25:1205–1214

Rakkiyappan R, Chandrasekar A, Lakshmanan S, Park JH (2014) Exponential stability for Markovian jumping stochastic BAM neural networks with mode-dependent probabilistic time-varying delays and impulse control. Complexity 20:39–65

Li L, Yang YQ, Lin G (2015) The stabilization of BAM neural networks with time-varying delays in the leakage terms via sampled-data control. Neural Comput Appl. doi:10.1007/s00521-015-1865-4

Chen B, Yu L, Zhang WA (2011) Exponential convergence rate estimation for neutral BAM neural networks with mixed time-delays. Neural Comput Appl 20:451–460

Lu DJ, Li CJ (2013) Exponential stability of stochastic high-order BAM neural networks with time delays and impulsive effects. Neural Comput Appl 23:1–8

Ke YQ, Miao CF (2013) Stability and existence of periodic solutions in inertial BAM neural networks with time delay. Neural Comput Appl 23:1089–1099

Raja R, Raja UK, Samidurai R, Leelamani A (2014) Passivity analysis for uncertain discrete-time stochastic BAM neural networks with time-varying delays. Neural Comput Appl 25:751–766

Cao JD, Wan Y (2014) Matrix measure strategies for stability and synchronization of inertial BAM neural network with time delays. Neural Netw 53:165–172

Gemant A (1936) A method of analyzing experimental results obtained from Elasto–Viscous bodies. J Appl Phys 7:311–317

Krepysheva N, Di PL, Neel MC (2006) Fractional diffusion and reflective boundary condition. Phys A 368:355–361

Cao YP, Bai CZ (2014) Finite-time stability of fractional-order BAM neural networks with distributed delay. Abstr Appl Anal 2014:1–8

Yang XJ, Song QK, Liu YR, Zhao ZJ (2014) Uniform stability analysis of fractional-order BAM neural networks with delays in the leakage terms. Abstr Appl Anal 2014:1–16

Li Y, Chen YQ, Podlubny I (2010) Stability of fractional-order nonlinear dynamic systems: Lyapunov direct method and generalized Mittag–Leffler stability. Comput Math Appl 59:1810–1821

Trigeassou JC, Maamri N, Sabatier J, Oustaloup A (2011) A Lyapunov approach to the stability of fractional differential equations. Sig Process 91:437–445

Hu JB, Lu GP, Zhang SB, Zhao LD (2015) Lyapunov stability theorem about fractional system without and with delay. Commun Nonlinear Sci Numer Simul 20:905–913

Wang F, Yang YQ, Hu MF (2015) Asymptotic stability of delayed fractional-order neural networks with impulsive effects. Neurocomputing 154:239–244

Sakthivel R, Raja R, Anthoni SM (2010) Asymptotic stability of delayed stochastic genetic regulatory networks with impulses. Phys Scr 82:055009

Sakthivel R, Samidurai R, Anthoni SM (2010) New exponential stability criteria for stochastic BAM neural networks with impulses. Phys Scr 82:045802

Sakthivel R, Samidurai R, Anthoni SM (2010) Asymptotic stability of stochastic delayed recurrent neural networks with impulsive effects. J Optim Theory Appl 147:583–596

Balasubramaniam P, Vembarasan V (2011) Asymptotic stability of BAM neural networks of neutral-type with impulsive effects and time delay in the leakage term. Int J Comput Math 88:3271–3291

Li CJ, Li CD, Liao XF, Huang TW (2011) Impulsive effects on stability of high-order BAM neural networks with time delays. Neurocomputing 74:1541–1550

Chen BS, Chen JJ (2015) Razumikhin-type stability theorems for functional fractional-order differential systems and applications. Appl Math Comput 254:63–69

Deng WH, Li CP (2008) The evolution of chaotic dynamics for fractional unified system. Phys Lett A 372:401–407


Additional information

This work was jointly supported by the National Natural Science Foundation of China under Grant 11226116 and by the Fundamental Research Funds for the Central Universities (JUSRP51317B).


Cite this article

Wang, F., Yang, Y., Xu, X. et al. Global asymptotic stability of impulsive fractional-order BAM neural networks with time delay. Neural Comput & Applic 28, 345–352 (2017). https://doi.org/10.1007/s00521-015-2063-0
