Abstract

In image processing and computer vision, denoising is an important step that precedes many processing tasks. This paper presents a new adaptive noise-reducing anisotropic diffusion (ANRAD) method to improve image quality, which can be considered a modified version of the speckle-reducing anisotropic diffusion (SRAD) filter. The SRAD works very well for monochrome images with speckle noise. However, for images corrupted with other types of noise, it cannot provide optimal image quality because its noise model is inaccurate. The ANRAD method introduces an automatic RGB noise model estimator into a partial differential equation system similar to the SRAD diffusion. At each iteration, it estimates an upper bound of the real noise level function by fitting a lower envelope to the standard deviations of pre-segment image variances. Compared to the conventional SRAD filter, the proposed filter has the advantage of being adapted to the color noise produced by today’s CCD digital cameras. The simulation results show that the ANRAD filter can reduce the noise while preserving image edges and fine details very well. It also compares favorably with the fast non-local means filter, showing an improvement in the quality of the restored image. The quantitative comparison uses the mean structural similarity index and the peak signal-to-noise ratio.

1 Introduction

As is well known, machine vision cameras capture the spatial distribution of the light incident on a light-sensitive device and therefore produce two-dimensional descriptions of this distribution, known as images. Charge-coupled devices (CCD) were suggested and experimentally verified in the 1970s as image sensors. They have become a major piece of imaging technology and are included in many current cameras. CCD-based digital cameras are commonly used in a variety of commercial, medical, scientific, and military applications. During acquisition and transmission, an image is often corrupted by different sources of noise, which may significantly reduce its quality. Thus, a pre-processing (denoising) step is required before the noisy image can be used in applications such as edge detection and compression. The goal of image denoising is to estimate the unknown signal of interest from the available noisy data. Many powerful denoising algorithms have been proposed over the past few years. Most of them require their parameters to be adjusted according to the noise level (or noise variance) [1–4]. In this case, it is desirable to know the noise type and its statistical characteristics (variance, probability density function). In some works, the noise type and characteristics are assumed to be known in advance, which is not valid in practical circumstances. In other studies, the noise characteristics are provided manually, which can yield a reliable noise level but is tedious and increases the execution time of the algorithms. As a consequence, several automatic noise estimators for gray-level images have been suggested, such as the algorithms proposed in [5–7].
The problem with such methods is that they often assume the noise to be white, signal independent, of constant variance over the whole image, and either purely additive or purely multiplicative. Recently, it has been clearly demonstrated that this noise model is not adequate for images captured by CCD digital cameras [8–10]. Hence, these approaches cannot fully fit the noise level of CCD cameras, and denoising algorithms supplied with a poor noise level estimate do not give optimal image quality. It is therefore necessary to estimate the noise level correctly to improve the effectiveness of the denoising algorithms. In recent years, particular attention has been given to denoising ultrasound images with the method proposed by Yu and Acton [11], named speckle-reducing anisotropic diffusion (SRAD). The SRAD filter is very commonly used to reduce speckle noise in particular. Speckle is a type of granular noise found in several kinds of coherent imaging systems, such as synthetic aperture radar (SAR), ultrasound, or laser-illuminated imaging. Regardless of the theoretical merit of the SRAD for restoring ultrasound images, the key point is the accurate estimation of the noise parameter. Indeed, the SRAD assumes the noise to be white, signal independent, and purely multiplicative [12]. Unfortunately, these assumptions are not always true for a wide range of images formed by CCD digital cameras. Specifically, unprocessed raw data produced directly by the CCD image sensor contain white noise. However, the raw image goes through various image processing steps such as demosaicing, color correction, gamma correction, color transformation, and JPEG compression. As a consequence, the noise characteristics in the final output image deviate significantly from the assumed noise model (i.e., white multiplicative) [8, 13].
From this fact, it becomes clear that the demand for color image denoising goes well beyond the white noise case [14, 15]. Hence, the main interest of this study is to put forward a new image filtering technique, called the adaptive noise-reducing anisotropic diffusion (ANRAD) filter, which combines the SRAD filter with a more general automatic noise estimator operating on a single image in order to better remove the color noise produced by a CCD digital camera. The noise considered is colored, mixed, and signal dependent; its standard deviation is represented as a function of pixel intensity, called the noise level function (NLF). This makes it possible to reduce the noise to a minimum, to achieve image quality close to that of photography, and to obtain more information from CCD image data. The strength of the proposed filter is that it can be used for images corrupted with any kind of noise, whether multiplicative, additive, or mixed. The amount of denoising is controlled locally by the noise variance associated with each intensity of the image, which improves the denoising result. In addition, the ANRAD allows denoising to differ along different directions, i.e., denoising across an edge can be prevented while denoising along the edge is enabled.

The rest of this paper is organized as follows. In Sect. 2, a survey of related works is given. Section 3 bears on the strategy for deriving ANRAD filter. The experimental evaluations and the comparative analysis are presented and discussed in Sect. 4. The advantages and limitations of the ANRAD are presented in Sect. 5. Section 6 is dedicated to the conclusion and future directions.

2 Related work

In image analysis, the goal of image restoration is to reconstruct a plausible estimate of the original image from the distorted or noisy observation, relieving human observers of this task (and perhaps even improving on their abilities). For several years, many studies have addressed the improvement of digital images to increase the quality of visual rendering and to enhance contrast and sharpness in order to facilitate later analysis. The main families of these “filtering” methods are:

Bilateral filter: It was developed by Tomasi and Manduchi [16]. It smoothes flat surfaces as the Gaussian filter, while preserving sharp edges in the image. The idea underlying bilateral filtering is to compute the weight of each pixel using a spatial kernel and multiply it by an influence function in the intensity domain (range kernel) that decreases the weight of pixels with large intensity differences. However, the bilateral filter in its direct form cannot handle speckle noise, and it has the tendency to oversmooth edges. Also, it is slow when the kernel is large. Nonetheless, several solutions have been proposed to accelerate the evaluation of the bilateral filter such as [17–21]. Unfortunately, most of these approaches rely on approximations that are not based on firm theoretical foundations, and it is hard to evaluate their accuracy. The essential link between bilateral filtering and anisotropic diffusion is examined in [22]. In more recent years, adaptive bilateral filters are proposed in [23–25]. These filters retain the general form of the bilateral filter, but differ when introducing an offset in the range of the filter. Both the offset and the width of the range change locally in the image. These locally adaptive approaches require a complicated training-based method and are especially used for image enhancement. In [26], a recursive implementation of the bilateral filter is suggested, where computational and memory complexities are linear in both image size and dimensionality. However, its range filter kernel uses the pixel connectivity and thus cannot be directly employed for applications ignoring the spatial relationships.
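The weighting idea behind the bilateral filter can be illustrated with a minimal one-dimensional sketch. It is not the implementation of [16] or of any accelerated variant; the function name `bilateral_1d`, the Gaussian form of both kernels, and the default parameter values are assumptions chosen for illustration.

```python
import math

def bilateral_1d(signal, sigma_space=2.0, sigma_range=0.1, radius=4):
    """Bilateral-filter a 1D list of intensities (illustrative only)."""
    n = len(signal)
    out = []
    for i in range(n):
        num = 0.0
        den = 0.0
        for k in range(max(0, i - radius), min(n, i + radius + 1)):
            # Spatial kernel: weight decays with distance from the centre sample.
            w_space = math.exp(-((k - i) ** 2) / (2.0 * sigma_space ** 2))
            # Range kernel: weight decays with the intensity difference.
            diff = signal[k] - signal[i]
            w_range = math.exp(-(diff ** 2) / (2.0 * sigma_range ** 2))
            num += w_space * w_range * signal[k]
            den += w_space * w_range
        out.append(num / den)  # den >= 1 because the centre sample has weight 1
    return out

# A step edge: samples across the edge receive almost no weight.
step = [0.0] * 8 + [1.0] * 8
smoothed = bilateral_1d(step)
```

Because the range kernel is nearly zero for samples on the other side of the step, the edge survives while each flat side is averaged. The double loop also shows why the direct form becomes slow for large kernels.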

Wavelet transforms: Signals are always the input for wavelet transforms, where a signal can be decomposed into several scales that represent different frequency bands. At each scale, the position of the signal’s instantaneous structures can be determined approximately. Such a property can be manipulated in many ways to achieve certain results. These include denoising [27–29], compression [30–32], feature detection [33–35], etc. Several noise reduction techniques based on the approaches of wavelet have been proposed in the literature [36–38]. In [39], Yansun et al. developed a denoising method based on the direct spatial correlation between the wavelet transforms over adjacent scales. Threshold-based denoising is another powerful approach based on wavelet transforms to noise reduction. It was first suggested by Donoho [40, 41], which transformed the noisy signal into wavelet coefficients, then employed a hard or soft threshold at each scale, and finally transformed the result back to the original domain and got the estimated signal. In [42], Chang et al. put forward a spatially adaptive wavelet thresholding method based on a context modeling technique. Context modeling is used to estimate the local variance for each wavelet coefficient and then is used to adapt the thresholding strategy. Li and Wang in their work [43] proposed a wavelet-based method, where they decomposed the noisy image in order to get a different sub-band image. They maintain low-frequency wavelet coefficients unchanged, and then, taking into account the relation of horizontal, vertical, and diagonal high-frequency wavelet coefficients, they compare them with the Donoho threshold to achieve image denoising. In [29], image denoising using a wavelet-based fractal method is implemented. In [27, 36], wavelet filters were used for denoising the speckle noise in optical coherence tomography data. 
Wavelets may be a good tool for denoising images because of their energy compactness, sparseness, and correlation properties, but they are still inadequate in their denoising performance due to the simple thresholding methods.
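The hard and soft thresholding rules mentioned above can be sketched as follows. The functions act on individual detail coefficients; the wavelet transform itself is not shown, and the coefficient values and the threshold 0.25 are made-up illustration data.

```python
def hard_threshold(coeff, t):
    """Keep a coefficient unchanged if its magnitude exceeds t, else zero it."""
    return coeff if abs(coeff) > t else 0.0

def soft_threshold(coeff, t):
    """Zero small coefficients and shrink the remaining ones toward zero by t."""
    if coeff > t:
        return coeff - t
    if coeff < -t:
        return coeff + t
    return 0.0

# Hypothetical detail coefficients at one scale.
detail = [0.05, -0.8, 0.3, -0.02, 1.2]
hard = [hard_threshold(c, 0.25) for c in detail]
soft = [soft_threshold(c, 0.25) for c in detail]
```

Hard thresholding leaves the surviving coefficients untouched, whereas soft thresholding also reduces their magnitude by the threshold.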

Non-Local Means (NLM) filter: Buades et al. introduced the NLM filter [44], which assumes that similar patches can be found in the image. The patch similarity is estimated by a statistically grounded similarity criterion derived from the noise distribution model. This filter performs well in reducing additive white Gaussian noise. However, the NLM filter has a complexity that is quadratic in the number of pixels in the image, which makes the technique computationally intensive and even impractical in real applications. For this reason, a number of accelerated NLM methods have been developed, such as the fast non-local image denoising algorithm [45], which proposes an algorithmic acceleration technique based on neighborhood pre-classification. In [46], the authors gave a comprehensive survey of patch-based non-local filtering of radar imaging data. In [47], the authors implemented an image denoising method that used principal component analysis (PCA) in conjunction with NLM image denoising. Wua et al. suggested a version of the NLM filter [48], in which the curvelet transform is first used to produce reconstructed images. Then, the similarity of two pixels in the noisy image is computed. Finally, the pixel similarity and the noisy image are used to obtain the final denoised result with the non-local means method.

Anisotropic diffusion: Perona and Malik anisotropic diffusion (PMAD) filtering, being the most common nonlinear technique [49], was inspired from the heat diffusion equation by introducing a diffusion function that was dependent on the norm of the gradient of the image. The diffusion function, therefore, had the effect of reducing the diffusion for high gradients. It has been widely used for various applications such as satellite images [11, 50, 51], astronomical images [52, 53], medical images [54–56], and forensic images [57, 58]. However, the PMAD filter has had two limitations up to now. First, it smoothes the information identically in all directions (isotropic). Second, the choice of the threshold on the norm of the gradient needed for the diffusion function is not evident, which makes the technique not truly automatic and cannot effectively remove noise. For this reason, some improvements have been proposed by different researchers. In [59], Weickert suggested a diffusion matrix instead of a scalar. Karl et al. proposed a version of anisotropic diffusion, where diffusion was controlled by the local orientation of the structures in the image [60]. Yu and Acton [11] developed a version of the PMAD filter, called the SRAD, based on the speckle noise model, where the diffusion was controlled by the local statistics of the image. To adopt correctly the SRAD for multiplicative noise, Aja-Fernández and Alberola-López [61] suggested a detail preserving anisotropic diffusion (DPAD) filter, which estimated the noise using the mode of the distributions of local statistics of the whole image [61, 62]. In the recent years, other studies have been developed on new well posed equations such as [63–68]. In [63], the authors implemented a ramp preserving PMAD model based on an edge indicator, a difference curvature, which can distinguish edges from flat and ramp regions. 
Since anisotropic diffusion is an iterative process, choosing the optimal stopping time to prevent an over-smoothed result is crucial. Therefore, various methods for estimating this parameter have been proposed [69–71]. For instance, Tsiotsios and Petrou [69] proposed a version of the anisotropic diffusion filter with an automatic stopping criterion that takes into consideration the quality of the preserved edges. More recently, a modified diffusion scheme suitable for textured images has been described in [72].
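The gradient-dependent diffusion function at the heart of the PMAD filter can be sketched with the two classical forms usually associated with Perona and Malik. The function names and the name `k` for the gradient threshold are assumptions made here.

```python
import math

def pm_exponential(grad_norm, k):
    """Diffusion function exp(-(|grad u| / k)^2)."""
    return math.exp(-(grad_norm / k) ** 2)

def pm_rational(grad_norm, k):
    """Diffusion function 1 / (1 + (|grad u| / k)^2)."""
    return 1.0 / (1.0 + (grad_norm / k) ** 2)

# Both functions equal 1 in flat regions and decay as the gradient grows.
values = [(g, pm_exponential(g, 0.1), pm_rational(g, 0.1))
          for g in (0.0, 0.05, 0.1, 0.5)]
```

Both functions reduce the diffusion for high gradients, and both depend on the threshold `k`, whose choice is the non-automatic step discussed above.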

3 Proposed method

3.1 Camera noise model

3.1.1 Signal-dependent noise model

In the CCD-based digital cameras, the photons transmitted through the lens system are converted to charge in the CCD sensor. Then, the charge is amplified, sampled, and digitally enhanced to become an image (bits). The typical imaging pipeline of the CCD camera is shown in Fig. 1. The image provided by the CCD imaging system is characterized by high quality; however, it is not completely free from different types of distortions and artifacts. There exist mainly five noise sources, as mentioned in [73, 74]: fixed pattern noise (FPN), dark current noise, thermal noise (\(N_{\rm c}\)), shot noise (\(N_{\rm s}\)), and amplifier and quantization noise (\(N_{\rm q}\))—which is negligible in this work [15].

The final brightness \(I_{N}\) produced by a real CCD imaging system is related to the scene radiance L via the camera response function f (nonlinear imaging system) and is given as [75, 76]:

Similarly, for an ideal system, if it is necessary to find L, the inverse function g is required, where \(g\,=\,f^{-1}\), and then \(L=g(I)\). Thus, (1) can be modeled as:

Here, I denotes the noise-free image, \(N_{\rm s}\) defines a multiplicative noise component modeled as a Gaussian distribution with a zero mean and the relative variance \(I\cdot \sigma _{\rm s}^{2}\), and \(N_{\rm c}\) describes an additive noise component with a zero-mean Gaussian distribution with the variance \(\sigma _{\rm c}^{2}\) [10, 77]. It can be noted that the multiplicative component of the noise directly depends on the image brightness.

To obtain unknown color information at each pixel location from the CCD image sensor, some interpolation forms (called demosaicing) are carried out to get the full-resolution color image [78]. Accordingly, the noise variance of the interpolated pixels tends to become smaller than that of the directly observed pixels [79]. As a consequence, this process introduces spatial correlations [80] to the noise characteristics in the demosaiced image (see Fig. 2). Thus, the noise becomes colored instead of being white.

3.1.2 Noise model estimation

Let \({\varSigma }^{2}(u, f, \sigma _{\rm s}, \sigma _{\rm c})\) be the correlated NLF to be estimated, which depends on the local intensity u and on a set of parameters f, \(\sigma _{\rm s}\) and \(\sigma _{\rm c}\) that are determined by the image acquisition protocol [10, 15, 81]. The iterative noise level estimation process used to compute it is presented in Fig. 3. Three basic stages are needed to estimate the noise level function \({\varSigma }^{2}\) effectively:

- Theoretical model of NLF
- NLF from a single image
- Maximum likelihood estimation

(a) Theoretical model of NLF

The variance of the generalized noise model term of Eq. 2 is written as [15, 81]:

where \(\mathbb {E}\left[ .\right]\) is the expected value of the random variable, \(u_{n}\) is the synthesized noisy pixel value, and u is the noise-free pixel value. By changing the three NLF parameters, \(f, \sigma _{\rm s}\) and \(\sigma _{\rm c}\), Eq. (3) can represent the whole space of the NLFs, named the noise level functions database (NLFD). To do that, it is necessary to have a test pattern image u containing 256 gray scale levels (Fig. 4) as well as 201 camera response functions f, which are downloaded from [82], where some are shown in Fig. 5 and where \(\sigma _{\rm s}\) is from 0 to 0.16 and \(\sigma _{\rm c}\) is from 0.01 to 0.06 [15]. For instance, Fig. 6 presents f(60) and some of its NLFs estimated at different values of \(\sigma _{\rm c}\) and \(\sigma _{\rm s}\).
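The construction of one NLF for a given triple \(f, \sigma _{\rm s}, \sigma _{\rm c}\) can be sketched by Monte Carlo simulation. This is only a sketch under stated assumptions: the NLF is taken to be \(\mathbb {E}[(u_{n}-u)^{2}]\), the noise is injected in the radiance domain as \(u_{n}=f(g(u)+N_{\rm s}+N_{\rm c})\) with the variance of \(N_{\rm s}\) proportional to the radiance, and a plain gamma curve stands in for the measured camera response functions of [82]. All function names are hypothetical.

```python
import random

def crf(radiance, gamma=2.2):
    """Hypothetical camera response function f (a plain gamma curve)."""
    return min(max(radiance, 0.0), 1.0) ** (1.0 / gamma)

def inverse_crf(intensity, gamma=2.2):
    """Inverse response g = f^{-1} of the gamma curve above."""
    return min(max(intensity, 0.0), 1.0) ** gamma

def noisy_pixel(u, sigma_s, sigma_c, rng):
    """Synthesize a noisy value u_n from a noise-free intensity u in [0, 1]."""
    radiance = inverse_crf(u)
    # Signal-dependent term: variance assumed equal to radiance * sigma_s^2.
    n_s = rng.gauss(0.0, sigma_s * radiance ** 0.5)
    # Signal-independent term with variance sigma_c^2.
    n_c = rng.gauss(0.0, sigma_c)
    return crf(radiance + n_s + n_c)

def estimate_nlf(sigma_s, sigma_c, levels=256, trials=500, seed=0):
    """Monte Carlo estimate of E[(u_n - u)^2] for each gray level."""
    rng = random.Random(seed)
    nlf = []
    for k in range(levels):
        u = k / (levels - 1)
        sq_err = 0.0
        for _ in range(trials):
            sq_err += (noisy_pixel(u, sigma_s, sigma_c, rng) - u) ** 2
        nlf.append(sq_err / trials)
    return nlf

# One NLF at the upper end of the parameter ranges quoted above.
nlf = estimate_nlf(sigma_s=0.16, sigma_c=0.06, levels=32, trials=200)
```

Repeating this for every response function and every pair \((\sigma _{\rm s}, \sigma _{\rm c})\) would fill the database one NLF at a time.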

The NLFD is a matrix (\({\varGamma }\)) that consists of all the existing NLFs. Each column in \({\varGamma }\) represents an NLF for a given \(f , \sigma _{\rm c}\) and \(\sigma _{\rm s}\), and the row indexes refer to the 256 intensity values ranging from 0 to 1. Applying the PCA on \({\varGamma }\), a general form of the approximation model of the NLFs for each red, green, and blue channel is given by [15]:

where \(V_{i}\) and \(\overline{{\varSigma }^{2}}\) \(\in\) \(\mathbb {R}^{d}\) (\(d=256\)) are, respectively, the eigenvectors and the mean of the NLF obtained by the PCA, and where \(\lambda _{1},\ldots ,\lambda _{m}\) are the unknown parameters of the model with the number of retained principal components \(m=6\) [15].
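Evaluating such a PCA model amounts to adding a weighted sum of eigenvectors to the mean NLF. The sketch below assumes the model has the form \(\overline{{\varSigma }^{2}}+\sum _{i=1}^{m}\lambda _{i}V_{i}\), which is what the symbol definitions above suggest. The function name and the toy dimensions (\(d=4\), \(m=2\) instead of \(d=256\), \(m=6\)) are illustrative.

```python
def nlf_from_pca(mean_nlf, eigenvectors, lambdas):
    """Return mean_nlf + sum_i lambdas[i] * eigenvectors[i], element by element."""
    nlf = list(mean_nlf)
    for lam, vec in zip(lambdas, eigenvectors):
        for k in range(len(nlf)):
            nlf[k] += lam * vec[k]
    return nlf

# Toy example with d = 4 intensity levels and m = 2 components.
mean_nlf = [1.0, 1.0, 1.0, 1.0]
eigenvectors = [[1.0, 0.0, 0.0, 0.0],
                [0.0, 1.0, 0.0, 0.0]]
model = nlf_from_pca(mean_nlf, eigenvectors, [0.5, -0.25])
```

Only the m coefficients \(\lambda _{i}\) remain unknown per channel, which keeps the later fitting problem small.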

(b) NLF From a single image

Basically, one-channel images are considered, assuming that similar operations are performed for each component image of multichannel data. This process is based on the next four steps:

- Step 1: Pre-segmentation (see Fig. 7): First, smooth the noisy image by convolving it with a low-pass filter, and then partition the smoothed image into homogeneous regions with both similar spatial coordinates and similar RGB pixel values [10, 83, 84].

- Step 2: Estimate the noise-free signal and the noise variance for each region as follows:

$$\hat{u}_{l} = \frac{1}{\eta _{l}} \sum _{j=1}^{\eta _{l}} u^{j}_{n} \tag{5}$$

$$\hat{\sigma }^{2}_{l} = \frac{1}{\eta _{l}} \sum _{j=1}^{\eta _{l}} \left( u^{j}_{n}-\hat{u}_{l}\right) ^{2} \tag{6}$$

where \(\hat{u}_{l}\) is the estimate of the noise-free signal, \(u^{j}_{n}\) is the jth pixel value in the observed region, \(\eta _{l}\) is the number of pixels in the observed region, \(\hat{\sigma }_{l}^{2}\) is the estimated noise variance, \(l=1,\ldots , N_{bl}\), and \(N_{bl}\) is the number of regions, which depends on the image.

- Step 3: Form scatter plots of the noise variance samples against the estimated noise-free signals for each RGB channel. An example of the obtained scatter plot is shown in Fig. 8a. The points in the scatter plot have the coordinates \(\hat{\sigma }_{l}^{2}\) on the vertical axis and \(\hat{u}_{l}\) on the horizontal axis [85].

- Step 4: Select the weakly textured regions by discretizing the range of image intensity into \(\kappa\) uniform intervals and then finding, in each interval, the region with the minimum variance. Consequently, the lower envelope strictly and tightly below the sample points is the estimated NLF curve (the blue dots in Fig. 8).

However, the estimated variance of each region is an overestimate of the noise level because it may contain the signal, so the obtained lower envelope is an upper bound estimate of the NLF.
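Steps 2 to 4 can be sketched for a single channel as follows. The pre-segmentation of Step 1 is assumed to have been done already, so each region arrives as a flat list of intensities in [0, 1]. The function names and the toy regions are hypothetical.

```python
def region_statistics(region):
    """Return the mean (Eq. 5) and the variance (Eq. 6) of one region's pixels."""
    n = len(region)
    mean = sum(region) / n
    var = sum((p - mean) ** 2 for p in region) / n
    return mean, var

def lower_envelope(regions, kappa):
    """Keep, for each of kappa uniform intensity intervals, the (mean, variance)
    sample of the region with the smallest variance."""
    best = [None] * kappa
    for region in regions:
        mean, var = region_statistics(region)
        b = min(int(mean * kappa), kappa - 1)  # interval index of this region
        if best[b] is None or var < best[b][1]:
            best[b] = (mean, var)
    return [sample for sample in best if sample is not None]

# Four toy regions: two dark and two bright, one textured region in each pair.
regions = [[0.1, 0.1, 0.1, 0.1], [0.1, 0.2, 0.1, 0.2],
           [0.8, 0.9], [0.85, 0.85]]
envelope = lower_envelope(regions, kappa=2)
```

In this toy case the textured regions are discarded and the two flat regions remain as envelope samples, which mirrors the selection of weakly textured regions in Step 4.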

(c) Maximum likelihood estimator

In this subsection, an accurate model of the NLF is presented, which combines the general form of the approximation model of Eq. 4 with the selected weak textured regions [85]. The goal is to infer the accurate NLF model from the lower envelope of the samples. To this end, an inference problem in a probabilistic framework was formulated in [15]. Mathematically, the likelihood function is:

where \(\kappa\) is the number of selected weak textured patches, \(\eta _{t} , \hat{u}_{t}\) and \(\hat{\sigma }^{2}_{t}\) are, respectively, the number of pixels, the mean pixel value, and the estimated variance of the tth weak textured region, \(\varepsilon\) controls how the function should approach the samples, \(\hbox {exp}\left\{ \cdot \right\}\) is the exponential function, and \({\varPhi }(\cdot )\) is the cumulative distribution function of the standard normal distribution. The cost function to be minimized can be obtained from the negative log-likelihood function as:

To minimize the cost function, the MATLAB standard nonlinear constrained optimization function “fmincon” is used.
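The shape of such a cost function can be sketched as below. The exact likelihood is not reproduced in this text, so the form used here is an assumption built from the symbols described above: a \({\varPhi }(\cdot )\) term that penalizes model values lying above a sample, multiplied by an exponential term, scaled by \(\varepsilon\), that keeps the curve close to the samples. The function names are hypothetical, and no constrained optimizer is shown.

```python
import math

def normal_cdf(x):
    """Cumulative distribution function of the standard normal distribution."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def neg_log_likelihood(model_var, samples, eps):
    """Assumed cost. `samples` holds (eta_t, u_t, var_t) per weak textured region;
    `model_var` maps an intensity u to the modeled noise variance."""
    cost = 0.0
    for eta, u, var in samples:
        gap = var - model_var(u)  # positive when the model lies below the sample
        phi = normal_cdf(math.sqrt(eta) * gap / var)
        # Guard against log(0) when the model lies far above the sample.
        cost += -math.log(max(phi, 1e-300)) + gap ** 2 / (2.0 * eps ** 2)
    return cost

# One sample; compare a curve just below it with a curve just above it.
samples = [(100, 0.5, 0.05)]
cost_below = neg_log_likelihood(lambda u: 0.045, samples, eps=0.04)
cost_above = neg_log_likelihood(lambda u: 0.055, samples, eps=0.04)
```

Under these assumptions a curve slightly below the sample costs less than one equally far above it, which is the behavior expected of a lower-envelope fit.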

Based on the effect of the correlation between the RGB channels and using the maximum likelihood estimator (MLE), the best approximation of \({\varSigma }^{2}(u)\) for the RGB channels can be given. For more details, the reader can refer to [15, 85].

3.2 Adaptive noise-reducing anisotropic diffusion

In this section, a new version of the SRAD filter is proposed, called the ANRAD, to enhance the images corrupted by color noise whose general model is in Eq. 2. The conventional SRAD filter is presented in the context of ultrasound images corrupted by speckle noise, where it could be written as [11]:

Here, \(\nabla\) is the gradient operator, div is the divergence operator, \({\Delta } t\) is the time step, \(u_{i,j;t}\) is the sampled discrete image, (i, j) denote the spatial coordinates of a pixel x on a 2D discrete grid (observed image), \(w_{i,j}\) represents the spatial neighbors centered at the current pixel, \(\vert w_{i,j} \vert\) is the size of the four direct neighborhood (4DN), which is equal to 4, and \(c(\ldots )\) is the diffusion function determined by

with

and

where \(q_{i,j;t}\) is the instantaneous coefficient of variation of the image, which allows distinguishing homogeneous regions from edges in bright and dark areas, and \(q_{n}(t)\) is the speckle NLF at the time t, which controls the amount of smoothing applied to the image. Also, Var(area) and area are, respectively, the variance and the mean of the intensities within a homogeneous image area, and \(\hbox {Var}(u_{i,j;t})\) and \(\overline{u}_{i,j;t}^{2}\) are, respectively, the local variance and the squared local mean at each pixel in the image. The SRAD noise estimation scheme has some disadvantages. It assumes that the speckle noise is purely multiplicative, uncorrelated, of equal variance, signal independent, and produced by a linear imaging system. In addition, it requires a known homogeneous region within the processed image. Although it is not difficult for a user to select a homogeneous region in the image, it is non-trivial for a machine to do so. With these assumptions, the algorithm is not practical for images captured by CCD digital cameras because, as noted above, the noise is essentially random and strongly dependent on the image intensity level. Also, there are spatial correlations introduced by demosaicing (color noise). Consequently, this filter is not adequate for CCD images: it cannot fully fit the noise characteristics or give optimal image quality. To overcome the disadvantages of the SRAD [recall (9)–(12)] and to improve the restoration result, Eq. (12) is replaced by a non-stationary noise scale factor \(Q^{2}_{n}(i,j;t)={\varSigma }^{2}(u_{i,j;t})/u_{i,j;t}^{2}\), whose parameters are estimated automatically as described in Sect. 3.1.2. Introducing it in Eq. (10) gives the new diffusion function \(c(q_{i,j;t},Q_{n}(i,j;t))\), which defines the ANRAD.
As can be seen, the filter is based on the estimation of the local coefficients of variation of the image \(q^{2}_{i,j;t}\) and of the noise \(Q^{2}_{n}(i,j;t)\). The better they are estimated, the better the filter performs. Moreover, the choice of the window size greatly affects the quality of the processed images. If the window is too small, the noise filtering will not be effective and the filter will become very sensitive to noise. If the window is too large, subtle details of the image will be lost in the filtering process. In our experiments, a \(5\times 5\) window is used as a fairly good choice [62].
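The way the two coefficients of variation drive the diffusion can be sketched as follows. The sketch assumes the usual SRAD form of the diffusion function, \(c=1/\left( 1+(q^{2}-Q_{n}^{2})/(Q_{n}^{2}(1+Q_{n}^{2}))\right)\), with \(q^{2}\) taken as the ratio of the local variance to the squared local mean over a \(5\times 5\) window. Clamping c to [0, 1], the small constant `tiny`, and passing the NLF as a callable `nlf` are choices made here for illustration.

```python
def local_mean_var(u, i, j, half=2):
    """Mean and variance of u over a (2*half+1)^2 window, truncated at borders."""
    rows, cols = len(u), len(u[0])
    vals = [u[r][c]
            for r in range(max(0, i - half), min(rows, i + half + 1))
            for c in range(max(0, j - half), min(cols, j + half + 1))]
    mean = sum(vals) / len(vals)
    var = sum((v - mean) ** 2 for v in vals) / len(vals)
    return mean, var

def diffusion_coefficient(q2, noise_q2):
    """SRAD-style diffusion function, clamped to [0, 1] (clamping is assumed)."""
    c = 1.0 / (1.0 + (q2 - noise_q2) / (noise_q2 * (1.0 + noise_q2)))
    return min(max(c, 0.0), 1.0)

def anrad_coefficient(u, i, j, nlf, tiny=1e-12):
    """Diffusion coefficient at pixel (i, j), with Q_n^2 = nlf(u) / u^2."""
    mean, var = local_mean_var(u, i, j)
    q2 = var / max(mean ** 2, tiny)
    noise_q2 = max(nlf(u[i][j]) / max(u[i][j] ** 2, tiny), tiny)
    return diffusion_coefficient(q2, noise_q2)

# Flat patch versus a patch containing a vertical edge; constant toy NLF.
flat = [[0.5] * 5 for _ in range(5)]
edge = [[0.2, 0.2, 0.8, 0.8, 0.8] for _ in range(5)]
c_flat = anrad_coefficient(flat, 2, 2, lambda u: 0.0025)
c_edge = anrad_coefficient(edge, 2, 2, lambda u: 0.0025)
```

In the flat patch the local variation is below the noise level and diffusion proceeds at full strength, while at the edge the local variation dominates and the coefficient drops toward zero.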

Let \(F= c\cdot \nabla u\) be the diffusion flux and \(x=(x_{1},\ldots ,x_{N})\) be the current pixel in an N-dimensional space. The one-dimensional discrete implementation of the divergence term in Eq. (9) is given by:

where \(F_{x^{+}}= F(x+\frac{\text {d}x}{2},t)\) and \(F_{x^{-}}= F(x-\frac{\text {d}x}{2},t)\).

For an N-dimensional image, the divergence term is generalized as:

where F is expressed by the vector \((F_{x_{1}},\ldots ,F_{x_{N}})\).

The flow F is estimated between two neighboring pixels, and the following discretization of Eq. 13 can be written as:

with \(\ \ \forall \ i, \ dx_{i}=1, F_{x^{+}_{i}}= F_{x_{i}}(x+\frac{dx_{i}}{2},t)\) and \(F_{x^{-}_{i}}= F_{x_{i}}(x-\frac{dx_{i}}{2},t)\). The present work deals with two-dimensional (2D) images; thus, N is equal to 2.
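One explicit iteration of this flux-based discretization on a 2D grid can be sketched as follows. Two choices are assumptions not stated in the text: the flux between two neighboring pixels uses the average of their diffusion coefficients as the half-point value, and the image border is treated as a zero-flux boundary. The function name and the toy image are hypothetical.

```python
def diffusion_step(u, c, dt=0.2):
    """Return u + (dt / 4) * div(c * grad u) on a 2D grid with dx = 1."""
    rows, cols = len(u), len(u[0])
    out = [row[:] for row in u]
    for i in range(rows):
        for j in range(cols):
            div = 0.0
            for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                ni, nj = i + di, j + dj
                if 0 <= ni < rows and 0 <= nj < cols:
                    # Coefficient at the half-point between the two pixels.
                    c_half = 0.5 * (c[i][j] + c[ni][nj])
                    # Flux entering pixel (i, j) from this neighbor.
                    div += c_half * (u[ni][nj] - u[i][j])
            out[i][j] = u[i][j] + (dt / 4.0) * div  # |w| = 4 direct neighbors
    return out

# A single bright pixel diffusing with a uniform coefficient of 1.
spike = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
ones = [[1.0] * 3 for _ in range(3)]
after = diffusion_step(spike, ones)
```

Because every flux leaving one pixel enters its neighbor, the total intensity is conserved by the step; only its spatial distribution changes.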

Up to this point, the algorithm cleans up the image noise in the homogeneous areas, but it is not efficient near or on the edges (it does not enhance edges; it only inhibits smoothing near them). To further improve the robustness of the proposed algorithm, a diffusion matrix D is introduced in Eq. (9) instead of the scalar diffusion c. D is a symmetric positive definite matrix, which can be written in terms of its eigenvectors and eigenvalues. Therefore, the divergence term in Eq. (9) becomes:

Depending on the chosen eigenvalues and eigenvectors, different diffusion matrices can be obtained [60]. The diffusion matrix proposed by Weickert [59, 86] has the same eigenvectors as the structure tensor, with eigenvalues that are a function of the norm of the gradient. This allows the smoothing effect to be different along various directions. Focusing on edge-enhancing diffusion, the chosen eigenvectors are defined as follows [60, 87]:

where \(\nabla u_{\sigma }\) is the gradient of the regularized (or smoothed) version of the image using a Gaussian filter of a standard deviation \(\sigma\).
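A diffusion matrix with this kind of eigenstructure can be sketched as follows, assuming the usual edge-enhancing choice in which \(v_{1}\) is parallel to \(\nabla u_{\sigma }\) and \(v_{2}\) is perpendicular to it. The eigenvalues are passed in as plain numbers because their dependence on the gradient norm is not specified here. The function name and the fallback basis for a vanishing gradient are assumptions.

```python
import math

def diffusion_tensor(gx, gy, lam1, lam2, tiny=1e-12):
    """Build D = lam1 * v1 v1^T + lam2 * v2 v2^T from a smoothed gradient (gx, gy)."""
    norm = math.hypot(gx, gy)
    if norm < tiny:
        v1 = (1.0, 0.0)  # arbitrary basis where the gradient vanishes
    else:
        v1 = (gx / norm, gy / norm)  # across the edge
    v2 = (-v1[1], v1[0])  # along the edge
    d11 = lam1 * v1[0] ** 2 + lam2 * v2[0] ** 2
    d12 = lam1 * v1[0] * v1[1] + lam2 * v2[0] * v2[1]
    d22 = lam1 * v1[1] ** 2 + lam2 * v2[1] ** 2
    return v1, v2, ((d11, d12), (d12, d22))

# Weak diffusion across the edge (lam1) and full diffusion along it (lam2).
v1, v2, D = diffusion_tensor(3.0, 4.0, lam1=0.1, lam2=1.0)
```

With a small `lam1` and a large `lam2`, smoothing across the edge is inhibited while smoothing along it is kept, which is the directional behavior described above.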

Similarly, the diffusion flux \(F= D\cdot \nabla u\) can be decomposed as a sum of diffusions in each direction of the orthogonal basis B = \(\left( v_{1},v_{2}\right)\), and the divergence term becomes [60, 88]:

where \(\phi _{1}=c(\langle u(x)\rangle _{v_{1}}, \hbox {Var}(u(x))_{v_{1}})\cdot u_{v_{1}}\) is the diffusion function in a local isotropic neighborhood and \(\phi _{2}=c(\langle u(x)\rangle _{v_{2}}, \hbox {Var}(u(x))_{v_{2}} )\cdot u_{v_{2}}\) is the diffusion function in a local linear neighborhood oriented by the vectors \(v_{2}\). \(u_{v_{i}}=\triangledown u\cdot v_{i}, \hbox {Var}(u(x))_{v_{i}}\) and \(\langle u(x)\rangle _{v_{i}}\) are, respectively, the first order derivative, the local variance and the local mean of the intensity u at the current point x, estimated in a proposed direction \(v_{i}, i\in \left\{ 1,2\right\}\). In all the experiments, the local scalar mean and variance are used, respectively, as:

and

where p is a neighboring pixel, \(\eta _{x}=5\times 5\) is the neighborhood centered at the current pixel x, and \(\vert \eta _{x} \vert\) is the size of the neighborhood. Also, the linear neighborhood size is set to 7 [88] (see Fig. 9). Thus, the local linear mean and variance values are computed at the pixel x as follows:

and

4 Results

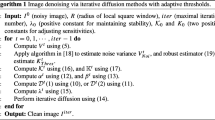

This paper aims to improve the quality of the image using an iterative anisotropic diffusion technique based on the noise level. The implementation of the filter has been done in MATLAB, on a personal computer with a 2.13-GHz Intel Core Duo processor and 4 GB of memory and has achieved a processing rate of 1.1615 s/iteration for a 321 \(\times\) 481 \(\times\) 3 image. In this section, the experiments have been performed on both synthetic and real medical images.

4.1 Noise estimation

Natural images from the Berkeley segmentation data set [89] are used, and synthetic mixed (additive and multiplicative) noise, according to the general noise model in Eq. 2, is generated to test the proposed algorithm.

To illustrate the noise model estimation, consider the scatter plots obtained for a test image with a large proportion of uniform areas (Fig. 10), corrupted by CCD noise (\(f(60) ,\, \sigma _{\rm c}= 0.06\) and \(\sigma _{\rm s}= 0.16\)). In Fig. 10, the red, green, and blue curves represent the estimated NLFs in each corresponding color band, whereas the ground truth NLF (in black) is produced using the training database in Sect. 3.1.2. Figure 10 shows that the noise level (or noise variance) depends on the mean local intensity and that there is a good agreement between the training data and the predictions of the model. It can be seen that the NLF curves lie just below the lower envelope of the samples (blue dots). To further verify the noise level estimation, similar experiments are carried out using additive noise. Three natural images with different color ranges and luminosity are selected and synthetically degraded by additive Gaussian noise with a 5 % noise level (Figs. 11, 12, 13). It is noted that the noise level is proportional to the intensity throughout the picture. The results presented in Figs. 12 and 13 indicate that the NLFs are well estimated even though the color distribution does not span the full intensity range, showing the ability of the method to extrapolate the NLF beyond the observed image intensities. From these experiments, it is noticeable that the noise level estimation process is reliable and that there is a very good agreement between the NLFs of the color components.

4.2 Filtering of synthetic images

To illustrate the ANRAD filtering behavior, several experiments have been carried out. First, the proposed algorithm is tested on pure additive noise and then on pure multiplicative noise, before its efficacy is tested on mixed signal-dependent noise [90]. To evaluate the numerical accuracy, two quality indexes are used: the signal-to-noise ratio (SNR) [91], for which a higher value indicates a better result, and the mean structural similarity index measure (MSSIM) [61]. The latter index measures the similarity between the noise-free image and the denoised one and takes values between 0 and 1.
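The two indexes can be sketched as below. The SNR uses one common definition in decibels, which is an assumption and may differ from the definition in [91]. The MSSIM is simplified to non-overlapping uniform windows without Gaussian weighting, with the usual stabilizing constants \(C_1=(0.01\,R)^2\) and \(C_2=(0.03\,R)^2\) for a dynamic range \(R\). The function names are hypothetical.

```python
import math


def snr_db(reference, estimate):
    """SNR in dB as 10*log10(signal energy / error energy)."""
    signal = sum(v * v for row in reference for v in row)
    error = sum((a - b) ** 2
                for ra, rb in zip(reference, estimate)
                for a, b in zip(ra, rb))
    return float("inf") if error == 0 else 10.0 * math.log10(signal / error)


def _ssim_window(x, y, dynamic_range):
    """SSIM of two flattened windows of equal length."""
    c1, c2 = (0.01 * dynamic_range) ** 2, (0.03 * dynamic_range) ** 2
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    return ((2 * mx * my + c1) * (2 * cov + c2)) / (
        (mx * mx + my * my + c1) * (vx + vy + c2))


def mssim(reference, estimate, window=8, dynamic_range=1.0):
    """Mean SSIM over non-overlapping windows (no Gaussian weighting)."""
    rows, cols = len(reference), len(reference[0])
    scores = []
    for r in range(0, rows - window + 1, window):
        for c in range(0, cols - window + 1, window):
            x = [reference[i][j] for i in range(r, r + window)
                 for j in range(c, c + window)]
            y = [estimate[i][j] for i in range(r, r + window)
                 for j in range(c, c + window)]
            scores.append(_ssim_window(x, y, dynamic_range))
    return sum(scores) / len(scores)
```

Both functions take the noise-free image as the first argument and the denoised image as the second, each given as rows of floats.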

4.2.1 Pure additive noise

In the first experiment, a synthetic image (Fig. 14a) is artificially corrupted with additive Gaussian noise with zero mean and a standard deviation of 0.1 (Fig. 14b). Figure 14 presents the filtering results of three anisotropic diffusion versions (Perona and Malik anisotropic diffusion (PMAD) [49], flux-based anisotropic diffusion (FBAD) [60], and detail preserving anisotropic diffusion (DPAD) [61]), the proposed filter (ANRAD), and the bilateral filter [21]. The parameters of each filter are given in Table 1, where the time step is denoted by \({\Delta } t\), the number of iterations by itr, the window size by \(\eta _{x}\), and Thres is a constant threshold on the norm of the image gradient. In the suggested algorithm, the time step is set to 0.2 and the denoising process runs adaptively for 100 iterations. As shown in Table 1, the ANRAD achieves the greatest SNR value (76.7737) and the highest MSSIM score (0.9831, close to 1), which means that the denoised image is close to the original one. Figure 14 also shows that the image recovered by the proposed method (Fig. 14g) has a better visual quality than the results of the other methods: it is smoother, the edges are well preserved, and the contrast is improved.
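The roles of the time step \({\Delta } t\) and the number of iterations can be seen in a generic explicit diffusion scheme. The sketch below is a plain Perona and Malik type update with four neighbors, not the ANRAD itself. The parameter `kappa` is a hypothetical gradient threshold whose role is comparable to that of Thres, and the exponential edge-stopping function is one of the standard choices.

```python
import math


def diffuse(image, dt=0.2, iterations=100, kappa=0.1):
    """Explicit Perona-Malik-type diffusion, 4 neighbors, replicated borders."""
    rows, cols = len(image), len(image[0])
    current = [row[:] for row in image]
    for _ in range(iterations):
        updated = [row[:] for row in current]
        for i in range(rows):
            for j in range(cols):
                center = current[i][j]
                flux = 0.0
                for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                    ni = min(rows - 1, max(0, i + di))
                    nj = min(cols - 1, max(0, j + dj))
                    grad = current[ni][nj] - center
                    # Edge-stopping weight: near 1 for small differences,
                    # near 0 across strong edges.
                    flux += math.exp(-(grad / kappa) ** 2) * grad
                updated[i][j] = center + dt * flux
        current = updated
    return current
```

With four neighbors, this explicit update is stable for \({\Delta } t \le 0.25\), which is consistent with the value 0.2 used here. A smaller time step needs proportionally more iterations to reach the same amount of smoothing.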

4.2.2 Pure multiplicative noise

To further evaluate the efficiency of the proposed algorithm, simulation studies have been carried out using a synthetic image downloaded from [89] and synthetically corrupted with pure multiplicative noise (see Fig. 15). In this case, the noise level is 10 %. In Fig. 15, the estimated NLFs lie just below the lower envelope of the distribution samples, and the color bands agree very well. Figure 15a shows the noisy image, whereas Fig. 15b–d show the images processed by the SRAD, the DPAD, and the ANRAD filters, respectively. In the SRAD method, the time step is set to 0.02 and the denoising process runs adaptively for 200 iterations. In the DPAD method, the time step is set to 0.02 and the smoothing process runs adaptively for 200 iterations. In the suggested algorithm, the time step is set to 0.2 and the denoising process runs adaptively for 150 iterations. The performance of the experiments, in terms of SNR and MSSIM, is listed in Table 2. Comparing the denoising results (Fig. 15b–d), the three methods eliminate pure multiplicative noise in most homogeneous regions. Table 2 shows quantitatively that the DPAD filter gives a very good restoration result compared to the others, with better SNR and MSSIM values. A justification of this behavior is that the DPAD filter uses the mode of the distribution of all the coefficients of variation (CVs) of the noise over the whole image as an estimator of the NLF value, which is the best among the estimators for this kind of noise. Also, as noticed before, computing the local coefficients of variation over a \(5\times 5\) neighborhood in the DPAD filter, instead of the four direct neighbors used by the original SRAD, is more accurate and thus yields better results.
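The local coefficient of variation and its use in a speckle-type diffusion coefficient can be sketched as follows. The window statistic corresponds to the \(5\times 5\) neighborhood mentioned above when `radius=2`. The coefficient has the form given by Yu and Acton for the SRAD, where `q0_2` stands for the squared coefficient of variation of the noise. The function names are hypothetical, and the sketch does not reproduce the mode-based estimator of the DPAD.

```python
def local_cv_squared(image, i, j, radius=2):
    """Squared coefficient of variation (variance / mean^2) around pixel (i, j).

    The window is (2*radius + 1) pixels wide and is clipped at the borders.
    """
    rows, cols = len(image), len(image[0])
    values = [image[r][c]
              for r in range(max(0, i - radius), min(rows, i + radius + 1))
              for c in range(max(0, j - radius), min(cols, j + radius + 1))]
    m = sum(values) / len(values)
    var = sum((v - m) ** 2 for v in values) / len(values)
    return var / (m * m) if m != 0 else 0.0


def srad_coefficient(q2, q0_2):
    """SRAD-type diffusion coefficient.

    Close to 1 when the local statistic q2 matches the noise level q0_2
    (homogeneous region), and small when q2 is much larger (edge).
    """
    return 1.0 / (1.0 + (q2 - q0_2) / (q0_2 * (1.0 + q0_2)))
```

A larger window gives a less noisy estimate of the local statistics at the cost of mixing pixels from both sides of an edge, which is the trade-off behind the window-size parameter.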

4.2.3 Mixed signal-dependent noise

It is interesting at this stage to denoise images corrupted with mixed color signal-dependent noise according to the general noise model in Eq. 2. Some color test images, namely Peppers, Starfish, and Firefighters, have been used as ground truth and artificially corrupted by mixed color signal-dependent noise with the following parameters: (\(f(30), \sigma _{\rm c}=0.04, \sigma _{\rm s}=0.10\)), (\(f(30), \sigma _{\rm c}=0.06, \sigma _{\rm s}=0.16\)), and (\(f(60), \sigma _{\rm c}=0.06, \sigma _{\rm s}=0.16\)), shown in Figs. 16, 18, and 20, respectively. Figure 16c shows the noise variance maps of the red, green, and blue channels of Fig. 16a. The noise variance varies locally in the image and also depends on the color component. These experiments confirm that noise in photographic images is not white and is signal dependent. The proposed filter uses the noise variance maps as a common diffusion-controlling term for filtering a color image, which implies a more effective denoising than the traditional SRAD filter. In other words, the amount of noise reduction is not uniform over the whole image, and the denoising is therefore adaptive. The noisy images shown in Figs. 16, 18, and 20 are filtered using the ANRAD, the SRAD, and the DPAD methods, and the results are presented in Figs. 17, 19, and 20. The performance of the experiments, in terms of SNR, is presented in Table 3. As indicated in Figs. 17, 19, and 20, the suggested method achieves better noise removal in most homogeneous regions and better structure preservation than the SRAD and DPAD methods. Figure 17 shows the image denoised with the proposed method, the DPAD, and the SRAD. The ANRAD reduces color noise, improves the image quality, and better preserves the image structure. Based on the SNR values in Table 3, the proposed method also performs better than the other two methods.
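A noise variance map of the kind described above can be obtained by evaluating the estimated NLF at every pixel. The sketch below assumes that the NLF of one channel is available as sorted (intensity, standard deviation) samples and that it is evaluated at the pixel intensity. A practical implementation would more likely use a locally smoothed intensity. The names `nlf_lookup` and `noise_variance_map` are hypothetical.

```python
from bisect import bisect_right


def nlf_lookup(intensity, knots):
    """Piecewise-linear interpolation of a sampled NLF.

    `knots` is a list of (intensity, sigma) pairs sorted by intensity.
    Values outside the sampled range take the nearest end value.
    """
    xs = [x for x, _ in knots]
    if intensity <= xs[0]:
        return knots[0][1]
    if intensity >= xs[-1]:
        return knots[-1][1]
    k = bisect_right(xs, intensity)
    (x0, s0), (x1, s1) = knots[k - 1], knots[k]
    return s0 + (s1 - s0) * (intensity - x0) / (x1 - x0)


def noise_variance_map(channel, knots):
    """Per-pixel noise variance of one color channel from its NLF."""
    return [[nlf_lookup(v, knots) ** 2 for v in row] for row in channel]
```

Calling `noise_variance_map` once per channel with the corresponding NLF gives three maps, one each for red, green, and blue.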

Based on the experiments in Fig. 20, some observations can be drawn. The ANRAD filter effectively improves the quality of the noisy image, enhances edges better, and preserves more details than the other filters. In contrast, the DPAD filter blurs the image and causes a loss of information regarding the fine structures and edges of the image.

Result of our filter on loaded image from Berkeley database. The first row original image; image corrupted with color noise (\(f(30), \sigma _{\rm c}=0.04\), and \(\sigma _{\rm s}=0.10\)), result of our filter (SNR \(=\) 48.1295, iter \(=\) 30), DPAD filter (SNR \(=\) 38.6194, iter \(=\) 300), and SRAD filter (SNR \(=\) 36.7871, iter \(=\) 150). Second row corresponding zoomed images

Result of our filter on loaded image from Berkeley database (12003.jpg). The first row original image; image corrupted with color noise (\(f(30), \sigma _{\rm c}=0.06\), and \(\sigma _{\rm s}=0.16\)), result of: ANRAD (SNR \(=\) 48.3407, iter \(=\) 40); DPAD (SNR \(=\) 41.1171, iter \(=\) 400) and SRAD (SNR \(=\) 39.5224, iter \(=\) 600). Second row corresponding zoomed images

Denoising experimental results. From left to right: image corrupted by color noise (\(f(60), \sigma _{\rm c}=0.06\), and \(\sigma _{\rm s}=0.16\)); noisy sub-image; sub-image results obtained by SRAD (SNR \(=\) 31.8152, iter \(=\) 250), DPAD (SNR \(=\) 39.4481, iter \(=\) 600), and ANRAD (SNR \(=\) 40.8260, iter \(=\) 60)

To further show the effectiveness of the proposed method, it is compared with the denoising method in [92], called the fast non-local means (FNLM), which outperforms the methods in [93–95] and can be considered as the state of the art in image denoising. Synthesized noise with the parameters \(f(60) , \sigma _{\rm c}=0.01\) and \(\sigma _{\rm s}=0.02\) is added to the Lena test image in Fig. 22a (size, 512 \(\times\) 512). The noisy image is filtered using the FNLM with a fixed NLF as an input value (equal to 0.07, see Table 4), whereas the ANRAD uses three estimated NLFs. Figure 21 shows the estimated NLFs for each component (red, green, and blue). The simulation results of both filters are shown in Fig. 22. Zoomed restored images are displayed in Figs. 23, 24, and 25 to show more details of small objects after denoising. The proposed method preserves image structures much better than the FNLM filter: the images are well denoised, and the edges and fine details are preserved. For instance, in Fig. 24, the line patterns on the hat of Lena are prominent and have sharp edges in the ANRAD restored image compared to the FNLM restored one. The ANRAD result also appears to have a better visual quality. The quantitative results are shown in Table 4. They show a significant rise in the SNR value for the proposed algorithm (SNR \(=\) 66.3848) in comparison with the FNLM filter (SNR \(=\) 63.6967).

4.2.4 Real image results

For decades, automatic methods for extracting and measuring the vessels in retinal images have been required to reduce the workload of ophthalmologists and to assist in characterizing the detected lesions and identifying false positives [87, 96–101]. However, raw images are not suitable for detecting the vessels of the vascular network automatically. Therefore, image pre-processing is required before any further treatment. To reduce image noise, most algorithms assume the noise to be additive, uniform, and independent of the RGB image data [102–108]. Consequently, these approaches cannot effectively recover the “true” signal (or its best approximation) from the noisy acquired observations. This section focuses on improving the visual quality of retinal images using the ANRAD algorithm in order to facilitate later analysis. A real retinal image of a human is shown in Fig. 27a. The data have been downloaded from a publicly available database, the STARE Project database [109]. The image was acquired using a Topcon TRV-50 fundus camera at a 35° field of view (FOV) and digitized with a 24-bit gray-scale resolution and a size of 700 \(\times\) 605 pixels. The green channel is considered as the natural basis for vessel segmentation because it normally presents a higher contrast between the vessels and the retinal background (Fig. 26a) [110–116]. Figure 26c–f shows the zoomed smoothed images processed by the four filters (the proposed filter, the PMAD, the SRAD, and the DPAD) tested on the gray scale of a real picture (im0077) taken from the STARE Project database. Figure 27 presents the estimated noise models for the three channels (red, green, and blue). The figure shows that the estimated curves of the noise function lie just below the lower envelope of the samples. Some other NLFs are displayed in Figs. 28, 29, 30, and 31.
According to these results, the retinal fundus images do not contain uniform, white, and additive noise: each estimated NLF curve is a nonlinear function describing the noise variance as a function of local intensity throughout the image, and it differs from one color channel to another. In the results shown in Fig. 26c, e, f, the fine vessel at the bottom-right of the image in Fig. 26b appears markedly degraded or lost. In contrast, Fig. 26d shows that the proposed method is much better able to smooth flat regions and to keep thin vessels than the other methods. The major region boundaries are preserved, especially by the ANRAD technique. Although this result is purely qualitative, it shows promise for the ANRAD as a general-purpose CCD noise-reducing filter for retinal images.

a Real noisy retinal image (green channel); b sub-image of original image (a); c PMAD diffusion result (Thres \(=\) 15, iter \(=\) 30, \({\Delta }t=0.05\)); d ANRAD result (iter \(=\) 30, \({\Delta }t=0.2\)); e SRAD (iter \(=\) 50, \({\Delta }t=0.2\)); f DPAD (iter \(=\) 50, \({\Delta }t=0.2\)) (color figure online)

5 Advantages and limitations

The SRAD method was developed to remove speckle noise, a form of multiplicative noise, from imagery by using a noise variance as an input value, which is usually estimated from a selected region of background pixels. The SRAD assumes the noise to be multiplicative, uniform, and uncolored, an assumption that simplifies image filtering. The proposed denoising method is developed for color signal-dependent noise and uses a general NLF as an input value. The estimated noise level is a continuous function describing the noise variance as a function of local intensity throughout the image. Consequently, the current denoising algorithm is truly automatic and can effectively remove various types of image noise. Because the SRAD filtering near an edge is very weak, the noise reduction near the edge is also small. The ANRAD allows the filtering to differ along the directions defined by the gradient and its orthogonal. Thus, filtering across an edge can be prevented while filtering along the edge is permitted, which prevents the edge from being smoothed and removed during denoising. As shown in Sect. 4, the experiments give both quantitatively convincing and visually pleasing results. Some test images are shown with zoomed zones to validate the efficiency of this approach.

The proposed filter shows a very good behavior in both edge preservation and noise cleaning. However, it has some limitations. The approach does not work well for images with textures, especially those with great variability, because textures usually contain high frequencies and greatly affect the estimated noise variance. The size and shape of the window used to compute the coefficients of variation may affect the quality of the processed images, for example by eliminating some details of the original image and blurring it slightly. A more significant limitation is the computation time: the additional, expensive noise estimation step makes the method slower than the DPAD and SRAD algorithms.

6 Conclusion

In this paper, a new version of the SRAD technique, named the ANRAD, has been developed to remove various types of color image noise produced by today’s CCD digital cameras. Unlike the SRAD technique, which processes a known type of noise under some assumptions, such as a linear system and uncorrelated, white, and purely multiplicative noise, the proposed technique processes the data adaptively with an instantaneous RGB noise level. The adopted noise level is a nonlinear function of the image intensity that depends on external parameters related to the image acquisition system. In all our experiments, the proposed ANRAD method has performed better than the conventional SRAD technique in terms of smoothing uniform regions and preserving edges and features. Its performance is directly related to the quality of the employed noise estimation process. Combining a good dynamic estimator with the iterative process of the ANRAD gives a very good noise-cleaning performance. This new technique shows the importance of a careful selection of the noise estimator in the SRAD method. The method presented in this study has several possible applications. Future work will focus on generalizing the filter to 3D images and on improving the performance of 2D and 3D segmentation approaches for the reconstruction of image regions.

References

Elad M (2010) Sparse and redundant representations: from theory to applications in signal and image processing. Springer Science & Business Media, New York, p 376

Sendur L, Selesnick IW (2002) Bivariate shrinkage with local variance estimation. IEEE Signal Process Lett 9(12):438–441. doi:10.1109/LSP.2002.806054

Donoho DL (1995) De-noising by soft-thresholding. IEEE Trans Inf Theory 41(3):613–627. doi:10.1109/18.382009

Mallat SG (1999) A wavelet tour of signal processing. Elsevier, USA

Rank K, Lendl M, Unbehauen R (1999) Estimation of image noise variance. IEE Proc Vis Image Signal Process 146(2):80–84. doi:10.1049/ip-vis:19990238

Amer A, Mitiche A, Dubois E (2002) Reliable and fast structure-oriented video noise estimation. In: International conference on image processing (ICIP 2002), IEEE. Rochester, New York, pp 840–843. 22–25 Sept. doi:10.1109/TCSVT.2004.837017

Tai SC, Yang SM (2008) A fast method for image noise estimation using laplacian operator and adaptive edge detection. In: 3rd international symposium on communications, control and signal processing, ISCCSP 2008. Malta, pp 1077–1081. 12–14 March. doi:10.1109/ISCCSP.2008.4537384

Uss ML, Vozel B, Lukin VV, Chehdi K (2011) Local signal-dependent noise variance estimation from hyperspectral textural images. IEEE J Sel Top Signal Process 5(3):469–486. doi:10.1109/JSTSP.2010.2104312

Foi A (2009) Clipped noisy images: heteroskedastic modeling and practical denoising. Signal Process 89(12):2609–2629. doi:10.1016/j.sigpro.2009.04.035

Liu X, Tanaka M, Okutomi M (2013) Estimation of signal dependent noise parameters from a single image. In: Proceedings of the 20th IEEE international conference on image processing (ICIP), 2013. Melbourne, VIC, pp 79–82. 15–18 Sept 2013. doi:10.1109/ICIP.2013.6738017

Yu Y, Acton ST (2002) Speckle reducing anisotropic diffusion. IEEE Trans Image Process 11(11):1260–1270. doi:10.1109/TIP.2002.804276

Abramov S, Zabrodina V, Lukin V, Vozel B, Chehdi K, Astola J (2010) Improved method for blind estimation of the variance of mixed noise using weighted LMS line fitting algorithm. In: Proceedings of 2010 IEEE international symposium on circuits and systems (ISCAS). Paris, pp 2642–2645. 30 May–2 June 2010. doi:10.1109/ISCAS.2010.5537084

Aiazzi B, Alparone L, Barducci A, Baronti S, Marcoionni P, Pippi I, Selva M (2006) Noise modelling and estimation of hyperspectral data from airborne imaging spectrometers. Ann Geophys 49(1):1–9. doi:10.4401/ag-3141

Lebrun M, Colom M, Morel J (2014) The noise clinic: A universal blind denoising algorithm. In: 2014 IEEE international conference on image processing (ICIP). Paris, pp 2674–2678. 27–30 Oct 2014. doi:10.1109/ICIP.2014.7025541

Liu C, Szeliski R, Kang SB, Zitnick CL, Freeman WT (2008) Automatic estimation and removal of noise from a single image. IEEE Trans Pattern Anal Mach Intell 30(2):299–314. doi:10.1109/TPAMI.2007.1176

Tomasi C, Manduchi R (1998) Bilateral filtering for gray and color images. In: Proceedings of the sixth international conference on computer vision. Bombay, pp 839–846. 4–7 Jan 1998. doi:10.1109/ICCV.1998.710815

Pham TQ, van Vliet LJ (2005) Separable bilateral filtering for fast video preprocessing. In: International conference on multimedia computing and systems/international conference on multimedia and expo-ICME(ICMCS). New York, IEEE Press, pp 454–457. 6–8 July 2005. doi:10.1109/ICME.2005.1521458

Weiss B (2006) Fast median and bilateral filtering. ACM Trans Graph 25(3):519–526. doi:10.1145/1179352.1141918

Paris S, Durand F (2009) A fast approximation of the bilateral filter using a signal processing approach. Int J Comput Vis 81(1):24–52. doi:10.1007/s11263-007-0110-8

Durand F, Dorsey J (2002) Fast bilateral filtering for the display of high-dynamic-range images. ACM Trans Graph TOG 21(3):257–266. doi:10.1145/566654.566574

Elad M (2002) On the origin of the bilateral filter and ways to improve it. IEEE Trans Image Process 11(10):1141–1151. doi:10.1109/TIP.2002.801126

Barash D (2002) A fundamental relationship between bilateral filtering, adaptive smoothing and the nonlinear diffusion equation. IEEE Trans Pattern Anal Mach Intell 24(6):844–847. doi:10.1109/TPAMI.2002.1008390

Zhang B, Allebach JP (2008) Adaptive bilateral filter for sharpness enhancement and noise removal. IEEE Trans Image Process 17(5):664–678. doi:10.1109/TIP.2008.919949

Kim S, Allebach JP (2005) Optimal unsharp mask for image sharpening and noise removal. J Electron Imaging 14(2):0230071. doi:10.1117/12.538366

Hu H, de Haan G (2007) Trained bilateral filters and applications to coding artifacts reduction. In: IEEE international conference on image processing, 2007. ICIP 2007. San Antonio, TX, pp 325–328. 16 Sept–19 Oct. doi:10.1109/ICIP.2007.4378957

Yang Q (2015) Recursive approximation of the bilateral filter. IEEE Trans Image Process 24(6):1919–1927. doi:10.1109/TIP.2015.2403238

Mayer MA, Borsdorf A, Wagner M, Hornegger J, Mardin CY, Tornow RP (2012) Wavelet denoising of multiframe optical coherence tomography data. Biomed Opt Express 3(3):572–589. doi:10.1364/BOE.3.000572

Du Y, Liu G, Feng G, Chen Z (2014) Speckle reduction in optical coherence tomography images based on wave atoms. J Biomed Opt 19(5):056009. doi:10.1117/1.JBO.19.5.056009

Barthel KU, Cycon HL, Marpe D (2003) Image denoising using fractal and wavelet-based methods. Proc SPIE 5266:1018

Chowdhury MMH, Khatun A (2012) Image compression using discrete wavelet transform. IJCSI Int J Comput Sci issues 9(4):1694–1814

Boopathi G (2011) Image compression: an approach using wavelet transform and modified FCM. Int J Comput Appl 28(2):7–12. doi:10.5120/3363-4643

Kamrul HT, Koichi H (2007) Haar wavelet based approach for image compression and quality assessment of compressed image. IAENG Int J Appl Math 36(1):1–8

Sateesh Kumar HC, Raja KB, Venugopal KR, Patnaik LM (2009) Automatic image segmentation using wavelets. IJCSNS Int J Comput Sci Netw Secur 9(2):305–313

Lee J, Kim Y, Park C, Park C, Paik J (2006) Robust feature detection using 2D wavelet transform under low light environment. In: Intelligent computing in signal processing and pattern recognition lecture notes in control and information sciences, vol 345, pp 1042–1050. doi:10.1007/978-3-540-37258-5_134

Ma X, Peyton AJ (2010) Feature detection and monitoring of eddy current imaging data by means of wavelet based singularity analysis. NDT & E Int 43(8):687–694. doi:10.1016/j.ndteint.2010.07.006

Habib W, Siddiqui AM, Touqir I (2013) Wavelet based despeckling of multiframe optical coherence tomography data using similarity measure and anisotropic diffusion filtering. In: 2013 IEEE international conference on bioinformatics and biomedicine (BIBM). Shanghai, pp 330–333. 18–21 Dec. doi:10.1109/BIBM.2013.6732512

Witkin A (1983) Scale space filtering. In: Proceedings of the 8th international joint conference on artificial intelligence. Karlsruhe, pp 1019–1022. August 1983

Mallat S, Hwang WL (1992) Singularity detection and processing with wavelets. IEEE Trans Inf Theory 38(2):617–643. doi:10.1109/18.119727

Yansun X, John BW, Dennis MH Jr, Jian L (1994) Wavelet transform domain filters: a spatially selective noise filtration technique. IEEE Trans Image Process 3(6):747–758. doi:10.1109/83.336245

Donoho D (1995) Adapting to unknown smoothness via wavelet shrinkage. J Am Stat Assoc 90(432):1200–1224. doi:10.1080/01621459.1995.10476626

Donoho D (1995b) De-noising by soft-thresholding. IEEE Trans Inf Theory 41(3):613–627. doi:10.1109/18.382009

Chang SG, Yu B, Vetterli M (2000) Spatially adaptive wavelet thresholding with context modeling for image denoising. IEEE Trans Image Process 9(9):1522–1531. doi:10.1109/83.862630

Li H, Wang S (2009) A new image denoising method using wavelet transform. In: International forum on information technology and applications, 2009. IFITA ’09. In Chengdu, pp 111–114. 15–17 May. doi:10.1109/IFITA.2009.47

Buades A, Coll B, Morel JM (2005) A non-local algorithm for image denoising. In: IEEE computer society conference on computer vision and pattern recognition, 2005. CVPR 2005, vol 2. San Diego, CA, pp 60–65. 20–25 June. doi:10.1109/CVPR.2005.38

Dauwe A, Goossens B, Luong H, Philips W (2008) A fast non-local image denoising algorithm. In: Proceedings of SPIE electronic imaging. San Diego, CA, pp 681210–681210. 16–21 Feb. doi:10.1117/12.765505

Deledalle C, Denis L, Poggi G, Tupin F, Verdoliva L (2014) Exploiting patch similarity for SAR image processing: the nonlocal paradigm. IEEE Signal Process Mag 31(4):69–78. doi:10.1109/MSP.2014.2311305

Tolga T (2009) Principal neighborhood dictionaries for nonlocal means image denoising. IEEE Trans Image Process 18(12):2649–2660. doi:10.1109/TIP.2009.2028259

Wu K, Zhang X, Ding M (2013) Curvelet based nonlocal means algorithm for image denoising. Int J Electron Commun 68(1):37–43. doi:10.1016/j.aeue.2013.07.011

Perona P, Malik J (1990) Scale-space and edge detection using anisotropic diffusion. IEEE Trans Pattern Anal Mach Intell 12(7):629–639. doi:10.1109/34.56205

Samson C, Blanc-Féraud L, Aubert G, Zerubia J (2000) A variational model for image classification and restoration. IEEE Trans Pattern Anal Mach Intell 22(5):460–472. doi:10.1109/34.857003

Grazzini J, Turiel A, Yahia H (2005) Presegmentation of high-resolution satellite images with a multifractal reconstruction scheme based on an entropy criterium. In: IEEE international conference on image processing, 2005. ICIP 2005. Italy, pp I-649–652. 11–14 Sept. doi:10.1109/ICIP.2005.1529834

Blanc-Féraud L, Barlaud M (1996) Edge preserving restoration of astrophysical images. Vistas Astron 40(4):531–538. doi:10.1016/S0083-6656(96)00038-4

Chao SM, Tsai DM (2006) Astronomical image restoration using an improved anisotropic diffusion. Pattern Recognit Lett 27(5):335–344. doi:10.1016/j.patrec.2005.08.021

Bao P, Zhang D (2003) Noise reduction for magnetic resonance images via adaptive multiscale products thresholding. IEEE Trans Med Imaging 22(9):1089–1099. doi:10.1109/TMI.2003.816958

Villain N, Goussard Y, Idier J, Allain M (2003) Three-dimensional edge-preserving image enhancement for computed tomography. IEEE Trans Med Imaging 22(10):1275–1287. doi:10.1109/TMI.2003.817767

Hsiao IT, Rangarajan A, Gindi G (2003) A new convex edge-preserving median prior with applications to tomography. IEEE Trans Med Imaging 22(5):580–585. doi:10.1109/TMI.2003.812249

Almansa A, Lindeberg T (2000) Fingerprint enhancement by shape adaptation of scale-space operators with automatic scale selection. IEEE Trans Image Process 9(12):2027–2042. doi:10.1109/83.887971

Meihua X, Zhengming W (2004) Fingerprint enhancement based on edge-directed diffusion. In: International conference on image and graphics—ICIG, IEEE computer society 2004. Hong Kong, pp 274–277. 18–20 Dec. doi:10.1109/ICIG.2004.68

Weickert J (1994) Scale-space properties of nonlinear diffusion filtering with a diffusion tensor. Citeseer report 110, University of Kaiserslautern, P.O. Box 3049, 67653 Kaiserslautern

Krissian K (2002) Flux-based anisotropic diffusion applied to enhancement of 3-D angiogram. IEEE Trans Med Imaging 21(11):1440–1442. doi:10.1109/TMI.2002.806403

Aja-Fernández S, Alberola-López C (2006) On the estimation of the coefficient of variation for anisotropic diffusion speckle filtering. IEEE Trans Image Process 15(9):2694–2701. doi:10.1109/TIP.2006.877360

Aja-Fernández S, Vegas-Sánchez-Ferrero G, Martín-Fernández M, Alberola-López C (2009) Automatic noise estimation in images using local statistics. Additive and multiplicative cases. Image Vis Comput 27(6):756–770. doi:10.1016/j.imavis.2008.08.002

Chen Q, Montesinos P, Sun QS, Xia DS (2010) Ramp preserving Perona–Malik model. Signal Process 90(6):1963–1975. doi:10.1016/j.sigpro.2009.12.015

Mittal D, Kumar V, Saxena SC, Khandelwal N, Kalra N (2010) Enhancement of the ultrasound images by modified anisotropic diffusion method. Med Biol Eng Comput 48(12):1281–1291. doi:10.1007/s11517-010-0650-x

Yu J, Tan J, Wang Y (2010) Ultrasound speckle reduction by a SUSAN-controlled anisotropic diffusion method. Pattern Recognit 43(9):3083–3092. doi:10.1016/j.patcog.2010.04.006

Bai J, Feng XC (2007) Fractional-order anisotropic diffusion for image denoising. IEEE Trans Image Process 16(10):2492–2502. doi:10.1109/TIP.2007.904971

Xie MH, Wang ZM (2006) Edge-directed enhancing based anisotropic diffusion. Chin J Electron 34(1):59–64

Liu X, Liu J, Xu X, Chun L, Tang J, Deng Y (2011) A robust detail preserving anisotropic diffusion for speckle reduction in ultrasound images. BMC Genomics 12(Suppl 5):1–10. doi:10.1186/1471-2164-12-S5-S14

Tsiotsios C, Petrou M (2012) On the choice of the parameters for anisotropic diffusion in image processing. Pattern Recognit 46(5):1369–1381. doi:10.1016/j.patcog.2012.11.012

Gilboa G, Sochen N, Zeevi YY (2006) Estimation of optimal PDE-based denoising in the SNR sense. IEEE Trans Image Process 15(8):2269–2280. doi:10.1109/TIP.2006.875248

Papandreou G, Maragos P (2005) A cross-validatory statistical approach to scale selection for image denoising by non linear diffusion. In: IEEE conference on computer vision and pattern recognition. San Diego, CA, pp 625–630. 20–25 June. doi:10.1109/CVPR.2005.21

Cohen E, Cohen LD, Zeevi YY (2014) Texture enhancement using diffusion process with potential. In: 2014 IEEE 28th convention of electrical and electronics engineers in Israel (IEEEI). Eilat, pp 1–5. 3–5 Dec. doi:10.1109/EEEI.2014.7005778

Healey GE, Kondepudy R (1994) Radiometric CCD camera calibration and noise estimation. IEEE Trans Pattern Anal Mach Intell 16(3):267–276. doi:10.1109/34.276126

Irie K, McKinnon AE, Unsworth K, Woodhead IM (2008) A model for measurement of noise in CCD digital-video cameras. Meas Sci Technol 19(4):045207–045211. doi:10.1088/0957-0233/19/4/045207

Grossberg MD, Nayar SK (2003) What is the space of camera response functions. In: Proceedings 2003 IEEE computer society conference on computer vision and pattern recognition, 2003, pp 602–609. 18–20 June 2003. doi:10.1109/CVPR.2003.1211522

Mitsunaga T, Nayar SK (1999) Radiometric self calibration. In: Proceedings IEEE conference on computer vision and pattern recognition CVPR’99. Fort Collins, CO, pp 374–380. 23–25 June 1999. doi:10.1109/CVPR.1999.786966

Ortiz A, Oliver G (2004) Radiometric calibration of CCD sensors: dark current and fixed pattern noise estimation. In: International conference on robotics and automation, ICRA, 2004. New Orleans, pp 4730–4735. 26 April–1 May 2004. doi:10.1109/ROBOT.2004.1302465

Adams Jr, James E (1997) Design of practical color filter array interpolation algorithms for digital cameras. In: Electronic imaging’97. San Jose, CA, pp 117–125. 8–14 Feb 1997. doi:10.1117/12.270338

Takamatsu J, Matsushita Y, Ogasawara T, Ikeuchi K (2010) Estimating demosaicing algorithms using image noise variance. In: 2010 IEEE conference on computer vision and pattern recognition (CVPR). pp 279–286. 13–18 June. doi:10.1109/CVPR.2010.5540200

Ramanath R, Snyder WE, Bilbro GL, Sander WA (2002) Demosaicking methods for Bayer color arrays. J Electron imaging 11(3):306–315. doi:10.1117/1.1484495

Gravel P, Beaudoin G, De Guise JA (2004) A method for modeling noise in medical images. IEEE Trans Med Imaging 23(10):1221–1232. doi:10.1109/TMI.2004.832656

Nayar SK (2001) The CAVE databases. Columbia University. www.cs.columbia.edu/CAVE

Liu X, Tanaka M, Okutomi M (2012) Noise level estimation using weak textured patches of a single noisy image. In: Proceedings of the 19th IEEE international conference on image processing (ICIP), 2012. Orlando, FL, pp 665–668. 30 Sept–3 Oct. doi:10.1109/ICIP.2012.6466947

Arthur D, Vassilvitskii S (2007) k-means++: The advantages of careful seeding. In: Proceedings of the eighteenth annual ACM-SIAM symposium on discrete algorithms. New Orleans, pp 1027–1035. 7–9 Jan. doi:10.1145/1283383.1283494

Abramov S, Zabrodina V, Lukin V, Vozel B, Chehdi K, Astola J (2011) Methods for blind estimation of the variance of mixed noise and their performance analysis. In: Awrejcewicz J (ed) Numerical analysis-theory and applications. InTech, Poland, pp 49–70. doi:10.5772/24596

Cottet GH, Germain L (1993) Image processing through reaction combined with nonlinear diffusion. Math Comput 61(204):659–673. doi:10.1090/S0025-5718-1993-1195422-2

Ben Abdallah M, Malek J, Azar AT, Montesinos P, Belmabrouk H, Esclarin Monreal J, Krissian K (2015a) Automatic extraction of blood vessels in the retinal vascular tree using multiscale medialness. Int J Biomed Imaging 2015, Article ID 519024, 16 pages. doi:10.1155/2015/519024

Krissian K, Aja-Fernández S (2009) Noise-driven anisotropic diffusion filtering of MRI. IEEE Trans Image Process 18(10):2265–2274. doi:10.1109/TIP.2009.2025553

Martin D, Fowlkes C, Tal D, Malik J (2001) A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In: Proceedings eighth IEEE international conference on computer vision, 2001. ICCV 2001. Vancouver, BC, pp 416–423. 07–14 July. doi:10.1109/ICCV.2001.937655

Yang SM, Tai SC (2010) Fast and reliable image-noise estimation using a hybrid approach. J Electron Imaging 19(3):033007-1–033007-15. doi:10.1117/1.3476329

Martens JB, Meesters L (1998) Image dissimilarity. Signal Process 70(3):155–176. doi:10.1016/S0165-1684(98)00123-6

Darbon J, Cunha A, Chan TF, Osher S, Jensen GJ (2008) Fast nonlocal filtering applied to electron cryomicroscopy. In: Proceedings of the 5th IEEE international symposium on biomedical imaging: from nano to macro, 2008. ISBI 2008. Paris, pp 1331–1334, 14–17 May. doi:10.1109/ISBI.2008.4541250

Buades A, Coll B, Morel J-M (2005) A non-local algorithm for image denoising. In: IEEE computer society conference on computer vision and pattern recognition, 2005. CVPR 2005, vol 2. San Diego, pp 60–65. 20–25 June. doi:10.1109/CVPR.2005.38

Mahmoudi M, Sapiro G (2005) Fast image and video denoising via nonlocal means of similar neighborhoods. IEEE Signal Process Lett 12(12):839–842. doi:10.1109/LSP.2005.859509

Coupé P, Yger P, Barillot C (2006) Fast non local means denoising for 3D MR images. In: Proceedings of the 9th international conference, medical image computing and computer-assisted intervention-MICCAI 2006. Springer, Copenhagen, pp 33–40. 1–6 Oct. doi:10.1007/11866763_5

Malek J, Azar AT, Nasralli B, Tekari M, Kamoun H, Tourki R (2015) Computational analysis of blood flow in the retinal arteries and veins using fundus image. Comput Math Appl 69(2):101–116

Asad AH, Azar AT, Hassanien AE (2013a) Ant colony-based system for retinal blood vessels segmentation. In: Proceedings of seventh international conference on bio-inspired computing: theories and applications (BIC-TA 2012). Advances in intelligent systems and computing, vol 201, pp 441–452. doi:10.1007/978-81-322-1038-2_37

Asad AH, Azar AT, Hassanien AE (2013b) An improved ant colony system for retinal vessel segmentation. In: 2013 federated conference on computer science and information systems (FedCSIS). Kraków. 8–11 Sept 2013

Asad AH, Azar AT, Hassanien AE (2014) A comparative study on feature selection for retinal vessel segmentation using ant colony system. In: Recent advances in intelligent informatics. Advances in intelligent systems and computing, vol 235, pp 1–11. doi:10.1007/978-3-319-01778-5_1

Malek J, Tourki R (2013) Blood vessels extraction and classification into arteries and veins in retinal images. In: 2013 10th international multi-conference on systems, signals and devices (SSD). Hammamet, pp 1–6. 18–21 March. doi:10.1109/SSD.2013.6564037

Malek J, Azar AT, Tourki R (2014) Impact of retinal vascular tortuosity on retinal circulation. Neural Comput Appl 26(1):25–40. doi:10.1007/s00521-014-1657-2

Emary E, Zawbaa H, Hassanien AE, Schaefer G, Azar AT (2014a) Retinal blood vessel segmentation using bee colony optimization and pattern search. In: IEEE 2014 international joint conference on neural networks (IJCNN 2014). Beijing International Convention Center, Beijing. 6–11 July

Emary E, Zawbaa H, Hassanien AE, Schaefer G, Azar AT (2014b) Retinal vessel segmentation based on possibilistic fuzzy c-means clustering optimised with cuckoo search. In: IEEE 2014 international joint conference on neural networks (IJCNN 2014). Beijing International Convention Center, Beijing. 6–11 July

Malek J, Tourki R (2013) Inertia-based vessel centerline extraction in retinal image. In: 2013 international conference on control, decision and information technologies (CoDIT). Hammamet, pp 378–381. 6–8 May. doi:10.1109/CoDIT.2013.6689574

Malek J, Ben Abdallah M, Mansour A, Tourki R (2012) Automated optic disc detection in retinal images by applying region-based active contour model in a variational level set formulation. In: 2012 international conference on computer vision in remote sensing (CVRS). Xiamen, pp 39–44. 6–18 Dec. doi:10.1109/CVRS.2012.6421230

Yin Y, Adel M, Guillaume M, Bourennane S (2010) Bayesian tracking for blood vessel detection in retinal images. In: 18th European signal processing conference (EUSIPCO 2010). Aalborg. 23–27 Aug. Id: hal-00483834, version 1

Hani AFM, Soomro TA, Faye I, Kamel N, Yahya N (2014) Denoising methods for retinal fundus images. In: 2014 IEEE international conference on intelligent and advanced systems (ICIAS). Kuala Lumpur, pp 1–6. 3–5 June 2014. doi:10.1109/ICIAS.2014.6869534

Sun J, Luan F, Wu H (2015) Optic disc segmentation by balloon snake with texture from color fundus image. Int J Biomed Imaging 2015, Article ID 528626, 14 pages. doi:10.1155/2015/528626

Hoover A (1975) STARE database. http://www.ces.clemson.edu/ahoover/stare

Ben Abdallah M, Malek J, Azar AT, Belmabrouk H, Esclarin Monreal J (2015b) Performance evaluation of several anisotropic diffusion filters for fundus imaging. Int J Intell Eng Inform 3(1):66–90. doi:10.1504/IJIEI.2015.069100

Asad AH, Azar AT, Hassanien AE (2012) Integrated features based on gray-level and Hu moment-invariants with ant colony system for retinal blood vessels segmentation. Int J Syst Biol Biomed Technol IJSBBT 1(4):60–73

Asad AH, Azar AT, Hassanien AE (2014a) A comparative study on feature selection for retinal vessel segmentation using ant colony system. In: Recent advances in intelligent informatics. Advances in intelligent systems and computing, vol 235, pp 1–11. doi:10.1007/978-3-319-01778-5_1

Asad AH, Azar AT, Hassanien AE (2014b) A new heuristic function of ant colony system for retinal vessel segmentation. Int J Rough Sets Data Anal 1(2):15–30

Hadj Fredj A, Ben Abdallah M, Malek J, Azar AT (2015) Fundus image denoising using FPGA hardware architecture. Int J Comput Appl Technol (in press)

Ricci E, Perfetti R (2007) Retinal blood vessel segmentation using line operators and support vector classification. IEEE Trans Med Imaging 26(10):1357–1365. doi:10.1109/TMI.2007.898551

Marin D, Aquino A, Gegúndez-Arias ME, Bravo JM (2011) A new supervised method for blood vessel segmentation in retinal images by using gray-level and moment invariants-based features. IEEE Trans Med Imaging 30(1):146–158. doi:10.1109/TMI.2010.2064333

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Ben Abdallah, M., Malek, J., Azar, A.T. et al. Adaptive noise-reducing anisotropic diffusion filter. Neural Comput & Applic 27, 1273–1300 (2016). https://doi.org/10.1007/s00521-015-1933-9

DOI: https://doi.org/10.1007/s00521-015-1933-9