Abstract

The present research proposes a new particle swarm optimization-based metaheuristic algorithm, the “search in forest optimizer (SIFO)”, for solving global optimization problems. The algorithm is modelled on the organized behavior of search teams looking for missing persons in a forest. In SIFO, a number of teams, each including several search experts, spread out across the forest and gradually converge on the same direction as they find clues about the target, until they find the missing person. This search structure was formulated mathematically in the form of intragroup search operators and the transfer of expert members to the top team. The efficiency of the algorithm was assessed on standard benchmark functions and an applied problem by comparing its results with those of the genetic, grey wolf, salp swarm, and ant lion optimizers. According to the results, the proposed algorithm solved many of the benchmark functions more efficiently than the other algorithms.


1 Introduction

An optimization process consists of finding the best solution among all feasible solutions for a specific problem. By their nature, optimization algorithms can be broadly divided into two groups: deterministic algorithms and stochastic intelligent algorithms (Yang 2008). Deterministic algorithms produce the same output whenever they start from the same initial values. These methods are typically gradient-based and move directly towards the optimal solution. In contrast, stochastic algorithms are generally gradient-free techniques that take random steps to find the best possible solution, so an optimization run cannot be reproduced exactly (Brownlee 2011). In most cases, however, favourable final solutions can be produced by both techniques. Stochastic intelligent algorithms are divided into two general classes: heuristic and metaheuristic algorithms (Yang et al. 2012).

As the name implies, heuristic algorithms use trial and error to find a workable solution, and most of them are applied in various optimization areas; examples include bat swarm optimization, hill climbing, and simulated annealing.

Many real-world machine learning and AI problems are generally continuous, discrete, finite, or infinite (Abbassi et al. 2019). Given these characteristics, some problems cannot be easily solved with ordinary mathematical programming approaches such as conjugate gradient, sequential quadratic programming, fast steepest descent, and quasi-Newton methods (Faris et al. 2019).

Meanwhile, several studies have confirmed the inefficiency or low efficiency of these conventional methods in dealing with many large-scale, indirect, and indistinguishable polynomial problems in the real world (Wu et al. 2015). Accordingly, metaheuristic algorithms have been designed as a competitive alternative for solving many problems, owing to their simplicity and ease of implementation. Moreover, the main operations of these methods do not rely on mathematical features or gradient information. Nevertheless, one of the major drawbacks of metaheuristic algorithms is their sensitivity to the setting of user-defined parameters. Another drawback is that they cannot always reach a global optimal solution (Dréo et al. 2006).

Metaheuristic methods are used to solve complicated problems such as scheduling (Wang and Zheng 2018), shape design (Rizk-Allah et al. 2017), economic load dispatch (Zou et al. 2016a, b), large-scale data optimization (Yi et al. 2018, 2020), infinite impulse response (IIR) systems identification (Zou et al. 2018), malware code detection (Cui et al. 2018), error detection (Li et al. 2013; Yi et al. 2018), forecasting promotion places (Nan et al. 2017), unit commitment (Srikanth et al. 2018), classification (Zou et al. 2016a, b, 2017), path planning (Wang et al. 2012a, b, 2016a, b), vehicle navigation (Chen et al. 2018), knapsack problems (Feng et al. 2018a, b), cyber-physical systems (Cui et al. 2017), neural networks (Pandey et al. 2020), and trajectory tracking control of unmanned aerial vehicles (Selma et al. 2020).

Properly designed metaheuristic algorithms can be applied to most optimization problems and can provide suitable and satisfactory solutions. In computer science, researchers proposed a gradient-free optimization technique called the “genetic algorithm (GA)”, based on the concept of evolution (Goldberg and Holland 1998). Since then, several other techniques have been suggested in the field of optimization, including ant colony optimization (Dorigo and Birattari 2010), monarch butterfly optimization (MBO) (Feng et al. 2018a, b), the ant lion optimizer (Mirjalili 2018), moth search (Wang et al. 2018a, b, c), artificial bee colony (Wang et al. 2018a, b, c), evolutionary strategy (Back 1996), harmony search (Geem et al. 2001), imperialist competitive algorithm (Talataheri et al. 2012), earthworm optimization algorithm (Wang et al. 2015), cuckoo search (Wang et al. 2016a, b), biogeography-based optimization (Wang et al. 2014), elephant herding optimization (Wang et al. 2016a, b), and differential evolution (Wang et al. 2012a, b).

In general, metaheuristic algorithms are divided into two main classes (Talbi 2009): single-solution-based (e.g. simulated annealing) and population-based (e.g. GA). In the first class, as the name implies, only one solution is processed in the optimization stage. In the second class, a set of solutions (i.e. a population) evolves with each iteration of the optimization process. Population-based techniques can often find an optimal or near-optimal solution. In addition, population-based metaheuristic techniques are usually inspired by natural phenomena and start the optimization process by producing a set (population) of individuals, where each individual represents a candidate solution to the optimization problem. The population evolves repeatedly: in each iteration, the current population is replaced with a new population generated by random operators. The optimization process continues until a stopping criterion (e.g. the maximum number of iterations) is met.

2 Literature review

Particle swarm optimization (PSO) is a swarm-based stochastic optimization method proposed by Eberhart and Kennedy (1995) and by Kennedy and Eberhart (1995). Because the first version of PSO was inefficient on optimization problems, a modified version was soon proposed by Shi and Eberhart (1998). Afterwards, a reproduction operator was incorporated into the algorithm, following the concept of a sub-population GA (SPGA) (Suganthan 1999). Another local PSO version, based on the k-means clustering approach and known as the social convergence method with hybrid spatial neighbourhood and ring topology, was suggested by Kennedy and Stereotyping (2000). Van Den Bergh (2001) analyzed the deterministic version of the PSO algorithm. After an analysis of the convergence behavior of PSO, a version with a constriction factor was presented, which guaranteed convergence and improved its rate (Clerc and Kennedy 2002). The bare-bones PSO (BBPSO) was proposed as a dynamic model of PSO (Kennedy 2003). Emara and Fattah (2004) analyzed the continuous version of the PSO algorithm. A theoretical analysis was performed on the PSO algorithm for spherically symmetric local neighbourhood functions, and numerical tests confirmed the speed characteristics along with reduced variability in the PSO algorithm (Blackwell 2005). Kadirkamanathan et al. (2006) analyzed the dynamic stability of particles using Lyapunov stability analysis and the concept of passive systems. A marine model was studied to promote search diversity in PSO (Poli et al. 2007). In addition, the social and fully aware particle version of the PSO algorithm was introduced by Poli (2008), and a stable stochastic analysis covering higher-order moments proved suitable for understanding the dynamics of particle growth and clarifying the convergence features of PSO (Poli 2009). The modified algorithm presented by Park et al. (2009) introduced a chaotic inertia weight that oscillates and decreases simultaneously. Zhan et al. (2010) introduced orthogonal learning, in which an orthogonal learning model was applied for efficient sampling.

Li et al. (2011) presented a self-learning PSO algorithm, in which the velocity update scheme can change automatically during the evolution process. Approaches such as search space compression have been proposed to determine the search space dynamically (Barisal et al. 2013). Garcia-Gonzalo and Fernandez-Martinez (2014) presented a convergence, reliability, and stability analysis of a series of PSO variants that differ from the classic PSO in the statistical distribution of the PSO parameters. Moreover, Peng and Chen (2015) introduced a coexistence particle optimization algorithm to optimize fuzzy neural networks. Eddaly et al. (2016) presented a hybrid combinatorial particle swarm optimization algorithm (HCPSO) as a resolution technique, in which an iterated local search algorithm based on probabilistic perturbation is applied sequentially within the particle swarm optimization algorithm to improve solution quality. The computational results showed that their approach was able to improve several best-known solutions in the literature. Tanweer et al. (2016) presented a new dynamic particle optimization algorithm and self-resemblance algorithm. Moreover, Wang et al. (2018a, b) proposed a krill herd algorithm, which belongs to the category of PSO algorithms. Koyuncu and Ceylan (2018) added a scout bee phase to standard PSO to form the Scout Particle Swarm Optimization (ScPSO), an efficient and robust technique for continuous function optimization.

3 Search in forest theory

Every year, thousands of people lose their way in forests and mountain areas, and search-and-rescue operations are carried out to find them and bring them to safety. To this end, “search agents” who have completed specialized training courses are assigned to find the missing people in the forest. These individuals are usually grouped into “search teams” during operations and attempt to find the target deep in the forest. Different search methods can be used, depending on the judgment of the commander. However, according to the standard content of the training courses held for the police and fire brigade, who are among the main forces searching in forests, multi-member teams are first formed to search different parts of the forest. Afterwards, each team that finds a clue about the target informs the other teams so that they can adjust their direction. Ultimately, all teams search in the direction in which most clues are found. Figure 1 illustrates this search procedure.

Two techniques are applied to organize teams so that search operations are effective in all forest areas. The first technique is a local search inside each team, in which the commander of each team allocates a part of the area to each member and makes that member responsible for searching it. The commander is informed of any clue found by a team member and sends another team member to that area to deepen the search. Therefore, all team members attempt to conduct an effective search in the parts allocated to them. In the second technique, the team that finds the most clues is reinforced by other teams: the commanders of the other teams each send one of their members to the team with the most clues to help it search effectively. Meanwhile, some members remain in the teams that find the fewest clues, so that the search can continue in case the top team is mistaken. Indeed, a team may find several clues that are false and cannot lead to the target. In this case, the other teams continue the search in the forest. However, their search path is aligned with the search path of the top team, since most clues have been found on this route. The mathematical structure inspired by this behavior, which is used to solve global optimization problems, is explained in the next section.

4 Mathematical model of search in forest optimizer

In this section, the steps of implementing the mathematical model of the search in forest optimizer (SIFO) are described separately.

5 Search team production

First, M teams, each with N members, are defined to establish the initial formation of the search teams. In other words, a population \(\left( {P_{n} } \right)_{m}\) is created, in which m indexes the search teams and n indexes the members of each team. Since team members search the forest space in a stochastic manner and might find a clue at any time, their movement structure is designed in two parts based on a mathematical model. In the first part, a stochastic step is designed for each search team m in the form of Model 1.

where r1 and r2 are random numbers in the [0, 1] range. Therefore, each search group (population) can search for the missing person stochastically in the forest space. Model 1 produces various stochastic search structures, which are shown in Fig. 2.

5.1 Search space division scheme

Because each search team searches a specific area of the solution space, a specific movement structure must be determined for each member of each team to ensure a thorough search of the entire solution space. In other words, the members of each team must cover, through stochastic movements, the part of the solution space defined by the angle assigned to them. To formulate this structure, the search area is assumed to be enclosed in a circle, and all search teams are assumed to be at the centre of the circle at time zero. A proper angle of the circle is then allocated to each team m based on the number of search teams (M).
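As a minimal sketch of this division, assuming the circle is split into M equal sectors so that each team receives an angle of 2π/M, the angular interval of each team could be computed as follows. The function name `team_sectors` is hypothetical and does not come from the original work.

```python
import math

def team_sectors(num_teams):
    """Split a full circle into equal angular sectors, one per search team.

    Assumption: sectors are equal, so each team receives theta = 2*pi / M.
    Returns a list of (start_angle, end_angle) pairs in radians.
    """
    if num_teams <= 0:
        raise ValueError("num_teams must be positive")
    theta = 2.0 * math.pi / num_teams
    return [(m * theta, (m + 1) * theta) for m in range(num_teams)]

sectors = team_sectors(8)
# Width of the first sector, converted to degrees for readability.
first_width_deg = math.degrees(sectors[0][1] - sectors[0][0])
```

With eight teams, each sector spans 45 degrees, and the sectors together cover the whole circle.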

In Fig. 3, the solution space is divided into eight equal parts based on the enclosed circle, and a part is allocated to each of the eight teams based on the angle \(\theta\). Therefore, the entire solution space will be inspected by the search team members. According to Model 1, all search teams make stochastic movements in the allocated space. In addition, team members inspect the surrounding space while searching along the main direction of their team. Since the side opposite the angle \(\theta\) is given by the sine of the angle, the sine function, as shown in Model 2, can be used to mathematically define the movement structure of each search member.

where r is a random number in the (0, 1) range. Similar to Fig. 2, the movement structure produced by Model 2 can create various patterns, which ensures the stochastic movement of all members of all search teams. The position of each search team in the solution space is stored in a random matrix, in which the columns correspond to the search teams and the rows correspond to the members of each team, in the form of matrix (3).

where \(T_{mn}\) indicates the nth member of the mth team. Clearly, each team member has a set of decision-making variables, which is shown in a matrix in the form of Eq. (4).

where \(M_{v}\) is the value of the vth variable in the nth member of the mth population. The fitness of each team member can be computed from the problem’s objective function, as in Eq. (5).

Obviously, \(f\left( {T_{mn} } \right) = f\left( {T_{mn} \left( {M_{1} ,M_{2} , \ldots ,M_{v} } \right)} \right)\). In this structure, team members play the same role as particles in the PSO algorithm, while the population structure is based on GA.
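The team and member structure described above can be sketched as a nested list `teams[m][n]`, in which each entry holds the decision variables of one member. This is only an illustration under stated assumptions: uniform random initialisation within fixed bounds and a sum-of-squares objective are choices made here, and the names `init_teams`, `evaluate`, and `sphere` are hypothetical.

```python
import random

def sphere(position):
    """Illustrative objective (sum of squares); stands in for the problem's f."""
    return sum(x * x for x in position)

def init_teams(num_teams, members_per_team, num_vars, lower, upper, rng):
    """Create teams[m][n] as a list of num_vars variables drawn uniformly."""
    return [
        [[rng.uniform(lower, upper) for _ in range(num_vars)]
         for _ in range(members_per_team)]
        for _ in range(num_teams)
    ]

def evaluate(teams, objective):
    """Return fitness[m][n] = objective(teams[m][n]) for every member."""
    return [[objective(member) for member in team] for team in teams]

rng = random.Random(0)
teams = init_teams(num_teams=4, members_per_team=5, num_vars=3,
                   lower=-10.0, upper=10.0, rng=rng)
fitness = evaluate(teams, sphere)
# Best (lowest) objective value found inside each team.
best_per_team = [min(team_fitness) for team_fitness in fitness]
```

Keeping the fitness values in the same `[m][n]` layout as the positions makes it straightforward to compare teams by their best member.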

5.2 Integration of search teams

Over the iterations of the algorithm, a number of search teams are dissolved because they fail to find proper clues about the target, and their members are added to other teams. Each remaining team attracts members of the dissolved team according to its performance; that is, the allocation of the members of the dissolved team to other teams depends on the teams’ fitness. However, the teams’ performance must be assessed and the unsuitable teams must be dissolved at the right time. It is best not to eliminate any team at the beginning of the search, because several teams are needed to inspect the entire solution space. As the search progresses, the teams can be assessed and dissolved in case of poor performance. To formulate this behavior mathematically, the algorithm gives teams more time to demonstrate their performance in the early iterations. In the final iterations, however, assessments are carried out at shorter intervals. This mathematical structure is shown in Model 6.

A team is recognized as a weak team when the improvement of its best fitness over the worst fitness, measured relative to the best fitness over all teams, falls below the empirical value of 0.4. This means that the search along its heuristic path is unlikely to be productive. Accordingly, before the next iteration starts, the elite members of that team are transferred to stronger teams. Here, \({\text{Best}}^{t}\) indicates the fitness of the best member in the tth iteration, Worst indicates the fitness of the weakest population, and the Average function is the mean fitness of the existing populations in that iteration. After the team with the weakest performance is dissolved, its members are transferred to the other teams. The number of members allocated to each of the remaining teams depends on its fitness, according to Eq. 7. To strengthen the stronger teams, the elite members of the weaker teams, that is, the members best able to discover the optimal answer, should be the ones transferred. The number of members a team receives is obtained by dividing the best answer of that team by the sum of all teams' answers and multiplying the result by the number of members to be transferred.

where \(N_{r}^{t}\) is the number of members of the dissolved team and r is its index. With the elite members of the weaker teams determined, the stronger team m will have n + \(I_{m}^{t}\) members. The transfer of elite members from weaker teams to stronger teams is repeated until the final iteration of the algorithm. In this way, teams with weak performance are eliminated over the iterations, and the search ability of the remaining teams is strengthened by increasing their membership.
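The proportional allocation described above can be sketched as follows. This is an illustration rather than the authors' exact Eq. 7: it assumes each remaining team has a positive score for which larger means better (for a minimization problem the fitness would first have to be transformed), and it resolves rounding by giving leftover members to the largest fractional shares. The name `allocate_members` is hypothetical.

```python
def allocate_members(team_scores, num_members):
    """Distribute num_members among teams in proportion to team_scores.

    Assumptions: scores are positive and larger means better; members left
    over after rounding down go to the largest fractional shares.
    """
    total = sum(team_scores)
    if total <= 0:
        raise ValueError("team_scores must sum to a positive value")
    shares = [num_members * s / total for s in team_scores]
    counts = [int(share) for share in shares]
    leftover = num_members - sum(counts)
    # Hand out remaining members by descending fractional part.
    order = sorted(range(len(shares)),
                   key=lambda i: shares[i] - counts[i], reverse=True)
    for i in order[:leftover]:
        counts[i] += 1
    return counts

print(allocate_members([5.0, 3.0, 2.0], 10))  # prints [5, 3, 2]
```

Whatever rounding rule is used, the counts should sum to the number of members being transferred, so that no member of the dissolved team is lost.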

5.3 Intragroup search

In this section, we describe the local search structure conducted inside each group to improve each of its members and ultimately enhance the group’s ability to search. As explained, an intragroup search occurs when one of the members of the group finds a clue. For a more detailed search, the commander of the group sends other members in the direction of that member to reinforce the local search to a certain extent. To this end, \(S_{m} \left( t \right)\) is defined as the set of neighbours of the solution \(X_{m} \left( t \right)\) on the tth variable.

where \(W_{m}^{ + } \left( t \right)\) and \(W_{m}^{ - } \left( t \right)\) indicate two neighbours of the solution \(X_{m}^{t}\), and \(u_{m} \left( t \right) = \left( {u_{1} \left( t \right),u_{2} \left( t \right), \ldots ,u_{M} \left( t \right)} \right),\) \(m \in \left\{ {1, \ldots ,M} \right\}\) and \(v_{m} \left( t \right) = \left( {v_{1} \left( t \right),v_{2} \left( t \right), \ldots ,v_{M} \left( t \right)} \right)\), \(m \in \left\{ {1, \ldots ,M} \right\}\) refer to two random solutions selected from the population \(P_{m}\). Moreover, c is the cluttered factor, drawn from the normal distribution \(N\left( {\mu ,\sigma^{2} } \right)\). Although the cluttered factor is not a decision-making criterion in the local search, it has a slight impact on how the algorithm improves its solutions. The u and v factors must be adjusted carefully. It is easily justified that the u factor must be greater than zero (u > 0). Its value must be chosen accurately, since very large values lead to extremely large values of \(\left( {u_{m} \left( t \right) - v_{m} \left( t \right)} \right)\), which intensifies the cluttered behaviour of the algorithm. On the other hand, very small values of the factor make this difference very small. The product of the c factor and the difference then becomes very small, the cluttered effect becomes very weak, and the algorithm converges slowly. As such, the parameter should be set with great care. In addition, the value of the \(\sigma\) parameter should be greater than zero. Notably, very large or very small \(\sigma\) values affect the c parameter and, consequently, the convergence of the algorithm. It is suggested that the values of \(\sigma\) and \(\mu\) be in the (0, 1) range.

The stochastic steps created in Models 1 and 2 cannot be used directly, since the solutions produced in each iteration for each member of the population must lie within the permissible range of the problem variables. Therefore, the necessary numerical conversions should be made using standardization functions.
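A minimal sketch of the intragroup neighbour generation is given below. The exact equation is not reproduced in this text, so the form used here, in which the two neighbours are the solution plus and minus c times the difference of two random solutions u and v, is an assumption based on the description above. Clamping to the variable bounds is likewise only one possible choice of standardization function. The names `neighbours` and `clamp` are hypothetical.

```python
import random

def clamp(values, lower, upper):
    """Project each variable back into the permissible range [lower, upper]."""
    return [min(max(x, lower), upper) for x in values]

def neighbours(x, u, v, mu, sigma, lower, upper, rng):
    """Return two neighbours of x built from the difference of u and v.

    Assumed form (not taken verbatim from the source):
        w_plus  = x + c * (u - v)
        w_minus = x - c * (u - v),   with c ~ N(mu, sigma^2).
    """
    c = rng.gauss(mu, sigma)
    step = [c * (ui - vi) for ui, vi in zip(u, v)]
    w_plus = clamp([xi + si for xi, si in zip(x, step)], lower, upper)
    w_minus = clamp([xi - si for xi, si in zip(x, step)], lower, upper)
    return w_plus, w_minus

rng = random.Random(1)
x = [0.5, -1.0, 2.0]
u = [1.0, 0.0, -2.0]
v = [-1.0, 0.5, 1.0]
w_plus, w_minus = neighbours(x, u, v, mu=0.5, sigma=0.2,
                             lower=-10.0, upper=10.0, rng=rng)
```

Under this assumed form, the two neighbours lie symmetrically around the current solution whenever no clamping occurs, and the spread between them grows with both c and the distance between u and v, which is consistent with the sensitivity discussed above.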

5.4 Transfer of elite members to the top team

The elite members are the individuals of each team that provide the best answer in each search. The superior teams are the ones that provide better answers in each search than the other teams. Indeed, the teams with higher fitness are closer to the right heuristic path towards the overall optimal answer than the other teams. Transferring the elite individuals to the superior teams strengthens the search of the neighbourhood along the heuristic path for the best fitness, and it is one of the tools for escaping local optima and preventing the algorithm from becoming trapped in them.

In this research, the final solution was improved using the random walk method (Levy flight) based on the equation below:

where t is the iteration number of the algorithm and d is the dimension of the team members’ position vector. The Levy flight value is estimated using the equation below:

where r1 and r2 are random values in the (0, 1) range. In addition, \(\beta\) is a constant number (e.g. 1.5). Finally, \({\upsigma }\) is calculated based on the equation below:

where \(\Gamma \left( {X_{m}^{t} } \right) = \left( {X_{m}^{t} - 1} \right)!\) Therefore, the solutions generated are improved with each algorithm iteration, and the best algorithm solution is considered as the final solution.
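The Levy flight equations themselves are not reproduced in this text. The sketch below therefore assumes the form commonly used in swarm optimizers, which matches the description above (random values r1 and r2 in (0, 1), a constant β such as 1.5, and a σ defined through the gamma function): a step of 0.01 · r1 · σ / r2^(1/β), applied as X(t+1) = X(t) + Levy(d) · X(t). The function names are hypothetical.

```python
import math
import random

def levy_sigma(beta):
    """Scale parameter sigma of the commonly used Levy-step approximation."""
    numerator = math.gamma(1.0 + beta) * math.sin(math.pi * beta / 2.0)
    denominator = (math.gamma((1.0 + beta) / 2.0) * beta
                   * 2.0 ** ((beta - 1.0) / 2.0))
    return (numerator / denominator) ** (1.0 / beta)

def levy_step(dim, beta, rng):
    """Draw one Levy step per dimension: 0.01 * r1 * sigma / r2**(1/beta)."""
    sigma = levy_sigma(beta)
    step = []
    for _ in range(dim):
        r1 = rng.random()
        r2 = 1.0 - rng.random()  # lies in (0, 1], avoids division by zero
        step.append(0.01 * r1 * sigma / r2 ** (1.0 / beta))
    return step

def levy_update(position, beta, rng):
    """Assumed update: X(t+1) = X(t) + Levy(d) * X(t), applied element-wise."""
    step = levy_step(len(position), beta, rng)
    return [x + s * x for x, s in zip(position, step)]

rng = random.Random(42)
sigma_value = levy_sigma(1.5)
new_position = levy_update([1.0, -2.0, 0.5], beta=1.5, rng=rng)
```

For β = 1.5, σ evaluates to roughly 0.697. Most steps are small, but the heavy tail of the distribution occasionally produces a long jump, which is what helps a solution leave a local optimum.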

5.5 Flowchart and the pseudo-code of the SIFO algorithm

Figure 4 shows the flowchart of the SIFO algorithm.

6 Results and discussion

In this section, we evaluate the performance of the proposed algorithm in solving two general categories of optimization problems: constrained and unconstrained problems. Figure 5 provides a block diagram showing the road map of the steps in this part. All problems were run on a 3.2 GHz system with 32 GB of random access memory under Windows 10.

6.1 Introducing standard unconstrained optimization and parameter regulation tests

In this research, we use four popular sets of standard benchmark functions: classic unimodal functions (Yao et al. 1999; Digalakis and Margaritis 2001), classic multimodal functions (Molga and Smutnicki 2005), unimodal and multimodal CEC2014 functions, and CEC2014 combined functions. The mathematical structure, dimensions, and the range of values of these functions are described in Tables 1, 2, 3, 4.
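To illustrate the difference between the unimodal and multimodal classes, the sketch below implements two widely used classic test functions, the sphere function and the Rastrigin function. They are given here only as representative examples; whether they appear in the tables with exactly these definitions is an assumption.

```python
import math

def sphere(x):
    """Unimodal example: a single minimum, f(0, ..., 0) = 0."""
    return sum(xi * xi for xi in x)

def rastrigin(x):
    """Multimodal example: many local minima, global minimum f(0, ..., 0) = 0."""
    return 10.0 * len(x) + sum(
        xi * xi - 10.0 * math.cos(2.0 * math.pi * xi) for xi in x
    )

origin = [0.0] * 30
sphere_at_origin = sphere(origin)
rastrigin_at_origin = rastrigin(origin)
```

Both functions have their global minimum of zero at the origin, but the cosine term of the Rastrigin function creates a regular grid of local minima, which is what tests an algorithm's ability to avoid premature convergence.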

A very important issue in the implementation of a metaheuristic algorithm is the accurate adjustment of its parameters to increase its efficiency. It is worth noting that there is still no comprehensive approach to assessing the performance of metaheuristic algorithms (Yang 2010). Therefore, the solutions provided by the proposed algorithm should be compared to the results obtained from other algorithms. For this purpose, we considered the GA (Davis 1991), grey wolf optimizer (GWO) (Mirjalili et al. 2014), salp swarm algorithm (SSA) (Mirjalili et al. 2017), ant lion optimizer (ALO) (Mirjalili 2015), gravitational search algorithm (GSA) (Rashidi and Cook 2009), differential evolution (DE) (Storn and Price 1997), and PSO (Zhou et al. 2003) as the base algorithms for comparison. The parameters of each of these algorithms are adjusted according to Table 4.

All algorithms are run under the same conditions to obtain the final results. In all algorithms, the population size is set to 50 and a total of 1000 iterations are performed. To control for the stochastic behaviour of the algorithms, the best results among 30 independent runs are considered. In addition, the following diagrams are presented to evaluate the results of the proposed algorithm in comparison with other metaheuristic algorithms on the standard benchmark functions.

- Diagram of search structure: The diagram shows the location of each search agent in the forest space during the implementation of the algorithm. It helps determine the level of success of search agents in the solution space.

- Improved trajectory: The diagram shows the value of the objective function for the best solution obtained in each iteration of the algorithm. In other words, it shows the improvement trajectory of the best member of the search agent population.

- Mean solutions: The diagram shows the mean of the fitness function over all members in each iteration. It indicates the algorithm’s ability to make all population members converge.

- Convergence diagram: The diagram shows the convergence of the algorithm towards the best solution in each iteration.
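The run protocol and the data behind the mean-solution and convergence diagrams can be sketched as follows. The optimizer used here is a plain random search that serves only as a placeholder and is not SIFO; the names `random_search` and `run_protocol` are hypothetical, and the iteration count is reduced from 1000 to 100 to keep the example fast.

```python
import random
import statistics

def sphere(x):
    """Illustrative objective (sum of squares)."""
    return sum(xi * xi for xi in x)

def random_search(objective, dim, lower, upper, pop_size, iterations, rng):
    """Placeholder optimizer (NOT SIFO): resample a population each iteration.

    Returns the best-so-far curve and the per-iteration mean fitness.
    """
    best = float("inf")
    convergence, mean_fitness = [], []
    for _ in range(iterations):
        values = [objective([rng.uniform(lower, upper) for _ in range(dim)])
                  for _ in range(pop_size)]
        best = min(best, min(values))
        convergence.append(best)
        mean_fitness.append(statistics.fmean(values))
    return convergence, mean_fitness

def run_protocol(objective, runs, **kwargs):
    """Repeat independent runs and summarise the final best values."""
    finals = []
    for seed in range(runs):
        convergence, _ = random_search(objective, rng=random.Random(seed), **kwargs)
        finals.append(convergence[-1])
    return {"best": min(finals), "worst": max(finals),
            "mean": statistics.fmean(finals), "std": statistics.stdev(finals)}

summary = run_protocol(sphere, runs=30, dim=5, lower=-100.0, upper=100.0,
                       pop_size=50, iterations=100)
```

The `convergence` list corresponds to the convergence diagram and `mean_fitness` to the mean-solutions diagram, while `summary` holds the kind of best, worst, mean, and standard deviation values that are reported over the independent runs.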

6.2 Numerical results of the limit of classic unimodal and multimodal samples

Figure 6 presents the diagrams for the comparison criteria after the classic unimodal and multimodal benchmark functions are solved with the proposed algorithm.

According to the results, the population members are scattered in the space during the optimization process and then concentrate on the optimal solution. This shows that the algorithm balances the diversification and intensification phases. In addition, the trajectory of the best member of the population shows many numerical changes in the first iterations, followed by convergence on the final solution. This confirms a balance between discovering proper solutions in the initial iterations (diversification) and focusing on the best solution through local search in order to reach the global optimum. The convergence diagram shows that, in some iterations, convergence proceeds with a smaller slope or stops completely. This means that the algorithm has failed to find a solution better than the existing one. However, a sudden significant improvement is then observed, and the improvement slope recovers. This shows that the proposed algorithm can escape local optima and continue the search in other parts of the space, using the operators designed to move from local optima towards the global optimum. Therefore, the proposed algorithm is able to discover globally optimal solutions on all standard benchmark functions in a shorter period. Table 5 presents the results obtained by the base algorithms and the proposed algorithm on the classic unimodal and multimodal benchmark functions.

As observed, the SIFO optimizer produced better solutions on all benchmark functions than the SSA, ALO, PSO, and GSA algorithms. Moreover, SIFO shows very competitive results compared to DE on F4, F5, F6, F11, F12, and F13 and compared to GWO on F8 and F11. Notably, Table 5 reports the mean and standard deviation of the solutions. Meanwhile, in independent runs, the SIFO optimizer produced better solutions on all benchmark functions than the other algorithms. In other words, SIFO was able to provide higher-quality solutions in independent runs. To better show this superiority, Table 6 presents the best and worst values of the final solutions obtained from independent runs of the different algorithms. In addition, the number of times that the SIFO optimizer was able to generate better solutions than the other algorithms is shown under the heading NBS.

As observed, the SIFO optimizer produced better solutions in independent runs on the standard benchmark functions than several algorithms, which shows its capacity to serve as an efficient algorithm for solving optimization problems.

6.3 Numerical results obtained from the limit of CEC2014 unimodal and multimodal representations

In this section, the CEC2014 standard benchmark functions, presented in unimodal and multimodal versions, are solved using the SIFO optimizer and the other comparative algorithms. It should be pointed out that, owing to their higher computational complexity compared to the F1–F13 functions, these functions can challenge the solution discovery power of the proposed algorithm in the exploration phase. This is mainly because discovering the accurate location of the global optimum in the complicated search space of these functions is extremely difficult, and the algorithm may become trapped in a local optimum. In fact, the operators of different algorithms can be evaluated well on this category of standard functions. The comparative results are presented in Tables 7 and 8. Notably, the two sets of standard functions were solved with both 1000 and 10,000 iterations to evaluate the effect of an increased number of iterations on the final solutions.

As observed, the proposed algorithm provided more efficient solutions than the other algorithms in most numerical examples. This superiority is attributed to the presence of two different populations, which greatly increases search power in the exploration phase.

As observed, the final solutions improved significantly with an increase in the number of iterations, which confirms that the algorithm’s operators are effective at escaping local optima. In fact, an increase in the number of iterations enabled the algorithms to leave the region of a local optimum and enter the region of the global optimum by using operators that introduce mutations into the generated solutions. A 3D structure can be drawn for other functions (see Fig. 7).

6.4 Numerical results obtained from the limit of CEC2014 combined examples

In this section, six combined numerical examples presented at CEC2014 are considered as evaluation functions, and their results are described as the last numerical analysis testing the proposed algorithm on standard examples. These examples can assess the exploitation phase of the proposed algorithm, since their solution space is designed so that discovering the global optimum is extremely complicated. In other words, the extent of the solution space allows the exploration phase to be carried out appropriately within an acceptable period, whereas finding a solution in the exploitation phase faces serious challenges. Table 9 presents the numerical results obtained on these standard examples.

As observed, the proposed algorithm provided better solutions in solving the combined representations, compared to the other algorithms presented in the research. Therefore, it could be expressed that the application of the proposed algorithm can create a suitable condition for finding the final solution in the global optimization solution space. To sum up, the SIFO optimizer was able to create a proper situation for a general assessment of the solution space using the mechanism of production of multiple populations in the form of search teams and applying various search factors in each team. Moreover, the algorithm reinforced the discovery phase by integrating search teams in various iterations. In addition, using the Levy flight operator to transfer the elite members resulted in a suitable convergence in the problem-solving procedure. A numerical example corresponding to real-world conditions is solved using this algorithm, and the numerical results are fully described to examine the application of the proposed algorithm in solving engineering problems more precisely.
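The role of the Levy flight operator mentioned above can be illustrated with a short sketch. The step-length formula is not given in this section, so the code below uses Mantegna's algorithm, a common way of drawing Levy-distributed steps, as an assumption rather than as the operator actually implemented in SIFO. The function names `levy_step` and `move_elite`, the exponent `beta`, and the scaling factor `scale` are hypothetical.

```python
import math
import random


def levy_step(beta=1.5, rng=random):
    """Draw one heavy-tailed step using Mantegna's algorithm (assumed here)."""
    # Standard deviation of the numerator Gaussian in Mantegna's scheme.
    sigma_u = (
        math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
        / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))
    ) ** (1 / beta)
    u = rng.gauss(0.0, sigma_u)
    v = rng.gauss(0.0, 1.0)
    while v == 0.0:  # avoid a division by zero in the ratio below
        v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / beta)


def move_elite(position, lower, upper, scale=0.01, beta=1.5, rng=random):
    """Perturb an elite member with Levy steps and clip it to the bounds.

    The step is scaled by the width of the search interval; this scaling
    is an illustrative choice, not taken from the paper.
    """
    width = upper - lower
    moved = []
    for x in position:
        candidate = x + scale * width * levy_step(beta, rng)
        moved.append(min(max(candidate, lower), upper))
    return moved


if __name__ == "__main__":
    rng = random.Random(42)
    print(move_elite([0.5, -0.2, 3.0], lower=-5.0, upper=5.0, rng=rng))
```

Most draws are small, which keeps the elite member near its current position, while occasional large jumps allow it to reach distant regions of the search space.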

6.5 Solving constrained optimization problems

As mentioned at the beginning of this section, two numerical examples in the area of supply chain design are evaluated as constrained optimization problems in order to assess the performance of the proposed algorithm more accurately.

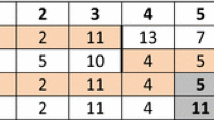

6.5.1 Classic supply chain design

In brief, supply chain management is one of the basic issues in management, economics, industrial engineering, planning, and other related fields. Some issues in supply chain management are defined as optimization problems within the field of operations research (Zou et al. 2016a). In this set of problems, the flow of goods along the production path, which includes the supply of raw materials, manufacturing in production centres, and distribution of the final product, is managed so that the final cost of the system is minimized. This research uses the data from Beamon (1998), which is recognized as one of the most complete classic models in this field. Twenty numerical examples were generated according to the structure of that article to evaluate the behaviour of the SIFO optimizer more accurately. Table 10 presents the numerical results of the comparison among GWO, SSA, and the SIFO optimizer. Notably, the comparison criteria included the value of the objective function, the amount of deviation of solutions, and the number of iterations required to achieve the final solution.

According to Table 10, the SIFO optimizer had lower objective function values, compared to the other algorithms, which is also observed in Fig. 8. In fact, the algorithm is able to conduct a better search in the solution space and report more appropriate results. This is mainly due to the presence of robust operators based on the crossover and mutation in the algorithm.

Regarding the amount of deviation, the SIFO optimizer had the lowest value in this respect, whereas SSA had the highest value, which showed the higher efficiency of the proposed algorithm in solving problems in this field.

As observed in Fig. 9, there was a large gap between the solutions provided by the SIFO optimizer and SSA, while GWO produced solutions similar to those of the SIFO optimizer in some cases. Therefore, the comparison of GWO and the SIFO optimizer requires further analysis, for which statistical tests are used. Since such tests compare two populations, GWO and the SIFO optimizer, which had the highest efficiency, were selected as the reference algorithms and the necessary estimations were carried out. Choosing a statistical test requires deciding between parametric and nonparametric tests. One of the main criteria for this decision is the Kolmogorov–Smirnov test, which indicates whether the data are normally distributed. If the test is not significant (P-value > 0.05), the data are treated as normally distributed and parametric tests can be used. If the test is significant (P-value ≤ 0.05), the data are not normally distributed and nonparametric tests should be used. The diagrams in Fig. 10 show the result of this assessment for all defined criteria.

As observed, the K–S test results indicate a non-normal distribution for all criteria. Therefore, nonparametric tests should be used to compare the two algorithms. In this research, we used the Kruskal–Wallis test, the results of which are presented in Tables 11 and 12.
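The test-selection procedure described above can be sketched with the Python standard library. This is a minimal illustration under stated assumptions, not the statistical software used in the study: the K–S p-value uses the asymptotic Kolmogorov series with a normal distribution fitted to the sample, the Kruskal–Wallis statistic is restricted to two groups and omits the tie correction, and the names `ks_normal`, `kruskal_two_groups`, and `compare` as well as the sample values are hypothetical.

```python
import math
from statistics import mean, stdev


def ks_normal(sample):
    """K-S statistic D and asymptotic p-value against a fitted normal.

    Assumes at least two distinct values. Fitting the mean and standard
    deviation from the same sample makes the p-value conservative.
    """
    n = len(sample)
    mu, sd = mean(sample), stdev(sample)
    d = 0.0
    for i, x in enumerate(sorted(sample)):
        cdf = 0.5 * (1.0 + math.erf((x - mu) / (sd * math.sqrt(2.0))))
        # Largest gap between the empirical and the normal CDF around x.
        d = max(d, (i + 1) / n - cdf, cdf - i / n)
    lam = (math.sqrt(n) + 0.12 + 0.11 / math.sqrt(n)) * d
    if lam < 0.2:  # the series below is numerically 1 in this range
        return d, 1.0
    p = 2.0 * sum((-1) ** (k - 1) * math.exp(-2.0 * (k * lam) ** 2)
                  for k in range(1, 101))
    return d, min(max(p, 0.0), 1.0)


def kruskal_two_groups(a, b):
    """Kruskal-Wallis H (average ranks for ties) and p-value for two groups."""
    pooled = sorted([(x, 0) for x in a] + [(x, 1) for x in b])
    n = len(pooled)
    rank_sums = [0.0, 0.0]
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2.0  # mean of the 1-based ranks i+1 .. j
        for k in range(i, j):
            rank_sums[pooled[k][1]] += avg_rank
        i = j
    h = (12.0 / (n * (n + 1))
         * (rank_sums[0] ** 2 / len(a) + rank_sums[1] ** 2 / len(b))
         - 3.0 * (n + 1))
    h = max(h, 0.0)
    # With two groups, H is referred to chi-square with 1 degree of freedom.
    return h, math.erfc(math.sqrt(h / 2.0))


def compare(a, b, alpha=0.05):
    """Choose the test family from the K-S results, then compare the groups."""
    non_normal = any(ks_normal(s)[1] <= alpha for s in (a, b))
    h, p = kruskal_two_groups(a, b)
    return {"use_nonparametric": non_normal, "H": h, "p": p,
            "significant": p <= alpha}


if __name__ == "__main__":
    # Hypothetical objective values for two algorithms.
    print(compare([10.2, 11.5, 9.8, 10.9, 12.1], [13.4, 14.0, 12.9, 15.2, 13.8]))
```

A significant K–S result for either sample leads to the nonparametric branch, which matches the decision rule stated in the text.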

As observed, the two algorithms differ in terms of all criteria, and the SIFO optimizer is more efficient than GWO in all cases.

However, the difference is not significant, and it can be stated that the two algorithms performed similarly. As such, SIFO implementation can lead to highly efficient solutions for problems in the supply chain management field.

6.6 Advanced supply chain design problem solving

In this research, we focus on the design of a reverse supply chain to minimize the costs of facility location and material transfer between different categories of the chain in question. Evaluation of this issue is important since determining the proper location of the facility and the proper flow of products transferred between the levels of the chain can improve the performance of the chain and ultimately achieve a suitable profit margin for industry managers. The proposed model is explained in Tables 13, 14, 15, 16.

The first term of the objective function calculates the cost of activating a supply centre. The second term gives the cost of activating producers. The third term estimates the activation cost of collection centres, whereas the fourth term calculates the cost of activating a destruction centre. The fifth term calculates the savings resulting from matching the centres; it enters the objective function with a negative sign because it is a saving to be maximized within a cost that is minimized. The sixth term gives the cost of transfer from suppliers to producers, while the seventh term estimates the cost of transfer from producers to retailers. The eighth term calculates the cost of transferring returned products from retailers to collection centres, and the ninth term estimates the cost of transferring products from collection centres to destruction centres. Finally, the 10th term gives the total cost of moving returned product units from collection centres to suppliers. Constraints (16) and (17) guarantee that all customer demands are met in the direct flow and that all returned products are collected from retailers in the reverse flow. Constraints (18–20) are flow balance constraints at the nodes: the products that enter each chain level must exit from the same level. Constraints (19–26) guarantee that flow exists only between points where a facility has been activated and that the total flow does not exceed the capacity of each facility. Constraints (29–33) guarantee that only one capacity level is allocated to each facility, whereas constraint (31) guarantees that the necessary module exists in the products sent to a customer if the module is mandatory.
Additionally, constraint (34) guarantees that customer demand can only be met by a manufacturer that is able to add the customer's mandatory module. Finally, constraints (35–36) are the logical restrictions on the decision variables of the problem.
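The flow balance, activation, and capacity conditions described above can be checked for a candidate solution with a few lines of code. The following is a minimal sketch for a single facility node, assuming that flows are given as lists of quantities; the function name `check_node`, its parameters, and the numbers in the usage example are hypothetical and are not taken from the model tables.

```python
def check_node(inflow, outflow, is_open, capacity, tol=1e-9):
    """Return the list of conditions violated at one facility node.

    inflow and outflow are lists of quantities entering and leaving the node,
    is_open tells whether the facility is activated, and capacity is the
    capacity of the level assigned to it (all hypothetical inputs).
    """
    violations = []
    total_in, total_out = sum(inflow), sum(outflow)
    # Flow balance: what enters a chain level must leave it.
    if abs(total_in - total_out) > tol:
        violations.append("flow balance")
    # Flow may only pass through an activated facility.
    if not is_open and (total_in > tol or total_out > tol):
        violations.append("flow through closed facility")
    # The total flow must not exceed the capacity of the facility.
    if is_open and total_out > capacity + tol:
        violations.append("capacity exceeded")
    return violations


if __name__ == "__main__":
    # Hypothetical producer receiving from two suppliers and serving one customer.
    print(check_node(inflow=[200, 300], outflow=[500], is_open=True, capacity=600))
    print(check_node(inflow=[100], outflow=[90], is_open=True, capacity=200))
```

In a metaheuristic, a check of this kind is typically applied to every node of a candidate solution, and infeasible candidates are either repaired or penalized; which of the two the SIFO implementation uses is not stated in this section.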

6.6.1 Comparison of results obtained from supply chain design problem solving values by SIFO optimizer and latest algorithm

The supply chain model is solved with random values using the SIFO optimizer and several recent algorithms, namely the Equilibrium Optimizer (EO) (Faramarzi et al. 2020), Marine Predators Algorithm (MPA) (Faramarzi et al. 2020), slime mould algorithm (SMA) (Li et al. 2020), horse herd optimization algorithm (HOA) (MiarNaeimi et al. 2021), and wild horse optimizer (WHO) (Naruei and Keynia 2021), and the results are compared in Table 17. The SIFO optimizer obtained better optimal values than MPA, EO, SMA, HOA, and WHO. The SIFO optimizer is therefore proposed as an efficient metaheuristic algorithm for industrial engineering and supply chain problems. The parameters of each of the mentioned algorithms are adjusted based on Table 17.

6.6.2 Evaluation of results obtained from supply chain design problem solving

In the numerical example of the present model, we assume that a supply chain network is being designed, where four potential points are available for the activation of suppliers, whereas 6, 20, 4, and 4 potential points exist for activation of production centres, customers, the establishment of collection centres, and construction of destruction centres, respectively. Overall, two suppliers, three producers, two collection centres, and two destruction centres must be constructed among the potential points. Also, all centres have three capacity levels. The amount of customer demand is presented in Tables 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30.

It is assumed that all producers are able to add any module for their customers, which makes the problem more complicated to solve. After solving the problem using the proposed algorithm, the convergence diagram is presented in Fig. 11.

As observed, the SIFO optimizer converged suitably towards the optimal solution, and the mean of the solutions matched the best solution in the final iterations. This shows that the proposed algorithm efficiently guided all members of the search teams towards the final solution. The structure of the supply chain is described in Tables 31, 32, 33, 34.

Supplier 1 with capacity level 1 and supplier 4 with capacity level 2 are selected to supply the products required by producers. In addition, producers 1, 2, and 4 with capacity level 1 and producer 6 with capacity level 3 are activated. Moreover, collection centre 1 with capacity level 1, collection centre 3 with capacity level 2, and collection centres 2 and 4 with capacity level 3 are constructed to collect products used by customers. Furthermore, destruction centres 2, 3, and 4 with capacity level 2 are constructed to destroy the nonrecyclable part of products. In the management of material flow, supplier 1 delivers 151, 1211, and 84 units to producers 1, 2, and 3, respectively. In addition, supplier 4 sends 410 product units to producer 1. The flow of products from manufacturers to customers is given in Table 33.

As observed, all demands of each customer are met based on the flow of presented products, which shows the proper performance of the proposed model. The rate of return of products from customers to collection centres is also presented in Table 34.

As observed, the necessary allocations are made based on the parameter of the percentage of returned products and cost of transferring products between customers and each centre in order to conduct the product collection operations with the lowest cost possible. Table 35 shows the product flow between the collection centres and the suppliers, aiming at reusing the recycled materials obtained in the collection centres.

As observed, the total products delivered to suppliers are equal to the recyclable portion of the products delivered by customers to collection centres, which makes the return of products into the production cycle possible. However, a part of the products is unrecyclable and is sent directly to destruction centres to carry out the destruction stages. The flow of products is presented in Table 35.

7 Conclusions

This article evaluated the SIFO optimizer as a new metaheuristic approach to solving optimization problems. The mathematical structure of the algorithm involves determining a specific number of groups in the form of teams, each encompassing a number of search members. Two different types of search are carried out in each iteration of the algorithm: the first is an intragroup search, which is adapted from the organizing structure of search teams to accurately inspect a region, and the second is a local search by all teams in order to cover the entire solution space. This guarantees the effective and parallel assessment of the whole solution space. One of the advantages of the present research is the exploitation of parallel search operators in the algorithm structure, which leads to a proper balance between the diversification and intensification phases of the algorithm. In addition, standard functions presented in unimodal and multimodal modes were used to evaluate the efficiency of the proposed algorithm (Digalakis and Margaritis 2001; Molga and Smutnicki 2005). According to the numerical results, the SIFO optimizer yielded better results on the numerical representations than GA and ALO. The SIFO optimizer can therefore be used in optimization problems as an efficient algorithm. Moreover, the SIFO optimizer yielded far better solutions to the CEC2014 standard numerical representations than the other algorithms. Furthermore, according to the results obtained from the supply chain design problems, the algorithm developed in the present research seemed to effectively find optimal solutions in the solution space and converge towards the global optimum when solving some practical problems in the field of industrial engineering. As such, the algorithm is proposed to solve optimization problems in various engineering fields.
To expand the scope of this research, it is recommended that a multi-objective SIFO optimizer be developed and that the numerical results obtained from solving various optimization problems be compared to those of existing algorithms.

Data availability

The authors confirm that the data supporting the findings of this research are available within its supplementary materials.

References

Abbassi R, Abbassi A, Heidari AA, Mirjalili S (2019) An efficient salp swarm-inspired algorithm for parameters identification of photovoltaic cell models. Energy Convers Manage 179:362–372

Back T (1996) Evolutionary algorithms in theory and practice: Evolution strategies, evolutionary programming, and genetic algorithms. Oxford University Press, Oxford

Barisal A (2013) Dynamic search space squeezing strategy based intelligent algorithm solutions to economic dispatch with multiple fuels. Int J Electr Power Energy Syst 45:50–59

Beamon BM (1998) Supply chain design and analysis: Models and methods. Int J Prod Econ 55:281–294

Blackwell TM (2005) Particle swarms and population diversity. Soft Comput 9:793–802

Brownlee J (2011) Clever algorithms: Nature-inspired programming recipes. Published by Jason Brownlee, Melbourne, Australia

Chen S, Chen R, Wang G-G, Gao J, Sangaiah AK (2018) An adaptive large neighborhood search heuristic for dynamic vehicle routing problems. Comput Electr Eng 67:596–607

Clerc M, Kennedy J (2002) The particle swarm-explosion, stability, and convergence in a multidimensional complex space. IEEE Trans Evol Comput 6:58–73

Cui Z, Sun B, Wang G, Xue Y, Chen J (2017) A novel oriented cuckoo search algorithm to improve DV-Hop performance for cyber–physical systems. J Parallel Distrib Comput 52:42–17

Cui Z, Xue F, Cai X, Cao Y, Wang G-g, Chen J (2018) Detection of malicious code variants based on deep learning. IEEE Trans Industr Inf 14:3187–3196

Davis L (1991) Handbook of genetic algorithms

Digalakis JG, Margaritis KG (2001) On benchmarking functions for genetic algorithms. Int J Comput Math 77:481–506

Dorigo M, Birattari M (2010) Ant colony optimization. Springer, Berlin

Dréo J, Pétrowski A, Siarry P, Taillard E (2006) Metaheuristics for hard optimization: methods and case studies. Springer Science and Business Media, Berlin

Eberhart R, Kennedy J (1995) A new optimizer using particle swarm theory. In: MHS'95 Proceedings of the Sixth International Symposium on Micro Machine and Human Science: IEEE. pp 39–43

Eddalya M, Jarbouia M, Siarryba P (2016) Combinatorial particle swarm optimization for solving blocking flow shop scheduling problem. J Comput Des Eng 3:295–311

Emara HM, Fattah HA (2004) Continuous swarm optimization technique with stability analysis. In: Proceedings of the American control conference: IEEE. pp 2811–2817

Faramarzi A, Heidarinejad M, Mirjalili S, Gandomi AH (2020) Marine predators algorithm: a nature-inspired metaheuristic. Expert Syst Appl, p 152

Faramarzi A, Heidarinejad M, Stephens B, Mirjalili S (2020) Equilibrium optimizer: a novel optimization algorithm. Knowledge-Based Syst, p 191

Faris H, Ala’M A-Z, Heidari AA, Aljarah I, Mafarja M, Hassonah MA et al (2019) An intelligent system for spam detection and identification of the most relevant features based on evolutionary random weight networks. Inf Fusion 83:67–42

Feng Y, Wang G-G, Li W, Li N (2018a) Multi-strategy monarch butterfly optimization algorithm for discounted 0–1 knapsack problem. Neural Comput Appl 30:3019–3036

Feng Y, Yang J, Wu C, Lu M, Zhao X-J (2018b) Solving 0–1 knapsack problems by chaotic monarch butterfly optimization algorithm with Gaussian mutation. Memetic Comput 10:135–150

García-Gonzalo E, Fernández-Martínez JL (2014) Convergence and stochastic stability analysis of particle swarm optimization variants with generic parameter distributions. Appl Math Comput 249:286–302

Geem ZW, Kim JH, Loganathan GV (2001) A new heuristic optimization algorithm: harmony search. SIMULATION 76:60–68

Goldberg DE, Holland JH (1988) Genetic algorithms and machine learning. Mach Learn 3:95–99

Kadirkamanathan V, Selvarajah K, Fleming PJ (2006) Stability analysis of the particle dynamics in particle swarm optimizer. IEEE Trans Evol Comput 10:245–255

Kennedy J (2003) Bare bones particle swarms. Proceedings of the IEEE Swarm Intelligence Symposium SIS'03 (Cat No 03EX706): IEEE. pp 80–87

Kennedy J (2000) Stereotyping: Improving particle swarm performance with cluster analysis. Proceedings of the Congress on Evolutionary Computation CEC00 (Cat No 00TH8512): IEEE. pp 1507–1512

Kennedy J, Eberhart R (1995) Particle swarm optimization (PSO) Paper presented at the Proc. IEEE International Conference on Neural Networks, Perth, Australia

Koyuncu H, Ceylan R (2018) A PSO based approach: Scout particle swarm algorithm for continuous global optimization problems. J Comput Des Eng 6:129–142

Li C, Yang S, Nguyen TT (2011) A self-learning particle swarm optimizer for global optimization problems. IEEE Trans Syst Man Cybern Part B (cybern) 7:62–42

Li S, Chen H, Wang M, Heidari AA, Mirjalili S (2020) Slime mould algorithm: A new method for stochastic optimization. Futur Gener Comput Syst 111:300–323

Li X, Qian J, Wang G-g (2013) Fault prognostic based on hybrid method of state judgment and regression. Adv Mech Eng 5:1495–1562

MiarNaeimi F, Azizyan G, Rashki M (2021) Horse herd optimization algorithm: A nature-inspired algorithm for high-dimensional optimization problems. Knowledge-Based Syst 213:106711

Mirjalili S, Gandomi AH, Mirjalili SZ, Saremi S, Faris H, Mirjalili SM (2017) Salp swarm algorithm: a bio-inspired optimizer for engineering design problems. Adv Eng Softw 114:163–169

Mirjalili S, Mirjalili SM, Lewis A (2014) Grey wolf optimizer. Adv Eng Softw 69:46–61

Mirjalili S (2015) The ant lion optimizer. Adv Eng Softw 83:80–98

Molga M, Smutnicki C (2005) Test functions for optimization needs. Test Funct Optim Needs, p 101

Naruei I, Keynia F (2021) Wild horse optimizer: a new meta-heuristic algorithm for solving engineering optimization problems. Eng Comput, pp 1–32

Nan X, Bao L, Zhao X, Zhao X, Sangaiah A, Wang G-G et al (2017) EPuL: an enhanced positive-unlabeled learning algorithm for the prediction of population sites. Molecules 22:1463

Pandey AS, Ehtesham PV, Hasan M, Parhi R (2020) DV-REP-based navigation of automated wheeled robot between obstacles using PSO-tuned feed forward neural network. J Comput Des Eng 7:427–434

Park J-B, Jeong Y-W, Shin J-R, Lee KY (2009) An improved particle swarm optimization for nonconvex economic dispatch problems. IEEE Trans Power Syst 25:156–166

Peng C-C, Chen C-H (2015) Compensatory neural fuzzy network with symbiotic particle swarm optimization for temperature control. Appl Math Model 39:383–395

Poli R, Kennedy J, Blackwell T (2007) Particle Swarm Optimization. Swarm Intell 1:33–57

Poli R (2008) Dynamics and stability of the sampling distribution of particle swarm optimizers via moment analysis. J Artif Evol Appl, p 15

Poli R (2009) Mean and variance of the sampling distribution of particle swarm optimizers during stagnation. IEEE Trans Evol Comput 13:712–721

Rashidi P, Cook DJ (2009) Keeping the resident in the loop: adapting the smart home to the user. IEEE Trans Syst Man Cybern Part A 39:949–959

Rizk-Allah RM, El-Sehiemy RA, Deb S, Wang G-G (2017) A novel fruit fly framework for multi-objective shape design of tubular linear synchronous motor. J Supercomput 73:1235–1256

Selma B, Chouraqui S, Abouaïssa H (2020) Fuzzy swarm trajectory tracking control of unmanned aerial vehicle. J Comput Des Eng 7:435–447

Shi Y, Eberhart R (1998) A modified particle swarm optimizer. In: IEEE international conference on evolutionary computation proceedings IEEE world congress on computational intelligence (Cat No 98TH8360): IEEE. pp 69–73

Srikanth K, Panwar LK, Panigrahi BK, Herrera-Viedma E, Sangaiah AK, Wang G-G (2018) Meta-heuristic framework: quantum inspired binary grey wolf optimizer for unit commitment problem. Comput Electr Eng 70:243–260

Storn R, Price K (1997) Differential evolution–a simple and efficient heuristic for global optimization over continuous spaces. J Global Optim 11:341–359

Suganthan PN (1999) Particle swarm optimizer with neighborhood operator. In: Proceedings of the Congress on Evolutionary Computation-CEC99 (Cat No 99TH8406): IEEE. pp 1958–62

Talatahari S, Azar BF, Sheikholeslami R, Gandomi A (2012) Imperialist competitive algorithm combined with chaos for global optimization. Commun Nonlinear Sci Numer Simul 17:1312–1319

Talbi E-G (2009) Metaheuristics: from design to implementation. John Wiley and Sons, New Jersey

Tanweer MR, Suresh S, Sundararajan N (2016) Dynamic mentoring and self-regulation-based particle swarm optimization algorithm for solving complex real-world optimization problems. Inf Sci 326:1–24

Van Den Bergh F (2001) An analysis of particle swarm optimizers. University of Pretoria South Africa

Wang G, Guo L, Duan H, Liu L, Wang H, Shao M (2012a) Path planning for uninhabited combat aerial vehicle using hybrid meta-heuristic DE/BBO algorithm. Adv Sci Eng Med 4:550–564

Wang G-G, Chu HE, Mirjalili S (2016a) Three-dimensional path planning for UCAV using an improved bat algorithm. Aerosp Sci Technol 49:231–238

Wang G-G, Deb S, Gandomi AH, Zhang Z, Alavi AH (2016b) Chaotic cuckoo search. Soft Comput 20:334–296

Wang G-G, Gandomi AH, Alavi AH (2014) An effective krill herd algorithm with migration operator in biogeography-based optimization. Appl Math Model 38:2454–2462

Wang G-G (2018a) Moth search algorithm: a bio-inspired meta-heuristic algorithm for global optimization problems. Memetic Comput 10:151–164

Wang H, Yi J-H (2018) An improved optimization method based on krill herd and artificial bee colony with information exchange. Memetic Comput 10:177–198

Wang L, Fu X, Mao Y, Menhas MI, Fei M (2012b) A novel modified binary differential evolution algorithm and its applications. Neurocomputing 98:55–75

Wang L, Zheng X-L (2018b) A knowledge-guided multi-objective fruit fly optimization algorithm for the multi-skill resource constrained project scheduling problem. Swarm Evol Comput 38:54–63

Wang G-G, Bai D, Gong W, Ren T, Liu X, Yan X (2018) Particle-swarm Krill Herd Algorithm. In: Paper presented at the IEEE International Conference on Industrial Engineering and Engineering Management (IEEM)

Wang G-G, Deb S, Coelho LDS (2015) Earthworm optimization algorithm: a bio-inspired metaheuristic algorithm for global optimization problems. Int J Bio-Inspired Comput 7:1–23

Wang G-G, Deb S, Gao X-Z, Coelho LDS (2016) A new metaheuristic optimisation algorithm motivated by elephant herding behaviour. Int J Bio-Inspired Comput 8(6):394–409

Wu G, Pedrycz W, Suganthan PN, Mallipeddi R (2015) A variable reduction strategy for evolutionary algorithms handling equality constraints. Appl Soft Comput 37:774–786

Yang X-S, Gandomi AH, Talatahari S, Alavi AH (2012) Metaheuristics in water, geotechnical and transport engineering. Newnes, Elsevier, Amsterdam

Yang X-S (2008) Introduction to mathematical optimization: From linear programming to metaheuristics. Cambridge International Science Publishing Ltd, Cambridge

Yang X-S (2010) Nature-inspired metaheuristic algorithms. Luniver Press

Yao X, Liu Y, Lin G (1999) Evolutionary programming made faster. IEEE Trans Evol Comput 3:82–102

Yi J-H, Deb S, Dong J, Alavi AH, Wang G-G (2018) An improved NSGA-III Algorithm with adaptive mutation operator for big data optimization problems. Futur Gener Comput Syst 88:571–585

Yi J-H, Wang J, Wang G-G (2016) Improved probabilistic neural networks with self-adaptive strategies for transformer fault diagnosis problem. Adv Mech Eng 8:1687814015624832

Yi J-H, Xing L-N, Wang G-G, Dong J, Vasilakos AV, Alavi AH et al (2020) Behavior of crossover operators in NSGA-III for large-scale optimization problems. Inf Sci 509:87–47

Zhan Z-H, Zhang J, Li Y, Shi Y-H (2010) Orthogonal learning particle swarm optimization. IEEE Trans Evol Comput 15:832–847

Zhou C, Gao HB, Gao L, Zhang WG (2003) Particle swarm optimization (PSO) algorithm [J]. Appl Res Comput 12:7–11

Zou D, Li S, Wang G-G, Li Z, Ouyang H (2016a) An improved differential evolution algorithm for the economic load dispatch problems with or without valve-point effects. Appl Energy 181:375–390

Zou D, Wang G-G, Sangaiah AK, Kong X (2017) A memory-based simulated annealing algorithm and a new auxiliary function for the fixed-outline floor planning with soft blocks. J Ambient Intell Humaniz Comput, pp 1–12

Zou D-X, Deb S, Wang G-G (2018) Solving IIR system identification by a variant of particle swarm optimization. Neural Comput Appl 30:685–698

Zou D-x, Wang G-g, Pan G, Qi H-w (2016b) A modified simulated annealing algorithm and an excessive area model for floor planning using fixed-outline constraints. Front Inf Technol Electr Eng 17:1228–1244

Author information

Contributions

Amin Ahwazian helped in study concept and design, acquisition of data, analysis and interpretation of data, drafting of the manuscript, statistical analysis, critical revision of the manuscript for important intellectual content. Atefeh Amindoust and Reza Tavakkoli-Moghaddam supervised the research. Mehrdad Nikbakht was the research consultant.

Ethics declarations

Conflict of interest

The authors declare that they have fully observed publishing ethics and have avoided plagiarism, misconduct, data forgery, and double publishing. There are no commercial interests in this article, and the authors have not received any payment for their manuscript. The authors also declare that this manuscript has not been published elsewhere. All rights to use the content, tables, images, etc., have been assigned to the publisher.

About this article

Cite this article

Ahwazian, A., Amindoust, A., Tavakkoli-Moghaddam, R. et al. Search in forest optimizer: a bioinspired metaheuristic algorithm for global optimization problems. Soft Comput 26, 2325–2356 (2022). https://doi.org/10.1007/s00500-021-06522-6

DOI: https://doi.org/10.1007/s00500-021-06522-6