Abstract

In order to improve the performance of a clustering on a data set, a number of primary partitions are generated and stored in an ensemble and their aggregated consensus partition is used as their clustering. It is widely accepted that the consensus partition outperforms the primary partitions. In this paper, an ensemble clustering method called multi-level consensus clustering (MLCC) is proposed. To construct the MLCC, a cluster–cluster similarity matrix which is achieved by an innovative similarity metric is first generated. The mentioned cluster–cluster similarity matrix is based on a multi-level similarity metric. In fact, it can be computed in a new defined multi-level space. Then, a point–point similarity matrix which is boosted using the mentioned cluster–cluster similarity matrix is generated. The new consensus function applies an average linkage hierarchical clusterer algorithm on the mentioned point–point similarity matrix to make consensus partition. MLCC is better than traditional clustering ensembles and simple versions of clustering ensembles on traditional cluster–cluster similarity matrix. Its computational cost is not very bad too. Accuracy and robustness of the proposed method are compared with those of the modern clustering algorithms through the experimental tests. Also, time analysis is presented in the experimental results.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Numerous real-world issues are resolved by utilizing extremely beneficial straightforward machine learning models (Nejatian et al. 2019; Pirbonyeh et al. 2019; Hosseinpoor et al. 2019; Partabian et al. 2020; Shabaniyan et al. 2019; Szetoa et al. 2020; Moradi et al. 2018; Jenghara et al. 2018a; Parvin et al. 2018; Alishvandi et al. 2016; Omidvar et al. 2018; Yasrebi et al. 2018). Nevertheless, since understandable machine learning models encounter difficulties in challenging issues (Nejatian et al. 2018; Jamalinia et al. 2018; Jenghara et al. 2018b), ensemble models have emerged as a new option in regard with the machine learning classification tasks (Rashidi et al. Aug. 2019; Mojarad et al. 2019a,b; Nazari et al. 2019; Bagherinia et al. 2019).

To find a partition with the goal of assigning data points within identical groups is a prominent and complicated matter which has been employed in many real-world problems such as knowledge extraction and pattern recognition. According to no free lunch (NFL), there is no dominant algorithm among many algorithms for solving a problem (Huang et al. 2016b; Fred and Jain 2005). Therefore, we know that there is a dominant algorithm depending on the at-hand data set. However, lack of knowledge on distributions, structures and natures of the clusters in the at-hand data set results in some problems which prevent us from detection of the most suitable clustering algorithm to our specific application (Roth et al. 2002).

Cluster ensembles have attracted enormous interest in result of several successful applications of ensemble learning in supervised fields such as (Freund and Schapire 1995; Ho 1995; Friedman 2001; Soto et al. 2014; Yu et al. 2015,2016a,2017). Many classification applications have shown a tendency towards hybrid methods in the past few years (Faceli et al. 2006) similar to every sub-field of the supervised pattern detection. Generally, several fields including data mining (Hong et al. 2008; Zhang et al. 2012; Yu et al. 2012,2016b; Naldi et al. 2013; Franek and Jiang 2014; Jiang et al. 2015; Huang et al. 2017; Yousefnezhad et al. 2018), multimedia (Rafiee et al. 2013) and bio-informatics (Yu et al. 2011,2013; Hanczar and Nadif 2012) have used various cluster ensemble approaches which have intended to clarify innovative partitions because they are stronger than simple clustering algorithms (Ayad and Kamel 2008). Using ensembles as a group of primary partitions to devise a consensus partitions is the objective of the ensemble clustering (Domeniconi and Al-Razgan 2009; Ghosh and Acharya 2011; Ghaemi et al. 2011). Consensus ensemble uses every primary partition within the ensemble in construction of the consensus partition, and the consensus partition enhances a specific objective function.

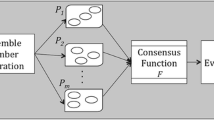

Cluster ensemble consists of two stages. First stage is to generate a pool of primary partitions. These primary partitions usually are the moderate or low-quality ones (Topchy et al. 2003). Actually, they should be low-quality but not without-quality. It is widely acceptable to generate these primary partitions through a simple clusterer method (usually k-means) on a number of dissimilar parameters (Topchy et al. 2005), on a number of dissimilar initializations, on a number of dissimilar data subspaces (Ayad and Kamel 2008), or on a number of dissimilar data subsamples (Minaei-Bidgoli et al. 2004). The proposed method has used all of the mentioned approaches.

In second step, we aim at finding a consensus partition that has maximum similarity to the primary partitions in our ensemble generated in the first step. Evidence accumulation clustering (EAC) is proposed in Fred and Jain (2002) as an ideal consensus function in which the ensemble is initially transformed into a co-association matrix (CAM), and then, the consensus partition is found by employing a single linkage hierarchical clusterer algorithm.

Normalized mutual information (NMI) measure is proposed in Fred and Jain (2005) to assess a cluster which has numerous deficiencies described in Alizadeh et al. (2011a). MAX is an edition of the normalized mutual information measure which has also some flaws. Therefore, Alizadeh–Parvin–Moshki–Minaei (APMM) measure has been introduced in Alizadeh et al. (2011b) as a metric to enhance the MAX. All of mentioned metrics, i.e. NMI, MAX, and APMM, are weak in dealing with complement cluster effect.

In this paper, an innovative cluster–cluster similarity measure is introduced. Then, we extend it to EAC. MLCC is inspired by dual-similarity clustering ensemble (DSCE) method (Alqurashi and Wang 2014,2015). DSCE and MLCC have three differences. First, MLCC has less sensitivity to the usage of the real number of clusters in primary partitions. Second, the MLCC method has less parameters than DSCE. Third, MLCC is a CAM-based consensus clustering, but DSCE is a voting-based consensus clustering that is an important consensus function clustering (Strehl and Ghosh 2000; Fern and Brodley 2003; Breiman 1996; Alizadeh et al. 2015; Iam-On et al. 2010,2012; Gionis et al. 2007; Yi et al. 2012; Mirzaei and Rahmati 2010; Akbari et al. 2015).

This article has been continued as follows. In Sect. 2, the clustering ensemble problem of the paper is defined. In Sect. 3, the related works are presented. Section 4 has explained the proposed method. Section 5 presents the experimental results, and the paper conclusions will be presented in Sect. 6.

2 Related work

Encapsulating ensemble information into a CAM and application of a hierarchical clusterer algorithm on the obtained CAM are needed for extracting the consensus partition in an approach that is based on CAM (Fred and Jain 2002). These types of clustering ensembles take the advantage of bypassing the need for a relabeling phase. As an instance, consensus partition was achieved in Fred and Jain (2005) through applying an average linkage hierarchical clusterer which we have named CO–AL as a slow and CAM-based method. A new CAM which considers the between cluster relations was introduced in Iam-On et al. (2008) where the implemented approach was named SRS which can be considered to be a slow method. It was based on CAM and used link similarity. After that, it was extended it into WCT method (Iam-On et al. 2011).

Instead of an object–object similarity CAM, a cluster–cluster similarity matrix was introduced in Mimaroglu and Aksehirli (2012) where a method named DICLENS was offered as a CAM-based, slow, and robust approach. An object-neighbourhood-based similarity matrix was introduced in where a new cluster–cluster similarity matrix was obtained by neighbourhood and real relation between objects. This method that was named ONCE–AL together with its extension DSCE method introduced in Alqurashi and Wang (2015), can be considered to be a heuristic, fast, and parameter-sensitive approach. The mentioned method later extended (Huang et al. 2016b). An extended version of the DSCE was also proposed in Huang et al. (2015) which introduces WEAC and GPMGLA as a pair of two cluster weighting, CAM-based, slow and robust clustering ensemble methods.

CSEAC was proposed in Alizadeh et al. (2014a) as a CAM-based, very slow robust clustering ensemble method, and also Wisdom of Crowds Ensemble (WCE) was introduced in Alizadeh et al. (2015) as a CAM-based, slow, robust and flexible ensemble method. Another related work is ECSEAC which is a cluster selection-based ensemble clustering algorithm proposed in Parvin and Minaei-Bidgoli (2015) as a cluster weighting, CAM-based, slow and robust approach. TME is also an ensemble method which was proposed in Zhong et al. (2015) as a cluster weighting and CAM-based one; it is widely considered to be a very slow and robust method.

Clustering ensemble has been reformulated as a linear binary programming problem by Huang et al. (2016). This approach was also extended and used for categorical data set by Zhao et al. (2017).

A cluster ensemble was introduced through sampling by Yang and Jiang (2016) which was inspired by bagging and boosting theory. Also, information theory as a suitable tool for data clustering was used to introduce a cluster ensemble method by Bai et al. (2017).

Consensus partition was extracted by Strehl and Ghosh (2003) by partitioning a hyper-graph which is constructed on a CAM so that each row and each column in the CAM are, respectively, assumed to be a node and a hyper-edge. The hyper-graph partitioning algorithm used in the mentioned work could be HMETIS (Dimitriadou et al. 2002). This algorithm is named cluster-based similarity partitioning algorithm (CSPA). Hyper-graph partitioning algorithm (HGPA) and meta-clustering algorithm (MCLA) are some other examples of the methods in which each cluster is considered to be a hyper-edge and each data as a node. CSPA has been extended by Fren and Brodley (2004) as a hybrid bi-partite graph formulation (HBGF) which outperforms HGPA, MCLA and CSPA.

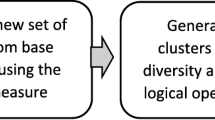

A cluster ensemble method was proposed in Rashidi et al. (2019) which did not need the original features of data set and also did not consider any distribution in the data set. Moreover, it used assessment of the clusters’ undependability and the weighting mechanism to suggest a clustering ensemble. In addition, diversity was defined in cluster level. During consensus function process, quality of the clusters and their diversity were used in order to improve the consensus partition. Any clusters' dependability was computed based on its undependability which itself was calculated according to the entropy in the labels of its data points throughout all the ensemble partitions. Also, an innovational cluster-wise weighing CAM was suggested according to measuring the clusters’ reliability and then emphasizing those with higher reliability in the ensemble. Furthermore, a cluster-wise weighting bi-partite graph was suggested to offer the ensemble based on the related cluster-wise weighing co-association matrix. Finally, the consensus partition was extracted using two mechanisms including application of a simple hierarchical clustering algorithm for cluster-wise weighing of the co-association matrix as a similarity matrix and cluster-wise weighting bi-partite graph partitioning into a certain number of parts.

The idea proposed in Alizadeh et al. (2014a) is to find a subset of primary clusters which performs better in the ensemble, and through participating the clusters with more quality in consensus function process, the consensus partition performance was improved. In this regard, many clusters are first gained by specific generation of primary results. Then, a goodness function is used to evaluate and also to sort the achieved clusters. After that, with the goal of finding more efficient clusters for participating in the ensemble, a specific subset of primary clusters is offered for each class of datasets. The selected clusters are then combined to extract the final clusters which is followed by a post-processing.

A supreme consensus partition was produced in Alizadeh et al. (2013) by combination of spurious clustering results where the objective function was inspired by proposed immature formulation in which simultaneous maximization of the agreement between the ensemble members and minimization of the disagreement was implemented. They improve a nonlinear binary goal function which was introduced to propose a constrained nonlinear fuzzy objective function. The genetic algorithm was also used to solve the proposed model and while any other optimizer could be used to solve the model.

In the clustering ensemble proposed in Minaei-Bidgoli et al. (2014), a simple adaptive attitude was suggested for generating primary partitions. It uses the clustering history. Since it is supposed in the clustering that the ground truth is not achievable in the class labels form, it is needed to use an alternative performance measure for an ensemble of partitions throughout process of the clustering. To determine clustering consistency of data points, a history of cluster assignments was evaluated in the generated sequence of the partitions for each data point which could adapt the data sampling during the partition generation to the current state of an ensemble that improves the confidence in cluster assignments.

By extending the previous clustering frameworks, ensemble and swarm concepts in the clustering field were suggested in Parvin et al. (2012) where an unprecedented clustering ensemble learning method was introduced according to the ant colony clustering algorithm. Since diversity of the ensemble is very important and swarm intelligence is inherently related to random processes, a new dataset space was introduced by diverse partitions of the acquired results from different runs of the ant colony clustering algorithm with different initializations on a data set and they were aggregated into a consensus partition by a simple clusterer algorithm. The contribution of swarm intelligence in reaching better results was also measured.

Benefits of the ensemble diversity together with quality of the fuzzy clustering-level were used to select a subset of fuzzy base-clusterings in Bagherinia et al. (2019). Integrating diversity of the base-clusterings and quality of the clustering level was resulted into enhancement of the final clustering quality. It first calculated a new fuzzy normalized mutual information (FNMI) and then calculated the diversity of each fuzzy base-clustering in relation to other fuzzy base-clusterings. After that, the calculated diversity was used to cluster all the base-clusterings which resulted in clusters of base-clusterings named base-clusterings-clusters. In subsequence, a base-clusterings-cluster is selected which satisfies the quality measure. Final clustering was achieved by using a novel consensus method derived by graph portioning algorithm named FCBGP algorithm or by forming extended fuzzy co-association matrix (EFCo) which is finally considered as similarity matrix.

Because of lower information or higher variances some features of under-consideration data set have more information than the others in that data set, weighted locally adaptive clustering (WLAC) algorithm was proposed in Parvin and Minaei-Bidgoli (2013) as a weighted clustering algorithm which could encounter the imbalanced clustering. WLAC algorithm is very sensitive to the two parameters which was resulted in proposing a simple clustering ensemble framework and an elite cluster ensemble framework to manage those two control parameters. It was later extended into a fuzzy version, i.e. Fuzzy WLAC (FWLAC) algorithm (Parvin and Minaei-Bidgoli 2015).

A novel approach for clustering ensemble was also suggested in Abbasi and Nejatian (2019). It uses a subset of primary clusters. It proposed to evaluate the cluster quality by suggesting stab as a new validity measure so that the clusters that satisfy a stab threshold are qualified to be used in construction of the consensus partition. A set of methods for consensus function such as co-association based ones were used to combine the chosen clusters. Extended evidence accumulation clustering (EEAC) was used for co-association matrix construction from the selected clusters because it was not possible for evidence accumulation clustering (EAC) method to use a subset of clusters in construction of co-association matrix. Other class of consensus functions was also used based on hyper-graph partitioning algorithms. In another one, the chosen clusters were considered to be a new feature space and the consensus partition was extracted by using a simple clusterer algorithm.

Averaged Alizadeh–Parvin–Moshki–Minaei (AAPMM) criterion was introduced in Alizadeh et al. (2014b) for assessing a cluster quality. It was proposed to use AAPMM for obtaining quality of clusters. Then, in their clustering ensemble method, the clusters satisfying an AAPMM value threshold participate in constructing the co-association matrix. In addition, EEAC was proposed as a new method for matrix establishment. Finally, consensus partition extraction was performed by application of a hierarchical clusterer method over the obtained co-association matrix.

A primary partitions' generator was suggested in Parvin et al. (2013). It could be considered to be a boosting mechanism in clustering. As true labels are needed for each cluster to be available in weight updating of boosting, the clustering needs an alternative performance metric for an ensemble of partitions to update the probability sampling vector. This study has determined the clustering consistency of data points was determined to assess a cluster assignment history for every data point in the generated partitions of ensemble. In order to have a suitable data sampling, determination of the clustering consistency for produced partitions was performed during the partition generation. The adaptation concentrated the sampling distribution on problematic regions of the feature space to modify the confidence in cluster assignments. Accordingly, better approximation of the inter-cluster boundaries was performed by concentrating the notice on the data points which have the minimum consistent clustering assignments.

3 Clustering ensemble

3.1 Clustering ensemble definition

Let’s assume a set of data points be denoted by \({\mathcal{X}}\). In the data set \({\mathcal{X}}\), \(i\) th feature of all data points is denoted by data set \({\mathcal{X}}_{:,i}\) and \(j\) th data point is denoted by \({\mathcal{X}}_{j,:}\). The number of data points in the data set \({\mathcal{X}}\) is denoted by \(\left| {{\mathcal{X}}_{:,1} } \right|\). The number of features in the data set \({\mathcal{X}}\) is denoted by \(\left| {{\mathcal{X}}_{1,:} } \right|\). A partition on the data set \({\mathcal{X}}\) is denoted by \(\pi_{{\mathcal{X}}}\) (also, \(\pi_{{\mathcal{X}}}^{k}\) is \(k\) th partition on the data set \({\mathcal{X}}\) where \(k\) is an integer value indicating the index of partition). The partition \(\pi_{{\mathcal{X}}}\) contains a \(\left| {\pi_{{{\mathcal{X}},:}} } \right|\) subsets of \(\left\{ {1,2, \ldots ,\left| {{\mathcal{X}}_{:,1} } \right|} \right\}\). Let’s denote \(p\) th subset of partition \(\pi_{{\mathcal{X}}}\) by \(\pi_{{{\mathcal{X}},p}}\). To name \(\pi_{{\mathcal{X}}}\) a non-overlapping partition, we must have

If the Eq. 1 holds for a partition, we name it a non-overlapping partition. From now on, without loss of generality, we assume all of the partitions, discussed in the paper, are non-overlapping. Any member of a partition, i.e. \(\pi_{{{\mathcal{X}},p}}\), is considered to be a cluster. If the union of all clusters in a partition is \(\left\{ {1,2, \ldots ,\left| {{\mathcal{X}}_{:,1} } \right|} \right\}\), then the partition is named a complete partition. It means the Eq. 2 must be correct for a partition \(\pi_{{\mathcal{X}}}\) to name it a complete partition.

Let’s assume a data set \({\mathcal{X}}\) has a desired clustering similar to its ground-truth labels denoted by \(\pi_{{\mathcal{X}}}^{ + }\). Note that we have not assumed that any of \(\left| {\pi_{{{\mathcal{X}},:}}^{k} } \right|\) should be equal to \(\left| {\pi_{{{\mathcal{X}},:}}^{ + } } \right|\). Each cluster, say \(\pi_{{{\mathcal{X}},p}}\), has a central point denoted by \(C^{{\pi_{{{\mathcal{X}},p}} }}\) defined according to Eq. 3.

We denote the complete version of partition \(\pi_{{\mathcal{X}}}\) by \(\dot{\pi }_{{\mathcal{X}}}\) whose \(p\) th cluster is defined according to Eq. 4.

We consider all of the partitions \(\pi_{{\mathcal{X}}}^{k}\) to be an ensemble (denoted by \({\varvec{\pi}}_{{\mathcal{X}}}\)) with the ensemble size \(\left| {{\varvec{\pi}}_{{\mathcal{X}}}^{:} } \right|\). Our aim is to combine them to extract a consensus partition denoted by \(\pi_{{\mathcal{X}}}^{*}\) in such a way that it is as much robust and high-quality as possible. Formally, the ensemble clustering intends to find a better consensus partition by integrating the information of the primary partitions in the ensemble.

Clustering ensemble domain has examined the creation of an ensemble and also the consensus function construction which both have highly influenced the outcomes of the final clustering. Since variety of the results from the base learners is a crucial factor to make a successful ensemble, the diversity could be extended into the clustering ensemble field in regard to classifier ensemble.

3.2 Ensemble creation

First of all, the ensemble members should be created in clustering ensemble framework. To find various data substructures and also to enhance the potential performance of consensus partition, we must use at least one of the clustering generation methods that guarantee to produce an ensemble of partitions where any of them has a minimum quality and all of them have a minimum diversity.

Many problem-specific ensemble generation methods have been introduced. Subspace clustering has been done on different projections of data set by Strehl and Ghosh (2000). Along to feature subset selection, a subsampling (or instance subset selection) is also used for generating each partition. Almost the same approach was proposed by Fern and Brodley (2003). Breiman (1996) first presents subsampling in machine learning, and later, it was extended by Minaei-Bidgoli et al. (2004).

An alternative to the prior methods including a subset of primary members within the ensemble was used by Alizadeh et al. (2011a,2011b). They used a subsampling for generating each primary partition. Each partition is attained by running a randomly initialized k-means clustering algorithm. Then, a subset of primary partitions that has maximum diversity is selected as final ensemble. A similar study was also conducted by Nazari et al. (2019).

Since k-means clusterer algorithm is an easy and efficient clusterer algorithm (Minaei-Bidgoli et al. 2004), it is considered to be the most suitable clusterer algorithm in ensemble generation. Thus, for each base clustering, k-means clusterer method was used in Alizadeh et al. (2015) with two random elements: randomly initialized seed points and randomly selected number of clusters. These random sources cause to produce a diverse ensemble. It is widely recommended to select an integer value randomly in \(\left[ {2;\sqrt {\left| {{\mathcal{X}}_{:,1} } \right|} } \right]\) as the number of clusters (Iam-On et al. 2010,2012) during generating the ensemble. Different clusterer algorithms can be also a source for generating a diverse ensemble (Gionis et al. 2007; Yi et al. 2012; Mirzaei and Rahmati 2010; Akbari et al. 2015).

For ensemble creation, Wisdom of Crowds (WOC) was proposed in Alizadeh et al. (2015) as a concept in social sciences field that provides suitable criteria for group behaviour. Meeting these criteria could result in favourable ensembles. Therefore, WOC cluster ensemble (WOCCE) has been proposed. It was later extended in Yousefnezhad et al. (2018).

3.3 Ensemble combiner

In the literature, researchers pay more attention to ensemble combiner. It can be viewed as a function with two inputs: (a) ensemble members or partitions and (b) the number of clusters, i.e. \(\left| {\pi_{{{\mathcal{X}},:}}^{ + } } \right|\). It returns an output, widely referred to as consensus or aggregated partition, i.e. \(\pi_{{{\mathbf{\mathcal{X}}},:}}^{*}\).

As there are some widely accepted approaches for ensemble combiner, we will present a brief summarization on them here. Any ensemble combiner is in one category of the following ones: (a) category of CAM based ensemble combiners, (b) category of median partition based ensemble combiners, (c) category of graph partitioning based ensemble combiners, (d) category of voting based ensemble combiners, and (e) category of intermediate space based ensemble combiners.

The methods in the first category transform information of ensemble partitions into a similarity matrix, named CAM, and then, they apply a second clustering, widely a hierarchical one, to find the consensus partition. The methods in the second category transform the finding of consensus partition into an optimization problem, and then, they solve it through an optimizer. The methods in the third category transform the finding of consensus partition into a graph clustering problem, and then, they solve it through a graph partitioning algorithm. The methods in the fourth category first make a relabeling between all of the partitions, and then, they find consensus partition by a voting or averaging mechanism. The methods in the fifth category assume the clusters of data points as new intermediate features, and then, they apply a secondary simple clusterer algorithm on the new intermediate data set to find consensus partition.

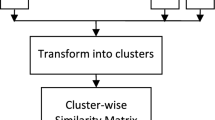

Figure 1 depicts framework of the proposed framework. In the proposed framework, the initial data set is subsampled several times and then by applying a k-means clusterer method on each of them to partition them into a random number of clusters, an ensemble of \(\left|{{\varvec{\pi}}}_{\mathcal{X}}^{:}\right|\) partitions denoted by \({\pi }_{\mathcal{X}}^{q}\) will be generated, where \(q=\left\{1,\dots ,\left|{\pi }_{\mathcal{X}}^{:}\right|\right\}\). After that, each partition is extended to its complete version, i.e. \({\dot{\pi }}_{\mathcal{X}}^{q}\). After that, the ensemble is converted into a cluster representation, and then, a similarity matrix is created to determine the similarities of the clusters. In the next step, a CAM boosted cluster similarity matrix is obtained. Finally, a merging mechanism (a hierarchical clusterer algorithm) and post-processing are used to determine the consensus partition.

4 Proposed consensus function

Ensemble combiner or consensus function is the main part in a clustering ensemble framework. It must be appropriately designed so as to maximally extract ensemble information. We first create a graph according to Eq. 5 for our clustering ensemble \({\varvec{\pi}}_{{\mathcal{X}}}\).

In Eq. 5, \({\mathcal{V}}\left( {{\varvec{\pi}}_{{\mathcal{X}}} } \right)\) is set of indices of all the data clusters that are in the ensemble, i.e. \(\left\{ {1,2, \ldots ,\left| {{\varvec{\pi}}_{{{\mathcal{X}},:}} } \right|} \right\}\) where \(\left| {{\varvec{\pi}}_{{{\mathcal{X}},:}} } \right|\) denotes the number of clusters in ensemble \({\varvec{\pi}}_{{\mathcal{X}}}\) and \({\mathcal{E}}\left( {{\varvec{\pi}}_{{\mathcal{X}}} } \right)\) is set of edge weights for graph \({\mathcal{G}}\left( {{\varvec{\pi}}_{{\mathcal{X}}} } \right)\). The term \(\left| {{\varvec{\pi}}_{{{\mathcal{X}},:}} } \right|\) is defined according to Eq. 6.

In Eq. 5, \({\mathcal{E}}\left( {{\varvec{\pi}}_{{\mathcal{X}}} } \right)\) is defined according to Eq. 7.

where \(w_{{{\varvec{\pi}}_{{\mathcal{X}}} }} \left( {j_{1} ,j_{2} } \right)\) is defined according to Eq. 8.

where \(k_{{j,{\varvec{\pi}}_{{\mathcal{X}}} }}\) and \(p_{{j,{\varvec{\pi}}_{{\mathcal{X}}} }}\) are, respectively, defined according to Eqs. 8 and 9.

In Eq. 8, \(SCCS_{{{\varvec{\pi}}_{{\mathcal{X}}} }} \left( {A,B} \right)\) stands for a simple cluster–cluster similarity function. It is the corrected version of the set correlation ratio. The set correlation first emerged in (Houle, 2008). It is defined according to Eq. 11.

It can be proven that \(SCCS_{{{\varvec{\pi}}_{{\mathcal{X}}} }} \left( {A,B} \right)\) is less than or equal to one and greater than or equal to zero. If the cluster A and cluster B have maximum similarity, it will return one. If the cluster \(A\) and cluster \(B\) have minimum similarity, it will return zero (Vinh and Houle 2010). It can be proven that \(w_{{{\varvec{\pi}}_{{\mathcal{X}}} }}\) is reflexive. Now, assuming we are at the \(j_{1}\) th cluster, i.e. \(\dot{\pi }_{{{\mathcal{X}},p_{{j_{1} ,{\varvec{\pi}}_{{\mathcal{X}}} }} }}^{{k_{{j_{1} ,{\varvec{\pi}}_{{\mathcal{X}}} }} }}\), we intend to define a transition probability to cluster the \(j_{2}\) th cluster, i.e. \(\dot{\pi }_{{{\mathcal{X}},p_{{j_{2} ,{\varvec{\pi}}_{{\mathcal{X}}} }} }}^{{k_{{j_{2} ,{\varvec{\pi}}_{{\mathcal{X}}} }} }}\) after \(r\) steps denoted by \(TP_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{r} \left( {j_{1} ,j_{2} } \right)\). For \(r = 1\), it is defined according to Eq. 12.

Now, we have the matrix \({\varvec{TP}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}\) whose \(\left( {i,j} \right)\) th entry shows the probability that a traveller that is currently at the \(i\) th cluster will be at the \(j\) th cluster in the next step.

To compute the probability that a traveller that is currently at the \(i\) th cluster will be at the \(j\) th cluster in the next two steps, we should compute it by Eq. 13.

Therefore, it is easy to prove that \({\varvec{TP}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{2} = {\varvec{TP}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{1} \times {\varvec{TP}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{1}\). Also, it is easy to show the transition probability matrix after \(r\) steps, i.e. \({\varvec{TP}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{r}\), is computed according to Eq. 14.

Now, we introduce a new feature space for clusters of the ensemble, denoted by \({\varvec{NF}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{r}\), as follows in Eq. 15.

Note that each row corresponds to a cluster and each column corresponds to a feature in our new feature space. Therefore, we have \(\left| {{\varvec{\pi}}_{{{\mathcal{X}},:}} } \right|\) rows and \(\left| {{\varvec{\pi}}_{{{\mathcal{X}},:}} } \right| \times r\) defined features. According to a distance metric (here, we use Euclidian distance), the distances between pairs of the ensemble clusters are computed. The mentioned distances for all pairs of clusters in the ensemble are stored in a distance matrix denoted by \({\varvec{EuclidianDis}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{r}\) and defined based on Eq. 16.

Correspondingly, a distance matrix can be denoted by \({\varvec{CosineDis}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{r}\) and defined based on Eq. 17 if we use Cosine distance.

According to Eq. 18, the cluster–cluster similarity matrix, denoted by \({\varvec{CCS}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{r}\), is computed.

Now, we define a point–point similarity matrix, denoted by \({\varvec{PPS}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{r}\), according to Eq. 19.

where \(CI_{{{\varvec{\pi}}_{{\mathcal{X}}} }} \left( {{\mathcal{X}}_{j,:} ,b} \right)\) is the index of the cluster to which the \(j\) th data point belongs in the \(b\) th partition (note the index is computed among all clusters of ensemble). It is computed according to Eq. 20.

It is worthy to be mentioned that the matrix \({\varvec{PPS}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{r}\) is symmetric, i.e. \(PPS_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{r} \left( {j_{1} ,j_{2} } \right) = PPS_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{r} \left( {j_{2} ,j_{1} } \right)\), just like \(w_{{{\varvec{\pi}}_{{\mathcal{X}}} }}\). Therefore, we propose to apply an agglomerative hierarchical complete linkage clustering algorithm on the attained point–point similarity matrix, i.e. \({\varvec{PPS}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{r}\), to cluster data points into a set of \(\left| {\pi_{{{\mathcal{X}},:}}^{ + } } \right|\) clusters. The mentioned obtained set of \(\left| {\pi_{{{\mathcal{X}},:}}^{ + } } \right|\) clusters is considered to be consensus partition.

Toy Example: We have assumed a dataset with 13 instances and three clusters. Let’s assume we want to generate an ensemble of size 5 using k-means clusterer algorithm. We have considered k-means clusterer algorithm running 5 times on our dataset to partition them into 2, 2, 3, 3 and 3 clusters, respectively. Let’s assume we have obtained an ensemble of size 5 on 13 instances. The assumptive ensemble is considered to be presented by Fig. 2. Therefore, we have ensemble \({\varvec{\pi}}_{{\mathbf{\mathcal{X}}}} = \left\{ {\pi_{{{\mathbf{\mathcal{X}}},1}} ,\pi_{{{\mathbf{\mathcal{X}}},2}} ,\pi_{{{\mathbf{\mathcal{X}}},3}} ,\pi_{{{\mathbf{\mathcal{X}}},4}} ,\pi_{{{\mathbf{\mathcal{X}}},5}} } \right\}\) where first partition is as follows \(\pi_{{{\mathbf{\mathcal{X}}},1}} = \left\{ {\pi_{{{\mathbf{\mathcal{X}}},1}}^{1} ,\pi_{{{\mathbf{\mathcal{X}}},1}}^{2} } \right\}\), second partition is as follows \(\pi_{{{\mathbf{\mathcal{X}}},2}} = \left\{ {\pi_{{{\mathbf{\mathcal{X}}},2}}^{1} ,\pi_{{{\mathbf{\mathcal{X}}},2}}^{2} } \right\}\), third partition is as follows \(\pi_{{{\mathbf{\mathcal{X}}},3}} = \left\{ {\pi_{{{\mathbf{\mathcal{X}}},3}}^{1} ,\pi_{{{\mathbf{\mathcal{X}}},3}}^{2} ,\pi_{{{\mathbf{\mathcal{X}}},3}}^{3} } \right\}\), fourth partition is as follows \(\pi_{{{\mathbf{\mathcal{X}}},4}} = \left\{ {\pi_{{{\mathbf{\mathcal{X}}},4}}^{1} ,\pi_{{{\mathbf{\mathcal{X}}},4}}^{2} ,\pi_{{{\mathbf{\mathcal{X}}},4}}^{3} } \right\}\), and fifth partition is as follows \(\pi_{{{\mathbf{\mathcal{X}}},5}} = \left\{ {\pi_{{{\mathbf{\mathcal{X}}},5}}^{1} ,\pi_{{{\mathbf{\mathcal{X}}},5}}^{2} ,\pi_{{{\mathbf{\mathcal{X}}},5}}^{3} } \right\}\).

Also, clusters of first partition are as follows: \(\pi_{{{\mathbf{\mathcal{X}}},1}}^{1}\) is equal to \(\left\{ {{\mathcal{X}}_{1,:} ,{\mathcal{X}}_{2,:} ,{\mathcal{X}}_{3,:} ,{\mathcal{X}}_{4,:} ,{\mathcal{X}}_{6,:} ,{\mathcal{X}}_{7,:} ,{\mathcal{X}}_{11,:} } \right\}\), and \(\pi_{{{\mathbf{\mathcal{X}}},1}}^{2}\) is equal to \(\left\{ {{\mathcal{X}}_{5,:} ,{\mathcal{X}}_{8,:} ,{\mathcal{X}}_{9,:} ,{\mathcal{X}}_{10,:} ,{\mathcal{X}}_{12,:} ,{\mathcal{X}}_{13,:} } \right\}\). Clusters of second partition are as follows: \(\pi_{{{\mathbf{\mathcal{X}}},2}}^{1}\) is equal to \(\left\{ {{\mathcal{X}}_{1,:} ,{\mathcal{X}}_{4,:} ,{\mathcal{X}}_{7,:} ,{\mathcal{X}}_{8,:} ,{\mathcal{X}}_{9,:} ,{\mathcal{X}}_{11,:} ,{\mathcal{X}}_{12,:} } \right\}\), \(\pi_{{{\mathbf{\mathcal{X}}},2}}^{2}\) is equal to \(\left\{ {{\mathcal{X}}_{2,:} ,{\mathcal{X}}_{3,:} ,{\mathcal{X}}_{5,:} ,{\mathcal{X}}_{6,:} ,{\mathcal{X}}_{10,:} ,{\mathcal{X}}_{13,:} } \right\}\). Clusters of third partition are as follows: \(\pi_{{{\mathbf{\mathcal{X}}},2}}^{2}\) \(\pi_{{{\mathbf{\mathcal{X}}},3}}^{1}\) is equal to \(\left\{ {{\mathcal{X}}_{1,:} ,{\mathcal{X}}_{2,:} ,{\mathcal{X}}_{3,:} ,{\mathcal{X}}_{4,:} ,{\mathcal{X}}_{11,:} ,{\mathcal{X}}_{12,:} } \right\}\), \(\pi_{{{\mathbf{\mathcal{X}}},3}}^{2}\) is equal to \(\left\{ {{\mathcal{X}}_{8,:} ,{\mathcal{X}}_{9,:} } \right\}\), \(\pi_{{{\mathbf{\mathcal{X}}},3}}^{3}\) is equal to \(\left\{ {{\mathcal{X}}_{5,:} ,{\mathcal{X}}_{6,:} ,{\mathcal{X}}_{7,:} ,{\mathcal{X}}_{10,:} ,{\mathcal{X}}_{13,:} } \right\}\). Clusters of fourth partition are as follows: \(\pi_{{{\mathbf{\mathcal{X}}},4}}^{1}\) is equal to \(\left\{ {{\mathcal{X}}_{3,:} ,{\mathcal{X}}_{5,:} ,{\mathcal{X}}_{6,:} ,{\mathcal{X}}_{9,:} } \right\}\), \(\pi_{{{\mathbf{\mathcal{X}}},4}}^{2}\) is equal to \(\left\{ {{\mathcal{X}}_{10,:} ,{\mathcal{X}}_{11,:} ,{\mathcal{X}}_{12,:} ,{\mathcal{X}}_{13,:} } \right\}\), \(\pi_{{{\mathbf{\mathcal{X}}},4}}^{3}\) is equal to \(\left\{ {{\mathcal{X}}_{1,:} ,{\mathcal{X}}_{2,:} ,{\mathcal{X}}_{4,:} ,{\mathcal{X}}_{7,:} ,{\mathcal{X}}_{8,:} } \right\}\). Finally, clusters of last partition are as follows: \(\pi_{{{\mathbf{\mathcal{X}}},5}}^{1}\) is equal to \(\left\{ {{\mathcal{X}}_{9,:} ,{\mathcal{X}}_{10,:} ,{\mathcal{X}}_{11,:} ,{\mathcal{X}}_{12,:} ,{\mathcal{X}}_{13,:} } \right\}\), \(\pi_{{{\mathbf{\mathcal{X}}},5}}^{2}\) is equal to \(\left\{ {{\mathcal{X}}_{3,:} ,{\mathcal{X}}_{5,:} ,{\mathcal{X}}_{6,:} } \right\}\), \(\pi_{{{\mathbf{\mathcal{X}}},5}}^{3}\) is equal to \(\left\{ {{\mathcal{X}}_{1,:} ,{\mathcal{X}}_{2,:} ,{\mathcal{X}}_{4,:} ,{\mathcal{X}}_{7,:} ,{\mathcal{X}}_{8,:} } \right\}\).

\({\varvec{SCCS}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}\) for the ensemble presented in Fig. 2 is depicted in Fig. 3. Now, we have a graph \({\mathcal{G}}\left( {{\varvec{\pi}}_{{\mathcal{X}}} } \right)\) where set of its vertices, i.e. \({\mathcal{V}}\left( {{\varvec{\pi}}_{{\mathcal{X}}} } \right)\), is \(\left\{ {1,2,3,4,5,6,7,8,9,10,11,12,13} \right\}\) and set of its edges, i.e. \({\mathcal{E}}\left( {{\varvec{\pi}}_{{\mathcal{X}}} } \right)\), is presented in Fig. 4. Figure 5 depicts cluster–cluster similarity matrix, i.e. \({\varvec{CCS}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{2}\), when (a) Euclidian distance, i.e. \({\varvec{EuclidianDis}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{2}\), or (b) Cosine distance, i.e. \({\varvec{CosineDis}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{2}\) is used for our toy ensemble. Finally, Fig. 6 depicts point–point similarity matrix, i.e. \({\varvec{PPS}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{2}\), when (a) Euclidian distance, i.e. \({\varvec{EuclidianDis}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{2}\), or (b) Cosine distance, i.e. \({\varvec{CosineDis}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{2}\) is used for our toy ensemble.

The \({\varvec{SCCS}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}\) for the ensemble presented in Fig. 2

The edges of the transition graph, i.e. \({\mathcal{E}}\left( {{\varvec{\pi}}_{{\mathcal{X}}} } \right)\), for the ensemble presented in Fig. 2

Cluster–cluster similarity matrix, i.e. \({\varvec{CCS}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{2}\), when (a) Euclidian distance, i.e. \({\varvec{EuclidianDis}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{2}\), or (b) Cosine distance, i.e. \({\varvec{CosineDis}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{2}\) is used for the ensemble presented in Fig. 2

Point–point similarity matrix, i.e. \({\varvec{PPS}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{2}\), when (a) Euclidian distance, i.e. \({\varvec{EuclidianDis}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{2}\), or (b) Cosine distance, i.e. \({\varvec{CosineDis}}_{{{\varvec{\pi}}_{{\mathcal{X}}} }}^{2}\) is used for the ensemble presented in Fig. 2

5 Experimental study

5.1 Datasets

Many real-world and synthetic data sets are here employed for assessment. The real-world data sets are as follows: Bupa, Breast-cancer, Glass, Galaxy, Ionosphere, Iris, ISOLET, Landsat-satellite, Letter-Recognition, SAHeart, Wine, and Yeast from UCI machine learning repository (Newman et al. 1998) together with an auxiliary real data set of USPS (Dueck 2009). Bupa, Breast-cancer, Glass, Galaxy, Ionosphere, Iris, ISOLET, Landsat-satellite, Letter-Recognition, SAHeart, USPS, Wine, and Yeast data sets have 345, 683, 214, 323, 351, 150, 7,797, 6,435, 20,000, 462, 11,000, 178, and 1,484 data samples, respectively; they also have 6, 9, 9, 4, 34, 4, 617, 36, 16, 9, 256, 13, and 8 features and 2, 2, 6, 7, 2, 3, 26, 6, 26, 2, 10, 3, and 10 clusters, respectively.

Our benchmark includes two dimensions synthetic data sets including \({Artificial}_{1}\), \({Artificial}_{2}\), \({Artificial}_{3}\), and Halfring. Halfring data set contains two clusters and 400 instances, while other three data sets contain three clusters and 300 instances. The scatter plots of these synthetic data sets are depicted in Fig. 7. We have standardized all of our mentioned data sets so that in all datasets, each feature is transformed into a new range with distribution of \(N\left(\mathrm{0,1}\right)\) and the features with missed values are removed from the data sets.

5.2 Parameters

The ensemble generation has been accomplished according to the technique introduced in Ren et al. (2013). The k-means clustering algorithm was employed for 50 different subsets of a given data set to generate ensemble of size 50. The sampling ratio employed in this paper is 0.8.

The following recent clustering ensembles have been employed as a benchmark to this paper: HBGF (Fern and Brodley 2004), CO–AL (Fred and Jain 2005), SRS (Iam-On et al. 2008), WCT (Iam-On et al. 2011), DICLENS (Mimaroglu and Aksehirli 2012) , ONCE –AL (Alqurashi and Wang 2014), CSEAC (Alizadeh et al. 2014a), DSCE (Alqurashi and Wang 2015), WEAC (Huang et al. 2015), GPMGLA (Huang et al. 2015), WCE (Alizadeh et al. 2015) ECSEAC (Parvin and Minaei-Bidgoli 2015) and TME (Zhong et al. 2015).

For a given clustering ensemble, its initialization is performed according to its corresponding paper. The results of the paper are provided after averaging on 30 independent runs, while all methods are considered with the ensemble size of 100.

5.3 Evaluation metrics

Internal measures such as silhouette or sum of square errors (SSE) computed without caring about the ground-truth labels of the data could be used to assess a clustering result on a given data set. External measures computed considering the ground-truth labels of the data are other set of the measures to assess a resultant partition on a given data set. Note that the ground-truth labels of the data are only used for assessment after obtaining the resultant partition, and they are not used during the clustering task. Although both the internal and external sets of the measures are popular, we have only used four external measures of adjust rand index (ARI), normalized mutual information (NMI) and accuracy (ACC) rate and F-measure (FM). Given the ground-truth labels \({\pi }_{\mathcal{X}}^{+}\), adjust rand index of a resultant partition \({\pi }_{\mathcal{X}}^{*}\) is achieved using the following equation

where \(\left| {\pi_{{{\mathbf{\mathcal{X}}},i}}^{*} \cap \pi_{{{\mathbf{\mathcal{X}}},j}}^{ + } } \right|\) is the number of data points shared between the \(i\) th cluster of consensus partition \(\pi_{{\mathbf{\mathcal{X}}}}^{*}\) and the \(j\) th cluster of target partition \(\pi_{{\mathbf{\mathcal{X}}}}^{ + }\). The binomial function \(\left( {\begin{array}{*{20}c} x \\ 2 \\ \end{array} } \right)\) is obtained as

Given the ground-truth partition \(\pi_{{\mathbf{\mathcal{X}}}}^{ + }\), the normalized mutual information for a resultant partition \(\pi_{{\mathbf{\mathcal{X}}}}^{*}\) is achieved by

Also, accuracy of \(\pi_{*}\) is computed by

where the relabeling task \(\sigma\) is a permutation of \(\left\{ {1,2, \ldots ,\left| {\pi_{{{\mathbf{\mathcal{X}}},:}}^{*} } \right|} \right\}\) so that \(\sigma_{j} \in \left\{ {1,2, \ldots ,\left| {\pi_{{{\mathbf{\mathcal{X}}},:}}^{*} } \right|} \right\}\), \(\forall i \in \left\{ {1,2, \ldots ,\left| {\pi_{{{\mathbf{\mathcal{X}}},:}}^{*} } \right|} \right\}\) and \(\forall i,j \in \left\{ {1,2, \ldots ,\left| {\pi_{{{\mathbf{\mathcal{X}}},:}}^{*} } \right|} \right\}:\left( {i \ne j} \right) \to \left( {\sigma_{i} \ne \sigma_{j} } \right)\).

Finally, for a given target partition \(\pi_{{{\mathbf{\mathcal{X}}},:}}^{ + }\), F-measure of a resultant partition \({\pi }_{\mathcal{X},:}^{*}\) is acquired by

The mentioned measures vary in the range of \(\left[ {0,1} \right]\). For any of them, the greater values indicate better quality of resultant partition \(\pi_{{\mathbf{\mathcal{X}}}}^{*}\). ACC and FM measures are asymmetric, but ARI and NMI are symmetric measures.

5.4 Numerical empirical analysis

The analysis of parameter \(r\) in our method, i.e. MLCC, is presented in Fig. 8. Figure 8 depicts performance of MLCC for different values of parameter \(r\) in terms of NMI. The results indicate that the best result is acquired at \(r = 20\). Indeed, after \(r \ge 10\), the results are acceptable and consistent. Therefore, we set \(r = 15\) from now on.

MLCC algorithm is compared in Fig. 9 with the state-of-the-art baseline methods mentioned in Sect. 5.2 in terms of NMI on several of benchmark data sets. Table 1 has provided the results of Fig. 9 in summary where the triple w-t-l contains integer numbers w, t and l which, respectively, indicate the number of data sets which the proposed method is the superior method on them, the number of data sets that the proposed method is the loser on them and the number of data sets that the proposed method is neither the loser nor the winner on them. Paired t-test has been used to accomplish all the tests. Superiority of the proposed method to all state-of-the-art baseline methods could be concluded from Table 1.

Figures 10, 11 and 12 represent the comparison of the MLCC method with the state-of-the-art baseline methods on all of our data sets, respectively, in terms of adjacent rand index, accuracy and f-measure.

According to Figs. 10, 11 and 12, the following conclusions could be drawn. First, the MLCC algorithm outperforms the state-of-the-art baseline methods in most of the used benchmark data sets. Second, although the state-of-the-art baseline methods outperform the MLCC algorithm in some of the used benchmark data sets, it is always among the top three best methods. All of the results presented in Figs. 9, 10, 11 and 12 have been validated by Friedman ANOVA test. The Friedman ANOVA test shows that there is always significant difference. For further analysis, the detailed results of Figs. 10, 11, 12 and 13 are presented in Table 2. In Table 2, the results of different clustering ensembles in terms of our four metrics have been presented.

We can also prove that the MLCC method has the time complexity of \(O\left( {r \times \left| {{\varvec{\pi}}_{{{\mathcal{X}},:}} } \right|^{3} } \right)\) or \(O\left( {r\left| {{\varvec{\pi}}_{{{\mathcal{X}},:}} } \right|^{3} \left| {{\varvec{\pi}}_{{\mathcal{X}}} } \right|^{3} } \right)\) in its worst case.

5.5 Noise-resistance analysis

We have examined the noise effect by adding Gaussian noise with different energy levels and analyzing robustness of the MLCC, \(ECSEAC\) and \(CSEAC\) as three best methods achieved by Table 1. The \(i\) th cluster of the data set is assumed to have the covariance of \(\Sigma_{i}\), while \(\aleph_{i}\) is considered to be a temporary noise data which has the same size as the cluster of data set mentioned for a noise ratio of \(\varsigma\). Data samples of \(\aleph_{i}\) are with the covariance matrix of \(\varsigma \times \Sigma_{i}\). The data samples of that temporary noise data are here added to the \(i\) th cluster's data samples. For all clusters in data set, the data samples of that temporary noise data with the covariance matrix \(\varsigma \times \Sigma_{i}\) are added to the data samples of the \(i\) th cluster to obtain a new noisy data set with noise ratio \(\varsigma \times 100\)%. We can easily observe that there is no noise when \(\varsigma = 0\). Figure 13 includes the results of the Iris and Wine data sets where NMI value is shown on the vertical axis and amount of the noise ratio \(\varsigma\) is shown on the horizontal axis. Apparently, by adding noise level, the gap between MLCC and other methods will be larger. It means MLCC is more robust against noise.

5.6 News clustering application

A widely used labelled Persian text benchmark was introduced as Hamshahri data set in AleAhmad et al. (2009) which includes 9 classes and 36 subclasses.

We have sampled a subset of Hamshahri data set containing sport, economic, rural, adventure, and foreign classes each of which has 200 texts (1000 texts totally). Efficacy of the k-means clusterer algorithm after obtaining the data set like Shahriari et al. (2015) without using a thesaurus is 60.32% in terms of the f-measure, while efficacy of the MLCC, \(CSEAC\) and \(ECSEAC\) algorithms are, respectively, 64.11%, 62.13% and 62.97%. Since \(CSEAC\), \(ECSEAC\), and MLCC are the best methods according to Table 2, this experiment is carried out using these methods and the results have been summarized in Fig. 14.

6 Conclusions and future work

This work proposes a new consensus function in clustering ensemble named multi-level consensus clustering (MLCC). Since we use a multi-level clustering instead of direct object clustering, MLCC is a really efficient clusterer algorithm. An innovative similarity metric for measuring similarity between clusters is proposed. After obtaining an ensemble, a cluster–cluster similarity matrix using the mentioned metric is generated. The mentioned cluster–cluster similarity matrix is used to make an object–object or point–point similarity matrix. Then, we apply an average hierarchical clusterer algorithm on the point–point similarity matrix to make consensus partition. While MLCC is better than traditional clustering ensembles and simple version of clustering ensembles on traditional cluster–cluster similarity matrix, but its computational cost is not very bad. Accuracy and robustness of the proposed method are compared with the art clustering algorithms through the experimental tests. Also, time analysis is presented in the experimental results. Comparison of the proposed method with the state-of-the-art clustering ensemble methods in terms of 4 different metrics on numerous real-world and synthetic data sets reveals that the proposed method is the superior method which did not need relabeling mechanism.

References

Abbasi S, Nejatian S et al (2019) Clustering ensemble selection considering quality and diversity. Artif Intell Rev 52:1311–1340

Akbari E, Mohamed Dahlan H, Ibrahim R, Alizadeh H (2015) Hierarchical cluster ensemble selection. Eng Appl AI 39:146–156

AleAhmad A, Amiri H, Darrudi E, Rahgozar M, Oroumchian F (2009) Hamshahri: a standard Persian text collection. J Knowl-Based Syst 22(5):382–387

Alishvandi H, Gouraki GH, Parvin H (2016) An enhanced dynamic detection of possible invariants based on best permutation of test cases. Comput Syst Sci Eng 31(1):53–61

Alizadeh H, Minaei-Bidgoli B, Parvin H (2011a) A new criterion for clusters validation. In: Artificial intelligence applications and innovations (AIAI 2011), IFIP, Springer, Heidelberg, Part I, pp 240–246

Alizadeh H, Minaei-Bidgoli B, Parvin H, Moshki M (2011b) An asymmetric criterion for cluster validation, developing concepts in applied intelligence. Stud Comput Intell 363:1–14

Alizadeh H, Minaei-Bidgoli B, Parvin H (2013) Optimizing fuzzy cluster ensemble in string representation. Int J Pattern Recognit Artif Intell 27(2):1350005

Alizadeh H, Minaei-Bidgoli B, Parvin H (2014a) To improve the quality of cluster ensembles by selecting a subset of base clusters. J Exp Theor Artif Intell 26(1):127–150

Alizadeh H, Minaei-Bidgoli B, Parvin H (2014b) Cluster ensemble selection based on a new cluster stability measure. Intell Data Anal 18(3):389–408

Alizadeh H, Yousefnezhad M, Minaei-Bidgoli B (2015) Wisdom of crowds cluster ensemble. Intell Data Anal 19(3):485–503

Alqurashi T, Wang W (2014) Object-neighborhood clustering ensemble method. In Intelligent data engineering and automated learning (IDEAL), Springer, pp 142–149

Alqurashi T, Wang W (2015) A new consensus function based on dual-similarity measurements for clustering ensemble. In: International conference on data science and advanced analytics (DSAA), IEEE/ACM, pp 149–155

Ayad HG, Kamel MS (2008) Cumulative Voting Consensus Method for Partitions with a Variable Number of Clusters. IEEE Trans Pattern Anal Mach Intell 30(1):160–173

Bagherinia A, Minaei-Bidgoli B, Hossinzadeh M, Parvin H (2019) Elite fuzzy clustering ensemble based on clustering diversity and quality measures. Appl Intell 49(5):1724–1747

Bai L, Cheng X, Liang J, Guo Y (2017) Fast graph clustering with a new description model for community detection. Inf Sci 388–389:37–47

Breiman L (1996) Bagging predictors. Mach Learn 24:123–140

Dimitriadou E, Weingessel A, Hornik K (2002) A combination scheme for fuzzy clustering. Int J Pattern Recognit Artif Intell 16(07):901–912

Domeniconi C, Al-Razgan M (2009) Weighted cluster ensembles: methods and analysis. ACM Trans Knowl Disc Data (TKDD) 2(4):1–42

Dueck D (2009) Affinity propagation: clustering data by passing messages. Ph.D. dissertation, University of Toronto

Faceli K, Marcilio CP, Souto D (2006) Multi-objective clustering ensemble. In: Proceedings of the sixth international conference on hybrid intelligent systems

X. Z. Fern and C. E. Brodley, “Random projection for high dimensional data clustering: A cluster ensemble approach”, In: Proceedings of the 20th International Conference on Machine Learning, (2003), pp. 186–193.

Fern XZ, Brodley CE (2004) Solving cluster ensemble problems by bipartite graph partitioning. In: Proceedings of the 21st international conference on machine learning, ACM, p 36

Franek L, Jiang X (2014) Ensemble clustering by means of clustering embedding in vector spaces. Pattern Recogn 47(2):833–842

Fred A, Jain AK (2002) Data clustering using evidence accumulation. In: Intl. conf. on pattern recognition, ICPR02, Quebec City, pp 276–280

Fred A, Jain AK (2005) Combining multiple clustering’s using evidence accumulation. IEEE Trans Pattern Anal Mach Intell 27(6):835–850

Freund Y, Schapire RE (1995) A decision-theoretic generalization of on-line learning and an application to boosting. Comput Learn Theory 55:119–139

Friedman JH (2001) Greedy function approximation: a gradient boosting machine. Ann Stat 29(5):1189–1232

Ghaemi R, ben Sulaiman N, Ibrahim H, Mustapha N (2011) A review: accuracy optimization in clustering ensembles using genetic algorithms. Artif Intell Rev 35(4):287–318

Ghosh J, Acharya A (2011) Cluster ensembles. Data Min Knowl Disc 1(4):305–315

Gionis A, Mannila H, Tsaparas P (2007) Clustering aggregation. ACM Trans Knowl Discov Data 1(1):4

Hanczar B, Nadif M (2012) Ensemble methods for biclustering tasks. Pattern Recognit 45(11):3938–3949

Ho TK (1995) Random decision forests. In: Proceedings of 3rd international conference on document analysis and recognition, vol. 1, pp 278–282. https://doi.org/10.1109/ICDAR.1995.598994

Hong Y, Kwong S, Chang Y, Ren Q (2008) Unsupervised feature selection using clustering ensembles and population based incremental learning algorithm. Pattern Recogn 41(9):2742–2756

Hosseinpoor MJ, Parvin H, Nejatian S, Rezaie V (2019) Gene regulatory elements extraction in breast cancer by Hi-C data using a meta-heuristic method. Russ J Genet 55(9):1152–1164

Huang D, Lai JH, Wang CD (2015) Combining multiple clusterings via crowd agreement estimation and multi-granularity link analysis. Neurocomputing 170:240–250

Huang D, Lai J, Wang CD (2016) Ensemble clustering using factor graph. Pattern Recogn 50:131–142

Huang D, Lai J, Wang CD (2016b) Robust ensemble clustering using probability trajectories. The IEEE Trans Knowl Data Eng

Huang D, Wang CD, Lai JH (2017) Locally weighted ensemble clustering. IEEE Trans Cybern 99:1–14. https://doi.org/10.1109/TCYB.2017.2702343

N. Iam-On, T. Boongoen and S. M. Garrett, “Refining Pairwise Similarity Matrix for Cluster Ensemble Problem with Cluster Relations”, Discovery Science, (2008), 222–233.

Iam-On N, Boongoen T, Garrett S (2010) LCE: a link-based cluster ensemble method for improved gene expression data analysis. Bioinformatics 26(12):1513–1519

Iam-On N, Boongoen T, Garrett S, Price C (2011) A link based approach to the cluster ensemble problem. IEEE Trans Pattern Anal Mach Intell 33(12):2396–2409

Iam-On N, Boongeon T, Garrett S, Price C (2012) A link based cluster ensemble approach for categorical data clustering. IEEE Trans Knowl Data Eng 24(3):413–425

Jamalinia H, Khalouei S, Rezaie V, Nejatian S, Bagheri-Fard K, Parvin H (2018) Diverse classifier ensemble creation based on heuristic dataset modification. J Appl Stat 45(7):1209–1226

Jenghara MM, Ebrahimpour-Komleh H, Parvin H (2018a) Dynamic protein–protein interaction networks construction using firefly algorithm. Pattern Anal Appl 21(4):1067–1081

Jenghara MM, Ebrahimpour-Komleh H, Rezaie V, Nejatian S, Parvin H, Syed-Yusof SK (2018b) Imputing missing value through ensemble concept based on statistical measures. Knowl Inf Syst 56(1):123–139

Jiang Y, Chung FL, Wang S, Deng Z, Wang J, Qian P (2015) Collaborative fuzzy clustering from multiple weighted views. IEEE Trans Cybern 45(4):688–701

Mimaroglu S, Aksehirli E (2012) DICLENS: Divisive clustering ensemble with automatic cluster number. IEEE/ACM Trans Comput Biol Bioinf 9(2):408–420

Minaei-Bidgoli B, Topchy A, Punch WF (2004) Ensembles of partitions via data resampling. In: Intl. conf. on information technology, ITCC 04, Las Vegas, pp 188–192

Minaei-Bidgoli B, Parvin H, Alinejad-Rokny H, Alizadeh H, Punch WE (2014) Effects of resampling method and adaptation on clustering ensemble efficacy. Artif Intell Rev 41(1):27–48

Mirzaei A, Rahmati M (2010) A Novel hierarchical-clustering-combination scheme based on fuzzy-similarity relations. IEEE Trans Fuzzy Syst 18(1):27–39

Mojarad M, Parvin H, Nejatian S, Rezaie V (2019a) Consensus function based on clusters clustering and iterative fusion of base clusters. Int J Uncertain Fuzziness Knowl-Based Syst 27(1):97–120

Mojarad M, Nejatian S, Parvin H, Mohammadpoor M (2019b) A fuzzy clustering ensemble based on cluster clustering and iterative Fusion of base clusters. Appl Intell 49(7):2567–2581

Moradi M, Nejatian S, Parvin H, Rezaie V (2018) CMCABC: Clustering and memory-based chaotic artificial bee colony dynamic optimization algorithm. Int J Inf Technol Decis Mak 17(04):1007–1046

Naldi MC, De Carvalho ACM, Campello RJ (2013) Cluster ensemble selection based on relative validity indexes. Data Min Knowl Disc 27(2):259–289

Nazari A, Dehghan A, Nejatian S, Rezaie V, Parvin H (2019) A comprehensive study of clustering ensemble weighting based on cluster quality and diversity. Pattern Anal Appl 22(1):133–145

Nejatian S, Parvin H, Faraji E (2018) Using sub-sampling and ensemble clustering techniques to improve performance of imbalanced classification. Neurocomputing 276:55–66

Nejatian S, Rezaie V, Parvin H, Pirbonyeh M, Bagherifard K, Yusof SKS (2019) An innovative linear unsupervised space adjustment by keeping low-level spatial data structure. Knowl Inf Syst 59(2):437–464

Newman CBDJ, Hettich SS, Merz C (1998) UCI repository of machine learning databases. http://www.ics.uci.edu/˜mlearn/MLSummary.html.

Omidvar MN, Nejatian S, Parvin H, Rezaie V (2018) A new natural-inspired continuous optimization approach, Journal of Intelligent & Fuzzy Systems, 1–17,

Partabian J, Rafe V, Parvin H, Nejatian S (2020) An approach based on knowledge exploration for state space management in checking reachability of complex software systems. Soft Comput 24(10):7181–7196

H. Parvin, B. Minaei-Bidgoli, A clustering ensemble framework based on elite selection of weighted clusters, Advances in Data Analysis and Classification (2013) 1–28.

Parvin H, Minaei-Bidgoli B (2015) A clustering ensemble framework based on selection of fuzzy weighted clusters in a locally adaptive clustering algorithm. Pattern Anal Appl 18(1):87–112

Parvin H, Beigi A, Mozayani N (2012) A clustering ensemble learning method based on the ant colony clustering algorithm. Int J Appl Comput Math 11(2):286–302

Parvin H, Minaei-Bidgoli B, Alinejad-Rokny H, Punch WF (2013) Data weighing mechanisms for clustering ensembles. Comput Electr Eng 39(5):1433–1450

Parvin H, Nejatian S, Mohamadpour M (2018) Explicit memory based ABC with a clustering strategy for updating and retrieval of memory in dynamic environments. Appl Intell 48(11):4317–4337

Pirbonyeh A, Rezaie V, Parvin H, Nejatian S, Mehrabi M (2019) A linear unsupervised transfer learning by preservation of cluster-and-neighborhood data organization. Pattern Anal Appl 22(3):1149–1160

Rafiee G, Dlay SS, Woo WL (2013) Region-of-interest extraction in low depth of field images using ensemble clustering and difference of Gaussian approaches. Pattern Recognit 46(10):2685–2699

Rashidi F, Nejatian S, Parvin H, Rezaie V (2019) Diversity based cluster weighting in cluster ensemble: an information theory approach. Artif Intell Rev 52(2):1341–1368

Ren Y, Zhang G, Domeniconi C, Yu G (2013) Weighted object ensemble clustering. In Proceedings of the IEEE 13th international conference on data mining (ICDM), IEEE, pp 627–636

Roth V, Lange T, Braun M, Buhmann J (2002) A resampling approach to cluster validation. Intl. conf. on computational statistics, COMPSTAT

Shabaniyan T, Parsaei H, Aminsharifi A, Movahedi MM, Jahromi AT, Pouyesh S, Parvin H (2019) An artificial intelligence-based clinical decision support system for large kidney stone treatment. Australas Phys Eng Sci Med 42(3):771–779

Shahriari A, Parvin H, Monajati A (2015) Exploring weights of hierarchical and equivalency relationship in general Persian texts. EANN Workshops 7(1):7

Soto V, Garcia-Moratilla S, Martinez-Munoz G, Hernandez- Lobato D, Suarez A (2014) A double pruning scheme for boosting ensembles. IEEE Trans Cybern 44(12):2682–2695

Strehl A, Ghosh J (2000) Value-based customer grouping from large retail data sets. In AeroSense, International Society for Optics and Photonics, pp 33–42

Strehl A, Ghosh J (2003) Cluster ensembles—a knowledge reuse framework for multiple partitions. J Mach Learn Res 3:583–617

Szetoa PM, Parvin H, Mahmoudi MR, Tuan BA, Pho KH (2020) Deep neural network as deep feature learner. J Intell Fuzzy Syst. https://doi.org/10.3233/JIFS-191292

Topchy AP, Jain AK, Punch WF (2003) Combining multiple weak clusterings. In: IEEE international conference on data mining, pp 331–338

Topchy A, Jain AK, Punch W (2005) A mixture model of clustering ensembles. Proc SIAM Int Conf Data Min, Citeseer 27(12):1866–1881

N. X. Vinh and M. E. Houle, “A set correlation model for partitional clustering”, In: Advances in Knowledge Discovery and Data Mining, Springer, (2010) pp. 4–15.

Yang Y, Jiang J (2016) Hybrid sampling-based clustering ensemble with global and local constitutions. IEEE Trans Neural Netw Learn Syst 27(5):952–965

Yasrebi M, Eskandar-Baghban A, Parvin H, Mohammadpour M (2018) Optimisation inspiring from behaviour of raining in nature: droplet optimisation algorithm. Int J Bio-Inspired Comput 12(3):152–163

Yi J, Yang T, Jin R, Jain AK, Mahdavi M (2012) Robust ensemble clustering by matrix completion. In: Proceedings of the IEEE 12th international conference on data mining (ICDM), IEEE, pp 1176–1181

Yousefnezhad M, Huang SJ, Zhang D (2018) WoCE: a framework for clustering ensemble by exploiting the wisdom of crowds theory. IEEE Trans Cybernetics 48(2):486–499

Yu Z, Wong HS, You J, Yang Q, Liao H (2011) Knowledge based cluster ensemble for cancer discovery from biomolecular data. IEEE Trans Nanobiosci 10(2):76–85

Yu Z, You J, Wong HS, Han G (2012) From cluster ensemble to structure ensemble. Inf Sci 198:81–99

Yu Z, Chen H, You J, Han G, Li L (2013) Hybrid fuzzy cluster ensemble framework for tumor clustering from biomolecular data. IEEE/ACM Trans Comput Biol Bioinf 10(3):657–670

Yu Z, Li L, Liu J, Han G (2015) Hybrid Adaptive Classifier Ensemble. IEEE Transactions on Cybernetics 45(2):177–190

Yu Z, Zhu X, Wong HS, You J, Zhang J, Han G (2016a) Distribution-based cluster structure selection. IEEE Trans Cybern 99:1–14. https://doi.org/10.1109/TCYB.2016.2569529

Yu Z, Chen H, Liu J, You J, Leung H, Han G (2016b) Hybrid k-nearest neighbor classifier. IEEE Trans Cybern 46(6):1263–1275

Yu Z, Lu Y, Zhang J, You J, Wong HS, Wang Y, Han G (2017) Progressive semisupervised learning of multiple classifiers. IEEE Trans Cybern 99:1–14

Zhang S, Wong HS, Shen Y (2012) Generalized adjusted rand indices for cluster ensembles. Pattern Recognit 45(6):2214–2226

Zhao X, Liang J, Dang C (2017) Clustering ensemble selection for categorical data based on internal validity indices. Pattern Recognit 69:150–168

Zhong C, Yue X, Zhang Z, Lei J (2015) A clustering ensemble: two-level-refined co-association matrix with path-based transformation. Pattern Recogn 48(8):2699–2709

Author information

Authors and Affiliations

Corresponding authors

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Pho, KH., Akbarzadeh, H., Parvin, H. et al. A multi-level consensus function clustering ensemble. Soft Comput 25, 13147–13165 (2021). https://doi.org/10.1007/s00500-021-06092-7

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00500-021-06092-7