Abstract

Metaheuristics have proven their efficiency in treating complex optimization problems. Generally, they produce good results quite close to optimal despite some weaknesses such as premature convergence and stagnation in the local optima. However, some techniques are used to improve the obtained results, one of them is the adoption of chaos theory. Including chaotic sequences in metaheuristics has proven its efficiency in previous studies by improving the performance and quality of the results obtained. In this study, we propose an improvement of the metaheuristic lightning search algorithm (LSA) by using chaos theory. In fact, the idea is to replace the values of random variables with a chaotic sequences generator. To prove the success of the metaheuristic—chaos theory association, we tested five chaotic version of lightning search algorithm on a benchmark of seven functions. Experimental results show that sine or singer map are the best choices to improve the efficiency of LSA, in particular with the lead projectile update.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Recent studies in the field of optimization have shown a growing interest in problem-solving methods using metaheuristics. In fact, metaheuristics have advantages over other deterministic resolution approaches. Among its advantages are: simplicity, the possibility to solve large scale and non-linear problems and their flexibility. Metaheuristics deal with complex optimization problems such as manufacturing systems design (Gen and Cheng 1996), mechanical engineering (He et al. 2004), flowshop scheduling (Murata and Ishibuchi 1994), image enhancement and segmentation (Paulinas and Ušinskas 2007), transport problem solving (Vignaux and Michalewicz 1991), calibration of fractional fuzzy controllers (Zhou et al. 2019a) and data clustering (Pacheco et al. 2018; Zhou et al. 2019b, 2017).

In general, metaheuristics try to imitate the behaviour of living beings or reproduce biological and physical phenomena. They can be classified into categories, population-based [Particle swarm optimization (PSO) (Eberhart and Kennedy 1995), Genetic algorithm (GA) (Holland 1992)] and single-point search [Itarated local search (ILS) (Lourenço et al. 2003), Simulated annealing (SA) (Van Laarhoven and Aarts 1987)], memoryless (SA) and memory usage methods [Ant colony optimization (ACO) (Dorigo and Di Caro 1999)], nature-inspired [GA, PSO, SA, ACO, Harris hawks optimizer (HHO) (Heidari et al. 2019)] and non-nature inspiration metaheuristics [Tabu search (TS) (Glover and Laguna 1998), Harmony search (HS) (Yang 2009b)]. In addition, metheuristics propose practically a similar iterative operating scheme which consists of four steps: The first step consists in initializing randomly the start population, the second aims to search better solutions by using generation rules, third, those potential solutions are updated, and finally, if the stopping conditions are satisfied, we provide the best found solution, otherwise we return to the second step. Independently of previous steps, metaheuristics are based on randomness, which directly affects performance and efficiency, mainly in the exploration and exploitation that form the basis for the problem solving. Exploitation is the search phase of new areas in the search space while exploitation is the stage in which search is carried out to the promising areas. However, even if the operating mode of different metaheuristics is very similar, performance and efficiency are not identical. This is confirmed by the No Free Lunch Theorem (NFLT) (Ho and Pepyne 2002) which proves that there is no methaheuristic capable of surpassing others in all optimization problems.

However, despite the advantages presented above, some metaheuristics suffer from premature convergence problems. Indeed, random values is widely used in metaheuristics. These values can provide good results for some problems and that is not the case for others. To tackle this problem, which affects the exploration and exploitation phases, recent researches have adopted new techniques such as hybridization (Blum et al. 2011), local search (Lim et al. 2004) and random walk (Yang et al. 2013).

A promising approach that joins the list is the use of chaos theory (Thietart and Forgues 1995). Chaotic system is a non-linear dynamic system, characterized by its unpredictability, non-periodic, spread-spectrum character, ergodic properties, and highly sensitive to the initial conditions. The latest works integrating chaotic sequences instead of random values produced by uniform or gaussian distribution for example, indicates an improvement in the performance of modified algorithms (Alatas 2010a; Arora and Singh 2017; Chen et al. 2020; Mitić et al. 2015; Zhang and Feng 2018). In fact, compared to stochastic searching, the use of chaotic sequences improves the performance of the targeted optimization algorithms because it avoids local optima, improves the searching capability and accelerates the convergence of metaheuristics. Moreover, the integration and implementation of chaotic sequences is easy to perform. However, the efficiency of the algorithms, for large scale optimization problems, decreases due to the high number of iterations needed to reach the global optimum.

In this study we are interested in the metaheuristic lightning search algorithm (LSA) (Shareef et al. 2015). Indeed, LSA is a recent algorithm that proved its efficiency to solve optimization problems and produced better results compared to other methods. Howerver, a recent work has proposed an extended version of LSA named Binary lightning search algorithm (Islam et al. 2017). Furthermore, another extension has been proposed: the use of the Quantum theory. This variant is applied in many works on several problems such as charging stations placement for electric vehicles (Aljanad et al. 2018) and the control of induction motors (IM) (Hannan et al. 2017, 2018). Nevertheless, LSA suffers to obtain correct results for some tests as for the resolution of multimodal and non-separable functions. In this paper, we propose an improvement of LSA through the use of chaos theory.

The rest of the paper is organized as follows: a review of chaotic metaheuristics is presented in the Sect. 2. Section 3 offers a brief introduction to the LSA algorithm. Section 4 gives a brief idea about chaotic maps. Section 5 presents the benchmark used for the comparison of the proposed method. Section 6 describes the proposed method chaotic LSA, and Sect. 7 is dedicated to the interpretation of the results obtained.

2 Highlights of recent chaotic metaheuristics

In this sections, we present the recent methods that use chaotic sequences to improve their performance compared to the original versions, these methods are for the most part nature-based algorithms.

2.1 Chaotic Bat Algorithm (CBA)

Bat algorithm (BA) (Yang 2010) is based on the echolocation of microbats. Micobats generally tune frequency and increase the pulse rate emission whenever a potential prey is close. An improvement of this method is proposed. In fact, four versions integrating chaotic generators are presented (Gandomi and Yang 2014):

-

CBA-I: replace the parameter \(\beta \) in the equation

$$\begin{aligned} f = f_\mathrm{min}+(f_\mathrm{max}-f_\mathrm{min})\beta \end{aligned}$$(1)by chaotic map in the equation with f is the frequency and \(\beta \) is a random number between 0 and 1.

-

CBA-II: replace the parameter \(\lambda \) by a chaotic map in the equation

$$\begin{aligned} v^t= v^{t-1}+(x^t-x^*)\lambda \end{aligned}$$(2)where \(v^t\) designates the speed at time step t, \(x^t\) the position at time step t, and \(\lambda \) is a random number between 0 and 1.

-

CBA-III: replace loudness in BA with a chaotic map.

-

CBA-IV: replace the pulse emission rate by a chaotic map.

The results show that CBA-IV is the most effective between proposed versions.

2.2 Chaotic Cuckoo Search (CCS)

Cuckoo search (CS) (Yang and Deb 2009) is inspired by the incubation behaviour of cuckoos birds. Each cuckoo egg in the nest is considered as a solution. To generate a new cuckoo \(x^{t+1}\) we use the following formula:

with \(\alpha \) is the step size. CCS (Wang et al. 2016) improves performance by modifying the alpha parameter with a series of chaotic values normalized between 0 and 2.

2.3 Firefly Algorithm with Chaos (FAC)

Firefly algorithm (FA) (Yang 2009a) imitates the behaviour of fireflies during summer nights. The authors develop a metaheuristic by taking in consideration the ability of firefly to emit light, Following rules are respected:

-

All fireflies are unisex.

-

The attractiveness is proportional to the brightness.

-

Brightness is proportional to the landscape of cost function.

For that, light intensity is expressed by the following equation:

where \(\gamma \) is the coefficient light absorption and r is the distance between two firefly.

Attractiveness is defined by:

\(\beta _0\) represents attractiveness at \(r= 0\).

The movement of the firefly i to another more attractive j is calculated with :

FAC (Gandomi et al. 2013) offers two improvements, the first one is tuning light absorption coefficient \(\gamma \) with chaotic maps and the second is based on the tuning of the attractiveness coefficient \(\beta \) with chaotic maps. Simulations results show a considerable improvement in performance by using the second chaotic version of FA.

2.4 Chaotic Grey Wolf Optimization (CGWO)

Grey wolf optimization (GWO) (Mirjalili et al. 2014) is inspired by hunting behaviour and the social hierarchy that organizes troop life among grey wolves. There are 4 groups of wolves, \(\alpha \), \(\beta \), \(\delta \) who command wolves \(\omega \), and who during the hunting operation move to the promising areas. CGWO (Yu et al. 2016) is based on the chaotic local search technique which is applied to the position of the current best wolf \(X_{\alpha }\) using the following formula:

If \(X_{n}<X_{\alpha }\) then update position \(X_{\alpha }\). Search is done in the neighbourhood of \(X_{n}\), \(X_{\alpha }\) is the center of a sphere of radius r, U and L denotes the upper and lower boundary of the search area and finally z is a chaotic variable.

2.5 Chaotic Krill Herd Optimization Algorithm (CKHO)

Krill herd optimization algorithm (KHO) (Gandomi and Alavi 2012) mimic the behaviour of individual krill in krill herd. The algorithm reproduces the three main activities of krill which are:

-

inducted motion : which refers to the density maintenance of the herd, it is defined as follows:

$$\begin{aligned} N_i(t+1)&= N_\mathrm{max}\alpha _i+\omega _nN_i(t) \end{aligned}$$(9)$$\begin{aligned} alpha_i&=alpha_i^\mathrm{local}+alpha_i^\mathrm{target} \end{aligned}$$(10)where \(N_\mathrm{max}\) is the maximum induced speed, \(\omega _n\) is the inertia weight, \(\alpha _i^\mathrm{local}\) and \(alpha_i^\mathrm{target}\) are local and target effect and \(alpha_i^\mathrm{target}\) is defined by:

$$\begin{aligned} alpha_i^\mathrm{target} = C^\mathrm{best}K_\mathrm{i, best}X_\mathrm{i, best}X_\mathrm{i, best} \end{aligned}$$(11)with \(C^\mathrm{best}\) is a coefficient and determined by:

$$\begin{aligned} C^\mathrm{best} = 2 \left( \frac{r+1}{Imax}\right) \end{aligned}$$(12)where r is a random value between 0 and 1.

-

Foraging

-

Random diffusion

The authors consider that the most important value to be tuned is r, and replaces it with a chaotic value (Saremi et al. 2014). The results prove the superiority of CKHO compared to KHO. In fact the modifications allows KHO to avoid the local optima and to have faster convergence.

2.6 Chaotic Harmony Search Algorithm (CHSA)

Harmony search algorithm (Yang 2009b) based on the search for the best state of harmony in the process of composing a melody. Finding the best harmony means finding the best solution determined by an objective function. To improve the global convergence and avoid stagnating in local optima, authors propose seven CHSA algorithms (Alatas 2010b), the first one initializes the harmony memory solution using chaotic maps using the following formula:

where \(x_j^\mathrm{max}\) and \(x_j^\mathrm{min}\) are the upper and the lower bound of the jth decision parameter respectively, and \(CM_{i,j}\) is a chaotic map which replace a random value generated from a uniform distribution. others tuning the Pitch Adjusting Rate (PAR) and bandwidth (bw) parameters by creating six other combinations of CHS. Tests reveal that three CHS algorithms produce better results than the original version of HS.

2.7 Chaos Embadded Particle Swarm Optimization Algorithms (CEPSOA)

Particle Swarm Optimisation (PSO) (Eberhart and Kennedy 1995) simulate the social behaviour of bird flocking and fish schooling through a model. PSO is a simple and effective metaheuristic, but it suffers from the problem of premature convergence. In order to find the best solution, each particle use the following formulas for the next iteration:

where \(x_i(t+1)\) is the position of the ith particle at the tth iteration, pbest is the best position encountered by the particle, gbest is the best position encountered by the whole swarm, v(t) is the velocity at tth iteration, \(w \in [0.8, 1.2]\) is the inetia weight, \(c_1\) and \(c_2 \in [0,2]\) are cognitive and social parameter respectively, \(r_1\) and \(r_2\) are a random values generated by a uniform distribution. To improve the performance of PSO, the authors propose twelve chaotic variants of PSO (CEPSOA) (Alatas et al. 2009) by replacing, in the form of combination, the parameters w, \(c_1\), \(c_2\), \(r_1\) and \(r_2\) by chaotic values.

3 Lightning Search Algorithm (LSA)

This algorithm is based on the step leader propagation phenomenon. During physical reactions inside the thundercloud, projectiles are ejected into space, and can create a step leader. These projectiles are considered as initial population size, and the solution is the tip of the current step leader energy Ec. The projectiles move with a velocity:

where \(v_p\) is the current velocity and \(v_0\) are the initial velocity of the projectile, c is the speed of light, \(F_i\) is the constant ionization rate, m is the mass of the projectile, and s is the length of the travelled path.

Another major property described in LSA is forking. It is realized because of nuclei collision. It is done in two ways. The first is the appearance of two symmetrical channels, in which case the opposite projections are expressed as follows:

with a and b are the boundary limits. This technique can improve the proposed solutions by removing the channel with the lowest energy. In the second type of forking, the energy redistributed after several unsuccessful propagation of the leaders which is qualified as channel time.

Moreover, LSA defines three types of projectiles:

-

Transition projectile Which creates the first step leader, and which represents the initial population, they can be modelled using values generated by a uniform distribution as shown in the following equation:

$$\begin{aligned} p_\mathrm{i-new}^T= unifrand()*({\text {UB}}(d)-{\text {LB}}(d))+{\text {LB}}(d) \end{aligned}$$(18)where unifrand() is a number generated by uniform distribution, UB(d) and LB(d) are the the upper and lower dth decision parameter respectively.

-

Space projectile The positions of the projectiles at step \(t+1\) is modelled using the values generated by an exponential distribution with shaping parameter \(\mu \) which controls the direction and position of the projectiles. The position of the projectile \(p_i^S\) at \( t+1\) is described as follows:

$$\begin{aligned} p_\mathrm{i-new}^S= p_i^S\pm exprand(\mu _i) \end{aligned}$$(19)where \(exprand(\mu _i)\) is an exponential random value. The stepped leader \(sl_i\) is extended to a new position \(sl_\mathrm{i-new}\) if \(p_\mathrm{i-new}^S\) provides good solution at \(t+1\).

-

Lead projectile The projectile associated with the step leader moving near the ground and modelled using a random value belonging to the normal distribution, the lead projectile position \(p^L\) at \(t+1\) is:

$$\begin{aligned} p_\mathrm{new}^L=p^L+normrand(\mu _L, \sigma _L) \end{aligned}$$(20)where normrand is a value belonging to the normal distribution having parameters \(\mu _\mathrm{L} \) which takes the value of \(p^L\), and \(\sigma _\mathrm{L}\) which exponentially decreases as it progresses toward the Earth or as it finds the best solution. As for space projectiles, \(p^L\) takes the value \(p_\mathrm{new}^L\) if the latter provides a better solution and the step leader \(sl_i\) is extended to a new position \(sl_\mathrm{i-new}\).

4 Chaotic maps

All metaheuristics include random elements, the main property for these methods is stochasticity carried out using values belonging to statistical distributions. The idea in this study is to substitute this values with other values provided by chaotic maps, which by their ergodicity, solve the problems of some metaheuristics such as fast convergence and stagnation in local optima. Indeed, the use of chaotic maps improves search speed, which is not insignificant when dealing with large scale problems. In this section, we present chaotic maps to be tested in the different LSA variants. For the realization of our simulations, we propose to test 11 chaotic maps:

-

1.

Chebyshev map It is represented by the following equation:

$$\begin{aligned} x_{k+1}=\cos (k\cos ^{-1}(x_{k})) \end{aligned}$$(21)it generates chaotic values between − 1 and 1.

-

2.

Circle map It is formulated as:

$$\begin{aligned} x_{k+1}=x_{k}+b-\frac{a}{2\pi }\sin (2\pi x_{k}) mod(1) \end{aligned}$$(22)for a = 0.5 and b = 0.2, it generates chaotic values between 0 and 1.

-

3.

Gauss/mouse map Can be defined as follows:

$$\begin{aligned} x_{k+1}=\left\{ \begin{matrix} 0 &{}\quad x_{k}=0 \\ \frac{1}{x_{k}\mod (1)} &{} \end{matrix}\right. \end{aligned}$$(23)it generates chaotic values between 0 and 1.

-

4.

Iterative map With infinite collapses represented by the following equation :

$$\begin{aligned} x_{k+1}= \sin \left( \frac{a\pi }{x_k}\right) \end{aligned}$$(24)it generates chaotic values between − 1 and 1

-

5.

Liebovitch map Defined as follow:

$$\begin{aligned} x_{k+1}=\left\{ \begin{matrix} \alpha x_k &{}\quad 0<x_k \le P_1 \\ \frac{P-x_k}{P_2-P_1}&{}\quad P_1<x_k \le P_2\\ 1-\beta (1-x_k)) &{}\quad P_2<x_k\le 1 \end{matrix}\right. \end{aligned}$$(25)where \(\alpha < \beta \) and

$$\begin{aligned} \alpha&= \frac{P_2}{P_2}(1-(p_2-P_1)) \end{aligned}$$(26)$$\begin{aligned} \beta&= \frac{1}{P_2-1}((P_2-1)-P_1(P_2-P_1)) \end{aligned}$$(27) -

6.

Logistic map It is described as follow:

$$\begin{aligned} x_{k+1}=ax_{k}(1-x_{k}) \end{aligned}$$(28)If \(a=4\), Logistic map generates chaotic values between 0 and 1.

-

7.

Piecewise map can be written as:

$$\begin{aligned} x_{k+1}=\left\{ \begin{array}{ll} \frac{x_{k}}{P}&{}\quad 0\le x_{k}< P \\ \frac{x_{k}-P}{0.5-P} &{}\quad P\le x_{k}< \frac{1}{2} \\ \frac{1-P-x_{k}}{0.5-P}&{}\quad \frac{1}{2} \le x_{k}<1-P \\ \frac{1-x_{k}}{P} &{}\quad 1-P\le x_{k}<1 \end{array}\right. \end{aligned}$$(29)where \(P=0.4\), Piecewise map generates chaotic values between 0 and 1.

-

8.

Sine map can be defined as :

$$\begin{aligned} x_{k+1}=\frac{a}{4}\sin (\pi x_{k}) \end{aligned}$$(30)where \(a=4\), Sine map generates chaotic values between 0 and 1.

-

9.

Singer map can be written as :

$$\begin{aligned} x_{k+1}=\mu (7.86x_k-23.31x_k^2+28.75x_k^3-13.3x_k^4) \end{aligned}$$(31)where \(\mu = 1.07\), Singer map generates chaotic values between 0 and 1.

-

10.

Sinusoidal map defined as :

$$\begin{aligned} x_{k+1}=ax_{k}^{2}\sin (\pi x_{k}) \end{aligned}$$(32)where a=2.3, Sinusoidal map generates chaotic values between 0 and 1.

-

11.

Tent map is very similar to Logistic map, it is given by:

$$\begin{aligned} x_{k+1}=\left\{ \begin{array}{ll} \frac{x_{k}}{0.7} &{}\quad x_{k}<0.7\\ \frac{10}{3}(1-x_{k}) &{}\quad \mathrm{Otherwise} \end{array}\right. \end{aligned}$$(33)

It is necessary to precise that the chaotic maps that do not produce values belonging to [0,1] are normalized to have the same scale.

5 Numerical simulations

Different chaotic LSAs are tested using a benchmark of seven functions. Table 1 contains the parameters used for different LSA variants. The values of these parameters are set according to the reference paper of LSA (Shareef et al. 2015).

5.1 Benchmark functions

Table 2 summarizes the properties of the functions used for the tests.

5.2 Performance measures

To evaluate the performance of the different variants of the proposed algorithm we use classical statistical indicator such as the mean and standard deviation, as well as the success rate which is defined and used by many related works (Gandomi and Yang 2014; Gandomi et al. 2013; Mitić et al. 2015):

where \(Nb^\mathrm{test}\) is the number of run, \(Nb^\mathrm{sucess}\) is the number of times the tests are successful. In this study, we set variable the \(Nb^\mathrm{test}\) to 100 and we consider a successful test only if the result obtained is near to the optimal solution. Taking into account the search space defined for each function, a test can be successfully defined as follows:

where \(X^\mathrm{gb}\) is the obtained global best, \(X^*\) is the global optima, UB and LB are the upper and lower bounds respectively.

6 Chaotic lightning search algorithm

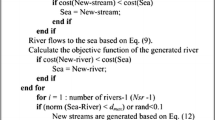

In this section, we use chaotic maps to test different variants of the proposed CLSA algorithms. The flowchart of a schematic chaotic LSA is presented in Fig. 1. In the following section we present the modifications made to the parameters.

First of all, we present the results obtained by the LSA algorithm after 100 runs. The success rate of the different functions is given in the Table 3.

6.1 CLSA-I

The value generated by the exponential distribution in Equation (19) is modified by a chaotic map (\(CM_i\)) for each iteration i, so the equation of the new position of the space projectile can be written as :

Random value in the original equation is a number between 0 and 1, it is substituted by a chaotic value in the same interval for 11 different chaotic maps. The success rate after 100 runs with different chaotic maps are shown in the Table 4. This variant produces acceptable results only for the Shekel 7 function with four chaotic maps.

6.2 CLSA-II

The value generated by the standard normal distribution in Eq. (20) is modified by a chaotic map, so the equation of the new position of the lead projectile can be written as:

Random value in the original equation is a number between 0 and 1, it is substituted by a chaotic value in the same interval. The success rate after 100 runs with different chaotic maps are shown in the Table 5.

6.3 CLSA-III

CLSA-I and CLSA-II are combined, the random values in the Eqs. (19) and (20) are replaced by a chaotic map. The success rate after 100 runs with different chaotic maps are shown in the Table 6.

6.4 CLSA-IV

The value generated by the uniform distribution for the forking mechanism is modified by a chaotic map, so, forking happens if

Random value in the original inequality is a number between 0 and 1, it is substituted by a chaotic value in the same interval. The success rate after 100 runs with different chaotic maps are shown in the Table 7.

6.5 CLSA-V

During the simulations it appeared that CLSA-II provides the best results, so we decided to combine it with CLSA-IV. The success rate after 100 runs with different chaotic maps are shown in the Table 8.

7 Discussions

A first remark to note is that algorithms LSA, CLSA-II, CLSA-IV, and CLSA-V display a correct performance regarding the Sphere function (f1). Concerning Schwefel 2.22 function f2, the best results are obtained by CLSA-II which are significantly better than the others by using Gauss/mouse map and Singer map. However, all algorithms have failed to reach a tolerance threshold for the search for the optimum for the Rosenbrock function (f3). Then, according to the results, we can observe that the CLSA-II algorithm is a little more efficient in terms of success rate compared to the other methods, and this by using the Logistic map. For the Griewank function (f5), the CLSA-II, CLSA-IV and CLSA-V show performances that exceed the standard LSA version, especially when adopting Singer map in CLSA-II. For the Kowalik function, the results displayed by the CLSA-II, CLSA-IV and CLSA-V variants seem to be the best, especially when adopting Sine map for CLSA-II. However, it is noted that only CLSA-I, CLSA-III succeed to reach the tolerance threshold for the Shekel 7 function (f7).

In general, it can be concluded that the CLSA-II variant provides the best results by using Sine and Singer map, followed by the CLSA-V and CLSA-IV variants which produce results higher than LSA. Finally, we can observe that chaotic variants of LSA outperform the standard version in terms of quality of results for multimodal and non-separable functions, such as CLSA-II for f5 and f6, and CLSA-I for f7.

Tables 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19 and 20 provide a statistical description of the simulations by comparing the results obtained by CLSA-II with LSA. The results displayed show the average, the median, the best results, worst results, and the standard deviation of the best fitness costs for 100 runs. These results show that the use of chaotic series for LSA significantly improves performance.

After an evaluation of the different values presented in the previous tables we can see that except for Gauss mouse map and tent map, the other maps in CLSA-II produce averages higher than LSA for f1. For f2 all chaotic maps show better results than LSA for the average and the best obtained. For f3, the performance is comparable, the best average is obtained by iterative map and the best is provided by logistic map. For f4, both Singer map and sinusoidal map perform better than LSA in terms of average, the results however are similar. For f5, all maps except Liebovitch map offer better results than LSA for the average of the best runs. For f6, Sinusoidal map outperforms LSA in terms of average while Gauss mouse map offers the best performance for f7.

The results presented in the previous tables are confirmed by Figs. 7, 8, 2, 3, 4, 5 and 6. Hence, we can see that there is always a chaotic map that allows to have the best average and the best result for each function compared to the standard version of LSA. Moreover, we can notice that for certain functions like f1, f2, f3, and f5 the median obtained by some chaotic maps are much superior to the median obtained by LSA, and the interquartile range is smaller for these maps.

8 Conclusion

The use of chaos theory is one of the techniques that improve the performance of metaheuristics. In this study, chaotic variants of Lightning Search Algorithm were proposed. Three of the five variants proposed, for instance CLSA-II, CLSA-IV, and CLSA-V, significantly improves the results obtained, while other variants are able to produce good results for particular functions such as the CLSA-I algorithm for Shekel 7 function. We have chosen to adopt a variant that seems to provide the best results, e.g CLSA-II, which aims to tune lead projectile. According to the results presented based on two metrics which are success rate and statistical indicators (average, standard deviation ...), introducing a chaotic value generator improves the success rate, optimality and quality of the results, especially for multimodal and non-separable functions, by escaping the local optima. The advantages offered using chaos theory and the promising results encourage us to adopt this technique with other metaheuristics and test through experiments the impact of the modified parameters in a distributed or parallel way as well. This is our short-term objective. Furthermore, we aim to investigate the efficiency of combining metaheuristics with chaos theory to solve real world engineering problems. Finally, in this study, we adopted the general version of LSA. Therefore, we will explore in future work, the impact of using other variants of LSA with the chaos theory.

References

Alatas B (2010a) Chaotic bee colony algorithms for global numerical optimization. Expert Syst Appl 37(8):5682–5687

Alatas B (2010b) Chaotic harmony search algorithms. Appl Math Comput 216(9):2687–2699

Alatas B, Akin E, Ozer AB (2009) Chaos embedded particle swarm optimization algorithms. Chaos Solitons Fractals 40(4):1715–1734

Aljanad A, Mohamed A, Shareef H, Khatib T (2018) A novel method for optimal placement of vehicle-to-grid charging stations in distribution power system using a quantum binary lightning search algorithm. Sustain Cities Soc 38:174–183

Arora S, Singh S (2017) An improved butterfly optimization algorithm with chaos. J Intell Fuzzy Syst 32(1):1079–1088

Blum C, Puchinger J, Raidl GR, Roli A (2011) Hybrid metaheuristics in combinatorial optimization: a survey. Appl Soft Comput 11(6):4135–4151

Chen H, Zhang Q, Luo J, Xu Y, Zhang X (2020) An enhanced bacterial foraging optimization and its application for training kernel extreme learning machine. Appl Soft Comput 86:105884

Dorigo M, Di Caro G (1999) Ant colony optimization: a new meta-heuristic. In: Proceedings of the 1999 congress on evolutionary computation-CEC99 (Cat. No. 99TH8406), IEEE, vol 2, pp 1470–1477

Eberhart R, Kennedy J (1995) Particle swarm optimization. In: Proceedings of the IEEE international conference on neural networks, Citeseer, vol 4, pp 1942–1948

Gandomi AH, Alavi AH (2012) Krill herd: a new bio-inspired optimization algorithm. Commun Nonlinear Sci Numer Simul 17(12):4831–4845

Gandomi AH, Yang XS (2014) Chaotic bat algorithm. J Comput Sci 5(2):224–232

Gandomi AH, Yang XS, Talatahari S, Alavi AH (2013) Firefly algorithm with chaos. Commun Nonlinear Sci Numer Simul 18(1):89–98

Gen M, Cheng R (1996) Genetic algorithms and manufacturing systems design. Wiley, New York

Glover F, Laguna M (1998) Tabu search. Handbook of combinatorial optimization. Springer, New York, pp 2093–2229

Hannan MA, Abd Ali J, Hussain A, Hasim FH, Amirulddin UAU, Uddin MN, Blaabjerg F (2017) A quantum lightning search algorithm-based fuzzy speed controller for induction motor drive. IEEE Access 6:1214–1223

Hannan MA, Ali JA, Mohamed A, Amirulddin UAU, Tan NML, Uddin MN (2018) Quantum-behaved lightning search algorithm to improve indirect field-oriented fuzzy-PI control for IM drive. IEEE Trans Ind Appl 54(4):3793–3805

He S, Prempain E, Wu Q (2004) An improved particle swarm optimizer for mechanical design optimization problems. Eng Optim 36(5):585–605

Heidari AA, Mirjalili S, Faris H, Aljarah I, Mafarja M, Chen H (2019) Harris Hawks optimization: algorithm and applications. Fut Generat Comput Syst 97:849–872

Ho YC, Pepyne DL (2002) Simple explanation of the no-free-lunch theorem and its implications. J Optim Theory Appl 115(3):549–570

Holland JH (1992) Genetic algorithms. Sci Am 267(1):66–73

Islam MM, Shareef H, Mohamed A, Wahyudie A (2017) A binary variant of lightning search algorithm: BLSA. Soft Comput 21(11):2971–2990

Lim A, Rodrigues B, Zhang X (2004) Metaheuristics with local search techniques for retail shelf-space optimization. Manag Sci 50(1):117–131

Lourenço HR, Martin OC, Stützle T (2003) Iterated local search. Handbook of metaheuristics. Springer, New York, pp 320–353

Mirjalili S, Mirjalili SM, Lewis A (2014) Grey wolf optimizer. Adv Eng Softw 69:46–61

Mitić M, Vuković N, Petrović M, Miljković Z (2015) Chaotic fruit fly optimization algorithm. Knowl Based Syst 89:446–458

Murata T, Ishibuchi H (1994) Performance evaluation of genetic algorithms for flowshop scheduling problems. In: Proceedings of the first IEEE conference on evolutionary computation, IEEE World Congress on Computational Intelligence, IEEE, pp 812–817

Pacheco TM, Gonçalves LB, Ströele V, Soares SSR (2018) An ant colony optimization for automatic data clustering problem. In: 2018 IEEE congress on evolutionary computation (CEC), IEEE, pp 1–8

Paulinas M, Ušinskas A (2007) A survey of genetic algorithms applications for image enhancement and segmentation. Inf Technol control 36(3):278–284

Saremi S, Mirjalili SM, Mirjalili S (2014) Chaotic krill herd optimization algorithm. Proc Technol 12:180–185

Shareef H, Ibrahim AA, Mutlag AH (2015) Lightning search algorithm. Appl Soft Comput 36:315–333

Thietart RA, Forgues B (1995) Chaos theory and organization. Organ Sci 6(1):19–31

Van Laarhoven PJ, Aarts EH (1987) Simulated annealing. In: Simulated annealing: theory and applications, Springer, New York, pp 7–15

Vignaux GA, Michalewicz Z (1991) A genetic algorithm for the linear transportation problem. IEEE Trans Syst Man Cybernet 21(2):445–452

Wang GG, Deb S, Gandomi AH, Zhang Z, Alavi AH (2016) Chaotic cuckoo search. Soft Comput 20(9):3349–3362

Yang XS (2009a) Firefly algorithms for multimodal optimization. In: International symposium on stochastic algorithms, Springer, New York, pp 169–178

Yang XS (2009b) Harmony search as a metaheuristic algorithm. In: Music-inspired harmony search algorithm, Springer, New York, pp 1–14

Yang XS (2010) A new metaheuristic bat-inspired algorithm. In: Nature inspired cooperative strategies for optimization (NICSO 2010), Springer, New York, pp 65–74

Yang XS, Deb S (2009) Cuckoo search via lévy flights. In: 2009 World Congress on Nature & Biologically Inspired Computing (NaBIC), IEEE, pp 210–214

Yang XS, Ting T, Karamanoglu M (2013) Random walks, Lévy flights, markov chains and metaheuristic optimization. In: Future information communication technology and applications, Springer, New York, pp 1055–1064

Yu H, Yu Y, Liu Y, Wang Y, Gao S (2016) Chaotic grey wolf optimization. In: 2016 international conference on progress in informatics and computing (PIC), IEEE, pp 103–113

Zhang X, Feng T (2018) Chaotic bean optimization algorithm. Soft Comput 22(1):67–77

Zhou Y, Zhou Y, Luo Q, Abdel-Basset M (2017) A simplex method-based social spider optimization algorithm for clustering analysis. Eng Appl Artif Intell 64:67–82

Zhou Y, Miao F, Luo Q (2019a) Symbiotic organisms search algorithm for optimal evolutionary controller tuning of fractional fuzzy controllers. Appl Soft Comput 77:497–508

Zhou Y, Wu H, Luo Q, Abdel-Baset M (2019b) Automatic data clustering using nature-inspired symbiotic organism search algorithm. Knowl Based Syst 163:546–557

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Communicated by V. Loia.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Ouertani, M.W., Manita, G. & Korbaa, O. Chaotic lightning search algorithm. Soft Comput 25, 2039–2055 (2021). https://doi.org/10.1007/s00500-020-05273-0

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00500-020-05273-0