Abstract

Given an undirected, connected, edge-weighted graph G and a positive integer d, the degree-constrained minimum spanning tree (dc-MST) problem aims to find a minimum spanning tree T on G subject to the constraint that each vertex is either a leaf vertex or else has degree at most d in T, where d is a given positive integer. The dc-MST is \(\mathcal {NP}\)-hard problem for d\(\ge \) 2 and finds several real-world applications. This paper proposes a hybrid approach (\(\mathcal {H}\)SSGA) combining a steady-state genetic algorithm and local search strategies for the this problem. An additional step (based on perturbation strategy at a regular interval of time) in the replacement strategy is applied in order to maintain diversity in the population throughout the search process. On a set of available 107 benchmark instances, computational results show the superiority of our proposed \(\mathcal {H}\)SSGA in comparison with the state-of-the-art metaheuristic techniques.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Given an undirected, connected and edge-weighted graph G(V, E, w), where V is the set of nodes or vertices; E is the set of edges; and w(i, j) is a positive weight that is associated with each edge \(e_{ij} \in E\) whose end points are i and j vertices, the degree-constrained minimum spanning tree (dc-MST) problem aims to find a minimum spanning tree (T) of G such that each vertex is either a leaf vertex or else has degree at most d in T, where d is a given positive integer.

There is a rich literature related to dc-MST problem. For example, minimum branch vertices and minimum degree sum of branch vertices based spanning tree problems (Cerrone et al. 2014; Gargano et al. 2002; Marín 2015; Moreno et al. 2018; Silvestri et al. 2017; Sundar and Singh 2012), and a closely related version of dc-MST has been recently introduced in an uncertain random network, where some weights are uncertain variables and others are random variables (Gao et al. 2017).

The dc-MST is \(\mathcal {NP}\)-Hard for d\(\ge \) 2 (Garey and Johnson 1979). This problem finds several real-world applications, such as in the context of backplane wiring among pins, where any pin could be wrapped by at most a fixed number of wire-ends on the wiring panel (Boldon et al. 1996); in designing the road system that can be used to serve the suburbs with the constraint that no more than four roads may meet at any crossing (Savelsbergh and Volgenant 1985); in VLSI designing, where the number of transistors that can be driven by the output current of a transistor is the degree bound for VLSI routing trees (Boldon et al. 1996); in electrical circuits design (Narula and Ho 1980); and in communication networks where the maximum degree in a spanning tree is a measure of vulnerability to single-point failures (Ravi et al. 1993).

The dc-MST is a well-studied problem. Many approaches including exact as well as heuristic approaches have been developed for this problem. Among these approaches, Narula and Ho (1980) proposed a primal and a dual heuristic procedure and a branch-and-bound algorithm for this problem. Later, two general heuristic and a branch-and-bound algorithm were proposed (Savelsbergh and Volgenant 1985). Boldon et al. (1996) proposed four heuristic based on Prim’s algorithm (Prim 1957).

Literature has also witnessed a number of metaheuristic approaches for this problem. Among metaheuristic approaches, various versions of genetic algorithm (GA) have been proposed. For example, Zhou and Gen (1997) presented a GA based on Prufer-encoding, Knowles and Corne (2000) presented a GA based on a \(|V| \times \) (d-1) array encoding, and Raidl and Julstrom (2000) presented GA using a weight-coding. Later, Raidl and Julstrom (2003) further presented two versions (ES-EA and HES-EA) of evolutionary approach in which the first version (ES-EA) demonstrates the usefulness of the edge-set encoding, and the second version (HES-EA) which also uses edge-set encoding, but incorporates edge-cost heuristic in the initialization, crossover operator and mutation operator. The basic idea behind edge-cost heuristic in HES-EA (Raidl and Julstrom 2003) is to include low-cost edges into a candidate solution with higher probabilities than high-cost edges. Experimental results show that the edge-set encoded with edge-cost heuristic, i.e., HES-EA, performs better than the previous versions of GA.

In addition, various ant colony optimization approaches (Bau et al. 2005; Bui and Zrncic 2006; Doan 2007) as well as particle swarm optimization approaches (Binh and Nguyen 2008; Ernst 2010) have been proposed for the dc-MST problem. Bui et al. (2012) proposed an ant-based algorithm (ABA) for the dc-MST problem. In ABA, ants, while exploring the graph, identify a subset of edge-set so that a degree-constrained spanning tree can be constructed from this set of edges. ABA uses two local search strategies—2-EdgeReplacement and 1-EdgeReplacement—to further improve the solution quality of currently constructed solution. In 2-EdgeReplacement, two edges associated with the current feasible degree-constrained spanning tree (say T) are examined for replacement with the two new edges \(\in E \setminus T\) without violating the degree constraint of T with the aim of further reduction in the weight of T. Whereas, in 1-EdgeReplacement, an edge in the current T is examined for replacement with a new edge \(\in E \setminus T\) without violating the degree constraint of T with the aim of further reduction in the weight of T.

GA is a well-known evolutionary algorithm and has an array of various encodings, genetic operators (crossover and mutation operators) for combinatorial optimization problems. Even GA is flexible to integrate with problem-specific heuristic in order to find high-quality solutions to the combinatorial optimization problem under consideration, leading to various variants of GA or hybrid GAs for the same combinatorial optimization problem in the literature. For the dc-MST problem, many researchers have proposed many variants of GA or hybrid GA (Raidl and Julstrom 2000, 2003; Zhou and Gen 1997) in search of finding high-quality solutions. In this paper, we also develop a variant of hybrid genetic algorithm (hybrid approach) for the dc-MST problem. The motivation behind the development of hybrid approach is our new designed problem-specific crossover operator which is quite different from the existing crossover operators including crossover operator of HES-EA (Raidl and Julstrom 2003) (see Sect. 3.5). To make our hybrid approach effective and robust, we incorporate various strategies (such as local search strategies, if applied, are used to intensify the search around the generated child solution and an additional step (based on perturbation strategy at a regular interval of time) in the replacement strategy is applied in order to maintain diversity in the current population throughout the search process) that try to balance the trade-off between exploitation and exploration throughout the search. Hence, our hybrid approach combines a steady-state genetic algorithm and local search strategies for the dc-MST problem. On the available 107 benchmark instances, experimental results show the superiority of our proposed hybrid approach in comparison with the best-so-far hybrid GA (i.e., HES-EA Raidl and Julstrom 2003) and other state-of-the-art metaheuristic technique (ABA Bui et al. 2012). Hereafter, our proposed hybrid approach will be referred to as \({\mathcal {H}}\)SSGA. Note that our proposed hybrid approach \({\mathcal {H}}\)SSGA is quite different the hybrid approach (HES-EA) (Raidl and Julstrom 2003) on mainly three components—problem-specific crossover operator, local search strategies and an additional step in the replacement strategy. Also, note that local search strategies in \({\mathcal {H}}\)SSGA which is based on two-edges replacement (referred to as 2ER) and one-edge replacement (referred to as 1ER) are applied conditionally. 2ER follows the idea of 2-EdgeReplacement local search strategy used in ABA (Bui et al. 2012), but 1ER which is common idea is different from 1-EdgeReplacement local search strategy used in ABA (Bui et al. 2012).

The organization of the remaining paper is as follows: Sect. 2 presents a brief discussion on steady-state genetic algorithm (SSGA); Sect. 3 presents our proposed hybrid approach (\({\mathcal {H}}\)SSGA) for the dc-MST problem; Sect. 4 presents computational results; and Sect. 5 presents concluding remarks.

2 Steady-state genetic algorithm

Genetic algorithm (GA) is a stochastic search technique that is stem from the principles of natural evolution (Holland 1975). In nature, during the evolution of the population, individuals in the population compete with each other to survive. Individuals who are more fit remain intact, while less fit individuals do not survive. Similarly, in GA, more fit chromosomes (solutions) have higher chances to participate in genetic operators and to propagate their genes from one generation to another. Genetic operators help GA in exploiting the promising regions of the search space as well as in exploring new region of the search space. Readers who are interested in a general introduction to GA and its applications may find in Cerrone et al. (2016), Goldberg (1989), Iordache et al. (2007) , Pop et al. (2013).

This paper presents a hybrid steady-state genetic algorithm for the dc-MST problem. Steady-state GA (SSGA) is different from generational GA (GGA) (Davis 1991), as GGA, in each generation, generates a population of new child solutions from the old population with the help of genetic operators and replaces usually the current parent population with the newly generated child population. While SSGA, in each generation, typically generates a single new child solution from the old population with the help of genetic operators and replaces an individual (solution) in the current population with the newly generated child solution.

3 Hybrid steady-state genetic algorithm for the dc-MST

This section discusses the framework of our proposed hybrid approach (\({\mathcal {H}}\)SSGA) for the dc-MST problem that combines a steady-state genetic algorithm and local search strategies.

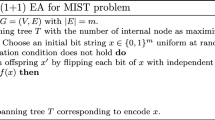

Algorithm 1 presents the pseudocode of \({\mathcal {H}}\)SSGA for the dc-MST problem, where \( < {T}_1, {T}_2, \dots , {T}_{pop}>\) are feasible solutions of the population with population size pop; \(T^{gb}\) stores the best-so-far solution; \( BTS(T_1, T_2, \dots , T_{pop})\) is a function of binary tournament selection method that returns a parent solution; \(Xover(p_1, p_2)\) is a function of crossover operator applied on two selected parent solutions (\(p_1\) and \(p_2\)) and returns a child solution \(T^C\); and \(Mut(p_1)\) is a function of mutation operator applied on the selected parent solution (\(p_1\)) and returns a child solution \(T^C\). Both crossover (Xover) and mutation (Mut) operators are applied in a mutually exclusive way. u01 is a uniform variate, and \(P_c\) is a probability parameter that is to be determined empirically. Once the child solution \(T^C\) is generated, local search strategies (LS) based on two-edge replacement (2ER) and one-edge replacement (1ER) methods are applied conditionally (See line no. 11 of Algorithm 1) in order to further improve the solution quality of \(T^C\). Hereafter, if the current child solution \(T^C\) is found to be unique, then it is inserted into the current population by replacing an individual (solution) of the current population whose fitness is greater than the average fitness of its current population. If \(T^C\) is not unique, \(T^C\) is discarded. An additional step in the replacement strategy is applied in order to maintain diversity in the current population throughout the search process. Once the termination criterion is met, \({\mathcal {H}}\)SSGA stops executing.

The following subsections discuss the details of various components of \({\mathcal {H}}\)SSGA.

3.1 Encoding

To represent a chromosome (solution or spanning tree (\(T_i\))), an edge-set encoding (Raidl and Julstrom 2003) is used. As per this encoding, each solution \(T_i\) consists of a set of |V|-1 edges. The reason to choose this encoding is that it not only offers high locality and heritability, but also adaptive to problem-specific genetic operators.

3.2 Generation of initial solutions of the population

\({\mathcal {H}}\)SGGA follows Prim’s algorithm (Prim 1957) to generate an initial solution of the population. Initially, a degree-constrained spanning tree (\(T_i\)) and the set S are empty; create a copy, say U, of V; label each vertex \(v \in V\)unmarked (\(mark[v] \leftarrow 0 \)); and set the degree of each vertex \(v \in V\) of \(T_i\) to zero (\(deg[v] \leftarrow 0 \)). Select a vertex \(v_1 \in U\) randomly, and add this selected \(v_1\) to S. Delete this selected \(v_1\) from U. Label \(v_1\)marked (\(mark[v_1] \leftarrow 1\)). Select a random edge \(e_{{v_1}{v_2}}\) that connects a vertex \(v_1 \in S\) to a vertex \(v_2 \in U\), and add this selected edge \(e_{{v_1}{v_2}}\) to \(T_i\). Increment the value of \(deg[v_1]\) and \(deg[v_2]\) in \(T_i\) by one due to addition of an edge \(e_{{v_1}{v_2}}\) to \(T_i\). Add \(v_2\) to S, and then delete \(v_2\) from U. Label \(v_2\)marked (\(mark[v_2] \leftarrow 1\)). Hereafter, iteratively at each step, search a random edge (say \(e_{ij}\)) that connects a vertex \(i \in S\) (\(deg[i] < d\)) to an unmarked vertex \(j \in U\). Add this searched \(e_{ij}\) to \(T_i\) and increment the value of deg[i] and deg[j] in \(T_i\) by one. Add j to S, and then delete j from U. Label jmarked (\(mark[j] \leftarrow 1\)). This whole procedure is repeated again and again until U becomes empty. At this juncture, a feasible degree-constrained spanning tree (solution) \(T_i\) is constructed.

Hereafter, uniqueness of each generated \(T_i\) is checked against the initial solutions of the population generated so far. If the current generated initial solution is unique, it is included into the population, otherwise it is discarded.

3.3 Fitness

Once an initial solution \(T_i\) is generated, its fitness (say (\(F(T_i)\)) is computed as the sum of weight of edges in \(T_i\).

3.4 Selection

\({\mathcal {H}}\)SGGA follows binary tournament selection method to select a parent solution. This method is applied two times in order to select two parent solutions for the crossover operator, whereas this method is applied one time in order to select a parent solution for the mutation operator. As per this method, two different solutions are picked uniformly at random from the current population. With probability (\(P_{b}\)), fitter one between these two selected solutions is selected as a parent solution, otherwise the worse one is selected as a parent solution with probability (1 -\(P_{b}\)).

3.5 Crossover operator

Our proposed crossover operator (Xover) is a problem-specific crossover operator (say Xover) that tries to inherit good edges of parent individuals in the newly generated child solution (say \(T^C\)) as much as possible and at the same time Xover maintains the degree constraint of all non-leaf vertices of \(T^C\). Algorithm 2 presents the pseudocode of Xover for \({\mathcal {H}}\)SSGA whose description is as follows:

Xover starts with selecting two chromosomes (solutions) as parents (say, \(p_1\) and \(p_2\) ) from the population with the help of binary tournament selection method. Initially, consider the degree-constrained spanning tree T associated with an empty solution (say child solution \(T^C\)) as an empty set, and also consider a set (say S) as an empty set. Set the degree of each vertex \(v \in V\) in T zero (i.e., \(deg[v] \leftarrow 0 ~ \forall v\in V\)), and label each vertex \(v \in V\)unmarked (\(mark[v] \leftarrow 0 \)). At the beginning of Xover, add a vertex \(v_1 \in V\), which is selected uniformly at random, to S. Label \(v_1\)marked (\(mark[v_1] \leftarrow 1\)). With probability \((Pb_{{p_1}{p_2}})\), pick a random edge among all candidate edges in \(p_1\), otherwise pick a random edge among all candidate edges in \(p_2\) with probability (1-\(Pb_{{p_1}{p_2}}\)). Here a candidate edge is an edge that connects a vertex in S to a vertex \( v_2 \in V \setminus S\); and the probability \(Pb_{{p_1}{p_2}}\) is defined as \(\frac{1/F(p_1)}{1/F(p_1)+1/F(p_2)}\) (Beasley and PC 1996). \(F(p_1)\) and \(F(p_2)\), respectively, are the fitness of \(p_1\) and \(p_2\). Such probability mechanism favors the fitter parent so that more and more number of edges of fitter parent would participate in constructing T of \(T^C\) in comparison with that of lesser fit parent. Add this selected edge \(e_{{v_1}{v_2}}\) to T of \(T^C\). Increment the value of \(deg[v_1]\) and \(deg[v_2]\) by one. Add \(v_2\) to S, and label \(v_2\)marked (\(mark[v_2] \leftarrow 1\)). Hereafter, iteratively at each step, search a random edge (say \(e_{ij}\)) that connects a vertex \(i \in S\) (\(deg[i] < d\)) to an unmarked vertex \(j \in V \setminus S\), from either \(p_1\) with probability \((Pb_{{p_1}{p_2}})\) or \(p_2\) with probability (\(1-Pb_{{p_1}{p_2}}\)). If the search is successful, add this searched \(e_{ij}\) to T of \(T^C\). Increment the value of deg[i] and deg[j] by one. Add j to S, and label jmarked (\(mark[j] \leftarrow 1\)). If the search is not successful, Xover switches to other parent in order to search another random edge (say \(e_{ij}\)). If the search is successful, add this searched edge \(e_{ij}\) to T of \(T^C\). Increment the value of deg[i] and deg[j] by one. Add j to S, and label jmarked (\(mark[j] \leftarrow 1\)). If the search is still not successful, then search a minimum edge-weight edge (say \(e_{ij}\)) that connects a vertex \(i \in S\)\((deg[i]<d)\) to a vertex \(j \in V\setminus S\) from E-\(\{\) edges of both parent solutions \(p_1\) and \(p_2\)\(\}\). Add this searched edge \(e_{ij}\) to T of \(T^C\). Increment the value of deg[i] and deg[j] by one. Add j to S, and label jmarked (\(mark[j] \leftarrow 1\)). This procedure is repeated until \(V \setminus S\) becomes empty. At this juncture, a feasible degree-constrained spanning tree T of \(T^C\) (newly generated child solution) is constructed.

Though our Xover shares similar ideas of crossover operator used in ES-EA (Raidl and Julstrom 2003) and HES-EA (Raidl and Julstrom 2003), but the way of inheriting edges from parents is quite different. In ES-EA, the crossover operator selects edges of parents in a random order, whereas in HES-EA, the crossover operator uses edge-cost heuristic to select edges of parents. The basic idea of edge-cost heuristic is to include low-cost edges into a candidate solution with higher probabilities than high-cost edges. After considering edges from both parents in ES-EA and HES-EA, if the generated degree-constrained spanning tree T associated with the child solution is not feasible, then to make it feasible, edges are selected from the set of edges \(E \setminus T\).

Crossover in HES-EA (Raidl and Julstrom 2003)

We present an example that illustrates the difference between crossover operator used in HES-EA (Raidl and Julstrom 2003) and our proposed Xover. For that we consider the dc-MST problem with \(d=3\). In this example, we consider an edge-weighted, undirected and connected graph \(G_1 (V, E, w)\), where \(|V|=7\); \(|E|=21\); and a weight (w) is associated with each edge \(\in E\) (one can see Fig 1). Figure 1 presents the edge-weight matrix (\(C_1\)) of \(G_1\). Figure 2a, b represents two parent solutions \(p_1\) and \(p_2\), respectively. The fitness of \(p_1\) and \(p_2\) is 27 and 24, respectively. To generate a child solution \(T^C\), Xover for \({\mathcal {H}}\)SSGA starts with selecting a vertex (say \(v_7\)) randomly (see Fig. 3a). Xover probabilistically selects an edge \(e_{{v_7}{v_6}}\) that connects a vertex \(v_7 \in S\) to a vertex \(v_6 \in V \setminus S\) from parent \(p_2\) (as per Algorithm 2) and adds this selected edge to the empty degree-constrained spanning tree (say T) of \(T^C\) (see Fig. 3b). Hereafter, iteratively, at each step, Xover selects an edge either from \(p_1\) with probability \((Pb_{{p_1}{p_2}})\) or from \(p_2\) with probability (1-\(Pb_{{p_1}{p_2}}\)). Once an edge is selected, it is added to T. Continuing this iterative process, an edge \(e_{{v_7}{v_2}}\) is selected from parent \(p_2\) and is added to T of \(T^C\) (Fig. 3c). In a similar way, \(e_{{v_7}{v_4}}\) and \(e_{{v_6}{v_3}}\), respectively, selected from parent \(p_1\) and \(p_1\) are added to T of \(T^C\) (see Fig. 3d, e). Figure 3e shows the situation of no candidate edge from the current parent \(p_1\), then Xover switches to \(p_2\) in order to select a candidate edge; however, Xover also fails to find a single candidate edge after switching to \(p_2\). Xover, then, greedily selects a candidate edge \(e_{{v_6}{v_5}} \in E \setminus T\) (see Fig. 3f). Similarly, edge \(e_{{v_1}{v_2}} \in E \setminus T\) is also selected and is added to T of \(T^C\) (see Fig. 3g). Figure 3g presents a feasible generated T of \(T^C\) whose fitness is 13. Figure 4a–f illustrates how the crossover operator in HES-EA (Raidl and Julstrom 2003) is applied to generate a child solution \(T^C\). Figure 4a shows the set of edges (\(E_{p_1p_2}\)) that are common (\(E_{p_1} \cap E_{p_2} \)) to both parents (\(p_1\) and \(p_2\)), where \(E_{p_1}\) and \(E_{p_2}\) are the set of edges of \(p_1\) and \(p_2\), respectively. Figure 4b shows the set of edges (say \(E'\)) that includes \((E_{p_1} \cup E_{p_2}) - (E_{p_1} \cap E_{p_2} )\) edges from both parents. According to crossover operator used in HES-EA (Raidl and Julstrom 2003), first it includes all edges from the set \(E_{p_1p_2}\) to the empty degree-constrained spanning tree (T) of the child solution \(T^C\) (see Fig. 4c). Hereafter, iteratively, at each step, it greedily selects an edge from the set \(E'\) without violating the degree constraint of T of \(T^C\). Continuing this iterative process, first an edge \(e_{{v_7}{v_6}}\) is selected (see Fig. 4d). Hereafter, edges \(e_{{v_7}{v_4}}\) and \(e_{{v_4}{v_2}}\) are selected and are added to T of \(T^C\) (see Fig. 4e, f). Figure 4f denotes the resultant feasible T of \(T^C\) whose fitness is 23. ES-EA (Raidl and Julstrom 2003) follows the same procedure to generate \(T^C\) with the difference that it selects edges randomly from the set \(E'\).

3.6 Mutation operator

The role of mutation operator is used to provide diversity in the population. In \({\mathcal {H}}\)SSGA, mutation operator (referred to as Mut) starts with a parent solution (\(p_1\)) selected from the population with the help of binary tournament selection method. Copy this selected parent solution to an empty child solution (say \(T^C\)). Hereafter, Mut performs edge-deletion-insertion operation on \(T^C\). In this operation, first deletion of an edge (say \(e_{uv}\)) selected randomly from the spanning tree T of \(T^C\) is performed, leading to the partition of T into two different components (say \(T_u\) and \(T_v\)). To connect these two components, an edge (different from \(e_{uv}\)) is searched in \(E \setminus T\) in such a way that the degree constraint of the resultant T does not get violated after insertion of this searched edge. Note that ES-EA (Raidl and Julstrom 2003) and HES-EA (Raidl and Julstrom 2003) follow edge-insertion-deletion in mutation operator, whereas Mut follows edge-deletion-insertion. HES-EA (Raidl and Julstrom 2003) inserts low-weight edge instead of a random edge like ES-EA.

Also note that similar to (Sundar 2014; Sundar and Singh 2015, 2017), crossover operator (Xover) and mutation operator (Mut) for \({\mathcal {H}}\)SSGA are applied in a mutually exclusive way which is different from the way crossover and mutation operators are applied in ES-EA (Raidl and Julstrom 2003) and HES-EA (Raidl and Julstrom 2003). With probability \(P_c\), Xover is selected, otherwise Mut is selected with the probability (1-\(P_c\)). The reason behind this one is that Xover generates \(T^C\) with potentially good edges inherited from their parents, whereas Mut generates \(T^C\) based on edge-deletion-insertion operation. If Mut is applied after Xover, then the chances are high that the resultant child solution may lose some potentially good edges inherited from parent solutions of Xover.

3.7 Local search strategies (LS)

To further improve the quality of a currently generated child solution \(T^C\), local search strategies (LS) based on two-edges replacement (referred to as 2ER) and one-edge replacement (referred to as 1ER) methods are applied conditionally. Edge replacement strategy is a common idea that is based on deletion of an edge \(e_{uv} \in T\) of \(T^C\) and inclusion of a new edge \(e_{xy} \in E\). LS are applied on \(T^C\) only when the following condition holds true:

where \(T^{gb}\) is the best-so-far generated solution; \(F(T^{gb})\) and \(F(T^C)\) are, respectively, the fitness of \(T^{gb}\) and \(T^C\); \(\alpha \) is a parameter to be determined empirically; and \(dis(T^{gb}, ~T^C)\) denotes the distance between \(T^{gb}\) and \(T^C\) in terms of fraction of edges of \(T^C\) that are not common to the edges of \(T^{gb}\). The first part \(\frac{F(T^{gb})}{F(T^C)}\) in conjunction with the second part \(\alpha \times dis(T^{gb}, ~T^C)\) of Eq. 1 relates to the quality-and-distance feature. The rationale behind using this condition (equation) is that this equation avoids applying LS on such generated child solution which is slightly inferior to \(F(T^{gb})\) (quality), but is not sufficiently far from \(T^{gb}\) (distance). This way also saves the computational time. If the current child solution \(T^C\) satisfies equation 1, then the LS will be applied on \(T^C\). LS is applied on \(T^C\) in the following order: two-edges replacement (2ER) \(\rightarrow \)one-edge replacement (1ER). Descriptions of 2ER and 1ER are as follows:

2ER in \({\mathcal {H}}\)SSGA follows the idea of 2-EdgeReplacement local search strategy (Bui et al. 2012); however, 2-EdgeReplacement local search strategy in Bui et al. (2012) uses |V| / 2 iterations until no improvement is possible in Bui et al. (2012), whereas 2ER is applied at most three iterations. In each iteration of 2ER, a random edge (say \(e_{{u}{v}} \in T\) of \(T^C\)) is picked, and among all candidate non-adjacent edges of \(e_{{u}{v}}\) in T of \(T^C\), only that edge (say \(e_{{w}{x}}\)) is picked if replacement of \(e_{{u}{v}}\) and \(e_{{w}{x}}\) with two new edges \(e_{{u}{w}} \in E \setminus T \) and \(e_{{v}{x}} \in E \setminus T\) leads to the maximum improvement in the resultant T of \(T^C\).

1ER in \({\mathcal {H}}\)SSGA examines the edges of T of \(T^C\) for possible edge-replacement in two stages followed one-by-one. In the first stage of 1ER, for each edge \(e_{{u}{v}} \in T\) whose degree of at least one end point (vertex) is equal to d (i.e., \(deg[u] == d\) or \(deg[v] == d\)), search an appropriate edge \(e_{xy} \in E \setminus T\) whose \(w(x, y) \le w(u, v)\) for edge-replacement without violating the degree constraint of the resultant T of \(T^C\) if such edge-replacement takes place. If the search is successful, then \(e_{uv}\) is replaced with \(e_{xy}\) in T (see Algo. 3, line no. 5–9). The idea behind this edge-replacement is that if the degree of the vertex v (\(deg[v] == d\)) in T is reduced, then in the second stage, there will be possibility that the edge with lesser weight may attach to v. Keeping this idea, after completion of the first stage, the second stage is applied. In the second stage, for each edge \(e_{{u}{v}} \in T\), search an appropriate edge \(e_{xy} \in E \setminus T\) whose edge-weight w(x, y) is less than edge-weight w(u, v) of \(e_{uv}\) for exchange. If the search is successful, replace \(e_{uv}\) with \(e_{xy}\) in T (see Algo. 3, line no. 10–14), resulting in the reduction of weight of the resultant T of \(T^C\).

Note that our proposed 1ER for \({\mathcal {H}}\)SSGA is different from 1-EdgeReplacement (Bui et al. 2012). 1ER consists of two stages and is based on first-fit improvement strategy, whereas 1-EdgeReplacement (Bui et al. 2012) consists of only one stage and is based on best-fit improvement. Also, 1-EdgeReplacement in Bui et al. (2012) is applied repeatedly until no improvement is possible, whereas 1ER is applied at most three iterations (see the pseudocode of 1ER of LS in Algorithm 3).

3.8 Replacement strategy (RS)

Uniqueness of each newly generated child solution \(T^C\) is checked against all individuals of the current population. If \(T^C\) is found to be unique, then \(T^C\) replaces a randomly selected solution of the current population whose fitness is greater than the average fitness of the current population.

In addition to the above replacement strategy (RS), we follow one more step in this replacement strategy (referred to as RS+). This strategy is followed only when \(T^C\) is unique. The need of RS+ was felt intuitively during our initial experimentations that the search process of \({\mathcal {H}}\)SSGA without RS+ often got trapped into local optimum, indicating the lack of carrying out diversity in the population generation-over-generation in the search space effectively. RS+ is applied only when this indication is identified. Our assumption of this identification is based on this fact if the best-so-far generated solution (\(T^{gb}\)) stops emerging (in terms of better solution quality) for a certain number of generations, say \(PR_{pop}\) (\(PR_{pop}\) is set to 500 empirically after a large number of trials) in the course of search. In RS+, a perturbation strategy is applied on the current population at a regular interval of time (i.e., \(PR_{pop}\)). In this perturbation strategy, a subset (say rs) of individuals (solutions) of the current population is selected randomly, where rs is a parameter to be determined empirically. Each solution (say \(T_i \in rs\)) is perturbed with the help of mutation operator Mut (see Sect. 3.6). If the perturbed solution \(T^{'}\) of \(T_i\) is unique against all individuals of the current population, then \(T^{'}\) is included into the current population by replacing its own old solution \(T_i\). Otherwise, \(T^{'}\) is discarded. Applying perturbation strategy on a set of random solutions (rs) force the population to be diversified throughout the search process, helping in finding high-quality solutions.

4 Computational results

\({\mathcal {H}}\)SSGA is implemented in C and executed on a Linux-based operating system with the configuration of Intel Core i5 processor 3.3 GHz \(\times \) 4 with 4 GB RAM. In all our experiments with \({\mathcal {H}}\)SSGA, we have used \(pop = 300\) (population of solutions), \(P_b = 0.90\) (see Sect. 3.4) and \(P_c = 0.50\) (crossover probability, see Sect. 3.6), \(\alpha = 0.10\) (see Sect. 3.7), and \(rs = 0.05\) (5% solutions of pop are perturbed (see Sect. 3.8)). All these parameters are set empirically after a large number of trials. Although these parameter values provide good results on most of the instances, they may not be optimal for all instances. We have tested \({\mathcal {H}}\)SSGA on the available 107 benchmark instances which were also used for the recent one ant-based algorithm (ABA) (Bui et al. 2012). Some of these instances were also used for HES-EA (Raidl and Julstrom 2003). These instances can be classified into two groups—Euclidean and non-Euclidean instances. Euclidean instances consist of three different sets, i.e., CRD, SYM and STR, whereas non-Euclidean instances consist of three different sets, i.e., SHRD, random hard (R) and misleading hard (M). These benchmark instances can be downloaded from the link https://turing.cs.hbg.psu.edu/benchmarks/file_instances/spanning_tree/. The descriptions of 107 benchmark instances that are classified into six different data sets with varying sizes from 15 to 500 vertices (nodes) are as follows:

CRD data set This data set consists of graphs whose sizes vary from 50 to 100 vertices. Vertices in such a graph are generated by using a uniform distribution in a two-dimensional plane and edge-weight is the Euclidean distance between two vertices.

SYM data set This data set consists of graphs whose sizes vary from 50 to 70 vertices. These graphs are analogous to the CRD instances except vertices are generated by using a uniform distribution in a higher dimensional Euclidean space. Edge-weight is the Euclidean distance between two vertices.

STR data set This data set consists of graphs whose sizes vary from 50 to 100 vertices. Vertices in such a graph are randomly distributed points in a higher dimensional space grouped together as cluster and edge-weight is the Euclidean distance between two vertices.

SHRD data set This data set consists of graphs whose sizes vary from 15 to 30 vertices. These graphs are generated by assignment of non-Euclidean distances to the graph edges in such a way that the number of optimal solutions is limited (Krishnamoorthy et al. 2001).

Random-hard (R) data set This data set consists of graphs whose sizes vary from 50 to 200 vertices. These are non-Euclidean graph instances and edge-weights are randomly generated from a pre-defined interval by using a uniform distribution.

Misleading-hard (M) data set This data set consists of graphs whose sizes vary from 50 to 500 vertices. These are non-Euclidean graph instances and edge-weights are randomly generated from a pre-defined interval by using a uniform distribution. Graphs of M-data sets are designed to mislead greedy algorithms.

We compare our approach \({\mathcal {H}}\)SSGA with two state-of-the-art metaheuristic techniques, i.e., ant-based algorithm (ABA) (Bui et al. 2012) and HES-EA (Raidl and Julstrom 2003). Since authors (Bui et al. 2012) carried out all their experiments on a system based on Intel Core 2 Duo E8600 at 3.33 GHz with 6 GB RAM and executed ABA for 50 runs on each instance. Note that authors (Bui et al. 2012) used available 97 benchmark instances, but results of ABA on 97 instances reported by authors are partial in terms of average solution quality and average total execution time. Out of 97 instances, authors (Bui et al. 2012) reported the best value obtained over 50 runs on all instances, but did not report the average solution quality and average total execution time over 50 runs for 56 and 40 instances, respectively. Also, the computer system used for \({\mathcal {H}}\)SSGA is different from that of ABA (Bui et al. 2012). For HES-EA (Raidl and Julstrom 2003), authors performed 50 runs on each considered instance and used a termination criterion when the best-so-far solution obtained does not improve over 1,00,000 generations on considered instance, but did not report computational time on each considered instance. Authors carried out their experiments on a system based on Pentium-III/800-MHz PC, which is different from the computer system used for \({\mathcal {H}}\)SSGA. Hence, we find difficulty to analyze exact comparison with ABA due to different computer platform and the way the computational results are reported in their paper as well as HES-EA due to different computer platform and unavailability of computational time on each considered instance. Looking at all these aspects of difficulties, we have implemented HES-EA and ABA using same values of parameters mentioned in their respective papers for the purpose of giving a fair comparison with \({\mathcal {H}}\)SSGA. Like \({\mathcal {H}}\)SSGA, we have implemented ABA and HES-EA in C and executed on a Linux-based operating system with the configuration of Intel Core i5 processor 3.3 GHz \(\times \) 4 with 4 GB RAM. All approaches (\({\mathcal {H}}\)SSGA, HES-EA and ABA) have been executed for 50 independent runs on each instance in order to test their robustness. We have set the same stopping criterion (in terms of computational time) for each approach (\({\mathcal {H}}\)SGGA, HES-EA and ABA) as per the instance size (1 s for \(|V|<\)100, 10 s for \(|V|==100\), 60 s for \(|V|==200\), 200 s for \(|V|==300\), 600 s for \(|V|==400\), and 1000 s for \(|V|==500\)).

Subsequent subsections discuss a detailed comparison of \({\mathcal {H}}\)SSGA with HES-EA (Raidl and Julstrom 2003) and ABA (Bui et al. 2012).

4.1 Comparison of \({\mathcal {H}}\)SSGA with HES-EA (Raidl and Julstrom 2003) and ABA (Bui et al. 2012)

\({\mathcal {H}}\)SSGA has been compared with HES-EA (Raidl and Julstrom 2003) and ABA (Bui et al. 2012) on a set of available 107 benchmark instances. Since ABA uses the two local search strategies based on 2-EdgeReplacement and 1-EdgeReplacement in order to further improve the solution quality of the currently constructed solution, and our proposed approach \({\mathcal {H}}\)SSGA combines a steady-state genetic algorithm (SSGA) and local search strategies based on 1ER and 2ER. Therefore, in addition to analyzing the comparison of \({\mathcal {H}}\)SSGA with ABA, we have also analyzed the individual effect of 1ER and 2ER that combines with only SSGA (including RS+ step) part of \({\mathcal {H}}\)SSGA (referred to as, respectively, (SSGA)+1ER and (SSGA)+2ER) on benchmark instances.

Tables 1, 2 and 3 report the results of HES-EA, ABA, (SSGA)+2ER, (SSGA)+1ER and \({\mathcal {H}}\)SSGA on Euclidean and non-Euclidean instances. In these tables, column Instance denotes the name of instance; column |V| denotes the number of vertices corresponding to its instance; column d denotes the degree constraint on its corresponding instance; each next four columns Best, Avg, SD and ATET, respectively, denote the best value, the average solution quality, standard deviation and the average total execution time obtained by HES-EA, ABA, (SSGA)+2ER, (SSGA)+1ER and \({\mathcal {H}}\)SSGA over 50 runs. For each instance, the best value (Best) and the best average solution quality (Avg) among HES-EA, ABA, (SSGA)+2ER, (SSGA)+1ER and \({\mathcal {H}}\)SSGA are highlighted in bold.

Table 1 reports the results of 27 Euclidean instances. Comparing with HES-EA, \({\mathcal {H}}\)SSGA, in terms of Best, is better on 19, equal on 6 and is worse on 2 instances; \({\mathcal {H}}\)SSGA, in terms of Avg, is better on 18, equals on 1 and is worse on 8 instances. Comparing with HES-EA, (SSGA)+1ER, in terms of Best, is better on 20, equals on 6 and is worse on 1 instances; (SSGA)+1ER, in terms of Avg, is better on 21, equals on 1 and is worse on 5 instances. Similarly, comparing with HES-EA, (SSGA)+2ER, in terms of Best, is better on 8, equals on 4 and is worse on 15 instances; (SSGA)+2ER, in terms of Avg, is better on 2, equals on 1 and is worse on 24 instances. Comparing with ABA, \({\mathcal {H}}\)SSGA, in terms of Best, is better on 23 and equals on 4 instances; \({\mathcal {H}}\)SSGA, in terms of Avg, is better on 26 and equals on 1 instances. Comparing with ABA, (SSGA)+1ER, in terms of Best, is better on 22 and equals on 5; (SSGA)+1ER, in terms of Avg, is better on 26 and equals on 1 instances. Similarly, comparing with ABA, (SSGA)+2ER, in terms of Best, is better on 20, equals on 2 and is worse on 5; (SSGA)+2ER, in terms of Avg, is better on 17 equals on 1 and is worse on 9 instances.

Table 2 reports the results of 52 instances. Comparing with HES-EA, \({\mathcal {H}}\)SSGA, in terms of Best, is better on 11 and equals on 41 instances; \({\mathcal {H}}\)SSGA, in terms of Avg, is better on 26, equals on 24 and is worse on 2 instances. Comparing with HES-EA, (SSGA)+1ER, in terms of Best, is better on 11, equals on 40 and is worse on 1 instances; (SSGA)+1ER, in terms of Avg, is better on 20, equals on 28 and is worse on 4 instances. Similarly, comparing with HES-EA, (SSGA)+2ER, in terms of Best, is better on 5, equals on 29 and is worse on 18 instances; (SSGA)+2ER, in terms of Avg, is better on 11, equals on 7 and is worse on 34 instances. Comparing with ABA, \({\mathcal {H}}\)SSGA, in terms of Best, is better on 19 and equals on 33 instances; \({\mathcal {H}}\)SSGA, in terms of Avg, is better on 31 and equals on 21 instances. Comparing with ABA, (SSGA)+1ER, in terms of Best, is better on 19 and equals on 33 instances; (SSGA)+1ER, in terms of Avg, is better on 29, equals on 22 and worse on 1 instances. Similarly, comparing with ABA, (SSGA)+2ER, in terms of Best, is better on 16, equals on 22 and is worse on 14 instances; (SSGA)+2ER, in terms of Avg, is better on 23, equals on 6 and is worse on 23 instances.

Table 3 reports the results of 28 non-Euclidean instances. Comparing with HES-EA, \({\mathcal {H}}\)SSGA, in terms of Best, is better on 5 and equal on 23 instances; \({\mathcal {H}}\)SSGA, in terms of Avg, is better on 12 and equals on 16 instances. Comparing with HES-EA, (SSGA)+1ER, in terms of Best, is better on 4 and equals on 24 instances; (SSGA)+1ER, in terms of Avg, is better on 8, equals on 15 and worse on 5 instances. Similarly, comparing with HES-EA, (SSGA)+2ER, in terms of Best, is better on 3 and worse on 25 instances; (SSGA)+2ER, in terms of Avg, is better on 3 and worse on 25 instances. Comparing with ABA, \({\mathcal {H}}\)SSGA, in terms of Best, is better on 6 and equals on 22 instances; \({\mathcal {H}}\)SSGA, in terms of Avg, is better on 16, equals on 11 and worse on 1 instances. Comparing with ABA, (SSGA)+1ER, in terms of Best, is better on 3, equals on 22 and worse on 3 instances; (SSGA)+1ER, in terms of Avg, is better on 10, equals on 10 and worse on 8 instances. Similarly, comparing with ABA, (SSGA)+2ER, in terms of Best, is worse on all 28 instances; (SSGA)+2ER, in terms of Avg, is worse on all 28 instances.

One can observe clearly from the results of Tables 1, 2 and 3 that \({\mathcal {H}}\)SSGA and (SSGA)+1ER are superior to both HES-EA and ABA in terms of both Best and Avg.

4.2 Analyses on the two key components of \({\mathcal {H}}\)SGGA

In this subsection, we carry out analyses on the two key components of \({\mathcal {H}}\)SGGA: (i) effectiveness of quality-and-distance feature in the local search strategies (ii) effectiveness of RS+ in the replacement strategy. In addition, we also carry out statistical analyses about significant differences between \({\mathcal {H}}\)SGGA vs HES-EA and \({\mathcal {H}}\)SGGA vs ABA.

4.2.1 Effectiveness of quality-and-distance feature in the local search strategies

To analyze the effectiveness of quality-and-distance feature in the local search strategies (LS), we have performed experiments based on (i) \({\mathcal {H}}\)SSGA that uses quality-and-distance feature in the LS and (ii) \({\mathcal {H}}\)SSGA that does not use quality-and-distance feature (i.e., \({\mathcal {H}}\)SSGA - {quality-and-distance}) in the LS for the dc-MST problem. In these experiments, we consider two instances (SYM702 and SYM704 for \(d=2\)) selected randomly. The stopping criterion of \({\mathcal {H}}\)SSGA and \({\mathcal {H}}\)SSGA - {quality-and-distance} is set to 1 s for SYM702 and SYM704 instances. Figure 5a, b depicts the evolution of average solution quality (Avg) based on 50 runs) over average total execution time (ATET). Y-axis represents the AverageSolutionQuality, whereas X-axis represents the AverageTotalExecutionTime. The curves in Fig. 5a, b clearly demonstrate that \({\mathcal {H}}\)SSGA that uses quality-and-distance feature in the LS finds better solution qualities as well as converges faster than that of \({\mathcal {H}}\)SSGA - {quality-and-distance}. Hence, such experiments justify the usefulness of quality-and-distance feature in the LS.

4.2.2 Effectiveness of RS+ in the replacement strategy

To analyze the effect of RS+, we have performed experiments based on (i) \({\mathcal {H}}\)SSGA that uses RS+ and (ii) \({\mathcal {H}}\)SSGA without RS+ (i.e., \({\mathcal {H}}\)SSGA - {RS+}) for the dc-MST problem. In these experiments, we consider two instances (SYM702 and SYM704 for \(d=2\)) selected randomly. Figure 6a, b exhibit the average solution quality (Avg) over 50 runs versus the number of generations on considered instances. In these experiments, \({\mathcal {H}}\)SSGA and \({\mathcal {H}}\)SSGA - {RS+} are allowed to execute over 50000 generations. X-axis represents the Generation, while Y-axis represents Average Solution Quality. The curves in Fig. 6a, b clearly demonstrate that \({\mathcal {H}}\)SSGA that uses RS+ finds better solution qualities as well as converges faster than that of \({\mathcal {H}}\)SSGA - {RS+}. Hence, such experiments justify the usefulness of RS+ in the replacement strategy.

4.2.3 Statistical analysis

For statistical analysis, we perform nonparametric Wilcoxon’s signed-rank test (García et al. 2009) on each group of instances (Euclidean and non-Euclidean) in order to compare \({\mathcal {H}}\)SSGA with HES-EA (Raidl and Julstrom 2003) and ABA (Bui et al. 2012) in terms of the best (Best) and average solution quality (Avg). We use Wilcoxon’s signed-rank test calculator available at the link http://www.socscistatistics.com/tests/signedranks/Default2.aspx. For this statistical test, we first calculate the difference between the results obtained by each two compared approaches (HES-EA vs. \({\mathcal {H}}\)SSGA and ABA vs. \({\mathcal {H}}\)SSGA) on each group, and then, rank them according to its absolute value. For each group, \(R^+\) is the sum of ranks in which the second approach outperforms the first, while \(R^-\) denotes the sum of ranks for the opposite case. If \(min\{R^+;R^-\}\) is less than or equal to the critical value, then this test detects significant difference between the two compared approaches. The critical values are taken from the statistical table available at the link http://users.stat.ufl.edu/athienit/Tables/tables. Table 4 and 5 report the results of Wilcoxon’s signed-rank test with a level of significance \(\alpha = 0.05\) for the Best and Avg over 50 runs, respectively.

In Tables 4 and 5, column Group denotes the name of each groups; column Comparison denotes the name of two compared approaches; the next five columns Sample Size, Critical Value, \(R^+\), \(R^-\) and Significant, respectively, denote the sample size, critical value, \(R^+\), \(R^-\) and significant difference between the two compared approaches (“yes” if there exists a significant difference between two compared approaches, otherwise “no”) for each Best and Avg on each group. On comparison with HES-EA, \({\mathcal {H}}\)SSGA, in terms of Best, is better on 3 out of 4 statistical tests, and \({\mathcal {H}}\)SSGA, in terms of Avg is better on 4 out of 4 statistical tests. The result of test HES-EA vs. \({\mathcal {H}}\)SSGA for the Best is unknown because the sample size is not big enough to return a critical value at the level of significance \(\alpha = 0.05\). On comparison with ABA, \({\mathcal {H}}\)SSGA, in terms of Best, is better on 4 out of 4 statistical tests, and \({\mathcal {H}}\)SSGA, in terms of Avg is better on 4 out of 4 statistical tests. This test discloses significant differences between HES-EA vs. \({\mathcal {H}}\)SSGA and ABA vs. \({\mathcal {H}}\)SSGA. Tables 4 and 5 clearly show that \({\mathcal {H}}\)SSGA is superior to HES-EA and ABA.

4.3 Collective picture

This subsection presents a collective picture that describes an overall comparison of \({\mathcal {H}}\)SSGA with HES-EA (Raidl and Julstrom 2003) and ABA (Bui et al. 2012) on 107 benchmark instances.

Table 6 gives an overall comparison of \({\mathcal {H}}\)SSGA with HES-EA (Raidl and Julstrom 2003) and ABA (Bui et al. 2012) on both best value (Best) and average solution quality (Avg). One can observe clearly from the results of Table 6 that \({\mathcal {H}}\)SSGA is superior to HES-EA and ABA in terms of both Best and Avg.

5 Conclusion

In this paper, we have presented a hybrid approach (\({\mathcal {H}}\)SSGA) combining a steady-state genetic algorithm and local search strategies for the degree-constrained minimum spanning tree (dc-MST) problem. \({\mathcal {H}}\)SSGA is quite different from the hybrid approach (HES-EA) (Raidl and Julstrom 2003) which is the best one among all existing variants of genetic algorithm for dc-MST problem, particularly on three components—problem-specific crossover operator, local search strategies and an additional step in the replacement strategy. The way problem-specific crossover operator (Xover) inherits good edges of parent individuals in the newly generated child solution (say \(T^C\)) as much as possible and at the same time Xover maintains the degree constraint of all non-leaf vertices of \(T^C\) makes it quite different from the existing crossover operators designed for this problem. The role of local search strategies if applied on newly generated child solution is used to intensify the search around the generated child solution, whereas the role of an additional step (based on perturbation strategy at a regular interval of time) in the replacement strategy is used to maintain diversity in the current population throughout the search process. All components of \({\mathcal {H}}\)SSGA effectively coordinate with each other and help in making \({\mathcal {H}}\)SSGA more effective and robust in finding high-quality solutions. On a set of 107 benchmark instances, computational results show that \({\mathcal {H}}\)SSGA is overall superior to state-of-the-art metaheuristic techniques (HES-EA Raidl and Julstrom 2003 and ABA Bui et al. 2012). We have also performed experimental analyses that justify the usefulness of quality-and-distance feature in the local search strategies and RS+ in the replacement strategy in \({\mathcal {H}}\)SSGA.

In future work, quality-and-distance feature in the local search strategies as well as RS+ in the replacement strategy in \({\mathcal {H}}\)SSGA can be applied to develop variants of hybrid genetic algorithm for other \(\mathcal {NP}\)-hard spanning tree problems as well as other \(\mathcal {NP}\)-hard combinatorial optimization problems. Even quality-and-distance feature in the local search strategies in \({\mathcal {H}}\)SSGA can be applied to develop other metaheuristic techniques for \(\mathcal {NP}\)-hard combinatorial optimization problems. The idea used in designing problem-specific crossover operator for the dc-MST problem can be also applied to develop variants of hybrid genetic algorithm for other \(\mathcal {NP}\)-hard spanning tree problems.

References

Bau Y, Ho CK, Ewe HT (2005) An ant colony optimization approach to the degree-constrained minimum spanning tree problem. In: Proceedings of the computational intelligence and security, international conference (CIS 2005), Xi’an, China, December 15–19, 2005, Part I, pp 657–662

Beasley JE, PC C (1996) A genetic algorithm for the set covering problem. Eur J Oper Res 94:394–404

Binh HTT, Nguyen TB (2008) New particle swarm optimization algorithm for solving degree constrained minimum spanning tree problem. In: Proceedings of the PRICAI 2008: trends in artificial intelligence, 10th Pacific rim international conference on artificial intelligence, Hanoi, Vietnam, December 15–19, 2008, pp 1077–1085

Boldon B, Deo N, Kumar N (1996) Minimum-weight degree-constrained spanning tree problem: heuristics and implementation on an SIMD parallel machine. Parallel Comput 22(3):369–382

Bui TN, Zrncic CM (2006) An ant-based algorithm for finding degree-constrained minimum spanning tree. In: Proceedings of the genetic and evolutionary computation conference, GECCO 2006, Seattle, Washington, USA, July 8–12, 2006, pp 11–18

Bui TN, Deng X, Zrncic CM (2012) An improved ant-based algorithm for the degree-constrained minimum spanning tree problem. IEEE Trans Evol Comput 16(2):266–278

Cerrone C, Cerulli R, Raiconi A (2014) Relations, models and a memetic approach for three degree-dependent spanning tree problems. Eur J Oper Res 232(3):442–453

Cerrone C, Cerulli R, Gaudioso M (2016) OMEGA one multi ethnic genetic approach. Optim Lett 10(2):309–324

Davis L (1991) Handbook of genetic algorithms. Van Nostrand Reinhold, New York

Doan MN (2007) An effective ant-based algorithm for the degree-constrained minimum spanning tree problem. In: Proceedings of the IEEE congress on evolutionary computation (CEC 2007), 25–28 September 2007, Singapore, pp 485–491

Ernst AT (2010) A hybrid Lagrangian particle swarm optimization algorithm for the degree-constrained minimum spanning tree problem. In: Proceedings of the IEEE congress on evolutionary computation, CEC 2010, Barcelona, Spain, 18–23 July 2010, pp 1–8

Gao X, Jia L, Kar S (2017) Degree-constrained minimum spanning tree problem of uncertain random network. J Ambient Intell Humaniz Comput 8(5):747–757

García S, Molina D, Lozano M, Herrera F (2009) A study on the use of non-parametric tests for analyzing the evolutionary algorithms’ behaviour: a case study on the CEC’2005 special session on real parameter optimization. J Heuristics 15(6):617–644

Garey MR, Johnson DS (1979) Computers and intractability: a guide to the theory of NP-completeness. Freeman, San Francisco

Gargano L, Hell P, Stacho L, Vaccaro U (2002) Spanning trees with bounded number of branch vertices. In: Proceedings of 29th international colloquium, ICALP 2002 Malaga, Spain, vol 2380, pp 355–365

Goldberg DE (1989) Genetic algorithms in search optimization and machine learning. Addison-Wesley, Reading

Holland JH (1975) Adaptation in natural and artificial systems: an introductory analysis with applications in biology, control, and artificial intelligence. University of Michigan Press, Ann Arbor

Iordache GV, Boboila MS, Pop F, Stratan C, Cristea V (2007) A decentralized strategy for genetic scheduling in heterogeneous environments. Multiagent Grid Syst 3(4):355–367

Knowles JD, Corne D (2000) A new evolutionary approach to the degree-constrained minimum spanning tree problem. IEEE Trans Evol Comput 4(2):125–134

Krishnamoorthy M, Ernst AT, Sharaiha YM (2001) Comparison of algorithms for the degree constrained minimum spanning tree. J Heuristics 7(6):587–611

Marín A (2015) Exact and heuristic solutions for the minimum number of branch vertices spanning tree problem. Eur J Oper Res 245(3):680–689

Moreno J, Frota Y, Martins S (2018) An exact and heuristic approach for the d-minimum branch vertices problem. Comput Opt Appl 71(3):829–855

Narula SC, Ho CA (1980) Degree-constrained minimum spanning tree. Comput OR 7(4):239–249

Pop F, Cristea V, Bessis N, Sotiriadis S (2013) Reputation guided genetic scheduling algorithm for independent tasks in inter-clouds environments. In: 27th International conference on advanced information networking and applications workshops, WAINA 2013, Barcelona, Spain, March 25–28, 2013, pp 772–776

Prim R (1957) Shortest connection networks and some generalizations. Bell Syst Tech J 36:1389–1401

Raidl GR, Julstrom BA (2000) A weighted coding in a genetic algorithm for the degree-constrained minimum spanning tree problem. In: Proceedings of the 2000 ACM symposium on applied computing, Villa Olmo, Via Cantoni 1, 22100 Como, Italy, March 19–21, 2000, vol 1, pp 440–445

Raidl GR, Julstrom BA (2003) Edge sets: an effective evolutionary coding of spanning trees. IEEE Trans Evol Comput 7:225–239

Ravi R, Marathe M, Ravi S, Rosenkrantz D, Hunt H III (1993) Many birds with one stone: multi-objective approximation algorithms. In: Proceedings of the 25th annual ACM STOCS, pp 438–447

Savelsbergh MWP, Volgenant T (1985) Edge exchanges in the degree-constrained minimum spanning tree problem. Comput OR 12(4):341–348

Silvestri S, Laporte G, Cerulli R (2017) A branch-and-cut algorithm for the minimum branch vertices spanning tree problem. Comput Oper Res 81:322–332

Sundar S (2014) A steady-state genetic algorithm for the dominating tree problem. In: Proceedings of the simulated evolution and learning—10th international conference, SEAL 2014, Dunedin, New Zealand, December 15–18, 2014, pp 48–57

Sundar S, Singh A (2012) New heuristics for two bounded-degree spanning tree problems. Inf Sci 195:226–240

Sundar S, Singh A (2015) Metaheuristic approaches for the blockmodel problem. IEEE Syst J 9(4):1237–1247

Sundar S, Singh A (2017) Two grouping-based metaheuristics for clique partitioning problem. Appl Intell 47(2):430–442

Zhou G, Gen M (1997) A note on genetic algorithms for degree-constrained spanning tree problems. Networks 30(2):91–95

Acknowledgements

This work is supported in part by a grant (Grant Number YSS/2015/000276) from the Science and Engineering Research Board—Department of Science & Technology, Government of India.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Communicated by V. Loia.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Singh, K., Sundar, S. A hybrid genetic algorithm for the degree-constrained minimum spanning tree problem. Soft Comput 24, 2169–2186 (2020). https://doi.org/10.1007/s00500-019-04051-x

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00500-019-04051-x