Abstract

The working world is undergoing profound changes, and occupational accidents remain a global concern because of their substantial impact on productivity and workers’ safety. Failure Mode and Effects Analysis (FMEA) has been widely implemented to assess such risks. However, it fails to provide reliable results because of shortcomings of its Risk Priority Number (RPN) score, including neglecting the weight of risk factors, relying on a doubtful formulation, and performing poorly in distinguishing risks. This study presents a two-phase approach to identify and prioritize Health, Safety and Environment (HSE) risks that overcomes the shortcomings of the traditional score and focuses on critical risks instead of diverting organizational efforts to non-critical ones. In the first phase, potential risks are identified, and after the values of the risk factors are determined using the FMEA technique, the Fuzzy C-means (FCM) algorithm is applied to cluster these risks. Then, the weights of the risk factors are calculated using the Fuzzy Best–Worst Method (FBWM), and the clusters are labeled based on weighted Euclidean distance. In the second phase, a hybrid Multi-Criteria Decision-Making (MCDM) method based on the FBWM and the combined compromise solution is proposed to prioritize the risks belonging to the critical cluster. This creates a distinct priority for each risk and facilitates the implementation of corrective/preventive actions. The approach is applied in the automotive industry, and the results are compared with other FMEA-based MCDM methods to validate the findings. Finally, a sensitivity analysis is designed to show the ability and applicability of the proposed approach.

1 Introduction

Human resources, a crucial factor in production and service industries, are threatened by several factors, one of the most important of which is occupational accidents. Every year, millions of people succumb to such accidents or to work-related diseases, which corresponds to a loss of existing human resources (Moatari-Kazerouni et al. 2015). Such incidents are bound to result in disastrous economic consequences for workers and their families, employers, insurance companies, and the community (Smith et al. 2015). Aside from this, occupational accidents can be associated with mental and psychological as well as financial problems for individuals, and the disability or death of the worker can exacerbate the gravity of the situation. In terms of global economic effects, the most important are the withdrawal of active labor from the production cycle and a reduction in global Gross Domestic Product (GDP) of 4–6% or even more (Soleimani and Fattahi 2017; ILO 2019). According to the International Labour Organization (ILO), occupational accidents and work-related diseases account for 2.78 million deaths annually, of which 86.3 percent (2.4 million) are disease-related, and this corresponds to 5 to 7 percent of mortality around the world (ILO 2019). Figure 1 shows the common occupational diseases that result in death, by continent. In addition, 374 million workers suffer injuries caused by non-fatal occupational accidents every year (Hämäläinen et al. 2017). For these reasons, implementing Health, Safety, and Environment (HSE) management systems has been increasingly recognized as a significant priority among industries and organizations.

Fig. 1 Work-related mortality rate derived from occupational disease by continents (Hämäläinen et al. 2017)

Since the automotive industry generally consists of various lines such as assembly, painting, and mold making, workers are in danger of being poisoned by toxic chemicals and injured by physical activities. Hazards include chemical inhalation, high-frequency sound, electric shocks, poor ergonomics, and molds or parts falling during work, all of which are liable to result in dangerous accidents or disease (Yousefi et al. 2018). This manifests the necessity of implementing an HSE management system. Such a system significantly reduces the likelihood that risk factors occur by improving health and safety levels and raising environmental awareness at all organizational levels (Rezaee et al. 2020). This systematic framework provides methods and guidelines to identify hazardous risks and, consequently, to control or alleviate the side effects of such threats in the workplace (Pourreza et al. 2018). Hence, how to carry out an efficient risk assessment process has received significant attention from managers and researchers.

There is a diverse array of risk assessment approaches, of which Failure Mode and Effects Analysis (FMEA) is one of the most widely used standard techniques. This proactive technique is used to identify, evaluate, and prevent or eliminate the causes or effects of potential failures in a system (Liu 2016). In most studies, the FMEA technique has been applied based on the conventional Risk Priority Number (RPN) score, despite its numerous shortcomings (Liu et al. 2019a; Huang et al. 2020). This score is obtained by multiplying three risk factors: Severity (S), Occurrence (O), and Detection (D). There is, however, no scientific logic behind this multiplication, so risk prioritization based on this score cannot be reliable (Kutlu and Ekmekçioğlu 2012). In addition, in real-world applications the SOD factors do not have equal importance and should not be treated as equal when ranking risks/failure modes, yet the conventional RPN score neglects the relative importance of the risk factors (Chanamool and Naenna 2016). Indeed, in terms of improvement efforts, this score places a premium on the risk with the higher RPN, although that risk may have a lower severity than other risks with a lower RPN (Baghery et al. 2018). As another critical defect, the conventional RPN fails to distinguish risks that have the same RPN score but different risk factor values (Rezaee et al. 2018b). Furthermore, the RPN formula does not consider other essential factors, such as the cost of risk occurrence, which can play a critical role in risk assessment (Rezaee et al. 2017a). Moreover, the traditional FMEA technique provides no framework for comparing risks directly on the values of their risk factors rather than on the product of these values (Tay et al. 2015). A risk assessment approach should provide decision-makers with outputs of high separability and reliability. These features empower managers to plan corrective and preventive measures accurately under limited organizational resources and prevent them from focusing on non-critical risks. Therefore, an effective approach is needed to identify critical risks more appropriately.
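
The separability problem of the RPN can be illustrated with a few lines of Python. This is a minimal sketch: the two risks and their ratings are hypothetical, and a 1 to 5 rating scale is assumed for all three factors.

```python
from itertools import product


def rpn(severity: int, occurrence: int, detection: int) -> int:
    """Conventional Risk Priority Number: the plain product S * O * D."""
    return severity * occurrence * detection


# Two hypothetical risks rated on an assumed 1-5 scale (illustrative values only).
fatal_but_rare = rpn(severity=5, occurrence=1, detection=2)
minor_but_frequent = rpn(severity=1, occurrence=5, detection=2)

# Both collapse to the same score although their severities differ widely.
same_score = fatal_but_rare == minor_but_frequent

# Count how many distinct scores the 5 * 5 * 5 = 125 rating triples produce.
all_triples = list(product(range(1, 6), repeat=3))
distinct_scores = {rpn(s, o, d) for s, o, d in all_triples}

print(fatal_but_rare, minor_but_frequent, same_score)
print(len(all_triples), "triples map to", len(distinct_scores), "distinct RPN values")
```

Because many rating triples share one product, risks with very different profiles receive identical priorities, which is the defect that the weighting and clustering steps of the proposed approach are meant to address.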

This study aims to present a novel approach that addresses the mentioned shortcomings of the traditional FMEA technique. The proposed approach is performed in two main phases using FMEA, the Fuzzy Best–Worst Method (FBWM), the Fuzzy C-means (FCM) algorithm, and the Combined Compromise Solution (CoCoSo) method. In the first phase, risks are identified through the FMEA technique. After the values of the risk factors, including SOD and two other main managerial criteria, are determined, the FBWM is applied to calculate the weights of these factors. The reason for using this method is threefold (Guo and Zhao 2017): first, it addresses the weighting problem in the traditional FMEA technique; second, it handles the uncertainty arising from ambiguous and equivocal opinions given by experts in this technique; and third, it requires fewer pairwise comparisons than the Analytic Hierarchy Process (AHP), achieves higher consistency than the conventional Best–Worst Method (BWM), and avoids the complexity of applying tailed scales (e.g. the 1–9 scale) in expressing preferences. After the final weights of the risk factors are obtained, the unsupervised FCM algorithm is used to cluster the recognized risks into distinct risk categories. In this phase, instead of calculating the RPN score, risks are compared directly on their risk factors, which yields a sensible and detailed comparison among them. In other words, risk clustering enables Decision-Makers (DMs) to compare risks quickly without losing any information, especially when there are resource or time limitations (Zhang et al. 2018; Duan et al. 2019).

After clustering, the weighted Euclidean distance is calculated to determine the critical cluster while considering the relative importance of the risk factors: the larger the distance of a cluster, the more critical it is. Each cluster contains a different number of risks, which may be large, especially in large-scale problems. Since providing corrective/preventive actions for all risks in the most critical cluster is likely to be time-consuming or costly, DMs face the challenge of prioritizing these risks. To address this issue, the CoCoSo method, a novel and powerful ranking method (Yazdani et al. 2019), is used in the second phase to increase the separability of risk priorities and the reliability of results in comparison with the FMEA. The weights derived from the FBWM in the first phase are applied in the calculation process of this method. To illustrate its applicability, the proposed approach is applied in a manufacturing company. Since various Multi-Criteria Decision-Making (MCDM) ranking methods have been proposed, the results of some other methods are also provided for comparison and to demonstrate the robustness of the proposed method. The main contribution of the proposed approach is its focus on critical risks, which are clustered and prioritized in order to reduce their negative effects on the system significantly compared to the FMEA technique and other similar approaches. Further contributions are the application of different weights to the risk factors to determine the critical cluster using the FCM-FBWM approach, and the consideration of these weights and of the uncertainty of experts' opinions in the risk prioritization process to increase the reliability and separability of the outputs.

It should be noted that the fuzzy form of BWM is applied because uncertainty in the FMEA team members’ opinions on risk factors is inevitable. Furthermore, studies that use clustering algorithms with the FMEA technique have not prioritized the risks within the critical cluster, although determining the most critical risk is of cardinal importance given resource and time limitations. To solve this problem, this study uses the CoCoSo method, one of the newest and more robust MCDM techniques (Ulutaş et al. 2020), to determine the order of risks in the critical cluster and thereby increase the separability of risk priorities and the reliability of results compared to the FMEA.

The rest of this study is organized as follows: Sect. 2 is dedicated to reviewing some studies about FMEA applications. In Sect. 3, supplementary explanations of the FCM algorithm, FBWM, and CoCoSo method are presented. In Sect. 4, the proposed approach is explained in detail. In Sect. 5, results are presented and discussed comprehensively along with a comparison with other methods and sensitivity analysis. Finally, in Sect. 6, concluding remarks and suggestions for future studies are presented.

2 Literature review

FMEA, a proactive group-oriented technique for risk assessment and reliability analysis, has been successfully applied in a wide range of real case studies (Liu et al. 2018; Rastayesh et al. 2019). To implement this technique, it is first necessary to create a multi-disciplinary team in the field of study (Carpitella et al. 2018). After that, the following stages are usually executed (Yousefi et al. 2018): (I) the FMEA team identifies potential failures in a system and rates them on the SOD factors; (II) the RPN score is calculated for each failure and the failures are prioritized; (III) corrective/preventive actions are planned for the failures that have priority. With the growing number of studies on FMEA, the number of developed or modified methods and applications is increasing significantly. Some of these methods have been presented using artificial intelligence algorithms (Jee et al. 2015; Adar et al. 2017; Rezaee et al. 2017b; Renjith et al. 2018; Yousefi et al. 2020), and mathematical programming (Garcia et al. 2013; Chang et al. 2013; Behraftar et al. 2017; Rezaee et al. 2017a; Bakhtavar and Yousefi 2019). Since the conventional RPN does not perform acceptably in risk prioritization, and since the FMEA technique involves multiple risk factors, MCDM techniques are required to solve this problem (Liu et al. 2019a; Huang et al. 2020; Karunathilake et al. 2020).

Various MCDM methodologies have been integrated with the traditional FMEA technique, some of which are as follows. Helvacioglu and Ozen (2014) presented a combined approach based on the Technique for Order Preference by Similarity to Ideal Solution (TOPSIS) and trapezoidal fuzzy numbers for ranking failure modes in the yacht system design process. Ilangkumaran et al. (2014) adopted fuzzy AHP to prioritize critical risks in the paper industry. Liu et al. (2015) utilized fuzzy AHP and the entropy method to weight risk factors, and applied the VIekriterijumsko KOmpromisno Rangiranje (VIKOR) method to order failure modes. Tooranloo and Sadat-Ayatollah (2016) surveyed uncertain concepts and insufficient data in failure mode analysis. They used fuzzy linguistic terms and intuitionistic fuzzy hybrid TOPSIS to assign the weights of risk factors and to rank failure modes, respectively. Safari et al. (2016), instead of using the conventional RPN score, applied fuzzy VIKOR for risk prioritization. Liu et al. (2016) applied expert judgment and the entropy method to weight risk factors and used the ELimination Et Choice Translating REality (ELECTRE) method to rank those risks. Wang et al. (2017) proposed a house of reliability-based rough VIKOR approach to determine the order of failures while considering the ambiguity in the opinions stated by the FMEA team. Bian et al. (2018) took advantage of the D-number theory to handle the uncertainty of the FMEA team members’ opinions and then applied TOPSIS to rank the identified failures. Tian et al. (2018) presented a hybrid approach using the FBWM, relative entropy, and VIKOR methods to order failures.

Sakthivel et al. (2018) employed multiple methods to achieve an acceptable risk prioritization. They used AHP to determine the weights of the risk factors, and the fuzzy TOPSIS and fuzzy VIKOR methods for ranking. To improve the risk assessment process using the FMEA technique, Liu et al. (2019b) used the AHP method to weight risk factors, and the fuzzy Graph Theory and Matrix (GTM) approach and the DEcision-MAking Trial and Evaluation Laboratory (DEMATEL) technique to prioritize failure modes. Yazdi (2019) presented an interactive risk assessment approach based on the AHP and entropy methods, and modified the conventional RPN score based on fuzzy set theory. Dabbagh and Yousefi (2019), in their hybrid approach, used a fuzzy cognitive map to weight the decision criteria while considering their causal relationships, and the Multi-Objective Optimization based on Ratio Analysis (MOORA) method to determine the order of risks. Ghoushchi et al. (2019) investigated combining Z-theory with the FMEA technique to consider both uncertainty and reliability in the risk assessment process. They used the FBWM to weight the SOD factors and the MOORA method based on Z-theory to determine the critical failure. More recently, Lo et al. (2020) presented an integrated approach based on the DEMATEL technique and the TOPSIS method to determine the critical failure mode. Yucesan and Gul (2021) introduced a holistic FMEA approach based on the FBWM and a fuzzy Bayesian network to determine the weights of risk factors and assess the occurrence probabilities of the identified failure modes in an industrial kitchen equipment manufacturing facility. Celik and Gul (2021) extended an approach using the BWM and Measurement of Alternatives and Ranking according to Compromise Solution (MARCOS) methods under the context of interval type-2 fuzzy sets to assess dam construction safety. Yucesan et al. (2021) developed an extended version of the FMEA technique based on neutrosophic AHP to address the shortcomings of the RPN score in a case study of the textile industry.

Nowadays, machine learning and data mining techniques are widely applied in various fields owing to their inherent characteristics, including energy (Faizollahzadeh Ardabili et al. 2018; Shamshirband et al. 2019; Fan et al. 2020), environmental science (Wu and Chau 2013; Taormina and Chau 2015), aquaculture (Banan et al. 2020), medical science (Rezaee et al. 2021; Onari et al. 2021), and manufacturing (Wang et al. 2020; Dogan and Birant 2021). One of the problems that data mining techniques can address is clustering. The remainder of this section focuses on applications of clustering techniques together with the FMEA approach in the risk analysis field. Tay et al. (2015) applied the fuzzy Adaptive Resonance Theory (ART) technique to cluster failure modes. In that study, the Euclidean distance-based similarity measure was first used to calculate the degree of similarity among failure modes, and the failure modes were then clustered. Chang et al. (2017) applied the Self-Organizing Map (SOM) to cluster corrective actions of failure modes, and employed the RPN interval to order the components of each group. Duan et al. (2019) categorized failure modes using the k-means clustering algorithm. Initially, Double Hierarchy Hesitant Fuzzy Linguistic Term Sets (DHHFLTSs) were used to describe the FMEA team members’ linguistic opinions on failure modes. After that, the weights of the SOD factors were determined by the maximizing deviation method, and finally, failure modes were clustered into high, medium, and low-risk groups.

As can be seen, a significant number of studies rely on RPN-based approaches, and as mentioned in the previous section, the conventional RPN has numerous shortcomings. As a viable alternative, a methodology based on the original values of the risk factors is likely to yield outcomes that are more compatible with practical applications (Tay et al. 2015). Moreover, in real-world problems, especially HSE risk assessment, other essential factors in addition to the SOD factors, such as the treatment cost (C) and treatment duration (T) resulting from the occurrence of each risk, should be taken into consideration (Yousefi et al. 2018). Additionally, few studies have paid attention to weighting the risk factors in the FMEA technique. To deal with such drawbacks, this study provides a risk clustering approach using the FCM algorithm based on the SOD, C, and T (SODCT) factors. This algorithm determines a membership degree for each risk in each cluster, which enables DMs to specify the number of risks in each cluster according to the problem studied (Zeraatpisheh et al. 2019). Also, in the presented hybrid approach, the FBWM, one of the latest and most powerful weighting methods (Guo and Zhao 2017), is used to weight the SODCT factors.

3 Methodology

As stated, this study aims to present an approach to cluster and prioritize critical HSE risks based on the FMEA technique using FBWM, FCM algorithm, and CoCoSo method. Further explanations of these methods are provided in the following subsections.

3.1 FBWM

Rezaei (2015) introduced the BWM, a novel MCDM technique based on pairwise comparisons. Because it determines the weights of decision criteria from crisp values (1–9 scale), this model cannot be applied when the decision data are uncertain. Guo and Zhao (2017) extended the BWM and presented the FBWM to model ambiguity and uncertainty in human judgments. In this new method, DMs express their opinions on the criteria in the form of linguistic variables. For example, Absolutely Important (AI) indicates that, in a pairwise comparison, one criterion is much more important than the other, whereas Equally Important (EI) indicates that the two criteria compared have the same importance. In the FBWM, after determining the decision-making criteria \(c_{j} , j = 1,2,...,n\), the best and the worst criteria are represented as \(c_{B}\) and \(c_{W}\), respectively. In this study, the risk factors are considered as the decision-making criteria, and pairwise comparisons between these factors are made. In the next step, the fuzzy preference vector of the best criterion over the others and the fuzzy preference vector of the others over the worst criterion are determined as \(\tilde{A}_{B}\) and \(\tilde{A}_{W}\), respectively, using the linguistic variables in Table 1.

If \(\tilde{A}_{B} = (\tilde{a}_{B1} ,\tilde{a}_{B2} , \ldots ,\tilde{a}_{Bn} )\) and \(\tilde{A}_{W} = (\tilde{a}_{1W} ,\tilde{a}_{2W} , \ldots ,\tilde{a}_{nW} )\), the fuzzy preference of \(c_{B}\) over \(c_{j}\) is represented as \(\tilde{a}_{Bj} = (l_{Bj} ,m_{Bj} ,u_{Bj} )\), and the fuzzy preference of \(c_{j}\) over \(c_{W}\) is represented as \(\tilde{a}_{jW} = (l_{jW} ,m_{jW} ,u_{jW} )\), where \(l\), \(m\), and \(u\) indicate the lower, middle, and upper values, respectively. It should be noted that \(\tilde{a}_{BB} = (1,1,1)\) and \(\tilde{a}_{WW} = (1,1,1)\). Considering \(\bar{w}_{j}\), \(\bar{w}_{W}\), and \(\bar{w}_{B}\) as triangular fuzzy numbers, \(\bar{w}_{j} = (l_{j}^{w} ,m_{j}^{w} ,u_{j}^{w} )\) defines the fuzzy weight of \(c_{j}\). With these definitions, the optimal fuzzy weights \((\bar{w}_{1}^{*} ,\bar{w}_{2}^{*} , \ldots ,\bar{w}_{n}^{*} )\) can be obtained from the following model (Guo and Zhao 2017):
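
In the notation of this section, the constrained optimization model of Guo and Zhao (2017) is usually stated in the form below. It is given here as a sketch of the general form rather than a verbatim reproduction, with \(R(\cdot)\) denoting the GMIR defuzzification used later in this subsection.

```latex
\begin{aligned}
\min \quad & \tilde{\xi} \\
\text{s.t.} \quad
& \left| \frac{\bar{w}_{B}}{\bar{w}_{j}} - \tilde{a}_{Bj} \right| \le \tilde{\xi}, \\
& \left| \frac{\bar{w}_{j}}{\bar{w}_{W}} - \tilde{a}_{jW} \right| \le \tilde{\xi}, \\
& \sum_{j=1}^{n} R(\bar{w}_{j}) = 1, \\
& l_{j}^{w} \le m_{j}^{w} \le u_{j}^{w}, \quad l_{j}^{w} \ge 0, \quad j = 1,2,\ldots,n
\end{aligned}
```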

where \(\tilde{\xi } = (l^{\xi } ,m^{\xi } ,u^{\xi } )\) with \(l^{\xi } \le m^{\xi } \le u^{\xi }\), and it is supposed that \(\tilde{\xi }^{*} = (k^{*} ,k^{*} ,k^{*} )\) with \(k^{*} \le l^{\xi }\). Here \(R\) denotes the set of real numbers, and a fuzzy number \(\tilde{a}\) on \(R\) is defined as a triangular fuzzy number if its membership function \(\mu _{\tilde{a}} (x): R \to [0,1]\) is given by Eq. (7).
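
For a triangular fuzzy number \(\tilde{a} = (l,m,u)\), the membership function referred to as Eq. (7) takes the standard piecewise-linear form sketched below. The symbols follow the lower, middle, and upper values defined above.

```latex
\mu_{\tilde{a}}(x) =
\begin{cases}
0, & x < l \\[4pt]
\dfrac{x - l}{m - l}, & l \le x \le m \\[8pt]
\dfrac{u - x}{u - m}, & m \le x \le u \\[4pt]
0, & x > u
\end{cases}
\tag{7}
```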

After the fuzzy weights are obtained, the Graded Mean Integration Representation (GMIR) \(R(\tilde{a})\) is used to transform the fuzzy weight of each criterion into a crisp weight. The GMIR \(R(\tilde{a}_{j} )\) formula is as follows:
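
For a triangular fuzzy number \(\tilde{a} = (l,m,u)\), the GMIR is commonly written as \(R(\tilde{a}) = (l + 4m + u)/6\). The short Python sketch below applies it to three hypothetical fuzzy weights; the numbers are illustrative and are not results of this study.

```python
def gmir(l: float, m: float, u: float) -> float:
    """Graded Mean Integration Representation of a triangular fuzzy number."""
    return (l + 4.0 * m + u) / 6.0


def crisp_weights(fuzzy_weights):
    """Defuzzify a list of (l, m, u) triples into crisp weights."""
    return [gmir(l, m, u) for (l, m, u) in fuzzy_weights]


# Hypothetical fuzzy weights for three criteria (illustrative values only).
fuzzy = [(0.4, 0.5, 0.6), (0.2, 0.3, 0.4), (0.1, 0.2, 0.3)]
crisp = crisp_weights(fuzzy)
print(crisp, sum(crisp))
```

The middle value receives four times the weight of each endpoint, so a symmetric triangular number is mapped to its peak.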

In the final stage, the Consistency Ratio (CR) is computed as \(CR = \tilde{\xi }^{*} /CI\) to evaluate the consistency of the pairwise comparisons. In this formula, \(\tilde{\xi }^{*}\) is the optimal objective value of the FBWM model, and the Consistency Index (CI) is taken from the maximum possible values shown in Table 1. A value of \(CR \le 0.1\) is considered acceptable (Tian et al. 2018).

3.2 FCM algorithm

Clustering is an automated data analysis process in which a given data set is divided into clusters in such a way that data points within the same cluster are more similar to one another than to those in other clusters. In real-world applications, however, some data points may belong to multiple clusters. To solve this problem, fuzzy clustering algorithms have been introduced, which can segregate overlapping data points using fuzzy logic (Rezaee et al. 2018a). The FCM algorithm is one of the common fuzzy clustering algorithms, first presented by Duda (1973). In this algorithm, each data point can belong to more than one cluster with different membership degrees. Indeed, the membership of a data point has a fuzzy (uncertain) nature and takes a value between 0 and 1. After defining the data point vector \(X = (x_{1} ,x_{2} , \ldots ,x_{n} )^{T} \subseteq R\) as input and determining the number of clusters, \(k\), the FCM algorithm is run, and the matrices \(U\) and \(V\) are calculated. The matrix \(V = (v_{1} ,v_{2} , \ldots ,v_{k} )^{T}\) contains the cluster centers, and \(U = [u_{ij} ]_{k \times n}\) is the membership matrix. The objective function of this algorithm is as follows (Bezdek 2013):
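
In the notation above, the standard FCM objective function can be written as follows, where \(m > 1\) is the fuzzifier and the memberships of each data point sum to one over the clusters.

```latex
J_{m}(u,v;x) = \sum_{i=1}^{k} \sum_{j=1}^{n} u_{ij}^{m} \, \lVert x_{j} - v_{i} \rVert^{2}
\tag{9}
```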

In Eq. (9), \(u_{ij}\) and \(v_{i}\) represent the membership of \(x_{j}\) in the \(i\)th cluster and the center of the \(i\)th cluster, respectively. Also, \(\|x_{j} - v_{i}\|\) denotes the Euclidean distance between \(x_{j}\) and \(v_{i}\), and \(m\) is a parameter, which can be any real number greater than 1, used to fuzzify the memberships. It should be noted that \(\sum\nolimits_{i = 1}^{k} u_{ij} = 1\) for all \(j = 1,2, \ldots ,n\). Equation (9) cannot be minimized directly, so the Alternating Optimization (AO) algorithm, an iterative technique, is used. Based on the AO, the membership values that minimize \(J_{m} (u,v;x)\) and the center of the \(i\)th cluster are given in Eqs. (10) and (11), respectively.
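
The alternating scheme can be sketched in plain Python as below. The sketch uses the standard updates \(u_{ij} = 1/\sum_{c=1}^{k} (\|x_{j} - v_{i}\| / \|x_{j} - v_{c}\|)^{2/(m-1)}\) and \(v_{i} = \sum_{j} u_{ij}^{m} x_{j} / \sum_{j} u_{ij}^{m}\); the function name `fcm`, the stopping rule, the random initialization, and the sample points are assumptions made for illustration, not the implementation used in this study.

```python
import math
import random


def fcm(points, k, m=2.0, max_iter=100, tol=1e-6, seed=0):
    """Fuzzy C-means by alternating optimization.

    points: list of equal-length numeric tuples.
    Returns (centers, U) where U[i][j] is the membership of point j in cluster i.
    """
    rng = random.Random(seed)
    n, dim = len(points), len(points[0])
    # Random initial memberships, normalised so each point's memberships sum to 1.
    U = [[rng.random() for _ in range(n)] for _ in range(k)]
    for j in range(n):
        col = sum(U[i][j] for i in range(k))
        for i in range(k):
            U[i][j] /= col
    centers = []
    for _ in range(max_iter):
        # Center update: membership-weighted mean of the points.
        centers = []
        for i in range(k):
            w = [U[i][j] ** m for j in range(n)]
            total = sum(w)
            centers.append(tuple(
                sum(w[j] * points[j][d] for j in range(n)) / total
                for d in range(dim)))
        # Membership update from the distances to the new centers.
        shift = 0.0
        for j in range(n):
            dist = [math.dist(points[j], c) for c in centers]
            if min(dist) == 0.0:
                # The point coincides with a center: full membership there.
                hits = [i for i in range(k) if dist[i] == 0.0]
                new = [1.0 / len(hits) if i in hits else 0.0 for i in range(k)]
            else:
                inv = [d ** (-2.0 / (m - 1.0)) for d in dist]
                s = sum(inv)
                new = [v / s for v in inv]
            for i in range(k):
                shift = max(shift, abs(new[i] - U[i][j]))
                U[i][j] = new[i]
        if shift < tol:  # stop when memberships no longer change
            break
    return centers, U


# Hypothetical two-dimensional data with two visibly separated groups.
points = [(1, 1), (1, 2), (2, 1), (5, 5), (5, 4), (4, 5)]
centers, U = fcm(points, k=2)
labels = [max(range(2), key=lambda i: U[i][j]) for j in range(len(points))]
print(centers, labels)
```

The returned membership matrix keeps the soft assignment of each point, and taking the cluster with the largest membership, as in `labels`, is only one possible way to harden it.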

3.3 CoCoSo method

The CoCoSo method, recently proposed by Yazdani et al. (2019), can compete with other MCDM techniques such as TOPSIS, COmplex PRoportional ASsessment (COPRAS), VIKOR, and MOORA, and produce more robust results. Its final ranking is based on three aggregator strategies. To apply this method, the alternatives and the related criteria must first be defined. In this study, the identified risks and the five risk factors are considered as the alternatives and the decision-making criteria, respectively, to determine the priority of each critical risk. If \(x_{ij}\) denotes the value of criterion \(j\), \(j = 1,2, \ldots ,n\), for alternative \(i\), \(i = 1,2, \ldots ,m\), the decision matrix is \(X = [x_{ij}]_{m \times n} = \begin{bmatrix} x_{11} & x_{12} & \ldots & x_{1n} \\ x_{21} & x_{22} & \ldots & x_{2n} \\ \ldots & \ldots & \ldots & \ldots \\ x_{m1} & x_{m2} & \ldots & x_{mn} \end{bmatrix}\), which is normalized using the compromise normalization equations as follows:
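
The compromise normalization of Yazdani et al. (2019) is usually written as below. The symbol \(r_{ij}\) for the normalized value and the assignment of Eq. (12) to benefit criteria and Eq. (13) to cost criteria are assumptions made here for readability.

```latex
\begin{align}
r_{ij} &= \frac{x_{ij} - \min_{i} x_{ij}}{\max_{i} x_{ij} - \min_{i} x_{ij}}
  && \text{for a benefit criterion} \tag{12} \\
r_{ij} &= \frac{\max_{i} x_{ij} - x_{ij}}{\max_{i} x_{ij} - \min_{i} x_{ij}}
  && \text{for a cost criterion} \tag{13}
\end{align}
```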

After the normalized matrix is obtained, the weight of each criterion \(w_{j}\) is determined by the DM. Then \(S_{i}\) and \(P_{i}\), which denote the sum of the weighted comparability sequence and the sum of the power-weighted comparability sequence, respectively, are calculated for each alternative by Eqs. (14) and (15).
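
Writing \(r_{ij}\) for the normalized value of criterion \(j\) for alternative \(i\) (a symbol assumed here), the two quantities are commonly given as:

```latex
\begin{align}
S_{i} &= \sum_{j=1}^{n} w_{j} \, r_{ij} \tag{14} \\
P_{i} &= \sum_{j=1}^{n} \left( r_{ij} \right)^{w_{j}} \tag{15}
\end{align}
```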

Note that Eqs. (14) and (15) are based on the aggregated Simple Additive Weighting (SAW) and Exponentially Weighted Product (EWP) methods, respectively. In the next stage, three aggregator strategies (\(k_{ia}\), \(k_{ib}\), and \(k_{ic}\)) are established to compute the relative weight of the alternatives, according to Eqs. (16) to (18).
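
Following the usual statement of the method in Yazdani et al. (2019), the three strategies can be sketched as:

```latex
\begin{align}
k_{ia} &= \frac{P_{i} + S_{i}}{\sum_{i=1}^{m} \left( P_{i} + S_{i} \right)} \tag{16} \\
k_{ib} &= \frac{S_{i}}{\min_{i} S_{i}} + \frac{P_{i}}{\min_{i} P_{i}} \tag{17} \\
k_{ic} &= \frac{\lambda S_{i} + (1 - \lambda) P_{i}}{\lambda \max_{i} S_{i} + (1 - \lambda) \max_{i} P_{i}},
  \qquad 0 \le \lambda \le 1 \tag{18}
\end{align}
```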

where \(\lambda\) is determined by the DMs (usually \(\lambda = 0.5\)). The final ranking is based on Eq. (19): after this score is calculated for each alternative, the scores are sorted in decreasing order, and the larger the score, the better the rank of the alternative.
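
The whole CoCoSo procedure can be sketched in Python as follows. The final score is taken in its usual form \(k_{i} = (k_{ia} k_{ib} k_{ic})^{1/3} + (k_{ia} + k_{ib} + k_{ic})/3\). The function name `cocoso`, the decision matrix, and the weights are hypothetical, and all five criteria are assumed to be of the benefit type, so that a higher rating means a more critical risk.

```python
def cocoso(matrix, weights, benefit, lam=0.5):
    """Rank alternatives with CoCoSo; returns (scores, ranking).

    matrix[i][j] is the value of criterion j for alternative i.
    Assumes every criterion takes at least two distinct values and that no
    alternative is worst on all criteria (otherwise a division by zero occurs).
    """
    n = len(weights)
    columns = list(zip(*matrix))
    lo = [min(col) for col in columns]
    hi = [max(col) for col in columns]

    # Compromise normalisation, Eqs. (12)-(13).
    r = [
        [
            (row[j] - lo[j]) / (hi[j] - lo[j]) if benefit[j]
            else (hi[j] - row[j]) / (hi[j] - lo[j])
            for j in range(n)
        ]
        for row in matrix
    ]

    # Weighted sum S_i and power-weighted sum P_i, Eqs. (14)-(15).
    S = [sum(w * v for w, v in zip(weights, row)) for row in r]
    P = [sum(v ** w for w, v in zip(weights, row)) for row in r]

    # Three aggregator strategies, Eqs. (16)-(18).
    total = sum(s + p for s, p in zip(S, P))
    k_a = [(s + p) / total for s, p in zip(S, P)]
    k_b = [s / min(S) + p / min(P) for s, p in zip(S, P)]
    denom = lam * max(S) + (1 - lam) * max(P)
    k_c = [(lam * s + (1 - lam) * p) / denom for s, p in zip(S, P)]

    # Final score, Eq. (19): cube root of the product plus the arithmetic mean.
    scores = [
        (a * b * c) ** (1 / 3) + (a + b + c) / 3
        for a, b, c in zip(k_a, k_b, k_c)
    ]
    ranking = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return scores, ranking


# Hypothetical S, O, D, C, T ratings of four risks (illustrative values only).
risks = [
    (5, 3, 2, 4, 5),
    (3, 4, 3, 2, 2),
    (2, 2, 4, 3, 1),
    (4, 5, 1, 5, 3),
]
weights = (0.35, 0.25, 0.10, 0.15, 0.15)  # assumed crisp weights summing to 1
scores, ranking = cocoso(risks, weights, benefit=[True] * 5)
print(scores, ranking)
```

The list `ranking` holds the indices of the risks from the highest to the lowest score, so its first entry is the risk that would be treated first.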

4 Proposed approach

In this section, the presented decision support system for the prioritization of critical HSE risks is explained in detail. This approach is implemented in two combined phases: (I) FMEA, FBWM and FCM algorithm; (II) FBWM and CoCoSo. In the first phase, the HSE risks are first identified, and the values of their risk factors are determined by the multi-disciplinary FMEA team; these values form the initial decision matrix. The factors include the SOD factors and two extra factors, C and T, representing the treatment cost and treatment duration, respectively, which are considered in this study because of their necessity in the HSE risk assessment process. In addition, the team members determine the best and the worst risk factors in terms of importance and then make pairwise comparisons between the best factor and the other factors, and between the other factors and the worst factor, using the linguistic variables presented in Table 1.

In practical applications, the ambiguity in an individual’s judgment cannot be neglected, and ignoring this issue may lead to unreliable results. To address this problem, the FBWM model is employed in this phase. To apply this model, the linguistic variables are first converted into fuzzy values according to the fuzzy numbers defined for each linguistic term in Table 1. After that, the weights of the risk factors are obtained by solving the model described in subsection 3.1. Since the calculated weights are fuzzy numbers, Eq. (8) is used to obtain crisp numbers. One of the main aims of this study is to provide a ranking approach based on the original values of the risk factors instead of the conventional RPN score. Therefore, in the next stage of this phase, all risks are clustered using an unsupervised algorithm, because such algorithms can be applied regardless of whether the data are labeled. The FCM algorithm is employed because of its soft computations compared to the k-means algorithm (Cardone and Di Martino 2020). By running this algorithm, the membership degrees of the identified HSE risks in each cluster are obtained, which enables DMs to specify the number of risks in each cluster according to the problem studied. Then, the Euclidean distance between the center point of each cluster and the origin of the coordinate system is calculated. Since the aim is to identify the critical cluster based on the weights of the SODCT factors, the weighted Euclidean distance measure is computed, and the larger this measure, the more critical the cluster.
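
The cluster labeling step can be sketched as follows. The exact form of the weighted distance is not spelled out in this section, so the sketch assumes \(d_{c} = \sqrt{\sum_{j} w_{j} v_{cj}^{2}}\) for the center \(v_{c}\) of cluster \(c\); the cluster centers and weights below are hypothetical.

```python
import math


def weighted_distance_from_origin(center, weights):
    """Weighted Euclidean distance between a cluster center and the origin.

    Assumed form: sqrt(sum_j w_j * v_j ** 2).
    """
    return math.sqrt(sum(w * v * v for w, v in zip(weights, center)))


def most_critical_cluster(centers, weights):
    """Return (index of the farthest cluster, list of all distances)."""
    distances = [weighted_distance_from_origin(c, weights) for c in centers]
    critical = max(range(len(centers)), key=lambda i: distances[i])
    return critical, distances


# Hypothetical S, O, D, C, T cluster centers and crisp factor weights.
centers = [
    (1.5, 1.8, 2.0, 1.2, 1.4),
    (3.0, 2.5, 2.8, 2.9, 3.1),
    (4.4, 3.9, 3.2, 4.1, 4.3),
]
weights = (0.35, 0.25, 0.10, 0.15, 0.15)
critical, distances = most_critical_cluster(centers, weights)
print(critical, distances)
```

Sorting the clusters by this distance gives the low, medium, and high criticality labels, and only the risks of the farthest cluster are passed on to the second phase.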

In the second phase, the risks belonging to the critical cluster are prioritized. This is necessary because time, budget, and human resource limitations make it impractical to take corrective/preventive actions for all risks. Therefore, prioritizing critical risks is of considerable importance in reducing their negative impacts on the system effectively. To this end, the new MCDM technique, namely the CoCoSo method, which can produce robust results in comparison with other similar techniques (Yazdani et al. 2019), is applied. This MCDM technique is implemented based on the outputs obtained from the previous steps. More precisely, this study considers the risks in the critical cluster and their five risk factors as the alternatives and evaluation criteria, respectively. In the first step of the CoCoSo method, the decision matrix is determined and normalized using Eqs. (12) and (13). Then, using the weights obtained from the FBWM, the aggregator strategies \(k_{ia}\), \(k_{ib}\), and \(k_{ic}\) are calculated. In the final stage, the score \(k_{i}\) is obtained, and the most critical risk is recognized. This clearer prioritization enables DMs to handle the critical risks of a system more efficiently with respect to the available resources. The implementation stages of the proposed approach are shown in Fig. 2.

5 The results analysis

The results of applying the presented approach to the prioritization of HSE risks in the studied company are discussed in subsection 5.1. To validate this approach, the obtained results are compared with those of other similar methods in subsection 5.2. In the last subsection, 5.3, a sensitivity analysis is carried out to show the applicability of this approach.

5.1 The results of performing the proposed approach

As described in the previous sections, the proposed approach is based on the FMEA technique, the FBWM, the FCM algorithm, and the CoCoSo method. The results of each step are explained in the subsequent parts.

5.1.1 Risks identification by the FMEA technique

The proposed approach has been employed in a manufacturing company active in the automotive industry to prioritize the identified HSE risks. Following the HSE rules in the automotive industry is important because workers are in danger of being exposed to many toxic chemicals and physical hazards, such as unpleasant smells, chemical inhalation, high-frequency sound, inappropriate lighting, and electric shocks. These risks may lead to accidents or chronic occupational diseases. Therefore, this study implements the proposed approach in this industry. The 62 risks in this field of study were identified by the FMEA team (Yousefi et al. 2018), and the values of the risk factors that the team determined for each risk are shown in Table 2.

In addition to the values of the three main FMEA factors (SOD), this table gives the values of the two extra factors C and T assigned by the FMEA team. As can be seen, these values range from 1 to 5, where a value of 1 for an SOD factor denotes very low importance and a value of 5 denotes very high importance. That is to say, a rating of 1 means that the severity of injuries stemming from the related risk is very low, that the risk occurs rarely, and that it is detectable, while a rating of 5 means that the related risk can cause death or permanent disability, happens frequently, and is only partially detectable. If the treatment cost of a risk is less than 5,000,000 or more than 20,000,000 currency units, its relative importance is equal to 1 or 5, respectively (Dabbagh and Yousefi 2019). In terms of T, ratings of 1 and 5 mean that the treatment duration of a risk is less than a week and more than eight weeks, respectively.

5.1.2 Determining the weight of risk factors by the FBWM

To weight the risk factors under uncertainty, the FBWM has been used in this study. This model enables DMs to determine the weights of decision criteria more flexibly and to achieve more reliable and precise results than the conventional BWM. According to the implementation stages of the FBWM, the FMEA team first determined the best and the worst factors and then made pairwise comparisons using the linguistic variables shown in Table 1. Risk factors S and O were selected as the best and the worst criteria, respectively. The preferences of the best criterion over all criteria, \(\tilde{A}_{B} = (\tilde{a}_{SS}, \tilde{a}_{SO}, \tilde{a}_{SD}, \tilde{a}_{SC}, \tilde{a}_{ST})\), and of all criteria over the worst criterion, \(\tilde{A}_{w} = (\tilde{a}_{SO}, \tilde{a}_{OO}, \tilde{a}_{DO}, \tilde{a}_{CO}, \tilde{a}_{TO})\), are presented in Table 3. The linguistic terms of the risk factors can be converted into fuzzy numbers using Table 1. Therefore, \(\tilde{A}_{B}\) = [(1,1,1), (7/2,4,9/2), (5/2,3,7/2), (3/2,2,5/2), (5/2,3,7/2)] and \(\tilde{A}_{w}\) = [(7/2,4,9/2), (1,1,1), (3/2,2,5/2), (5/2,3,7/2), (2/3,1,3/2)]. Finally, the FBWM described in subsection 3.1 was solved using LINGO 17.0 software, and after the fuzzy weights were obtained, the final crisp weights were calculated based on Eq. (8). The results are given in Table 3.

According to the results, risk factor S, with a weight of 0.4024, and risk factor O, with a weight of 0.0935, have the highest and the lowest weights, respectively, as would be expected. Risk factors C, D, and T follow with weights of 0.2397, 0.1465, and 0.1179, respectively. Given \(\tilde{\xi }^{*}\) = (0.4258, 0.4258, 0.4258) and the CI shown in Table 1, \(CR\) = 0.4258/8.04 = 0.053, which is less than 0.1 and therefore indicates an acceptable consistency ratio.
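The arithmetic in this paragraph can be checked with a short script. The following is only a sketch: the function name `consistency_ratio` is an illustrative assumption, and the numerical values are the ones reported in the text (crisp weights, \(\tilde{\xi }^{*}\) = 0.4258 and CI = 8.04).

```python
# Crisp FBWM weights as reported in the text (S, O, D, C, T).
weights = {"S": 0.4024, "O": 0.0935, "D": 0.1465, "C": 0.2397, "T": 0.1179}


def consistency_ratio(xi_star: float, consistency_index: float) -> float:
    """Return CR = xi* / CI (hypothetical helper name)."""
    return xi_star / consistency_index


# The weights should sum to 1 up to rounding.
total = sum(weights.values())

# CR for the values quoted in the text.
cr = consistency_ratio(0.4258, 8.04)

# Factors ordered from the highest to the lowest weight.
ranking = sorted(weights, key=weights.get, reverse=True)

print(f"sum of weights = {total:.4f}")
print(f"CR = {cr:.3f} (acceptable: {cr < 0.1})")
print("order of importance:", " > ".join(ranking))
```

The script only reproduces the reported figures; it does not solve the FBWM optimization model itself, which the study solved in LINGO.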

5.1.3 Identifying the cluster of critical risks by the FCM algorithm

As described for the proposed approach, the aim is to provide a clearer prioritization than the traditional FMEA technique in order to reduce the implementation costs of corrective/preventive actions. Therefore, the identified risks shown in Table 2 need to be clustered based on the values of the SODCT factors. The FCM clustering algorithm handles overlapping data points flexibly, since each data point can belong to two or more clusters with different membership degrees. Therefore, DMs can determine the number of data points in each cluster using different minimum membership thresholds. To implement the FCM algorithm, the number of clusters is first specified, and then the values of the SODCT factors for each risk are given to the algorithm as inputs. In this study, four clusters were considered, and the algorithm was implemented in MATLAB 2016a.
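To illustrate how the FCM algorithm produces membership degrees, a minimal pure-Python sketch of the standard FCM iteration is given below. It is not the MATLAB implementation used in the study: the fuzzifier `m = 2`, the stopping rule, the random initialization, and the six example rating vectors are all assumptions made for illustration.

```python
import random


def fuzzy_c_means(points, n_clusters, m=2.0, max_iter=200, tol=1e-6, seed=0):
    """Standard FCM: alternate center and membership updates until stable."""
    rng = random.Random(seed)
    n, dim = len(points), len(points[0])

    # Random initial memberships; each row is normalized to sum to 1.
    u = []
    for _ in range(n):
        row = [rng.random() + 1e-9 for _ in range(n_clusters)]
        s = sum(row)
        u.append([v / s for v in row])

    centers = []
    for _ in range(max_iter):
        # Center update: mean of the points weighted by u_ik ** m.
        centers = []
        for k in range(n_clusters):
            w = [u[i][k] ** m for i in range(n)]
            w_sum = sum(w)
            centers.append(
                [sum(w[i] * points[i][d] for i in range(n)) / w_sum
                 for d in range(dim)]
            )

        # Membership update: u_ik proportional to d_ik ** (-2 / (m - 1)).
        new_u = []
        for p in points:
            dists = [
                max(sum((a - b) ** 2 for a, b in zip(p, c)) ** 0.5, 1e-12)
                for c in centers
            ]
            inv = [d ** (-2.0 / (m - 1.0)) for d in dists]
            s = sum(inv)
            new_u.append([v / s for v in inv])

        change = max(
            abs(new_u[i][k] - u[i][k])
            for i in range(n) for k in range(n_clusters)
        )
        u = new_u
        if change < tol:
            break
    return centers, u


# Hypothetical SODCT ratings (not taken from Table 2).
ratings = [
    [1, 1, 2, 1, 1], [2, 1, 1, 1, 2], [1, 2, 1, 2, 1],
    [5, 4, 5, 5, 4], [4, 5, 5, 4, 5], [5, 5, 4, 5, 5],
]
centers, memberships = fuzzy_c_means(ratings, n_clusters=2)
for risk, row in zip(ratings, memberships):
    print(risk, [round(v, 3) for v in row])
```

Each row of `memberships` sums to 1, which is what allows a risk to be assigned to more than one cluster depending on the threshold chosen by the DMs.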

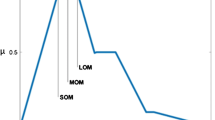

The outputs were the membership degrees of the risks in each cluster. By setting a minimum membership threshold, it can be specified which risks belong to the cluster examined. Since the data are unlabeled, it must be determined which of the clusters is critical. To this end, the center point of each cluster was calculated based on Eq. (11), and then the Euclidean distance between the center point and the origin of the coordinate system was computed for each cluster. To make the clustering more compatible with practical problems, the weights of the SODCT factors obtained from the FBWM were used to compute the weighted Euclidean distance. The clusters were labeled based on this distance in four classes: Intolerable (unacceptable), Major, Tolerable, and Minor (acceptable). The larger this distance, the more critical the risks of that cluster. The Intolerable and Minor clusters have the highest and the lowest weighted Euclidean distances, respectively. This measure enables DMs to label the clusters based on the importance of the risk factors in the system studied. The results are listed in Table 4.
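The labeling step can be sketched as follows. The weighted Euclidean distance is assumed here to take the common form \(\sqrt{\sum_j w_j c_{kj}^2}\) for a cluster center \(c_k\) measured from the origin; the exact expression used in the study is not restated in this section, and the four cluster centers below are hypothetical rather than the values behind Table 4.

```python
import math

# Crisp FBWM weights reported in the text, in the order S, O, D, C, T.
WEIGHTS = [0.4024, 0.0935, 0.1465, 0.2397, 0.1179]
LABELS = ["Intolerable", "Major", "Tolerable", "Minor"]


def weighted_distance_from_origin(center, weights):
    """Assumed form: sqrt(sum_j w_j * c_j ** 2)."""
    return math.sqrt(sum(w * c * c for w, c in zip(weights, center)))


def label_clusters(centers, weights, labels=LABELS):
    """Give the most distant cluster the first label, and so on."""
    distances = [weighted_distance_from_origin(c, weights) for c in centers]
    order = sorted(range(len(centers)), key=lambda k: distances[k], reverse=True)
    return {k: (labels[rank], distances[k]) for rank, k in enumerate(order)}


# Hypothetical cluster centers in SODCT space.
example_centers = [
    [2.0, 2.0, 2.0, 1.5, 2.0],
    [3.0, 3.0, 2.5, 3.0, 3.0],
    [1.0, 1.5, 1.0, 1.0, 1.5],
    [4.0, 3.5, 4.0, 3.5, 4.0],
]
cluster_labels = label_clusters(example_centers, WEIGHTS)
for k in sorted(cluster_labels):
    label, dist = cluster_labels[k]
    print(f"cluster {k + 1}: distance = {dist:.4f}, label = {label}")
```

Because the weights sum to 1, a center with all factors equal to 5 would have a distance of 5 under this form, so the measure stays on the same 1 to 5 scale as the ratings.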

As can be seen, cluster four, with a weighted Euclidean distance of 3.7816, includes the intolerable risks; therefore, this cluster was considered the critical cluster. In the next stage, the risks belonging to this cluster must be determined so that they can be prioritized in the next phase of the presented approach. To this end, the minimum membership threshold was set to 0.45, and, using the membership degrees, the risks of this cluster were determined; they are highlighted in Table 5.
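The threshold rule described above amounts to a simple filter on the membership matrix. In the sketch below, the membership degrees are hypothetical (they are not the values of Table 5), and treating the threshold as inclusive (`>=`) is an assumption.

```python
def risks_in_cluster(memberships, cluster, threshold=0.45):
    """Return the risks whose membership in `cluster` reaches the threshold."""
    return [
        risk for risk, degrees in memberships.items()
        if degrees[cluster] >= threshold
    ]


# Hypothetical membership degrees for four clusters (each row sums to 1).
example_memberships = {
    "R_a": [0.05, 0.10, 0.15, 0.70],
    "R_b": [0.10, 0.20, 0.25, 0.45],
    "R_c": [0.40, 0.30, 0.20, 0.10],
    "R_d": [0.10, 0.15, 0.31, 0.44],
}

# Cluster index 3 plays the role of the critical cluster here.
critical = risks_in_cluster(example_memberships, cluster=3, threshold=0.45)
print(critical)
```

Lowering the threshold admits more risks into the critical cluster, which is how DMs can adapt the size of the prioritized set to the available resources.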

5.1.4 Prioritizing the critical risks by the CoCoSo method

In this part, the results of the second phase are explained. According to Table 5, there are 17 risks in the critical cluster, which have to be ranked by the CoCoSo method. The method is implemented based on the outputs of both the FBWM and the FCM algorithm: the alternatives are the 17 critical risks, and the criteria weights are the weights of the SODCT factors obtained from the FBWM.

To implement this method, following subsection 3.3, the normalized decision-making matrix was formed, and then the weighted comparability sequence \(S_{i}\) and the exponential weight of the comparability sequence \(P_{i}\) were calculated using Eqs. (14) and (15).

Afterward, the three aggregator strategies \(k_{ia}\), \(k_{ib}\), and \(k_{ic}\) were computed based on Eqs. (16) to (18), respectively. It should be noted that \(\lambda = 0.5\) in this study.

After obtaining these values, the final ranking score \(k_{i}, \forall i = 1,2, \ldots ,17\), was calculated using Eq. (19). The results are shown in Table 6.
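The sequence of steps above can be sketched in code. The formulas below follow the commonly cited form of the CoCoSo method (Yazdani et al. 2019) and are assumed, not confirmed, to match Eqs. (12) to (19) of this study; all five factors are treated as benefit-type criteria (a higher rating means a more critical risk), and the four alternatives are hypothetical rather than taken from Table 2.

```python
def cocoso(matrix, weights, lam=0.5):
    """Return CoCoSo scores k_i for benefit-type criteria (assumed form)."""
    cols = list(zip(*matrix))
    lo = [min(c) for c in cols]
    hi = [max(c) for c in cols]
    if any(h == l for h, l in zip(hi, lo)):
        raise ValueError("each criterion must vary across alternatives")

    # Min-max normalization of the decision matrix.
    r = [[(x - l) / (h - l) for x, l, h in zip(row, lo, hi)] for row in matrix]

    # Weighted comparability sequence S_i and exponential weight P_i.
    S = [sum(w * v for w, v in zip(weights, row)) for row in r]
    P = [sum(v ** w for w, v in zip(weights, row)) for row in r]
    if min(S) == 0 or min(P) == 0:
        raise ValueError("an alternative is minimal on every criterion")

    # Three aggregator strategies.
    total = sum(s + p for s, p in zip(S, P))
    k_a = [(s + p) / total for s, p in zip(S, P)]
    k_b = [s / min(S) + p / min(P) for s, p in zip(S, P)]
    denom = lam * max(S) + (1 - lam) * max(P)
    k_c = [(lam * s + (1 - lam) * p) / denom for s, p in zip(S, P)]

    # Final score: geometric mean plus arithmetic mean of the aggregators.
    return [
        (a * b * c) ** (1 / 3) + (a + b + c) / 3
        for a, b, c in zip(k_a, k_b, k_c)
    ]


# Crisp FBWM weights reported in the text (S, O, D, C, T).
fbwm_weights = [0.4024, 0.0935, 0.1465, 0.2397, 0.1179]

# Hypothetical SODCT ratings for four critical risks.
names = ["R_a", "R_b", "R_c", "R_d"]
decision_matrix = [
    [5, 4, 4, 5, 5],
    [4, 5, 5, 5, 5],
    [4, 3, 3, 4, 4],
    [5, 3, 4, 3, 3],
]
scores = cocoso(decision_matrix, fbwm_weights, lam=0.5)
priority = sorted(range(len(names)), key=lambda i: scores[i], reverse=True)
for rank, i in enumerate(priority, start=1):
    print(rank, names[i], round(scores[i], 4))
```

The two guards reflect a limitation of this form: the ratio \(S_i / \min_i S_i\) is undefined when some alternative takes the minimum value on every criterion.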

As can be seen, the 17 risks belonging to the critical cluster were prioritized into 14 ranks, with risk R10, risks R17 and R18, and risk R27 placed first, second, and third with scores of 2.1276, 2.05, and 2.027, respectively. In addition, the risk pairs (R01&R38), (R17&R18), and (R11&R51) share the same ranks because their SODCT factor values are equal (see Table 2). This clearer prioritization allows DMs to manage critical risks more effectively with respect to human resources, financial resources, and time limitations.

5.2 Comparison with other methods

In this part, the results of the proposed approach are compared with those of other conventional methods to demonstrate its applicability. To this end, the prioritization results of the FBWM-CoCoSo are compared with those of the traditional FMEA and of some popular FMEA-based MCDM methods such as TOPSIS, VIKOR, and MOORA. The results are shown in Table 7. To make a fair comparison, the weights of the SODCT risk factors obtained from the FBWM are applied in all MCDM-based risk prioritization methods.

According to Table 7, risks R10 and R27, with RPN = 800, are placed in the top priority, risks R17 and R18 are jointly in the second, and risk R26 stands in the third priority. As can be seen, because the conventional RPN score, unlike the other methods, does not consider the weights of the risk factors and relies merely on the multiplication of the factors’ values, it fails to distinguish the priorities of risks. R10 and R27 have different S and D values yet receive the same priority, which shows the inability of this score to distinguish the ranks of such risks. In other words, this technique has created only 10 places for all studied risks, which can confound DMs in determining the order of risks for implementing corrective/preventive actions under resource limitations. For this reason, drawing more distinctions among risk priorities by considering the weights of the risk factors, while retaining the advantages of the FMEA technique, can boost the decision-making power of DMs. In this regard, various researchers have tried to modify or develop the FMEA technique using other methods such as MCDM techniques. Most of these, however, failed to preserve the merits of this traditional technique for the FMEA team, including compatibility with its opinions and practical applications. In other words, from the viewpoint of the FMEA team, a method is effective in the risk assessment process if it not only avoids unrealistic changes when the weights of the risk factors are applied but also creates more separability among risk priorities. To measure this, the RPN-Based Spearman Correlation Coefficient (RBSCC) can be used. The results of the four FMEA-based MCDM methods in Table 7 show that all of these methods draw more distinctions than the traditional FMEA technique. Moreover, the proposed approach, with RBSCC = 0.96, not only creates greater separability among risk priorities but also yields more reliable results than the other methods because of its strong consistency with the nature of the FMEA technique (see Fig. 3).
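A rank correlation of this kind can be computed as sketched below. The sketch assumes that the RBSCC is the Spearman coefficient between the RPN-based priorities and the priorities given by another method, with tied priorities handled by average ranks; the two priority lists are hypothetical and are not taken from Table 7.

```python
def average_ranks(values):
    """Rank values in ascending order, giving tied values their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman coefficient as the Pearson correlation of the rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


# Hypothetical priorities of six risks: RPN-based (with ties) vs. another method.
rpn_priority = [1, 1, 2, 3, 4, 4]
method_priority = [1, 3, 2, 4, 5, 6]
print(round(spearman(rpn_priority, method_priority), 3))
```

A value close to 1 indicates that a method refines the RPN-based order without overturning it, which is the sense in which the coefficient is used to judge consistency with the FMEA technique.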

For example, risks R10 and R27, which were jointly in first place according to the conventional RPN scores, are ranked first and third, respectively, by the proposed approach. Also, risks R12 and R50 occupy two distinct ranks, fourth and fifth, under the proposed approach instead of the joint fourth rank. On the other hand, based on the TOPSIS method, the critical risk R27, which was first according to the traditional FMEA technique, is in the 13th priority. Although this can be partly due to applying the risk factor weights in the prioritization process, examining the values of these factors in Table 2 shows that this risk has the highest possible values of the O, D, C, and T factors, so the determined rank does not reflect reality. This rank change can be attributed to the value allocated to the severity factor of risk R27 and to the structure of the TOPSIS method. This risk has the lowest value of the severity factor, while severity is the most important factor in risk assessment (see Table 3). This issue has led to RBSCC = 0.48 for the TOPSIS method, while the VIKOR method has RBSCC = 0.46. This value shows that VIKOR performs worse than the other methods: in addition to ranking risk R27 in the 12th priority, it moves risk R26 (third rank based on the FMEA technique) down to the 9th priority. Meanwhile, the MOORA method has shown a reasonably acceptable performance with RBSCC = 0.76. Regarding risks R26 and R27, the MOORA method assigned the 6th and 7th priorities to these risks, respectively, while the VIKOR method assigned them the 9th and 12th priorities, respectively.

5.3 Sensitivity analysis

In this section, a sensitivity analysis is designed to evaluate the results of the proposed approach under weight replacement scenarios for the SODCT factors. For this purpose, three scenarios are defined for each risk factor, in which the weight of the evaluated factor is increased by 0.05, 0.15, and 0.25, respectively, relative to its original value, and the weight of each of the other factors is decreased by 0.0125, 0.0375, and 0.0625, respectively. The resulting 15 scenarios are listed in Table 8, and the results of implementing the proposed approach under these scenarios are presented in Tables 9, 10, and 11.
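The scenario construction can be reproduced with a few lines of code. The sketch below assumes that each increase is spread equally over the four remaining factors, which matches the stated decreases (0.05/4 = 0.0125, 0.15/4 = 0.0375, 0.25/4 = 0.0625); the function name `weight_scenarios` is illustrative, and the scenario order (S, O, D, C, T) follows the discussion of scenarios 1 to 15.

```python
# Original crisp weights reported in the text.
BASE_WEIGHTS = {"S": 0.4024, "O": 0.0935, "D": 0.1465, "C": 0.2397, "T": 0.1179}
STEPS = [0.05, 0.15, 0.25]


def weight_scenarios(base, steps):
    """Raise one factor by `step` and lower every other factor by step / (n - 1)."""
    scenarios = []
    for factor in base:
        for step in steps:
            cut = step / (len(base) - 1)
            scenarios.append(
                {f: (w + step if f == factor else w - cut) for f, w in base.items()}
            )
    return scenarios


scenarios = weight_scenarios(BASE_WEIGHTS, STEPS)
for number, weights in enumerate(scenarios, start=1):
    top = max(weights, key=weights.get)
    shown = {f: round(w, 4) for f, w in weights.items()}
    print(f"scenario {number:2d}: {shown}  most important: {top}")
```

Under this construction every scenario still sums to 1 and keeps all weights positive; factor D overtakes factor S only in scenario 9, and factor C overtakes it in scenarios 11 and 12.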

As the weight of factor S increases (scenarios 1 to 3), the Spearman Correlation Coefficient (SCC) index shows a relatively large decrease from 1 to 0.92 (see Table 9). This reduction indicates that the original results are relatively sensitive to changes in the weight of factor S, especially in Scenario 3. Because factor S already has the highest weight in the original state, a further increase in its weight reduces the importance of the other factors in the prioritization process. Additional investigation shows that risk R27, which had the third priority in the original state, falls to the 8th priority. Although risk R27 has the highest values of the O, D, C, and T factors, it has the lowest value of factor S, so a further increase in the weight of S lowers its priority to the 8th rank. By contrast, risk R41 reaches the third priority in Scenario 3 because it has the highest value of factor S (see Fig. 4).

As for increasing the weight of factor O (scenarios 4 to 6 in Table 9), the SCC index shows only a tolerable decrease, which indicates marginal changes in the risk priorities produced by the proposed approach.

According to scenarios 7 to 9 (see Table 10), which concern the weight replacement of factor D, the SCC index decreases from 1 to 0.93 after remaining unchanged in Scenario 7 despite an increase of 0.05 in the weight of this factor. In other words, although the risk priorities produced by the proposed approach remained stable in Scenario 7, they changed considerably in Scenario 9. Take R10 as an example: the priority of this risk drops from first place in the original state to second place in scenarios 8 and 9, while risk R27 jumps to the first rank. The reason is that, after the increase in its weight, factor D replaces factor S as the most important factor in Scenario 9. In this case, risk R27, which has the highest value of factor D, reaches the top priority.

Examining the increasing weight of factor C in scenarios 10 to 12 (see Table 10 and Fig. 5) shows that the risk priorities remain fairly stable in Scenario 10. After that, the order of risks changes noticeably, which reduces the SCC index from 1 to 0.9, since in scenarios 11 and 12 the weight of factor C exceeds that of the other factors. As a result, risk R05, with C = 5, rises from the 10th priority in the original case to the 6th priority, and risk R26, with C = 3, drops from the 6th priority to the 10th.

Finally, according to scenarios 13 to 15 (see Table 11), which show the effect of changing the weight of factor T, the SCC index stays the same in Scenario 13 and then gradually decreases from 1 to 0.94. These changes illustrate a gradual sensitivity to increases in the weight of factor T. The reason can be attributed to the high value of factor T for the risks and to the effect of factors S and C in the risk prioritization process. These two factors have the highest weights among the risk factors and, according to the FMEA team members, play a significant role in risk prioritization. In this case, risk R12, despite the similarity of its SOD factor values to those of risk R50, rises from the fifth to the fourth priority solely because T = 5, and R50 drops from the fourth priority in the original state to the 6th priority.

6 Discussion and conclusion

Occupational accidents and work-related diseases, which in some cases lead to death, can produce short-term and long-term undesirable outcomes for both individuals’ lives and the economy. In this regard, the HSE management system can contribute significantly to creating a healthy and safe workplace. To implement this system more efficiently, potential risks need to be determined and the critical risks controlled to reduce their adverse effects on activities and workers. Although the FMEA technique is one of the most popular methods for identifying critical risks, its score, the conventional RPN, has numerous shortcomings, such as failing to create a distinct prioritization of risks, considering only the SOD factors, and assigning the same importance to them. In real-world applications, this issue can baffle DMs when corrective/preventive actions must be taken under financial and human resource limitations. Therefore, it is of cardinal importance to propose a more efficient approach that addresses these shortcomings. This study aimed to prioritize HSE risks based on the FMEA technique using the FCM clustering algorithm and a hybrid MCDM method. The approach was implemented in two phases. First, after identifying the potential risks and determining the values of the SOD factors and the two extra factors C and T, the FCM algorithm was used to cluster the risks, and the weighted Euclidean distance measure was calculated using the weights obtained from the FBWM to specify the critical cluster. Following this phase, the FBWM-CoCoSo model was solved to prioritize the risks of the critical cluster. That is to say, in addition to considering the uncertainty in the risk assessment process, this study recognized critical risks based on the values of the risk factors instead of the RPN score to boost the separability among risk priorities.
The results of implementing the proposed approach in a company active in the automotive industry show the high separability and reliability of its risk prioritization in comparison with the traditional FMEA and other common FMEA-based MCDM methods. Since this study considers uncertainty in the critical risk prioritization process, future research can address uncertainty and reliability simultaneously by developing the CoCoSo technique based on the Z-number theory to increase the reliability of the outputs and the separability among risk priorities. Also, for large data sets, unlike the data set used in this study, other clustering algorithms such as density-based spatial clustering of applications with noise can be applied to identify the critical cluster.

References

Adar E, İnce M, Karatop B, Bilgili MS (2017) The risk analysis by failure mode and effect analysis (FMEA) and fuzzy-FMEA of supercritical water gasification system used in the sewage sludge treatment. J Environ Chem Eng 5(1):1261–1268

Baghery M, Yousefi S, Rezaee MJ (2018) Risk measurement and prioritization of auto parts manufacturing processes based on process failure analysis, interval data envelopment analysis and grey relational analysis. J Intell Manuf 29(8):1803–1825

Bakhtavar E, Yousefi S (2019) Analysis of ground vibration risk on mine infrastructures: integrating fuzzy slack-based measure model and failure effects analysis. Int J Environ Sci Technol 16(10):6065–6076

Banan A, Nasiri A, Taheri-Garavand A (2020) Deep learning-based appearance features extraction for automated carp species identification. Aquac Eng 89:102053

Behraftar S, Hossaini SMF, Bakhtavar E (2017) MRPN technique for assessment of working risks in underground coal mines. J Geol Soc India 90(2):196–200

Bezdek JC (2013) Pattern recognition with fuzzy objective function algorithms. Springer Science & Business Media, Berlin

Bian T, Zheng H, Yin L, Deng Y (2018) Failure mode and effects analysis based on D numbers and TOPSIS. Qual Reliab Eng Int 34(4):501–515

Cardone B, Di Martino F (2020) A novel Fuzzy entropy-based method to improve the performance of the Fuzzy C-means algorithm. Electronics 9(4):554

Carpitella S, Certa A, Izquierdo J, La Fata CM (2018) A combined multi-criteria approach to support FMECA analyses: a real-world case. Reliab Eng Syst Saf 169:394–402

Celik E, Gul M (2021) Hazard identification, risk assessment and control for dam construction safety using an integrated BWM and MARCOS approach under interval type-2 fuzzy sets environment. Autom Constr 127:103699

Chanamool N, Naenna T (2016) Fuzzy FMEA application to improve decision-making process in an emergency department. Appl Soft Comput 43:441–453

Chang KH, Chang YC, Tsai IT (2013) Enhancing FMEA assessment by integrating grey relational analysis and the decision making trial and evaluation laboratory approach. Eng Fail Anal 31:211–224

Chang WL, Pang LM, Tay KM (2017) Application of self-organizing map to failure modes and effects analysis methodology. Neurocomputing 249:314–320

Dabbagh R, Yousefi S (2019) A hybrid decision-making approach based on FCM and MOORA for occupational health and safety risk analysis. J Safety Res 71:111–123

Dogan A, Birant D (2021) Machine learning and data mining in manufacturing. Expert Syst Appl 166:114060

Duan CY, Chen XQ, Shi H, Liu HC (2019) A new model for failure mode and effects analysis based on k-means clustering within hesitant linguistic environment. IEEE Trans Eng Manage. https://doi.org/10.1109/TEM.2019.2937579

Duda RO, Hart PE, Stork DG (1973) Pattern classification and scene analysis, vol 3. Wiley, New York

Faizollahzadeh Ardabili S, Najafi B, Shamshirband S, Minaei Bidgoli B, Deo RC, Chau KW (2018) Computational intelligence approach for modeling hydrogen production: a review. Eng Appl Comput Fluid Mech 12(1):438–458

Fan Y, Xu K, Wu H, Zheng Y, Tao B (2020) Spatiotemporal modeling for nonlinear distributed thermal processes based on KL decomposition, MLP and LSTM network. IEEE Access 8:25111–25121

Garcia PADA, Leal Junior IC, Oliveira MA (2013) A weight restricted DEA model for FMEA risk prioritization. Production 23(3):500–507

Ghoushchi SJ, Yousefi S, Khazaeili M (2019) An extended FMEA approach based on the Z-MOORA and fuzzy BWM for prioritization of failures. Appl Soft Comput 81:105505

Guo S, Zhao H (2017) Fuzzy best-worst multi-criteria decision-making method and its applications. Knowl-Based Syst 121:23–31

Hämäläinen P, Takala J, Kiat TB (2017) Global estimates of occupational accidents and work-related illnesses 2017. World, pp 3–4

Helvacioglu S, Ozen E (2014) Fuzzy based failure modes and effect analysis for yacht system design. Ocean Eng 79:131–141

Huang J, You JX, Liu HC, Song MS (2020) Failure mode and effect analysis improvement: a systematic literature review and future research agenda. Reliab Eng Syst Saf 199:106885

Ilangkumaran M, Shanmugam P, Sakthivel G, Visagavel K (2014) Failure mode and effect analysis using Fuzzy analytic hierarchy process. Int J Product Qual Manag 14(3):296–313

ILO (2019) Safety and health at the heart of the future of work, building on 100 years of experience. International Labour Organization

Jee TL, Tay KM, Lim CP (2015) A new two-stage fuzzy inference system-based approach to prioritize failures in failure mode and effect analysis. IEEE Trans Reliab 64(3):869–877

Karunathilake H, Bakhtavar E, Chhipi-Shrestha G, Mian HR, Hewage K, Sadiq R (2020) Decision making for risk management: a multi-criteria perspective. In: Methods in chemical process safety, Elsevier (Vol. 4, pp. 239–287)

Kutlu AC, Ekmekçioğlu M (2012) Fuzzy failure modes and effects analysis by using fuzzy TOPSIS-based fuzzy AHP. Expert Syst Appl 39(1):61–67

Liu HC (2016) FMEA using uncertainty theories and MCDM methods. In: FMEA using uncertainty theories and MCDM methods, Springer, Singapore, pp 13–27

Liu HC, You JX, You XY, Shan MM (2015) A novel approach for failure mode and effects analysis using combination weighting and fuzzy VIKOR method. Appl Soft Comput 28:579–588

Liu HC, You JX, Chen S, Chen YZ (2016) An integrated failure mode and effect analysis approach for accurate risk assessment under uncertainty. IIE Trans 48(11):1027–1042

Liu HC, Hu YP, Wang JJ, Sun M (2018) Failure mode and effects analysis using two-dimensional uncertain linguistic variables and alternative queuing method. IEEE Trans Reliab 68(2):554–565

Liu HC, Chen XQ, Duan CY, Wang YM (2019a) Failure mode and effect analysis using multi-criteria decision making methods: a systematic literature review. Comput Ind Eng 135:881–897

Liu HC, You JX, Shan MM, Su Q (2019b) Systematic failure mode and effect analysis using a hybrid multiple criteria decision-making approach. Total Qual Manag Bus Excell 30(5–6):537–564

Lo HW, Shiue W, Liou JJ, Tzeng GH (2020) A hybrid MCDM-based FMEA model for identification of critical failure modes in manufacturing. Soft Comput. https://doi.org/10.1007/s00500-020-04903-x

Moatari-Kazerouni A, Chinniah Y, Agard B (2015) A proposed occupational health and safety risk estimation tool for manufacturing systems. Int J Prod Res 53(15):4459–4475

Onari MA, Yousefi S, Rabieepour M, Alizadeh A, Rezaee MJ (2021) A medical decision support system for predicting the severity level of COVID-19. Complex Intell Syst. https://doi.org/10.1007/s40747-021-00312-1

Pourreza P, Saberi M, Azadeh A, Chang E, Hussain O (2018) Health, safety, environment and ergonomic improvement in energy sector using an integrated Fuzzy cognitive map–Bayesian network model. Int J Fuzzy Syst 20(4):1346–1356

Rastayesh S, Bahrebar S, Bahman AS, Sørensen JD, Blaabjerg F (2019) Lifetime estimation and failure risk analysis in a power stage used in wind-fuel cell hybrid energy systems. Electronics 8(12):1412

Renjith VR, Kumar PH, Madhavan D (2018) Fuzzy FMECA (failure mode effect and criticality analysis) of LNG storage facility. J Loss Prev Process Ind 56:537–547

Rezaee MJ, Salimi A, Yousefi S (2017a) Identifying and managing failures in stone processing industry using cost-based FMEA. Int J Adv Manuf Technol 88(9–12):3329–3342

Rezaee MJ, Yousefi S, Babaei M (2017b) Multi-stage cognitive map for failures assessment of production processes: An extension in structure and algorithm. Neurocomputing 232:69–82

Rezaee MJ, Jozmaleki M, Valipour M (2018a) Integrating dynamic fuzzy C-means, data envelopment analysis and artificial neural network to online prediction performance of companies in stock exchange. Physica A Stat Mech Appl 489:78–93

Rezaee MJ, Yousefi S, Valipour M, Dehdar MM (2018b) Risk analysis of sequential processes in food industry integrating multi-stage fuzzy cognitive map and process failure mode and effects analysis. Comput Ind Eng 123:325–337

Rezaee MJ, Yousefi S, Eshkevari M, Valipour M, Saberi M (2020) Risk analysis of health, safety and environment in chemical industry integrating linguistic FMEA, fuzzy inference system and fuzzy DEA. Stoch Env Res Risk Assess 34(1):201–218

Rezaee MJ, Eshkevari M, Saberi M, Hussain O (2021) GBK-means clustering algorithm: an improvement to the K-means algorithm based on the bargaining game. Knowl Based Syst 213:106672

Rezaei J (2015) Best-worst multi-criteria decision-making method. Omega 53:49–57

Safari H, Faraji Z, Majidian S (2016) Identifying and evaluating enterprise architecture risks using FMEA and fuzzy VIKOR. J Intell Manuf 27(2):475–486

Sakthivel G, Saravanakumar D, Muthuramalingam T (2018) Application of failure mode and effect analysis in manufacturing industry-an integrated approach with FAHP-fuzzy TOPSIS and FAHP-fuzzy VIKOR. Int J Product Qual Manag 24(3):398–423

Shamshirband S, Rabczuk T, Chau KW (2019) A survey of deep learning techniques: application in wind and solar energy resources. IEEE Access 7:164650–164666

Smith PM, Saunders R, Lifshen M, Black O, Lay M, Breslin FC, LaMontagne AD, Tompa E (2015) The development of a conceptual model and self-reported measure of occupational health and safety vulnerability. Accid Anal Prev 82:234–243

Soleimani H, Fattahi Ferdos T (2017) Analyzing and prioritization of HSE performance evaluation measures utilizing Fuzzy ANP (Case studies: Iran Khodro and Tabriz Petrochemical). J Ind Eng Manag Stud 4(1):13–33

Taormina R, Chau KW (2015) ANN-based interval forecasting of streamflow discharges using the LUBE method and MOFIPS. Eng Appl Artif Intell 45:429–440

Tay KM, Jong CH, Lim CP (2015) A clustering-based failure mode and effect analysis model and its application to the edible bird nest industry. Neural Comput Appl 26(3):551–560

Tian ZP, Wang JQ, Zhang HY (2018) An integrated approach for failure mode and effects analysis based on fuzzy best-worst, relative entropy, and VIKOR methods. Appl Soft Comput 72:636–646

Tooranloo HS, sadat Ayatollah A (2016) A model for failure mode and effects analysis based on intuitionistic fuzzy approach. Appl Soft Comput 49:238–247

Ulutaş A, Karakuş CB, Topal A (2020) Location selection for logistics center with fuzzy SWARA and CoCoSo methods. J Intell Fuzzy Syst (preprint). https://doi.org/10.3233/JIFS-191400

Wang Z, Gao JM, Wang RX, Chen K, Gao ZY, Zheng W (2017) Failure mode and effects analysis by using the house of reliability-based rough VIKOR approach. IEEE Trans Reliab 67(1):230–248

Wang C, Tan XP, Tor SB, Lim CS (2020) Machine learning in additive manufacturing: state-of-the-art and perspectives. Addit Manuf 36:101538

Wu CL, Chau KW (2013) Prediction of rainfall time series using modular soft computing methods. Eng Appl Artif Intell 26(3):997–1007

Yazdani M, Zarate P, Zavadskas EK, Turskis Z (2019) A Combined Compromise Solution (CoCoSo) method for multi-criteria decision-making problems. Manag Decis 57(9):2501–2519

Yazdi M (2019) Improving failure mode and effect analysis (FMEA) with consideration of uncertainty handling as an interactive approach. Int J Interact Des Manuf (IJIDeM) 13(2):441–458

Yousefi S, Alizadeh A, Hayati J, Baghery M (2018) HSE risk prioritization using robust DEA-FMEA approach with undesirable outputs: a study of automotive parts industry in Iran. Saf Sci 102:144–158

Yousefi S, Jahangoshai Rezaee M, Moradi A (2020) Causal effect analysis of logistics processes risks in manufacturing industries using sequential multi-stage fuzzy cognitive map: a case study. Int J Comput Integr Manuf 33(10–11):1055–1075

Yucesan M, Gul M (2021) Failure modes and effects analysis based on neutrosophic analytic hierarchy process: method and application. Soft Comput. https://doi.org/10.1007/s00500-021-05840-z

Yucesan M, Gul M, Celik E (2021) A holistic FMEA approach by fuzzy-based Bayesian network and best–worst method. Complex Intell Syst. https://doi.org/10.1007/s40747-021-00279-z

Zeraatpisheh M, Ayoubi S, Brungard CW, Finke P (2019) Disaggregating and updating a legacy soil map using DSMART, fuzzy c-means and k-means clustering algorithms in Central Iran. Geoderma 340:249–258

Zhang H, Dong Y, Palomares-Carrascosa I, Zhou H (2018) Failure mode and effect analysis in a linguistic context: a consensus-based multiattribute group decision-making approach. IEEE Trans Reliab 68(2):566–582

Ethics declarations

Conflict of interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Cite this article

Valipour, M., Yousefi, S., Jahangoshai Rezaee, M. et al. A clustering-based approach for prioritizing health, safety and environment risks integrating fuzzy C-means and hybrid decision-making methods. Stoch Environ Res Risk Assess 36, 919–938 (2022). https://doi.org/10.1007/s00477-021-02045-6

DOI: https://doi.org/10.1007/s00477-021-02045-6