Abstract

Understanding the geological uncertainty of hydrostratigraphic models is important for risk assessment in hydrogeology. An important feature of sedimentary deposits is the directional ordering of hydrostratigraphic units (HSU). Geostatistical simulation methods propose efficient algorithm for assessing HSU uncertainty. Among different geostatistical methods to simulate categorical data, Bayesian maximum entropy method (BME) and its simplified version Markov-type categorical prediction (MCP) present interesting features. In particular, the zero-forcing property of BME and MCP can provide a valuable constrain on directional properties. We illustrate the ability of MCP to simulate vertically ordered units. A regional hydrostratigraphic system with 11 HSU and different abundances is used. The transitional deterministic model of this system presents lateral variations and vertical ordering. The set of 66 (11 × 12/2) bivariate probability functions is directly calculated on the deterministic model with fast Fourier transform. Despite the trends present in the deterministic model, MCP is unbiased for the HSU proportions in the non-conditional case. In the conditional cases, MCP proved robust to datasets over-representing some HSU. The inter-realizations variability is shown to closely follow the amount and quality of data provided. Our results with different conditioning datasets show that MCP replicates adequately the directional units arrangement. Thus, MCP appears to be a practical method for generating stochastic models in a 3D hydrostratigraphic context.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The limited sampling available to study complex geological systems are not sufficient to properly characterize critical features, such as the proportions and connectivity of units, that control groundwater flow simulation responses (Jakeman et al. 2016; Refsgaard et al. 2012). These attributes are essential to assess the response of a hydrogeological system to external stimuli. Hence, adequate characterization of uncertainty about unit proportions and connectivity is of paramount importance for hydrogeological modeling and prediction of aquifer responses (Molson and Frind 2012).

Most groundwater flow models are based on deterministic models that lack any quantification of uncertainty in the geometry, connectivity, and relative proportions of the geological units. Geostatistical simulation methods aim to propose different hydrogeological models called realizations (Chilès and Delfiner 2012). Each realization is typically an input to a flow simulator from which a response to a specified stimulus is computed. The distribution of modeled responses describes the uncertainty about the real system response to a similar physical stimulus (Zhou and Li 2011). To be useful, the approach requires each hydrogeological model to be consistent with the relevant natural features expected to be found in the subsurface. Moreover, the ensemble of models should provide a fair assessment of uncertainty. Hence, the models must include all the geological knowledge available while preserving the variability due to incomplete knowledge.

An important common feature in sedimentary deposits is the directional ordering of stratigraphical geological units. For example, in undeformed glacial stratigraphies, this is represented by the law of superposition. This constraint is difficult to incorporate in traditional categorical geostatistical simulation methods like PluriGaussian Simulation (PGS).

The PGS method of Armstrong et al. (2011) with independent Gaussians does not allow asymmetrical ordering, as all transitions are symmetric by construction. Some asymmetry can be introduced using correlated and spatially delayed Gaussians (Le Blévec et al. 2017). However, this approach does not allow to fully control the transition probabilities in presence of numerous units.

The multiple-point statistical methods (MPS) have two main variants: either pixel- (e.g. Strebelle 2002; Mariethoz et al. 2010) or patch-based (e.g. Rezaee et al. 2015; Arpat and Caers 2007). Both are based on the use of one or many training images that synthesize geological knowledge. Although directionality can be present in a training image, it might be difficult to strictly enforce transitional features into realizations, especially for the patch-based variant. Furthermore, multipoint method used with training image that have low pattern repeatability like the ones seen in sedimentary environments with directional trends (Boucher et al. 2014) may generate realizations lacking variability.

Categorical simulation (units or facies) based on transition probabilities like Bayesian maximum entropy (BME) (Bogaert 2002; Bogaert and D’Or 2002; D’Or 2003) can reproduce complex geological structures that account for asymmetries in the bivariate probabilities. However, the computations explode for multiple facies and multiple points. Computationally simpler approach like Li’s algorithm (Li et al. 2004; Li and Zhang 2007; Li 2007) or Markov Category Prediction method (MCP) (Allard et al. 2011) also enable to account for asymmetries in bivariate probabilities. Allard et al. (2011) demonstrated that MCP constitutes a good approximation to BME. However, MCP has never been tested on a complex system with more than 3 units and strong directional control.

The main objective of this study is to demonstrate the ability of MCP to simulate units with directional ordering. After reviewing the MCP approach and its properties, effect of conditioning data is discussed and illustrated with a simple synthetic example. Then, a complex real 3D deterministic model, representing a sub-domain of the Simcoe County hydrostratigraphic system in south-central Ontario, is used as training image for the application of MCP. Various simulation scenarios using different types and levels of information are compared and discussed.

2 MCP simulation methodology

The MCP approach was introduced by Allard et al. (2011) as an efficient substitute for the computationally intensive Bayesian maximum entropy (BME) method. The BME, initially developed to estimate continuous variables (Christakos 1992; Serre and Christakos 1999; Serre et al. 2003; D’Or et al. 2001; Orton and Lark 2007), was later adapted for the estimation of categorical variables (Bogaert and D’Or 2002; D’Or and Bogaert 2004). In the BME approach for categorical variables, the parameters of the joint discrete distributions are estimated so as to match simultaneously the bivariate probabilities between all pairs. By contrast, in the MCP approach only the bivariate probabilities involving the point to estimate and each data point are considered. The bivariate probabilities between pairs of data points are ignored. Allard et al. (2011) showed this corresponds assuming a conditional independence hypothesis: the joint category distributions at locations \({{\mathbf {x}}}_{i}, \ i=1 \ldots n\) are considered independent once the category at estimation location \({\mathbf{x }}_{0}\) is known.

2.1 MCP definition

The conditional probability to observe the category \(i_0\) at location \({\mathbf{x }}_{0}\) given the categories \(i_1, \ldots i_n\) observed at locations \({\mathbf{x }}_{1}, \ldots {\mathbf{x }}_{n}\) is by definition:

where I is the number of categories.

In the BME approach, one seeks to estimate directly the above joint distributions subject to a series of constraints aimed at recovering imposed univariate and bivariate distributions. In practice, BME is limited to use of small neighborhood due to the difficulty to estimate high-dimensional joint distributions.

In the MCP method, one assumes a conditional independence hypothesis such that:

which enables to write Eq. 1 as:

Hence, only the bivariate distributions involving the estimated point \(\mathbf {x_0}\) are required in the MCP approach. It was shown by Allard et al. (2011) through a series of examples that, despite the rather strong conditional independence hypothesis, differences observed between the BME and the MCP results were negligible.

One interesting feature of MCP, called the zero-forcing property, is the proper integration of 0/1 probabilities. As soon as \(p_{i_k|i_0=j}=0\) for any k, one has \({p}_{i_{0}=j|i_{1},\ldots ,i_{n}}^{MCP}=0\). Similarly, when \(p_{i_k|i_0=j}=1\) one has \({p}_{i_{0}=j|i_{1},\ldots ,i_{n}}^{MCP}=1\). Therefore, directional sequence of categories can be easily reproduced by MCP as noted by Allard et al. (2011). It makes the method attractive to simulate stratigraphic models with naturally ordered units arrangement.

2.2 MCP simulation

We apply the MCP approach for the particular case where a training image (TI) is available either in the form of a conceptual model or a deterministic one. In both cases, we assume the basic grid cell is the same size in the TI and the simulated field. Moreover, we assume that all hard data are observed directly at the cell scale or are deemed representative of this scale.

2.2.1 Bivariate probabilities

The first step in MCP is to estimate the bivariate probabilities. These are computed directly from the TI for all separation vectors. For efficient computation, we use the fast Fourier transform algorithm (FFT) (Marcotte 1996). The bivariate probabilities are computed simultaneously by FFT for each variable pair and every separation vector. During sequential simulation, the separation vectors between the point to simulate and the known points (data or previously simulated) in the neighborhood are computed and used to read directly the corresponding bivariate probabilities. We stress that because everything is defined at a cell scale there is no modeling of bivariate probabilities required nor any smoothing. We simply read directly the experimental probabilities for the MCP computations.

As an example, suppose 11 HSU are available in a 3D setting. One wants to simulate the HSU at cell \({\mathbf {x_0}}=(50,50,5)\). Also assume that HSU 5 and 7 were observed at cells \(\mathbf {x_1}\) = (49, 52, 4) and \(\mathbf {x_2}\) = (55, 46, 7) respectively. These two informed cells define the neighborhood of \(\mathbf {x_0}\). Then, the two separation vectors are \((1,-2,1)\) and \((-5,4,-2)\). To compute Eq. 3, we read directly the 11 values (for \(i_0=1 \ldots 11\)) of \(p_{i_0,i_1=5}(1,-2,1)\) and the 11 values of \(p_{i_0,i_2=7}(-5,4,-1)\).

The bivariate probability of observing categories i at location \({{\mathbf {x}}}\) and j at location \(\mathbf {x+h}\) is simply equal to the non-centered cross-indicator covariance \(P(I({{\mathbf {x}}}),J({\mathbf {x+h}}))=E[I({{\mathbf {x}}})J({\mathbf {x+h}})]\) where \(I({{\mathbf {x}}})\) is the indicator variable that takes value one if HSU i is observed at point \({{\mathbf {x}}}\) and value zero otherwise. Similarly \(J(\mathbf {x+h})\) takes value one if HSU j is observed at point \(\mathbf {x+h}\). The expectation is estimated by \(\frac{1}{N({\mathbf {h}})} \sum _{{{\mathbf {x}}}} I({{\mathbf {x}}})J({\mathbf {x+h}})\) over the \(N({\mathbf {h}})\) pair of points with separation vector \({\mathbf {h}}\). As described in Marcotte (1996), for data on a (possibly incomplete) regular grid, this can be computed by FFT using the following equations:

where F represents the FFT, \(F^{-1}\) the FFT-inverse, matrix \({{\mathbf {K}}}\) takes the value one at all points of the deterministic model and zero outside, matrices \({{\mathbf {I}}}\) and \({{\mathbf {J}}}\) take the value one at locations where respectively categories i and j are observed and zero elsewhere. The matrices \({{\mathbf {I}}}\) and \({{\mathbf {J}}}\) are extended by zero-padding to nullify periodic repetitions implicit in FFT. The over-line indicates complex conjugate. Note that the FFT computes the indicator cross-covariances simultaneously for all lag-distances \({\mathbf {h}}\). The fast computation in Eq. 5 is repeated separately for each pair of categories.

2.2.2 Data and pseudo-data

Conditioning to hard data (HD) is straightforward in MCP as opposed to some other categorical simulation methods like object-based, process-based or patch-based variants of MPS. The HD simply populate the corresponding cells and are never changed during the simulation. In the absence of HD, the algorithm starts either by drawing directly from the marginal distributions or, preferably, by imposing a pseudo-data (PD). Pseudo-data can be provided, as examples, by surficial maps or bedrock topography interpretations. Another possible source of pseudo-data can be a deterministic model, such as a geologist-interpreted CAD model. Taking a small proportion of the deterministic model as pseudo-data helps to reproduce the main characteristics and constraints interpreted by the geologist whilst keeping variability in the simulated models. However, one should refrain from taking too much pseudo-data as this will reduce variability in the realizations.

2.2.3 Simulation algorithm

The simulation proceeds following the sequential simulation framework where the conditional distributions are computed using Eq. 3. The simulated value at the current cell is obtained by a random draw from the category conditional probabilities. The use of a multigrid approach (Tran 1994) helps to better reproduce the long range structural characteristics described by the TI. The neighborhood search parameters include: extent and anisotropy of the search, number of neighbors to use and search by octant. The effect of these choices can be important (see Sect. 2.5).

In the particular case of highly ordered arrangement of units like the ones considered in the next sections, it is advisable to avoid drawing from the marginal distributions as nothing guarantees that the desired order of units will be preserved. A simple modification of the simulation path alleviates this problem by re-simulating at the end of path any location that does meet a minimum number of data found in the neighborhood search.

2.2.4 Assessing quality of realizations

Two statistics are used to measure the quality of the realizations: the Kullback-Leibler divergence (C1) from Kullback and Leibler (1951) and the average spread of HSU proportions (C2) where the spread for a given HSU is measured by the difference between the proportion quantiles 0.95 and 0.05 among the \(n_r\) realizations. Hence,

where I is the number of HSU, \(pTI_{i}\) represents the \({\hbox {HSU}}_i\) proportion in the TI, \(\overline{pMCP_{i}}\) is the average proportion of \({\hbox {HSU}}_i\) in the \(n_r\) realizations and \(q_{5,i}\) and \(q_{95,i}\) are the 0.05 and 0.95 quantiles of distribution obtained with the \(n_r\) realizations for \({\hbox {HSU}}_i\) proportion. The first criterion measures similarity of the distribution of HSU proportions in realizations compared to the TI proportions. Criterion C2 measures the variability of the simulated HSU proportions among the different realizations.

The above criteria were used to assess the impact of various choices for the control parameters. We generally seek to favor small C1 and large C2 values. These two objectives tend to oppose each other. For example, taking a larger proportion of pseudo-data from a deterministic model favors the reproduction of the proportions globally but also in each individual realization which reduces the inter-realization variability of HSU proportions.

In addition to the global statistics C1 and C2, we also computed dissimilarity map between the model used as TI and the different realizations using:

where \(n_r\) is the number of realizations, and \(I_{S_r,TI}({\mathbf{x }})\) is an indicator variable taking value zero when the simulated unit at location \({\mathbf{x }}\) coincides with unit in TI, and value one otherwise. The dissimilarity map provides a spatial representation of where the uncertainty is large with respect to the deterministic model.

2.3 Illustration of MCP

A synthetic example based on a simple deterministic model is used to illustrate typical results obtained by MCP. The deterministic model used as TI consists of four horizontal hydrogeological units with increasing proportions from top to bottom (respectively 15, 20, 30 and 35%).

Figure 1 presents four different realizations obtained using three initial scenarios: non-conditional simulation (NCS), conditional simulation with 1% data extracted from the TI (CS1) and conditional simulation with 20% data extracted from the TI (CS2). Simulations are performed on a 2D 25 × 20 grid. The minimum and maximum number of neighbors are set to 0 and 5. The search is done by quadrant with a maximum of 2 samples per quadrant.

Based on the 0/1 forcing property of MCP, the sequence of units present in the TI is respected in each realization. As expected, the dissimilarity decreases with the addition of data and the dissimilarity is stronger close to units interfaces.

Figure 2 shows the boxplots of the units proportions obtained from 100 realizations of NCS, CS1 and CS2 for the synthetic model. The TI proportions are well recovered for each unit, in all cases. For NCS, the mean proportions obtained from the realizations are not statistically different from proportions in the TI (test on equality of proportions), indicating the absence of bias of the method. A similar test cannot be applied for cases CS1 and CS2 due to the correlation between realizations induced by the conditioning data. However, the boxplots strongly suggest absence of bias of the method. As expected, variability of unit proportions in the realizations decreases with the amount of conditioning data. The expected variability of MCP conditional simulation is studied more in detail in Sect. 2.4.

Table 1 presents statistics C1 and C2 (see Eq. 7), for the different scenarios.

The NCS presents the highest values for C1, and C2. Adding a few data randomly (CS1 vs. NCS) decreases strongly both statistics values. As expected, adding more data reduces further C2 (CS2 vs. CS1).

From this synthetic example, we conclude that the MCP is unbiased. Its 0/1 forcing property is determinant to enforce directional units sequence. The variability of the resulting realizations is controlled by the number of data available. The realizations appear as different versions of the deterministic model, especially in presence of conditioning data.

2.4 MCP variability in the Gaussian case

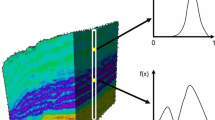

To illustrates expected variability of MCP conditional simulation in a spatial context, we compare the variability of its transitional bivariate probability functions with the multiGaussian case.

We first perform conditional simulation of 100 realizations of a Gaussian variable using FFTMA algorithm (Ravalec et al. 2000) on a 2D simulation grid (250 × 250 cells) using a cubic covariance function with an isotropic range representing 1/4 of the field size. An initial realization was sampled at 251 cells (0.4% of the total number of cells) to provide the conditioning data. Gaussian realizations are post-conditioned by kriging and then truncated using thresholds −0.67 and 0.52 to define three units with theoretical proportions of 0.25, 0.45 and 0.30 respectively.

For each truncated conditional realization we calculated the experimental non-centered cross-indicator covariance that estimates \(E[I({{\mathbf {x}}})J({\mathbf {x+h}})]\). At each lag, the 100 experimental non-centered covariances and cross-covariances were sorted and used to define 95% confidence interval of bivariate probabilities (blue lines in Fig. 3) for the Gaussian case.

Next, we calculated for each lag distance the theoretical bivariate probabilities from the biGaussian law using the same covariance model and unit proportions (Chilès and Delfiner 2012, p. 104). We used the theoretical bivariate probabilities for MCP simulation with search radii equal to field size and using a maximum of 5 neighbors. From MCP realizations, we calculated the experimental bivariate probabilities for the MCP case (grey lines in Fig. 3).

Figure 3 shows a rather good agreement of MCP bivariate probabilities with those obtained in the Gaussian case. There is no strong bias for probabilities and the variability of the probabilities match well the confidence intervals obtained from exact Gaussian simulations. This example illustrates that MCP is able to reproduce variability comparable to the one obtained from an exact Gaussian method in the conditional Gaussian case. Admittedly, results can vary with the number of conditioning points used.

2.5 Sensitivity to neighborhood selection

To assess the effect of choices related to the main simulation parameters (number of neighbors and search radius), a sensitivity analysis is performed on the synthetic case shown in Fig. 1 based on C1 and C2 criterion values. One hundred conditional realizations with 10 HD were run. In the first test, the search radius (dmax) covers the full extent of the domain and maximal number of neighbors (nmax) varies (Fig. 4-top left). In the second test, nmax is set at 10 with increasing dmax (Fig. 4-top right). In the third test, nmax is set at 10, dmax covers the full extent of the domain and number of HD increases (ndata). In the fourth test, nmax is set at 10, dmax covers the full extent of the domain, the number of HD is 10 (ndata) and number of realizations increases. In these four tests no other constraints are applied. Hence, multigrid, correction for inversion, search by quadrant, and minimal number of neighbor are all deactivated.

Top left C1 and C2 as a function of nmax using dmax infinite and ndata = 10; top right C1 and C2 as a function of dmax with nmax = 10 and ndata = 10; bottom left C1 and C2 as a function of ndata with nmax = 10 and dmax infinite; bottom right C1 and C2 as a function of number of realizations with nmax = 10 and dmax infinite

Figure 4-top left shows that C1 and C2 decrease to zero as nmax increases. With numerous neighbors, the data event becomes so constraining that a single category at simulation point is observed in the TI (C2 goes to zero). On the opposite, a lack of neighbors does not bring enough constraints to reproduce correctly the TI proportions, so C1 is high. For this particular example, values of nmax between say 5–20 could be envisaged as they allow adequate reproduction of TI proportions without eliminating totally the variability.

Figure 4-top right shows globally fluctuations around the levels observed for C1 and C2 in the Fig. 4-top left for nmax = 10, except for small dmax where both C1 and C2 are higher. When dmax is small, it is likely that less than nmax neighbors are found, so the simulation behaves like with a smaller nmax. This indicates that results are rather robust to the choice of dmax. As soon as dmax is not set too small, one can expect a range of values for nmax where C1 is simultaneously small and C2 not close to zero. This range is expected to be case dependent, so the selection of an acceptable nmax may require a few trials.

Figure 4-bottom left shows that C1 and C2 results are robust to the number of HD initially available as soon as ndata \(\ge\) 10. C1 is close to zero and C2 decreases slowly with ndata. So the variability obtained is more directly related to the number of neighbors used in the simulation (nmax) than the number of initially available HD (ndata). This result is rather expected as each simulated cell is added sequentially to the list of available data. As the initial HD represent only a small portion of cells present in the simulated field, the neighborhood becomes quickly composed mostly of previously simulated points.

Figure 4-bottom right shows convergence of C1 to zero and stabilization of C2 as the number of realizations increases. Note that in this case for each realization a new drawing of HD is done so as to eliminate the effect of any particular drawing.

3 Case study: MCP simulation of a Basin glacial stratigraphy

We apply the MCP method to a complex deterministic model comprising 11 HSU with strong vertical ordering. After introducing the study area and the deterministic model, five scenarios for simulation by MCP are considered and their results compared.

3.1 Hydrostratigraphic setting: Simcoe County area

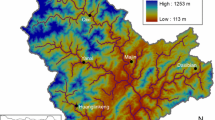

The 3D TI represents a small portion of a larger deterministic model describing the regional hydrostratigraphy of South Simcoe County in South-central Ontario, Canada (Fig. 5). The deterministic model and the accompanying data were provided by the Ontario Geological Survey.

The 11 HSU show proportions varying from 0.25 to 27.28%. The first two letters of the HSU label refer to sand and gravel aquifers (AF) or silty and clayey aquitards (AT). From bottom to top, the hydrostratigraphy comprises: Ordovician limestone bedrock (23.24%) and pre-Middle Wisconsin stratified sand and gravel aquifers (AFF1; 0.25%) and aquitards ATE1 (12.19%). The overlying Thorncliffe Formation consists of interbedded aquifers (AFD4: 2.00%; AFD1: 2.90%) and aquitards (ATD2: 1.43%, ATD1; 27.28%). Stratified deposits of AFD4 uncomformably overlies ATE1 which in this area occurs as a fine textured diamicton. Newmarket till (ATC1; 4.00%) caps the sedimentary sequence and acts as a regional aquitard. The till is eroded locally and has been traced down into broad valley systems interpreted to be tunnel channels. Glaciofluvial sand and gravel aquifers (AFB2; 2.45%) occur at the base of the tunnel channels and are equivalent in age to early Oak Ridges moraine deposits. AFB2 includes gravelly and sandy sediments at the base and is capped by a sequence of glaciolacustrine silt, clay and subordinate sand (aquitard ATB2; 22.27%). Shallow regressional deposits of sand and gravel (AFB1; 1.99%) completes the stratigraphic sequence. The HSU thickness is highly variable (Fig. 6). Each HSU is composed of one or more successive stratigraphic sub-units (hydrofacies) with similar hydrogeologic properties determined at reservoir scale.

3.2 Simulation scenarios and parameters

Five different scenarios are compared. The first scenario is a non-conditional simulation (NCS). It uses only the TI in Fig. 5. The four other scenarios (CS1 to CS4) are conditional to information depicted in Fig. 7 and described in Table 2.

Two types of HD are used. The first one comes from continuously sampled high quality boreholes. The second type represents picks (3D points) interpreted by the geologist from low quality borehole logs from public domain database. The picks are used to control unit geometry. Each pick corresponds to the top of a certain HSU. The HSU immediately above a pick is considered unknown although it must obviously be a younger unit. In addition to HD, pseudo-data are used in some of the scenarios. The sources of pseudo-data are: (i) the hydrostratigraphic units derived from surficial geology mapping, (ii) the bedrock interpolated surface and (iii) random points selected within the deterministic model. In the latter case, a new drawing is done for each realization.

The simulation grid is regular. Each cell is 200 × 200 × 1 m. The deterministic model (TI) and simulated field are defined over the same grid of 76 × 5 × 193 cells. The bivariate probabilities are calculated over the entire 3D TI.

One hundred realizations are produced for each scenario. MCP is applied with search radii of 10 × 5 × 25 cells and a maximum of 5 neighbors with at most two neighbors per octant. A multigrid procedure is activated comprising 9 levels. All simulation results are compared to the deterministic model considered as a smoothed interpretation of the ground truth.

3.2.1 Non-conditional simulation (NCS)

The NCS scenario illustrates the capacity of MCP to preserve HSU relationships found in the TI (Fig. 8). The directional HSU sequence is respected (Fig. 8b) due to the zero-forcing property. The spatial variability of the HSU is larger close to HSU contacts (Fig. 8c). The TI HSU proportions are well reproduced (Fig. 8d) by the MCP realizations, even for units representing very low proportions. The proportion variability is large and approximately proportional to the TI proportions as expected for a non-conditional case.

The differences between the TI proportions and the mean proportions over the realizations are statistically non-significant for 9 of the 11 HSU (we have \(\left| \overline{pMCP_{i}}-pTI_{i} \right| / \sqrt{pTI_i(1-pTI_i)/n}<z_{0.05/2}=1.96\)), including the four most abundant HSU. The only two HSU showing significant differences, AFD4 and AFF1, are among the least abundant ones.

Figures 9 shows boxplots of HSU thicknesses measured over all vertical scan lines for the 100 realizations. The thickness distributions compares well to the mean and maximum thicknesses observed in the 3D TI for the different HSU.

3.2.2 Conditional simulation with HD: CS1

Data used for CS1 scenarios are 176 picks (CS1a) or 176 double picks (i.e. in all 352 picks obtained by adding a pick just above the 176 original picks) (CS1b) and HSU extracted from two high quality continuous drilling (Bajc et al. 2015). A single pick represents the top of a HSU while a double pick represents the top of a HSU and the bottom of the overlying HSU as identified from the TI. Data locations are illustrated in Fig. 7-top.

Figures 10 and 11 show the strong effect of conditioning data on MCP realizations. Compared to NCS, adding a few data helps reproduce the main TI structures. Uncertainty is larger close to HSU contacts (Fig. 10c). HSU proportions variability is greatly reduced (Fig. 10d) compared to NCS. The differences between scenarios CS1a and CS1b are subtle, mainly visible in the leftmost part of the sections and on the dissimilarity maps where CS1a shows more variability with respect to the TI. Moreover the abundance of AFD4 is slightly better reproduced with CS1b than with CS1a and the HSU units appear laterally a bit more continuous.

We note that many HSU have mean proportions in the realizations different from the TI. For the three most abundant HSU (ATB2, ATD1 and bedrock) the mean proportions are less in CS1 realizations than in TI. Considering only the picks (i.e. excluding borehole data), the ATB2, ATD1 and bedrock HSU were also less abundant than in the TI (respectively 17.1–22.5, 23.5–27.1, 0.5–24% for ATB2, ATD1 and bedrock picks—TI). Hence, in the initial steps of MCP a simulated point remote from the boreholes will find fewer ATB2, ATD1 and bedrock data in its neighborhood than present in the TI. This could explain the observed disparity between MCP and TI HSU proportions.

Figure 12 shows four realizations obtained with CS1b of AFD4, ATE1, AFF1 and Bedrock HSU sub-ensemble. Notice variations in thickness of ATE1 and AFF1 HSU across the realizations. ATE1 has many direct contacts with the bedrock in the fourth realization but almost none in the second one. This could illustrate erosional events or local discontinuities. The 0/1 forcing capability of MCP preserves nevertheless the upward depositional sequence.

3.2.3 Conditional simulation with pseudo-data: CS2

In this scenario we do not use the available HD. Instead, 44 pseudo-data are extracted from the deterministic model by random sampling. Hence, the HSU proportions in pseudo-data and TI are similar. The comparison of results for the “balanced” case CS2 with the “unbalanced” case CS1a aims to assess the influence of differences between data and TI HSU proportions on global HSU proportions obtained in realizations.

Figure 13 shows the results for CS2. One can still observe differences between HSU proportions in the simulation and TI for AFB2, ATD1, AFD4, AFF1 and Bedrock, but the differences are globally reduced compared to CS1. Having conditioning data more evenly spread in the TI helps reduce the observed discrepancies in HSU proportions. However, it is not clear whether or not the observed proportion differences are statistically significant for both CS1 and CS2 when compared to proportions in TI.

3.2.4 Conditional simulation with data and pseudo-data: CS3

One source of commonly available geological information is the surficial geology for which reliable maps exist. A second source is the bedrock topography which can be interpolated reliably given the availability of all the boreholes archived in databases. These two sources can be used to provide pseudo-data that help constrain the simulated models.

Figure 14 presents the results of conditional simulation using pseudo-data from surficial geology (all 324 cells) and bedrock (37 cells) in addition to the HD set (see Fig. 7c). The most striking effect of the added pseudo-data is the reduction of variability observed on the boxplots compared to NCS and CS2.

3.2.5 Conditional simulation with HD and pseudo-data from TI: CS4

As indicated before and as used in CS2, the deterministic model can itself be a source of pseudo-data. The added pseudo-data from the TI helps to control the HSU proportions in the simulated models. However, their number must be kept small to preserve variability of the realizations.

Figure 15 presents an example using a small proportion of the TI (0.3%) obtained from a random sampling that adds 143 pseudo-data to the available HD. As with CS3, the main effect of added pseudo-data is to diminish strongly the variability of proportions among realizations.

3.3 Statistics of the different scenarios

The criteria C1 and C2 are shown in Table 2 for the five different scenarios. The NCS scenario has the most variability, it presents the largest C2 values. Adding data (CS1) or pseudo-data (CS3) unevenly distributed in the simulation field affects slightly the reproduction of HSU proportions compared to a more balanced sampling as shown by the higher C1 values for CS1 and CS3 compared to NCS, CS2 and CS4. Adding more data reduces the variability as indicated by statistic C2 lower for CS4 compared to S2 and CS1, and lower for CS3 than for CS1.

3.4 Illustration of local proportions variability

Maps of local proportions are computed over the TI and the realizations to help visualizing the reproduction of local trends (Fig. 16). Maps of average and standard deviations of the local proportions over the 100 realizations are also included. The maps represent the three most abundant units: ATB2, ATD1 and ATE1 for scenario CS1a. The results show that TI local proportions are well reproduced by MCP realizations and variability occurs close to HSU locations identified in the deterministic model.

CS1a: Local HSU proportions within 25 × 25 moving windows over central vertical section. First column: local mean in TI, second column: mean of proportions (over 100 realizations); third column: standard deviation of the local proportions across realizations. From top row to bottom row: three most abundant units, ATB2, ATD1 and ATE1

4 Discussion

MCP has been proposed recently as an efficient alternative to the computationally intensive BME for the simulation of fields showing complex unit arrangements and non-symmetric transition probabilities as are often found in sedimentary environments. It is based on the rather strong conditional independence hypothesis that assumes the unit at data points are independent once the unit at the estimation point is known. This assumption is more likely to be valid when working with small neighborhoods and conditioning data points evenly dispersed around the estimation point. Hence, we selected a localized octant search with a maximum of two points per octant and a maximum of 5 points over all octants.

Results by Allard et al. (2011) on a series of small datasets show no significant differences between BME and MCP. The same authors provide theoretical justifications for the use of MCP. However, to our knowledge, the method has never been tested on a complex model based on real geological data such as Simcoe hydrostratigraphic system which comprises 11 different HSU having quite different proportions and a clear directional control.

The 0/1 forcing property of MCP is determinant in reproducing the directional trends. This property can deal with transitional cases, like those presented in the synthetic and Simcoe County examples, without the need to impose directional trend with auxiliary fields as with PGS. Also, it is not necessary to segment the studied field into homogeneous subdomains. The field can be handled globally at once by MCP, which greatly facilitates the analysis.

The most practical and influential aspect in the application of MCP is the definition of the neighborhood. We obtained better results visually and as measured by the statistics C1 and C2 when using octant search and a multigrid approach. The number of neighbors and extent of the search should be large enough to avoid unit inversions in the simulated field, but small enough to favor variability between realizations. Good compromises between proportional reproduction and variability were obtained with five neighbors for both the synthetic and the Simcoe County test cases. Using more neighbors generally decreased too much C2. D’Or et al. (2001) reported that five neighbors was enough in BME to stabilize the C1 criterion. Our findings in this 3D case confirm those of Allard et al. (2011) that the number of neighbors need not to be large provided the neighbors are well spread around the simulated point.

Despite the care employed in the definition of the neighborhood, unit inversions can still seldom occur due to the peculiarities of the available HD and previously simulated points found in the neighborhood. Inversions can be corrected on the fly in the sequential simulation. The procedure consists to verify whether the candidate HSU at a simulated cell satisfies the vertical hydrostratigraphy depicted by the deterministic model. It compares the simulated cell with conditioning data and previously simulated cells in the same column. When in conflict with the hydrostratigraphy, the candidate HSU is discarded and the cell returned at the end of the list of remaining cells to simulate. In the Simcoe County test case, less than 0.3% of the points presented unit inversions, a small number considering that 11 units are present.

When the assumption of conditional independence is valid, MCP is unbiased by construction as one is then drawing sequentially from the true conditional distributions. When the conditional independence assumption does not hold completely then one is not drawing from the exact conditional distributions and nothing firm about the bias can be stated. However, our experimental results for the synthetic case (Fig. 2) clearly show absence of any substantial bias in both conditional and unconditional situations. In the more complex Simcoe test case example, Figs. 6d, 8d, 9d, 11d, 12d, and 13d indicate that the bias is less for the unconditional case (Fig. 6d) and for the random sampling of conditioning data (Figs. 11d, 13d), then for cases where a preferential sampling of HD is present (Figs. 8d, 9d, 12d). However, even in the cases of preferential sampling the bias between TI proportions and simulated proportions remains smaller (Fig. 8d) or even much smaller (Fig. 9d, 12d) than the bias observed in the preferential sampling (see Fig. 12d). The over-representation of bedrock and surficial HSU in CS3 compared to CS1 had no significant impact on the average simulated proportions. The additional data did reduce variability as expected (C2 smaller) without further distorting the average proportions (C1 comparable). Admittedly, whether these good results about bias and robustness to preferential sampling can be extrapolated to other different complex cases remains to be verified.

In the Simcoe County test case, the bivariate probabilities required for MCP were obtained by direct computation on the deterministic model. This model summarizes all the geological knowledge and available data in the area. This justified the use of TI as a source of pseudo-data to increase control of the unit proportions. Proportions of the different HSU in the deterministic model were assumed representative of the ground truth. In other applications or in earlier stages of application, the deterministic model could be unavailable. In these cases, a simpler conceptual model can be used as TI to provide the bivariate probabilities. However, no pseudo-data should be sampled directly from the conceptual model as the unit proportions and locations in the conceptual model are only loosely known. Pseudo-data from surficial geology or bedrock can still be used however, but one would be prudent to verify the robustness of simulated unit proportions relative to the quantity of pseudo-data added.

One reviewer, quoting the usual practice in MPS studies to borrow the TI from an external source to represent texture/structure, raised an important concern about the idea of using a deterministic model as the source for the TI. Most published MPS studies were done in the petroleum domain where the TI represents essentially borrowed analogous textures from external sources (e.g. satellite images, object-based simulation or process-based simulations). The textures are then conditioned to HD and modulated in space by combining with soft data coming from seismic survey. In other fields of study, such external sources and soft data do not exist. In mining for example each deposit is unique and complex and seismic data are usually not available or simply cannot identify mineralized zones. Possible sources for TIs could be in open pits the blast holes on a few benches located close to the area to simulate. The TI is then expected to be also close to the “target field” (Ortiz 2003). Another source in mining is a deterministic (geological) model (Boucher et al. 2014; Rezaee et al. 2014). In regional hydrogeology, the quasi-universal practice is to elaborate a deterministic model integrating all available information: sedimentary environment and geological context, known stratigraphy, water ages, geomorphology, surficial geology, few sampled ”quality” boreholes, the low quality boreholes registered in governmental data bases, pumping and tracer tests, borehole permeability tests and sample size grading, and few geophysical data sometimes available in part of the area. These soft informations are not of the same nature as seismic data in petroleum studies. They are complex, elusive, often rare and with very partial coverage, and represent a variety of supports, so they cannot be combined directly with a TI representing merely the texture, as in the petroleum case with seismic data. The available information have to be incorporated in the deterministic model thanks to the geologist’s knowledge. Despite all the expertise involved in the design of the deterministic model, it nevertheless remains a quite idealized and smoothed interpretation of the real field. If the stratigraphy and global proportions of each HSU may be well represented in the deterministic model, the HSU vertical thicknesses are likely much more variable than assumed. Similarly, lateral continuity of HSU is probably exaggerated in the deterministic model. As these thickness and lateral variations might influence significantly the flow response of the model, it is important to consider alternate models around the deterministic one to be able to assess flow uncertainty. This was the goal pursued in this paper.

5 Conclusion

This study has applied the MCP simulation method to both a synthetic and to the complex stratigraphic succession within a glacial sedimentary basin. The MCP simulation method has succeeded at simulating the complex depositional system involving many units and a clear directional trend inducing asymmetry between units transition probabilities. The method was shown to be unbiased in the non-conditional case. The different realizations obtained by MCP propose different field models around the overly smoothed deterministic model. The alternative models are essential inputs to assess uncertainty on groundwater flow and transport problems.

References

Allard D, D’Or D, Froidevaux R (2011) An efficient maximum entropy approach for categorical variable prediction. Eur J Soil Sci 62:381–393. https://doi.org/10.1111/j.1365-2389.2011.01362.x

Armstrong M, Galli A, Beucher H, Loc’h G, Renard D, Doligez B, Eschard R, Geffroy F (2011) Plurigaussian simulations in geosciences. Springer, Berlin

Arpat GB, Caers J (2007) Conditional simulation with patterns. Math Geol 39(2):177–203. https://doi.org/10.1007/s11004-006-9075-3

Bajc A, Mulligan R, Rainsford D, Webb J (2015) Results of 2011–13 overburden drilling programs in the southern part of the County of Simcoe, south-central Ontario; Ontario Geological Survey, Miscellaneous Release-Data 324. Techical report

Bogaert P (2002) Spatial prediction of categorical variables: the Bayesian maximum entropy approach. Stoch Environ Res Risk Assess 16(6):425–448. https://doi.org/10.1007/s00477-002-0114-4

Bogaert P, D’Or D (2002) Estimating soil properties from thematic soil maps: the Bayesian Maximum Entropy approach. Soil Sci Soc Am J 66(5):1492–1500. https://doi.org/10.2136/sssaj2002.1492

Boucher A, Costa JF, Rasera LG, Motta E (2014) Simulation of geological contacts from interpreted geological model using multiple-point statistics. Math Geosci 46(5):561–572. https://doi.org/10.1007/s11004-013-9510-1

Chilès J, Delfiner P (2012) Geostatistics: modeling spatial uncertainty, 2nd edn. Wiley, New York

Christakos G (1992) Random field models in earth sciences. Academic Press, San Diego. https://doi.org/10.1016/B978-0-12-174230-0.50005-6

D’Or D (2003) Spatial prediction of soil properties, the Bayesian Maximum Entropy approach. Ph.D. thesis, Université catholique de Louvain

D’Or D, Bogaert P (2004) Spatial prediction of categorical variables with the Bayesian Maximum Entropy approach: the Ooypolder case study. Eur J Soil Sci 55(4):763–775. https://doi.org/10.1111/j.1365-2389.2004.00628.x

D’Or D, Bogaert P, Christakos G (2001) Application of the BME approach to soil texture mapping. Stoch Environ Res Risk Assess 15(1):87–100. https://doi.org/10.1007/s004770000057

Jakeman A, Barreteau O, Hunt R, Rinaudo JD, Ross A (2016) Integrated groundwater management—concepts, approaches and challenges, vol 1. Springer, Berlin

Kullback S, Leibler RA (1951) On information and sufficiency. Ann Math Stat 22(1):79–86. http://www.jstor.org/stable/2236703

Le Blévec TL, Dubrule O, John CM, Hampson GJ (2017) Modelling asymmetrical facies successions using pluri-Gaussian simulations. Geostatistics Valencia 2016, quantitative geology and geostatistics. Springer, Cham, pp 59–75. https://doi.org/10.1007/978-3-319-46819-8_4

Li W (2007) Markov chain random fields for estimation of categorical variables. Math Geol 39(3):321–335. https://doi.org/10.1007/s11004-007-9081-0

Li W, Zhang C (2007) A random-path Markov chain algorithm for simulating categorical soil variables from random point samples. Soil Sci Soc Am J 71(3):656–668. https://doi.org/10.2136/sssaj2006.0173

Li W, Zhang C, Burt JE, Zhu AX, Feyen J (2004) Two-dimensional Markov chain simulation of soil type spatial distribution. Soil Sci Soc Am J 68(5):1479–1490. https://doi.org/10.2136/sssaj2004.1479

Marcotte D (1996) Fast variogram computation with FFT. Comput Geosci 22(10):1175–1186. https://doi.org/10.1016/S0098-3004(96)00026-X

Mariethoz G, Renard P, Straubhaar J (2010) The direct sampling method to perform multiple-point geostatistical simulations. Water Resour Res 46(11):W11536. https://doi.org/10.1029/2008WR007621

Molson JW, Frind EO (2012) On the use of mean groundwater age, life expectancy and capture probability for defining aquifer vulnerability and time-of-travel zones for source water protection. J Contam Hydrol 127(1–4):76–87. https://doi.org/10.1016/j.jconhyd.2011.06.001

Ortiz JM (2003) Characterization of high order correlation for enhanced indicator simulation. Ph.D. thesis, University of Alberta, Edmonton, Alberta, Canada

Orton TG, Lark RM (2007) Accounting for the uncertainty in the local mean in spatial prediction by Bayesian Maximum Entropy. Stoch Environ Res Risk Assess 21(6):773–784. https://doi.org/10.1007/s00477-006-0089-7

Ravalec ML, Noetinger B, Hu LY (2000) The FFT moving average (FFT-MA) generator: an efficient numerical method for generating and conditioning Gaussian simulations. Math Geol 32(6):701–723. https://doi.org/10.1023/A:1007542406333

Refsgaard JC, Christensen S, Sonnenborg TO, Seifert D, Højberg AL, Troldborg L (2012) Review of strategies for handling geological uncertainty in groundwater flow and transport modeling. Adv Water Resour 36:36–50. https://doi.org/10.1016/j.advwatres.2011.04.006

Rezaee H, Asghari O, Koneshloo M, Ortiz JM (2014) Multiple-point geostatistical simulation of dykes: application at Sungun porphyry copper system, Iran. Stoch Environ Res Risk Assess 28(7):1913–1927. https://doi.org/10.1007/s00477-014-0857-8

Rezaee H, Marcotte D, Tahmasebi P, Saucier A (2015) Multiple-point geostatistical simulation using enriched pattern databases. Stoch Environ Res Risk Assess 29(3):893–913. https://doi.org/10.1007/s00477-014-0964-6

Serre ML, Christakos G (1999) Modern geostatistics: computational BME analysis in the light of uncertain physical knowledge—the Equus Beds study. Stoch Environ Res Risk Assess 13(1–2):1–26. https://doi.org/10.1007/s004770050029

Serre ML, Christakos G, Li H, Miller CT (2003) A BME solution of the inverse problem for saturated groundwater flow. Stoch Environ Res Risk Assess 17(6):354–369. https://doi.org/10.1007/s00477-003-0156-2

Strebelle S (2002) Conditional simulation of complex geological structures using multiple-point statistics. Math Geol 34(1):1–21. https://doi.org/10.1023/A:1014009426274

Tran TT (1994) Improving variogram reproduction on dense simulation grids. Comput Geosci 20(7):1161–1168. https://doi.org/10.1016/0098-3004(94)90069-8

Zhou Y, Li W (2011) A review of regional groundwater flow modeling. Geosci Front 2(2):205–214. https://doi.org/10.1016/j.gsf.2011.03.003

Acknowledgements

Constructive comments from two anonymous reviewers were helpful improving the manuscript. In particular, one reviewer suggested to us the idea of comparing MCP to Gaussian simulator as in Sect. 2.4. The authors thank A. Bolduc, Y. Michaud and H. Russell for their support from the Groundwater Geoscience Program, Geological Survey of Canada, Natural Resources Canada. Research was partly financed by NSERC (RGPIN-2015-06653).

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Benoit, N., Marcotte, D., Boucher, A. et al. Directional hydrostratigraphic units simulation using MCP algorithm. Stoch Environ Res Risk Assess 32, 1435–1455 (2018). https://doi.org/10.1007/s00477-017-1506-9

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00477-017-1506-9