Abstract

The goal of this article is to provide basic modeling and simulation techniques for systems of multiple interacting Unmanned Aerial Vehicles, so called “swarms”, for applications in mapping. Also, the paper illustrates the application of basic machine-learning algorithms to optimize their information gathering. Numerical examples are provided to illustrate the concepts.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The advances in Unmanned Aerial Vehicles (UAV) technologies have the potential to revolutionize rapid mapping capabilities of complex environments to directly benefit society. Furthermore, advances in manufacturing, three dimensional (3D) printing, embedded microscale and nanoscale electronics, Lidar (Light Detection and Ranging), hyperspectral cameras and associated technologies have made the production of sophisticated multi-UAV systems, so-called “swarms”, for mapping very economical. One key enabling technology for such systems is rapid, adaptive, path planning for such systems. Accordingly, the objective of this paper is to develop relatively simple mechanistic models and numerical solution strategies for the direct simulation of path planning of swarms, which can be achieved with relatively standard laptop-level computing equipment for deployed use in the field. The models developed here are simple enough to be computed several thousand times per hour, thus enabling machine-learning algorithms to optimize the multi-UAV system performance.

2 A brief history of UAVs/drones

Following a review found in Zohdi [1], in 2019, the use of UAVs range from (a) hobbyists, (b) mid-sized military and commercial applications and (c) large-scale military vehicles and (d) stealth combat vehicles. Issues include (1) structural design, (2) power supply, (3) onboard computing, (4) sensing devices, (5) generated acoustics, (6) data acquisition software, (7) control algorithms, (8) communications and (9) autonomy. Research on UAVs started in the early 1900s. Initially, most of the research was geared towards military applications. This research accelerated moderately during World War II, to train antiaircraft gunners and to fly attack missions. However, with the exception of the V-2 (Vergeltungswaffe) rocket system program in Germany, they were primarily “toy” airplanes. It was not until the 1960’s, during the cold war, when the US was involved in a variety of military conflicts and the US Air Force was concerned about losing pilots over hostile territory, that UAV research started to grow rapidly. As of 2012, the US Air Force operated approximately 7500 UAVs. As of 2017, nearly every industrialized country has a growing UAV manufacturing base.

Due to a steady increase in inexpensive Unmanned Aerial Vehicle (UAV) and camera technology, there are a wide variety of non-military applications, such as world-wide anti-poaching and anti-whaling efforts. Furthermore, for example in oil and gas exploration, UAVs have been used for geophysical mapping, in particular geomagnetic surveys, where measurements of the Earth’s varying magnetic field strength are used to calculate the nature of the underlying magnetic rock structure, in order to locate mineral deposits. Because of the huge expanse associated with oil and gas pipelines, monitoring activity can be enhanced and accelerated by deployment of UAVS. In the field of archaeology, drones are used to accelerate surveying to protect sites from looters. Another obvious application is cargo transport, which has been promoted by Amazon, DHL, Google, etc. The use of UAVs in agriculture is also obvious for crop dusting and crop health monitoring.

However, the overarching goal of all of these applications is the real-time mapping of large areas, such as those struck by after a multi-location disaster, such as an earthquake, fire, tsunami, etc., by multiple UAV’s; so-called “swarms”. Because of the complex multifaceted infrastructure that needs to be mapped (roads, bridges, pipelines, power grid and water) after a disaster, there exists the need for different mapping strategies (Fig. 1). Such sectors need to be mapped with different technologies (infrared, RF, optical, microwave, etc). Small UAVs are usually battery powered (Fig. 1), thus they have limited range and their paths must be planned carefully to conserve power. Specifically, the objective of this work is to provide an introduction to the basic modeling and simulation techniques for multiple interacting UAVs for a target audience of young scientists. Specifically, simultaneous advances in inexpensive UAVs, computational modeling techniques, camera and sensor technologies have made rapid post-disaster mapping a potential reality. One motivator for this research is the monitoring/maintenance of ultra-large facilities, such as industrial-scale solar farms. However, an over-arching key motivator that is driving multiple-UAV system development is large-area mapping for disaster mitigation and management, for example, the devastating 2017 Sonoma/Napa fires and the 2018 Paradise fires in California. In terms of disaster response, integration of UAVs, advanced sensing, communications and rapid simulation techniques with fire-fighting to develop large-scale rapid-response systems for emergency fire control, management and risk assessment is of high interest. A key objective is to develop paradigms that provide accurate, real-time feedback to deployed firefighters. A core issue across all domains of application is the ability of a system to adapt to rapid changes in the environment and system capabilities by autonomously modifying tasks and relationships with other agents, and then to apply various data-collection techniques. Oftentimes, autonomous capabilities will be necessary for continued operations due to the large distances, resulting communications delay, and lack of 24/7 connectivity to the systems (a function of limited ground stations in the tracking, telemetry, and commanding network). These factors can be incompatible with human reaction/decision times.

Simultaneous advances in inexpensive UAVs, computational modeling techniques, camera and sensor technologies have made rapid pre- and post-disaster mapping of complex terrain a reality. Agent-based paradigms for simulation of coupled complex systems have become powerful predictive tools. Because different infrastructures have different grids and different quantities to be mapped, the optimal path for a set of released swarms will vary over the same terrain. It is relatively easy to develop so-called agent-based models for a team of swarm-members (UAVs) intending to map large areas with various optimality conditions: minimum time, minimum energy usage, optical sensing, infrared sensing, acoustic sensing, water spillage sensing, etc. Although UAV’s in themselves are of interest, the long-term goal is coordination of large-numbers of them, so-called “swarms”, which is the focus of this work. At the end of this paper, ancillary technologies, such as UAV integration and Lidar are also discussed, with an eye towards systems which blend artificial intelligence, machine learning, and software analytics with data to create living digital computer models that can update and change in tandem with their physical counterparts.

Remark

It is important to note that new FAA regulations require eligible owners to register their UAV’s prior to flight. For owners less than 13 years old, a parent or other responsible person must file an FAA registration form, and the UAV’s must have an FAA-issued registration number. In June 2016, the FAA announced regulations for commercial operation of small UAVs, those between 0.55 and 55 pounds (about 0.250–25 kg), including payload, which require the onsite presence of licensed Remote Pilot in Command (above 16 years of age). Because of the growing use of UAVs, privacy concerns have mounted, and led to property owners shooting down such vehicles which enter their airspace. Shooting down a drone is illegal, since the debris can harm people below. Such vehicles are shot down at a rate of once a month in the US (in 2016). In Zohdi [2] the dynamical response of a quadcopter to a series of random external impulses, such as from shotgun pellets, was formulated using a Discrete Element Method (DEM), and allowed one to compute trajectories and the distribution of the debris field. This is potentially useful in settling disputes over location of drones at the time of shooting. This topic is outside of the scope of the current paper, however, we refer interested readers to Zohdi [2].

3 Modeling and rapid simulation of swarms

The origins of swarm modeling has origins in the description of biological groups (flocks of birds, schools of fish, crowds of human beings, etc.) responses to to predators or prey [3]. We focus on decentralized paradigms where there is no leader, making the overall system less vulnerable. Early approaches that rely on decentralized organization can be found in Beni [4], Brooks [5], Dudek et al [6], Cao et al [7], Liu and Passino [8] and Turpin et al [9]. Usual models incorporate a tradeoff between long-range interaction and short-range repulsion between individuals, dependent on the relative distance between individuals (see Gazi and Passino [10], Bender and Fenton [11] or Kennedy and Eberhart [12]). The most basic model is to treat each individual as a point mass [13], which we adopt here, and to allow the system to evolve, based on Newtonian mechanics, using a combination of short-range and long-range interaction forces [10,11,12,13,14,15].Footnote 1

Remark

For some creatures, the “visual field” of individuals may play a significant role, while if the agents are robots or UAVs, the communication can be electronic. However, in some systems, agents interact with a specific set of other agents, regardless of whether they are far away [20]. This appears to be the case for Starlings (Sturnus vulgaris). In Ballerini et al [21], the authors concluded, that such birds communicate with a certain number of birds surrounding it and that that interactions are governed by topological distance and not metric distance. Interested readers are referred to Ballerini et al [21].

3.1 Notation

Throughout the analysis, the objects are assumed to be small enough to be considered (idealized) as point-masses and that the effects of their rotation with respect to their mass center is considered unimportant to their overall motion. Boldface symbols imply vectors or tensors. A fixed Cartesian coordinate system will be used throughout this work. The unit vectors for such a system are given by the mutually orthogonal triad of unit vectors \((\varvec{e}_1, \varvec{e}_2, \varvec{e}_3)\). We denote the position of a point (swarm) in space by the vector \(\varvec{r}\). In fixed Cartesian coordinates we have

and for the velocity we have

and acceleration we have

3.2 Construction of a swarm

In the analysis to follow, we treat the swarm members as point masses, i. e. we ignore their dimensions (Fig. 2). For each swarm member (\(N_s\) in total) the equations of motion are

where \(\varvec{\Psi }^{tot}_i\) represents the total forces acting on a swarm member i, \(\varvec{N}^{mt}_i\) represents the interaction between swarm member i and targets to be mapped, \(\varvec{N}^{mo}_i\) represents the interaction between swarm member i and obstacles and \(\varvec{N}^{mm}_i\) represents the interaction between swarm member i and other members. In order to illustrate the overall computational framework, we focus on a model problem having a sufficiently large parameter set which allows for complex dynamics. The parameters optimized to drive the system towards desired behavior via a Machine-Learning algorithm. This approach can be used on a variety of models.

4 Characterization of interaction

4.1 Member-target interaction

Consider member-target interaction

where \(\varvec{T}_j\) is the position vector to target j and the direction to each target is

For each swarm-member (i), we compute a weighted direction to each target

where the \(w_{ti}\) are weights reflecting the importance of the target, \(a_i\) are decay parameters, which is summed (and normalized later in the analysis) to give an overall direction to move towards

4.2 Member-obstacle interaction

Now consider member-obstacle interaction

where \(\varvec{O}_j\) is the position vector to obstacle j and the direction to each obstacle is

For each swarm-member (i), we compute a weighted direction to each obstacle

where the \(w_{oi}\) are weights reflecting the importance of the obstacle, \(b_i\) are decay parameters, which is summed (and normalized later in the analysis) to give an overall direction to move towards

4.3 Member-member interaction

Now consider member(i)–member(j) interaction

and the direction to each swarm-member

For each swarm-member (i), we compute a weighted direction to each swarm-member

where the \(w_{mi}\) are weights reflecting the importance of the members, \(c_i\) are decay parameters, which is summed (and normalized later in the analysis) to give an overall direction to move towards

4.4 Summation of interactions

We now aggregate the contributions by weighting their overall importance with weights for swarm-member/target interaction, \(W_{mt}\), swarm-member/obstacle interaction, \(W_{mo}\) and swarm-member/swarm-member interaction, \(W_{mm}\):Footnote 2

normalize the result

The forces are then constructed by multiplying the thrust force available by the UAV propulsion system, \(F_i\), by the overall normal direction

We then integrate the equations of motion:

yielding

and

Note that if

then we define \(\varvec{v}^{old}_i(t+\Delta t)=\varvec{v}_i(t+\Delta t)\) and the velocity is rescaled

with \(\varvec{v}_i(t+\Delta t)=\varvec{v}^{new}_i(t+\Delta t)\). We then determine if any targets have been mapped by checking the distance between swarm-members and targets

For any \(\varvec{T}_j\), if any swarm-member has satisfied the criteria, then take \(\varvec{T}_j\) out of the system for the next time step so that no swarm-member wastes resources by attempting to map \(\varvec{T}_j\). Similarly, if \(\Vert {\varvec{r}}_i -{\varvec{O}}_j \Vert \le \) Tolerance, then \({\varvec{r}}_i\) is immobilized. This stops the ith swarm-member from contributing further to the mapping. The entire process is then repeated for the next time step.

5 An algorithm for mapping of a region

To “map” a region, consider the following algorithm:

- 1.

Initialize the locations of the targets to be mapped: \(\varvec{T}_i=(T_x,T_y,T_z)_i\), i=1, 2,...\(N_T\)=targets.

- 2.

Initialize the locations of the obstacles to be mapped: \(\varvec{O}_i=(O_x,O_y,O_z)_i\), i=1, 2,...\(N_O\)=obstacles.

- 3.

Initialize the locations of the swarm-members (UAVs): \(\varvec{r}_i=(r_x,r_y,r_z)_i\), i=1, 2,...\(N_s\)=swarm-members.

- 4.

For each swarm-member (i), determine the distance and directed normal to each target, obstacle and other swarm-members.

- 5.

For each swarm-member (i), determine interaction functions \(\varvec{N}^{mt}_{i}\), \(\varvec{N}^{mo}_{i}\), \(\varvec{N}^{mt}_{i}\) and \(\varvec{n}^*_i\).

- 6.

For each swarm-member (i), determine force acting upon it, \(\varvec{\Psi }^{tot}_{i}=F_i\varvec{n}^*_i\).

- 7.

For each swarm-member (i), integrate the equations of motion (checking constraints) to produce \(\varvec{v}_i(t+\Delta t)\) and \(\varvec{r}_i(t+\Delta t)\).

- 8.

Determine if any targets have been mapped by checking the distance between swarm-members and targets

$$\begin{aligned} ||\varvec{r}_i-\varvec{T}_j||\le Tolerance. \end{aligned}$$(5.1)For any \(\varvec{T}_j\), if any swarm-member has satisfied the this criteria, then take \(\varvec{T}_j\) out of the system for the next time step so that no swarm-member wastes resources by attempting to map \(\varvec{T}_j\). As indicated before, if \(\Vert {\varvec{r}}_i -{\varvec{O}}_j\Vert \le \) Tolerance, then \({\varvec{r}}_i\) is immobilized.

- 9.

The entire process is then repeated for the next time step.

6 Preliminary numerical example

As a preliminary example, consider the following parameters:

Mass \(=10\) kg,

100 swarm-members,

100 targets,

100 obstacles,

\(T=30\) s,

\(\Delta t=0.001\) s,

Initial swarm velocity, \(\varvec{v}_i(t=0)=\mathbf{0}~\hbox {m/s}\),

Initial swarm domain (10,10,10) meters,

Thrust force available by the UAV propulsion system, \(F_i=10^6~\hbox {Nt}\),

Domain to be mapped (500,500,10) meters,

Maximum velocity swarm-member \(v_{max}=100~\hbox {m/s}\).

The “design” vector of system parameter inputs

was given by a randomly generated vector

in the following intervals:

Overall weights: \(0\le W_{mt}, W_{mo}, W_{mm} \le 10\),

Target weights: \(0\le w_{t1}, w_{t2} \le 1\),

Obstacle weights: \(0 \le w_{o1}, w_{o2} \le 1\),

Member weights: \(0 \le w_{m1}, w_{m2} \le 1\) and

Decay coefficients: \(0\le a_1, a_2 \le 1\), \(0\le b_1, b_2 \le 1\), \(0\le c_1, c_2 \le 1\).

We allowed a long enough time to map the whole domain (30 seconds). The results are shown in Figs. 3 and 4. The sequences of mapping the model problem show initially green (unmapped) targets, which are marked as blue after they are mapped. The vectors on the swarm-members represent the velocities. The algorithm is quite adept in picking up missed targets by successive sweeps. We note that as the targets get mapped, they are dropped from the system, and the swarm-members naturally aggregate to the targets that are remaining. The decisions needed to deploy and operate such systems optimally, require guidance from real-time modeling and simulation that the preceeding model can provide, if the parameters are known, which is the topic of the next section.

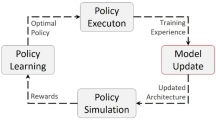

7 Machine-learning for rapid UAV path planning

There are many parameters in the system, warranting the use a Machine-Learning Algorithm. Here we follow Zohdi [22,23,24] in order to optimize behavior by minimizing a cost function. For example, let us consider minimizing the following cost function

where A represents the percentage of targets remaining to mapped, \(0\le T_m\le 1\) represents the percentage time (normalized by the maximum simulation time) for this event to occur. In other words, the system is being driven to the parameters generating the best case scenario. The design vector of system parameters is:

Cost functions associated with optimization of complex behavior are oftentimes nonconvex in design parameter space and often nonsmooth, as is the case for the system of interest. Their minimization is usually difficult with direct application of gradient methods. This motivates nonderivative search methods, for example those found in Machine-Learning Algorithms (MLA’s). One of the most basic subset of MLA’s are so-called Genetic Algorithms (GA’s). Typically, one will use a GA first in order to isolate multiple local minima, and then use a gradient based algorithm in these locally convex regions or reset the GA to concentrate its search over these more constrained regions. GA’s are typically the simplest scheme to start the analysis, and one can, of course, use more sophisticated methods if warranted. For a review of GA’s, see the pioneering work of John Holland (Holland [25]), as well as Goldberg [26], Davis [27], Onwubiko [28], Lagaros et al. [29], Papadrakakis et al. [30,31,32,33] and Goldberg and Deb [34]. The GA approach is extremely well-suited for nonconvex, nonsmooth, multicomponent, multistage systems, and involves the following essential concepts:

- 1.

POPULATION GENERATION: Generate system population: \(\varvec{\Lambda }^{i}{\mathop {=}\limits ^\mathrm{def}}\{\Lambda ^i_1, \Lambda ^i_2, \Lambda ^i_3, \Lambda ^i_4,\ldots , \Lambda ^i_N\}=\{interaction~ parameters, \ldots ,etc.\}^i\)

- 2.

PERFORMANCE EVALUATION: Compute fitness/performance of each genetic string: \(\Pi (\varvec{\Lambda }^i)\) and rank them \((i=1,\ldots , N)\)

- 3.

MATING PROCESS: Mate pairs/produce offspring: \(\varvec{\lambda }^i {\mathop {=}\limits ^\mathrm{def}}\Phi ^{(I)} \varvec{\Lambda }^{i}+(1-\Phi ^{(I)}) \varvec{\Lambda }^{i+1}\) where \(0\le \Phi \le 1\) (Fig. 5)

- 4.

GENE ELIMINATION: Eliminate poorly performing genetic strings, keep top parents and generated offspring

- 5.

POPULATION REGENERATION: Repeat the process with the new gene pool and new random genetic strings

- 6.

SOLUTION POST-PROCESSING: Employ gradient-based methods afterwards in the local “valleys”-if smooth enough

- (i)

Algorithmic specifics

Following Zohdi [22,23,24], the algorithm is as follows:

STEP 1: Randomly generate a population of S starting genetic strings, \(\varvec{\Lambda }^i, (i=1,2, 3,\ldots , S):\)

$$\begin{aligned} \varvec{\Lambda }^{i}&{\mathop {=}\limits ^\mathrm{def}}\{\Lambda _1, \Lambda _2\ldots \Lambda _N\}\\&=\{W_{mt},W_{mo},W_{mm}, w_{t1},w_{t2},w_{o1},w_{o2},w_{m1},\\&\qquad w_{m2},a_1,a_2,b_1,b_2,c_1,c_2\}. \end{aligned}$$STEP 2: Compute fitness of each string \(\Pi (\varvec{\Lambda }^{i})\), (i=1,..., S)

STEP 3: Rank genetic strings: \(\varvec{\Lambda }^{i}\), (i=1,..., S)

STEP 4: Mate nearest pairs and produce two offspring, (i=1,..., S)

$$\begin{aligned}&\varvec{\lambda }^i {\mathop {=}\limits ^\mathrm{def}}\Phi ^{(I)} \varvec{\Lambda }^{i}+(1-\Phi ^{(I)}) \varvec{\Lambda }^{i+1}, \\&\varvec{\lambda }^{i+1} {\mathop {=}\limits ^\mathrm{def}}\Phi ^{(II)} \varvec{\Lambda }^{i}+(1-\Phi ^{(II)}) \varvec{\Lambda }^{i+1} \end{aligned}$$STEP 5: Eliminate the bottom \(M<S\) strings and keep top \(K<N\) parents and top K offspring (\(K \hbox { offspring}+K \hbox { parents}+M=S\))

STEP 6: Repeat STEPS 1-6 with top gene pool (K offspring and K parents), plus M new, randomly generated, strings

NOTE: \(\Phi ^{(I)}\) and \(\Phi ^{(II)}\) are random numbers, such that \(0 \le \Phi ^{(I)}\le 1\), \(0\le \Phi ^{(II)} \le 1\), which are different for each component of each genetic string

OPTION: Rescale and restart search around best performing parameter set every few generations

REMARK: The system parameter search is conducted within the constrained ranges of \(\Lambda _1^{(-)} \le \Lambda _1 \le \Lambda _1^{(+)}\), \(\Lambda _2^{(-)} \le \Lambda _2 \le \Lambda _2^{(+)}\) and \(\Lambda _3^{(-)} \le \Lambda _3 \le \Lambda _3^{(+)}\), etc., etc. These upper and lower limits would, in general, be dictated by what is physically feasible.

7.1 Model problem

We applied the Machine-Learning Algorithm and reduced the allowable simulation time from \(T=30\) seconds (as in the previous example) to \(T=10\) s, in order to make it difficult find parameter sets that deliver optimal performance. Shown are the best performing gene (design parameter set, in red) as a function of successive generations, as well as the average performance of the population of the top four genes (designs, in green). The design parameters were optimized over the following intervals:

Overall weights: \(0\le W_{mt}, W_{mo}, W_{mm} \le 10\),

Target weights: \(0\le w_{t1}, w_{t2} \le 1\),

Obstacle weights: \(0 \le w_{o1}, w_{o2} \le 1\),

Member weights: \(0 \le w_{m1}, w_{m2} \le 1\) and

Decay coefficients: \(0\le a_1, a_2 \le 1\), \(0\le b_1, b_2 \le 1\), \(0\le c_1, c_2 \le 1\).

We used the following MLA settings:

Population size per generation: 20,

Number of parents to keep in each generation: 4,

Number of children created in each generation: 4,

Number of completely new genes created in each generation: 12

Number of generations for readaptation around a new search interval:10

Number of generations: 120. and

\(w_1 = 1, w_2 = 0.0001\) in Eq. 7.1

Machine learning output, generation after generation-the reduction of the cost function (\(\Pi \)) for the 15 parameter set is shown. This cost function represents the percentage of unmapped sites in the zone of interest. Shown are the best performing gene (red) as a function of successive generations, as well as the average performance of the population of genes (green)

The algorithm was automatically reset every 10 generations. The entire 120 generation simulation, with 20 genes per evaluation (2400 total designs) took on the order of 4 minutes on a laptop, making it ideal as a design tool. Figure 6 (average of all genes performance and top gene performance) and Table 1 (values of the gene components) illustrate the results. The MLA/GA is able to home in of a variety of possible designs, including the one corresponding to the original set of parameters that generated the observations and alternatives that achieve virtually the same results. This allows system designers to more flexibility in parameter selection. We note that, for a given set of parameters, a complete simulation takes on the order of 0.1 seconds, thus over 36,000 parameter sets can be evaluated in an hour, without even exploiting the inherent parallelism of the MLA.

Remark 1

If one wishes to have more detailed descriptions beyond a point mass model (for example a quadcopter), one must augment the balance of linear momentum (\(\varvec{G}_{cm, i}\))

with a balance of angular momentum (\(\varvec{H}_{cm,i}\)), given by

where \(\varvec{M}^{tot}_{cm,i}\) is the total external moment about the center of mass, \(\overline{\mathcal{I}}_i\) is the mass moment of inertia and \(\varvec{\omega }_i\) is the angular velocity. There are a number of numerical methods that are capable handling complex interaction of multiple vehicles, for example see Zohdi [2]. Another issue that was not taken into account are the details on the actuation and motor control that appear in the models as simply “attraction” and “repulsion”. For example, for detailed modeling of the dynamics and control of UAVs we refer the reader to Mueller and D’Andrea [35, 36], Mueller et al. [37], Hehn et al [38], Houska et al [39] and Zohdi [2].

Remark 2

In many applications, the computed positions, velocities and accelerations of the members of a swarm, for example people or vehicles, must be translated into realizable movement. Furthermore, the communication latency and information exchange poses a significant technological hurdle. In practice, further sophistication, i.e. constraints on movement and communication, must be embedded into the computational model for the application at hand. However, the fundamental computational philosophy and modeling strategy should remain relatively unchanged.

Remark 3

One could reformulate the cost function to minimized energy usage, incorporating the range and performance of actual UAV’s (see Taglibue et al. [40] and Holda et al [41]).

8 Summary and extensions

A key for multi-UAV technology to flourish are efficient mapping algorithms. In this regard, agent-based algorithms are a viable approach. Agent-based paradigms for simulation of coupled complex systems have become powerful predictive tools. One of the main proposed uses of multi-UAV systems has been the deployment to help fight fires. Thus, in closing, we highlight some ongoing technological issues a that are being pursued in order make such systems a robust reality. In this regard, it is important to fully embrace mobile computing, rapid telecommunication and hyperspectral sensing in harsh environments from high- and/or lower-altitude UAVs, and ground vehicles with “humans in the loop”. An important part of autonomy is energy autonomy, which is especially crucial in the performance of aerial vehicles and all deployed devices. The ability of such systems to adapt their behavior in response to energy consumption will allow them to perform longer. Algorithmic approaches to this problem are of particular interest, as they may often be retrofitted onto existing platforms or added to new platforms at negligible per-unit cost. For example, UAVs equipped with cameras can collect videos or images for pre-emptive strategic analysis. Since the swarm would include UAVs to enable visible inspection with the cameras, one can implement a deep learning approach for image/video pattern/feature recognition. In this regard, remote sensing has become an integral part of UAV-based imaging systems. In particular, Lidar (light detection and ranging) and time-of-flight signal processing have become key tools. Lidar usually uses light in the high-frequency ultraviolet, visible and near infrared spectrum [42,43,44,45,46]). It is classified as a “time-of-flight” type technology, utilizing a pulse of light and the time of travel to determine the relative distance of an object. Over the last 20 years, these devices have steadily improved and have become quite lightweight [47,48,49,50,51,52,53]. There are a variety of time-of-flight technologies that have been developed, primarily for military reasons, of which Radar, Sonar and Lidar are prime examples. The various types range from (1) conventional radar, (2) laser/radar altimeters, (3) ultrasound/sonar/seismograms, (4) radiometers and photometers-which measure emitted radiation, (5) hyperspectral cameras, where each pixel has a full spectrum and (6) geodetic-gravity detection, etc. For example, from satellites, the spatial resolution is on the order of pixel-sizes of 1–1000 m using infrared wavelengths of 700–2100 nm. Hyperion-type cameras have even a broader range, 400–2500 nm with 200 bands (channels) and 100 nm per band. For example, thermographic/infrared cameras, form a heat-zone image (700–14,000 nm), however, the focusing lens cannot be glass, and are typically made of germanium or sapphire. These devices are fragile and require coatings, making them expensive. There are two main thermographic camera types: (a) cameras using cooled infrared detectors, which need specialized semiconductors, and have a relatively high resolution and (b) cameras using uncooled detectors, sensors and thermo-electronic resistance, which have relatively lower resolution. Furthermore, the initial image is monochrome, and must be color-mapped. Additionally, there are a variety of “corrective” measures (post-processors), such as (1) radiometric corrections, which correct the illumination for material properties, (2) topographic corrections, which correct the reflectivity due to shade, sunniness, etc. and (3) atmospheric corrections, which correct for atmospheric haze. There is a wide range of satellites available, such as Landsat, Nimbus (Weather), Radarsat, UARS (Civil, Research and Military), etc., which utilize these technologies. Typically, Lidar will employ thousands of narrow pulses per second to scan a domain, which can be time-consuming (Fig. 7). Accordingly, wide-area flash pulse Lidar has started to become popular. In addition to their speed, flash-type cameras/scanners have some advantages because:

The systems are simple, since they do not have moving parts associated with a scanner, and can thus be made very compact.

The systems measure the entire surface in a single pulse, hence they are fast and can be used in real time and

The systems do not require sophisticated post-processing units and are therefore inexpensive.

However, the greatest problems arise from multiple reflections of a pulse from a nonconvex surface, which can ruin time-of-flight calculations and other subsequent post processing. This is a subject of current investigation by the author, and we refer the reader to Zohdi [54] for more details.

Notes

There are other modeling paradigms, for example mimicing ant colonies [16] which exhibit foraging-type behavior and trail-laying-trail-following mechanisms for finding food sources (see Kennedy and Eberhart [12] and Bonabeau et. al [16], Dorigo et. al, [17], Bonabeau et. al [16], Bonabeau and Meyer [18] and Fiorelli et al [19]).

The parameters in the model will be optimized shortly.

References

Zohdi TI (2018) Multiple UAVs for Mapping: a review of basic modeling, simulation and applications. Ann Rev Environ Resour. https://doi.org/10.1146/annurev-environ-102017-025912

Zohdi TI (2017) On the dynamics and breakup of quadcopters using a discrete element method framework. Comput Methods Appl Mech Eng 327:503–521

Breder CM (1954) Equations descriptive of fish schools and other animal aggregations. Ecology 35(3):361–370

Beni G (1988) The concept of cellular robotic system. In: IEEE international symposium on intelligent control, pp 57–62

Brooks RA (1991) Intelligence without reason. In: Proceedings of the international joint conference on artificial intelligence (IJCAI-91), pp 569–595

Dudek G, Jenkin M, Milios E, Wilkes D (1996) A taxonomy for multi-agent robotics. Auton Robots 3:375–397

Cao YU, Fukunaga AS, Kahng A (1997) Cooperative mobile robotics: antecedents and directions. Auton Robots 4(1):7–27

Liu Y, Passino KM (2000) Swarm intelligence: literature overview. Technical report, Ohio State University

Turpin M, Michael N, Kumar V (2014) Capt: concurrent assignment and planning of trajectories for multiple robots. Int J Robot Res. https://doi.org/10.1177/0278364913515307

Gazi V, Passino KM (2002) Stability analysis of swarms. In: Proceedings of the American control conference. Anchorage, AK May 8–10

Bender J, Fenton R (1970) On the flow capacity of automated highways. Transp Sci 4:52–63

Kennedy J, Eberhart R (2001) Swarm intelligence. Morgan Kaufmann Publishers, Burlington

Zohdi TI (2003) Computational design of swarms. Int J Numer Methods Eng 57:2205–2219

Zohdi TI (2009) Mechanistic modeling of swarms. Comput Methods Appl Mech Eng 198(21–26):2039–2051

Zohdi TI (2017) An agent-based computational framework for simulation of competing hostile planet-wide populations. Comput Methods Appl Mech Eng. https://doi.org/10.1016/j.cma.2016.04.032

Bonabeau E, Dorigo M, Theraulaz G (1999) Swarm intelligence: from natural to artificial systems. Oxford University Press, New York

Dorigo M, Maniezzo V, Colorni A (1996) Ant system: optimization by a colony of cooperating agents. IEEE Trans Syst Man Cybern Part B 26(1):29–41

Bonabeau E, Meyer C (2001) Swarm intelligence: a whole new way to think about business. Harv Bus Rev 79(5):106–114

Fiorelli E, Leonard NE, Bhatta P, Paley D, Bachmayer R, Fratantoni DM (2004) Multi-auv control and adaptive sampling in monterey bay. In: Autonomous underwater vehicles, 2004 IEEE/OES, pp 134–147

Feder T (2007) Statistical physics is for the birds. Phys Today 60:28–29

Ballerini M, Cabibbo N, Candelier R, Cavagna A, Cisbani E, Giardina I, Lecomte V, Orlandi A, Parisi G, Procaccini A, Viale M, Zdravkovic V (2008) Interaction ruling animal collective behavior depends on topological rather than metric distance: evidence from a field study. PNAS 105(4):1232–1237

Zohdi TI (2003) Genetic design of solids possessing a random-particulate microstructure. Philos Trans R Soc Math Phys Eng Sci 361(1806):1021–1043

Zohdi TI (2017) Dynamic thermomechanical modeling and simulation of the design of rapid free-form 3D printing processes with evolutionary machine learning. Comput Methods Appl Mech Eng. https://doi.org/10.1016/j.cma.2017.11.030

Zohdi TI (2018) Electrodynamic machine-learning-enhanced fault-tolerance of robotic free-form printing of complex mixtures. Comput Mech. https://doi.org/10.1007/s00466-018-1629-y

Holland JH (1975) Adaptation in natural and artificial systems. University of Michigan Press, Ann Arbor

Goldberg DE (1989) Genetic algorithms in search, optimization and machine learning. Addison-Wesley, Boston

Davis L (1991) Handbook of genetic algorithms. Thompson Computer Press, Washington, DC

Onwubiko C (2000) Introduction to engineering design optimization. Prentice Hall, New Jersey

Lagaros N, Papadrakakis M, Kokossalakis G (2002) Structural optimization using evolutionary algorithms. Comput Struct 80:571–589

Papadrakakis M, Lagaros N, Thierauf G, Cai J (1998a) Advanced solution methods in structural optimisation using evolution strategies. Eng Comput J 15(1):12–34

Papadrakakis M, Lagaros N, Tsompanakis Y (1998b) Structural optimization using evolution strategies and neutral networks. Comput Methods Appl Mech Eng 156(1):309–335

Papadrakakis M, Lagaros N, Tsompanakis Y (1999a) Optimization of large-scale 3D trusses using evolution strategies and neural networks. Int J Space Struct 14(3):211–223

Papadrakakis M, Tsompanakis J, Lagaros N (1999b) Structural shape optimisation using evolution strategies. Eng Optim 31:515–540

Goldberg DE, Deb K (2000) Special issue on genetic algorithms. Comput Methods Appl Mech Eng 186(2–4):121–124

Mueller MW, D’Andrea R (2014) Stability and control of a quadrocopter despite the complete loss of one, two, or three propellers. In: IEEE international conference on robotics and automation (ICRA), 2014

Mueller MW, D’Andrea R (2015) Relaxed hover solutions for multicopters: application to algorithmic redundancy and novel vehicles. Int J Robot Res 35(8):873–889

Mueller MW, Hehn M, D’Andrea R (2015) A computationally efficient motion primitive for quadrocopter trajectory generation. IEEE Trans Robot 31(8):1294–1310

Hehn M, Ritz R, D’Andrea R (2012) Performance benchmarking of quadrotor systems using time-optimal control. Auton Robots 33(1–2):69–88

Houska B, Ferreau H, Diehl M (2011) ACADO Toolkit: an open source framework for automatic control and dynamic optimization. Optim Control Appl Methods 32(3):298–312

Tagliabue A, Wu X, Mueller MW (2018) Model-free online motion adaptation for optimal range and endurance of multicopters. In: IEEE international conference on robotics and automation (ICRA), IEEE, 2019

Holda C, Ghalamchi B, Mueller MW (2018) Tilting multicopter rotors for increased power efficiency and yaw authority. In: International conference on unmanned aerial systems (ICUAS), IEEE, pp 143–148

Ring J (1963) The laser in astronomy. p. 672-3, New Scientist

Cracknell AP, Hayes L, (2007) Introduction to remote sensing, 2 edn. Taylor and Francis, London. ISBN 0-8493-9255-1. OCLC 70765252

Goyer GG, Watson R (1963) The laser and its application to meteorology. Bull Am Meteorol Soc 44(9):564–575 [568]

Medina A, Gaya F, Pozo F (2006) Compact laser radar and three-dimensional camera. J Opt Soc Am A 23:800–805

Trickey E, Church P, Cao X (2013) Characterization of the OPAL obscurant penetrating LiDAR in various degraded visual environments. In: Proceedings SPIE 8737, degraded visual environments: enhanced, synthetic, and external vision solutions 2013, 87370E (16 May 2013). https://doi.org/10.1117/12.2015259

Hansard M, Lee S, Choi O, Horaud R (2012) Time-of-flight cameras: principles, methods and applications. SpringerBriefs in Computer Science. https://doi.org/10.1007/978-1-4471-4658-2. ISBN 978-1-4471-4657-5

Schuon S, Theobalt C, Davis J, Thrun S (2008) High-quality scanning using time-of-flight depth superresolution. In: IEEE computer society conference on computer vision and pattern recognition workshops, 2008. Institute of Electrical and Electronics Engineers. pp 1–7

Gokturk SB, Yalcin H, Bamji C (2005) A time-of-flight depth sensor-system description, issues and solutions. In: IEEE computer society conference on computer vision and pattern recognition workshops, 2004. Institute of Electrical and Electronics Engineers, pp 35–45. https://doi.org/10.1109/CVPR.2004.291

ASC’s 3D Flash LIDAR camera selected for OSIRIS-REx asteroid mission. NASASpaceFlight.com. 2012-05-13

Jan Aue, Dirk Langer, Bernhard Muller-Bessler, Burkhard Huhnke, (2011). Efficient segmentation of 3D LIDAR point clouds handling partial occlusion. In: 2011 IEEE intelligent vehicles symposium (IV). Baden-Baden, Germany: IEEE. https://doi.org/10.1109/ivs.2011.5940442. ISBN 978-1-4577-0890-9

Hsu S, Acharya S, Rafii A, New R (2006) Performance of a time-of-flight range camera for intelligent vehicle safety applications. In: Advanced microsystems for automotive applications 2006. VDI-Buch. Springer, pp 205–219 (Archived from the original (pdf) on 2006-12-06. Retrieved 2018-06-25). https://doi.org/10.1007/3-540-33410-6-16. ISBN 978-3-540-33410-1

Elkhalili O, Schrey OM, Ulfig W, Brockherde W, Hosticka BJ (2006) A 64x8 pixel 3-D CMOS time-of flight image sensor for car safety applications. IN: European solid state circuits conference 2006, pp 568–571 (retrieved 2010-03-05). https://doi.org/10.1109/ESSCIR.2006.307488, ISBN 978-1-4244-0302-8

Zohdi TI (2019) Rapid simulation-based uncertainty quantification of flash-type time-of-flight and Lidar-based body-scanning processes. Comput Methods Appl Mech Eng. https://doi.org/10.1016/j.cma.2019.03.056

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Zohdi, T.I. The Game of Drones: rapid agent-based machine-learning models for multi-UAV path planning. Comput Mech 65, 217–228 (2020). https://doi.org/10.1007/s00466-019-01761-9

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00466-019-01761-9