Abstract

A more sums than differences (MSTD) set A is a subset of \(\mathbb {Z}\) for which \(|A+A| > |A-A|\). Martin and O’Bryant used probabilistic techniques to prove that a non-vanishing proportion of subsets of \(\{1, \dots , n\}\) are MSTD as \(n \rightarrow \infty \). However, to date only a handful of explicit constructions of MSTD sets are known. We study finite collections of disjoint intervals on the real line, \(\mathbb {I}\), and explore the MSTD question for such sets, as well as the relation between such sets and MSTD subsets of \(\mathbb {Z}\). In particular we show that every finite subset of \(\mathbb {Z}\) can be transformed into an element of \(\mathbb {I}\) with the same additive behavior. Using tools from discrete geometry, we show that there are no MSTD sets in \(\mathbb {I}\) consisting of three or fewer intervals, but there are MSTD sets for four or more intervals. Furthermore, we show how to obtain an infinite parametrized family of MSTD subsets of \(\mathbb {Z}\) from a single such set A; these sets are parametrized by lattice points satisfying simple congruence relations contained in a polyhedral cone associated to A.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Let A be a finite subset of the integers. The sumset and difference set are defined, respectively, as:

If \(|A-A| > |A+A|\), we say that the set A is difference dominant. If \(|A+A| > |A-A|\), we say that A is sum dominant or, following the terminology of [10], a more sums than differences (MSTD) set. If \(|A-A| = |A+A|\), we say A is balanced.

Due to the commutativity of addition, there is a lot of redundancy in \(A+A\), and its size is at most \(\left( {\begin{array}{c}n\\ 2\end{array}}\right) + n = \frac{n(n+1)}{2}\) where \(n = |A|\) (with equality being achieved with a geometric progression, for example). In the difference set, although 0 can be represented in numerous ways (e.g. \(0 = a_i - a_i\) for any i), as subtraction is not commutative there are at most \(n^2 - n + 1\) elements in \(A-A\) (again with equality being achieved when A is a geometric progression).

Since the difference set has the potential to be much larger than the sumset, we might naively believe that in general the difference set is larger. A well-known example of an MSTD set, whose exact origin is not clear, is \(\{0\), 2, 3, 4, 7, 11, 12, \(14\}\); others were given in [5, 11]. Ruzsa [13,14,15] used probabilistic techniques to prove the existence of many MSTD sets. Though Roesler [12] was able to show that the average size of the difference set is larger than the average size of the sumset for subsets of \([n] := \{1, \dots , n\}\), surprisingly, Martin and O’Bryant [6] proved using probabilistic techniques that for all \(n \ge 15\), the probability of being MSTD among subsets of [n] is at least \(2 \times 10^{-7}\). Zhao [19] showed that the probability of being sum dominant converges to a limit as \(n \rightarrow \infty \) and that this limiting probability is at least \(4.28 \times 10^{-4}\). Based on computer simulation, we expect the true limiting value to be around \(4.5 \times 10^{-4}\). If, however, we independently choose elements of [n] to be in A with probability p(n) which tends to zero with n, then Hegarty and Miller [4] proved that with probability 1 such a set is difference dominated.

In the last decade there has been considerable interest in explicit constructions of MSTD sets (which in light of [6] must exist in large numbers). A well-known technique for producing an infinite family of MSTD sets from a single one is base expansion: let \(A = \{a_1, \dots , a_n\}\) be an MSTD set. For each \(t \in \mathbb {N}\) and some fixed \(m > 2 * \max \{|a_i|: i \in [n]\}\), define \(A_t\) as

Then \(|A_t \pm A_t| = |A \pm A|^t\), thus leading to a parametrized infinite family of MSTD sets, though of extremely low density. Nathanson in [9] asks if there are other parametrized families of MSTD sets. Hegarty [3] and Nathanson provide a positive answer; in both cases their ideas involve taking some set which is symmetric (and thus balanced) and perturbing it slightly so as to increase the number of sums while keeping the number of differences the same. Hegarty [3] then posits: “More interesting, though, would be to have explicit examples of MSTD sets which are, in some meaningful sense, ‘radically’ different from some perturbed symmetric set.”

A new method of explicitly constructing MSTD sets was found by Miller et al. [7]. Their idea is to find sets whose sumset contains all possible sums (i.e., all integers between \(2 a_1\) and \(2 a_n\)). Then by appropriately adding elements to the fringes of such a set, one obtains an MSTD set. This technique was furthered by Zhao [18], who found a larger class of sets whose sumset is as large as possible. These methods yield densities on the order of \(1/n^r\) (the ideas in [7] yield \(r=2\), while those in [18] give \(r=1\)).

We introduce another “radically” different way of constructing MSTD sets. The heuristic behind our techniques is that the property of being an MSTD set should be “stable” under small perturbations. In order to make this notion rigorous, we must pass from the realm of the discrete to the realm of the continuous (but we will ultimately return to the discrete setting). Let \(\mathbb {I}\) denote the set of all collections of finitely many disjoint open intervals on the real line, and let \(\mathbb {I}_n\) denote the set of all collections of n disjoint open intervals on the real line.Footnote 1 For each \({\mathcal {A}} \in \mathbb {I}\), we define \({\mathcal {A}} + {\mathcal {A}}\) and \({\mathcal {A}} - {\mathcal {A}}\) as in the discrete case. However, we are no longer interested in the cardinality of these sets, but rather in the (Lebesgue) measure, \(\mu \). We say that \({\mathcal {A}}\) is difference dominant, sum dominant, or balanced if \(\mu ({\mathcal {A}} - {\mathcal {A}}) > \mu ({\mathcal {A}} + {\mathcal {A}})\), \(\mu ({\mathcal {A}} - {\mathcal {A}}) < \mu ({\mathcal {A}} + {\mathcal {A}})\), or \(\mu ({\mathcal {A}} - {\mathcal {A}}) = \mu ({\mathcal {A}} + {\mathcal {A}})\), respectively.

The foundational result of this paper is the following, which is proven in Sect. 2.

Theorem 1.1

Let \(A \subset \mathbb {Z}\) with \(|A| < \infty \). Then, there exists an \({\mathcal {A}} \in \mathbb {I}\) such that \(|A + A| = \mu ({\mathcal {A}} + {\mathcal {A}})\) and \(|A - A| = \mu ({\mathcal {A}} - {\mathcal {A}})\).

We will show that the construction of \({\mathcal {A}}\) from A is very natural and straightforward.

Theorem 1.1 justifies the study of the additive behavior of elements in \(\mathbb {I}\) as a means to study the additive behavior of subsets of \(\mathbb {Z}\). The space \(\mathbb {I}_n\) (and related spaces) have a natural topology, and thus we have the utility of continuity arguments at our disposal in this setting. In the latter half of Sect. 2, we discuss how to topologize \(\mathbb {I}_n\) and related spaces.

In Sect. 3, we introduce a number of tools from discrete geometry to analyze the MSTD question for elements in \(\mathbb {I}\). The main result of that section is the following.

Theorem 1.2

For \(n \le 3\), there does not exist \({\mathcal {A}} \in \mathbb {I}_n\) such that \({\mathcal {A}}\) is MSTD. For all \(n \ge 4\), there do exist MSTD \({\mathcal {A}} \in \mathbb {I}_n\).

This theorem may be loosely interpreted as the continuous analogue of the theorem of Hegarty [3] that there are no MSTD subsets of \(\mathbb {Z}\) of cardinality less than 8.

In addition to allowing us to prove Theorem 1.2, the tools developed in Sect. 3 will give us a way of producing an infinite parametrized family of MSTD subsets of \(\mathbb {Z}\) from a single MSTD set (either in the discrete or continuous sense). This infinite family will in fact have a simple algebraic structure. As is to be seen, our techniques in some sense allow one to uncover the structure of the set which resulted in it being MSTD and then to systematically enumerate all MSTD sets with this same structure. This is progress towards answering the open-ended question of Nathanson in [9]: “What is the structure of finite sets satisfying \(|A+A| > |A-A|\)?” These ideas are presented in Sect. 4.

In Sect. 5 we present a sort of converse result to Theorem 1.1, namely that up to affine transformation, given any \({\mathcal {A}} \in \mathbb {I}\), we can find an \(A \subset \mathbb {Z}\) such that the additive behavior of \({\mathcal {A}}\) and A are as similar as we like. Finally, in Sect. 6, we present some experimental data and pose some open questions and lines of further research.

2 Discrete to Continuous

In the sequel, A always denotes a finite subset of \(\mathbb {Z}\). For convenience, in this section we assume that elements of \(\mathbb {I}\) consist of closed intervals.

Definition 2.1

Let \(a, b \in \mathbb {Z}\) with \(a \le b\). We call the set \([a, b]_\mathbb {Z} := \{a \le x \le b \,{|}\, x \in \mathbb {Z}\}\) a closed integer interval.

Definition 2.2

The interval decomposition of A is the unique decomposition of A into closed integer intervals \(A = [b_1, c_1]_\mathbb {Z} \cup [b_2, c_2]_\mathbb {Z} \cup \dots \cup [b_k, c_k]_\mathbb {Z}\) such that for all \(i \ne j\), we have \(|b_i - c_j| \ge 2\) (that is, adjacent integers are always grouped into the same closed integer interval; see Example 2.3).

Example 2.3

Let \(A = \{0, 1, 3, 4, 5, 7, 9, 10\}\). Then the interval decomposition of A is

Definition 2.4

Suppose A has interval decomposition \(A = [b_1, c_1]_\mathbb {Z} \cup \dots \cup [b_k, c_k]_\mathbb {Z}\). The continuous representation of A, denoted \(A^*\), is

We are now ready to state our main theorem of this section.

Theorem 2.5

Let A and B be finite subsets of \(\mathbb {Z}\), and \(A^*\) and \(B^*\) their continuous representations. Let \(\mu \) be the Lebesgue measure on the real line. Then

and

Before proving this theorem we shall prove a sequence of ancillary propositions. The following proposition is a straightforward exercise.

Proposition 2.6

Let \(A = [a_1, a_2]_\mathbb {Z}\) and \(B = [b_1, b_2]_\mathbb {Z}\) with \(a_1 < b_1\). Then the following are true:

and

Corollary 2.7

If \(A = [a_1, a_2]_\mathbb {Z}\) and \(B = [b_1, b_2]_\mathbb {Z}\), then \(|A + B| = \mu (A^* + B^*)\) and \(|A - B| = \mu (A^* - B^*)\).

Proposition 2.8

Let A and B be finite subsets of \(\mathbb {Z}\). Let n be in \(\mathbb {Z}\). Then n is in \(A+B\) if and only if n is in \(A^* + B^*\). Similarly, n is in \(A-B\) if and only if n is in \(A^* - B^*\).

Proof

This follows almost immediately from Proposition 2.6. Let \(A = [a_1, b_1]_\mathbb {Z} \cup \dots \cup [a_k, b_k]_\mathbb {Z}\) and \(B = [c_1, d_1]_\mathbb {Z} \cup \dots \cup [c_\ell , d_\ell ]_\mathbb {Z}\) be the interval decompositions of A and B respectively. Suppose \(n \in A + B\). This clearly can only happen if \(n \in [a_i, b_i]_\mathbb {Z} + [c_j, d_j]_\mathbb {Z}\) for some i and j. By Proposition 2.6, letting \(A' = [a_i, b_i]_\mathbb {Z}\) and \(B' = [c_j, d_j]_\mathbb {Z}\), we know that \(n \in A'^* + B'^*\), and therefore n is also in \(A^* + B^*\).

Now suppose that \(n \in A^* + B^*\) with \(n \in \mathbb {Z}\). This implies that \(n \in [a_i, b_i]_\mathbb {Z}^* + [c_j, d_j]_\mathbb {Z}^*\) for some i and j. By Proposition 2.6, this implies that \(n \in [a_i, b_i]_\mathbb {Z} + [c_j, d_j]_\mathbb {Z}\) since \(([a_i, b_i]_\mathbb {Z}^* + [c_j, d_j]_\mathbb {Z}^*) \cap \mathbb {Z} = [a_i, b_i]_\mathbb {Z} + [c_j, d_j]_\mathbb {Z}\). Thus we get that \(n \in A+B\).

By switching the plus signs above to minus signs, we obtain a proof for the second half of the proposition statement. \(\square \)

Proposition 2.9

Let A and B be finite subsets of \(\mathbb {Z}\). Let \(C_{A^* + B^*}\) and \(C_{A^* - B^*}\) be defined as

and

where \({\overline{B}}_{\frac{1}{2}}(n)\) is the closed ball of radius \(\frac{1}{2}\) centered at n. Then \(C_{A^* + B^*} = A^* + B^*\) and \(C_{A^* - B^*} = A^* - B^*\).

Proof

Notice that this statement is clearly true in the case that \(A = [a_1, a_2]_\mathbb {Z}\) and \(B = [b_1, b_2]_\mathbb {Z}\) from Proposition 2.6. Suppose now that \(A = \bigcup _{i = 1}^k I_i\) and \(B = \bigcup _{j = 1}^\ell J_j\) where the \(I_i\) are the integer intervals in the interval decomposition of A, and the \(J_j\) are the integer intervals in the interval decomposition of B. Thus

As before, by changing plus signs to minus signs we get a proof for the latter part of the proposition statement. \(\square \)

We are now ready to prove Theorem 2.5.

Proof of Theorem 2.5

By Proposition 2.9, we know that \(C_{A^* + B^*} = A^* + B^*\). The set \(C_{A^* + B^*}\) is composed to sets of measure 1 such that the intersection of any pair of them is either empty or a single point. This implies that the measure of the intersection of any pair of these sets is 0. We can therefore conclude that \(\mu (A^* + B^*) = \#\,\{(A^* + B^*) \cap \mathbb {Z}\}\). However, by Proposition 2.8, we know that \(\#\,\{(A^* + B^*) \cap \mathbb {Z}\} = |A + B|\). Therefore, \(\mu (A^* + B^*) = |A + B|\). Showing that \(\mu (A^* - B^*) = |A - B|\) follows analogously. \(\square \)

The above results show that by studying sumsets and difference sets for collections of intervals, we can retrieve results about collections of intervals as a special case. However, the power of instead studying collections of intervals is that, as we continuously vary the endpoints of our intervals, the sizes of the sumset and the difference set also vary continuously. Therefore, for example, given a single MSTD collection of intervals, we can vary the endpoints of these intervals slightly and still have an MSTD set.

In general, rather than deal with \(\mathbb {I}\), we shall fix some n and deal with \(\mathbb {I}_n\). Since additive behavior (in particular the property of being MSTD) is invariant under affine transformation, modding out by affine equivalence does not change \(\mathbb {I}_n\) in a meaningful way. With this in mind, there are several natural ways to topologize \(\mathbb {I}_n\) and its quotient by some or all of the affine group. These fall into two broad categories of parametrizations: endpoint parametrizations and interval-gap parametrizations.

Endpoint parametrizations refer to subsets of some Euclidean space where each component of a vector in the space is either a left or right endpoint for some interval on the real line. The free simplex model is the subset of \(\mathbb {R}^{2n}\) composed of vectors of the form \((a_1, b_1, \dots , a_n, b_n)\) with the condition that \(a_1 \le b_1 \le \dots \le a_n \le b_n\). We think of this vector as representing the set \([a_1, b_1] \cup [a_2, b_2] \cup \dots \cup [a_n, b_n]\). This model is a parametrization of all of \(\mathbb {I}_n\); we have not modded out by any affine equivalences. This model will be particularly useful in the next section.

One disadvantage of the above model is that the space is not compact. A similar model, which we call the simplex model is the subset of \(\mathbb {R}^{2n}\) composed of vectors \((a_1, b_1, \dots , a_n, b_n)\) with the condition that \(0 \le a_1 \le b_1 \le \dots \le b_1 \le b_2 \le 1\). This model is named as such because the points in this space all live in a 2n-dimensional simplex.

Yet another disadvantage of both of the above models is that there is some redundancy: up to affine transformation we still have several representatives for the same set. We can mod out by all affine transformations (with positive determinant) by requiring that our leftmost interval start at 0 and our rightmost interval end at 1. We thus define the restricted simplex model to be those vectors \((b_1, a_2, b_2, \dots , a_{n-1}, b_{n-1}, a_n)\) in \(\mathbb {R}^{2n-2}\) such that \(0 \le b_1 \le a_2\le \cdots \le b_{n-1} \le a_n \le 1\) with the understanding that this vector corresponds to the collection of intervals \([0, b_1] \cup [a_2, b_2] \cup \dots \cup [a_n, 1]\).

Another class of natural parametrizations we call interval-gap parametrizations; in these, instead of designating where intervals begin and end, we designate how long each interval is and how long the gaps between consecutive intervals are (thus we have already modded out by translations). The free cube model consists of vectors \((\ell _1, g_1, \dots , g_{n-1}, \ell _n)\) in \(\mathbb {R}^{2n-1}\) such that \(\ell _i,g_i \ge 0\) for all i. Given such a vector, we construct a collection of n intervals as follows: the leftmost interval is \([0, \ell _1]\). The gap between the leftmost interval and its neighboring interval to the right is \(g_1\) and the length of this next interval is \(\ell _2\), and so on.

The above model has a natural compactification which we call the unit cube model in which we require that \(0 \le \ell _i, g_i \le 1\) for all i (so that we may think of our points as living in the unit cube in \(\mathbb {R}^{2n-1}\)). Though we do not use this model in this paper, this model was utilized in [8] to give a geometric interpretation to the enumeration and growth rate of bidirectional ballot sequences/(1, 1)-culminating paths (these sequences were used by Zhao in his constructions of MSTD sets).

Lastly, analogously to the restricted simplex model, we can mod out by all affine transformations to get the restricted unit cube model. That is, we can additionally require that \(\ell _1 + g_1 + \dots + \ell _n = 1\). This model is essentially the same as the restricted simplex model.

3 A Geometric Perspective

The main goal of this section is to prove Theorem 1.2. However, as we shall see, in doing so we shall develop a powerful set of tools for analyzing continuous sumsets and difference sets. These tools will end up being useful for studying subsets of \(\mathbb {Z}\) as well.

In the sequel we shall generally let n be fixed, and let J represent some element in \(\mathbb {I}_n\) consisting of closed intervals. Thus, \(J = \{J_1, \dots , J_n\}\) where \(J_i = [x_i, y_i]\), and for \(i < j\), \(J_i\) is to the left of \(J_j\) on the number line. Vectors will be denoted by parentheses (i.e., [x, y] is an interval and (x, y) is a vector).

To handle the \(n=1\) case of Theorem 1.2 is more or less trivial. Already with the \(n=2\) case some work is required; the analysis of the \(n=2\) case reveals most of the important ideas that go into the \(n \ge 3\) case, and we choose to analyze the \(n=2\) case in such a way that the core ideas are clearest, rather than using the more powerful but harder to visualize framework used in the \(n \ge 3\) case.

Lemma 3.1

There are no MSTD sets in \(\mathbb {I}_1\).

Proof

Suppose \(J = [x_1, y_1]\). The sumset consists of a single interval, \([x_1 + x_1, y_1 + y_1]\), which has length \(2 y_1 - 2 x_1\). The difference set also consists of a single interval, \([x_1 - y_1, y_1 - x_1]\), which has length \(2 y_1 - 2 x_1\). Thus, the length of the sumset is exactly equal to the length of the difference set, so in all cases J is balanced. Notice that this analysis reveals that there is only one type of behavior in the \(n=1\) case, which is to be expected since all intervals are equivalent mod affine transformations. \(\square \)

The \(n=2\) case requires some more work. As has been previously discussed, \(J+J\) can be expressed as the union over all i and j of intervals of the form \(J_i + J_j\) (and similarly for the difference set). If we know the locations of all intervals of the form \(J_i + J_j\) (or \(J_i - J_j\)) relative to each other, then we know exactly what the sumset (or difference set) is. More precisely, if we know the total ordering on the left and right endpoints of all intervals of the form \(J_i + J_j\) (or \(J_i - J_j\)), then we know exactly which points are in \(J+J\) (or \(J-J\)), and additionally, we know the measure of \(J+J\). This motivates the following definition.

Definition 3.2

Given J, the total ordering on the left and right endpoints of intervals of the form \(J_i + J_j\)\((J_i - J_j)\) is called the structure of the sumset (difference set). The structure of J refers to the structure of both the sumset and the difference set.

Thus, stated succinctly, the above observations say that if we know the structure of the sumset/difference set, then we know exactly what the sumset/difference set is.

Another notational definition which will make analysis easier is the following:

Definition 3.3

Let \((J_i \pm J_j)_L\) and \((J_i \pm J_j)_R\) denote the left and right endpoints respectively of the interval \(J_i \pm J_j\).

Lemma 3.4

There are no MSTD sets in \(\mathbb {I}_2\).

Proof

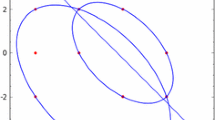

Let \(J = J_1 \cup J_2\). If we use the free simplex model, then J has four degrees of freedom. However, if we instead use the restricted simplex model, then J only has two degrees of freedom, so all possible cases for the structure of J can be readily visualized. Using this model, we can represent a given J as a point in \(\mathbb {R}^2\): if \(J = \{[0, y_1], [x_2, 1]\}\), we represent this as the point \({\overline{J}} = (y_1, x_2) \in \mathbb {R}^2\). The restricted simplex model restricts to the region in the plane simultaneously satisfying the following inequalities:

We call this region A.

First we see what information we need to figure out the structure of \(J+J\). There are three intervals we are concerned with: \(J_1 + J_1\), \(J_1 + J_2\) and \(J_2 + J_2\). Note that since \(J_1\) is to the left of \(J_2\), we immediately know that:

Thus to figure out the structure of \(J+J\), we only need the following information:

These two inequalities in question are equivalent to knowing on which side of the following lines in the plane our point \({\overline{J}}\) lies:

We now turn to determining the structure of \(J-J\). From (3.1) to (3.3), we already know:

By the symmetry of the difference set, we only need to know the following information to determine the structure of the difference set:

These three inequalities in question are equivalent to knowing on which side of the following lines in the plane our point \({\overline{J}}\) lies:

However notice that (3.11) is the same as (3.6), and also (3.12) is the same as (3.7). Thus for points within A, by knowing on which side of the three lines given by (3.6), (3.7), and (3.10) our point \({\overline{J}}\) lies, we completely know the structure of J. All of the above information is captured geometrically in Fig. 1. We see that A is divided into 6 regions such that all points in the same region have the same structure. Table 3 at the end of this paper enumerates which structure each of these regions corresponds to.

In Table 3 we claim that regions 1-4 are difference dominant, and regions 5 and 6 are balanced. That regions 3 and 4 are difference dominant and that regions 5 and 6 are balanced are immediate to see. To see that regions 1 and 2 are difference dominant is also straightforward. We shall show this is the case for region 1. The proof for region 2 is similar.

In (the interior of) region 1, the following inequalities hold:

From Table 3 we see that \(\mu (J+J) = 3 y_1 - 3 x_2 + 3\) and \(\mu (J-J) = 4 y_1 - 2 x_2 + 2\). Therefore, \(\mu (J-J) - \mu (J+J) = y_1 + x_2 - 1\). We thus are interested in the following inequality:

However from (3.13) we know that this inequality holds true everywhere in region 1. Therefore, region 1 is difference dominant. \(\square \)

In handling the \(n = 3\) case of Theorem 1.2, rather than use the restricted simplex model, we shall use the free simplex model. The main utility of this model is that the sum of two vectors in \(\mathbb {I}_n\), as represented in this model, is again in \(\mathbb {I}_n\) in this model (that is, \(\mathbb {I}_n\) has a semigroup structure). Similarly to before, if \(J = \{[x_1, y_1], \dots , [x_n, y_n]\}\), we associate to this the point \({\overline{J}} = (x_1, y_1, \dots , x_n, y_n)\). The goal is again to determine the structure of J. Like in the \(n=2\) case, in order to figure out the total ordering on the left and right endpoints of the intervals in the sumset/difference set, it suffices to know the outcomes of every comparison between endpoints. Each such comparison can be expressed as evaluation of a linear polynomial in the \(x_i\)s and \(y_i\)s. After a bit of thought, one realizes that up to multiplication by unit, there are four types of such polynomials that we are interested in, as described in Table 1. We call such polynomials comparison polynomials.

For each linear polynomial as in Table 1, we have an associated coefficient vector, v, namely the vector which when dotted with \((x_1, y_1, \dots , x_n, y_n)\) gives the linear polynomial in question. Additionally, to each such linear polynomial we have an associated hyperplaneH, namely the hyperplane obtained by setting the polynomial equal to zero. This hyperplane partitions \(\mathbb {R}^{2n}\) into two pieces corresponding to the two different outcomes of the comparison which the linear polynomial represents. Note that v is a basis for the orthogonal complement to H.

We now recall some definitions from discrete geometry.

Definition 3.5

A central hyperplane arrangement is a finite collection of hyperplanes which all pass through the origin.

Note that the set of associated hyperplanes to the linear polynomials of interest form a central hyperplane arrangment which we call the structure arrangement.

Definition 3.6

A conical combination of vectors \(v_1\), \(\dots \), \(v_m\) is any combination of the form

such that \(\alpha _i \ge 0\) for all \(1 \le i \le m\). The set of all conical combinations of a set of vectors is called the conical span.

Definition 3.7

A polyhedral cone is the conical span of a fixed finite set of vectors. Equivalently, it is all points in the intersection of finitely many halfspaces for which the corresponding set of hyperplanes forms a central hyperplane arrangement.

In this paper we shall be dealing exclusively with rational polyhedral cones, so we can always assume that each generator of the cone is a vector in \(\mathbb {Z}^n\) with relatively prime entries (a primitive integer vector).

Definition 3.8

An orientation on a hyperplane is a choice to label one of the corresponding halfspaces as positive and the other as negative. Equivalently, it is a choice of a non-zero vector b in the (1D) orthogonal complement to the hyperplane: the positive halfspace is the set of points v such that \(v \cdot b \ge 0\). We call b a positive normal.

If we have a central hyperplane arrangement, then for each way of simultaneously orienting all the hyperplanes in the arrangement, we get an associated polyhedral cone (this cone may just be zero). Our space is thus partitioned into disjoint polyhedral cones such that disjoint cones have disjoint interiors. Dually, if we choose a specific polyhedral cone arising from a central hyperplane arrangement, then we get an induced orientation on the hyperplane arrangement as follows: for each hyperplane, we say that the halfspace containing the polyhedral cone is the positive halfspace.

Given the structure arrangement, we get a partition of \(\mathbb {R}^{2n}\) into finitely many polyhedral cones, each of which we call a structure cone. For a given structure cone, when choosing positive normals for the induced orientation on the arrangement, we may choose for each hyperplane either the corresponding coefficient vector or its negative.

Definition 3.9

Let V be a polyhedral cone in \(\mathbb {R}^n\). Let \(V^* = \{w \in \mathbb {R}^{n} : \forall \ v \in V,\ w \cdot v \ge 0 \}\). Then \(V^*\) is called the dual cone of V.

We may interpret the dual cone to V as the set of all possible positive normals to oriented hyperplanes such that all of V lies on the positive side of the hyperplane (together with the zero vector).

The following basic result from the theory of polyhedral cones makes it easy to find dual cones, especially when the polyhedral cones are given in terms of intersections of half-spaces (as is the case here).

Proposition 3.10

Given a polyhedral cone, V, the conical span of the set of positive normals in the induced orientation on V is the dual cone of V.

Suppose V is a structure cone. For all J such that \({\overline{J}} \in V\), the structure of J is the same. For all \({\overline{J}} \in V\), there exists a single homogeneous linear polynomial in the \(x_i\)’s and \(y_i\)’s, call it \(P_+\) with coefficient vector \(v_+\), such that \(\mu (J+J) = v_+ \cdot {\overline{J}}\). Analogously there exists \(P_-\) with coefficient vector \(v_-\) such that \(\mu (J-J) = v_- \cdot {\overline{J}}\). The vectors \(v_+\) and \(v_-\) are called the sum vector and difference vector, respectively, for the cone V. The vector \(d = v_+ - v_-\) is called the MSTD vector for V; a collection of intervals \(J \in \mathbb {I}_n\) with \({\overline{J}} \in V\) is an MSTD set if and only if \(d \cdot {\overline{J}} > 0\). Note that if \(d \cdot {\overline{J}} = 0\), then J is balanced, and if \(d \cdot {\overline{J}} < 0\), then J is difference dominant.

The MSTD vector gives rise to an oriented hyperplane, namely the orthogonal complement to the span of d, with positive normal equal to d. We thus have the following crucial observation.

Observations 3.11

Let V be a structure cone with MSTD vector d. If and only if \(d = 0\), then all of V is balanced. Now suppose d is non-zero. Then, if and only if d is in the dual cone to V, then V is sum-dominant (except possibly on the boundary which may be balanced). If and only if \(-d\) is in the dual cone to V, then V is difference domaint (except possibly on the boundary which may be balanced). In all other cases, V splits into a sum dominant region and a difference dominant region.

Lemma 3.12

For all \(J \in \mathbb {I}_3\), J is not an MSTD set.

Proof

We now have all the tools necessary to handle the \(n = 3\) case of Theorem 1.2. In light of Observation 3.11, rather than needing to (somehow) check the uncountably many possible collections of three intervals, we instead now need only check that for each structure cone V for the \(\mathbb {I}_3\) structure arrangement, either \(d = 0\) or \(-d \in V^*\). To prove the \(n = 3\) case, we proceed as in the \(n = 2\) case, namely we enumerate all structure cones and show that in all cases either \(d = 0\) or \(-d \in V^*\). We present an algorithm to carry our this procedure. A variant on this algorithm was implemented in SAGE [2] on a computer to enumerate and check all cases. Whereas there were only six cases for \(n = 2\), there are over 500 cases for \(n = 3\).

The difficult part of the algorithm is enumerating all of the structure cones. We shall describe a simple algorithm for doing just that; after stating the algorithm we shall discuss exactly what it does (and why it works).

In essence, Algorithm 1 adds one hyperplane at a time to our arrangement, keeping track at each step what the non-trivial cones are (the cones that are not just the zero vector). It represents each cone by the generators of its dual cone, that is by the choice of orientation (positive normals) on the partial hyperplane arrangement which gives rise to that specific cone.

Since for all \(J \in \mathbb {I}_n\), \(x_1 \le y_1 \le \dots \le x_n \le y_n\), for every structure cone of interest to us, the vectors \({\hat{y}}_1 - {\hat{x}}_1, \dots , {\hat{y}}_n - {\hat{x}}_n\) must be positive normals (where \({\hat{x}}_i\) is the coefficient vector to the polynomial \(x_i\)). This explains line 3. The variable partial_dual_cones keeps track of all partial choices of positive normals for structure cones in our arrangement.

In line 4 of Algorithm 1, the function GENERATE_LIST_OF_NORMALS returns the coefficient vectors for all the relevant comparison polynomials (irredundantly up to multiplication by unit).

The remainder of the algorithm consists of two loops. The outer loop iterates through the set of normals in this list, and the inner loop iterates through the cones in our partial hyperplane arrangement. A single iteration of the outer loop introduces a new hyperplane to the arrangement (as represented by its normal new_normal) and a single iteration of the inner loop keeps track if a given cone in the partial arrangement (as represented by partial_dual_cone) splits into two cones. The function IS_CONSISTENT(partial_dual_cone, new_normal) tests whether or not −new_normal is in the conical span of the elements of partial_dual_cone. In other words, it tests if the cone corresponding to partial_dual_cone lies entirely on the negative side of the oriented hyperplane with positive normal new_normal. If so, then adding new_normal to partial_dual_cone as a positive normal would result in a cone with empty interior. Any such cone would be on the boundary of some other cone with non-empty interior, and thus is safe to ignore since it would be handled by other cases. An example of an inconsistent choice of positive normal is the following: suppose we already know that \(x_1 \le x_2 \le x_3\). Then we necessarily know that \(x_1 + x_2 \le x_2 + x_3\), and thus adding the vector \({\hat{x}}_1 + {\hat{x}}_2 - {\hat{x}}_2 - {\hat{x}}_3\) to our list of positive normals is inconsistent. Testing consistency in this sense can be phrased a feasibility problem in linear programming, and thus there exist efficient algorithms to solve this problem. The variable new_partial_dual_cones keeps track of the new cones in our new hyperplane arrangement with non-empty interior. Once the interior loop has finished, we set partial_dual_cones equal to new_partial_dual_cones and repeat the outer loop, until we finally finish and return the set of cones with non-empty interior in our structure hyperplane arrangement.

Once we have all of our structure cones, as represented by the the list of positive normals for the induced orientation on the hyperplane arrangement, we have all of the information we need to figure out the sum vector and difference vector for each cone. We do not explicity describe an algorithm to do so here, but leave it as an exercise to the interested reader to think about how to do this.

Once we have the sum and difference vectors, we have the MSTD vector. Testing if the MSTD vector is in the dual cone is a cone membership problem and thus can also be solved by linear programming techniques.

We implemented in SAGE a slight variation on Algorithm 1. In particular, our algorithm does not enumerate all regions where the structure is constant (i.e. all structure cones), but rather just those regions such that within a region, the sum vector and difference vector is constant (e.g. in the \(n = 2\) case, regions 5 and 6 have the same sum formula and difference formula, but have different structures; using an improvement on Algorithm 1, these two regions would not be distinguished). We do not describe the improved algorithm here; the mere existence of an algorithm such as Algorithm 1 is what is most important. When the improved algorithm ran, it found 502 cases, and in all such cases either \(d = 0\) or \(-d\) was in the dual cone implying there are no sum dominant sets for \(n = 3\). On a personal computer with 4GB of RAM, the computation took around 30 minutes to complete. \(\square \)

Proof of Theorem 1.2

To complete the proof of Theorem 1.2, we must show that for \(n \ge 4\), there do exists collections of n intervals which are sum dominant. Note that it suffices to just show that this is the case when \(n = 4\) (if this is not clear, see Sect. 6 on cleaving). We can actually turn to the existing literature on MSTD sets of integers to find an example. In Hegarty [3], we have

The corresponding collection of intervals is:

4 From One MSTD Set to Many

In this section we show a method of producing a large class of MSTD sets (both continuous and discrete) from a single set. In particular this gives a new method of producing parametrized families of MSTD sets of integers.

The idea of the method is straightforward given the ideas in Sect. 3: given \(J \in \mathbb {I}_n\), we find the generators for its structure cone. We then also find the MSTD vector, d, for this cone. Since J is MSTD, we know that either \(d \in V^*\), implying the entire cone is MSTD, or else the oriented hyperplane with positive normal d partitions the hyperplane into two cones, both with non-empty interior. One of these cones is entirely MSTD. We then find the generators for this cone.

Before formally presenting the details of the algorithm (Algorithm 2), we show the procedure on a concrete example. This example has a few peculiarities which make the example simpler than most, but the core ideas are present.

Example 4.1

Let \(G = \{0, 1, 2, 4, 5, 9, 12, 13, 14\}\) and let J be its continuous representation. A computer program reveals that J is on the boundary of 108 different structure cones. We choose one of these cones arbitrarily and call it V. The extremal rays of V are the columns of the following matrix:

We then compute the MSTD vector, d, for V:

We check if \(d \in V^*\) and find that it is not. We therefore add d to \(V^*\) and then take its dual cone to get a new cone W. The extremal rays for W are the columns of the following matrix:

Note then that any vector in the conical column span of A is either balanced or sum dominant. A closer examination reveals that all the columns give rise to balanced sets except for the 5th column. Therefore, any vector of the form Az with \(z_i \ge 0\) and \(z_5 > 0\) gives rise to a continuous MSTD set. Thus from finding a single MSTD set, we have found a huge class of continuous MSTD sets.

With just a bit more work we can find an infinite family of MSTD sets of integers as well. In fact, we shall find all MSTD subsets of \(\mathbb {Z}\) whose continuous representation corresponds to a point in W. Recall that given a point corresponding to an MSTD set of the form \(a = (x_1 - 1/4, y_1 + 1/4, \dots , x_n - 1/4, y_n + 1/4)\) where each \(x_i\) and \(y_i\) is in \(\mathbb {Z}\), then we can obtain an MSTD set of integers, namely \(\{[x_1, y_1]_\mathbb {Z}, \dots , [x_n, y_n]_\mathbb {Z}\}\). If a is in some cone X, then \(\alpha a \in X\) for all \(\alpha \ge 0\). In particular, \(4 a \in W\); note that \(4 a \in \mathbb {Z}^{2n}\). Furthermore, mod 4, the entries of 4a must be \((3, 1, 3, 1, \dots , 3, 1)\). Conversely, if some point in \(\mathbb {Z}^{2n}\) is of the form \((3, 1, \dots , 3, 1)\) mod 4, then we can find an MSTD set of integers from that point by dividing by 4 and undoing the discrete to continuous process. We say that a point \(x \in \mathbb {R}^{2n}\) is an \(({{\alpha , \beta }})\)-lattice point modk if \(x \in \mathbb {Z}^{2n}\) and mod k, \(x = (\alpha , \beta , \dots , \alpha , \beta )\). Thus our goal is to find the (3, 1)-lattice points mod 4 in the conical column span of A.

Let B be the matrix A without the last column (the last two columns are linearly dependent, so right now we can ignore the last column). From the above observations, we are interested in finding solutions to the following system of equations:

Using Mathematica [17], we get that the unique such \(\alpha \) is

Therefore, by dividing any vector of the form below by 4 and then undoing the discrete to continuous process, we obtain an MSTD set of integers (see Table 2 for some examples):

Furthermore, since the B has 8 rows, which is the dimension of the vector space W lives in, and since the determinant of B is -1, we know that in fact all MSTD sets of integers in W are obtained in this way (in general it will not be the case that the number of generators is equal to the dimension of the space, or even if the number of generators is equal to the dimension, that the determinant of the matrix whose rows are those generators will be \(\pm 1\); we shall discuss how to deal with these issues shortly).

In the above procedure, we took a single MSTD set and not only found a huge class of continuous MSTD sets, but also a non-trivial infinite family of discrete MSTD sets (in fact all of the discrete MSTD sets in the structure cone). Furthermore, the MSTD set we started with led us to find 108 different MSTD cones, so from the same starting MSTD set, we can carry out the above process 107 more times to find even more MSTD sets (all of this arising from finding a single MSTD set)!

We now discuss a more general algorithm for carrying out the above procedure. There are three main issues which up to this point we have glossed over.

-

(1)

The set J may be contained in multiple structure cones, so we need a way of enumerating all structure cones containing J; this can be resolved using ideas similar to those presented in Algorithm 1.

-

(2)

A given structure cone containing J may have more generators than the dimension of the space; the trick here is to partition the cone into a collection of simplicial cones.

-

(3)

For a given rational simplicial cone with generators represented as primitive integer vectors, if the determinant of the corresponding matrix is not \(\pm 1\), then integer conical combinations of the generators will not necessarily give all lattice points in the cone (and in particular will not necessarily give all lattice points corresponding to MSTD subsets of \(\mathbb {Z}\)).

First we discuss issue (1); Algorithm 2 gives a way of resolving this issue. In words, this algorithm first orients those hyperplanes for which \({\overline{J}}\) is on the strictly positive side. This results in a polyhedral cone for which \({\overline{J}}\) is in the interior. The remaining hyperplanes (as represented by non_strict_normals) are then one by one tested to see if they partition the partial cones found so far into two cones with non-empty interiors. Lines 15-28 are virtually identical to Algorithm 1 and thus their function should be clear.

Once we have the representation of a polyhedral cone as an intersection of halfspaces, there are algorithms to find its representation as the concial span of a collection of generators. Issue (2) is then quite straightforward to deal with. Partitioning a polyhedral cone into simplicial cones is virtually the same as partitioning a compact polytope into simplices and there exist algorithms to do so.

Issue (3) is also not too bad to deal with. Let V be a rational simplicial cone. Let A be a matrix whose columns are primitive integer vectors generating the cone. Then, \(\det (A) \in \mathbb {Z}\). Let \(D = |\det (A)|\). Then, since \(A^{-1} = adj (A)/\det (A)\), all x such that \(Ax \in \mathbb {Z}\) are in \(\mathbb {Z}^{n}/D\), that is the set of points whose product with D is in \(\mathbb {Z}^n\). Suppose \(x \in \mathbb {Z}^n/D\) such that Ax is a (3, 1)-lattice point mod 4. Then, A(Dx) must be a (3D, D)-lattice point mod 4D. Conversely, if Ay is a (3D, D)-lattice point mod 4D, then A(y / D) is a (3, 1)-lattice point mod 4. Therefore, finding all \(x \in \mathbb {Q}^n\) such that Ax is a (3, 1)-lattice point mod 4 is equivalent to finding all \(y \in \mathbb {Z}^n\) such that Ay is a (3D, D)-lattice point mod 4D. To do this we need only find the set of solutions to the following system of equations over \(\mathbb {Z}/(4 D \mathbb {Z})\).

Suppose that \(v_1, \dots , v_{2n}\) are the generators for some simplicial cone, S, arising as the MSTD refinement of an MSTD structure cone with MSTD vector d. Let \(v_{i_1}, \dots , v_{i_k}\) be those \(v_i\) such that \(v_i \cdot d > 0\). Let p be any particular solution to 4.7. A point m is an MSTD (3, 1)-lattice point mod 4 if and only if it can be expressed as

where k is in the kernel of A as an endomorphism on \((\mathbb {Z}/(4D)\mathbb {Z})^{2n}\), and \(\ell \in \mathbb {Z}^{2n}\) and such that \(f = p/D + k/D + 4\ell \) satisfies \(f \cdot {\hat{e}}_i \ge 0\) for all \(i \in [2n]\) and \(f \cdot {\hat{e}}_{i_j} > 0\) for some \(j \in [k]\).

5 Continuous to Discrete

In this short section we prove a simple “converse” to Theorem 1.1: up to scaling, every element in \(\mathbb {I}\) can be arbitrarily well approximated by (the continuous representation of) a finite collection of integers.

Theorem 5.1

Let \({\mathcal {A}} \in \mathbb {I}\). For every \(\varepsilon > 0\), there exists \(\alpha > 0\) and \(B \subset \mathbb {Z}\), with continuous representation \({\mathcal {B}}\), such that

and

Proof

The idea of the proof of Theorem 5.1 is to dilate the set \({\mathcal {A}}\) and then approximate each dilated interval by the set of integers contained in the interval. Without loss of generality, we may assume that \({\mathcal {A}} \subset [0, 1]\). Suppose that \({\mathcal {A}}\) consists of k intervals, that is \({\mathcal {A}} = J_1 \cup \dots \cup J_k\) with \(J_i = [x_i, y_i]\) and with \(J_i\) to the left of \(J_j\) for \(i < j\). Suppose the length of the shortest of these intervals is \(\delta \). Let \(N \in \mathbb {Z}\) be any number such that

Let \(F_N = \{i/N : 0 \le i \le N, \ i \in \mathbb {Z}\}\). By (5.1), we know

Let \(\ell _i, r_i \in \mathbb {Z}\) be such that \(\ell _i/N = \min (J_i \cap F_N)\) and \(r_i/N = \max (J_i \cap F_N)\). Notice that by (5.1),

and

Let \(B_i = [\ell _i + 1, r_i - 1]_{\mathbb {Z}}\) (by (5.2) each \(B_i\) is non-empty). Let \(B = \bigcup _i B_i\). Let \({\mathcal {B}}\) be the continuous representation of B with \({\mathcal {B}}_i\) the continuous representation of \(B_i\). Let \({\mathcal {C}}\) be the set \({\mathcal {B}}\) scaled by 1 / N, and \({\mathcal {C}}_i\) the set \({\mathcal {B}}_i\) scaled by 1 / N. Notice that \({\mathcal {C}} \subseteq {\mathcal {A}}\). Therefore

Let \(x \in {\mathcal {D}}\). We have that

Therefore

By (5.3) and (5.4), we know that

Therefore,

6 Open Questions and Concluding Remarks

The ideas presented in Sects. 3 and 4 motivate several interesting follow-up questions. First, there is the question of whether or not a more elegant proof of Theorem 1.2 exists.

Question 6.1

Is there a proof of Theorem 1.2 that does not reduce to casework?

There are also several interesting combinatorial questions that arise. One basic question is:

Question 6.2

How many cones are there in the structure hyperplane arrangement for \(\mathbb {I}_n\)?

A closely related question has been investigated before in [1]. The number of such regions is closely related to (and upper bounded by) the even indexed entries in OEIS A237749. There is a rich theory of counting the number of regions in a hyperplane arrangement (see [16], e.g.), and perhaps these techniques could answer Question 6.2.

Another basic question is:

Question 6.3

How many MSTD structure cones are there for \(\mathbb {I}_n\)? What are their relative (intrinsic) volumes? Is there a “dominating” MSTD cone?

In our opinion, one of the most interesting subsequent question is the following.

Question 6.4

Do the set of MSTD points in \(\mathbb {I}_n\) form a connected region? If so, what is the degree of connectivity of this region (is it 2n-connected?)? If not, how many connected components does it contain? Does the number of connected components change as n increases?

If the answer to Question 6.4 is yes, then it would in some sense imply that there is only one “type” of MSTD set, from a single MSTD set (with a fixed number of intervals), all other MSTD sets can be found by perturbing that set (and keeping it MSTD along the way).

Given an MSTD \(J \in \mathbb {I}_n\), there are several ways of naturally “embedding” this set into \(\mathbb {I}_{n+1}\). If J is composed of open intervals, then removing any single point in J results in \(n+1\) intervals, call it \(J'\), but the sets J and \(J'\) are basically the same. We say that \(J'\) is obtained from J by cleaving. Assuming the answer to Question 6.4 is no, a refined question is

Question 6.5

Can every MSTD point in \(\mathbb {I}_{n+1}\) be obtained by an MSTD path from the image of some cleaved MSTD point in \(\mathbb {I}_n\)?

If we deal with a compact parameter space, as in the simplex model and unit cube model, we may then talk about the probability that a point is MSTD, balanced, or difference dominant. Figures 2 and 3 show approximations of these probabilities based on Monte Carlo simulation with 10 million trials.

Interestingly, the probabilities for being MSTD and balanced appear to be different for the simplex model and unit cube model. However, in both cases, the probability of being sum-dominant appears to converge to a similar value to the limiting probability in the discrete case (\(\sim 4.5 \times 10^{-4}\)).

Question 6.6

Do the probabilities of being sum dominant and balanced converge for the simplex model and the cube model? What is the relationship between these MSTD probabilities and the limiting MSTD probability in the discrete case?

One of the main open questions in the study of MSTD sets is to construct a constant density family of MSTD sets as \(n \rightarrow \infty \). Thus we may ask:

Question 6.7

Can the techniques in this paper be used to construct a constant density family of MSTD subsets of [n] as \(n \rightarrow \infty \)?

There are several interesting subsequent lines of inquiry stemming from the ideas in the paper. More generally, we believe that there is a lot of utility in passing from the discrete to the continuous as in this paper. Ideas closely related to those here were utilized in the related paper [8] to reveal a geometric structure of a certain family of combinatorial objects which was not visible previously. We believe there may be several further fruitful applications of the ideas of this paper (Table 3).

Notes

As if often the case when dealing with measure theoretic arguments, it does not make a meaningful difference if we use open or closed intervals (or even half-open/half-closed intervals). Certain arguments are cleaner if one uses one or the other, and thus in this paper we shall sometimes assume that the elements of \(\mathbb {I}\) and \(\mathbb {I}_n\) consist of collections of open intervals, and at other times closed intervals.

References

Beck, M., Bogart, T., Pham, T.: Enumeration of Golomb rulers and acyclic orientations of mixed graphs. Electron. J. Comb. 19(3), Paper No. 42 (2012)

Developers, T.S.: SageMath, the Sage Mathematics Software System (Version 7.2) (2016). http://www.sagemath.org

Hegarty, P.V.: Some explicit constructions of sets with more sums than differences. Acta Arith. 130(1), 61–77 (2007). https://doi.org/10.4064/aa130-1-4

Hegarty, P.V., Miller, S.J.: When almost all sets are difference dominated. Random Struct. Algorithms 35(1), 118–136 (2009)

Marica, J.: On a conjecture of Conway. Can. Math. Bull. 12, 233–234 (1969). https://doi.org/10.4153/CMB-1969-029-4

Martin, G., O’Bryant, K.: Many sets have more sums than differences. In: Granville, A., Nathanson, M.B., Solymosi, J. (eds.) Additive Combinatorics. CRM Proceedings and Lecture Notes, vol. 43, pp. 287–305. American Mathematical Society, Providence (2007)

Miller, S.J., Orosz, B., Scheinerman, D.: Explicit constructions of infinite families of MSTD sets. J. Number Theory 130(5), 1221–1233 (2010). https://doi.org/10.1016/j.jnt.2009.09.003

Miller, S.J., Peterson, C., Sprunger, C., Van Peski, R.: The bidirectional ballot polytope. Integers 18, Paper No. A81 (2018)

Nathanson, M.B.: Problems in additive number theory. I. In: Granville, A., Nathanson, M.B., Solymosi, J. (eds.) Additive Combinatorics. CRM Proceedings and Lecture Notes, vol. 43, pp. 263–270. American Mathematical Society, Providence (2007)

Nathanson, M.B.: Sets with more sums than differences. Integers 7, Paper No. A5 (2007)

Pigarev, V.P., Freĭman, G.A.: The relation between the invariants \(R\) and \(T\). In: Freĭman, G.A., Rubinov, A.M., Novoselov, E.V. (eds.) Number-Theoretic Studies in the Markov Spectrum and in the Structural Theory of Set Addition, pp. 172–174. Kalinin. Gos. Univ., Moscow (1973). (in Russian)

Roesler, F.: A mean value density theorem of additive number theory. Acta Arith. 96(2), 121–138 (2000). https://doi.org/10.4064/aa96-2-2

Ruzsa, I.Z.: On the cardinality of \(A+A\) and \(A-A\). In: Combinatorics, Vol. II. Colloquia Mathematica Societatis János Bolyai, vol. 18, pp. 933–938. North-Holland, Amsterdam (1978)

Ruzsa, I.Z.: Sets of sums and differences. In: Bertin, M.-J., Goldstein, C. (eds.) Seminar on Number Theory, vol. 51, pp. 267–273. Birkhäuser, Boston (1984). Progress in Mathematics

Ruzsa, I.Z.: On the number of sums and differences. Acta Math. Hung. 59(3–4), 439–447 (1992). https://doi.org/10.1007/BF00050906

Stanley, R.P.: An introduction to hyperplane arrangements. In: Miller, E., Reiner, V., Sturmfels, B. (eds.) Geometric Combinatorics. IAS/Park City Mathematics Series, vol. 13, pp. 389–496. American Mathematical Society, Providence (2007)

Wolfram Research, Inc.: Mathematica 9.0. https://www.wolfram.com

Zhao, Y.: Constructing MSTD sets using bidirectional ballot sequences. J. Number Theory 130(5), 1212–1220 (2010). https://doi.org/10.1016/j.jnt.2009.11.005

Zhao, Y.: Sets characterized by missing sums and differences. J. Number Theory 131(11), 2107–2134 (2011). https://doi.org/10.1016/j.jnt.2011.05.003

Author information

Authors and Affiliations

Corresponding author

Additional information

Editor in Charge: Kenneth Clarkson

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The first named author was partially supported by NSF Grants DMS1265673 and DMS1561945, and the second by DMS1347804. We thank Mel Nathanson, Carsten Sprunger, and Roger Van Peski for helpful conversations, and the referee for a careful reading of the manuscript.

Rights and permissions

About this article

Cite this article

Miller, S.J., Peterson, C. A Geometric Perspective on the MSTD Question. Discrete Comput Geom 62, 832–855 (2019). https://doi.org/10.1007/s00454-019-00109-7

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00454-019-00109-7