Abstract

Despite significant progress in the theory of evolutionary algorithms, the theoretical understanding of evolutionary algorithms which use non-trivial populations remains challenging and only few rigorous results exist. Already for the most basic problem, the determination of the asymptotic runtime of the \((\mu +\lambda )\) evolutionary algorithm on the simple OneMax benchmark function, only the special cases \(\mu =1\) and \(\lambda =1\) have been solved. In this work, we analyze this long-standing problem and show the asymptotically tight result that the runtime T, the number of iterations until the optimum is found, satisfies \(E[T] = \Theta \bigg (\frac{n\log n}{\lambda }+\frac{n}{\lambda / \mu } + \frac{n\log ^+\log ^+ (\lambda / \mu )}{\log ^+ (\lambda / \mu )}\bigg )\), where \(\log ^+ x := \max \{1, \log x\}\) for all \(x > 0\). The same methods allow to improve the previous-best \(O(\frac{n \log n}{\lambda } + n \log \lambda )\) runtime guarantee for the \((\lambda +\lambda )\) EA with fair parent selection to a tight \(\Theta (\frac{n \log n}{\lambda } + n)\) runtime result.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Evolutionary algorithms are general-purpose optimization heuristics and have been successfully applied to a broad range of computational problems. While the majority of the research in evolutionary computation is applied and experimental, the last decades have seen a growing number of theoretical analyses of evolutionary algorithms. Due to the difficult nature of the stochastic processes describing the runs of evolutionary algorithms, the vast majority of these works regards very simple algorithms like the \({(1 + 1)}\) EA, which has both a parent population and an offspring population of size one. Such works, while innocent looking in their problem statement, can be surprisingly challenging from the mathematical point of view, see, e.g., the long series of works on how the \({(1 + 1)}\) EA optimizes pseudo-Boolean linear functions which started with the seminal paper [16]. Also, while these example problems are far from the real applications, many of the theoretical works have contributed to the understanding of the working principles of evolutionary algorithms (see, e.g, [11, 14, 26]), have given advice on how to set parameters and take other design choices (see, e.g., [3, 43, 49]), and even have proposed new algorithms (see, e.g, [9, 21]).

Still, it remains dissatisfying that there are only relatively few works on true population-based algorithms as this bears the risk that we do not really understand the role of populations in evolutionary computation. What is clearly true, and the reason for the lack of such works, is that the stochastic processes become much more complicated when non-trivial populations come into play.

To make some progress towards a better understanding of population-based algorithms, we regard the most simple population-based problem, namely how the elitist \({(\mu + \lambda )}\) EA optimizes the OneMax benchmark problem (see Sect. 2.2 for the details of this problem). With the corresponding problem for the \({(1 + \lambda )}\) EA mostly solved in 2005 [33] (see [18, Section 8] for a more complete picture) and the problem for the \({(\mu + 1)}\) EA solved in 2006 [47], it is fair to call this a long-standing open problem. In the conclusion of his paper [47], Witt writes “the most interesting direction seems to be an extension to \((\mu +\lambda )\) strategies by a combination with the existing theory on the \((1+\lambda )\) EA.”

1.1 Our Results

We give a complete answer to this question and prove that for arbitrary values of \(\mu \) and \(\lambda \) (which can be functions of the problem size n, however, for the lower bound we assume that \(\mu \) is at most polynomial in n), the expected number of iterations the \({(\mu + \lambda )}\) EA takes to find the optimum of the OneMax function is

where \(\log ^+ x := \max \{1, \log x\}\) for all \(x > 0\). This result subsumes the previous results for the \({(1 + \lambda )}\) EA and \({(\mu + 1)}\) EA obtained in [18, 33, 47].

This runtime guarantee shows, e.g., that using a true parent population of size at most \(\max \{\log n, \lambda \}\) does not reduce the asymptotic runtime compared to \(\mu =1\). Such information can be useful since it is known that larger parent population sizes can increase the robustness to noise, see, e.g., [28].

With our methods, we can also analyze a related algorithm. He and Yao [32] and Chen, He, Sun, Chen, and Yao [7] analyzed a version of the \({(\mu + \lambda )}\) EA in which \(\mu = \lambda \) and each parent produces exactly one offspring. We shall call this algorithm the \((\lambda \overset{_{1:1}}{+} \lambda )\) EA for brevity. We prove a tight runtime bound of \(\Theta (\frac{n \log n}{\lambda } + n)\) iterations, which also shows that the fairness in the parent selection does not change the asymptotic runtime in this problem.

To prove our bounds, we in particular build on Witt’s [46,47,48] family tree argument. The main idea of this argument is to consider a tree graph the vertices of which are the individuals created during the evolution process and each path from the root to a vertex corresponds to the series of mutations which led to the creation of this vertex. Selection does not play any role in this structure, so when working with family trees we usually assume that all individuals with a corresponding vertex in the tree can potentially be present in the current population. Different from Witt’s approach (and different from all other works using his method that we are aware of), we work in a complete tree that contains all possible family trees and in this structure argue about which individuals really exist and whether they are an optimal solution. It appears to us that this approach is technically easier than the previous approaches, which first argue that with high probability the true family tree has a certain structure (e.g., a small height) and then, conditioning on this, argue that within such a restricted structure an optimal solution is hard to reach. We also believe that our approach facilitates the uniform analysis of trees having different structures (as, e.g., in our work, in which different relative sizes of \(\mu \) and \(\lambda \) can lead to very different characteristics of the tree).

Using this argument that does not regard the selection process we have obtained the lower bound that holds not only for the OneMax function, but for any function with a unique optimum in the same way as it was done for the \({(\mu + 1)}\) EA in [47]. Our arguments let us extend the lower bounds to the functions with multiple optima (however, the number of the optima in these functions must be restricted). In the case of \({(\mu + 1)}\) EA this extension holds for a broader class of functions than the similar extension in [47] which works only for the functions with a unique optimum.

1.2 Previous Works

The field of mathematical runtime analysis of evolutionary algorithms aims at increasing our understanding via proven results on the performance of evolutionary algorithms. Due to the difficulty of mathematical understanding of complicated population dynamics, the large majority of works in this field considers algorithms with trivial populations. These algorithms may seem trivial, however already allow deep results like the proof of the \(O(n \log n)\) expected runtime of the \((1+1)\) EA on all linear pseudo-Boolean functions [12, 16]. They give surprising insights like the fact that monotonic functions can be difficult for simple EAs [6, 15, 37], and have spurred the development of many useful analysis methods [17, 31].

Despite the mathematical challenges, some results exist on algorithms using non-trivial populations. While such results are quite rare, due the growth of the field in the last 20 years they are still too numerous to be described here exhaustively. Therefore, we describe in the following those results which regard our research problem or special cases of it as well as a few related results.

The two obvious special cases of our problem are runtime analysis of the \({(1 + \lambda )}\) EA and the \({(\mu + 1)}\) EA on OneMax. In [18], the runtime of the \({(1 + \lambda )}\) EA on the class of linear functions is analyzed, which contains the OneMax function. A tight bound of \(\Theta (\frac{n\log n}{\lambda } + \frac{n\log ^+\log ^+\lambda }{\log ^+\lambda })\) is proven for the expected runtime (number of iterations until the optimum is found) of the \({(1 + \lambda )}\) EA maximizing the OneMax function. This extends the earlier result [33], which shows this bound for \(\lambda = O(\frac{\log (n) \log \log (n)}{\log \log \log (n)})\), note that in this case the bound simplifies to \(\Theta (\frac{n \log (n)}{\lambda })\), and which shows further that for asymptotically larger values of \(\lambda \), the expected runtime is \(\omega (\frac{n \log (n)}{\lambda })\).

Witt [47] studied the \((\mu +1)\) EA on the three pseudo-Boolean functions LeadingOnes, OneMax and SPC. For the OneMax problem, under the mild assumption that \(\mu \) is polynomially bounded in n, he proved that the expected runtime of the \((\mu + 1)\) EA is \(\Theta (\mu n + n\log n).\)

For algorithms with non-trivial parent and offspring population sizes, the following is known. The only work regarding the classic \({(\mu + \lambda )}\) EA for general \(\mu \) and \(\lambda \) is [40]. Using the recent switch analysis technique [50] and assuming that \(\mu \) and \(\lambda \) are polynomially bounded in n, it was shown that the \({(\mu + \lambda )}\) EA needs an expected number of

iterations to find the optimum of any function \(f : \{0,1\}^n \rightarrow {\mathbb {R}}\) with unique optimum. This bound is of smaller asymptotic order (and thus weaker) than ours when \(\mu = \omega (\log n)\) and \(\frac{\lambda }{\mu } < e^e\) or when \(\log \frac{\lambda }{\mu }=\omega (\log n)\), see the discussion at the end of Sect. 4.

For the \((\lambda \overset{_{1:1}}{+} \lambda )\) EA the first result [32, Theorem 4] considers the runtime on the OneMax in a special case when \(\lambda = n\). It shows an upper bound of O(n) iterations,which is tight as shown by our lower bound.

For the \((\lambda \overset{_{1:1}}{+} \lambda )\) EA with general \(\lambda \), Chen et al. [7, Proposition 4] show an optimization time of \(O(\frac{n \log n}{\lambda } + n \log \lambda )\) iterations. They conjecture a runtime of \(O(\frac{n \log n}{\lambda } + n \log \log n)\) [7, Conjecture 3], which is asymptotically at least as good and which is stronger for \(\lambda =\omega (\log n)\). Our result improves over this bound and the conjecture for \(\lambda =\omega (\frac{\log n}{\log \log n})\) as discussed in Sect. 6.

We also find notable the result of Corus et al. [5] (see [2] for recent small improvements) where they proved the upper bound for the \({(\mu , \lambda )}\) EA on the OneMax of \(O(n\lambda )\) fitness evaluations when \(\lambda > \mu e\) and \(\lambda = \Omega (\log (n))\). This runtime can be seen as the upper bound for the \({(\mu + \lambda )}\) EA, since the population of the \({(\mu + \lambda )}\) EA is always better than the population of \({(\mu , \lambda )}\) EA after the same number of iterations in the dominating sense. In Sect. 3.4 we prove that in the parameters setting regarded in [5] our upper bound is asymptotically smaller.

1.3 Organization of the Work

The remainder of the paper is organized as follows. Section 2 gives a formal description of the \({(\mu + \lambda )}\) EA and introduces the notation that we use in the paper. In Sect. 3 we prove an upper bound of \(O(\frac{n\log n}{\lambda }+\frac{n\mu }{\lambda }+n)\) for the general case and a tighter bound of \(O(\frac{n\log \log \frac{\lambda }{\mu }}{\log \frac{\lambda }{\mu }} + \frac{n\log n}{\lambda } )\) for the case of \(\frac{\lambda }{\mu } > e^e\), when the algorithm is able to gain more than one fitness level during the major part of the optimization process. Section 4 introduces the notion of complete trees and proves a lower bound matching our upper bounds. In Sect. 5 we extend our lower bounds to a much broader class of functions than just OneMax. In Sect. 6 we provide an analysis of the \((\lambda \overset{_{1:1}}{+} \lambda )\) EA, for which our results cannot be applied directly. The paper ends with a short conclusion and ideas for future work in Sect. 7.

2 Preliminaries

2.1 Notation

In this subsection we shortly overview the notation used in this paper in order to avoid misunderstanding raised by the plenty of other notations used in the mathematical world nowadays. By \({\mathbb {N}}\) we denote the set of positive integer numbers and by \({\mathbb {N}}_0\) we denote the set of non-negative integer numbers. By [a..b] with \(a, b \in {\mathbb {N}}\) we denote an integer interval which includes its borders. By [a, b] and (a, b) with \(a, b \in {\mathbb {R}}\cup \{-\infty , +\infty \}\) we denote a real-valued interval including and excluding its borders respectively. We denote the binomial distribution with parameters n and p by \({{\,\mathrm{Bin}\,}}(n, p)\). If some random variable X follows some distribution D, we write \(X \sim D\). We use a multiplicative notation of the binomial coefficient, that is

This implies that the binomial coefficients are also defined for \(n < k\) and in this case \(\left( {\begin{array}{c}n\\ k\end{array}}\right) = 0\).

2.2 Problem Statement

In this section, we provide the definitions necessary to formalize the problem we analyze in this work. Our study focuses on evolutionary algorithms that aim at optimizing pseudo-Boolean functions, that is, functions of the form \(f:\{0, 1\}^n\rightarrow {\mathbb {R}}\).

The \({(\mu + \lambda )}\) EA formulated as Algorithm 1 is a simple mutation-based elitist evolutionary algorithm. In each iteration of the algorithm, we independently generate \(\lambda \) offspring each by selecting an individual from the parent population uniformly at random and mutating it. We use standard-bit mutation with the standard mutation rate \(p = \frac{1}{n}\), that is, we flip each bit independently with probability \(\frac{1}{n}\). We note without proof that our results hold as well for any other mutation rate \(p = c/n\), where c is a constant.

As objective function f, also called fitness function, we consider the classic OneMax function, which was the starting point for many theoretical investigations in this field. This function \(\textsc {OneMax}: \{0,1\}^n \rightarrow {\mathbb {R}}\) is defined by \(\textsc {OneMax} (x) = \sum _{i = 1}^n x_i\) for all \(x \in \{0,1\}^n\). In other words, OneMax returns the number of one-bits in its argument. Without proof we note that due to the unbiasedness of the operators used by all considered algorithms all our results also hold for the so-called generalized OneMax function, denoted by \(\textsc {OneMax} _z\). This function has some hidden bit-string z and returns the number of coinciding bits in its argument and z. In other words,

where H(x, z) stands for the Hamming distance.

2.3 Useful Tools

A central argument in our analysis is the following Markov chain argument similar to the classic fitness levels technique of Wegener [45].

Theorem 1

Let the space S of all possible populations of some population-based algorithm be divided into m disjoint sets \(A_1, \dots , A_m\) that are called levels. We write \(A_{\ge i} = \bigcup _{j = i}^{m} A_j\) for all \(i \in [1..m]\).

Let \(P_t\) be the population of the algorithm after iteration t. Assume that for all \(t \ge 0\) and \({i \in [2..m]}\), we have that \(P_t \in A_{i}\) implies \(\Pr [P_{t + 1} \in A_{\ge i}] = 1\). Let T be the minimum number t such that \(P_t \in A_m\).

-

1.

Assume that there are \(T_1, \dots , T_{m-1} \ge 0\) such that for all \(t \ge 0\) and \({i \in [1..m-1]}\) we have that if \(P_t \in A_i\), then \(E[\min \{s \mid s \in {\mathbb {N}}, P_{t + s} \in A_{\ge i+1}\}] \le T_i\) (for all possible \(P_0, \dots , P_{t - 1}\)). Then

$$\begin{aligned} E[T] \le \sum _{i=1}^{m-1} T_i. \end{aligned}$$ -

2.

Assume that there are \(p_1, p_2, \dots , p_{m-1}\) such that for all \(t \ge 0\) and \({i \in [1..m-1]}\) we have that if \(P_t \in A_i\), then \(\Pr [P_{t + 1} \in A_{\ge i+1}] \ge p_i\) (for all possible \(P_0, \dots , P_{t - 1}\)). Then

$$\begin{aligned} E[T] \le \sum _{i = 1}^{m - 1} \frac{1}{p_i}. \end{aligned}$$

The proof is standard, but for the reason of completeness we quickly state it.

Proof

We start by proving the first claim. Consider a run of the algorithm. Let \(t_i = \min \{t \mid P_t \in A_{\ge i}\}\). Let \(i \in [1..m-1]\). We analyze the random variable \(t_{i+1} - t_i\). If there is no t with \(P_t \in A_i\), then \(t_i = t_{i+1}\) simply by the definition of the \(t_i\). Otherwise, by our assumptions, we have \(E[t_{i+1}-t_i] \le T_i\). Note that this applies trivially also to the first case where we just saw that \(t_{i+1} - t_i = 0\). Hence, from \(T = t_m = \sum _{i = 1}^{m-1} (t_{i+1} - t_i)\) we conclude

To prove the second claim, we note that by our assumptions \(t_{i+1} - t_i\) is stochastically dominated by a geometric distribution with success rate \(p_i\). Hence \(E[t_{i+1} - t_i] \le \frac{1}{p_i}\), and the claim follows as above. \(\square \)

To ease the presentation, we use the following language. We say that the algorithm is on level i if the current population is in the level \(A_i\). We also say that the algorithm gains a level or the algorithm leaves the current level if the new population is at the higher level than the previous one.

In our proofs we shall use the following result for random variables with binomial distribution from [29]. An elementary proof for it was given in [22].

Lemma 1

Let \(X \sim {{\,\mathrm{Bin}\,}}(n, p)\) such that \(p > 1/n\). Then \(\Pr (X \ge E[X]) > 1/4\).

We also use frequently the following inequality in our proofs, so we formulate it as a separate lemma.

Lemma 2

For any \(x \in (0, 1]\) and any \(n > 0\) we have

As was pointed out by one of the reviewers, this lemma is a special case of Lemma 31 in [20], which states that for all \(n \in {\mathbb {N}}\) and \(x \ge 0\) we have \(1 - (1 - x)^n \ge 1 - e^{-xn} \ge \frac{xn}{1 + xn}\), except we do not have the constraint that n is an integer. Although the proof of [20, Lemma 31] is true for the case \(x \in (0, 1]\), we find it wrong for, e.g., \(x = 3\) and \(n = 2\), when the leftmost part of the inequality is negative, and others are positive. For this reason we show a simple proof here.

Proof

By [43, Lemma 8] we have \((1 - x)^n \le \frac{1}{1 + xn}\). Therefore, following the arguments that were used in [43, Theorem 9] we conclude

\(\square \)

3 Upper Bounds

In this section, we prove separately two upper bounds for the runtime of the \({(\mu + \lambda )}\) EA on the OneMax problem, the first one being valid for all values of \(\mu \) and \(\lambda \) and the second one giving an improvement for the case that \(\lambda \) is large compared to \(\mu \), more precisely, that \(\lambda /\mu \ge e^e\).

Where not specified differently, we denote the current best fitness in the population by i and the number of best individuals in the population by j.

3.1 Increase of the Number of the Best Individuals

In this subsection we analyze how the number of individuals on the current-best fitness level increases over time and derive from this two estimates for the time taken for a fitness improvement. We note that often it is much easier to generate an additional individual with current-best fitness by copying an existing one than to generate an individual having strictly better fitness by flipping the right bits. Consequently, in a typical run of the \({(\mu + \lambda )}\) EA, first the number of best individuals will increase to a certain number and only then it becomes likely that a strict improvement happens.

Since the increase of the number of individuals on the current-best fitness level via producing copies of such best individuals is independent of the fitness function, we formulate our results for the optimization of an arbitrary pseudo-Boolean function and hope that they might find applications in other runtime analyses as well. So let \(f : \{0,1\}^n \rightarrow {\mathbb {R}}\) be an arbitrary fitness function which we optimize using the \({(\mu + \lambda )}\) EA.

Assume that the \({(\mu + \lambda )}\) EA starts in an arbitrary state where the best individuals have fitness i and there are \(j_1\) such individuals in the population. At this point due to the elitism the algorithm cannot decrease the best fitness i and it also cannot decrease the number of the best individuals \(j_1\) until it increases the best fitness. Following [44, Lemma 2] we call an individual fit if it has a fitness i or better. For \({j_2 \in {\mathbb {N}}}\), we define \(\tau _{j_1,j_2}(i)\) to be the first time (number of iterations) at which the population of the \({(\mu + \lambda )}\) EA contains at least \(j_2\) fit individuals. We note that this random variable \(\tau _{j_1,j_2}(i)\) may depend on the particular initial state of the \({(\mu + \lambda )}\) EA, but since our results are independent of this initial state (apart from i and \(j_1\)) we suppress in our notation the initial state.

The time \(\tau _{1,\mu }(i)\), that is, the specific case that \(j_1 = 1\) and \(j_2 = \mu \), is also called the takeover time of a new best individual. For this takeover time, Sudholt [44, Lemma 2] proved the upper bound

for any \(i \in [0..n-1]\).

In this section we improve this result by (i) treating the general case of arbitrary \(j_1, j_2 \in [1..\mu ]\) and (ii) by showing an asymptotically smaller bound for the case \(\lambda = \omega (\mu )\). In our main analysis of the \({(\mu + \lambda )}\) EA, we need takeover times for general values of \(j_2\) to profit from the event when we get a fitness gain before the population contains only best individuals, which is likely to happen on the lower fitness levels as we show further. The extension to general values of \(j_1\) is not needed, but since it does not take extra effort, we do it on the way.

We first prove the following result for arbitrary values of \(\mu \) and \(\lambda \). We need this result since it allows arbitrary target numbers \(j_2\).

Lemma 3

Let \(i \in [0..n-1]\) and \(j_1, j_2 \in [1..\mu ]\) with \(j_1 < j_2\). Then

Proof

To prove this lemma we use Theorem 1. For this purpose we define levels \(A_{j_1}, \dots , A_{j_2}\). For any \(j \in [j_1..j_2 - 1]\) the populations in level \(A_j\) have exactly j fit individuals. The level \(A_{j_2}\) consists of all populations with at least \(j_2\) fit individuals. Note that the \({(\mu + \lambda )}\) EA cannot go from level \(A_j\) to any other level with smaller index, since it cannot decrease the number of the fit individuals due to the elitist selection.

If there are j fit individuals in the population, then the probability \(p_1(j)\) to create as one offspring a copy of a fit individual is the probability to select one of j fit individuals as a parent multiplied by the probability not to flip any bit of it during the mutation. Hence,

where we used the inequality \((1 - \frac{1}{n})^n \ge \frac{1}{2e}\) that holds for all \(n \ge 2\).

The probability \(p_2(j)\) to leave level \(A_j\) in one iteration is at least the probability to create a copy of a fit individual as one of the \(\lambda \) offspring. Hence, by Lemma 2 we have

By Theorem 1 we have

\(\square \)

We note that in case when \(j_1 = 1\) and \(j_2 = \mu \) our upper bound is \(O(\frac{\mu \log \mu }{\lambda } + \mu )\). This is weaker than the upper bound (1) given in [44, Lemma 2] if \(\lambda = \omega (\log \mu )\). Without proof we note that in all other cases the two bounds are asymptotically equal.

The reason that our bound is weaker in some cases is that we do not consider the event that the algorithm generates more than one fit offspring in one iteration, while Sudholt in [44, Lemma 2] proved that the number of the fit offspring is multiplied by some constant factor in every \(32\mu /\lambda \) iterations. The same idea may be used to prove the bound

We still prefer to use to Lemma 3 in our proofs, since it gives us a bound that is easier to operate with due to the simpler leading constants of each term, while the greater terms do not affect our main results.

We now give a second bound for the case that \(\frac{\lambda }{\mu }\ge e^e\). It is asymptotically stronger than (1) when \(\lambda = \omega (\mu )\) and \(\mu = \omega (1)\).

Lemma 4

Let \(\frac{\lambda }{\mu } \ge e^e\). Let \(i \in [1..n-1]\) and \(j_1, j_2 \in [1..\mu ]\) with \(j_1 < j_2\). Then

Proof

Let the current population have j fit individuals. Then by (2) the probability that a fixed offspring is a copy of a fit individual is \(p_1(j) \ge \frac{j}{2e\mu }\). Therefore, the number N of fit individuals among the \(\lambda \) offspring dominates stochastically a random variable B with binomial distribution \({{\,\mathrm{Bin}\,}}\left( \lambda ,\frac{j}{2e\mu }\right) \). We have \(E[B] = \frac{\lambda j}{2e\mu }\). By Lemma 1, \(\Pr [B \ge E[B]] \ge \frac{1}{4}\) and thus \(\Pr [N \ge \frac{j}{2e\mu }] \ge \frac{1}{4}\). Consequently, in each iteration with probability at least \(\frac{1}{4}\) the number of the fit individuals in the population is multiplied by a factor of at least \((1 + \frac{\lambda }{2e\mu })\) (but obviously it cannot become greater than \(\mu \)).

For a formal proof we define the levels \(A_1, \dots , A_m\), where

Level \(A_m\) consists of the populations with at least \(j_2\) fit individuals. For \(k \in [1..m-1]\) the populations of level \(A_k\) have exactly j fit individuals, where

and \(j < j_2\). To leave any level it is enough to multiply the number of the best individuals by \(1 + \frac{\lambda }{2e\mu }\), and the probability of this event is at least \(\frac{1}{4}\). By Theorem 1 we have

\(\square \)

We note that the proof of Lemma 4 holds for the weaker assumption \(\frac{\lambda }{\mu }> 2e\) as well. However in order not to confuse the reader in Sect. 3.3 where we consider the case \(\frac{\lambda }{\mu }> e^e\) and where this lemma is used, we formulate Lemma 4 with unnecessarily stronger condition.

When \(j_2 = \mu \) and \(j_1 = 1\) the bound yielded by Lemma 4 is at least as tight as that of (1). For the general values of \(j_1\) and \(j_2\) our bound is at least as tight as the bound (4). When \(\lambda / \mu \ge e^e\) the bound (4) simplifies to \(O(\log \frac{j_2}{j_1})\). If \(\lambda = \omega (\mu )\) and \(\frac{j_2}{j_1} = \omega (1)\) then we have

Therefore, in this case the bound given in Lemma 4 is asymptotically smaller than (4). In all other cases the two bounds are asymptotically equal.

The reason that we have obtained a tighter bound is that we have proven that the number of the fit individuals is multiplied by a more than constant factor with constant probability, while the proof of [44, Lemma 2] considers only the multiplication by a constant factor.

We now use Lemmas 3 and 4 to prove estimates for the time it takes to obtain a strictly better individual once the population contains at least one individual of fitness i. We define \(\tilde{T}_i\) as the number of iterations before the algorithm finds an individual with fitness greater than i, if it already has an individual with fitness i in the population. As before, this random variable depends on the precise initial state, but since our results do not rely on the initial state, we suppress it in this notation.

To prove upper bounds on \({{\tilde{T}}}_i\), we estimate the time it takes until some number \(\mu _0(i) \in [1..\mu ]\) of individuals with fitness at least i are in the population and then estimate the time to find an improving solution from this situation. We phrase our results here in terms of \(\mu _0(i)\) and optimize the value of \(\mu _0(i)\) in the later subsections.

Corollary 1

For any \(i \in [0..n-1]\) and \(\mu _0(i)\in [1..\mu ]\), we have

Proof

Even if the algorithm has only one best individual in the population, in \(\tau _{1,\mu _0(i)}(i)\) iterations it will have at least \(\mu _0(i)\) individuals with fitness at least i. Assume that at this time we have no individuals with fitness better than i (since otherwise we are done). Let \(\tau ^+(i)\) be the runtime until the algorithm creates an individual with fitness at least \(i + 1\) if it already has at least \(\mu _0(i)\) individuals with fitness i in the population.

In this setting the probability \(p'(i)\) that a particular offspring has fitness better than i is at least the probability to choose one of the \(\mu _0(i)\) best individuals and to flip only one of \(n - i\) zero-bits in it. We estimate

By Lemma 2 the probability \(p''(i)\) to create at least one superior individual among the \(\lambda \) offspring is

With \(p''(i)\) we estimate \(E[\tau ^+(i)] \le \frac{1}{p''(i)}\). Therefore, by Lemma 3 we have

\(\square \)

Corollary 2

If \(\frac{\lambda }{\mu } > e^e\) then for any \(i \in [0..n-1]\) and \(\mu _0(i)\in [1..\mu ]\), we have

Proof

Using the same arguments as in the proof of Corollary 1 [in particular, the estimate for \(p''(i)\) given in (5)] and by Lemma 4 we estimate

\(\square \)

We note that Lemma 4 is tight in the sense that we cannot obtain a better upper bound using only the argument of copying the fit individuals.

To formalize this we assume that there is a set \(D \subseteq \{0,1\}^n\) of desired individuals. We regard the variant \(\hbox {EA}^0\) of the \({(\mu + \lambda )}\) EA which only accepts offspring which are desired individuals identical to their parent. Note that the number of desired individuals in a run of this artificial algorithm can never decrease. Assuming that the initial population of the \(\hbox {EA}^0\) contains exactly \(j_1\) desired individuals, we define \(\tau _{j_1, j_2}^*(i)\) as the number of iterations until the population of the \(\hbox {EA}^0\) contains at least \(j_2\) desired individuals (unlike before, this notation does not depend on the precise initial population as long as it has exactly \(j_1\) desired individuals, but this fact is not important in the following). We show the following result.

Lemma 5

Let \(\frac{\lambda }{\mu }\ge e^e\). Let \(j_1, j_2\) be some integer numbers in \([1..\mu ]\) such that \(j_2 > j_1\). Then

Proof

If \(\frac{j_2}{j_1} \le \frac{\lambda }{\mu }\), then

and the claim is trivial, since we need at least one iteration to increase the number of the copies in the population.

Consider \(\frac{j_2}{j_1} \ge \frac{\lambda }{\mu } \ge e^e\). Let j(t) be the number of the desired individuals after iteration t. We have \(j(0) = j_1\). Let N(t) be the number of desired individuals newly created in iteration t. Then N(t) follows a binomial law \({{\,\mathrm{Bin}\,}}(\lambda , \frac{j(t - 1)}{e_n\mu })\), where \(e_n :=(1 - \frac{1}{n})^{-n} \ge e\). Hence, we have \(E[N(t) \mid j(t - 1)] = \frac{j(t - 1)\lambda }{e_n\mu } \le \frac{j(t - 1)\lambda }{e\mu }\).

For any \(t \in {\mathbb {N}}\) we have \(j(t) \le j(t - 1) + N(t)\), where strict inequality occurs only if \(j(t - 1) + N(t) > \mu \). Therefore, we have

By induction, we obtain

and by Markov’s inequality, we have

For

we obtain

Hence, the probability that the \(\hbox {EA}^0\) does not obtain \(j_2\) desired individuals in \(t = \Omega (\frac{\log (j_2/j_1)}{\log (\lambda /\mu )})\) iterations is at least constant. Thus, the expected number of iterations before this happens is at least \(\Omega (\frac{\log (j_2/j_1)}{\log (\lambda /\mu )})\). \(\square \)

3.2 Unconditional Upper Bound

Having the results of Sect. 3.1 we first prove the following upper bound, which is valid for all values of \(\mu \) and \(\lambda \). When \(\lambda \) is not significantly larger than \(\mu \), then the \({(\mu + \lambda )}\) EA typically increases the best fitness by at most a constant in each iteration. For this reason, we can use Theorem 1 and obtain a runtime bound that will turn out to be tight for this case.

Theorem 2

The expected number of iterations for the \(\left( \mu +\lambda \right) \) EA to optimize the OneMax problem is

Proof

To use Theorem 1 we define levels \(A_0, \dots , A_n\) such that level \(A_i\), \(i \in [0..n]\), consists of all populations having maximum fitness equal to i. In Corollary 1 we have already estimated the expected times \(E[{{\tilde{T}}}_i]\) the \({(\mu + \lambda )}\) EA takes to leave these levels. These estimates depended on the number \(\mu _0(i)\) of individuals of fitness i we aim at before leaving the level. By choosing suitable values for the \(\mu _0(i)\) we prove our bound.

The choice of \(\mu _0(i)\) is guided by the following trade-off. If we choose \(\mu _0(i) = \mu \), then after having \(\mu \) best individuals in the population we have the highest probability to find a better individual. However, we pay for it with the time we spend on obtaining \(\mu \) copies of the best individual. On the other hand, if we choose \(\mu _0(i) = 1\) we do not spend any iteration filling the population with copies of the best individual, but we have a low chance to increase the current fitness. How this trade-off is optimally resolved, and hence the optimal value of \(\mu _0(i)\), depends on the probability to create a better individual and thus on the current fitness i.

We distinguish three cases depending on current fitness i. The “milestones” which mark the transition between these cases are the fitness values \(i = \lceil n - \frac{n}{2 + \lambda /(e\mu )} \rceil \) and \(i = \lfloor n - \frac{n}{\mu (2 + \lambda /e)} \rfloor \). While the best fitness is below the first milestone, the probability to increase the fitness is so high that we do not need to have more than one best individual in the population. Beyond the second milestone this probability is so low that we better spend the time to fill the population with the copies of the best individual. Between the two milestones we have to find a suitable value of \(\mu _0(i)\) to give a balanced trade-off.

To simplify the notation, we define \(\Delta _i:=1+\sqrt{1+\frac{n\lambda }{e(n - i)\mu }}\). Note that

This value of \(\Delta _i\) arises from the computation of the derivative of the upper bound on \(E[{{\tilde{T}}}_i]\) from Corollary 1, which is needed to find the optimal value of \(\mu _0(i)\) in the second case, when the current fitness is between the two milestones.

For \(i \le \lceil n - \frac{n}{2 + \lambda /(e\mu )} \rceil \) we define \(\mu _0(i) :=1\). By Corollary 1 we have

Let \(T_1\) be the number of iterations before the \({(\mu + \lambda )}\) EA finds an individual with fitness greater than \(\lceil n - \frac{n}{2 + \lambda /(e\mu )} \rceil \) for the first time. Then by Theorem 1 we have

where we used the estimate \(\frac{e\mu }{\lambda }\ln (2 + \frac{\lambda }{ e\mu }) = O(1 + \frac{\mu }{ \lambda })\) that holds for any asymptotic behavior of \(\mu /\lambda \).

For \(\lceil n - \frac{n}{2 + \lambda /(e\mu )} \rceil < i \le \lfloor n - \frac{n}{\mu (2 + \lambda /e)} \rfloor \) we define \(\mu _0(i):=\lceil \frac{n}{(n - i)\Delta _i}\rceil \). By Corollary 1 we have

By (6), we have

Let \(T_2\) be the number of iterations until the \({(\mu + \lambda )}\) EA finds an individual with fitness greater than \(\lfloor n - \frac{n}{\mu (2 + \lambda /e)} \rfloor \) for the first time if it already has an individual with fitness greater than \(\lceil n - \frac{n}{2 + \lambda /(e\mu )} \rceil \) in the population. By Theorem 1 and by (7), we obtain

We regard three sums in (8) separately. First, by the estimate \(\sum _{i = 1}^n 1/\sqrt{i} \le 1 + \int _1^n (1/\sqrt{x}) dx < 2\sqrt{n}\), we obtain

To analyze the second sum we also use the estimate \(\frac{1 + t}{2 + t} < 1\) valid for all \(t \in [0, +\infty )\). We obtain

For the last sum we use the logarithmic version of Stirling’s formula, that is, \(\ln (n!) = n\ln (n) - n + O(\log (n))\) (see, e.g. [41] or [24, Theorem 1.4.10]), and the estimate \(\frac{\ln (2 + t) + 2}{2 + t} \le 2\) for all \(t \in [0, +\infty )\). We obtain

Finally, by putting (9), (10) and (11) into (8) we obtain

For \(n - 1 \ge i > \lfloor n - \frac{n}{\mu (2 + \lambda /e)} \rfloor \) we define \(\mu _0(i) :=\mu .\) Note that this case can only appear when \(\frac{n}{\mu (2 + \lambda /e)} \ge 1\) and thus \(\mu \le \frac{n}{(2 + \lambda /e)} = O(n/\lambda )\). By Corollary 1 the expected waiting time for a fitness gain is at most

Let \(T_3\) be the number of iterations until the \({(\mu + \lambda )}\) EA finds the optimum starting from the moment when it has an individual with fitness greater than \(\lfloor n - \frac{n}{\mu (2 + \lambda /e)} \rfloor \) in the population. Then by Theorem 1 we have

Summing the expected runtimes for all cases, we obtain the upper bound for the expected total runtime.

\(\square \)

3.3 Upper Bound with Large \(\lambda \)

In this section we consider the case when \(\frac{\lambda }{\mu } > e^e\). Due to the large number of offspring the algorithm performs significantly better in this case. The first reason of this speed-up is that the algorithm can now gain several fitness levels in one iteration with high probability when the current-best fitness is small. The second reason is the faster increase of the number of best individuals, see Corollary 2.

These two observations allow us to prove the following upper bound on the runtime.

Theorem 3

If \(\frac{\lambda }{\mu }\ge e^e\) then the expected number of iterations for the \(\left( \mu +\lambda \right) \) EA to optimize the OneMax problem is

Note that the bound given in Theorem 3 is asymptotically the same as the bound given in Theorem 2 when \(\frac{\lambda }{\mu }= \Theta (1)\). The difference between the two bounds becomes asymptotically significant only when \(\frac{\lambda }{\mu }= \omega (1)\). Therefore it does not matter which constant we choose to distinguish the fast regime of the algorithm. The main purpose of the choice of \(e^e\) as a border value is to simplify the proofs and to improve their readability. However without proof we note that all arguments used in this section hold also for the smaller values of \(\frac{\lambda }{\mu }\) which are greater than 2e.

To prove Theorem 3 we split the optimization process into four phases. Each phase corresponds to some range of the best fitness values, and the phase transition occurs at fitness values \(n - \frac{n}{\ln \frac{\lambda }{\mu }}\), \(n -\frac{\mu n}{\lambda }\) and \(n - \frac{n}{\lambda }\). In each phase the \({(\mu + \lambda )}\) EA has a specific behavior, so we analyze each phase separately in the following four lemmas.

During the first phase, while the fitness of the best individual is below \(n~-~\frac{n}{\ln \frac{\lambda }{\mu }}\), regardless of the number of best individuals, with constant probability we generate an offspring increasing the best fitness in the population by at least \(\gamma :=\lfloor \frac{\ln \frac{\lambda }{\mu }}{2\ln \ln \frac{\lambda }{\mu }}\rfloor \). So we need not more than an expected number of \(O(\frac{n}{\gamma })\) iterations to finish the first phase.

Let \(R_1\) be the runtime of the \({(\mu + \lambda )}\) EA until it finds an individual with fitness at least \(n - \frac{n}{\ln \frac{\lambda }{\mu }}\), in other words, the duration of the first phase. We prove the following upper bound on the expected value of \(R_1\).

Lemma 6

(Phase 1) If \(\frac{\lambda }{\mu }\ge e^e\), then we have

Proof

To use Theorem 1, we split the space of populations S into levels \(A_1, \dots A_m\), where

If \(k < m\), then the populations of level \(A_k\) have the fitness of the best individual in \([(k - 1)\gamma ..k\gamma - 1]\) (but less than \(n - \frac{n}{\ln \frac{\lambda }{\mu }}\)). The level \(A_m\) consists of all populations containing an individual of fitness at least \(n - \frac{n}{\ln \frac{\lambda }{\mu }}\).

To show that we have a constant probability to leave any level, we consider the probability that a particular offspring has a fitness exceeding the current best fitness i by at least \(\gamma \). This is at least the probability to choose one of the best individuals and to flip exactly \(\gamma \) zero-bits in it and not to flip the other \(n - \gamma \) bits, namely

The probability to increase the best fitness by at least \(\gamma \) with one of \(\lambda \) offspring is at least \(1 - (1 - p_\gamma (i))^\lambda \). Thus, by Lemma 2, the expected number of iterations for this to happen is not larger than

Since \(\frac{\lambda }{\mu } \ge e^e\), we have \(\gamma = \lfloor \frac{\ln \frac{\lambda }{\mu }}{2\ln \ln \frac{\lambda }{\mu }}\rfloor \ge \lfloor \frac{e}{2} \rfloor = 1\). Using this and the estimate \(\frac{n}{n - i} \le \ln \frac{\lambda }{\mu }\) valid during this phase, we compute

Therefore, the expected time to increase the fitness by \(\gamma \) (and thus to leave level \(A_k\) for any \(k < m\)) is at most \(1 + e\). Summing over the levels \(A_1, \dots , A_{m - 1}\) , by Theorem 1 we have

\(\square \)

Having found an individual with fitness at least \(n - \frac{n}{\ln \frac{\lambda }{\mu }}\), we enter the second phase. Due to the elitist selection, the minimum fitness in the population does not decrease, so there is no risk of a fall-back into the first phase.

In the second phase, due to the smaller distance from the optimum, fitness gains by more than a constant are too rare to be exploited profitably. However, even when we only have one best individual in the population, the probability to create at least one better individual in one iteration will still be constant. Consequently, we do not need the arguments of Sect. 3.1 analyzing how the the number of best individuals grows. This phase ends when the best fitness in the population is \(n - \frac{\mu n}{\lambda }\) or more.

Let \(R_2\) be the runtime of the \({(\mu + \lambda )}\) EA until it finds an individual with fitness at least \(n - \frac{\mu n}{\lambda }\) starting from the moment when it has an individual with fitness at least \(n - \frac{n}{\ln \frac{\lambda }{\mu }}\) in the population. In other words, \(R_2\) is the duration of the second phase.

Lemma 7

(Phase 2) If \(\frac{\lambda }{\mu }\ge e^e\), then we have

Proof

For

the level \(B_i\) is defined as the set of all populations in which the best individuals have fitness i. For \(i = \lceil n - \frac{\mu n}{\lambda } \rceil \) let the level \(B_i\) consist of all populations with best fitness at least i.

By Corollary 2 and defining \(\mu _0(i) :=1\) for all i, we have

where the last estimate follows from \(i \le n - \frac{\mu n}{\lambda }\). Therefore, by Theorem 1

\(\square \)

After completion of the second phase, generating a strictly better individual is so difficult that it pays off (in the analysis) to wait for more than one best individual in the population. More precisely, depending on the current best fitness i we define a number \(\mu _0(i)\) and compute the time to reach \(\mu _0(i)\) best individuals and argue that the expected time to generate a strict improvement when at least \(\mu _0(i)\) best individuals are in the population is only constant. Since, as discussed in Sect. 3.1, specifically in Lemma 4, the number of the best individuals in the population roughly increases by a factor \((1 + \frac{\lambda }{2e\mu })\) in each iteration, the algorithm obtains \(\mu _0(i)\) individuals reasonably fast.

Let \(R_3\) be the runtime of the \({(\mu + \lambda )}\) EA until it finds an individual with fitness at least \(n - \frac{n}{\lambda }\), the end of the third phase, starting from the moment when it has an individual with fitness at least \(n - \frac{\mu n}{\lambda }\) in the population.

Lemma 8

(Phase 3) If \(\frac{\lambda }{\mu }\ge e^e\), then we have

Proof

During this phase the best fitness i in the population satisfies

which implies

For these values of i we define \(\mu _0(i) :=\lceil \frac{n\mu }{(n - i)\lambda }\rceil \). Note that \(\mu _0(i) \in [1..\mu ]\).

For

level \(C_i\) is defined as a set of all populations in which the best individuals have fitness i. For \(i = \lceil n - \frac{n}{\lambda } \rceil \) let the level \(C_i\) consist of all populations with best fitness at least i.

By Corollary 2 and by the definition of \(\mu _0(i)\) we have

By Theorem 1 we obtain

We estimate \(\sum \limits _{i = \lceil n - n\mu /\lambda \rceil }^{\lceil n - n/\lambda \rceil - 1} \ln \frac{n\mu }{(n - i)\lambda }\) using Stirling’s formula as in (11). We also notice that this phase occurs only when \(\frac{n\mu }{\lambda } > 1\), thus we have \((\ln \frac{n\mu }{\lambda } - \ln \lfloor \frac{n\mu }{\lambda }\rfloor ) \le 1\). Hence, we obtain.

Therefore,

\(\square \)

When the algorithm is closer to the optimum than in the third phase, then we cannot expect to have a constant probability for a strict fitness improvement even when the whole population consists of individuals of best fitness. In this forth and last phase, we thus always wait (in the analysis) until the population only contains best individuals and then estimate the expected time for an improvement. We denote by \(R_4\) the runtime until the algorithm finds the optimum if it already has an individual with fitness at least \(n - \frac{n}{\lambda }\) in the population.

Lemma 9

(Phase 4) If \(\frac{\lambda }{\mu }\ge e^e\) then

Proof

For

we define level \(D_i\) as a set of all populations in which the best individuals have fitness i. We also define \(\mu _0(i) = \mu \) for these values of i.

By Corollary 2 we have

Therefore, by Theorem 1, we obtain

\(\square \)

Finally, we prove Theorem 3.

Proof (Theorem 3)

Since we consider an elitist algorithm that cannot reduce the best fitness, by linearity of expectation and Lemmas 6 to 9 we have

\(\square \)

3.4 Comparison With Other Upper Bounds

We first note that our upper bound

for the runtime of the \({(\mu + \lambda )}\) EA on OneMax subsumes the known bounds

for the \({(\mu + 1)}\) EA [47] and

for the \({(1 + \lambda )}\) EA [18].

We are not aware of any previous result for the \({(\mu + \lambda )}\) EA for general values of \(\mu \) and \(\lambda \). We believe that a domination argument allows to transfer the results of Corus et al. [5] for the \({(\mu , \lambda )}\) EA to the \({(\mu + \lambda )}\) EA. Since we prove in this work a bound that is at least as strong and stronger in some cases, we sketch this argument now, but do not give formal proofs.

We recall that the \({(\mu , \lambda )}\) EA differs from the \({(\mu + \lambda )}\) EA only in the selection mechanism, which disallows the \({(\mu , \lambda )}\) EA to select any parent individual into the next population. This imposes a constraint on the parameters requiring \(\lambda \) to be at least \(\mu \). For the case that \(\lambda > (1+\delta ) e\mu \), \(\delta > 0\) a constant, and \(\lambda \ge c\ln (n)\) with sufficiently large constant c, Corus et al. [5, Theorem 3] proved that the \({(\mu , \lambda )}\) EA within an expected number of O(n) iterations finds the optimum of OneMax.

Since the \({(\mu + \lambda )}\) EA uses elitist selection, we conjecture that the fitness values of its population always stochastically dominate those of the population of the \({(\mu , \lambda )}\) EA. More precisely, for a run of the \({(\mu + \lambda )}\) EA let us for \(i \in [1..\mu ]\) and \(t \in {\mathbb {N}}\) denote by \(f_{it}\) the fitness of the i-th individual in the parent population after iteration t, where we assume that the individuals are sorted by decreasing fitness. Let us denote by \(f'_{it}\) the same for the \({(\mu , \lambda )}\) EA. Then for all i and t, the random variable \(f_{it}\) stochastically dominates \(f'_{it}\). Presumably, this can be shown via coupling in a similar fashion as in the proof of Theorem 23 in [23]. Thus the upper bound given by Corus et al. is also valid for the \({(\mu + \lambda )}\) EA. For the case \(\lambda > (1+\delta )e\mu \) and \(\lambda = \Omega (\log n)\) regarded by Corus et al., our bound becomes

which is of an asymptotically slightly smaller order than that of [5] when \(\lambda = \omega (\mu + \log (n))\).

4 Lower Bounds

In this section, we show the lower bounds corresponding to the upper bounds we proved in the previous section. They in particular imply the lower bounds for the \({(\mu + 1)}\) EA given in [47] and the \({(1 + \lambda )}\) EA given in [18]. Hence our proof method is a unified approach to both these algorithms as well. The arguments we use do not consider selection phase at all, thus they hold also for all functions with a unique optimum and for other selection mechanisms, including the \({(\mu , \lambda )}\) EA.

The main problem when proving lower bounds for population-based algorithms is that many individuals which are created during the run of the EA are removed at some stage by selection operations. This creates a complicated population dynamics, which is very hard to follow via mathematical means.

One way to overcome this difficulty is to try to disregard the effect of selection and instead regard an optimistic version of the evolutionary process in which no individuals are removed. This idea can be traced back to [42]. In the context of evolutionary computation, it has been first used in [46] (see [48] for an extended version) in the analysis of a steady-state genetic algorithm with fitness-proportionate selection. In [34], this argument was used in the analysis of a \((\mu +1)\) evolution strategy (in continuous search spaces). Not surprisingly, the analysis of the \({(\mu + 1)}\) EA [47] uses the artificial populations argument as well.

This technique then found applications in the analysis of memetic algorithms [44], aging-mechanisms [35], and non-elitist algorithms [36, 39]. The artificial population argument was also used to overcome the difficulties imposed by another removal mechanism, namely Pareto domination in evolutionary multi-objective optimization [19]. While similar in spirit, this work however uses quite different techniques, e.g., it does not represent the search process via tree structures.

Of course, to make the new process really an optimistic version of the original one, we have to ensure that, despite the larger population present, each individual which is also present in the true population has the same power of creating good solutions as in the original process. To ensure this in our process, we assume that in the artificial process each individual creates \({{\,\mathrm{Bin}\,}}(\lambda ,1/\mu )\) offspring. This assumption, in fact, leads to a much more drastic growth of the artificial population than the fact that we disregard selection.

When working with such an artificially enlarged population, there is a risk that the larger population finds it easier to create the optimal solution. This would give weaker lower bounds. So the main art in this proof approach is setting up the arguments in a way that the larger population does still, in an asymptotic sense, not find the optimum earlier than the original process. The reason why this is possible at all is that once selection is disregarded, the process consists only of independent applications of the mutation operator. This allows to use strong-concentration arguments which in the end give the desired result that none of the many members of the artificial population is the optimal solution.

To make this approach formal, we use the following notion of a complete tree, which, in simple words, describes all possible (iterated) offspring which could occur in a run of the evolutionary algorithm. This notion is different from those used in the works above, which all work with certain subtrees of the complete tree and use suitable arguments to reason that the restricted tree still covers all individuals that can, with reasonable probability, appear. We feel that our approach of working in the complete tree is technically simpler. For example, compared to [47], we do not first need to argue that with high probability the true tree has only certain depths and then, conditional on this event, argue that it does not contain an optimal solution. Working in the complete tree, we also do not need arguments from branching processes as used in [39]. Of course, the key argument that without selection we only do repeated unguided mutation, is used by us in the same flavor as in all previous works.

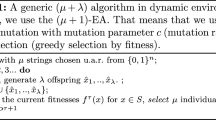

More precisely, the complete tree with initial individual \(x_0\) is defined recursively as follow. Every vertex is labeled with some individual (a bit-string) which could potentially occur in the evolution process. The labels are not necessarily unique, but every vertex, except the root vertex \(v_0\) is uniquely defined by the tuple (v, t, i), where v is the parent vertex (that is either the root vertex, or another vertex defined by a tuple), \(t \in {\mathbb {N}}\) is the iteration when this vertex was created and \(i \in [1..\lambda ]\) is the number of the vertex among the vertices with the same v and t. The tree \(T_0 = (V_0,E_0)\) at time \(t=0\) consists of the single (root) vertex \(v_0\) that is labeled with the bit-string \(c(v_0) = x_0\). Hence \(E_0 = \emptyset \). If \(T_t = (V_t,E_t)\) is defined for some \(t \ge 0\), then we define the tree \(T_{t+1} = (V_{t+1},E_{t+1})\) as follows. For each vertex in \(V_t\), we add \(\lambda \) vertices, connect them to this vertex, and generate their labels via standard-bit mutation from the parent. More precisely, let \(N_{t+1} :=\{(v_t,t+1,i) \mid v_t \in V_t, i \in [1..\lambda ]\}\) and \(V_{t+1} = V_t \cup N_{t+1}\). We call \(v_t\) the parent of \((v_t,t+1,i)\) and \((v_t,t,i)\) the i-th child of \(v_t\) in iteration \(t+1\). We generate the label \(c(v_t,t+1,i)\) by applying standard-bit mutation to \(c(v_t)\). We connect each new vertex with its parent, that is, we define \(E_{t+1} = E_t \cup \{(v_t,(v_t,t+1,i)) \mid v_t \in V_t, i \in [1..\lambda ]\}\). A simple example of a complete tree structure is shown in Figure 1.

The structure of the complete tree for the \((3 + 2)\) EA after \(t = 2\) iterations. The green vertices are the initial vertices, the blue vertices were created in the first iteration and the orange vertices were created in the second iteration. Each vertex is uniquely defined by a tuple of its parent vertex v, iteration it was created t and its number i among the children of its parent vertex created at the same iteration (the vertices in the figure are labeled with this number i). The highlighted vertices are the ones which were actually created by the algorithm. The labels are omitted in this illustration for reasons of readability

It is easy to see that a complete tree at time t contains exactly \((\lambda +1)^t\) nodes, since each vertex from \(V_t\) has exactly \(\lambda \) new children in \(V_{t + 1}\). As said earlier, it thus massively overestimates the size of the true population of the EA.

For our purposes, it is not so much the total size of the tree that is important, but rather the number of nodes in a certain distance from the root. We estimate these in the following elementary lemma. Here and in the remainder, by distance we mean the graph theoretic distance, that is, the length of the (in this case unique) path between the two vertices. Observe that this can be different from the iteration in which a node was generated. For example, the vertex \((v_0,t,i)\), which is generated in iteration t from the initial vertex, has distance one from \(v_0\).

Lemma 10

Let \(T_t\) be a complete tree at time t. Let \(\ell \in {\mathbb {N}}_0\). Then \(T_t\) contains exactly

nodes in distance exactly \(\ell \) from the root.

Proof

If \(t < \ell \), then there are no vertices in distance \(\ell \) (recall that in our notation \(\left( {\begin{array}{c}t\\ \ell \end{array}}\right) = 0\) in this case). Otherwise, let v be a vertex in distance exactly \(\ell \) from the root. Then there are times \(1 \le t_1< \dots < t_\ell \le t\) and offspring numbers \(i_1, \dots , i_\ell \in [1..\lambda ]\) such that with the recursive definition of the vertices \(v_1, \dots , v_\ell \) via \(v_d = (v_{d-1},t_d,i_d)\) for all \(d \in [1..\ell ]\), we have \(v = v_\ell \). Hence, there are at most \(\left( {\begin{array}{c}t\\ \ell \end{array}}\right) \lambda ^\ell \) vertices in distance \(\ell \) from the root. Conversely, each tuple of times and offspring numbers as above defines a different vertex in distance \(\ell \). Hence, there are at least \(\left( {\begin{array}{c}t\\ \ell \end{array}}\right) \lambda ^\ell \) different vertices in distance \(\ell \) from the root. \(\square \)

Since there is no selection in the complete tree, the vertex labels simply arise from repeated mutation. More precisely, a vertex in distance \(\ell \) from the root has a label that is obtained from \(\ell \) times applying mutation to the root label. This elementary observation allows to estimate the probability that a node label is equal to some target string.

Lemma 11

Consider a complete tree with root label \(c(v_0) = x_0\). Let \(x^* \in \{0,1\}^n\) with \(H(x^*,x_0) \ge n/4\) (where H is the Hamming distance). Let x be the node label of a node in distance \(\ell \) from \(v_0\). Then

Proof

The probability that \(x = x^*\) is at most the probability that each of the \(H(x_0,x^*)\) bits in which \(x_0\) and \(x^*\) differ was flipped in at least one of the \(\ell \) applications of the mutation operator which generated x from \(x_0\). For one particular position the probability that this position was involved in one of \(\ell \) mutations is \(1 - (1 - \frac{1}{n})^\ell \). For \(H(x_0, x^*)\) positions the probability that all of them were involved in one of \(\ell \) mutations is

where we used the estimates \((1 - 1/n)^{(n - 1)r} \ge e^{-r}\) valid for all \(n \ge 1\) and any positive \(r \in {\mathbb {R}}\), and \(e^{-r} \ge 1 - r\) valid for all \(r \in {\mathbb {R}}\). \(\square \)

We are now ready to prove our lower bound. Since the proof is valid not only for the OneMax function, but for any pseudo-Boolean function with a unique optimum, we formulate the result for such functions. We show extensions to many functions with multiple optima in the following section.

Theorem 4

If \(\mu \) is polynomial in n, then the \((\mu +\lambda )\) EA with any type of selection of the new parent population (including only selecting from the offspring population) needs an expected number of

iterations to optimize any pseudo-Boolean function with a unique optimum.

If further \(\frac{\lambda }{\mu } \ge e^e\), then the stronger bound

holds.

Proof

Without any loss of generality in this proof we assume that the function optimized by the algorithm has an optimum in \(x^* = (1, \dots , 1)\).

In our proofs we use the following tool. To prove that the expected runtime of the algorithm is \(\Omega (f(n))\) for some function f(n), it is enough to prove that the probability that the runtime is less than f(n) is less than some constant \(\gamma < 1\), since in this case the expected runtime is not less than \((1 - \gamma )f(n)\).

We first note that the bound \(\Omega (\frac{n\log n}{\lambda })\) is easy to prove for the OneMax function. A short, but deep argument for this bound is that the \({(\mu + \lambda )}\) EA is an unary unbiased black-box complexity algorithm in the sense of Lehre and Witt [38]. Any such algorithm needs an expected number of \(\Omega (n \log n)\) [38] or, more precisely, of at least \(n \ln (n) - O(n)\) [10] fitness evaluations to find the optimum of the OneMax function.

However, we prove the lower bounds for any function with a unique optimum, so we use an elementary argument essentially identical to the one of [47] as follows. The lower bound \(\Omega (\frac{n\log n}{\lambda })\) needs to be shown only in the case \(\mu \le c \log n\), where c is an arbitrarily small constant. For any bit position we have the probability \(q_1\) that all individuals in the initial population have a zero-bit in that position that is calculated as

Thus, the expected number z of the bit positions such that all individuals in the initial population have a zero-bit in that position is

We call such positions initially wrong positions. Since the bit values in each bit position and each initial individual are independent, each position is initially wrong or not independently on other positions. Hence, by Chernoff bounds (see, e.g., Theorem 1.10.5 in [24]) the probability \(q_2\) that we have at least \(E[z]/2 = n^{1 - c\log (2)}/2\) such bit positions is calculated as

Now we are ready to show that the algorithm does not flip at least one of the bits in the initially wrong positions in \(t :=\lfloor \frac{\alpha (n - 1)\log (n)}{\lambda }\rfloor \) iterations, where \(\alpha \) is a constant that will be defined later, with a high (at least \(1 - o(1)\)) probability. We calculate the probability \(q_3\) that one particular bit is flipped at least once in t iterations (or in \(\lambda t\) mutations) as

If we have at least \(n^{1 - c\log (2)}/2\) initially wrong positions, then the probability \(q_4\) that all of them are flipped at least once in t iterations is

Thus we have the probability \(q_5\) that at least one of the initially wrong bits is not flipped (and thus, the optimum is not found) in \(t = \Theta (\frac{n \log (n)}{\lambda })\) iterations at least

Hence, if \(\alpha \) and c satisfy \(c\log (2) + \alpha < 1\), (e.g., \(\alpha :=\frac{1}{2}\) and \(c :=\frac{1}{2}\)) then the expected runtime of the algorithm is \(\Omega (\frac{n \log (n)}{\lambda })\).

To prove the remaining two bounds, we argue as follows. Again using a simple Chernoff bound argument, we first observe that the probability \(q_6\) that the number of zero-bits y in the one particular individual in the initial population is less than n/4, is estimated as

Hence, all \(\mu \) individuals of the initial population have a Hamming distance of at least n/4 from the optimum \(x^*\) with probability

Since \(\mu \) is polynomial in n, we have \(\frac{\mu }{e^\frac{n}{16} - 1} = o(1)\) and therefore, \(q_7 = 1 - o(1)\). Further in this proof we assume that all initial individuals have at least n/4 zero-bits.

Clearly, a run of the \({(\mu + \lambda )}\) EA creates a subforest of \(\mu \) disjoint complete trees with random root labels (complete forest). Whether a node of the complete forest appears in the forest describing the run of the \({(\mu + \lambda )}\) EA (the forest of the family trees) depends on the node labels (more precisely, on their fitness). However, regardless of the node labels the following is true: If some node \(v_s\) is present in the population at iteration t, then the edge \((v_s,(v_s,t,i))\) is present in the subforest at most with probability \(1/\mu \), because for this it is necessary that the i-th offspring generated in iteration t chooses \(v_s\) as parent. Consequently, regardless of the nodes labels, the probability that a node in distance \(\ell \) from the root in the complete forest enters the population of the \({(\mu + \lambda )}\) EA, is at most \(\mu ^{-\ell }\). Since we have not taken into account the node labels, we observe that the probability that a particular node of the complete forest (i) is labeled with the optimum and (ii) makes it into the population of the \({(\mu + \lambda )}\) EA, is at most \(\mu ^{-\ell } p(\ell ,n)\) with \(p(\ell ,n)\) as defined in Lemma 11.

Using a union bound over all nodes in the complete forest up to iteration t, cf. Lemma 10, we see that the probability that the \({(\mu + \lambda )}\) EA finds the optimum within t iterations, is at most

Let first \(t :=\lfloor \mu n / 8e\lambda \rfloor \). Using the inequality \(\left( {\begin{array}{c}t\\ \ell \end{array}}\right) \le (et / \ell )^\ell \) that follows from Stirling’s formula, we estimate the summand \(s(\ell ) :=\left( {\begin{array}{c}t\\ \ell \end{array}}\right) (\frac{\lambda }{\mu })^\ell p(\ell ,n)\) of \(q_{opt}\) for every \(\ell \in [0..t]\).

-

By Lemma 11 we have \(p(\ell ,n) \le 1\). Thus, if \(\ell \ge n/4\), we estimate

$$\begin{aligned} s(\ell ) = \left( {\begin{array}{c}t\\ \ell \end{array}}\right) \left( \frac{\lambda }{\mu }\right) ^\ell p(\ell ,n) \le \left( \frac{et\lambda }{\ell \mu }\right) ^\ell \le \left( \frac{n}{8\ell }\right) ^\ell \le (1/2)^\ell \le (1/2)^{n/4}. \end{aligned}$$ -

By Lemma 11 we have \(p(\ell ,n) \le (\ell /(n - 1))^{n/4}\). Hence, if \(n/4 \ge \ell > 0\), we estimate

$$\begin{aligned} s(\ell )&= \left( {\begin{array}{c}t\\ \ell \end{array}}\right) \left( \frac{\lambda }{\mu }\right) ^\ell p(\ell ,n) \le \left( \frac{et\lambda }{\ell \mu }\right) ^\ell \left( \frac{\ell }{n - 1}\right) ^{n/4} \le \left( \frac{n}{8\ell }\right) ^\ell \left( \frac{\ell }{n - 1}\right) ^{n/4} \\&\le \left( \frac{n}{4\ell }\right) ^\ell \left( \frac{\ell }{n - 1}\right) ^{n/4} \le \left( \frac{n}{4\ell } \cdot \frac{\ell }{n - 1}\right) ^{n/4} \le (1/2)^{n/4}. \end{aligned}$$ -

Finally, for \(\ell = 0\) we have \(p(\ell , n) = 0\) and thus \(s(\ell ) = 0\).

Consequently, the optimum is found in less than t iterations if either there is an individual with less than n/4 zero-bits in the initial population, or with an exponentially small probability otherwise. Therefore, the probability \(q_8\) of finding the optimum in less than t iterations is bounded as

since we assumed \(\mu \) to be at most polynomial in n.

We finish the proof by showing the lower bound \(\Omega \left( \frac{n\log \log \frac{\lambda }{\mu }}{\log \frac{\lambda }{\mu }}\right) \) in case when \(\frac{\lambda }{\mu } \ge e^e\). For this purpose let \(t = \lfloor \frac{(e - 2)n\ln \ln \frac{\lambda }{\mu }}{4(e + 1)\ln \frac{\lambda }{\mu }}\rfloor \). Using the complete tree notation we show that the probability that the algorithm finds an optimum in less than t iterations is very small.

For all \(\ell \in [0..t]\) consider \(s(\ell )\). Using the inequality \(\left( {\begin{array}{c}t\\ \ell \end{array}}\right) \le (et / \ell )^\ell \) we estimate the upper bound for it as follows.

Consider precisely the argument of the exponential function from the last equality in (16). For this purpose define \(f(\ell ) :=\ell \ln \frac{et\lambda }{\ell \mu } + \frac{n}{4} \ln \frac{\ell }{n - 1}.\) By considering the derivative of \(f(\ell )\) on segment [0, t] one can see that it is a monotonically increasing function. Since \(t \ge \ell \) and \(\frac{\lambda }{\mu } \ge e^e\), we have \(\frac{et\lambda }{\ell \mu } \ge e^{e + 1}\) and thus, \(\ln \frac{et\lambda }{\ell \mu } \ge e + 1\). Hence,

Thus, \(f(\ell )\) reaches its maximum when \(\ell = t\). Therefore,

Notice that \(\frac{\ln x}{x} \le \frac{1}{e}\) for all \(x \ge 1\) and that \(\ln \frac{(e - 2)n}{4(e + 1)(n - 1)} < 0\) for all \(n > 1\). Therefore we have

Thus, by (16) we have

By (14) summing up \(\mu s(\ell )\) for all \(\ell \in [0..t]\) we obtain the following upper bound on the probability \(q_9\) that the algorithm finds the optimum in less than \(t = \Theta (\frac{n\log \log \frac{\lambda }{\mu }}{\log \frac{\lambda }{\mu }})\) iterations.

Notice that \(q_9\) is o(1), since we assumed that \(\mu \) is polynomial in n. Hence, the expected runtime of the algorithm is \(\Omega (\frac{n\log \log \frac{\lambda }{\mu }}{\log \frac{\lambda }{\mu }})\) \(\square \)

4.1 Comparison With Other Lower Bounds

Since all results involved are asymptotically tight, our lower bounds subsume the previous bounds for the \({(\mu + 1)}\) EA and the \({(1 + \lambda )}\) EA in the way as discussed for upper bounds in Sect. 3.4.

For general values of \(\mu \) and \(\lambda \), the only result [40] we are aware of proves that for any \(\mu \) and \(\lambda \) that are at most polynomial in n the runtime of the \({(\mu + \lambda )}\) EA on every pseudo-boolean function with a unique global optimum is

By comparing the three terms of this bound with the corresponding terms of our bound

we immediately see that our bound is asymptotically at least as large as the one in (18); note that for the third term, this follows trivially from the assumption that \(\lambda \) is polynomial in n and the fact that \(x \mapsto \frac{\log \log (x)}{\log (x)}\) is decreasing for x sufficiently large.

There are two cases when our bound is asymptotically greater than (18).

Setting 1. Let \(\frac{\lambda }{\mu } = O(1)\) and \(\mu = \omega (\log (n))\). Then our bound is \(\Omega (\frac{n\mu }{\lambda })\), which is at least \(\Omega (n)\). On the other hand, (18) is

Setting 2. Let \(\log \frac{\lambda }{\mu } = \omega (\log n)\). This implies that \(\frac{\lambda }{\mu }= \omega (n)\) and thus

Therefore, we have

Hence, the lower bound given in Theorem 4 simplifies to \(\Omega (\frac{n \log \log \frac{\lambda }{\mu }}{\log \frac{\lambda }{\mu }})\).

On the other hand, the bound (18) is of the asymptotically smaller order \(o(\log n) + o(1) + O(\frac{n \log \log n}{\log n}) = O(\frac{n \log \log n}{\log n})\).

5 Extending the Lower Bounds to All Functions Having Not Excessively Many Global Optima

Since the family tree technique depends little on the particular function to be optimized, Witt [47] extended his lower bounds for OneMax to a much broader class of functions. He proved that the \({(\mu + 1)}\) EA needs \(\Omega (\mu n)\) iterations to find a global optimum of any function that satisfies one of the following conditions. (i) The function has at most \(2^{o(n)}\) optima. (ii) All optima have at least \(n/2 + \varepsilon n\) one-bits or all optima have at least \(n/2 + \varepsilon n\) zero-bits, where \(\varepsilon >0\) is an arbitrary constant.

In this section we extend our lower bounds of Sect. 4 to a wide class of functions as well. In particular, we show that Witt’s results are valid for all functions with at most \(2^{\beta n}\) optima, where \(\beta \) is some constant less than \(\frac{1}{16\ln 2}\), regardless of the positions of the optima.

To reach our goal we exploit the fact that in Theorem 4 we proved very small values for the probabilities that the runtime is less than some threshold [see (13), (15) and (17)], while it would have been enough to prove that they are some constants less than one.

Theorem 5

For any constant \(\varepsilon > 0\) there exists another constant \(c > 0\) such that if \(\mu < c \ln n\), then for any n-dimensional pseudo-Boolean function with not more than \(2^{n^{1 - \varepsilon }}\) optima the \({(\mu + \lambda )}\) EA takes at least \(\Omega (\frac{n\log n}{\lambda })\) iterations in expectation and with high probability to find an optimum.

Proof

Let c be some arbitrary small positive constant and let \(\mu < c\ln n\). By (13) the probability that the algorithm finds a particular optimum in less than \(t :=\frac{\alpha n\log n}{\lambda }\) iterations (where \(\alpha \) is some arbitrary constant) is

If we have at most \(2^{n^{1 - \varepsilon }}\) optima, then by a union bound over all optima we obtain that the probability \(q_{10}\) that the algorithm finds an optimum in less than t iterations is

This probability \(q_{10}\) tends to zero with growing n if and only if the argument of the exponential function tends to negative infinity. It does so if and only if \(\alpha \) and c satisfy \(\alpha + c\ln 2 < \varepsilon \). Since \(\varepsilon \) is a positive constant, we can choose \(\alpha :=\varepsilon /2\) and \(c :=\varepsilon /2\) to satisfy this condition. \(\square \)

The actual reason that the algorithm cannot find an optimum faster than in \(\Omega (\frac{n\log n}{\lambda })\) iterations is the coupon collector effect when the algorithm tries to flip the few wrong bits left in the end of the optimization. However, if we have \(2^{\Theta (n)}\) optima, the algorithm avoids this effect. To illustrate this idea consider the \({(1 + 1)}\) EA that optimizes the OneMax function, but the bit-strings with less than cn zero-bits, where c is some small constant, are considered optimal. Thus, this functions has no more than \(O(2^{c\log _2(1/c)n}) \subseteq 2^{\Theta (n)}\) optima. Clearly, the runtime of the \({(1 + 1)}\) EA on such function is linear, which may be proven with simple additive drift argument.

The following two theorems extend our \(\Omega (\frac{n\mu }{\lambda })\) and \(\Omega (\frac{n\log \log \frac{\lambda }{\mu }}{\log \frac{\lambda }{\mu }})\) bounds to the functions with \(2^{O(n)}\) optima.

Theorem 6

If \(\mu \) is at most polynomial in n, then the \({(\mu + \lambda )}\) EA optimizes any pseudo-Boolean function with at most \(2^{\beta n}\) optima, where \(\beta \) is some constant less than \(\frac{1}{16\ln 2}\), in \(\Omega (\frac{\mu n}{\lambda })\) iterations. If \(\frac{\lambda }{\mu }> e^e\), then the stronger bound \(\Omega (\frac{n\log \log \frac{\lambda }{\mu }}{\log \frac{\lambda }{\mu }})\) holds.

Proof

By (15) the probability that the algorithm finds a particular optimum in less than \(t :=\lfloor \frac{\mu n}{8e\lambda } \rfloor \) iterations is

By a union bound taken over no more than \(2^{\beta n}\) optima, the probability \(q_{11}\) that the algorithm finds any optimum in this time is

Since \(\beta < \frac{1}{16\ln 2}\) and \(\beta \) is a constant, we have both \((\ln 2)\beta n - \frac{n}{16} < 0\) and \(\beta n - \frac{n}{4} < 0\) (and both of them are linear in n). Thus, \(q_{11}\) tends to zero with growing n. Hence, the expected runtime of the algorithm is \(\Omega (t) = \Omega (\frac{\mu n}{\lambda })\).

To prove the \(\Omega (\frac{n\log \log \frac{\lambda }{\mu }}{\log \frac{\lambda }{\mu }})\) bound we argue in a similar way. By (17) the probability that the algorithm finds a particular optimum in less than \(t :=\lfloor \frac{(e - 2)n\ln \ln \frac{\lambda }{\mu }}{4(e + 1)\ln \frac{\lambda }{\mu }}\rfloor \) iterations is

By a union bound taken over no more than \(2^{\beta n}\) optima, the probability \(q_{12}\) that the algorithm finds any optimum in this time is

Since \(\beta < \frac{1}{16\ln 2}\) and \(\beta \) is a constant, we have both \((\ln 2)\beta n - \frac{n}{16} < 0\) and \(\beta n\ln 2 - \frac{n}{4} < 0\) (and both of them are linear in n). Thus, \(q_{12}\) tends to zero with growing n. Hence, the expected runtime of the algorithm is \(\Omega (t) = \Omega (\frac{n\log \log \frac{\lambda }{\mu }}{\log \frac{\lambda }{\mu }})\). \(\square \)

6 Analysis of the \((\lambda \overset{1:1}{+}\lambda )\) EA

In this section we prove that our results (both upper bound from Theorem 2 and lower bound from Theorem 4) hold in an analogous fashion also for the \((\lambda \overset{_{1:1}}{+} \lambda )\) EA, that is, we show that this algorithm optimizes OneMax in an expected number of \(\Theta (\frac{n \log n}{\lambda } + n)\) iterations. This improves over the \(O(\frac{n \log n}{\lambda } + n \log \lambda )\) proven bound and the \(O(\frac{n \log n}{\lambda } + n \log \log n)\) conjecture of [7].

Due to the differences in the algorithms, to prove our results we obviously cannot just apply the previous theorems in this work to the case \(\lambda = \mu \). We recall that the \((\lambda \overset{_{1:1}}{+} \lambda )\) EA uses a different parent selection. While the classic \({(\mu + \lambda )}\) EA chooses each parent independently and uniformly at random from the \(\mu \) individuals, the \((\lambda \overset{_{1:1}}{+} \lambda )\) EA creates exactly one offspring from each parent. The pseudocode of the \((\lambda \overset{_{1:1}}{+} \lambda )\) EA is shown in Algorithm 2. We note that [7] also use a slightly different way of selecting the next parent population. In principle, they take as new parent population the \(\mu \) best individuals among parents and offspring (plus-selection). If this would lead to a new parent population only consisting of offspring, they remove the weakest offspring and replace it with the strongest individual from the previous parent population. Since this appears to be a not very common way of selecting the new population, we shall work with the classic plus-selection, favoring offspring in case of ties, and breaking further ties randomly (though, indeed, the tie-breaking is not important when optimizing OneMax via unary unbiased black-box algorithms). We note without proof that the following results and proofs are valid for the precise algorithm regarded in [7] as well.

We start by proving the upper bound for the runtime.

Theorem 7

The expected runtime of the \((\lambda \overset{_{1:1}}{+} \lambda )\) EA on the OneMax function is \(O(\frac{n\log n}{\lambda } + n)\).

Proof

We aim at adapting Theorem 2 for the \((\lambda \overset{_{1:1}}{+} \lambda )\) EA. For this purpose we note that the proof of Theorem 2 only depends on the expected level improvement times \(E[{{\tilde{T}}}_i]\) computed in Corollary 1, which again depend on the times needed for increasing the number of fit individuals computed in Lemma 3. Therefore, it suffices to show that the estimates of Lemma 3 and Corollary 1 are also valid for the \((\lambda \overset{_{1:1}}{+} \lambda )\) EA.

We prove that Lemma 3 holds for the \((\lambda \overset{_{1:1}}{+} \lambda )\) EA by observing that the probability \(p_2(j)\) to create at least one copy of the fit individual satisfies the same estimate as the one used for the \({(\mu + \lambda )}\) EA, which is (3), with \(\mu =\lambda \). For the \((\lambda \overset{_{1:1}}{+} \lambda )\) EA, \(p_2(j)\) is at least the probability that at least one of the j fit parent individuals creates as offspring a copy of it. By Lemma 2 we have

which is the same estimate as for the \({(\mu + \lambda )}\) EA (with \(\mu = \lambda \)).

To prove that Corollary 1 holds for the \((\lambda \overset{_{1:1}}{+} \lambda )\) EA as well, it is sufficient to show that the probability \(p''(i)\) to create a superior individual satisfies as well the estimate (5) in the case \(\mu =\lambda \). The probability \(p''(i)\) is at least the probability that for at least one of the \(\mu _0(i)\) best individuals the offspring is better than its parent. Using Lemma 2 we calculate

which is the same value as in Corollary 1 when \(\mu = \lambda \). \(\square \)