Abstract

Many herbivorous insects die of pathogen infections, though the role of plant traits in promoting the persistence of these pathogens as an indirect interaction is poorly understood. We tested whether winter leaf retention of bush lupines (Lupinus arboreus) promotes the persistence of a nucleopolyhedrovirus, thereby increasing the infection risk of caterpillars (Arctia virginalis) feeding on the foliage during spring. We also investigated whether winter leaf retention reduces viral exposure of younger caterpillars that live on the ground, as leaf retention prevents contaminated leaves from reaching the ground. We surveyed winter leaf retention of 248 lupine bush canopies across twelve sites and examined how it related to caterpillar infection risk, herbivory, and inflorescence density. We also manipulated the amount of lupine litter available to young caterpillars in a feeding experiment to emulate litterfall exposure in the field. Greater retention of contaminated leaves from the previous season increased infection rates of caterpillars in early spring. Higher infection rates reduced herbivory and increased plant inflorescence density by summer. Young caterpillars exposed to less litterfall were more likely to starve to death but less likely to die from infection, further suggesting foliage-mediated exposure to viruses. We speculate that longer leaf life span may be an unrecognized trait that indirectly mediates top-down control of herbivores by facilitating epizootics.


Introduction

To herbivorous insects, a host plant is both a food source and a habitat. Therefore, plant traits not only mediate interactions between herbivores and plants, but also between the herbivore and other organisms that the herbivore encounters. For example, some plant traits can influence interactions between herbivores and the natural enemies of herbivores, indirectly benefiting the plant (Pearse et al. 2020). Leaf longevity may be one such trait that affects the top-down pressure that predators, parasitoids, and pathogens exert on herbivores (Kahn and Cornell 1989; Yamazaki and Sugiura 2008). As leaves often provide critical habitats for the natural enemies of herbivores (Lynch et al. 1980; McGuire et al. 1994; Hsieh and Linsenmair 2012), less ephemeral leaves could promote the persistence and recruitment of the enemies of herbivores, thereby increasing top-down pressure.

Unlike the free-living natural enemies of herbivores, for pathogens, environmental persistence often depends on plants because they have limited mobility and environmental factors strongly affect their survival outside of their primary host. Thus, plant traits are thought to affect key processes in herbivore–pathogen interactions from initial exposure to viral transduction because horizontal transmission often occurs on plants (Cory and Hoover 2006). This is true for both the pathogens of invertebrate (Shikano 2017) and vertebrate herbivores (Pritzkow et al. 2015). Leaf longevity can affect pathogen transmission in several ways. Infection risk of herbivores may be increased by retaining leaves that harbor entomopathogens. Dense leaf canopies can shield insect pathogens from rainfall that would otherwise wash them away (D’Amico and Elkinton 1995; Inglis et al. 1995). Holding pathogen contaminated leaves for longer may prevent them from moving away from the plant canopy due to wind or water or from becoming buried. Older foliage may also have a greater amount of time to accumulate pathogens by virtue of its longevity. Those pathogens that remain on leaves may also be sheltered against ultraviolet radiation or high temperature, having substantially higher survival as a result (Biever and Hostetter 1985; Inglis et al. 1993; Raymond et al. 2005). However, retaining pathogen contaminated leaves in the canopy necessarily reduces the amount of pathogen transferred to other areas, such as below the canopy, where some herbivores dwell. Therefore, herbivores that overwinter under plant canopies or feed on understory plants during early development could experience reduced disease risk when leaves remain in the canopy.

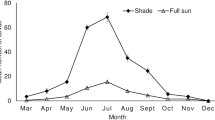

In this study, we focused on the evergreen perennial bush lupine Lupinus arboreus (Fabaceae) and a univoltine woolly bear caterpillar herbivore, Arctia virginalis (Lepidoptera: Arctiinae), in coastal Northern California. Due to the mild and wet winters, plants in this Mediterranean habitat have less abiotic pressure to adopt a strictly deciduous or evergreen leaf habit, allowing more plastic responses of leaf longevity to biotic pressures (Mooney and Dunn 1970). Despite being an evergreen, L. arboreus exhibits substantial intraspecific and seasonal variation in leaf retention. A prolonged and asynchronous period of peak leaf fall occurs in the winter and is followed by a surge of new leaf production in the early spring. Flowering and seed production occur during late spring and early summer. Early instar A. virginalis caterpillars live under the L. arboreus canopy, feeding on detritus, fallen lupine litter, and understory plants (Karban et al. 2012; 2017). Mid- to late instar caterpillars crawl up onto lupine bushes during late winter and early spring and feed on lupine leaves in the canopy. In late spring, they pupate and emerge as moths.

An alphabaculovirus infects A. virginalis caterpillars and likely causes cyclic population dynamics in A. virginalis populations in the western U.S. (Pepi et al. 2022). This virus, Arctia virginalis nucleopolyhedrovirus (AvNPV), was recently identified from infected individuals after sequencing of PCR-amplified polyhedrin, late expression factor-8, and late expression factor-9 genes (Pepi et al. 2022). Alphabaculoviruses are dsDNA viruses that are enclosed in protective protein shells, forming relatively resistant occlusion bodies (OBs) that allow their persistence outside of the host. Horizontal transmission occurs when a caterpillar feeds on an OB-contaminated food source on which the virus rests passively without replication (Vega and Kaya 2012). The ingested OBs dissolve in the alkaline gut of their host and release occlusion-derived virions. These virions infect the caterpillar starting from the epithelial tissue and then move to other parts of the body, including the trachea, fat body, and hemolymph (Barrett et al. 1998). Infection causes high caterpillar mortality rates, though infected individuals may continue to grow and may successfully pupate (Pepi et al. 2022). Although infected caterpillars rarely lyse unless under extremely heavy infection, they release more OBs into the environment, including onto the lupines, via extensive regurgitation and excretion of OB-contaminated feces.

Using a series of field surveys and laboratory experiments, we tested the hypothesis that winter leaf retention promoted pathogen persistence in the canopy, leading to higher infection rates among caterpillars (Fig. 1). We predicted that L. arboreus leaf retention would decrease the infection risk for A. virginalis feeding on the litterfall under the canopy during winter but increase the infection risk for older A. virginalis feeding on the canopy in early spring. We also tested the hypothesis that this trait-mediated indirect interaction positively affects lupines. Hence, we predicted that higher infection and possibly death rates of herbivores would lead to less damage to lupines, which would allow them to produce more inflorescences. Direct manipulation of winter leaf retention is not possible with evergreen plants without introducing experimental artifacts. Therefore, we relied heavily on natural variation in leaf retention, and structural equation models to make causal inferences.

Materials and methods

Field observations: Leaf retention, caterpillar infection, and inflorescence density

We conducted the study during 2020. In the first survey, we characterized infection risk factors in the field. In addition to examining the quantity of lupine leaves in the canopy and in the litter, we also examined litter depth because it is a strong proxy for the availability of non-lupine food sources for early instar A. virginalis. We surveyed 248 lupine bushes from January 31–February 2, across twelve sites at the Bodega Marine Reserve located at Bodega Bay, California (Figure S1). We randomly selected 17–35 bushes at each site along one or two transects every five meters. We visually assessed the proportion of branches that held leaves, the litter depth, and the proportion of lupine leaves in the litter for each bush. Litter depth was measured with a ruler and quantified the distance between the inorganic solid ground surface and the top surface of the organic layer, which included the leaf blades of understory plants. We roughly assessed the proportion of lupine in the litter by looking at the fraction of ground area covered by lupine leaves out of the total ground area covered by all litter. Measurements of litter depth and proportion of lupine in litter were taken twice from opposite sides of the bush and averaged. We returned to each plant on March 25 and collected one to three late instar caterpillars per bush where they were present (74/248 lupines). Caterpillars were frozen for subsequent dissection to quantify infection rate and infection severity.

To determine the infection risk of woolly bear caterpillars, we quantified the infection status and infection severity of each caterpillar in all experiments. Caterpillar samples frozen at − 20 °C were thawed at room temperature and subsequently dissected. For each caterpillar, we extracted four to eight fat body tissue samples and then smeared each tissue sample on a glass slide with a drop of water. For small second instar caterpillars, we only took two samples because we were limited by the amount of available tissue. We looked for the visible OBs (< 1.5 μm) under a light microscope at 200 × magnification with phase contrast. The identity of suspected OBs was confirmed by adding a drop of 1 M NaOH, which causes the OBs to dissolve and turn transparent (Lacey and Solter 2012; Figure S2a-b). Caterpillars were deemed infected when at least two NaOH tests were positive and deemed uninfected when all eight samples returned negative results. For infected caterpillars, infection severity was rated as low, medium, high, or very high based on the number of samples required to detect the virus, the density of OBs in tissue smear samples, and the coloration of hemolymph and fat body (Figure S3a-e). Infected hemolymph is cloudy, whereas infected fat body is yellowish brown.
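The classification rule described above can be sketched in code. This is only an illustration of the stated thresholds, not the authors' actual workflow: the function name `classify_infection`, the `required_negatives` parameter, and the `"indeterminate"` label for cases the text does not describe (for example, a single positive test) are all assumptions.

```python
from typing import Sequence


def classify_infection(naoh_positive: Sequence[bool],
                       required_negatives: int = 8) -> str:
    """Classify one caterpillar from its per-sample NaOH test outcomes.

    naoh_positive holds one boolean per fat body smear: True when
    suspected OBs dissolved after adding NaOH (a positive test).
    """
    positives = sum(bool(result) for result in naoh_positive)
    if positives >= 2:
        # Stated rule: at least two positive NaOH tests.
        return "infected"
    if positives == 0 and len(naoh_positive) >= required_negatives:
        # Stated rule: all eight samples negative.
        return "uninfected"
    # Assumption: the text does not say how other cases were handled.
    return "indeterminate"
```

For example, `classify_infection([True, True, False, False])` returns `"infected"`, while eight negative smears return `"uninfected"`.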

Since greater leaf retention in the winter might be associated with higher caterpillar abundance, leading to higher viral infection risk (Pepi et al. 2022), we sought to eliminate these alternative paths by statistically controlling for the density of caterpillars during the current year and the previous year. Same year caterpillar density was estimated by counting the number of caterpillars found on each bush in late March. Caterpillar density in the previous year was calculated as a site-level mean for ten lupine bushes in late March of the previous year (Karban et al. 2012). We could not use previous year caterpillar density for individual plants because individual bushes were not uniquely marked.

Infection rate was analyzed with a binomial generalized linear mixed model (GLMM) (package: glmmTMB, Brooks et al. 2017). Infection severity for each caterpillar, ranging from uninfected to highly infected, was analyzed with a cumulative link mixed model (CLMM) (package: ordinal, Christensen 2019). For the analysis of the March infection rate, we included canopy leaf retention, litter depth, and proportion of lupine litter as predictors. We included log same-year and previous-year caterpillar density as fixed effects to control for their effect on infection. We also included the order of caterpillar dissection as a fixed effect to account for skill improvement over the course of dissecting the caterpillars, as the detection of covert infections (asymptomatic infections with low-virulence virus, reviewed in Williams et al. 2017) was difficult to master, although its inclusion does not change our conclusions qualitatively. Individual plants nested within site were included as random effects to account for non-independence. Sensitivity analysis was performed to test for hidden confounders (see supplemental methods).

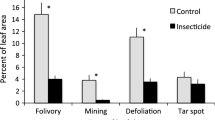

We assessed the total effects of winter leaf retention on individual plant inflorescence density. We also assessed the effect of higher caterpillar infection and lower herbivory on this measure of plant reproduction. We resurveyed the 248 plants during July 5–7. We excluded 14 plants because their tags were missing. We visually scored the proportion of branches with visible leaf chewing damage and the density of inflorescences on each plant using a 50 × 50 cm quadrat randomly placed over the canopy. Because A. virginalis leaf damage cannot be uniquely identified, the estimate includes damage done by other chewing herbivores. We also recorded the presence/absence of Western tussock caterpillars (Orgyia vetusta). This species causes significant defoliation in the summer but has little impact on the same year inflorescence production which largely occurs one to three months before caterpillars become abundant (but see Harrison and Maron 1995).

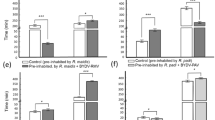

Inflorescence density was analyzed with a negative binomial GLMM. We first fitted inflorescence density against canopy leaf retention as a fixed effect and site as a random effect to characterize their overall relationship. Then, to test whether our hypothesized mechanism through caterpillar infection and herbivory caused the correlation between canopy leaf retention and inflorescence density, we performed confirmatory path analysis and estimated the contribution of higher caterpillar infection risk and lower herbivory to this relationship (package: piecewiseSEM, Lefcheck 2016). To do so, we selected the subset of lupine bushes without tussock moths and averaged the caterpillar infection rate and caterpillar infection severity at each bush (n = 48). Infection severity was turned into a proportion by dividing the numeric rank by the maximum rank. We constructed a four-part piecewise structural equation model according to our structural hypothesis (Fig. 2): (i) a binomial GLMM of mean bush infection rate, (ii) a beta GLMM of mean infection severity, (iii) a GLMM of proportion of July herbivory, and (iv) a negative binomial GLMM of inflorescence density. The first two sub-models were weighted by the number of caterpillars examined. July herbivory was logit transformed prior to fitting (Warton and Hui 2011). Site was included as a random effect for all individual models. Conditional independences implied by our structural hypothesis were tested using Shipley’s d-separation test (Shipley 2009).
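The two data transformations mentioned above (rescaling severity ranks to a proportion, and logit-transforming a proportion) can be sketched as follows. This is a minimal illustration under stated assumptions: the numeric coding in `SEVERITY_RANK`, the function names, and the value of `eps` are hypothetical and not taken from the study. Adding a small constant to both the numerator and the denominator is one common way to keep the logit finite at 0 and 1.

```python
import math

# Assumed numeric coding of the ordinal severity levels (hypothetical).
SEVERITY_RANK = {"uninfected": 0, "low": 1, "medium": 2,
                 "high": 3, "very high": 4}


def severity_proportion(levels):
    """Mean severity rank of a bush's caterpillars, divided by the maximum rank."""
    max_rank = max(SEVERITY_RANK.values())
    ranks = [SEVERITY_RANK[level] for level in levels]
    return sum(ranks) / (len(ranks) * max_rank)


def adjusted_logit(p, eps=0.01):
    """Logit of a proportion, with eps added to the numerator and the
    denominator so that p = 0 and p = 1 give finite values.
    The default eps is an arbitrary illustrative choice."""
    return math.log((p + eps) / (1.0 - p + eps))
```

For instance, a bush with one low and one medium infection gets a severity proportion of (1 + 2) / (2 × 4) = 0.375 under this assumed coding.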

Fig. 2 Structural equation model of the effect of winter leaf retention on spring and summer inflorescence production. Arrow sizes were scaled relative to the coefficients standardized with the latent linear method as displayed (Grace et al. 2018). Arrow line type shows the statistical significance of the paths. Shipley’s d-separation test did not find any evidence of independence violation (Fisher's C = 5.9, df = 6, P = 0.44).
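As a rough check on how the d-separation statistic relates to its P value, the sketch below computes Fisher's C from a set of independence-claim p-values and evaluates the upper tail of a chi-square distribution with 2k degrees of freedom (k claims). The function names are hypothetical, and the closed-form tail probability used here is valid only for even degrees of freedom, which always holds for Fisher's C.

```python
import math


def fishers_c(p_values):
    """Fisher's C: -2 times the sum of the log p-values of the independence claims."""
    return -2.0 * sum(math.log(p) for p in p_values)


def chi2_sf_even_df(x, df):
    """Upper-tail chi-square probability using the closed form for even df:
    exp(-x/2) * sum_{k=0}^{df/2 - 1} (x/2)^k / k!"""
    if df <= 0 or df % 2 != 0:
        raise ValueError("closed form requires a positive even df")
    half = x / 2.0
    term, total = 1.0, 1.0
    for k in range(1, df // 2):
        term *= half / k
        total += term
    return math.exp(-half) * total


# Reported statistic: C = 5.9 with df = 6 (three independence claims).
p_value = chi2_sf_even_df(5.9, 6)
```

With the rounded statistic C = 5.9, this gives a P value of roughly 0.43, close to the reported 0.44; a small difference is expected because C is reported to two significant figures.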

Leaf feeding trial: Infection from leaf-feeding

We tested whether horizontal transmission occurred as the result of feeding on lupine leaves in the canopy and the litter. On April 6, we randomly assigned 84 late instar caterpillars to one of three different diet treatments: a virus free lettuce control (n = 21), lupine canopy leaves (n = 42), and lupine litter (n = 21). Caterpillars were collected from a site (FIR; Figure S1) with one of the highest caterpillar densities (mean ± SE: 2.4 ± 0.69 per bush). Leaf samples were randomly taken from, or under, the canopy of ten lupine bushes at a site (AFL) and stored at 4 °C. The caterpillars were fed three times at four-day intervals then weighed and frozen on day 12 (see supplemental methods for rearing procedures). Fifteen caterpillars that formed cocoons were frozen early. Caterpillars were subsequently dissected to determine infection rate and infection severity.

We fitted models of infection rate and infection severity for the three diet treatments in a binomial generalized linear model and cumulative link model, respectively. We controlled for whether the caterpillar pupated since caterpillars that pupated stopped feeding early and may therefore have reduced infection rate or infection severity due to the shorter exposure time.

Litter feeding trial: Infection of caterpillars in the litter

In the litter feeding trial, we tested whether higher litterfall increased infection risk for younger caterpillars in the litter and how this may have impacted caterpillar performance. We exposed 134 second instar caterpillars from a laboratory colony to the litterfall collected from 134 different lupine bushes across our twelve sites (see supplemental methods for laboratory colony setup). These lupine bushes were the same bushes from which we collected caterpillars in the spring and measured leaf retention in the winter. The litterfall was collected in July by placing a bucket below the canopy and shaking the canopy vigorously. The collected litter was stored in individual bags and refrigerated at 4 °C. On day zero, we weighed each caterpillar and randomly assigned each caterpillar to receive approximately 60 mL (high) or 20 mL (low) of litter, reflecting the realistic range of litter densities that caterpillars naturally encountered at our study site. Although this level of leaf litter limited caterpillar growth (caterpillars reared on lettuce can reach pupation in a growth chamber within three months, as opposed to ~ 10 months at our field site), we believe that this design more realistically assesses the infection risk and net effect of litter density on caterpillar mortality in the field. The dry summer in California is a stressful time for early instar A. virginalis, which generally struggle to obtain enough food to survive and grow. We added the same amount of litter again on day six without removing the unfinished food. On day nine, we reweighed each caterpillar and began feeding the caterpillars lettuce and checking for survival every three to four days until day 29 when we froze all survivors. Caterpillars that died prematurely were weighed and frozen. We later dissected the caterpillars to assess infection rate and infection severity.

To analyze caterpillar infection severity, we included litter treatment, day-zero weight, canopy leaf retention, survival time, and caterpillar densities as fixed effects, and site as a random effect in a CLMM (n = 134, package: brms, Bürkner 2017). To determine if infection of early instar caterpillars in July was directly related to leaf retention, instead of through infection of the earlier generation of late instar caterpillars in March or another correlated factor (Figure S4), we fitted the same model again conditioning on an estimate of mean bush infection severity using a subset of plants from which we collected caterpillars in the spring (n = 44).

Caterpillar weight was analyzed with a log-normal GLMM. We fitted day-nine weight against day-zero weight, litter treatment, and a random effect for site. Because we expected infection-mediated survival patterns to exhibit a time lag and nutrition-mediated survival patterns to appear more immediately, we analyzed survival at two timescales. Short-term survival within the first nine days, when the caterpillars were still feeding exclusively on litter, was analyzed with a binomial GLMM. We fitted a model of death at day nine with litter treatment, day-zero weight, and day-nine weight as fixed effects, and site as a random effect. We analyzed longer-term survival time over the entire experiment in a survival analysis (package: survival, Therneau 2020). Litter treatment, day-zero weight, and site were included as predictors. In a separate model, we conditioned on day-nine weight to isolate the effect of viral infection, rather than starvation, on mortality risk. Predictors were Z-score transformed prior to fitting and all analyses were conducted in R, version 4.0.2 (R Core Team 2020).

Results

Field observations: Infection of caterpillars in the canopy and impact on inflorescence density

We collected a total of 108 caterpillars from the field in March. Rates of infection were high (88/108), but most cases were of low infection severity (48/88). Caterpillars collected from plants that retained more leaves in winter were more likely to be infected in spring. Consistent with our hypothesis, controlling for caterpillar densities and the order of dissection, we found that winter leaf retention was the only predictor we tested that significantly increased infection rate of larvae on plants (Wald test: β = 0.84, Z = 2.3, P = 0.022). A one SD increase in total winter leaf retention corresponded to a 132% increase in the odds of infection (Fig. 3a; see supplemental results on sensitivity analysis; Figure S6). Total litter depth (β = − 0.24, Z = − 1.0, P = 0.30) and the proportion of lupine in the litter (β = 0.10, Z = 0.36, P = 0.72) were not significant explanatory variables. A similar, albeit weaker, pattern was found when we examined infection severity. Leaf retention led to a marginal trend of higher infection severity (β = 0.42, Z = 1.8, P = 0.072). A one SD increase in total winter leaf retention corresponded to a 52% increase in the odds of more severe infections (Fig. 3b, c). Litter depth (β = − 0.14, Z = − 0.88, P = 0.38) and proportion of lupine in the litter (β = 0.040, Z = 0.20, P = 0.84) were again both non-significant.
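The percentage changes in odds quoted above follow from exponentiating the model coefficients, which are on the log-odds scale for both the binomial and the cumulative link models. The short sketch below reproduces that arithmetic; the function name `percent_change_in_odds` is hypothetical, and the coefficients are the rounded values reported in the text.

```python
import math


def percent_change_in_odds(beta):
    """Percent change in the odds for a one-unit increase in a predictor
    (here one SD, because predictors were Z-score transformed)."""
    return (math.exp(beta) - 1.0) * 100.0


# Reported leaf retention coefficients for infection rate and severity.
for label, beta in [("infection rate", 0.84), ("infection severity", 0.42)]:
    print(f"{label}: {percent_change_in_odds(beta):.0f}% change in odds")
```

Applied to β = 0.84 and β = 0.42, this gives roughly 132% and 52%, matching the figures reported for infection rate and infection severity.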

Fig. 3 Infection risk of woolly bear caterpillars in March collected in the field. Plot A shows the probability of infection with a 95% confidence interval as predicted by a GLMM (marginal R2 = 0.31). The size of each point was scaled relative to the number of identical cases for ease of display (i.e., caterpillars were not binned in the analysis). Plot B shows the infection severity and plot C shows the proportion of each infection severity level as predicted by a CLMM (marginal R2 = 0.12). Ribbons represent the upper and lower bounds of the 95% confidence interval. There were no highly infected caterpillars in this experiment.

Plants that held more leaves through winter had a higher inflorescence density. Of the 234 plants we resurveyed, winter leaf retention was associated with a moderately higher density of inflorescences in July (β = 0.16, Z = 2.6, P = 0.011). A one SD higher proportion of winter leaf retention corresponded to an 18% increase in inflorescence density the following summer. In our structural equation model, higher winter leaf retention led to a higher caterpillar infection rate, higher infection severity, lower herbivory, and consequently increased plant inflorescence density by summer (Fig. 2). Although this was the only significant path we detected in our analysis from winter leaf retention to flower production, a stronger but marginally non-significant direct path was also detected (β = 0.11, Z = 1.8, P = 0.071). If this direct path is included, the indirect path through pathogen infection (path coefficient: 0.018) mediated about 14% of the total effect (path coefficient: 0.13) of winter leaf retention on summer inflorescence density.
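The mediated share reported above is the indirect path coefficient divided by the total effect, where the total is the sum of the direct and indirect paths. A minimal sketch of that arithmetic, using the rounded coefficients from the text (the function name `indirect_share` is hypothetical):

```python
def indirect_share(direct, indirect):
    """Fraction of the total effect (direct + indirect) carried by the indirect path."""
    total = direct + indirect
    return indirect / total


direct_path = 0.11     # reported direct path, leaf retention -> inflorescence density
indirect_path = 0.018  # reported indirect path through infection and herbivory
total_effect = direct_path + indirect_path
share = indirect_share(direct_path, indirect_path)
```

Here `total_effect` is 0.128 (reported as 0.13) and `share` is about 0.14, that is, the 14% quoted in the text.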

Leaf feeding trial: Infection from leaf feeding

Leaves in lupine canopies were a source of virus. After only 12 days of exposure, caterpillars that fed on leaves from the canopy had six times the odds of being infected (β = 1.8, Z = 2.1, P = 0.032) and three times the odds of having higher infection severity compared to feeding on the lettuce control (β = 1.1, Z = 2.1, P = 0.033; Figure S5a-b). In contrast, leaves from the litter did not significantly increase the probability of infection (β = 0.62, Z = 0.77, P = 0.44) nor infection severity (β = 0.22, Z = 0.39, P = 0.70). However, our detection power was low due to the overall high background infection rate (16/22 infected in control group). One additional caveat is that the difference in infection among leaf feeding treatment groups might have been driven by host plant differences (lettuce vs. L. arboreus) that altered the progression of infection (Hoover et al. 2000).

Litter feeding trial: Infection of caterpillars in the litter

All second instar caterpillars in our feeding trial were infected and infections were generally severe (115/134 highly or very highly infected). We therefore only analyzed infection severity. Controlling for caterpillar densities, winter leaf retention was associated with a moderate increase in the severity of infections among young caterpillars in July (β = 0.41, 95% Credible Interval (CI) = [0.052, 0.77]; Fig. 4a). Litterfall may also serve as a source of infection for young caterpillars. The treatment that provided less litter to caterpillars decreased the odds of more severe infections by 44% (β = − 0.58, 95% CI = [− 1.2, 0.046]; Fig. 4b), although this effect was marginally non-significant because the 95% CI narrowly overlapped zero.

Fig. 4 Infection severity of second instar caterpillars in the litter feeding trial in July. The first row (A, B) shows the effect of winter leaf retention and litter treatment as predicted by a CLMM without including mean March caterpillar infection severity in the model (n = 134, marginal R2 = 0.12 ± 0.046). The second row (C, D) shows the effect of mean March caterpillar infection severity and litter treatment as predicted by a second CLMM (n = 44, marginal R2 = 0.36 ± 0.069). All caterpillars were infected in this experiment.

The positive association between winter leaf retention and caterpillar infection severity in July may be because of greater persistence of OBs from the previous year or because of greater OB deposition in the spring when late instar caterpillars were infected. Therefore, we performed a mediation analysis (Zhao et al. 2010) to examine whether the effect of winter leaf retention on early instar caterpillar infection severity in July was mediated through infection severity of late instar caterpillars in March. This was indeed the case. When we controlled for the effect of mean infection severity of caterpillars in March, winter leaf retention was no longer significant (β = 0.39, 95% CI = [− 0.44, 1.2]), indicating that winter leaf retention was not directly responsible for the observed pattern (Figure S4). Rather, the relationship between winter leaf retention and young caterpillar infection severity in July was mediated through mean March caterpillar infection severity, which was strongly positively associated with higher July infection severity (β = 0.91, 95% CI = [0.14, 1.7]; Fig. 4c). Controlling for mean March infection severity, the estimated effect of lower litterfall was also stronger in the negative direction and the 95% CI no longer overlapped zero (β = − 1.5, 95% CI = [− 2.8, − 0.23]; Fig. 4d). Caterpillars in the low litter treatment had about four times lower odds of having severe infections.

Although litter may have been a reservoir for the virus, it was also an important source of food for caterpillars. Analysis of caterpillar weight revealed that caterpillars in the treatment that received less litter weighed an average of 25% less than caterpillars in the high litter treatment by day nine when the diet was switched from litter to lettuce (β = − 0.29, Z = − 3.8, P < 0.001; Fig. 5a). This weight was highly predictive of the probability of death by day nine (β = − 3.7, Z = − 5.1, P < 0.001; Fig. 5b), and when statistically controlled for, explained away the effect of lower litter exposure on caterpillar mortality (β = − 1.5, Z = − 1.5, P = 0.13). Over a longer 29-day period, over which we expect the effect of viral infection to be more pronounced, caterpillars that were heavier on day nine (β = 0.40, Z = 7.3, P < 0.001; Fig. 5c) and lighter on day zero (β = − 0.070, Z = − 2.2, P = 0.029) had prolonged survival time. Lower litter exposure also prolonged survival time (β = 0.14, Z = 2.3, P = 0.020). This reversal of the effect of litter exposure on mortality is consistent with our expectation of greater virus-induced mortality. When we examined the net effect of litter treatment without removing its effect through day-nine caterpillar weight, we found no effect of litter exposure on survival time (β = − 0.016, Z = − 0.26, P = 0.80), indicating that the benefit from lower starvation is canceled out by the risk from infection (Figure S4).

Fig. 5 The weight and survival of second instar caterpillars in the litter feeding experiment. Caterpillars in the low litter treatment are shown in purple, whereas those in the high litter treatment are shown in green. The boxplots in plot A show the 25th, 50th, and 75th percentile with outliers as colored points and raw data as black points. The ribbons in plots B and C represent 95% confidence intervals. The black line in plot B shows the mortality pattern exhibited by caterpillars in both treatment groups. Purple and green lines in plot C show the survival patterns for each treatment group. The size of the points was scaled relative to the number of caterpillars with the same value. In plot C, caterpillars that were killed are shown as triangles, while caterpillars that died naturally are shown as circles.

Discussion

Late instar A. virginalis in the spring were more likely to be infected on L. arboreus that retained more leaves over winter, and more likely to sustain a more severe infection. Winter leaf retention led to an overall higher inflorescence density and 14% of this effect was attributed to a reduction in herbivory caused by the higher caterpillar infection rate in a structural equation model. Winter leaf retention also led to more inflorescences due to mechanisms that were unrelated to herbivory. On lupines with greater winter leaf retention, the higher infection rate among late instar caterpillars in the spring may have further increased the infection severity of young caterpillars of the following generation in the summer through contact with more contaminated foliage, frass, or regurgitant dropped into the litter layer.

Our results are consistent with our hypothesis that winter leaf retention promoted the persistence of viral OBs from the previous generation of caterpillars on the plant canopy, possibly through physical retention or shielding from ultraviolet radiation inactivation (Biever and Hostetter 1985; Pepi et al. 2022), resulting in increased viral exposure of the subsequent generation of caterpillars. However, we do not claim that this is the only possible explanation for the observed patterns. Without direct measurements of OB activity on leaves, it is possible that differences in plant chemistry related to leaf retention altered caterpillar infection susceptibility (Hay et al. 2020). Horizontal transmission could have also largely occurred in the understory (Richards et al. 1999), and infected caterpillars disproportionally climbed onto the taller canopy to feed in spring, a behavioral modification known to occur since the nineteenth century (Vega and Kaya 2012). It is worth noting that these alternative explanations must have an effect several times the size of direct and delayed density dependence to be a sufficient explanation, as revealed by our sensitivity analysis (Figure S6). Our study therefore suggests further lines of inquiry of potentially high biological significance.

Leaf longevity as an indirect resistance

While our results do not directly test a fitness effect, they may be consistent with an indirect defensive function (whether coincidental or adapted is another question). We therefore begin by speculating on leaf life span as an indirect defense. Since Faeth et al. (1981) proposed the appealing hypothesis that leaf abscission can drop insect folivores to the ground, thereby killing or starving them, the hypothesis that shortened leaf life span may be adaptive as a direct resistance against herbivores has been questioned on empirical merits (Pritchard and James 1984; Kahn and Cornell 1989; Stiling and Simberloff 1989). While this mechanism of direct resistance is unlikely to apply to most mobile organisms, the high dispersal limitation of pathogens makes them prime candidates. Leaf longevity may thus provide unrecognized indirect resistance to plants that facilitates greater top-down pressure on herbivores caused by their pathogens. While this strategy of indirect resistance is likely not a common explanation for patterns of leaf life spans seen in nature (including for L. arboreus), the strategy does offer an additional selective pressure, among many, that may drive the evolution of evergreen or marcescent leaf habits (Otto and Nilsson 1981; Chabot and Hicks 1982; Nilsson 1983; Wright et al. 2004).

Baculoviruses have been suggested as a key factor that drives many insect population cycles and regulates outbreaks (Anderson and May 1980; Cory and Myers 2003; Myers and Cory 2013). In those systems, baculoviruses can impose extremely effective top-down control, often reducing herbivore populations by multiple orders of magnitude (Myers and Cory 2016). This was indeed the case for A. virginalis, for which we observed > 90% mortality following a viral outbreak (Pepi et al. 2022). Pathogens more generally have been identified as a key driver of density dependence and regulator of population density in many systems (Anderson and May 1978). Considering that many of these pathogens reside on the phylloplane and face the ubiquitous challenge of environmental persistence, we can expect leaf persistence to facilitate a great diversity of bodyguards. A diverse portfolio of potential bodyguards makes the efficacy of the defense more stable (Bolnick et al. 2011). Indeed, this generality means that specific taxa of herbivores and pathogens need not reliably interact with the plant or alter plant fitness for the bodyguard effect to be a selective pressure for longer leaf life span. For instance, at our field sites, O. vetusta caterpillars often completely defoliate L. arboreus and are similarly infected by a baculovirus (VSP, personal observation). Leaf longevity may affect both pathogens in a similar manner. Of course, plants themselves and the mutualists of plants may also be subject to infection. Therefore, whatever fitness benefit is acquired from reduced herbivory must be weighed against the associated phytopathogen risk and indirect effects through other mutualists.

Future directions

While leaf life span effects on pathogens might affect herbivores in many systems, consequential benefits to the plant likely will not occur until herbivores reach a density sufficient to support a large enough population of pathogens in the environment, which coincides with when herbivores may have the largest fitness effects. Pathogen bodyguards may therefore not lower the herbivory that plants usually experience, but instead lower the upper quantiles of herbivory via stronger negative density dependence. As plant fitness–herbivory relationships are likely generally concave-down (Marquis 1996), these rare high-herbivory events would have a higher than expected impact on plant fitness. Further empirical tests of the hypothesis may require sampling across a wider herbivory gradient, with emphasis on whether a reduction in skewness of the herbivory distribution is associated with longer leaf life spans or the presence of entomopathogens.
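
The reasoning about concave-down fitness relationships can be illustrated numerically. The following is a minimal sketch, not an analysis from this study: the quadratic fitness curve, the two herbivory distributions, and all function names are hypothetical, chosen only to show that, for the same mean herbivory, a distribution with a rare high-herbivory tail gives lower mean fitness under a concave-down curve (Jensen's inequality).

```python
# Hypothetical illustration only: the curve and numbers are assumptions,
# not estimates from the lupine-caterpillar system.

def fitness(h: float) -> float:
    """Assumed concave-down fitness curve; h is the proportion of leaf area lost."""
    return 1.0 - h ** 2


def expected(values, probs, f=lambda x: x):
    """Expectation of f(value) over a discrete distribution."""
    return sum(p * f(v) for v, p in zip(values, probs))


# Two assumed herbivory distributions with the same mean (0.2):
# 'even' has no variation; 'skewed' has rare, severe herbivory events.
even = ([0.2], [1.0])
skewed = ([0.1, 0.9], [0.875, 0.125])

mean_even = expected(*even)
mean_skewed = expected(*skewed)
fit_even = expected(*even, f=fitness)
fit_skewed = expected(*skewed, f=fitness)

print(f"mean herbivory: even={mean_even:.3f}, skewed={mean_skewed:.3f}")
print(f"mean fitness:   even={fit_even:.3f}, skewed={fit_skewed:.3f}")
```

Under these assumed values, mean herbivory is identical in the two cases, but mean fitness is lower for the skewed distribution. Truncating the upper tail of herbivory could therefore raise mean fitness even if mean herbivory stayed the same.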

More broadly, the idea that any plant trait may be adaptive by facilitating pathogen control of herbivores has been proposed as a general plant defensive strategy (Elliot et al. 2000; Cory and Hoover 2006; Pearse et al. 2020), though many mechanisms remain little examined. For instance, silica bodies and plant spines are hypothesized to facilitate the entry of pathogenic microbes into herbivores (Lev-Yadun and Halpern 2008; but see Keathley et al. 2012). Reallocation of plant secondary metabolites to avoid inhibiting baculovirus pathogenicity has also been hypothesized (Shikano et al. 2017). Induced plant resistance has been suggested to increase pathogen risk by encouraging cannibalism among herbivores (Orrock et al. 2017) as well as by prolonging the window of vulnerability during development (Shikano et al. 2018). Through differences in phytochemistry, plants could potentially facilitate or exclude, to their own benefit, certain pathogen genotypes that differ in pathogenicity, speed of kill, or pathogen yield (Hodgson et al. 2002). However, empirical data on these relationships remain scant and suffer from a lack of evidence about the plant fitness costs and benefits (Inyang et al. 1999; Hountondji et al. 2005; Rosa et al. 2018; Gange et al. 2019; Gasmi et al. 2019; this paper).

In the future, it would be highly profitable to investigate how plant traits maintain or recruit entomopathogens (Cory and Hoover 2006). We advocate for a plant-centric perspective to understand the fitness costs and benefits of these traits. This research direction will not only help us better understand how pathogens persist in the field but may also prove invaluable for selecting plant traits that enhance biocontrol efficacy (Cortesero et al. 2000).

Data availability

The data and code are deposited on Dryad and Zenodo, respectively. Pan, Vincent; Pepi, Adam; LoPresti, Eric; Karban, Richard (2023), The Consequence of Leaf Life Span to Virus Infection of Herbivorous Insects, Dryad, Dataset, https://doi.org/10.25338/B8PD2K.

References

Anderson RM, May RM (1978) Regulation and stability of host-parasite population interactions: I. Regulatory processes. J Anim Ecol 47:219–247

Anderson RM, May RM (1980) Infectious diseases and population cycles of forest insects. Science 210(4470):658–661

Barrett JW, Brownwright AJ, Primavera MJ, Palli SR (1998) Studies of the Nucleopolyhedrovirus infection process in insects by using the green fluorescence protein as a reporter. J Virol 72(4):3377–3382

Biever KD, Hostetter DL (1985) Field persistence of Trichoplusia ni (Lepidoptera: Noctuidae) single-embedded nuclear polyhedrosis virus on cabbage foliage. Environ Entomol 14(5):579–581

Bolnick DI, Amarasekare P, Araújo MS et al (2011) Why intraspecific trait variation matters in community ecology. Trends Ecol Evol 26:183–192

Brooks ME, Kristensen K, van Benthem KJ, Magnusson A, Berg CW, Nielsen A et al (2017) glmmTMB balances speed and flexibility among packages for zero-inflated generalized linear mixed modeling. R J 9(2):378–400

Bürkner PC (2017) brms: An R package for Bayesian multilevel models using Stan. J Stat Softw 80(1):1–28

Chabot BF, Hicks DJ (1982) The ecology of leaf life spans. Annu Rev Ecol Syst 13(1):229–259

Christensen RHB (2019) ordinal: Regression models for ordinal data. R package version 2019.4-25. https://CRAN.R-project.org/package=ordinal

Cortesero AM, Stapel JO, Lewis WJ (2000) Understanding and manipulating plant attributes to enhance biological control. Biol Control 17(1):35–49

Cory JS, Hoover K (2006) Plant-mediated effects in insect–pathogen interactions. Trends Ecol Evol 21(5):278–286

Cory JS, Myers JH (2003) The ecology and evolution of insect baculoviruses. Annu Rev Ecol Evol Syst 34:239–272

D’Amico V, Elkinton JS (1995) Rainfall effects on transmission of gypsy moth (Lepidoptera: Lymantriidae) nuclear polyhedrosis virus. Environ Entomol 24(5):1144–1149

Elliot SL, Sabelis MW, Janssen A, van der Geest LPS, Beerling EAM, Fransen J (2000) Can plants use entomopathogens as bodyguards? Ecol Lett 3(3):228–235

Faeth SH, Connor EF, Simberloff D (1981) Early leaf abscission: a neglected source of mortality for folivores. Am Nat 117(3):409–415

Gange AC, Koricheva J, Currie AF, Jaber LR, Vidal S (2019) Meta-analysis of the role of entomopathogenic and unspecialized fungal endophytes as plant bodyguards. New Phytol 223(4):2002–2010

Gasmi L, Martínez-Solís M, Frattini A, Ye M, Collado MC, Turlings TCJ et al (2019) Can herbivore-induced volatiles protect plants by increasing the herbivores’ susceptibility to natural pathogens? Appl Environ Microbiol 85(1):e01468-18

Grace JB, Johnson DJ, Lefcheck JS, Byrnes JEK (2018) Quantifying relative importance: computing standardized effects in models with binary outcomes. Ecosphere 9(6):e02283

Harrison S, Maron JL (1995) Impacts of defoliation by tussock moths (Orgyia vetusta) on the growth and reproduction of bush lupine (Lupinus arboreus). Ecol Entomol 20(3):223–229

Hay WT, Behle RW, Berhow MA, Miller AC, Selling GW (2020) Biopesticide synergy when combining plant flavonoids and entomopathogenic baculovirus. Sci Rep 10:6806

Hodgson DJ, Vanbergen AJ, Hartley SE, Hails RS, Cory JS (2002) Differential selection of baculovirus genotypes mediated by different species of host food plant. Ecol Lett 5(4):512–518

Hoover K, Washburn JO, Volkman LE (2000) Midgut-based resistance of Heliothis virescens to baculovirus infection mediated by phytochemicals in cotton. J Insect Physiol 46(6):999–1007

Hountondji FCC, Sabelis MW, Hanna R, Janssen A (2005) Herbivore-induced plant volatiles trigger sporulation in entomopathogenic fungi: the case of Neozygites tanajoae infecting the cassava green mite. J Chem Ecol 31(5):1003–1021

Hsieh Y-L, Linsenmair KE (2012) Seasonal dynamics of arboreal spider diversity in a temperate forest. Ecol Evol 2(4):768–777

Inglis GD, Goettel MS, Johnson DL (1993) Persistence of the entomopathogenic fungus Beauveria bassiana, on phylloplanes of crested wheatgrass and alfalfa. Biol Control 3:258–270

Inglis GD, Johnson DL, Goettel MS (1995) Effects of simulated rain on the persistence of Beauveria bassiana conidia on leaves of alfalfa and wheat. Biocontrol Sci Technol 5(3):365–370

Inyang EN, Butt TM, Beckett A, Archer S (1999) The effect of crucifer epicuticular waxes and leaf extracts on the germination and virulence of Metarhizium anisopliae conidia. Mycol Res 103(4):419–426

Kahn DM, Cornell HV (1989) Leafminers, early leaf abscission, and parasitoids: a tritrophic interaction. Ecology 70(5):1219–1226

Karban R, Grof-Tisza P, Maron JL, Holyoak M (2012) The importance of host plant limitation for caterpillars of an arctiid moth (Platyprepia virginalis) varies spatially. Ecology 93(10):2216–2226

Karban R, Grof-Tisza P, Holyoak M (2017) Wet years have more caterpillars: Interacting roles of plant litter and predation by ants. Ecology 98(9):2370–2378

Keathley CP, Harrison RL, Potter DA (2012) Baculovirus infection of the armyworm (Lepidoptera: Noctuidae) feeding on spiny- or smooth-edged grass (Festuca spp.) leaf blades. Biol Control 61:147–154

Lacey LA, Solter LF (2012) Initial handling and diagnosis of diseased invertebrates. In: Lacey LA (ed) Manual of Techniques in Invertebrate Pathology. Academic Press, London

Lefcheck JS (2016) piecewiseSEM: Piecewise structural equation modelling in R for ecology, evolution, and systematics. Methods Ecol Evol 7(5):573–579

Lev-Yadun S, Halpern M (2008) External and internal spines in plants insert pathogenic microorganisms into herbivore’s tissues for defense. In: Van Dijk T (ed) Microbial Ecology Research Trends. Nova Biomedical Books, New York, pp 155–168

Lynch RE, Lewis LC, Berry EC (1980) Application efficacy and field persistence of Bacillus thuringiensis when applied to corn for European corn borer control. J Econ Entomol 73(1):4–7

Marquis RJ (1996) Plant architecture, sectoriality and plant tolerance to herbivores. Vegetatio 127:85–97

McGuire MR, Shasha BS, Lewis LC, Nelsen TC (1994) Residual activity of granular starch-encapsulated Bacillus thuringiensis. J Econ Entomol 87(3):631–637

Mooney HA, Dunn EL (1970) Photosynthetic systems of mediterranean-climate shrubs and trees of California and Chile. Am Nat 104(939):447–453

Myers JH, Cory JS (2013) Population cycles in forest Lepidoptera revisited. Annu Rev Ecol Evol Syst 44(1):565–592

Myers JH, Cory JS (2016) Ecology and evolution of pathogens in natural populations of Lepidoptera. Evol Appl 9(1):231–247

Nilsson SG (1983) Evolution of leaf abscission times: alternative hypotheses. Oikos 40:318

Orrock J, Connolly B, Kitchen A (2017) Induced defences in plants reduce herbivory by increasing cannibalism. Nat Ecol Evol 1(8):1205–1207

Otto C, Nilsson LM (1981) Why do beech and oak trees retain leaves until spring? Oikos 37(3):387

Pearse IS, LoPresti E, Schaeffer RN, Wetzel WC, Mooney KA, Ali JG et al (2020) Generalising indirect defence and resistance of plants. Ecol Lett 23(7):1137–1152

Pepi A, Holyoak M, Karban R (2021) Altered precipitation dynamics lead to a shift in herbivore dynamical regime. Ecol Lett 24(7):1400–1407

Pepi A, Pan V, Rutkowski D, Mase V, Karban R (2022) Influence of delayed density and ultraviolet radiation on caterpillar baculovirus infection and mortality. J Anim Ecol 91(11):2192–2202

Pritchard IM, James R (1984) Leaf fall as a source of leaf miner mortality. Oecologia 64(1):140–141

Pritzkow S, Morales R, Moda F, Khan U, Telling GC, Hoover E et al (2015) Grass plants bind, retain, uptake and transport infectious prions. Cell Rep 11(8):1168–1175

R Core Team (2020) R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.r-project.org/

Raymond B, Hartley SE, Cory JS, Hails RS (2005) The role of food plant and pathogen-induced behaviour in the persistence of a nucleopolyhedrovirus. J Invertebr Pathol 88(1):49–57

Rosa E, Woestmann L, Biere A, Saastamoinen M (2018) A plant pathogen modulates the effects of secondary metabolites on the performance and immune function of an insect herbivore. Oikos 127(10):1539–1549

Shikano I (2017) Evolutionary ecology of multitrophic interactions between plants, insect herbivores and entomopathogens. J Chem Ecol 43(6):586–598

Shikano I, Shumaker KL, Peiffer M, Felton GW, Hoover K (2017) Plant-mediated effects on an insect–pathogen interaction vary with intraspecific genetic variation in plant defences. Oecologia 183(4):1121–1134

Shikano I, McCarthy E, Hayes-Plazolles N, Slavicek JM, Hoover K (2018) Jasmonic acid-induced plant defenses delay caterpillar developmental resistance to a baculovirus: Slow-growth, high-mortality hypothesis in plant–insect–pathogen interactions. J Invertebr Pathol 158:16–23

Shipley B (2009) Confirmatory path analysis in a generalized multilevel context. Ecology 90(2):363–368

Stiling P, Simberloff D (1989) Leaf Abscission: Induced Defense against Pests or Response to Damage? Oikos 55(1):43–49

Therneau TM (2020) survival: Survival analysis. R package version 3.2-7. https://CRAN.R-project.org/package=survival

Vega F, Kaya H (2012) Insect Pathology, 2nd edn. Academic Press, San Diego, CA

Warton DI, Hui FKC (2011) The arcsine is asinine: the analysis of proportions in ecology. Ecology 92(1):3–10

Williams T, Virto C, Murillo R, Caballero P (2017) Covert Infection of Insects by Baculoviruses. Front Microbiol 8:1337

Wright IJ, Reich PB, Westoby M, Ackerly DD, Baruch Z, Bongers F et al (2004) The worldwide leaf economics spectrum. Nature 428(6985):821

Yamazaki K, Sugiura S (2008) Deer predation on leaf miners via leaf abscission. Sci Nat 95(3):263–268

Zhao X, Lynch JG Jr, Chen Q (2010) Reconsidering Baron and Kenny: myths and truths about mediation analysis. J Consum Res 37:197–206

Acknowledgements

We thank Harry Kaya, Danielle Rutkowski, Vinay Mase, Michael Turelli, and Jenny Cory for helping with the identification of the virus, Neal Williams for sharing microscope equipment and freezer, Jay Rosenheim for sharing incubators and lab space, Claire Beck and Jasmine Daragahi for help with rearing caterpillars, Jackie Sones for aiding access to the Bodega Marine Reserve, the joint-insect ecology lab group at UC Davis for providing feedback, Hanna Kahl and Emily Meineke for commenting on our manuscript, and the anonymous reviewers for providing constructive comments.

Funding

VSP, AP, and RK were funded by NSF-LTREB (1456225) and an NSF-REU supplement (DEB-2018169). EFL was funded by start-up funds from Oklahoma State University.

Author information

Contributions

VSP, AP, and RK conceived the study and collected the data. VSP and AP conducted the analysis. VSP wrote the manuscript. AP, EFL, and RK provided editorial support. All authors contributed critically to the drafts and approved final publication.

Ethics declarations

Conflict of interest

We declare no conflict of interest.

Additional information

Communicated by Caroline Müller.

About this article

Cite this article

Pan, V.S., Pepi, A., LoPresti, E.F. et al. The consequence of leaf life span to virus infection of herbivorous insects. Oecologia 201, 449–459 (2023). https://doi.org/10.1007/s00442-023-05325-w

DOI: https://doi.org/10.1007/s00442-023-05325-w