Abstract

Multiple sclerosis (MS) is a demyelinating neurological disorder with a highly heterogeneous clinical presentation and course of progression. As there is no known cure, disease-modifying therapies are the only available treatment. Careful selection of suitable therapies is necessary, as they can be accompanied by serious risks and adverse effects such as infection. Magnetic resonance imaging (MRI) plays a central role in the diagnosis and management of MS, although MRI lesions show only moderate associations with MS clinical outcomes, a phenomenon known as the clinico-radiological paradox. With the advent of machine learning (ML) in healthcare, the predictive power of MRI can be improved by leveraging both traditional and advanced ML algorithms capable of analyzing increasingly complex patterns within neuroimaging data. The purpose of this review was to examine the application of MRI-based ML to the prediction of MS disease progression. Studies were divided into five main categories: predicting the conversion of clinically isolated syndrome to MS, cognitive outcome, EDSS-related disability, motor disability and disease activity. The performance of the ML models is discussed, and the most influential MRI-derived biomarkers are highlighted. Overall, MRI-based ML presents a promising avenue for MS prognosis. Moreover, integrating imaging biomarkers with other multimodal patient data shows great potential for advancing personalized healthcare approaches in MS.

Introduction

Multiple sclerosis (MS) is a leading cause of non-traumatic neurological disability in young adults, with a worldwide prevalence of more than 2.5 million people [1, 2]. Although the exact cause of MS remains uncertain, its multifactorial nature is well established, with contributing factors including genetics, vitamin D deficiency, Epstein–Barr virus infection, smoking and obesity [3, 4]. MS affects the central nervous system (CNS) and is characterized by demyelinating lesions throughout the brain, optic nerves and spinal cord, followed by neurodegeneration [5, 6].

Two main disease courses are observed in MS. The first involves cycles of relapse and remission, known as relapsing-remitting MS (RRMS), which often begins in younger individuals and is frequently followed at later stages by slow, gradual progression known as secondary progressive MS (SPMS). The second course, which represents only around 10% of cases [7], is more common in older adults; it bypasses the relapsing-remitting phase and follows a continuous course of disease progression, termed primary progressive MS (PPMS) [8].

The earliest manifestation of MS is known as clinically isolated syndrome (CIS), which resembles relapses occurring in MS but manifests as an isolated incident and does not necessarily progress to overt MS [9]. Recent evidence also points to the possibility of a prodromal phase in disease evolution, in which non-specific neurological symptoms occur but do not qualify as CIS or definite MS [10]. While not officially part of the MS spectrum, radiologically isolated syndrome (RIS) involves the discovery of white matter (WM) lesions indicative of demyelinating disease without accompanying clinical symptoms [11, 12]. More than fifty percent of individuals with RIS develop clinical symptoms within a decade [13].

Despite the central focus on lesion formation and dissemination in MS [14], disability progression often occurs independently of new lesion activity [15]. Two distinct processes contribute to disability accumulation in MS, namely relapse-associated worsening and progression independent of relapse activity (PIRA) [16, 17]. Given the substantial contribution of PIRA and the limited success of immunomodulatory therapy against progression, the prevailing autoimmune dogma has been challenged, with the alternative view that the disease is essentially driven by a diffuse smoldering process, with focal inflammation as a secondary manifestation [15].

MS profoundly affects quality of life through disability accumulation [18] and a wide range of symptoms. While there is no cure, a broad spectrum of disease-modifying therapies (DMTs) is available for slowing disease progression and disability accumulation [19, 20].

Hence, predicting a patient’s disease course and risk of progression is important for weighing the benefits of early intervention against the adverse effects associated with DMT administration, particularly for highly effective treatments [21, 22]. However, the heterogeneous nature of the MS disease course precludes accurate prediction with existing prognostic indices and established biomarkers [23], which take a ‘one size fits all’ approach that does not account for individual differences. Artificial intelligence (AI) applications are now being extensively researched for personalized healthcare [24], including their use in MS [25].

Magnetic resonance imaging (MRI) has proven to be a pivotal imaging biomarker in MS, playing an essential role in diagnosis, monitoring, prognosis, and outcome assessment [26, 27]. However, expert visual inspection of images is laborious and time-consuming, creating a need for automated tools to extract useful biomarkers [28]. Furthermore, despite advances in MRI biomarker extraction, the association of these biomarkers with clinical disability in MS remains moderate at best [29]. To overcome this issue, current research combines the predictive power of MRI with AI, particularly machine learning (ML) [30].

Significant milestones in the MS disease course are the conversion of CIS to clinically definite MS and subsequent signs of increased disease activity reflecting the exacerbation of MS-related disabilities. The most prominent of these disabilities with the greatest impact on quality of life are motor and cognitive impairments [31], with the Expanded Disability Status Scale (EDSS) representing the current standard for assessing MS-related disability [32]. However, the EDSS heavily emphasizes ambulation as a disability measure, with limited contribution of cognition and upper limb function, particularly toward the higher end of the scale [33], necessitating additional assessments for a comprehensive picture of disability in MS patients. Furthermore, these aspects of disability should be independently assessed as they do not necessarily manifest together [34].

The current review provides a comprehensive overview of ML models integrating MRI biomarkers for the task of predicting disease progression in MS. Various reviews on the applications of ML in MS exist in the literature [28, 35,36,37]. However, this work provides a more focused perspective integrating disease progression, MRI biomarkers and ML methods. As such, this paper is meant to serve as a reference for both clinicians and researchers for this application.

Methods, scope and structure

To identify relevant studies for this review, Web of Science, Scopus and PubMed were searched for original research papers published between January 2015 and July 2024 using the following keywords: (“machine learning” OR “artificial intelligence” OR “neural networks” OR “deep learning”) AND (“multiple sclerosis” OR “MS”) AND (“MRI” OR “magnetic resonance imaging”). Additional records were identified by hand-searching relevant studies and review papers.

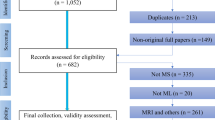

Research papers were included if they met the following criteria: (1) applied ML techniques to predictive tasks for MS disease progression, (2) incorporated MRI biomarkers as predictors and (3) involved predictive modeling with longitudinal data, using baseline data to forecast future outcomes. The results of the search are outlined in Fig. 1.

Within the identified body of research, studies investigating the value of ML algorithms in predicting the MS disease course covered the following five areas: predicting the conversion of CIS to MS, cognitive outcome, EDSS-related disability, motor disability and disease activity.

The current review is structured in accordance with the existing literature into the aforementioned five tasks. Sections “Magnetic resonance imaging biomarkers in MS” and “Overview of machine learning” aim to provide an overview of MRI-derived biomarkers (see Fig. 2) and ML methods for unfamiliar readers, and subsequent sections discuss each of the MS predictive tasks including the utilized ML models, MRI-derived predictors and model performance.

MRI-based biomarkers for MS. Traditional biomarkers include lesion features, such as lesion count, volume and location, in addition to the volume of various brain structures. More recent biomarkers include the central vein sign (CVS), paramagnetic rim lesions (PRL), diffusely abnormal white matter (DAWM) and normal appearing white matter (NAWM). NAWM is not visible on conventional MRI, but can be measured indirectly through structural integrity features such as those summarized above. Additionally, biomarkers derived from network neuroscience, including structural and functional connectivity measures, are useful in characterizing MS

Magnetic resonance imaging biomarkers in MS

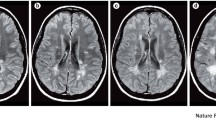

MRI is central to MS care, with established roles in diagnosis, monitoring, prognosis, and outcome assessment [26, 27, 38, 39]. Conventional MRI sequences used in MS include T1-weighted (T1w) and T2-weighted (T2w) imaging, and fluid-attenuated inversion recovery (FLAIR). WM lesions have a characteristic hyperintense appearance on T2w and FLAIR images. Some MS lesions are also visible on T1w images as hypointensities, commonly termed black holes [40,41,42]. When gadolinium (Gd)-based contrast is used with T1w imaging (T1w-Gd), active lesions appear hyperintense because Gd leaks through the compromised blood–brain barrier [43].

Classic MRI biomarkers usually quantify the number and volume of these lesions. However, such conventional lesion biomarkers are at best only moderately associated with MS clinical disability and disease progression [29], a discrepancy commonly referred to as the clinico-radiological paradox (CLRDP). This is attributed primarily to their failure to capture the diffuse effects of the disease on the WM beyond the focal lesions [40, 44]. Resolving this paradox is at the forefront of contemporary MS biomarker research for both cognitive and physical outcomes of MS [29, 45,46,47,48].

Prolonged inflammatory activity in MS can be identified by an iron-laden paramagnetic rim surrounding chronic lesions, termed paramagnetic rim lesions (PRLs), which are visualized with susceptibility-based MRI. Another viable biomarker of chronic inflammation is slowly expanding lesions (SELs) [49, 50], which show gradual, linear expansion on consecutive T1w and T2w scans due to chronic demyelination. SELs contribute to the MS disease trajectory and are of particular interest because of their association with smoldering disease activity [15]. The central vein sign (CVS) is a relatively new imaging biomarker that arises from the perivenular distribution of MS lesions and can be captured with ultra-high field MRI and T2* sequences [51]. It appears as a central linear hypointensity located partially or completely within the lesion. Its primary use is diagnostic, as it can discriminate MS from disorders with a similar pattern of WM hyperintensities, such as neuromyelitis optica spectrum disorder and cerebral small vessel disease [52, 53]. However, some recent studies have raised the possibility that the CVS may also be a prognostic biomarker of conversion from RIS to MS [54].

Originally thought to be restricted to the WM, MS lesions also affect the gray matter (GM), including both cortical and deep subcortical regions [5, 6, 55, 56]. Within this context, multiple measures have been explored, such as cortical volume/thickness (whole vs. regional), whole brain parenchymal volume and subcortical volume (e.g., thalamus), as elaborated in the subsequent sections on predicting the MS disease course. Furthermore, GM pathology has been observed earlier in the disease than WM damage, may contribute to the CLRDP seen when only focal WM lesions are considered [57], and is more strongly correlated with physical and cognitive disabilities in patients [58, 59]. GM damage cannot be visualized clearly using T2w and FLAIR sequences because of the low myelin content of GM and the poor contrast generated by its demyelination [55, 60, 61]. Thus, there have been increasing efforts to develop alternatives that aid the quantification of GM damage. Double inversion recovery offers superior sensitivity for detecting cortical lesions by suppressing WM and cerebrospinal fluid (CSF) signals, though its acquisition is time-consuming and prone to artifacts [62, 63]. Alternatively, phase-sensitive inversion recovery can be used with comparable performance while being acquired in a clinically feasible timeframe [63].

While MRI investigations in MS have centered on the brain, the spinal cord also plays a significant role in MS-related disability. However, spinal cord MRI is more challenging than brain MRI because of the cord's smaller anatomical size and greater susceptibility to artifacts, which explains why spinal cord biomarkers are rarely included in the prediction of MS disease outcomes [64].

More advanced MRI biomarkers that quantify damage beyond focal lesions include diffusely abnormal WM (DAWM) and normal appearing WM (NAWM). NAWM appears healthy on conventional MRI, but damage within it can be detected with advanced MRI, such as fractional anisotropy (FA) and mean diffusivity (MD) derived from diffusion-weighted imaging (DWI) or diffusion tensor imaging (DTI) [65], as well as with histopathology [66]. In contrast, DAWM is widespread, appears isointense to GM on conventional MRI, and is commonly situated near the occipital horns of the lateral ventricles (LV) [66,67,68,69]. Another tissue integrity measure, which serves as an indirect marker of myelin content, is the magnetization transfer ratio (MTR) [70], which has been shown to relate to motor disability in MS [71].
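FA, MD and MTR all have standard closed-form definitions. The Python sketch below computes FA and MD from the three eigenvalues (λ1, λ2, λ3) of a single voxel's diffusion tensor, and MTR (in percent) from the signal acquired without (M0) and with (Msat) a magnetization transfer saturation pulse. Tensor fitting and image handling are omitted, and the function names are illustrative rather than taken from any imaging package.

```python
import math

def fa_md(l1, l2, l3):
    """Return (FA, MD) for a diffusion tensor with eigenvalues l1, l2, l3."""
    md = (l1 + l2 + l3) / 3.0  # mean diffusivity: average of the eigenvalues
    num = math.sqrt((l1 - l2) ** 2 + (l2 - l3) ** 2 + (l3 - l1) ** 2)
    den = math.sqrt(l1 ** 2 + l2 ** 2 + l3 ** 2)
    # Fractional anisotropy: 0 for isotropic diffusion, approaching 1 for diffusion along one axis.
    fa = math.sqrt(0.5) * num / den if den > 0 else 0.0
    return fa, md

def mtr(m0, msat):
    """Magnetization transfer ratio (%) from signals without (m0) and with (msat) saturation."""
    return 100.0 * (m0 - msat) / m0

# Illustrative eigenvalues (mm^2/s) for an anisotropic, WM-like voxel; not measured data.
print(fa_md(1.7e-3, 0.3e-3, 0.3e-3))
print(mtr(100.0, 60.0))
```

Reduced FA and increased MD within NAWM are commonly interpreted as markers of microstructural damage, while a lower MTR is read as reduced myelin content.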

Finally, an alternative approach to representing lesion-inflicted damage in MS is structural connectivity, which can be measured by tractography with DWI and DTI. In this approach, the brain is represented as a mathematical graph with GM structures as nodes and WM tracts as connecting edges. WM lesions thus disrupt neuronal connectivity, and these structural disconnections are associated with cognitive and motor impairment in MS patients [72, 73]. In addition to structural MRI, useful biomarkers in MS can be obtained from functional MRI (fMRI) through measures of functional connectivity (FC) [74, 75]. Recent FC research emphasizes notable effects in deep GM regions, particularly the thalamus, for which both increased FC (with the hippocampus and motor, occipital and temporal cortical regions) and decreased FC (with frontal cortical regions) have been observed [75].
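The graph view of structural connectivity can be illustrated with a toy example. The sketch below represents four GM regions as nodes joined by WM tracts, treats the graph as binary and unweighted (a simplifying assumption; real connectomes are usually weighted, e.g. by streamline counts or FA), and computes global efficiency, the average inverse shortest-path length, before and after removing one edge to mimic a lesion-induced disconnection. The function name and the network are hypothetical.

```python
from collections import deque

def global_efficiency(n_nodes, edges):
    """Average inverse shortest-path length over all ordered pairs of distinct nodes in an
    unweighted, undirected graph; pairs with no connecting path contribute 0."""
    adj = {i: set() for i in range(n_nodes)}
    for a, b in edges:
        adj[a].add(b)
        adj[b].add(a)
    total = 0.0
    for src in range(n_nodes):
        # Breadth-first search gives hop distances from src to every reachable node.
        dist = {src: 0}
        queue = deque([src])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        total += sum(1.0 / d for node, d in dist.items() if node != src)
    return total / (n_nodes * (n_nodes - 1))

# Hypothetical 4-region network: a ring of WM tracts linking GM regions 0-1-2-3-0.
intact = [(0, 1), (1, 2), (2, 3), (3, 0)]
# Simulated lesion that disconnects the tract between regions 3 and 0.
lesioned = [(0, 1), (1, 2), (2, 3)]
print(global_efficiency(4, intact), global_efficiency(4, lesioned))
```

Removing a single edge lowers global efficiency from about 0.83 to about 0.72 in this toy network, because some region pairs must now communicate via longer paths.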

Overview of machine learning

Big data is at the center of modern healthcare, revolutionizing the way we understand and approach medical challenges. The advent of AI has empowered the processing of vast datasets in an unprecedented manner, enabling healthcare professionals to effectively analyze patient data and make informed decisions [76,77,78]. ML is one of the most utilized branches of AI, particularly in healthcare applications [79]. ML avoids explicit programming in favor of a task- or data-driven approach in which a specific algorithm is fit to the supplied data with the purpose of learning features that may be generalized to make predictions on new, unseen data [80]. The two main branches of ML are supervised and unsupervised learning [81].

The major factor distinguishing supervised from unsupervised ML is the availability of labeled data. Supervised learning fits a function that maps input data to known output labels, and comprises classification and regression tasks. Classification tasks aim to predict discrete labels, while regression is used to predict continuous outputs [82]. Classification can be used to predict a binary outcome, known as binary classification, or one of three or more outcomes, known as multi-class classification [83]. In contrast, unsupervised ML is employed to learn underlying patterns from unlabeled data, and comprises primarily dimensionality reduction and clustering tasks [80].

A central problem in ML is overfitting, whereby the model memorizes patterns, including noise, in its training data, leading to subpar performance on unseen data. The main causes of overfitting, which impairs a model's capacity for generalization, are insufficient training data and unduly complex models. Reducing the number of learnable parameters (model complexity) and obtaining additional training data, for example via augmentation techniques, are two ways to mitigate overfitting [84, 85].
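Overfitting can be made concrete with a deliberately extreme case. The Python sketch below (synthetic data, hypothetical helper names) uses a 1-nearest-neighbour classifier, which effectively memorizes its training set, on labels that are pure noise: it scores perfectly on the data it has seen but has no real pattern to carry over to new data.

```python
import math
import random

def one_nn_predict(train, x):
    """1-nearest-neighbour prediction: copy the label of the closest stored training point."""
    _, label = min(train, key=lambda item: math.dist(item[0], x))
    return label

def accuracy(train, data):
    return sum(one_nn_predict(train, x) == y for x, y in data) / len(data)

rng = random.Random(42)
# Synthetic data: features and labels are independent random noise, so any
# training-set "success" reflects memorization rather than a learnable pattern.
data = [((rng.random(), rng.random()), rng.randint(0, 1)) for _ in range(200)]
train, held_out = data[:100], data[100:]

print("training accuracy:", accuracy(train, train))    # 1.0: each point finds itself
print("held-out accuracy:", accuracy(train, held_out))  # expected to hover around chance
```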

Examples of supervised learning algorithms include linear regression (LR), logistic regression (LoR), support vector machine (SVM), K-nearest neighbors (KNN) and naïve Bayes (NB). LR is the most basic form of regression, mapping predictors linearly to a continuous outcome variable [86]. Generalized linear models (GLMs) extend LR by allowing different distributions for the dependent variable and using a link function to relate it to the independent variables. LoR is a GLM that assumes a linear relationship between the predictor variables and the logit (log-odds) of the outcome probability, and therefore produces output bounded between 0 and 1. This is useful for classification, as a threshold can be applied to the output to produce binary classes of 0 and 1. When more than two classes are modeled, the extension is known as multinomial LoR [87].
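To make the LoR description concrete, the following Python sketch fits a one-feature logistic model by plain gradient descent on the log-loss and applies a 0.5 threshold to the predicted probability. The data, learning rate and epoch count are arbitrary illustrative choices, not values from any study discussed here; in practice a library implementation, usually with regularization, would be used.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function, mapping a logit to a probability in (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def fit_logistic(xs, ys, lr=0.5, epochs=2000):
    """Fit p(y=1 | x) = sigmoid(w.x + b) by batch gradient descent on the mean log-loss."""
    n, d = len(xs), len(xs[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, y in zip(xs, ys):
            # For the log-loss, the gradient with respect to the logit is simply (p - y).
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) - y
            for j in range(d):
                grad_w[j] += err * x[j] / n
            grad_b += err / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

def predict(w, b, x, threshold=0.5):
    """Return (probability, class), where class is 1 if the probability reaches the threshold."""
    p = sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)
    return p, int(p >= threshold)

# Hypothetical single predictor (e.g. a lesion-load score, arbitrary units) and binary outcome.
xs = [[0.5], [1.0], [1.5], [3.0], [3.5], [4.0]]
ys = [0, 0, 0, 1, 1, 1]
w, b = fit_logistic(xs, ys)
for x in xs:
    print(x, predict(w, b, x))
```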

SVMs can be used for both classification and regression. They find an optimal hyperplane that maximizes the margin between the classes of interest; the hyperplane is determined by a subset of the training data, known as the support vectors, lying on or within the margin. Depending on the linear separability of the dataset, various kernel functions can be used, such as linear, radial basis function and polynomial kernels [88, 89]. Another algorithm for classification and regression is KNN. This model does not explicitly map variables to their labels, but instead relies on the proximity of data points in the feature space. When a new data point is introduced to the model, its label (or value, for regression) is predicted from those of a predetermined number, k, of its nearest neighbors [90]. NB, which is based on Bayes' theorem and assumes that features are conditionally independent given the class, is primarily used for classification [91].
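The KNN rule described above fits in a few lines. This sketch classifies a query point by majority vote among its k nearest training points under Euclidean distance; the toy two-feature data and labels are invented for illustration, and k is assumed odd so that binary votes cannot tie.

```python
import math
from collections import Counter

def knn_predict(train_x, train_y, query, k=3):
    """Predict the label of `query` by majority vote among its k nearest training points."""
    # Sort training points by Euclidean distance to the query and keep the k closest.
    nearest = sorted(zip(train_x, train_y), key=lambda pair: math.dist(pair[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Toy two-feature data with hypothetical labels (0 = stable, 1 = progressed).
train_x = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (5.0, 5.0), (5.0, 6.0), (6.0, 5.0)]
train_y = [0, 0, 0, 1, 1, 1]
print(knn_predict(train_x, train_y, (0.5, 0.5)))
print(knn_predict(train_x, train_y, (5.5, 5.5)))
```

For KNN regression, the majority vote would be replaced by the mean of the neighbours' values.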

Tree-based algorithms include decision trees (DT) and random forests (RF). DTs work by repeatedly dividing the data into smaller subsets according to attribute values until a predefined stopping condition is reached. This iterative partitioning creates a tree-like diagram in which each internal node represents a decision point, or split, based on a particular attribute [92,93,94]. RFs extend DTs by combining many trees, each trained on a bootstrap sample of the data with a random subset of features considered at each split, and aggregating their predictions (averaging for regression, majority voting for classification) to form a more robust model [95, 96]. Combining multiple models in this way is known as ensemble learning, which can also help alleviate overfitting [97]. Another form of ensemble is boosting, which sequentially adds weak base learners, typically shallow DTs, with each new learner trained to correct the errors of those before it, thereby improving the model's overall accuracy and robustness. Examples include Adaptive Boosting (AdB), Gradient Boosting (GB) and eXtreme Gradient Boosting (XGBoost) [95, 96].
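The core operation of a DT is choosing a split. One common criterion (used, for example, by CART-style trees) is Gini impurity; the sketch below exhaustively searches single-feature thresholds for the split that minimizes the size-weighted Gini impurity of the two child nodes. A full tree would apply this recursively, and an RF would grow many such trees on bootstrap samples with random feature subsets; neither step is shown, and the toy data are hypothetical.

```python
def gini(labels):
    """Gini impurity 1 - sum_c p_c**2 of a list of class labels (0 for a pure node)."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = {}
    for y in labels:
        counts[y] = counts.get(y, 0) + 1
    return 1.0 - sum((c / n) ** 2 for c in counts.values())

def best_split(xs, ys):
    """Search all single-feature thresholds x[j] <= t and return (j, t, impurity) for the
    split with the lowest size-weighted Gini impurity, or (None, None, parent impurity)
    if no split improves on the parent node."""
    n, d = len(xs), len(xs[0])
    best = (None, None, gini(ys))
    for j in range(d):
        values = sorted(set(x[j] for x in xs))
        # Candidate thresholds: midpoints between consecutive distinct values.
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [y for x, y in zip(xs, ys) if x[j] <= t]
            right = [y for x, y in zip(xs, ys) if x[j] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if score < best[2]:
                best = (j, t, score)
    return best

# Hypothetical data: two features per patient, binary outcome.
xs = [[1.0, 7.0], [2.0, 3.0], [3.0, 8.0], [6.0, 2.0], [7.0, 9.0], [8.0, 4.0]]
ys = [0, 0, 0, 1, 1, 1]
print(best_split(xs, ys))
```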

Unsupervised ML, as noted previously, identifies underlying patterns or structures within the data without requiring labeled outcomes. Clustering algorithms such as k-means partition data points into groups based on their similarities or distances from each other, aiming to minimize within-group variance and maximize between-group variance [98, 99]. Gaussian mixture models (GMMs) take a probabilistic approach to clustering: rather than describing the data with a single Gaussian distribution, a GMM combines several Gaussian components, each with its own mean and covariance, to model complex data structures [98].
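A minimal version of k-means (Lloyd's algorithm) alternates two steps until the centroids stop moving: assign each point to its nearest centroid, then move each centroid to the mean of its assigned points. The sketch assumes the initial centroids are supplied by the caller (practical implementations use schemes such as k-means++ and several restarts); the GMM extension, which replaces hard assignments with probabilistic ones, is not shown.

```python
import math

def kmeans(points, init_centroids, max_iter=100):
    """Lloyd's algorithm for k-means with caller-supplied initial centroids."""
    centroids = [list(c) for c in init_centroids]
    labels = []
    for _ in range(max_iter):
        # Assignment step: each point goes to its nearest centroid.
        labels = [min(range(len(centroids)), key=lambda k: math.dist(p, centroids[k])) for p in points]
        # Update step: each centroid moves to the mean of its assigned points
        # (an empty cluster keeps its previous centroid).
        new = []
        for k in range(len(centroids)):
            members = [p for p, lab in zip(points, labels) if lab == k]
            new.append([sum(col) / len(members) for col in zip(*members)] if members else centroids[k])
        if new == centroids:  # converged: centroids, and hence assignments, no longer change
            break
        centroids = new
    return labels, centroids

# Hypothetical 2-D data with two well-separated groups.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
labels, centroids = kmeans(points, init_centroids=[(0, 0), (10, 10)])
print(labels, centroids)
```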

Alternatively, dimensionality reduction techniques transform datasets into a lower-dimensional space while retaining as much of the original variability or essential information as possible [98, 99]. Examples include principal component analysis (PCA) and uniform manifold approximation and projection (UMAP). Whereas PCA finds linear combinations of the variables, UMAP captures nonlinear relationships by preserving the local and global structure of the data in the reduced space [100].
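PCA's first component is the leading eigenvector of the data's covariance matrix. The sketch below finds it by power iteration and projects the centered data onto it. It is limited to the first component and assumes that the all-ones starting vector is not orthogonal to the leading eigenvector; UMAP, which relies on nearest-neighbour graphs and stochastic optimization, is beyond a short example.

```python
import math

def first_principal_component(rows, iters=200):
    """Leading principal component (unit vector) and 1-D projection scores,
    found by power iteration on the sample covariance matrix."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)] for i in range(d)]
    v = [1.0] * d  # assumed not orthogonal to the leading eigenvector
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:  # no variance along v; stop rather than divide by zero
            break
        v = [x / norm for x in w]
    # Project each centered sample onto the component to obtain its 1-D score.
    scores = [sum(c[j] * v[j] for j in range(d)) for c in centered]
    return v, scores

# Hypothetical pair of perfectly correlated measurements per patient.
rows = [(1.0, 1.0), (2.0, 2.0), (3.0, 3.0), (4.0, 4.0)]
print(first_principal_component(rows))
```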

The aforementioned algorithms belong to traditional ML, which requires feature engineering to curate raw data into useful features, a step that can greatly influence model performance [101]. Additionally, the emergence of high-dimensional datasets brought about the well-known “curse of dimensionality”, in which the number of features approaches or exceeds the number of training samples and the data become sparse in the feature space. High dimensionality can also contribute to overfitting, thus requiring feature selection methods that select a smaller subset of the variables, or projection into a smaller feature space through the dimensionality reduction techniques discussed above [102]. Feature selection can be carried out in three different ways according to its level of integration with the model. Filter methods are applied independently prior to model training and rely on statistical techniques such as correlation analysis or information gain. Wrapper methods, on the other hand, select features based on the performance of the model itself; an example is recursive feature elimination (RFE), in which the least important features are systematically removed and the model is re-evaluated until the optimal subset of features is identified. Finally, embedded methods are integrated into the training algorithm of the model, for example through regularization, which penalizes added complexity by shrinking the contribution of features. LASSO (L1) regularization can shrink coefficients exactly to zero and thereby performs feature selection automatically, whereas ridge (L2) regularization shrinks coefficients without eliminating them; both are often combined with LR and LoR modeling [103,104,105].
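The feature-eliminating behavior of LASSO comes from the soft-thresholding step of its L1 penalty. The sketch below minimizes (1/2n)*||y - Xw||^2 + lam*||w||_1 by cyclic coordinate descent without an intercept, a standard way of fitting LASSO, and assumes no feature column is entirely zero. In the toy data the outcome depends only on the first feature, so with lam = 0.5 the second coefficient is driven exactly to zero while the first is shrunk from its least-squares value of 2.0 to 1.5.

```python
def soft_threshold(rho, lam):
    """Shrink rho toward zero by lam, returning exactly 0 inside [-lam, lam]."""
    if rho > lam:
        return rho - lam
    if rho < -lam:
        return rho + lam
    return 0.0

def lasso_cd(X, y, lam, iters=100):
    """Minimize (1/2n)*||y - Xw||^2 + lam*||w||_1 by cyclic coordinate descent (no intercept).
    Assumes no column of X is all zeros."""
    n, d = len(X), len(X[0])
    w = [0.0] * d
    for _ in range(iters):
        for j in range(d):
            # Correlation of feature j with the residual that excludes feature j's own term.
            rho = sum(
                X[i][j] * (y[i] - sum(X[i][k] * w[k] for k in range(d) if k != j))
                for i in range(n)
            ) / n
            z = sum(X[i][j] ** 2 for i in range(n)) / n
            w[j] = soft_threshold(rho, lam) / z
    return w

# Toy data: y depends only on feature 0; feature 1 is orthogonal to y.
X = [[1.0, 1.0], [-1.0, 1.0], [1.0, -1.0], [-1.0, -1.0]]
y = [2.0, -2.0, 2.0, -2.0]
print(lasso_cd(X, y, lam=0.0))  # no penalty: ordinary least squares
print(lasso_cd(X, y, lam=0.5))  # L1 penalty: feature 1 removed, feature 0 shrunk
```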

Deep learning (DL) is a branch of ML that differs from traditional techniques by largely removing the need for manual feature engineering, learning feature representations directly from the data [101]. In essence, DL can be seen as a revival of the artificial neural network, in which artificial neurons are arranged in stacked layers [106]. Modern DL architectures include multilayer perceptrons (MLP), convolutional neural networks (CNN), recurrent neural networks (RNN), generative adversarial networks (GAN), long short-term memory networks (LSTM), gated recurrent units (GRU), and encoder-decoder networks, among others [107].
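The benefit of stacking layers can be seen on the XOR problem, which no single linear unit can solve. The sketch below runs the forward pass of a one-hidden-layer MLP with ReLU activations; the weights are hand-set for illustration rather than learned, whereas in practice they would be fitted by backpropagation.

```python
def relu(z):
    return max(0.0, z)

def mlp_forward(x, hidden_w, hidden_b, out_w, out_b):
    """One hidden layer of ReLU units followed by a single linear output unit."""
    hidden = [relu(sum(wi * xi for wi, xi in zip(row, x)) + b) for row, b in zip(hidden_w, hidden_b)]
    return sum(w * h for w, h in zip(out_w, hidden)) + out_b

# Hand-set (not learned) weights that reproduce XOR.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUT_W = [1.0, -2.0]
OUT_B = 0.0

for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, mlp_forward(x, HIDDEN_W, HIDDEN_B, OUT_W, OUT_B))
```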

CNNs are the most popular architecture for computer vision applications. They consist of a series of layers that extract latent features from an image, a process known as feature extraction, through convolutional operations that apply learned filters to the input image [108]. Residual Networks (ResNets) are deep CNNs that use skip connections, or residual learning, to allow deeply stacked layers without suffering performance degradation [109]. VGG-Net, commonly used in 16- and 19-layer configurations, is another deep CNN that captures fine-grained patterns through small convolutional filters [110]. Designed specifically for image segmentation, the U-Net is another variant of the CNN that uses an encoder–decoder structure to first compress the input image into a lower-dimensional representation and then reconstruct it at high spatial resolution, with skip connections between corresponding encoder and decoder levels enabling precise localization of features within the image [111].
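The convolutional operation at the heart of a CNN slides a small filter over the image and sums elementwise products at each position. The sketch below implements a 'valid' (unpadded, stride-1) 2-D version; as in most DL libraries, it is technically a cross-correlation because the kernel is not flipped. The horizontal-difference kernel is hand-chosen here, whereas a CNN learns its kernels during training.

```python
def conv2d_valid(image, kernel):
    """'Valid' 2-D convolution as used in CNNs (technically cross-correlation: the kernel
    is not flipped). No padding, stride 1."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    return [
        [
            # Sum of elementwise products between the kernel and the image patch at (r, c).
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(iw - kw + 1)
        ]
        for r in range(ih - kh + 1)
    ]

# 4x4 image: dark left half, bright right half (a vertical edge).
image = [[0, 0, 1, 1] for _ in range(4)]
# Hand-chosen horizontal-difference filter; a CNN would learn its filters instead.
edge_kernel = [[-1, 1]]
for row in conv2d_valid(image, edge_kernel):
    print(row)
```

Applied to this image, the kernel responds only at the vertical edge, producing [0, 1, 0] in every row of the feature map.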

A specialized class of DL models, the graph neural network (GNN), handles data structured as graphs, composed of vertices and edges, with edges representing the relationships between vertices. Such models are especially useful in neurological disorders, where brain regions and their connectivity patterns can be modeled as graphs. Examples of GNN architectures include graph convolutional networks (GCN) and graph attention networks (GAT) [112,113,114,115].
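A single graph convolutional layer in the widely used formulation of Kipf and Welling updates each node with a degree-normalized sum of its own and its neighbours' features, followed by a learned linear map and a nonlinearity: H' = ReLU(D^-1/2 (A + I) D^-1/2 H W). The sketch below implements that update with plain lists; the three-node path graph, the node features and the weight matrix are arbitrary illustrative values. In a brain network, the nodes would be regions and the adjacency would come from structural or functional connectivity.

```python
import math

def gcn_layer(adj, features, weight):
    """One graph convolutional layer: H' = ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    n = len(adj)
    # Add self-loops so each node keeps part of its own representation.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    n_in, n_out = len(weight), len(weight[0])
    # Aggregate normalized neighbour features, including the node itself.
    agg = [[sum(norm[i][k] * features[k][f] for k in range(n)) for f in range(n_in)] for i in range(n)]
    # Apply the layer's weight matrix, then ReLU.
    return [
        [max(0.0, sum(agg[i][f] * weight[f][o] for f in range(n_in))) for o in range(n_out)]
        for i in range(n)
    ]

# Hypothetical 3-region path graph 0 - 1 - 2 with one scalar feature per region.
adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
features = [[1.0], [0.0], [0.0]]
weight = [[1.0]]  # identity weight so the output shows the propagation step alone
print(gcn_layer(adj, features, weight))
```

After one layer, region 0's signal has spread to its neighbour (region 1) but not yet to region 2; stacking layers widens this receptive field by one hop per layer.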

Despite rapid developments in ML and DL algorithms, numerous challenges persist in healthcare predictive tasks. Medical datasets often suffer from class imbalance due to the relatively low prevalence of certain conditions. Most classification algorithms do not perform well on imbalanced datasets, often showing bias toward the majority class [116]. To address this, popular methods include up-sampling of the minority class, for example with the synthetic minority oversampling technique (SMOTE), which creates synthetic minority samples by interpolating between existing minority samples and their nearest minority-class neighbors [117].
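The interpolation idea behind SMOTE can be sketched as follows: pick a minority-class sample, pick one of its k nearest minority-class neighbours, and create a new sample at a random point on the line segment between them. This simplified version omits details of the original algorithm (such as how many samples to generate per original point); libraries such as imbalanced-learn provide a full implementation. Oversampling should be applied to training folds only, so that synthetic points do not leak into evaluation data.

```python
import math
import random

def smote(minority, n_new, k=2, seed=0):
    """Generate n_new synthetic samples by interpolating between a random minority sample
    and one of its k nearest minority-class neighbours (simplified SMOTE sketch)."""
    if not 1 <= k < len(minority):
        raise ValueError("k must be between 1 and len(minority) - 1")
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        others = [p for j, p in enumerate(minority) if j != i]
        neighbours = sorted(others, key=lambda p: math.dist(p, base))[:k]
        partner = rng.choice(neighbours)
        gap = rng.random()  # position along the segment base -> partner, in [0, 1)
        synthetic.append(tuple(b + gap * (q - b) for b, q in zip(base, partner)))
    return synthetic

# Hypothetical minority-class samples (e.g. the rarer outcome) with two features each.
minority = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
print(smote(minority, n_new=5))
```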

Another major challenge in disease risk prediction is the black box nature of many predictive models. Commonly used models, such as RF, SVM, and DL models, are effective but often lack interpretability. This lack of transparency can hinder their utility in healthcare, where clear and understandable explanations are crucial for supporting medical professionals in decision-making. To address this, various interpretability methods have been developed, including SHapley Additive exPlanations (SHAP), which uses Shapley values from cooperative game theory to attribute a trained model's predictions to its input features [118, 119].
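SHAP builds on the Shapley value, which credits each feature with its average marginal contribution to a prediction over all possible coalitions of the other features. The brute-force sketch below computes exact Shapley values for one prediction by enumerating coalitions, replacing features outside a coalition with a single baseline value. This baseline convention is a simplifying assumption; the SHAP library provides more efficient estimators (such as Kernel SHAP and Tree SHAP) that typically average over background data instead.

```python
import math
from itertools import combinations

def shapley_values(model, x, baseline):
    """Exact Shapley values for one prediction. Features outside a coalition are set to
    their baseline value (a simplifying assumption). Cost grows as 2**len(x)."""
    n = len(x)

    def value(coalition):
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight: |S|! (n - |S| - 1)! / n!
            weight = math.factorial(size) * math.factorial(n - size - 1) / math.factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

def linear_model(z):
    # Hypothetical risk score: 2 * feature_0 + 3 * feature_1.
    return 2 * z[0] + 3 * z[1]

print(shapley_values(linear_model, x=[1.0, 1.0], baseline=[0.0, 0.0]))
```

For a linear model, each Shapley value equals the coefficient times the feature's deviation from the baseline, and the values always sum to the difference between the prediction and the baseline prediction.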

Medical image analysis has been transformed in the wake of DL, with impressive results in tasks such as registration, segmentation, and classification [108, 120]. Neuroradiology is at the forefront of ML and DL applications, and MS ranks among the ten most actively explored neurological diseases in this area of research [37]. Applications have included MS diagnosis, prediction of disease course and disability progression, and treatment response [35, 36, 121]. Figure 3 displays the most popular ML algorithms used for prognostic tasks in MS that include MRI-based biomarkers.

Fig. 3 The application of MRI-based biomarkers and ML methods for prognostic tasks in MS. The most popular ML algorithms include logistic regression (LR), support vector machine (SVM), random forest (RF), multilayer perceptrons (MLP) and convolutional neural networks (CNN). Prognostic applications include predicting the conversion of clinically isolated syndrome (CIS) to MS, cognitive outcome, EDSS-related disability, motor outcome and disease activity.

Machine learning studies for the prediction of multiple sclerosis progression with MRI biomarkers

This section discusses the most recent studies integrating MRI biomarkers into ML models for the prognosis of MS progression. Disease prognosis is divided into five main categories: conversion of CIS to overt MS, cognitive outcome, EDSS-related disability, motor disability and disease activity. The model architectures and MRI-derived biomarkers are discussed, and details of the included studies are given in Tables 1–6. Additionally, Fig. 4 shows the frequency of use of different ML model types across the five prognostic applications, and Fig. 5 illustrates the biomarkers used throughout the discussed studies, specifically those related to MS lesion imaging.

Fig. 4 Proposed ML models and their frequency according to application in MS disease prognosis. The most popular algorithms include random forest (RF), support vector machine (SVM) and convolutional neural networks (CNN). Additional models include AdaBoost decision trees (AdB-DT), deep belief networks (DBN), decision trees (DT), graph neural networks (GNN), ensemble modeling, K-nearest neighbors (KNN), light gradient boosting (L-GBT), logistic regression (LR), multiple kernel learning (MKL), multilayer perceptrons (MLP), residual networks (ResNet), ridge regression (RR), uniform manifold approximation and projection (UMAP), U-Nets and extreme gradient boosting (XGBoost).

Conversion of clinically isolated syndrome to overt multiple sclerosis

CIS describes the condition preceding MS, in which patients present with a single episode suggestive of CNS demyelination, most often affecting the optic nerve, brain stem or spinal cord [11, 122]. For a diagnosis of CIS, the episode must last at least 24 h, must not fulfil the diagnostic criteria for MS, and must lack signs and symptoms indicative of an alternative condition [123]. While 85% of MS patients initially present with CIS, around 20% of those with CIS never progress to overt MS [124], reflecting a heterogeneous and unpredictable disease course.

Timely administration of DMTs can delay CNS damage and prevent future disability [124, 125], but carries several risks, such as serious general and DMT-specific infections [126,127,128]. Caution is therefore required in selecting the patients most likely to benefit from early intervention, which is currently problematic because the 2017 McDonald criteria [14] alone have low specificity for identifying early MS [124]. Advancing methods to predict CIS-to-MS conversion, including the use of ML with MRI biomarkers, is thus vital for personalized patient care.

In [129,130,131,132], traditional ML algorithms were employed to predict CIS conversion to MS. In Zhang et al. [129] and Bendfeldt et al. [131], MRI-derived lesion geometric features were highlighted as important features. The first study [129] used an RF model incorporating lesion shape features, including minimum sphericity, surface–volume ratio and mean lesion volume, and achieved superior specificity compared with the 2010 McDonald criteria (50% versus 22%). The second study [131] employed linear and kernel-based SVMs integrating Minkowski functionals of lesion geometry computed from T1w-Gd lesion masks (volume, surface area, mean breadth and Euler–Poincaré characteristic). Combining these features with clinical variables achieved an accuracy of 70.4% in patients receiving interferon beta-1b treatment, with better performance when lesion characteristics were summarized for the entire brain rather than for specific regions of interest.
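Lesion shape descriptors such as volume, surface area, surface-to-volume ratio and sphericity can be computed from a binary lesion mask. The sketch below does this for one lesion given as a set of voxel coordinates, estimating surface area by counting exposed voxel faces; this blocky estimate is an assumption made for brevity (it overestimates the area of curved surfaces) and is not necessarily how [129] or [131] computed their features. A patient-level summary, such as the minimum sphericity across lesions, could then be taken over all connected lesion components. The remaining Minkowski functionals used in [131] (mean breadth and Euler–Poincaré characteristic) are not computed here.

```python
import math

def lesion_shape_features(voxels, voxel_size=1.0):
    """Shape descriptors for one lesion given as (x, y, z) integer voxel coordinates,
    assuming isotropic voxels of edge length voxel_size (e.g. mm)."""
    vox = set(voxels)
    volume = len(vox) * voxel_size ** 3
    # Surface area estimate: count voxel faces that border a non-lesion voxel.
    steps = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    exposed = sum((x + dx, y + dy, z + dz) not in vox for x, y, z in vox for dx, dy, dz in steps)
    area = exposed * voxel_size ** 2
    # Sphericity: surface area of a sphere of equal volume divided by the actual area (1 for a sphere).
    sphericity = math.pi ** (1 / 3) * (6 * volume) ** (2 / 3) / area
    return {
        "volume": volume,
        "surface_area": area,
        "surface_to_volume": area / volume,
        "sphericity": sphericity,
    }

# Hypothetical lesion: a 2 x 2 x 2 block of voxels.
cube = [(x, y, z) for x in range(2) for y in range(2) for z in range(2)]
print(lesion_shape_features(cube))
```

For this 2 x 2 x 2 block, the sketch gives a volume of 8, a surface area of 24, a surface-to-volume ratio of 3 and a sphericity of about 0.81 (a perfect sphere would score 1).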

In Wottschel et al. [130] and Rasouli et al. [132], lesion location was included as an MRI predictor. In [130], the average distance of lesions from the brain center and their shortest horizontal distance from the vertical axis were selected as influential features through forward RFE and subsequent SVM modeling. In [132], an XGBoost model was trained on clinical and demographic variables together with brain and spinal cord lesion locations on MRI to predict conversion to MS within 10 years. The model achieved an area under the receiver operating characteristic curve (AUROC) of 0.858 on test data, with periventricular and infratentorial lesions emerging as the strongest predictors according to SHAP.
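Because AUROC is the headline metric in many of the studies discussed here, it is worth recalling what it measures: the probability that a randomly chosen positive case (here, a converter) receives a higher predicted score than a randomly chosen negative case. The sketch below computes it directly from that pairwise definition, counting ties as one half; this is equivalent to the trapezoidal area under the ROC curve but scales quadratically with sample size, so it suits small examples only. The scores and labels are hypothetical.

```python
def auroc(scores, labels):
    """AUROC via its pairwise definition: the fraction of (positive, negative) pairs in which
    the positive case has the higher score, counting ties as one half."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUROC requires at least one positive and one negative case")
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical predicted conversion probabilities and true outcomes (1 = converted to MS).
print(auroc([0.8, 0.6, 0.4, 0.2], [1, 0, 1, 0]))
```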

Tayyab et al. [133] introduced two novel aspects in their predictive model. First, the volumes of deep GM nuclei were included as predictors along with T2w lesion load, brain parenchymal fraction (BPF) and the anatomical location of CIS onset. Second, instead of excluding participants with uncertain outcome labels, they included them in a probabilistic RF model, which models the outcome as a probability rather than a deterministic label. This approach outperformed models trained either by excluding subjects with uncertain labels or by assigning post hoc labels. In terms of feature importance, thalamic and lesion volumes were the highest-ranking predictors. The model achieved a particularly high recall of 0.923; however, its AUROC was modest at 0.76.
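A high recall alongside a modest AUROC is not contradictory: recall is measured at a single decision threshold, while AUROC summarizes ranking performance across all thresholds. The sketch below computes threshold-dependent metrics from a confusion matrix. The labels are invented and show how a classifier that flags most patients as converters can reach perfect recall while its specificity drops.

```python
def binary_metrics(y_true, y_pred):
    """Threshold-dependent metrics from binary labels (1 = positive, e.g. converted to MS)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)

    def ratio(num, den):
        return num / den if den else float("nan")

    return {
        "accuracy": ratio(tp + tn, len(pairs)),
        "recall": ratio(tp, tp + fn),        # sensitivity: converters correctly flagged
        "specificity": ratio(tn, tn + fp),   # non-converters correctly cleared
        "precision": ratio(tp, tp + fp),
    }

# Hypothetical predictions from a classifier that flags most patients as converters.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 1, 1, 1, 0, 0]
print(binary_metrics(y_true, y_pred))
```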

The challenges posed by MRI data heterogeneity in multicenter datasets were highlighted in [134]. Despite the inclusion of biomarkers that are more robust to MRI variations, including GM probability and cortical thickness, predictive modeling with linear SVM and RFE produced a wide range of accuracies across single-center experiments, from 0.73 to 0.93 under leave-one-out cross-validation. Furthermore, pooling data across centers yielded the lowest accuracy, 0.70, when patients from all centers were included in the analysis. Nevertheless, RFE consistently selected a similar subset of features, including deep GM, frontal, temporal and limbic lobe-derived features.
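
Leave-one-out cross-validation (LOOCV), as used in [134], holds out each subject in turn and tests on it a model fitted to all remaining subjects. The sketch below shows the procedure with a nearest-centroid classifier swapped in for the linear SVM purely to keep the example dependency-free; the data are hypothetical.

```python
from statistics import mean


def nearest_centroid_predict(train_X, train_y, x):
    """Predict the class whose mean feature vector is closest (squared Euclidean)."""
    centroids = {}
    for label in set(train_y):
        rows = [row for row, y in zip(train_X, train_y) if y == label]
        centroids[label] = [mean(col) for col in zip(*rows)]
    return min(centroids,
               key=lambda c: sum((a - b) ** 2 for a, b in zip(x, centroids[c])))


def leave_one_out_accuracy(X, y):
    """Each subject is held out once and predicted by a model fitted on the rest."""
    correct = 0
    for i in range(len(X)):
        train_X, train_y = X[:i] + X[i + 1:], y[:i] + y[i + 1:]
        correct += nearest_centroid_predict(train_X, train_y, X[i]) == y[i]
    return correct / len(X)


# Hypothetical single-feature data (e.g. a normalized cortical thickness score).
X = [[0.0], [0.2], [0.1], [1.0], [1.2], [1.1]]
y = [0, 0, 0, 1, 1, 1]
print(leave_one_out_accuracy(X, y))
```

With very small single-center samples, each held-out subject shifts the accuracy by a large step, which is one reason LOOCV estimates can vary widely between centers.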

Recently, studies have gravitated toward utilizing latent imaging features obtained from segmented lesion masks via DL models, rather than traditionally extracted MRI lesion features that are heavily reliant on domain expertise and are prone to bias. An example of this application is evident in the prediction of CIS disease progression as demonstrated in [135,136,137,138].

Two related studies [136, 137] explored the use of CNNs for predicting the two-year outcome of CIS based on lesion masks and other features. The first study [137] focused on using preprocessed lesion masks, incorporating downsampling and Euclidean distance transform as inputs to the CNN model, achieving an accuracy of 0.729 on sevenfold cross-validation. The subsequent study [136] extended this approach by adding demographic, clinical, and additional MRI parameters as detailed in Table 1. This enhanced model, which also utilized latent features from lesion masks preprocessed in the same manner as [137], achieved a higher accuracy of 0.75 and particularly improved specificity, outperforming the previous model and other ML techniques like multivariate LoR and RF. Although the gain in accuracy was not large, the comparative results emphasize the importance of incorporating a broader range of features alongside lesion masks for more accurate and balanced predictions in CIS outcomes.
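
The lesion-mask preprocessing mentioned for [137] (downsampling and a Euclidean distance transform) can be illustrated on a small 2D mask. This is a brute-force sketch for clarity, not the authors' implementation: it assumes the transform assigns each pixel its distance to the nearest lesion pixel (other conventions exist), works in 2D rather than 3D, and downsamples by block averaging.

```python
import math


def distance_to_nearest_lesion(mask):
    """Brute-force Euclidean distance transform of a 2D binary lesion mask:
    every pixel gets its distance to the nearest lesion pixel (0 inside lesions).
    Runs in O(pixels x lesion pixels); practical pipelines use linear-time EDTs."""
    lesion = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not lesion:
        raise ValueError("mask contains no lesion pixels")
    return [[min(math.hypot(r - lr, c - lc) for lr, lc in lesion)
             for c in range(len(mask[0]))]
            for r in range(len(mask))]


def downsample(image, factor):
    """Average non-overlapping factor x factor blocks (trailing rows/cols dropped)."""
    rows, cols = len(image) // factor, len(image[0]) // factor
    return [[sum(image[r * factor + i][c * factor + j]
                 for i in range(factor) for j in range(factor)) / factor ** 2
             for c in range(cols)]
            for r in range(rows)]


mask = [[0, 0, 0, 0],
        [0, 1, 0, 0],
        [0, 0, 0, 0],
        [0, 0, 0, 0]]
print(downsample(distance_to_nearest_lesion(mask), 2))
```

The distance map turns a sparse binary mask into a smooth field in which every voxel carries information about how far it lies from lesion tissue, which is easier for a CNN to learn from than isolated lesion pixels.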

On the other hand, Afzal et al. [135] fed T2w images directly into a CNN, mitigating overfitting with data augmentation and a smaller network architecture. Due to limited data availability, model evaluation was carried out with the baseline MRIs of only 6 subjects to predict conversion to CDMS after one year. Prediction accuracy ranged from 0.833 to 1.0 depending on the data subset used for training. However, the extremely small sample size raises concerns about the study's generalizability and the potential for overfitting, even with the applied mitigation strategies. The reported high accuracy, especially given the limited data, suggests that the model might have learned specific characteristics of the training set rather than generalizable patterns. Additionally, the variation in accuracy depending on the training subset indicates a lack of consistency, further questioning the robustness of the model.

Furthermore, a study by Pareto et al. [138] attempted to predict a second clinical event in CIS patients based solely on features derived from T1w MRIs. However, its performance was no better than random guessing, suggesting that other MRI modalities and clinical variables are needed to better separate patient subgroups.

While the discussed predictive models for the conversion from CIS to overt MS present promising avenues for early intervention, comparing their efficacy poses significant challenges. The reliance on cross-validation in these studies raises concerns about potential overfitting and the true generalizability of the models to unseen data. Moreover, the studies used different prediction intervals and definitions of conversion to MS, which further complicates comparisons. Patients were also classified as having CIS according to different versions of the McDonald criteria. The 2005 McDonald criteria [139] required more stringent clinical and MRI evidence for diagnosing MS, often resulting in more patients being classified with CIS until a second clinical event occurred. The 2010 [140] and 2017 [14] revisions relaxed these requirements, allowing dissemination in space and time to be demonstrated on MRI and thus enabling earlier diagnosis. Therefore, many patients classified as having CIS under the earlier criteria would qualify for a diagnosis of MS under the later revisions.

Cognitive outcome

Cognitive impairment (CI) is a common disability affecting individuals with MS, with as many as 70% of patients impacted [141], and may be the earliest neurological symptom in patients with RIS [142, 143]. The aspects of cognition most often altered in MS are working memory and information processing speed (IPS), in addition to executive functions, complex attention, and visuospatial ability [144, 145]. Despite the debilitating effects of cognitive decline in this group of patients, the bulk of research efforts is focused on physical disability, and the cognitive domain remains largely underrepresented [146]. Depending on the course of the disease, CI may develop insidiously over time, or present as an abrupt decline during disease relapses [147].

Treatment of existing cognitive decline in MS has largely proven ineffective, and compensatory strategies for its manifestations are scarce, making prevention the best course of action [148, 149]. Furthermore, clinical trials with cognition as a primary endpoint are rare [150, 151]. Early identification of susceptible individuals for trial inclusion could therefore increase the power of these trials to produce reliable assessments of experimental therapies [152].

Damage to the GM and WM of the brain is likely the cause of CI in MS patients. However, the etiology is complex, and conventional MRI biomarkers of structural integrity do not necessarily correlate with symptoms of CI in this population [141]. Studies of functional brain networks have revealed that dynamic changes in brain connectivity may compensate for structural damage, with functional reorganization potentially masking the clinical expression of CI [153]. While GM damage, WM lesions and changes in structural and functional connectivity all offer insights into the heterogeneous presentation of CI in MS, cortical atrophy has been shown to be the strongest MRI predictor of future cognitive decline [147].

A series of studies [154,155,156] used statistical models to identify MRI biomarkers predicting CI in MS patients. Key findings included the role of various brain volumes as predictors of CI, with cortical GM volume (GMV) emerging as a strong predictor of CI across different MS stages. Furthermore, various cross-sectional studies [157,158,159] explored the use of ML and MRI biomarkers for the prediction of the Symbol Digit Modalities Test (SDMT) scores [160]. SDMT, which can be carried out within 5 min, is considered the gold standard for quick cognitive assessment in MS. However, it can only assess a single aspect of CI, namely IPS [142]. A variety of ML models were employed including XGBoost [157], RF [158] and SVM [159], all using a combination of clinical and MRI predictors for analysis. These studies reiterated the significance of cortical and deep GMVs for the prediction of CI, in addition to the increased connectivity of the cingulo-opercular task control network in resting-state fMRI [158].

Three studies [152, 161, 162] performed predictive modeling across different timeframes for various aspects of cognitive decline, revealing the limited contribution of lesion volume alone [152] and emphasizing the added benefit of integrating multimodal MRI features. Labiano-Fontcuberta et al. [152] constructed a longitudinal ML model designed specifically for routine clinical practice, using only features that are feasibly attainable in a clinical setting. A single aspect of cognitive functioning was measured through the Processing Speed Test (PST), serving as a substitute for the SDMT. The only MRI biomarker was T2 lesion load, alongside multiple clinical and demographic predictors detailed in Table 2. Various linear, nonlinear and tree-based models were trained with SMOTE for class balancing; the tree-based models XGBoost and RF performed best on both training and testing data, achieving AUROCs of 0.94 and 0.90, respectively. Given the study's emphasis on clinical application, variable importance scores were computed for each predictor, placing T2 lesion load at the lower end of variable importance.
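
SMOTE balances classes by synthesizing new minority-class samples rather than duplicating existing ones. The following is a minimal sketch of the standard interpolation step, not the exact configuration used in [152]; the neighbour count `k`, the `seed` parameter and the toy data are assumptions.

```python
import random


def smote(minority, n_new, k=2, seed=0):
    """Minimal SMOTE: each synthetic sample is placed at a random point on the
    segment between a random minority sample and one of its k nearest minority
    neighbours (squared Euclidean distance)."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        others = [s for j, s in enumerate(minority) if j != i]
        neighbours = sorted(
            others, key=lambda s: sum((a - b) ** 2 for a, b in zip(base, s)))[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # uniform in [0, 1)
        synthetic.append([a + gap * (b - a) for a, b in zip(base, neighbour)])
    return synthetic


# Hypothetical minority-class subjects described by two scaled features.
minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
print(smote(minority, n_new=3))
```

Oversampling should be applied only to the training portion of each split; synthesizing samples before splitting leaks information into the test set and inflates performance.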

In the remaining two studies [161, 162], multiple aspects of cognition were assessed using a larger number of MRI biomarkers. While [162] used lesion volume and 76 cortical/subcortical GMVs, Al-iedani et al. [161] included the most advanced MRI biomarkers, namely DTI-derived measures and MRS-derived neurometabolite concentrations from the hippocampus, prefrontal cortex and posterior cingulate cortex, in addition to lesion and structural volumes. Given the large number of predictor variables, both studies used regularized multivariable GLMs to select the best subset of features for subsequent modeling. The variables selected in [162] performed best for predicting IPS (Acc = 73%) and verbal memory (Acc = 79%), whereas those used in [161] performed poorly for IPS, yielding an R² of only 0.31, compared with 0.54 for the remaining cognitive tests. However, these results are not directly comparable, as [162] involved a heterogeneous cohort spanning various MS disease stages, whereas [161] included only RRMS. Furthermore, [161] did not use a train-test split and therefore provides no information on model generalizability. Nonetheless, the most promising biomarkers were the FA of NAWM and glutathione levels in the prefrontal cortex in [161], and cingulate and medial temporal lobe volumes in [162].
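
Regularized regression performs feature selection because an L1 penalty drives uninformative coefficients exactly to zero. The sketch below fits a Gaussian LASSO by cyclic coordinate descent with soft-thresholding; it is an illustration of the general technique, not the specific GLMs of [161, 162] (which may have used other link functions or penalties), and it assumes centred features and outcome so that no intercept is needed.

```python
def soft_threshold(rho, lam):
    """Shrink rho towards zero by lam, returning exactly 0 inside [-lam, lam]."""
    if rho > lam:
        return rho - lam
    if rho < -lam:
        return rho + lam
    return 0.0


def lasso_coordinate_descent(X, y, lam, n_iter=200):
    """Minimize (1/2n)||y - Xb||^2 + lam * ||b||_1 by cyclic coordinate descent.
    Assumes the columns of X and y are centred, so no intercept is fitted."""
    n, p = len(X), len(X[0])
    b = [0.0] * p
    for _ in range(n_iter):
        for j in range(p):
            # Correlation of feature j with the partial residual that excludes j.
            rho = sum(X[i][j] * (y[i] - sum(X[i][k] * b[k] for k in range(p) if k != j))
                      for i in range(n)) / n
            z = sum(X[i][j] ** 2 for i in range(n)) / n
            b[j] = soft_threshold(rho, lam) / z
    return b


# Hypothetical data: the outcome depends on feature 0 only.
X = [[-1.5, 1.0], [-0.5, -1.0], [0.5, -1.0], [1.5, 1.0]]
y = [-3.0, -1.0, 1.0, 3.0]
coef = lasso_coordinate_descent(X, y, lam=0.1)
selected = [j for j, b in enumerate(coef) if b != 0.0]
print(coef, selected)
```

The selected subset is simply the set of features with nonzero coefficients; the surviving coefficient is also shrunk slightly below its unpenalized value of 2.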

Applications of ML to predictive modeling of cognitive decline are scarce, and even more so for longitudinal prediction tasks. The feasibility of administering various cognitive tests in a clinical setting is limited by time constraints, underscoring the need for standardized testing protocols that yield comparable and reliable results. The influence of disease stage on predictive accuracy warrants further exploration, as does the need for consensus on defining CI, including the choice of thresholds and the number of cognitive domains exhibiting decline. Additionally, accounting for motor components, especially in patients with upper limb disabilities, remains critical for accurate prediction, given that these tests rely on upper limb mobility [163]. Addressing these nuances will enhance the practicality and effectiveness of ML models in predicting cognitive outcomes in MS patients.

EDSS-related disability

The Expanded Disability Status Scale (EDSS) [164] is the most commonly employed instrument for assessing disease severity and tracking disease progression in MS [32]. It consists of a scale ranging from 0 to 10, with 0 indicating no neurological abnormalities, and 10 representing death due to MS. Seven functional systems are assessed, including the pyramidal, cerebellar, brainstem, sensory, bowel/bladder, mental and visual functions, in addition to an 8th category signifying the presence of other symptoms outside of the aforementioned categories [165]. The final EDSS step is established based on the interplay between the eight functional systems and ambulatory function [33]. Despite its widespread use, the reliability of EDSS has been questioned due to several limitations: emphasis on ambulation at the higher end of the scale [33], nonlinear nature of the scale [166], variable duration at each stage [165], and underrepresentation of vision, cognition and upper limb function at values of 4 and above [167]. Nonetheless, since its inception in 1983, the EDSS has been valuable for objective long-term monitoring of MS patients [166].

Numerous studies have investigated the relationship between MRI biomarkers and EDSS in MS [168,169,170], revealing moderate relationships with T2 lesion volume (T2LV) and its change [168], cerebral atrophy rate [170], and various brain volumes [168]. This relationship is more pronounced, however, in the earlier stages of the disease [171]. Unconventional biomarkers were also associated with EDSS progression, such as signal intensity variations of lesions through inversion recovery ultrashort echo time (IR-UTE) [172], volume of MS lesions present at baseline but atrophied on follow-up [173], and structural cortical networks [174]. Therefore, various studies have incorporated MRI biomarkers in ML models for prediction of disease progression as determined through EDSS [175,176,177,178,179,180], which are summarized in Table 3.

Combining MRI volumetric, connectivity and radiomics features with clinical variables, Pontillo et al. [181] utilized a range of ML regression models for the prediction of EDSS. Starting from over 125,000 extracted features, a combination of collinearity, variance, LASSO regression and correlation analyses was used to reduce the predictor set to only 9 features, which included a subset of radiomics features obtained from the cerebellum, prefrontal cortex and deep GM structures. The best-performing models were SVM and ridge regression, achieving an MAE of 1.112 and 1.155 on external validation, respectively [181]. Tommasin et al. [182] trained 1000 RF classifiers to assess EDSS worsening using FA maps of NAWM together with cerebellar and thalamic volumes, among other features detailed in Table 3. Classifiers trained on radiological features alone outperformed those trained on clinical data or on combined clinical and radiological variables, achieving an AUROC of 0.92. T2 lesion load was the only variable identified as influential in all model iterations, while the remaining MRI features were prominent in fewer than 40% of the trained models.
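
The first two stages of a feature-reduction cascade like the one described for [181] can be sketched as a variance filter followed by greedy removal of collinear features. The thresholds `var_min` and `corr_max`, the greedy keep-first order and the toy feature names are assumptions made for illustration; the study's actual criteria and its subsequent LASSO step are not reproduced here.

```python
from statistics import mean, pvariance


def pearson(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = sum((x - ma) ** 2 for x in a) ** 0.5
    sb = sum((y - mb) ** 2 for y in b) ** 0.5
    return cov / (sa * sb)


def filter_features(features, var_min=1e-8, corr_max=0.9):
    """features: dict name -> values across subjects.
    1) drop near-constant features; 2) greedily drop any feature whose |r| with
    an already kept feature exceeds corr_max (order-dependent)."""
    kept = {}
    for name, values in features.items():
        if pvariance(values) <= var_min:
            continue
        if any(abs(pearson(values, v)) > corr_max for v in kept.values()):
            continue
        kept[name] = values
    return list(kept)


# Hypothetical radiomics-style features for four subjects.
features = {"a": [1, 2, 3, 4], "b": [2, 4, 6, 8.1], "c": [5, 5, 5, 5], "d": [4, 1, 3, 2]}
print(filter_features(features))
```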

By modeling MS disease trajectories as changes in EDSS, Campanioni et al. [183] employed regression and classification models to forecast disability accrual using baseline MRI features, patient age and sex. The regression models predicted the change in EDSS normalized by the time interval, while the classification models predicted EDSS values at 5 different timepoints, with each class corresponding to an individual score on the scale. XGBoost models performed best for both tasks, and SHAP identified the number of brain lesions on MRI as the most influential feature across most models.

The value of ensemble modeling for prediction of future decline, as measured through EDSS, was assessed in [175,176,177], with all models using BPF and T2LV as MRI-derived predictors. Zhao et al. [175] focused on optimizing SVM models with strategies such as undersampling, bagging and penalizing false negatives, finding that BPF was a predictor of non-progressive MS, while T2LV predicted EDSS progression. Interestingly, longitudinal changes in EDSS, ambulation index and FS scores were also incorporated into the model. When predictors were limited to clinical and demographic data, an overall accuracy of 0.55 and a sensitivity of 0.78 were achieved, while including MRI biomarkers improved these scores to 0.67 and 0.81, respectively. This work was extended in [177] using two independent datasets (EPIC and CLIMB) with both homogeneous and heterogeneous ensembles. Longitudinal changes in clinical variables were again employed, revealing that, while GMV and ventricular CSF were strong predictors in homogeneous models, the longitudinal clinical features remained the most influential across all models.

In [176], the authors aimed to develop a prognostic tool for predicting EDSS-based disease progression in SPMS patients, also leveraging ensemble learning. Various models were compared, including LoR and linear SVM as well as the tree-based ensembles RF and AdaBoost decision trees (AdB-DT). Tree-based models showed superior performance, particularly in specificity, compared with parametric models. Among parametric models, BPF was more effective than T2LV, whereas tree-based models performed better with T2LV. Nonetheless, clinical features such as baseline EDSS and 9-Hole Peg Test scores were more predictive than MRI-based features.

Roca et al. [178] extended ensemble modeling by combining it with DL, constructing a model consisting of an RF regressor, UMAP and a CNN for latent feature extraction from FLAIR images and lesion masks. MRI biomarkers fed into the RF and UMAP included whole brain and WM tract-based lesion load, in addition to LV volume. By combining these features with age in the ensemble regressor, an MSE of 3 was achieved on unseen test data. Performance varied across EDSS scores, with most models performing best in the intermediate range of the EDSS and being more prone to error at the higher and lower ends of the scale. Overall, RF models using engineered features predicted EDSS better than latent features extracted by the CNN. Notably, the standout features were the lesion load in the left posterior corona radiata tract and the volume of the LV [178].

In [179], multicenter clinical trial data were also used to build a classifier identifying EDSS progressors over one year. This approach relied entirely on DL, namely CNN blocks consisting of parallel pathways at various resolutions that are concatenated before being passed to the next layer. Five MRI modalities were included as input (T2w, T1p, T1c, PDw and FLAIR), in addition to T2w and T1w-Gd lesion masks. With the 5 modalities alone, the AUROC was 0.66, while including the lesion masks boosted performance to 0.701. Dular et al. [184] also used multimodal MRI in a DL architecture, employing transfer learning for enhanced latent feature extraction. A VGG network was trained for brain age estimation, and the resulting pretrained model was then leveraged for classifying disease progression according to changes in EDSS. This model, however, performed poorly in terms of precision and recall, as shown in Table 3.

Utilizing the DL architecture ResNet50, Taloni et al. [180] constructed a classifier discriminating MS progressors from non-progressors over the course of 4 years. Rather than applying a 3D model to patient MRIs, 2D slices were extracted in the coronal, sagittal and axial planes and then divided into 4 slabs of slices per plane according to anatomical location and structural similarity. Model training proceeded in two phases. First, a pretrained ResNet50 underwent three rounds of fine-tuning, one for each plane, to classify slices according to their slab location, yielding 3 Brain Scan Optimized Models (BSOM). Second, the 3 models were expanded to a total of 12 (3 planes × 4 slabs) by passing the slices of each slab through the fine-tuned ResNet50, this time with EDSS progression as the prediction target. Overall, models using individual slices performed better than those incorporating 3D slabs of MRI data, particularly axial slices containing the lateral and third ventricles.

Leveraging a multimodal deep neural network, Zhang et al. [185] integrated various encoder architectures into a single DL model for classifying patients based on three-year EDSS scores. ResNet, 1D CNN and graph attentional convolutional encoders were used to generate embeddings from MRIs, structured electronic health records and unstructured clinical notes, respectively. To handle the longitudinal data, a bidirectional GRU was incorporated into the pipeline as the decoder. Including MRI embeddings improved the AUROC from 0.8078 to 0.8380 for classifying patients reaching an EDSS milestone greater than 4 [185].

It is crucial to note that the discussed ML models used varied definitions of sustained disability increase (Table 3), highlighting the need for standardized criteria in future research. Furthermore, prediction accuracies may benefit from the inclusion of spinal cord parameters. Spinal cord imaging is not recommended for routine monitoring of MS patients [186], which explains its absence from the aforementioned studies. Given the motor-centric focus of the EDSS, it is unsurprising that significant correlations have been found between spinal cord imaging features and this disability scale [187, 188].
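
To show how much a progression label depends on its definition, the sketch below encodes one commonly used baseline-dependent rule for EDSS worsening (at least +1.5 from a baseline of 0, +1.0 from 1.0 to 5.5, +0.5 from 6.0 upwards) together with a confirmation window. Both the thresholds and the 6-month `confirm_months` default are assumptions chosen for illustration; none of the reviewed studies is implied to use exactly this rule.

```python
def edss_worsened(baseline: float, score: float) -> bool:
    """Baseline-dependent EDSS worsening threshold (one common convention)."""
    if baseline == 0:
        required = 1.5
    elif baseline <= 5.5:
        required = 1.0
    else:
        required = 0.5
    return score - baseline >= required


def confirmed_progression(baseline, visits, confirm_months=6):
    """visits: (month, edss) pairs after baseline. Worsening counts as confirmed
    only if it persists at every visit until one at least confirm_months later."""
    visits = sorted(visits)
    for i, (t0, score0) in enumerate(visits):
        if not edss_worsened(baseline, score0):
            continue
        for t, score in visits[i + 1:]:
            if not edss_worsened(baseline, score):
                break  # worsening not sustained
            if t - t0 >= confirm_months:
                return True
    return False


print(confirmed_progression(2.0, [(6, 3.0), (12, 3.0)]))
print(confirmed_progression(2.0, [(6, 3.0), (9, 2.0), (12, 3.0)]))
```

Changing the thresholds or the confirmation window alters which patients count as progressors, which is exactly why results obtained under different definitions are hard to compare.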

Motor disability

Motor impairments are the most recognized symptoms of MS and have a heavy impact on patient quality of life. Frequent deficits involve ambulation, upper limb (UL) movements and balance [189, 190], as well as gait impairment, which is the clinical hallmark of MS [191]. However, there is no single gait pattern typical of MS, because lesions disseminated throughout the central nervous system can affect gait in various ways [192]. Balance impairment is a pivotal concern, as it increases the risk of falls in patients with MS and hinders independence in daily life [193].

Walking impairment was reported to have the greatest impact on quality of life by both MS patients and physicians [191], with as many as 75% of patients reporting reduced mobility as a consequence [192]. In a cross-sectional study by Buckova et al. [194], fMRI-derived FC measures combined with an SVM model were predictive of walking ability as measured by the Multiple Sclerosis Walking Scale (MSWS).

Despite the wide focus on lower limb function, UL impairments are common in MS, affecting both fine and gross movements [195, 196]. The gold standard for assessing distal UL function, or manual dexterity, is the nine-hole peg test (9HPT), which records the time taken to place nine pegs into the holes of a pegboard and then remove them, assessing each hand separately [197, 198]. In Cordani et al. [199], the MD of premotor cortex transcallosal fibers and GM, WM and whole brain volumes were predictive of 9HPT scores in a cross-sectional cohort using RF modeling.

The only recent study, to our knowledge, assessing longitudinal motor impairment in MS patients is Mostafa et al. [200], which examined changes in UL function, as determined by 9HPT scores, over 144 weeks in a cohort of PPMS patients from an ocrelizumab clinical trial. A variety of ML models were trained on demographic, clinical and MRI biomarkers, with brain volume, T2LV and Gd+ lesion count as the MRI-derived features. All models performed similarly regardless of algorithmic complexity; however, across experiments with 6 different data splits, performance dropped notably from training (AUROC = 0.74–0.89) to testing (AUROC = 0.63–0.67), most likely reflecting overfitting. Nonetheless, this study makes a critical contribution to the field by including a relatively large cohort of PPMS patients, addressing a crucial gap in research on the prediction of progression in this less frequently studied but significant subgroup of MS patients [201].
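
Comparing training and test AUROC, as done above, is a simple overfitting check. The AUROC itself equals the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case (the Mann–Whitney formulation), which the sketch below computes directly; the scores and labels are hypothetical.

```python
def auroc(scores, labels):
    """AUROC as the probability that a random positive case (label 1) scores
    higher than a random negative case (label 0); ties count one half."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical predicted probabilities for the same model on two data partitions.
train_auc = auroc([0.9, 0.8, 0.3, 0.1], [1, 1, 0, 0])
test_auc = auroc([0.2, 0.8, 0.6, 0.4], [1, 1, 0, 0])
print(train_auc, test_auc)  # a large gap between the two suggests overfitting
```

This pairwise form is quadratic in sample size; rank-based implementations compute the same value faster, but the pairwise version makes the interpretation explicit.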

Due to combined cognitive and motor deficits, MS patients are at increased risk of falls, which adversely affect daily life on physical, psychological and social fronts. Fall history is the strongest predictor of future falls; however, relying on it hampers prevention, since risk is recognized only after a fall has occurred, and it offers no understanding of the mechanisms behind falls [202, 203]. Furthermore, the multifactorial nature of falls, encompassing motor, sensory and cognitive deficits, prevents any single clinical measure from providing accurate risk assessment.

In [71], classification models distinguishing fallers from non-fallers in a cohort of RRMS patients were constructed using multiple LR with forward stepwise variable selection based on the Akaike Information Criterion (AIC). To improve fall prediction, the study integrated clinical measures with advanced imaging, specifically corticospinal tract (CST) FA and magnetization transfer ratio (MTR). Other MRI measures, as well as clinical measures of walking and vibration sensation, were also incorporated into the modeling. Including CST measures raised the accuracy from 50% to 73.8%, with CST MTR retained in the final model. The major drawback of this study was the retrospective prediction of falls, using data collected after the falls occurred rather than baseline data preceding them.

Notably, a significant gap exists in our ability to predict future motor progression through baseline MRI and clinical data. While cross-sectional studies and retrospective analyses contribute valuable insights into the current state of motor impairment, they fall short in providing robust models for forecasting the course of MS over time. The lack of predictive models based on longitudinal data hampers our capacity to identify individuals at risk for escalating motor deficits early on, hindering the timely implementation of targeted interventions. This underscores the urgent need for prospective, longitudinal studies that integrate comprehensive baseline assessments.

Disease activity

Considering the complexity of the MS disease course, evaluating disease activity requires a holistic approach incorporating various clinical, imaging and serum biomarkers [204]. A common measure of disease activity is No Evidence of Disease Activity (NEDA), termed NEDA-3 because it jointly assesses three aspects of the disease: to be classified as NEDA-3, a patient must exhibit no relapses, no MRI activity and no EDSS progression [205, 206]. NEDA-4 adds brain volume loss as a fourth criterion [205]. Accurate prediction of future disease activity can help identify the patients most likely to benefit from new therapeutics [207].
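
The NEDA composite can be expressed as a simple rule over follow-up findings. In the sketch below, "MRI activity" is taken to mean any new or enlarging T2 lesion or any gadolinium-enhancing lesion, and the NEDA-4 atrophy cut-off of 0.4% annualized brain volume loss is a commonly cited value used here as an assumption; the `FollowUp` record and its field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FollowUp:
    relapses: int
    new_or_enlarging_t2: int
    gd_enhancing: int
    edss_progressed: bool
    annual_brain_volume_loss_pct: float


def neda3(f: FollowUp) -> bool:
    """No relapses, no MRI activity and no EDSS progression."""
    no_mri_activity = f.new_or_enlarging_t2 == 0 and f.gd_enhancing == 0
    return f.relapses == 0 and no_mri_activity and not f.edss_progressed


def neda4(f: FollowUp, atrophy_cutoff_pct: float = 0.4) -> bool:
    """NEDA-3 plus annualized brain volume loss below an assumed cut-off."""
    return neda3(f) and f.annual_brain_volume_loss_pct < atrophy_cutoff_pct


patient = FollowUp(relapses=0, new_or_enlarging_t2=0, gd_enhancing=0,
                   edss_progressed=False, annual_brain_volume_loss_pct=0.6)
print(neda3(patient), neda4(patient))  # NEDA-3 met, NEDA-4 not met
```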

In [208], LV intensity was identified as an important predictor of future disease activity as assessed by NEDA-3. A combination of clinical variables, MRI-derived features (T2w lesion count, WMV, GMV, CSF volume and total brain volume) and flattened FLAIR images cropped around the LV were used for modeling. MKL was employed for the prediction task, with each of the three feature subsets represented by a radial basis function kernel. The model combining all three kernels achieved an AUROC of 0.70 and a balanced accuracy of 0.61, improving on the 0.63 and 0.55 achieved by the model excluding the LV-cropped images [208]. Also using NEDA as an endpoint, Andorra et al. [209] applied RF modeling integrating clinical, MRI, genetic and omics data for two-year prediction of disease activity. MRI measures included whole brain volume, GMV, WMV, T2LV and the number of T1w-Gd lesions. The high-dimensional dataset of 100 features required dimensionality reduction through correlation analysis, PCA and feature importance-based selection. A balanced accuracy of 0.6 and an AUROC of 0.79 were obtained; however, no MRI feature ranked among the top 10 predictors [209].
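
In multiple kernel learning, each feature subset gets its own kernel and the classifier operates on a weighted combination of them. The sketch below builds such a combined RBF kernel matrix for subjects described by three feature subsets, mirroring the clinical / MRI-volume / LV-image split in [208]. The weights and `gamma` values are fixed by hand here, whereas MKL learns the weights during training; the toy subjects are hypothetical.

```python
import math


def rbf_kernel(a, b, gamma):
    """Radial basis function kernel exp(-gamma * ||a - b||^2)."""
    return math.exp(-gamma * sum((x - y) ** 2 for x, y in zip(a, b)))


def combined_kernel(subject_a, subject_b, weights, gammas):
    """Weighted sum of one RBF kernel per feature subset. Each subject is a list
    of per-subset feature vectors, e.g. [clinical, mri_volumes, lv_crop_pixels]."""
    return sum(w * rbf_kernel(fa, fb, g)
               for w, g, fa, fb in zip(weights, gammas, subject_a, subject_b))


def gram_matrix(subjects, weights, gammas):
    """Kernel matrix over all subject pairs, as consumed by a kernel classifier."""
    return [[combined_kernel(a, b, weights, gammas) for b in subjects]
            for a in subjects]


s1 = [[1.0, 2.0], [0.5], [0.0, 0.0, 0.0]]
s2 = [[1.0, 3.0], [0.5], [0.0, 1.0, 0.0]]
K = gram_matrix([s1, s2], weights=[0.5, 0.3, 0.2], gammas=[1.0, 1.0, 1.0])
print(K)
```

Because a non-negative weighted sum of valid kernels is itself a valid kernel, the combined matrix can be passed to any kernel method, and the learned weights indicate how much each data source contributes.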

In a small study conducted by Du et al. [210], multimodal MRI and PET radiomics features were extracted from WM lesions and employed in subsequent ML modeling. Various combinations of ML models and feature elimination methods were tested, with the best-performing combination being SVM with RFE with an AUROC of 0.96. The individual best-performing modality was T2w, outperforming both PET and T1w radiomics features. However, the combination of all modalities provided the best performance by a wide margin.

Similarly, Basu et al. [211] aimed to predict disease activity using XGBoost modeling in a cohort of patients enrolled in cladribine clinical trials. The MRI features included the total number and volume of T1w, T2w and T1w-Gd lesions, in addition to the combined unique lesion count across modalities and the number of new T1w lesions. Disease activity was again defined by a composite score involving EDSS, relapses and MRI activity. By combining the MRI-derived features with various neurological, hematological and biochemical test results, the XGBoost model achieved an AUROC of 0.8 and balanced accuracies of 0.75–0.8. According to SHAP, the most influential MRI predictors were the multimodal unique lesion count and new T1w lesions.
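
SHAP attributions, used in several of the studies above, are based on Shapley values: each feature's average marginal contribution to the prediction over all coalitions of the other features. The brute-force sketch below computes exact Shapley values for a small toy model, replacing features outside a coalition with a single baseline vector. This is a simplification: SHAP implementations typically average over background data and use faster algorithms such as TreeSHAP for tree ensembles, and the toy model is hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for one prediction; features outside a coalition are
    set to their baseline value. Cost grows exponentially with feature count."""
    n = len(x)

    def value(coalition):
        return model([x[i] if i in coalition else baseline[i] for i in range(n)])

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            for subset in combinations(others, size):
                s = set(subset)
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


# Hypothetical model with an interaction between features 0 and 2.
def model(z):
    return 2 * z[0] + 3 * z[1] + z[0] * z[2]


phi = shapley_values(model, x=[1.0, 1.0, 1.0], baseline=[0.0, 0.0, 0.0])
print(phi)  # the interaction term is split equally between features 0 and 2
```

The attributions always sum to the difference between the prediction and the baseline prediction, which is what makes them interpretable as a decomposition of a single patient's risk score.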

Two studies defined disease activity by combining EDSS with cognitive outcome [212, 213]. Storelli et al. [213] developed a DL algorithm using baseline T2- and T1-weighted MRI scans to predict disease worsening in MS patients over 2 years. The algorithm was based on a CNN designed to predict clinical worsening, cognitive deterioration, or both, using EDSS and SDMT as outcome measures. The model achieved an accuracy of up to 85.7%, outperforming two expert physicians (70%). In Nateboom et al. [212], motor disability progression was also evaluated through the 9HPT and Timed 25-Foot Walk Test (T25FW), in addition to EDSS and SDMT. This study was conducted on two separate cohorts, as described in Table 5, using combinations of clinical variables and MRI-derived global and regional volumes. However, performance on the test set was poor for both cohorts, most likely due to the large class imbalance in the datasets.
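
Class imbalance can make plain accuracy misleading, which is why balanced accuracy (the mean of sensitivity and specificity) is often reported alongside it. The sketch below shows a hypothetical cohort in which a model that never predicts worsening reaches 90% accuracy but only 0.5 balanced accuracy, the level of chance.

```python
def balanced_accuracy(y_true, y_pred):
    """Mean of sensitivity (recall on class 1) and specificity (recall on class 0)."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    pos = sum(t == 1 for t in y_true)
    neg = len(y_true) - pos
    return (tp / pos + tn / neg) / 2


# Hypothetical cohort: 9 stable patients, 1 worsening; the model predicts "stable" for all.
y_true = [0] * 9 + [1]
y_pred = [0] * 10
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(accuracy, balanced_accuracy(y_true, y_pred))
```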

Monitoring disease activity through lesion activity on MRI is particularly advantageous, as it can allow earlier determination of treatment efficacy [214]. The relationship between individual MS lesion patterns and clinical disability is a research topic of rising interest, though routine clinical MRI modalities are limited in characterizing the inflammatory status of lesions. T2w images are sufficiently sensitive for detecting WM lesions; however, more advanced modalities, such as T1w-Gd imaging and quantitative T1 relaxation time mapping (T1-RT), are required to differentiate lesion status [215,216,217,218,219].

The evolution of WM and GM lesions in MS entails cycles of inflammation and demyelination, remyelination and axonal loss, progressing through four main stages [220]. Early lesions are characterized by active inflammatory demyelination, with macrophages, lymphocytes and microglia infiltrating around a central vein [221, 222]. Once the inflammatory cells retreat to the lesion border, leaving a demyelinated, hypocellular center, the lesion is considered chronic active. This is followed by a period of inactivity and, finally, remyelination or repair of the lesion, known as lesion resolution [220].

Interest in chronic active lesions has gained momentum due to their high prevalence [220], their association with clinical disability and their specificity to MS compared with other neurological conditions [223]. These lesions are also known as smoldering lesions or slowly expanding lesions (SELs), owing to the active demyelination occurring at the lesion borders [216, 220], which can be detected as a paramagnetic rim, primarily characterized on 7 T MRI [224]. SELs are of particular interest due to their association with smoldering disease activity, which is theorized to be the true pathological basis of MS [15].

Longitudinal analysis of serial MRIs in MS poses a significant challenge due to the diversity introduced by varying MRI machines and acquisition protocols, in addition to the nuances of registration in the case of pathological brains [225]. Various methods have been developed to overcome this challenge, which substantially improve downstream prediction tasks reliant on accurate segmentation and detection of new and enlarging lesions (NEL). However, advances in segmentation architectures are not discussed in the current work, and interested readers are encouraged to refer to comprehensive review papers for an in-depth discussion of this particular field of research [225,226,227].

Numerous studies have investigated ML for predicting the emergence and expansion of MS lesions, using various textural and geometric lesion descriptors as model features. In [228], GMMs were used to categorize lesions into subtypes based on textural information, including rotation-invariant feature transform (RIFT), local binary pattern and intensity features. For each patient, a “bag of lesions” representation was constructed from the lesion-type probabilities obtained from the GMM and fed into an RF model to predict whether the patient would develop new or enlarging T2 lesions within the next 24 months. In contrast, [229] focused on predicting the evolution of individual non-enhancing lesions (expansion or shrinkage) using FLAIR radiomics features. Given the large number of available features, various combinations of feature selection methods and ML algorithms were evaluated; a non-linear SVM with ReliefF feature selection performed best, with an accuracy of 0.827.
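To make the “bag of lesions” idea concrete, the sketch below turns each lesion's descriptor vector into GMM component (lesion-type) probabilities and averages them over a patient's lesions, yielding one fixed-length vector per patient regardless of lesion count. It is a minimal illustration under stated assumptions, not the pipeline of [228]: the two-component GMM, its hand-set parameters (`WEIGHTS`, `MEANS`, `VARIANCES`) and the 2-D toy descriptors are hypothetical, and averaging is only one plausible way to aggregate; in practice the GMM would be fitted to real RIFT, local binary pattern and intensity features, and the resulting vector passed to a classifier such as an RF.

```python
import math

# Hypothetical 2-component GMM over 2-D lesion descriptors (assumed values).
# In [228] the GMM is fitted to real textural features; here it is fixed by hand.
WEIGHTS = [0.5, 0.5]
MEANS = [[0.0, 0.0], [3.0, 3.0]]
VARIANCES = [[1.0, 1.0], [1.0, 1.0]]  # diagonal covariances


def diag_gauss_pdf(x, mean, var):
    """Density of a diagonal-covariance Gaussian evaluated at x."""
    log_p = 0.0
    for xi, mi, vi in zip(x, mean, var):
        log_p += -0.5 * (math.log(2.0 * math.pi * vi) + (xi - mi) ** 2 / vi)
    return math.exp(log_p)


def responsibilities(x):
    """Posterior probability of each lesion type (GMM component) for one lesion."""
    scores = [w * diag_gauss_pdf(x, m, v) for w, m, v in zip(WEIGHTS, MEANS, VARIANCES)]
    total = sum(scores)
    return [s / total for s in scores]


def bag_of_lesions(lesion_features):
    """Average lesion-type probabilities over all of one patient's lesions."""
    k = len(WEIGHTS)
    if not lesion_features:
        return [0.0] * k  # a patient without lesions gets an all-zero bag
    bag = [0.0] * k
    for x in lesion_features:
        for j, r in enumerate(responsibilities(x)):
            bag[j] += r
    return [b / len(lesion_features) for b in bag]


# Usage: three lesions, one near each type centre plus one in between.
patient_vector = bag_of_lesions([[0.1, -0.2], [2.9, 3.1], [1.5, 1.5]])
```

Because the output length equals the number of lesion types rather than the number of lesions, patients with very different lesion loads can be compared by the same downstream classifier.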

Similar to [228], Sepahvand et al. [230] predicted MRI activity status within 2 years from baseline images (T1w, T2w, FLAIR) together with T2w lesion masks. The method relied on latent features extracted by a CNN, achieving a maximum accuracy, sensitivity and specificity of around 80%. Providing the segmented masks to the network proved crucial: using only the MRI modalities as input reduced accuracy to 26.31%, primarily because sensitivity for active cases dropped to 8.45% [230]. The authors later developed a follow-up model, NE Subnet, for segmenting new and enlarging T2w lesions using a U-Net with subtraction imaging included as an attention mechanism, which improved the detection of small-scale changes and their differentiation from noise. Future disease activity was then classified on the basis of this model, achieving a higher sensitivity (around 0.977) at the cost of lower specificity (0.693) [231].
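The role of subtraction imaging as attention can be illustrated with a simple soft gate: voxels whose intensity changes markedly between co-registered baseline and follow-up scans receive weights near 1, while small differences, which are more likely to be noise, are suppressed. This is a minimal sketch assuming intensity-normalized, co-registered 2-D slices stored as nested lists; the fixed sigmoid gate and its parameters `tau` and `k` are hypothetical stand-ins, since in NE Subnet [231] the attention is part of a trained U-Net and its exact form is not reproduced here.

```python
import math


def subtraction_attention(baseline, follow_up, tau=0.2, k=20.0):
    """Soft voxel-wise attention weights computed from a subtraction image.

    Absolute changes well above `tau` map to weights near 1; changes well
    below `tau` (likely noise) map to weights near 0. `tau` and `k` are
    hypothetical, hand-set values rather than learned parameters.
    """
    weights = []
    for row_b, row_f in zip(baseline, follow_up):
        weights.append([
            1.0 / (1.0 + math.exp(-k * (abs(f - b) - tau)))
            for b, f in zip(row_b, row_f)
        ])
    return weights


def apply_attention(feature_map, weights):
    """Re-weight a same-sized feature map voxel by voxel."""
    return [[x * w for x, w in zip(fr, wr)] for fr, wr in zip(feature_map, weights)]


# Usage: a bright new focus at (0, 1) and a faint fluctuation at (1, 0).
baseline = [[0.0, 0.0], [0.0, 0.0]]
follow_up = [[0.0, 1.0], [0.05, 0.0]]
attn = subtraction_attention(baseline, follow_up)
gated = apply_attention([[2.0, 2.0], [2.0, 2.0]], attn)
```

A learned attention module would replace the fixed threshold with parameters trained jointly with the segmentation network, but the intuition is the same: the subtraction image tells the network where change is plausible.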

Alternatively, Acar et al. [232] predicted the emergence of new lesions using 3D discrete wavelet transforms of whole FLAIR images, treating each image as a volumetric signal. Specifically, the second-level LLL sub-bands (low-pass filtered along all three axes) were used as the feature vector for subsequent ML modeling. Of the 10 wavelet families analyzed, features from the Symlet8 family combined with an SVM model performed best, achieving a precision, recall and F1 score of 0.95.
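The second-level LLL sub-band can be illustrated with the simplest wavelet. The sketch below applies an orthonormal Haar low-pass filter along all three axes (each 2×2×2 block is summed and scaled by (1/√2)³) and repeats it twice, then flattens the result into a feature vector. Note the substitution: [232] used Symlet8 and other families, whereas Haar is used here only because it can be written in a few lines without dependencies; a library such as PyWavelets (for example `pywt.wavedecn` with `wavelet="sym8"`) would normally be used instead. Function names are hypothetical, and trailing odd slices are simply dropped.

```python
import math


def haar_lll(volume):
    """One level of a 3-D orthonormal Haar DWT, keeping only the LLL sub-band.

    `volume` is a nested list indexed [z][y][x]. Each output voxel combines a
    2x2x2 block; low-pass filtering (a + b) / sqrt(2) along each of the three
    axes amounts to block_sum * (1 / sqrt(2)) ** 3. Odd trailing slices are dropped.
    """
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    scale = 1.0 / (2.0 * math.sqrt(2.0))  # (1/sqrt(2)) ** 3
    out = []
    for z in range(0, nz - 1, 2):
        plane = []
        for y in range(0, ny - 1, 2):
            row = []
            for x in range(0, nx - 1, 2):
                block_sum = sum(volume[z + dz][y + dy][x + dx]
                                for dz in (0, 1) for dy in (0, 1) for dx in (0, 1))
                row.append(block_sum * scale)
            plane.append(row)
        out.append(plane)
    return out


def lll2_features(volume):
    """Flatten the second-level LLL sub-band into a 1-D feature vector."""
    level2 = haar_lll(haar_lll(volume))
    return [v for plane in level2 for row in plane for v in row]


# Usage: an 8x8x8 toy "image" yields 2x2x2 = 8 second-level LLL features.
toy = [[[float(x + y + z) for x in range(8)] for y in range(8)] for z in range(8)]
features = lll2_features(toy)
```

Each decomposition level halves every dimension, so the second-level LLL vector is 64 times smaller than the input volume, which keeps the feature vector tractable for a classifier such as an SVM.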

More recently, the treatment-modified course of disease activity was predicted from a combination of baseline multimodal MRI and clinical data [233]. The goal was to develop a clinical tool for evaluating the efficacy of various MS treatment options by weighing their associated risks against the predicted benefit in terms of reduction in NEL. MRI features were extracted with a ResNet encoder, concatenated with clinical features and passed through a multihead MLP, with each output head representing the outcome of a specific treatment in terms of future disease activity. This architecture performed better when trained for regression than for classification. The regression task predicted the difference in lesion count from baseline, whereas the classification task predicted only future disease activity status based on minimal evidence of disease activity on future T2 sequences (MEDA-T2), which applies a threshold of 3 NEL. Based on this model, a clinical tool was implemented in which a patient’s predicted NEL under each treatment arm was used to improve treatment recommendation [233].
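The multihead design can be sketched as one shared feature vector feeding one small regression head per treatment arm; the arm with the lowest predicted NEL is then a candidate recommendation, and thresholding each prediction gives a MEDA-T2-style label. This is a toy illustration under explicit assumptions: the arm names, linear head weights and the three-element "shared features" are hypothetical (in [233] the shared features come from a ResNet encoder plus clinical variables and the heads are trained MLP outputs), and the convention that fewer than 3 NEL counts as MEDA-T2 is assumed for the sketch.

```python
# Hypothetical treatment arms with linear head weights and biases (untrained, illustrative).
HEADS = {
    "placebo": ([0.8, 0.5, 0.3], 1.0),
    "drug_A": ([0.4, 0.2, 0.1], 0.5),
    "drug_B": ([0.2, 0.3, 0.05], 0.2),
}
MEDA_T2_THRESHOLD = 3  # assumed convention: fewer than 3 NEL counts as MEDA-T2


def predict_nel(shared_features):
    """Apply every treatment-specific head to the same shared feature vector."""
    preds = {}
    for arm, (weights, bias) in HEADS.items():
        z = sum(w * x for w, x in zip(weights, shared_features)) + bias
        preds[arm] = max(0.0, z)  # clip at zero: lesion counts cannot be negative
    return preds


def recommend(shared_features):
    """Return the arm with the fewest predicted NEL, all predictions, and MEDA-T2 labels."""
    preds = predict_nel(shared_features)
    best_arm = min(preds, key=preds.get)
    meda = {arm: nel < MEDA_T2_THRESHOLD for arm, nel in preds.items()}
    return best_arm, preds, meda


# Usage with a made-up shared feature vector.
best_arm, preds, meda = recommend([2.0, 1.0, 4.0])
```

Keeping a continuous NEL prediction per arm, rather than only a binary label, preserves the size of the expected benefit, which is what a clinician would weigh against each treatment's risks.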

In [234], the authors used GNNs to forecast inflammatory disease activity within 1–2 years, defined as the emergence of new or enlarged inflammatory lesions on MRI within that timeframe. Self-supervised features were extracted from all segmented MRI lesions, and various GNNs were used to classify inflammatory status, with individual lesions as graph nodes and the spatial relationships between lesions encoded as edges. To enhance interpretability, a self-pruning module was introduced, which identified the specific lesions that contributed most to the final prediction. Among the GNNs evaluated, the graph convolutional network (GCN) performed best and outperformed other traditional ML and DL methods. The results from [228,229,230,231, 233, 234] are summarized in Table 6.
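The lesion-graph idea can be sketched with a single GCN layer: lesions become nodes carrying feature vectors, edges link lesions whose centroids lie within a distance threshold, and each node's new embedding is a degree-normalized average over itself and its neighbours, ReLU(D^-1/2 (A + I) D^-1/2 H W). Node embeddings are then mean-pooled into a patient-level activity score. This is a minimal sketch, not the model of [234]: the distance-based edge rule, the `radius` value, the toy 2-D lesion features and the untrained weights `w` and `readout` are hypothetical, and the self-pruning module is omitted.

```python
import math


def build_adjacency(centroids, radius):
    """Connect lesions whose centroids lie within `radius` (e.g. mm) of each other."""
    n = len(centroids)
    adj = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j and math.dist(centroids[i], centroids[j]) <= radius:
                adj[i][j] = 1.0
    return adj


def gcn_layer(adj, h, w):
    """One graph convolution: ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]  # always >= 1 thanks to the self-loop
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    n_feat, n_out = len(w), len(w[0])
    # Aggregate normalized neighbour features, then apply the linear map and ReLU.
    agg = [[sum(norm[i][k] * h[k][f] for k in range(n)) for f in range(n_feat)]
           for i in range(n)]
    return [[max(0.0, sum(agg[i][f] * w[f][o] for f in range(n_feat)))
             for o in range(n_out)] for i in range(n)]


def graph_score(adj, h, w, readout):
    """Mean-pool node embeddings and map them to a probability-like activity score."""
    node_emb = gcn_layer(adj, h, w)
    pooled = [sum(col) / len(node_emb) for col in zip(*node_emb)]
    z = sum(p * r for p, r in zip(pooled, readout))
    return 1.0 / (1.0 + math.exp(-z))


# Usage: two nearby lesions and one distant, isolated lesion.
centroids = [(0.0, 0.0, 0.0), (5.0, 0.0, 0.0), (50.0, 0.0, 0.0)]
adj = build_adjacency(centroids, radius=10.0)
h = [[1.0, 0.0], [0.0, 1.0], [2.0, 3.0]]
w = [[1.0, 0.0], [0.0, 1.0]]
out = gcn_layer(adj, h, w)
score = graph_score(adj, h, w, readout=[0.3, -0.1])
```

Because the pooled embedding has a fixed size, the same model handles patients with any number of lesions, while the edges let neighbouring lesions inform one another's representation.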

Predicting disease activity in MS is a topic of extensive interest, as highlighted by the large number of studies discussed in this section. While NEDA and MRI activity are of growing interest as endpoints for clinical trials, EDSS remains the endpoint most commonly used for clinician assessment [235, 236]. NEDA has also been suggested for monitoring the effectiveness of DMTs to avoid the accumulation of irreversible disability [237]. However, its focus on inflammation has raised doubts regarding its suitability for PPMS patients, who show limited inflammatory activity on MRI. Finally, definitions of disease activity differ between studies in their timeframes and in whether only new lesions or both new and enlarging lesions are counted, which complicates standardization and hinders comparisons between studies [236].
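How much these definitional choices matter can be shown with a toy labelling function: the same patient record can be labelled active or inactive depending on the follow-up window and on whether enlarging lesions are counted. The `ScanComparison` record, its fields and the `mri_active` function are hypothetical illustrations, and per-scan lesion counts are assumed to be measured relative to the preceding scan.

```python
from dataclasses import dataclass


@dataclass
class ScanComparison:
    """Hypothetical per-follow-up record of lesion counts versus the previous scan."""
    months_from_baseline: float
    new_lesions: int
    enlarging_lesions: int


def mri_active(scans, window_months, include_enlarging=True, min_count=1):
    """Label a patient MRI-active if any follow-up scan within the window shows
    at least `min_count` qualifying lesions under the chosen definition."""
    for s in scans:
        if s.months_from_baseline <= window_months:
            count = s.new_lesions + (s.enlarging_lesions if include_enlarging else 0)
            if count >= min_count:
                return True
    return False


# One enlarging lesion at 12 months, one new lesion at 24 months.
record = [ScanComparison(12, 0, 1), ScanComparison(24, 1, 0)]
label_12m_new_and_enlarging = mri_active(record, 12)                     # active
label_12m_new_only = mri_active(record, 12, include_enlarging=False)      # inactive
label_24m_new_only = mri_active(record, 24, include_enlarging=False)      # active
```

A model trained on one of these labellings and evaluated on another would appear to perform worse for reasons unrelated to its predictive ability, which is one concrete reason why cross-study comparison is difficult.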

Conclusions

The integration of ML and MRI biomarkers for predicting MS progression presents a promising avenue for advancing clinical insight. Although this review focuses primarily on brain imaging, it is evident that relying on a single measure is insufficient to obtain predictions accurate enough for deployment in clinical practice. Integrating multimodal data is likely the best route to a personalized medicine approach to MS prognosis [238].

Given the recognition of MS as a disconnection syndrome, integrating network neuroscience with ML may help overcome the clinico-radiological paradox. This can be achieved by incorporating both structural and functional connectivity-derived measures into ML models [29, 239]. Additionally, combining graph representations of neurological disorders such as MS with DL in graph neural network models leverages the advantages of spatially aware geometric deep learning [112]. Finally, a multiresolution, multimodal representation of brain connectivity could improve prognostic accuracy [240].

Furthermore, the use of ML and DL comes with several limitations that need to be addressed. First, data quality is paramount to the success of any AI model, requiring extensive datasets that sufficiently represent the heterogeneity of population characteristics, disease course and scanning parameters [241]. Moreover, a considerable gap remains between AI and medical applications, particularly for DL, owing to insufficient consideration of explainability, which is a necessary ethical and legislative requirement for any clinical decision support system [119]. In addition, the lack of consensus on clinical endpoints for measuring disease progression in MS studies hinders direct comparison of model architectures and of the predictive power of the features used. Finally, only a few of the discussed models were externally validated. Prognostic models cannot be deployed in clinical practice before being externally validated on datasets other than those on which they were trained. Because performance is usually worse outside the training population, this step is essential to avoid harm from clinical decisions based on incorrect predictions [242].

Abbreviations

- 9-HPT: Nine-hole peg test
- AI: Artificial intelligence
- AUPRC: Area under the precision–recall curve
- AUROC: Area under the receiver operating characteristic curve
- BPF: Brain parenchymal fraction
- CI: Cognitive impairment
- CIS: Clinically isolated syndrome
- CNN: Convolutional neural network
- CNS: Central nervous system
- CLRDP: Clinico-radiological paradox
- CSF: Cerebrospinal fluid
- DL: Deep learning
- DMT: Disease-modifying therapy
- DT: Decision trees
- DTI: Diffusion tensor imaging
- DWI: Diffusion-weighted imaging
- EDSS: Expanded disability status scale
- fMRI: Functional MRI
- FA: Fractional anisotropy
- FC: Functional connectivity
- FLAIR: Fluid-attenuated inversion recovery
- GCN: Graph convolutional network
- GM: Gray matter
- GMM: Gaussian mixture model
- GMV: Gray matter volume
- GNN: Graph neural network
- GRU: Gated recurrent unit
- KNN: K-nearest neighbors
- LR: Linear regression
- LoR: Logistic regression
- LSTM: Long short-term memory
- LV: Lateral ventricles
- MAE: Mean absolute error
- MD: Mean diffusivity
- ML: Machine learning
- MLP: Multi-layer perceptron
- MRI: Magnetic resonance imaging
- MS: Multiple sclerosis
- MSWS: Multiple sclerosis walking scale
- MT: Magnetization transfer imaging
- MTR: Magnetization transfer ratio
- NEDA: No evidence of disease activity
- NEL: New and enlarging lesions
- PASAT: Paced auditory serial addition test
- PCA: Principal component analysis
- PDw: Proton density-weighted imaging
- PIRA: Progression independent of relapse activity
- PPMS: Primary progressive MS
- ResNet: Residual network
- RF: Random forest
- RNN: Recurrent neural network
- RRMS: Relapsing-remitting MS
- SDMT: Symbol digit modalities test
- SHAP: Shapley additive explanations
- sNfL: Serum neurofilament light chain
- SPMS: Secondary progressive MS
- SVM: Support vector machine
- T1w: T1-weighted imaging
- T2LV: T2w lesion volume
- T2w: T2-weighted imaging
- T25w: Timed 25-foot walk
- UL: Upper limb
- WM: White matter
- XGBoost: Extreme gradient boosting

References

Attfield KE, Jensen LT, Kaufmann M et al (2022) The immunology of multiple sclerosis. Nat Rev Immunol 22:734–750. https://doi.org/10.1038/s41577-022-00718-z

Hauser SL, Cree BAC (2020) Treatment of multiple sclerosis: a review. Am J Med 133:1380–1390.e2. https://doi.org/10.1016/j.amjmed.2020.05.049

Yang JH, Rempe T, Whitmire N et al (2022) Therapeutic advances in multiple sclerosis. Front Neurol. https://doi.org/10.3389/fneur.2022.824926

Dobson R, Giovannoni G (2019) Multiple sclerosis—a review. Eur J Neurol 26:27–40. https://doi.org/10.1111/ene.13819

Lassmann H (2018) Multiple sclerosis pathology. Cold Spring Harb Perspect Med 8:a028936. https://doi.org/10.1101/cshperspect.a028936

Filippi M, Bar-Or A, Piehl F et al (2018) Multiple sclerosis. Nat Rev Dis Primers. https://doi.org/10.1038/s41572-018-0041-4

Kuhlmann T, Moccia M, Coetzee T et al (2023) Multiple sclerosis progression: time for a new mechanism-driven framework. Lancet Neurol 22:78–88. https://doi.org/10.1016/S1474-4422(22)00289-7

Lassmann H (2019) Pathogenic mechanisms associated with different clinical courses of multiple sclerosis. Front Immunol 9:3116. https://doi.org/10.3389/fimmu.2018.03116

Brownlee WJ, Miller DH (2014) Clinically isolated syndromes and the relationship to multiple sclerosis. J Clin Neurosci 21:2065–2071. https://doi.org/10.1016/j.jocn.2014.02.026

Marrie RA, Allegretta M, Barcellos LF et al (2022) From the prodromal stage of multiple sclerosis to disease prevention. Nat Rev Neurol 18:559–572. https://doi.org/10.1038/s41582-022-00686-x

Hosseiny M, Newsome SD, Yousem DM (2020) Radiologically isolated syndrome: a review for neuroradiologists. Am J Neuroradiol 41:1542–1549. https://doi.org/10.3174/ajnr.A6649

De Stefano N, Giorgio A, Tintoré M et al (2018) Radiologically isolated syndrome or subclinical multiple sclerosis: MAGNIMS consensus recommendations. Mult Scler 24:214–221. https://doi.org/10.1177/1352458517717808

Benito-León J, del Pino AB, Aladro Y et al (2023) Abnormal functional connectivity in radiologically isolated syndrome: a resting-state fMRI study. Mult Scler J 29:1393–1405. https://doi.org/10.1177/13524585231195851

Thompson AJ, Banwell BL, Barkhof F et al (2018) Diagnosis of multiple sclerosis: 2017 revisions of the McDonald criteria. Lancet Neurol 17:162–173. https://doi.org/10.1016/S1474-4422(17)30470-2

Giovannoni G, Popescu V, Wuerfel J et al (2022) Smouldering multiple sclerosis: the ‘real MS’. Ther Adv Neurol Disord. https://doi.org/10.1177/17562864211066751

Lublin FD, Häring DA, Ganjgahi H et al (2022) How patients with multiple sclerosis acquire disability. Brain 145:3147–3161. https://doi.org/10.1093/brain/awac016

Tur C, Carbonell-Mirabent P, Cobo-Calvo Á et al (2023) Association of early progression independent of relapse activity with long-term disability after a first demyelinating event in multiple sclerosis. JAMA Neurol 80:151–160. https://doi.org/10.1001/jamaneurol.2022.4655

Gil-González I, Martín-Rodríguez A, Conrad R, Pérez-San-Gregorio MÁ (2020) Quality of life in adults with multiple sclerosis: a systematic review. BMJ Open. https://doi.org/10.1136/bmjopen-2020-041249

Fox RJ, Mehta R, Pham T et al (2022) Real-world disease-modifying therapy pathways from administrative claims data in patients with multiple sclerosis. BMC Neurol. https://doi.org/10.1186/s12883-022-02738-7

Liu Z, Liao Q, Wen H, Zhang Y (2021) Disease modifying therapies in relapsing-remitting multiple sclerosis: a systematic review and network meta-analysis. Autoimmun Rev 20:102826. https://doi.org/10.1016/j.autrev.2021.102826

Langer-Gould AM, Smith JB, Gonzales EG et al (2023) Multiple sclerosis, disease-modifying therapies, and infections. Neurol Neuroimmunol Neuroinflamm. https://doi.org/10.1212/NXI.0000000000200164

Zingaropoli MA, Pasculli P, Iannetta M et al (2022) Infectious risk in multiple sclerosis patients treated with disease-modifying therapies: a three-year observational cohort study. Mult Scler J Exp Transl Clin. https://doi.org/10.1177/20552173211065731