Abstract

As one of the fundamental texture classification methods, LBP-based descriptors have attracted considerable attention due to their efficiency, simplicity, and high performance. However, most binary pattern methods cannot effectively capture texture information under scale changes. Motivated by this, this paper proposes a multi-scale threshold integration encoding strategy for texture classification. The essence of this strategy is to introduce multi-scale local texture information from the perspective of thresholding. Based on this strategy, we propose the local multi-scale center pattern, local multi-scale sign pattern, and local multi-scale magnitude pattern to extract and describe multi-scale local texture information. The three sub-patterns are then jointly combined to generate the final descriptor for texture classification tasks. Experimental results on three popular texture databases demonstrate that the proposed descriptor is highly discriminative for visual texture classification.

1 Introduction

Texture classification is one of the fundamental tasks in image processing and pattern recognition, with wide applications such as medical image analysis [1], remote sensing [2, 3], face recognition [4], fingerprint recognition [5], and object detection [6]. A classical texture classification pipeline has two important parts: texture feature representation and classifier design. Because texture representation plays a decisive role in texture classification, this paper focuses on developing a powerful feature representation that can deal with scale changes.

The purpose of texture representation is to extract robust and discriminative texture features that provide key visual cues and surface properties. The binary pattern family, one of the most notable groups of texture descriptors, has achieved great success on various texture analysis tasks owing to its efficiency and high performance. Despite this promising performance, designing a discriminative and robust texture descriptor that handles complicated situations, such as illumination variation, image rotation, and viewpoint and scale changes, remains challenging [7].

Ojala et al. [8] proposed the local binary pattern (LBP), which extracts local texture information by encoding the sign of the difference between the center pixel and its local neighbors. Over the past decades, it has become one of the most popular texture descriptors owing to its discriminative power and simplicity. However, it cannot effectively handle challenging scenarios because of inherent limitations, such as single-feature representation and single-scale encoding.

Over the past decades, many LBP-based descriptors have been proposed to address various challenges in texture analysis. Most of them try to enhance the representation power by designing and encoding multiple sub-patterns. The completed LBP (CLBP) [9], one of the most notable LBP variants, models a complete representation of local texture by dividing the local neighborhood into three sub-components, which yield CLBP_S, CLBP_M, and CLBP_C. CLBP and its variants have attracted significant attention in texture analysis owing to their satisfactory performance. However, sub-feature imbalance is a crucial issue, because CLBP_C is only a 1-bit code. Moreover, these descriptors cannot effectively deal with scale variations.

Scale is one of the most important attributes of texture images, and scale variations may degrade feature representation. Because scale invariance is a demanding requirement in texture analysis, multi-scale texture representation has attracted widespread attention. Specifically, Li et al. [10] performed scale- and rotation-invariant texture analysis by finding an optimal scale to improve robustness to scale changes. Reza et al. [11] studied scale-space theory and proposed an automatic scale selection approach to achieve scale-invariant texture analysis. The authors of [12] proposed a scale selective local binary pattern that finds pre-learned dominant binary patterns in different scale spaces for texture classification. Subsequently, Adel et al. [13] introduced an adaptive analysis window and proposed the adaptive median binary pattern to address scale and illumination variations. The work in [14] combined counting and difference representations at different scales and presented a multi-scale counting and difference representation for visual texture classification. However, this method concatenates all single-scale representations, which leads to a large feature dimension.

Recently, Wu et al. [15] proposed the joint-scale LBP, which combines micro- and macro-texture structures across different scales and achieved striking classification performance. However, its scale fusion depends strongly on the neighborhood sampling radius and the number of sampling points. The work in [16] introduced a scale selective extended LBP, which builds scale spaces with Gaussian filters and uses the multi-scale histogram as the final feature vector; however, its feature dimension increases dramatically with the number of scales. The work in [17] presented a multi-scale symmetric dense micro-block difference descriptor to capture rotation-invariant, multi-scale spatial texture information. In 2019, Pan et al. [18] developed a scale-adaptive local binary pattern that adaptively selects optimal-scale neighbors for texture classification, but the scale-adaptive strategy inevitably incurs extra computational cost.

A review of the related literature reveals two inherent problems. First, the center pixel-based sub-pattern, such as CLBP_C, is usually 1-bit, which leads to a serious imbalance in feature dimensions. Second, existing multi-scale schemes face considerable computational challenges. Two categories dominate current research: one selects and/or combines multiple binary patterns from sampling neighborhoods of different scales, and the other fuses multiple texture descriptors from different scale spaces built by Gaussian filters. Both neglect the role of encoding thresholds and inevitably produce a high feature dimension.

In view of the above problems, this paper develops a multi-scale threshold integration encoding strategy that introduces multi-scale texture information into binary patterns from the perspective of thresholding. Specifically, we divide the local neighborhood into three sub-components: the center pixel, the local magnitude, and the local sign. We then encode each sub-component with the proposed strategy and jointly combine the results into a novel completed multi-scale neighborhood encoding pattern (CMNEP) descriptor for visual texture classification.

The contributions of this paper are as follows.

First, for the center pixel, we propose a simple and effective local multi-scale center pattern. It uses thresholds at different scales to encode the center pixel into multiple 1-bit binary patterns and combines them with the multi-pattern encoding strategy to generate the local multi-scale center pattern.

Second, for the local magnitude and sign, we fuse texture information from different sampling resolutions and propose the multi-scale threshold integration encoding strategy. Based on it, we develop the local multi-scale sign pattern and the local multi-scale magnitude pattern to represent multi-scale local neighborhood information.

Third, we jointly combine the three sub-patterns into a completed multi-scale neighborhood encoding pattern, which is designed to be robust to variations in illumination, rotation, viewpoint, and scale.

Finally, extensive experiments on public texture databases show that the proposed descriptor achieves top-ranked texture classification performance.

The rest of this paper is organized as follows. Section 2 reviews the CLBP descriptor. Section 3 details the proposed completed multi-scale neighborhood encoding pattern descriptor. In Sect. 4, we conduct experimental evaluations and discuss performance. Finally, conclusions are given in Sect. 5.

2 The completed local binary pattern

In this section, we briefly review the basic theory of the traditional CLBP. CLBP [9] is one of the most successful local texture descriptors. It divides the local region into the local difference sign, the local difference magnitude, and the center pixel, and generates three corresponding sub-features, namely CLBP_S, CLBP_M, and CLBP_C.

Given a radius R, a center pixel \(g_{c}\), and its P neighboring pixels \(g_{p}\), \(p = 0, \ldots, P - 1\), on a circle of radius R, the local difference \(g_{p} - g_{c}\) contains discriminative local texture information. It can be decomposed into two complementary parts: the local difference sign and the local difference magnitude. The local difference sign is \(s_{p} = s\left( g_{p} - g_{c} \right)\), where \(s\left( x \right) = \begin{cases} 1, & x \ge 0 \\ 0, & x < 0 \end{cases}\) is the sign function. The local difference magnitude is \(m_{p} = \left| g_{p} - g_{c} \right|\), where \(\left| \cdot \right|\) denotes the absolute value.
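The following Python sketch (NumPy assumed) shows one way to compute \(s_{p}\) and \(m_{p}\) for every pixel. Placing neighbor p at angle \(2\pi p/P\) and bilinearly interpolating off-grid samples is a common LBP convention that we assume here; the function names `sample_circle` and `local_differences` are hypothetical, and border pixels without a full neighborhood are simply skipped.

```python
import numpy as np

def sample_circle(img, R, P):
    """Sample P neighbours on a circle of radius R around every interior pixel.

    Neighbour p sits at angle 2*pi*p/P (assumed convention); off-grid positions
    are bilinearly interpolated. Pixels within ceil(R) + 1 of the border are
    skipped so that every sample stays inside the image.
    Returns g_c with shape (H', W') and g_p with shape (P, H', W').
    """
    img = np.asarray(img, dtype=np.float64)
    H, W = img.shape
    margin = int(np.ceil(R)) + 1
    ys, xs = np.mgrid[margin:H - margin, margin:W - margin]
    g_c = img[margin:H - margin, margin:W - margin]
    g_p = np.empty((P,) + g_c.shape)
    for p in range(P):
        theta = 2.0 * np.pi * p / P
        y = ys - R * np.sin(theta)          # image rows grow downwards
        x = xs + R * np.cos(theta)
        y0, x0 = np.floor(y).astype(int), np.floor(x).astype(int)
        dy, dx = y - y0, x - x0
        g_p[p] = (img[y0, x0] * (1 - dy) * (1 - dx) + img[y0, x0 + 1] * (1 - dy) * dx
                  + img[y0 + 1, x0] * dy * (1 - dx) + img[y0 + 1, x0 + 1] * dy * dx)
    return g_c, g_p

def local_differences(img, R=1, P=8):
    """Split g_p - g_c into the sign s_p = s(g_p - g_c) and magnitude m_p = |g_p - g_c|."""
    g_c, g_p = sample_circle(img, R, P)
    diff = g_p - g_c                        # broadcasts over the P neighbours
    s = (diff >= 0).astype(np.uint8)        # s(x) = 1 if x >= 0 else 0
    m = np.abs(diff)
    return s, m
```

On a linear ramp \(g(y, x) = 7y + x\), for example, neighbor p = 0 (to the right) gives \(m_{p} = 1\) and neighbor p = 2 (above) gives \(m_{p} = 7\) with \(s_{p} = 0\).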

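CLBP_S represents the local difference sign information, which carries most of the local discriminative information. It is defined as

\[ CLBP\_S_{P,R}^{riu2} = \begin{cases} \sum\limits_{p=0}^{P-1} s\left( g_{p} - g_{c} \right), & U\left( CLBP\_S_{P,R} \right) \le 2 \\ P + 1, & \text{otherwise} \end{cases} \]

where “\(riu2\)” denotes the rotation-invariant uniform mapping and \(U\left( \cdot \right)\) counts the number of bitwise 0/1 transitions in the circular binary code:

\[ U\left( CLBP\_S_{P,R} \right) = \left| s\left( g_{P-1} - g_{c} \right) - s\left( g_{0} - g_{c} \right) \right| + \sum\limits_{p=1}^{P-1} \left| s\left( g_{p} - g_{c} \right) - s\left( g_{p-1} - g_{c} \right) \right| \]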
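The riu2 mapping can be sketched in a few lines of Python (NumPy assumed; the helper names `transitions` and `riu2` are hypothetical). It takes the circular binary code along axis 0, counts transitions as \(U(\cdot)\) does, and returns the number of 1s for uniform codes or P + 1 otherwise, so the resulting histogram has P + 2 bins.

```python
import numpy as np

def transitions(bits):
    """U(.): number of 0/1 changes in a circular binary code stored along axis 0."""
    bits = np.asarray(bits, dtype=np.int64)
    # np.roll(..., 1) pairs bit p with bit p-1, and bit 0 with bit P-1.
    return np.abs(bits - np.roll(bits, 1, axis=0)).sum(axis=0)

def riu2(bits):
    """Rotation-invariant uniform mapping: number of 1s if U <= 2, else P + 1."""
    bits = np.asarray(bits, dtype=np.int64)
    P = bits.shape[0]
    return np.where(transitions(bits) <= 2, bits.sum(axis=0), P + 1)
```

For instance, with P = 8 the code 11100000 has U = 2 and maps to 3, and any rotation of it maps to 3 as well, whereas 10100000 has U = 4 and maps to P + 1 = 9.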

CLBP_M describes the local difference magnitude information and is an important complement to CLBP_S. It is defined as

\[ CLBP\_M_{P,R}^{riu2} = \begin{cases} \sum\limits_{p=0}^{P-1} t\left( m_{p}, c \right), & U\left( CLBP\_M_{P,R} \right) \le 2 \\ P + 1, & \text{otherwise} \end{cases} \]

where \(t\left( x, c \right) = \begin{cases} 1, & x \ge c \\ 0, & x < c \end{cases}\), and c is a threshold given by the mean of \(m_{p}\) over the whole texture image.

CLBP_C carries important grayscale information of the texture image and is obtained by

\[ CLBP\_C_{P,R} = t\left( g_{c}, c_{I} \right) \]

where \(c_{I}\) is the threshold given by the mean of \(g_{c}\) over the whole image.

As shown in [9], combining different sub-features yields a more comprehensive representation of local information. Sub-features can be combined in two ways: concatenation (e.g., CLBP_S_M) and joint histogramming (e.g., CLBP_S/M). The three sub-features can further be fused in different ways, such as CLBP_S/M/C and CLBP_S_M/C.
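As an illustration of the joint combination, the sketch below builds a CLBP_S/M/C histogram in Python. It assumes NumPy, bilinear circular sampling at angles \(2\pi p/P\), and that the image-level means c and \(c_{I}\) are computed over the pixels that have a full neighborhood. The joint index places the CLBP_S, CLBP_M, and CLBP_C codes on a \((P+2) \times (P+2) \times 2\) grid; the function names are hypothetical, and this is not the reference implementation of [9].

```python
import numpy as np

def sample_circle(img, R, P):
    # Bilinearly interpolated circular neighbours (angle 2*pi*p/P assumed);
    # border pixels without a full neighbourhood are skipped.
    img = np.asarray(img, dtype=np.float64)
    H, W = img.shape
    margin = int(np.ceil(R)) + 1
    ys, xs = np.mgrid[margin:H - margin, margin:W - margin]
    g_c = img[margin:H - margin, margin:W - margin]
    g_p = np.empty((P,) + g_c.shape)
    for p in range(P):
        y = ys - R * np.sin(2 * np.pi * p / P)
        x = xs + R * np.cos(2 * np.pi * p / P)
        y0, x0 = np.floor(y).astype(int), np.floor(x).astype(int)
        dy, dx = y - y0, x - x0
        g_p[p] = (img[y0, x0] * (1 - dy) * (1 - dx) + img[y0, x0 + 1] * (1 - dy) * dx
                  + img[y0 + 1, x0] * dy * (1 - dx) + img[y0 + 1, x0 + 1] * dy * dx)
    return g_c, g_p

def riu2(bits):
    # Number of 1s if the circular code has at most two 0/1 transitions, else P + 1.
    bits = bits.astype(np.int64)
    U = np.abs(bits - np.roll(bits, 1, axis=0)).sum(axis=0)
    return np.where(U <= 2, bits.sum(axis=0), bits.shape[0] + 1)

def clbp_smc_hist(img, R=3, P=24):
    """Normalised joint CLBP_S/M/C histogram with (P + 2) * (P + 2) * 2 bins."""
    g_c, g_p = sample_circle(img, R, P)
    diff = g_p - g_c
    mag = np.abs(diff)
    s_code = riu2(diff >= 0)                        # CLBP_S^{riu2}
    m_code = riu2(mag >= mag.mean())                # CLBP_M^{riu2}, c = mean of m_p
    c_code = (g_c >= g_c.mean()).astype(np.int64)   # CLBP_C, c_I = mean of g_c
    joint = (s_code * (P + 2) + m_code) * 2 + c_code
    hist = np.bincount(joint.ravel(), minlength=(P + 2) ** 2 * 2).astype(np.float64)
    return hist / hist.sum()
```

With (R, P) = (3, 24), the joint histogram has 26 × 26 × 2 = 1352 bins.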

CLBP is one of the most successful LBP variants and provides a useful framework for comprehensive texture representation. Many extensions of CLBP have recently been presented, such as CLBC [19], multi-scale CLBP [20], SALBP [18], LDEP [21], and CLEBP [22].

In this paper, we adopt the neighborhood division of CLBP. To make the sub-features robust to scale changes, we design a multi-scale threshold integration encoding strategy to encode the three sub-components and propose the completed multi-scale neighborhood encoding pattern for visual texture classification.

3 The completed multi-scale neighborhood encoding pattern

In this section, we describe the proposed completed multi-scale neighborhood encoding pattern in detail. First, we analyze the motivation. Then, we propose the local multi-scale center pattern to extract multi-scale texture information around the center pixel. Next, we develop the local multi-scale sign pattern and the local multi-scale magnitude pattern based on the multi-scale threshold integration encoding strategy. Finally, by combining the three sub-patterns, we obtain the completed multi-scale neighborhood encoding pattern, which represents multi-scale local texture information.

3.1 Motivation

In spite of the wide popularity of CLBP and its variants, there are two inherent drawbacks.

1. The dimensions of the sub-patterns in CLBP are extremely unbalanced. Specifically, CLBP_C is only 1-bit, while the histograms of CLBP_S and CLBP_M each have P + 2 bins. These unbalanced sub-feature dimensions limit the performance of the fused feature.

2. The CLBP descriptor cannot resist scale variations. To handle this problem, many studies introduce multi-scale strategies, which focus on two aspects:

   a. providing multi-scale texture information by combining multiple binary patterns from sampling neighborhoods of different scales;

   b. fusing multiple texture descriptors from different scale spaces built by Gaussian filters.

   However, existing multi-scale schemes inevitably lead to serious dimension expansion.

To address the first problem, this paper proposes an (N + 1)-bit local multi-scale center pattern (N is the number of scales), which uses thresholds at different scales to encode the center pixel. It not only significantly boosts the discriminative power of the center pixel-based sub-pattern during feature fusion, but also introduces multi-scale information from the perspective of encoding thresholds. Section 3.2 describes it in detail.

To solve the second problem, we design an efficient multi-scale threshold integration encoding strategy from a new perspective. Compared with existing multi-scale schemes, it has two noteworthy advantages: first, it gives the threshold itself the ability to represent multi-scale information; second, binary patterns encoded with the strategy have feature vectors of the same dimension as those encoded without it. Based on this idea, we encode the local sign and magnitude and propose the local multi-scale sign pattern and the local multi-scale magnitude pattern, respectively, as elaborated in Sects. 3.3 and 3.4.

3.2 Local multi-scale center pattern

The traditional CLBP uses the mean of the whole image as the threshold to encode the center pixel and produces a 1-bit binary code, CLBP_C. This has two shortcomings. On the one hand, its feature dimension is too small to provide enough discriminative information, so it can hardly serve as an individual feature for representing a texture image. On the other hand, it cannot encode multi-scale grayscale information.

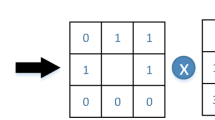

In this section, we propose a local multi-scale center pattern (LMCP) based on the multi-scale threshold integration encoding strategy. As shown in Fig. 1, instead of a single threshold, we encode the center pixel with four thresholds at different scales, which represent texture information at different scales around the center pixel.

Given a center pixel \(g_{c}\), let \(R_{1}, R_{2}, \ldots, R_{N}\) be the radii of sampling circles at different scales. For the i-th sampling circle, the corresponding \(P_{i}\) neighboring pixels are \(g_{R_{i},p}\), \(p = 0, \ldots, P_{i} - 1\), \(i = 1, \ldots, N\). We define the multi-scale thresholds as follows:

\[ thre_{i} = \frac{1}{P_{i}} \sum\limits_{p=0}^{P_{i}-1} g_{R_{i},p}, \quad i = 1, \ldots, N \]

Specifically, we define the original threshold \(thre_{0}\) as the mean of all pixels in the whole image. To improve the discriminative power, for each sampling scale we use the mean value of the circular neighbors as the corresponding encoding threshold, so \(thre_{i}, i = 1, 2, \ldots, N\), are multi-scale sampling neighborhood thresholds that carry texture information from different sampling neighborhoods. Then, following the multi-pattern encoding strategy [42], the \(N + 1\) thresholds \(thre_{i}, i = 0, \ldots, N\), are each used to encode the center pixel \(g_{c}\), yielding the (N + 1)-bit LMCP code. The LMCP is denoted as:

Note that when N = 0, the LMCP is equivalent to the original CLBP_C. In this paper, we set \(N = 3\) with sampling circles \(\left( R_{1}, P_{1} \right) = \left( 1, 8 \right)\), \(\left( R_{2}, P_{2} \right) = \left( 2, 16 \right)\), and \(\left( R_{3}, P_{3} \right) = \left( 3, 24 \right)\). This yields a 4-bit LMCP code, so the LMCP histogram has \(2^{4} = 16\) bins.
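The LMCP computation can be sketched in Python as follows. We assume NumPy, bilinear circular sampling at angles \(2\pi p/P\), and that the multi-pattern encoding of [42] weights the bit produced by \(thre_{i}\) with \(2^{i}\); since any fixed bit order only permutes the 16 histogram bins, this choice does not change the information content. The names `circle_mean` and `lmcp` are hypothetical.

```python
import numpy as np

def circle_mean(img, R, P, margin):
    """Mean of the P bilinearly interpolated neighbours on a circle of radius R,
    evaluated for every pixel at least `margin` away from the border."""
    H, W = img.shape
    ys, xs = np.mgrid[margin:H - margin, margin:W - margin]
    total = np.zeros(ys.shape)
    for p in range(P):
        y = ys - R * np.sin(2 * np.pi * p / P)
        x = xs + R * np.cos(2 * np.pi * p / P)
        y0, x0 = np.floor(y).astype(int), np.floor(x).astype(int)
        dy, dx = y - y0, x - x0
        total += (img[y0, x0] * (1 - dy) * (1 - dx) + img[y0, x0 + 1] * (1 - dy) * dx
                  + img[y0 + 1, x0] * dy * (1 - dx) + img[y0 + 1, x0 + 1] * dy * dx)
    return total / P

def lmcp(img, scales=((1, 8), (2, 16), (3, 24))):
    """Hypothetical LMCP sketch: bit 0 compares g_c with thre_0 (whole-image mean),
    bit i compares g_c with thre_i (mean of the P_i neighbours at radius R_i)."""
    img = np.asarray(img, dtype=np.float64)
    margin = int(np.ceil(max((R for R, _ in scales), default=0))) + 1
    H, W = img.shape
    g_c = img[margin:H - margin, margin:W - margin]
    code = (g_c >= img.mean()).astype(np.int64)          # t(g_c, thre_0)
    for i, (R, P) in enumerate(scales, start=1):
        thre_i = circle_mean(img, R, P, margin)
        code += (g_c >= thre_i).astype(np.int64) << i    # ASSUMED weight 2^i
    return code                                          # values in [0, 2^(N+1))
```

Passing an empty `scales` tuple reproduces the N = 0 case, in which each code is simply \(t(g_{c}, thre_{0})\), i.e., CLBP_C; with the default three scales, `np.bincount(codes.ravel(), minlength=16)` gives the 16-bin LMCP histogram.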

Figure 1 illustrates the LMCP. It not only represents the grayscale information of the center pixel, but also extracts texture information from sampling neighborhoods at different scales. Compared with the traditional CLBP_C, the LMCP eliminates the feature imbalance problem and captures more discriminative multi-scale local texture information.

3.3 Local multi-scale sign pattern

The local difference sign contains important information about the local texture structure. Most LBP variants use a fixed threshold, the center pixel, to encode the sign component. This makes the texture feature vulnerable to complex scenarios and prevents it from capturing multi-scale texture structure.

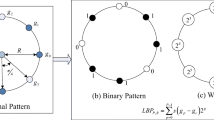

Motivated by these problems, we build an efficient multi-scale threshold integration encoding strategy and propose the local multi-scale sign pattern (LMSP). The strategy introduces texture information from different sampling resolutions into the binary encoding, which improves robustness against scale variations.

Given a center pixel \(g_{c}\) and N sets of corresponding neighboring pixels \(g_{R_{i},p}\), \(p = 0, \ldots, P_{i} - 1\), \(i = 1, \ldots, N\), we compute the thresholds at different scales for the local difference sign as follows:

Here, \(thre_{0}^{S}\) is the value of the center pixel, and \(thre_{i}, i = 1, 2, \ldots, N\), are the multi-scale neighborhood thresholds.

To exploit multi-scale sign information, we design a multi-scale threshold integration encoding strategy that combines the thresholds of different sampling scales into a single multi-scale threshold for the sign component, defined as:

The resulting threshold \(thre^{S}\), called the multi-scale neighborhood sign threshold, is used to encode the sign component. The proposed local multi-scale sign pattern can then be mathematically represented as follows:

As elaborated in (8), the multi-scale threshold integration encoding strategy introduces a multi-scale representation into the LMSP from the perspective of thresholding. In this way, the LMSP represents multi-scale, discriminative texture information without increasing the feature dimension. Note that when N = 0, the LMSP is equivalent to the original CLBP_S.

Taking N = 3 as an example, Fig. 2 illustrates the LMSP. It is worth noting that if the same encoding neighborhood is used, CLBP_S and LMSP have the same feature dimension. However, CLBP_S represents only single-scale local texture information, whereas LMSP carries information from sampling neighborhoods at multiple scales. Thanks to the multi-scale threshold integration encoding strategy, the proposed LMSP provides a multi-scale texture representation with a low feature dimension.
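The following Python sketch outlines how an LMSP code map could be computed. Two parts are assumptions that we label explicitly: the integration rule producing \(thre^{S}\), for which the sketch uses a plain average of \(thre_{0}^{S}, thre_{1}, \ldots, thre_{N}\) as a hypothetical stand-in, and the choice of the largest circle \((R_{N}, P_{N}) = (3, 24)\) as the encoding neighborhood, consistent with the (R, P) = (3, 24) setting used in Sect. 4. NumPy, bilinear sampling, and the function names are likewise assumptions.

```python
import numpy as np

def circle_samples(img, R, P, margin):
    """Bilinearly interpolated neighbours g_{R,p} (angle 2*pi*p/P), shape (P, H', W')."""
    H, W = img.shape
    ys, xs = np.mgrid[margin:H - margin, margin:W - margin]
    out = np.empty((P,) + ys.shape)
    for p in range(P):
        y = ys - R * np.sin(2 * np.pi * p / P)
        x = xs + R * np.cos(2 * np.pi * p / P)
        y0, x0 = np.floor(y).astype(int), np.floor(x).astype(int)
        dy, dx = y - y0, x - x0
        out[p] = (img[y0, x0] * (1 - dy) * (1 - dx) + img[y0, x0 + 1] * (1 - dy) * dx
                  + img[y0 + 1, x0] * dy * (1 - dx) + img[y0 + 1, x0 + 1] * dy * dx)
    return out

def riu2(bits):
    """Number of 1s if the circular code has at most two 0/1 transitions, else P + 1."""
    bits = bits.astype(np.int64)
    U = np.abs(bits - np.roll(bits, 1, axis=0)).sum(axis=0)
    return np.where(U <= 2, bits.sum(axis=0), bits.shape[0] + 1)

def lmsp(img, scales=((1, 8), (2, 16), (3, 24)), encode=(3, 24)):
    """Hypothetical LMSP sketch returning one riu2 code per interior pixel."""
    img = np.asarray(img, dtype=np.float64)
    R_enc, P_enc = encode
    margin = int(np.ceil(max([R for R, _ in scales] + [R_enc]))) + 1
    H, W = img.shape
    g_c = img[margin:H - margin, margin:W - margin]
    # thre_0^S = g_c; thre_i = mean of the P_i neighbours on the circle of radius R_i.
    thresholds = [g_c] + [circle_samples(img, R, P, margin).mean(axis=0)
                          for R, P in scales]
    # ASSUMPTION: plain average as a stand-in for the integration rule.
    thre_S = np.mean(thresholds, axis=0)
    # ASSUMPTION: sign bits are taken on the encoding circle (R_N, P_N).
    g_enc = circle_samples(img, R_enc, P_enc, margin)
    return riu2((g_enc - thre_S) >= 0)     # s(g_{R_N,p} - thre^S) with riu2 mapping
```

Whatever integration rule is used, the output keeps the P + 2 = 26 possible riu2 values of CLBP_S, which is the dimension-preserving property described above; with an empty `scales` tuple the threshold reduces to \(g_{c}\) and the sketch computes CLBP_S.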

3.4 Local multi-scale magnitude pattern

As elaborated in [9], the local difference magnitude provides important information complementary to the sign component. In this section, we design a local multi-scale magnitude pattern (LMMP) based on the multi-scale threshold integration encoding strategy to strengthen discriminative ability and robustness to scale changes. Benefiting from this strategy, the LMMP significantly improves multi-scale analysis ability while keeping the same dimension as CLBP_M.

For a center pixel \(g_{c}\) and N sets of corresponding neighboring pixels \(g_{R_{i},p}\), \(p = 0, \ldots, P_{i} - 1\), \(i = 1, \ldots, N\), the local difference magnitude component is \(m_{R_{i},p} = \left| g_{R_{i},p} - g_{c} \right|\). The thresholds at different scales for the local magnitude component are given as follows.

Here, \(thre_{0}^{M}\) denotes the mean of \(m_{{R_{N} ,p}}\) from the whole texture image.

With the multi-scale threshold integration encoding strategy, the multi-scale neighborhood magnitude threshold is represented as

Then the local multi-scale magnitude pattern is encoded by \(thre^{M}\), which is described as

Similar to the LMSP, the LMMP captures discriminative, multi-scale local magnitude information without expanding the feature dimension. Taking \(N = 3\) as an example, Fig. 3 illustrates how the LMMP is built.
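Analogously, a Python sketch of the LMMP is given below. Only \(thre_{0}^{M}\), the image-wide mean of \(m_{R_{N},p}\), is taken from the text; the per-scale thresholds \(thre_{i}^{M}\), taken here as the local mean of \(m_{R_{i},p}\) over the \(P_{i}\) neighbors, and the plain averaging used to form \(thre^{M}\) are hypothetical placeholders, as are NumPy, bilinear sampling, and the function names.

```python
import numpy as np

def circle_samples(img, R, P, margin):
    """Bilinearly interpolated neighbours g_{R,p} (angle 2*pi*p/P), shape (P, H', W')."""
    H, W = img.shape
    ys, xs = np.mgrid[margin:H - margin, margin:W - margin]
    out = np.empty((P,) + ys.shape)
    for p in range(P):
        y = ys - R * np.sin(2 * np.pi * p / P)
        x = xs + R * np.cos(2 * np.pi * p / P)
        y0, x0 = np.floor(y).astype(int), np.floor(x).astype(int)
        dy, dx = y - y0, x - x0
        out[p] = (img[y0, x0] * (1 - dy) * (1 - dx) + img[y0, x0 + 1] * (1 - dy) * dx
                  + img[y0 + 1, x0] * dy * (1 - dx) + img[y0 + 1, x0 + 1] * dy * dx)
    return out

def riu2(bits):
    """Number of 1s if the circular code has at most two 0/1 transitions, else P + 1."""
    bits = bits.astype(np.int64)
    U = np.abs(bits - np.roll(bits, 1, axis=0)).sum(axis=0)
    return np.where(U <= 2, bits.sum(axis=0), bits.shape[0] + 1)

def lmmp(img, scales=((1, 8), (2, 16), (3, 24))):
    """Hypothetical LMMP sketch; the last scale (R_N, P_N) is the encoding circle."""
    img = np.asarray(img, dtype=np.float64)
    margin = int(np.ceil(max(R for R, _ in scales))) + 1
    H, W = img.shape
    g_c = img[margin:H - margin, margin:W - margin]
    # m_{R_i,p} = |g_{R_i,p} - g_c| for every scale i.
    mags = [np.abs(circle_samples(img, R, P, margin) - g_c) for R, P in scales]
    m_enc = mags[-1]
    # thre_0^M: mean of m_{R_N,p} over all interior pixels of the image.
    thre_0 = np.full(g_c.shape, m_enc.mean())
    # ASSUMPTION: thre_i^M is the local mean of m_{R_i,p} over the P_i neighbours.
    local = [m.mean(axis=0) for m in mags]
    # ASSUMPTION: plain average as a stand-in for the integration rule.
    thre_M = np.mean([thre_0] + local, axis=0)
    return riu2(m_enc >= thre_M)           # t(m_{R_N,p}, thre^M) with riu2 mapping
```

As with the LMSP sketch, the output takes at most P + 2 = 26 values, matching the dimension of CLBP_M on the same encoding circle.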

To obtain multi-scale and discriminative feature representation, we combine the LMCP, LMSP, and LMMP jointly, and generate the completed multi-scale neighborhood encoding pattern (CMNEP), as shown in Fig. 4. In the CMNEP descriptor, the sub-feature CMNEP_C is equal to LMCP, CMNEP_S is equal to LMSP, and CMNEP_M is equal to LMMP. Then, the final fusion feature vector is represented as CMNEP_SMC.

The main differences between CMNEP and CLBP are fourfold. First, CMNEP_C uses thresholds at different scales to encode the center pixel and generates an (N + 1)-bit binary pattern, whereas CLBP_C uses only the mean of the whole image and obtains a 1-bit binary pattern without multi-scale analysis power. Second, in CMNEP_S the encoding threshold is generated by the proposed multi-scale threshold integration strategy, whereas in CLBP_S the threshold is the center pixel, which represents single-scale texture information; the same holds for CMNEP_M and CLBP_M. Third, in CMNEP_SMC, CMNEP_C has an adequate feature dimension, which avoids the imbalance problem during feature fusion, whereas in CLBP_SMC, CLBP_C is too short to convey the discriminative information carried by the center pixel, leading to imbalance among the sub-patterns. Fourth, benefiting from the multi-scale threshold integration strategy, CMNEP has a scale-adaptive ability, whereas CLBP cannot deal with scale variations.

3.5 Distance measure

For a fair comparison, the proposed texture descriptor and all competitors use the same classifier in the experimental evaluations.

Specifically, this paper uses the nonparametric nearest neighbor classifier with the \(\chi^{2}\) distance to measure the dissimilarity between training images and sample images. Let \(H^{S}\) and \(H^{T}\) denote the histograms of the sample image and the training image, respectively. The \(\chi^{2}\) distance between the sample feature vector and the training feature vector is defined as

\[ \chi^{2}\left( H^{S}, H^{T} \right) = \sum\limits_{i=1}^{M} \frac{\left( H_{i}^{S} - H_{i}^{T} \right)^{2}}{H_{i}^{S} + H_{i}^{T}} \]

where M is the total number of bins in the histogram vector and i indexes the ith bin of the corresponding histogram.
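A minimal Python sketch of the \(\chi^{2}\) nearest neighbor classifier follows, assuming NumPy and histograms stored as 1-D arrays; the function names are hypothetical. The small constant `eps` is our addition to avoid division by zero for bins that are empty in both histograms, which then contribute zero to the sum.

```python
import numpy as np

def chi2_distance(h_s, h_t, eps=1e-12):
    """Chi-square distance sum_i (H_i^S - H_i^T)^2 / (H_i^S + H_i^T)."""
    h_s = np.asarray(h_s, dtype=np.float64)
    h_t = np.asarray(h_t, dtype=np.float64)
    return float(np.sum((h_s - h_t) ** 2 / (h_s + h_t + eps)))

def nearest_neighbor(sample_hist, train_hists, train_labels):
    """Label of the training histogram with the smallest chi-square distance."""
    dists = [chi2_distance(sample_hist, h) for h in train_hists]
    return train_labels[int(np.argmin(dists))]
```

A sample histogram [0.2, 0.0, 0.8], for example, is closer to the training histogram [0.1, 0.1, 0.8] than to [0.9, 0.1, 0.0] under this distance, so it receives the label of the former.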

4 Experimental evaluation

To investigate the effectiveness of the proposed CMNEP, we conduct comprehensive experiments on publicly available databases, namely Outex [23], UMD [24], and UIUC [25], and compare the proposed CMNEP descriptor with classical texture descriptors and recent state-of-the-art texture classification methods.

Figure 5 shows some texture samples from the Outex, UIUC, and UMD databases. Table 1 summarizes all databases used in our experiments.

4.1 Experimental evaluation on Outex database

In this section, we evaluate the rotation and illumination invariance of the proposed CMNEP descriptor on the Outex database (Outex_TC10 and Outex_TC12). It has 24 classes with 200 samples each. The texture images are collected under three illuminants (“inca,” “t184,” and “horizon”) and nine rotation angles (0°, 5°, 10°, 15°, 30°, 45°, 60°, 75°, 90°). We use the TC10 test suite to evaluate the rotation invariance of the CMNEP descriptor, and TC12 (Outex_TC12_000 and Outex_TC12_001) to test its rotation and illumination invariance.

To evaluate texture classification performance on the Outex database, we conduct a set of experiments comparing the sub-features and fused features of the proposed CMNEP and CLBP. Figures 6, 7, and 8 show the classification accuracies of different sub-features (S and M) and fused features (S/C, M/C, S/C_M, M/C_S, and S/M/C) for CMNEP and CLBP with (R, P) = (3, 24). For readability, blue and orange bars represent the CMNEP and CLBP descriptors, respectively.

All sub-features and fused features of CMNEP outperform those of CLBP, which we attribute to the proposed multi-scale threshold integration encoding strategy. For example, CMNEP_S and CLBP_S have the same dimension, yet CMNEP_S improves on the base CLBP_S by 1.04%, 3.12%, and 6.96% on TC10, TC12_000, and TC12_001, respectively. The results for CMNEP_M and CLBP_M lead to similar conclusions.

Moreover, the fused features of CMNEP also yield better results than those of CLBP. As shown in Fig. 6, CMNEP_S/C performs better than CLBP_S/C on TC10, and CMNEP_S/C_M significantly outperforms CLBP_S/C_M. In particular, CMNEP_S/M/C achieves the best result of 99.71% on TC10, which is 0.78% higher than CLBP_S/M/C. Similarly, as shown in Figs. 7 and 8, the same conclusions hold for the other types of fused features on TC12_000 and TC12_001.

These results indicate that the proposed multi-scale threshold integration encoding strategy helps the binary pattern capture multi-scale texture information through its encoding thresholds, which enhances the texture feature representation and boosts robustness against image rotation and illumination variation. Notably, the strategy could readily be extended to more general binary pattern methods.

To further verify the superiority of the proposed CMNEP descriptor, Table 2 reports the classification accuracies of CMNEP and other competitors. As shown in Table 2, CMNEP achieves the best performance on both TC10 (99.71%) and TC12 (98.63% for TC12_000 and 98.61% for TC12_001). In detail, on TC10, CMNEP improves the classification accuracy considerably over classical LBP variants: it outperforms the baseline LBP by 4.64%, and CLBP and CLBC by 0.78% and 1.93%, respectively. It also performs favorably against many state-of-the-art texture descriptors; for instance, the best classification result of CMNEP is 0.33%, 0.13%, and 0.31% higher than those of SALBP, FbLBP, and CLSP, respectively.

On TC12, CMNEP also consistently outperforms the other competitors. On TC12_000, CMNEP obtains the best classification accuracy (98.63%), an improvement of 13.59%, 3.31%, 4.63%, and 1.80% over LBP, CLBP, CLBC, and CRLBP, respectively. It also outperforms the recent SALBP, FbLBP, CLSP, and CMPE by 1.69%, 3.88%, 0.32%, and 0.37%, respectively. Similar findings hold for TC12_001, where CMNEP reaches the best result of 98.61%, clearly surpassing traditional LBP variants such as LBP, CLBP, and CLBC. It also outperforms the recent FbLBP by 1.83%, CLSP by 1.30%, and CMPE by 0.69%. These results indicate that CMNEP is robust to both rotation and illumination variations, and that the proposed multi-scale threshold integration encoding strategy plays an essential role in extracting robust and discriminative texture information.

4.2 Experimental evaluation on UIUC database

The UIUC database consists of 25 classes, each with 40 texture samples at a resolution of \(640 \times 480\). The texture images are captured at different scales and viewpoints. In this experiment, we randomly choose 20, 15, 10, or 5 texture images per class for training, and the remaining images are used for testing. The classification results are reported over 50 random training/testing splits.
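The random-split protocol can be sketched in Python as follows. We assume NumPy, the \(\chi^{2}\) nearest neighbor classifier of Sect. 3.5, and that the reported accuracy is the mean over the 50 splits (the text states only that results are reported over 50 splits). The function names and the default seed are hypothetical, and the per-sample loop favors clarity over speed.

```python
import numpy as np

def chi2(h_s, h_t, eps=1e-12):
    """Chi-square distance between two 1-D histograms."""
    return float(np.sum((h_s - h_t) ** 2 / (h_s + h_t + eps)))

def per_class_split(labels, n_train, rng):
    """Randomly pick n_train samples of every class for training; the rest are for testing."""
    train, test = [], []
    for c in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == c))
        train.extend(idx[:n_train])
        test.extend(idx[n_train:])
    return np.array(train), np.array(test)

def mean_accuracy(hists, labels, n_train, n_splits=50, seed=0):
    """ASSUMED protocol: average chi-square 1-NN accuracy over random per-class splits."""
    hists = np.asarray(hists, dtype=np.float64)
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    accs = []
    for _ in range(n_splits):
        tr, te = per_class_split(labels, n_train, rng)
        correct = 0
        for j in te:
            d = [chi2(hists[j], hists[k]) for k in tr]
            correct += labels[tr[int(np.argmin(d))]] == labels[j]
        accs.append(correct / len(te))
    return float(np.mean(accs))
```

For UIUC, `per_class_split(labels, 20, rng)` with 25 classes of 40 images yields 500 training and 500 test indices per split.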

To verify the effectiveness of the proposed CMNEP in improving texture classification accuracy, we first compare it with the base CLBP on the UIUC database. Figure 9 shows the classification results of different sub-features and fused features of CMNEP and CLBP with respect to the number of training samples.

The classification accuracies of both CMNEP and CLBP increase monotonically with the number of training samples, and the sub-features and fused features of CMNEP perform much better than those of CLBP. Specifically, CMNEP_S and CLBP_S have the same feature dimension (26 bins with (R, P) = (3, 24)), but CMNEP_S outperforms CLBP_S by 11.98%, 12.56%, 12.84%, and 13.21% with 20, 15, 10, and 5 training samples, respectively. Similarly, CMNEP_M outperforms CLBP_M for every number of training samples.

Moreover, the fused features of CMNEP consistently outperform those of CLBP. For example, CMNEP_S/C_M provides improvements of 7.98%, 6.64%, 10.27%, and 13.12% over CLBP_S/C_M with 20, 15, 10, and 5 training samples, respectively. As shown in Fig. 9g, CMNEP_S/M/C reaches the best classification result of 95.69% with 20 training samples, 4.50% higher than CLBP_S/M/C. Similar conclusions hold for the other fused features. CMNEP always outperforms the base CLBP, which shows that the proposed multi-scale threshold integration encoding strategy is effective in enhancing classification accuracy. We attribute this to the strategy introducing multi-scale local texture information into the binary pattern threshold.

To further highlight the performance of the proposed CMNEP, Table 3 lists the classification accuracies obtained by it and other competitors.

Compared with other LBP variants, the proposed CMNEP descriptor outperforms all competitors in terms of classification accuracy. More concretely, with 20 training images, CMNEP improves accuracy by 31.64%, 14.21%, and 4.50% over LBP, LTP, and CLBP, respectively. Compared with successful CLBP-based methods, it also provides competitive improvements; for example, it outperforms LQC_C(6)N(4), AECLBP, EMCLBP, and CDLF_S/M/C_AHA by 2.52%, 3.51%, 2.7%, and 1.92%, respectively.

Its classification accuracy is also higher than those of recent LBP variants, such as FbLBP, CJLBP, CLSP, and CMPE. For example, the best classification accuracy of CMNEP is 95.69% with 20 training samples, higher than the best result of FbLBP (94.17%) with the same number of training samples. CMNEP also outperforms CJLBP by 0.56%, and even outperforms CLSP (published in 2020) and CMPE (published in 2021) by 1.19% and 0.80%, respectively. These results verify the effectiveness of the proposed multi-scale threshold integration encoding strategy in improving texture classification accuracy.

The proposed CMNEP also achieves impressive classification results when the number of training images is reduced to 15, 10, and 5. For example, compared with the base CLBP, CMNEP achieves improvements of 5.21%, 6.20%, and 7.46% with 15, 10, and 5 training images per class, respectively. Notably, the advantage of CMNEP is most pronounced with few training samples: with 5 training samples, it improves on CLBC, CRLBP (\(\alpha = 1\)), AECLBP, and CLTP by 5.76%, 3.61%, 5.82%, and 2.54%, respectively.

Furthermore, CMNEP performs favorably against many recent binary pattern methods when the number of training images is reduced to 15, 10, and 5. For example, it outperforms CLSP (published in 2020) by 1.61%, 2.14%, and 3.99%, and CMPE (published in 2021) by 0.91%, 1.97%, and 2.78%, with 15, 10, and 5 training images, respectively. Similar conclusions hold for the other competitors.

In summary, the proposed CMNEP descriptor significantly improves classification performance on the UIUC database. This demonstrates that the proposed multi-scale threshold integration encoding strategy substantially strengthens both the multi-scale representation and the discriminative power of the binary pattern method.

4.3 Experimental evaluation on the UMD database

To further investigate the effectiveness of the CMNEP descriptor, we conduct comparative experiments on the UMD database, which has 25 classes with 40 samples each. Each texture image has a high resolution of \(1280 \times 960\) and exhibits several challenges, such as rotation, scale, viewpoint, and illumination changes. For every class, we randomly select 20, 15, 10, or 5 images for training and use the remaining images for testing. Table 4 reports the classification accuracies of the CMNEP and the other competitors.
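The per-class random split described above can be sketched as follows. This section does not name the classifier or the number of random splits, so both are assumptions: the sketch uses a 1-nearest-neighbor classifier with the chi-square distance between histograms (a common choice for CLBP-style descriptors) and averages accuracy over `n_runs` random splits. All function names are hypothetical.

```python
import numpy as np


def chi_square_distance(h1: np.ndarray, h2: np.ndarray, eps: float = 1e-10) -> float:
    """Chi-square distance between two normalized histograms (assumed metric)."""
    return float(np.sum((h1 - h2) ** 2 / (h1 + h2 + eps)))


def random_split(labels: np.ndarray, n_train: int, rng: np.random.Generator):
    """Randomly pick n_train samples per class for training; the rest are for testing."""
    train_idx, test_idx = [], []
    for c in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == c))
        train_idx.extend(idx[:n_train])
        test_idx.extend(idx[n_train:])
    return np.array(train_idx), np.array(test_idx)


def nn_accuracy(features, labels, train_idx, test_idx) -> float:
    """Accuracy (%) of a 1-nearest-neighbor classifier (assumed classifier)."""
    correct = 0
    for t in test_idx:
        dists = [chi_square_distance(features[t], features[r]) for r in train_idx]
        predicted = labels[train_idx[int(np.argmin(dists))]]
        correct += int(predicted == labels[t])
    return 100.0 * correct / len(test_idx)


def evaluate(features, labels, n_train: int, n_runs: int = 10, seed: int = 0) -> float:
    """Mean accuracy over n_runs independent random splits (n_runs is an assumption)."""
    rng = np.random.default_rng(seed)
    scores = []
    for _ in range(n_runs):
        train_idx, test_idx = random_split(labels, n_train, rng)
        scores.append(nn_accuracy(features, labels, train_idx, test_idx))
    return float(np.mean(scores))
```

For UMD, `labels` would hold 25 classes with 40 images each, and `evaluate` would be called with `n_train` set to 20, 15, 10, and 5 in turn.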

First, the CMNEP descriptor consistently achieves better classification performance than the other texture descriptors in the comparison. For example, the CMNEP reaches the best classification accuracy of 98.96% with 20 training images, an improvement of 12.71%, 8.53%, and 5.80% over the best accuracies of the traditional LBP, LTP, and CLBP, respectively.

Second, compared with several successful CLBP-based descriptors, the proposed CMNEP also yields significant accuracy improvements. For example, it outperforms AECLBP by 2.18%, 1.79%, 3.21%, and 3.28%, and CLBPCPS by 5.46%, 5.72%, 6.33%, and 6.76%, for 20, 15, 10, and 5 training samples, respectively. We attribute this performance mainly to the multi-scale threshold integration encoding strategy.

Third, compared with several recent texture descriptors, the proposed CMNEP descriptor also obtains impressive classification results. For example, it outperforms CLSP (published in 2020) by 1.12%, 1.56%, 2.13%, and 4.10%, and CMPE by 0.12%, 0.46%, 0.65%, and 1.81%, for 20, 15, 10, and 5 training samples, respectively. These results show that the CMNEP descriptor is more discriminative than these state-of-the-art texture descriptors, and that the multi-scale threshold integration encoding strategy improves the multi-scale representation and, in turn, texture classification performance.

Fourth, the proposed CMNEP descriptor achieves the best classification results for every number of training images per class, and its advantage is largest when few training images are available. For example, the CMNEP achieves the best classification accuracy of 95.07% with only 5 training images, which is 8.31% higher than that of the original CLBP. It also yields improvements of 3.07% and 1.81% over the recent CLSP and CMPE, respectively. Similar findings hold for the other representation methods. The main reason is that the CMNEP extracts multi-scale details of the local neighborhood while keeping its feature dimension competitive.
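To make the feature-dimension argument concrete, consider the baseline CLBP_S/M/C joint histogram with the rotation-invariant uniform (riu2) mapping of Guo et al. [9]. This section does not give the CMNEP's own dimension, and the \((P, R)\) settings \((8, 1)\), \((16, 2)\), \((24, 3)\) below are assumed as commonly used choices. With riu2, the sign and magnitude codes each take \(P + 2\) values and the center code takes 2 values, so

$$D(P) = (P+2)^2 \times 2,$$

$$D(8) = 200, \quad D(16) = 648, \quad D(24) = 1352,$$

$$D(8) + D(16) + D(24) = 2200.$$

Naively concatenating the three scales therefore makes the descriptor 11 times as long as the single-scale \(P = 8\) version, which is the kind of growth that integrating scales at the thresholding stage is meant to avoid.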

In summary, the superior performance of the proposed CMNEP demonstrates that the multi-scale threshold integration encoding strategy adds multi-scale, discriminative local texture information to the descriptor without increasing its feature dimension. The proposed strategy can also be extended to other binary pattern methods.
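Since the strategy is presented as applicable to other binary pattern methods, a reference point helps. The sketch below is not the CMNEP: this section does not specify the multi-scale threshold rules. It is a minimal single-scale version of the CLBP_S/M/C baseline of Guo et al. [9], whose sign, magnitude, and center components the LMSP, LMMP, and LMCP appear to generalize. Simplifying assumptions: 8 neighbors on the 3×3 grid without interpolation, the magnitude threshold set to the mean magnitude over the image, and the center threshold set to the mean image intensity. The names `OFFSETS`, `riu2`, and `clbp_smc_histogram` are hypothetical.

```python
import numpy as np

# 8 neighbors at radius 1 on the square grid (no interpolation), in circular order.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def riu2(bits: np.ndarray) -> np.ndarray:
    """Rotation-invariant uniform mapping for bits of shape (P, H, W) with 0/1 values."""
    p = bits.shape[0]
    # Number of circular 0/1 transitions between consecutive neighbors.
    transitions = np.sum(bits != np.roll(bits, 1, axis=0), axis=0)
    ones = np.sum(bits, axis=0)
    # Uniform patterns map to their count of ones; all others share the code P + 1.
    return np.where(transitions <= 2, ones, p + 1)


def clbp_smc_histogram(image: np.ndarray) -> np.ndarray:
    """L1-normalized joint CLBP_S/M/C (riu2, P=8, R=1) histogram of a grayscale image."""
    img = image.astype(np.float64)
    h, w = img.shape
    center = img[1:h - 1, 1:w - 1]
    # Neighbor differences d_p = g_p - g_c, stacked with shape (8, H-2, W-2).
    diffs = np.stack([img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx] - center
                      for dy, dx in OFFSETS])
    mags = np.abs(diffs)
    s_bits = (diffs >= 0).astype(np.int64)            # sign component
    m_bits = (mags >= mags.mean()).astype(np.int64)   # magnitude vs. mean magnitude
    c_code = (center >= img.mean()).astype(np.int64)  # center vs. mean intensity
    s_code, m_code = riu2(s_bits), riu2(m_bits)
    p = len(OFFSETS)
    # Joint bin index over (P+2) x (P+2) x 2 bins.
    joint = (s_code * (p + 2) + m_code) * 2 + c_code
    n_bins = (p + 2) * (p + 2) * 2
    hist = np.bincount(joint.ravel(), minlength=n_bins).astype(np.float64)
    return hist / hist.sum()
```

For example, `clbp_smc_histogram(img)` on any 2-D grayscale array returns a 200-bin vector whose entries sum to 1, which could serve as the feature vector in a nearest-neighbor evaluation.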

5 Conclusion

This paper presents a simple and efficient multi-scale texture descriptor, the completed multi-scale neighborhood encoding pattern (CMNEP), for visual texture classification. The proposed CMNEP consists of the local multi-scale center pattern (LMCP), the local multi-scale sign pattern (LMSP), and the local multi-scale magnitude pattern (LMMP). The LMCP captures the texture information of the central region by applying thresholds at four different scales. Based on the proposed multi-scale threshold integration encoding strategy, the LMSP and LMMP represent the multi-scale texture structure of the local neighborhood. To obtain a complementary representation, the three sub-patterns are jointly fused to form the CMNEP descriptor. Experimental results on three popular databases show that the CMNEP is highly discriminative and robust to illumination, rotation, viewpoint, and scale changes. In future work, we will investigate an adaptive scale selection strategy for visual texture classification.

References

1. Nanni, L., Lumini, A., Brahnam, S.: Local binary patterns variants as texture descriptors for medical image analysis. Artif. Intell. Med. 49(2), 117–125 (2010)

2. Duque, J.C., Patino, J.E., Ruiz, L.A., Pardo-Pascual, J.E.: Measuring intra-urban poverty using land cover and texture metrics derived from remote sensing data. Landsc. Urban Plan. 135, 11–21 (2015)

3. Wood, E.M., Pidgeon, A.M., Radeloff, V.C., Keuler, N.S.: Image texture as a remotely sensed measure of vegetation structure. Remote Sens. Environ. 121, 516–526 (2012)

4. Chakraborty, S., Singh, S.K., Chakraborty, P.: Local directional gradient pattern: a local descriptor for face recognition. Multimed. Tools Appl. 76, 1201–1216 (2017)

5. Vaidya, S.P.: Fingerprint-based robust medical image watermarking in hybrid transform. Vis. Comput. (2022). https://doi.org/10.1007/s00371-022-02406-4

6. Guo, Z., Shuai, H., Liu, G., et al.: Multi-level feature fusion pyramid network for object detection. Vis. Comput. (2022). https://doi.org/10.1007/s00371-022-02589-w

7. Tuceryan, M., Jain, A.K., et al.: Texture analysis. In: Handbook of Pattern Recognition and Computer Vision, vol. 2, pp. 207–248 (1993)

8. Ojala, T., Pietikäinen, M., Mäenpää, T.: Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 24(7), 971–987 (2002)

9. Guo, Z., Zhang, L., Zhang, D.: A completed modeling of local binary pattern operator for texture classification. IEEE Trans. Image Process. 19(6), 1657–1663 (2010)

10. Li, Z., Liu, G., Yang, Y., You, J.: Scale- and rotation-invariant local binary pattern using scale-adaptive texton and sub uniform-based circular shift. IEEE Trans. Image Process. 21(4), 2130–2140 (2012)

11. Davarzani, R., Mozaffari, S., Yaghmaie, K.: Scale- and rotation-invariant texture description with improved local binary pattern features. Signal Process. 111, 274–293 (2015)

12. Guo, Z., Wang, X., Zhou, J., et al.: Robust texture image representation by scale selective local binary patterns. IEEE Trans. Image Process. 25(2), 687–699 (2015)

13. Hafiane, A., Palaniappan, K., Seetharaman, G.: Joint adaptive median binary patterns for texture classification. Pattern Recognit. 48, 2609–2620 (2015)

14. Dong, Y., Feng, J., Yang, C., et al.: Multi-scale counting and difference representation for texture classification. Vis. Comput. 34(10), 1315–1324 (2018)

15. Wu, X., Sun, J.: Joint-scale LBP: a new feature descriptor for texture classification. Vis. Comput. 33(3), 317–329 (2017)

16. Hu, Y., Long, Z., AlRegib, G.: Scale selective extended local binary pattern for texture classification. In: 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, pp. 1413–1417 (2017)

17. Dong, Y., Wu, H., Li, X., et al.: Multiscale symmetric dense micro-block difference for texture classification. IEEE Trans. Circuits Syst. Video Technol. 29(12), 3583–3594 (2018)

18. Pan, Z., Wu, X., Li, Z.: Scale-adaptive local binary pattern for texture classification. Multimed. Tools Appl. 79, 5477–5500 (2020)

19. Zhao, Y., Huang, D.S., Jia, W.: Completed local binary count for rotation invariant texture classification. IEEE Trans. Image Process. 21(10), 4492–4497 (2012)

20. Chen, C., Zhang, B., Su, H., et al.: Land-use scene classification using multi-scale completed local binary patterns. Signal Image Video Process. 10, 745–752 (2016)

21. Dong, Y., Wang, T., Yang, C., et al.: Locally directional and extremal pattern for texture classification. IEEE Access 99, 87931–87941 (2019)

22. Xu, X., Li, Y., Wu, Q.M.J.: A multiscale hierarchical threshold-based completed local entropy binary pattern for texture classification. Cogn. Comput. 12(1), 224–237 (2020)

23. Ojala, T., Mäenpää, T., Pietikäinen, M., Viertola, J., Kyllönen, J., Huovinen, S.: Outex—new framework for empirical evaluation of texture analysis algorithms. In: Proceedings of the International Conference on Pattern Recognition (ICPR), pp. 701–706 (2002)

24. Xu, Y., Yang, X., Ling, H., et al.: A new texture descriptor using multifractal analysis in multi-orientation wavelet pyramid. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 161–168 (2010)

25. Lazebnik, S., Schmid, C., Ponce, J.: A sparse texture representation using local affine regions. IEEE Trans. Pattern Anal. Mach. Intell. 27(8), 1265–1278 (2005)

26. Liu, L., Long, Y., Fieguth, P.W., Lao, S., Zhao, G.: BRINT: binary rotation invariant and noise tolerant texture classification. IEEE Trans. Image Process. 23(7), 3071–3084 (2014)

27. Tan, X., Triggs, B.: Enhanced local texture feature sets for face recognition under difficult lighting conditions. IEEE Trans. Image Process. 19(6), 1635–1650 (2010)

28. Song, K., Yan, Y., Zhao, Y., et al.: Adjacent evaluation of local binary pattern for texture classification. J. Vis. Commun. Image Represent. 33, 323–339 (2015)

29. Zhang, Z., Liu, S., Mei, X., et al.: Learning completed discriminative local features for texture classification. Pattern Recognit. 67, 263–275 (2017)

30. Liu, L., Lao, S., Fieguth, P.W., et al.: Median robust extended local binary pattern for texture classification. IEEE Trans. Image Process. 25(3), 1368–1381 (2016)

31. Zhao, Y., Jia, W., Hu, R.X., et al.: Completed robust local binary pattern for texture classification. Neurocomputing 106, 68–76 (2013)

32. Nguyen, V.D., Nguyen, D.D., Nguyen, T.T., et al.: Support local pattern and its application to disparity improvement and texture classification. IEEE Trans. Circuits Syst. Video Technol. 24(2), 263–276 (2013)

33. Wang, K., Bichot, C.E., Li, Y., et al.: Local binary circumferential and radial derivative pattern for texture classification. Pattern Recognit. 67, 213–229 (2017)

34. Hao, Y., Li, S., Mo, H., et al.: Affine-gradient based local binary pattern descriptor for texture classification. In: International Conference on Image and Graphics (ICIG), pp. 199–210. Springer, Cham (2017)

35. Song, T., Xin, L., Gao, C., et al.: Grayscale-inversion and rotation invariant texture description using sorted local gradient pattern. IEEE Signal Process. Lett. 25(5), 625–629 (2018)

36. Rassem, T.H., et al.: Completed local ternary pattern for rotation invariant texture classification. Sci. World J., 1–10 (2014)

37. Pan, Z., Li, Z., Fan, H., et al.: Feature based local binary pattern for rotation invariant texture classification. Expert Syst. Appl. 88, 238–248 (2017)

38. Xu, X., Li, Y., Wu, Q.M.J.: A completed local shrinkage pattern for texture classification. Appl. Soft Comput. 97, 106830 (2020)

39. Pan, Z., Wu, X., Li, Z.: Central pixel selection strategy based on local gray-value distribution by using gradient information to enhance LBP for texture classification. Expert Syst. Appl. 120, 319–334 (2019)

40. Zhao, Y., Wang, R.G., Wang, W.M., et al.: Local quantization code histogram for texture classification. Neurocomputing 207, 354–364 (2016)

41. Shakoor, M.H., Boostani, R.: Extended mapping local binary pattern operator for texture classification. Int. J. Pattern Recognit. Artif. Intell. 31(6), 1750019 (2017)

42. Xu, X., Li, Y., Wu, Q.M.J.: A compact multi-pattern encoding descriptor for texture classification. Digit. Signal Process. 114, 103081 (2021)

Acknowledgments

This work was funded by the National Key Research and Development Program of China (Grant No. 2016YFF0102806), the National Natural Science Foundation of China (Grant No. 51809056), and the Natural Science Foundation of Heilongjiang Province, China (Grant No. F2017004).


Ethics declarations

Conflict of interest

All authors certify that they have no affiliations with or involvement in any organization or entity with any financial interest or non-financial interest in the subject matter or materials discussed in this manuscript.


About this article

Cite this article

Li, B., Li, Y. & Wu, Q.M.J. A multi-scale threshold integration encoding strategy for texture classification. Vis Comput 39, 5747–5761 (2023). https://doi.org/10.1007/s00371-022-02693-x


DOI: https://doi.org/10.1007/s00371-022-02693-x