Abstract

This paper considers generalized nonlinear delay differential equations of fractional variable order. A novel shifted Jacobi operational matrix technique is introduced and developed for solving a class of multi-term variable-order fractional delay differential equations by reducing the main problem to an algebraic system of equations that can be solved numerically. Comprehensive numerical experiments demonstrate the efficiency, generality, accuracy and flexibility of the proposed scheme. The numerical results are compared with those of other existing methods, namely the fractional Adams method (FAM), the new predictor–corrector method (NPCM), a new approach, the Adams–Bashforth–Moulton algorithm and the L1 predictor–corrector method (L1-PCM). These comparisons, together with comparisons of the current method (NSJOM) against the exact solutions, indicate the efficiency and validity of the method. The procedure is easy to implement, and the technique can be regarded as a generalization of many numerical schemes. Furthermore, the error and its bound are estimated.



1 Introduction

The analysis and applications of fractional calculus have been an active and fast-growing research area over the last three decades. Fractional calculus has become an important tool owing to its wide applications in various scientific disciplines, such as physics, regular variation in thermodynamics, blood flow phenomena, biophysics, electrodynamics of complex media, capacitor theory, chemistry, polymer rheology, dynamical systems and fitting of experimental data ([1,2,3, 7] and references therein). The need for appropriate and efficient methods to solve fractional differential equations (FDEs) has drawn growing interest from researchers in this field. Solving nonlinear FDEs is considerably more difficult than solving linear ones. In this regard, analytical methods such as the homotopy perturbation method (HPM), the homotopy analysis method (HAM), new iterative methods and the Adomian decomposition method have been widely used in the recent literature [8, 9, 11,12,13,14, 53]. On the other hand, researchers such as Diethelm et al. [2, 16] have developed standard numerical methods, such as Adams–Bashforth methods, for the numerical integration of FDEs. Lakestani et al. [54] presented the construction of an operational matrix of fractional derivatives using B-spline functions, Eslahchi and Dehghan [56] proposed the application of Taylor series to obtain the orthogonal operational matrix, and Kayedi-Bardeh et al. [57] provided a method for obtaining the operational matrix of the fractional Jacobi functions, together with applications.

Including a delay in differential equations of fractional order opens new perspectives, especially in bioengineering [6], because fractional derivatives improve the understanding of the dynamics that occur in biological tissues [4, 6].

In mathematics, delay differential equations (DDEs) are a type of differential equation in which the derivative of the unknown function at a certain time is given in terms of the values of the function at previous times. DDEs are also called time-delay systems, systems with aftereffect or dead time, hereditary systems, equations with deviating argument, or differential–difference equations [17].

Fractional delay differential equations (FDDEs) differ from ordinary differential equations in that the derivative at any time depends on the solution (and, in the case of neutral equations, on the derivative) at prior times. Many natural phenomena can be modeled by fractional-order delay differential equations [7]. Fractional-order differential equations have many applications in scientific disciplines such as economics, electrodynamics, biology, control, finance, chemistry and physics; for more detail, we refer the interested reader to [17,18,19,20,21,22,23].

In recent years, Morgado et al. [24] analyzed FDDEs and their numerical approximation. Čermák et al. [25] analyzed stability regions of FDDE systems. Finite-time stability of fractional-order time-delay systems by means of Gronwall's approach was analyzed by Lazarević and Spasić [26]. Abbaszadeh and Dehghan [55] presented numerical and analytical investigations of the neutral delay fractional damped diffusion-wave equation based on the stabilized interpolating element free Galerkin (IEFG) method. Daftardar-Gejji et al. [27] proposed a new predictor–corrector method for solving FDEs numerically, based on the new iterative method developed by Daftardar-Gejji and Jafari [12]. Bhalekar and Daftardar-Gejji [10] proposed a predictor–corrector scheme for solving nonlinear delay differential equations of fractional order. In [5], the author generalized the Adams–Bashforth–Moulton algorithm introduced in [2, 16, 28] to delay FDEs. Daftardar-Gejji et al. [6] presented a new approach to solving nonlinear fractional-order differential equations involving delay. Ghasemi et al. [17] employed the reproducing kernel Hilbert space method to solve nonlinear delay differential equations of fractional order. Jhinga and Daftardar-Gejji [4] presented a new numerical method for solving FDDEs.

Moreover, spectral methods, which are built on sets of orthogonal polynomials, are used to solve differential equations of fractional order. Among the best known of these polynomials are the classical Jacobi polynomials, shown as follows:

These polynomials have been used extensively in mathematical analysis and practical applications because their parameters \(\alpha \) and \(\beta \) give flexibility in obtaining numerical solutions. It is therefore beneficial to carry out a systematic study via Jacobi polynomials with general indexes \( \alpha \) and \( \beta \) on the time interval \( t\in [0,\,T] \); this is one of the aims and novelties of [15]. Furthermore, researchers' interest in variable-order fractional differential equations has recently increased [29, 30], and many methods have been used to find the numerical solutions of these equations [31,32,33].

The aim of this paper is to generalize the use of orthogonal polynomials as the basis of the solution. This technique was introduced in [15]; here we present a new shifted Jacobi operational matrix for the variable-order fractional derivative to solve multi-term variable-order FDDEs of the following form:

where \( \alpha _{j} \in {\mathbb {R}}\,(j=1,2,\ldots ,n+1) \), \( \alpha _{n+1}\ne 0 \), \( T>0 \), and \( D^{\eta _{j}(t)} w(t)\,(j=1,2,\ldots ,n) \) are variable-order fractional derivatives in the Caputo sense.

Note 1

If \( \eta _{j}(t)\,(j=1,2,\ldots ,n) \) are constants, then Eq. (1) reduces to the following constant-order form:

Note also that many other polynomial families, such as Legendre, Gegenbauer, all four kinds of Chebyshev, Lucas, Vieta–Lucas and Fibonacci polynomials, can be used in the proposed technique.

The numerical results obtained for this equation reveal that the present method is highly accurate. Comparing the numerical experiments of this method with those of other available methods shows that the proposed scheme is capable of solving variable-order fractional delay differential equations and is a powerful, effective and practical numerical technique.

2 Fundamentals and preliminaries

In the first part of this section, we review some basic and important properties of fractional calculus. We then recall some important properties of the Jacobi polynomials that help in developing the suggested technique. To avoid duplicating material available in related articles, we include only what is needed; for more detail, we refer the interested reader to [34, 37, 38].

2.1 The fractional derivative and integral

There are several definitions of fractional derivatives, the three most common being the Riemann–Liouville, Grünwald–Letnikov and Caputo definitions. This article is based on the Caputo definition because, as is well known, only in the Caputo sense do the initial conditions take the same form as for integer-order differential equations.

Definition 2.1

The left- and right-sided Caputo fractional derivatives of order \(\eta \,(n-1<\eta \le n\,)\) are defined as

that

and

where \(\lceil . \rceil \) is the ceiling function and \(M_0= \lbrace 0, 1, 2, \cdots \rbrace .\)

Also

where \( \gamma \) and \( \delta \) are constants.
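For readers of this text version, the standard forms behind Definition 2.1 are recalled below for \( n-1<\eta <n \) (left-sided operator with lower terminal 0, right-sided operator with upper terminal T), together with the power rule and linearity. The notation is ours and is not a transcription of the paper's numbered equations.

```latex
\[
{}^{C}D_{0+}^{\eta} w(t) = \frac{1}{\Gamma(n-\eta)} \int_{0}^{t} (t-s)^{n-\eta-1}\, w^{(n)}(s)\, ds,
\qquad
{}^{C}D_{T-}^{\eta} w(t) = \frac{(-1)^{n}}{\Gamma(n-\eta)} \int_{t}^{T} (s-t)^{n-\eta-1}\, w^{(n)}(s)\, ds,
\]
\[
{}^{C}D_{0+}^{\eta}\, t^{k} =
\begin{cases}
0, & k \in M_0,\ k < \lceil \eta \rceil, \\[4pt]
\dfrac{\Gamma(k+1)}{\Gamma(k+1-\eta)}\, t^{k-\eta}, & k \in M_0,\ k \ge \lceil \eta \rceil,
\end{cases}
\]
\[
{}^{C}D_{0+}^{\eta}\bigl(\gamma f(t) + \delta g(t)\bigr)
= \gamma\, {}^{C}D_{0+}^{\eta} f(t) + \delta\, {}^{C}D_{0+}^{\eta} g(t).
\]
```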

Definition 2.2

The Caputo fractional derivatives of variable-order \(\eta (t)\) for \( w(t) \in C^m[0,\,T] \) are given respectively as [30, 35]:

Taking the starting time as 0 and \( 0< \eta (t)<1 \), we have:

Also, if a and b are constants, then

According to Eq. (6), we have:

On the other hand
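The action of the variable-order operator on monomials is what the operational matrix of Sect. 4 ultimately relies on, so it is worth checking numerically. The Python sketch below assumes a common form of Definition 2.2 in which, for \( 0<\eta (t)<1 \) and lower terminal 0, the order is frozen at the evaluation time t in both the kernel \( (t-s)^{-\eta (t)} \) and the factor \( 1/\Gamma (1-\eta (t)) \); under that assumption \( D^{\eta (t)} t^{k} = \Gamma (k+1)/\Gamma (k+1-\eta (t))\, t^{k-\eta (t)} \) for \( k\ge 1 \) and 0 for \( k=0 \). The closed form is compared with direct quadrature; the function names are illustrative, not taken from the paper.

```python
import math
import numpy as np


def caputo_vo_monomial(k, eta, t):
    """Closed form of D^{eta(t)} t^k at t > 0, assuming 0 < eta(t) < 1 and lower terminal 0."""
    e = eta(t)
    if k == 0:
        return 0.0  # the Caputo derivative of a constant vanishes
    return math.gamma(k + 1) / math.gamma(k + 1 - e) * t ** (k - e)


def caputo_vo_quadrature(dw, eta, t, n=40):
    """(1/Gamma(1-e)) * integral_0^t (t-s)^(-e) w'(s) ds with e = eta(t) frozen at t.

    Substituting u = (t-s)^(1-e) removes the weak singularity at s = t, so
    Gauss-Legendre quadrature on the resulting smooth integrand converges quickly.
    """
    e = eta(t)
    p = 1.0 / (1.0 - e)
    x, wts = np.polynomial.legendre.leggauss(n)
    upper = t ** (1.0 - e)
    u = 0.5 * upper * (x + 1.0)   # Gauss nodes mapped from [-1, 1] to [0, upper]
    s = t - u ** p                # invert the substitution
    integral = 0.5 * upper * np.sum(wts * dw(s)) * p
    return integral / math.gamma(1.0 - e)


eta = lambda t: 0.6 * t           # the variable order used in Example 6.4
for t in (0.5, 1.0):
    closed = caputo_vo_monomial(2, eta, t)
    numeric = caputo_vo_quadrature(lambda s: 2.0 * s, eta, t)   # w(t) = t^2, w'(s) = 2s
    print(f"t = {t}: closed form {closed:.10f}, quadrature {numeric:.10f}")
```

The substitution is a design choice: it turns the weakly singular Caputo integral into a smooth one, so a plain Gauss-Legendre rule suffices without special weight functions.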

2.2 Shifted Jacobi polynomials and their properties

Let \( P_n^{(\alpha ,\beta )}(z) \), \( \alpha>-1 \), \( \beta >-1 \), denote the Jacobi polynomial of degree n in z, defined on \( [-1,\,1] \).

Like all classical orthogonal polynomials, the \( P_n^{(\alpha ,\beta )}(z)\) form an orthogonal system with respect to the weight function \( \omega ^{(\alpha ,\beta )}(z)\,=\,(1-z)^{\alpha }\,(1+z)^{\beta } \), that is [34]:

where \( \delta _{i,j} \) is the Kronecker delta and

also, the Jacobi polynomial of degree i has the following analytical form [15]

With the change of variable \( z\, =\,\dfrac{2t}{T}-1 \), the polynomials of Eq. (12) can be used on the interval \( t\in [0,T] \). The resulting shifted Jacobi polynomials \( P_i^{(\alpha ,\beta )}(\dfrac{2t}{T}-1)\) are denoted by \( P_{T,i}^{(\alpha ,\beta )}(t) \). They form an orthogonal system with respect to the weight function \( \omega _{T}^{(\alpha ,\beta )}(t)\,=\,(T-t)^{\alpha }\,t^{\beta } \) on \( [0,\,T] \), with the following orthogonality property:

where \( \delta _{i,j} \) is the Kronecker delta and

also, the shifted Jacobi polynomial of degree i has the following analytical form [15]

and its values at the endpoints are given by

The polynomials \( P_{T,i}^{(\alpha ,\beta )}(t) \) can also be generated by a recurrence relation; we refer the interested reader to [15].
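For reference, assuming the standard convention in which \( P_{T,i}^{(\alpha ,\beta )} \) is orthogonal on \( [0,T] \) with respect to \( (T-t)^{\alpha } t^{\beta } \), the shifted polynomials have the explicit power form, endpoint values and squared norms below. These are stated as standard facts under that assumption rather than as a transcription of the paper's Eq. (14); for \( i=0 \) read \( P_{T,0}^{(\alpha ,\beta )}=1 \), and in \( h_{T,0} \) read \( (\alpha +\beta +1)\Gamma (\alpha +\beta +1) \) as \( \Gamma (\alpha +\beta +2) \).

```latex
\[
P_{T,i}^{(\alpha,\beta)}(t) = \sum_{k=0}^{i} (-1)^{i-k}
\frac{\Gamma(i+\beta+1)\,\Gamma(i+k+\alpha+\beta+1)}
     {\Gamma(k+\beta+1)\,\Gamma(i+\alpha+\beta+1)\,(i-k)!\,k!\,T^{k}}\; t^{k},
\]
\[
P_{T,i}^{(\alpha,\beta)}(0) = (-1)^{i}\,\frac{\Gamma(i+\beta+1)}{\Gamma(\beta+1)\, i!},
\qquad
P_{T,i}^{(\alpha,\beta)}(T) = \frac{\Gamma(i+\alpha+1)}{\Gamma(\alpha+1)\, i!},
\]
\[
h_{T,i} = \int_{0}^{T} \bigl(P_{T,i}^{(\alpha,\beta)}(t)\bigr)^{2} (T-t)^{\alpha}\, t^{\beta}\, dt
= \frac{T^{\alpha+\beta+1}\,\Gamma(i+\alpha+1)\,\Gamma(i+\beta+1)}
       {(2i+\alpha+\beta+1)\, i!\,\Gamma(i+\alpha+\beta+1)}.
\]
```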

Note 2

Furthermore, the shifted Jacobi polynomials include infinitely many orthogonal polynomial families as special cases, such as the shifted Gegenbauer polynomials \( G_{T,i}^{(\alpha ,\beta )}(t) \), the shifted Legendre polynomials \( L_{T,i}^{(\alpha ,\beta )}(t) \), and the shifted Chebyshev polynomials of the first, second, third and fourth kinds, \( T_{T,i}^{(\alpha ,\beta )}(t) \), \( U_{T,i}^{(\alpha ,\beta )}(t) \), \( V_{T,i}^{(\alpha ,\beta )}(t) \) and \( W_{T,i}^{(\alpha ,\beta )}(t) \), respectively. All these orthogonal polynomials are connected with \( P_{T,i}^{(\alpha ,\beta )} (t)\) through the relations:
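In the standard convention (weight \( (1-z)^{\alpha }(1+z)^{\beta } \) on \( [-1,1] \)), the classical special cases are related to the Jacobi polynomials by the textbook identities below, written with the usual normalizations \( T_i(1)=1 \), \( U_i(1)=i+1 \), \( V_i(1)=1 \), \( W_i(1)=2i+1 \) and \( C_i^{(\lambda )}(1)=\Gamma (i+2\lambda )/(i!\,\Gamma (2\lambda )) \) for the Gegenbauer polynomials. The shifted versions follow by substituting \( z=2t/T-1 \). These normalizations are our assumption and may differ from the constants used in the paper's relations.

```latex
\[
P_i^{(\alpha,\beta)}(1) = \frac{\Gamma(i+\alpha+1)}{\Gamma(\alpha+1)\, i!}, \qquad
L_i(z) = P_i^{(0,0)}(z), \qquad
T_i(z) = \frac{P_i^{(-\frac12,-\frac12)}(z)}{P_i^{(-\frac12,-\frac12)}(1)}, \qquad
U_i(z) = (i+1)\,\frac{P_i^{(\frac12,\frac12)}(z)}{P_i^{(\frac12,\frac12)}(1)},
\]
\[
V_i(z) = \frac{P_i^{(-\frac12,\frac12)}(z)}{P_i^{(-\frac12,\frac12)}(1)}, \qquad
W_i(z) = (2i+1)\,\frac{P_i^{(\frac12,-\frac12)}(z)}{P_i^{(\frac12,-\frac12)}(1)}, \qquad
C_i^{(\lambda)}(z) = \frac{\Gamma(i+2\lambda)}{i!\,\Gamma(2\lambda)}\,
\frac{P_i^{(\lambda-\frac12,\lambda-\frac12)}(z)}{P_i^{(\lambda-\frac12,\lambda-\frac12)}(1)} \quad (\lambda>0).
\]
```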

3 Function approximation by shifted Jacobi polynomials

A function w(t) that is square integrable with respect to \( \omega _{T}^{(\alpha ,\beta )}(t) \) on \( [0,\, T] \) can be expanded as follows [15, 34]:

where the coefficients \( a_i \) of the series are obtained by

Hence, we can approximate the solution by taking the first \((N+1)\) terms of the series in Eq. (15):

where \( A \,=\,[ a_0,a_1, \cdots , a_N]^T\), and \( \Phi _{T,N}(t)\,=\,[P_{T,0}^{(\alpha ,\beta )}(t),P_{T,1}^{(\alpha ,\beta )}(t),\cdots , P_{T,N}^{(\alpha ,\beta )}(t)] ^T\).
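Written out, orthogonality gives the coefficients as weighted projections, and truncation gives the approximation used throughout. This follows directly from the orthogonality relation; the symbol \( h_{T,i} \) for the squared norm is our notation.

```latex
\[
a_i = \frac{1}{h_{T,i}} \int_{0}^{T} w(t)\, P_{T,i}^{(\alpha,\beta)}(t)\, \omega_{T}^{(\alpha,\beta)}(t)\, dt,
\qquad
h_{T,i} = \int_{0}^{T} \bigl(P_{T,i}^{(\alpha,\beta)}(t)\bigr)^{2}\, \omega_{T}^{(\alpha,\beta)}(t)\, dt,
\]
\[
w(t) \approx w_N(t) = \sum_{i=0}^{N} a_i\, P_{T,i}^{(\alpha,\beta)}(t) = A^{T}\, \Phi_{T,N}(t).
\]
```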

Here, we suppose that

By Eq. (17), the vector \( \Phi _{T,N}(t)\,\) can be presented as

where \( B_{(\alpha ,\beta )} \) is a square matrix of order \( (N+1) \times (N+1) \) given as follows

for \( 0 \le i,j \le N \).
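The entries of \( B_{(\alpha ,\beta )} \) are the power-basis coefficients of the shifted Jacobi polynomials. The sketch below builds B from the explicit power form in the standard Jacobi convention, where \( P_{T,i}^{(\alpha ,\beta )} \) is orthogonal on \( [0,T] \) with respect to \( (T-t)^{\alpha } t^{\beta } \); that convention and the helper names `shifted_jacobi_matrix` and `gram_matrix` are our assumptions. Row i holds the coefficients of \( 1, t, \ldots , t^{i} \), so that \( \Phi _{T,N}(t) = B S(t) \) with \( S(t)=[1,t,\ldots ,t^{N}]^T \), and the Gram matrix confirms orthogonality numerically.

```python
import math
import numpy as np


def shifted_jacobi_matrix(N, alpha, beta, T):
    """Lower-triangular B with Phi_{T,N}(t) = B @ [1, t, ..., t^N].

    Standard convention (assumed): orthogonality weight (T - t)^alpha * t^beta on [0, T].
    """
    B = np.zeros((N + 1, N + 1))
    B[0, 0] = 1.0  # P_{T,0} = 1 (also avoids Gamma(alpha + beta + 1) when alpha + beta = -1)
    for i in range(1, N + 1):
        for k in range(i + 1):
            B[i, k] = ((-1) ** (i - k)
                       * math.gamma(i + beta + 1) * math.gamma(i + k + alpha + beta + 1)
                       / (math.gamma(k + beta + 1) * math.gamma(i + alpha + beta + 1)
                          * math.factorial(i - k) * math.factorial(k) * T ** k))
    return B


def gram_matrix(B, alpha, beta, T, n_quad=30):
    """Weighted inner products <P_i, P_j> on [0, T] via Gauss-Legendre.

    Exact up to round-off when alpha and beta are non-negative integers,
    because the integrand is then a polynomial of modest degree.
    """
    x, w = np.polynomial.legendre.leggauss(n_quad)
    t = 0.5 * T * (x + 1.0)
    S = np.vander(t, B.shape[0], increasing=True).T   # rows: t^0, ..., t^N
    phi = B @ S                                         # rows: P_{T,0..N}(t)
    weight = (T - t) ** alpha * t ** beta * w * 0.5 * T
    return (phi * weight) @ phi.T


print(shifted_jacobi_matrix(2, 0.0, 0.0, 1.0))   # rows: 1, 2t - 1, 6t^2 - 6t + 1
```

Since B is lower triangular with a nonzero diagonal for \( \alpha ,\beta >-1 \), it is always invertible, which is what makes \( S(t)=B^{-1}\Phi _{T,N}(t) \) available in Sect. 4.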

For example, if \(N=4\) and \( \alpha =\beta =0\), then B is as follows

Hence, using Eq. (19), we get

Note 3

Note that this square matrix B can be obtained for all the other orthogonal polynomials as well. For example, if \(N=4\), \( \alpha =\dfrac{1}{2}\) and \( \beta =-\dfrac{1}{2}\), then the square matrix B for the shifted Chebyshev polynomials of the fourth kind is as follows
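The claim of Note 3 can be checked directly. The sketch below reuses the explicit power form (standard convention assumed, helper names illustrative), takes \( \alpha =1/2 \) and \( \beta =-1/2 \), and rescales row i by \( (2i+1)/P_i^{(1/2,-1/2)}(1) \), using the classical normalization \( W_i(1)=2i+1 \) of the fourth-kind Chebyshev polynomials. With \( T=1 \) the rows should reproduce \( W_i(2t-1) \); for instance \( W_2(z)=4z^2+2z-1 \) gives \( 16t^2-12t+1 \).

```python
import math
import numpy as np


def shifted_jacobi_matrix(N, alpha, beta, T):
    """Rows: power-basis coefficients of P_{T,i}^{(alpha,beta)}; standard convention (assumed)."""
    B = np.zeros((N + 1, N + 1))
    B[0, 0] = 1.0  # P_{T,0} = 1
    for i in range(1, N + 1):
        for k in range(i + 1):
            B[i, k] = ((-1) ** (i - k)
                       * math.gamma(i + beta + 1) * math.gamma(i + k + alpha + beta + 1)
                       / (math.gamma(k + beta + 1) * math.gamma(i + alpha + beta + 1)
                          * math.factorial(i - k) * math.factorial(k) * T ** k))
    return B


def shifted_chebyshev_w_matrix(N, T):
    """Rows: power-basis coefficients of W_i(2t/T - 1), fourth-kind Chebyshev, i = 0..N."""
    alpha, beta = 0.5, -0.5
    B = shifted_jacobi_matrix(N, alpha, beta, T)
    for i in range(N + 1):
        p_at_one = math.gamma(i + alpha + 1) / (math.gamma(alpha + 1) * math.factorial(i))
        B[i] *= (2 * i + 1) / p_at_one        # enforce W_i(1) = 2i + 1
    return B


print(np.round(shifted_chebyshev_w_matrix(4, 1.0), 10))
```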

4 Shifted Jacobi polynomials operational matrix (SJOM)

Operational matrices are used in different areas of numerical analysis and are especially important for solving a variety of problems, such as integral equations, differential equations, integro-differential equations, and ordinary and partial fractional differential equations [31, 34,35,36, 39,40,41,42,43,44,45,46,47]. In this section, we derive the shifted Jacobi operational matrix (SJOM) of variable fractional order to support the numerical solution of Eq. (1). In this way, the problem is converted into a system of algebraic equations, which is solved numerically at collocation points.

First, \( D^{\eta _{i}(t)} \Phi _{T,N}(t)\,(i=1,2,\ldots ,n) \) can be deduced as follows:

Since \( \Phi _{T,N}(t)\,=\, B_{(\alpha ,\beta )} S(t) \), we have

Combining Eqs. (10) and (24) gives

where

Using Eq. (22), we then obtain

Thus, the operational matrix of \( D^{\eta _{i}(t)} \Phi _{T,N}(t)\,(i=1,2,\ldots ,n) \) is \( B_{(\alpha ,\beta )} Q_i(t)\, B_{(\alpha ,\beta )}^{-1} \).
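The operational matrix can be assembled and checked numerically. The sketch below assumes that \( D^{\eta (t)} \) acts on monomials by the variable-order power rule, so that \( D^{\eta (t)} S(t) = Q(t) S(t) \) with \( Q(t) = t^{-\eta (t)}\,\mathrm{diag}\bigl(0, \Gamma (2)/\Gamma (2-\eta (t)), \ldots , \Gamma (N+1)/\Gamma (N+1-\eta (t))\bigr) \); this is our reading of \( Q_i(t) \), not a transcription of the paper's Eq. (26), and the helper names are illustrative. The check expands \( w(t)=t^2 \) in the shifted basis (solving \( B^T A = c \) for its monomial coefficients c) and compares \( A^T B Q(t) B^{-1}\Phi _{T,N}(t) \) with the exact \( D^{\eta (t)} t^2 \).

```python
import math
import numpy as np


def shifted_jacobi_matrix(N, alpha, beta, T):
    """Lower-triangular B with Phi_{T,N}(t) = B @ S(t); standard convention (assumed)."""
    B = np.zeros((N + 1, N + 1))
    B[0, 0] = 1.0  # P_{T,0} = 1
    for i in range(1, N + 1):
        for k in range(i + 1):
            B[i, k] = ((-1) ** (i - k)
                       * math.gamma(i + beta + 1) * math.gamma(i + k + alpha + beta + 1)
                       / (math.gamma(k + beta + 1) * math.gamma(i + alpha + beta + 1)
                          * math.factorial(i - k) * math.factorial(k) * T ** k))
    return B


def q_matrix(t, eta_t, N):
    """Diagonal Q(t) with D^{eta(t)} S(t) = Q(t) S(t); needs t > 0 and 0 < eta_t < 1."""
    entries = [0.0] + [math.gamma(k + 1) / math.gamma(k + 1 - eta_t) * t ** (-eta_t)
                       for k in range(1, N + 1)]
    return np.diag(entries)


def operational_matrix(t, eta, B):
    """B Q(t) B^{-1}, which maps Phi_{T,N}(t) to D^{eta(t)} Phi_{T,N}(t)."""
    N = B.shape[0] - 1
    return B @ q_matrix(t, eta(t), N) @ np.linalg.inv(B)


N, alpha, beta, T = 4, 1.0, 1.0, 1.0
eta = lambda t: 0.6 * t                 # variable order as in Example 6.4
B = shifted_jacobi_matrix(N, alpha, beta, T)
c = np.zeros(N + 1)
c[2] = 1.0                              # w(t) = t^2 in the monomial basis
A = np.linalg.solve(B.T, c)             # coefficients with w(t) = A^T Phi_{T,N}(t)

t = 0.7
Phi = B @ t ** np.arange(N + 1)         # Phi_{T,N}(t) = B S(t)
approx = A @ operational_matrix(t, eta, B) @ Phi
exact = 2.0 * t ** (2 - eta(t)) / math.gamma(3 - eta(t))
print(approx, exact)
```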

Now, we can compute the variable-order fractional derivative of the approximate function obtained in Eq. (17) as follows

By using Eq. (28), Eq. (1) is transformed into

Finally, we collocate at the points \( t_j\,(j=0,1,\ldots ,m) \), which are the roots of \(P_{T,m+1}^{(\alpha ,\beta )}(t)\). Then Eq. (29) is converted into the following algebraic system

The system in Eq. (30) can be solved numerically to determine the unknown vector A, which yields the numerical solution presented in Eq. (17).
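To make the collocation step concrete, the sketch below applies it to a hypothetical linear test problem that is not one of the paper's examples: \( D^{\eta (t)} w(t) + w(t) - w(t-\tau ) = f(t) \) on \( [0,T] \) with \( w(0)=0 \), where f is manufactured so that \( w(t)=t^2 \), and the history is assumed to be the polynomial extension of the solution, so that \( w_N(t-\tau ) \) is evaluated as \( A^T\Phi _{T,N}(t-\tau ) \). Because this test problem is linear, the algebraic system is linear; a nonlinear F would instead call for a nonlinear solver such as Newton's method. As our own design choice, the sketch collocates at the N roots of \( P_{T,N}^{(\alpha ,\beta )} \) and adds the initial condition as the \( (N+1) \)-th equation, since constants lie in the kernel of this particular operator. All names are illustrative.

```python
import math
import numpy as np


def shifted_jacobi_matrix(N, alpha, beta, T):
    """Phi_{T,N}(t) = B @ [1, t, ..., t^N]; standard convention (T - t)^alpha t^beta (assumed)."""
    B = np.zeros((N + 1, N + 1))
    B[0, 0] = 1.0  # P_{T,0} = 1
    for i in range(1, N + 1):
        for k in range(i + 1):
            B[i, k] = ((-1) ** (i - k)
                       * math.gamma(i + beta + 1) * math.gamma(i + k + alpha + beta + 1)
                       / (math.gamma(k + beta + 1) * math.gamma(i + alpha + beta + 1)
                          * math.factorial(i - k) * math.factorial(k) * T ** k))
    return B


def solve_linear_fdde(N, alpha, beta, T, tau, eta, f, w0=0.0):
    """Collocation for the hypothetical problem D^{eta(t)} w + w(t) - w(t - tau) = f(t), w(0) = w0."""
    B = shifted_jacobi_matrix(N, alpha, beta, T)
    powers = np.arange(N + 1)
    # N collocation nodes: roots of P_{T,N} (np.roots expects the highest degree first)
    nodes = np.sort(np.roots(B[N, ::-1]).real)
    rows, rhs = [], []
    for t in nodes:
        e = eta(t)
        frac = np.array([0.0] + [math.gamma(k + 1) / math.gamma(k + 1 - e) * t ** (k - e)
                                 for k in range(1, N + 1)])        # D^{eta(t)} S(t)
        rows.append(B @ (frac + t ** powers - (t - tau) ** powers))
        rhs.append(f(t))
    rows.append(B @ (0.0 ** powers))                               # w_N(0) = w0
    rhs.append(w0)
    A = np.linalg.solve(np.array(rows), np.array(rhs))
    return A, B


T, tau = 1.0, 0.3
eta = lambda t: 0.6 * t
# manufactured right-hand side so that w(t) = t^2 is the exact solution
f = lambda t: 2.0 * t ** (2 - eta(t)) / math.gamma(3 - eta(t)) + t ** 2 - (t - tau) ** 2

A, B = solve_linear_fdde(4, 0.0, 0.0, T, tau, eta, f)
ts = np.linspace(0.0, T, 11)
w_N = np.array([A @ B @ t ** np.arange(5) for t in ts])
print("A =", A)
print("max |w_N - t^2| =", np.max(np.abs(w_N - ts ** 2)))
```

Since \( t^2 \) lies in the span of the basis for \( N\ge 2 \), the printed error should sit at round-off level; this verifies the assembly of the collocation system rather than the convergence rate of the method.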

5 Error analysis

In this section, we estimate an upper bound for the absolute errors using Lagrange interpolation polynomials. In addition, by combining the current method (NSJOM) with error approximation and the residual correction method [48, 49], we obtain an efficient error estimate for variable-order fractional differential equations.

5.1 Error bound

Our aim now is to obtain an analytic expression for the error norm of the best approximation of a smooth function w(t) on \( [0,T] \) by its expansion in terms of Jacobi polynomials. Let

Throughout this part, we assume that \(w_{N}(t) \in \prod _{N}^{\alpha ,\beta }\) is the best approximation of w(t); then, by the definition of the best approximation, we have

Clearly, the above inequality also holds if \( v_N (t) \) is the interpolating polynomial at the nodes \( t_i \, (i=0,1,\ldots , N)\), where \( t_i \) are the roots of \(P_{T,N+1}^{(\alpha ,\beta )}(t)\). Then, by the Lagrange interpolation formula and its error formula, we have

where \( \xi \in [0, T]\), and hence we obtain

Since w(t) is a smooth function on \( [0,\,T] \), there exists a constant \( C_1 \) such that

By minimizing the factor \( \parallel \prod _{j=0}^{N}(t-t_j)\parallel _{\infty } \), we obtain:

Using the one-to-one mapping \( t=\dfrac{T}{2}(z+1) \) between the intervals \( [-1,\,1] \) and \( [0,\,T] \), we deduce that [34, 50]

where \( \lambda _{N}^{(\alpha ,\beta )}= \dfrac{\Gamma (2N+\alpha +\beta +1)}{2^{N}N! \Gamma (N+\alpha +\beta +1)} \) is the leading coefficient of \( P_{N}^{(\alpha ,\beta )}(z) \) (so that \( \lambda _{N+1}^{(\alpha ,\beta )} \) is the leading coefficient of \( P_{N+1}^{(\alpha ,\beta )}(z) \)), and \( z_j \) are the roots of \( P_{N+1}^{(\alpha ,\beta )}(z) \). It is clear that

Using Eqs. (34) and (35) gives the following result

This provides an upper bound for the absolute error between the exact and approximate solutions.
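The change of variables in this argument can be written out as follows. We write \( k_{N+1} \) for the leading coefficient of \( P_{N+1}^{(\alpha ,\beta )} \), \( C_1 \) for a bound on \( |w^{(N+1)}| \) over \( [0,T] \), take \( w_N \) to be the best approximation in the maximum norm, and use the classical bound for the maximum of \( |P_n^{(\alpha ,\beta )}| \) on \( [-1,1] \) (see [37]). This is a sketch of the chain of inequalities under those assumptions, not the paper's Eq. (36) verbatim.

```latex
\[
t - t_j = \tfrac{T}{2}\,(z - z_j)
\;\Longrightarrow\;
\prod_{j=0}^{N} (t - t_j) = \Bigl(\tfrac{T}{2}\Bigr)^{N+1} \frac{P_{N+1}^{(\alpha,\beta)}(z)}{k_{N+1}},
\qquad
k_{N+1} = \frac{\Gamma(2N+\alpha+\beta+3)}{2^{N+1}\,(N+1)!\,\Gamma(N+\alpha+\beta+2)},
\]
\[
\| w - w_N \|_{\infty} \le \| w - v_N \|_{\infty}
\le \frac{C_1}{(N+1)!} \Bigl(\tfrac{T}{2}\Bigr)^{N+1}
\frac{\max_{|z|\le 1} \bigl|P_{N+1}^{(\alpha,\beta)}(z)\bigr|}{k_{N+1}},
\]
\[
\max_{|z|\le 1} \bigl|P_{N+1}^{(\alpha,\beta)}(z)\bigr| = \frac{\Gamma(N+q+2)}{\Gamma(q+1)\,(N+1)!}
\quad \text{for } q = \max(\alpha,\beta) \ge -\tfrac12 .
\]
```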

5.2 Error function estimation

In this subsection, we introduce an error estimate based on the residual error function for the presented technique, and refine the approximate solution (17) via the residual correction scheme. Residual-based error estimation has been used for several methods applied to different equations [36, 49, 51, 52].

First, let \( e_{N}(t)\,=w_{N}(t)\,- w(t)\) denote the error function of the NSJOM approximation \( w_{N}(t) \) to w(t), where w(t) is the exact solution of Eq. (1).

Therefore, \( w_{N}(t) \) satisfies the following equation

where \( R_{N}(t)\) is the residual function of Eq. (1), obtained by replacing w(t) with \( w_{N}(t) \) in Eq. (1).

Subtracting Eq. (1) from Eq. (37), we obtain the error problem:

where

Thus, Eq. (38) can be solved in the same way as in the previous section, which gives the following approximation to \( e_{N}(t) \).

The maximum absolute error can then be estimated by

Note 4

Note that if the exact solution of problem (1) is unknown, as in real practical experiments, \(R_{N,F}\) cannot be computed directly. Instead, it can be replaced by a bound of the form \(D_1|e_{N}(t)|+D_2|e_{N}(t-\tau )|\) with positive constants \(D_1\) and \(D_2\). This is possible if the nonlinear term F satisfies a Lipschitz condition with respect to all of its arguments.
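Assuming that F depends on \( (t, w(t), w(t-\tau )) \) and that \( R_{N,F} \) denotes the difference \( F(t,w_N(t),w_N(t-\tau )) - F(t,w(t),w(t-\tau )) \) (our reading, since the displayed error problem is not reproduced here), the Lipschitz condition yields the replacement bound in one step:

```latex
\[
|F(t,u_1,v_1) - F(t,u_2,v_2)| \le D_1 |u_1 - u_2| + D_2 |v_1 - v_2|
\;\Longrightarrow\;
|R_{N,F}(t)| \le D_1 |e_N(t)| + D_2 |e_N(t-\tau)| .
\]
```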

The above error estimate (41) depends on the convergence rate of the Jacobi polynomial expansion; thus, it provides reasonable convergence rates in temporal discretizations [34, 52].
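The residual correction step can be sketched for a hypothetical linear test problem (our choice, not one of the paper's examples): \( D^{\eta (t)} w(t) + w(t) - w(t-\tau ) = f(t) \), \( w(0)=0 \), now with exact solution \( w(t)=t^3 \), so that a quadratic approximation \( w_N \) (N = 2) is genuinely in error. For a linear operator L, subtracting the equations gives \( L[e_N] = R_N \) with \( e_N(0)=0 \), so the error equation is solved by the same collocation routine with the residual as right-hand side and a larger basis size M. Because \( e_N \) is a cubic here, M = 3 recovers it up to round-off; in general \( e_{N,M} \) only approximates \( e_N \), and for nonlinear F one would linearize or use the Lipschitz bound of Note 4, which this sketch does not cover. The polynomial history extension, names and parameters are illustrative assumptions.

```python
import math
import numpy as np

T, TAU = 1.0, 0.3
ETA = lambda t: 0.6 * t   # variable order, as in Example 6.4


def shifted_jacobi_matrix(N, alpha, beta, T):
    """Phi_{T,N}(t) = B @ [1, t, ..., t^N]; standard convention (assumed)."""
    B = np.zeros((N + 1, N + 1))
    B[0, 0] = 1.0  # P_{T,0} = 1
    for i in range(1, N + 1):
        for k in range(i + 1):
            B[i, k] = ((-1) ** (i - k)
                       * math.gamma(i + beta + 1) * math.gamma(i + k + alpha + beta + 1)
                       / (math.gamma(k + beta + 1) * math.gamma(i + alpha + beta + 1)
                          * math.factorial(i - k) * math.factorial(k) * T ** k))
    return B


def operator_on_monomials(t, N):
    """L[S](t) for L[u] = D^{eta(t)} u(t) + u(t) - u(t - TAU), S = [1, t, ..., t^N]."""
    e = ETA(t)
    frac = np.array([0.0] + [math.gamma(k + 1) / math.gamma(k + 1 - e) * t ** (k - e)
                             for k in range(1, N + 1)])
    powers = np.arange(N + 1)
    return frac + t ** powers - (t - TAU) ** powers   # polynomial history assumed


def collocate(N, rhs, alpha=0.0, beta=0.0):
    """Solve L[u] = rhs at the roots of P_{T,N} plus u(0) = 0; return monomial coefficients."""
    B = shifted_jacobi_matrix(N, alpha, beta, T)
    nodes = np.sort(np.roots(B[N, ::-1]).real)
    M = np.array([B @ operator_on_monomials(t, N) for t in nodes] + [B[:, 0]])
    A = np.linalg.solve(M, np.array([rhs(t) for t in nodes] + [0.0]))
    return B.T @ A    # u = A^T B S, so B^T A holds the coefficients of 1, t, ..., t^N


def L_poly(c, t):
    """Apply the linear operator L to the polynomial with monomial coefficients c."""
    return c @ operator_on_monomials(t, len(c) - 1)


poly = np.polynomial.polynomial.polyval   # polyval(t, c) = c[0] + c[1] t + ...

# hypothetical test problem with exact solution w(t) = t^3
f = lambda t: 6.0 * t ** (3 - ETA(t)) / math.gamma(4 - ETA(t)) + t ** 3 - (t - TAU) ** 3

c_N = collocate(2, f)                          # w_N with N = 2
residual = lambda t: L_poly(c_N, t) - f(t)     # R_N(t) = L[w_N](t) - f(t)
c_err = collocate(3, residual)                 # e_{N,M} with M = 3
ts = np.linspace(0.0, T, 21)
true_err = poly(ts, c_N) - ts ** 3
est_err = poly(ts, c_err)
corrected = poly(ts, c_N) - est_err            # w_N - e_{N,M}
print("max |e_N|: true", np.max(np.abs(true_err)), "estimated", np.max(np.abs(est_err)))
print("max error after correction:", np.max(np.abs(corrected - ts ** 3)))
```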

6 Numerical experiments

In this section, based on the previous discussion, we give some numerical examples to illustrate the accuracy, efficiency, applicability, generality and validity of the proposed technique. In all examples, the results of the present method were computed with Mathematica 10. To test our scheme, we compare it with other known methods in terms of the absolute errors \( \mid w_{exact}(t)- w_{N}(t)\mid \), the relative errors \( \mid \dfrac{ w_{exact}(t)- w_{N}(t)}{ w_{exact}(t)}\mid \) and the CPU time required for each example.

Comparing the results obtained by this scheme with the exact solution of each example shows that the new technique agrees better with the exact solutions than the other methods do. The stability, consistency and ease of implementation of this technique make the method more applicable and reliable.

Example 6.1

[6, 10] Consider the following delay fractional order equation for \(0 <\eta \le 1\,,\tau >0\)

Note that \(w(t)=\,t^{2}\) is the exact solution and \(0 \le t \le T\,, T=2,\, \tau =0.3\,,\,\eta =0.6\).

Following the concepts presented in Sect. 4, we take the approximate solution with \((N+1)\) terms given in Eq. (17) and substitute it into the problem. Using Eq. (28), the problem is converted into the form of Eq. (29); collocating at the nodes \(t_i\) then yields a system of algebraic equations, which is solved numerically for the unknown vector A with the initial value \(w_0 =0\). The solution of Eq. (42) obtained with the new method agrees best with the exact solution among the compared methods. The absolute errors at some nodal points for this method and the methods in [6, 10] are presented and compared in Table 1, which also gives the CPU times required by these methods. Table 1 shows that the numerical results are very close to the exact solution and that the current scheme outperforms the methods mentioned in terms of ease of use, accuracy and computation time. Figure 1 compares the exact and approximate solutions, which confirms the reliability of the NSJOM method, and Fig. 2 plots the absolute error for this example. Figures 1 and 2 show good agreement between the approximate and exact solutions. In this example, for \( N=2 \) and \( N=7 \) we obtain \(A\,=[ 1.33333,2,0.66667]^T \) and \(A\,=[ 1.33333,2,0.66667,2.95496\times 10^{-16},8.71848\times 10^{-17},0,3.55601\times 10^{-16},-2.82553\times 10^{-16}]^T \), respectively.

Example 6.2

[4, 27] Consider the following delay fractional order equation for \(0 <\eta \le 1\,,\tau >0\)

In above problem \(w(t)=\,t^{2} -t\) is the exact solution and \(0 \le t \le T\,, T=10,\, \tau =0.1\,,\,\eta =0.9\).

Using the process described in Example 6.1, we obtain the solution of this problem. The solution of Eq. (43) obtained with the current method agrees best with the exact solution among the compared methods. The absolute and relative errors at some nodal points for this method and the methods in [4, 27] are presented and compared in Tables 2 and 3, which also give the CPU times required by these methods. These tables show that the numerical results are very close to the exact solution and that the new technique outperforms the methods mentioned in terms of ease of use, accuracy and computation time. Figure 3 compares the exact and approximate solutions, which confirms the reliability of the NSJOM method, and Fig. 4 plots the absolute error for this example. Figures 3 and 4 show good agreement between the approximate and exact solutions. In this example, for \( N=2 \) and \( N=3 \) we obtain \(A\,=[ 28.33333,45,16.66667]^T \) and \(A\,=[ 28.33333,45,16.66667,0]^T \), respectively.
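Because the exact solutions of Examples 6.1 and 6.2 are quadratics, the reported coefficient vectors A can be cross-checked independently of the solver by expanding those quadratics in the shifted basis, i.e. by solving \( B^T A = c \) for their monomial coefficients c. The reported values are consistent with \( \alpha =\beta =0 \) (shifted Legendre polynomials); that parameter choice is our inference, not something the text states, and the helper names are illustrative.

```python
import math
import numpy as np


def shifted_jacobi_matrix(N, alpha, beta, T):
    """Phi_{T,N}(t) = B @ [1, t, ..., t^N]; standard convention (assumed)."""
    B = np.zeros((N + 1, N + 1))
    B[0, 0] = 1.0  # P_{T,0} = 1
    for i in range(1, N + 1):
        for k in range(i + 1):
            B[i, k] = ((-1) ** (i - k)
                       * math.gamma(i + beta + 1) * math.gamma(i + k + alpha + beta + 1)
                       / (math.gamma(k + beta + 1) * math.gamma(i + alpha + beta + 1)
                          * math.factorial(i - k) * math.factorial(k) * T ** k))
    return B


def expand_polynomial(c, N, alpha, beta, T):
    """Return A with sum_k c[k] t^k = A^T Phi_{T,N}(t), i.e. solve B^T A = c."""
    c_full = np.zeros(N + 1)
    c_full[:len(c)] = c
    B = shifted_jacobi_matrix(N, alpha, beta, T)
    return np.linalg.solve(B.T, c_full)


# Example 6.1: w(t) = t^2 on [0, 2]
print(expand_polynomial([0.0, 0.0, 1.0], 2, 0.0, 0.0, 2.0))    # about [1.33333, 2, 0.66667]
print(expand_polynomial([0.0, 0.0, 1.0], 7, 0.0, 0.0, 2.0))    # trailing entries at round-off level
# Example 6.2: w(t) = t^2 - t on [0, 10]
print(expand_polynomial([0.0, -1.0, 1.0], 2, 0.0, 0.0, 10.0))  # about [28.33333, 45, 16.66667]
```

Agreement here is only a consistency check on the basis and on the reported numbers; it says nothing about the solver's accuracy for solutions that are not polynomials.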

Example 6.3

[5] Consider the following delay fractional order equation for \(0 <\eta \le 1\,,\tau >0\)

In this problem \(w(t)=\,t^{2} -t\) is the exact solution and \(0 \le t \le T\,\).

As in the previous examples, we solve this problem for both a constant and a time-varying delay. The solution of Eq. (44) obtained with the current method agrees best with the exact solution among the compared methods. The absolute errors (\( E _A \)) and relative errors (\( E _R \)) at \(t=T \) for this method and the method in [5] are displayed and compared in Tables 4 and 5. These tables show that the numerical results are very close to the exact solution and that the current technique outperforms the method mentioned in terms of ease of use, accuracy and computation time. Figures 5 and 7 compare the exact and approximate solutions, which confirms the reliability of the NSJOM method, and Figs. 6 and 8 plot the absolute errors of this example for the constant and time-varying delays. Figures 5, 6, 7 and 8 show good agreement between the approximate and exact solutions.

Example 6.4

[6, 10] As the last and general example, consider the following delay fractional order equation for \(0 <\eta (t) \le 1\,,\tau >0\)

Note that \(w(t)=\,t^{2}\) is the exact solution and \(0 \le t \le T\,, T=1,\, \tau =0.3\,,\,\eta (t)=0.6 t \).

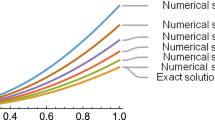

Following the concepts presented in Sect. 4, we take the approximate solution with \((N+1)\) terms given in Eq. (17) and substitute it into the problem. Using Eq. (28), the problem is converted into the form of Eq. (29); collocating at the nodes \(t_i\) then yields a system of algebraic equations, which is solved numerically for the unknown vector A. The solutions of Eq. (45) obtained with the new method for various values of \( \alpha ,\,\beta \) agree closely with the exact solution. The absolute and relative errors at some nodal points for this method are shown in Tables 6, 7, 8, 9, 10 and 11. These tables show that the numerical results are very close to the exact solution and that our scheme outperforms the methods mentioned in terms of ease of use, accuracy and computation time. Figure 9 compares the exact and approximate solutions, which confirms the reliability of the NSJOM method, and Fig. 10 plots the absolute error with \( \alpha =1 ,\,\beta =1 \) for this example. Figures 9 and 10 show good agreement between the approximate and exact solutions. In this example, we have

7 Conclusions

In this paper, we have proposed the novel shifted Jacobi operational matrix (NSJOM) scheme for variable-order fractional delay differential equations, which reduces the main problem to an algebraic system of equations that can be solved numerically. We have shown that the proposed method has good convergence, is easy to implement and is conceptually simple. The results obtained are excellent compared with those of other methods, and the numerical results presented demonstrate the effectiveness and accuracy of the technique.

References

Baleanu D, Magin RL, Bhalekar S, Daftardar-Gejji V (2015) Chaos in the fractional order nonlinear Bloch equation with delay. Commun Nonlinear Sci Numer Simul 25(1–3):41–49

Diethelm K, Ford NJ, Freed AD (2004) Detailed error analysis for a fractional Adams method. Numer Algorithms 36(1):31–52

Kuang Y (1993) Delay differential equations: with applications in population dynamics, vol 191. Academic Press, London

Jhinga A, Daftardar-Gejji V (2019) A new numerical method for solving fractional delay differential equations. Comput Appl Math 38:166

Wang Z (2013) A numerical method for delayed fractional-order differential equations. J Appl Math 2013

Daftardar-Gejji V, Sukale Y, Bhalekar S (2015) Solving fractional delay differential equations: a new approach. Int J Theory Appl 18:2

SaedshoarHeris M, Javidi M (2017) On fractional backward differential formulas for fractional delay differential equations with periodic and anti-periodic conditions. Appl Numer Math 118:203–220

Adomian G (1988) A review of decomposition method in applied mathematics. J Math Anal Appl 135:501–544

Bhalekar S, Daftardar-Gejji V (2011) Convergence of the new iterative method. Int J Differ Equ

Bhalekar S, Daftardar-Gejji V (2011) A predictor-corrector scheme for solving non-linear delay differential equations of fractional order. J Fract Calculus Appl 1(5):1–8

Daftardar-Gejji V, Jafari H (2005) Adomian decomposition: a tool for solving a system of fractional differential equations. J Math Anal Appl 301(2):506–518

Daftardar-Gejji V, Jafari H (2006) An iterative method for solving non linear functional equations. J Math Anal Appl 316:753–763

Hemeda A (2012) Homotopy perturbation method of solving systems of nonlinear coupled equations. Appl Math Sci 6:4787–4800

Wazwaz A (1999) A reliable modification in Adomian decomposition method. Appl Math Comput 102:77–86

El-Sayed AA, Baleanu D, Agarwal P (2020) A novel Jacobi operational matrix for numerical solution of multi-term variable-order fractional differential equations. J Taibah Univ Sci 14(1):963–974

Diethelm K, Ford NJ, Freed AD (2002) A predictor–corrector approach for the numerical solution of fractional differential equations. Nonlinear Dyn 29:3–22

Ghasemi M, Fardi M, Khoshsiar Ghaziani R (2015) Numerical solution of nonlinear delay differential equations of fractional order in reproducing kernel Hilbert space. Appl Math Comput 268:815–831

Ockendon JR, Tayler AB (1971) The dynamics of a current collection system for an electric locomotive. Proc R Soc Lond Ser A 322:447–468

Buhmann MD, Iserles A (1993) Stability of the discretized pantograph differential equation. J Math Comput 60:575–589

Shakeri F, Dehghan M (2008) Solution of delay differential equations via a homotopy perturbation method. Math Comput Model 48:486–498

Shakeri F, Dehghan M (2008) The use of the decomposition procedure of Adomian for solving a delay diffusion equation arising in electrodynamics. Phys Scr 78:065004

Sedaghat S, Ordokhani Y, Dehghan M (2012) Numerical solution of the delay differential equations of pantograph type via Chebyshev polynomials. Commun Nonlinear Sci Numer Simul 17:4125–4136

Ajello WG, Freedman HI, Wu J (1992) A model of stage structured population growth with density depended time delay. SIAM J Appl Math 52:855–869

Morgado ML, Ford NJ, Lima P (2013) Analysis and numerical methods for fractional differential equations with delay. J Comput Appl Math 252:159–168

Čermák J, Horníček J, Kisela T (2016) Stability regions for fractional differential systems with a time delay. Commun Nonlinear Sci Numer Simul 31(1):108–123

Lazarević MP, Spasic AM (2009) Finite-time stability analysis of fractional order time-delay systems: Gronwall’s approach. Math Comput Model 49(3):475–481

Daftardar-Gejji V, Sukale Y, Bhalekar S (2014) A new predictor–corrector method for fractional differential equations. Appl Math Comput 244:158–182

Diethelm K, Ford NJ (2002) Analysis of fractional differential equations. J Math Anal Appl 265(2):229–248

Tavares D, Almeida R, Torres DFM (2016) Caputo derivatives of fractional variable order: numerical approximations. Commun Nonlinear Sci Numer Simul 35:69–87

Liu J, Li X, Wu L (2016) An operational matrix of fractional differentiation of the second kind of Chebyshev polynomial for solving multi-term variable order fractional differential equation. Math Probl Eng, 10 pages

Nagy AM, Sweilam NH, El-Sayed AA (2018) New operational matrix for solving multi-term variable order fractional differential equations. J Comp Nonlinear Dyn 13:011001–011007

El-Sayed AA, Agarwal P (2019) Numerical solution of multi-term variable-order fractional differential equations via shifted Legendre polynomials. Math Meth Appl Sci 42(11):3978–3991

Mallawi F, Alzaidy JF, Hafez RM (2019) Application of a Legendre collocation method to the space-time variable fractional-order advection-dispersion equation. J Taibah Univ Sci 13(1):324–330

Bhrawy AH, Zaky MA (2014) A method based on the Jacobi tau approximation for solving multi-term time-space fractional partial differential equations. J Comput Phys

Chen YM, Liu LQ, Li BF et al (2014) Numerical solution for the variable-order linear cable equation with Bernstein polynomials. Appl Math Comput 238:329–341

Abbasbandy S, Taati A (2009) Numerical solution of the system of nonlinear Volterra integrodifferential equations with nonlinear differential part by the operational Tau method and error estimation. J Comput Appl Math 231(1):106–113

Szegő G (1985) Orthogonal polynomials. Am Math Soc Colloq Publ 23

Doha EH, Bhrawy AH, Ezz-Eldien SS (2012) A new Jacobi operational matrix: an application for solving fractional differential equations. Appl Math Model 36:4931–4943

Yousefi SA, Behroozifar M (2010) Operational matrices of Bernstein polynomials and their applications. Int J Syst Sci 32:709–716

Labecca W, Guimaraes O, Piqueira JRC (2014) Dirac’s formalism combined with complex Fourier operational matrices to solve initial and boundary value problems. Commun Nonlinear Sci Numer Simul 19(8):2614–2623

Razzaghi M, Yousefi S (2005) Legendre wavelets method for the nonlinear Volterra-Fredholm integral equations. Math Comput Simul 70:1–8

Danfu H, Xufeng S (2007) Numerical solution of integro-differential equations by using CAS wavelet operational matrix of integration. Appl Math Comput 194:460–466

Behiry SH (2014) Solution of nonlinear Fredholm integro-differential equations using a hybrid of block pulse functions and normalized Bernstein polynomials. J Comput Appl Math 260:258–265

Saadatmandi A, Dehghan M (2010) A new operational matrix for solving fractional-order differential equations. Comput Math Appl 59:1326–1336

Saadatmandi A (2014) Bernstein operational matrix of fractional derivatives and its applications. Appl Math Model 38:1365–1372

Atabakzadeh MH, Akrami MH, Erjaee GH (2013) Chebyshev operational matrix method for solving multi-order fractional ordinary differential equations. Appl Math Model 37:8903–8911

Bhrawy AH, Alofi AS (2013) The operational matrix of fractional integration for shifted Chebyshev polynomials. Appl Math Lett 26:25–31

Oliveira (1980) Collocation and residual correction. Numer Math 6:27–31

Shahmorad S (2005) Numerical solution of the general form linear Fredholm-Volterra integrodifferential equations by the Tau method with an error estimation. Appl Math Comput 167:1418–1429

de Villiers J (2012) Mathematics of Approximation. Atlantis Press, New York

Yüzbaşı Ş (2012) An efficient algorithm for solving multi-pantograph equation systems. Comput Math Appl 64(4):589–603

Zlatev Z, Faragó I, Havasi Á (2012) Richardson extrapolation combined with the sequential splitting procedure and \(\theta -\)method. Central Eur J Math 10(1):159–172

Dehghan M, Manafian J, Saadatmandi A (2010) Solving nonlinear fractional partial differential equations using the homotopy analysis method. Numer Methods Partial Diff Equ 26(2):448–479

Lakestani M, Dehghan M, Irandoust-pakchin S (2012) The construction of operational matrix of fractional derivatives using B-spline functions. Commun Nonlinear Sci Numer Simul 17(3):1149–1162

Abbaszadeh M, Dehghan M (2019) Numerical and analytical investigations for neutral delay fractional damped diffusion-wave equation based on the stabilized interpolating element free Galerkin (IEFG) method. Appl Numer Math 145:488–506

Eslahchi MR, Dehghan M (2011) Application of Taylor series in obtaining the orthogonal operational matrix. Comput Math Appl 61(9):2596–2604

Kayedi-Bardeh A, Eslahchi MR, Dehghan M (2014) A method for obtaining the operational matrix of the fractional Jacobi functions and applications. J Vib Control 20(5):736–748

Acknowledgements

We are grateful to two anonymous reviewers for their helpful comments, which led to definite improvements in the paper.


About this article

Cite this article

Khodabandehlo, H.R., Shivanian, E. & Abbasbandy, S. Numerical solution of nonlinear delay differential equations of fractional variable-order using a novel shifted Jacobi operational matrix. Engineering with Computers 38 (Suppl 3), 2593–2607 (2022). https://doi.org/10.1007/s00366-021-01422-7


DOI: https://doi.org/10.1007/s00366-021-01422-7

Keywords

- Nonlinear delay differential equations of fractional variable-order

- Jacobi polynomials

- Operational matrix technique

- Caputo differential operator