Abstract

A wavelet-based optical flow method for high-resolution velocimetry based on tracer particle images is presented. The current optical flow estimation method (WOF-X) is designed to improve the processing of experimental images by implementing wavelet transforms with the lifting method and symmetric boundary conditions. This approach leads to speed and accuracy improvements over existing wavelet-based methods. The current method also exploits the properties of fluid flows and uses the known behavior of turbulent energy spectra to semi-automatically tune a regularization parameter that has been determined primarily empirically in previous optical flow algorithms. As an initial step in evaluating the WOF-X method, synthetic particle images from a 2D DNS of isotropic turbulence are processed and the results are compared to a typical correlation-based PIV algorithm and previous optical flow methods. The WOF-X method produces a dense velocity estimation, resulting in an order-of-magnitude increase in velocity vector resolution compared to traditional correlation-based PIV processing. Results also show an improvement in velocity estimation accuracy by more than a factor of two. The increases in resolution and accuracy of the velocity field lead to significant improvements in the calculation of velocity gradient-dependent properties such as vorticity. In addition to the DNS results, the WOF-X method is evaluated in a series of two-dimensional vortex flow simulations to determine optimal experimental design parameters. Recommendations for optimal conditions for tracer particle seed density and inter-frame particle displacement are presented. The WOF-X method produces minimal error at larger particle displacements and lower relative error over a larger velocity dynamic range as compared to correlation-based processing.


1 Introduction

Quantitative velocity measurements are paramount in fluid dynamics research for understanding flow physics. Over the last 30 years of application and refinement, particle image velocimetry (PIV) has become well established and is the most widely used experimental method for determining fluid velocities. PIV is a field measurement that allows velocity to be determined at multiple spatial positions simultaneously over an entire image domain. This facet enables the determination of multiple components of the velocity vector and of spatial derivative quantities, including vorticity and strain rates, which is a primary advantage of the technique. Tracer particles are seeded into the flow and illuminated with successive laser sheets with a known time separation. Scattered light from the tracer particles is collected onto a camera (or series of cameras) and the velocity is calculated by determining displacements of the particle fields between the two image frames and using the known time separation between laser pulses (Adrian 2005; Westerweel 1997).

The standard approach for determining the particle displacement in PIV is by cross correlation of regions in the image domain known as interrogation windows (Westerweel et al. 2013). The interrogation windows are subdomains within the image and typically range in size between \(64 \times 64\) and \(16 \times 16\) pixels. One velocity vector is produced for each interrogation window, which is assumed to represent the velocity at every pixel inside the window. This type of velocity field estimation is called “nondense”, because it produces fewer velocity vectors than pixels in the source images. This implies that the spatial resolution of the PIV-based velocity measurement is more than an order-of-magnitude lower than the spatial resolution of the detector, since the PIV resolution is directly related to the size of the interrogation window (Kähler et al. 2012). This is a drawback of the cross-correlation method for determining particle displacements because it can lead to inaccuracies in high-gradient regions of flows and in calculating derivative quantities. The inherent spatial resolution limitations of PIV with correlation-based analysis are a well-recognized issue, and there have been many efforts to improve the spatial resolution of PIV and related measurement techniques (e.g. Kähler et al. 2012; Hart 1999; Takehara et al. 2000; Scarano 2002; Susset et al. 2006; Schanz et al. 2016), a discussion of which is beyond the scope of this manuscript.

Optical flow motion estimation presents an alternative to cross-correlation-based processing of tracer particle images. Unlike cross-correlation analysis, optical flow produces velocity fields that are dense (one velocity vector for every pixel). In addition, some methods have been shown to be more accurate than cross-correlation algorithms on synthetic particle images from a direct numerical simulation (DNS) of isotropic turbulence (Heitz et al. 2010; Chen et al. 2015; Liu et al. 2015). However, optical flow methods are not without their potential drawbacks. The majority of the previous methods appearing in the literature require small displacements [O(1) pixel] to achieve sufficient accuracy (Liu and Shen 2008). To address this, optical flow methods are typically embedded into sequential coarse-to-fine multiresolution schemes (Heitz et al. 2010), although this approach can lead to “freezing” of large-scale motions as the algorithm proceeds (Dérian et al. 2012). Due to the assumption of conservation of brightness described below in Sect. 2.1, optical flow methods may exhibit difficulties with experimental noise or events such as particles entering or leaving the image plane that commonly occur in experiments (Stanislas et al. 2003, 2005, 2008). Optical flow methods also require a regularization parameter that has previously been tuned empirically and is dependent on the flow under consideration (Corpetti et al. 2002, 2006; Dérian et al. 2012). Several optical flow methods in the literature are also designed and optimized for divergence-free motions (Chen et al. 2015; Kadri-Harouna et al. 2013), or use periodic boundary conditions that are not well suited for experimental data (Kadri-Harouna et al. 2013; Dérian et al. 2013). Finally, optical flow methods typically are computationally intensive, requiring approximately ten times the amount of processing time per image pair compared to correlation-based methods, although some optical flow methods can take advantage of parallelization for faster processing (Plyer et al. 2016; Dérian and Almar 2017). These issues make it difficult to confidently apply the existing optical flow methods as a primary diagnostic tool to real-world experimental data.

The objectives of this work are to present an optical flow method for processing tracer particle images that addresses many of the previous weaknesses listed above, and to develop guidelines for experimental design when an optical flow method is to be used for velocimetry. Specifically, a wavelet-based optical flow method (WOF-X) is described that is designed for use on experimental data to achieve higher resolution and accuracy compared to correlation-based PIV processing. In the first part of the current manuscript, the WOF-X method is presented, validated with synthetic particle images generated from a DNS of isotropic turbulence, and compared with the other methods in the literature including both optical flow and correlation-based methods. In the second part of this manuscript, a series of Lamb–Oseen vortex flow simulations are presented to determine experimental “design guidelines” for WOF-X equivalent to the well-known recommendations for correlation-based PIV (Keane and Adrian 1990). In particular, recommendations for two critical design parameters, particle density and displacement, are given.

2 Method

2.1 Optical flow

“Optical flow motion estimation” or OFME is a well-recognized method within the computer vision community that has been used for determining rigid motion in image sequences since its introduction by Horn and Schunck (1981). Fundamentally, optical flow methods assume the conservation of brightness or intensity from one image in a sequence to the next, and use the temporal and spatial variations of intensity to infer the underlying motion. More specifically, OFME seeks to estimate the multidimensional motion that leads an image at time t to evolve to its state at time \(t+{\Delta } t\). In this manner, the intensity in an image \(I(\underline{x},t)\) obeys an advection equation of the form (Horn and Schunck 1981):

where \(\underline{v}\) is the velocity. Equation 1 is integrated from time \(t_0\) to \(t_1\) assuming constant velocity over the time interval to yield the displaced frame difference (DFD) equation. Since the time interval \({\Delta } t = t_1 - t_0\) is a constant, it can be taken as unity during integration (and omitted) yielding:
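As a hedged reconstruction consistent with the definitions in the text, and assuming that \(I_0\) and \(I_1\) denote the images at \(t_0\) and \(t_1\) (a notation suggested by the later use of \(I_1(\underline{x}+\underline{v}(\underline{x}))\)), the advection equation (Eq. 1) and the DFD equation (Eq. 2) can be written as:

```latex
\begin{align}
\frac{\partial I}{\partial t} + \underline{v} \cdot \underline{\nabla} I &= 0, \tag{1} \\
I_0\left(\underline{x}\right) - I_1\left(\underline{x} + \underline{v}\left(\underline{x}\right)\right) &= 0. \tag{2}
\end{align}
```

The ordering of the two terms in the second expression is an assumption; only the residual between the first image and the motion-compensated second image matters for the minimization that follows.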

OFME has been applied to fluid flows, with the initial work using similar algorithms and methodologies as developed for rigid motions with varying levels of success (Liu and Shen 2008). More recently, advanced optical flow methods have been presented by the applied mathematics and computer vision communities that may be better suited for the nonlinear and multiscale nature of fluid dynamics (e.g. Chen et al. 2015; Corpetti et al. 2006; Atcheson et al. 2009; Cassisa et al. 2011; Zillé et al. 2014; Cai et al. 2018). For example, Corpetti et al. (2002) correctly point out that the advection equation and the DFD equation shown in Eqs. 1 and 2 implicitly assume that the velocity field is divergence-free, i.e., \(\underline{\nabla } \cdot \underline{v} = 0\). They alternatively propose the use of the integrated continuity equation (ICE), which is a time-integrated version of the continuity equation in fluid mechanics, but with image intensity substituted for density:
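Assuming the exponential form commonly attributed to Corpetti et al. (2002), and writing \(I_0\) and \(I_1\) for the images at \(t_0\) and \(t_1\) (an assumed notation), the ICE would read as sketched below:

```latex
\begin{equation}
I_0\left(\underline{x}\right) - I_1\left(\underline{x} + \underline{v}\left(\underline{x}\right)\right)
\exp\left(\underline{\nabla} \cdot \underline{v}\left(\underline{x}\right)\right) = 0. \tag{3}
\end{equation}
```

For a divergence-free field the exponential factor equals one, and the expression reduces to the displaced frame difference between the two images.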

This formulation is based on the assumption that the measured intensity is proportional to the integrated density across a measurement domain. This assumption is not generally valid for many flow visualization experiments including particle scattering experiments. Nevertheless, this formulation produced encouraging results using particle scattering measurements in a mixing layer and cylinder wake (Corpetti et al. 2006). As discussed by Liu and Shen (2008), the fortuitous results were due to the fact that the integrated continuity equation has the same form as a more rigorously defined, physics-based equation that relates optical to fluid flow.

Although optical flow was not originally developed for the study of fluid flows, one can recognize that Eq. 1 is the transport equation for a nondiffusive passive scalar in a divergence-free flow, with scalar quantity I (Tokumaru and Dimotakis 1995). The general fluid dynamic transport equation describing the evolution of any flow tracer (gas or particle) in a constant-density flow is an advection–diffusion equation:

where \(\zeta \left( \underline{x},t \right)\) represents any general scalar, \(D\left( \underline{x},t \right)\) is the diffusion coefficient, and \(R\left( \underline{x},t \right)\) is a source or sink of the quantity \(\zeta\). The diffusion term can be neglected, since the time interval between frames, \({\Delta } t\), typically is short and the diffusion coefficient is very small for discrete particles. The current tracer particles are inert, so if particle loss due to out-of-plane particle displacement is neglected, then the scalars are passive and \(R\left( \underline{x}, t \right) = 0\). This is a common assumption in correlation-based PIV, as well. This leads to a simplification of Eq. 4:
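A standard conservative form consistent with the description above is sketched here. The placement of \(D\) inside the divergence and the use of \(\underline{u}\) for the fluid velocity are assumptions. The second line drops the diffusion and source terms as described in the text and expands the divergence of the flux:

```latex
\begin{align}
\frac{\partial \zeta}{\partial t} + \underline{\nabla} \cdot \left( \zeta \underline{u} \right)
  &= \underline{\nabla} \cdot \left( D \underline{\nabla} \zeta \right) + R, \tag{4} \\
\frac{\partial \zeta}{\partial t} + \underline{u} \cdot \underline{\nabla} \zeta
  + \zeta \, \underline{\nabla} \cdot \underline{u} &= 0. \tag{5}
\end{align}
```

When \(\underline{\nabla} \cdot \underline{u} = 0\), the last term on the left-hand side of the second line vanishes and the expression takes the form of a pure advection equation.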

The field quantity, \(\zeta \left( \underline{x},t \right)\), can be considered as particle density (particles per unit volume, N) for the present case and the collected signal or intensity on the detector is proportional to N. In this manner, \(\zeta \left( \underline{x},t \right) \propto I \left( \underline{x},t \right)\). Thus, if the flow is divergence-free, Eq. 1 is the appropriate transport equation and Eq. 2 is a valid expression for obtaining velocity from particle images. This is consistent with the work from Liu and Shen (2008) who show that Eqs. 1 and 2 are appropriate in the limit of a thin laser sheet, divergence-free flow conditions, and constant particle density across the thin laser sheet. In general, \(\underline{\nabla } \cdot \underline{u} \left( \underline{x},t \right) \ne 0\) for 2D imaging even when the density is constant, since fluid can move through the laser sheet in a real 3D flow (Liu and Shen 2008). When divergence-free assumptions are not appropriate, Eq. 5 can be integrated to obtain the formulation shown in Eq. 3. Thus, Eqs. 2 and 3 represent physically sound models for OFME in fluid flows.

It is noted that Eq. 3 is a highly nonlinear function of the unknown velocity field \(\underline{u}\), and in fact, we observe that the DFD equation (Eq. 2) actually produces more accurate results than the ICE (Eq. 3) for the data analyzed in the present study. This result may be due solely to the fact that the velocity fields examined in the current work are divergence-free, but the optical flow method presented in this manuscript has features that overcome several limitations previously identified by Corpetti et al. (2002) in applying the DFD equation. Therefore, it is possible that the present method performs best with the DFD equation (as opposed to the ICE) even when flows are not divergence-free. This aspect will be examined extensively in future work, and it should be pointed out that implementing the ICE in the current framework instead of the DFD equation is extremely simple. For all results presented in this manuscript, the DFD equation (Eq. 2) is used.

In the current work, Eq. 2 is cast as a minimization problem for the velocity field \(\underline{v}\) by forming a penalty function (such as a quadratic function) \(J_\mathrm{{D}}\) from the DFD equation. However, it should be noted that the resulting minimization problem is under-constrained, since there are more unknowns than equations. For example, in the 2D problem, there are two components of \(\underline{v}\) and only one DFD equation [with input \(I\left( \underline{x},t\right)\)]. This is frequently addressed by the addition of a convex regularization term \(J_\mathrm{{R}}\) to \(J_\mathrm{{D}}\) that enforces some smoothness constraint on the velocity field, often acting on its derivative(s) (e.g. Corpetti et al. 2002). The terms are balanced by a parameter \(\lambda\) that is determined empirically in the literature. Thus, the estimated velocity field \({\hat{\underline{v}}}\) is found by solving:
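Written out, and assuming the arguments shown (the text specifies only that \(J_\mathrm{D}\) is built from the DFD equation and that \(J_\mathrm{R}\) acts on the velocity field), the minimization would take the form:

```latex
\begin{equation}
\hat{\underline{v}} = \underset{\underline{v}}{\arg\min} \;
J_\mathrm{D}\left(I_0, I_1, \underline{v}\right) + \lambda \, J_\mathrm{R}\left(\underline{v}\right). \tag{6}
\end{equation}
```

A larger \(\lambda\) weights the smoothness constraint more heavily relative to the data term.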

2.2 Wavelet-based optical flow

The present method uses an approach for estimating the velocity field from Eq. 6 using wavelet transforms as first proposed by Wu et al. (2000) and further developed by Dérian et al. (2013). Our method is based on the approach described by Kadri-Harouna et al. (2013) with some key differences as described below. Thus, only a brief description of the general wavelet-based optical flow (WOF) methodology will be included here, with a focus on the distinctions of the current approach. Appendix 1 gives a brief description of wavelet transforms and signal decomposition as well as some of the features specific to WOF-X. The reader is referred to Kadri-Harouna et al. (2013) and Dérian et al. (2013) for a more complete discussion of applications of wavelets to optical flow.

The main idea of WOF is to perform the minimization in Eq. 6, not over the velocity field \(\underline{v}\), but instead over its wavelet transform \(\underline{\psi }\). A key feature of wavelet transforms is their ability to compress regular signals, which means that a sufficiently regular signal can be accurately represented by a significantly reduced number of non-zero wavelet coefficients compared to the number of samples in the signal (Mallat 2009). Wavelet transforms convert a signal (or image in two dimensions) into sets of coefficients at different scales, forming a multiresolution analysis (Mallat 1989). If the image has \(2^F \times 2^F\) pixels, its wavelet transform will have F scales and the finest scale has \(2^{F-1} \times 2^{F-1}\) coefficients, and the next finest has \(2^{F-2} \times 2^{F-2}\), and so on. Compression is commonly achieved in a linear fashion by setting wavelet coefficients above a scale L to zero, where \(L<F\).

WOF is performed by starting at a selected coarsest scale C (often 0) and proceeding to a predetermined finest estimated scale L, subject to \(0 \le C \le L < F\). At each scale S, a velocity field with \(2^S \times 2^S\) nonzero wavelet coefficients is estimated by inverting \(\underline{\psi }\) to find \(\underline{v}\) and evaluating Eq. 6. The result at each scale is then inserted into the next finer scale until the velocity field is estimated at \(S=L\). Since even the finest possible scale, \(S = F-1\), has four times fewer nonzero coefficients than the total number of velocity components in the field and hence half as many coefficients as there are pixels in the images, Eq. 6 is over-constrained and no longer ill-posed.

It is important to examine the effect of the truncation of fine scales on the reconstruction of the velocity field. Due to the properties of wavelets, if the velocity field is spatially well resolved, its spatial variations are smooth and can be well approximated by reconstructions at coarser scales. To demonstrate this, Fig. 1 shows vorticity fields and velocity spectra calculated from truncated wavelet transforms of a velocity field from a DNS of isotropic turbulence with \(F=8\), the details of which are given in Sect. 3. The true vorticity field and velocity spectra determined from the DNS also are shown in Fig. 1 for comparison. It is observed that truncation of the two finest scales does not introduce significant errors, so this velocity field with \(256 \times 256 \times 2 = 131072\) velocity components can be accurately represented by only \(64 \times 64 \times 2 = 8192\) wavelet coefficients.
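The coefficient counts quoted above can be checked with a few lines of arithmetic. The sketch below is illustrative only; the function name `coefficients_per_component` is a hypothetical helper, not part of WOF-X.

```python
def coefficients_per_component(scale):
    """Nonzero wavelet coefficients per velocity component at scale S: 2^S x 2^S."""
    return (2 ** scale) ** 2


F = 8                                   # image is 2^F x 2^F = 256 x 256 pixels
pixels = (2 ** F) ** 2                  # samples per image
components = 2 * pixels                 # two velocity components per pixel

# Finest estimable scale S = F - 1: coefficients for both components
finest = 2 * coefficients_per_component(F - 1)

# Truncating the two finest scales leaves S = F - 2, i.e. 64 x 64 per component
truncated = 2 * coefficients_per_component(F - 2)

print(components, finest, truncated)
```

With \(F=8\), the finest scale retains one quarter of the velocity components (half the pixel count), and truncating the two finest scales compresses the field by a factor of 16.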

Fig. 1 a Vorticity fields from a DNS of isotropic turbulence produced from the truncated velocity fields with \((2^7)^2\), \((2^6)^2\), and \((2^5)^2\) nonzero wavelet coefficients. b One-dimensional velocity spectra for the velocity field and truncated velocity fields at various scales. \(\kappa _1\) is the normalized wavenumber

WOF has several advantages as compared to other optical flow techniques. Because of the multiresolution analysis inherent in the wavelet-based estimation strategy, there is no need to use coarse-to-fine pyramidal schemes to handle large displacements such as the ones often used in the conventional optical flow approaches (Chen et al. 2015; Corpetti et al. 2006; Yuan et al. 2007), as the displacements are estimated at the coarse scales and refined as S increases. Velocity estimates from truncated wavelet transforms also inherently correct for local violations of the DFD equation due to occlusions or other effects and address motion ambiguity caused by the aperture problem (Ullman 1979). This is because the estimated velocity at each pixel is not determined solely by the intensity information at that particular pixel, but is also informed by the velocity estimations at nearby pixels due to the nature of wavelet scaling functions. In some ways, the multiresolution properties of wavelet scaling functions can be thought of in a manner similar to how interpolating functions estimate function values between sampled points (for a discussion on the relation between multiresolution approximation and interpolation, see Sect. 7.6 of Mallat 2009).

A final advantage of WOF compared to the other approaches is that certain properties of wavelet transforms can be exploited to easily penalize the derivatives of the velocity field when computing the regularization term \(J_\mathrm{{R}}\) in Eq. 6. Specific details are given in Kadri-Harouna et al. (2013) and rely on the results derived by Beylkin (1992). In short, the magnitude of the derivatives of \(\underline{v}\) can be attenuated by penalizing the components of its wavelet transform \(\underline{\psi }\). The implementation is simple, efficient, and numerically stable, and, hence, does not require complex schemes such as those used in Yuan et al. (2007) and Chen et al. (2015).

2.3 Wavelet-based optical flow for experimental data

As described above, the wavelet formalism itself addresses several of the difficulties commonly associated with optical flow methods outlined in Sect. 1, namely the handling of large displacements and dealing with non-ideal experimental conditions. The other issues of empirically tuning the regularization parameter \(\lambda\) in Eq. 6, boundary conditions, non-divergence-free motion, and computation time are addressed in this section, where the differences between the current method and that of Kadri-Harouna et al. (2013) are highlighted. For clarity our method will be referred to as WOF-X (wavelet-based optical flow for experimental data), since it is designed specifically to be used in future work to analyze data from experiments.

WOF-X is currently implemented in MATLAB. Similar to the work of Dérian et al. (2013), it minimizes Eq. 6 with the l-BFGS algorithm (Schmidt 2005), which is highly efficient in terms of memory and speed. However, in the current work, the objective function \(J_\mathrm{{D}}\) is not a simple quadratic function as in Kadri-Harouna et al. (2013) and Dérian et al. (2013); instead, a Lorentzian function (Black and Anandan 1996) of the DFD residual in Eq. 2 is used. Thus, \(J_\mathrm{{D}}\) takes the following form:

where \({\Omega }\) represents the image domain and \(\sigma\) is a parameter describing the strength of the penalty function. Results of optimization are not very sensitive to the value of \(\sigma\) and it is set to 2 in the present work. A Lorentzian penalty function is “softer” than a quadratic, meaning that it is less sensitive to outliers and noise, potentially leading to higher accuracy in experiments. WOF-X uses a bi-cubic warping operator to create the motion-compensated image in the DFD equation \([I_1(\underline{x}+\underline{v}(\underline{x}))]\) that takes advantage of MATLAB’s efficient architecture for interpolation of gridded data. The gradient of \(J_\mathrm{{D}}\) with respect to \(\underline{\psi }\) must be supplied to the l-BFGS minimization algorithm. It is determined from the wavelet transform of the gradient of \(J_\mathrm{{D}}\) with respect to \(\underline{v}\left( \underline{x} \right)\) using the calculus of variations:
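Assuming the standard Lorentzian of Black and Anandan (1996) applied to the DFD residual, one consistent way to write the data term and its pointwise gradient is sketched below. The auxiliary symbol \(r\), the image labels \(I_0\) and \(I_1\), and the exact normalization inside the logarithm are assumptions rather than details taken from the source.

```latex
\begin{align}
r\left(\underline{x}\right) &= I_0\left(\underline{x}\right)
  - I_1\left(\underline{x} + \underline{v}\left(\underline{x}\right)\right), \\
J_\mathrm{D} &= \int_{\Omega} \log\left( 1 + \frac{r\left(\underline{x}\right)^2}{2\sigma^2} \right)
  \mathrm{d}\underline{x}, \tag{7} \\
\frac{\delta J_\mathrm{D}}{\delta \underline{v}\left(\underline{x}\right)} &=
  - \frac{2\, r\left(\underline{x}\right)}{2\sigma^2 + r\left(\underline{x}\right)^2}\,
  \underline{\nabla} I_1\left(\underline{x} + \underline{v}\left(\underline{x}\right)\right).
\end{align}
```

The last line follows from the chain rule, since \(\partial r / \partial \underline{v} = -\underline{\nabla} I_1\). As the text describes, the gradient with respect to \(\underline{\psi }\) is then obtained by applying the wavelet transform to this field. The weight \(2r/(2\sigma^2 + r^2)\) decays for large residuals, which is the sense in which the Lorentzian is “softer” than a quadratic.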

The issues of computational burden and boundary conditions are both addressed with the use of an alternative wavelet function. Previous works have used orthogonal Daubechies wavelets or Coiflets which inherently produce periodic velocity fields and are implemented with circular convolutions. The current WOF-X method uses biorthogonal 9–7 wavelets. Biorthogonal 9–7 wavelets are popular in image compression and are used in the JPEG-2000 standard (Taubman and Marcellin 2002) because their inverse transforms produce smooth functions and the wavelets are symmetric, which allows them to be implemented with symmetric (i.e., zero-gradient) boundary conditions. Furthermore, they can be implemented with the efficient lifting transform (Daubechies and Sweldens 1998) which is significantly faster than convolution. The total speed improvement using the combination of biorthogonal 9–7 wavelets and the lifting transform is about a factor of 2.5 compared to that in Kadri-Harouna et al. (2013). While WOF-X currently is still slower than a correlation-based program such as DaVis, Dérian’s wavelet-based optical flow algorithm, named Typhoon, has been implemented on NVIDIA’s CUDA platform, so that parallel processing can be performed on GPUs, yielding a speedup of a factor of 10–100 (Dérian et al. 2015). In principle, a similar implementation could be done for WOF-X if processing time compared to correlation methods becomes a significant obstacle, as could be the case for large images (\(>1024 \times 1024\) pixels) or three-dimensional measurements. Currently, for smaller \(256 \times 256\) pixel images, WOF-X is approximately five times slower than LaVision’s DaVis 8. However, this is primarily a function of the fact that WOF-X yields more than an order-of-magnitude more velocity vectors than correlation-based methods.
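To illustrate how a lifting transform with symmetric boundaries works, the following is a minimal one-dimensional sketch and not the WOF-X implementation. It assumes the commonly quoted lifting coefficients for the 9–7 wavelet from the Daubechies and Sweldens factorization, an even-length signal, and one particular scaling convention (conventions differ between references). The function names are hypothetical.

```python
# Commonly quoted 9-7 lifting coefficients (assumed values and normalization)
ALPHA, BETA = -1.586134342, -0.05298011854
GAMMA, DELTA = 0.8829110762, 0.4435068522
K = 1.149604398


def _predict(s, d, c):
    """d[i] += c * (s[i] + s[i+1]); the right edge mirrors s (symmetric extension)."""
    for i in range(len(d)):
        right = s[i + 1] if i + 1 < len(s) else s[i]
        d[i] += c * (s[i] + right)


def _update(s, d, c):
    """s[i] += c * (d[i-1] + d[i]); the left edge mirrors d (symmetric extension)."""
    for i in range(len(s)):
        left = d[i - 1] if i > 0 else d[0]
        s[i] += c * (left + d[i])


def forward_97(x):
    """One level of the forward transform: returns (approximation, detail)."""
    if len(x) < 2 or len(x) % 2:
        raise ValueError("signal length must be even and at least 2")
    s = [float(v) for v in x[0::2]]   # even samples
    d = [float(v) for v in x[1::2]]   # odd samples
    _predict(s, d, ALPHA)
    _update(s, d, BETA)
    _predict(s, d, GAMMA)
    _update(s, d, DELTA)
    return [v * K for v in s], [v / K for v in d]


def inverse_97(approx, detail):
    """Undo forward_97 by running the lifting steps in reverse with opposite signs."""
    s = [v / K for v in approx]
    d = [v * K for v in detail]
    _update(s, d, -DELTA)
    _predict(s, d, -GAMMA)
    _update(s, d, -BETA)
    _predict(s, d, -ALPHA)
    x = [0.0] * (2 * len(s))
    x[0::2] = s
    x[1::2] = d
    return x
```

Each lifting step modifies one half of the samples using only the other half, so the inverse is obtained by reversing the steps, and the signal is recovered to floating-point precision regardless of the boundary rule. A constant signal produces detail coefficients that are numerically zero, including at the edges, which reflects the zero-gradient boundary behavior mentioned above.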

The regularization term \(J_\mathrm{{R}}\) in Eq. 6 performs third-order smoothing of the velocity field; that is, it penalizes the magnitude of the third derivative of both components of velocity, unlike the second-order smoothing in Kadri-Harouna et al. (2013). This is computed in the wavelet domain using the operator discrete approximation method described in Kadri-Harouna et al. (2013). The expression for \(J_\mathrm{{R}}\) is given in Appendix 1. The third derivatives penalized do not include cross derivatives (e.g., \(\frac{\partial ^3}{\partial x^2 \partial y}\)) because the two-dimensional wavelet transforms are constructed from two one-dimensional transforms. The penalty function used for \(J_\mathrm{{R}}\) is a simple quadratic. Unlike common regularization schemes such as those first presented by Corpetti et al. (2006) and Horn and Schunck (1981), this regularization method does not penalize the divergence or curl of the flow field. Penalization of the divergence or curl leads to “physical smoothing” of the velocity gradient fields and a significant underestimation of their magnitudes. Alternatively, the penalization of the third derivative of velocity (“jounce”) enforces smoothness in the velocity field as well as its first two derivatives without attenuating the velocity gradient magnitudes. This is very important in determining derivative-based kinematic properties such as strain rate and vorticity accurately. Performing this penalization in the wavelet domain is simple and efficient, and does not require the complex schemes needed for direct high-order derivative penalization (Yuan et al. 2007; Chen et al. 2015). It, therefore, represents a physically sound regularization term for fluid flows that only marginally increases the computational complexity of the optical flow problem.

Perhaps the most significant improvement in WOF-X compared to other optical flow methods for application to experimental data is the ability to compute the regularization coefficient \(\lambda\) with minimal empiricism. It is similar in principle to the methods of Héas et al. (2013) and Beyou et al. (2013) in that it uses properties of the estimated velocity field to tune the regularization term, but, unlike these methods, it exploits the known physical behavior of velocity spectra instead of the statistics of the velocity field from one time instance to the next. The small amount of user input involved in estimating \(\lambda\) in the proposed method makes the approach more versatile and potentially more accurate than the simpler parameter-free method of Cai et al. (2018) that is based on an a priori scaling argument. First, it is observed that for sufficiently high regularization, the results of any WOF method are identical for several values of the highest estimated scale L. Therefore, the finest estimated scale is always set to \(L=F-1\) and the effect of changing L does not need to be considered as in Dérian et al. (2013). It is also observed that the same value for \(\lambda\) produces similar results for all image pairs in a given data set acquired from the same flow, so \(\lambda\) can be optimized for a single image pair and then applied to the entire data set.

The underlying principle in determining \(\lambda\) in the current work is to use the properties of the turbulence spectra of fluid flows (Pope 2001). The method presented here should be valid for the spectrum of either component of velocity along either spatial direction, but it is recommended to use \(E_{11} \left( \kappa _1 \right)\) (see Sect. 6.5 of Pope 2001) where \(E_{11} \left( \kappa _1 \right)\) represents the energy spectrum in the principal flow direction. The principal direction is taken as the one that affords the largest number of pixels for the Fourier transform when computing the spectrum. This strategy only requires that the spectrum shows a net transfer of energy from larger scales to smaller scales over the majority of wavenumber space, and, therefore, is applicable to a wide range of flows.

It is first recognized that increasing \(\lambda\) has the effect of removing energy from the flow, first at higher wavenumbers and finally at lower ones, as shown in Fig. 2a. Note that the wavenumber \(\kappa _1\) is a normalized wavenumber. It is also noted from Fig. 2a that, for sufficiently small values of \(\lambda\), the regularized spectra and the un-regularized (\(\lambda =0\)) spectrum coincide at low wavenumbers. Finally, it is observed that the slope of the \(\lambda =0\) spectrum (in log–log space) increases at some wavenumber \(\kappa _1\), beyond which the optical flow algorithm is artificially adding energy to the flow at fine scales to more precisely minimize the DFD equation (cf. Dérian et al. 2013). This spectral feature is not physical, as kinetic energy in fluid flows is primarily dissipative, and thus, the energy cascade should be reflected in a spectrum that has a constant or decreasing slope as the wavenumber increases.

Fig. 2 a Spectra for the same image pair with increasing value of \(\lambda\). \(\lambda =0\) is shown in black. \(\kappa _1\) is a normalized wavenumber. b Spectra with the location of the slope deviation point, the linear fitting point (referred to in the text as the objective start point), and the objective end point. A line is fit to the \(\lambda =0\) spectrum between the deviation point and the linear fitting point, which is projected forward in wavenumber space until the objective end point. The spectrum is matched to this line by a heuristic algorithm. c The spectrum for \(\lambda =0\) and the spectrum after optimization

To address this facet, the current algorithm first locates the point where the slope of the \(\lambda =0\) spectrum begins to deviate in the positive direction; it is marked in Fig. 2b with a red circle. Subsequently, the spectrum is smoothed with a moving average filter to mitigate noise. The user then selects a point on the spectrum at a lower wavenumber than the deviation point, such that the spectrum is approximately linear between the selected point and the deviation point. This location is referred to as the “objective start point” and is denoted in Fig. 2b. A line is fit to the spectrum between the objective start point and the slope deviation point and projected forward in wavenumber to a point specified by the user (denoted as the “objective end point”). This “objective line” and the objective end point also are shown in Fig. 2b. Once the objective line is computed, a heuristic algorithm is used to find the value of \(\lambda\) that produces a spectrum with minimal error along this line. That value of \(\lambda\) is taken to be the optimum and is shown in Fig. 2c. The precision of the heuristic algorithm increases with each iteration, but it is found that a sufficiently precise value for \(\lambda\) is produced after 14 executions of the WOF-X algorithm. This routine for optimizing \(\lambda\) has been successfully tested on numerous synthetic data sets as well as some preliminary experimental data that are not presented in the current manuscript. These data sets include turbulent flows with widely varying Reynolds numbers, as well as some laminar flows.
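The tuning procedure can be sketched in a few lines. The example below is a toy under stated assumptions, not the authors' routine: the source does not specify its heuristic algorithm, so a golden-section search over \(\log_{10}\lambda\) with 14 evaluations stands in for it, and `toy_spectrum` is a hypothetical model in which \(\lambda=0\) shows an artificial upturn at high wavenumber and regularization attenuates energy as \(1/(1+\lambda\kappa^6)\). The indices of the objective start point, slope deviation point, and objective end point are supplied by hand.

```python
import math


def fit_loglog_line(k, e, i0, i1):
    """Least-squares line log(e) = a*log(k) + b over indices i0..i1 inclusive."""
    xs = [math.log(k[i]) for i in range(i0, i1 + 1)]
    ys = [math.log(e[i]) for i in range(i0, i1 + 1)]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return a, my - a * mx


def line_error(k, e, a, b, i0, i1):
    """Mean squared log-distance between a spectrum and the objective line."""
    total = sum((math.log(e[i]) - (a * math.log(k[i]) + b)) ** 2 for i in range(i0, i1 + 1))
    return total / (i1 - i0 + 1)


def tune_lambda(spectrum, k, a, b, i0, i1, lo=-12.0, hi=0.0, evals=14):
    """Golden-section search on log10(lambda); each evaluation mimics one solver run."""
    phi = (math.sqrt(5.0) - 1.0) / 2.0
    cost = lambda t: line_error(k, spectrum(10.0 ** t), a, b, i0, i1)
    x1, x2 = hi - phi * (hi - lo), lo + phi * (hi - lo)
    f1, f2 = cost(x1), cost(x2)
    for _ in range(evals - 2):
        if f1 < f2:                      # minimum lies in [lo, x2]
            hi, x2, f2 = x2, x1, f1
            x1 = hi - phi * (hi - lo)
            f1 = cost(x1)
        else:                            # minimum lies in [x1, hi]
            lo, x1, f1 = x1, x2, f2
            x2 = lo + phi * (hi - lo)
            f2 = cost(x2)
    return 10.0 ** (x1 if f1 < f2 else x2)


# Hypothetical toy model standing in for "run the solver and compute the spectrum"
k = [float(i) for i in range(1, 65)]


def toy_spectrum(lam):
    return [ki ** -3 * (1.0 + (ki / 16.0) ** 4) / (1.0 + lam * ki ** 6) for ki in k]


START, DEVIATION, END = 3, 7, 31         # indices of k = 4, 8, 32 (chosen by hand)
slope, intercept = fit_loglog_line(k, toy_spectrum(0.0), START, DEVIATION)
lam_opt = tune_lambda(toy_spectrum, k, slope, intercept, START, END)


def err_at(lam):
    return line_error(k, toy_spectrum(lam), slope, intercept, START, END)


print(slope, lam_opt, err_at(lam_opt))
```

In practice each call to `spectrum` would require a full optical flow estimate, which is why the number of evaluations matters. Searching in \(\log_{10}\lambda\) is a design choice made here because plausible values span many orders of magnitude.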

To summarize this section, a step-by-step description of the operation of the WOF-X algorithm at a given scale S is given below. In this example, the images are square with \(2^F \times 2^F\) pixels. Starting at a coarse scale \(S=C\) and proceeding to a finer scale \(S=L\), the following steps are performed for each value of S.

1. The wavelet transform of the velocity field from the previous (coarser) scale \(\underline{\psi }_{S-1}\) is used to initialize the l-BFGS minimization algorithm.

2. An estimate of the velocity field \(\underline{v}\) is found by performing an inverse wavelet transform of the transformed velocity field \(\underline{\psi }\). The transform in WOF-X is performed using biorthogonal 9–7 wavelets and a lifting transform, unlike in previous wavelet-based optical flow methods (Kadri-Harouna et al. 2013; Dérian et al. 2013).

3. Both the penalty function of the regularization term \(J_\mathrm{{R}}\) and its gradient \(J_\mathrm{{R}}^\prime\) are computed from the velocity field as described in Eqs. 13 and 14 in Appendix 1.

4. The motion-compensated image \(I_1 \left( \underline{x} + \underline{v} \left( \underline{x} \right) \right)\) in Eq. 2 is computed using bi-cubic spline interpolation with not-a-knot end conditions.

5. The objective function to be minimized in Eq. 6 is evaluated using the penalty function of the data term \(J_\mathrm{{D}}\) (Eq. 7) and the regularization term \(J_\mathrm{{R}}\), both of which depend on the estimate of the velocity field \(\underline{v}\) found in step 2 from \(\underline{\psi }\).

6. The gradient of the motion-compensated image is computed with centered finite differences, which is then used to compute the gradient of the data term in the objective function \(J_\mathrm{{D}}^\prime\) (Eq. 8).

7. The gradient of the objective function formed from \(J_\mathrm{{D}}^\prime\) and \(J_\mathrm{{R}}^\prime\) is transformed into the wavelet domain by a forward wavelet transform.

8. The objective function and its gradient (steps 5 and 7) are used by the l-BFGS minimization algorithm to obtain a new estimate for \(\underline{\psi }\) at scale S and steps 2 through 7 are repeated until the objective function in step 5 is minimized.

When the algorithm converges to a final result at scale \(S=L\), the resulting estimate \(\underline{\psi }_L\) is transformed with an inverse wavelet transform to the physical domain to obtain the final estimate \({\hat{\underline{v}}}\). Two wavelet transforms are required at each iteration of the l-BFGS algorithm: an inverse transform in step 2 to find \(\underline{v}\) from \(\underline{\psi }\), and a forward transform of the gradient of the objective function in step 7. The improvements implemented in WOF-X compared to the wavelet-based optical flow method in Kadri-Harouna et al. (2013) include the use of the Lorentzian penalty function, biorthogonal 9-7 wavelets with symmetric boundary conditions, the choice of third-order smoothing instead of second-order, and the semi-automatic tuning procedure to find the weighting coefficient \(\lambda\) for \(J_\mathrm{{R}}\).
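As an illustration of the lifting form of the biorthogonal 9–7 transform with symmetric boundary extension, the following one-level, one-dimensional sketch uses the lifting factorization of Daubechies and Sweldens (1998). It is not the WOF-X code: the function names are hypothetical, the scaling convention is one of several in use, and a two-dimensional transform would apply the same steps along rows and then along columns.

```python
import numpy as np

# Lifting coefficients of the CDF 9/7 factorization (Daubechies and Sweldens 1998)
ALPHA, BETA, GAMMA, DELTA = -1.586134342, -0.05298011854, 0.8829110762, 0.4435068522
ZETA = 1.149604398  # scaling factor; conventions differ between implementations

def _predict(s, d, c):
    # d[i] += c * (s[i] + s[i+1]); the right edge is mirrored (s[n] -> s[n-1])
    s_next = np.append(s[1:], s[-1])
    return d + c * (s + s_next)

def _update(s, d, c):
    # s[i] += c * (d[i-1] + d[i]); the left edge is mirrored (d[-1] -> d[0])
    d_prev = np.insert(d[:-1], 0, d[0])
    return s + c * (d_prev + d)

def forward_97(x):
    """One level of the forward transform for an even-length 1D signal.
    Returns (approximation, detail) coefficients."""
    x = np.asarray(x, dtype=float)
    s, d = x[0::2].copy(), x[1::2].copy()
    d = _predict(s, d, ALPHA)
    s = _update(s, d, BETA)
    d = _predict(s, d, GAMMA)
    s = _update(s, d, DELTA)
    return s * ZETA, d / ZETA

def inverse_97(s, d):
    """Invert forward_97 by undoing each lifting step in reverse order."""
    s, d = s / ZETA, d * ZETA
    s = _update(s, d, -DELTA)
    d = _predict(s, d, -GAMMA)
    s = _update(s, d, -BETA)
    d = _predict(s, d, -ALPHA)
    x = np.empty(s.size + d.size)
    x[0::2], x[1::2] = s, d
    return x

x = np.sin(np.linspace(0.0, 3.0, 16)) + 0.1 * np.arange(16)
s, d = forward_97(x)
x_rec = inverse_97(s, d)  # reproduces x up to round-off
```

Because every lifting step is inverted exactly by subtracting what was added, the reconstruction is exact regardless of how the boundaries are extended; the symmetric extension only determines how the coefficients near the edges are formed.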

3 Validation on synthetic data

WOF-X was first validated on a set of synthetic data. The velocity fields and particle images were generated from a DNS of isotropic turbulence with periodic boundary conditions and Reynolds number 3000 by Carlier and Wieneke (2005) that has been used to evaluate several optical flow algorithms. A sample particle image and vorticity field are shown in Fig. 3. Each image is \(256 \times 256\) pixels, with 100 images in the sequence. Using synthetic data does not address certain complexities of experimental data including 3D effects, noise, and nonuniform illumination. However, these issues are intentionally avoided in the current manuscript to assess the accuracy of the method in an ideal environment where a ground truth velocity is known. The sensitivity of WOF-X to other factors present in experimental data will be addressed in subsequent work in a systematic way.

a A sample particle image from the DNS of Carlier and Wieneke (2005) used for validation of the WOF-X method. b Sample vorticity field derived from an instantaneous velocity field

The estimated velocity from the WOF-X method is compared against the true velocity from the DNS, as well as velocity estimations using a cross-correlation-based PIV algorithm (DaVis, LaVision Inc.), the Typhoon WOF algorithm by Kadri-Harouna et al. (2013), Dérian et al. (2015), and the DenseMotion optical flow algorithm by Corpetti et al. (2002), Corpetti et al. (2006). The optimal value for \(\lambda\) was found according to the procedure described in Sect. 2.3 and was determined to be \(1.433 \times 10^{-13}\) for this data set. Results from DaVis were produced by preprocessing the images with a sliding background filter and then performing two passes each at interrogation windows of \(96 \times 96\) pixels and \(16 \times 16\) pixels with 75% overlap. The correlation algorithm uses a multigrid approach with image deformation using sixth-order B-splines and Gaussian weighting of the interrogation windows. The Typhoon algorithm used the second-order regularization with a value for the regularization parameter of 0.04, which produced minimal error. The DenseMotion algorithm contains several user-specified parameters, which were combined to produce minimal error in the estimation.

Velocity estimation errors are computed using root-mean-square endpoint error (RMSE) and average angular error (AAE):
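In their conventional forms, assumed here to correspond to Eqs. 9 and 10, these metrics are written as follows. The exact form of the angular error is an assumption, since other variants are used in the optical flow literature.

\[ \text {RMSE} = \sqrt{\frac{1}{N} \sum _{i=1}^{N} \left| \underline{v}_{\text {ref},i} - {\hat{\underline{v}}}_i \right| ^2} \]

\[ \text {AAE} = \frac{1}{N} \sum _{i=1}^{N} \arccos \left( \frac{\underline{v}_{\text {ref},i} \cdot {\hat{\underline{v}}}_i}{\left| \underline{v}_{\text {ref},i} \right| \left| {\hat{\underline{v}}}_i \right| } \right) \]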

In Eqs. 9 and 10, N is the number of velocity vectors, \(\underline{v}_\text {ref}\) is the true (reference) velocity, and \({\hat{\underline{v}}}\) is the estimated velocity. To mitigate boundary effects, the velocities at the boundaries are ignored when computing the errors. The vectors closest to the boundaries are ignored in the correlation results, and the corresponding eight closest vectors to the boundaries are ignored in the optical flow results, since the optical flow estimations are \(8 \times 8\) times more dense than the correlation estimation.

Figure 4 compares the RMSE and AAE for the examined velocimetry methods. The results show that the optical flow methods outperform the correlation-based approach. The RMSE and AAE for the WOF-X method are approximately the same as those for the Typhoon algorithm, both of which are more accurate than DenseMotion. This demonstrates the high accuracy of wavelet-based optical flow approaches. It should be noted that although points on the boundaries are ignored when computing the error metrics, the boundary conditions in the WOF methods still influence the overall error because nearby points in the domain influence one another, as explained in Sect. 2.2. Recall that the Typhoon algorithm uses periodic boundary conditions. Since the DNS also has periodic boundary conditions, this leads to a small amount of additional accuracy when using the Typhoon algorithm for this particular case. However, it should be noted that actual experimental data do not have periodic boundary conditions. A comparison between results using the current WOF-X and the Typhoon algorithm for data without periodic boundary conditions is performed in Sect. 4.2.

The advantages of the WOF-X method compared to a correlation-based PIV approach are further illustrated by examining results from a single image pair in the sequence. Figure 5 shows slices of the horizontal component of velocity taken along four horizontal cuts through the domain. The locations where the correlation-based approach (analyzed with the DaVis software) produces a velocity vector are marked, clearly illustrating the advantage of dense estimations. Using WOF-X, velocity vectors are produced at every pixel as opposed to one vector per interrogation window. The generation of a single velocity vector per interrogation window (when using correlation) produces a lower-resolution result which tends to smear out sharp gradients in the velocity field, which is observed in Fig. 5. In contrast, the WOF-X algorithm accurately reproduces rapid fluctuations in the velocity field, thus accurately preserving velocity gradients. Figure 6 shows calculated probability density functions (PDFs) of the error for both scalar components of velocity using the WOF-X method and correlation-based methods. The PDFs are generated using the data from the entire image sequence from the DNS database and the error is calculated as \(\epsilon _{v_i} = {\hat{v}}_i - v_{i,{\mathrm {ref}}}\). The PDFs demonstrate the increased accuracy of WOF-X compared to correlation-based methods, both in terms of overall accuracy (i.e., an increased number of vectors with low error) and fewer outliers with high error magnitude. Gaussian fits to the calculated PDFs are also shown in Fig. 6, where the error distributions for both methods are approximately Gaussian over about 2 decades of values beyond which both methods exhibit some high-magnitude error deviations from a Gaussian distribution. Note that the asymmetry in the PDFs for errors with large magnitudes is likely an artifact of the specific flow being studied. 
In addition, there are relatively few velocity samples with large magnitudes, and thus the statistics are not converged for these values. The results of Figs. 4, 5, and 6 show that the WOF-X approach produces both higher accuracy and higher resolution than the correlation-based approach.

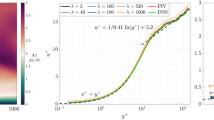

Figure 7 shows one-dimensional turbulence energy spectra calculated from the velocity results determined using both correlation and the WOF-X approach. In addition, the one-dimensional energy spectrum from the “true” velocity from the DNS also is shown for comparison. Because of its lower spatial resolution, the correlation-based result only can estimate the spectrum in the lower two-thirds of the wavenumber space. However, the energy spectrum determined using the WOF-X method matches the true spectrum very closely, even at high wavenumbers. This is a result of proper determination of the regularization parameter \(\lambda\).

The higher resolution and accuracy of the velocity estimation produced by WOF-X mean that derivative quantities such as vorticity are calculated more accurately than with correlation-based approaches. Figure 8 shows an example set of instantaneous vorticity fields. The leftmost image is the vorticity field calculated from the 2D DNS of isotropic turbulence, which is considered the “truth.” The center image is determined from the velocity field estimated with WOF-X, while the rightmost image is calculated from the velocity field using the correlation-based PIV algorithm. While the correlation-based approach captures the larger features in the vorticity field, it does not have the spatial resolution required to reproduce the details of the true vorticity field. The WOF-X method clearly shows a significant improvement in the estimation of the vorticity field. In fact, the vorticity field estimated with the WOF-X method captures the majority of the true topology of the vorticity field, including the complex small-scale structure. This point is further demonstrated in Fig. 9, which shows horizontal slices through the vorticity field. The correlation-based results clearly show a low-pass-filtered version of the vorticity field, while there are minimal differences between the true vorticity profiles and the WOF-X estimations.

Sample vorticity profiles extracted from the 2D vorticity images in Fig. 8

4 Determination of experimental design parameters

Because of the maturity of the correlation-based PIV methodology, design parameters for PIV experiments are well known. Two key parameters are the seeding or “image” density and the maximum in-plane particle displacement in the interval between successive frames. According to Keane and Adrian (1990), each interrogation box should contain at least 12 particles, which corresponds to approximately 0.05 particles per pixel (ppp) for a \(16 \times 16\) pixel interrogation window, and the maximum displacement should be less than or equal to one quarter of the size of the interrogation window. Correlation-based algorithms are more accurate for smaller displacements, but this guideline is intended to maximize the method’s dynamic range.
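The arithmetic behind these guidelines is simple enough to check directly. The helper names below are hypothetical; the numbers are those quoted from Keane and Adrian (1990).

```python
def min_seeding_density(n_particles, window):
    """Seeding density (ppp) needed for n_particles per square window."""
    return n_particles / (window * window)

def particles_per_window(ppp, window):
    """Expected number of particle images in a square interrogation window."""
    return ppp * window * window

def max_displacement(window, fraction=0.25):
    """One-quarter rule: largest recommended in-plane displacement (pixels)."""
    return fraction * window

print(min_seeding_density(12, 16))     # 0.046875 ppp, i.e. roughly 0.05 ppp
print(particles_per_window(0.05, 16))  # about 12.8 particles per window
print(max_displacement(16))            # 4.0 pixels
```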

Similar quantitative guidelines for experiments do not yet exist for optical flow approaches. Establishing these guidelines is an important step towards freely using optical flow methods for evaluation of experimental data. Qualitatively, it has been suggested that the seeding density used for optical flow might be higher than for correlation and that the inter-frame displacement should be kept small (Liu and Shen 2008); however, these suggestions have not been rigorously tested, nor have they been considered in the context of wavelet-based optical flow.

4.1 Vortex flow simulation

A set of flow simulations with synthetic particle tracers were performed to determine optimal experimental design parameters for the WOF-X method. A suitable flow should meet several requirements. First, the time step between frames (and, therefore, the fluid displacement) should be variable without changing other features of the flow, so that results with different displacements can be reliably compared. Second, the particle image density from synthetic images must be variable, again without changing any flow features. Third, the flow should ideally have an exact expression for velocity at any spatial position and not just at the explicit grid points, such that particles can be transported accurately and an error between the estimated and true velocities can be determined without bias. Finally, the flow should be nontrivial; that is, it should be temporally evolving and have complex spatial topography which includes large spatial variations in velocity.

The selected flow field is a set of 32 non-temporally decaying Lamb–Oseen vortices of random size, strength, location, and sign. The vortices are permitted to move under one another’s influence, creating a temporally evolving flow with rapidly spatially varying characteristics. The induced velocity of a single Lamb–Oseen vortex is given by the following:
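In the standard parameterization of the Lamb–Oseen vortex in terms of its peak velocity, assumed here to be the intended form, the tangential velocity at a radius \(r\) from the vortex center is

\[ V_\theta (r) = V_{\theta ,\text {max}} \left( 1 + \frac{1}{2\alpha } \right) \frac{r_\text {max}}{r} \left[ 1 - \exp \left( -\alpha \frac{r^2}{r_\text {max}^2} \right) \right] , \]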

where \(V_{\theta ,\text {max}}\) is the maximum velocity produced by the vortex at a radius of \(r_\text {max}\) and \(\alpha\) is a parameter approximately equal to 1.25643 that relates \(r_\text {max}\) to the circulation contained in the vortex. The expression is analytic, so the velocity produced by a set of Lamb–Oseen vortices can be determined at any location in the domain without approximation by summing the velocity induced by each vortex at that location.
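The superposition described above can be sketched as follows. This is a minimal illustration that assumes the standard peak-velocity parameterization of the Lamb–Oseen profile; the tuple layout for a vortex and the sign convention (positive \(V_{\theta ,\text {max}}\) meaning counterclockwise rotation) are hypothetical choices.

```python
import math

ALPHA = 1.25643  # value quoted in the text

def v_theta(r, v_max, r_max):
    """Tangential velocity of one Lamb-Oseen vortex (standard form, assumed)."""
    if r == 0.0:
        return 0.0  # the velocity vanishes at the vortex center
    decay = -math.expm1(-ALPHA * (r / r_max) ** 2)  # 1 - exp(-alpha r^2 / r_max^2)
    return v_max * (1.0 + 0.5 / ALPHA) * (r_max / r) * decay

def induced_velocity(x, y, vortices):
    """Sum the Cartesian velocity induced at (x, y) by all vortices.
    Each vortex is a tuple (xc, yc, v_max, r_max)."""
    u = v = 0.0
    for xc, yc, v_max, r_max in vortices:
        dx, dy = x - xc, y - yc
        r = math.hypot(dx, dy)
        if r == 0.0:
            continue  # a vortex induces no velocity at its own center
        vt = v_theta(r, v_max, r_max)
        u += -vt * dy / r
        v += vt * dx / r
    return u, v

# A single counterclockwise vortex at the origin, evaluated at r = r_max:
# the velocity is purely in +y and equal to v_max.
u, v = induced_velocity(10.0, 0.0, [(0.0, 0.0, 0.01, 10.0)])
```

Because the expression is evaluated directly at the requested coordinates, the same function serves both for advecting the vortices and tracer particles and for generating the reference velocity at every pixel.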

The computational domain for the flow is a \(360 \times 360\)-pixel region, where only the central \(256 \times 256\) pixels are used when producing images for velocimetry calculations. This creates realistic boundary conditions for the central image domain. The time step of the computation is taken to be unity for simplicity. Values for \(V_{\theta ,\text {max}}\) are randomly sampled from a uniform distribution on \([-11.25,-8.75] \times 10^{-3} \ \cup \ [8.75,11.25] \times 10^{-3}\). These values for \(V_{\theta ,\text {max}}\) ensure that the vortices are all of comparable strength, so that the flow field is not dominated by one or two strong vortices. In addition, they set the maximum Courant number to approximately 0.025 when accounting for the interaction of two strong vortices. This Courant number is sufficiently small for convergence of the tracer particle locations. The simulation is run for 32,000 time steps by time-marching the positions of the vortices using a fourth-order Adams–Bashforth–Moulton (ABM4) scheme.
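The time-marching scheme named above can be illustrated with a generic fourth-order Adams–Bashforth–Moulton integrator in predict-evaluate-correct-evaluate form. This is a sketch rather than the authors' code: the start-up procedure (three classical Runge–Kutta steps) is an assumption, and the function works for any state that supports addition and scalar multiplication, such as a float or a NumPy array of vortex positions.

```python
import math

def abm4(f, t0, y0, h, n_steps):
    """Integrate dy/dt = f(t, y) with a fourth-order Adams-Bashforth predictor
    and Adams-Moulton corrector. The first three steps use classical RK4 to
    build the derivative history (assumed start-up choice)."""
    def rk4(t, y):
        k1 = f(t, y)
        k2 = f(t + h / 2, y + h / 2 * k1)
        k3 = f(t + h / 2, y + h / 2 * k2)
        k4 = f(t + h, y + h * k3)
        return y + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)

    t, y = t0, y0
    hist = [f(t, y)]  # derivative history, newest last
    for _ in range(n_steps):
        if len(hist) < 4:
            y_new = rk4(t, y)
        else:
            f0, f1, f2, f3 = hist[-1], hist[-2], hist[-3], hist[-4]
            # Adams-Bashforth predictor
            y_pred = y + h / 24 * (55 * f0 - 59 * f1 + 37 * f2 - 9 * f3)
            # Adams-Moulton corrector using the predicted derivative
            f_pred = f(t + h, y_pred)
            y_new = y + h / 24 * (9 * f_pred + 19 * f0 - 5 * f1 + f2)
        t, y = t + h, y_new
        hist = (hist + [f(t, y)])[-4:]
    return y

# Check on dy/dt = -y, y(0) = 1, whose exact solution is exp(-t).
y_end = abm4(lambda t, y: -y, 0.0, 1.0, 0.01, 100)
print(abs(y_end - math.exp(-1.0)))  # small fourth-order truncation error
```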

Tracer particles are treated as sizeless, inertialess Lagrangian trackers. 51,840 particles are initialized at \(t=0\) at random locations in the \(360 \times 360\)-pixel domain (particle density \(=0.4\) ppp). These particles are grouped into sets of 1296 (particle density \(=0.01\) ppp) which allows a parametric evaluation of the effect of particle density. The particles are convected by the velocity field using the same ABM4 scheme used to solve the motion of the vortices. Particle positions are treated as periodic with respect to the boundaries of the \(360 \times 360\)-pixel computational domain, so that no new particles are generated during the course of the simulation.

Synthetic particle images are created from the particle locations as described in Appendix 1. Each particle is assigned a diameter and center brightness along with its location. The diameter and center brightness values for a given particle are constant in time. Because the particles are subdivided into groups of 1296, images at the same time instance with varying particle density are created by considering only a subset of particle groups. In this way, images with particle densities between 0.01 and 0.4 ppp are created for each sequence for the exact same flow.
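The grouping strategy can be sketched as follows. The constants come from the text (a \(360 \times 360\)-pixel domain and groups of 1296 particles, so 40 groups give 51,840 particles); taking the first k groups for a given density is an assumption, since the text only states that a subset of groups is used, and the function names are hypothetical.

```python
import random

DOMAIN = 360       # pixels per side of the computational domain
GROUP_SIZE = 1296  # particles per group (0.01 ppp over the domain)
N_GROUPS = 40      # 40 groups -> 51,840 particles (0.4 ppp)

def make_particle_groups(seed=0):
    """Return N_GROUPS lists of (x, y) positions drawn uniformly in the domain."""
    rng = random.Random(seed)
    return [[(rng.uniform(0, DOMAIN), rng.uniform(0, DOMAIN))
             for _ in range(GROUP_SIZE)] for _ in range(N_GROUPS)]

def particles_for_density(groups, ppp):
    """Concatenate the first k groups so that the density is k * 0.01 ppp."""
    k = round(ppp * DOMAIN * DOMAIN / GROUP_SIZE)
    if not 1 <= k <= len(groups):
        raise ValueError("density must lie between 0.01 and 0.4 ppp")
    return [p for group in groups[:k] for p in group]

groups = make_particle_groups()
subset = particles_for_density(groups, 0.1)  # 10 groups, 12,960 particles
```

Since every density level reuses the same particle trajectories, differences between results at two densities reflect only the number of particles and not a different realization of the flow.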

To produce data sets with larger displacements than the one determined by the temporal resolution of the simulation, a specified number of time steps are skipped when creating particle images. Sets of 51 images and velocity fields (50 image pairs) are created from the complete 32,000-image sequence, with various values of the number of time steps skipped, ranging from 0 to 640. Each set begins at \(t=0\), which is the first time step in the complete flow simulation. Therefore, velocimetry algorithms can be evaluated on image sequences of the same flow, but with different values for the maximum particle displacement and particle density, both of which can be varied independently. A sample synthetic particle image with particle density of 0.1 ppp and the corresponding velocity vector field are shown in Fig. 10. Figure 11 shows example velocity slices extracted from the domain for the same velocity field. Similar to the isotropic turbulence DNS results shown in Sect. 3, the WOF-X method produces higher resolution as compared to the correlation-based PIV analysis. However, the correlation-based PIV and WOF-X results appear to show similar accuracy based on the sample velocity profiles, which is in contrast to the turbulent flow results shown in Sect. 3. This is because the velocity field produced by the set of Lamb–Oseen vortices is fairly smooth without significant gradients. It is well known that correlation-based PIV yields accurate results under these conditions. In the few instances of sharper gradients (see panel 3 in Fig. 11), the current WOF-X approach is able to accurately resolve the high-spatial-gradient regions, whereas the correlation-based method cannot.

Velocity plotted along horizontal slices in the domain from the sample field shown in Fig. 10b. Maximum displacement is \({\Delta } x = 2.5\) pixels and particle density is 0.3 ppp

4.2 Results

Optimal tracer seeding density and inter-frame displacement for the WOF-X method are determined by analyzing velocimetry results with all combinations of the two parameters for the vortex flow simulation described in Sect. 4.1. The error metric for a given combination of particle density and maximum displacement is the median RMSE (see Eq. 9) normalized by the rms velocity of the 50-image pair series with those parameters, denoted \(\epsilon _v\). Velocimetry results were determined with both WOF-X and the DaVis correlation-based PIV algorithms. In addition, a “hybrid” method was analyzed. The hybrid method seeks to combine optical flow and correlation-based approaches to arrive at a method with the advantages of both. Specifically, the hybrid WOF-X method would have the high-spatial resolution and accuracy of WOF-X, but the ability to handle large particle displacements, characteristic of correlation. The hybrid results are achieved by first determining the velocity using a cross-correlation-based PIV algorithm (DaVis). Subsequently, this output is interpolated to a dense grid to initialize the WOF-X algorithm. Initialization begins at a scale of \(C=4\) instead of \(C=0\) for the pure WOF-X approach to retain some of the features of the results from DaVis. Finally, the WOF-X algorithm is applied from \(C=4\) to \(L=F-1\). Cross-correlation results were similarly used to estimate coarse-scale motions in non-wavelet-based optical flow approaches by Alvarez et al. (2009) and Heitz et al. (2008).
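The interpolation step of the hybrid approach can be sketched as follows. The text states only that the correlation output is interpolated to a dense grid; separable linear interpolation, the function name, and the example vector spacing of 8 pixels are assumptions made for illustration.

```python
import numpy as np

def upsample_to_dense(field, x_coarse, y_coarse, n_pix):
    """Separable linear interpolation of one velocity component from the PIV
    vector locations (pixel coordinates) onto every pixel of an
    n_pix x n_pix image. `field` has shape (len(y_coarse), len(x_coarse)).
    np.interp holds the edge values constant outside the coarse grid."""
    pix = np.arange(n_pix, dtype=float)
    # interpolate along x for each coarse row, then along y for each dense column
    rows = np.array([np.interp(pix, x_coarse, row) for row in field])
    dense = np.array([np.interp(pix, y_coarse, col) for col in rows.T]).T
    return dense

# Coarse vectors every 8 pixels carrying a linear velocity field.
xc = np.arange(8.0, 249.0, 8.0)
X, Y = np.meshgrid(xc, xc)
dense_u = upsample_to_dense(0.01 * X + 0.02 * Y, xc, xc, 256)
print(dense_u.shape)  # (256, 256)
```

Each dense velocity component would then be transformed to the wavelet domain to provide the initial coefficients at the starting scale; that step is not shown here.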

Figure 12 shows the error as a function of the particle density for correlation, WOF-X, and the hybrid WOF-X methods for several values of the particle displacement \({\Delta } x\). A few observations can be made from the results shown in Fig. 12. First, for any value of particle displacement the error is highest for low particle densities and then decreases rapidly before asymptoting towards a constant value as the particle density increases. This result is observed for all the methods. The correlation-based method approaches its minimal error at lower seed densities than the WOF-X or hybrid methods. For the correlation-based method, the error decreases significantly until a value of 0.05 ppp and then either remains constant or decreases slowly for increasing seed densities. For the WOF-X or hybrid methods, the error decreases significantly until a seed density of approximately 0.1 ppp with some further decrease as the seed density increases. The correlation results were produced with \(16 \times 16\)-pixel interrogation windows, so the value of 0.05 ppp corresponds to 12–13 particles per interrogation window, in agreement with the suggested guideline of the minimum particle image density recommended within the literature (Keane and Adrian 1990). The WOF-X and hybrid methods perform better with higher seeding densities, which agrees with intuition based on the dense nature of the estimation approach. It can also be observed that the correlation-based method has lower error only for conditions of low particle densities and small displacements (where \(\epsilon _v\) is high for all methods); otherwise, the WOF-X and hybrid methods produce more accurate results.

Error as a function of particle displacement is shown in Fig. 13 for three values of particle density. First, it is noted that, for flows with a maximum displacement \(\gtrsim 5\) pixels, the WOF-X method fails to find a solution. Estimations from the correlation and the hybrid methods converge to solutions at these large displacements, but they are less accurate than the results from smaller displacements. Over the range where all three methods are applicable, it is observed that the correlation-based method produces a more accurate result only for the extreme conditions of displacements and seeding densities. For example, the correlation-based method is more accurate for all displacements less than 15 pixels for a very low seeding density of 0.02 ppp and displacements less than 0.15 pixels for a seeding density of 0.05 ppp, which are not recommended operating conditions for achieving accurate results for any method. For all other operating conditions, the WOF-X and hybrid methods appear to be more accurate. Another important observation from the results of Fig. 13 is that the minimum error for WOF-X and the hybrid method occurs at similar displacements compared to that of correlation. In this manner, WOF-X and the hybrid approach are suited for maximizing both accuracy and dynamic range (increased \({\Delta } x\)) simultaneously. Finally, it is noted that the hybrid method represents a methodology that can extend the usable range of particle displacements to that consistent with correlation-based analysis with minimal loss of accuracy as compared to WOF-X. Trends in error as a function of these two parameters in Figs. 12 and 13 agree qualitatively with those observed in Liu et al. (2015) for a non-wavelet-based optical flow method.

The information from Fig. 12 suggests that the error is nearly independent of particle seed density for levels greater than 0.05 ppp. Thus, Fig. 13c can be used to determine general experimental parameters. Figure 13c shows that the correlation-based estimation has a peak accuracy in flows with a particle displacement between 0.5 and 8 pixels. This result is in agreement with established PIV design criteria, which suggest that particles should move a maximum of one quarter of an interrogation window, which would be 4 pixels for the current \(16 \times 16\) pixel interrogation window. However, it is noted that the initial window size of \(96 \times 96\) pixels in the multipass scheme extends this somewhat. The WOF-X and hybrid methods produce slightly different results, displaying a distinct minimal error for particle displacements of about 2.5 pixels. This is a surprising result, as previous researchers have suggested that optical flow methods would be most accurate for very small displacements (Liu and Shen 2008). The WOF-X method is accurate for large displacements until the method fails to converge (approximately \({\Delta } x = 5\) pixels), and the hybrid method is reasonably accurate for very large displacements up to \(\sim 10\) pixels. The fact that WOF-X (and the hybrid method) is accurate at relatively high values of displacement is likely due to the multiresolution strategy built into the wavelet formalism discussed in Sect. 2.2. The initial estimations at coarse scales capture the large displacements and subsequent estimations at finer scales refine motions occurring over small scales.

A final result from the vortex flow simulation demonstrates the importance of the symmetric boundary conditions utilized by WOF-X. Figure 14 compares the RMSE and AAE of WOF-X, correlation, and Typhoon for a 50-image pair series with “on design” values for particle density and displacement, specifically 0.3 ppp and a particle displacement of 2.5 pixels. As in Sect. 3 (Fig. 4), pixels near the boundaries are ignored when computing errors. It is observed that WOF-X is approximately a factor of two more accurate than correlation-based PIV and approximately 20% more accurate than Typhoon in terms of RMSE and AAE, respectively, for flows with more realistic boundary conditions such as those found in experiments. This is due to the fact that the estimations at the image boundaries influence the results in the interior in WOF methods. For real flows (that do not have periodic boundary conditions), the use of wavelets with periodic boundary conditions will produce significant errors at the boundaries, which can influence the results away from the boundaries. WOF-X reduces this effect using wavelets with symmetric boundary conditions.

5 Conclusions

A wavelet-based optical flow method was presented for highly accurate and highly resolved velocimetry measurements based on tracer particle images. The current WOF-X method was designed specifically for processing experimental image data and was compared to previous optical flow methods and a conventional correlation-based method. The WOF-X method contains several improvements compared to existing optical flow methods, including previous wavelet-based methods, that mitigate many of the documented drawbacks of optical flow velocimetry techniques. Unlike many previous optical flow methods, it relies on minimal empiricism and instead takes advantage of inherent turbulent flow properties, using turbulence energy spectra and a semi-automated heuristic algorithm to optimize the weighting parameter for the regularization term. In the current manuscript, the WOF-X method is assessed on synthetic data generated from a 2D DNS calculation of isotropic turbulence. This data set serves as an appropriate initial test bed to analyze the potential benefits of the proposed methodology as it represents an “ideal case.” For the synthetic data sets presented, the WOF-X method is shown to yield a factor of 1.5–2 improvement in velocity estimation accuracy compared to the correlation-based approach, along with the benefits of a dense estimation of the velocity field, which yields approximately an order-of-magnitude higher spatial resolution as compared to conventional correlation-based PIV. Such improvements in velocity accuracy and spatial resolution are critical in resolving important derivative quantities such as vorticity. The improvements in accuracy and spatial resolution cost approximately a factor of 5–10 in processing time compared to cross correlation.

A series of vortex flow simulations were performed to determine the sensitivity of WOF-X results to user-selected experimental parameters of particle density and particle inter-frame displacement. These parameters are systematically varied to develop recommendations for experimental design. WOF-X was found to be most accurate for particle densities \(\ge 0.1\) ppp compared to \(\ge 0.05\) ppp for correlation. However, it is noted that WOF-X is more accurate than correlation-based analysis for all seeding densities greater than 0.02 ppp. For WOF-X, maximum displacements \(\le 5\) pixels yield the highest accuracy which is the same for correlation with \(16 \times 16\)-pixel interrogation windows. WOF-X fails to converge to a solution for maximum displacements \(\gtrsim 5\) pixels, but a hybrid method is introduced that is accurate for maximum displacements up to 10 pixels. The hybrid approach uses the results of a correlation-based method to initialize WOF-X at a finer initial scale compared to pure WOF-X and is able to converge to a solution for higher displacements. The hybrid approach will likely be the best approach for experiments, as it is shown to be only marginally less accurate compared to “pure” WOF-X but gains the robustness of a correlation-based method. The additional time cost of processing the image data with traditional PIV software prior to processing with WOF-X is small (10–20%) compared to the computational time for WOF-X alone. A surprising result is that WOF-X and the hybrid method achieve minimal error for maximum displacements of 2.5 pixels, which is much greater than previous assertions concerning optical flow methods. In addition, WOF-X retains higher relative accuracy as compared to the correlation-based approach over a broad range of particle displacements and thus maximizes the accuracy of the velocity estimation over a larger range of velocity values. 
Finally, it is noted that the current WOF-X method showed increased accuracy compared to the previous wavelet-based optical flow methods for flows without periodic boundary conditions, as will be encountered in real experimental data. Future work is required to study the influence of nonideal experimental effects (e.g., noise, laser sheet intensity fluctuations, and out-of-plane particle displacement), as well as three-dimensional and divergent flows, on the accuracy of the WOF-X method before it can be applied freely to experimental data.

References

Adrian RJ (2005) Twenty years of particle image velocimetry. Exp Fluids 39:159

Alvarez L, Castano CA, García M, Krissian K, Mazorra L, Salgado A, Sánchez J (2009) A new energy-based method for 3D motion estimation of incompressible PIV flows. Comput Vis Image Underst 113:802

Atcheson B, Heidrich W, Ihrke I (2009) An evaluation of optical flow algorithms for background oriented schlieren imaging. Exp Fluids 46(3):467

Beylkin G (1992) On the representation of operators in bases of compactly supported wavelets. SIAM J Numer Anal 6(6):1716

Beyou S, Cuzol A, Gorthi SS, Mémin E (2013) Weighted ensemble transform Kalman filter for image assimilation. Tellus A 65(1):18803

Black MJ, Anandan P (1996) The robust estimation of multiple motions: parametric and piecewise-smooth flow fields. Comput Vis Image Underst 63(1):75

Cai S, Mémin E, Dérian P, Xu C (2018) Motion estimation under location uncertainty for turbulent fluid flows. Exp Fluids 59:8

Carlier J, Wieneke B (2005) Report 1 on production and diffusion of fluid mechanics images and data. Fluid project deliverable 1.2. European Project “Fluid image analisys and description” (FLUID). http://www.fluid.irisa.fr

Cassisa C, Simoens S, Prinet V, Shao L (2011) Subgrid scale formulation of optical flow for the study of turbulent flows. Exp Fluids 51(6):1739

Chen X, Zillé P, Shao L, Corpetti T (2015) Optical flow for incompressible turbulence motion estimation. Exp Fluids 56:8. https://doi.org/10.1007/s00348-014-1874-6

Corpetti T, Heitz D, Arroyo G, Mémin E, Santa-Cruz A (2006) Fluid experimental flow estimation based on an optical-flow scheme. Exp Fluids 40(1):80. https://doi.org/10.1007/s00348-005-0048-y

Corpetti T, Mémin E, Pérez P (2002) Dense estimation of fluid flows. IEEE Trans Pattern Anal Mach Intell 24(3):365–380

Daubechies I, Sweldens W (1998) Factoring wavelet transforms into lifting steps. J Fourier Anal Appl 4(3):247–269

Dérian P, Almar R (2017) Wavelet-based optical flow estimation of instant surface currents from shore-based and UAV videos. IEEE Trans Geosci Remote Sens 55(10):5790

Dérian P, Héas P, Herzet C, Mémin É (2012) Wavelet-based fluid motion estimation. In: Bruckstein AM, ter Haar Romeny BM, Bronstein AM, Bronstein MM (eds) Scale space and variational methods in computer vision. SSVM 2011. Lecture notes in computer science, vol 6667. Springer, Berlin, Heidelberg

Dérian P, Héas P, Herzet C, Mémin E (2013) Wavelets and optical flow motion estimation. Num Math 6:116

Dérian P, Mauzey CF, Mayor SD (2015) Wavelet-based optical flow for two-component wind field estimation from single aerosol lidar data. J Atmos Ocean Technol 32(10):1759

Hart DP (1999) Super-resolution PIV by recursive local-correlation. J Vis 10:1–10

Heitz D, Héas P, Mémin E, Carlier J (2008) Dynamic consistent correlation-variational approach for robust optical flow estimation. Exp Fluids 45:595

Heitz D, Mémin E, Schnörr C (2010) Variational fluid flow measurements from image sequences: synopsis and perspectives. Exp Fluids 48:369. https://doi.org/10.1007/s00348-009-0778-3

Horn BKP, Schunck BG (1981) Determining optical flow. Artif Intell 17:185–203

Héas P, Herzet C, Mémin E, Heitz D, Mininni PD (2013) Bayesian estimation of turbulent motion. IEEE Trans Pattern Anal Mach Intell 35(6):1343–1356

Kadri-Harouna S, Dérian P, Héas P, Mémin E (2013) Divergence-free wavelets and high order regularization. Int J Comput Vis 103:80–99. https://doi.org/10.1007/s11263-012-0595-7

Keane RD, Adrian RJ (1990) Optimization of particle image velocimeters. I. Double pulsed systems. Meas Sci Technol 1:1202. https://doi.org/10.1088/0957-0233/1/11/013

Kähler CJ, Scharnowski S, Cierpka C (2012) On the resolution limit of digital particle image velocimetry. Exp Fluids 52(6):1629

Liu T, Merat A, Makhmalbaf MHM, Fajardo C, Merati P (2015) Comparison between optical flow and cross-correlation methods for extraction of velocity fields from particle images. Exp Fluids 56:166

Liu T, Shen L (2008) Fluid flow and optical flow. J Fluid Mech 614:253. https://doi.org/10.1017/S0022112008003273

Mallat SG (1989) Multiresolution approximations and wavelet orthonormal bases of \(L^2(\mathbb{R})\). Trans Am Math Soc 315(1):69–87

Mallat SG (2009) A wavelet tour of signal processing. Elsevier, New York

Plyer A, Besnerais GL, Champagnat F (2016) Massively parallel Lucas Kanade optical flow for real-time video processing applications. J Real Time Image Process 11(4):713

Pope SB (2001) Turbulent flows. IOP Publishing, Bristol

Scarano F (2002) Iterative image deformation methods in PIV. Meas Sci Technol 13:R1

Schanz D, Gesemann S, Schröder A (2016) Shake-the-box: Lagrangian particle tracking at high particle image densities. Exp Fluids 57(5):70

Schmidt M (2005) minFunc: unconstrained differentiable multivariate optimization in matlab. https://www.cs.ubc.ca/~schmidtm/Software/minFunc.html

Stanislas M, Okamoto K, Kähler CJ (2003) Main results of the first international PIV challenge. Meas Sci Technol 14:R63

Stanislas M, Okamoto K, Kähler CJ, Scarano F (2008) Main results of the third international PIV challenge. Exp Fluids 45(1):27

Stanislas M, Okamoto K, Kähler CJ, Westerweel J (2005) Main results of the second international PIV challenge. Exp Fluids 39(2):170

Susset A, Most JM, Honoré D (2006) A novel architecture for a super-resolution PIV algorithm developed for the improvement of the resolution of large velocity gradient measurements. Exp Fluids 40(1):70

Takehara K, Adrian RJ, Etoh GT, Christensen KT (2000) A Kalman tracker for super-resolution PIV. Exp Fluids 29(1):S034–S041

Taubman D, Marcellin M (2002) JPEG2000: standard for interactive imaging. Proc IEEE 90:1336

Tokumaru PT, Dimotakis PE (1995) Image correlation velocimetry. Exp Fluids 19:1

Ullman S (1979) The interpretation of visual motion. Massachusetts Inst of Technology Pr, Oxford

Westerweel J (1997) Fundamentals of digital particle image velocimetry. Meas Sci Technol 8:1379

Westerweel J, Elsinga GE, Adrian RJ (2013) Particle image velocimetry for complex turbulent flows. Ann Rev Fluid Mech 45:409

Wu Y, Kanade T, Li C, Cohn J (2000) Image registration using wavelet-based motion model. Int J Comput Vis 38(2):129

Yuan J, Schnörr C, Mémin E (2007) Discrete orthogonal decomposition and variational fluid flow estimation. J Math Imaging Vis 28:67

Zillé P, Corpetti T, Shao L, Chen X (2014) Observation model based on scale interactions for optical flow estimation. IEEE Trans Image Process 23(8):3281

Acknowledgements

This work was partially sponsored by the Air Force Office of Scientific Research under Grant FA9550-16-1-0366 (Chiping Li, Program Manager).

Appendices

Appendix 1: Wavelet transforms and signal decomposition

A wavelet is a mathematical function that can be used to divide a given function f(x) into different scale components. A wavelet transform is the representation of f(x) through a linear combination of a set of basis functions that are translations and dilations of a fast-decaying function known as the mother wavelet, \(\psi\), along with an associated scaling function, \(\varphi\). Specifically, a wavelet transform of f(x) first computes the set of inner products of f(x) with a wavelet atom \(\psi _{j,n}\) at scales j and positions n, resulting in a set of detail coefficients, \(d_j [n] = \langle f, \psi _{j,n} \rangle\). Wavelet atoms are defined by scaling and translating the mother wavelet \(\psi\) as \(\psi _{j,n} = \frac{1}{2^j} \psi \left( \frac{x-2^j n}{2^j} \right)\). Associated with the “high-pass” mother wavelet is a scaling function \(\varphi\) that gives a low-pass representation of the function f(x). Thus, at each scale j and position n, approximation coefficients are computed as \(a_j [n] = \langle f, \varphi _{j,n} \rangle\), where \(\varphi _{j,n} = \frac{1}{2^j} \varphi \left( \frac{x-2^j n}{2^j} \right)\). The wavelet transform at each scale j produces a set of detail coefficients \(d_j\) and approximation coefficients \(a_j\), both of which are contained within \(\varTheta\) as shown below.

In signal processing, one has a discrete signal \(f \left[ x_k \right]\) with a length of \(2^F\). The wavelet transforms are applied at increasingly coarse scales, starting from the finest scale \(\left( j = F \right)\) down to a predetermined coarse scale \(j_0<F\). At each subsequent scale, the approximation coefficients are divided into coarser approximations and details; that is, the transform at each scale \(j-1\) operates on the approximation from the next finer scale \(a_j\) to produce \(d_{j-1}\) and \(a_{j-1}\). Thus, a discrete wavelet transform (DWT) applied to \(f \left[ x_k \right]\) forms a multiscale representation of the signal, where the detail coefficients from all scales \(d_{j_0}, d_{j_0+1},\ldots , d_{F-1}\) and the remaining coarse approximation \(a_{j_0}\) are stored as:

\[\varTheta = \left[ a_{j_0}, d_{j_0}, d_{j_0+1}, \ldots , d_{F-1} \right] ^T\]

which also has a length of \(2^F\) like the original signal \(f \left[ x_k \right]\). The exact mathematical details of the lifting DWT used by WOF-X are given in Mallat (2009) and Daubechies and Sweldens (1998). If the wavelets and scaling functions form an orthonormal basis or, as is the case for the biorthogonal 9–7 wavelets used by WOF-X, a biorthogonal basis, then the wavelet transform can be inverted and \(f \left[ x_k \right]\) is recovered exactly. Thus, the output from a wavelet transform is a set of wavelet coefficients that exactly describe the input signal in the wavelet basis.
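The multiscale decomposition described above can be sketched in a few lines of Python. This is a minimal illustration and not the WOF-X implementation: it assumes the commonly tabulated lifting coefficients for the 9–7 wavelet, whole-sample symmetric extension at both ends, and one particular choice of the final scaling constant (conventions differ between references). The function names `lift_forward`, `lift_inverse`, `dwt`, and `idwt` are hypothetical.

```python
import numpy as np

# Commonly tabulated lifting coefficients for the biorthogonal 9-7 wavelet
# (assumed here; see Daubechies and Sweldens 1998 for the factorization).
ALPHA, BETA = -1.586134342, -0.05298011854
GAMMA, DELTA = 0.8829110762, 0.4435068522
K = 1.149604398  # final scaling constant; conventions differ


def _next(s):
    """Return s[n+1], mirroring the signal at the right edge."""
    return np.append(s[1:], s[-1])


def _prev(d):
    """Return d[n-1], mirroring the signal at the left edge."""
    return np.append(d[0], d[:-1])


def lift_forward(x):
    """One transform level: split into even/odd samples, then lift."""
    s, d = x[0::2].copy(), x[1::2].copy()
    d += ALPHA * (s + _next(s))   # predict 1
    s += BETA * (d + _prev(d))    # update 1
    d += GAMMA * (s + _next(s))   # predict 2
    s += DELTA * (d + _prev(d))   # update 2
    return s * K, d / K           # approximation, detail


def lift_inverse(s, d):
    """Undo lift_forward by reversing the steps and flipping the signs."""
    s, d = s / K, d * K
    s = s - DELTA * (d + _prev(d))
    d = d - GAMMA * (s + _next(s))
    s = s - BETA * (d + _prev(d))
    d = d - ALPHA * (s + _next(s))
    x = np.empty(2 * s.size)
    x[0::2], x[1::2] = s, d
    return x


def dwt(f, j0):
    """Multiscale DWT of a length-2**F signal down to coarse scale j0.

    Returns coefficients laid out as [a_j0, d_j0, ..., d_{F-1}].
    """
    theta = np.asarray(f, dtype=float).copy()
    n = theta.size
    while n > 2 ** j0:
        a, d = lift_forward(theta[:n])
        theta[:n] = np.concatenate([a, d])
        n //= 2
    return theta


def idwt(theta, j0):
    """Invert dwt, rebuilding finer approximations scale by scale."""
    f = np.asarray(theta, dtype=float).copy()
    n = 2 ** j0
    while n < f.size:
        f[: 2 * n] = lift_inverse(f[:n], f[n: 2 * n])
        n *= 2
    return f
```

Because every lifting step is undone exactly by its inverse, `idwt(dwt(f, j0), j0)` returns `f` to within floating-point rounding, and the coefficient vector has the same length as the input.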

1.1 Wavelet transforms of images

The above procedure can be applied to higher-dimensional signals (e.g., images) by applying the wavelet transform along each dimension isotropically at each scale, as demonstrated schematically in Fig. 15.

Fig. 15 Schematic of an isotropic 2D wavelet transform. The transform is performed in two steps at each scale. (1) Apply a 1D wavelet transform to each column in the image in the vertical direction to obtain vertical approximation and detail coefficients. (2) Apply a 1D wavelet transform to each row in the vertically transformed image. This gives new approximation coefficients \(\underline{a}_{F-1}\) and detail coefficients \(\underline{d}_{F-1}\) in the diagonal blocks, and sets of mixed coefficients in the off-diagonals. (3) Repeat this procedure on the upper-leftmost block containing approximation coefficients \(\underline{a}_j\) at each scale j until scale \(j_0\) is reached. The inverse transform is performed by simply reversing these steps

For the wavelet-based optical flow described in the current manuscript, the generic signal \(f \left[ x_{k_1}^1,x_{k_2}^2 \right]\) is replaced with the individual velocity components \(v_i \left( \underline{x} \right)\), each of which is a two-dimensional field. Truncated transforms are formed by setting all detail coefficients, including the mixed coefficients, above a specified scale L to zero. The truncated transform is then inverted to obtain a coarse approximation \(f_L \left[ x_{k_1}^1,x_{k_2}^2 \right]\) of the original signal.
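The column-then-row procedure and the truncation step can be sketched as follows. To keep the example short, a Haar wavelet in lifting form is substituted for the 9–7 wavelet used by WOF-X, the image is assumed to be square with a side length of \(2^F\), and "above scale L" is taken to mean that detail coefficients at scales \(j \le L\) are retained. The function names are hypothetical.

```python
import numpy as np


def _fwd(x):
    """Haar lifting along axis 0: pairwise means on top, differences below."""
    even, odd = x[0::2], x[1::2]
    d = odd - even          # predict
    s = even + d / 2        # update (s is the pairwise mean)
    return np.concatenate([s, d], axis=0)


def _inv(c):
    """Inverse of _fwd along axis 0."""
    h = c.shape[0] // 2
    s, d = c[:h], c[h:]
    even = s - d / 2
    odd = d + even
    x = np.empty_like(c)
    x[0::2], x[1::2] = even, odd
    return x


def dwt2(img, j0):
    """Isotropic 2D transform of a 2**F x 2**F image down to scale j0."""
    out = np.asarray(img, dtype=float).copy()
    n = out.shape[0]
    while n > 2 ** j0:
        block = _fwd(out[:n, :n])        # step 1: transform each column
        out[:n, :n] = _fwd(block.T).T    # step 2: transform each row
        n //= 2                          # step 3: recurse on upper-left block
    return out


def idwt2(coeffs, j0):
    """Inverse transform: reverse the steps of dwt2."""
    out = np.asarray(coeffs, dtype=float).copy()
    n = 2 ** j0
    while n < out.shape[0]:
        n *= 2
        block = _inv(out[:n, :n].T).T    # undo the row transform
        out[:n, :n] = _inv(block)        # undo the column transform
    return out


def truncate(coeffs, L):
    """Zero all detail and mixed coefficients at scales finer than L."""
    keep = 2 ** (L + 1)                  # block holding a_j0 and d_j, j <= L
    out = np.zeros_like(coeffs)
    out[:keep, :keep] = coeffs[:keep, :keep]
    return out
```

With this Haar substitute, `idwt2(truncate(dwt2(img, j0), L), j0)` returns block averages of the image, which makes the coarse-approximation interpretation of the truncated transform easy to verify.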

1.2 Derivative regularization in the wavelet domain

This section outlines the construction of the regularization term \(J_\mathrm{{R}}\) in Eq. 6 and its gradient. The proof that the following definition of \(J_\mathrm{{R}}\) indeed penalizes the third derivative of the velocity field is described in Kadri-Harouna et al. (2013) and will not be given here. Kadri-Harouna et al. (2013) show that the regularization term \(J_\mathrm{{R}}\) for penalization of the third derivative of a velocity field \(\underline{v}\) is given by the following:

where \(\underline{\psi }\) is the wavelet transform of the velocity field \(\underline{v}\) and the superscript \(^V\) indicates the vectorization operation. \(\underline{\varLambda }\) is a matrix matching the dimensions of \(\underline{\psi }\) whose elements are \(4^{3j}\) (see Fig. 15) for every scale above \(j_0\) and 0 for every element corresponding to \(\underline{a}_{j_0}\). Its gradient with respect to \(\underline{\psi }\) is easily found:
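As an illustration only, the weight matrix \(\underline{\varLambda }\) described above can be assembled for a single velocity component as in the sketch below. The coefficient layout follows Fig. 15, with detail and mixed blocks of scale j assigned the weight \(4^{3j}\) for \(j_0 \le j \le F-1\). The quadratic penalty and its gradient in `reg_value_and_grad` are an assumed form chosen for the example (a \(\underline{\varLambda }\)-weighted sum of squared coefficients with a factor of one half); the exact expression used by WOF-X should be taken from Kadri-Harouna et al. (2013). All function names are hypothetical.

```python
import numpy as np


def build_lambda(n, j0):
    """Weights 4**(3*j) on blocks of scale j; 0 on the a_j0 block.

    n is the side length of the coefficient array (a power of two).
    """
    idx = np.maximum(*np.indices((n, n)))               # max(row, col)
    scale = np.floor(np.log2(np.maximum(idx, 1))).astype(int)
    lam = 4.0 ** (3 * scale)
    lam[: 2 ** j0, : 2 ** j0] = 0.0                     # approximation block
    return lam


def reg_value_and_grad(psi, lam):
    """ASSUMED quadratic form: J = 0.5 * sum(lam * psi**2), grad = lam * psi."""
    value = 0.5 * np.sum(lam * psi ** 2)
    grad = lam * psi
    return value, grad
```

Because the weights grow by a factor of 64 per scale, fine-scale coefficients dominate the penalty, which is the mechanism by which high-order derivatives of the velocity field are suppressed.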

Appendix 2: Synthetic particle image generation

Synthetic particle images are generated in a manner similar to that described by Carlier and Wieneke (2005). Each particle i is centered at a location \(\left( x_0^i,y_0^i\right)\) in the image domain, with diameter \(d_i\) and a center brightness value \(c_i\). The \(d_i\) values are selected from a normal distribution with mean \(\bar{d}\) and standard deviation \(\sigma _d\). The center brightness simulates the effect of out-of-plane displacement in a real-world experiment, and is given by \(c_i = d_i^2 \exp \left( -b_i^2 \right)\), where \(b_i\) is normally distributed with a mean of zero and a standard deviation \(\sigma _b\). The \(c_i\) values are then normalized, such that the largest particle in the domain has a value of \(c_i = 1\). The intensity distribution of particle i is then given by the following:

A simulated digitized image can be created by considering the brightness at some pixel k. Its brightness is computed by summing the contribution of each particle and “digitizing” by integration over the pixel. The integration is done by convolving the intensity function of each particle with a rectangle function and the Dirac delta function as follows in Eq. 16:

Carrying out the convolution for pixel width \(\varDelta\), the resulting synthetic image is determined by the following:

Pixels are synthetically saturated when their calculated pixel value \(I \left( x_k,y_k \right)\) is greater than one. These pixel values are then set to a value of one.
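The generation procedure can be sketched in Python as follows. Several details are assumptions made for the example and are not taken from the text: the particle intensity is modeled as a Gaussian \(c_i \exp \left( -8 r^2 / d_i^2 \right)\), the pixel width is \(\varDelta = 1\), particle positions are drawn uniformly over the image, diameters are clipped to a small positive minimum, and \(c_i\) is normalized by its maximum value. The function name `synthetic_image` and all default parameter values are hypothetical.

```python
import numpy as np
from math import erf, pi, sqrt

_erf = np.vectorize(erf)  # element-wise error function without SciPy


def synthetic_image(n_pix, n_particles, d_mean=3.0, d_std=0.5,
                    b_std=1.0, seed=0):
    """Render a square particle image with values in [0, 1]."""
    rng = np.random.default_rng(seed)
    x0 = rng.uniform(0, n_pix, n_particles)
    y0 = rng.uniform(0, n_pix, n_particles)
    # Diameters from a normal distribution (clipped; an assumption).
    d = np.clip(rng.normal(d_mean, d_std, n_particles), 0.5, None)
    # Center brightness c_i = d_i^2 exp(-b_i^2), normalized to a maximum of 1.
    b = rng.normal(0.0, b_std, n_particles)
    c = d ** 2 * np.exp(-b ** 2)
    c /= c.max()

    edges = np.arange(n_pix + 1, dtype=float)  # pixel edges, width 1
    img = np.zeros((n_pix, n_pix))
    for xi, yi, di, ci in zip(x0, y0, d, c):
        sigma = di / 4.0  # exp(-8 r^2 / d^2) == exp(-r^2 / (2 sigma^2))
        scale = sigma * sqrt(pi / 2.0)
        # Exact integral of the 1D Gaussian over each pixel via erf.
        gx = scale * np.diff(_erf((edges - xi) / (sigma * sqrt(2.0))))
        gy = scale * np.diff(_erf((edges - yi) / (sigma * sqrt(2.0))))
        img += ci * np.outer(gy, gx)  # separable 2D pixel integral
    return np.minimum(img, 1.0)       # synthetic saturation at one
```

A second frame for velocimetry testing would be produced by displacing the particle centers with a known velocity field and rendering again with the same diameters and brightness values.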

Cite this article

Schmidt, B.E., Sutton, J.A. High-resolution velocimetry from tracer particle fields using a wavelet-based optical flow method. Exp Fluids 60, 37 (2019). https://doi.org/10.1007/s00348-019-2685-6
