Abstract

Purpose

As computational power has improved over the past 20 years, machine learning methods have become increasingly prevalent in daily life. There is also growing interest in the clinical application of machine learning techniques. We sought to review the current literature regarding machine learning applications for patient-specific urologic surgical care.

Methods

We performed a broad search of the current literature via the PubMed-Medline and Google Scholar databases up to Dec 2020. The search terms “urologic surgery” as well as “artificial intelligence”, “machine learning”, “neural network”, and “automation” were used.

Results

The focus of machine learning applications for patient counseling is disease-specific. For stone disease, multiple studies focused on predicting the stone-free rate from preoperative clinical and imaging data. For kidney cancer, many studies focused on advanced imaging analysis to predict renal mass pathology preoperatively. Machine learning applications in prostate cancer could support treatment counseling as well as prediction of disease-specific outcomes. For bladder cancer, the reviewed studies focus on imaging-based staging to better counsel patients regarding neoadjuvant chemotherapy. Additionally, there have been many efforts to automatically segment preoperative imaging and match it with intraoperative anatomy.

Conclusion

Machine learning techniques can be implemented to assist patient-centered surgical care and increase patient engagement within their decision-making processes. As data sets improve and expand, especially with the transition to large-scale EHR usage, these tools will improve in efficacy and be utilized more frequently.

Introduction

The accurate assessment and interpretation of clinical data are essential to delivering patient-specific care. With increased access to robust data sets, physicians are challenged with interpreting complex information to diagnose and treat urologic disease. Advanced computational techniques can assist with data mining and interpretation, improving patient care [1]. Artificial intelligence (AI) is now commonly applied in daily life, allowing for the rapid analysis of large, non-linear data sets via algorithms and statistical models [2]. Machine learning (ML), a subfield of AI, involves the development of algorithms for complex pattern recognition and output prediction whose accuracy improves as the available data increase. Because patient health data consist of multifactorial and non-linear variables, ML can be a powerful tool for enhancing patient-specific care in urologic surgery [3].

Machine learning has been applied across multiple areas of medicine, allowing for improved disease diagnosis, treatment selection, patient monitoring, and risk stratification for primary prevention [2]. Incorporating ML techniques into surgical systems requires accurate automated interpretation of perioperative patient imaging, as well as tracking of surgical anatomy and instruments in the operative field. Although no clinical surgical system can yet perform these tasks fully autonomously, several robots have demonstrated the feasibility of autonomous tasks such as anatomic tracking, suturing, and biopsy sampling [4]. Furthermore, in urology, semi-autonomous surgical systems such as Aquablation™ have emerged and demonstrated the feasibility of robot-directed therapies [5]. Developing both autonomous methods that facilitate surgical candidate selection and automated surgical robotic systems could improve surgical accuracy and patient outcomes. The first step, however, is to develop accurate ML algorithms to improve the evaluation and treatment of urologic disease.

The aim of this review article is to evaluate the current state of ML algorithms that could apply to patient-specific interventions in urologic surgery. Though no autonomous surgical systems currently exist, improving ML algorithms and applying them to current surgical systems could move the field toward autonomous surgery.

Background

Machine learning (ML) is the application of artificial intelligence techniques to generate computational systems that can simulate intellectual processes. Through sophisticated, non-linear modelling, ML can perform reasoning, learning, and problem-solving tasks. Specifically, it allows for the creation of computer algorithms that are not explicitly programmed with specific rules. By being exposed to sample data (i.e. “training data”), the algorithm identifies and adapts to patterns in the data set, and can then be used to interpret novel data. Machine learning tools have proven highly valuable in modern life for automating both simple and complex decisions, such as traffic prediction, spam filtering, text prediction, and online advertising. Moreover, the ability to develop non-linear algorithms is particularly useful for analyzing medical data, which are often complex and nuanced.

Having access to high-quality, large data sets is essential to train any ML algorithm. When a data set is large enough, current algorithms can be trained to interpret noisy inputs and yield accurate outputs. As with any statistical model, however, large retrospective datasets contain missing data points, confounders, and biases that can affect the training of any algorithm. A validated “ground-truth” data set is therefore needed to assess the accuracy of any algorithm [6].

Within ML, there are multiple subfields dealing with the interpretation of different data types. Each subfield demonstrates that machines do not need explicit executable steps to function and make decisions but can generate knowledge and predict outcomes through pattern recognition and inference. For example, natural language processing (NLP) focuses on a computer’s analysis and comprehension of human language. Computer vision (CV) relates to machine analysis of images or video, such as radiographic or endoscopic images. Within medicine, much of ML has focused on the subfield of artificial neural networks (ANNs). Loosely modeled on biological neurons, an ANN is composed of individual processing nodes arranged in a layered architecture that enables machine systems to develop pattern recognition from sophisticated inputs. These layers consist of an input layer made of input nodes, one or more hidden layers made of hidden nodes, and an output layer made of output nodes. The depth and width of these layers determine the functionality of the ANN; increasing depth and width increases the network’s processing and learning capacity (Fig. 1) [7].

Fig. 1 Framework of a neural network structure. Such algorithms combine multiple inputs through a series of hidden layers to form an interpretable output. The layers are considered “hidden” because their values are not directly observed in the input or output data. When an algorithm is trained on a dataset, each connection between nodes is assigned a weight reflecting its contribution to output prediction. The number of inputs and layers can be varied to optimize data interpretation: too many inputs and nodes can lead to overfitting, while too few may decrease the prediction accuracy of the algorithm.
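To make the layered structure concrete, the following is a minimal Python sketch of a single forward pass through a network with one hidden layer. The weights and the three input features are arbitrary, hypothetical values rather than a trained model; in practice the weights would be learned from training data (for example, by gradient descent), and the function names are illustrative only.

```python
import math

def sigmoid(x: float) -> float:
    """Logistic activation that squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense_layer(inputs, weights, biases):
    """One fully connected layer: each node sums its weighted inputs plus a
    bias, then applies the sigmoid activation."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]

def forward(inputs, hidden_w, hidden_b, out_w, out_b):
    """Input layer -> one hidden layer -> single output node (a probability)."""
    hidden = dense_layer(inputs, hidden_w, hidden_b)
    return dense_layer(hidden, out_w, out_b)[0]

# Hypothetical, untrained weights: 3 inputs -> 2 hidden nodes -> 1 output.
hidden_w = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]
hidden_b = [0.0, -0.1]
out_w = [[1.2, -0.7]]
out_b = [0.05]

x = [1.0, 0.0, 2.0]  # three standardized (hypothetical) patient features
p = forward(x, hidden_w, hidden_b, out_w, out_b)
print(f"predicted probability: {p:.3f}")
```

Adding hidden nodes (width) or hidden layers (depth) increases the number of weights to learn, which is why larger networks need more training data to avoid overfitting.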

The introduction of these computational tools can assist with the interpretation of complex medical data and lead to improvements in both clinical and surgical practice. Developing autonomous surgical systems would require integrating multiple subfields of machine learning to analyze and combine different kinds of data. Specifically, these tools can be applied to imaging interpretation and surveillance, operative planning and guidance, medical management, and outcome prediction.

Evidence acquisition

We performed a search of the current literature via the PubMed-Medline database up to Dec 2020. The search terms “urologic surgery” as well as “artificial intelligence”, “machine learning”, “neural network”, and “automation” were used. We reviewed 346 articles based on title and abstract and selected those directed towards machine learning applications for patient care during urologic surgery. Following full-text evaluation of 65 manuscripts, evidence was selected based on study relevance, strengths, and limitations. Twenty-six studies were included in our review and summarized.

Patient counseling and disease-specific outcomes for surgical candidate selection

Though a thorough history and physical examination remain the foundation of medical decision making, ML tools using electronic health record (EHR), imaging, and laboratory data can further facilitate disease diagnosis and treatment. The ability to rapidly synthesize patient data would allow for “machine-guided” patient-specific therapeutic decisions and enhance surgical planning. These tools will continue to improve and are an essential step in developing larger systems that could optimize surgical care for a variety of genitourinary pathologies. Currently, multiple ML methods are being evaluated to help facilitate surgical candidate selection and predict outcomes. Many of these methods are disease-specific; below, we highlight select studies that can impact patient counseling for surgery (Table 1).

Nephrolithiasis

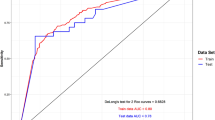

The stone-free rate (SFR) after surgery for renal stones is an important concern, as residual fragments can increase subsequent stone events [8]. Current machine learning development for nephrolithiasis treatment focuses on SFR prediction for specific surgical techniques. Aminsharifi et al. evaluated the use of an ANN to predict postoperative SFR after percutaneous nephrolithotomy (PCNL) [9]. A 200-patient set was used to train the ANN on preoperative features and postoperative outcomes. In a 254-patient test set, preoperative stone burden and stone complexity were the most significant preoperative predictors of SFR. The group further developed a machine learning method to predict outcomes of PCNL [10]. Using data from 146 adult patients, they compared the outcomes predicted by a support vector machine (SVM) model with the actual outcomes. The ML system predicted PCNL outcomes with 80–95% accuracy. To compare the ML system with Guy’s stone score (GSS) and the Clinical Research Office of the Endourological Society (CROES) nomogram, a receiver operating characteristic (ROC) curve was constructed for each method and the areas under the curve (AUCs) were compared. The ML software showed an excellent AUC (0.92) compared with GSS (0.62) and CROES (0.62).
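The AUC comparison above can be illustrated with a short Python sketch. It uses the Mann-Whitney formulation of the AUC: the probability that a randomly chosen positive case (here, stone-free) receives a higher score than a randomly chosen negative case. The two score lists are hypothetical stand-ins for an ML model's predicted probabilities and a coarse grade-based score; they are not data from the cited studies.

```python
def roc_auc(scores, labels):
    """Area under the ROC curve via the Mann-Whitney formulation: the fraction
    of (positive, negative) pairs in which the positive case scores higher,
    counting ties as one half."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

# Hypothetical outcomes: 1 = stone-free after PCNL, 0 = residual stones.
labels = [1, 1, 1, 0, 1, 0, 0, 1]
# Hypothetical continuous model output (predicted probability of stone-free).
model_scores = [0.9, 0.8, 0.7, 0.3, 0.35, 0.4, 0.2, 0.85]
# Hypothetical coarse 3-level score (higher = more likely stone-free).
grade_scores = [3, 2, 2, 2, 1, 3, 1, 2]

print(f"model AUC: {roc_auc(model_scores, labels):.3f}")  # 0.933
print(f"grade AUC: {roc_auc(grade_scores, labels):.3f}")  # 0.500
```

Coarse scores with few levels produce many ties between positive and negative cases, which pulls the AUC toward 0.5; this is one reason a continuous model output can discriminate better than a grading system.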

Likewise, shock wave lithotripsy (SWL) outcomes have been evaluated using various neural networks. In two studies, researchers evaluated an ANN to predict SFR following SWL [11, 12]. Gomha et al. used 10 parameters to develop a model of SFR status: patient age, sex, renal anatomy, stone location, side, number, length, and width, whether stones were de novo or recurrent, and stent use. Results of a logistic regression (LR) model and the ANN were then compared: sensitivities were 100% and 78%, specificities 0% and 75%, positive predictive values 93% and 97%, and overall accuracies 93% and 78%, respectively. Although the LR model had the higher overall accuracy, its 100% sensitivity and 0% specificity indicate that it assigned every patient to the positive class, whereas the ANN distinguished between outcomes. Similarly, Seckiner et al. analyzed 11 variables to predict SFR after SWL, using a 139-patient training group and a 32-patient test group; SFR was predicted with 99% accuracy in the training group, 85% in the validation group, and 89% in the test group. Thus, both neural networks showed promise in predicting patient outcomes from multiple variables.
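The pattern of 100% sensitivity, 0% specificity, and equal positive predictive value and accuracy is exactly what a classifier produces when it labels every patient positive. The minimal Python sketch below computes the standard metrics from a 2 × 2 confusion matrix for a hypothetical cohort of 100 patients, 93 of them stone-free; the cohort size and counts are assumptions chosen for illustration, not figures from Gomha et al.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard metrics from a 2x2 confusion matrix (positive = stone-free).
    Returns NaN for a metric whose denominator is zero."""
    nan = float("nan")
    return {
        "sensitivity": tp / (tp + fn) if tp + fn else nan,
        "specificity": tn / (tn + fp) if tn + fp else nan,
        "ppv": tp / (tp + fp) if tp + fp else nan,
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }

# Hypothetical cohort: 93 stone-free, 7 with residual stones.
# A model that predicts "stone-free" for everyone has no true or false negatives.
always_positive = classification_metrics(tp=93, fp=7, tn=0, fn=0)
print(always_positive)
```

Here accuracy and PPV both equal the prevalence of the positive outcome, so a high accuracy alone can hide a model that never identifies the patients with residual stones.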

Kidney cancer

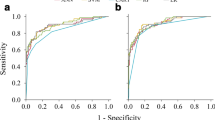

There is interest in developing novel methods to improve the prediction of renal mass pathology and avoid interventions on benign masses [13]. Several studies have demonstrated ML applications for predicting the pathology of renal masses from computed tomography (CT) imaging data [14]. A recent study by Tanaka et al. focused on small renal masses (≤ 4 cm), using multiphase contrast-enhanced CT and deep learning techniques to determine malignancy. Using 1807 image sets from 159 lesions with known pathology (training set, n = 1526; validation set, n = 134), the researchers evaluated neural networks for each CT phase separately. Accuracy in predicting malignancy was approximately 80% across all phases, showing the potential of ML tools in renal mass evaluation [15]. Furthermore, improved image analysis techniques using computer vision algorithms may improve pathologic prediction for renal masses. For example, Yu et al. evaluated 119 patients with pathologically confirmed renal masses and developed a machine learning tool based on texture analysis of four-phase CT data. With this approach, they identified imaging features that discriminated clear cell renal cell carcinoma and oncocytoma (AUC 0.91 and 0.93, respectively; p < 0.01) [16]. Such techniques could be incorporated into clinical practice to enhance preoperative surgical counseling.
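As an illustration of what "texture analysis" can mean, the Python sketch below computes a gray-level co-occurrence matrix (GLCM) for one pixel offset and two classic Haralick-style features derived from it, contrast and homogeneity. GLCM features are a common choice in radiomics, but it is an assumption that they resemble the feature set used by Yu et al.; the 4 × 4 regions are hypothetical and already quantized to four gray levels. For brevity the matrix is non-symmetric and uses a single horizontal offset.

```python
from collections import Counter

def glcm(image, levels, dx=1, dy=0):
    """Normalized gray-level co-occurrence matrix for one pixel offset (dx, dy).
    Entry [i][j] is the fraction of pixel pairs in which a pixel of level i has
    a neighbor of level j at that offset."""
    counts = Counter()
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[(image[r][c], image[r2][c2])] += 1
    total = sum(counts.values())
    return [[counts[(i, j)] / total for j in range(levels)] for i in range(levels)]

def texture_features(p):
    """Contrast (large when neighboring levels differ) and homogeneity
    (large when neighboring levels are similar) from a normalized GLCM."""
    n = len(p)
    contrast = sum(p[i][j] * (i - j) ** 2 for i in range(n) for j in range(n))
    homogeneity = sum(p[i][j] / (1 + abs(i - j)) for i in range(n) for j in range(n))
    return contrast, homogeneity

# Hypothetical regions of interest, quantized to gray levels 0..3.
uniform = [[1] * 4 for _ in range(4)]
striped = [[0, 3, 0, 3] for _ in range(4)]

print(texture_features(glcm(uniform, 4)))  # low contrast, high homogeneity
print(texture_features(glcm(striped, 4)))  # high contrast, low homogeneity
```

In a radiomics pipeline, features like these would be computed inside each segmented mass (often for several offsets and CT phases) and then passed to a classifier.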

Prostate cancer

Other applications of ML techniques have demonstrated potential for guiding patients toward treatment decisions. For example, Auffenberg et al. employed a novel machine learning model to help men with newly diagnosed prostate cancer reach a decision on treatment approach [17]. To accomplish this, the group created a database of clinical features of 7543 men who had been counseled regarding options for prostate cancer treatment, including radical prostatectomy, surveillance, radiation therapy, androgen deprivation, and watchful waiting. A multinomial random forest algorithm was developed using two-thirds of the patients, with the remaining one-third serving as a validation cohort. The group showed that the included clinical features could predict treatment decisions for prostate cancer (AUC 0.81), suggesting that this algorithm could help further inform patients and guide treatment decisions.

Other ML applications have focused on prostate cancer recurrence (i.e. biochemical recurrence). For example, Zupan et al. created two separate algorithms for patients undergoing prostatectomy, one using preoperative data and one using postoperative features [18]. Preoperative features included Gleason score, clinical stage, and preoperative PSA, while postoperative features included Gleason score from the surgical specimen, prostatic capsular invasion, surgical margin status, seminal vesicle invasion, and lymph node status. By training two separate naïve Bayes classifiers, the group showed that preoperative and postoperative data could predict recurrence with accuracies of 71% and 78%, respectively, compared with 70% and 79% for a Cox proportional hazards model. With more robust models, however, accuracy could improve. For example, Wong et al. examined ML prediction of early (1-year) biochemical recurrence following robot-assisted prostatectomy for localized prostate cancer [19]. The group used 19 clinical features to train three separate ML models: K-nearest neighbor, random forest, and LR. These were compared with classic Cox regression analysis. The AUCs for K-nearest neighbor (0.90), random forest (0.92), and LR (0.94) all exceeded that of Cox regression (0.87). Additionally, K-nearest neighbor, random forest, and LR achieved prediction accuracies of 98%, 95%, and 98%, respectively. By further validating and improving these algorithms, clinical systems could be developed to improve prostate cancer counseling and outcomes.
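To show how a naïve Bayes classifier combines categorical clinical features, here is a minimal Python sketch of a generic categorical naïve Bayes model with Laplace (add-one) smoothing. It is not Zupan et al.'s implementation: the feature names, categories, and six training records are hypothetical, and the sketch treats recurrence as a simple binary label, ignoring the follow-up time and censoring that real recurrence data involve.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(records, labels, alpha=1.0):
    """Fit a categorical naive Bayes model with add-alpha smoothing.
    records: list of dicts mapping feature name -> category."""
    class_counts = Counter(labels)
    feature_counts = defaultdict(Counter)  # (label, feature) -> counts of values
    feature_values = defaultdict(set)      # feature -> set of observed values
    for rec, y in zip(records, labels):
        for feat, val in rec.items():
            feature_counts[(y, feat)][val] += 1
            feature_values[feat].add(val)
    return class_counts, feature_counts, feature_values, alpha

def predict_proba(model, record):
    """Posterior P(class | features), assuming features are conditionally
    independent given the class (the 'naive' assumption)."""
    class_counts, feature_counts, feature_values, alpha = model
    n = sum(class_counts.values())
    log_post = {}
    for y, cy in class_counts.items():
        lp = math.log(cy / n)  # log prior
        for feat, val in record.items():
            k = len(feature_values[feat])
            lp += math.log((feature_counts[(y, feat)][val] + alpha) / (cy + alpha * k))
        log_post[y] = lp
    top = max(log_post.values())  # subtract max before exp for numerical stability
    unnorm = {y: math.exp(v - top) for y, v in log_post.items()}
    z = sum(unnorm.values())
    return {y: v / z for y, v in unnorm.items()}

# Hypothetical preoperative records; label 1 = biochemical recurrence.
records = [
    {"gleason": "<=6", "psa": "low"}, {"gleason": "<=6", "psa": "low"},
    {"gleason": "7", "psa": "low"},   {"gleason": "7", "psa": "high"},
    {"gleason": ">=8", "psa": "high"}, {"gleason": ">=8", "psa": "low"},
]
labels = [0, 0, 0, 1, 1, 1]
model = train_naive_bayes(records, labels)

p_high = predict_proba(model, {"gleason": ">=8", "psa": "high"})
p_low = predict_proba(model, {"gleason": "<=6", "psa": "low"})
print(f"P(recurrence | >=8, high PSA) = {p_high[1]:.2f}")
print(f"P(no recurrence | <=6, low PSA) = {p_low[0]:.2f}")
```

The independence assumption keeps the model simple enough to train on small datasets, which may partly explain why it performed comparably to the Cox model in the study described above.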

Bladder cancer

As stage typically drives bladder cancer treatment, current ML efforts involve preoperative stage prediction from imaging to help guide treatment decisions. For example, Garapati et al. investigated the feasibility of machine learning modeling to predict bladder cancer stage from preoperative CT urography [20]. A dataset of 84 bladder cancer lesions was segmented on preoperative imaging and grouped by pathological stage after cystectomy (≥ pT2 or < pT2). Specific characteristics of the segmented tumors were then extracted from the CT images. Several models were trained on these imaging features, with AUCs ranging from 0.88 to 0.97, showing promise for accurate staging. Such an algorithm could help identify patients for neoadjuvant chemotherapy. However, larger data sets and algorithm optimization are needed.
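The step of extracting characteristics from a segmented tumor can be sketched in a few lines of Python. The example below computes simple size and first-order intensity features from the pixels inside a binary segmentation mask. These particular features, the 3 × 3 attenuation values, and the mask are hypothetical illustrations; they are not the feature set used by Garapati et al.

```python
import statistics

def roi_features(image, mask):
    """Extract simple size and first-order intensity features from the pixels
    inside a binary segmentation mask (1 = lesion, 0 = background)."""
    values = [v for img_row, m_row in zip(image, mask)
              for v, m in zip(img_row, m_row) if m]
    if not values:
        raise ValueError("mask contains no lesion pixels")
    return {
        "area_px": len(values),                 # lesion size in pixels
        "mean": statistics.fmean(values),       # average attenuation
        "std": statistics.pstdev(values),       # intensity heterogeneity
        "max": max(values),                     # peak attenuation
    }

# Hypothetical CT slice (attenuation values) and lesion segmentation.
image = [[10, 40, 42],
         [12, 50, 48],
         [11, 13, 15]]
mask = [[0, 1, 1],
        [0, 1, 1],
        [0, 0, 0]]

print(roi_features(image, mask))
```

Each lesion's feature vector would then serve as one training example, labeled ≥ pT2 or < pT2 from the cystectomy pathology.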

Intraoperative applications

The successful application of ML to real-time, automated intraoperative interventions would involve developing a multifaceted AI platform. The platform would ideally be able to identify patient anatomy and track the equipment being used while adapting to a continually changing operative environment. Such a tool could aid the physician’s intraoperative decisions or even give active feedback on surgical technique. Though no automated ML systems yet exist for the operating room, many studies have laid the groundwork for this technology to develop in the future.

Preoperative identification and tracking of patient anatomy

Developing programs to accurately identify patient anatomy is the first step in implementing automated surgical systems to enhance surgical procedures. As radiologic imaging interpretation is critical to surgical management, there have been many efforts to apply ML techniques to imaging analysis. As described above, computer vision (CV), the subfield of machine learning concerned with image analysis, can enhance the diagnosis of urologic pathologies. For example, in stone disease, several studies have demonstrated the use of CV techniques on abdominal CT imaging data to identify stone location [21]. These techniques rely on improved processing of imaging signals to build more robust algorithms [22]. In this way, even visually subtle differences between pathologic and normal anatomy can be recognized computationally.
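A very simplified version of the stone-localization idea can be sketched in Python: mark pixels whose attenuation exceeds a threshold, then group adjacent marked pixels into candidate regions with a flood fill. This is a toy stand-in, not the TV-flow and MSER method of Liu et al. [21]; the 300 HU threshold, the minimum region size, and the 4 × 5 slice are hypothetical values chosen only for illustration.

```python
from collections import deque

def stone_candidates(ct_slice, threshold_hu=300, min_pixels=2):
    """Return connected regions (4-connectivity) whose attenuation is at or
    above a threshold, as lists of (row, col) pixels. Toy detector only."""
    rows, cols = len(ct_slice), len(ct_slice[0])
    seen = [[False] * cols for _ in range(rows)]
    regions = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or ct_slice[r][c] < threshold_hu:
                continue
            # Breadth-first flood fill from this bright seed pixel.
            region, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                region.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < rows and 0 <= nx < cols and not seen[ny][nx]
                            and ct_slice[ny][nx] >= threshold_hu):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(region) >= min_pixels:  # discard isolated bright pixels (noise)
                regions.append(region)
    return regions

# Hypothetical non-contrast CT slice in Hounsfield units.
ct = [
    [30,  40,  35, 32,  30],
    [33, 900, 950, 31,  28],
    [29, 880,  36, 30, 400],
    [31,  34,  33, 30,  30],
]
print(stone_candidates(ct))
```

Real detectors must also reject bright structures that are not stones (bone, vascular calcifications), which is where learned features and classifiers come in.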

Furthermore, through segmentation (i.e. anatomic localization) of imaging, target surgical anatomy can be automatically delineated by computational algorithms. Currently, segmentation is performed manually and supports many image-guided surgical applications. However, manual segmentation of target anatomy and vasculature is very time-consuming. Automating this process requires CV techniques that quickly analyze imaging studies and interpret specific anatomical details. Recently, multi-atlas segmentation, in which a specific patient’s anatomy is analyzed using a large dataset of other, previously labeled imaging studies, has shown improved detection of anatomic variation and segmentation accuracy [23]. This technique has been investigated as an aid to surgical planning. For example, Huo et al. investigated multi-atlas segmentation for analyzing patient pyelocaliceal anatomy [24, 25]. Using CT urography, 3D anatomic characterization was performed automatically, removing the need for manual dimension measurement. Segmentation was successful for 8 of 11 pyelocaliceal systems, allowing measurement of the infundibulopelvic angle (IPA). Though some errors were identified in image labeling, the study demonstrates the feasibility of multi-atlas segmentation for anatomic characterization. Further improvements in the technique could allow accurate reconstruction of individual anatomical features and improve localization and characterization of complex patient anatomy that is not easily captured with current methods.
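The final step of multi-atlas segmentation, combining the labels proposed by several atlases, can be sketched as a per-voxel majority vote. The Python example below assumes the atlases have already been registered to the target image, which is the computationally demanding part and is omitted here. Majority voting is the simplest fusion rule; joint label fusion [25] instead weights atlases according to local image similarity. The label maps are hypothetical and flattened to 1D lists for brevity.

```python
from collections import Counter

def majority_vote_fusion(atlas_labels):
    """Fuse per-voxel labels from several registered atlases by majority vote.
    atlas_labels: list of equally sized (flattened) label maps.
    Ties are broken in favor of the smallest label value."""
    n_voxels = len(atlas_labels[0])
    fused = []
    for v in range(n_voxels):
        votes = Counter(labels[v] for labels in atlas_labels)
        top = max(votes.values())
        fused.append(min(label for label, count in votes.items() if count == top))
    return fused

# Hypothetical labels: 0 = background, 1 = renal pelvis, 2 = calyx.
atlas_1 = [0, 1, 1, 2, 2]
atlas_2 = [0, 1, 2, 2, 0]
atlas_3 = [1, 1, 2, 0, 1]

print(majority_vote_fusion([atlas_1, atlas_2, atlas_3]))
```

Using several atlases lets disagreements cancel out: a labeling error in one atlas is outvoted wherever the others agree, which is one reason the approach handles anatomic variation better than a single template.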

Automatic tracking of intraoperative patient anatomy requires the ability to overcome the visual obstruction of instruments, blood, smoke, and adipose tissue while predicting tissue deformation during dissection. Though challenging, novel techniques are being developed to improve the automatic identification of patient anatomy. Nosrati et al. developed a multimodal approach to align preoperative data with intraoperative endoscopic imaging during partial nephrectomy [26]. The alignment method used subsurface feature cues, such as vessel pulsation patterns, as well as color and texture information, to automatically register the workspace to the preoperative imaging. Our group has similarly developed a method of quickly registering preoperative imaging to surgical anatomy using robotic tip position data (Fig. 2) [27]. Though not automated, registration was nearly instantaneous, with limited target registration error (0.75–2.2 mm). Further work to automatically identify and register intraoperative patient anatomy to preoperative imaging for surgical guidance is ongoing.
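The concepts of registration and target registration error (TRE) can be illustrated with a minimal Python sketch of paired-point rigid registration. For brevity it works in 2D, where the least-squares rotation has a closed form via `atan2`; clinical registration is 3D and is typically solved with SVD- or quaternion-based methods. This is not the method of Nosrati et al. [26] or of our touch-based approach [27]; the fiducial coordinates and the "true" target location are hypothetical values in millimeters.

```python
import math

def rigid_register_2d(source, target):
    """Least-squares rigid alignment (rotation + translation) of paired 2D
    points. Returns (theta, tx, ty) such that rotating a source point by theta
    and then adding (tx, ty) best matches its paired target point."""
    n = len(source)
    sx = sum(x for x, _ in source) / n
    sy = sum(y for _, y in source) / n
    qx = sum(x for x, _ in target) / n
    qy = sum(y for _, y in target) / n
    a = b = 0.0
    for (px, py), (gx, gy) in zip(source, target):
        dx, dy = px - sx, py - sy  # center the source points
        ex, ey = gx - qx, gy - qy  # center the target points
        a += dx * ex + dy * ey     # sum of dot products
        b += dx * ey - dy * ex     # sum of 2D cross products
    theta = math.atan2(b, a)       # rotation maximizing the alignment
    c, s = math.cos(theta), math.sin(theta)
    return theta, qx - (c * sx - s * sy), qy - (s * sx + c * sy)

def apply_transform(transform, point):
    theta, tx, ty = transform
    c, s = math.cos(theta), math.sin(theta)
    return c * point[0] - s * point[1] + tx, s * point[0] + c * point[1] + ty

def target_registration_error(transform, target_src, target_true):
    """Distance between where the registration maps a target (e.g. a tumor
    point not used for registration) and its true intraoperative location."""
    x, y = apply_transform(transform, target_src)
    return math.hypot(x - target_true[0], y - target_true[1])

# Hypothetical fiducials in image space and their measured positions (mm).
fiducials_img = [(0.0, 0.0), (10.0, 0.0), (0.0, 5.0)]
fiducials_or = [(2.0, 3.0), (2.0, 13.0), (-3.0, 3.0)]
transform = rigid_register_2d(fiducials_img, fiducials_or)

tumor_img, tumor_true = (5.0, 5.0), (-3.0, 9.0)  # hypothetical target point
print(f"TRE = {target_registration_error(transform, tumor_img, tumor_true):.2f} mm")
```

TRE is measured at a target that was not used to compute the transform, so it reflects the accuracy a surgeon would actually experience at the tumor rather than how well the fiducials themselves were fitted.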

Future applications

Looking toward the future, technologies that support accurate, automated patient-specific care are expected to emerge. Some automated surgical systems are already in clinical use. For example, in urologic surgery, PROCEPT BioRobotics has introduced the first automated system for prostate adenoma ablation (Aquablation) [5]. The technology has proven efficient and safe for the treatment of benign prostatic hyperplasia. Additional automated surgical systems are currently being developed for a variety of surgical fields [28], and their introduction into clinical practice appears to be only a matter of time.

Additionally, there has been increasing interest in evaluating surgical technique and its correlation with surgical outcomes. The ability to automatically evaluate surgical technique in real time offers a unique training capability that could be used in education, credentialing, and quality improvement. Multiple studies have investigated machine learning as a tool for tracking surgical technique. For example, Ghani et al. used bladder neck anastomosis videos from 11 surgeons to train a computer vision algorithm to detect the velocity, trajectory, and smoothness of instrument movement, as well as its relationship to the contralateral instrument, on a frame-by-frame basis [29]. Surgeons were then categorized as high or low skill. A final, 12th video was used to validate the system; this validation was repeated (n = 12) and the results were averaged. Evaluations were compared with blinded review by 25 peer surgeons using the Global Evaluative Assessment of Robotic Skills (GEARS) tool. The algorithm achieved a skill categorization accuracy of 83% using single instrument points and 92% when incorporating joint movement. Further incorporating the contralateral instrument raised the accuracy to 100% for binary skill-level categorization. The metrics most correlated with skill were the relationship between the needle driver forceps and joint position, acceleration, and velocity.
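Motion metrics such as velocity and acceleration can be derived from an instrument tip position tracked frame by frame. The minimal Python sketch below uses first-order finite differences to compute mean speed, mean acceleration magnitude, and path length. It assumes the 2D tip positions have already been extracted from video, which is the hard computer vision step and is not shown; it is not Ghani et al.'s pipeline, and the positions and frame rates are hypothetical.

```python
import math

def kinematic_metrics(positions, fps):
    """Mean speed, mean acceleration magnitude, and path length of a tracked
    instrument tip. positions: list of (x, y) in consistent units (e.g. mm),
    one per video frame; fps: frame rate. Uses first-order finite differences."""
    if len(positions) < 2:
        raise ValueError("need at least two frames")
    dt = 1.0 / fps
    velocities = [((x1 - x0) / dt, (y1 - y0) / dt)
                  for (x0, y0), (x1, y1) in zip(positions, positions[1:])]
    accelerations = [((vx1 - vx0) / dt, (vy1 - vy0) / dt)
                     for (vx0, vy0), (vx1, vy1) in zip(velocities, velocities[1:])]
    speeds = [math.hypot(vx, vy) for vx, vy in velocities]
    mean_accel = (sum(math.hypot(ax, ay) for ax, ay in accelerations) / len(accelerations)
                  if accelerations else 0.0)
    return {
        "mean_speed": sum(speeds) / len(speeds),
        "mean_accel": mean_accel,
        "path_length": sum(s * dt for s in speeds),
    }

# Hypothetical tracks: steady motion versus an accelerating movement.
steady = kinematic_metrics([(0, 0), (1, 0), (2, 0), (3, 0)], fps=10)
jerky = kinematic_metrics([(0, 0), (1, 0), (3, 0)], fps=1)
print(steady)
print(jerky)
```

Per-frame metrics like these, summarized over a procedure segment, could serve as the input features for a classifier that separates high-skill from low-skill performances.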

These intraoperative metrics can also be correlated with clinical outcomes. For example, Hung et al. demonstrated that algorithms can be trained to predict surgery-specific outcomes. In one study, the group developed an ML algorithm to interpret automated performance metrics from surgical videos and predicted a length of hospital stay greater than 2 days with 87% accuracy [30]. In another study, the group demonstrated their algorithm’s ability to predict urinary continence recovery from intraoperative performance metrics [31]. These techniques could inform health-system outcomes research and shape both practice and policy. The technology will also likely play a role in credentialing and surgical training.

Current limitations and barriers to implementation

Though the previously discussed studies show promise in incorporating ML algorithms to augment patient-specific care, the technology remains largely investigational. The strength of ML algorithms depends on the robustness of the input data. Training any algorithm requires rigorous data pre-processing, and large, diverse datasets are mandatory to refine safe patient-specific tools. Furthermore, not all ML tools are designed to be explainable, so it is critical for clinicians to understand the data used for algorithm training to accurately interpret results [32]. Most of the above studies are limited by small data sets and a lack of external validation, though widespread usage of EHRs offers a future avenue for high-volume data collection. As with the integration of previous novel technologies, the incorporation of ML tools to improve disease diagnosis and treatment will be gradual and will still require human oversight [32, 33]. Though further work is necessary to examine and validate ML tools for patient-specific care in urologic surgery, the widespread adoption of ML in other areas of modern life offers encouragement that these challenges are only temporary barriers.

Conclusion

Machine learning techniques can be implemented to assist patient-centered surgical care and increase patient engagement in their decision-making processes. Though the technology remains largely investigational, refining algorithms with large-scale EHR datasets will improve their efficacy and facilitate incorporation into clinical practice. These tools have also shown viability in evaluating surgeon skill. With scalability to larger data sets, ML systems will continue to have promising applications within urology at both the patient and health-system levels.

References

Beckmann JS, Lew D (2016) Reconciling evidence-based medicine and precision medicine in the era of big data: challenges and opportunities. Genome Med 8:134. https://doi.org/10.1186/s13073-016-0388-7

Yu K-H, Beam AL, Kohane IS (2018) Artificial intelligence in healthcare. Nat Biomed Eng 2:719–731. https://doi.org/10.1038/s41551-018-0305-z

Tran BX, Vu GT, Ha GH et al (2019) Global evolution of research in artificial intelligence in health and medicine: a bibliometric study. J Clin Med. https://doi.org/10.3390/jcm8030360

Moustris GP, Hiridis SC, Deliparaschos KM, Konstantinidis KM (2011) Evolution of autonomous and semi-autonomous robotic surgical systems: a review of the literature. Int J Med Robot Comput Assist Surg MRCAS 7:375–392. https://doi.org/10.1002/rcs.408

Roehrborn CG, Teplitsky S, Das AK (2019) Aquablation of the prostate: a review and update. Can J Urol 26:20–24

Rajkomar A, Dean J, Kohane I (2019) Machine learning in medicine. N Engl J Med 380:1347–1358. https://doi.org/10.1056/NEJMra1814259

Kriegeskorte N, Golan T (2019) Neural network models and deep learning. Curr Biol 29:R231–R236. https://doi.org/10.1016/j.cub.2019.02.034

Raman JD, Aditya B, Karim B et al (2010) Residual fragments after percutaneous nephrolithotomy: cost comparison of immediate second look flexible nephroscopy versus expectant management. J Urol 183:188–193. https://doi.org/10.1016/j.juro.2009.08.135

Aminsharifi A, Irani D, Pooyesh S et al (2017) Artificial neural network system to predict the postoperative outcome of percutaneous nephrolithotomy. J Endourol 31:461–467. https://doi.org/10.1089/end.2016.0791

Aminsharifi A, Irani D, Tayebi S et al (2020) Predicting the postoperative outcome of percutaneous nephrolithotomy with machine learning system: software validation and comparative analysis with Guy’s stone score and the CROES nomogram. J Endourol 34:692–699. https://doi.org/10.1089/end.2019.0475

Gomha MA, Sheir KZ, Showky S et al (2004) Can we improve the prediction of stone-free status after extracorporeal shock wave lithotripsy for ureteral stones? a neural network or a statistical model? J Urol 172:175–179. https://doi.org/10.1097/01.ju.0000128646.20349.27

Seckiner I, Seckiner S, Sen H et al (2017) A neural network—based algorithm for predicting stone-free status after ESWL therapy. Int Braz J Urol Off J Braz Soc Urol 43:1110–1114. https://doi.org/10.1590/S1677-5538.IBJU.2016.0630

Osawa T, Hafez KS, Miller DC et al (2016) Comparison of percutaneous renal mass biopsy and R.E.N.A.L. nephrometry score nomograms for determining benign vs malignant disease and low-risk vs high-risk renal tumors. Urology 96:87–92. https://doi.org/10.1016/j.urology.2016.05.044

Kocak B, Kaya OK, Erdim C et al (2020) Artificial intelligence in renal mass characterization: a systematic review of methodologic items related to modeling, performance evaluation, clinical utility, and transparency. AJR Am J Roentgenol 215:1113–1122. https://doi.org/10.2214/AJR.20.22847

Tanaka T, Huang Y, Marukawa Y et al (2020) Differentiation of small (≤ 4 cm) renal masses on multiphase contrast-enhanced CT by deep learning. Am J Roentgenol 214:605–612. https://doi.org/10.2214/AJR.19.22074

Yu H, Scalera J, Khalid M et al (2017) Texture analysis as a radiomic marker for differentiating renal tumors. Abdom Radiol 42:2470–2478. https://doi.org/10.1007/s00261-017-1144-1

Auffenberg GB, Ghani KR, Ramani S et al (2019) askMUSIC: leveraging a clinical registry to develop a new machine learning model to inform patients of prostate cancer treatments chosen by similar men. Eur Urol 75:901–907. https://doi.org/10.1016/j.eururo.2018.09.050

Zupan B, Demsar J, Kattan MW et al (2000) Machine learning for survival analysis: a case study on recurrence of prostate cancer. Artif Intell Med 20:59–75. https://doi.org/10.1016/s0933-3657(00)00053-1

Wong NC, Lam C, Patterson L, Shayegan B (2019) Use of machine learning to predict early biochemical recurrence after robot-assisted prostatectomy. BJU Int 123:51–57. https://doi.org/10.1111/bju.14477

Garapati SS, Hadjiiski L, Cha KH et al (2017) Urinary bladder cancer staging in CT urography using machine learning. Med Phys 44:5814–5823. https://doi.org/10.1002/mp.12510

Liu J, Wang S, Turkbey EB et al (2015) Computer-aided detection of renal calculi from noncontrast CT images using TV-flow and MSER features. Med Phys 42:144–153. https://doi.org/10.1118/1.4903056

Liu J, Wang S, Linguraru MG et al (2015) Computer-aided detection of exophytic renal lesions on non-contrast CT images. Med Image Anal 19:15–29. https://doi.org/10.1016/j.media.2014.07.005

Iglesias JE, Sabuncu MR (2015) Multi-atlas segmentation of biomedical images: a survey. Med Image Anal 24:205–219. https://doi.org/10.1016/j.media.2015.06.012

Huo Y, Braxton V, Herrell SD et al (2017) Automated characterization of pyelocalyceal anatomy using CT urograms to aid in management of kidney stones. In: Cardoso MJ, Arbel T, Luo X et al (eds) Computer assisted and robotic endoscopy and clinical image-based procedures. Springer, pp 99–107

Wang H, Suh JW, Das SR et al (2013) Multi-atlas segmentation with joint label fusion. IEEE Trans Pattern Anal Mach Intell 35:611–623. https://doi.org/10.1109/TPAMI.2012.143

Nosrati MS, Amir-Khalili A, Peyrat J-M et al (2016) Endoscopic scene labelling and augmentation using intraoperative pulsatile motion and colour appearance cues with preoperative anatomical priors. Int J Comput Assist Radiol Surg 11:1409–1418. https://doi.org/10.1007/s11548-015-1331-x

Kavoussi NL, Pitt B, Ferguson JM et al (2020) Accuracy of touch-based registration during robotic image-guided partial nephrectomy before and after tumor resection in validated phantoms. J Endourol. https://doi.org/10.1089/end.2020.0363

Svoboda E (2019) Your robot surgeon will see you now. Nature 573:S110–S111. https://doi.org/10.1038/d41586-019-02874-0

Ghani KR, Yunfan L, Hei L et al (2017) PD46-04 Video analysis of skill and technique (VAST): machine learning to assess surgeons performing robotic prostatectomy. J Urol 197:e891. https://doi.org/10.1016/j.juro.2017.02.2376

Hung AJ, Chen J, Che Z et al (2018) Utilizing machine learning and automated performance metrics to evaluate robot-assisted radical prostatectomy performance and predict outcomes. J Endourol 32:438–444. https://doi.org/10.1089/end.2018.0035

Hung AJ, Chen J, Ghodoussipour S et al (2019) A deep-learning model using automated performance metrics and clinical features to predict urinary continence recovery after robot-assisted radical prostatectomy. BJU Int 124:487–495. https://doi.org/10.1111/bju.14735

Kantarjian H, Yu PP (2015) Artificial intelligence, big data, and cancer. JAMA Oncol 1:573–574. https://doi.org/10.1001/jamaoncol.2015.1203

Ngiam KY, Khor IW (2019) Big data and machine learning algorithms for health-care delivery. Lancet Oncol 20:e262–e273. https://doi.org/10.1016/S1470-2045(19)30149-4

Cite this article

Doyle, P.W., Kavoussi, N.L. Machine learning applications to enhance patient specific care for urologic surgery. World J Urol 40, 679–686 (2022). https://doi.org/10.1007/s00345-021-03738-x

DOI: https://doi.org/10.1007/s00345-021-03738-x